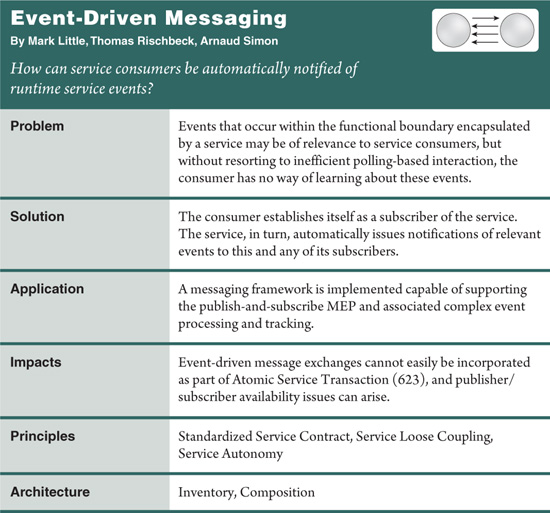

Numerous factors can come into play when designing the possible runtime activity that can occur between services within a composition. These patterns provide various techniques for processing and coordinating data exchanges between services.

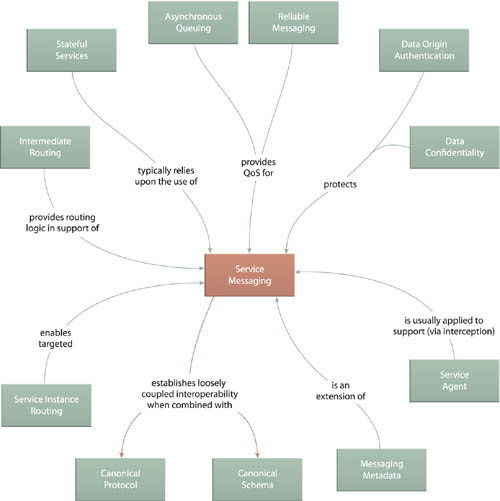

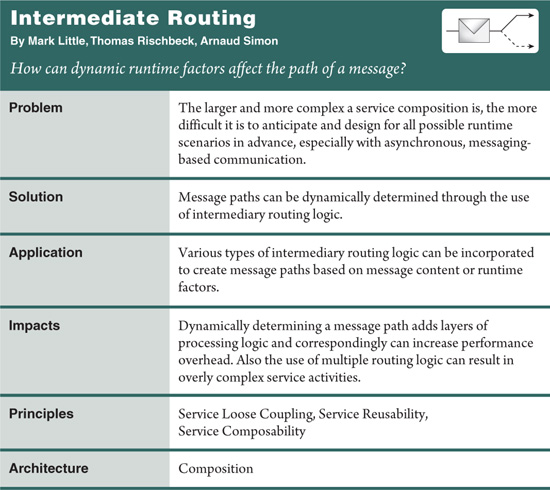

Service Messaging (533) establishes the base pattern that others in this chapter further specialize and build upon. Messaging Metadata (538), for example, extends Service Messaging (533) by providing the opportunity to supplement messages with additional meta details. Transparent intermediary processing is provided by Service Agent (543) as well as the more specialized Intermediate Routing (549).

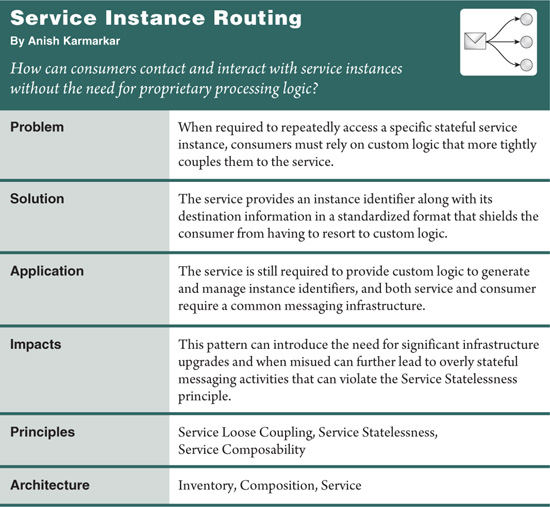

The Service Instance Routing (574), Service Callback (566), and State Messaging (557) patterns explore creative ways to leverage a messaging framework in order to communicate between service instances, form asynchronous messaging interactions, and defer state data to the message layer, respectively.

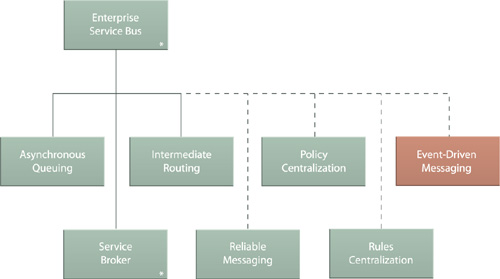

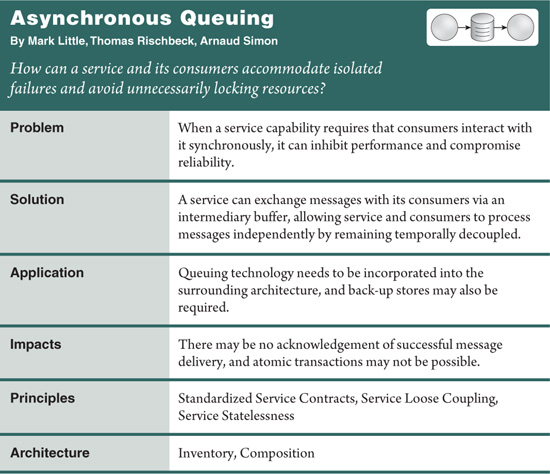

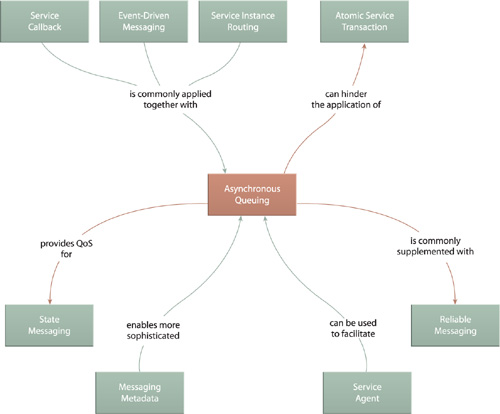

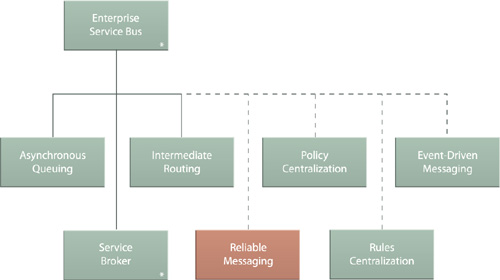

Finally, Asynchronous Queuing (582) and Reliable Messaging (592) provide inventory-level extensions that can improve the quality and integrity of message-based communication, and Event-Driven Messaging (599) establishes the well-known publish-and-subscribe messaging model in support of service interaction.

Common implementations of distributed solutions rely on remote invocation frameworks, such as those based on RPC technology. These communication systems establish persistent connections based on binary protocols to enable the exchange of data between units of logic.

Although efficient and reliable, they are primarily utilized within the boundaries of application environments and for select integration purposes. Positioning an RPC-based component as an enterprise resource with multiple potential consumers can lower its concurrency threshold because of the overhead associated with creating, sustaining, and terminating the required persistent RPC binary connections.

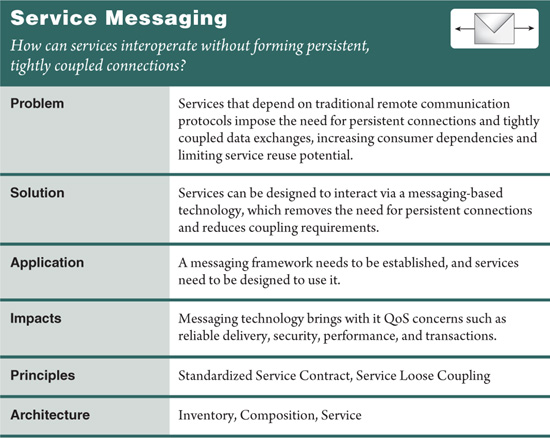

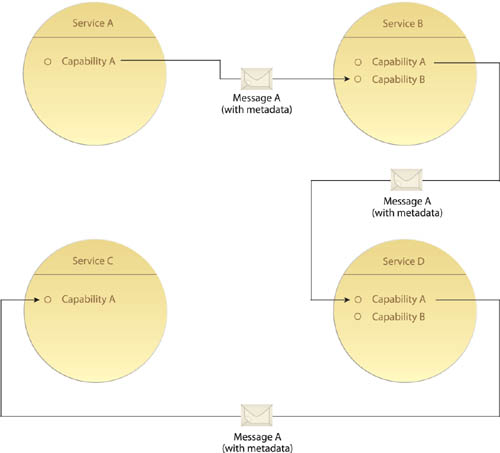

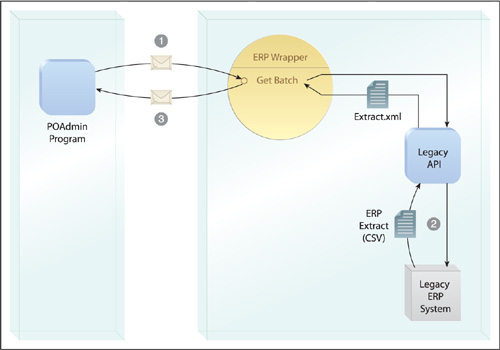

Messaging provides an alternative communications framework that does not rely on persistent connections. Instead, messages are transmitted as independent units of communication routed via the underlying infrastructure (Figure 18.1).
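To make the contrast with persistent connections concrete, here is a minimal Python sketch of messages as independent units. `Message`, `MessageBus`, and the `InvoiceService` destination are hypothetical names. The in-memory queue only stands in for real messaging infrastructure such as a broker, and it ignores persistence, security, and delivery guarantees.

```python
from collections import defaultdict, deque
from dataclasses import dataclass, field
import uuid


@dataclass(frozen=True)
class Message:
    """A self-contained unit of communication: everything the receiver
    needs travels with the message, not with a connection."""
    destination: str
    body: dict
    message_id: str = field(default_factory=lambda: str(uuid.uuid4()))


class MessageBus:
    """Hypothetical in-memory stand-in for messaging infrastructure.

    Senders never hold a connection to receivers; they hand messages to
    the bus, which queues them per destination until they are consumed.
    """

    def __init__(self):
        self._queues = defaultdict(deque)

    def send(self, message: Message) -> None:
        self._queues[message.destination].append(message)

    def receive(self, destination: str):
        queue = self._queues[destination]
        return queue.popleft() if queue else None  # None when nothing is waiting


bus = MessageBus()
bus.send(Message("InvoiceService", {"invoiceId": 42}))
msg = bus.receive("InvoiceService")
print(msg.body)  # {'invoiceId': 42}
```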

A messaging framework supported by the enterprise’s technical environment needs to be implemented to an extent that it is capable of supporting service interaction requirements. Many established design patterns for messaging frameworks exist, most of which emerged from experience with enterprise integration platforms.

Some messaging frameworks cannot provide an adequate level of QoS to support the high demands that can be placed on services positioned as reusable enterprise resources.

To fully enable the application of Capability Recomposition (526) and many of the supporting patterns, the messaging framework must provide a means of:

• guaranteeing the delivery of each message or guaranteeing a notification of failed deliveries

• securing message contents beyond the transport

• managing state and context data across a service activity

• transmitting messages efficiently as part of real-time interactions

• coordinating cross-service transactions

Without these types of extensions in place, limitations in the availability, reliability, and reusability of services can undermine the strategic goals associated with SOA in general. As explained in the upcoming Relationships section, several more specialized patterns address these individual issues.

As one of the most fundamental design patterns in this catalog, Service Messaging ties directly into interoperability design considerations. The success of this pattern is therefore often dependent on the extent to which Canonical Protocol (150) and Canonical Schema (158) are applied within a given inventory.

Service Agent (543) forms a functional relationship with Service Messaging in that event-driven agent programs transparently intercept and process message contents. Messaging Metadata (538) is also closely related because it essentially extends the typical message to incorporate meta details.

Note

As mentioned in Chapter 5, this pattern is related to several patterns documented in Hohpe and Woolf’s book Enterprise Integration Patterns, including Message, Messaging, and Document Message, and can further be linked to Message Channel and Message Endpoint. Numerous additional specialized messaging patterns documented in that book were established during the EAI era and can still help solve design problems in support of service-oriented solutions, especially in relation to enabling asynchronous message exchanges.

Persistent binary connections between a service and its consumer place various types of state and context data about the current service activity into memory, allowing routines within service capabilities to access this information as required.

Moving from RPC-based communication toward a messaging-based framework removes this option, as persistent connections are no longer available. This can place the burden of runtime activity management onto the services themselves.

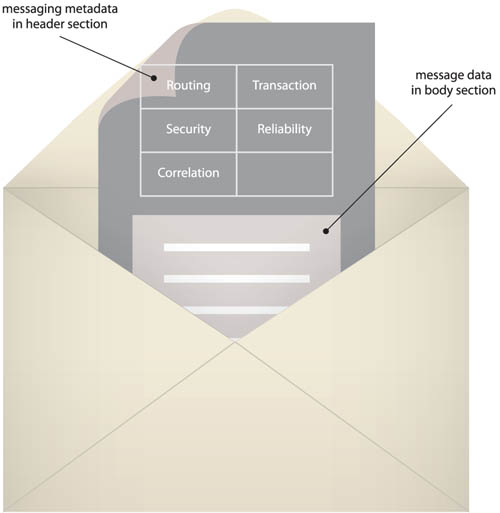

State data, rules, and even processing instructions can be located within the messages. This reduces the need for services to share activity-specific logic (Figure 18.3).

The messaging technology used for service communication needs to support message headers or properties so that the messaging metadata can be consistently located within a reserved part of the message document, as pointed out in Figure 18.4.
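The sketch below assumes a simple dict-based message with separate `headers` and `body` sections. It shows a service reading state and a processing instruction from the reserved header section rather than from shared in-memory session data. The header names (`activity-step`, `processing-mode`) and the surcharge rule are illustrative assumptions, not part of JMS, SOAP, or any WS-* standard.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    headers: dict = field(default_factory=dict)  # reserved metadata section
    body: dict = field(default_factory=dict)     # business payload


def process(message: Message) -> dict:
    """Hypothetical service routine that takes activity state and a
    processing instruction from headers, not from shared session memory."""
    step = message.headers.get("activity-step", 1)
    mode = message.headers.get("processing-mode", "standard")
    total = sum(line["amount"] for line in message.body.get("lines", []))
    if mode == "express":
        total += 10  # illustrative rule selected by metadata carried in the message
    return {"step": step, "total": total}


msg = Message(
    headers={"activity-step": 2, "processing-mode": "express"},
    body={"lines": [{"amount": 30}, {"amount": 12}]},
)
print(process(msg))  # {'step': 2, 'total': 52}
```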

Platform-specific technologies, such as JMS, provide support for message headers and properties, as do Web service-related standards, such as SOAP. In fact, many types of messaging metadata have been standardized through the emergence of WS-* extensions that define industry standard SOAP header blocks, as demonstrated in a number of the case studies in this chapter.

Although overall memory consumption is lowered by avoiding a persistent binary connection, performance demands increase because services must interpret and process metadata at runtime. Agnostic services in particular can consume more runtime cycles, as they may need to be outfitted with highly generic routines capable of interpreting and processing different types of messaging headers in order to participate effectively in multiple composition activities.
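As a rough illustration of the kind of generic routine an agnostic service may need, the following hypothetical `HeaderProcessor` dispatches each header block to a registered handler and reports the ones it cannot interpret. Every header is examined on every message, which is where the extra runtime cycles come from. The class, handler, and header names are assumptions made for the example.

```python
from typing import Any, Callable, Dict, List

HeaderHandler = Callable[[Any, dict], None]


class HeaderProcessor:
    """Hypothetical generic header-processing routine for an agnostic service."""

    def __init__(self) -> None:
        self._handlers: Dict[str, HeaderHandler] = {}

    def register(self, name: str, handler: HeaderHandler) -> None:
        self._handlers[name] = handler

    def process(self, headers: dict, context: dict) -> List[str]:
        """Run a handler for every recognized header; return the names of
        headers this service does not know how to interpret."""
        unhandled = []
        for name, value in headers.items():
            handler = self._handlers.get(name)
            if handler is None:
                unhandled.append(name)
            else:
                handler(value, context)
        return unhandled


processor = HeaderProcessor()
processor.register("correlation-id", lambda v, ctx: ctx.update(correlation=v))
processor.register("priority", lambda v, ctx: ctx.update(priority=int(v)))

context = {}
leftover = processor.process(
    {"correlation-id": "abc-1", "priority": "3", "x-audit": "on"}, context
)
print(context)   # {'correlation': 'abc-1', 'priority': 3}
print(leftover)  # ['x-audit']
```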

Due to the prevalence and range of technology standards that intrinsically support and are based on Messaging Metadata, a wide variety of sophisticated message exchanges can be designed. This can lead to overly creative and complex message paths that may be difficult to govern and evolve.

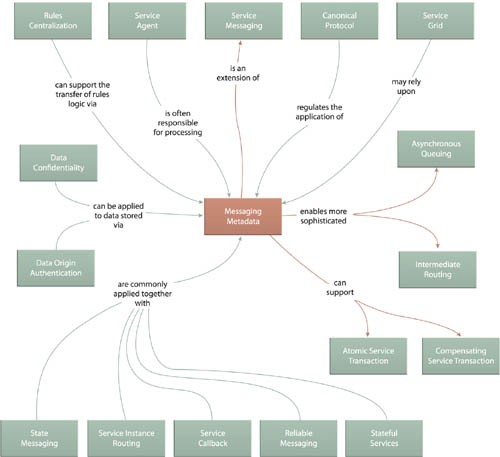

This fundamental pattern can be seen as an extension of Service Messaging (533). Service compositions that rely on industry standard transaction management, security, routing and reliable messaging will utilize specialized implementations of this pattern, as represented by the variety of message-related patterns shown in Figure 18.5.

Note

For more examples of how this pattern is applied with Web services and WS-* standards, see Chapters 6, 7, and 17 in Service-Oriented Architecture: Concepts, Technology, and Design and Chapters 4, 11, 15, 18, and 19 in Web Service Contract Design and Versioning for SOA.

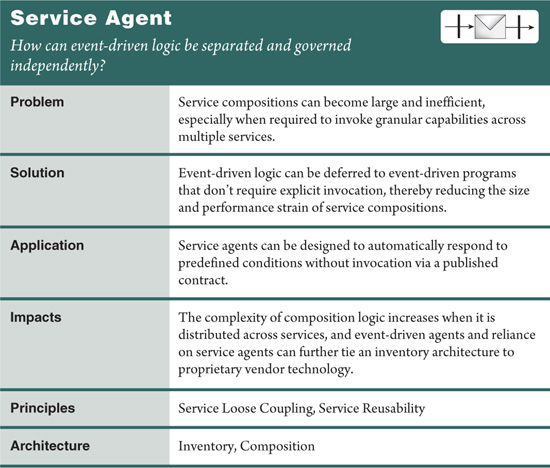

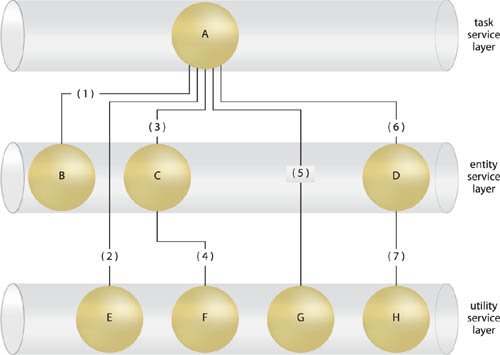

Service composition logic consists of a series of service invocations, each of which enlists a service to carry out a segment of the overall parent business process logic. Larger business processes can be enormously complex, especially when they have to incorporate numerous “what if” conditions via compensation and exception handling sub-processes. As a result, service compositions can grow correspondingly large (Figure 18.6).

Furthermore, each service invocation comes with a performance hit resulting from having to explicitly invoke and communicate with the service itself. The performance of larger compositions can suffer from the collective overhead of having to invoke multiple services to automate a single task.

Service logic that is triggered by a predictable event can be isolated into a separate program especially designed for automatic invocation upon the occurrence of the event (Figure 18.7). This reduces the amount of composition logic that needs to reside within services and further decreases the quantity of services (or service invocations) required for a given composition.

The event-driven logic is implemented as a service agent—a program with no published contract that is capable of intercepting and processing messages at runtime. Service agents are typically lightweight programs with modest footprints and generally contain common utility-centric processing logic.

For example, vendor runtime platforms commonly provide “system-level” agents that carry out utility functions such as authentication, logging, and load balancing. Service agents can also be custom-developed to provide business-centric and/or single-purpose logic.
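A minimal sketch of event-driven agents follows, under the assumption that the runtime hands every message to a chain of agents before delivering it. Each agent may pass the message on, modify it, or stop it, and the target service never calls the agents itself. `Infrastructure`, `logging_agent`, `authentication_agent`, and the `Claims` service are hypothetical. Real platforms provide their own interception mechanisms, such as handler chains or interceptors.

```python
from typing import Callable, List, Optional

# An agent takes a message and returns it (possibly modified), or returns
# None to stop delivery. Agents publish no contract of their own.
Agent = Callable[[dict], Optional[dict]]


def logging_agent(log: List[str]) -> Agent:
    def agent(message: dict) -> dict:
        log.append(f"intercepted message for {message['to']}")
        return message
    return agent


def authentication_agent(valid_tokens: set) -> Agent:
    def agent(message: dict) -> Optional[dict]:
        token = message.get("headers", {}).get("token")
        return message if token in valid_tokens else None
    return agent


class Infrastructure:
    """Hypothetical runtime that passes every message through its agents
    before handing it to the destination service."""

    def __init__(self, agents: List[Agent]):
        self.agents = agents
        self.services = {}

    def deliver(self, message: dict):
        for agent in self.agents:
            message = agent(message)
            if message is None:
                return None  # an agent intercepted and stopped the message
        return self.services[message["to"]](message)


log = []
infra = Infrastructure([logging_agent(log), authentication_agent({"t-1"})])
infra.services["Claims"] = lambda m: f"processed {m['body']}"

ok = infra.deliver({"to": "Claims", "headers": {"token": "t-1"}, "body": "claim-7"})
rejected = infra.deliver({"to": "Claims", "headers": {}, "body": "claim-8"})
print(ok, rejected, len(log))  # processed claim-7 None 2
```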

As first introduced in Figure 4.14 in the Service Architecture section of Chapter 4, the message processing logic that is a natural part of any Web service implementation actually consists of a series of system (and perhaps custom) service agents that collectively carry out necessary runtime actions.

Note

Service agents are most commonly deployed to facilitate inter-service communication, but they can also be utilized within service architectures. In fact, intra-service use of agents can avoid some of the vendor dependency issues that arise with inter-service agent usage, as explained next in the Impacts section.

Event-driven agents provide yet another layer of abstraction to which multiple service compositions can form dependencies. Although the perceived size of the composition may be reduced, the actual complexity of the composition itself does not decrease. Composition logic is simply more decentralized as it now also encompasses service agents that automatically perform portions of the overall task.

Overuse of this design pattern can result in an inventory architecture that is difficult to build services for. With too many service agents transparently performing a range of functions, it can become too challenging to design composition architectures that take all possible agent-related processing scenarios into account. Furthermore, some service agent programs may end up conflicting with other service agents or other service logic.

Governance can also become an issue in that service agents will need to be owned and maintained by a separate group that needs to understand the inventory-wide impacts of any changes made to agent logic. For example, system service agents can be subject to behavioral changes as a result of runtime platform upgrades. An agent versioning system will also be required to address these challenges.

The event-driven programs created as a result of applying this pattern become a common and intrinsic part of service-oriented inventory architectures. The type of logic they encapsulate is comparable to utility logic, and therefore similar design considerations are most commonly applied. Regardless of the logic they encapsulate, Service Agent’s most fundamental relationships are with Service Messaging (533) and Messaging Metadata (538).

As previously mentioned, the overuse of this pattern can lead to an undesirably high level of dependency on a vendor platform. This can be due to the need to build custom service agents with proprietary programming languages or because services rely too heavily on the proprietary agents provided by vendor runtime environments. Canonical Resources (237) can alleviate this, but it does not directly regulate the quantity of produced agents.

A service composition can be viewed as a chain of point-to-point data exchanges between composition participants. Collectively, these exchanges end up automating a parent business process.

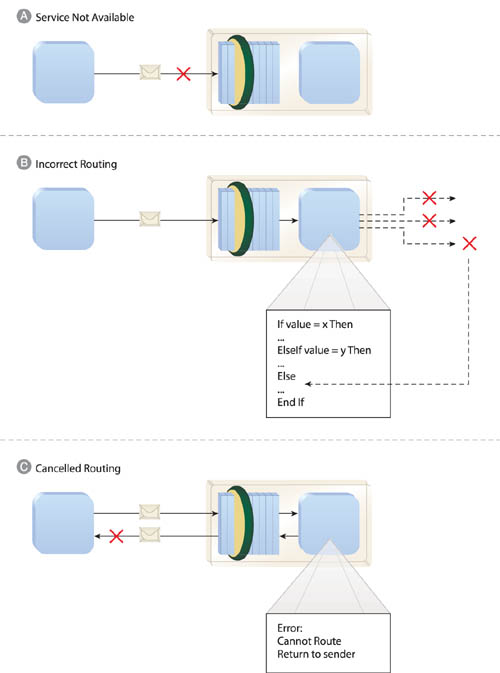

The message routing logic (the decision logic that determines how messages are passed from one service to another) can be embedded within the logic of each service in a composition. This allows for the successful execution of predetermined message paths. However, there may be unforeseen factors that are not accounted for in the embedded routing logic, which can lead to unanticipated system failure.

For example:

• The destination service a message is being transmitted to is temporarily (or even permanently) unavailable.

• The embedded routing logic contains a “catch all” condition to handle exceptions, but the resulting message destination is still incorrect.

• The originally planned message path cannot be carried out, resulting in the service rejecting the message it received from its consumer.

Figure 18.10 illustrates these scenarios.

Figure 18.10 A message transmission fails because the service is not available (A). Internal service routing logic is insufficient and ends up sending the message to the wrong destination (B). Internal service logic is incapable of routing the message and simply rejects it (C), effectively terminating the service activity.

Alternatively, there may simply be functional requirements that are dynamic in nature and for which services cannot be designed in advance.

Generic, multi-purpose routing logic can be abstracted so that it exists as a separate part of the architecture in support of multiple services and service compositions. Most commonly this is achieved through the use of event-driven service agents that transparently intercept messages and dynamically determine their paths (Figure 18.11).

Figure 18.11 A message passes through two router agents before it arrives at its destination. The Rules-Based Router identifies the target service based on a business rule that the agent dynamically retrieves and interprets, as a consequence of Rules Centralization (216). The Load Balancing Router then checks current usage statistics for that service before it decides which instance or redundant implementation of the service to send the message to.

This pattern is usually applied as a specialized implementation of Service Agent (543). Routing-centric agents required to perform dynamic routing are often provided by messaging middleware and are a fundamental component of ESB products. These types of out-of-the-box agents can be configured to carry out a range of routing functions. However, the creation of custom routing agents is also possible and not uncommon, especially in environments that need to support complex service compositions with special requirements.

Common forms of routing functionality include:

• Content-Based Routing – Essentially, this type of routing determines a message’s path based on its contents. Content-based routing can be used to model complex business processes and provide an efficient way to recompose services on the fly. Such routing decisions may need to involve access to a business rules engine to accurately assess message destinations.

• Load Balancing – This form of routing agent has become an important part of environments where concurrent usage demands are commonplace. A load balancing router is capable of directing a message to one or more identical service instances in order to help the service activity be carried out as efficiently as possible.

• 1:1 Routing – In this case, the routing agent is directly wired to a single physical service at any point in time. When messages arrive, the agent is capable of routing them to different service instances or redundant service implementations. This accommodates standard fail-over requirements and allows services to be maintained or upgraded without risking “disruption of service” to consumers.
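Two of these routing forms can be sketched in a few lines of Python, loosely following the two-router arrangement of Figure 18.11. The rule table, service names, and instance names are hypothetical. Round-robin is only one of many possible load-balancing policies, and skipping instances marked as down gives a crude approximation of fail-over.

```python
import itertools
from typing import Callable, Dict, List, Tuple

Rule = Tuple[Callable[[dict], bool], str]  # (predicate on message, target service)


class ContentBasedRouter:
    """Hypothetical router agent: chooses a target service from message content."""

    def __init__(self, rules: List[Rule], default: str):
        self.rules = rules
        self.default = default

    def route(self, message: dict) -> str:
        for predicate, target in self.rules:
            if predicate(message):
                return target
        return self.default


class LoadBalancingRouter:
    """Hypothetical round-robin balancer over redundant instances that
    skips instances marked as unavailable (a simple form of fail-over)."""

    def __init__(self, instances: Dict[str, List[str]]):
        self.instances = instances
        self.cycles = {svc: itertools.cycle(names) for svc, names in instances.items()}
        self.down = set()

    def pick(self, service: str) -> str:
        # Try each instance at most once before giving up.
        for _ in range(len(self.instances[service])):
            candidate = next(self.cycles[service])
            if candidate not in self.down:
                return candidate
        raise RuntimeError(f"no available instance of {service}")


content_router = ContentBasedRouter(
    rules=[(lambda m: m["amount"] > 10_000, "ManualApproval")],
    default="AutoApproval",
)
balancer = LoadBalancingRouter({
    "AutoApproval": ["auto-1", "auto-2"],
    "ManualApproval": ["manual-1"],
})
balancer.down.add("auto-2")

decisions = []
for amount in (500, 50_000, 700):
    service = content_router.route({"amount": amount})
    decisions.append((amount, service, balancer.pick(service)))
print(decisions)
# [(500, 'AutoApproval', 'auto-1'), (50000, 'ManualApproval', 'manual-1'), (700, 'AutoApproval', 'auto-1')]
```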

Regardless of the nature of the routing logic, it is desirable to be able to update and modify routing parameters dynamically, ideally even by business analysts, so that they can adapt and control the business logic in real time. This is particularly important when business logic is subject to frequent change. If changes are extremely frequent, it can be further beneficial to model the routing logic by extracting complex business rules that express declarative logic over the message content and using their outcome to make the routing decision.

A more frugal alternative is to employ content-based routing using XPath or XQuery expressions. However, these languages require more technically skilled personnel to control and maintain them.
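As an illustration of this more frugal approach, the following sketch routes XML messages by evaluating path expressions with Python's standard `xml.etree.ElementTree`. Note that ElementTree supports only a limited subset of XPath (for example, `[tag='text']` and `[@attr='value']` predicates); a full XPath or XQuery engine would be needed for richer expressions. The routing table and service names are assumptions.

```python
import xml.etree.ElementTree as ET

# Hypothetical routing table: (ElementTree path expression, destination).
# Order matters: the first matching expression wins.
ROUTES = [
    ("./header[region='EU']", "EuropeOrderService"),
    (".//item[@priority='high']", "ExpeditedOrderService"),
]
DEFAULT_ROUTE = "StandardOrderService"


def route(xml_message: str) -> str:
    """Return the destination of the first expression that matches."""
    root = ET.fromstring(xml_message)
    for expression, destination in ROUTES:
        if root.find(expression) is not None:
            return destination
    return DEFAULT_ROUTE


print(route("<order><header><region>EU</region></header></order>"))
# EuropeOrderService
print(route("<order><header><region>US</region></header>"
            "<items><item priority='high'/></items></order>"))
# ExpeditedOrderService
```

Changing a route here means editing an expression rather than service code, which is the trade-off the text describes: cheaper than a rules engine, but the expressions still need technically skilled maintainers.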

The usage of routing agents allows the automation of complex decisions and the quick adaptation to changing business requirements. However, the complexity and flexibility of incorporating intermediate routing logic into composition architectures is not without disadvantages:

• Dynamic modification of routing rules at runtime can introduce the risk of having previously untested logic set into production. If possible, routing rule-set changes should first be put through a conventional staging process.

• Dynamic routing paths can be elaborate and therefore difficult to manage and update, leading to a risk of unexpected failure conditions. A centralized routing rule management system can help alleviate the risk of introducing potential points of failure.

• Physically separated routing logic will naturally add performance overhead when compared to direct service-to-service communication, where the routing logic is embedded within the consumer program.

Additionally, security can be a concern when applying this pattern. You may want to control who will and will not process a message containing sensitive data. An inventory architecture with many built-in intermediate routing agents can provide native functionality that conflicts with some security requirements.

Routing functionality is a fundamental part of messaging frameworks and can therefore be associated with most messaging-related design patterns. The separation of metadata provided by Messaging Metadata (538) allows for the more advanced forms of routing described earlier in the Application section. Also, when implementing routing logic with Service Agent (543), Canonical Resources (237) can influence the platform and technologies used to build and host the agent programs.

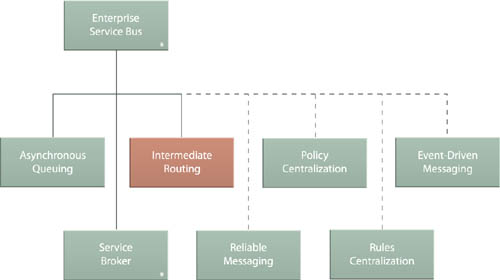

Because of their messaging-centric feature sets, ESB platforms are fully expected to carry out routing functionality in support of sophisticated service activity processes. Intermediate Routing is therefore one of the three core patterns that represent Enterprise Service Bus (704).

Figure 18.13 Intermediate Routing is one of the patterns that comprise Enterprise Service Bus (704).

Note

Depending on how it is applied, the runtime routing logic established by Intermediate Routing is comparable to Content-Based Router (Hohpe, Woolf), Dynamic Router (Hohpe, Woolf), and Message Router (Hohpe, Woolf). Several more message routing patterns are described in the Enterprise Integration Patterns catalog. Intermediate Routing highlights the most common routing options used for service composition architectures.

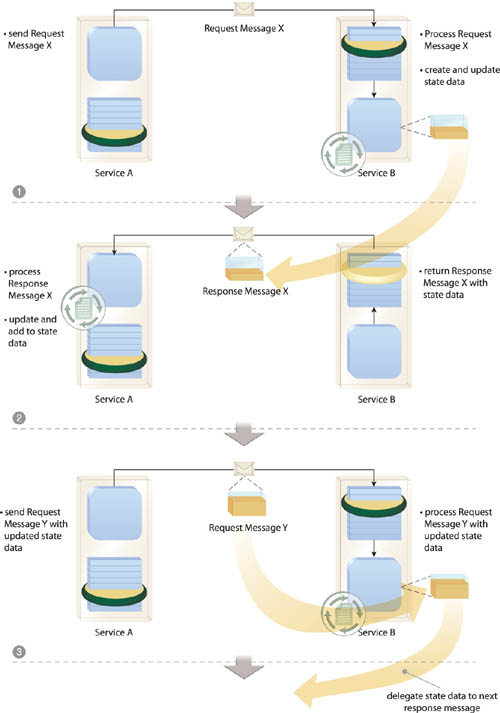

Services are sometimes required to be involved in runtime activities that span multiple message exchanges. In these cases, a service may need to retain state information until the overarching task is completed. This is especially common with services that act as composition controllers.

By default, services are often designed to keep this state data in memory so that it is easily accessible and essentially remains alive for as long as the service instance is active (Figure 18.15). However, this design approach can lead to serious scalability problems and further runs contrary to the Service Statelessness design principle.

Figure 18.15 This figure shows just a part of a larger conversational exchange between two services. Service A, acting as a service consumer, issues a Request Message X to Service B (1). Service B creates the necessary data structures to maintain the state associated with processing Request Message X and updates the data structures after processing is completed. Service A then issues another request to Service B (2), which Service B then processes, resulting in an update of the state data that also increases the quantity of state data Service B must retain (which, ultimately, results in scalability problems).

Instead of the service maintaining state data in memory, it moves the data to the message. During a conversational interaction, the service retrieves the latest state data from the next input message (Figure 18.16).

Figure 18.16 Service A, acting as a service consumer, issues Request Message X to Service B (1). Service B creates the necessary data structures to maintain the necessary state and updates the data structures after processing this message. Service B then adds the state data to Response Message X, which it then returns back to Service A (2). Service A processes the response and then generates Request Message Y containing updated state data, which is then received and processed by Service B (3).
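The exchange in Figure 18.16 can be approximated with the following sketch, assuming the state is serialized as JSON into a hypothetical `state` header. The service keeps nothing in memory between calls; the consumer simply echoes back the state header it last received. `quote_service` and the message layout are illustrative only.

```python
import json


def quote_service(request: dict) -> dict:
    """Hypothetical stateless service: all conversational state arrives in,
    and leaves in, the message's 'state' header. Nothing is retained in
    memory between requests."""
    state = json.loads(request["headers"].get("state", "{}"))
    items = state.get("items", []) + [request["body"]["item"]]
    return {
        "headers": {"state": json.dumps({"items": items})},
        "body": {"count": len(items)},
    }


# Consumer side: send the latest state header back with each request.
state_header = "{}"
for item in ("disk", "cpu", "ram"):
    response = quote_service(
        {"headers": {"state": state_header}, "body": {"item": item}}
    )
    state_header = response["headers"]["state"]

print(response["body"])          # {'count': 3}
print(json.loads(state_header))  # {'items': ['disk', 'cpu', 'ram']}
```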

There are two common approaches to applying this pattern, both of which affect how the service consumer relates to the state data:

• The consumer retains a copy of the latest state data in memory and only the service benefits from delegating the state data to the message. This approach is suitable when this pattern is implemented using WS-Addressing, due to the one-way conversational nature of Endpoint References (EPRs).

• Both the consumer and the service use messages to temporarily off-load state data. This two-way interaction with state data may be appropriate when both consumer and service are actual services within a larger composition. This technique can be achieved using custom message headers.

With either approach, this pattern requires that the messaging infrastructure be capable of distinguishing between message body content (or payload data) and supplementary metadata commonly stored in message headers. Whereas with WS-Addressing these message headers can be processed by many modern messaging products and platforms, the custom header approach requires extra custom development effort.

It is important to note that both techniques introduce the need for proprietary service logic. While WS-Addressing standardizes the EPR wrapper elements used to house state data, it does not standardize the expression of the state data itself. When using custom headers, the proprietary processing logic required to extract and process state data from messages will extend to both consumer and service.

Note

For examples of pre-defined SOAP headers that are suitable for sophisticated, two-way conversational message exchanges, view the WS-Context specification accessible via SOASpecs.com.

When following the two-way model with custom headers, messages that are lost due to runtime failure or exception conditions will further lose the state data, thereby placing the overarching task in jeopardy.

It is also important to consider the security implications of state data placed on the messaging layer. For services that handle sensitive or private data, the corresponding state information should be suitably encrypted and/or digitally signed, and it is not uncommon for the consumer to be denied access to protected state data.
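One way to address the signing half of this concern is sketched below with Python's standard `hmac` module. The service attaches an HMAC-SHA256 tag to the serialized state so it can reject state that was altered while held by the consumer or in transit. This sketch rests on simplifying assumptions: the hard-coded `SERVICE_KEY` is hypothetical, and the state is only base64-encoded, not encrypted, so the consumer can still read it. Hiding state from the consumer would additionally require encryption, which is not shown.

```python
import base64
import hashlib
import hmac
import json

SERVICE_KEY = b"replace-with-a-managed-secret"  # hypothetical; never hard-code in practice


def seal_state(state: dict) -> str:
    """Serialize the state and append an HMAC-SHA256 tag so the service can
    detect tampering when the state comes back in a later message."""
    payload = base64.urlsafe_b64encode(json.dumps(state, sort_keys=True).encode())
    tag = hmac.new(SERVICE_KEY, payload, hashlib.sha256).hexdigest()
    return payload.decode() + "." + tag


def open_state(token: str) -> dict:
    """Verify the tag in constant time, then decode the state."""
    payload, _, tag = token.rpartition(".")
    expected = hmac.new(SERVICE_KEY, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("state data was modified in transit")
    return json.loads(base64.urlsafe_b64decode(payload))


token = seal_state({"step": 2, "limit": 5000})
print(open_state(token))  # {'limit': 5000, 'step': 2}

# Swap in a different payload but keep the original tag.
forged_payload = seal_state({"step": 2, "limit": 99999}).split(".")[0]
tampered = forged_payload + "." + token.split(".")[1]
try:
    open_state(tampered)
except ValueError as err:
    print(err)  # state data was modified in transit
```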

Furthermore, because this pattern requires that state data be stored within messages that are passed back and forth with every request and response, it is important to consider the size of this information and the implications on bandwidth and runtime latency. As with other patterns that require new infrastructure extensions, establishing inventory-wide support for State Messaging will introduce cost and effort associated with the necessary infrastructure upgrades.

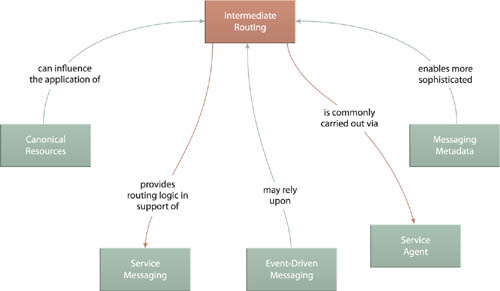

State Messaging is based on Service Messaging (533) and further utilizes Messaging Metadata (538) to represent state information within header blocks. This pattern can also be used in conjunction with Service Instance Routing (574) in such a way that only part of the state is maintained by the consumer, while the other part is managed by the service. This can lead to reduced message sizes and memory requirements.

Asynchronous Queuing (582) and Reliable Messaging (592) can be further utilized to provide increased robustness within the underlying infrastructure so that the state data remains protected against runtime failures and errors, even while in transit.

Also, as mentioned earlier, the use of state data with security requirements may demand that this pattern be combined with Data Confidentiality (641) and/or Data Origin Authentication (649).

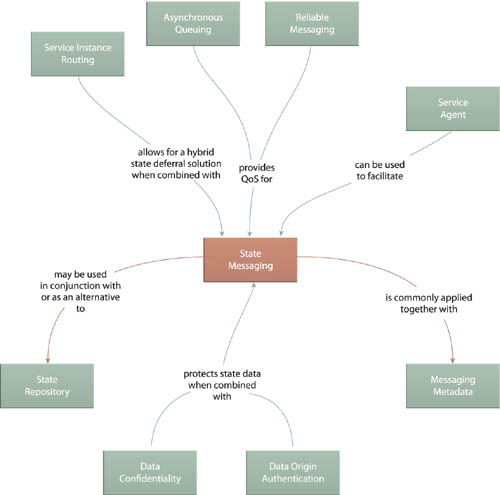

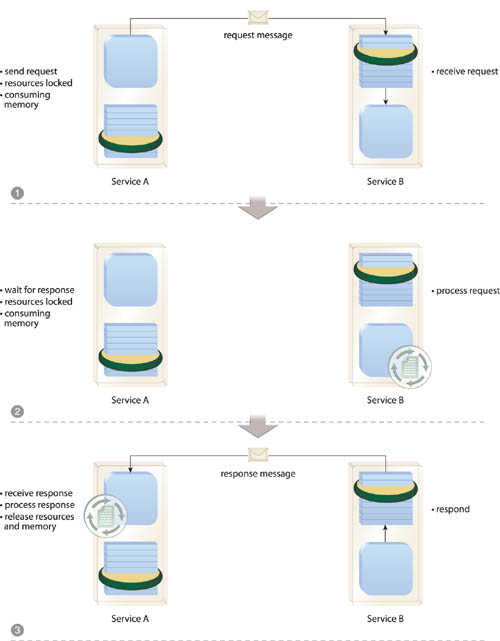

When service logic requires that a consumer request be responded to with multiple messages, a standard request-response messaging exchange is not appropriate. Similarly, when a given consumer request requires that the service perform prolonged processing before being able to respond, synchronous communication is not possible without jeopardizing scalability and reliability of the service and its surrounding architecture (Figure 18.18).

Figure 18.18 Service A, acting as the service consumer, issues a request message to Service B (1), and because it is part of a synchronous data exchange, Service A is required to wait (2) until Service B processes the request message and then transmits a response (3). During this waiting period, both service and consumer must be available and continue to use up memory.

Services are designed in such a manner that consumers provide them with a callback address at which they can be contacted by the service at some point after the service receives the initial consumer request message (Figure 18.19). Consumers are furthermore asked to supply correlation details that allow the service to send an identifier within future messages so that consumers can associate them with the original task.

Figure 18.19 Service A sends a message containing the callback address and correlation information to Service B (1). While Service B is processing the message, Service A is unblocked (2). At some later point in time, Service B sends a response containing the correlation information to the callback address of Service A (3). As long as Service B retains this callback address, it can continue to issue subsequent response messages to Service A.
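The following sketch simulates the flow in Figure 18.19 using threads and queues. It assumes a hypothetical `ENDPOINTS` registry that resolves a callback address to the consumer's inbound queue, along with hypothetical `reply-to` and `correlation-id` headers (in Web services, WS-Addressing plays a comparable role). The service returns immediately and later sends two responses; the consumer uses the correlation ID to associate them with the original task, and its queue holds the responses until it is ready to process them.

```python
import queue
import threading
import uuid

# Hypothetical stand-in for addressable endpoints: a callback address is a
# key that resolves to the consumer's inbound message queue.
ENDPOINTS = {}


def service_b(request: dict) -> None:
    """Accept a request and return at once; processing and the (multiple)
    responses happen later on a separate thread."""
    reply_to = request["headers"]["reply-to"]
    correlation_id = request["headers"]["correlation-id"]

    def work() -> None:
        for part in (1, 2):  # two responses to a single request
            ENDPOINTS[reply_to].put({
                "headers": {"correlation-id": correlation_id},
                "body": {"part": part, "of": 2},
            })

    threading.Thread(target=work, daemon=True).start()


# Consumer (Service A): publish an inbox, remember which task each
# correlation ID belongs to, then send the request without blocking.
inbox = queue.Queue()
ENDPOINTS["service-a/inbox"] = inbox
pending = {}
cid = str(uuid.uuid4())
pending[cid] = "order-123"

service_b({"headers": {"reply-to": "service-a/inbox", "correlation-id": cid},
           "body": {"order": "order-123"}})
# Service A is free to do other work here; responses wait in its queue.

received = []
for _ in range(2):
    msg = inbox.get(timeout=5)
    received.append((pending[msg["headers"]["correlation-id"]], msg["body"]))
print(received)
# [('order-123', {'part': 1, 'of': 2}), ('order-123', {'part': 2, 'of': 2})]
```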

Services designed to support this pattern must be able to preserve callback addresses and associated correlation data, especially when using this technique for longer running service-side processes that may include extended periods of inactivity (such as when waiting for human interaction to occur). While this may be built into the actual service architecture, the management of this information is often assumed by the surrounding inventory infrastructure, especially when implementing this pattern with established standards, such as WS-Addressing.

On the consumer side, this pattern can be supported through the use of event-driven agent programs that are positioned to listen for service response messages. This again may be provided by the infrastructure extensions themselves.

Furthermore, because a consumer will not be expecting an immediate response to its original request, it will commonly move on to other tasks while the service continues with its processing. The consumer architecture may therefore need to support the scenario where service responses are received and temporarily stored until the consumer is ready to process them. This is why this pattern is often implemented with the support of message queues.

Also, because the callback address does the job of redirecting service responses, the service needs to ensure that the callback address is a trusted destination for its messages.

The asynchronous nature of the messaging introduced by this pattern can reduce reliability due to the absence of the immediate feedback received with standard synchronous exchanges. Reliable Messaging (592) can be applied to alleviate this risk.

Establishing an architecture whereby all services within a given inventory support WS-Addressing can introduce significant costs associated with necessary infrastructure upgrades. However, not all services will likely require this pattern, which may allow for its application to be limited to select composition architectures.

Service Callback is based on the use of Service Messaging (533) and will normally require the application of Messaging Metadata (538) to represent the callback and correlation details separately from the message body content. In fact, Messaging Metadata (538) can be considered a critical requirement with respect to transferring the callback address and correlation information.

Furthermore, the need to coordinate asynchronous requests and possible multiple responses will typically involve Service Agent (543), especially when this pattern is applied as a result of infrastructure extensions.

While Service Callback may be considered an alternative to Asynchronous Queuing (582), it is common to use message queues together with issuing messages that contain callback addresses. This pattern can be supported through the use of Event-Driven Messaging (599) and Reliable Messaging (592).

There are cases where a consumer sends multiple messages to a service and the messages need to be processed within the same runtime context. Such services are intentionally designed to remain stateful so that they can carry out conversational or session-centric message exchanges.

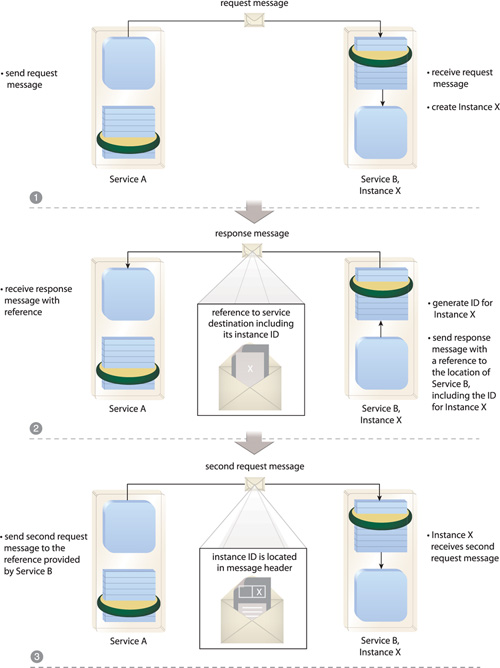

However, service contracts generally do not provide a standardized means of representing or targeting instances of services. Therefore, consumer and service designers need to resort to passing proprietary instance identifiers as part of the regular message data, which results in the need for proprietary instance processing logic (Figure 18.21).

Figure 18.21 Service A, acting as a service consumer, issues a request message to Service B. An instance of Service B is created (1) using proprietary internal service logic that labels the instance as “Instance X.” Service B returns an identifier for Instance X as part of the response message body back to Service A (2). Proprietary processing logic within Service A locates and extracts the embedded instance identifier and then embeds it into a second message that it sends to Instance X of Service B (3).

Note that in the scenario depicted in Figure 18.21, the lifecycle of the instances and the routing of the messages are managed by Service B. Throughout this exchange, Service A must remain aware of any instance identifiers generated by Service B. Given that every such conversation can be different, there is no uniformity, and instance details are always required to be processed by custom logic that ends up increasing the coupling between the service and any of its consumers.

The underlying infrastructure is extended to support the processing of message metadata that enables a service instance identifier to be placed into a reference to the overall destination of the service (Figure 18.22). This reference (also referred to as an endpoint reference) is managed by the messaging infrastructure so that messages issued by the consumer are automatically routed to the destination represented by the reference.

Figure 18.22 Service A, acting as a service consumer, issues a request message to Service B. Instance X of Service B is created (1), and a new message containing a reference to the destination of Service B (which includes the Instance X identifier) is returned back to Service A (2). Service A issues a second message that is routed to Instance X of Service B (3) without the need for proprietary logic. The instance identifier is located in the header of this message and is therefore kept separate from the message body.
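Below is a minimal sketch of the infrastructure-managed approach. It assumes a hypothetical `InstanceRoutingInfrastructure` that creates an instance on the first message and returns a reference containing an `instance-id` entry. The consumer never parses the reference; it echoes it back, and the infrastructure resolves the instance. This is loosely modeled on the idea of an endpoint reference and is not the WS-Addressing API. `ShoppingCart` is a deliberately stateful illustrative service.

```python
import itertools
from typing import Dict


class InstanceRoutingInfrastructure:
    """Hypothetical messaging layer that creates service instances and hands
    consumers an opaque reference. The instance ID travels with the
    reference and is resolved here, never interpreted by the consumer."""

    def __init__(self, service_factory):
        self._factory = service_factory
        self._instances: Dict[str, object] = {}
        self._ids = itertools.count(1)

    def send(self, reference: dict, body: dict) -> dict:
        instance_id = reference.get("instance-id")
        if instance_id is None:  # first message of a conversation: new instance
            instance_id = f"instance-{next(self._ids)}"
            self._instances[instance_id] = self._factory()
        result = self._instances[instance_id].handle(body)
        # Return a reference the consumer echoes back without inspecting it.
        return {"reference": {"address": reference["address"],
                              "instance-id": instance_id},
                "body": result}


class ShoppingCart:
    """Deliberately stateful service used only for illustration."""

    def __init__(self):
        self.items = []

    def handle(self, body: dict) -> dict:
        self.items.append(body["item"])
        return {"items": list(self.items)}


infra = InstanceRoutingInfrastructure(ShoppingCart)
r1 = infra.send({"address": "ServiceB"}, {"item": "book"})
r2 = infra.send(r1["reference"], {"item": "pen"})  # consumer just echoes it
print(r2["body"])  # {'items': ['book', 'pen']}
```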

As a result, the processing of instance IDs does not negatively affect consumer-to-service coupling because consumers are not required to contain proprietary service instance processing logic. Because the instance identifiers are part of a reference that is managed by the infrastructure, they are opaque to consumers. This means that consumers do not need to be aware of whether they are sending messages to a service or one of its instances because this is the responsibility of the routing logic within the messaging infrastructure.
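To make the routing mechanics concrete, the following Python sketch models an infrastructure that attaches an instance identifier to an endpoint reference and later routes on a header value. It is a minimal illustration under stated assumptions, not a WS-Addressing implementation: `Message`, `EndpointReference`, `ServiceB`, `MessagingInfrastructure`, the `InstanceId` header name, and the `urn:service-b` address are all hypothetical. Echoing reference parameters as headers loosely follows how WS-Addressing treats reference parameters.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Message:
    """A message whose header (metadata) is kept separate from its body."""
    to: str                                   # logical address of the service
    body: dict
    headers: dict = field(default_factory=dict)


@dataclass(frozen=True)
class EndpointReference:
    """Service address plus opaque reference parameters (hypothetical model
    loosely based on the WS-Addressing endpoint reference concept)."""
    address: str
    reference_parameters: tuple = ()          # (name, value) pairs

    def address_message(self, body: dict) -> Message:
        # Consumer-side infrastructure echoes the reference parameters as
        # headers; the consumer never inspects or parses them.
        return Message(to=self.address, body=body,
                       headers=dict(self.reference_parameters))


class ServiceB:
    """Hypothetical stateful service; each instance accumulates items."""

    def __init__(self):
        self.instances = {}
        self._ids = itertools.count(1)

    def create_instance(self) -> str:
        instance_id = f"instance-{next(self._ids)}"
        self.instances[instance_id] = []
        return instance_id

    def handle(self, instance_id: str, body: dict) -> dict:
        self.instances[instance_id].append(body["item"])
        return {"items": list(self.instances[instance_id])}


class MessagingInfrastructure:
    """Hypothetical router that reads instance identifiers from headers."""

    INSTANCE_HEADER = "InstanceId"            # assumed header name

    def __init__(self):
        self.services = {}

    def register(self, address: str, service: ServiceB) -> None:
        self.services[address] = service

    def send(self, message: Message):
        service = self.services[message.to]
        instance_id = message.headers.get(self.INSTANCE_HEADER)
        if instance_id is None:
            # No instance targeted yet: create one on the service's behalf.
            instance_id = service.create_instance()
        epr = EndpointReference(message.to,
                                ((self.INSTANCE_HEADER, instance_id),))
        return epr, service.handle(instance_id, message.body)


# Consumer side: it only holds the EPR and never touches the instance ID.
infra = MessagingInfrastructure()
infra.register("urn:service-b", ServiceB())
epr, reply = infra.send(Message(to="urn:service-b", body={"item": "a"}))
_, reply = infra.send(epr.address_message({"item": "b"}))
print(reply)  # {'items': ['a', 'b']}
```

The consumer code at the bottom never reads the instance identifier; it simply reuses the reference it was given, which is the decoupling this pattern aims for.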

The echoing of the service instance identifier in conversational messages needs to be incorporated into the consumer-side messaging framework and architecture. An infrastructure that supports service instance routing is therefore required. When building services as Web services, this pattern is typically applied using infrastructure extensions compliant with the WS-Addressing specification.

Applying this pattern across an entire service inventory requires that the necessary infrastructure extensions be established as part of the inventory architecture. This can lead to increased costs and governance effort.

Service Instance Routing can be used to create highly stateful services designed to carry out prolonged conversational message exchanges. While stateful interaction is often required, it is easy to apply this pattern to such an extent that it runs contrary to the Service Statelessness principle, thereby undermining long-term service scalability.

Furthermore, because service instance identifiers are valid only during the lifecycle of the instance, there is the danger that stale identifiers may be inadvertently used for invocation. Controls are required to ensure that identifiers are destroyed after the end of each service instance.
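One way to realize such controls is sketched below in Python. It assumes a hypothetical `InstanceRegistry` consulted by the routing infrastructure before a message is delivered. The time-based lease is an added assumption (the pattern itself only requires that identifiers be destroyed when the instance ends) intended to guard against instances that never end cleanly.

```python
import time


class StaleInstanceError(LookupError):
    """Raised when a message targets an instance that no longer exists."""


class InstanceRegistry:
    """Hypothetical registry that invalidates instance identifiers when an
    instance ends, or when its lease lapses without further activity."""

    def __init__(self, lease_seconds: float, clock=time.monotonic):
        self.lease_seconds = lease_seconds
        self.clock = clock                    # injectable for testing
        self._expires_at = {}                 # instance_id -> expiry time

    def activate(self, instance_id: str) -> None:
        self._expires_at[instance_id] = self.clock() + self.lease_seconds

    def end(self, instance_id: str) -> None:
        # Destroy the identifier as part of ending the instance.
        self._expires_at.pop(instance_id, None)

    def resolve(self, instance_id: str) -> str:
        expires_at = self._expires_at.get(instance_id)
        if expires_at is None or self.clock() >= expires_at:
            self._expires_at.pop(instance_id, None)  # purge lapsed entry
            raise StaleInstanceError(instance_id)
        # A valid use renews the lease (hypothetical policy).
        self._expires_at[instance_id] = self.clock() + self.lease_seconds
        return instance_id


now = [0.0]                                   # fake clock for the example
registry = InstanceRegistry(lease_seconds=30, clock=lambda: now[0])
registry.activate("instance-1")
registry.resolve("instance-1")                # accepted
registry.end("instance-1")
try:
    registry.resolve("instance-1")
except StaleInstanceError:
    print("rejected stale identifier")
```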

Service Instance Routing is naturally related to Messaging Metadata (538) because it applies to messages that generally require the use of message headers. The actual mechanics behind the implementation of the infrastructure extensions necessary for this pattern often rely on the use of event-driven intermediaries to carry out the message header processing, which is why this pattern is frequently associated with Service Agent (543).

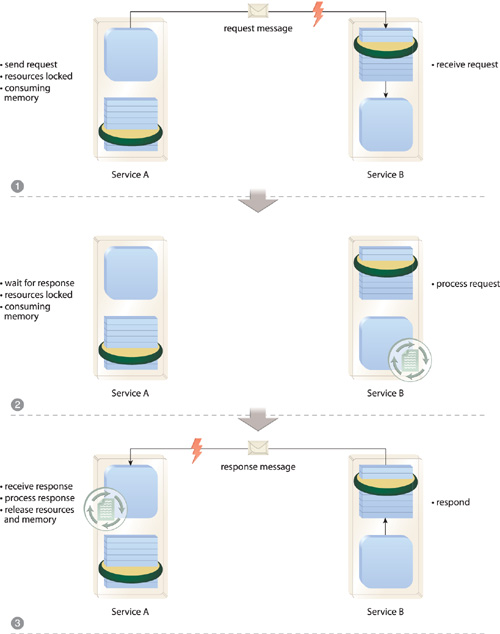

Synchronous communication requires an immediate response to each request and therefore forces two-way data exchange for every service interaction. When services need to carry out synchronous communication, both service and service consumer must be available and ready to complete the data exchange. This can introduce reliability issues when either the service cannot guarantee its availability to receive the request message or the service consumer cannot guarantee its availability to receive the response to its request.

Because of their sequential nature, synchronous message exchanges can further impose processing overhead, as the service consumer needs to wait until it receives a response to its original request before proceeding to its next action. As shown in Figure 18.24, prolonged responses can introduce latency by temporarily locking both consumer and service.

Figure 18.24 Service A, acting as the service consumer, issues a request message to Service B (1), and because it is part of a synchronous data exchange, Service A is required to wait (2) until Service B processes the request message and then transmits a response (3). During this waiting period, both service and consumer must be available and continue to consume memory. Because Asynchronous Queuing and Service Callback (566) both enable asynchronous messaging as an alternative to synchronous communication, this figure is identical to Figure 18.18, except for the red lightning bolt symbols that hint at the reliability problem also addressed by this pattern.

Forced synchronous communication can also overload services that need to support a high volume of concurrent access. Because such services are expected to process requests as soon as they are received, usage thresholds are more easily reached, exposing the service to latency across multiple consumers or to outright failure.

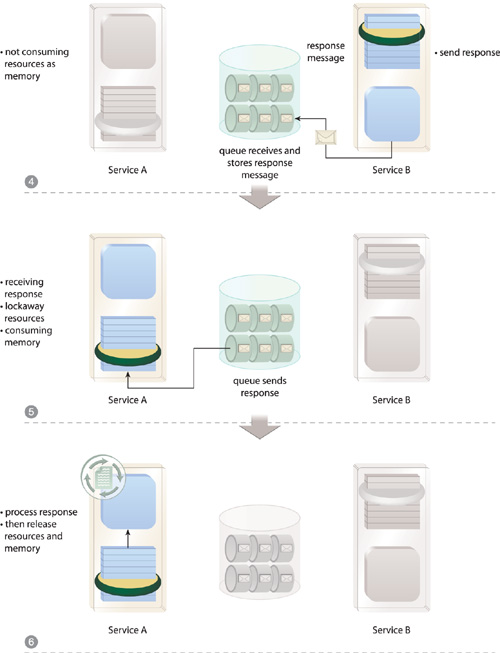

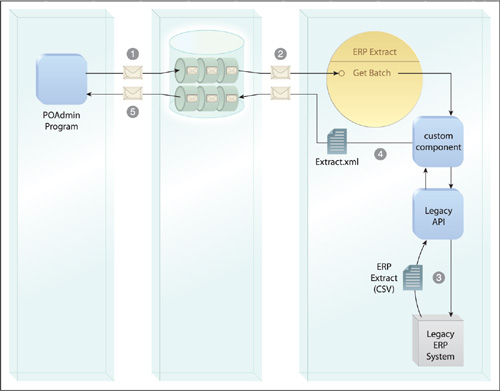

A queue is introduced as an intermediary buffer that receives request messages and then forwards them on behalf of the service consumers (Figure 18.25). If the target service is unavailable, the queue acts as temporary storage and retains the message. It then periodically attempts retransmission.

Similarly, if there is a response, it can be issued through the same queue that will forward it back to the service consumer when the consumer is available. While either service or consumer is processing message contents, the other can deactivate itself (or move on to other processing) in order to minimize memory consumption (Figure 18.26).

In modern ESB platforms, the use of a queue can be completely transparent, meaning that neither consumer nor service may know that a queue was involved in a data exchange. The queuing framework can be supported by intelligent service agents that detect when a queue is required and intercept message transmissions accordingly.

The queue can be configured to process messages in different ways and is typically set up to poll an unavailable target recipient periodically until it becomes available or until the message transmission is considered to have failed. Queues can further be used to leverage asynchronous message exchange by incorporating topics and message broadcasts to multiple consumers, as per Event-Driven Messaging (599).
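The push-model behavior described above (retain, retry periodically, eventually give up) can be sketched in Python as follows. `ForwardingQueue`, `max_attempts`, and the convention that a recipient's delivery callable returns `False` when it is unavailable are hypothetical simplifications; vendor queues expose their own configuration for retry intervals and failure handling.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class QueuedMessage:
    recipient: str
    body: str
    attempts: int = 0


class ForwardingQueue:
    """Hypothetical push-model queue: stores messages and retries delivery
    on each polling cycle until accepted or max_attempts is reached."""

    def __init__(self, max_attempts: int = 3):
        self.max_attempts = max_attempts
        self.pending = deque()       # messages retained for delivery
        self.failed = []             # messages considered to have failed
        self.endpoints = {}          # recipient -> callable(body) -> bool

    def register(self, recipient, deliver):
        # Some platforms require recipients to be registered in advance.
        self.endpoints[recipient] = deliver

    def enqueue(self, recipient, body):
        # The sender returns immediately; it does not wait for the recipient.
        self.pending.append(QueuedMessage(recipient, body))

    def poll_once(self):
        """One retry cycle (a real platform would trigger this on a timer)."""
        for _ in range(len(self.pending)):
            msg = self.pending.popleft()
            msg.attempts += 1
            if self.endpoints[msg.recipient](msg.body):
                continue                       # delivered: drop from store
            if msg.attempts >= self.max_attempts:
                self.failed.append(msg)        # give up on this message
            else:
                self.pending.append(msg)       # retain for the next cycle


received = []
available = {"up": False}


def service_b(body):
    if not available["up"]:
        return False                           # service currently unavailable
    received.append(body)
    return True


queue = ForwardingQueue(max_attempts=3)
queue.register("service-b", service_b)
queue.enqueue("service-b", "order-42")
queue.poll_once()                              # Service B down: retained
available["up"] = True
queue.poll_once()                              # Service B back: delivered
print(received)                                # ['order-42']
```

Because undelivered messages remain in `pending` and abandoned ones move to `failed`, both collections also act as a simple stand-in for the in-flight monitoring that queuing systems offer administrators.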

Many vendor queues are equipped with a back-up store so that messages in transit are not lost should a system failure occur. Especially when supporting more complex compositions, Asynchronous Queuing is commonly applied in conjunction with Reliable Messaging (592).

Note

In some platforms, services to which the queue is expected to forward messages need to be registered with the queue in advance. Other common characteristics of messaging queues are not covered by this pattern, such as a “pull”-based architecture wherein services are required to poll the queue to retrieve messages instead of the “push” model described so far. This pattern does not intend to describe the usage of messaging queues in general; it is focused solely on asynchronous messaging in response to the problem defined in the preceding Problem section.

The use of intermediary queues allows for creative asynchronous message exchange patterns that can optimize service interaction by eliminating the need for a response to each request. However, asynchronous message exchanges can also lead to more complex service activities that are difficult to design. It may be challenging to anticipate all of the possible runtime scenarios at design time, and therefore extra exception handling logic may be necessary.

An asynchronous data exchange that involves a queue can also be more difficult to control and monitor. It may not be possible to protect asynchronous activities with Atomic Service Transaction (623) because of the time-response constraints usually associated with transactions and their requirements to hold resources in suspension until either commit or rollback instructions are issued.

Furthermore, one advantage of synchronous messaging is that, because a response is always required, it acts as an immediate acknowledgement that the initial request message was successfully delivered and processed. With asynchronous message exchange patterns, a response is often not expected, and the message issuer is therefore not necessarily notified of successful or failed deliveries. However, most queuing systems allow in-flight message transmissions to be monitored and administered. Messages in the queue can be examined and managed during transit, which in larger systems can greatly simplify administrative control and the isolation of communication faults.

Asynchronous Queuing is a design pattern dedicated to accommodating message exchanges and therefore is naturally related to Service Messaging (533). Event-driven agents form a fundamental part of the queuing framework, which explains the relevance of Service Agent (543). Furthermore, Messaging Metadata (538) can play a role in how messages are processed, stored, or routed via these agents.

ESB platforms are fundamentally about decreasing the coupling between different parts of a service-oriented solution, which is why this pattern is a core part of Enterprise Service Bus (704), as shown in Figure 18.28.

Figure 18.28 Asynchronous Queuing is a design pattern that can be applied independently but also represents one of the core patterns that comprise Enterprise Service Bus (704).

An optional design pattern associated with the ESB is Reliable Messaging (592), which is a pattern commonly applied in conjunction with Asynchronous Queuing. Together, these two patterns provide key QoS extensions that make the use of ESB products attractive, especially in support of complex service compositions.

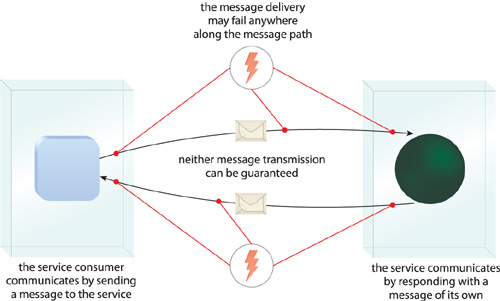

When services are designed to communicate via messages, there is a natural loss of quality-of-service due to the stateless nature of underlying messaging protocols, such as HTTP. Unlike with binary communication protocols where a persistent connection is maintained until the data transmission between a sender and receiver is completed, with message exchanges the runtime platform may not be able to provide feedback to the sender as to whether or not a message was successfully delivered (Figure 18.31).

Furthermore, the probability of failure rises as service compositions grow in size and complexity, because the service count (and the number of corresponding network links) grows with them. An infrastructure that cannot guarantee message delivery can therefore introduce measurable risk factors into service composition architectures (especially those that rely heavily on agnostic services).

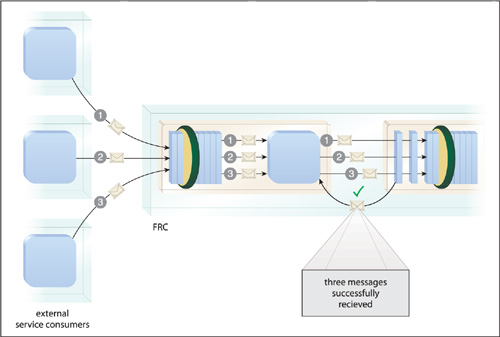

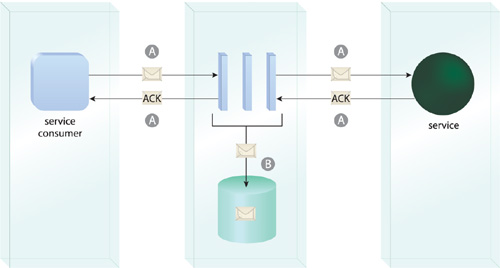

The inventory architecture is equipped with a reliability framework that tracks and temporarily persists message transmissions and issues positive and negative acknowledgements to communicate successful and failed transmissions to message senders.

A complete reliability framework is typically composed of infrastructure and intermediary processing logic capable of:

• guaranteeing message delivery during failure conditions via the use of a persistence store

• tracking messages at runtime

• issuing acknowledgements for individual messages or sequences of messages

The repository used for guaranteed delivery may provide the option to store messages in memory or on disk so as to act as a back-up mechanism for when message transmissions fail. This central storage also eases the management and administration of service-oriented solutions because it allows administrators to track the status of messages and trace the causes behind unresolved delivery problems.
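As an illustration of such a repository, the Python sketch below uses the standard `sqlite3` module, where the special path `":memory:"` gives an in-memory store and a file path gives an on-disk store. The `MessageStore` class, its table layout, and its status values are hypothetical; real reliability frameworks use their own persistence mechanisms.

```python
import sqlite3


class MessageStore:
    """Hypothetical guaranteed-delivery store. Use ':memory:' for an
    in-memory store or a file path for an on-disk store."""

    def __init__(self, path: str = ":memory:"):
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS messages ("
            " id TEXT PRIMARY KEY, body TEXT NOT NULL,"
            " status TEXT NOT NULL DEFAULT 'pending',"
            " last_error TEXT)"
        )
        self.db.commit()

    def save(self, message_id: str, body: str) -> None:
        # Persist before transmission so the message survives a failure.
        self.db.execute("INSERT INTO messages (id, body) VALUES (?, ?)",
                        (message_id, body))
        self.db.commit()

    def mark_delivered(self, message_id: str) -> None:
        self._set_status(message_id, "delivered", None)

    def mark_failed(self, message_id: str, error: str) -> None:
        self._set_status(message_id, "failed", error)

    def _set_status(self, message_id, status, error):
        self.db.execute(
            "UPDATE messages SET status = ?, last_error = ? WHERE id = ?",
            (status, error, message_id))
        self.db.commit()

    def undelivered(self):
        """Messages still awaiting delivery, e.g. to resend after a restart."""
        return self.db.execute(
            "SELECT id, body FROM messages"
            " WHERE status = 'pending' ORDER BY rowid").fetchall()

    def problems(self):
        """Administrative view: failed messages and their recorded causes."""
        return self.db.execute(
            "SELECT id, last_error FROM messages"
            " WHERE status = 'failed'").fetchall()


store = MessageStore()              # or MessageStore("reliability.db")
store.save("m1", "<order/>")
store.save("m2", "<invoice/>")
store.mark_delivered("m1")
store.mark_failed("m2", "timeout")
print(store.undelivered())          # []
print(store.problems())             # [('m2', 'timeout')]
```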

Reliability agents further manage the confirmation of successful and failed message deliveries via positive (ACK) and negative (NACK) acknowledgement notifications. Messages may be transmitted and acknowledged individually, or they may be bundled into message sequences that are acknowledged in groups (and may also have sequence-related delivery rules).
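The acknowledgement logic can be sketched in Python as follows. Messages in a sequence are numbered, the receiver reports what it has received as ranges (similar in spirit to the acknowledgement ranges and negative acknowledgements defined by WS-ReliableMessaging), and the sender retains every message until it is positively acknowledged. `SequenceSender`, `ack_ranges`, and `nacks` are hypothetical names, and the sketch omits transport, timeouts, and sequence-related delivery rules.

```python
def ack_ranges(received: set) -> list:
    """Collapse received message numbers into (lower, upper) ranges."""
    ranges = []
    for n in sorted(received):
        if ranges and n == ranges[-1][1] + 1:
            ranges[-1] = (ranges[-1][0], n)    # extend the current range
        else:
            ranges.append((n, n))              # start a new range
    return ranges


def nacks(received: set) -> list:
    """Missing message numbers below the highest one received."""
    if not received:
        return []
    return [n for n in range(1, max(received) + 1) if n not in received]


class SequenceSender:
    """Hypothetical sender that numbers messages and retains them until
    they are positively acknowledged."""

    def __init__(self):
        self.next_number = 1
        self.unacked = {}                      # message number -> body

    def send(self, body: str) -> int:
        number = self.next_number
        self.next_number += 1
        self.unacked[number] = body            # retain until ACK
        return number

    def on_ack(self, ranges):
        # Positive acknowledgement: release every message in the ranges.
        for lower, upper in ranges:
            for n in range(lower, upper + 1):
                self.unacked.pop(n, None)

    def on_nack(self, numbers):
        # Negative acknowledgement: return the messages to retransmit.
        return [(n, self.unacked[n]) for n in numbers if n in self.unacked]


sender = SequenceSender()
for body in ["a", "b", "c", "d", "e"]:
    sender.send(body)
received = {1, 2, 4, 5}                        # message 3 lost in transit
sender.on_ack(ack_ranges(received))            # ranges [(1, 2), (4, 5)]
print(sender.on_nack(nacks(received)))         # [(3, 'c')]
```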

Figure 18.32 When building services as Web services, this pattern is commonly applied by implementing a combination of the WS-ReliableMessaging standard (A) and guaranteed delivery extensions, such as a persistent repository (B). This figure highlights the typical moving parts of the resulting reliability framework.

Reliable Messaging introduces a layer of processing that includes runtime message capture, persistence, tracking, and acknowledgement notification issuance. All of these features add moving parts to an inventory architecture, impose additional performance and delivery-guarantee requirements, and increase the complexity of service-oriented solutions in proportion to the size of their service compositions.

Furthermore, due to the temporary storage of messages, the incorporation of positive and negative acknowledgement notifications, and the use of various delivery rules (including those based on group message delivery via sequences), it may not be possible to wrap services using reliability features into atomic transactions, as per Atomic Service Transaction (623).

Applying this pattern directly affects messaging-related patterns in that it changes how messages are transmitted and delivered. The quality of Service Messaging (533) is improved, and Messaging Metadata (538) is commonly utilized to manage and track messages via reliability agents that can be considered specialized implementations of Service Agent (543). The application of Canonical Resources (237) can help ensure that an inventory architecture standardizes on a single reliability framework.

Because of the importance of guaranteeing message delivery and improving the overall quality of base messaging frameworks, the runtime functionality established by applying Reliable Messaging is typically associated with ESB platforms (Figure 18.34).

Figure 18.34 Reliable Messaging can be applied by itself, but is also a pattern commonly realized via Enterprise Service Bus (704).

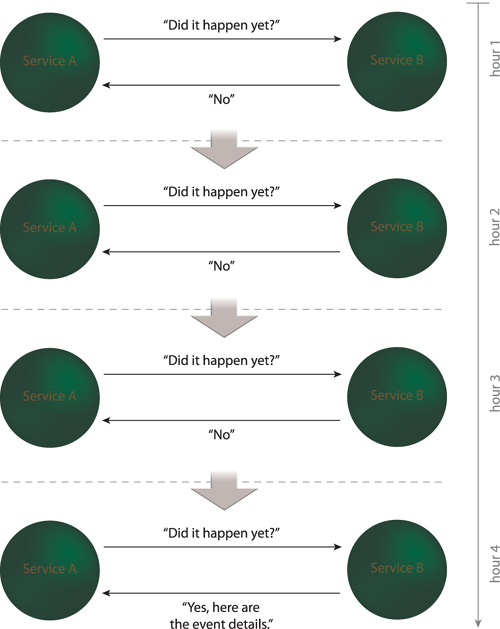

In typical messaging environments, service consumers can choose between one-way and request-response message exchange patterns (MEPs), but both need to originate from the consumer. Events may occur within the service provider’s functional boundary that are of interest to the consumer. Following traditional MEPs, the consumer would need to continually poll the service in order to find out whether such an event had occurred (and to then retrieve the corresponding event details).

This model is inefficient because it leads to numerous unnecessary service invocations and data exchanges (Figure 18.36). It can further introduce delays as to when the consumer receives the event information because it may only be able to check for the event at predetermined polling intervals.

Figure 18.36 Service A (acting as a service consumer) polls Service B on an hourly basis for information about an event that Service A is interested in. Each polling cycle involves a synchronous, request-response message exchange. After the fourth hour, Service A learns that the event has occurred and receives the event information.

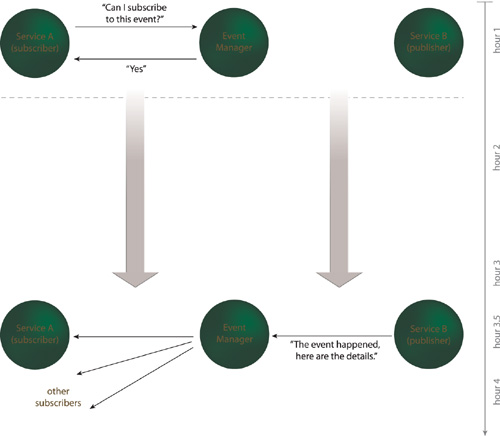

An event management program is introduced, allowing the service consumer to set itself up as a subscriber to events associated with a service that assumes the role of publisher. There may be different types of events that the service makes available, and consumers can choose which they would like to be subscribed to.

When such an event occurs, the service (acting as publisher) automatically sends the event details to the event management program, which then broadcasts an event notification to all of the consumers registered as subscribers of the event (Figure 18.37).

Figure 18.37 Service A requests that it be set up as a subscriber to the event it is interested in by interacting with an event manager. Once the event occurs, Service B forwards the details to the event manager which, in turn, notifies Service A (and all other subscribers) via a one-way, asynchronous data transfer. Note that in this case, Service A also receives the event information earlier because the event details can be transmitted as soon as they’re available.
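A minimal in-process sketch of the event manager's role, in Python, appears below. `EventManager`, the event type string `order.shipped`, and the optional `accept` predicate (a simple stand-in for the event filtering that such frameworks commonly provide) are hypothetical; a real event manager would deliver notifications over the network rather than by direct function call.

```python
from collections import defaultdict
from typing import Callable, Optional

Handler = Callable[[dict], None]
Filter = Callable[[dict], bool]


class EventManager:
    """Hypothetical event manager: subscribers register per event type,
    publishers forward event details, and the manager broadcasts one-way
    notifications to every matching subscriber."""

    def __init__(self):
        # event type -> list of (handler, optional filter)
        self._subscribers = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler,
                  accept: Optional[Filter] = None) -> None:
        self._subscribers[event_type].append((handler, accept))

    def publish(self, event_type: str, details: dict) -> int:
        """Called by the publishing service when the event occurs.
        Returns the number of notifications sent."""
        sent = 0
        for handler, accept in self._subscribers.get(event_type, []):
            if accept is None or accept(details):
                handler(details)               # one-way: no reply expected
                sent += 1
        return sent


manager = EventManager()
inbox_a, inbox_c = [], []
manager.subscribe("order.shipped", inbox_a.append)
manager.subscribe("order.shipped", inbox_c.append,
                  accept=lambda e: e["region"] == "EU")   # filtered
# Service B (the publisher) reports the event as soon as it occurs:
manager.publish("order.shipped", {"order": 42, "region": "US"})
print(inbox_a, inbox_c)   # [{'order': 42, 'region': 'US'}] []
```

Handlers are called without waiting for any reply, mirroring the one-way MEP; in this simplified form, a subscriber that is unavailable at publish time would simply miss the notification.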

Note

The solution proposed by this pattern is closely related to the event-driven architecture (EDA) model. The upcoming ESB Architecture for SOA title that will be released as part of this book series will explore how event-driven messaging is supported via Enterprise Service Bus (704) and will further provide more detailed, ESB-specific design patterns.

An event-driven messaging framework is implemented as an extension to the service inventory. Runtime platforms, messaging middleware, and ESB products commonly provide the necessary infrastructure for message processing and tracing capabilities, along with service agents that supply complex event processing, filtering, and correlation.

Event-Driven Messaging is based on asynchronous message exchanges that can occur sporadically, depending on when the service-side events actually occur. It therefore may not be possible to wrap these exchanges within controlled runtime transactions.

Furthermore, because notification broadcasts cannot be predicted, the consumer must always be available to receive the notification message transmissions. Also, messages are typically issued via the one-way MEP, which does not require an acknowledgement response from the consumer.

Both of these drawbacks can raise serious reliability issues that can be addressed through the application of Asynchronous Queuing (582) and Reliable Messaging (592).

The unique messaging model established by Event-Driven Messaging extends the base model provided by Service Messaging (533) and is itself often extended via other specialized messaging patterns, such as Asynchronous Queuing (582) and Reliable Messaging (592).

The publish-and-subscribe model that underlies Event-Driven Messaging provides advanced, asynchronous messaging functionality that can build upon the routing and messaging logic provided natively by ESB platforms (Figure 18.39).

Figure 18.39 Event-Driven Messaging is considered an optional extension to Enterprise Service Bus (704).