Chapter 11

Faster Iterations

According to Voltaire, “The best is the enemy of the good.” In Agile circles, iteration means one thing: It’s the timebox in which product increments are made. For Scrum, the iteration is a Sprint. For game developers, the term iteration means something more. It refers to the practice of creating an initial version of something (artwork, code, or a design), examining it, and then revising it until it’s sufficiently improved.

Unfortunately, iterating isn’t free. It takes time to revisit a bit of art, code, design, audio, or other game element. The challenge facing all teams is to find ways to reduce the cost of iteration. A team that reduces the cost of iteration benefits in two ways: They iterate over each gameplay element more times, and they complete each iteration sooner. These benefits result in an increase in their velocity.

Scrum focuses game developers on improving iteration time everywhere. The benefits of doing this are reinforced daily among cross-discipline teams and over the course of Sprints through the measurement of velocity. As described in Chapter 9, “Agile Release Planning,” velocity is measured as the average amount of scope that meets a Definition of Done and is accomplished every Sprint. Product Backlog Items (PBIs) not only require coding and asset creation but also debugging, tuning, and a degree of polishing to be considered done. These additional requirements drive the need for more iterations, and the longer the iteration time, the slower the velocity. Faster iteration improves velocity.

This chapter examines where the overhead of iterating code, assets, and tuning comes from and ways that a team can reduce this overhead and greatly increase its velocity on a project.

A Sea Story

One of the best things about developing games is that mistakes don’t kill people or cost tens of thousands of dollars. Where mistakes do carry those stakes, the pace of development slows dramatically.

My last job before I joined the game development industry was developing autonomous underwater vehicles. These vehicles were meant to perform dangerous operations such as searching for underwater mines. When launched, these multimillion-dollar vehicles were counted on to conduct their mission and return to us.

No matter how much we tested the software and hardware before a mission, some problems always surfaced at sea (no pun intended). Often these problems resulted in the vehicle’s failing to return at the appointed time or location. When this happens, you realize how big the ocean really is.

As a result, changes to the vehicle were very carefully tested over the course of weeks. Even the smallest mishap would “scrub” a day at sea. This long iteration cycle slowed development progress to a crawl.

The Solutions in This Chapter

This chapter explores ways of balancing the drive for quality with its cost by

Measuring and reducing the cost of iterations

Reducing the cost of defects by finding them quickly, or simply not creating them in the first place

Increasing the speed and effectiveness of testing through automation and more strategic quality assurance (QA)

Where Does Iteration Overhead Come From?

Iteration overhead comes from many places:

Compile and link times: How long does it take to make a code change and see the change in the game?

Tuning changes: How long does it take to change a tuning parameter, such as bullet damage?

Asset changes: How many steps does it take to change an animation and see it in the game?

Approvals: What are the delays in receiving art direction approval for a texture change?

Integrating change from other teams: How long do changes (new features and bug fixes) from other teams take to reach your team?

Defects: How much time is lost to crashes or just trying to create a stable build?

These delays between iterations last anywhere from seconds to weeks. Generally, the longer the time between iterations, the more time is wasted either waiting or finding lower-priority work to do before another iteration is attempted.

Measuring and Displaying Iteration Time

The complexity of a game, asset database, build environment, and pipeline grows over time. While this happens, iteration times tend to grow—there is more code to execute, and more assets in the database to sort through. Iterations are rapid at the start of a project but grow unacceptable over time. Before you know it, half your day is spent waiting for compiles, exports, baking,1 or game loads.

1. Baking refers to the process of translating exported assets into a particular platform’s native format.

The key to reducing iteration overhead is to continually measure iteration times, display them, and address whatever is causing them to grow.

Measuring Iteration Times

Iteration times should be measured frequently. To ensure that such measurements are performed frequently, the measurement process should be automated. A simple automated tool2 should do this on a build server with a test asset, for example. A nice feature to add to this tool is to have it alert someone when this time spikes.

2. If not automated, it is easily skipped or forgotten.
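As a minimal sketch of such a tool, the following times one iteration step and flags a spike against recent history. The names `time_command` and `is_spike`, the 1.5 threshold, and the sample numbers are all assumptions made for illustration; a real tool would run your own bake or build command on the build server and send the alert through whatever channel the team already watches.

```python
import statistics
import subprocess
import time


def time_command(command):
    """Run one iteration step (for example, baking a test asset) and
    return its wall-clock duration in seconds."""
    start = time.perf_counter()
    subprocess.run(command, check=True)
    return time.perf_counter() - start


def is_spike(history, latest, factor=1.5, min_samples=5):
    """Return True when `latest` exceeds `factor` times the median of the
    recent measurements in `history` (the threshold is an assumption)."""
    if len(history) < min_samples:
        return False  # too little data to judge a spike
    return latest > factor * statistics.median(history)


# Hypothetical bake times in seconds for the last five runs.
recent = [1800, 1820, 1790, 1810, 1805]
if is_spike(recent, 3600):
    print("Bake time spiked: alert someone")
```

Comparing against the median rather than the previous run keeps one noisy measurement from either raising or hiding an alert.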

Note

One time a bug nearly doubled the bake time for a game, yet no one reported it during any of the Daily Scrums for a week. It seemed that people became immune to long iterations, which was more worrying than the bug itself.

Displaying Iteration Times

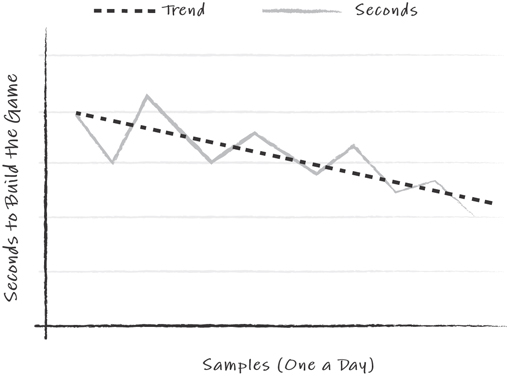

An iteration time trend chart displays how long an individual iteration step, such as a build, is taking and shows how that time is changing. Like the Sprint burndown, this chart displays a metric and its recent trend. Figure 11.1 shows an example iteration trend chart.

Figure 11.1 Recent iteration times and trend line

Showing a longer-term trend of iteration time is important because it is often hidden in daily noise. How frequently the chart is updated depends on how often a particular iteration time is measured and the need for updating it. Significant iteration metrics (based on how many people execute the iteration and how often) should be calculated daily, and the charts should be updated weekly.
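One simple way to pull a trend out of noisy daily measurements is a least-squares line. The sketch below is only one option (a moving average would also work), and the function name `trend_line` and the sample data are made up for illustration.

```python
def trend_line(times):
    """Fit time = slope * day + intercept to one measurement per day,
    using ordinary least squares. Returns (slope, intercept)."""
    n = len(times)
    if n < 2:
        raise ValueError("need at least two measurements")
    mean_x = (n - 1) / 2          # days are numbered 0, 1, ..., n - 1
    mean_y = sum(times) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(times))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical daily bake times in minutes: noisy, but drifting downward.
slope, intercept = trend_line([30, 28, 31, 27, 29, 26, 27])
print("improving" if slope < 0 else "getting worse")
```

A negative slope means the iteration time is trending down even when individual days bounce around.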

Note

A tool that automatically measures iteration time should also alert someone immediately when that time spikes.

Experience: PlayStation Asset Baking

When iterating on art assets, most of the iteration time typically comes from baking or exporting an asset to a target platform’s native format. On a PlayStation project I worked on, every asset change required a 30-minute bake. Because the actual game executable was used to perform the baking, this time continued to creep up as features were added. It reached the point where everyone working with the PlayStation spent half the day waiting for this process!

The team began by plotting asset iteration time on a daily basis. Over time, it dedicated a portion of its Sprint Backlog toward optimizing the baking tools and process. During a release, the team saw the trend of bake times slowly declining (33 percent in three months).

Without this regular measurement and display, it would have been easy for the team to lose track of the overall trend. Without keeping an eye on the metric, the bake times would have crept up gradually. Equally important was the value of introducing a large number of very small optimizations and seeing the effect over time. Often the “one big fix” simply doesn’t exist, and nothing else is done because a significant benefit isn’t immediately visible. The burndown demonstrates the value of “a lot of small fixes.”

Personal and Build Iteration

It’s useful to consider three types of iteration: personal iterations for each developer iterating at his own development station, the build iterations when code and asset changes are shared across the entire project, and deployment iteration time for live games. All require constant monitoring and improvement. We’ll look at deployment iteration time in Chapter 22, “Live Game Development.”

Improving personal iteration is mainly a matter of improving tools and skills. Build iteration requires not only tool improvements but also attention to the practices shared across teams to reduce the overhead inherent in sharing changes with many developers.

Personal Iteration

Personal iteration time includes the time it takes to do the following:

Export and bake assets for the target platform a developer is iterating on

Change a design parameter (for example, bullet damage level) and try it in the game

Change a line of code and test it in the game

These are the smallest iteration times, but because they happen most frequently, they represent the greatest iteration overhead. Removing even five minutes from an export process used a dozen times a day improves velocity by more than 20 percent!3

3. This is based on four to five hours of useful work done per day.
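The arithmetic behind that estimate can be written out as below. It assumes that every minute saved turns into useful work, which is an optimistic simplification, and the function name `velocity_gain` is made up for this sketch.

```python
def velocity_gain(minutes_saved_per_use, uses_per_day, useful_hours_per_day):
    """Fraction of extra useful time gained per day, assuming all of the
    saved time is converted into useful work."""
    saved_hours = minutes_saved_per_use * uses_per_day / 60
    return saved_hours / useful_hours_per_day


# Five minutes saved, a dozen times a day, is one hour saved per day.
print(velocity_gain(5, 12, 5))  # 0.2  (20 percent of a five-hour useful day)
print(velocity_gain(5, 12, 4))  # 0.25 (25 percent of a four-hour useful day)
```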

Some common improvements can speed up personal iteration times:

Upgrade development machines: More memory, faster CPUs, and more cores increase the speed of tools. This is usually a short-term solution because it hides the real causes of delay.

Distributed build tools: Distributed code-building packages reduce code iteration time by recompiling large amounts of code across many PCs. Tools also exist for distributed asset baking.

Parameter editing built into the game: Many developers build a simple developer user interface into the game for altering parameters that need to be iterated.

Asset hot loading: Changed assets are loaded directly into a running game without requiring a restart or level reload.
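To make the last two ideas concrete, here is a minimal sketch of hot loading a tuning file by polling its modification time. The class name `HotReloader`, the JSON tuning file, and the `bullet_damage` key are assumptions for illustration; a real engine would more likely hook into its own asset system or an operating system file-watching service.

```python
import json
import os


def load_json(path):
    """Example loader: parse a JSON tuning file into a dictionary."""
    with open(path) as f:
        return json.load(f)


class HotReloader:
    """Reload a file through `loader` whenever its modification time changes."""

    def __init__(self, path, loader=load_json):
        self.path = path
        self.loader = loader
        self.mtime = None   # modification time of the version currently loaded
        self.asset = None   # most recently loaded contents

    def poll(self):
        """Call periodically from the game loop. Returns True if the file
        was (re)loaded on this call, False if nothing changed."""
        mtime = os.stat(self.path).st_mtime_ns
        if mtime == self.mtime:
            return False
        self.asset = self.loader(self.path)
        self.mtime = mtime
        return True
```

A designer could then edit a hypothetical `tuning.json` while the game runs, and the next `poll()` would pick up the new `bullet_damage` value without a restart or level reload.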

Note

The ideal iteration is instantaneous! Retrofitting this ideal onto an existing game engine is far more difficult than building it in from the beginning. For a new engine in development, I consider zero iteration time and hot loading to be necessary parts of the engine that must be maintained from the start of development.

Build Iteration

Build iteration is the process that spreads changes from one developer to all the other developers on the team. Sometimes this cycle is weeks long, but because members of the team do other things while waiting for a new build, it is not given as much attention as personal iteration times. However, the larger the team, the more impact the build iteration has on the team’s effectiveness. It often results in a near disaster when there is a rush to get a working build out for a Sprint or release. Bottlenecks and conflicts inevitably occur when everyone is trying to commit changes at the same time. The solution is to reduce this overhead so builds are safely iterated more frequently.

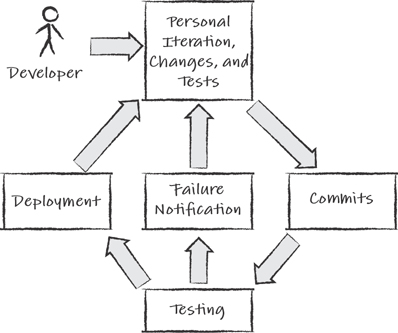

Figure 11.2 shows the build iteration cycle discussed.

Figure 11.2 A build iteration cycle

Following personal iteration, a developer commits changes to a repository or revision control system. This is followed by a battery of tests. If these tests find a problem, the developer is notified and asked to fix the problem. Otherwise, if the tests pass, the build is shared with the team.4

4. If your project is not doing this, stop reading and implement it now!

Commits

Commits are changes made to a project repository for the rest of the team to access. For example, an animator commits a new set of animations for a character to the repository, and subsequent builds show characters using those new animations.

There are two main concerns regarding commits:

The commits should be safe and not break the build.

The build should be kept in a working condition so that any failure is more likely to be tied to the last commit and quickly fixed.

The developer must first synchronize with the latest build and test his changes with it. This is done to avoid any conflicts that arise with other recent commits. If the latest build is broken, it must be fixed before any commit is made.

When builds are chronically broken, they slow the frequency of commits, which means that larger commits are made. Because larger commits are more likely to break the build, they create a vicious cycle that dramatically impacts a team’s velocity.

Note

Chapter 12, “Agile Technology,” discusses continuous integration strategies to minimize the size of code commits.

Testing

After changes are committed, there should be a flurry of more extensive tests made to ensure that those commits haven’t broken anything in the build. There are two competing factors to consider. First, we want to ensure that the build is solidly tested before it is released to the team. Second, a full suite of tests often takes the better portion of a day to run, which is too long. We need to balance testing needs with the need to iterate quickly on build changes.

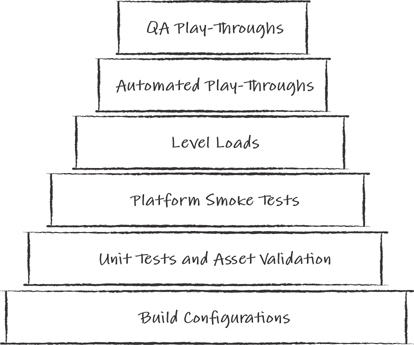

Test Strategies

A multifaceted approach to testing is best. A combination of automated and QA-run testing catches a broad range of defects.

Figure 11.3 shows a pyramid of tests run in order from bottom to top. The tests at the bottom of the pyramid run quickly and catch the more common defects. As each test passes, the next higher test is run until we get to the top where QA approves the build by playing it.

Figure 11.3 A testing pyramid

The tests are as follows:

Build configurations: This testing simply creates a build (executable and assets) for each platform. This discovers whether the code compiles on all your target platforms with multiple build configurations (for example, debug, beta, and final). This could cover dozens of platforms for a mobile game.

Unit tests and asset validation: This includes some of the unit tests (if they exist) and any asset validation tests. Asset validation tests individual assets before they are baked and/or loaded in the game. These are examples of asset validation tools:

Naming convention checks

Construction checks, such as testing for degenerate triangles

Platform resource budget checks (for example, polygon count or memory size)

Note

Unit testing is described in more detail in Chapter 12.

The list of validation tests should be built up as problems are discovered.

Platform smoke tests: These tests ensure that the build loads and starts running on all the platforms without any crashes.

Level loads: One or more of the levels are loaded to ensure that they run on all the platforms and stay within their resource budgets. Usually only the levels affected by change are loaded, but all of them are tested overnight.

Automated play-throughs: A game that “plays itself” through scripting or a replay mechanism benefits testing. In fact, implementing this type of feature into the game from the start is worth the investment. If conditions at the end of the play-through do not meet expectations (such as all the AI cars in a racing game crossing the finish line in a preset range of time), an error is flagged.

QA play-throughs: If the build passes all previous tests, then QA plays through portions of the game. QA is not only looking for problems that were missed in the previous tests but also looking for problems that tests could not catch such as unlit portions of the geometry or AI characters that are behaving strangely.
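The automated part of the pyramid boils down to running the stages from the bottom up and stopping at the first failure, so the slow tests never run on a build that the fast tests already rejected. A minimal sketch, in which the function name `run_pyramid` and the stage names are assumptions and each check stands in for a real test job:

```python
def run_pyramid(stages):
    """Run (name, check) pairs in order, bottom of the pyramid first.

    Each check is a callable returning True on success. Stops at the first
    failure and returns (passed_names, failed_name); failed_name is None
    when every stage passed.
    """
    passed = []
    for name, check in stages:
        if not check():
            return passed, name
        passed.append(name)
    return passed, None


# Placeholder checks; real ones would launch builds and test runs.
stages = [
    ("build_configs", lambda: True),
    ("unit_and_asset", lambda: True),
    ("smoke", lambda: False),        # pretend the smoke test crashed
    ("level_loads", lambda: True),   # never reached in this run
]
passed, failed = run_pyramid(stages)
print(passed, failed)  # ['build_configs', 'unit_and_asset'] smoke
```

The QA play-through at the top of the pyramid stays manual, so it is not part of this automated list.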

Robotic Phone Interface Testing

One of the most challenging aspects of mobile game QA is the overhead of testing the interface on all the various phones. It’s a slow manual process, but we’re starting to see companies introduce “robotic hands” to automate even this.

Test Frequency

As development progresses, the time required for running all of these tests grows to the point where keeping up with every commit is impossible. At this point, the scope of build approval needs to be tiered. The following list shows four tiers of build tests that apply increasing levels of testing:

Continuous build tests: These builds have passed the unit and asset tests for the committed modules or assets. These tests take minutes.

Hourly build tests: These builds have passed every test up to the level load test.

Semi-daily build tests: Two or three times a day, QA selects the latest hourly build and plays through it for 30 minutes.

Daily build tests: These are builds that have been completely rebuilt (code and asset baking) and for which every possible automated test has been run. These take hours to run and are usually done overnight.

As each build is approved, it is flagged (or renamed, and so on) to reflect the testing tier it passed. This lets the team know how extensively the build was tested and to what degree they should trust it.
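One way to derive those flags is to record which checks a build has passed and compare them against what each tier requires. The mapping below is an assumed reading of the four tiers, and the check names and the function `tiers_passed` are hypothetical; adjust both to your own pipeline.

```python
# Checks each tier requires (an assumed mapping of the tiers described above).
HOURLY = {"build_configs", "unit_and_asset", "smoke", "level_loads"}
TIER_REQUIREMENTS = {
    "continuous": {"unit_and_asset"},
    "hourly": HOURLY,
    "semi-daily": HOURLY | {"qa_playthrough"},
    "daily": HOURLY | {"automated_playthrough", "full_rebuild"},
}


def tiers_passed(passed_checks):
    """Return the names of every tier whose required checks have all passed."""
    passed = set(passed_checks)
    return [tier for tier, needed in TIER_REQUIREMENTS.items() if needed <= passed]


print(tiers_passed({"unit_and_asset"}))  # ['continuous']
print(tiers_passed(HOURLY))              # ['continuous', 'hourly']
```

Returning a list rather than a single label reflects that the semi-daily (QA-played) and daily (fully rebuilt) tiers test different things, so one does not strictly contain the other.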

Note

Sprint Review builds should pass all the daily build tests!

Failure Notification

When a commit is made that breaks the build, two things must happen:

The person(s) who made the last commit must be notified immediately in a way they can’t ignore. This can take the form of a dialog box that pops up on top of all other windows.

The rest of the team should be notified that the build is broken and that they shouldn’t “get the latest” code and assets until the problem is fixed. This notification can use a less intrusive channel, such as an icon in the system tray that turns red.

Note

At High Moon, whenever the build was broken, every development machine warned its user by playing a sound bite. One time I broke the build and 100 PCs started playing the Swedish Chef’s theme song from the Muppet Show.

The Loaf of Questionable Freshness

Most of the time when a commit breaks the build, it is because someone ignored the established testing practices. Teams often devise “motivational tools” to help ensure that teammates remember to perform these practices. An example of this took place on the Midtown Madness team in the late nineties at Angel Studios. We didn’t have extensive build testing automation then. We had a dedicated PC, the “build monkey,” where every committed change had to be tested separately. Verifying the build on the build monkey could be a tedious task. Some people occasionally found excuses to skip it, sometimes to the detriment of the team.

After a while, I thought of a cure. I purchased a loaf of Wonder bread, and we instituted a new practice: If you broke the build monkey, you had to host the loaf of bread on top of your monitor (everyone had CRT monitors back then, with plenty of warm space on top) until someone else broke the monkey and took ownership of the loaf.

At first things didn’t change; no one seemed to mind a loaf of bread on their monitor. However, as time passed, the bread became stale and then moldy. Someone on the team started calling it “the loaf of questionable freshness.” Eventually, we all desperately wanted to avoid being the owner of the loaf. As a result, build discipline improved, the monkey stayed unbroken, and eventually the loaf of questionable freshness was given a proper burial.

Technology has changed these practices a bit (in other words, we can’t fit a loaf of bread on top of an LCD monitor). These days automated test tools play embarrassing music, or the team holds impromptu ceremonies for team members who break the build (have you ever come back to your workspace to find it completely wrapped in Saran wrap?). It’s all done with a sense of fun, but it works.

Stable Builds

The last step in build iteration is to share a stable build with the developers. There are two main considerations here: communicating stability and reducing transfer time.

Communicate Stability

Communicate the level of testing performed on each working build, that is, the testing tier it passed.

In the past, we’ve used a simple homegrown tool that shows all the builds available on the server, their build date, and the test status. The developer selects a build to download based on their needs. Usually, the developer downloads the latest build that was fully tested (usually the daily build). When developers want very recent changes, they select the hourly build.
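The selection such a tool offers can be sketched in a few lines. This is not the homegrown tool itself; the `Build` record, its fields, and `latest_build` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Build:
    name: str
    built_at: datetime
    tiers: frozenset  # testing tiers this build has passed, e.g. {"hourly"}


def latest_build(builds, required_tier):
    """Return the most recent build that passed `required_tier`,
    or None if no build on the server qualifies."""
    candidates = [b for b in builds if required_tier in b.tiers]
    return max(candidates, key=lambda b: b.built_at, default=None)
```

A developer who wants the safest build would ask for the `"daily"` tier, and one who needs very recent changes would ask for `"hourly"` and accept the lighter testing.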

Note

Keeping a few weeks’ history of builds around is useful in case a subtle bug needs to be regressed to discover which commit introduced it.
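With an ordered history of builds, finding the one that introduced a bug can be done by binary search instead of checking every build. The sketch below assumes that once the bug appears it stays in every later build; `first_bad_build` and `has_bug` are hypothetical names, and `has_bug` stands for whatever manual or automated check reproduces the problem.

```python
def first_bad_build(builds, has_bug):
    """Return the earliest build for which has_bug(build) is True.

    `builds` must be ordered oldest to newest, the newest build must show
    the bug, and the bug is assumed to persist once introduced.
    """
    if not builds or not has_bug(builds[-1]):
        raise ValueError("the newest build must exhibit the bug")
    lo, hi = 0, len(builds) - 1  # the first bad build lies in builds[lo..hi]
    while lo < hi:
        mid = (lo + hi) // 2
        if has_bug(builds[mid]):
            hi = mid       # bug already present: look at mid or earlier
        else:
            lo = mid + 1   # still good: the bug arrived later
    return builds[lo]


# Hypothetical history of 20 builds where the bug first appears in build 13.
print(first_bad_build(list(range(20)), lambda b: b >= 13))  # 13
```

For a few weeks of hourly builds this needs only a handful of checks, which matters when each check means downloading and playing a build.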

Reduce Transfer Time

Reduce the amount of time to transfer the build from the servers to personal development machines.

Games require many gigabytes of space, and when 100 developers transfer builds daily, it challenges the company network. It’s common for a new build to take 30+ minutes to transfer from a server to a personal machine. Reducing this time is crucial. A number of ways exist to attack this problem:

Server/client compression/decompression: A number of tools are available to improve the transfer speed of large amounts of data over a network.

Partial transfer: Does everyone need to transfer every asset? Do some developers need just a new executable? Make it possible to do selective transfers.

Overnight transfer: Set up a tool for pushing daily builds (if working!) to all the developer stations overnight.

Upgrade your network: Move to higher-bandwidth switches and mirrored servers. This is costly, but a simple return-on-investment calculation usually shows that the reduced time to share a build and assets pays for itself in productivity gains.
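Partial transfer, for example, can be driven by comparing content hashes so that only files that actually changed are copied. A minimal sketch, assuming each side keeps a manifest that maps file paths to digests; the manifest format and the function names are made up for illustration.

```python
import hashlib


def file_digest(data):
    """Return a SHA-256 hex digest of a file's bytes."""
    return hashlib.sha256(data).hexdigest()


def files_to_transfer(server_manifest, local_manifest):
    """Return the sorted paths that are new or changed on the server.

    Both manifests map a relative path to the digest of its contents.
    """
    return sorted(
        path
        for path, digest in server_manifest.items()
        if local_manifest.get(path) != digest
    )


# Hypothetical manifests: only the executable changed since the last sync.
server = {"game.exe": file_digest(b"v2"), "levels/city.pak": file_digest(b"city")}
local = {"game.exe": file_digest(b"v1"), "levels/city.pak": file_digest(b"city")}
print(files_to_transfer(server, local))  # ['game.exe']
```

This sketch does not remove files that were deleted on the server; a complete tool would handle that case as well.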

Focusing a Team on Build Iteration

When I was a child, I desperately avoided cleaning my room. As I became older, my mother told me that she “was not put on Earth to clean up after me.” Imagine my surprise! However, when I had to clean up after myself, I quickly learned that it was better to avoid making the mess in the first place. At the very least, I learned to clean up a mess before it dried on the carpet!

Improving build iteration and debugging is the responsibility of every team. Making it the responsibility of one group of people removes some of that sense of responsibility. However, creating a better build system to catch problems and share stable builds requires specialists and expertise beyond the capabilities of any one team. Having every team create its own system isn’t efficient. They are better off adopting a studio-maintained system and focusing on their game.

The role of creating and maintaining the build system is often the responsibility of an engine or tools team. Members of this team see patterns of failure and work to plug any holes that enable problems to slip through. Additional build server tests are the easiest remedy, but teams often need to improve their practices to avoid commit failures. Examples include showing individuals how to write better unit tests or improve their unit test coverage, or working with an artist to avoid nonstandard texture sizes or formats.

The team that supports the build servers and tools also needs a metric that shows its progress. A useful metric for build stability and availability is the percentage of time a working build is available to everyone on the team. The ideal is 100 percent. A perfect level of stability will not be maintained forever, but it is a powerful goal.
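Computing this percentage is simple once the build server logs when the build broke and when it was fixed. A minimal sketch, assuming the broken periods are recorded as non-overlapping (start, end) hours within the period being measured; the function name and the numbers are made up.

```python
def availability_percent(period_hours, broken_intervals):
    """Percentage of the period during which a working build was available.

    `broken_intervals` is a list of (start_hour, end_hour) pairs that do
    not overlap and fall inside the period.
    """
    if period_hours <= 0:
        raise ValueError("period must be positive")
    broken = sum(end - start for start, end in broken_intervals)
    return 100.0 * (period_hours - broken) / period_hours


# A 40-hour work week with the build broken for 3 hours and then 7 hours.
print(availability_percent(40, [(2, 5), (10, 17)]))  # 75.0
```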

Note

On one team, the “working build availability percentage” started at 25 percent. The team recorded this metric daily and plotted it on a burn-up chart. After a year, it averaged 95 percent. In some weeks, such as those following a major middleware integration, the availability rate dropped back to near zero, but the burn-up chart enabled the teams to see the long-term picture and slowly improve the trend.

A Word about Revision Control

A detailed discussion of revision control is outside the scope of this book. A good article for further reading is available at https://www.gamasutra.com/blogs/AshDavis/20161011/283058/Version_control_Effective_use_issues_and_thoughts_from_a_gamedev_perspective.php

What Good Looks Like

When visiting teams, I often explore where the responsibility for quality lies. For effective teams, responsibility lies with them, not with a group of testers in another building. Helping teams accept this responsibility is a major purpose of the Definition of Done and the reason for continually and slowly raising the bar on what done means; it drives cultural and practice changes that often result in the practices you see in this chapter. It influences developers to care more about quality and not just task completion.

Summary

Iteration overhead comes from many sources. These sources must be relentlessly tracked down and reduced. Left unattended, they will grow to consume more time and bring velocity to a crawl.

Faster iteration time is a big win. It improves velocity and quality by enabling more iterations of features and assets. Faster iterations improve the life of a developer and the quality of the game. When a designer has to wait 10 minutes to test the effects of a wheel friction tweak on a vehicle, they’ll likely find a value that is “good enough” and move on. When the iteration time is instantaneous, they’ll tweak the value until it’s “just right.”

Additional Reading

Crispin, L., and J. Gregory. 2009. Agile Testing: A Practical Guide for Testers and Agile Teams. Boston, MA: Addison-Wesley.

Lakos, J. 1997. Large-Scale C++ Software Design. Reading, MA: Addison-Wesley.