Chapter 4. Service and API Design

Services require a little more up-front thought than a typical Rails application. Taking the time to think about design decisions before you start creating services can give your application the advantages of flexibility, scalability, and robustness in the long run. One of the keys to ensuring that you reap the benefits of services is proper design.

This chapter covers strategies and examples for designing services. It discusses segmenting functionality into separate services, upgrading and versioning services, and designing APIs that provide sensible and flexible functionality. The goal is to develop services that cover the functionality of the social feed reader described in Chapter 3, “Case Study: Social Feed Reader.”

Partitioning Functionality into Separate Services

The first step when considering the design of services is to look at the application as a whole and to make some decisions about how to split up the functionality. This partitioning is one of the toughest design decisions you need to make. The partitioning at this point lies mostly at the model layer of the application. This chapter looks at four different strategies for splitting up the functionality of the models:

• Partitioning on iteration speed

• Partitioning on logical function

• Partitioning on read/write frequency

• Partitioning on join frequency

The following sections look at each of these strategies to separate the social feed reader’s model layer into services.

Partitioning on Iteration Speed

The services approach works best when you’re dealing with well-defined requirements and an API that will remain fairly stable. In these cases, you can design a service and optimize its performance behind the scenes. In cases where things are more dynamic, there is a little extra cost in iterating over a service. This stems from the need to update both the service side and the clients. When developing an application where you’re not sure about the stability of the list of features and needs, it might be best to develop a hybrid system.

Existing Rails applications that gradually get converted to services are a perfect example of this type of partitioning. In the beginning, the entire application is built as a single monolithic Rails project. As parts of the application become more mature and stable, they can be refactored out of the app into services.

The social feed reader offers a good example of this type of hybrid approach. A few functions within this application are fairly static. The application needs to consume feeds and store the entries somewhere. Users have reading lists based on subscriptions. These pieces are at the core of the application and will exist regardless of the other iterations that occur around user interaction. A good first step in converting the feed reader application over to services would be to move the storage and updating of feeds and their entries behind a service. This would remove those models, the update logic, and daemon code from the application. More importantly, you could then improve the feed update and storage system without touching the user-facing Rails app.

For sections of the application that may change frequently, it makes more sense to keep them within the standard Rails framework. Features that need quick iterations will have the regular controllers, views, and ActiveRecord models. This might mean keeping users, comments, votes, and their associated data within the standard model. With this type of setup, the feed storage and updating systems can be developed as two separate services. Meanwhile, quick Rails-style iterations can be made over everything else.

The key to this type of partitioning is to identify the parts of the application that are core and unlikely to change. You should avoid the temptation to just stuff everything back into a single monolithic application. With the hybrid approach to the social feed reader, you can make changes to the feed-crawling system without updating the rest of the application. The first version of this service may use a MySQL database to store the feeds. Later, you can change this to a more appropriate document database or key value store without touching any of the other application code. Thus, the application reaps the benefits of services in some areas while still maintaining the flexibility of the Rails rapid development style where appropriate.

Partitioning on Logical Function

Generally, you want to partition services based on their logical function. This means you have to consider each piece of functionality in an application and determine which parts fit logically together. For some boundaries, this is a straightforward decision.

In the social feed reader, one service decision stands out as being driven by logical function: updating feeds. This service really sits apart from the application. Its only purpose is to download XML files, parse them, and insert the new entries into the data store. It doesn’t make sense to include this in the application server, user service, or any other service. Even as part of the Rails application, the updating of feeds exists as a process that runs outside the request/response life cycle of the user.

In some cases, a feed update may be triggered by user interaction, while that update still occurs outside the request by getting routed to the Delayed Job queue. Given that this separation already exists, it makes sense to pull the feed updater into its own service so that it can remain isolated. In this way, you can optimize how and when feeds get updated without any other part of the system knowing about these changes. The feed updater service highlights the advantages of isolated testability. When the application is updated, the updater service need not be tested; conversely, when the feed update system is improved or updated, the application layer doesn’t need to be redeployed or tested.

The feed and feed entry storage service is also separated logically. Users can subscribe to feeds, but they don’t own them. All users of the system share feed and entry data, so this data can be seen as logically separate from users. Other examples of services that are logically separated in applications are those for sending email, monitoring systems, collecting statistics, or connecting with external data entities (such as feed updaters, crawlers, or other external APIs).

Lower-level services are also partitioned based on logical function. As part of your infrastructure, you may decide that a shared key value store is useful for multiple services. Similarly, a shared messaging system or queue system might be useful for many services. These types of services have a single function that they serve that can be shared across all your other services. Amazon provides these in the form of S3 for key value storage and SQS for message queues.

The higher-level parts of an application, such as views and controllers, can also be partitioned based on logical function. For example, a comment thread or discussion could exist across many pages of a site. Instead of using a partial in the application to render HTML, a call could be made to a comment service that renders the full HTML snippet for the comments section of the page. Generally, services are responsible for data and business logic. However, in applications that contain many pages, it may be necessary to split the partial page rendering out into services that can be run and tested independently.

Partitioning on Read/Write Frequencies

Looking at the read and write frequency of the data in an application is another method that can help determine the best way to partition services. There may be data that gets updated very frequently and read less often, while other data may be inserted once and read many times. A good reason to partition along these lines is that different data stores are optimized for different behavior. Ideally, a service will have to work only with a single data store to manage all its data.

Future implementation choices should partly drive your partitioning choices. For data with a high read frequency, the service should optimize a caching strategy. For high-write-frequency data, the service may not want to bother with caching. Splitting these out to different services makes the implementation of each individual service easier later. Instead of having to optimize both reads and writes in a single place, you can simply make one service handle the read case really well while the other service handles the write case.

Handling both reads and writes usually requires a trade-off of some sort. Services that are designed to primarily handle one case need not make this trade-off. The choice is made in advance by designating that data be stored in one location instead of another.

The social feed reader involves data that crosses all ends of the spectrum: high read and low write, high write and low read, and high read and high write. For instance, user metadata would probably not be updated very frequently. Meanwhile, the user reading list, voting information, and social stream all have frequent updates. The reading list is probably the most frequently updated data in the system. As a user reads each entry, it must be removed from his or her list. Further, when each feed is updated, the new entries must be added to every subscriber’s reading list.

It could be argued that the reading list belongs in the same service as the user data. It is data owned by the user. Logically, it makes sense to put it with the user data. However, the reading list has a high write frequency, while the other user data probably has different characteristics. Thus, it makes sense to split the reading list out into a separate service that can be run on its own.

It might make sense to keep the vote data with the user data or the entry data. From the perspective of when the data will most likely be read, it might belong with the entry. The list of the user’s previous votes also needs to be pulled up later.

There are a few approaches to storing and exposing the vote data. The first is to create a single-purpose service to answer all queries that have to do with votes. This service would be responsible for storing aggregate statistics for entries as well as for individual users. Thus, given a user, it could return the votes. It could also return the aggregate stats for a list of entries. Finally, another service call could be exposed to return the aggregate stats for a list of entries along with the user’s vote when given a user ID. This kind of service is very specific but has the advantage of being optimizable. Vote data in this application is an example of high-read and high-write data. Keeping it behind a single service gives you the ability to try out multiple approaches and iterate on the best solution.

The other method is to replicate or duplicate data where it is needed. For example, the entries need to know the aggregate voting statistics. Thus, when a new vote is created, the entry service should be notified of this either through the messaging system or through a direct HTTP call. The vote itself could reside with the regular user data since the individual votes are always pulled up in reference to a specific user. In the old ActiveRecord models, this method was taken through a counter cache. Chapter 11, “Messaging,” shows how to enable this kind of cross-service replication with minimal effort and without slowing down the saving of the primary data. Which approach to take really depends on the other needs. Both are legitimate ways to solve the problem.

Partitioning on Join Frequency

One of the most tempting methods of partitioning services is to minimize cross-service joins. For a developer familiar with relational databases, this makes sense. Joins are expensive, and you don’t want to have to cross over to another service to join necessary data. You’ll make fewer requests if you can perform joins inside a service boundary.

The caveat is that almost all data in an application is joined to something else. So which joins should you minimize? If you took the concept of minimizing joins to its logical conclusion, you’d end up with a single monolithic application. If you’re going to separate into services, you need to make some tough decisions about joins.

The previous two ways of partitioning—by read/write frequency and logical separation—are a good starting point. First, data should be grouped together using those criteria. For joins, it is best to consider how often particular joins occur. Take, as an example, the activity stream part of the social feed reader. Whenever a user takes an action such as voting, commenting, or following, all the followers must be notified in the stream. If separate services were created for the activities and the users, the data about which users are following others would need to be accessible from both places. If the “follows” information is not replicated to both places, then it should be put in the single place where it is needed most frequently. Thus, you could minimize the joins that have to occur from the other service.

The other option for minimizing joins is to replicate data across services. In normal relational database jargon, this is referred to as denormalization. This is the more sensible course of action when looking at the activity stream and users services. The information about which users are following each other should be replicated to the activity stream service. This data represents a good candidate for replication because it has a much higher read frequency than write frequency. High writes would mean duplicating all those messages and getting little return. However, with a smaller number of writes than reads, the replicated data can make a difference. Further, the write of the follow can be pushed and replicated through a messaging system, and the data will be replicated to both services seamlessly. This method is covered in detail in Chapter 11, “Messaging.”

Versioning Services

Services can and should change throughout their lifetimes. In order to keep previous clients functioning and to make upgrades possible, service interfaces must be versioned. To facilitate upgrading services without having to simultaneously upgrade clients, you need to support running a couple versions in parallel. This section looks at two approaches for identifying different versions of services and making them accessible to clients.

Including a Version in URIs

One of the most common methods of indicating service versions is to include a version number in the URI of every service request. Amazon includes service versions as one of the arguments in a query string. The version number can also be made part of the path of a URI. Here are some examples of URIs with versions:

• http://localhost:3000/api/v1/users/1

• http://localhost:3000/users/1?version=1

• http://localhost:3000/api/v2/users/1

These examples show the URI for a specific user object with an ID of 1. The first two URIs refer to the user with an ID of 1 on version 1 of the API. The third example uses version 2 of the API. It’s important to note that with different versions of APIs, breaking changes can occur. Possible changes might include the addition or removal of data included in the response or the modification of the underlying business logic that validates requests.

One advantage to putting the version number in the query string is that you can make it an optional parameter. In cases where clients don’t specify a version, they could get the latest. However, this also creates a problem because updating your service may break clients that aren’t explicitly setting version numbers in their requests.

Ideally, you won’t have to run multiple versions for very long. Once a new service version has been deployed and is running, the service clients should be updated quickly to phase out the old version. For external APIs, this may not be a valid option. For example, Amazon has been running multiple versions of the SimpleDB and SQS APIs for a while. Internal APIs may need to run multiple versions only during the time it takes to do a rolling deployment of the service and then the clients. However, it is a good idea to enable a longer lag time between the update of a service and its clients.

REST purists argue that putting the version in the URI is a poor approach. They say this because the URI is ultimately referring to the same resource, regardless of the version. Thus, it should not be a part of the URI. The approach that is generally considered to be RESTful is to use the Accept headers in a request to specify the version of an API. Then the URI of a resource remains the same across versions of an API.

Using Accept Headers for Versioning

In addition to including a version number in URIs, another method for specifying API versions is to include them in the Accept header for each request. This means defining a new MIME type for each version of the API. The following examples show requesting a specific user with either version 1 or version 2.

The following is the HTTP header for requesting the user with the ID 1 accepting version 1 of the API:

![]()

The following is the HTTP header for requesting the user with the ID 1 accepting version 2 of the API:

![]()

These examples use a MIME type that you define in the vendor tree. There are a few disadvantages to this approach. The first and most important is that not all caching servers look at request headers as part of their caching. Therefore, running multiple versions in parallel may cause unpredictable caching behavior. The only fix is to then turn off caching completely while you’re running multiple versions.

The second problem with using Accept headers is that many developers aren’t comfortable or familiar with the idea of setting headers on their requests. From the standpoint of making an API usable to the outside world, most developers are likely to find a version number in the URI easier to work with. Of course, it is a trade-off. If client developers have to specify the version in the URI, they also probably have to build URIs themselves, which increases the complexity of client libraries slightly.

URIs and Interface Design

Consistency across APIs makes it easier to develop clients for each service. Because URIs represent the public interface of a service, they should be as consistent as possible. The URIs for resources in services should follow a convention that can be programmed against. Rails starts with some conventions that should look familiar. For example, here are some URIs and HTTP methods that you might see in a typical Rails application:

• GET, POST /users

• GET, PUT, DELETE /users/1

• GET, POST /users/1/comments

• GET, PUT, DELETE /users/1/comments/2

• GET, POST /users/1/follows

• GET, PUT, DELETE /users/1/follows/23

The first two examples show the URIs for the typical Rails CRUD (create, update, destroy) style. When combined with the HTTP methods, those two URIs represent the full interface for a basic user. GET /users maps to returning a list of all users. POST /users maps to creating a new user. GET /users/1 is the show action for a specific user. PUT is an update, and DELETE is destroy.

The next two examples show a nested resource. Comments are always attached to a user, so they can be nested within the context of a user. The GET, POST, PUT, and DELETE actions all correspond to the CRUD operations for comments.

The final two examples are also for a nested resource called follows. These are a little different in that the resource really represents a join to another user. In this case, it is the follows information of one user following another. GET /users/1/follows/23 would pull back the join information. This might include information about the follows relationship, such as a group the user has been put in or the date of the follow, in addition to the user ID that is following and the user ID that has been followed.

A simple generalization would be to say that these URIs follow a pattern: /:collection then an optional /:id followed by another collection and ID combo, and so on. In this pattern, :collection maps to the plural of an actual model. In the specific examples in the list, users maps to the user model, comments maps to the comment model, and so on. The :id element of the pattern is, of course, the ID for the model. For nested models, the ID of the parent should be contained within the nested model. Thus, a comment should have a user ID associated with it.

This is all fairly standard Rails design, and the convention works for most situations. However, some API elements may not fit in with this kind of resource-based model. Take, as an example, the feed-crawling system in the social feed reader. How could the request to crawl a specific feed be represented as a resource? How would you represent a list of feeds with a specific sort order? How do you represent pagination for long lists? Some of these can be mapped as resources using Rails conventions, while others will have to break convention a little. Here are some examples:

• GET, POST /feeds/1/crawls—Returns information about each time a feed has been crawled or posts to request a crawl right away.

• GET /feeds/1/crawls/last—Returns the information for the last crawl on a specific feed. This could include information on whether the feed was modified, how many new entries were found, or whether the crawler hit a redirect.

• GET /feeds?page=2&per_page=20&sort=updated_desc—Gets a list of feeds, sorted by their updated date in descending order with the requested pagination parameters.

The first example turns crawls into a resource, thereby fitting into the convention. When accessing information about the last crawl, this example must depart a little bit from convention because last doesn’t represent an actual ID. However, it represents a specific singular crawl resource. This means that in the case of using either a real ID or the string last, the client can expect a single object to be returned.

The final example shows the request for a collection, with options for sorting and pagination. Here, the query parameters look quite different from other URIs that have been used. This could be changed so that the query parameters are placed in the path of the URI before the query string. However, it’s usually easier to manage multiple optional parameters as query parameters. The one concession when using query parameters is that the API should require that they be sorted alphabetically. This can improve the cache hit ratio if caching is employed.

Successful Responses

In addition to standardizing URI design, APIs should also standardize, as much as possible, successful HTTP responses. This section describes some standards that an API may want to include for successful responses.

HTTP Status Codes

RESTful APIs should use the following HTTP status codes to reflect the outcomes of service responses. In the case of successful responses, the first three status codes are the most important and are generally the only ones you need:

• 200 OK—The request has succeeded. This is the generic success case, and most successful responses use this code.

• 201 Created—The request has been fulfilled, and the resource has been created. The response can include a URI for the created resource. Further, the server must have actually created the resource.

• 202 Accepted—The request has been accepted for processing but has not actually been completed. This is a loose response because the actual request may be invalid when the processing occurs. This could be a response from a POST that queues up an operation of some kind.

• 304 Not Modified—The request isn’t exactly a success, but it isn’t an error either. This could happen when a client requests a resource with an If-Modified-Since header. The client should expect that this is a possible response and use a locally cached copy.

HTTP Caching

HTTP caching information should be included in the service’s response headers. This caching data can be used not only by services but also by client programs, proxy caching servers, and content delivery networks (CDNs) to speed up the application and reduce the load on your servers. Cache headers can also be used to specify when you don’t want a response to be cached. The following are the specific headers that service APIs may want to include:

• Cache-Control: public—This header lets your server tell caching proxies and the requesting client whether a response may be cached. A list of directives can be found in the HTTP 1.1 header fields spec at http://www.w3.org/Protocols/rfc2616/rfc2616-sec14.html#sec14.9.

• ETag: 737060cd8c284d8af7ad3082f209582d—ETag is an identifier for the resource that also represents a specific version. It is an arbitrary string of characters. It could be something like the ID of an object and its last modified date and time combined into a SHA1 hex digest. This can be sent in later requests to verify that a client’s cached version of a resource is current.

• Expires: Wed, 02 Sep 2009 08:37:12 GMT—This header gives the date and time after which the cached resource is considered stale. This allows clients and proxies to forgo requests for which their cached resources are still fresh. After the expired time, a new request can be issued to refresh the cached resource.

• Last-Modified: Mon, 31 Aug 2009 12:48:02 GMT—This header specifies the last time the requested resource was modified. Clients issuing requests can send this time to the server to verify whether they have the most up-to-date version of a resource.

Successful Response Bodies

The response bodies hold the actual resource data and, if necessary, pointers to other resources. First, a response format must be selected for the API. JSON is the obvious choice because of its simplicity and ubiquity. Legacy services commonly use XML, but JSON is quickly becoming the standard.

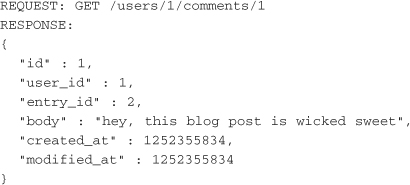

Here is an example of comments resources from the feed reader. Specifically, this example looks at returning a list of comments for a user or a single comment:

For a single object, the response body is simple. It consists only of a JSON object (or hash) of the requested comment. The keys are the field names, and the values are the field values. One thing to note is that this model returns only the ID of the associated user and entry. This means that the service client would need to have knowledge of the API to construct URIs to request the associated resources.

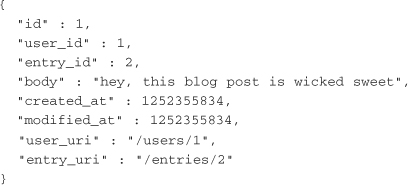

From an API standpoint, it makes sense to also give a URI pointer to the user and entry in addition to the raw IDs. Remember that services should avoid having clients construct URIs whenever possible. Here is the comment resource updated with those additional values:

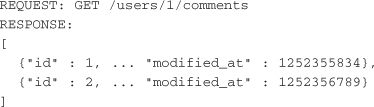

Now here is an example of a request and response for a collection of comments:

Most of the fields of the individual comments are excluded here for brevity. The fields should be the same as those used previously for a single comment resource. For retrieving all the comments from a user, this works well. However, in most cases when requesting a list, only a page of the list should be returned. This means that the API needs to include pagination links in the response. The service should not require the client program to construct URIs for pagination.

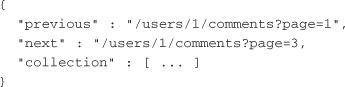

Here’s what that list response looks like:

The pagination links are in the "previous" and "next" values. In cases where there are no previous or next pages, either those keys could be excluded or their values could be set to null. The ellipsis in between the brackets is where the comment objects for the response would go. Other options not listed that the API may want to include are the total number of comments or the number of pages. By responding to a collection request with a hash that contains the collection, the API can add more information for the service clients to use besides the raw collection itself.

Error Responses

Service APIs should standardize on how error conditions are conveyed. Some error responses could indicate problems with the server. Other responses could indicate validation errors on a model or permissions restrictions.

HTTP Status Codes

Service APIs should use some standard HTTP status codes:

• 400 Bad Request—Used as a general catchall for bad requests. For example, if there is a validation error on a model, a service could respond with a 400 error and a response body that indicates what validations failed.

• 401 Unauthorized—Used when a resource is requested that requires authentication.

• 404 Not Found—Used when requesting a resource that the server doesn’t know about.

• 405 Method Not Allowed—Used when a client attempts to perform an operation on a resource that it doesn’t support (for example, performing a GET on a resource that requires a POST).

• 406 Not Acceptable—Used when the request is not acceptable according to the Accept headers in the request (for example, if the service uses the Accept headers to indicate a requested API version and a client requests a version that is no longer supported).

• 409 Conflict—Used when a client attempts to update a resource that has an edit conflict. For example, say that two clients request a blog post at the same time. One client makes changes and submits the post. The second client also makes changes and submits the post, but the server has found that the second client’s copy is now out of date. The second client should request the resource again and inform the user of the now-updated post, allowing the user to make changes.

Error Response Bodies

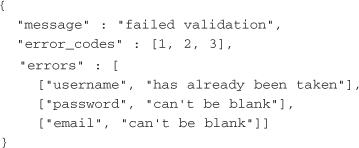

The response bodies for error conditions should be fairly simple. The 400 responses represent the most complex and overloaded error conditions. These errors should include more application-specific error codes and a human-readable error message. These responses also include data validation errors. The following example shows the response body for a request to create a user:

The validation errors show that the user name in the request already exists, the email was blank, and the password was blank. All three failed validation, so the user was not created. Notice also that error codes are contained in the response. These application-specific codes are chosen when designing the API. They make it easier for client library authors to catch specific errors. For example, if you change the user name error to read “user already exists,” it shouldn’t break properly authored client libraries.

For error conditions other than the application-specific 400 codes, the response bodies are generally blank.

Handling Joins

Joins across services are sometimes a necessary evil. You need to consider a few issues when designing the set of services that make up an entire application. First, the API design must account for how to store references to joined data. Second, the API design determines when and where joins should occur. Third, the overall design should not create the need for too many nested joins.

Storing References

In a typical Rails database, the reference to a joined model consists of the model name followed by an underscore and ID. So a comment in the feed reader is joined to a user through the user_id field. The value is the integer ID of the user. With services, the references can be stored in a similar way. If the ID of the joined model is stored, the responsibility of resolving the join is left to the service client.

In an ideal REST system, the client libraries do not have to construct URIs for resources. If a client sends a request for a comment, the client should also be able to request the user the comment belongs to without having knowledge of how to construct a user URI. One solution is to contain this logic within the comment service. The underlying database model can store only the user_id field. The comment service then needs to ensure that every time a comment resource is returned, it returns the user_uri field in addition to the user_id field.

This puts the responsibility for constructing URIs to joined data inside each service. It’s a slight departure from REST because the service is not storing the actual URI. However, it enables easy updates for resources that put version numbers in the URI. When the user service updates its version, the comment service has to update its URI generation logic. Of course, the update to the user system should allow the upgrade of the comment service to happen over time rather than having to update both systems in lock step.

Still another argument can be made for passing raw IDs in the responses. With some services, it makes sense to have the client contain business logic that wraps the data instead of just passing the raw data to the service. A pure RESTful approach assumes that no business logic will be written around the responses. Take basic data validation as an example. When attempting to create a user, you may want to ensure that the email address is in the proper form, the user name isn’t blank, and the password isn’t blank. With a pure RESTful approach, these validations would occur only in the service. However, if a service client wraps the data with a little bit of business logic, it can perform these kinds of data validations before sending to the service. So if you’re already writing clients with business logic, it isn’t that much of a stretch to have a client also be responsible for URI construction.

Joining at the Highest Level

The highest level for a join is the place in the service call stack where joins should occur. For example, with the social feed reader, consider a service that returns the five most recent reading items for a user. The application server will probably make calls to the user service for the basic user data, the subscription service for the list of reading items in a specific user’s list, the feed entry service for the actual entries, and the ratings service for ratings on the list of entries. There are many places where the joins of all this data could possibly occur.

The subscriptions and ratings services both store references to feed entries and users. One method would be to perform the joins within each service. However, doing so would result in redundant calls to multiple services. The ratings and reading lists would both request specific entries and the user. To avoid these redundant calls, the joins on data should occur at the level of the application server instead of within the service.

There are two additional reasons that joins should be performed at the application server. The first is that it makes data caching easier. Services should have to cache data only from their own data stores. The application server can cache data from any service. Splitting up caching responsibility makes the overall caching strategy simpler and leads to more cache hits. The second reason is that some service calls may not need all the join data. Keeping the join logic in the application server makes it easier to perform joins on only the data needed.

Beware of Call Depth

Call depth refers to the number of nested joins that must be performed to service a request. For example, the request to bring back the reading list of a user would have to call out to the user, subscription, feed storage, and ratings services. The call chain takes the following steps:

1. It makes simultaneous requests to the user and subscription services.

2. It uses the results of the subscription service to make simultaneous requests to the feed storage and ratings services.

This example has a call depth of two. There are calls that have to wait for a response from the subscription service before they can start. This means that if the subscription service takes 50 milliseconds to respond and the entry and ratings services complete their calls in 50 milliseconds, a total time of 100 milliseconds is needed to pull together all the data needed to service the request.

As the call depth increases, the services involved must respond faster in order to keep the total request time within a reasonable limit. It’s a good idea to think about how many sequential service calls have to be made when designing the overall service architecture. If there is a request with a call depth of seven, the design should be restructured so that the data is stored in closer proximity.

API Complexity

Service APIs can be designed to be as specific or as general as needed. As an API gets more specific, it generally needs to include more entry points and calls, raising the overall complexity. In the beginning, it’s a good idea to design for the general case. As users of a service request more specific functionality, the service can be built up. APIs that take into account the most general cases with calls that contain the simplest business logic are said to be atomic. More complicated APIs may enable the retrieval of multiple IDs in a single call (multi-gets) or the retrieval or updating of multiple models within a single service call.

Atomic APIs

The initial version of an API should be atomic. That is, it should expose only basic functions that can be used to compose more advanced functionality. For example, an API that has a single GET that returns the data for different models would not be considered atomic. The atomic version of the API would return only a single type of model in each GET request and force the client to make additional requests for other related models. However, as an API matures, it makes sense to include convenience methods that include more data and functionality.

However, simple atomic APIs can be taken too far. For example, the interface for retrieving the list of comments for a single user in the social feed reader is non-atomic. It returns the actual comments in the response. A more atomic version of the API would return only the comment IDs and the URIs to get each comment. The expectation is that the client will get the comments it needs. As long as these GETs can be done in parallel, it doesn’t matter that you have to do many to get a full list of comments.

In theory, the parallel request model works. However, in practice it’s more convenient to just return the list of comments in the actual request. It makes the most sense for clients because in almost all cases, they want the full comments instead of just the IDs.

Atomicity is about returning only the data that a client needs and not stuffing the response with other objects. This is similar to performing joins at the highest level. As you develop an application and services, it may make sense to have special-case requests that return more data. In the beginning, it pays to make only the simplest API and expand as you find you need to.

Multi-Gets

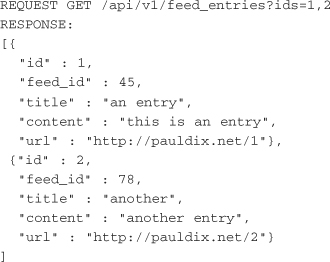

You might want to provide a multi-get API when you commonly have to request multiple single resources at one time. You provide a list of IDs and get a response with those objects. For example, the social feed reader application should have multi-get functionality to return a list of feed entry objects, given a list of IDs. Here is an example of a request/response:

For service clients, the advantage of using this approach is that the clients are able to get all the entries with a single request. However, there are potential disadvantages. First, how do you deal with error conditions? What if one of the requested IDs was not present in the system? One solution is to return a hash with the requested IDs as the keys and have the values look the same as they would if they were responses from a single resource request.

The second possible disadvantage is that it may complicate caching logic for the service. Take, for example, a service that runs on multiple servers. To ensure a high cache hit ratio, you have a load balancer in front of these servers that routes requests based on the URL. This ensures that a request for a single resource will always go to the same server. With multi-get requests, the resources being requested may not be cached on the server the request gets routed to. At that point, you either have to make a request to the server that has the cached item or store another copy. The workaround for this is to have the servers within a service communicate to all of the service’s caching servers.

Multiple Models

A multiple-model API call is a single call that returns the data for multiple models in the response. An API that represents a basic social network with a user model and a follows model is a good example that shows where this would be useful. The follows model contains information about which user is the follower, which user is being followed, when the follow occurred, and so on. To retrieve a user’s full information, the atomic API would require two requests: one to get the user data and one to get all the follows data for that user. A multiple-model API could reduce that to a single request that includes data from both the user and follows models.

Multiple-model APIs are very similar to multi-gets in that they reduce the number of requests that a client needs to make to get data. Multiple-model APIs are usually best left for later-stage development. You should take the step of including multiple models within the API only when you’re optimizing calls that are made very frequently or when there is a pressing need. Otherwise, your API can quickly become very complex.

Conclusion

When designing an overall services architecture, you need to make some decisions up front about the services and their APIs. First, the functionality of the application must be partitioned into services. Second, standards should be developed that dictate how to make requests to service APIs and what those responses should look like. Third, the design should account for how many joins have to occur across services. Finally, the APIs should provide a consistent level of functionality.

APIs should take into account the partitioning of the application, consistency in their design, and versioning. Strategies for partitioning application into services include partitioning based on iteration speed, on logical function, on read/write frequency, and on join frequency. Developing standards in the URI design and service responses makes it easier to develop client libraries to access all services. The service APIs should aim for consistency to make URI and response parsing easier to extract into shared libraries for use by everyone. Services should be versioned and be able run multiple versions in parallel. This gives service clients time to upgrade rather than having to upgrade both client and server in lock step.