Earlier in this chapter, we discussed several of the architectural challenges that face developers when building high-performance Web applications. Many of these challenges stem from the basic interaction model between Web browsers and Web servers. They include such issues as network latency and the quality of the connection, payload size and round trips between client and server, as well as the way code is written and organized. These issues generally transcend multiple development platforms and affect every Web application developer, whether they are developing ASP.NET and managed code Web applications or using an alternative technology like PHP or Java. It is important for developers to understand these issues and incorporate performance considerations in their application designs. Performance bugs that are discovered late in the release cycle can create significant code churn and add risk to delivering a stable and high-quality application.

There are several best practices for improving the performance of a Web application, which have been categorized into four basic principles below. These principles are intended to help organize very specific, tactical best practices into simple, high-level concepts. They include the following:

Reduce payload size. Application developers should optimize Web applications to ensure the smallest possible data transfer footprint on the network.

Cache effectively. Performance can be improved when application developers reduce the number of HTTP requests required for the application to function by caching content effectively.

Optimize network traffic. Application developers should ensure that their application uses the bandwidth as efficiently as possible by optimizing the interactions between the Web browser and the server.

Organize and write code for better performance. It is important to organize Web application code in a way that improves gradual or progressive page rendering and ensures reductions in HTTP requests.

Let us review each of these principles thematically and discuss more specific, tactical examples for applying performance best practices to several facets of your Web application.

As reviewed earlier in this chapter, one of the primary challenges to delivering high-performance Web applications is the bandwidth and network latency between the client and the server. Both will vary between users and most certainly vary by locale. To ensure that users of your Web application have an optimal browsing experience, application developers should optimize each page to create the smallest possible footprint on the network between the Web server and the user’s browser. There are a number of best practices that developers can leverage to accomplish this. Let us review each of these in greater detail.

Web servers such as IIS and Apache can compress both static and dynamic content using standard compression methods like gzip and deflate. With compression enabled, static content (JavaScript, CSS, and HTML files) and dynamic content (ASP and ASPX files) are compressed by the Web server before being delivered to the client browser. Once the files are delivered, the browser decompresses them and uses their contents from its local cache. This keeps the data in transit as small as possible, which contributes to faster retrieval and an improved browsing experience for the user overall. In the example illustrated in Figure 4-2, compression reduced the payload size by 13.5 KB, or approximately 45 percent, which is a significant reduction.
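To get a feel for how much compression can save, the following minimal sketch uses Python's standard gzip module to compare the size of a payload before and after compression. The sample markup is hypothetical, and the savings on a real page will differ; in practice the Web server performs this step, not application code.

```python
import gzip


def compressed_size(payload: bytes) -> tuple[int, int]:
    """Return (original_size, gzip_size) in bytes for a payload."""
    return len(payload), len(gzip.compress(payload))


# Hypothetical page markup; repetitive text such as HTML tends to compress well.
sample = b"<div class='item'><a href='/products'>Product</a></div>\n" * 200

original, compressed = compressed_size(sample)
savings = 100 * (original - compressed) / original
print(f"{original} bytes -> {compressed} bytes ({savings:.0f}% smaller)")
```

Running a check like this against representative pages is one way to decide which content types are worth compressing.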

Minification is the practice of evaluating code like JavaScript and CSS and reducing its size by removing unnecessary characters, white space, and comments. This ensures that the code transferred between the Web server and the client is as small as possible, which shortens page load time. Several minifier utilities are available on the Internet today, such as YUI Compressor for CSS or JSMin for JavaScript, and many teams at Microsoft, for instance, share a common minifier utility for condensing JavaScript and CSS. This practice is very effective at reducing JavaScript and CSS file sizes, but it often renders the JavaScript and CSS unreadable from a debugging perspective. Application developers should therefore leave minification out of debug builds and incorporate it into builds that are deployed to performance testing environments or live production servers.
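The idea behind minification can be sketched in a few lines. The function below is a deliberately naive illustration, not a substitute for a real tool such as YUI Compressor: it assumes simple CSS with no string literals, no url() values containing spaces, and no selectors where white space before a colon is significant.

```python
import re


def minify_css(css: str) -> str:
    """Naive CSS minifier: strips comments and unnecessary white space."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove comments
    css = re.sub(r"\s+", " ", css)                         # collapse white space
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)           # trim around punctuation
    css = css.replace(";}", "}")                           # drop last semicolon in a rule
    return css.strip()


source = """
/* search button */
input.sw_qbtn {
    border: none;
    cursor: pointer;
}
"""
print(minify_css(source))
```

Even this crude version shows why minified output is hard to debug: comments and layout, which carry meaning for the developer, are exactly what gets removed.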

Another way to reduce the payload size of a Web page is to reduce the size of the images that are transmitted for use within the page. When coupled with the use of CSS Sprites, which will be discussed later in this chapter, this technique can further optimize the transmission of data between the Web server and the user’s computer. Adobe published a whitepaper[1] that provides insight into how reducing the color palette in iconography and static images can yield dramatic savings in image size. By simply reducing the color depth of an image from 32-bit to 16-bit or 8-bit color, it is possible to reduce the image size by upwards of 40 percent without visibly degrading the quality of the image. The savings add up quickly when extrapolated to hundreds of thousands of requests for the same image.

As we have seen, Web application performance is improved significantly by incorporating various strategies for reducing the payload size over the network. In addition to shrinking the footprint of the data over the wire, application developers can also leverage page caching strategies that will help reduce the number of HTTP requests sent between the server and the client. Incorporating caching within your application will ensure that the browser does not unnecessarily retrieve data that is locally cached, thereby reducing the amount of data being transferred and the number of required HTTP requests.

A Web server uses several HTTP headers to inform the requesting client that it can use the copy of the resource it holds in its local cache. For example, if certain cache headers are returned for a specific image or script on the page, then the browser will not request the image or script again until that content is deemed stale. These headers include Expires, Cache-Control, and ETag. By using them effectively, application developers can reduce the number of HTTP requests sent between the client and the server for as long as the resource remains cached. When using the Expires header, it is important to set its value to a date far enough in the future that expiration is unlikely. Let’s consider a simple example.

Expires: Fri, 14 May 2010 14:00:00 GMT

Note

The preceding code is an example of setting an Expires header on a specific page resource like a JavaScript file. This header tells the browser that it can use the current copy of the resource until the specified time. Note the specified time is far in the future to ensure that subsequent requests for this resource are avoided for the foreseeable future. Although this is a simple method for reducing the number of HTTP requests through caching, it does require that all page resources, like JavaScript, CSS, or image files, incorporate some form of a versioning scheme to allow for future updates to the site. Without versioning, browsers and proxies will not be able to acquire new versions of the resource until the expiration date passes. To address this, developers can append a version number to the file name of the resource to ensure that resources can be revised in future versions of the application. This is just one example of ways to apply caching to your application’s page resources. As mentioned, leveraging Cache-Control or ETag headers can also help achieve similar results.
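The two ideas in this note, a far-future expiration date and a version embedded in the file name, can be sketched as follows. The helper names, the one-year lifetime, and the "site.v2.js" naming scheme are assumptions chosen for illustration; the header value is formatted with Python's standard email.utils module, which produces the HTTP date format.

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime


def far_future_expires(now: datetime, days: int = 365) -> str:
    """Build an Expires header value `days` in the future, in HTTP-date format."""
    return format_datetime(now + timedelta(days=days), usegmt=True)


def versioned_name(filename: str, version: str) -> str:
    """Insert a version before the extension, e.g. site.js becomes site.v2.js."""
    stem, dot, ext = filename.rpartition(".")
    return f"{stem}.{version}{dot}{ext}" if dot else f"{filename}.{version}"


# A fixed, hypothetical "current" time keeps the example reproducible.
now = datetime(2009, 5, 14, 14, 0, 0, tzinfo=timezone.utc)
print("Expires:", far_future_expires(now))
print(versioned_name("site.js", "v2"))
```

Because the version is part of the name, publishing site.v3.js gives browsers a URL they have never cached, so the long expiration on the older file no longer matters.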

Note

Each of these HTTP headers requires in-depth knowledge of correct usage patterns. I recommend reading High Performance Web Sites (O’Reilly, 2007), by Steve Souders, or Caching Tutorial for Web Authors and Web Masters, by Mark Nottingham[2] before incorporating them in your application.

The network that carries application data between the server and the Web browser is the element of the end-to-end Web application pipeline over which developers have the least control, in terms of architecture or implementation. As developers, we must trust that network engineers have done their best to implement fast and efficient networks so that the data we transmit takes the best available route between the client and the server. However, the quality of the connection between our Web applications and our users is not always known. Therefore, we need to apply tactics that reduce both the payload size of the data being transmitted and the number of requests being sent and received. Application developers can accomplish this by incorporating the following best practices.

If your Web application requires a large number of files to render pages, then increasing the number of parallel TCP connections will allow more page content to be downloaded in parallel. This is a great way to reduce the time it takes to load the pages in your Web application. We discussed earlier how the HTTP/1.1 specification suggests that browsers download only two resources at a time in parallel for a given hostname. To work around this limit, Web application developers can use additional hostnames within their application, which allows the browser to open additional connections for parallel downloading. The simplest way to accomplish this is to organize your static content (e.g., images, videos, etc.) by unique hostname. The following code snippet shows one way to accomplish this.

<img src="http://images.contoso.com/v1/image1.gif"/>
<embed src="http://video.contoso.com/v1/solidcode.wmv" width="100%" height="60" align="center"/>

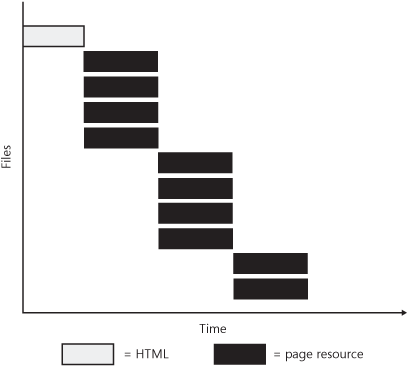

Using multiple hostnames encourages the browser to download content in parallel. Figure 4-1, shown previously, illustrated how page content is downloaded when a single hostname is used. Figure 4-3 contrasts that by illustrating how the addition of multiple hostnames affects the downloading of content.
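If pages are generated on the server, the choice of hostname can be centralized in a small helper instead of being hard-coded in every tag. The sketch below is one possible approach, assuming that assets are split by file type; the hostnames follow the contoso.com examples above, while the extension mapping and the fallback host are hypothetical.

```python
import posixpath

# Hostnames follow the contoso.com examples above; the mapping itself is assumed.
HOST_BY_EXTENSION = {
    ".gif": "images.contoso.com",
    ".png": "images.contoso.com",
    ".jpg": "images.contoso.com",
    ".wmv": "video.contoso.com",
}
DEFAULT_HOST = "www.contoso.com"  # hypothetical fallback host


def asset_url(path: str) -> str:
    """Return an absolute URL whose hostname depends on the asset type."""
    extension = posixpath.splitext(path)[1].lower()
    host = HOST_BY_EXTENSION.get(extension, DEFAULT_HOST)
    return f"http://{host}/{path.lstrip('/')}"


print(asset_url("/v1/image1.gif"))
print(asset_url("/v1/solidcode.wmv"))
```

Keeping the mapping deterministic matters: if the same file were served from different hostnames on different pages, the browser would cache it once per hostname and the benefit of caching would be lost.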

Keep-Alives are the mechanism by which servers and Web browsers use TCP sockets more efficiently when communicating with one another. They were introduced to address an inefficiency in HTTP/1.0, whereby each HTTP request required a new TCP socket connection. Keep-Alives let Web browsers make multiple HTTP requests over a single connection, which makes network traffic between the browser and the server more efficient by reducing the number of connections being opened and closed. This is accomplished through the Connection header that is passed between the server and the browser. The following example is an HTTP response header, which illustrates how Keep-Alives are enabled for Microsoft’s Live Search service.

HTTP/1.1 200 OK
Content-Type: text/html; charset=utf-8
X-Powered-By: ASP.NET
P3P: CP="NON UNI COM NAV STA LOC CURa DEVa PSAa PSDa OUR IND", policyref="http://privacy.msn.com/w3c/p3p.xml"
Vary: Accept-Encoding
Content-Encoding: gzip
Cache-Control: private, max-age=0
Date: Tue, 30 Sep 2008 04:30:19 GMT
Content-Length: 8152
Connection: keep-alive

In this example, we notice that a set of HTTP headers and their respective values was returned. The status line indicates that the browser received an HTTP 200 response to its request for the host Uniform Resource Identifier (URI), which is www.live.com. In addition to other interesting information, such as the content encoding and content length, there is an explicit header called Connection, whose keep-alive value indicates that Keep-Alives are enabled on the server.
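A response like this one can also be inspected programmatically. The following sketch parses a raw response head and reports whether the connection will be kept open. It is a simplification: it treats the Connection header as a single token, and it relies on the fact that HTTP/1.1 connections are persistent unless the server sends "Connection: close", whereas HTTP/1.0 requires an explicit keep-alive. The function names are assumptions for illustration.

```python
def parse_response_head(raw: str) -> tuple[str, dict[str, str]]:
    """Split a raw HTTP response head into its status line and headers."""
    lines = [line for line in raw.strip().splitlines() if line.strip()]
    status_line, header_lines = lines[0], lines[1:]
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()  # header names are case-insensitive
    return status_line, headers


def uses_keep_alive(status_line: str, headers: dict[str, str]) -> bool:
    """Report whether the server intends to keep the connection open."""
    connection = headers.get("connection", "").lower()
    if status_line.startswith("HTTP/1.1"):
        return connection != "close"      # persistent by default in HTTP/1.1
    return connection == "keep-alive"     # opt-in for HTTP/1.0


raw = """HTTP/1.1 200 OK
Content-Type: text/html; charset=utf-8
Content-Length: 8152
Connection: keep-alive
"""
status_line, headers = parse_response_head(raw)
print(status_line, "| keep-alive:", uses_keep_alive(status_line, headers))
```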

Previously, we discussed how DNS lookups are the result of the Web browser being unable to locate the IP address for a given hostname in either its cache or the operating system’s cache. These lookups require calls to Internet-based DNS servers and can take up to 120 ms to complete. This can adversely affect the performance of a Web page if there are a large number of unique hostnames found in any of the JavaScript, CSS, or inline code required to render that page. Reducing DNS lookups can improve the response time of a page, but it must be done judiciously as it can also have a negative effect on parallel downloading of content. As a general guideline, it is not recommended to utilize more than four to six unique hostnames within your Web application. This compromise will maintain a small number of DNS lookups while still leveraging the benefits of increased parallel downloading. Furthermore, if your application does not contain a large number of assets, it is generally better to leverage a single hostname.
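A quick way to see where a page stands against this guideline is to count the distinct hostnames it references. The sketch below does so with a regular expression over src and href attributes; it assumes absolute URLs in the markup and ignores hostnames that appear only inside scripts or style sheets. The static.contoso.com hostname is hypothetical.

```python
import re
from urllib.parse import urlparse

# Matches absolute http(s) URLs in src="..." or href="..." attributes.
URL_PATTERN = re.compile(r"""(?:src|href)\s*=\s*["'](https?://[^"']+)["']""", re.IGNORECASE)


def unique_hostnames(markup: str) -> set[str]:
    """Collect the distinct hostnames referenced by absolute src/href URLs."""
    return {urlparse(url).hostname for url in URL_PATTERN.findall(markup)}


page = """
<link rel="stylesheet" href="http://static.contoso.com/v1/site.css"/>
<img src="http://images.contoso.com/v1/image1.gif"/>
<img src="http://images.contoso.com/v1/image2.gif"/>
<embed src="http://video.contoso.com/v1/solidcode.wmv"/>
"""
hosts = unique_hostnames(page)
print(len(hosts), sorted(hosts))
```

Each hostname in the result represents a potential DNS lookup on a cold cache, so the count is a rough proxy for the lookup cost of the page.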

Web page redirects are used to route a user from one URL to another. While redirects are often necessary, they delay the start of the page load until the redirect is complete. In some cases, this is an acceptable performance degradation, but overuse can harm the user experience. Generally, redirects should be avoided if possible, but they are useful when application developers need to support legacy URLs or vanity URLs that make a page’s location easier to remember. In the sample redirect below, we see how the Live Search team at Microsoft redirects the URL http://search.live.com to http://www.live.com. On my computer, this redirect added 0.210 seconds to the total page load time, as measured with HTTPWatch.

HTTP/1.1 302 Moved Temporarily
Content-Length: 0
Location: http://www.live.com/?searchonly=true&mkt=en-US

In this example, we notice that the HTTP response code is a 302, which indicates that the requested URI has moved temporarily to another location. The new location is specified in the Location header, which informs the browser where to direct the user’s request. The browser will then automatically redirect the user to the new location.

Earlier in this chapter, we discussed how incorporating caching in Web applications can reduce the number of HTTP requests. In addition to caching, a Content Delivery Network (CDN) provides a complementary solution that also improves the speed at which static content is delivered to your users. CDNs like those offered by Akamai Technologies or Limelight Networks allow application developers to host static content, such as JavaScript, CSS, or Flash objects, on globally distributed servers. Users who request this content are dynamically routed to the copy that is closest to the originating request. This not only increases the speed of content delivery, but it also offers a level of redundancy for the data being served. Although implementing a CDN solution can be costly, the results are well worth it for Web applications that use a large volume of static content and require global reach.

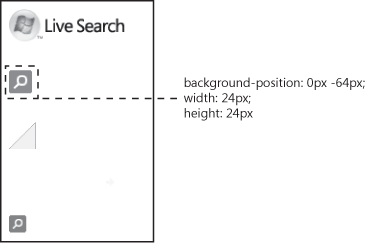

CSS Sprites group several smaller images into one composite image and display them using CSS background positioning. This technique is recommended for improving Web application performance, as it uses bandwidth more effectively than downloading several smaller images independently during a page load. The practice is effective because it takes advantage of the sliding window algorithms that TCP uses to control the flow of packets between computers on the Internet. Generally, TCP requires that all transmitted data be acknowledged by the computer that receives it. Sliding window algorithms enable multiple packets of data to be acknowledged with a single acknowledgement instead of one acknowledgement per packet. Therefore, sliding windows work better for the transmission of fewer, larger files than for many smaller ones. This means that application developers who build Web applications that require a number of small files, such as iconography or other small static artwork, should cluster those images together and display them using CSS Sprites. Let us explore an example of how CSS Sprites are utilized.
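The arithmetic behind background positioning is simple when the icons are stacked vertically and share the same height: the nth icon is revealed by shifting the composite image up by n times the icon height. The sketch below generates one CSS rule per icon under that assumption. The sprite path, icon names, and 24-pixel height are hypothetical, and real sprites often use uneven spacing, in which case the offsets must be recorded per icon.

```python
def sprite_rules(sprite_url: str, icon_names: list[str], icon_height: int) -> str:
    """Generate one CSS rule per icon in a vertical strip of equally tall icons."""
    rules = []
    for index, name in enumerate(icon_names):
        offset = -index * icon_height  # shift the strip up to reveal icon `index`
        rules.append(
            f".icon-{name} {{ background: url({sprite_url}) no-repeat 0 {offset}px; "
            f"height: {icon_height}px; }}"
        )
    return "\n".join(rules)


print(sprite_rules("/img/icons.gif", ["search", "home", "mail", "help"], 24))
```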

Consider the following code snippet from Microsoft’s Live Search site in conjunction with Figure 4-4. Notice that Figure 4-4 is a collection of four small icon files that have been combined into a single vertical strip of images. The code below loads the single file of four images, known as asset4.gif, and uses CSS positioning to display one of them as what appears to be a standalone image. This methodology ensures that only one HTTP request is made for the single image file, instead of four. Although this method of rendering images is very different from what most Web developers have been taught, it performs much better than traditional image rendering. Therefore, this practice is recommended for Web applications that require several small, single images.

<style type="text/css">
input.sw_qbtn
{
    background-color: #549C00;
    background-image: url(/s/live/asset4.gif);
    background-position: 0 -64px;
    background-repeat: no-repeat;
    border: none;
    cursor: pointer;
    height: 24px;
    margin: .14em;
    margin-right: .2em;
    vertical-align: middle;
    width: 24px;
    padding-top: 24px;
    line-height: 500%;
}
</style>

Thus far, we have discussed some examples of architectural and coding best practices for developing high performance Web applications. Let us review a few additional practices related to the organization and writing of application code that will also help improve the performance of your Web application pages.

Application developers have two basic options for incorporating JavaScript and CSS in their Web applications. They can separate the scripts and styles into external files or add them inline within the page markup. In terms of pure speed on a first visit, inline JavaScript and CSS render faster because no extra files have to be fetched, but other factors make this the wrong choice in most cases. When JavaScript and CSS are external, users benefit from the browser caching these files, so subsequent requests for the application are faster. The downside is that the user incurs additional HTTP requests to fetch the file or files the first time. Clearly there are tradeoffs to making scripts external for initial page loads, but in the long run, where users return to your application repeatedly, external files are a much better solution than inline script.

Progressive rendering of a Web page is an important visual progress indicator for users of your Web application, especially on slower connections. Ideally, we want the browser to display the page content as quickly as it is received from the server. Unfortunately, some browsers will prohibit progressive rendering of the page if style sheets are placed near the bottom of the document. They do this to avoid redrawing elements of the page if their respective styles change. Application developers should always reference the required CSS files within the HEAD section of the HTML document so that the browser knows how to properly display the content and can do so gradually. If CSS files are referenced outside the HEAD section, then the browser will block progressive rendering until it finds the necessary styles, which makes the page feel slower to the user.

When Web browsers load JavaScript files, they block additional downloading of other content, including any content being downloaded on parallel TCP connections. The browser behaves in this manner to ensure that scripts execute in the proper order and because a script may alter the page through document.write operations. While this makes perfect sense from a page processing perspective, it does little to help the performance of the page load. Therefore, application developers should defer the loading of any JavaScript until the end of the page, which will ensure that the application users get the benefit of progressive page loading.
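Both placement guidelines, style sheets in the HEAD section and scripts at the end of the page, lend themselves to an automated check. The following sketch uses Python's standard html.parser module to flag external style sheets referenced outside the HEAD section and external scripts referenced inside it. The class name and warning texts are assumptions, and the check is a heuristic: it does not consider inline scripts or style sheets added dynamically.

```python
from html.parser import HTMLParser


class PlacementChecker(HTMLParser):
    """Flags style sheets outside <head> and external scripts inside <head>."""

    def __init__(self) -> None:
        super().__init__()
        self.in_head = False
        self.warnings: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "head":
            self.in_head = True
        elif tag == "link" and attributes.get("rel") == "stylesheet" and not self.in_head:
            self.warnings.append(f"style sheet outside head: {attributes.get('href')}")
        elif tag == "script" and "src" in attributes and self.in_head:
            self.warnings.append(f"script in head: {attributes.get('src')}")

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


# Hypothetical page that violates both guidelines.
page = """<html><head>
<script src="/v1/app.js"></script>
</head><body>
<link rel="stylesheet" href="/v1/site.css"/>
<p>Hello</p>
</body></html>"""

checker = PlacementChecker()
checker.feed(page)
print(checker.warnings)
```

A check along these lines could run as part of a build so that misplaced references are caught before a page reaches performance testing.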

Note

It is worth mentioning that the release of Internet Explorer 8.0 addresses this problem. However, application developers should be cognizant of the browsers their users rely on and design accordingly.

Throughout this chapter, we have discussed a variety of common challenges and circumstances that lead to poorly performing Web applications. We have also seen how, with each challenge, there is a corresponding mitigation strategy or technique for ensuring that Web application pages perform well. While these techniques and strategies provide a tactical means to improve the performance of your Web applications, performance best practices must also be incorporated into day-to-day engineering processes and procedures. The key to implementing engineering best practices, such as those associated with performance, is to ensure that they are properly complemented by a sound set of engineering processes that incorporate them into the normal rhythm of software development. Let’s review how best to accomplish this.