Some of us will do our jobs well and some will not, but we will all be judged by only one thing—the result.

As you have probably guessed by now, writing solid code is about more than just writing application code. Investing in thoughtful designs, writing efficient programs, and incorporating development best practices are all important aspects of developing software applications. However, code requires exercise and analysis to ensure that the features and functionality being built work as expected. The users of our applications will, as the quote suggests, judge our efforts by the results that we deliver them. Therefore, it is important that we understand the quality of our application code before releasing it to them. By incorporating automated code analysis and testing into day-to-day engineering processes, we are better able to predict application quality and find and address bugs earlier in the release cycle.

Automated testing, sometimes referred to as test automation, is the use of software to control the execution of tests. This involves using programming frameworks such as the one found in Visual Studio or open-source projects like NUnit to automate testing against various sections of the application code. These automated tests help to determine whether the targeted areas of code are functioning as expected. With these frameworks, developers and testers can programmatically control test preconditions, execution conditions, and postconditions, such as automated bug filing when tests fail. Additionally, these frameworks provide the building blocks for developing a rich suite of automated tests. These suites of tests can be used to reduce the cost of application testing while improving the reliability and repeatability of the test effort.
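To make the idea of programmatically controlled preconditions and postconditions concrete, here is a minimal sketch. It uses Python's built-in `unittest` module rather than the Visual Studio framework or NUnit, purely to keep the example self-contained. The `ShoppingCart` class, the `run_suite` helper, and the `file_bugs` function are hypothetical names invented for illustration, and `file_bugs` only stands in for a real bug-filing integration.

```python
import unittest


class ShoppingCart:
    """Hypothetical class under test; not part of any real library."""

    def __init__(self):
        self.items = {}

    def add(self, name, price, quantity=1):
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        _, existing = self.items.get(name, (price, 0))
        self.items[name] = (price, existing + quantity)

    def total(self):
        return sum(price * qty for price, qty in self.items.values())


class ShoppingCartTests(unittest.TestCase):
    def setUp(self):
        # Precondition: every test starts from a fresh, empty cart.
        self.cart = ShoppingCart()

    def tearDown(self):
        # Postcondition: release whatever the test used.
        self.cart = None

    def test_total_sums_price_times_quantity(self):
        self.cart.add("pen", 2, quantity=3)
        self.cart.add("book", 10)
        self.assertEqual(self.cart.total(), 16)

    def test_rejects_non_positive_quantity(self):
        with self.assertRaises(ValueError):
            self.cart.add("pen", 2, quantity=0)


def run_suite():
    """Run the tests programmatically and return the TestResult."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ShoppingCartTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)


def file_bugs(result):
    """Stand-in for automated bug filing: one summary line per failed test."""
    return [f"BUG: {test.id()} failed" for test, _ in result.failures + result.errors]
```

Calling `file_bugs(run_suite())` returns an empty list while both tests pass. In a real project, the body of `file_bugs` would call whatever bug-tracking system the team uses.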

Incorporating programmatic, or automated, testing within the application development process is fundamental to understanding how an application will perform when users interact with it. Manual testing remains a great method for discovering usability-centric issues, or problems with complex user interaction flows, and should not be absent from the software-testing process. However, programmatic testing almost always helps find bugs earlier in the release cycle, which helps to improve overall code quality, especially when release cycles are short.

When automated code analysis, testing, and code coverage are applied within software development teams, the result is generally higher code quality, fewer regression bugs, and more stable application builds. Applying this level of automated rigor in the development process accrues value with every feature that gets added to the application. As software complexity increases, automated test execution and coverage keep pace and help maintain the quality of the application. Throughout this chapter, we will discuss the importance of investing in test automation and focus on tactics and tools for helping application development teams streamline their testing processes and address the quality of application code earlier in the release cycle. Additionally, we will highlight key metrics that can provide actionable indicators for code quality during the development cycle, which further helps to manage application quality. This chapter is for developers and testers alike, as both are equally critical to the process of managing application quality. Let’s start by discussing ways to streamline the testing process.

Throughout this book, we have discussed the "cost" associated with finding and fixing bugs within the application development life cycle. In Chapter 2, we cited the report "The Economic Impacts of Inadequate Infrastructure for Software Testing" as one example of the cost associated with finding bugs late in the development cycle rather than early. These findings also correlate with the Windows Live Hotmail case study of Chapter 1, which found that, as the team improved its testing during the development cycle, it noted fewer bugs during the later phases of the release. As more development teams adopt increasingly agile development models and shorter release cycles, it is a significant challenge to ensure high levels of quality with less time to develop and test. To address this, software engineering teams must invest in building a set of automated test cases that will both increase the amount of test coverage being applied over a shorter period of time and improve the consistency and repeatability of the testing.

As with any investment in automation, the benefits of increased efficiency, productivity, and repeatability of the process being automated are more than worth the investment required to get there. We can simply look back at some of the large-scale successes of the industrial revolution in the United States to understand the benefits that the automation of the assembly line brought to automobile manufacturing. Investing in test automation has a very similar value proposition. Once a test framework is in place and the corpus of automated tests is established and running, evaluating changes to the application code requires significantly less work and is accomplished in a fraction of the time it would take to test manually. This not only reduces the overall cost of developing a feature, but it also increases the probability of finding issues very early in the feature development cycle. That said, automation does not represent a replacement for real, live, breathing, and thinking human testers and manual testing. There remain many testing scenarios, especially with user interfaces, where manual testing is the only way to find certain classes of bugs. Therefore, it is important to adopt the right balance of automated and manual testing within your overall testing philosophy.

There is clear value to application quality that originates from investments in testing processes and practices. Although we have briefly started discussing automated testing, there are also other ways to approach improving the testing process from an end-to-end perspective. Let’s begin by discussing the importance of establishing a test rhythm for the team.

For any software development team, clear processes and procedures help increase efficiency, control costs, and, in general, improve the quality of the output. This principle applies broadly across the overall software development process and is especially important to critical activities like testing. Defining a structured and repeatable test rhythm for your project team not only provides a framework for managing the project’s quality gates but ultimately helps improve the consistency and repeatability of the testing effort. This involves identifying key points in the application development cycle and interleaving the appropriate testing processes so that specific quality objectives are achieved as a result of the testing. Accomplishing this requires that we first review some of the common types of testing that can be applied at various steps in the development process.

Unit testing. This is a method for examining and verifying the functionality of the individual units of code that make up the system. Typically, unit tests are written to exercise individual methods and properties of a class to ensure that the functionality has been implemented as specified and is working correctly. Unit tests are generally written by the application feature developers and often used to verify the quality of a feature before it is checked in to source control. This is sometimes referred to as white-box testing.

Build Verification Testing (BVT). Typically, BVTs are represented by a suite of individual test cases that are collectively used to verify the quality of an application’s build before being released for broader testing. The BVT test cases are often representative of major application functionality and focused on providing a quick examination of build quality. If an application build fails any of the test cases within the BVT suite of tests, the entire build is rejected from being tested any further. If an application build passes the BVT suite of tests, it is believed to be of sufficient quality to warrant broader, more focused testing. This type of testing is often referred to as black-box testing.

Functional testing. This type of testing evaluates the entire application in the context of specific functional requirements. It is intended to examine the limits of the application features both from the design and customer perspectives and to essentially find the quality boundaries. This type of testing can be very broadly scoped and also include other aspects of testing like stress, security, or performance testing of the application features.

Load testing. Sometimes referred to as scalability testing or stress testing, load testing is used to measure an application’s ability to perform under an increased user load. This type of testing is predominantly used to determine the resource consumption behavior of an application, such as how much memory or CPU it utilizes, and its ability to scale out to meet increased user demand. Load testing is often performed a few times within a typical testing cycle and, when the outcome is compared with previous results, can inform development teams of the effects of recent feature additions on overall application performance.

Security testing. This is a much more specialized form of testing that is designed to evaluate applications for flaws in security that could either compromise the integrity of a user’s personal information or expose the user or software to other malicious attacks. In recent years, security testing has become increasingly important as software becomes more globally connected and subject to a wide variety of predatory practices. Security testing is often used in conjunction with functional testing to ensure that the key security concepts of confidentiality, integrity, authentication, authorization, availability, and non-repudiation are addressed. In Chapter 6, we discussed threat modeling as a mechanism for analyzing application security threats during application design. The results of threat models are a valuable starting point for creating a security test plan and strategy and should be incorporated as part of the security testing effort.

Performance testing. Also used in conjunction with functional testing, performance testing is utilized to determine the speed of an application or specific functionality within an application. In Chapter 4, performance testing was mentioned in the context of measuring page load times or the speed at which Web application pages were being delivered to an end user’s browser. While this is one application of performance analysis, other methods may evaluate the speed of connections to data sources or even the number of specific calculations over a given interval of time.

Integration testing. This type of testing is broadly defined as the phase of software testing where all components of the application are brought together and evaluated for end-to-end quality. In some cases, this includes testing across all integrated systems upon which the application relies. The goal of this phase of testing is to ensure that functional scenarios are tested across the application and that all components of the system interact with one another appropriately.
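Of the test types above, build verification testing has the simplest decision rule: a single failing test case rejects the entire build. The following is a minimal sketch of that rule in Python, under the assumption that each BVT case can be represented as a named function returning true or false. The `BvtResult` type, the `run_bvt` function, and the example checks are hypothetical and do not correspond to any Visual Studio API.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class BvtResult:
    accepted: bool        # True only if every check passed
    failures: List[str]   # names of the checks that failed


def run_bvt(checks: List[Tuple[str, Callable[[], bool]]]) -> BvtResult:
    """Run each (name, check) pair; reject the build if any check fails."""
    failures = []
    for name, check in checks:
        try:
            passed = bool(check())
        except Exception:
            # A check that crashes counts as a failure, not as a skipped test.
            passed = False
        if not passed:
            failures.append(name)
    return BvtResult(accepted=not failures, failures=failures)


# Hypothetical checks standing in for major application functionality.
good_build = [
    ("application starts", lambda: True),
    ("user can sign in", lambda: True),
]
broken_build = [
    ("application starts", lambda: True),
    ("user can sign in", lambda: False),
]
```

Here `run_bvt(good_build).accepted` is true, while `run_bvt(broken_build)` is rejected and reports `"user can sign in"` as the failing case. Because the suite is small and quick to run, the build can be accepted or rejected before any broader testing begins.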

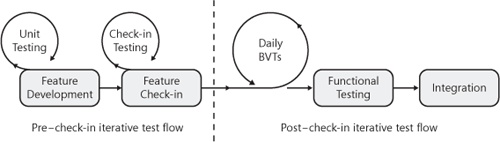

Having reviewed a few of the common types of testing that can be applied during the development process, it’s important that we examine how to apply them to a structured testing rhythm. As an example, let’s consider the testing process illustrated in Figure 10-1.

This example illustrates how we can interleave testing into day-to-day application development processes as a means to not only improve quality but to maintain agility in feature development. In this diagram, you may notice the line that separates the testing processes that occur before feature check-in from those that occur after check-in. During feature development, specific testing procedures such as automated unit testing and check-in testing can be used to iteratively evaluate quality as a means to prevent low-quality code from being introduced into the application’s source repository. Additionally, after feature code has been checked in, daily build verification testing and a regimen of functional and integration testing can be used to further evaluate the overall quality of the application. This balance of testing in our hypothetical process intends to achieve higher check-in and build quality, which translates into finding and addressing quality issues earlier in the release cycle.

It is important that the appropriate test processes and rhythms be established and incorporated into the overall software development life cycle within your organization. Without the correct mechanisms for finding, fixing, and tracking issues within your software applications, it will be nearly impossible to fully understand the quality level of your product. Because we have already established the need for a formal testing process that integrates into the day-to-day development efforts, let’s discuss the importance of managing the test workload.

We have already established that delivering successful products that are of high quality is about more than just coding. It is very important to ensure that all aspects of the project are aligned so that the team members are assured that they have performed their jobs effectively. This implies that the requirements we identified, the code we wrote, and the testing we have completed all reconcile with one another. Effective project teams recognize this and establish the processes required to align work items across the program management, development, and test disciplines. Fortunately, Visual Studio Team Foundation (VSTF), which is part of the Visual Studio Team System suite of client and server products, allows teams to accomplish this with relative ease.

In VSTF, all team participants across the aforementioned disciplines can track their respective work individually and relate work items through linking. For testers on the project team, this means making sure that work items for each test case being written are clearly identified and entered into VSTF. These test work items can then be linked to other work items, such as those that represent the coding effort or the scenario that defined the feature. This allows test teams to make sure that they are building automated tests for each feature within the project. Additionally, this makes building queries to track test progress across all features, or within a specific feature, possible.
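The value of linking becomes clearer with a small model. The sketch below is not the VSTF object model or its query language. It is a plain in-memory illustration in Python, with hypothetical names (`WorkItem`, `link`, `scenarios_without_tests`), of how linked work items make it possible to ask which scenarios have no test work item.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class WorkItem:
    id: int
    kind: str    # for example "scenario", "task", "test", or "bug"
    title: str
    links: Set[int] = field(default_factory=set)   # ids of linked work items


def link(a: WorkItem, b: WorkItem) -> None:
    """Record a two-way link between two work items."""
    a.links.add(b.id)
    b.links.add(a.id)


def scenarios_without_tests(items: List[WorkItem]) -> List[str]:
    """Return the titles of scenarios that have no linked test work item."""
    by_id = {item.id: item for item in items}
    return [
        item.title
        for item in items
        if item.kind == "scenario"
        and not any(by_id[i].kind == "test" for i in item.links)
    ]


checkout = WorkItem(1, "scenario", "Checkout")
search = WorkItem(2, "scenario", "Search")
checkout_test = WorkItem(3, "test", "Automate checkout flow")
link(checkout, checkout_test)

uncovered = scenarios_without_tests([checkout, search, checkout_test])
```

In this example, `uncovered` contains only `"Search"`, the one scenario with no linked test. A query of this kind against the team's real work item store answers the same question for the whole project.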

Note

Visual Studio Team Foundation 2008 does not make a "Test Work Item" template available out of the box, which would be useful for tracking work item properties that are specific to test work items as opposed to more generic work items. Fortunately, VSTF extensibility allows you to create custom work item types for the project template your team is using. Thus, you can create custom work item types for coding, testing, or any other function that might require extended work item properties.

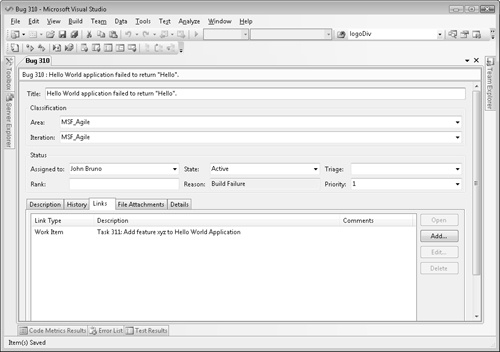

In addition to tracking test case development work items, test teams also need to ensure they are appropriately tracking any bugs found as a result of testing efforts. Bug tracking is perhaps the most important mechanism for understanding the state of quality in an application at any stage of the testing process. It is imperative that application teams have a process in place for logging application bugs and appropriately triaging and fixing them. To accommodate these practices, VSTF provides a "Bug" work item template as one of the default work item types. Like any other work item type within VSTF, bugs can be linked to other work items, dependent bugs, or the results of an automated test run. This allows a more granular level of quality tracking and reporting. Thus, teams can periodically review the bugs that have been logged, assess their priority, and assign them to other team members for resolution. An example of a bug that has been linked to an associated work item in VSTF is shown in Figure 10-2.

Thus far, we have established that defining a structured and repeatable test rhythm for your project team not only provides a framework for managing the project’s quality gates but ultimately helps improve the consistency and repeatability of the testing effort. Accomplishing this includes defining a test rhythm and process that integrates into the development cycle in a way that pushes quality upstream. We reviewed an example of one such process in Figure 10-1, and we discussed the importance of leveraging different forms of automated testing at different stages of the development process. Investing in this type of process for your team will pay dividends in terms of team efficiency, effectiveness, and overall application quality. Tightly coupling a formal testing process with the appropriate tracking mechanisms will give the team a more granular understanding of its progress and thus help the team govern its efforts more effectively. Once the appropriate test rhythm and tracking mechanisms have been instituted, test teams can focus the bulk of their energy on the most important aspect of their job: evaluating application features and code and finding bugs. Let’s review some common approaches to performing the code analysis necessary to find quality issues within the application code.