Let us change our traditional attitude to the construction of programs. Instead of imagining that our main task is to instruct a computer what to do, let us concentrate rather on explaining to human beings what we want a computer to do.

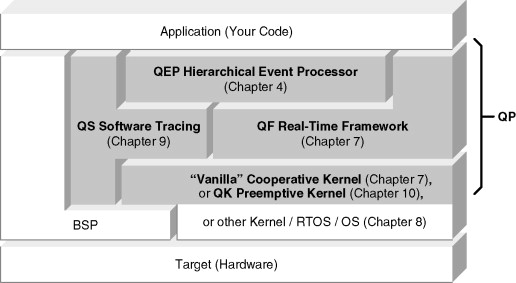

In this chapter I describe the implementation of a lightweight real-time framework called QF. As shown in Figure 7.1, QF is the central component of the QP event-driven platform, which also includes the QEP hierarchical event processor (described in Part I of this book) as well as the preemptive run-to-completion (RTC) kernel (QK) and the software tracing instrumentation (QS).

The focus of this chapter is on the generic, platform-independent QF source code. I devote Chapter 8 entirely to describing the platform-specific code that depends on the specific processor, compiler, and operating system/RTOS (including the case where QF is used without an RTOS).

I describe QF in a top-down fashion, beginning with an overview of the QF features and then presenting the framework structure, both logical (partitioning into classes) and physical (partitioning into files). In the remaining bulk of the chapter I explain the implementation of the QF services. As usual, I mostly refer to the C source code (located in the <qp>\qpc directory in the accompanying code). I mention the C++ version (located in the <qp>\qpcpp directory) only when the differences from C become important.

QF is a generic, portable, scalable, lightweight, real-time framework designed specifically for the domain of real-time embedded systems (RTES). QF can manage up to 63 concurrently executing active objects,1 which are encapsulated tasks (each embedding a state machine and an event queue) that communicate with one another asynchronously by sending and receiving events.

1 This does not mean that your application is limited to 63 state machines. Each active object can manage an unlimited number of stateful components, as described in the “Orthogonal Component” state pattern in Chapter 5.

The “embedded” design mindset means that QF is efficient in both time and space. Moreover, QF uses only deterministic algorithms, so you can always determine the upper bound on the execution time, the maximum interrupt disabling time, and the required stack space of any given QF service. QF also does not call any external code, not even the standard C or C++ libraries. In particular, QF does not use the standard heap (malloc() or the C++ operator new). Instead, the framework leaves to the clients the instantiation of any framework-derived objects and the initialization of the framework with the memory it needs for operation. All this memory could be allocated statically in hard real-time applications, but you could also use the standard heap or any combination of memory allocation mechanisms in other types of applications.

The companion Website to this book at www.quantum-leaps.com/psicc2/ contains the complete source code for all QP components, including QF. I hope that you will find the source code very clean and consistent. The code has been written in strict adherence to the coding standard documented at www.quantum-leaps.com/doc/AN_QL_Coding_Standard.pdf.

All QP source code is “lint-free.” Compliance was checked with the PC-lint/FlexeLint static analysis tool from Gimpel Software (www.gimpel.com). The QP distribution includes the <qp>\qpc\ports\lint subdirectory, which contains the batch script make.bat for compiling all the QP components with PC-lint.

The QP source code is also 98 percent compliant with the Motor Industry Software Reliability Association (MISRA) Guidelines for the Use of the C Language in Vehicle-Based Software [MISRA 98]. MISRA created these standards to improve the reliability and predictability of C programs in critical automotive systems. Full details of this standard can be obtained directly from the MISRA Website at www.misra.org.uk. The PC-lint configuration used to analyze QP code includes the MISRA rule checker.

Finally and most important, I believe that simply giving you the source code is not enough. To gain real confidence in event-driven programming, you need to understand how a real-time framework is ultimately implemented and how the different pieces fit together. This book, and especially this chapter, provides this kind of information.

All QF source code is written in portable ANSI-C, or in the Embedded C++ subset2 in the case of QF/C++, with all processor-specific, compiler-specific, or operating system-specific code abstracted into a clearly defined platform abstraction layer (PAL).

2 The Embedded C++ subset is defined online at www.caravan.net/ec2plus/.

In the simplest standalone configurations, QF runs on a “bare-metal” target CPU, completely replacing the traditional RTOS. As shown in Figure 7.1, the QP event-driven platform includes the simple nonpreemptive “vanilla” scheduler as well as the fully preemptive kernel QK. To date, the standalone QF configurations have been ported to over 10 different CPU architectures, ranging from 8-bit (e.g., 8051, PIC, AVR, 68H(S)08), through 16-bit (e.g., MSP430, M16C, x86-real mode) to 32-bit architectures (e.g., traditional ARM, ARM Cortex-M3, ColdFire, Altera Nios II, x86).

The QF framework can also work with a traditional OS/RTOS to take advantage of the existing device drivers, communication stacks, middleware, or any legacy code that requires a conventional “blocking” kernel. To date, QF has been ported to six major operating systems and RTOSs, including Linux (POSIX) and Win32.

As you'll see in the course of this chapter, high portability is the main challenge in writing a widely usable real-time framework like QF. Obviously, coming up with an efficient PAL that would correctly capture all possible platform variances required many iterations and actually porting the framework to several CPUs, operating systems, and compilers. (I describe porting the QF framework in Chapter 8.) The www.quantum-leaps.com Website contains a steadily growing number of QF ports, examples, and documentation.

All components of the QP event-driven platform, especially the QF real-time framework, are designed for scalability so that your final application image contains only the services that you actually use. QF is designed for deployment as a fine-granularity object library that you statically link with your applications. This strategy puts the onus on the linker to eliminate any unused code automatically at link time instead of burdening the application programmer with configuring the QF code for each application at compile time.

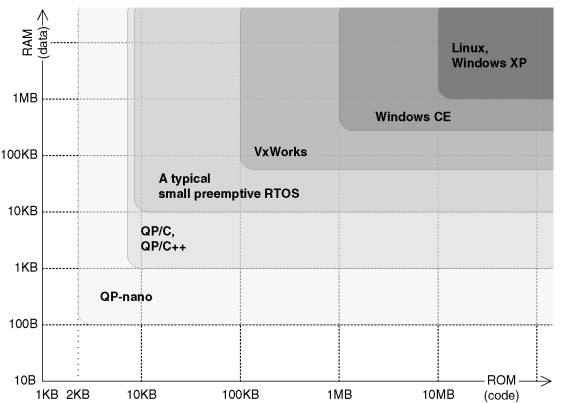

As shown in Figure 7.2, a minimal QP/C or QP/C++ system requires some 8KB of code space (ROM) and about 1KB of data space (RAM) to leave enough room for a meaningful application code and data. This code size corresponds to the footprint of a typical, small, bare-bones RTOS application except that the RTOS approach typically requires more RAM for the stacks.

Note

A typical, standalone QP configuration with QEP, QF, and the “vanilla” scheduler or the QK preemptive kernel, with all major features enabled, requires around 2-4KB of code. Obviously you need to budget additional ROM and RAM for your own application code and data. Figure 7.2 shows the application footprint.

However, the event-driven approach scales down even further, beyond the reach of any conventional RTOS. To address still smaller systems, a reduced QP version called QP-nano implements a subset of features provided in QP/C or QP/C++. QP-nano has been specifically designed to enable active object computing with hierarchical state machines on low-end 8- and 16-bit embedded MCUs. As shown in Figure 7.2, a meaningful QP-nano application starts from about 100 bytes of RAM and 2KB of ROM. I describe QP-nano in Chapter 12.

On the opposite end of the complexity spectrum, QP applications can also scale up to very big systems with gigabytes of RAM and multiple or multicore CPUs. Large-scale applications, such as various servers, often have large numbers of stateful components to manage, so the efficiency per component becomes critical. It turns out that the lightweight, event-driven, state machine-based approach easily scales up and offers many benefits over the traditional thread-per-component paradigm.

As shown in Figure 7.1, the QF real-time framework is designed to work closely with the QEP hierarchical event processor (Chapter 4). The two components complement each other in that QEP provides the UML-compliant state machine implementation, whereas QF provides the infrastructure of executing such state machines concurrently.

The design of QF leaves a lot of flexibility, however. You can configure the base class for derivation of active objects to be either the QHsm hierarchical state machine (Section 4.5 in Chapter 4), the simpler QFsm nonhierarchical state machine (Section 3.6 in Chapter 3), or even your own base class not defined in the QEP event processor. The latter option allows you to use QF with your own event processor.

The QF real-time framework supports direct event posting to specific active objects with first-in, first-out (FIFO) and last-in, first-out (LIFO) policies. QF also supports the more advanced publish-subscribe event delivery mechanism, as described in Section 6.4 in Chapter 6. Both mechanisms can coexist in a single application.

Perhaps the most valuable feature provided by the QF real-time framework is the efficient “zero-copy” event memory management, as described in Section 6.5 in Chapter 6. QF supports event multicasting based on the reference-counting algorithm, automatic garbage collection for events, efficient static events, “zero-copy” event deferral, and up to three event pools with different block sizes for optimal memory utilization.
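The reference-counting idea behind “zero-copy” multicasting can be illustrated with a minimal sketch. This is not the QF implementation: the type DemoEvt, the two-block pool, and the functions demo_new(), demo_post(), demo_gc(), and demo_free_blocks() are all hypothetical names chosen for illustration, and the critical sections a real framework would need are omitted.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical event type (not the QF QEvent layout). */
typedef struct {
    uint16_t sig;     /* signal of the event */
    uint8_t  refCtr;  /* number of queues currently holding the event */
    uint8_t  inUse;   /* nonzero while the block is allocated */
} DemoEvt;

#define DEMO_POOL_LEN 2u
static DemoEvt demo_pool[DEMO_POOL_LEN];  /* stand-in for an event pool */

/* Allocate an event from the pool; returns NULL when the pool is empty. */
DemoEvt *demo_new(uint16_t sig) {
    for (size_t i = 0; i < DEMO_POOL_LEN; ++i) {
        if (!demo_pool[i].inUse) {
            demo_pool[i].sig = sig;
            demo_pool[i].refCtr = 0u;
            demo_pool[i].inUse = 1u;
            return &demo_pool[i];
        }
    }
    return NULL;
}

/* "Post" the event: only the pointer is stored, the event is not copied. */
void demo_post(DemoEvt const **slot, DemoEvt *e) {
    ++e->refCtr;
    *slot = e;
}

/* Called by each recipient when it is done with the event. */
void demo_gc(DemoEvt *e) {
    assert(e->refCtr > 0u);
    if (--e->refCtr == 0u) {
        e->inUse = 0u;  /* last reference gone: recycle the block */
    }
}

/* Number of free blocks left in the pool. */
unsigned demo_free_blocks(void) {
    unsigned n = 0u;
    for (size_t i = 0; i < DEMO_POOL_LEN; ++i) {
        n += demo_pool[i].inUse ? 0u : 1u;
    }
    return n;
}
```

In this sketch, posting one event to two recipients increments the counter twice, and the block returns to the pool only after both recipients have called demo_gc().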

QF can manage an open-ended number of time events (timers). QF time events are extensible via structure derivation (inheritance in C++). Each time event can be armed as a one-shot or a periodic timeout generator. Only armed (active) time events consume CPU cycles.
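The claim that only armed time events consume CPU cycles can be sketched with a linked list that the clock tick walks. The following is an illustration under stated assumptions, not the QF code: DemoTimeEvt, demo_arm(), demo_tick(), and demo_armed_count() are hypothetical names, and the fired counter stands in for posting a timeout event to an active object.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical time event (not the QF QTimeEvt layout). */
typedef struct DemoTimeEvt {
    struct DemoTimeEvt *next;  /* link in the list of armed time events */
    uint16_t ctr;              /* down-counter in ticks; 0 means disarmed */
    uint16_t interval;         /* reload value; 0 means one-shot */
    unsigned fired;            /* demo only: number of expirations */
} DemoTimeEvt;

static DemoTimeEvt *demo_armed = NULL;  /* only armed time events live here */

/* Arm a time event; interval == 0 requests a one-shot timeout. */
void demo_arm(DemoTimeEvt *t, uint16_t nTicks, uint16_t interval) {
    assert(nTicks > 0u && t->ctr == 0u);  /* must not be armed already */
    t->ctr = nTicks;
    t->interval = interval;
    t->next = demo_armed;
    demo_armed = t;
}

/* Clock tick: visits armed time events only. */
void demo_tick(void) {
    DemoTimeEvt **pp = &demo_armed;
    while (*pp != NULL) {
        DemoTimeEvt *t = *pp;
        if (--t->ctr == 0u) {
            ++t->fired;                /* stand-in for posting the timeout */
            if (t->interval != 0u) {
                t->ctr = t->interval;  /* periodic: reload and stay armed */
                pp = &t->next;
            } else {
                *pp = t->next;         /* one-shot: unlink (disarm) */
                t->next = NULL;
            }
        } else {
            pp = &t->next;
        }
    }
}

/* Number of time events currently armed. */
unsigned demo_armed_count(void) {
    unsigned n = 0u;
    for (DemoTimeEvt *t = demo_armed; t != NULL; t = t->next) {
        ++n;
    }
    return n;
}
```

A disarmed time event is simply absent from the list, so the tick processing cost depends on the number of armed time events rather than on the total number that exist.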

QF provides two versions of native event queues. The first version is optimized for active objects and contains a portability layer to adapt it for either blocking kernels, the simple cooperative “vanilla” kernel (Section 6.3.7), or the QK preemptive kernel (Section 6.3.8 in Chapter 6). The second native queue version is a simple “thread-safe” queue not capable of blocking and designed for sending events to interrupts as well as storing deferred events. Both native QF event queue types are lightweight, efficient, deterministic, and thread-safe. They are optimized for passing just the pointers to events and are probably smaller and faster than full-blown message queues available in a typical RTOS.
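To make the idea of a queue that passes only pointers to events more concrete, here is a minimal ring-buffer sketch supporting both FIFO and LIFO posting. It is an assumption-laden illustration rather than the QEQueue implementation: DemoEvt, DemoQueue, DEMO_QLEN, and the demo_ functions are hypothetical, and the critical sections that make a real queue thread-safe are left out.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct { uint16_t sig; } DemoEvt;  /* hypothetical event type */

#define DEMO_QLEN 4u

/* Hypothetical queue: stores pointers to events, never the events. */
typedef struct {
    DemoEvt const *ring[DEMO_QLEN];
    unsigned head;   /* index of the next event to get */
    unsigned nUsed;  /* number of events currently in the queue */
} DemoQueue;

/* Append at the back (FIFO); returns 0 when the queue is full. */
int demo_post_fifo(DemoQueue *q, DemoEvt const *e) {
    assert(e != NULL);
    if (q->nUsed == DEMO_QLEN) {
        return 0;
    }
    q->ring[(q->head + q->nUsed) % DEMO_QLEN] = e;
    ++q->nUsed;
    return 1;
}

/* Insert at the front (LIFO); returns 0 when the queue is full. */
int demo_post_lifo(DemoQueue *q, DemoEvt const *e) {
    assert(e != NULL);
    if (q->nUsed == DEMO_QLEN) {
        return 0;
    }
    q->head = (q->head + DEMO_QLEN - 1u) % DEMO_QLEN;
    q->ring[q->head] = e;
    ++q->nUsed;
    return 1;
}

/* Remove the front event; returns NULL when the queue is empty. */
DemoEvt const *demo_get(DemoQueue *q) {
    if (q->nUsed == 0u) {
        return NULL;
    }
    DemoEvt const *e = q->ring[q->head];
    q->head = (q->head + 1u) % DEMO_QLEN;
    --q->nUsed;
    return e;
}
```

Every operation runs in constant time, which is the property that makes such a queue deterministic.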

QF provides a fast, deterministic, and thread-safe memory pool. Internally, QF uses memory pools as event pools for managing dynamic events, but you can also use memory pools for allocating any other objects in your application.
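A fixed-block memory pool of this kind is commonly built as a linked list of free blocks threaded through the storage that the client provides. The sketch below shows that general technique only; it is not the QMPool implementation, the names DemoBlock, DemoPool, and demo_pool_*() are hypothetical, and interrupt locking is omitted.

```c
#include <assert.h>
#include <stddef.h>

#define DEMO_BLOCK_SIZE 16u

/* A free block stores the link to the next free block in its own memory. */
typedef union DemoBlock {
    union DemoBlock *next;
    unsigned char payload[DEMO_BLOCK_SIZE];
} DemoBlock;

typedef struct {
    DemoBlock *free;  /* head of the free list */
    unsigned nFree;   /* number of free blocks */
} DemoPool;

/* The client supplies the storage; the pool never calls malloc(). */
void demo_pool_init(DemoPool *p, DemoBlock *sto, unsigned nBlocks) {
    assert(sto != NULL && nBlocks > 0u);
    p->free = NULL;
    p->nFree = 0u;
    for (unsigned i = nBlocks; i > 0u; --i) {
        sto[i - 1u].next = p->free;  /* chain the blocks into the free list */
        p->free = &sto[i - 1u];
        ++p->nFree;
    }
}

/* Constant-time allocation; returns NULL when the pool is exhausted. */
void *demo_pool_get(DemoPool *p) {
    DemoBlock *b = p->free;
    if (b != NULL) {
        p->free = b->next;
        --p->nFree;
    }
    return b;
}

/* Constant-time recycling of a block obtained from the same pool. */
void demo_pool_put(DemoPool *p, void *blk) {
    DemoBlock *b = blk;
    assert(b != NULL);
    b->next = p->free;
    p->free = b;
    ++p->nFree;
}
```

Because both demo_pool_get() and demo_pool_put() touch only the head of the free list, their execution time does not depend on the pool size or on the allocation history.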

The QF real-time framework contains a portable, cooperative “vanilla” kernel, as described in Section 6.3.7 of Chapter 6. Chapter 8 presents the QF port to the “vanilla” kernel.

The QF real-time framework can also work with a deterministic, preemptive, nonblocking QK kernel. As described in Section 6.3.8 in Chapter 6, run-to-completion kernels, like QK, provide preemptive multitasking to event-driven systems at a fraction of the cost in CPU and stack usage compared to traditional blocking kernels/RTOSs. I describe QK implementation in Chapter 10.

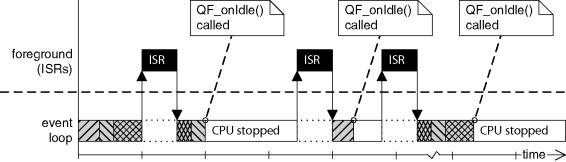

Most modern embedded microcontrollers (MCUs) provide an assortment of low-power sleep modes designed to conserve power by gating the clock to the CPU and various peripherals. The sleep modes are entered under the software control and are exited upon an external interrupt.

The event-driven paradigm is particularly suitable for taking advantage of these power-savings features because every event-driven system can easily detect situations in which the system has no more events to process, called the idle condition (Section 6.3.7). In both standalone QF configurations, either with the cooperative “vanilla” kernel or with the QK preemptive kernel, the QF framework provides callback functions for handling the idle condition. These callbacks are carefully designed to place the MCU into a low-power sleep mode safely and without creating race conditions with active interrupts.

The QF real-time framework consistently uses the Design by Contract (DbC) philosophy described in Section 6.7 in Chapter 6. QF constantly monitors the application by means of assertions built into the framework. Among others, QF uses assertions to enforce the event delivery guarantee, which immensely simplifies event-driven application design.

As described in Section 6.8 in Chapter 6, a real-time framework can use software-tracing techniques to provide more comprehensive and detailed information about the running application than any traditional RTOS. The QF code contains the software-tracing instrumentation so it can provide unprecedented visibility into the system. Nominally the instrumentation is inactive, meaning that it does not add any code size or runtime overhead. But by defining the macro Q_SPY, you can activate the instrumentation. I devote all of Chapter 11 to software tracing.

Note

The QF code is instrumented with QS (Q-Spy) macros to generate software trace output from active object execution. However, the instrumentation is disabled by default and for better clarity will not be shown in the listings discussed in this chapter. Refer to Chapter 11 for more information about the QS software-tracing implementation.

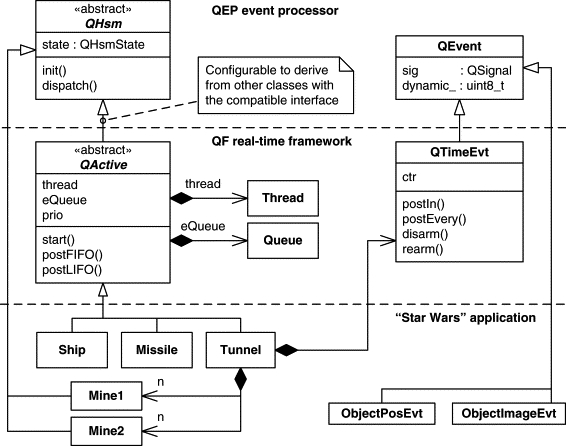

Figure 7.3 shows the main QF classes and their relation to the application-level code, such as the “Fly ‘n’ Shoot” game example from Chapter 1. Like all real-time frameworks, QF provides the central base class QActive for derivation of active object classes. The QActive class is abstract, which means that it is not intended for direct instantiation but rather only for derivation of concrete3 active object classes, such as Ship, Missile, and Tunnel shown in Figure 7.3.

3 Concrete class is the OOP term and denotes a class that has no abstract operations or protected constructors. A concrete class can be instantiated, as opposed to an abstract class, which cannot be instantiated.

By default, the QActive class derives from the QHsm hierarchical state machine class defined in the QEP event processor (Chapter 4). This means that by virtue of inheritance active objects are HSMs and inherit the init() and dispatch() state machine interface. QActive also contains a thread of execution and an event queue, which can be native QF classes, or might be coming from the underlying RTOS.

QF uses the same QEvent class for representing events as the QEP event processor. Additionally, the framework supplies the time event class QTimeEvt, with which the applications make timeout requests.

QF also provides several services to the applications, which are not shown in the class diagram in Figure 7.3. These additional QF services include generating new dynamic events (Q_NEW()), publishing events (QF_publish()), the native QF event queue class (QEQueue), the native QF memory pool class (QMPool), and the built-in cooperative “vanilla” kernel (see Chapter 6, Section 6.3.7).

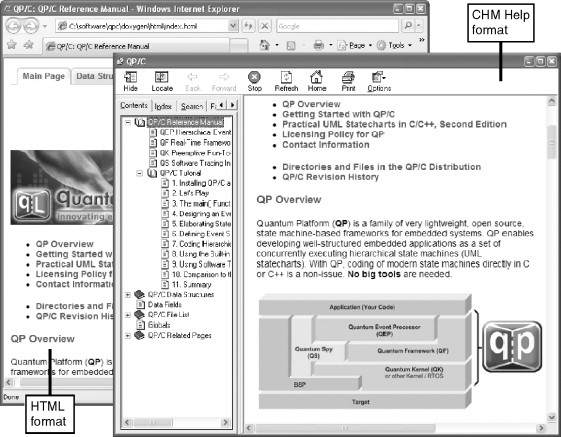

Listing 7.1 shows the platform-independent directories and files comprising the QF real-time framework in C. The structure of the C++ version is almost identical except that the implementation files have the .cpp extension.

Listing 7.1. Platform-independent QF source code organization

qpc - QP/C root directory (qpcpp for QP/C++)

+-doxygen - QP/C documentation generated with Doxygen

| +-html - “QP/C Reference Manual” in HTML format

| | +-index.html - The starting HTML page for the “QP/C Reference Manual”

| +-Doxyfile - Doxygen configuration file to generate the Manual

| +-qpc.chm - “QP/C Reference Manual” in CHM Help format

| +-qpc_rev.h - QP/C revision history

+-include - QP platform-independent header files

| +-qf.h - QF platform-independent interface

| +-qequeue.h - QF native event queue facility

| +-qmpool.h - QF native memory pool facility

| +-qpset.h - QF native priority set facility

| +-qvanilla.h - QF native “vanilla” cooperative kernel interface

| +-source - QF platform-independent source code (*.C files)

| | +-qf_pkg.h - internal, interface for the QF implementation

| | +-qa_defer.c - definition of QActive_defer()/QActive_recall()

| | +-qa_ctor.c - definition of QActive_ctor()

| | +-qa_fifo.c - definition of QActive_postFIFO()

| | +-qa_fifo_.c - definition of QActive_postFIFO_()

| | +-qa_get_.c - definition of QActive_get_()

| | +-qa_lifo.c - definition of QActive_postLIFO()

| | +-qa_lifo_.c - definition of QActive_postLIFO_()

| | +-qa_sub.c - definition of QActive_subscribe()

| | +-qa_usub.c - definition of QActive_unsubscribe()

| | +-qa_usuba.c - definition of QActive_unsubscribeAll()

| | +-qeq_fifo.c - definition of QEQueue_postFIFO()

| | +-qeq_get.c - definition of QEQueue_get()

| | +-qeq_init.c - definition of QEQueue_init()

| | +-qeq_lifo.c - definition of QEQueue_postLIFO()

| | +-qf_act.c - definition of QF_active_[]

| | +-qf_gc.c - definition of QF_gc_()

| | +-qf_log2.c - definition of QF_log2Lkup[]

| | +-qf_new.c - definition of QF_new_()

| | +-qf_pool.c - definition of QF_poolInit()

| | +-qf_psini.c - definition of QF_psInit()

| | +-qf_pspub.c - definition of QF_publish()

| | +-qf_pwr2.c - definition of QF_pwr2Lkup_[]

| | +-qf_tick.c - definition of QF_tick()

| | +-qmp_get.c - definition of QMPool_get()

| | +-qmp_init.c - definition of QMPool_init()

| | +-qmp_put.c - definition of QMPool_put()

| | +-qte_arm.c - definition of QTimeEvt_arm_()

| | +-qte_ctor.c - definition of QTimeEvt_ctor()

| | +-qte_darm.c - definition of QTimeEvt_darm()

| | +-qte_rarm.c - definition of QTimeEvt_rearm()

| | +-qvanilla.c - “vanilla” cooperative kernel implementation

| +-lint - QF options for lint

| | +-opt_qf.lnt - PC-lint options for linting QF

+-ports - Platform-specific QP ports

The QF source files typically contain just one function or data structure definition per file. This design aims at deploying QF as a fine-granularity library that you statically link with your applications. Fine granularity means that the QF library consists of several small, loosely coupled modules (object files) rather than a single module that contains all functionality. For example, a separate module qa_lifo.c implements the QActive_postLIFO() function; therefore, if your application never calls this function, the linker will not pull in the qa_lifo.obj module. This strategy puts the burden on the linker to do the “heavy lifting” of automatically eliminating any unused code at link time, rather than on the application programmer to configure the QF code for each application at compile time.

QF, just like any other system-level software, must protect certain sequences of instructions against preemptions to guarantee thread-safe operation. The sections of code that must be executed indivisibly are called critical sections.

In an embedded system environment, QF uses the simplest and most efficient way to protect a section of code from disruptions, which is to lock interrupts on entry to the critical section and unlock interrupts at the exit from the critical section. In systems where locking interrupts is not allowed, QF can employ other mechanisms supported by the underlying operating system, such as a mutex.

Note

The maximum time spent in a critical section directly affects the system's responsiveness to external events (interrupt latency). All QF critical sections are carefully designed to be as short as possible and are of the same order as critical sections in any commercial RTOS. Of course, the length of critical sections depends on the processor architecture and the quality of the code generated by the compiler.

To hide the actual critical section implementation method available for a particular processor, compiler, and operating system, the QF platform abstraction layer includes two macros, QF_INT_LOCK() and QF_INT_UNLOCK(), to lock and unlock interrupts, respectively.

The most general critical section implementation involves saving the interrupt status before entering the critical section and restoring the status upon exit from the critical section. Listing 7.2 illustrates the use of this critical section type.

(1) The temporary variable lock_key holds the interrupt status across the critical section.

(2) Right before entering the critical section, the current interrupt status is obtained from the CPU and saved in the lock_key variable. Of course, the name of the actual function to obtain the interrupt status can be different in your system. This function could actually be a macro or inline assembly statement.

(3) Interrupts are locked using the mechanism provided by the compiler.

(4) This section of code executes indivisibly because it cannot be interrupted.

(5) The original interrupt status is restored from the lock_key variable. This step unlocks interrupts only if they were unlocked at step 2. Otherwise, interrupts remain locked.
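The five steps above can be sketched as follows. This is only an illustration that runs on a host computer: the variable demo_int_enabled simulates the CPU interrupt flag, and the function names get_int_status(), int_lock(), and set_int_status() are assumptions standing in for whatever your compiler or processor actually provides.

```c
#include <assert.h>

/* Simulated CPU interrupt flag (assumption): 1 = unlocked, 0 = locked. */
static unsigned demo_int_enabled = 1u;

/* Hypothetical stand-ins for compiler- or CPU-specific facilities. */
unsigned get_int_status(void)           { return demo_int_enabled; }
void     int_lock(void)                 { demo_int_enabled = 0u; }
void     set_int_status(unsigned state) { demo_int_enabled = state; }

int demo_shared_counter = 0;  /* data protected by the critical section */

void demo_increment(void) {
    unsigned lock_key;                /* (1) holds the interrupt status */

    lock_key = get_int_status();      /* (2) save the current status */
    int_lock();                       /* (3) lock interrupts */

    assert(get_int_status() == 0u);
    ++demo_shared_counter;            /* (4) critical section of code */

    set_int_status(lock_key);         /* (5) restore the saved status */
}
```

Calling demo_increment() with interrupts already locked leaves them locked on return, which is exactly the property that makes this type of critical section nestable.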

Listing 7.3 shows an example of the “saving and restoring interrupt status” policy.

(1) The macro QF_INT_KEY_TYPE denotes a data type of the “interrupt key” variable, which holds the interrupt status. Defining this macro in the qf_port.h header file indicates to the QF framework that the policy of “saving and restoring interrupt status” is used, as opposed to the policy of “unconditional locking and unlocking interrupts” described in the next section.

(2) The macro QF_INT_LOCK() encapsulates the mechanism of interrupt locking. The macro takes the parameter key_, into which it saves the interrupt lock status.

Note

The do {…} while (0) loop around the QF_INT_LOCK() macro is the standard practice for syntactically correct grouping of instructions. You should convince yourself that the macro can be used safely inside the if-else statement (with the semicolon after the macro) without causing the “dangling-else” problem. I use this technique extensively in many QF macros.
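A minimal sketch of macro definitions for the “saving and restoring interrupt status” policy might look as follows. The macro names QF_INT_KEY_TYPE, QF_INT_LOCK(), and QF_INT_UNLOCK() come from the text; everything else is an assumption, including the simulated interrupt flag, the helper functions get_int_status(), int_lock(), and set_int_status(), and the demonstration function demo_guarded(), which uses the macro inside an if-else statement.

```c
#include <assert.h>

/* Simulated CPU interrupt flag (assumption): 1 = unlocked, 0 = locked. */
static unsigned demo_int_enabled = 1u;

/* Hypothetical stand-ins for compiler- or CPU-specific facilities. */
unsigned get_int_status(void)           { return demo_int_enabled; }
void     int_lock(void)                 { demo_int_enabled = 0u; }
void     set_int_status(unsigned state) { demo_int_enabled = state; }

/* Defining the key type selects the "save and restore" policy. */
#define QF_INT_KEY_TYPE  unsigned

/* The do-while(0) wrapper turns the two statements into exactly one. */
#define QF_INT_LOCK(key_) do { \
    (key_) = get_int_status(); \
    int_lock(); \
} while (0)

#define QF_INT_UNLOCK(key_)  set_int_status(key_)

unsigned demo_skipped = 0u;  /* demo only: counts calls that did not lock */

/* Returns 1 if interrupts were locked inside the function, 0 otherwise. */
int demo_guarded(int doLock) {
    QF_INT_KEY_TYPE key = get_int_status();
    int lockedInside;

    if (doLock)
        QF_INT_LOCK(key);  /* one statement, so the else pairs correctly */
    else
        ++demo_skipped;

    lockedInside = (get_int_status() == 0u);
    QF_INT_UNLOCK(key);    /* restores whatever status was saved */
    return lockedInside;
}
```

Without the do-while(0) wrapper, the semicolon after QF_INT_LOCK(key) would terminate the if statement after the first macro statement and the else branch would fail to compile.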

The main advantage of the “saving and restoring interrupt status” policy is the ability to nest critical sections. The QF real-time framework is carefully designed to never nest critical sections internally. However, nesting of critical sections can easily occur when QF functions are invoked from within an already established critical section, such as an interrupt service routine (ISR). Most processors lock interrupts in hardware upon the interrupt entry and unlock upon the interrupt exit, so the whole ISR is a critical section. Sometimes you can unlock interrupts inside ISRs, but often you cannot. In the latter case, you have no choice but to invoke QF services, such as event posting or publishing, with interrupts locked. This is exactly when you must use this type of critical section.

The simpler and faster critical section policy is to always unconditionally unlock interrupts in QF_INT_UNLOCK(). Listing 7.4 provides an example of the QF macro definitions to specify this type of critical section.

(1) The macro QF_INT_KEY_TYPE is not defined in this case. The absence of the QF_INT_KEY_TYPE macro indicates to the QF framework that the interrupt status is not saved across the critical section.

(2) The macro QF_INT_LOCK() encapsulates the mechanism of interrupt locking. The macro takes the parameter key_, but this parameter is not used in this case.

(3) The macro QF_INT_UNLOCK() encapsulates the mechanism of unlocking interrupts. The macro always unconditionally unlocks interrupts. The parameter key_ is ignored in this case.

The policy of “unconditional locking and unlocking interrupts” is simple and fast, but it does not allow nesting of critical sections, because interrupts are always unlocked upon exit from a critical section, regardless of whether interrupts were already locked on entry.
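The nesting hazard is easy to demonstrate with a small host-side sketch. The macro names come from the text, whereas the simulated interrupt flag, the helpers int_lock() and int_unlock(), and the functions demo_inner() and demo_nested() are assumptions made for illustration.

```c
#include <assert.h>

/* Simulated CPU interrupt flag (assumption): 1 = unlocked, 0 = locked. */
unsigned demo_int_enabled = 1u;

/* Hypothetical stand-ins for compiler- or CPU-specific facilities. */
void int_lock(void)   { demo_int_enabled = 0u; }
void int_unlock(void) { demo_int_enabled = 1u; }

/* QF_INT_KEY_TYPE is intentionally NOT defined for this policy. */
#define QF_INT_LOCK(key_)    int_lock()
#define QF_INT_UNLOCK(key_)  int_unlock()

/* A service with its own critical section; reports the flag on exit. */
unsigned demo_inner(void) {
    QF_INT_LOCK(dummy);
    /* critical section of code */
    QF_INT_UNLOCK(dummy);          /* always unlocks, unconditionally */
    return demo_int_enabled;
}

/* Calls demo_inner() from within an outer critical section. */
unsigned demo_nested(void) {
    unsigned afterInner;

    QF_INT_LOCK(dummy);            /* outer critical section begins */
    afterInner = demo_inner();     /* inner exit unlocks interrupts... */
    assert(demo_int_enabled == 1u);/* ...so the outer section is broken */
    QF_INT_UNLOCK(dummy);
    return afterInner;
}
```

In this sketch demo_nested() observes interrupts unlocked in the middle of its own critical section, which is why this policy must not be used when QF services are called with interrupts already locked.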

The inability to nest critical sections does not necessarily mean that you cannot nest interrupts. Many processors are equipped with a prioritized interrupt controller, such as the Intel 8259A Programmable Interrupt Controller (PIC) in the PC or the Nested Vectored Interrupt Controller (NVIC) integrated inside the ARM Cortex-M3. Such interrupt controllers handle interrupt prioritization and nesting before the interrupts reach the processor core. Therefore, you can safely unlock interrupts at the processor level, thus avoiding nesting of critical sections inside ISRs. Listing 7.5 shows the general structure of an ISR in the presence of an interrupt controller.

Listing 7.5. General structure of an ISR in the presence of a prioritized interrupt controller

(1) void interrupt ISR(void) { /* entered with interrupts locked in hardware */

(2) Acknowledge the interrupt to the interrupt controller (optional)

(3) Clear the interrupt source, if level triggered

(4) QF_INT_UNLOCK(dummy); /* unlock the interrupts at the processor level */

(5) Handle the interrupt, use QF calls, e.g., QF_tick(), Q_NEW or QF_publish()

(6) QF_INT_LOCK(dummy); /* lock the interrupts at the processor level */

(7) Write End-Of-Interrupt (EOI) instruction to the Interrupt Controller

}

(1) Most processors enter the ISR with interrupts locked in hardware.

(2) The interrupt controller must be notified about entering the interrupt. Often this notification happens automatically in hardware before vectoring (jumping) to the ISR. However, sometimes the interrupt controller requires a specific notification from the software. Check your processor's datasheet.

(3) You need to explicitly clear the interrupt source, if it is level triggered. Typically you do it before unlocking interrupts at the CPU level, but a prioritized interrupt controller will prevent the same interrupt from preempting itself, so it really does not matter if you clear the source before or after unlocking interrupts.

(4) Interrupts are explicitly unlocked at the CPU level, which is the key step of this ISR. Enabling interrupts allows the interrupt controller to do its job, that is, to prioritize interrupts. At the same time, enabling interrupts terminates the critical section established upon the interrupt entry. Note that this step is only necessary when the hardware actually locks interrupts upon the interrupt entry (e.g., the ARM Cortex-M3 leaves interrupts unlocked).

(5) The main ISR body executes outside the critical section, so QF services can be safely invoked without nesting critical sections.

Note

The prioritized interrupt controller remembers the priority of the currently serviced interrupt and allows only interrupts of higher priority than the current priority to preempt the ISR. Lower- and same-priority interrupts are locked at the interrupt controller level, even though the interrupts are unlocked at the processor level. The interrupt prioritization happens in the interrupt controller hardware until the interrupt controller receives the end-of-interrupt (EOI) instruction.

The QF platform abstraction layer (PAL) uses the interrupt locking/unlocking macros QF_INT_LOCK(), QF_INT_UNLOCK(), and QF_INT_KEY_TYPE in a slightly modified form. The PAL defines internally the parameterless macros, shown in Listing 7.6. Please note the trailing underscores in the internal macros’ names.

Listing 7.6. Internal macros for interrupt locking/unlocking (file <qp>\qpc\qf\source\qf_pkg.h)

The internal macros QF_INT_LOCK_KEY_, QF_INT_LOCK_(), and QF_INT_UNLOCK_() enable writing the same code for the case when the interrupt key is defined and when it is not. The following code snippet shows the usage of the internal QF macros. Convince yourself that this code works correctly for both interrupt-locking policies.
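As a hedged illustration of how such parameterless macros could be layered on top of the port-level macros, consider the sketch below. The names QF_INT_LOCK_KEY_, QF_INT_LOCK_(), and QF_INT_UNLOCK_() come from the text; the key variable name intLockKey_, the simulated interrupt flag, the helper functions, and the service demo_service() are assumptions and do not reproduce the actual contents of qf_pkg.h.

```c
#include <assert.h>

/* Simulated CPU interrupt flag (assumption): 1 = unlocked, 0 = locked. */
static unsigned demo_int_enabled = 1u;
unsigned get_int_status(void)           { return demo_int_enabled; }
void     int_lock(void)                 { demo_int_enabled = 0u; }
void     set_int_status(unsigned state) { demo_int_enabled = state; }

/* Port-level macros; this sketch selects the "save and restore" policy. */
#define QF_INT_KEY_TYPE      unsigned
#define QF_INT_LOCK(key_)    do { (key_) = get_int_status(); int_lock(); } while (0)
#define QF_INT_UNLOCK(key_)  set_int_status(key_)

/* Internal, parameterless macros (note the trailing underscores). */
#ifndef QF_INT_KEY_TYPE
    #define QF_INT_LOCK_KEY_                  /* no key variable needed */
    #define QF_INT_LOCK_()    QF_INT_LOCK(ignore)
    #define QF_INT_UNLOCK_()  QF_INT_UNLOCK(ignore)
#else
    #define QF_INT_LOCK_KEY_  QF_INT_KEY_TYPE intLockKey_;
    #define QF_INT_LOCK_()    QF_INT_LOCK(intLockKey_)
    #define QF_INT_UNLOCK_()  QF_INT_UNLOCK(intLockKey_)
#endif

static int demo_counter = 0;

/* A service written once, valid for both interrupt-locking policies. */
int demo_service(void) {
    QF_INT_LOCK_KEY_        /* declares the key, or expands to nothing */
    int result;

    QF_INT_LOCK_();
    result = ++demo_counter;   /* critical section of code */
    QF_INT_UNLOCK_();
    return result;
}
```

If the port does not define QF_INT_KEY_TYPE, the key declaration vanishes and the argument passed to the port-level macros is a placeholder that those macros never use, so the body of demo_service() compiles unchanged.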

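As shown in Figure 7.3, the QF real-time framework provides the base structure QActive for deriving application-specific active objects. QActive combines three essential elements: a state machine (inherited from the base class, which by default is QHsm), an event queue, and a thread of execution with a unique priority.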

Listing 7.7 shows the declaration of the QActive base structure and related functions.

Listing 7.7. The QActive base class for derivation of active objects (file <qp>\qpc\include\qf.h)

(3) #define QF_ACTIVE_CTOR_(me_, initial_) QHsm_ctor((me_), (initial_))

(5) #define QF_ACTIVE_DISPATCH_(me_, e_) QHsm_dispatch((me_), (e_))

(7) QF_ACTIVE_SUPER_ super; /* derives from QF_ACTIVE_SUPER_ */

(8) QF_EQUEUE_TYPE eQueue; /* event queue of active object */

(9) QF_OS_OBJECT_TYPE osObject; /* OS-object for blocking the queue */

(10) QF_THREAD_TYPE thread; /* execution thread of the active object */

(12) uint8_t running; /* flag indicating if the AO is running */

(13) void QActive_start(QActive *me, uint8_t prio,

QEvent const *qSto[], uint32_t qLen,

(18) void QActive_subscribe(QActive const *me, QSignal sig);

(19) void QActive_unsubscribe(QActive const *me, QSignal sig);

(21) void QActive_defer(QActive *me, QEQueue *eq, QEvent const *e);

(22) QEvent const *QActive_recall(QActive *me, QEQueue *eq);

(1) The macro QF_ACTIVE_SUPER_ specifies the ultimate base class for deriving active objects. This macro lets you define (in the QF port) any base class for QActive as long as the base class supports the state machine interface. (See Chapter 3, “Generic State Machine Interface.”)

(2) When the macro QF_ACTIVE_SUPER_ is not defined in the QF port, the default is the QHsm class provided in the QEP hierarchical event processor.

(3) The macro QF_ACTIVE_CTOR_() specifies the name of the base class constructor.

(4) The macro QF_ACTIVE_INIT_() specifies the name of the base class init() function.

(5) The macro QF_ACTIVE_DISPATCH_() specifies the name of the base class dispatch() function.

(6) The macro QF_ACTIVE_STATE_ specifies the type of the parameter for the base class constructor.

By defining the macros QF_ACTIVE_XXX_ to your own class, you can eliminate the dependencies between the QF framework and the QEP event processor. In other words, you can replace QEP with your own event processor, perhaps based on one of the techniques discussed in Chapter 3, or not based on state machines at all (e.g., you might want to try protothreads [Dunkels+ 06]). Consider the following definitions:
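A minimal sketch of such definitions, assuming a hypothetical base class MyClass with hypothetical functions MyClass_ctor(), MyClass_init(), and MyClass_dispatch(), might look as follows. The QF_ACTIVE_XXX_ macro names come from Listing 7.7; the types DemoEvt and DemoActive and the function DemoActive_ctor() are stand-ins invented for this illustration and are much simpler than the real QEvent and QActive.

```c
#include <assert.h>
#include <stddef.h>

typedef struct { int sig; } DemoEvt;   /* hypothetical event type */

/* Hypothetical replacement for the QHsm base class. */
typedef struct {
    void *initial;   /* whatever the custom event processor starts from */
    int   started;   /* set by MyClass_init() */
    int   lastSig;   /* last signal seen by MyClass_dispatch() */
} MyClass;

void MyClass_ctor(MyClass *me, void *initial) {
    me->initial = initial;
    me->started = 0;
    me->lastSig = 0;
}
void MyClass_init(MyClass *me, DemoEvt const *e) {
    (void)e;         /* the initialization event is unused in this sketch */
    me->started = 1;
}
void MyClass_dispatch(MyClass *me, DemoEvt const *e) {
    assert(me->started);
    me->lastSig = e->sig;
}

/* Redirect the framework from QEP to the custom event processor. */
#define QF_ACTIVE_SUPER_                MyClass
#define QF_ACTIVE_CTOR_(me_, initial_)  MyClass_ctor((me_), (initial_))
#define QF_ACTIVE_INIT_(me_, e_)        MyClass_init((me_), (e_))
#define QF_ACTIVE_DISPATCH_(me_, e_)    MyClass_dispatch((me_), (e_))
#define QF_ACTIVE_STATE_                void *

/* Stand-in for QActive: the base class is chosen through the macros. */
typedef struct {
    QF_ACTIVE_SUPER_ super;
    unsigned char prio;
} DemoActive;

void DemoActive_ctor(DemoActive *me, QF_ACTIVE_STATE_ initial) {
    QF_ACTIVE_CTOR_(&me->super, initial);  /* initialize the base class */
    me->prio = 0u;
}
```

Because the framework code would refer to the base class only through these macros, swapping the event processor in this sketch requires no change to DemoActive itself.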

(7) The first member super specifies the base class for QActive (see the sidebar “Single Inheritance in C” in Chapter 1).

(8) The type of the event queue member eQueue is platform-specific. For example, in the standalone QF configurations, the macro QF_EQUEUE_TYPE is defined as the native QF event queue QEQueue (see Section 7.8). However, when QF is based on an external RTOS, the event queue might be implemented with a message queue of the underlying RTOS.

(9) The data member osObject is used in some QF ports to block the native QF event queue. The osObject data member is necessary when the underlying OS does not provide an adequate queue facility, so the native QF queue must be used. In that case the osObject data member holds an OS-specific primitive to efficiently block the native QF event queue when the queue is empty. See Chapter 8, “POSIX QF Port,” for an example of using the osObject data member.

(10) The data member thread is used in some QF ports to hold the thread handle associated with the active object.

(11) The data member prio holds the priority of the active object. In QF, each active object has a unique priority. The lowest possible task priority is 1 and higher-priority values correspond to higher-urgency active objects. The maximum allowed active object priority is determined by the macro QF_MAX_ACTIVE, which currently cannot exceed 63.

(12) The data member running is used in some QF ports to represent whether the active object is running. In these ports, writing zero to the running member causes exit from the active object's event loop and cleanly terminates the active object thread.

(13) The function QActive_start() starts the active object thread. This function is platform-specific and is explained in Section 7.4.3.

(14) The function QActive_postFIFO() is used for direct event posting to the active object's event queue using the FIFO policy.

(15) The function QActive_postLIFO() is used for direct event posting to the active object's event queue using the LIFO policy.

(16) The function QActive_ctor() is the “constructor” of the QActive class. This constructor has the same signature as the constructor of QHsm or QFsm (see Section 4.5.1 in Chapter 4). In fact, the main job of the QActive constructor is to initialize the state machine base class (the member super).

(17) The function QActive_stop() stops the execution thread of the active object. This function is platform-specific and is discussed in Chapter 8. Not all QF ports need to define this function.

Note

In the C++ version, the QActive constructor is protected. This prevents direct instantiation of the QActive class, since it is intended only for derivation (the abstract class).

Note

In the C++ version, QActive::stop() is not equivalent to the active object destructor. The function merely causes the active object thread to eventually terminate, which might not happen immediately.

(18-20) The functions QActive_subscribe(), QActive_unsubscribe(), and QActive_unsubscribeAll() are used for subscribing and unsubscribing to events. I discuss these functions in the upcoming Section 7.6.2.

(21,22) The functions QActive_defer() and QActive_recall() are used for efficient (“zero copy”) deferring and recalling of events, respectively. I describe these functions in the upcoming Section 7.5.4.

(23) The function QActive_get_() is used to remove one event at a time from the active object's event queue. This function is used only inside QF and never at the application level. In some QF ports the function QActive_get_() can block. I describe this function in the upcoming Section 7.4.2.

As shown in Figure 7.3, every concrete active object, such as Ship, Missile, or Tunnel in the “Fly ‘n’ Shoot” game example from Chapter 1, is a state machine because it derives indirectly from the QHsm base class or a class that supports a generic state machine interface (see the data member super in Listing 7.7(7)). Derivation means simply that every pointer to QActive or a structure derived from QActive can always be safely used as a pointer to the base structure QHsm. Such a pointer can therefore always be passed to any function designed to work with the state machine structure. At the application level, you can mostly ignore the other aspects of your active objects and view them predominantly as state machines. In fact, your main job in developing a QF application consists of elaborating the state machines of your active objects.
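The derivation rule described above can be illustrated with a minimal sketch. The type names BaseHsm, ActiveObj, and Ship, and all of their fields, are hypothetical stand-ins chosen for this example and are not the actual QF declarations. The only technique shown is the placement of the base structure as the first member, which is what makes the pointer conversion safe in C.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-ins for the QF types; names and fields are illustrative. */
typedef struct BaseHsm {
    uint8_t state;                 /* current state, simplified to a number */
} BaseHsm;

typedef struct ActiveObj {
    BaseHsm super;                 /* the base structure must be the first member */
    uint8_t prio;                  /* unique priority of the active object */
} ActiveObj;

typedef struct Ship {
    ActiveObj super;               /* Ship derives from ActiveObj */
    int16_t x;
    int16_t y;
} Ship;

/* A function written against the base structure only. */
static void BaseHsm_setState(BaseHsm *me, uint8_t s) {
    me->state = s;
}

/* Upcast: valid because the base is the first member at every level. */
static BaseHsm *Ship_asHsm(Ship *ship) {
    return (BaseHsm *)ship;
}
```

Because C guarantees that a pointer to a structure also points to its first member, a Ship pointer can be handed to any function that expects the base state machine type, which is the property the framework relies on.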

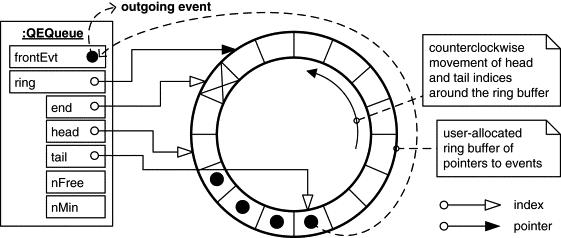

Event queues are essential components of any event-driven system because they reconcile the asynchronous production of events with the RTC semantics of their consumption. An event queue makes the corresponding active object appear to always be responsive to events, even though the internal state machine can accept events only between RTC steps. Additionally, the event queue provides buffer space that protects the internal state machine from bursts in event production that can, at times, exceed the available processing capacity.

You can view the active object's event queue as an outer rind that provides an external interface for injecting events into the active object and at the same time protects the internal state machine during RTC processing. To perform these functions, the event queue must allow any thread of execution (as well as an ISR) to asynchronously post events, but only one thread—the local thread of the active object—needs to be able to remove events from the queue. In other words, the event queue in QF needs multiple-write but only single-read access.

From the description so far, it should be clear that the event queue is quite a sophisticated mechanism. One end of the queue—the end where producers insert events—is obviously shared among many tasks and interrupts and must provide an adequate mutual exclusion mechanism to protect the internal consistency of the queue. The other end—the end from which the local active object thread extracts events—must provide a mechanism for blocking the active object when the queue is empty. In addition, an event queue must manage a buffer of events, typically organized as a ring buffer.

As shown in Figure 6.13 in Chapter 6, the “zero copy” event queues do not store actual events, only pointers to event instances. Typically these pointers point to event instances allocated dynamically from event pools (see Section 7.5.2), but they can also point to statically allocated events. You need to specify the maximum number of event pointers that a queue can hold at any one time when you start the active object with the QActive_start() function (see the next section). The correct sizing of event queues depends on many factors and generally is not a trivial task. I discuss sizing event queues in Chapter 9.
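The requirements listed so far (a ring buffer of event pointers, many producers, one consumer) can be sketched as follows. This is an illustration under stated assumptions, not the native QF queue: the names PtrQueue, Evt, CRIT_ENTER, and CRIT_EXIT are invented for the example, the critical-section macros are empty placeholders that a real port would map to interrupt locking or a mutex, and the consumer returns NULL instead of blocking when the queue is empty.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct Evt { uint16_t sig; } Evt;    /* hypothetical minimal event */

/* Placeholders: a real port would lock interrupts or a mutex here. */
#define CRIT_ENTER()  ((void)0)
#define CRIT_EXIT()   ((void)0)

typedef struct PtrQueue {
    Evt const **ring;   /* storage for event pointers, supplied by the caller */
    uint8_t     len;    /* capacity of the ring, in pointers */
    uint8_t     head;   /* index where the next pointer is inserted */
    uint8_t     tail;   /* index from which the next pointer is removed */
    uint8_t     used;   /* number of pointers currently stored */
} PtrQueue;

static void PtrQueue_init(PtrQueue *q, Evt const **sto, uint8_t len) {
    q->ring = sto;
    q->len  = len;
    q->head = 0U;
    q->tail = 0U;
    q->used = 0U;
}

/* Producer side: callable from any thread or ISR. Returns 0 when full. */
static int PtrQueue_post(PtrQueue *q, Evt const *e) {
    int ok = 0;
    CRIT_ENTER();
    if (q->used < q->len) {
        q->ring[q->head] = e;                  /* store the pointer only */
        q->head = (uint8_t)((q->head + 1U) % q->len);
        ++q->used;
        ok = 1;
    }
    CRIT_EXIT();
    return ok;
}

/* Consumer side: called only by the owning thread. Returns NULL when empty. */
static Evt const *PtrQueue_get(PtrQueue *q) {
    Evt const *e = NULL;
    CRIT_ENTER();
    if (q->used > 0U) {
        e = q->ring[q->tail];
        q->tail = (uint8_t)((q->tail + 1U) % q->len);
        --q->used;
    }
    CRIT_EXIT();
    return e;
}
```

The queue stores only pointers, so its storage requirement is the number of slots times the pointer size, regardless of how large the events themselves are.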

Many commercial RTOSs natively support queuing mechanisms in the form of message queues. Standard message queues are far more complex than required by active objects because they typically allow multiple-write as well as multiple-read access (QF requires only single-read access) and often support variable-length data (not only pointer-sized data). Usually message queues also allow blocking when the queue is empty and when the queue is full, and both types of blocking can be timed out. Naturally, all this extra functionality, which you don't really need in QF, comes at an extra cost in CPU and memory usage. The QF port to the μC/OS-II RTOS described in Chapter 8 provides an example of an event queue implemented with a message queue of an RTOS. The standalone QF ports to x86/DOS and ARM Cortex-M3 (used in the “Fly ‘n’ Shoot” game from Chapter 1) provide examples of using the native QF event queue. I discuss the native QF active object queue implementation in Section 7.8.3.

Every QF active object executes in its own thread. The actual control flow within the active object thread depends on the multitasking model actually used, but the event processing always consists of the three essential steps shown in Listing 7.8.

(1) The event is extracted from the active object's event queue by means of the function QActive_get_(). This function might block in blocking kernels. In Section 7.8.3 I describe the implementation of QActive_get_() for the native QF active object queue. In Chapter 8 I describe the QActive_get_() implementation when a message queue of an RTOS is used instead of the native QF event queue.

(2) The event is dispatched to the active object's state machine for processing (see Listing 7.7(5) for the definition of the QF_ACTIVE_DISPATCH_() macro).

(3) The event is passed to the QF garbage collector, which recycles a dynamic event once it is no longer referenced.

Note

Step 2 constitutes the RTC processing of the active object's state machine. The active object's thread continues only after step 2 completes.

In the presence of a traditional RTOS (e.g., VxWorks) or a multitasking operating system (e.g., Linux), the three event processing steps just explained are enclosed by the usual endless loop, as shown in Figure 6.12(A) in Chapter 6. Under a cooperative “vanilla” kernel (Figure 6.12(B)) or an RTC kernel (Figure 6.12(C)), the three steps are executed in one-shot fashion for every event.
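The difference between the one-shot and the endless-loop arrangements can be sketched as follows. This is only an illustration: the Ao structure and the functions Ao_get, Ao_dispatch, Ao_gc, Ao_runOne, and Ao_runUntilEmpty are hypothetical names, the queue is a tiny fixed array, and the dispatch and garbage-collection steps merely count calls. The loop variant here stops when the queue is empty, whereas a thread under a blocking kernel would block inside the get step instead.

```c
#include <assert.h>
#include <stddef.h>

typedef struct Evt { int sig; } Evt;       /* hypothetical minimal event */

typedef struct Ao {                        /* hypothetical active object */
    Evt const *pending[4];                 /* tiny stand-in for an event queue */
    int        head;                       /* index of the oldest pending event */
    int        count;                      /* number of pending events */
    int        handled;                    /* events dispatched so far */
    int        recycled;                   /* events handed to the collector */
} Ao;

/* Step 1: extract one event (returns NULL instead of blocking). */
static Evt const *Ao_get(Ao *me) {
    Evt const *e = NULL;
    if (me->count > 0) {
        e = me->pending[me->head];
        me->head = (me->head + 1) % 4;
        --me->count;
    }
    return e;
}

/* Step 2: run-to-completion processing (a counter stands in for it). */
static void Ao_dispatch(Ao *me, Evt const *e) { (void)e; ++me->handled; }

/* Step 3: hand the event to the garbage collector (again only counted). */
static void Ao_gc(Ao *me, Evt const *e) { (void)e; ++me->recycled; }

/* One-shot form: the three steps for exactly one event. */
static int Ao_runOne(Ao *me) {
    Evt const *e = Ao_get(me);
    if (e == NULL) {
        return 0;                          /* nothing to process */
    }
    Ao_dispatch(me, e);
    Ao_gc(me, e);
    return 1;
}

/* Loop form: the same three steps repeated until no event is left. */
static void Ao_runUntilEmpty(Ao *me) {
    while (Ao_runOne(me) != 0) {
    }
}
```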

The QActive_start() function creates the active object's thread and notifies QF to start managing the active object. A QF application needs to call the QActive_start() function on behalf of every active object in the system. In principle, active objects can be started and stopped (with QActive_stop()) multiple times during the lifetime of the application. However, in most cases, all active objects are started just once during the system initialization.

The QActive_start() function is one of the central elements of the framework, but obviously it strongly depends on the underlying multitasking kernel. Listing 7.9 shows the pseudocode of QActive_start().

Listing 7.9. QActive_start() function pseudocode

(1) The argument ‘me’ is the pointer to the active object being started.

(2) The argument ‘prio’ is the priority you assign to the active object. In QF, every active object must have a unique priority, which you assign at startup and cannot change later. QF uses a priority numbering system in which priority 1 is the lowest and higher numbers correspond to higher priorities.

Note

You can think of QF priority 0 as corresponding to the idle task, which has the absolute lowest priority not accessible to the application-level tasks.

Note

The “initialization event” ‘ie’ gives you an opportunity to provide some information to the active object, which is only known later in the initialization sequence (e.g., a window handle in a GUI system). Note that the active object constructor runs even before main() (in C++), at which time you typically don't have all the information to initialize all aspects of an active object.

QF implements the efficient “zero-copy” event delivery scheme, as described in Section 6.5 in Chapter 6. QF supports two kinds of events: (1) dynamic events managed by the framework, and (2) other events (typically statically allocated) not managed by QF. For each dynamic event, QF keeps track of the reference counter of the event (to know when to recycle the event) as well as the event pool from which the dynamic event was allocated (to recycle the event back to the same pool).

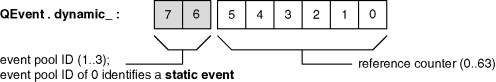

QF uses the same event representation as the QEP event processor described in Part I. Events in QF are represented as instances of the QEvent structure (shown in Listing 7.10), which contains the event signal sig and a byte dynamic_ to represent the internal “bookkeeping” information about the event.

As shown in Figure 7.4, the QF framework uses the QEvent.dynamic_ data byte in the following way. The six least-significant bits [0..5] represent the reference counter of the event, which has the dynamic range of 0..63. The two most significant bits [6..7] represent the event pool ID of the event, which has the dynamic range of 1..3. The pool ID of zero is reserved for static events, that is, events that do not come from any event pool. With this representation, a static event has a unique, easy-to-check signature (QEvent.dynamic_ == 0). Conversely, the signature (QEvent.dynamic_ != 0) unambiguously identifies a dynamic event. (I avoid using bit fields because they are not quite portable. Also, the use of bit fields would be against the required MISRA rule 111.)
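The bit layout just described can be exercised with a few helper functions. The names dyn_make, dyn_refCtr, dyn_poolId, and dyn_isStatic, as well as the two macros, are hypothetical and serve only to show the masking and shifting; QF itself manipulates the byte directly.

```c
#include <assert.h>
#include <stdint.h>

#define REF_CTR_MASK   0x3FU    /* bits 0..5: reference counter, 0..63 */
#define POOL_ID_SHIFT  6U       /* bits 6..7: pool ID, 1..3 (0 = static) */

/* Pack a pool ID and a reference counter into one byte. */
static uint8_t dyn_make(uint8_t poolId, uint8_t refCtr) {
    assert(poolId <= 3U);
    assert(refCtr <= REF_CTR_MASK);
    return (uint8_t)((poolId << POOL_ID_SHIFT) | refCtr);
}

/* Extract the reference counter (lowest six bits). */
static uint8_t dyn_refCtr(uint8_t dyn) {
    return (uint8_t)(dyn & REF_CTR_MASK);
}

/* Extract the pool ID (two most significant bits). */
static uint8_t dyn_poolId(uint8_t dyn) {
    return (uint8_t)(dyn >> POOL_ID_SHIFT);
}

/* A static event is recognized by the whole byte being zero. */
static int dyn_isStatic(uint8_t dyn) {
    return dyn == 0U;
}
```

For example, an event from pool 2 with five outstanding references is encoded as 0x85, and the largest possible value, pool 3 with 63 references, is 0xFF.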

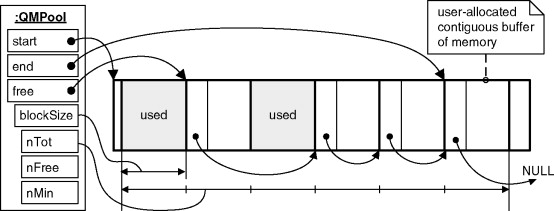

Dynamic events allow reusing the same memory over and over again for passing different events. QF allocates such events dynamically from one of the event pools managed by the framework. An event pool in QF is a fixed-block-size heap, also known as a memory partition or memory pool.

The most obvious drawback of a fixed-block-size heap is that it does not support variable-sized blocks. Consequently, the blocks have to be oversized to handle the biggest possible allocation. A good compromise to avoid wasting memory is to use not one but a few heaps with blocks of different sizes. QF can manage up to three event pools (e.g., small, medium, and large events, like shirt sizes).

Event pools require initialization through the QF_poolInit() function shown in Listing 7.11. An application may call this function up to three times to initialize up to three event pools in QF.

Listing 7.11. Initializing an event pool to be managed by QF (file <qp>\qpc\qf\source\qf_pool.c)

/* Package-scope objects --------------------------------------------------*/

(1) QF_EPOOL_TYPE_ QF_pool_[3]; /* allocate 3 event pools */

(2) uint8_t QF_maxPool_; /* number of initialized event pools */

/*........................................................................*/

(3) void QF_poolInit(void *poolSto, uint32_t poolSize, QEventSize evtSize) {

/* cannot exceed the number of available memory pools */

(4) Q_REQUIRE(QF_maxPool_ < (uint8_t)Q_DIM(QF_pool_));

/* please initialize event pools in ascending order of evtSize: */

(5) Q_REQUIRE((QF_maxPool_ == (uint8_t)0) || (QF_EPOOL_EVENT_SIZE_(QF_pool_[QF_maxPool_ - 1]) < evtSize));

/* perform the platform-dependent initialization of the pool */

(6) QF_EPOOL_INIT_(QF_pool_[QF_maxPool_], poolSto, poolSize, evtSize);

(1) The macro QF_EPOOL_TYPE_ represents the QF event pool type. This macro lets the QF port define a particular memory pool (fixed-size heap) implementation that might be already provided with the underlying kernel or RTOS. If QF is used standalone or if the underlying RTOS does not provide an adequate memory pool, the QF framework provides the efficient native QMPool class. Note that an event pool object is quite small because it does not contain the actual memory managed by the pool (see Section 7.9).

(2) The variable QF_maxPool_ holds the number of pools actually used, which can be 0 through 3.

Note

All QP components, including the QF framework, consistently assume that variables without an explicit initialization value are initialized to zero upon system startup, which is a requirement of the ANSI-C standard. In embedded systems, this initialization step corresponds to clearing the .BSS section. You should make sure that in your system the .BSS section is indeed cleared before main() is called.

(3) According to the general policy of QF, all memory needed for the framework operation is provided to the framework by the application. Therefore, the first parameter ‘poolSto’ of QF_poolInit() is a pointer to the contiguous chunk of storage for the pool. The second parameter ‘poolSize’ is the size of the pool storage in bytes, and finally, the last parameter ‘evtSize’ is the maximum event size that can be allocated from this pool.

Note

The number of events in the pool might be smaller than the ratio poolSize/evtSize because the pool might choose to internally align the memory blocks. However, the pool is guaranteed to hold events of at least the specified size evtSize.

Note

Subsequent calls to the QF_poolInit() function must be made with progressively increasing values of the evtSize parameter.

Listing 7.12 shows the implementation of the QF_new_() function, which allocates a dynamic event from one of the event pools managed by QF. The basic policy is to allocate the event from the first pool that has a block size big enough to fit the requested event size.

Listing 7.12. Simple policy of allocating an event from the smallest event-size pool (file <qp>\qpc\qf\source\qf_new.c)

(1) QEvent *QF_new_(QEventSize evtSize, QSignal sig) {

/* find the pool id that fits the requested event size … */

(3) Q_ASSERT(idx < QF_maxPool_); /* cannot run out of registered pools */

(4) QF_EPOOL_GET_(QF_pool_[idx], e); /* get e -- platform-dependent */

(5) Q_ASSERT(e != (QEvent *)0); /* pool must not run out of events */

(6) e->sig = sig; /* set signal for this event */

/* store the dynamic attributes of the event:

(1) The function QF_new_() allocates a dynamic event of the requested size ‘evtSize’ and sets the signal ‘sig’ in the newly allocated event. The function returns a pointer to the event.

(2) This while loop scans through the QF_pool_[] array starting from pool id = 0 in search of a pool that would fit the requested event size. Obtaining the event size of a pool is a platform-specific operation because various RTOSs that support fixed-size heaps might report the event size in a different way. This platform dependency is hidden in the QF code by the indirection layer of the macro QF_EPOOL_EVENT_SIZE_().

(3) This assertion fires when the while loop runs out of the event pools, which means that the requested event is too big for all initialized event pools.

(4) The macro QF_EPOOL_GET_() obtains a memory block from the pool found in the previous step.

(5) The assertion fires when the pool returns the NULL pointer, which indicates depletion of this pool.

Note

The QF framework treats the inability to allocate an event as an error. The assertions in lines 3 and 5 are part of the event delivery guarantee policy. It is the application designer's responsibility to size the event pools adequately so that they never run out of events.
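The pool-selection policy, together with the ascending-size rule that makes it work, can be sketched in isolation. The names poolBlockSize, nPools, pool_register, and pool_findFit are hypothetical; the sketch tracks only block sizes and leaves out the actual memory management, which in QF is delegated to the platform-specific pool macros.

```c
#include <assert.h>
#include <stdint.h>

#define MAX_POOLS 3U

static uint16_t poolBlockSize[MAX_POOLS];   /* block size of each pool */
static uint8_t  nPools;                     /* number of registered pools */

/* Register a pool; block sizes must be strictly increasing. */
static void pool_register(uint16_t blockSize) {
    assert(nPools < MAX_POOLS);
    assert((nPools == 0U) || (poolBlockSize[nPools - 1U] < blockSize));
    poolBlockSize[nPools] = blockSize;
    ++nPools;
}

/* Index of the first pool whose blocks fit evtSize, or nPools if none fits. */
static uint8_t pool_findFit(uint16_t evtSize) {
    uint8_t idx = 0U;
    while ((idx < nPools) && (evtSize > poolBlockSize[idx])) {
        ++idx;
    }
    return idx;
}
```

Because the pools are registered in ascending order of block size, the first pool that fits is also the smallest one that fits, so a linear scan is enough. A result equal to nPools corresponds to the case in which the first assertion in Listing 7.12 fires.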

Typically, you will not use QF_new_() directly but through the Q_NEW() macro defined as follows:

The Q_NEW() macro dynamically creates a new event of type evT_ with the signal sig_. It returns a pointer to the event already cast to the event type (evT_*). Here is an example of dynamic event allocation with the macro Q_NEW():

The assertions inside QF_new_() guarantee that the pointer is valid, so you don't need to check the pointer returned from Q_NEW(), unlike the value returned from malloc(), which you should check.

Note

In C++, the Q_NEW() macro does not invoke the constructor of the event. This is not a problem for the QEvent base struct and simple structs derived from it. However, you need to keep in mind that subclasses of QEvent should not introduce virtual functions because the virtual pointer won't be set up during the dynamic allocation through Q_NEW(). (A simple solution would be to use the placement new() operator inside the Q_NEW() macro to enforce full instantiation of an event object, but it is currently not used, for better efficiency and compatibility with older C++ compilers, which might not support placement new().)

Most of the time, you don't need to worry about recycling dynamic events, because QF does it automatically when it detects that an event is no longer referenced.

Note

The explicit garbage collection step is necessary only in the code that is out of the framework's control, such as ISRs receiving events from “raw” thread-safe queues (see upcoming Section 7.8.4).

QF uses the standard reference-counting algorithm to keep track of the outstanding references to each dynamic event managed by the framework. The reference counter for each event is stored in the six least significant bits of the event attribute dynamic_. Note that the data member dynamic_ of a dynamic event cannot be zero because the two most significant bits of the byte hold the pool ID, with valid values of 1, 2, or 3.

The reference counter of each event is always updated and tested in a critical section of code to prevent data corruption. The counter is incremented whenever a dynamic event is inserted into an event queue. The counter is decremented by the QF garbage collector, which is called after every RTC step (see Listing 7.8(3)). When the reference counter of a dynamic event drops to zero, the QF garbage collector recycles the event back to the event pool number stored in the two most significant bits of the dynamic_ attribute.

(2) if (e->dynamic_ != (uint8_t)0) { /* is it a dynamic event? */

(5) if ((e->dynamic_ & 0x3F) > 1) { /* isn't this the last reference? */

(6) --((QEvent *)e)->dynamic_; /* decrement the reference counter */

(8) else { /* this is the last reference to this event, recycle it */

(11) Q_ASSERT(idx < QF_maxPool_); /* index must be in range */

(1) The function QF_gc() garbage-collects one event at a time.

(2) The function checks the unique signature of a dynamic event. The garbage collector handles only dynamic events.

(3) The critical section status is allocated on the stack (see Section 7.3.3).

(4) Interrupts are locked to examine and decrement the reference count.

(5) If the reference count (lowest 6 bits of the e->dynamic_ byte) is greater than 1, the event should not be recycled.

(6) The reference count is decremented. Note that the const attribute of the event pointer is “cast away,” but this is safe after checking that this must be a dynamic event (and not a static event possibly placed in ROM).

(8) Otherwise, the reference count is about to become zero and the event must be recycled.

(9) The pool ID is extracted from the two most significant bits of the e->dynamic_ byte and decremented by one to form the index into the QF_pool_[] array.

(10) Interrupts are unlocked for the else branch. It is safe at this point because you know for sure that the event is not referenced by anybody else, so it is exclusively owned by the garbage collector thread.

(11) The index must be in the expected range of initialized event pools.

(12) The macro QF_EPOOL_PUT_() recycles the event to the pool QF_pool_[idx]. The explicit cast removes the const attribute.
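The reference-counting logic walked through above can be condensed into a small, self-contained sketch. It is not the QF_gc() source: the Evt structure, the evt_gc function, the recycledTo[] counters, and the empty CRIT_ENTER/CRIT_EXIT placeholders are assumptions made for illustration, and returning a block to a pool is reduced to incrementing a counter.

```c
#include <assert.h>
#include <stdint.h>

typedef struct Evt {
    uint16_t sig;
    uint8_t  dynamic_;        /* bits 0..5: ref count, bits 6..7: pool ID */
} Evt;

static unsigned recycledTo[3];   /* blocks returned to each pool (stand-in) */

/* Placeholders for the port-specific critical section. */
#define CRIT_ENTER()  ((void)0)
#define CRIT_EXIT()   ((void)0)

static void evt_gc(Evt *e) {
    if (e->dynamic_ != 0U) {                  /* static events are ignored */
        CRIT_ENTER();
        if ((e->dynamic_ & 0x3FU) > 1U) {     /* other references remain */
            --e->dynamic_;                    /* drop one reference */
            CRIT_EXIT();
        }
        else {                                /* last reference: recycle */
            uint8_t idx = (uint8_t)((e->dynamic_ >> 6) - 1U);
            CRIT_EXIT();                      /* event is now exclusively owned */
            assert(idx < 3U);
            ++recycledTo[idx];                /* stands in for the pool "put" */
        }
    }
}
```

Note that the pool index is the pool ID minus one, so pool IDs 1..3 map to array indices 0..2, and that a static event (dynamic_ == 0) passes through the function untouched.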

Event deferral comes in very handy when an event arrives in a particularly inconvenient moment but can be deferred for some later time, when the system is in a much better position to handle the event (see “Deferred Event” state pattern in Chapter 5). QF supports very efficient event deferring and recalling mechanisms consistent with the “zero-copy” policy.

QF implements explicit event deferring and recalling through QActive class functions QActive_defer() and QActive_recall(), respectively. These functions work in conjunction with the native “raw” event queue provided in QF (see upcoming Section 7.8.4). Listing 7.13 shows the implementation.

Listing 7.13. QF event deferring and recalling (file <qp>\qpc\qf\source\qa_defer.c)

void QActive_defer(QActive *me, QEQueue *eq, QEvent const *e) {

(1) QEQueue_postFIFO(eq, e); /* increments ref-count of a dynamic event */

/*.....................................................................*/

(2) QEvent const *QActive_recall(QActive *me, QEQueue *eq) {

(3) QEvent const *e = QEQueue_get(eq); /* get an event from deferred queue */

(4) QActive_postLIFO(me, e); /* post it to the front of the AO's queue */

(6) if (e->dynamic_ != (uint8_t)0) { /* is it a dynamic event? */

(8) --((QEvent *)e)->dynamic_; /* decrement the reference counter */

(10) return e; /* pass the recalled event to the caller (NULL if not recalled) */

(1) The function QActive_defer() posts the deferred event into the given “raw” queue ‘eq.’ The event posting increments the reference counter of a dynamic event, so the event is not recycled at the end of the current RTC step (because it is referenced by the “raw” queue).

(2) The function QActive_recall() attempts recalling an event from the provided “raw” thread-safe queue ‘eq.’ The function returns the pointer to the recalled event or NULL if the provided queue is empty.

(3) The event is extracted from the queue. The “raw” queue never blocks and returns NULL if it is empty.

(4) If an event is available, it is posted using the last-in, first-out (LIFO) policy into the event queue of the active object. The LIFO policy is employed to guarantee that the recalled event will be the very next to process. If other already queued events were allowed to precede the recalled event, the state machine might transition to a state where the recalled event would no longer be convenient.

(5) Interrupts are locked to decrement the reference counter of the event, to account for removing the event from the “raw” thread-safe queue.

(7) The reference counter must be at this point at least 2 because the event is referenced by at least two event queues (the deferred queue and the active object's queue).

(8) The reference counter is decremented by one to account for removing the event from the deferred queue.

Note

Even though you can “peek” inside the event right at the point it is recalled, you should typically handle the event only after it arrives through the active object's queue. See the “Deferred Event” state pattern in Chapter 5.
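The reason for recalling with the LIFO policy is easiest to see in a toy model. The sketch below assumes a simple pointer queue that supports posting at both ends; the names Q, Q_postFIFO, Q_postLIFO, Q_get, defer, and recall are hypothetical, and the reference counting and critical sections of the real implementation are deliberately left out.

```c
#include <assert.h>
#include <stddef.h>

typedef struct Evt { int sig; } Evt;   /* hypothetical minimal event */

#define QLEN 4

typedef struct Q {
    Evt const *buf[QLEN];
    int head;                          /* index of the oldest entry */
    int count;                         /* number of stored pointers */
} Q;

/* Append at the back: the event is processed after all queued events. */
static void Q_postFIFO(Q *q, Evt const *e) {
    assert(q->count < QLEN);
    q->buf[(q->head + q->count) % QLEN] = e;
    ++q->count;
}

/* Insert at the front: the event becomes the very next one to process. */
static void Q_postLIFO(Q *q, Evt const *e) {
    assert(q->count < QLEN);
    q->head = (q->head + QLEN - 1) % QLEN;
    q->buf[q->head] = e;
    ++q->count;
}

/* Remove the oldest entry; returns NULL when the queue is empty. */
static Evt const *Q_get(Q *q) {
    Evt const *e = NULL;
    if (q->count > 0) {
        e = q->buf[q->head];
        q->head = (q->head + 1) % QLEN;
        --q->count;
    }
    return e;
}

/* Defer: park the event in a separate queue. */
static void defer(Q *deferred, Evt const *e) {
    Q_postFIFO(deferred, e);
}

/* Recall: move the oldest deferred event to the FRONT of the main queue. */
static Evt const *recall(Q *mainQ, Q *deferred) {
    Evt const *e = Q_get(deferred);
    if (e != NULL) {
        Q_postLIFO(mainQ, e);
    }
    return e;
}
```

In this model, a recalled event overtakes any event that was already waiting in the main queue, which matches the guarantee described in annotation (4).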

QF supports only asynchronous event exchange within the application, meaning that the producers post events into event queues, but do not wait for the actual processing of the events. QF supports two types of asynchronous event delivery:

1 The simple mechanism of direct event posting, when the producer of an event directly posts the event to the event queue of the consumer active object.

2 A more sophisticated publish-subscribe event delivery mechanism, where the producers of events publish them to the framework and the framework then delivers the events to all active objects that had subscribed to this event.

QF supports direct event posting through the QActive_postFIFO() and QActive_postLIFO() functions. These functions depend on the active object's employed queue mechanism. In the upcoming Section 7.8.3, I show how these functions are implemented when the native QF active object queue is used. In Chapter 8, I demonstrate how to implement these functions to use a message queue of a traditional RTOS.

Note

Direct event posting should not be confused with event dispatching. In contrast to asynchronous event posting through event queues, direct event dispatching is a simple synchronous function call. Event dispatching occurs when you call the QHsm_dispatch() function, as in Listing 7.8(2), for example.

Direct event posting is illustrated in the “Fly ‘n’ Shoot” example from Chapter 1, when an ISR posts a PLAYER_SHIP_MOVE event directly to the Ship active object:

Note that the producer of the event (ISR) in this case must only “know” the recipient (Ship) by an “opaque pointer” QActive*, and the specific definition of the Ship active object structure is not required. The AO_ship pointer is declared in the game.h header file as:

The Ship structure definition is in fact entirely encapsulated in the ship.c module and is inaccessible to the rest of the application. I recommend using this variation of the “opaque pointer” technique in your applications.

QF implements publish-subscribe event delivery through the following services:

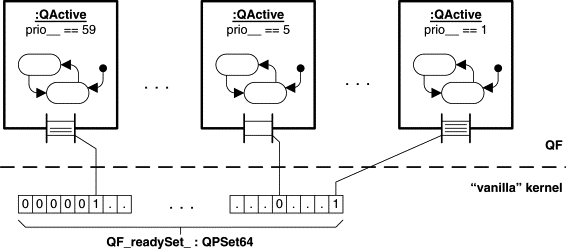

Delivering events is the most frequently performed function of the framework; therefore, it is important to implement it efficiently. As shown in Figure 7.5, QF uses a lookup table indexed by the event signal to efficiently find all subscribers to a given signal. For each event signal index (e->sig), the lookup table stores a subscriber list. A subscriber list (typedef’d to QSubscrList) is just a densely packed bitmask where each bit corresponds to the unique priority of the active object. If the bit is set, the corresponding active object is the subscriber to the signal, otherwise the active object is not the subscriber.

The actual size of the QSubscrList bitmask is determined by the macro QF_MAX_ACTIVE, which specifies the maximum number of active objects in the system (the current range of QF_MAX_ACTIVE is 1..63). The subscriber list type QSubscrList is typedef’d in Listing 7.14.

To reduce the memory taken by the subscriber lookup table, you have options to reduce the number of published signals and reduce the number of potential subscribers QF_MAX_ACTIVE. Typically, however, the table is quite small. For example, the table for a complete real-life GPS receiver application with 50 different signals and up to eight active objects costs 50 bytes of RAM.
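The 50-byte figure follows directly from the table layout: one bit per active object priority, rounded up to whole bytes, times the number of published signals. The sketch below reproduces that arithmetic with hypothetical names (SubscrList, subscrTable, MAX_ACTIVE, MAX_PUB_SIG) and assumed values that match the GPS example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_ACTIVE   8U     /* assumed maximum number of active objects */
#define MAX_PUB_SIG  50U    /* assumed number of published signals */

/* One bit per priority, rounded up to whole bytes. */
typedef struct SubscrList {
    uint8_t bits[(MAX_ACTIVE + 7U) / 8U];
} SubscrList;

/* The lookup table: one subscriber list per published signal. */
static SubscrList subscrTable[MAX_PUB_SIG];

/* RAM taken by the whole lookup table, in bytes. */
static size_t subscrTable_bytes(void) {
    return sizeof(subscrTable);
}
```

With up to eight active objects each list fits in one byte, so 50 signals cost 50 bytes. Raising MAX_ACTIVE to 9 would double the table, because every list would then need a second byte.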

Note

Not all signals in the system are published. To conserve memory, you can enumerate the published signals before other nonpublished signals and thus arrive at a lower limit for the number of published signals.

Before you can publish any event, you need to initialize the subscriber lookup table by calling the function QF_psInit(), which is shown in Listing 7.15. This function simply initializes the pointer to the lookup table QF_subscrList_ and the number of published signals QF_maxSignal_.

Active objects subscribe to signals through QActive_subscribe(), shown in Listing 7.16.

Listing 7.16. QActive_subscribe() function (file <qp>\qpc\qf\source\qa_sub.c)

(1) The function QActive_subscribe() subscribes a given active object ‘me’ to the event signal ‘sig.’

(2) The index ‘i’ represents the byte index into the multibyte QSubscrList bitmask (see Listing 7.14). The array QF_div8Lkup[] is a lookup table that stores the precomputed values of the following expression: QF_div8Lkup[p] = (p - 1)/8, where 0 < p < 64. The QF_div8Lkup[] lookup table is defined in the file <qp>\qpc\qf\source\qf_pwr2.c and occupies 64 bytes of ROM.

Note

Obviously, you don't want to use precious RAM for storing constant lookup tables. However, some compilers for Harvard architecture MCUs (e.g., GCC for AVR) cannot generate code for accessing data allocated in the program space (ROM), even though the compiler can allocate constants in ROM. The workaround for such compilers is to explicitly add assembly code to access data allocated in the program space. The macro Q_ROM_BYTE() retrieves a byte from the given ROM address. This macro is transparent (i.e., copies its argument) for compilers that can correctly access data in ROM.

I don't explicitly discuss the mirror function QActive_unsubscribe(), but it is virtually identical to QActive_subscribe() except that it clears the appropriate bit in the subscriber bitmask. Note that both QActive_subscribe() and QActive_unsubscribe() require an active object as the first parameter ‘me,’ which means that only active objects are capable of subscribing or unsubscribing to events.
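The bit manipulation behind subscribing and unsubscribing can be sketched as follows. The names SubscrList, subscr_set, subscr_clear, and subscr_isSet are hypothetical, and the byte index and bit mask are computed arithmetically here rather than fetched from ROM lookup tables, so this shows the mapping from priority to bit but not the table-driven optimization.

```c
#include <assert.h>
#include <stdint.h>

#define MAX_ACTIVE 63U

typedef struct SubscrList {
    uint8_t bits[((MAX_ACTIVE - 1U) / 8U) + 1U];   /* 8 bytes for 63 priorities */
} SubscrList;

/* Priority p (1..MAX_ACTIVE) maps to byte (p - 1)/8 and bit (p - 1)%8. */
static void subscr_set(SubscrList *sl, uint8_t prio) {
    assert((prio >= 1U) && (prio <= MAX_ACTIVE));
    sl->bits[(prio - 1U) / 8U] |= (uint8_t)(1U << ((prio - 1U) % 8U));
}

/* The mirror operation: clear the bit of the given priority. */
static void subscr_clear(SubscrList *sl, uint8_t prio) {
    assert((prio >= 1U) && (prio <= MAX_ACTIVE));
    sl->bits[(prio - 1U) / 8U] &= (uint8_t)~(1U << ((prio - 1U) % 8U));
}

/* Nonzero if the active object with the given priority is subscribed. */
static int subscr_isSet(SubscrList const *sl, uint8_t prio) {
    assert((prio >= 1U) && (prio <= MAX_ACTIVE));
    return (sl->bits[(prio - 1U) / 8U] & (1U << ((prio - 1U) % 8U))) != 0U;
}
```

Because every active object has a unique priority, the priority alone identifies the subscriber, and no per-subscriber storage beyond the single bit is needed.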

The QF real-time framework implements event publishing with the function QF_publish() shown in Listing 7.17. This function performs efficient “zero-copy” event multicasting. QF_publish() is designed to be callable from both the task level and the interrupt level.

Listing 7.17. QF_publish() function (file <qp>\qpc\qf\source\qa_pspub.c)

(1) void QF_publish(QEvent const *e) {

/* make sure that the published signal is within the configured range */

(2) Q_REQUIRE(e->sig < QF_maxSignal_);

(3) if (e->dynamic_ != (uint8_t)0) { /* is it a dynamic event? */

++((QEvent *)e)->dynamic_; /* increment reference counter, NOTE01 */

(8) tmp &= Q_ROM_BYTE(QF_invPwr2Lkup[p]); /* clear subscriber bit */

(9) Q_ASSERT(QF_active_[p] != (QActive *)0); /* must be registered */

uint8_t i = Q_DIM(QF_subscrList_[0].bits);

do { /* go through all bytes in the subscription list */

tmp = QF_subscrList_[e->sig].bits[i];

while (tmp != (uint8_t)0) {

uint8_t p = Q_ROM_BYTE(QF_log2Lkup[tmp]);

tmp &= Q_ROM_BYTE(QF_invPwr2Lkup[p]); /* clear subscriber bit */

p = (uint8_t)(p + (i << 3)); /* adjust the priority */

Q_ASSERT(QF_active_[p] != (QActive *)0); /* must be registered */

/* internally asserts if the queue overflows */

QActive_postFIFO(QF_active_[p], e);

(1) The function QF_publish() publishes a given event ‘e’ to all subscribers.

(2) The precondition checks that the published signal is in the initialized range (see Listing 7.15).

(3) The reference counter of a dynamic event is incremented in a critical section. This protects the event from being prematurely recycled before it reaches all subscribers.

The QF_publish() function must ensure that the event is not recycled by a subscriber before all the subscribers receive the event. For example, consider the following scenario: A low-priority active object dynamically allocates an event with Q_NEW() and publishes it by calling QF_publish() in its own thread of execution. In the course of multicasting the event, QF_publish() posts the event to a high-priority active object, which immediately preempts the current thread and starts processing the event. After the RTC step, the high-priority active object calls the garbage collector (see Listing 7.8(3)). If QF_publish() did not increment the event counter in step 3, the counter would be only 1 because the event has only been posted once. The high-priority active object would recycle the event. After resuming the low-priority thread, QF_publish() might want to keep posting the event to some other subscribers, but the event would be already recycled.

(4) The conditional compilation is used to distinguish the simpler and faster case of single-byte QSubscrList (see Listing 7.14).

(5) The entire subscriber bitmask is placed in a temporary byte.

(6) The while loop runs over all 1 bits set in the subscriber bitmask until the bitmask becomes empty.

(7) The log-base-2 lookup quickly determines the most significant 1 bit in the bitmask, which corresponds to the highest-priority subscriber. The structure of the lookup table QF_log2Lkup[tmp], where 0 < tmp <= 255, is shown in Figure 7.6. The QF_log2Lkup[] lookup table is defined in the file <qp>\qpc\qf\source\qf_log2.c and occupies 256 bytes of ROM.

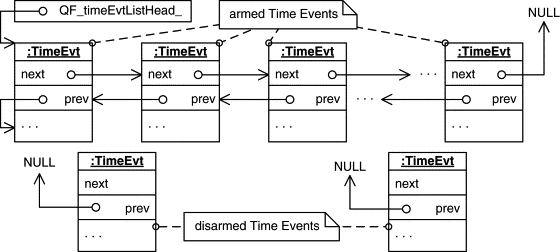

QF manages time through time events, as described in Section 6.6.1 of Chapter 6. In the current QF version, time events cannot be dynamic and must be allocated statically. Also, a time event must be assigned a signal upon instantiation (in the constructor) and the signal cannot be changed later. This latter restriction prevents unexpected changes of the time event while it still might be held inside an event queue.

QF represents time events as instances of the QTimeEvt class (see Figure 7.3). QTimeEvt, like all events in QF, derives from the QEvent base structure. Typically, you will instantiate the QTimeEvt structure directly, but you might also derive more specialized time events from it to add data members or specialized functions that operate on the derived time events. Listing 7.18 shows the QTimeEvt class, that is, the QTimeEvt structure declaration and the functions to manipulate it.

Listing 7.18. QTimeEvt structure and interface (file <qp>qpcincludeqf.h)

typedef struct QTimeEvtTag {

QEvent super; /* derives from QEvent */

(2) struct QTimeEvtTag *prev; /* link to the previous time event in the list */

(3) struct QTimeEvtTag *next; /* link to the next time event in the list */

(4) QActive *act; /* the active object that receives the time event */

(5) QTimeEvtCtr ctr; /* the internal down-counter of the time event */

(6) QTimeEvtCtr interval; /* the interval for the periodic time event */

} QTimeEvt;

(7) void QTimeEvt_ctor(QTimeEvt *me, QSignal sig);

(8) #define QTimeEvt_postIn(me_, act_, nTicks_) do { \

(me_)->interval = (QTimeEvtCtr)0; \

QTimeEvt_arm_((me_), (act_), (nTicks_)); \

} while (0)

(9) #define QTimeEvt_postEvery(me_, act_, nTicks_) do { \

(me_)->interval = (nTicks_); \

QTimeEvt_arm_((me_), (act_), (nTicks_)); \

} while (0)

(10) uint8_t QTimeEvt_disarm(QTimeEvt *me);

(11) uint8_t QTimeEvt_rearm(QTimeEvt *me, QTimeEvtCtr nTicks);

(12) void QTimeEvt_arm_(QTimeEvt *me, QActive *act, QTimeEvtCtr nTicks);

(2,3) The two pointers ‘prev’ and ‘next’ are used as links to chain the time events into a bidirectional list (see Figure 7.7).

(4) The active object pointer ‘act’ stores the recipient of the time event.

(5) The member ‘ctr’ is the internal down-counter decremented in every QF_tick() invocation (see the next section). The time event is posted when the down-counter reaches zero.

(6) The member ‘interval’ is used for the periodic time event (it is set to zero for the one-shot time event). The value of the interval is reloaded to the ‘ctr’ down-counter when the time event expires, so the time event keeps timing out periodically.

(7) Every time event must be initialized with the constructor QTimeEvt_ctor(). You should call the constructor exactly once for every time event object before arming the time event. The most important action performed in this function is assigning a signal to the time event. You can reuse the time event any number of times, but you should not change the signal. This is because a pointer to the time event might still be held in an event queue and changing the signal could lead to subtle and hard-to-find errors.

(8) The macro QTimeEvt_postIn() arms a time event ‘me_’ to fire once in ‘nTicks_’ clock ticks (a one-shot time event). The time event gets directly posted (using the FIFO policy) into the event queue of the active object ‘act_’. After posting, a one-shot time event gets automatically disarmed and can be reused for one-shot or periodic timeout requests.

(9) The macro QTimeEvt_postEvery() arms a time event ‘me_’ to fire periodically every ‘nTicks_’ clock ticks (a periodic time event). The time event gets directly posted (using the FIFO policy) into the event queue of the active object ‘act_’. After posting, the periodic time event gets automatically rearmed to fire again in the specified ‘nTicks_’ clock ticks.

(10) The function QTimeEvt_disarm() explicitly disarms any time event (one-shot or periodic). The time event can be reused immediately after the call to QTimeEvt_disarm(). The function returns the status of the disarming operation: 1 if the time event has been actually disarmed and 0 if the time event has already been disarmed.

(11) The function QTimeEvt_rearm() reloads the down-counter ‘ctr’ with the specified number of clock ticks. The function returns the status of the rearming operation: 1 if the time event was armed at the time of the call and 0 if it was disarmed. In the latter case, QTimeEvt_rearm() arms the time event.

(12) The helper function QTimeEvt_arm_() inserts the time event into the linked list of armed timers. This function is used in the QTimeEvt_postIn() and QTimeEvt_postEvery() macros.

Note

An attempt to arm an already armed time event (one-shot or periodic) raises an assertion. If you're not sure that the time event is disarmed, call the QTimeEvt_disarm() function before reusing the time event.

Figure 7.7 shows the internal representation of armed and disarmed time events. QF chains all armed time events in a bidirectional linked list. The list is scanned from the head at every system clock tick. The list is not sorted in any way. Newly armed time events are always inserted at the head. When a time event gets disarmed, either automatically when a one-shot timer expires or explicitly when the application calls QTimeEvt_disarm(), the time event is simply removed from the list. Removing an object from a bidirectional list is a quick, deterministic operation. In particular, the list does not need to be rescanned from the head. Disarmed time events remain outside the list and don't consume any CPU cycles.
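The list handling described above can be illustrated with a small model. This is a minimal sketch under stated assumptions, not the QF code: the names Timer, list_head, timer_arm(), and timer_disarm() are hypothetical, interrupt locking is omitted, and the model only borrows the convention that a NULL ‘prev’ link marks a disarmed time event.

```c
#include <assert.h>
#include <stddef.h>

typedef struct TimerTag {
    struct TimerTag *prev;   /* NULL marks a disarmed timer */
    struct TimerTag *next;
    unsigned ctr;            /* down-counter, in ticks */
} Timer;

Timer *list_head = NULL;     /* head of the unsorted list of armed timers */

/* Insert a disarmed timer at the head of the list. */
void timer_arm(Timer *t, unsigned nTicks) {
    assert(nTicks > 0U && t->prev == NULL);  /* must not be armed already */
    t->ctr  = nTicks;
    t->prev = t;             /* any non-NULL value marks the timer as armed */
    t->next = list_head;
    if (list_head != NULL) {
        list_head->prev = t;
    }
    list_head = t;
}

/* Unlink a timer in constant time; returns 1 if it was armed, 0 otherwise. */
int timer_disarm(Timer *t) {
    if (t->prev == NULL) {
        return 0;                        /* already disarmed */
    }
    if (t == list_head) {
        list_head = t->next;             /* the head's prev is never followed */
    } else {
        if (t->next != NULL) {           /* not the last in the list? */
            t->next->prev = t->prev;
        }
        t->prev->next = t->next;
    }
    t->prev = NULL;                      /* mark as disarmed */
    return 1;
}
```

Neither function walks the list, so both arming and disarming take the same time no matter how many time events are armed.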

To manage time events, QF requires that you invoke the QF_tick() function from a periodic time source called the system clock tick (see Chapter 6, “System Clock Tick”). The system clock tick typically runs at a rate between 10 Hz and 100 Hz.

Listing 7.19 shows the implementation of QF_tick(). This function is designed to be called from both the interrupt context and the task-level context, in case the underlying OS/RTOS does not allow accessing interrupts or you want to keep the ISRs very short. QF_tick() must always run to completion and never preempt itself. In particular, if QF_tick() runs in an ISR context, the ISR must not be allowed to preempt itself. In addition, QF_tick() should not be called from two different ISRs, which potentially could preempt each other. When executed in a task context, QF_tick() should be called by one task only, ideally by the highest-priority task.

Listing 7.19. QF_tick() function (file <qp>qpcqfsourceqf_tick.c)

(2)

t = QF_timeEvtListHead_; /* start scanning the list from the head */(4)

if (--t->ctr == (QTimeEvtCtr)0) { /* is time evt about to expire? */(5)

if (t->interval != (QTimeEvtCtr)0) { /* is it periodic timeout? */(7)

else { /* one-shot timeout, disarm by removing it from the list */(11)

if (t->next != (QTimeEvt *)0) { /* not the last event? */(15)

QF_INT_UNLOCK_();/* unlock interrupts before calling QF service */(15)

/* postFIFO() asserts internally that the event was accepted */(19)

dummy = (uint8_t)0; /* execute a few instructions, see NOTE02 */(20)

QF_INT_LOCK_(); /* lock interrupts again to advance the link */

(1) Interrupts are locked before accessing the linked list of time events.

(2) The internal QF variable QF_timeEvtListHead_ holds the head of the linked list.

(3) The loop continues until the end of the linked list is reached (see Figure 7.7).

(4) The down-counter of each time event is decremented. When the counter reaches zero, the time event expires.

(5) The ‘interval’ member is nonzero only for a periodic time event.

(6) The down-counter of a periodic time event is simply reset to the interval value. The time event remains armed in the list.

(7) Otherwise the time event is a one-shot and must be disarmed by removing it from the list.

(8-13) These lines of code implement the standard algorithm of removing a link from a bidirectional list.

(14) A time event is internally marked as disarmed by writing NULL to the ‘prev’ link.

(15) Interrupts can be unlocked after the bookkeeping of the linked list is done.

(16) The time event posts itself to the event queue of the active object.

(17) The else branch is taken when the time event is not expiring on this tick.

(19) On many CPUs, the interrupt unlocking takes effect only on the next machine instruction, which happens here to be an interrupt lock instruction (line (20)). The assignment of the volatile ‘dummy’ variable requires a few machine instructions, which the compiler cannot optimize away. This ensures that the interrupts actually get unlocked so that the interrupt latency stays low.
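The tick processing walked through above can be modeled in a few lines. The following is a simplified, single-threaded sketch rather than the QF implementation: the names TickTimer, tick_head, tick_arm(), and tick() are hypothetical, the interrupt locking and unlocking of steps (1), (15), and (19) is left out entirely, and incrementing the ‘fired’ counter stands in for posting the time event to an active object.

```c
#include <assert.h>
#include <stddef.h>

typedef struct TickTimerTag {
    struct TickTimerTag *prev;   /* NULL marks a disarmed timer */
    struct TickTimerTag *next;
    unsigned ctr;                /* down-counter, in ticks */
    unsigned interval;           /* reload value; 0 for a one-shot timer */
    unsigned fired;              /* stand-in for posting: counts expirations */
} TickTimer;

TickTimer *tick_head = NULL;     /* head of the list of armed timers */

/* Arm a timer by inserting it at the head of the list. */
void tick_arm(TickTimer *t, unsigned nTicks, unsigned interval) {
    assert(nTicks > 0U && t->prev == NULL);
    t->ctr = nTicks;
    t->interval = interval;
    t->prev = t;                 /* non-NULL marks the timer as armed */
    t->next = tick_head;
    if (tick_head != NULL) {
        tick_head->prev = t;
    }
    tick_head = t;
}

/* Process one clock tick: scan the whole list from the head. */
void tick(void) {
    TickTimer *t = tick_head;
    while (t != NULL) {
        if (--t->ctr == 0U) {                /* expiring on this tick? */
            if (t->interval != 0U) {         /* periodic: reload, stay armed */
                t->ctr = t->interval;
            } else {                         /* one-shot: unlink from the list */
                if (t == tick_head) {
                    tick_head = t->next;
                } else {
                    if (t->next != NULL) {
                        t->next->prev = t->prev;
                    }
                    t->prev->next = t->next;
                }
                t->prev = NULL;              /* mark as disarmed */
            }
            ++t->fired;                      /* "post" the time event */
        }
        t = t->next;   /* still valid: unlinking leaves t->next untouched */
    }
}
```

A one-shot timer armed with tick_arm(&t, 2U, 0U) fires on the second call to tick() and drops out of the list, whereas a timer armed with a nonzero interval keeps firing every ‘interval’ ticks.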

Listing 7.20 shows the helper function QTimeEvt_arm_() for arming a time event. This function is used inside the macros QTimeEvt_postIn() and QTimeEvt_postEvery() for arming a one-shot or periodic time event, respectively.

Listing 7.20. QTimeEvt_arm_() (file <qp>qpcqfsourceqte_arm.c)

void QTimeEvt_arm_(QTimeEvt *me, QActive *act, QTimeEvtCtr nTicks) {

Q_REQUIRE((nTicks > (QTimeEvtCtr)0) /* cannot arm a timer with 0 ticks */

&& (((QEvent *)me)->sig >= (QSignal)Q_USER_SIG)/*valid signal */(1)

&& (me->prev == (QTimeEvt *)0) /* time evt must NOT be used */(1)

&& (act != (QActive *)0)); /* active object must be provided */

(1) The preconditions include checking that the time event is not already in use. A time event in use always has its ‘prev’ pointer set to a non-NULL value.

(2) The ‘prev’ pointer is initialized to point to self, to mark the time event as in use (see also Figure 7.7).

(3) Interrupts are locked to insert the time event into the linked list. Note that until that point the time event is not armed, so it cannot unexpectedly change due to asynchronous tick processing.

(3-6) These lines of code implement the standard algorithm of inserting a link into a bidirectional list at the head position.

Listing 7.21 shows the function QTimeEvt_disarm() for explicitly disarming a time event.

Listing 7.21. QTimeEvt_disarm() (file <qp>qpcqfsourceqte_darm.c)

(2)

if (me->prev != (QTimeEvt *)0) { /* is the time event actually armed? */(6)

if (me->next != (QTimeEvt *)0) { /* not the last in the list? */(9)

me->prev = (QTimeEvt *)0; /* mark the time event as disarmed */

(2) The time event is still armed if the ‘prev’ pointer is not NULL.

(3-8) These lines of code implement the standard algorithm of removing a link from a bidirectional list (compare also Listing 7.19(8-13)).

(9) A time event is internally marked as disarmed by writing NULL to the ‘prev’ link.

(10) The function returns the status: 1 if the time event was still armed at the time of the call and 0 if the time event was disarmed before the function QTimeEvt_disarm() was called. In other words, a return value of 1 assures the caller that the time event has not been posted and never will be, because disarming takes effect immediately. Conversely, a return value of 0 informs the caller that the time event has been posted to the event queue of the recipient active object and was automatically disarmed.

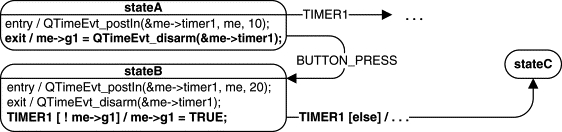

The status information returned from QTimeEvt_disarm() could be useful in the state machine design. For example, consider a state machine fragment shown in Figure 7.8. The entry action to “stateA” arms a one-shot time event me->timer1. Upon expiration, the time event generates signal TIMER1, which causes some internal or regular transition. However, another event, say BUTTON_PRESS, triggers a transition to “stateB.” The events BUTTON_PRESS and TIMER1 are inherently set up to race each other and so it is possible that they arrive very close in time. In particular, when the BUTTON_PRESS event arrives, the TIMER1 event could potentially follow very shortly thereafter and might get queued as well. If that happens, the state machine receives both events. This might be a problem if, for example, the next state tries to reuse the time event for a different purpose.

Figure 7.8 shows the solution. The exit action from “stateA” stores the return value of QTimeEvt_disarm() in the extended state variable me->g1. Subsequently, the variable is used as a guard condition on transition TIMER1 in “stateB.” The guard allows the transition only if the me->g1 flag is set. However, when the flag is zero, it means that the TIMER1 event was already posted. In this case the TIMER1 event sets only the flag but otherwise is ignored. Only in the next TIMER1 instance is the true timeout event requested in “stateB.”
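The guard technique can be expressed in code along the following lines. This is only a sketch under several assumptions: the Machine structure, the ‘timer1_armed’ flag, and the functions timer1_disarm(), timer1_expire(), and machine_dispatch() are hypothetical stand-ins for a real state machine and for QTimeEvt_disarm(), the entry action of “stateB” is assumed to rearm the same time event, and the handling of TIMER1 in “stateA” is omitted.

```c
#include <assert.h>
#include <stdint.h>

enum GuardSignal { BUTTON_PRESS_SIG, TIMER1_SIG };
enum GuardState  { STATE_A, STATE_B, STATE_DONE };

typedef struct {
    enum GuardState state;
    uint8_t timer1_armed;   /* hypothetical model of the status of timer1 */
    uint8_t g1;             /* extended-state flag used as the guard */
} Machine;

/* Models the disarm call: returns 1 if the timer was still armed. */
uint8_t timer1_disarm(Machine *me) {
    uint8_t was_armed = me->timer1_armed;
    me->timer1_armed = 0U;
    return was_armed;
}

/* Models expiration of the one-shot timer: it disarms itself and a TIMER1
 * event is now waiting in the queue (the caller dispatches it later). */
void timer1_expire(Machine *me) {
    assert(me->timer1_armed != 0U);
    me->timer1_armed = 0U;
}

void machine_init(Machine *me) {
    me->state = STATE_A;
    me->g1 = 0U;
    me->timer1_armed = 1U;              /* entry to stateA arms timer1 */
}

void machine_dispatch(Machine *me, enum GuardSignal sig) {
    switch (me->state) {
    case STATE_A:
        if (sig == BUTTON_PRESS_SIG) {
            me->g1 = timer1_disarm(me); /* exit action of stateA */
            me->state = STATE_B;
            me->timer1_armed = 1U;      /* assumed entry action of stateB */
        }
        break;                          /* TIMER1 in stateA is omitted here */
    case STATE_B:
        if (sig == TIMER1_SIG) {
            if (me->g1 != 0U) {
                me->state = STATE_DONE; /* genuine timeout requested in stateB */
            } else {
                me->g1 = 1U;            /* stale TIMER1 from stateA: ignore it */
            }
        }
        break;
    default:
        break;
    }
}
```

When TIMER1 has already been queued by the time BUTTON_PRESS is processed, the disarm call returns 0, so the first TIMER1 seen in “stateB” only sets the flag and the second one triggers the transition. When the disarm call returns 1, the first TIMER1 in “stateB” is already the genuine timeout.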

Many RTOSs natively support message queues, which provide a superset of functionality needed for event queues of active objects. QF is designed up front for easy integration of such external message queues. However, in case no such support exists or the available implementation is inefficient or inadequate, QF provides a robust and efficient native event queue that you can easily adapt to virtually any underlying operating system or kernel.

The native QF event queues come in two flavors, which share the same data structure (QEQueue) and initialization but differ significantly in behavior. The first variant is the event queue specifically designed and optimized for active objects (see Section 7.8.3). The implementation omits several commonly supported features of traditional message queues, such as variable-size messages (native QF event queues store only pointers to events), blocking on a full queue (QF event queue cannot block on insertion), and timed blocking on empty queues (QF event queues block indefinitely), to name just a few. In exchange, the native QF event queue implementation is small and probably faster than any full-blown message queue of an RTOS.

The other, simpler variant of the native QF event queue is a generic “raw” thread-safe queue not capable of blocking but useful for thread-safe event delivery from active objects to other parts of the system that lie outside the framework, such as ISRs or device drivers. I explain the “raw” queue in Section 7.8.4.