2

Human Operator Expertise in Diagnosis, Decision-Making, and Time Management

CNRS - University of Valenciennes

Centre d’Études et Recherches en Médecine Aérospatiale

University of Manchester

Although there are abundant theoretical frameworks for diagnosis in the laboratory or in the field, they present serious limitations in Dynamic Environment Supervision (DES). However, empirical data collected on expert operators can lead to a broader model that takes the dynamic features of the environment into account. A functional analysis of supervisory activity is outlined, stressing the link between diagnosis and overall operator activity (especially decision-making). The main theoretical contributions and limitations of current models of diagnosis are presented. Two aspects of diagnosis are examined: (a) its declarative basis, that is, the knowledge bases and representations on which it relies (in particular time knowledge); (b) its procedural basis, that is, diagnostic strategies. The chapter ends with a proposal for an extended model of diagnosis and decision-making in DES.

Although everybody agrees that diagnosis is a central feature of Dynamic Environment Supervision (DES), the notion remains largely ill-defined. One reason for this is a positive aspect of research conducted in the field: It tries to study psychological activity as a whole before attempting to decompose it into components whose interactions can then be investigated.

After Rasmussen’s pioneering work in the domain (see Rasmussen, 1986, for a recent overview), it has become clear that diagnosis in process supervision, as in troubleshooting, cannot be studied without addressing its relationships with action decision-making and implementation (Hoc & Amalberti, in press). Experimental approaches that isolate diagnosis from other kinds of activity are therefore no longer tenable when the aim is to model real situations. The point here is not so much to devise a task in which what is termed diagnosis is well defined, but rather to identify a basic component within a complex activity that integrates diagnosing, monitoring, and acting.

Moreover, in DES, diagnosis is more often than not closely interwoven with prognosis. The situation is very different from the repair of electronic equipment, which is generally taken out of service after a malfunction has occurred and dealt with off-line. In the latter case, diagnosing the failed component and replacing it is generally sufficient to return the equipment to a satisfactory state. In continuous process supervision, however, the human operator is responsible for maintaining the system’s safety while it is in operation, including reacting to transitions from normal to abnormal states that may occur only gradually. Expertise should thus encompass the early stages in the development of malfunctions, not just the final stage when the system breaks down. Moreover, because it is more important to avoid breakdowns than to recover from them, support systems should make breakdown avoidance their prime objective. Automation, especially computerized automation, sometimes provides operators with ever more means to anticipate future events and to maintain systems within tight bounds, where they are not only safer but also more productive and efficient. Prognosis thus becomes integrated with diagnosis. In the remainder of this chapter, we shall use the term diagnosis as a generic expression that encompasses understanding the current state of the system together with its past and future evolution.

We will not be so reckless as to impose a definition without discussion. Instead, we will extract a workable conception from studies conducted in experimental settings as well as in a variety of field situations: troubleshooting, medical diagnosis, and process control, or more broadly DES, including crisis management (e.g., fire-fighting). This will lead us to consider diagnosis as a generic concept, while noting that this component of activity ranges from skill-based identification of parameters leading immediately to action, to more demanding knowledge-based productive activity combining hypothesis generation and testing. Following the line of Bainbridge’s (1984) reflections on Diagnostic Skill in Process Operation, we will consider action feedback as an intrinsic part of diagnosis when an action, or the decision to “do-nothing-but-see-what-will-happen”, is partly or mainly aimed at testing hypotheses.

If we consider DES as an activity which develops in an environment that also evolves without any operator intervention, as Bainbridge (1988) argued, two kinds of knowledge are necessary in supervision: (i) knowledge of the structure and operation of the environment under supervision; (ii) knowledge of operator goals and actions. The former clearly needs diagnosis to regularly update a mental representation of the state and evolution of the process, whereas the latter could be supported by predefined knowledge, at least at an expert level.

Section 1 proposes an operational definition of diagnosis, operational in the sense of its link to action, within the framework of a functional analysis of supervisory activity. We will then briefly examine some theoretical frameworks available for understanding diagnosis, stressing both their value and their limitations. The three following sections will treat the dual nature of this component of psychological activity. In Section 2, diagnosis will be seen as relying on a variety of knowledge representation formats, which could be supported by suitable displays or information sources, not necessarily wholly computerized. Section 3 will separately address time representation and management in DES, where time plays a crucial role; in particular, we will show that time management does not always depend on time representation. In Section 4, several well-known kinds of diagnostic strategy will be presented in relation to other activity components, and we will derive the implications of these links for the design of diagnostic support. Finally, Section 5 will propose a general framework integrating knowledge, representations, and diagnostic strategies.

FUNCTIONAL ANALYSIS OF SUPERVISORY ACTIVITY

A functional perspective on diagnosis emphasizes its contribution to the overall performance of the system. This implies adopting criteria for designing and evaluating diagnostic systems which go far beyond the engineering requirements for physical operation. Instead, the appropriateness of diagnostic approaches must be measured in terms of their contribution to the commercial and/or social value of systems whose performance is being monitored.

Diagnosis must thus be viewed as a goal-directed activity, subordinate to the broader purposes of the system being diagnosed. A physician diagnoses not as an end in itself, but to help maintain the patient’s health. In some cases, the appropriate medical treatment may be selected without a formal diagnosis: for example, a broad-spectrum antibiotic may be chosen to cure an infection without identifying the exact organism responsible. A diagnostic investigation will usually not be taken beyond the point at which it ceases to refine the choice of treatment, and this point may be reached before a complete scientific explanation of the cause of the problem has been attained. Similarly, a troubleshooter may not need to diagnose the precise component that has caused a domestic appliance to malfunction if the appliance can be restored to working order more efficiently by replacing a whole unit, such as the motor or control panel. Experts on inquiry boards after a disaster are concerned with learning lessons for the future: The direction taken by their investigations reflects this goal, which may lead them into areas far removed from the technological failures that occurred. The implication is that the diagnostic task cannot be defined solely as finding the scientific explanation for a system malfunction within purely technological parameters. If we are to specify a diagnostic procedure for training or decision support, we must do so in relation to the ultimate purpose of the system whose performance is being supervised.

Diagnosis will almost certainly incur costs. Collecting data takes an appreciable amount of time, and control actions may have to be postponed to permit observations to be made. While the resulting accumulation of information makes a scientific explanation of the malfunction easier, its costs may be too high in terms of overall system effectiveness. For instance, while the investigation is under way the system may move into a more dangerous state, or even sustain damage, and the cost of this may exceed the benefit of a scientifically accurate diagnosis. Another consideration is the financial cost of gathering the data, which has to be set against the financial benefit of keeping the system in running order. Many diagnostic investigations involve very costly operations, yet these costs are not always taken into account when designing decision support. Finally, diagnosis is often invasive, reducing the system’s performance, or even causing damage, in order to gain information. Under such conditions, a scientific diagnosis may do more harm than leaving the system undisturbed, albeit operating at a suboptimal level.

It is therefore necessary to move away from the traditional view of diagnosis as a cognitive problem-solving activity which establishes a complete scientific explanation of the cause of a malfunction before the operator chooses a course of action. Instead, a theory of diagnosis must be a theory of optimal behavior, in which some control actions may be performed prior to information gathering, and information gathering may be curtailed before a complete picture has been established in the interest of maximizing utility.
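The idea of curtailing information gathering in the interest of utility can be sketched in Python. This is a minimal illustration under loudly stated assumptions: each candidate observation is given a hypothetical expected benefit for the choice of action, a data-gathering cost, and an expected cost of delaying intervention, all in the same arbitrary units, and the benefits of different observations are treated as independent (a strong simplification). The names `Observation` and `plan_investigation` are invented for the example and are not taken from any cited model.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    name: str
    expected_benefit: float  # hypothetical gain in the quality of the action chosen
    data_cost: float         # cost of gathering the data
    delay_cost: float        # expected cost of postponing action while it is gathered

    def net_value(self) -> float:
        return self.expected_benefit - self.data_cost - self.delay_cost


def plan_investigation(candidates: list[Observation]) -> list[str]:
    """Take observations in order of net value and stop at the first one that
    no longer pays for itself; the operator then acts on what is known."""
    chosen = []
    for obs in sorted(candidates, key=lambda o: o.net_value(), reverse=True):
        if obs.net_value() <= 0:
            break  # further diagnosis would cost more than it improves the decision
        chosen.append(obs.name)
    return chosen


candidates = [
    Observation("read temperature trend", 5.0, 0.5, 0.5),
    Observation("take product sample", 3.0, 1.0, 1.0),
    Observation("shut down and inspect", 6.0, 4.0, 5.0),
]
print(plan_investigation(candidates))  # ['read temperature trend', 'take product sample']
```

In this toy case the invasive inspection is never chosen: it would sharpen the explanation, but not enough to pay for the delay and disruption it entails.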

With these points in mind, several general characteristics of diagnosis in a complex, dynamic environment need to be considered when designing the diagnostic activity required of a human operator. The first is that the state of the system evolves, which gives the human operator the option of intervening at alternative points in time. Of course, if the system degrades catastrophically, there is no such choice. However, the latencies in most continuous processes allow the operator to delay action while learning more about the state of the system. For the design of diagnostic activities, this means that intervention will have different goals at different stages in the evolution of a fault, ranging from prevention early on, through correction at an intermediate stage, to damage limitation if intervention comes later. Table 1 shows the different diagnostic goals of an operator at different times in the evolution of a malfunction: early, middle, and late.

In general, then, understanding the system’s problems early in the evolution of a malfunction implies identifying threat factors before they have progressed far enough to cause a malfunction. Understanding the problems at intermediate stages will focus on deciding how to get the destructive processes under control. Understanding problems when they have progressed to an advanced stage is a matter of identifying an intervention which will repair damage.

The second general characteristic of diagnosis in a complex, dynamic environment to be considered when designing a diagnostic role for the human operator is that information increases with time. Information about a system malfunction accumulates, with relatively little available early on and larger amounts later. The diagnostic strategies adopted at different stages in the evolution of a malfunction will reflect these different amounts of information. In general, diagnosis attempted early in the development of a fault will use broader search strategies to compensate for the relative lack of pathognomonic information at that stage. By considering the overall context, the operator can form expectations that reduce uncertainty. This point is discussed in greater detail by Boreham in Chapter 6 of the present volume.
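How context-based expectations can reduce uncertainty when pathognomonic information is scarce may be sketched with Bayes' rule. The sketch assumes, purely for illustration, three fault hypotheses, one early symptom that barely discriminates between them, and invented probabilities; uncertainty is measured as the Shannon entropy of the resulting distribution. None of these numbers come from the studies cited here.

```python
import math


def posterior(prior: dict[str, float], likelihood: dict[str, float]) -> dict[str, float]:
    """Bayes' rule: P(h | symptom) is proportional to P(symptom | h) * P(h)."""
    joint = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(joint.values())
    return {h: p / total for h, p in joint.items()}


def entropy(dist: dict[str, float]) -> float:
    """Shannon entropy in bits; lower values mean less uncertainty."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


# Hypothetical likelihoods of a weak, non-pathognomonic early symptom.
likelihood = {"fault_a": 0.6, "fault_b": 0.5, "fault_c": 0.4}

# No context: all faults equally expected.
uniform_prior = {"fault_a": 1 / 3, "fault_b": 1 / 3, "fault_c": 1 / 3}
# Hypothetical expectations formed from the overall context (e.g., recent history).
context_prior = {"fault_a": 0.8, "fault_b": 0.1, "fault_c": 0.1}

without_context = posterior(uniform_prior, likelihood)
with_context = posterior(context_prior, likelihood)
print(round(entropy(without_context), 2), round(entropy(with_context), 2))
```

The symptom alone leaves the operator close to maximal uncertainty; the contextual prior, not the symptom, does most of the work of narrowing the search.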

TABLE 1. Different goals and sub-goals of diagnosis at different times in the evolution of a fault in a dynamic system

LIMITATIONS OF CURRENT MODELS OF DIAGNOSIS AND DECISION-MAKING

Two main research trends in experimental psychology may be considered as possible sources of theoretical frameworks: (a) interpretative or understanding activities in problem-solving situations (Anderson, 1983; Richard et al., 1990); (b) structure induction (Klahr & Dunbar, 1988; Simon & Lea, 1974). The first trend underlies our endeavor to avoid treating diagnosis as a specific psychological activity separate from other kinds of cognitive processes. The second provides a more precise framework, which we examine in more detail.

The main contribution of the structure induction paradigm to the study of diagnosis is the definition of diagnosis within two dual spaces. This idea was first introduced by Simon and Lea (1974) in their well-known GRI (General Rule Inducer) and later refined by Klahr and Dunbar (1988) in their model SDDS (Scientific Discovery as Dual Search). The typical situation referred to by these models is concept identification (in the Klahr & Dunbar study, learning by experimentation what a command given to a robot means). The search for a meaningful structure or rule is assumed to develop within two dual spaces: an example space, from which facts are selected (examples of a concept, or the behavior of a robot responding to a particular command), and a rule or structure space, in which hypotheses on the relevant properties of facts can be found.
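The alternation between the two spaces can be illustrated with a toy search. This is a minimal sketch, not a reimplementation of GRI or SDDS: the candidate rules, the example space, and the hidden "device" are all invented. The learner picks the trial (a move in the example space) whose predicted outcomes differ most across the hypotheses still alive, then discards the hypotheses the observation refutes (a move in the rule space).

```python
from typing import Callable

# Rule (structure) space: hypothetical candidate rules for what an unknown
# command does to its numeric argument n.
HYPOTHESES: dict[str, Callable[[int], int]] = {
    "doubles": lambda n: 2 * n,
    "adds_one": lambda n: n + 1,
    "squares": lambda n: n * n,
}

# Example space: the arguments the learner is able to try.
EXAMPLES = [1, 2, 3, 4]


def hidden_device(n: int) -> int:
    """Stand-in for the device under study; its rule is unknown to the learner."""
    return n * n


def most_discriminating(live: dict[str, Callable[[int], int]]) -> int:
    """Pick the trial whose predicted outcomes differ most across live hypotheses."""
    return max(EXAMPLES, key=lambda x: len({rule(x) for rule in live.values()}))


def dual_search() -> list[str]:
    live = dict(HYPOTHESES)
    while len(live) > 1:
        x = most_discriminating(live)  # move in the example space
        observed = hidden_device(x)
        survivors = {name: rule for name, rule in live.items() if rule(x) == observed}
        if len(survivors) == len(live):
            break  # no available trial separates the remaining hypotheses
        live = survivors  # move in the rule space
    return sorted(live)


print(dual_search())  # ['squares']
```

An empty result would mean that every hypothesis had been refuted and the rule space itself had to be extended, a step this sketch does not model.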

Although this conception is of great value, it has to be modified to fit the diagnosis situation better. We therefore describe the two dual spaces as a fact space (FS) and a structure space (SS). However, search in FS and search in SS are linked, since facts are not raw data and have to be interpreted by means of meaningful structures (e.g., identifying a variable value as too low depends on the context). In addition, hypotheses concern not only structures (e.g., syndromes) but also facts (e.g., applying a well-known syndrome to a patient may only be possible if a hypothesis is generated about the occurrence of a past fact such as a trauma). Finally, following Klahr and Dunbar (1988), the SS has to be considered as hierarchically organized, enabling hypotheses to be generated at diverse levels of schematization. Two dimensions have to be considered here (Hoc, 1988; Rasmussen, 1986): (a) a refinement hierarchy, which divides a structure into substructures; (b) an implementation hierarchy, which bears on the relationships between functions (or ends) and implementations (or means). These hierarchies can account for the common distinction between general and specific hypotheses (Elstein et al., 1978).
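The refinement dimension of a hierarchically organized SS can be sketched as a tree in which each level holds progressively more specific hypotheses. The sketch covers only the refinement hierarchy, not the implementation (ends-means) hierarchy, and the hypothesis labels are hypothetical examples rather than elicited operator knowledge.

```python
from dataclasses import dataclass, field


@dataclass
class Hypothesis:
    """A node in the refinement hierarchy of the structure space."""
    label: str
    children: list["Hypothesis"] = field(default_factory=list)


def hypotheses_at_level(root: Hypothesis, level: int) -> list[str]:
    """Labels found exactly at a given depth: level 0 is the most general
    hypothesis, deeper levels are progressively more specific refinements."""
    frontier = [root]
    for _ in range(level):
        frontier = [child for node in frontier for child in node.children]
    return [h.label for h in frontier]


# Hypothetical hierarchy for an abnormal temperature reading.
tree = Hypothesis("abnormal temperature", [
    Hypothesis("heat supply problem"),
    Hypothesis("heat removal problem", [
        Hypothesis("coolant loss"),
        Hypothesis("exchanger fouling"),
    ]),
])

print(hypotheses_at_level(tree, 1))  # ['heat supply problem', 'heat removal problem']
print(hypotheses_at_level(tree, 2))  # ['coolant loss', 'exchanger fouling']
```

A general hypothesis such as "heat removal problem" can guide action before any of its refinements has been tested, which is one way of reading the general/specific distinction.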

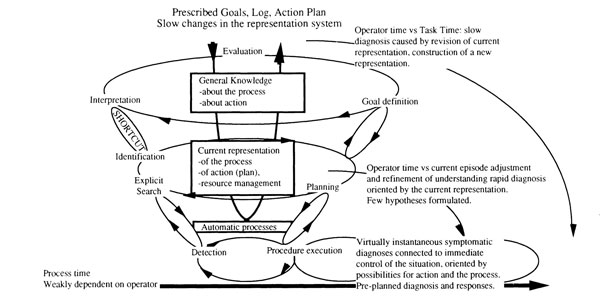

Seen at first as a model, and increasingly as a generic cognitive architecture generating a class of models, Rasmussen’s decision ladder partly captures the link between diagnosis and decision-making (see Rasmussen, 1981, 1984, 1986). It is typical of the procedural and serial models elaborated in the work context (see also Rasmussen & Rouse, 1981). A route through both legs of the ladder in their entirety corresponds to a fully developed, serial decision-making process. However, we have modified the original presentation of the model to overcome some of its main drawbacks (Figure 1):

(a) Situation analysis stage (left leg of the ladder):

Three successive modules lead to diagnosis (from bottom to top): detection of abnormal conditions, explicit search for information, and situation identification. Furthermore, interpretation and evaluation of consequences are interpolated between the two stages (top of the figure).

(b) Planning stage (right leg of the ladder):

Three other modules (from top to bottom) contribute to action: definition of a task, formulation of a procedure, and execution.
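The serial route and its possible shortcuts can be sketched as a small directed graph searched breadth-first. This is an illustrative sketch only: the stage names follow the modules listed above, while the particular shortcut used below (detection directly triggering execution, as in the emergency-medicine case mentioned next) is one hypothetical example; the full set of shortcuts drawn in Figure 1 is not reproduced.

```python
from collections import deque

# Serial route through the ladder, in the order of the modules listed above.
FULL_ROUTE = [
    "detection", "information_search", "identification",      # left leg, bottom to top
    "interpretation", "evaluation",                           # top of the ladder
    "task_definition", "procedure_formulation", "execution",  # right leg, top to bottom
]


def build_ladder(shortcuts: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Link consecutive stages, then add any left-to-right shortcuts."""
    graph: dict[str, list[str]] = {stage: [] for stage in FULL_ROUTE}
    for here, nxt in zip(FULL_ROUTE, FULL_ROUTE[1:]):
        graph[here].append(nxt)
    for here, target in shortcuts:
        graph[here].append(target)
    return graph


def shortest_route(graph: dict[str, list[str]], start: str = "detection",
                   goal: str = "execution") -> list[str]:
    """Breadth-first search for the route through the fewest stages."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in graph[path[-1]]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return []


print(len(shortest_route(build_ladder([]))))                       # 8
print(shortest_route(build_ladder([("detection", "execution")])))  # ['detection', 'execution']
```

Without shortcuts, every decision traverses all eight modules; each shortcut corresponds to a shallower form of diagnosis that is still operational enough to trigger action.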

This architecture highlights the links between diagnosis and decision-making. Moreover, it supports possible shortcuts (from left to right), which correspond to different kinds of diagnosis with regard to depth (e.g., detecting abnormal conditions, or normal ones, since identifying normal operation is also important, can be operational enough to trigger an appropriate action, as in emergency medicine). Nevertheless, Figure 1 diverges from Rasmussen’s original figure in three respects:

(i) Situation identification can result in an identification of the current system state (as in the original architecture), but now also in an anticipation of the system’s future evolution (prognosis). The architecture was originally devised to account for elementary troubleshooting tasks, but this extension is needed to introduce prognosis in DES. In blast furnace control, for example, about half of the verbal reports on representation concern evolutions rather than states (Hoc, 1989b); in gas grid control, 28% of the verbal reports are devoted to predictions of gas demand (Umbers, 1979); and in modeling the supervision of a very rapid process such as fighter aircraft piloting, anticipation over diverse time spans is placed at the center (Amalberti & Deblon, 1992). Iosif (1968) showed that process operators paid more attention to parameters on which anticipation could be based (and also to frequently out-of-order parameters). This phenomenon was confirmed in blast furnace supervision (Hoc, 1989a). Anticipation also plays a crucial role in the stepwise adjustment of medication doses to prevent epileptic seizures (Boreham, 1992) and constitutes a central training topic in crisis management (Samurçay & Rogalski, 1988). The implication of these studies for diagnosis/prognosis support is clear: Designers must pay attention to parameters that have a high anticipation value.

FIGURE 1. Rasmussen’s step-ladder revisited (after Hoc & Amalberti, in press)

The left part of the ladder corresponds to diagnosis and prognosis, the upper part goal setting, and the right part action planning. Rectangles represent processes, lines information flow (dotted lines represent some possible shortcuts from information to action).

(ii) Hypothesis generation and testing strategies require adding a flow of information from identification (or anticipation) back to information search. This loop is crucial in problem-solving situations where the first hypothesis is not necessarily correct. However, the adequacy of the explanation of the system state is not the only criterion that determines how long the decision system stays in the loop. Because of the risks of delaying intervention while diagnosis is made, the operator may take provisional action before a full explanation is achieved. Moreover, the dynamic features of the supervised process are difficult to represent in this kind of figure. Some hypothesis-testing activities have to be postponed until the right time window, when information relevant to testing the hypotheses becomes available. Because of response latencies and the spontaneous evolution of the process, timing depends not only on the operator’s implementation of the architecture but also on process timing (especially in processes with long response latencies, such as a blast furnace).

(iii) As Bainbridge (1984) put it, feedback loops are truly necessary to account for expectation effects: Operators are not passive devices simply waiting for information which they filter. When formulating a procedure (or a diagnosis), the operator can diffuse a number of expectations through the network towards diverse control levels: detection (lower level processes), search for information, or diagnosis (upper level processes). Here again, time lags have to be taken into account while relevant information is awaited. In some cases, expectations are preselected by a prior analysis before the operator takes charge of the system (e.g., at shift change-over, or during mission planning in fighter aircraft piloting, as shown by Amalberti & Deblon, 1992).
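The interplay between postponed hypothesis tests and provisional action described in (ii) and (iii) can be sketched as a simple time-stepped loop. All of it is a hypothetical illustration: each hypothesis can be tested only once its time window opens, risk is assumed to grow by a fixed amount per step while the operator waits, and a provisional action is taken as soon as waiting one more step would push the accumulated risk past an assumed limit. `PendingTest` and `run_loop` are invented names.

```python
from dataclasses import dataclass


@dataclass
class PendingTest:
    hypothesis: str
    window_opens: int  # time step at which the relevant information becomes observable


def run_loop(tests: list[PendingTest], risk_per_step: float,
             risk_limit: float) -> list[tuple[int, str]]:
    """Step through time, testing each hypothesis when its window opens and
    taking one provisional action when further waiting becomes too risky."""
    log: list[tuple[int, str]] = []
    risk = 0.0
    acted = False
    horizon = max(t.window_opens for t in tests)
    for step in range(horizon + 1):
        if not acted and risk + risk_per_step > risk_limit:
            log.append((step, "provisional action"))
            acted = True  # simplification: risk is no longer consulted after acting
        for test in tests:
            if test.window_opens == step:
                log.append((step, f"test {test.hypothesis}"))
        risk += risk_per_step
    return log


tests = [PendingTest("H1", 2), PendingTest("H2", 5)]
print(run_loop(tests, risk_per_step=1.0, risk_limit=3.5))
# [(2, 'test H1'), (3, 'provisional action'), (5, 'test H2')]
```

The process, not the operator, sets when H2 can be tested; the provisional action at step 3 is taken before the explanation is complete.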

KNOWLEDGE BASES AND KNOWLEDGE REPRESENTATIONS

Compared to static situations, where operators control the whole environment, dynamic situations require a wider variety of knowledge representation formats for diagnosis. Many empirical studies have been devoted to eliciting these formats from operators by means of diverse techniques (interviews, questionnaires, observations in problem-solving situations, etc.), for example with polystyrene mill operators (Cuny, 1979), nuclear power plant operators (Alengry, 1987), and blast furnace operators (Hoc & Samurçay, 1992).

Although terminology differs from one theoretical contribution to another (Baudet & Denhière, 1991; Brajnik et al., 1989), mental models or Representation and Processing Systems (RPS: see Hoc, 1988) can be classified into five main categories: causal, functional, transformational, topographical, and life-cycle RPSs.

(a) Causal RPS

Probably because knowledge elicitation techniques have for the most part been directed towards the knowledge underlying procedures and strategies, empirical data are richest on causal RPSs. Following Crossman (1965), such an RPS is usually represented by an “influence graph”, which connects input variables (which operators can act upon) to intervening variables, and the latter to output variables such as product quality. Examples can be found in Cuny (1979) or Hoc (1989a).

More often than not, this kind of network serves as a basis for inductive inference (to causes) or deductive inference (to consequences), the latter integrating temporal anticipation. Nevertheless, knowledge of this kind is not purely declarative, in the sense of a structure far removed from concrete implementation in action. For example, in blast furnace supervision, the knowledge integrated into operator networks is mainly relevant to action (Hoc & Samurçay, 1992): (a) variables describing internal phenomena that operators can act upon directly and extensively are more commonly reported and used than others; (b) each basic phenomenon is linked to the actions available on it.
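Both kinds of inference over an influence graph can be sketched in a few lines. The graph below is a hypothetical toy with generic variable names and invented time lags, not a model of any real plant: edges run from cause to effect, deductive inference propagates forward and accumulates lags (anticipation), and inductive inference walks backward to candidate causes.

```python
from collections import deque

# Hypothetical influence graph: cause -> [(effect, lag in hours)].
# Two input variables act on an intervening variable, which acts on an output.
INFLUENCES: dict[str, list[tuple[str, int]]] = {
    "input_a": [("intervening_x", 2)],
    "input_b": [("intervening_x", 1)],
    "intervening_x": [("product_quality", 4)],
}


def consequences(var: str) -> dict[str, int]:
    """Deductive inference with anticipation: each downstream variable and the
    earliest delay after which a change in `var` should reach it."""
    delays: dict[str, int] = {}
    queue = deque([(var, 0)])
    while queue:
        node, elapsed = queue.popleft()
        for effect, lag in INFLUENCES.get(node, []):
            if effect not in delays or elapsed + lag < delays[effect]:
                delays[effect] = elapsed + lag
                queue.append((effect, elapsed + lag))
    return delays


def causes(var: str) -> set[str]:
    """Inductive inference: every upstream variable that could explain a change
    in `var` (assumes the graph contains no cycles)."""
    parents = {c for c, effects in INFLUENCES.items() if any(e == var for e, _ in effects)}
    found = set(parents)
    for p in parents:
        found |= causes(p)
    return found


print(consequences("input_a"))            # {'intervening_x': 2, 'product_quality': 6}
print(sorted(causes("product_quality")))  # ['input_a', 'input_b', 'intervening_x']
```

The accumulated lag (six hours from `input_a` to `product_quality` in this toy) is what allows an operator to anticipate an output change long before it becomes visible.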

(b) Functional RPS

In situations where procedures play a major role, operators use another representational format. Alengry (1987), for example, elicited this kind of knowledge from nuclear power plant operators, who were asked to specify what they were doing while trying to implement some global goal, and why. He collected functional knowledge linking operator goals to operator actions, as well as causal representations, including a causal rationale for the procedures. Systems are artifacts specifically designed to fulfill goals, which are defined when structuring their components and/or control loops. When a system is in nominal operation, these functional specifications play a major role in understanding and diagnosing the system state or evolution. In abnormal conditions, however, such a functional rationale can become invalid, and operators must then rely on more general knowledge of a causal kind.

(c) Transformational RPS

System operation can also be represented in terms of the transformations taking place in the system. This is clearer in discontinuous processes, such as integrated manufacturing, than in continuous ones. In discontinuous processes, diagnosis is largely determined by transformations, as shown by Guillermain (1988): Operators pay less attention to the reasons for a breakdown than to the specific transformation that has failed, and try to reset it before searching for causes (very often resetting is sufficient to recover from a breakdown attributed to a chance interference, for example between the operation of a soldering robot and some dust).
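Why resetting first can be a sound strategy may be shown with a simple expected-cost comparison. This is an illustrative model, not Guillermain's analysis: it assumes that a reset always clears a transient (chance) breakdown and never clears a persistent one, that a cause search always succeeds, and that all costs are hypothetical numbers in arbitrary units. Under these assumptions, reset-first is cheaper exactly when the reset cost is below the probability of a transient breakdown times the search cost.

```python
def expected_cost_reset_first(p_transient: float, c_reset: float, c_search: float) -> float:
    """Always pay for one reset; pay for a cause search only when the
    breakdown was not transient (so the reset did not clear it)."""
    return c_reset + (1.0 - p_transient) * c_search


def reset_first_pays(p_transient: float, c_reset: float, c_search: float) -> bool:
    """Compare with searching for causes straight away, which costs c_search."""
    return expected_cost_reset_first(p_transient, c_reset, c_search) < c_search


print(reset_first_pays(p_transient=0.7, c_reset=1.0, c_search=10.0))   # True
print(reset_first_pays(p_transient=0.05, c_reset=1.0, c_search=10.0))  # False
```

When most breakdowns are chance interferences, as in the soldering-robot example, the cheap reset usually saves the cost of a full search.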

(d) Topographical RPS

Another way of supporting diagnosis is to use topographic references when the system can be broken down into distinct components. Much work has been done in the troubleshooting domain on varying the features of information displays (such as mimic boards), especially by giving hierarchical access to such decompositions to help locate deficient components (Brooke & Duncan, 1983; Morrison & Duncan, 1988; Patrick, 1993).
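Hierarchical access to a topographic decomposition can be sketched as a top-down descent through a part-whole tree. The plant structure, the unit names, and the `healthy` check are hypothetical, and the sketch assumes a single fault whose effects show up in every enclosing unit; it does not reproduce the displays studied in the cited work.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Unit:
    """A node in a part-whole (topographic) decomposition of the plant."""
    name: str
    parts: list["Unit"] = field(default_factory=list)


def locate(root: Unit, healthy: Callable[[str], bool]) -> list[str]:
    """Top-down search: at each level, check the parts of the current unit and
    descend into the first one that fails its check."""
    unit, path = root, [root.name]
    while unit.parts:
        failing = [p for p in unit.parts if not healthy(p.name)]
        if not failing:
            break  # the fault cannot be localized below this level
        unit = failing[0]
        path.append(unit.name)
    return path


plant = Unit("plant", [
    Unit("cooling", [Unit("pump"), Unit("valve")]),
    Unit("heating", [Unit("burner"), Unit("sensor")]),
])
faulty = {"cooling", "valve"}  # hypothetical fault and its enclosing unit
print(locate(plant, lambda name: name not in faulty))  # ['plant', 'cooling', 'valve']
```

The descent checks only the parts of one unit per level, which is what makes hierarchical access economical compared with scanning every component.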

(e) Life-cycle RPS

In dynamic contexts such as blast furnace supervision and the management of human illness, an important representational format is the life cycle, including the life cycle of the system and the subcycles of its constituent processes. Regularities can be observed indicating successive phases in the history of a plant, such as start-up, early maladaptations to the environment, trouble-free operation, occasional turbulences, cumulative multiple malfunctions, and obsolescence. Knowledge of the stage in the life cycle is crucial for making prognoses. It is also important for the interpretation of symptoms, as the same symptom may have different meanings at different stages in the life cycle.
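The point that one symptom can mean different things at different life-cycle stages amounts to making interpretation depend on the pair (symptom, stage). A minimal sketch: the stage names follow the text above, while the symptom and its readings are invented for illustration.

```python
# Hypothetical readings of the same symptom at different life-cycle stages.
READINGS: dict[tuple[str, str], str] = {
    ("rising vibration", "start-up"): "expected settling-in behavior",
    ("rising vibration", "trouble-free operation"): "early sign of a developing fault",
    ("rising vibration", "obsolescence"): "consistent with general wear",
}


def interpret(symptom: str, stage: str) -> str:
    """Interpret a symptom in the light of the current life-cycle stage."""
    return READINGS.get((symptom, stage), "no stage-specific reading available")


for stage in ("start-up", "trouble-free operation", "obsolescence"):
    print(stage, "->", interpret("rising vibration", stage))
```

A support system that indexed symptoms without the stage would have to return all three readings at once, leaving the operator to disambiguate.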

It would be too simplistic to hope to identify the operator’s current strategy in real time and to decide from it what kind of support is needed. Diagnosis can have causal, functional, transformational, and topographical components. It is tempting to design mimic boards when the structure of the system is easy to break down into well-identified components, but such a decomposition usually provides operators with insufficient support. In certain cases, causal, functional, transformational, and topographical relationships cannot be integrated into the same kind of structure.

This is why Goodstein (Goodstein, 1983; Goodstein & Rasmussen, 1988) introduced the principle of multi-windowed displays, which enable operators to switch quickly from one structure to another while making the links between the diverse structures apparent. Lind (Lind, 1991; Lind et al., 1989; Lind, Chapter 16 of the present volume) has further improved the implementation of this principle with his MFM (Multilevel Flow Models) interface.

To a great extent, the knowledge embedded in the RPSs described above is deep and can provide the operator with a basis for high-level (knowledge-based) diagnosis. Expert operators, however, very often perform lower-level (rule-based, skill-based) diagnosis because their activity has become automatized. Nevertheless, empirical data (especially in medicine) show that deep knowledge (e.g., biomedical knowledge) is neither lost nor inert, but simply encapsulated in procedures (Boshuizen et al., 1992). Expert operators can access this knowledge, either in parallel when applying rules or triggering automatic procedures, or during interviews with the observer.

Thus the current representation of the situation, which diagnosis regularly updates, takes its inputs from diverse RPSs as well as from information gathering. This representation is hierarchically organized, so that diverse levels of diagnosis can be performed concurrently. However, in the case of automatic processes (skill-based behavior), external information plays a major role and bypasses the symbolic representation level.

The diverse RPSs refer to the operator’s knowledge of the system per se. However, such a knowledge base is useless for diagnosis without knowledge of specific failure modes. That this is a distinct body of knowledge is most clearly apparent in medicine, where “normal” physiology is one scientific specialism and pathology another. Typically, a failure mode will be designated in terms of four primitives: (a) the symptoms (e.g., an abnormal display); (b) how the failed components generate these symptoms (i.e., causal links between the type of component failure and its external manifestations); (c) the way in which the components may fail (e.g., fissure, overheating, swelling, shrinking); and (d) the causes of the component failure (either extrinsic factors: corrosion, speed of operation, safety violations; or intrinsic factors: quality of materials, demagnetization). Operator knowledge of failure modes tends to emphasize symptoms, and there has been considerable research into whether knowledge of the other factors is necessary at the level of immediate supervision. However, decision support can represent failure modes in all four of the aspects listed above, and data can be collected about these aspects even in the absence of data about symptoms. In general, data on (c) and (d) are important for prognosis and preventive action.
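The four primitives of a failure mode map naturally onto a record type, which a decision-support system could query for known causes before any symptom appears. The sketch below is hypothetical: the field names are invented, the example values for (a), (c), and (d) are taken from the lists above, and the description given for (b) is made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    symptoms: list[str]          # (a) external manifestations
    manifestation: str           # (b) how the component failure produces the symptoms
    failure_type: str            # (c) the way the component fails
    extrinsic_causes: list[str]  # (d) e.g., corrosion, speed of operation
    intrinsic_causes: list[str]  # (d) e.g., quality of materials


def precursors_present(mode: FailureMode, conditions: set[str]) -> list[str]:
    """Known causes of this failure mode that are already present, which can
    justify preventive action before any symptom has appeared."""
    return [c for c in mode.extrinsic_causes + mode.intrinsic_causes if c in conditions]


overheating = FailureMode(
    symptoms=["abnormal display"],
    manifestation="overheated part raises the displayed temperature",  # hypothetical
    failure_type="overheating",
    extrinsic_causes=["speed of operation", "safety violations"],
    intrinsic_causes=["quality of materials"],
)
print(precursors_present(overheating, {"speed of operation"}))  # ['speed of operation']
```

Because the query uses only (d), it works with no symptom data at all, which is the prognostic use of failure-mode knowledge the text points to.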

TIME MANAGEMENT

Embedded in every RPS is knowledge of temporal changes in the process, especially when there are time lags along causal chains of events or between operator intervention and actual effects. This suggests that expertise is associated with improved time processing.

However, time remains one of the most difficult problems to understand. Different schools of thought approach the problem in specific contexts and have reached a range of solutions that are difficult to integrate into a coherent framework.

In Artificial Intelligence, several authors have proposed complex computer models for processing temporal information. However, the main concern of these studies is text comprehension and production, which may be why linguistics was the main source of inspiration for AI systems. Three entities are brought into play (Bestougeff & Ligozat, 1989):

- abstract representational systems, which model the temporal features of entities;

- natural language, which expresses temporal notions through temporal markers and grammatical tense;

- the real world, which is represented.

Representational systems are thus models of previously expressed temporal contents. They deal with temporal functions relating properties of events to intervals (Allen, 1984), or to instants and intervals (McDermott, 1982), and are mainly concerned with coherence testing and checking the validity of propositions. This formal approach is very different from the psychological approach, which does not presuppose that people explicitly process representations of time at this level. In addition, the formal approach makes no attempt to model psychological time processing. Nevertheless, it could be useful in approaching some aspects of psychological time.
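The kind of formal machinery involved can be illustrated by classifying how two intervals relate, using the thirteen basic interval relations on which Allen's framework is built. This is a minimal sketch of the classification step only; it does not implement the constraint propagation that such systems use for coherence testing, and it assumes intervals given as numeric (start, end) pairs with start < end.

```python
def allen_relation(a: tuple[float, float], b: tuple[float, float]) -> str:
    """Name the basic relation holding from interval a to interval b.
    Intervals are (start, end) pairs with start < end."""
    (a1, a2), (b1, b2) = a, b
    if a2 < b1:
        return "before"
    if b2 < a1:
        return "after"
    if a2 == b1:
        return "meets"
    if b2 == a1:
        return "met-by"
    if (a1, a2) == (b1, b2):
        return "equals"
    if a1 == b1:
        return "starts" if a2 < b2 else "started-by"
    if a2 == b2:
        return "finishes" if a1 > b1 else "finished-by"
    if b1 < a1 and a2 < b2:
        return "during"
    if a1 < b1 and b2 < a2:
        return "contains"
    return "overlaps" if a1 < b1 else "overlapped-by"


print(allen_relation((1, 4), (4, 6)))  # meets
print(allen_relation((2, 3), (1, 6)))  # during
```

Such explicit relations are exactly what, on the psychological view discussed next, operators need not compute when they are well tuned to the process.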

Two recent books cover the state of the art in this domain (Block, 1990; Macar et al., 1992). Both volumes are striking for their reserve. The authors are cautious when discussing the range of applicability of their results with regard to specific situations (e.g., very short or long time psychophysics) and to the methodologies used (e.g., prospective evaluations, where subjects are informed about their evaluation task beforehand, or retrospective judgments without preparation). The major difficulty inherent in this issue is the tight relationship between the temporal and semantic (e.g., causal) aspects of events. Time is not easy to isolate.

However, a consensus may be emerging from these studies, as shown by Michon (1990), one of the best-known researchers in this domain, who argues that adaptation to time precedes the experience of time, although some circularity is possible. Temporal representation is thus a conscious product of an implicit tuning to the temporal dynamics of the environment. If temporal adaptation is successful, time need not be represented, in particular at a conceptual level. Awareness and symbolic representations only come into play when problem-solving or communication activities are involved, but even then processing can be based solely on verisimilitude (some kind of a posteriori reconstruction based on similarity with well-known situations) or on metaphor (many linguistic expressions of time are metaphoric).

Hence, even in process control, operator behavior may appear to be very well adapted to the temporal aspects of the process without any conscious processing of time. Field researchers must therefore cope with the same methodological difficulties as those encountered in experimental approaches.

Major contributions to this question have been made by De Keyser's research team (mainly accessible through a substantial report by Richelle & De Keyser, 1992). The originality of their approach lies in the assumption that operators can use a number of different “Temporal Reference Systems” (conventional, task-specific, or emerging from action regularities), which may be modeled by abstract representational systems. However, although the authors acknowledge implicit processing of time, their formal approach draws no clear distinction between explicit and implicit processing. The data their team has collected specifically on time processing are very scarce, as is generally the case in the process control literature, and are restricted to system start-up (see also Decortis, 1988). Expertise appears to be related to richer knowledge of how process variables evolve, which enables operators to elaborate typical scripts and use them as reference frameworks. An extensive analysis of such scripts has been performed in a rapid process control situation (fighter aircraft piloting) by Amalberti and Deblon (1992).

Another study shows an effect of expertise in bus traffic regulation (Mariné et al., 1988). These authors report more frequent temporal markers in experts’ verbal reports than in novices’ (however, they report only raw frequencies, not percentages). Following Michon’s line of reasoning, this difference could be due to the communication needs inherent in this kind of activity: the bus traffic controller does need to communicate time representations to drivers. More data are needed before this result can be generalized to process control. A recent study of time expressions in verbal protocols collected during blast furnace supervision shows no difference between experts and beginners (Hoc, in press). These data are compatible with Michon’s view of the automatization of expert behavior (implicit tuning to the temporal features of the process under supervision).

In DES situations, the temporal dimension can cover a variety of cognitive variables, which need to be distinguished. Three main ones will be discussed here:

(a) Time can be used as an activity support, freeing operators from processing complex representations of causal relationships among variables. For example, it may be sufficient to memorize a malfunction script with temporal landmarks in order to act at the skill- or rule-based level, without any causal interpretation of the script or calculation of latencies. Memory for dates and periods can replace causal reasoning and calculation, and external synchronizers can then be very useful.

(b) In dynamic situations, operators need to deal with evolutions (changes in variables). In this case, time is not processed in isolation; rather, temporal functions, that is, relationships between variables and time, are processed. Response latencies are processed at this level, as are the forms of responses.

(c) The speed of the (technical) process can affect the course of cognitive processes. Time pressure has well-known effects on cognitive strategies, for example, the introduction of planning before task execution in fighter aircraft piloting (Amalberti & Deblon, 1992). In blast furnace supervision, this aspect of time is irrelevant in most cases, since decision-making can take ten to thirty minutes without any risk.
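A temporal function in the sense of point (b) can be sketched by assuming, purely for illustration, that a process variable responds to a control action like a first-order system with dead time. In that idealized model the dead time plays the role of the response latency and the time constant shapes the form of the response. The model and the numbers below are assumptions, not a description of blast furnace dynamics, which are far more complex.

```python
import math


def fopdt_response(t: float, gain: float, time_constant: float, dead_time: float) -> float:
    """Change in a process variable t minutes after a unit step in a control input,
    assuming a first-order-plus-dead-time response (illustrative model only)."""
    if t < dead_time:
        return 0.0  # nothing is visible yet: this is the response latency
    return gain * (1.0 - math.exp(-(t - dead_time) / time_constant))


def time_to_reach(fraction: float, time_constant: float, dead_time: float) -> float:
    """Time at which the response reaches a fraction (0 < fraction < 1) of its final value."""
    if not 0.0 < fraction < 1.0:
        raise ValueError("fraction must lie strictly between 0 and 1")
    return dead_time - time_constant * math.log(1.0 - fraction)


# Hypothetical values: 20 min dead time, 60 min time constant, final change of 5 units.
for t in (10, 20, 80, 200):
    print(t, round(fopdt_response(t, gain=5.0, time_constant=60.0, dead_time=20.0), 2))
print(round(time_to_reach(0.9, time_constant=60.0, dead_time=20.0), 1))
```

With these hypothetical values, nothing changes for the first 20 minutes and 90% of the final change is reached only after about 158 minutes, which gives a feel for why latencies and response forms, rather than time alone, are what operators of slow processes have to track.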

DIAGNOSTIC STRATEGIES

Central to decision support is the reduction of uncertainty. Strategies of diagnosis can be represented as ways of resolving uncertainty about which control actions are most appropriate. Uncertainty can arise from the unreliability of data, resulting in failure to detect the true state and evolution of the system. Alternatively, it may be due to inadequacies of the representational tools which are instantiated by these data, resulting in failure to interpret system states and evolutions as significant (i.e., as justifying action or further investigation).

We owe the most extensive descriptions of diagnostic strategies to Jens Rasmussen (1981, 1984, 1986), who mainly based his theoretical work on observations collected in electronic troubleshooting (Rasmussen & Jensen, 1974). He proposed a distinction between two broad kinds of strategies: topographic and symptomatic search.

(a) Topographic search relies upon a decomposition of the system based on the topography of its physical components. Search is thus supported by topological RPSs and follows the various kinds of flow passing through the system, which is considered as a network of mass, energy, or information flows. System outputs are identified as deficient with respect to available knowledge of normal operation, and search then attempts to identify, on the same basis (comparison between normal output and actual output), the individual component that has to be changed. At times, this kind of search can be supported by a functional search based on functional RPSs. In this case, knowledge of functions can orient operators toward a function which is not properly performed, and knowledge of the relationships between this function and components can then point to the components involved.

(b) Symptomatic search relies on knowledge of relationships between symptoms and causes or syndromes. It can take one of the three following forms.

(i) Pattern matching

Pattern matching has been described extensively in the literature, recently within a schema approach (Amalberti & Deblon, 1992; Govindaraj & Su, 1988; Hoc, 1989b). Environmental cues (the current situation) are matched to a well-known schema, which simultaneously produces a diagnosis and an action decision. This mechanism is considered to involve two basic cognitive components: similarity matching (which retains the best-fitting schemata) and frequency gambling (which selects the most frequently encountered schema when there is competition, especially in the case of underspecification).

These two primitives were introduced by Reason (1990), but some extension is necessary to apply them to DES. Selection among competing schemata does not depend only on frequency. Other factors play a role, all the more so in situations where breakdowns, faults, or malfunctions are infrequent. Among them, representativeness and availability are perhaps the best known (Kahneman & Tversky, 1972); both are closely related to typicality (Rosch, 1975). The degree of belief that an exemplar belongs to a class is related not only to the frequency of the exemplar, but also to the richness of the match between the specific properties of the exemplar and the general properties of the class. For instance, a bird that does not fly (such as an ostrich) is hard to accept as a member of the class of birds.
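Reason's two primitives, and the extension argued for above, can be sketched as follows. The schema names, cue sets, and encounter counts are hypothetical, and counting shared cues is a deliberately crude similarity measure. The `typicality` tie-breaker is only one possible way of expressing "richness of matching"; it is not a model proposed in the works cited.

```python
from typing import Callable, Dict, Set, Tuple

# Hypothetical schema base: name -> (characteristic cues, number of past encounters).
Schemata = Dict[str, Tuple[Set[str], int]]


def select_schema(cues: Set[str], schemata: Schemata,
                  tie_breaker: Callable[[str], float]) -> str:
    """Similarity matching, then resolution of competition among the best matches."""
    # Similarity matching: retain the schemata sharing the most cues with the situation.
    overlap = {name: len(cues & features) for name, (features, _) in schemata.items()}
    best = max(overlap.values())
    candidates = [name for name, n in overlap.items() if n == best]
    # Competition among equally good matches is settled by the tie-breaker.
    return max(candidates, key=tie_breaker)


def frequency_gambling(schemata: Schemata) -> Callable[[str], float]:
    """Prefer the schema encountered most often."""
    return lambda name: schemata[name][1]


def typicality(cues: Set[str], schemata: Schemata) -> Callable[[str], float]:
    """Prefer the schema whose characteristic cues are most fully matched."""
    return lambda name: len(cues & schemata[name][0]) / len(schemata[name][0])


schemata = {
    "pump cavitation": ({"low flow", "pressure drop", "noise", "vibration"}, 12),
    "blocked filter": ({"low flow", "pressure drop"}, 3),
}
observed = {"low flow", "pressure drop"}  # underspecified: both schemata match two cues
print(select_schema(observed, schemata, frequency_gambling(schemata)))
print(select_schema(observed, schemata, typicality(observed, schemata)))
```

With the same underspecified cues, frequency gambling selects the frequent "pump cavitation" schema, whereas the typicality criterion selects the rarer "blocked filter" schema, whose characteristic cues are all present.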

(ii) Search in a decision table linking symptoms to causes

This form includes probabilistic search, which relies on knowledge of the relationships between malfunctions and their temporal precursors. Bayes’ theorem makes it possible to represent the conditional probabilities of various malfunctions, given certain prior system states. Calculating these values is an important way in which the entire history of the system, and of others like it, can aid the supervisory task through decision support. Much probabilistic search is a process of screening an asymptomatic system for signs of impending malfunction. In this kind of search, it is crucial to collect and interpret system data in a way that achieves reliable negative results, that is, to perform tests whose statistical properties include a high probability that there will be no malfunction if the prior system state does not indicate one. Where a malfunction is indicated, decision support should continue to revise the prediction as the situation develops, but not necessarily on the basis of the same kinds of data: once a specific malfunction is suspected, search must focus on that particular eventuality. This requires a strategy that achieves reliable positive results, that is, one that performs tests whose statistical properties include a high probability that there will be a malfunction if the system state indicates one.

However, the Bayesian nature of expert strategies is controversial, since these strategies are subject to the same biases as other kinds of probabilistic reasoning. Nevertheless, in the diagnostic context, Rigney (1969) showed that a Bayesian model could account for the data if applied to the actual problem space used by the operators, which is very often different from the frameworks used for theoretical analyses.
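The distinction between reliable negative and reliable positive results can be worked through with Bayes' theorem. The sketch assumes that each indicator is characterized by a sensitivity and a specificity, and that successive indicators are conditionally independent when the prediction is revised. All numbers are hypothetical.

```python
def posterior(prior: float, sensitivity: float, specificity: float, positive: bool) -> float:
    """P(malfunction | indicator result), by Bayes' theorem.

    sensitivity = P(indicator positive | malfunction)
    specificity = P(indicator negative | no malfunction)
    """
    if positive:
        hit = sensitivity * prior
        false_alarm = (1.0 - specificity) * (1.0 - prior)
        return hit / (hit + false_alarm)
    miss = (1.0 - sensitivity) * prior
    correct_rejection = specificity * (1.0 - prior)
    return miss / (miss + correct_rejection)


def revise(prior: float, results) -> float:
    """Sequential revision; assumes indicators are conditionally independent."""
    p = prior
    for sensitivity, specificity, positive in results:
        p = posterior(p, sensitivity, specificity, positive)
    return p


# Hypothetical screening indicator for a rare malfunction.
prior, sens, spec = 0.01, 0.90, 0.95
ppv = posterior(prior, sens, spec, positive=True)         # reliability of a positive result
npv = 1.0 - posterior(prior, sens, spec, positive=False)  # reliability of a negative result
print(f"PPV = {ppv:.3f}, NPV = {npv:.4f}")

# Once a malfunction is suspected, revise with a more specific (hypothetical) test.
print(round(revise(prior, [(0.90, 0.95, True), (0.80, 0.99, True)]), 3))
```

With these values the screening indicator gives a very reliable negative result (about 0.999) but an unreliable positive one (about 0.15). Adding a second, more specific test raises the probability of malfunction to about 0.94, which mirrors the switch of strategy described in the text.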

(iii) Hypothesis generation and test strategies

Great attention has been paid to hypothesis generation and test strategies in more novel situations. Research on medical diagnosis has provided rich empirical evidence about these strategies (Elstein et al., 1978; Groen & Patel, 1988; Johnson et al., 1981; Kuipers & Kassirer, 1984; Patel & Groen, 1986), as has research on blast furnace supervision (Hoc, 1989b). Two findings deserve emphasis: (a) confirmation biases, which orient operators toward positive information (confirming current hypotheses) rather than negative information (disconfirming them); (b) the use of hypotheses formulated at different levels (from general to specific). Data on the use of hypothetico-deductive strategies are more controversial. Some support the hypothesis that this strategy is used more widely by beginners than by experts (Groen & Patel, 1988), whereas others show no difference (Elstein et al., 1978) or identify the conditions under which this strategy is implemented, namely situations that require formulating a hypothesis to guide information gathering (Hoc, 1991).

The terminology adopted by Rasmussen for these strategies (topographic and symptomatic) reflects the troubleshooting data on which it was originally based. If we wish to extend this framework to other kinds of situations (DES, computer program debugging, or medical diagnosis), we need a more psychological interpretation of the phenomena. Clearly, the main feature of topographic search is that it is guided by knowledge of the normal operation of the system, whereas symptomatic search relies on knowledge of abnormal operation (failure modes). The underlying knowledge is thus very different, but it is quite unreasonable to imagine expert operators relying on only one type of knowledge. Rasmussen and Jensen’s original study, together with many studies conducted in training settings (especially Duncan, 1985, 1987a, 1987b), shows that topographic search is a novice strategy that enables a fault to be diagnosed with minimal knowledge of the system.
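That topographic search needs only knowledge of normal operation can be made concrete with a small sketch: no failure modes are consulted, only whether each output matches what normal operation predicts. The flow network, the component names, and the reduction of each observation to a normal/abnormal judgement are hypothetical simplifications; real search is incremental and shaped by the cost of each check.

```python
from typing import Dict, List


def suspect_components(feeds: Dict[str, List[str]], output_normal: Dict[str, bool]) -> List[str]:
    """Flag components whose output deviates from normal operation although
    everything flowing into them is normal.

    feeds: for each component, the components whose outputs flow into it.
    output_normal: for each component, whether its measured output matches the
                   output expected from knowledge of normal operation.
    """
    return sorted(
        comp for comp, normal in output_normal.items()
        if not normal and all(output_normal[src] for src in feeds.get(comp, []))
    )


# Hypothetical flow chain: feed pump -> preheater -> reactor -> separator.
feeds = {"preheater": ["feed pump"], "reactor": ["preheater"], "separator": ["reactor"]}
output_normal = {"feed pump": True, "preheater": False, "reactor": False, "separator": False}
print(suspect_components(feeds, output_normal))
```

Here the abnormality propagates downstream, but only the preheater has normal inputs and an abnormal output, so it is the component the search would retain.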

Rasmussen’s two strategies relate primarily to the task of fault localization. In the supervision of dynamic environments, where prognosis is as important as state diagnosis, it is necessary to consider other strategies. Topographic and symptomatic search both depend on the system having been degraded sufficiently to generate diagnosable symptoms. However, early in the development of a malfunction, the causal chain linking failed component with symptom will not yet have established itself. Any pre-emptive action must therefore be based on probabilistic search.

TOWARD AN EXTENDED MODEL OF DIAGNOSIS AND DECISION-MAKING IN DYNAMIC SITUATIONS

For many years, models of diagnosis were dominated by a strong assumption that human activity is serial (vs. parallel) and rigid (an invariant succession of phases). In addition, the human operator has long been considered as reacting to unexpected events rather than as proactive, anticipating events. The contribution of information theory and of the psychological models derived from it (in particular, the metaphor of the brain as a computer and the idea of a limited-capacity channel) permeated the view of activity as a chain running from information gathering and analysis through diagnosis and decision-making to action.

In recent years cognitive psychology, although often critical of the simplistic models of the 1950s, has continued to adhere to the notion of serial work decomposable into goals and subgoals (Newell & Simon, 1972; Rumelhart, 1975; Schank & Abelson, 1977). On the level of diagnosis, this sequentiality is clearly illustrated in the Klahr and Dunbar (1988) model mentioned earlier: search for facts/hypothesis generation/hypothesis testing for diagnosis, which is clearly distinct from decision-making and action.

Two major changes have taken place in research over the last ten years, which have altered this sequential picture of activity.

First, there is a more molar vision of human activity, with a prevalence of performance models rather than skill models (Hollnagel, 1993; Woods & Roth, 1988), and of dynamic rather than static models. This change, which can be attributed to the influence of ergonomic psychology and has been supported by a more ecological orientation of investigative methods (a shift from the laboratory to the field), has revealed numerous interactions between phases of activity that had previously been viewed as independent and successive. For example, the Rasmussen model presented above sparked debate about the effects of backward loops from decision-making to diagnostic activities. Interactions appear at many other levels: between the action repertoire and the choice of hypotheses (blast furnaces, aircraft piloting, medicine), and between the pre-selection of hypotheses (before an incident), fact recovery, and hypothesis formulation (risky process control such as airplane piloting). In all cases, the dynamic nature of the process introduces specific constraints on activity and resource management.

The second change involves a more polymorphous vision of diagnosis, prompted by the range of applications of the concept. The points in common between diagnosis in process control, breakdown diagnosis, medical expertise, inquiry board diagnosis, or a diagnostic model embedded in an AI machine have turned out to be few: actors’ social roles are not the same and the stakes are different. Some (process control operators) are oriented toward the future and toward explanations that lead to action; others (inquiry boards, medical expertise) are oriented toward the past, seeking a causal diagnosis based on the identification of the historical causal chain. Breakdown diagnosis, and doubtless diagnostic models installed in machines, lie midway between these two extremes.

It is beyond the scope of this chapter to describe these different domains, but it is obvious that diagnostic models differ radically as a function of activity. It is worth pointing out that this plurality (and perhaps ambiguity) of domains associated with the concept of diagnosis frequently leads psychological studies to overgeneralize erroneously from one particular type of diagnosis to another. This is the case, for example, when an operator is given the role of a superexpert evaluating a protocol produced by other operators (a change of role from actor to judge). It is also the case when an operator is restricted to diagnosis without action (paper-and-pencil work), which deprives the researcher of interaction effects and modifies the stakes of the task.

The diagnosis and decision-making model presented in the remainder of this chapter is restricted to cases where the operator executes work whose main objective is not the diagnosis of problems (for example process control, breakdown and repair, etc.). These activities contrast with situations where the actual nature of the work is causal diagnosis itself, as is the case in medical or technical superexpertise, inquiry boards, and so on.

The findings presented in earlier sections of this chapter show that diagnosis in these conditions reveals itself to be an activity which is neither (i) independent of and separable from other activities (the diagnosis is part of a goal-oriented activity and is not the prime goal of the activity itself), nor (ii) strictly contemporaneous with the problem (diagnosis can start before the problem occurs or, on the contrary, be postponed for a variety of reasons, such as different current priorities or the expectation that supplementary information will soon become available).

These evolutions in the concept of diagnosis in goal-oriented activities can be described by three additional dimensions:

(a) The Parallelism/Seriality Dimension of activity covers the simultaneous management of a diagnostic loop and a process control loop (in particular in dynamic situations where the system continues to evolve). These two loops are not necessarily synchronous: process control actions may continue with some degree of cognitive autonomy while a diagnosis activity, with information search at the conceptual or symbolic level, takes place in parallel on features of the specific situation. This dimension is governed by the representation of available resources and by cost/efficiency trade-offs at each point in time; regulation evolves constantly, but the control loop has priority over the diagnosis loop. This dimension can lead to shortcuts in diagnostic activities. In all cases, the priority given to control results in changes in the situation, and at times in its return to normal without causal diagnosis. These dynamic evolutions interfere considerably with the set of givens and hypotheses.

(b) The Autonomy/Dependence Dimension concerns the outcome of diagnosis, and in particular its implications in terms of actions. This dimension reflects the feedback of actions onto the level of diagnosis and the type of hypothesis entertained. On the one hand, diagnosis can take place with no consideration of future actions (prospective diagnosis in the strict sense). On the other hand, the situation and the operator’s know-how can considerably reduce action possibilities (the link with the action repertoire). In this case, the fact space is filtered and viewed through the knowledge of these possible actions, which exists prior to the knowledge of the incidents. Diagnosis then consists of restricting the hypotheses to those compatible with the available actions (operationalization of diagnosis). For example, in the final approach below 150 meters in a transport plane, the only choices are between applying go-around thrust and landing. If the pilot receives a warning, the target level of diagnosis, the fact search, and the working hypotheses will all be oriented toward the possibility of a go-around. This dimension is governed by the representation of the (action) plan and its flexibility.

(c) The Diagnosis at the Time of the Event/Deferred Diagnosis Dimension. Diagnosis was long viewed as a reactive activity, contemporaneous with the onset of problems. Studies on rapid process control (Amalberti & Deblon, 1992) show that diagnosis can also be anticipatory: operators simulate types of events in advance, imagine diagnostic problems, and prepare response plans. In this case, the problem is shifted, and the activity consists of keeping the process in line with expectations so that the preplanned diagnoses and responses remain valid. Conversely, diagnosis can be deliberately postponed, either to give priority to control in risky phases or because the temporal evolution of the situation may itself provide the key to diagnosis, thus saving resources compared with the cost of an immediate diagnosis (for example, diagnosis of a breakdown in a plant: Decortis, 1992; diagnosis of bus traffic incidents: Cellier, 1987; diagnosis of pathological pregnancies: Sébillotte, 1982; or relationships between diagnosis and action in rapid subway system control: Sénach, 1982).
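The priority of the control loop over the diagnosis loop under (a), and the resulting postponement of diagnosis under (c), can be caricatured with a deliberately crude resource-sharing sketch. A single scalar resource, a fixed capacity per step, and strict priority for control are assumptions made for illustration only; they are not claims about how operators actually allocate attention.

```python
from typing import Optional, Sequence


def diagnosis_completion_step(capacity: float, control_demand: Sequence[float],
                              diagnosis_cost: float) -> Optional[int]:
    """Step at which a diagnosis loop finishes when the control loop is served first.

    capacity: resources available at each step (arbitrary units).
    control_demand: resources claimed by process control at each step.
    diagnosis_cost: total resources the diagnosis needs.
    Returns None if the diagnosis does not complete within the horizon.
    """
    remaining = diagnosis_cost
    for step, demand in enumerate(control_demand):
        spare = max(0.0, capacity - demand)  # control has priority
        remaining -= spare                   # diagnosis only gets what is left
        if remaining <= 0:
            return step
    return None


# Hypothetical profile: a demanding control phase, then a calmer one.
demand = [9, 9, 9, 2, 2, 2]
print(diagnosis_completion_step(10, demand, diagnosis_cost=15))
```

In this profile the diagnosis barely progresses during the first three demanding steps and completes only once control becomes less demanding; with a control demand that never drops, it never completes, which corresponds to the case where the situation is handled without a causal diagnosis.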

The dimensions listed above are compatible with a diagnostic model (Figure 2) based on a system of symbolic representations that the operator builds for the current circumstances, with three facets: (i) a representation of the process and its goals (which enables anticipation), (ii) a representation of possible actions (which orients the diagnosis toward a level of understanding and a decision adapted to the available options, and avoids unnecessary loss of resources), and (iii) a representation of the available resources (which enables the regulation of cognitive activities and a compromise on the desired level of understanding).
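The role of the second facet, orienting diagnosis toward the available options, can be sketched as a filter that keeps only the hypotheses some currently available action could address (the operationalization of diagnosis described under (b)). The hypothesis names, action names, and the mapping between them are hypothetical and are not drawn from any operating procedure.

```python
from typing import Dict, List, Set


def operationalize(hypotheses: List[str], available_actions: Set[str],
                   remedies: Dict[str, Set[str]]) -> List[str]:
    """Keep only the hypotheses that at least one available action could address."""
    return [h for h in hypotheses if remedies.get(h, set()) & available_actions]


# Hypothetical final-approach case: only two actions remain possible.
available = {"go around", "land"}
remedies = {
    "windshear": {"go around"},
    "runway obstruction": {"go around"},
    "fuel imbalance": {"crossfeed fuel"},
}
print(operationalize(["windshear", "runway obstruction", "fuel imbalance"], available, remedies))
```

Hypotheses with no remedy in the current repertoire are simply not pursued, which is one way of reading the claim that this facet avoids unnecessary loss of resources.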

The core of this extended model of diagnostic activities lies in risk management and in the level of understanding of the situation.

Because their resources are limited, operators need to strike a balance between two conflicting risks: an objective risk resulting from context (risk of accident) and a cognitive risk resulting from personal resource management (risk of overload and deterioration of mental performance).

To keep resource management feasible, operators accept higher external risks: they simplify situations, deal with only a few hypotheses, and schematize reality. To keep the external risk within acceptable limits, they adjust reality to fit the simplifications set up during job preparation as closely as possible. This fit between simplification and reality is the outcome of anticipation.
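The compromise can be caricatured as follows, assuming, purely for illustration, that candidate hypotheses carry probabilities, that cognitive load is simply the number of hypotheses entertained, and that external risk is the probability mass of the hypotheses left aside. None of these quantities comes from the chapter, and the hypothesis labels are hypothetical.

```python
from typing import Dict, List, Optional


def working_hypotheses(probabilities: Dict[str, float], max_load: int,
                       acceptable_risk: float) -> Optional[List[str]]:
    """Smallest set of most probable hypotheses whose left-out probability mass
    (a stand-in for external risk) is acceptable without exceeding max_load
    (a stand-in for cognitive capacity). None means no such compromise exists."""
    ranked = sorted(probabilities, key=probabilities.get, reverse=True)
    total = sum(probabilities.values())
    covered = 0.0
    for n in range(max_load + 1):
        if total - covered <= acceptable_risk:
            return ranked[:n]
        if n < len(ranked):
            covered += probabilities[ranked[n]]
    return None


hypotheses = {"H1": 0.6, "H2": 0.25, "H3": 0.1, "H4": 0.05}
print(working_hypotheses(hypotheses, max_load=2, acceptable_risk=0.2))
print(working_hypotheses(hypotheses, max_load=2, acceptable_risk=0.1))
```

The `None` case corresponds to the second strategy in the text: when no set of hypotheses is both manageable and safe, the operator has to act on reality, through anticipation, so that it fits the simplification.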

FIGURE 2. Extended model of Diagnosis and Decision-Making (after Hoc & Amalberti, in press)

Activity is organized and coordinated by a symbolic representation system of the current situation. Rasmussen’s ladder has been incorporated (but reversed to symbolize the rising cost in resources when there is a need to revise the representation). The conception of a one-level diagnosis has been abandoned and replaced by several (possibly parallel) diagnosis loops characterized by different purposes and time perspectives as regards process evolution.

However, because anticipation is mentally costly, the operator’s final hurdle is to share resources between short-term behavior and long-term anticipation. The tuning between these two activities is accomplished by heuristics which themselves rely on another kind of personal risk-taking (Amalberti, 1992): as soon as the short-term situation is stabilized, operators invest resources in anticipation and leave the short-term situation under the control of automatic behavior.

Any breakdown in this fragile, active equilibrium can result in unprepared situations and a shift to reactive behavior with a high demand on the level of understanding, a typical situation in which operators perform poorly.

REFERENCES

Alengry, P. (1987). The analysis of knowledge representation of nuclear power plant control room operators. In H.J. Bullinger, & B. Shackel (Eds.), Human-computer interaction: INTERACT’87 (pp. 209–214). Amsterdam: North-Holland.

Allen, J.F. (1984). Towards a general theory of action and time. Artificial Intelligence, 23, 123–154.

Amalberti, R. (1992). Safety in risky process-control: An operator centred point of view. Reliability Engineering & System Safety, 38, 99–108.

Amalberti, R., & Deblon, F. (1992). Cognitive modelling of fighter aircraft’s process control: A step towards an intelligent onboard assistance system. International Journal of Man-Machine Studies, 36, 639–671.

Anderson, J.R. (1983). The architecture of cognition. Cambridge, MA: Harvard University Press.

Bainbridge, L. (1984). Diagnostic skill in process operation. Paper presented at the meeting of the International Conference on Occupational Ergonomics. Toronto, Canada.

Bainbridge, L. (1988). Types of representation. In L.P. Goodstein, H.B. Andersen, & S.E. Olsen (Eds.), Tasks, errors, and mental models (pp. 70–91). London: Taylor & Francis.

Baudet, S., & Denhière, G. (1991). Mental models and acquisition of knowledge from text: Representation and acquisition of functional systems. In G. Denhière, & J.P. Rossi (Eds.), Text and text processing (pp. 55–75). Amsterdam: North-Holland.

Bestougeff, H., & Ligozat, G. (1989). Outils logiques pour le traitement du temps [Logical tools for time processing]. Paris: Masson.

Block, R.A. (Ed.). (1990). Cognitive models of psychological time. Hillsdale, NJ: Lawrence Erlbaum Associates.

Boreham, N., Foster, R.W., & Mawer, G.E. (1992). Strategies and knowledge in the control of the symptoms of a chronic illness. Le Travail Humain, 55, 15–34.

Boshuizen, H.P.A., & Schmidt, H.G. (1992). On the role of biomedical knowledge in clinical reasoning by experts, intermediates and novices. Cognitive Science, 16, 153–184.

Brajnik, G., Chittaro, L., Guida, G., Tasso, C., & Toppano, E. (1989, October). The use of many diverse models of an artifact in the design of cognitive aids. Paper presented at the Second European Conference on Cognitive Science Approaches to Process Control, Siena, Italy.

Brooke, J.B., & Duncan, K.D. (1983). A comparison of hierarchically paged and scrolling displays for fault finding. Ergonomics, 26, 465–477.

Cellier, J.M. (1987). Study of some determining elements of control orientation in a bus traffic regulation. Ergonomics International (pp. 766–768). London: Taylor & Francis.

Crossman, E.R.F.W. (1965). The use of signal flow graphs for dynamics analysis of man-machine systems. Oxford: Oxford University, Institute of Experimental Psychology.

Cuny, X. (1979). Different levels of analysing process control tasks. Ergonomics, 22, 415–425.

Decortis, F. (1988). Dimension temporelle de l’activité cognitive lors des démarrages de systèmes complexes [Temporal dimension of the cognitive activity during complex system start-up]. Le Travail Humain, 51, 125–138.

Decortis, F. (1992). Processus cognitifs de résolution d’incidents spécifiés et peu spécifiés en relation avec un modèle théorique [Cognitive processes solving specified and underspecified malfunctions in relation to a theoretical model]. Unpublished doctoral dissertation, University of Liège, Liège, Belgium.

Duncan, K.D. (1985). Representation of fault-finding problems and development of fault-finding strategies. PLET, 22, 125–131.

Duncan, K.D. (1987a). Fault diagnosis training for advanced continuous process installation. In J. Rasmussen, K.D. Duncan, & J. Leplat (Eds.), New technology and human error (pp. 209–221). Chichester: Wiley.

Duncan, K.D. (1987b). Reflections on fault diagnostic expertise. In J. Rasmussen, K.D. Duncan, & J. Leplat (Eds.), New technology and human error (pp. 261–269). Chichester: Wiley.

Elstein, A.S., Shulman, L.S., & Sprafka, S.A. (1978). Medical problem solving. Cambridge, MA: Harvard University Press.

Goodstein, L.P. (1983). An integrated display set for process operators. In G. Johannsen, & J.E. Rijnsdorp (Eds.), IFAC (pp. 63–70). Oxford: Pergamon.

Goodstein, L.P., & Rasmussen, J. (1988). Representation of process state, structure and control. Le Travail Humain, 51, 19–37.

Govindaraj, T., & Su, Y.L. (1988). A model of fault diagnosis performance of expert marine engineers. International Journal of Man-Machine Studies, 29, 1–20.

Groen, G.J., & Patel, V.L. (1988). The relationship between comprehension and reasoning in medical expertise. In M.T.H. Chi, R. Glaser, & M.J. Farr (Eds.), The nature of expertise (pp. 287–310). Hillsdale, NJ: Lawrence Erlbaum Associates.

Guillermain, H. (1988, October). Aide logicielle au diagnostic sur processus robotisé et évolution possible vers une extraction automatique des connaissances de base des experts [Computer support to diagnosing an automated manufacturing process and possible evolution toward an automatic expert basic knowledge elicitation]. Paper presented at ERGOIA’88, Biarritz, France.

Hoc, J.M. (1988). Cognitive psychology of planning. London: Academic Press.

Hoc, J.M. (1989a). La conduite d’un processus à longs délais de réponse: Une activité de diagnostic [Controlling a continuous process with long response latencies: A diagnosis activity]. Le Travail Humain, 52, 289–316.

Hoc, J.M. (1989b). Strategies in controlling a continuous process with long response latencies: Needs for computer support to diagnosis. International Journal of Man-Machine Studies, 30, 47–67.

Hoc, J.M. (1991). Effet de l’expertise des opérateurs et de la complexité de la situation dans la conduite d’un processus continu à longs délais de réponse: Le haut fourneau [Effects of operator expertise and task complexity upon the supervision of a continuous process with long time lags: A blast furnace]. Le Travail Humain, 54, 225–249.

Hoc, J.M. (in press). Operator expertise and verbal reports of temporal data: Supervision of a long time lag process (blast furnace). Ergonomics.

Hoc, J.M., & Amalberti, R. (in press). Diagnosis: Some theoretical questions raised by applied research. Current Psychology of Cognition.

Hoc, J.M., & Samurçay, R. (1992). An ergonomic approach to knowledge representation. Reliability Engineering and System Safety, 36, 217–230.

Hollnagel, E. (1993). Models of cognition: Procedural prototypes and contextual control. Le Travail Humain, 56, 27–51.

Iosif, G.H. (1968). La stratégie dans la surveillance des tableaux de commande. I. Quelques facteurs déterminants de caractère objectif [The control panel monitoring strategy. I. Some objective determining factors]. Revue Roumaine de Sciences Sociales, 12, 147–163.

Johnson, P.E., Duran, A.S., Hassebrock, F., Moller, J., Prietula, M., Feltovich, P.J., & Swanson, D.B. (1981). Expertise and error in diagnostic reasoning. Cognitive Science, 5, 235–283.

Kahneman, D., & Tversky, A. (1972). Subjective probability: A judgment of representativeness. Cognitive Psychology, 3, 430–454.

Klahr, D., & Dunbar, K. (1988). Dual space search during scientific reasoning. Cognitive Science, 12, 1–48.

Kuipers, B., & Kassirer, J.P. (1984). Causal reasoning in medicine: Analysis of a protocol. Cognitive Science, 8, 363–385.

Lind, M. (1991, September). On the modelling of diagnostic tasks. Paper presented at the Third European Conference on Cognitive Science Approaches to Process Control, Cardiff, U.K.

Lind, M., Osman, A., Agger, S., & Jensen, H. (1989, October). Human-machine interface for diagnosis based on multilevel flow modelling. Paper presented at the Second European Conference on Cognitive Science Approaches to Process Control, Siena, Italy.

Macar, F., Pouthas, V., & Friedman, W.J. (Eds.). (1992). Time, action, and cognition. Dordrecht: Kluwer.

Mariné, C., Cellier, J.M., & Valax, M.F. (1988). Dimensions de l’expertise dans une tâche de régulation de trafic: Règles de traitement et profondeur du champ spatio-temporel [Dimensions of expertise in a traffic control task: Processing rules and spatiotemporal field deepness]. Psychologie Française, 33, 151–160.

Michon, J.A. (1990). Implicit and explicit representation of time. In R.A. Block (Ed.), Cognitive models of psychological time (pp. 37–58). Hillsdale, NJ: Lawrence Erlbaum Associates.

Morrison, D.L., & Duncan, K.D. (1988). The effect of scrolling, hierarchically paged displays and ability on fault diagnosis performance. Ergonomics, 31, 889–904.

Newell, A., & Simon, H.A. (1972). Human problem solving. Englewood Cliffs, NJ: Prentice-Hall.

Patel, V.L., & Groen, G.J. (1986). Knowledge-based solution strategies in medical reasoning. Cognitive Science, 10, 91–116.

Patrick, J. (1993). Cognitive aspects of fault-finding training and transfer. Le Travail Humain, 56, 187–209.

Rasmussen, J. (1981). Models of mental strategies in process plant diagnosis. In J. Rasmussen & W.B. Rouse (Eds.), Human detection and diagnosis of system failures (pp. 241–258). New York: Plenum.

Rasmussen, J. (1984). Strategies for state identification and diagnosis in supervisory control tasks, and design of computer-based support systems. Advances in Man-Machine Systems Research, 1, 139–193.

Rasmussen, J. (1986). Information processing and human-machine interaction. Amsterdam: North-Holland.

Rasmussen, J., & Jensen, A. (1974). Mental procedures in real life tasks: A case study of electronic troubleshooting. Ergonomics, 17, 293–307.

Rasmussen, J., & Rouse, W.B. (Eds.). (1981). Human detection and diagnosis of system failures. New York: Plenum.

Reason, J. (1990). Human error. Cambridge: Cambridge University Press.

Richard, J.F., Bonnet, C., & Ghiglione, R. (Eds.). (1990). Traité de psychologie cognitive [Handbook of cognitive psychology]. Paris: Dunod.

Richelle, M., & De Keyser, V. (1992). The nature of human expertise (Report No. RFO/AI/18). University of Liège, Liège, Belgium.

Rigney, J.W. (1969). Simulation of corrective maintenance behavior. Proceedings of the NATO Symposium “Simulation of human behavior” (pp. 419–428). Paris: Dunod.

Rosch, E. (1975). Cognitive representations of semantic categories. Journal of Experimental Psychology: General, 104, 192–233.

Rumelhart, D. (1975). Notes on a schema for stories. In D. Bobrow & A. Collins (Eds.), Representation and understanding: Studies in cognitive science (pp. 211–236). New York: Academic Press.

Samurçay, R., & Rogalski, J. (1988). Analysis of operator’s cognitive activities in learning and using a method for decision making in public safety. In J. Patrick & K.D. Duncan (Eds.), Human decision-making and control (pp. 133–152). Amsterdam: North-Holland.

Schank, R.C., & Abelson, R. (1977). Scripts, plans, goals, and understanding. Hillsdale, NJ: Lawrence Erlbaum Associates.

Sébillotte, S. (1982). Les processus de diagnostic au cours du déroulement de la grossesse [The diagnosis process during pregnancy development]. Unpublished doctoral dissertation, University of Paris V, Paris, France.

Sénach, B. (1982). Aide à la résolution de problèmes par la présentation graphique des données [Supporting problem-solving by data graphical display] (Tech. Rep. No. CRER 8202R06). Rocquencourt, France: INRIA.

Simon, H.A., & Lea, G. (1974). Problem-solving and rule induction: A unified view. In L.W. Gregg (Ed.), Knowledge and cognition (pp. 105–128). Potomac, MD: Lawrence Erlbaum Associates.

Umbers, I.G. (1979). A study of the control skills of gas grid control engineers. Ergonomics, 22, 557–571.

Woods, D., & Roth, E. (1988). Aiding human performance (II): From cognitive analysis to support systems. Le Travail Humain, 51, 139–159.