13

Man-Machine Cooperative Organizations: Formal and Pragmatic Implementation Methods

CNRS - University of Valenciennes

The present increase in the decision-making capabilities of Artificial Intelligence (AI) tools makes the problem of cooperation between humans and Intelligent Support Systems increasingly pressing in high-level decision tasks for process supervision and control. This chapter presents some elements for modeling and implementing cooperative organizations involving heterogeneous decision-makers, both human and artificial. Distributed Artificial Intelligence (DAI) approaches are first analyzed and illustrated through an example from the air traffic control (ATC) domain. Human Engineering and Ergonomics approaches are then described and analyzed in the same application domain. The two approaches are compared from a conceptual point of view and also against pragmatic criteria concerning their implementation in concrete complex application domains such as ATC.

INTRODUCTION

The present increase in the decision-making capabilities of Artificial Intelligence (AI) tools makes the problem of cooperation between humans and Intelligent Support Systems more crucial. This new kind of cooperative work on higher-level decision-making tasks calls for reflection on how to model the organization of a heterogeneous team of decision-makers. For instance, the integration of decision support systems into the control room of a complex process creates a new situation in which human supervisors, who already cooperate among themselves, also have to cooperate with the support system. This situation should result in cross-cooperation between men and machines (Bellorini et al., 1991). But this objective does not seem easy to reach, not only for technical reasons linked with the present limitations of AI systems, or for human reasons due, for instance, to operators' level of knowledge about the process and about the AI system, but also for psychological reasons due, for instance, to operators' lack of trust in the AI system (Moray, Hiskes, Lee, & Muir, chapter 11, this volume).

A new line of research therefore seems to be emerging for modeling these cooperative organizations. This chapter presents some elements derived, on the one hand, from Distributed Artificial Intelligence (DAI) approaches and, on the other, from on-site human engineering approaches to man-machine cooperation. The first part discusses some characteristics of distributed decision making and illustrates them through the example of Air Traffic Control (ATC). The second part presents the solutions proposed by DAI approaches. The last part deals with a more pragmatic solution in the ATC domain.

DISTRIBUTED DECISION-MAKING

The problem of distributed decision making has been investigated by the DAI community since the early eighties, with a view to modeling and implementing multi-agent organizations. An agent is an autonomous artificial decision-maker (for instance, an expert system) having its own source of knowledge, its own control mechanism (for instance, an inference engine), and the capability to communicate with the other agents of the organization. The proposed organization models are often derived from human society and can therefore be transposed to heterogeneous organizations involving AI agents as well as human agents.

The cooperative process of decision making in a group of agents involves the interaction between multiple goals, each with a different scope and nature, as well as different heuristics. In cooperative work, decision making is distributed by decomposing the problem according to its nature. For instance, two forms of cooperation can be distinguished, corresponding to two different hierarchical organizations:

- In the first organization, the decision-makers are at the same level, and the tasks, as well as the actions resulting from them, can be distributed among them. This form of cooperation simply augments the information processing capacities of individual agents and thus enables a group to perform a task that none of them could have performed individually within an acceptable time. In this case, the agents have equivalent abilities. The principal problem, however, is to find a method for allocating tasks among the participants so as to coordinate the problem solving.

- In the second organization, one decision-maker is responsible for all the tasks and can call upon other agents when necessary. This decision-maker integrates the contributions of multiple agents, each devoted to a different specialized task. In this case, the abilities are different but complementary. However, this organization requires great adaptability to ensure consistency between the cognitive levels of the agents.

Let us consider the example of Air Traffic Control (ATC), in which both kinds of organization can be proposed. The objectives of ATC are to monitor and control the traffic in such a way that aircraft cross the air space with the maximum level of safety.

In the present real organization, the ATC Centers monitor each aircraft and detect the risks of conflict in advance (i.e., situations in which two or more planes transgress the separation norms, which could result in a collision). When such a risk is detected, the ATC controllers order one of the pilots involved in the conflict to modify the trajectory of his plane. This organization corresponds to the second one presented earlier, in which the ATC controller is at the higher level of the hierarchy and the planes are the specialized agents.
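Preventive detection amounts to predicting whether two aircraft will come closer than the separation norms. The following is a minimal sketch under stated assumptions: straight-line, constant-speed extrapolation in level flight; illustrative norms of 5 NM horizontally and 1,000 ft vertically; and hypothetical names (`Aircraft`, `predict_conflict`). It is not the algorithm used by the ATC Centers.

```python
import math
from dataclasses import dataclass
from typing import Optional

# Illustrative separation norms (assumed values, not taken from the chapter).
HORIZONTAL_NORM_NM = 5.0
VERTICAL_NORM_FT = 1000.0

@dataclass
class Aircraft:
    """Hypothetical state: position in NM, altitude in ft, speed in NM/min."""
    callsign: str
    x: float
    y: float
    alt: float
    vx: float
    vy: float

def predict_conflict(a: Aircraft, b: Aircraft,
                     horizon_min: float = 10.0,
                     step_min: float = 0.5) -> Optional[float]:
    """First time (in minutes) at which a and b are closer than the horizontal
    norm and the vertical norm at the same time; None if no conflict is
    predicted within the look-ahead horizon."""
    t = 0.0
    while t <= horizon_min:
        dx = (a.x + a.vx * t) - (b.x + b.vx * t)
        dy = (a.y + a.vy * t) - (b.y + b.vy * t)
        if (math.hypot(dx, dy) < HORIZONTAL_NORM_NM
                and abs(a.alt - b.alt) < VERTICAL_NORM_FT):
            return t
        t += step_min
    return None

# Two aircraft flying head-on at the same level, 40 NM apart, 8 NM/min each.
a1 = Aircraft("A1", x=0.0, y=0.0, alt=33000.0, vx=8.0, vy=0.0)
a2 = Aircraft("A2", x=40.0, y=0.0, alt=33000.0, vx=-8.0, vy=0.0)
print(predict_conflict(a1, a2))  # 2.5
```

A detected time would then prompt the controller to order one of the two pilots to change level, heading, or speed.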

In a more futuristic view of the ATC organization, some researchers have proposed a DAI approach in which each plane would be fitted with radar detection facilities, means of communicating with the other planes, and conflict-solving support systems. In this organization, centralized ATC centers would no longer be needed, and the problem would be seen as one of distributed decision-making between the planes (agents) involved in the conflict. This corresponds to the first organization described earlier, in which the different agents must cooperate and coordinate among themselves to solve the problem.

This example shows the difficulties to be dealt with; in particular, work conducted collectively and directly may require the interaction of agents with multiple goals of different scope and nature. First, the agents may prefer different problem-solving strategies or heuristics, so cooperative decision making involves a continuous process of assessing the validity of the information produced by the others. Second, decisions are always generated within a specific conceptual framework, as answers to specific questions. Thus, a system supporting cooperative work involving decision-making should enhance the ability of cooperating agents to interrelate their partial domain knowledge and should facilitate the expression and communication of alternative perspectives on a given problem. Cooperation therefore involves the problem at several levels, which calls for an organizational point of view.

Two kinds of approaches to implementing a cooperative organization, each raising its own problems, can now be considered: the DAI approaches, with the various formal solutions they propose, and a more pragmatic human engineering approach. Both are illustrated with ATC problems.

DISTRIBUTED ARTIFICIAL INTELLIGENCE APPROACHES

In a multi-agent environment, an organizational structure (Corkill, 1983) is the pattern of information and control relationships that exist between the agents, together with the distribution of problem-solving capabilities among them. In cooperative distributed problem solving, for example, a structure gives each agent a high-level view of how the group solves problems and of the role that the agent plays within this structure. In general, the structure must specify roles and relationships that meet certain conditions. For example, to ensure problem coverage, the structure could assign roles to the agents according to their competences and knowledge for a given subproblem. The structure must then also give the agents connectivity information so that they can distribute subproblems to competent agents.

DAI approaches raise and study two kinds of problems to be solved when choosing and implementing the structure of the cooperative organization:

- the nature and modes of communications this structure needs,

- the degrees of cooperation this structure and these communication modes allow.

Furthermore, DAI has another concept, linked with the flexibility of a structure, which allows the organization to evolve dynamically from one structure to another according to the nature of the problem to be solved. These concepts will be illustrated through the example of ATC.

Communication

In DAI, the possible solutions to the communication problem range from those involving no communication to those involving high-level, sophisticated communication.

- No communication: Here the agent rationally infers the other agents' plans without communicating with them. To study this behavior, Genesereth et al. (1986) use a game-theory approach characterized by payoff matrices that contain the agents' payoffs for each possible outcome of an interaction. The agents must therefore rely on sophisticated local reasoning to compensate for the lack of communication when deciding on appropriate actions and interactions.

- Plan Passing: In this approach, an agent A1 communicates its total plan to an agent A2, and A2 communicates its total plan to A1. Whichever plan arrives first is accepted (Rosenschein et al., 1985). While this method can achieve cooperative action, it leads to several problems. Total plan passing is difficult to achieve in real-world applications because there is a great deal of uncertainty about the present state of the world as well as its future. Consequently, in real-life situations total plans cannot be formulated in advance, and general strategies must be communicated to a recipient instead.

- Information Exchanges through a Blackboard: In AI, the blackboard model is most often used as a shared global memory (Nii, 1986) on which agents write messages, post partial results, and find information. It is usually partitioned into levels of abstraction of the problem, and agents working at a particular level of abstraction see the corresponding level of the blackboard as well as the adjacent levels. In this way, data synthesized at any level can be communicated to higher levels, while higher-level goals can be filtered down to drive the expectations of lower-level agents.

- Message Passing: The work of Hewitt and his colleagues (Kornfeld & Hewitt, 1981) on actor languages is an excellent illustration of message passing applied to DAI. In this work, however, the agents have an extremely simple structure; other DAI work has used classical message passing with a protocol and a precise content (Cammarata et al., 1983).

- High-Level Communication: Research on natural language understanding and particularly research on intentions in communication (Cohen et al., 1990) is relevant to DAI research because both research areas must investigate reasoning about multiple agents with distinct and possibly contradictory intentional states such as beliefs and intentions. An agent must interpret messages from other agents, including what the messages imply about the agent’s mental states, and must generate messages to change the mental states of other agents, taking into account its own potential actions and those of others.
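As an illustration of the blackboard mode of communication, here is a minimal sketch assuming integer abstraction levels (0 being the lowest) and reading "adjacent levels" as the levels immediately above and below. The class name `Blackboard` and the ATC entries in the usage lines are hypothetical.

```python
from collections import defaultdict

class Blackboard:
    """Shared global memory partitioned into abstraction levels (0 = lowest)."""

    def __init__(self, n_levels: int):
        self.n_levels = n_levels
        self.entries = defaultdict(list)  # level -> items posted at that level

    def post(self, level: int, item: str) -> None:
        """An agent writes a message or partial result at a given level."""
        if not 0 <= level < self.n_levels:
            raise ValueError(f"no such level: {level}")
        self.entries[level].append(item)

    def view(self, level: int) -> dict:
        """What an agent working at `level` sees: its own level plus the
        adjacent ones, so results can move up and goals can move down."""
        visible = {}
        for lv in (level - 1, level, level + 1):
            if 0 <= lv < self.n_levels:
                visible[lv] = list(self.entries[lv])
        return visible

bb = Blackboard(3)            # 0: radar tracks, 1: conflicts, 2: goals
bb.post(0, "track A1 FL330")
bb.post(2, "keep FL330 clear")
print(bb.view(1))
# {0: ['track A1 FL330'], 1: [], 2: ['keep FL330 clear']}
```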

Degree of Cooperation

The degree of cooperation characterizes the amount of cooperation between agents, which can range from full (i.e., total) cooperation to antagonism. Fully cooperative agents, which are able to solve interdependent problems, often pay a price in high communication costs. These agents may change their goals to suit the needs of other agents in order to ensure cohesion and coordination. Conversely, antagonistic systems may not cooperate at all and may even block each other's goals; the communication cost they require is generally minimal. In the middle of the spectrum lie traditional systems that lack a clearly articulated set of goals. Note that most real systems are cooperative to some small degree.

- hierarchical approach: Total cooperation is assumed in studies of Cooperative Distributed Problem Solving (Durfee, 1989), in which a loosely coupled network of agents works together to solve problems that are beyond their individual capabilities. In this network, each agent is capable of solving a complex problem and can work independently, but the problems faced by the agents cannot be completed without total cooperation. Total cooperation is necessary here because no single agent has sufficient knowledge and resources to solve a problem, and different agents may have expertise for solving different subproblems of the given problem. Generally, agents solve a problem cooperatively 1) by using their local knowledge and resources to solve individual subproblems, and 2) by integrating these subproblem solutions into an overall solution.

- autonomous approach: The fully cooperative-to-antagonistic spectrum has been studied by Genesereth et al. (1986). They note that total cooperation, known as the benevolent agent assumption, is accepted by most DAI researchers but does not always hold in the real world, where agents may have conflicting goals. To study this type of interaction, Genesereth defines agents as entities operating under the constraints of various rationality axioms that restrict their choices in interactions. Each agent considers its own interest rather than that of the group.
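The contrast between benevolent agents and self-interested rational agents, each reasoning locally over a payoff matrix without communicating, can be sketched as follows. The payoff values are a textbook prisoner's-dilemma example rather than data from the chapter, and the decision rules (maximizing the joint payoff; weak dominance with a maximin fallback) are simple stand-ins, not the exact rationality axioms of Genesereth et al. (1986).

```python
# Joint payoff matrix: (my_action, other_action) -> (my_payoff, other_payoff).
# Illustrative prisoner's-dilemma values; the matrix is symmetric.
ACTIONS = ("cooperate", "defect")
PAYOFFS = {
    ("cooperate", "cooperate"): (3, 3),
    ("cooperate", "defect"): (0, 5),
    ("defect", "cooperate"): (5, 0),
    ("defect", "defect"): (1, 1),
}

def benevolent_choice(payoffs):
    """Benevolent agent: aim for the joint outcome that is best for the group
    and play its own part of it."""
    best_joint = max(payoffs, key=lambda joint: sum(payoffs[joint]))
    return best_joint[0]

def self_interested_choice(payoffs, actions):
    """Self-interested agent without communication: play a weakly dominant
    action if one exists, otherwise the action with the best worst case."""
    def mine(a, o):
        return payoffs[(a, o)][0]
    for a in actions:
        if all(mine(a, o) >= mine(b, o) for b in actions for o in actions):
            return a
    return max(actions, key=lambda a: min(mine(a, o) for o in actions))

print(benevolent_choice(PAYOFFS))                # cooperate
print(self_interested_choice(PAYOFFS, ACTIONS))  # defect
```

With this matrix, the same interaction leads a benevolent agent to cooperate and a self-interested one to defect, which is the gap between the two ends of the spectrum.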

Dynamics

Given a distributed problem-solving system, how does one define a control organization for it? In other words, how does one achieve coherent behavior and coordination between the participating agents? This section deals with these two characteristics. In fact, coherent and coordinated behavior dynamically creates a hierarchy: pursuing the goal establishes a master-slave relationship between the agents.

In DAI, the way agents coordinate their distributed resources, which may be physical (such as communication capabilities) or informational (such as information about the problem decomposition), has a direct influence on coordination. Clearly, agents must find an appropriate technique for working together harmoniously.

Generally, researchers in DAI use the negotiation process to coordinate a group of agents. Unfortunately, negotiation, like other human concepts, is difficult to define. Sycara (1989), for example, states that “the negotiation process involves identifying potential interactions either through communication or through reasoning about the current states and intentions of other agents in the system and modifying the intentions of these agents to avoid harmful interactions or create cooperative situations” (p. 120). Durfee (1989) defines negotiation as “the process of improving agreement (reducing inconsistency and uncertainty) on common viewpoints or plans through the structured exchange of relevant information” (p. 64). Although these two descriptions capture many of our intuitions about human negotiation, they are too vague to provide techniques for getting agents to negotiate.

Smith's work on the Contract-Net (Smith, 1980) introduced a simple form of negotiation among cooperating agents through a task-sharing protocol. Specifically, an agent that needs help decomposes a large problem into subproblems, announces the subproblems to the group of agents, collects bids from agents, and awards each subproblem to the most suitable bidder. This protocol is particularly well suited as a negotiation process for dynamically decomposing problems because it is designed to support task allocation. It is also a way of providing dynamic, opportunistic control for coherence and coordination.
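The announce, bid, and award cycle of the Contract-Net can be sketched in a few lines. This is a simplified, single-round, synchronous version under assumed conventions: bids are plain numeric suitability scores, a contractor declines by returning `None`, and a task that receives no bid stays with the manager. Smith's protocol is richer (task announcements carry, among other things, eligibility and bid specifications) and asynchronous; the function and agent names here are hypothetical.

```python
def contract_net(manager, tasks, contractors):
    """One simplified Contract-Net round.
    contractors: name -> bidding function(task) returning a suitability
    score (higher is better) or None to decline."""
    awards = {}
    for task in tasks:
        # 1) Announcement: every agent other than the manager hears the task.
        bids = []
        for name, evaluate in contractors.items():
            if name == manager:
                continue
            score = evaluate(task)           # 2) Bidding
            if score is not None:
                bids.append((score, name))
        # 3) Award to the most suitable bidder; with no bid, the manager keeps it.
        awards[task] = max(bids)[1] if bids else manager
    return awards

contractors = {
    "M": lambda t: 1.0,                                # the manager (skipped)
    "S1": lambda t: 0.9 if t == "detect" else None,
    "S2": lambda t: None if t == "notify" else 0.5,
}
print(contract_net("M", ["detect", "replan", "notify"], contractors))
# {'detect': 'S1', 'replan': 'S2', 'notify': 'M'}
```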

Examples of DAI approaches in Air Traffic Control

The preceding characteristics highlight several difficulties: 1) decomposing the problem to be solved into subproblems, and 2) coordinating the subproblems assigned to agents, especially when they are interconnected. Let us consider the problem of conflict avoidance in air traffic under the multi-agent organization described previously: each aircraft involved in the conflict is an agent, and there is no ground air traffic control center that could constitute the higher level of the hierarchy.

When faced with such a problem it is necessary to define the problem solving strategy and the cooperative structure to be implemented for that purpose.

In the problem-solving strategy, the agents adopt the problem as a goal and elaborate a plan to achieve it. Planning, that is, the choice of actions, is thus the main activity of the agents. Of course, each agent must have the necessary knowledge to reason with other agents in order to share knowledge or tasks. AI researchers regard intelligent behavior as a choice of actions according to their contribution to the satisfaction of the agent's goal. This choice may be considered as deliberation among the competing desires of the planes. Notice also that the knowledge of each plane is not necessarily objective; in this case, we call it beliefs. In fact, an agent structure includes a belief base, a desire base, and a reasoning mechanism. The role of the reasoning mechanism is first to choose pertinent goals and to produce persistent goals, which express the way each agent is committed to its goal. Furthermore, the reasoning mechanism must also produce intentions that force the agent to act. Beliefs, commitments, and intentions, which together constitute the satisfaction of the selected desire, are thus combined into a plan. These concepts are introduced by a formal theory developed by Chaib Draa (1990).
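The agent structure just described (a belief base, a desire base, and a reasoning mechanism that selects a pertinent desire, commits to it, and turns it into intentions) can be sketched as follows. This is a generic belief-desire-intention style simplification, not Chaib Draa's formal theory: desires are hypothetical `(priority, goal, precondition)` triples, a hypothetical plan library maps goals to action lists, and a commitment persists until it is explicitly dropped.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

Desire = Tuple[int, str, Callable[[dict], bool]]  # (priority, goal, precondition)

@dataclass
class Agent:
    name: str
    beliefs: dict = field(default_factory=dict)          # not necessarily objective
    desires: List[Desire] = field(default_factory=list)
    commitment: Optional[str] = None                      # persistent goal

    def deliberate(self) -> Optional[str]:
        """Commit to the highest-priority desire that is pertinent given the
        current beliefs; an existing commitment persists."""
        if self.commitment is None:
            pertinent = [(p, g) for p, g, pre in self.desires if pre(self.beliefs)]
            if pertinent:
                self.commitment = max(pertinent)[1]
        return self.commitment

    def intentions(self, plan_library: Dict[str, List[str]]) -> List[str]:
        """Intentions: the actions the agent will perform for its commitment."""
        goal = self.deliberate()
        return list(plan_library.get(goal, [])) if goal else []

    def drop_commitment(self) -> None:
        """Called when the goal is achieved or believed impossible."""
        self.commitment = None

a1 = Agent("A1", beliefs={"conflict_with": "A2"}, desires=[
    (2, "avoid_conflict", lambda b: "conflict_with" in b),
    (1, "save_fuel", lambda b: True),
])
library = {"avoid_conflict": ["climb to FL350", "inform A2"]}
print(a1.intentions(library))  # ['climb to FL350', 'inform A2']
a1.beliefs.clear()             # the conflict disappears from the beliefs...
print(a1.deliberate())         # avoid_conflict (the commitment persists)
```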

To define the cooperative structure to be implemented, Cammarata et al. (1983) proposed four different structures. Three of them embed a centralized task policy, in which all the tasks are assigned to one agent; they differ in how they choose the agent most appropriate for detecting the conflict, fixing a new flight plan, sending the new plan to nearby aircraft, and finally carrying out the new plan. The fourth structure is a task sharing policy. In the centralized policy, a single negotiation determines the agent most appropriate for avoiding the conflict; in the task sharing policy, two rounds of negotiation are necessary: the first determines the planner agent and the second determines the plan executors. This task sharing policy is therefore an extension of the centralized policy. Let us examine it in further detail.

The negotiation is based on the exchange of two factors between the aircraft involved in a potential conflict: the knowledgeable factor and the constraint factor.

- The knowledgeable factor, specific to each aircraft, concerns the aircraft's beliefs about the intentions of nearby aircraft. It allows the planner agent to be determined: the aircraft with the best knowledgeable factor is the best able to elaborate a new plan that avoids the conflict while taking into account the intentions of nearby aircraft, and to evaluate that plan's implications.

- The constraint factor, also specific to each aircraft, is an aggregation of considerations such as the number of other nearby aircraft, the fuel level and distance remaining, and any emergency on board, such as a mechanical fault. It allows the aircraft concerned by the conflict to determine the plan executor, since the least constrained aircraft probably has the most degrees of freedom for modifying its plan without risk.
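The constraint factor is described only as an aggregation of considerations, so its formula is not given in the text. The sketch below assumes a weighted sum with hypothetical weights, folds "fuel level and distance remaining" into a single fuel margin (fuel on board divided by the fuel needed for the remaining distance), and picks the least constrained aircraft as plan executor.

```python
def constraint_factor(nearby_aircraft: int, fuel_margin: float, emergency: bool,
                      w_nearby: float = 1.0, w_fuel: float = 5.0,
                      w_emergency: float = 100.0) -> float:
    """Higher value = more constrained aircraft (hypothetical weighting).
    fuel_margin: fuel on board / fuel needed for the remaining distance."""
    fuel_tightness = max(0.0, 2.0 - fuel_margin)   # a margin below 2x counts against
    return (w_nearby * nearby_aircraft
            + w_fuel * fuel_tightness
            + w_emergency * (1.0 if emergency else 0.0))

def plan_executor(factors: dict) -> str:
    """The least constrained aircraft has the most freedom to change its plan."""
    return min(factors, key=factors.get)

factors = {
    "A1": constraint_factor(nearby_aircraft=3, fuel_margin=1.2, emergency=False),
    "A2": constraint_factor(nearby_aircraft=1, fuel_margin=2.5, emergency=False),
}
print(plan_executor(factors))  # A2
```

An emergency dominates the other terms here by construction, so an aircraft with a mechanical fault is never chosen to modify its plan while a healthy one is available.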

The sequence of tasks under this policy is as follows. Consider the case where only two aircraft, A1 and A2, are concerned by the conflict, and suppose that A1 is the most knowledgeable and A2 the least constrained. When A1 (or A2) suspects a conflict, it sends its knowledgeable factor to A2 (or to A1) according to the “Inform Plan.”

After the knowledgeable factor exchange, both aircraft exchange their constraint factors in a similar fashion. At this point, they know which one is the most knowledgeable and which one is the least constrained. Aircraft A1 (the most knowledgeable) must follow a “Solving Plan,” according to which it fixes a new plan for A2 and sends it to A2. In parallel, A2, which is the least constrained, sends its current flight plan to A1 and continues its routine activities while awaiting the new plan from A1. When A2 receives the new plan, it sends it to the nearby aircraft concerned by the same conflict and finally executes it to resolve the conflict.
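The two negotiation rounds and the subsequent plan exchange can be summarized as follows. The sketch assumes that every aircraft broadcasts its factors, so each one computes the same planner and executor locally; ties are broken by dictionary order, a simplification a real protocol would have to address. `replan` stands for the planner's replanning function and, like the other names, is hypothetical.

```python
def negotiate(knowledge: dict, constraint: dict):
    """Round 1 picks the planner (most knowledgeable aircraft);
    round 2 picks the executor (least constrained aircraft)."""
    planner = max(knowledge, key=knowledge.get)
    executor = min(constraint, key=constraint.get)
    return planner, executor

def task_sharing(knowledge, constraint, flight_plans, replan):
    planner, executor = negotiate(knowledge, constraint)
    current = flight_plans[executor]        # executor sends its current plan
    new_plan = replan(current)              # planner fixes a new plan...
    flight_plans[executor] = new_plan       # ...which the executor adopts,
    return planner, executor, new_plan      # relays to nearby aircraft, and flies

plans = {"A1": ["FL330", "BEACON1"], "A2": ["FL330", "BEACON2"]}
result = task_sharing(
    knowledge={"A1": 0.9, "A2": 0.4},
    constraint={"A1": 7.0, "A2": 1.0},
    flight_plans=plans,
    replan=lambda plan: ["FL350"] + plan[1:],   # hypothetical: climb to FL350
)
print(result)  # ('A1', 'A2', ['FL350', 'BEACON2'])
```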

Implementing such a flexible task sharing policy therefore raises several difficulties for modeling the organization formally:

- to describe the agents' actions and their effects on the environment,

- to represent beliefs,

- to take into account the agent intentions,

- to represent plans,

- to formulate communicative acts between the agents.

Solving these difficulties is currently an important research topic in AI, a field that also faces general difficulties in modeling high-level reasoning processes. Nevertheless, this research may provide formal ways of modeling cooperative structures involving not only artificial agents but also human decision-makers (see Benchekroun & Pavard, chapter 10, this volume).

In the meantime, a parallel line of thinking based on human engineering approaches can be followed for studying and implementing cooperative organizations. The next part deals with this approach.

HUMAN ENGINEERING APPROACHES

This approach first investigated the relationships between a single human decision-maker and a single AI support system, according to two possible structures called “vertical” and “horizontal” cooperation respectively (Millot, 1988). We first examine these two structures separately and then the multi-level cooperative organization that can be built from them. These concepts are derived from studies in the realistic context of Air Traffic Control.

Man-machine cooperation principles

The vertical and horizontal cooperation principles are briefly recalled here.

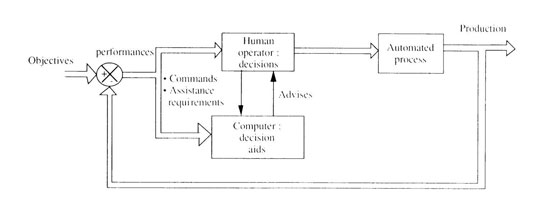

In vertical cooperation, the operator is responsible for all the process variables and, if necessary, can call upon the decision support tool, which supplies him with advice (Figure 1). This is a simplified form of the hierarchical organization proposed in the first section of this chapter, in which the human operator is the decision-maker at the higher level of the hierarchy and the AI support system is an assistant. The human operator must therefore be able to control the AI system by using its explanation and justification capabilities, that is, to resolve what we have called “decisional conflicts.” For that purpose, a graphical justification interface has been studied and integrated into the supervision system of a simulated power plant (Taborin & Millot, 1989).

In this human engineering approach to vertical cooperation, we therefore meet all the problems raised in the DAI approaches, especially those concerning the nature and modes of communication between the two agents, given that the structure and the degree of cooperation are already chosen. But the communication problem is particularly acute in this heterogeneous multi-agent organization because of the presence of a human operator, which requires taking cognitive ergonomics concepts into account.

FIGURE 1. Man-machine vertical cooperation principle

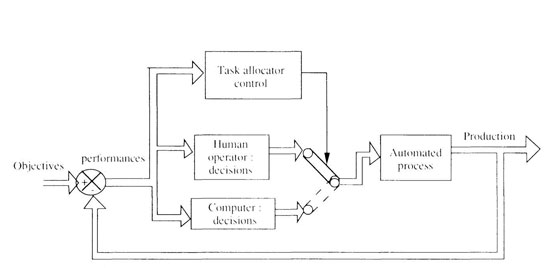

In horizontal cooperation, the outputs of the AI decision tool are directly connected to the process control system (Figure 2). Both agents, the human and the AI tool, are at the same hierarchical level, and the supervision tasks, as well as the actions resulting from them, can be dynamically distributed between them according to performance and workload criteria. This is a form of the first organization proposed in the first section, with decision-makers at the same level, but reduced to two agents; moreover, it corresponds to the task sharing policy proposed by Cammarata et al. (1983). The main difference from Cammarata's organization, however, concerns the control modes of the organization. In the DAI approach, each agent is a priori able to take charge of coordinating the organization, which requires high-level cognitive capabilities, that is, planning capabilities. All the tasks, high-level planning tasks for coordination as well as tactical execution tasks, can therefore be dynamically shared between the agents according to the nature of the problem to be solved. While this idea is very enticing, its implementation in real complex contexts raises many problems, mainly because of the present limitations of AI tools.

The organization we propose therefore restricts dynamic allocation to tactical-level tasks, the strategic coordination tasks being fixed a priori. For that purpose, a task allocation control is introduced at the higher level of the hierarchy (Figure 2). This requires choosing a priori the actor responsible for coordination, that is, for task allocation control, which can be:

• a dedicated artificial decision stage requiring capabilities for assessing human workload and performance; this is called implicit dynamic task allocation (Millot & Kamoun, 1988),

• the human operator himself, who here plays a second role dealing with strategic and organizational tasks; this is called explicit dynamic task allocation (Kamoun et al., 1989).

FIGURE 2. Man-machine horizontal cooperation principles

To extend these two principles, a multi-level organization can be envisaged in which the strategic-level tasks would be performed by a second human operator, who could in turn be supported by AI tools with prediction capabilities for allocating the tactical-level tasks (Figure 3) (Vanderhaegen et al., 1992). This organization is then a combination of vertical and horizontal cooperation: vertical cooperation at the strategic level and horizontal cooperation at the tactical level. The principles of explicit and implicit dynamic allocation have been implemented and evaluated in the air traffic control domain, taking into account the real constraints and the existing organization in this domain. The experiments and first results are briefly described below.

FIGURE 3. Multi-level Man-machine cooperation

Horizontal cooperation study in air traffic control

The air traffic control objectives are broadly the same as those indicated in the first sections of this chapter. The major difference from Cammarata's theoretical approach lies in the hierarchical overall organization, in which the ATC Centers (the human controllers) are at the higher level of the hierarchy and the aircraft (their pilots) must follow their orders. The flexible task sharing structure dealt with here therefore concerns exclusively the organization of the ATC Center, but under the constraints imposed by the traffic, that is, by the aircraft. The ATC Center activities and organization are described first, followed by the dynamic allocation experiments at the tactical level of ATC.

Air Traffic control center activities and organization

The air space is divided into geographical sectors, each managed by two controllers. The first is a tactical controller, called the “radar controller.” Using a radar screen, he supervises the traffic and communicates with the aircraft pilots. Supervision consists in detecting possible conflicts between planes that may transgress separation norms, and then in solving them. The dialogue with pilots consists in informing them about the traffic and asking them to modify their flight level, heading, or speed in order to avoid a conflict.

The second is a strategic controller, called the “organic controller.” First, he coordinates between sectors in order to avoid unsolvable conflicts at the sector borders. Second, he anticipates the traffic density and regulates the radar controller's workload. Third, he helps the radar controller in heavy traffic by taking charge of some tactical tasks.

In practice, these tasks are distributed according to the traffic density:

- For low traffic, a single controller manages both the strategic and the tactical tasks.

- For normal traffic, there are two controllers: an organic one and a radar one.

- For loaded traffic, the organic controller takes charge of some tactical tasks.

- For overloaded traffic, there is one organic controller and two radar controllers.

- For saturated traffic, the controlled sector is divided if possible.
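The staffing rules above map naturally onto a lookup table. In this sketch the traffic level is derived from the number of aircraft in the sector using hypothetical thresholds; the chapter does not say how traffic density is measured or where the boundaries between levels lie.

```python
from enum import Enum

class Traffic(Enum):
    LOW = 1
    NORMAL = 2
    LOADED = 3
    OVERLOADED = 4
    SATURATED = 5

# Sector staffing for each traffic level, as listed in the text.
STAFFING = {
    Traffic.LOW: "one controller for strategic and tactical tasks",
    Traffic.NORMAL: "one organic controller + one radar controller",
    Traffic.LOADED: "organic + radar; organic takes some tactical tasks",
    Traffic.OVERLOADED: "one organic controller + two radar controllers",
    Traffic.SATURATED: "divide the sector if possible",
}

# Hypothetical upper bounds (aircraft in sector) for LOW..OVERLOADED.
THRESHOLDS = (5, 12, 18, 25)

def classify(aircraft_in_sector: int) -> Traffic:
    """Map an aircraft count to a traffic level (iterates LOW..OVERLOADED)."""
    for level, upper in zip(Traffic, THRESHOLDS):
        if aircraft_in_sector <= upper:
            return level
    return Traffic.SATURATED

print(STAFFING[classify(20)])  # one organic controller + two radar controllers
```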

At present, it seems difficult to reduce the main control tasks further. For instance, the size of the geographical sectors cannot be reduced any more, because the coordination required between sectors, at both entry and exit, would increase and conflict solving would become more difficult. As a first step, we have therefore implemented dynamic task allocation at the tactical level.

The principle consists in inserting into the control and supervisory loop a task allocator that shares control tasks between the radar controller and an expert system called SAINTEX. The aim is to regulate the human workload and to improve the performance and reliability of the tactical controller-SAINTEX team.

Dynamic task allocation implementations at the tactical level

Such a new organization of flexible task allocation cannot be implemented and tested directly in a real control room without sufficient validation in a simulated context. We have therefore built a simplified but realistic experimental platform for air traffic control called SPECTRA (the French acronym for Experimental System for Air Traffic Control Task Allocation).

Following this principle, both types of dynamic allocation management have been integrated into the SPECTRA structure (Figure 4). In “explicit” task allocation, the radar controller manages the task allocator himself through a dialogue interface: he is his own estimator of performance and workload, and he allocates tasks either to himself or to SAINTEX. “Implicit” task allocation is controlled by an automatic management system implemented on the computer. This allocation depends on the capabilities of the two decision-makers. For SAINTEX, these capabilities are functional: only conflicts between two planes can be handled (solvable conflicts). For the human radar controller, these capabilities are linked with his workload. At present, only the task demands are assessed, so when these demands exceed a maximum level, the solvable conflicts are allocated to SAINTEX.

FIGURE 4. Explicit and implicit modes integrated into SPECTRA
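The implicit allocation rule can be expressed compactly. The sketch represents a conflict as a tuple of the aircraft involved, treats "exactly two aircraft" as the condition for SAINTEX to be able to solve it, and uses a hypothetical maximum demand of 50; the actual threshold and demand units used in SPECTRA are not given in the text.

```python
MAX_DEMAND = 50.0  # hypothetical maximum acceptable task demand

def is_solvable(conflict: tuple) -> bool:
    """SAINTEX can only handle conflicts between two planes."""
    return len(conflict) == 2

def allocate_implicit(conflicts, task_demand, max_demand=MAX_DEMAND):
    """Return (to_controller, to_saintex). Below the maximum demand the
    controller keeps every conflict; above it, solvable ones go to SAINTEX."""
    if task_demand <= max_demand:
        return list(conflicts), []
    to_saintex = [c for c in conflicts if is_solvable(c)]
    to_controller = [c for c in conflicts if not is_solvable(c)]
    return to_controller, to_saintex

conflicts = [("A1", "A2"), ("A3", "A4", "A5")]
print(allocate_implicit(conflicts, task_demand=60.0))
# ([('A3', 'A4', 'A5')], [('A1', 'A2')])
print(allocate_implicit(conflicts, task_demand=30.0))
# ([('A1', 'A2'), ('A3', 'A4', 'A5')], [])
```

In the explicit mode, the same split is made by the radar controller himself through the dialogue interface, so no demand threshold is involved.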

Moreover, the performance estimator for the human controller-SAINTEX team is based on economic criteria (comparison between real and theoretical consumption) and safety criteria (the number of airmisses). This global performance criterion is not used in the control policy of the task allocator, but it is used to compare the results of the experiments.
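The two components of the global performance criterion can be computed separately, as below. The text does not say how the economic and safety criteria are combined, so this sketch keeps them as two indicators; expressing the economic criterion as relative excess consumption over all aircraft is an assumption.

```python
def team_performance(real_consumption, theoretical_consumption, airmisses):
    """Return (economic, safety) indicators for one experiment.
    economic: relative excess consumption, (real - theoretical) / theoretical;
              0 is ideal.
    safety:   number of airmisses; 0 is ideal."""
    real = sum(real_consumption)
    theoretical = sum(theoretical_consumption)
    if theoretical <= 0:
        raise ValueError("theoretical consumption must be positive")
    return (real - theoretical) / theoretical, airmisses

economic, safety = team_performance([1050, 2100], [1000, 2000], airmisses=0)
print(round(economic, 3), safety)  # 0.05 0
```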

The task demand estimator is the sum of the task demands to which the air traffic controllers are subjected. These functional demands are calculated by assigning a demand weight to each event that occurs during the control tasks. Events are classified into two classes: one for aircraft states and the other for conflict states.
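A sketch of the task demand estimator follows: it sums a demand weight for each event observed during a time window, with events split into the two classes mentioned above. The event names and weights are hypothetical; the chapter does not publish the values used in SPECTRA.

```python
# Hypothetical demand weights for the two event classes.
DEMAND_WEIGHTS = {
    "aircraft": {"entering_sector": 2, "leaving_sector": 1, "level_change": 3},
    "conflict": {"conflict_detected": 5, "conflict_resolved": 2},
}

def task_demand(events):
    """Sum of the demand weights of the events that occurred in a time window.
    Each event is an (event_class, event_type) pair."""
    return sum(DEMAND_WEIGHTS[cls][kind] for cls, kind in events)

window = [("aircraft", "entering_sector"),
          ("aircraft", "entering_sector"),
          ("conflict", "conflict_detected")]
print(task_demand(window))  # 9
```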

Experimental context and results (Debernard, Vanderhaegen, & Millot, 1992)

Nine qualified air traffic controllers and six novice controllers (Air Traffic engineers with great theoretical expertise but little practice) took part in a series of experiments. Each controller performed four experiments: a training session, one without assistance, one in explicit mode, and one in implicit mode, using different scenarios in order to prevent learning effects. The scenarios involved a large number of planes and generated many conflicts.

Three classes of parameters have been measured and recorded during each experiment:

- The first class concerns all the data needed to calculate the global performance.

- The second class consists of all the data needed to calculate the task demands.

- The last class concerns subjective workload. A module was added to SPECTRA for this purpose, with which the operator estimates his own workload by clicking with a mouse on a small scale graduated from 1 (low load) to 10 (high load). This scale appears every 5 minutes. It does not disturb air traffic control because the operator answers whenever he chooses. These subjective workload estimates are used to validate the task demand calculation and to improve the workload estimator.
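One way to use the subjective ratings for validation, shown here only as an assumption (the chapter does not state which statistic was used), is to sample the computed task demand at the moment of each answer and compute the Pearson correlation with the 1-to-10 ratings. The function name `pearson` is hypothetical and the data below are made up.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Illustrative values only: computed task demand when each answer was given,
# and the controller's rating at that time (1 = low load, 10 = high load).
demand_at_answer = [4.0, 7.5, 11.0, 9.0, 5.5]
subjective_rating = [3, 5, 8, 7, 4]

r = pearson(demand_at_answer, subjective_rating)
print(round(r, 2))
```

Because the operator answers whenever he chooses, pairing each rating with the demand at the time of the answer, rather than at the time the scale appeared, seems the more faithful choice.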

At the end of each experiment, two types of questionnaires were submitted to the controller. The first is the NASA Task Load indeX (TLX) method (NASA, 1988), which yields a subjective global workload score. The second is a series of questions about general information, workload, and comparisons between the explicit and implicit modes, which served as the basis for a guided discussion with the controller.
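For reference, the standard weighted NASA-TLX procedure rates six subscales from 0 to 100 and derives a weight for each subscale from 15 pairwise comparisons; the global score is the weighted mean. The chapter does not say whether this weighted variant or the unweighted ("raw") variant was used, and the helper names, ratings, and comparison outcomes below are invented for illustration.

```python
from itertools import combinations

SUBSCALES = [
    "Mental Demand", "Physical Demand", "Temporal Demand",
    "Performance", "Effort", "Frustration",
]


def tlx_weights(choices):
    """Count how often each subscale was chosen in the 15 pairwise comparisons.

    `choices` maps each unordered pair of subscales (a frozenset) to the one
    the operator judged more relevant to his workload.
    """
    pairs = list(combinations(SUBSCALES, 2))  # 15 pairs
    weights = {s: 0 for s in SUBSCALES}
    for pair in pairs:
        weights[choices[frozenset(pair)]] += 1
    return weights


def tlx_score(ratings, weights):
    """Weighted global workload: sum(weight * rating) / 15, on a 0-100 scale."""
    return sum(weights[s] * ratings[s] for s in SUBSCALES) / 15


# Illustrative operator who always prefers the subscale ranked earlier here.
ranking = ["Temporal Demand", "Mental Demand", "Effort",
           "Frustration", "Performance", "Physical Demand"]
choices = {frozenset(p): min(p, key=ranking.index) for p in combinations(SUBSCALES, 2)}
ratings = {"Mental Demand": 70, "Physical Demand": 10, "Temporal Demand": 80,
           "Performance": 30, "Effort": 60, "Frustration": 40}

w = tlx_weights(choices)
print(w)
# {'Mental Demand': 4, 'Physical Demand': 0, 'Temporal Demand': 5, 'Performance': 1, 'Effort': 3, 'Frustration': 2}
print(round(tlx_score(ratings, w), 1))  # 64.7
```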

Results were analyzed for all controllers who performed each simulated scenario as seriously as they would in a real control context. The conclusions are convincing:

- Our task demand calculation is validated.

- The dynamic allocation modes provide real help in heavily loaded air traffic control.

- Overall, the implicit mode is more efficient than the explicit one.

- The SPECTRA interface does not modify the controllers' usual strategies.

Synthesis

Many results can be drawn from these experiments, and the data analysis is not yet complete, especially regarding workload and performance assessment, but also regarding the decisional strategies and operative modes used by the human controllers and the degree of cooperation they achieved with SAINTEX in the explicit mode. Nevertheless, some conclusions can already be drawn about the explicit mode.

First, note that the human controller played a double role: a tactical role (for example, solving conflicts) and a strategic role (for example, anticipating possible conflicts and planning tasks in order to regulate his workload). He therefore played the organic controller's role as well as the radar controller's role. That is why we plan to add another cooperation level to SPECTRA, at the strategic level, in order to implement and test the multi-level cooperative organization proposed in Figure 3. For that purpose, a planning support system is being studied.

Second, when studying the controllers' decisional strategies and operative modes, we noticed differences between the degrees of cooperation with SAINTEX shown by the qualified controllers on the one hand and by the novice controllers on the other. In fact, some qualified controllers did not really cooperate with SAINTEX and allocated very few air conflicts to it. Their answers to the questionnaires showed very limited trust in SAINTEX. Further investigations will be pursued to find out why, and in particular whether this behavior is due to a lack of knowledge about the capacities and structure of SAINTEX.

By contrast, novice controllers generally showed a high degree of cooperation with SAINTEX, and the performance of the teams they formed with SAINTEX was similar to that of the teams involving qualified controllers.

These first qualitative observations must be confirmed by further analyses, but they seem to agree with other results by Neville Moray et al. (chapter 11, this volume) on the importance of trust and self-confidence in human-computer relationships.

Finally, the choice of an organizational structure for Man-Machine cooperation depends strongly on the nature and characteristics of the tasks to be shared between the different agents (human or artificial), and it requires a deep analysis of these tasks. Such an analysis would result in a taxonomy of tasks or subtasks according to different levels, such as strategic and tactical. In our opinion, methodological and conceptual research is also needed in this direction.

CONCLUSION

This chapter has presented several approaches for designing cooperative organizations. Conceptual frameworks from the Distributed Artificial Intelligence community can be very helpful, given the advanced work done to identify and analyze the possible structures of these organizations and the constraints they raise: communication needs and required degrees of cooperation. The organizations developed in parallel by the human engineering and ergonomics community, which seems to deal more deeply with realistic, on-site application domains, have also been described. Comparing these two kinds of approaches reveals important similarities but also underlines some differences, especially those linked with implementation constraints. Nevertheless, new lines of research could emerge from these complementary points of view.

REFERENCES

Bellorini, A., Cacciabue, P. C., & Decortis, F. (1991, August). Validation and development of a cognitive model by field experiments: Results and lesson learned. Paper presented at the Third European Conference on Cognitive Approaches to Process Control, Cardiff, UK.

Cammarata, S., McArthur, D., & Steeb, R. (1983, August). Strategies of cooperation in distributed problem solving. Paper presented at the Eighth International Joint Conference on Artificial Intelligence, Karlsruhe, Germany.

Chaib-Draa, B. (1990). Contribution à la Résolution Distribuée de Problème: Une approche basée sur les états intentionnels [Contribution to distributed problem solving: An approach based on intentional states]. Unpublished doctoral dissertation, Université de Valenciennes, France.

Cohen, P. R., & Levesque, H. J. (1990). Intention is choice with commitment. Artificial Intelligence, 42, 213–261.

Corkill, D. D., & Lesser, V. R. (1983, August). The use of meta-level control for coordination in a distributed problem solving network. Paper presented at the Eighth International Joint Conference on Artificial Intelligence, Karlsruhe, Germany.

Debernard, S., Vanderhaegen, F., & Millot, P. (1992, June). An experimental dynamic tasks allocation between air traffic controller and A.I. system. Paper presented at the 5th IFAC/IFIP/IFORS/IEA Symposium on Analysis, Design and Evaluation of Man-Machine Systems (MMS’92), The Hague, The Netherlands.

Durfee, E. H., Lesser, V. R., & Corkill, D. D. (1989). Trends in cooperative distributed problem solving. IEEE Transactions on Knowledge and Data Engineering, 1, 63–83.

Genesereth, M. R., Ginsberg, M. L., & Rosenschein, J. S. (1986, October). Cooperation without communication. Paper presented at the Fifth National Conference on Artificial Intelligence, Philadelphia, PA.

Kamoun, A., Debernard, S., & Millot, P. (1989, October). Comparison between two dynamic task allocations. Paper presented at the Second European Meeting on Cognitive Science Approaches to Process Control, Siena, Italy.

Kornfeld, W. A., & Hewitt, C. (1981). The scientific community metaphor. IEEE Transactions on Systems, Man, and Cybernetics, 11, 24–33.

Millot, P. (1988). Supervision des procédés automatisés [Supervisory control of automated processes]. Paris: Hermès.

Millot, P., & Kamoun, A. (1988, June). An implicit method for dynamic allocation between man and computer in supervision posts of automated processes. Paper presented at the Third IFAC Congress on Analysis, Design and Evaluation of Man-Machine Systems, Oulu, Finland.

NASA. (1988). Task Load indeX (TLX), version 1.0 [Booklet containing the theoretical background of the TLX]. NASA Ames Research Center, Human Performance Group.

Nii, H. P. (1986). Blackboard systems: The blackboard model of problem solving and the evolution of blackboard architectures (part I). AI Magazine, 7, 38–53.

Rosenschein, J. S., & Genesereth, M. R. (1985, October). Deals among rational agents. Paper presented at the Ninth International Joint Conference on Artificial Intelligence, Washington, DC.

Schmidt, K. (1989, October). Modelling cooperative work in complex environments. Paper presented at the Second European Meeting on Cognitive Sciences Approaches to Process Control, Siena, Italy.

Smith, R. G. (1980). The contract-net protocol: High-level communication and control in a distributed problem solver. IEEE Transactions on Computers, 29, 1104–1113.

Sycara, K. R. (1989). Multiagent compromise via negotiation. In L. Gasser & M. N. Huhns (Eds.), Distributed Artificial Intelligence 2 (pp. 119–137). Los Altos, CA: Morgan Kaufmann Publishers.

Taborin, V., & Millot, P. (1989, June). Cooperation between man and decision aid system in supervisory loop of continuous processes. Paper presented at the Eighth European Annual Conference on Decision Making and Manual Control, Copenhagen, Denmark.

Vanderhaegen, F., Crevits, I., Debernard, S., & Millot, P. (1992, August). Multi-level cooperative organization in air traffic control. Paper presented at the Third International Conference on Human Aspects of Advanced Manufacturing and Hybrid Automation, Gelsenkirchen, Germany.