14

The Art of Efficient Man-Machine Interaction: Improving the coupling between man and machine

Human Reliability Associates

Efficient and safe man-machine interaction requires an efficient coupling between man and machine, in the sense that the machine performs and responds as the operator expects it to. Rather than force the user to adapt to the system, the system should be adapted to the user. This can be achieved through design, during performance, or by management. Each of these cases is considered and evaluated against a common set of criteria.

THE NEED FOR ADAPTATION

There are many ways in which the current state of man-machine interaction can be characterized. On one side it is quite common to focus on how things can - and often do - go wrong, and to lament how miserable the situation of the human operator has become (e.g., Perrow, 1984). Although examples of accidents are many, this view may easily lead to a picture that is too gloomy. On the other side there are also people who see the state of man-machine interaction as being quite satisfactory, due not least to technological developments. The only drawback is the continued presence of the operator as an unpredictable and uncontrollable source of disturbances, but the belief is that this will be only a temporary predicament. Yet even the most sanguine assessment cannot deny that there are a number of cases where things do go wrong and therefore, presumably, an even larger number of cases where they almost go wrong.

Human factors engineering and ergonomics arose as scientific disciplines because human adaptation alone was incapable of obtaining a satisfactory fit between unaided operators and machines. The notion of trying to improve the coupling between the work and the people involved is at least a century old (e.g., the Scientific Management movement, cf. Taylor, 1911) but human factors engineering did not start until the Forties. Since then the growth of the technology has accelerated and increased the gap between what people can do and what systems require. The general solution to reduce this gap is to enhance the functionality of the man-machine interaction by providing the operator with some kind of computerized support. But this solution may create more problems than it solves, unless an efficient coupling is obtained between the operator and the support. There is consequently ample reason to consider the ways in which this coupling between man and machine can be improved - not only as a theoretical exercise but also in terms of practical measures.

To be a little more precise, I will define efficient coupling to exist if the machine performs and responds as the operator expects it to - in terms of how it reacts to specific input (feedback to control actions) as well as in terms of the output it produces. This definition does not imply that the responsibility for the coupling rests completely with the machine. The operator’s expectations are obviously shaped by the training, the design of the interface, and the dynamics of the interaction; the coupling may therefore also be achieved by operator conformance. Coupling should furthermore be considered for a representative range of tasks rather than for a small number of specific tasks.

THE USELESS AUTOMATON ANALOGY

In order to achieve a more efficient coupling between man and machine it is necessary to describe what the actual deficiencies are, such as they can be found in existing systems. This, in turn, requires that either part (man and machine) can be described in sufficient detail and with sufficient precision, and that the two descriptions either use the same semantics or can be translated into a common format or mode of representation.

Since the systematic study of MMS started from the need to solve practical, technological problems, the basis for the description was the engineering view of men and machines. The technical and engineering fields had developed a powerful vocabulary to describe how machines worked and it was therefore natural to apply the same vocabulary to how people worked, that is, as a basis for modeling human performance (e.g., Stassen, 1986). I will refer to this as the automaton analogy.

The automaton analogy denotes how one can think of or describe a human being as an automaton or a machine. A particular case is the use of the information processing metaphor (Newell & Simon, 1972) - or even worse, assuming that a human being is an information processing system (as exemplified by Simon, 1972; Newell, 1990). But the automaton analogy has been used in practically all cases where explanations of human performance were sought, for example, by behaviorism or psychoanalytic theory (cf., e.g., Weizenbaum, 1976).

In the field of MMS studies the automaton analogy is, however, useless and even misleading. I will not argue that the automaton analogy is ineffectual as a basis for describing human performance per se; I simply take that for granted. (This point of view is certainly not always generally accepted and often not even explicitly stated, for instance, by the mainstream of American Cognitive Science; it is nevertheless a view which is fairly easy to support.) Instead I will go even further and argue that the automaton analogy is useless even for machines when the context is man-machine systems, that is, when the functioning of the machine must be seen together with the functioning of a human being.

In general, an automaton can be described by a set of inputs, outputs, internal states, and the corresponding state transitions; a classical example of this is the Turing machine. More formally, a finite automaton is a quintuple (e.g., Arbib, 1964; Lerner, 1975):

$$A = (I, O, S, \lambda, \delta)$$

where

$I$ is the set of inputs,

$O$ is the set of outputs,

$S$ is the set of internal states,

$\lambda: S \times I \to S$ is the next state function, and

$\delta: S \times I \to O$ is the next output function.

Assume, for instance, that the automaton is in a certain state Sj. From that state it can progress to a pre-defined set of other states (Sk, …, Sm) or produce a pre-defined output (Oj) only if it gets the correct input (Ij). If the system in question is a joint man-machine system then the input to the automaton must in most cases be provided by the user. Therefore, the user must reply or respond in a way that corresponds to the categories of input that the automaton can interpret. Any other response by the user will lead to one of two cases:

° The input may not be understood by the machine, that is, transition functions are not defined for the input. In this case the machine may either do nothing or move to a default state.

° The input may be misunderstood by the machine, for instance if the semantics or the syntax of the response have not been rigorously defined. In this case the machine may possibly malfunction, that is, be forced to a state which has not been anticipated. This happens in particular if the input was a physical control action or manipulation, for example, switching something on or off, opening a valve, and so on.
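The definition and the first of the two cases can be made concrete with a minimal sketch. It assumes the next state function and the next output function are stored as lookup tables keyed by (state, input); the class name, the valve example, and the `default_state` option are illustrative assumptions, not part of the cited definitions. The second case (misunderstood input) is not modeled, since it arises precisely when an input lands on a transition the designer did not intend.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

State = str
Input = str
Output = str


@dataclass
class FiniteAutomaton:
    """Finite automaton with tabulated next state and next output functions."""
    next_state: Dict[Tuple[State, Input], State]
    next_output: Dict[Tuple[State, Input], Output]
    state: State
    # Hypothetical fallback: None means "do nothing" on unknown input.
    default_state: Optional[State] = None

    def step(self, user_input: Input) -> Optional[Output]:
        key = (self.state, user_input)
        if key not in self.next_state:
            # Case 1: no transition is defined for this input in this state.
            if self.default_state is not None:
                self.state = self.default_state
            return None
        output = self.next_output.get(key)
        self.state = self.next_state[key]
        return output


# Illustrative valve controller (all names are assumptions).
valve = FiniteAutomaton(
    next_state={("closed", "open_valve"): "open",
                ("open", "close_valve"): "closed"},
    next_output={("closed", "open_valve"): "flow on",
                 ("open", "close_valve"): "flow off"},
    state="closed",
)
print(valve.step("open_valve"))  # a defined input: state changes, output produced
print(valve.step("open_valve"))  # undefined in state "open": machine does nothing
```

The sketch makes the designer's burden visible: every user response that is not a key in the tables is simply ignored, so the user is in effect required to behave as a source of pre-defined inputs.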

If the machine is to function properly, therefore, the user must provide a response that falls within a pre-defined set of possible responses (the set of inputs). But the user’s response is at least partly determined by the information that is available to him. The output from the machine constitutes an essential part of the input to the user, and the output is partly determined by the previous input, that is, what the user decided to do. The content and structure of the machine’s output must therefore be such that it can be correctly understood by the user, that is, correctly interpreted or mapped onto one of the predefined answer options. In order to do that it is, however, necessary that the designer considers the user as a finite state automaton. We know from practice that people may interpret information in an infinite number of ways. We also realize that there is no way in which we can possibly account for this infinity of interpretations. Therefore we assume that the user only interprets the information in a limited number of specified ways - and as designers we of course take every precaution to prevent misinterpretations from happening. In other words, as designers we try to force the user to function as a finite automaton and we therefore think of the user in terms of a finite automaton. A good example is the graphical user interface, which basically serves to restrict the user’s degrees of freedom.

This means that the starting point, thinking of the machine as a finite automaton, has forced us willy-nilly to think of the user as a finite automaton, because there is no other conceptualization or model of the user that will fit the requirements of the design. Yet this is clearly unacceptable, no matter how sophisticated we assume the automaton to be (even if the number of states is exceedingly large and the state transitions are stochastic or multi-valued). It probably is the case that whatever analogy we use for one (machine, man) we will have to use for the other (man, machine) as well. Because we want to retain some distinct human elements in the description of the user we are forced by this argument to apply the same elements to the description of the machine. One proposal for an alternative description is the notion of a cognitive system as defined by Hollnagel and Woods (1983):

A cognitive system produces intelligent action, that is, its behavior is goal oriented, based on symbol manipulation and uses knowledge of the world (heuristic knowledge) for guidance. Furthermore a cognitive system is adaptive and able to view a problem in more than one way. A cognitive system operates using knowledge about itself and the environment in the sense that it is able to plan and modify its actions on the basis of that knowledge. (Hollnagel and Woods, 1983, p. 589)

The idea of a cognitive system was based on extensive practical experience rather than on formal arguments. The empirical evidence gathered since then has corroborated this definition. In relation to the present discussion the important aspect is that of adaptation. Effective coupling between man and machine can be achieved if either adapts to the other. In order to achieve this it is therefore necessary that the language of description supports notions of adaptation and adaptivity. In the remaining part of this chapter I will consider the notion of adaptation and how it can be achieved in man-machine systems.

ADAPTATION

The problem of establishing efficient man-machine interaction can usefully be described in terms of control. The goal is to maintain system performance within a specified envelope, so that critical functions do not go beyond (above or below) given limits. The purpose of system design is to make this control possible. The control is carried out by the system, by the operator, or by the two together. If the variety of the engineering (automatic) control system can be made large enough, it may handle the situation by itself. But if the requisite variety of the process exceeds that of the automatic control system, then the operator is needed. I shall assume that this is the case.
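The division of control described here can be sketched in a few lines. The sketch assumes that the variety of the automatic control system is represented simply as a repertoire of disturbances for which it has a counter-action; the disturbance names, actions, and function names are hypothetical and serve only to illustrate the argument.

```python
from typing import Dict, Tuple

# Hypothetical repertoire of the automatic control system:
# disturbance type -> automatic counter-action.
AUTOMATIC_RESPONSES: Dict[str, str] = {
    "pressure_high": "open_relief_valve",
    "level_low": "start_feed_pump",
}


def within_envelope(value: float, low: float, high: float) -> bool:
    """True while a critical function stays between its given limits."""
    return low <= value <= high


def allocate_control(disturbance: str) -> Tuple[str, str]:
    """Return (controller, action) for a disturbance.

    If the disturbance lies outside the automatic repertoire, the variety
    of the process exceeds that of the controller and the operator is needed.
    """
    if disturbance in AUTOMATIC_RESPONSES:
        return ("automatic", AUTOMATIC_RESPONSES[disturbance])
    return ("operator", "unspecified")


print(within_envelope(11.0, low=0.0, high=10.0))
print(allocate_control("pressure_high"))
print(allocate_control("sensor_drift"))
```

The "unspecified" action in the operator branch is deliberate: what the operator should do is exactly what the design could not enumerate in advance.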

In order for the operator to work efficiently, that is, to serve as an efficient controller, he must have the necessary facilities to obtain information about the situation and to exercise control options judiciously. This requires that the designer is able to anticipate the possible situations and to invent means by which to deal with them. The tacit assumption of the design is that the operator can handle the situation if he has the means to provide the required control. Therefore, the designer must provide the operator with the proper facilities for getting the information needed (retrieval), transforming it into a presentation form which is adequate for the problem at hand (transformation), combining it on the presentation surface (display) so that it is well suited - or adapted - to the current needs, and effectuating the intended control actions (control and feedback).

It is practically impossible to anticipate more than a limited set of situations, and it is therefore important that the operator is able to control how information is used. The actual interface must consequently be flexible enough to enable the operator to achieve his goals when unanticipated situations occur. The designer’s job is to adapt the usage of the equipment to operator characteristics (human-factors design) and to the gross characteristics of the possible performance modes (event types). The design should provide the options for adaptation although it is, in fact, usually the operator who carries out the adaptation.

In order to achieve an efficient coupling between humans and machines (operators and systems) it is necessary that some kind of adaptation takes place. The experience to date indicates that the adaptation mostly has been made by the humans. It can be said, with some justification, that the reason why most systems function at all is that the human operator is able to adapt to the design. In other words, systems may sometimes work despite their design rather than because of it. Adaptation is an integral part of human life and something that comes naturally to us. But the human ability to adapt may easily lead designers astray. The situation should preferably be changed to the opposite; the attitude should be that the machine must adapt to the human, rather than the other way around. In order to achieve this it is, however, necessary that one knows how to perform this adaptation. The rest of this chapter will discuss three different ways of doing this.

ADAPTATION THROUGH DESIGN

The basis for adaptation through design is the ability to predict the consequences of specific design decisions. If a designer wants to achieve a certain effect, he must be able to foresee what will happen if the system is used in specific ways under specific circumstances. Design is essentially decision making.

“…The choice of a design must be optimal among the available alternatives; the selection of a manifestation of the chosen design concept must be optimal among all permissible manifestations… Optimality must be established relative to a design criterion which represents the designer’s compromise among possibly conflicting value judgments that include… his own.” (Asimov, 1962, p. 5)

The fundamental art of design is to increase the constraints until only one solution or alternative is left. If the predominant design criteria relate only to technical or economic aspects of the system, then the outcome may easily be one that puts undue requirements on the operator’s ability to adapt. (It is presumably Utopian to hope for a system that does not require some kind of adjustment - at least if it is made to be used by more than one individual.) It follows that the dominant design criteria should include some that address the man-machine interaction, thereby hopefully making it easier for the operator to adjust to the system.

In order to achieve this goal, that is, to make correct predictions of what the design will accomplish, it is necessary to have sufficient knowledge about how the operator will respond. Such descriptions are commonly referred to as “user models” (although they properly speaking should be called “models of the user”). In some cases user models are used prescriptively as a basis for promoting a specific form of performance through the design; an example of that is the notion of ecological interface design (e.g., Rasmussen & Vicente, 1987). In other cases user models are applied more sparingly, as condensed descriptions of how one can expect a user to respond for a given set of circumstances (e.g., Smith et al., 1992).

In both cases the fundamental requirement is that an adequate model description of operator characteristics can be found and that sufficiently powerful design guidelines can be derived from this model. The latter might even be achieved by means of human factors guidelines alone - without an explicit model - provided the situation in itself can be sufficiently well predicted. Examples of that are very constrained working environments such as train driving, word processing, and the like. When models are used they are generally static, that is, verbal or graphical descriptions from which specific (expected) reactions to specific input conditions can be estimated or predicted. It may even be suggested that static adaptation is the noble goal of HCI as a scientific movement - although it is open to discussion whether that goal actually can be achieved.

The very complexity of system design has frequently made it evident that static models are inadequate. This seems to be the case at least for descriptive models; prescriptive or normative models usually simplify the situation so much that the limitations are less easy to see. The logical alternative is to use animated or dynamic models. Although this is significantly more difficult, it is nevertheless a worthwhile undertaking because it forces the designer to make everything explicit. A dynamic model of the user must describe in computational terms how a user will respond to a given development, whether that response is internal (as a “change of state”) or external (as an action). This is tantamount to using dynamic models or simulations of the user. An example of this can be found in the System Response Generator project (Hollnagel et al., 1992). A more general discussion of uses of dynamic modeling or simulation is found in Cacciabue & Hollnagel (Chapter 4, this volume).

ADAPTATION DURING PERFORMANCE

Neither the best of models nor abundant resources available for system development may be enough to ensure sufficient adaptation through design: firstly, because knowledge about the operator is necessarily incomplete, and secondly, because it is impossible to predict all the conditions that may obtain. Even in a completely deterministic world with complete information about the system and the user(s), the sheer number of possibilities may easily exhaust the resources that are available for design purposes. It is therefore customary to design the system with the most frequently occurring (or imagined) situations in mind, and leave the remaining problems to be solved in different ways, either to rely on human adaptation and ingenuity or to achieve some kind of adaptation during performance. The first of these solutions, while frequently applied, is clearly not desirable - and perhaps not even acceptable. To some extent this solution has been a necessary default because the other options have been limited. Until a few years ago adaptation during performance required technical solutions that were practically impossible to implement.

The notion of adaptive systems is well established in control theory. The nature of adaptive systems has been treated in detail in cybernetics, which also has contributed many concrete examples (e.g., Ashby, 1956). Adaptive control theory has, however, been concerned with improving the control of a process or a plant. The emphasis has therefore been on how the controller could adapt to changes or variations in the physical environment (input signals) rather than to changes in the operator.

If the purpose becomes one of facilitating the work of the operator or improving the coupling between man and machine, the adaptation must look towards the interaction rather than the process. The concept of an adaptive controller must therefore be extended to include the interaction between the operator and the process, and to cover an interaction policy in addition to a control policy. Extending the principle is a simple matter, but accomplishing it in practice is considerably harder. The main problems are that it is very difficult to identify a well-defined set of on-line performance measures for an operator; further, that it is equally difficult to specify a set of operational interaction policies which can be matched to the identified operator conditions; and finally, that it is (again) difficult to evaluate the effect of implementing a specific interaction policy.
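The structure of such an extended controller can be sketched as follows, purely to show where the three difficulties sit. The operator measures, the thresholds, and the policy names are all invented for illustration; the text above makes no claim that these particular measures or policies are adequate, and identifying workable ones is exactly the hard part.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class OperatorMeasures:
    """Hypothetical on-line performance measures for an operator."""
    response_time_s: float
    error_rate: float  # fraction of erroneous actions, 0.0 to 1.0


@dataclass
class InteractionPolicy:
    """An interaction policy and the operator condition it is matched to."""
    name: str
    applies: Callable[[OperatorMeasures], bool]


# Hypothetical policies and thresholds, checked in order of priority.
POLICIES: List[InteractionPolicy] = [
    InteractionPolicy("reduce_information_load", lambda m: m.error_rate > 0.2),
    InteractionPolicy("prompt_operator", lambda m: m.response_time_s > 10.0),
]
DEFAULT_POLICY = "no_change"


def select_policy(measures: OperatorMeasures) -> str:
    """Match the identified operator condition to an interaction policy."""
    for policy in POLICIES:
        if policy.applies(measures):
            return policy.name
    return DEFAULT_POLICY


print(select_policy(OperatorMeasures(response_time_s=30.0, error_rate=0.0)))
```

Note that the sketch has no step for evaluating whether the selected policy actually helped; that third difficulty has no obvious counterpart in code.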

The practical work on adaptive man-machine interaction was negligible until Artificial Intelligence (AI) started to apply inference techniques to plan recognition (e.g., Kautz & Allen, 1986). (It was quite characteristic that AI saw this as an original invention and neglected to consider the substantial amount of work that had been done in adaptive control theory. Apparently the analogue and the digital worlds did not meet on this occasion.) Coupled with notable advances in the development and use of knowledge-based systems, it suddenly became possible to build convincing demonstrations of adaptive man-machine interfaces (e.g., Peddie et al., 1990; Hollnagel, 1992). Other applications are found in the field of Intelligent Tutoring Systems (ITS).

The option of achieving some kind of adaptation during performance is therefore a reality. Unfortunately, it does not make the designer’s task any simpler. Unlike human adaptation, artificial adaptation must be designed in detail. Basically, the system must change its performance such that it matches the needs of the operator as far as they can be ascertained in a dynamic fashion - and AI techniques obviously set a limit for that. The use of AI technology, such as plan recognition and pattern identification, has partly overcome the need to define simple performance measurements. The limitations are nevertheless more or less the same, because the designer has to anticipate the possible situations. Unless plan recognition is supplemented by machine learning (and that is still a distant goal) the scope is obviously limited by the plans that are represented in the system. Similarly, the interaction policies must also be known in advance. The designer therefore must consider not only, as before, the passive design of the interface but also the design of the active adaptive facilities. This increases the demands on, for example, modeling of the operator and maintaining an overview of the design. It may even, paradoxically, make the designer more dependent on his own support!
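The limitation imposed by the represented plans can be illustrated with a deliberately simplified sketch. It matches the operator's observed actions against the beginning of each plan in a fixed library; this prefix matching is a stand-in chosen for brevity and is not the generalized plan recognition of Kautz and Allen. The plan names and action names are hypothetical.

```python
from typing import Dict, List, Sequence

# Hypothetical plan library: plan name -> ordered list of actions.
PLAN_LIBRARY: Dict[str, List[str]] = {
    "start_pump": ["check_level", "open_inlet", "start_motor"],
    "shut_down": ["stop_motor", "close_inlet"],
}


def recognize_plans(observed: Sequence[str],
                    library: Dict[str, List[str]] = PLAN_LIBRARY) -> List[str]:
    """Return the names of plans whose first steps equal the observed actions."""
    n = len(observed)
    return [name for name, steps in library.items()
            if steps[:n] == list(observed)]


print(recognize_plans(["check_level", "open_inlet"]))  # consistent with one plan
print(recognize_plans(["vent_tank"]))                  # no represented plan fits
```

An action sequence that the designer did not anticipate yields an empty result, so the adaptive facility has nothing on which to base a change of interaction policy.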

ADAPTATION THROUGH MANAGEMENT

The design of an efficient man-machine interaction, the development of effective operator support systems that not only work but also are used (!), does not occur in a vacuum. The context is the organization where the work takes place, hence also the way this organization is managed. Conversely, the way in which this management is carried out may in itself contribute to (or detract from) the efficiency of the man-machine interaction (Lucas, 1992; Wreathall & Reason, 1992).

Unlike the two previous ways of achieving adaptation, management cannot be designed in the same way. But management can serve to adapt the working environment to the specific task requirements (through interface designs, etc.). The efficient use of an interface or a support system depends not only on the systems in themselves but also on, for example, the procedures, the team structure, the communication links and command chains, and the atmosphere. The task is determined by a combination of the situation specific goals, the facilities for interaction and support (interfaces to the process), the local organization of work, and so on. Management can effectively counterbalance design oversights and compensate for structural or functional changes in the system that occur after the design has been completed. Management can also provide the short-term adaptations that may be needed until a part of the system can be redesigned. The design process in itself is usually rather lengthy and not easily started. Changes in the system, small and large, unfortunately do not wait patiently for a redesign to come about but appear randomly over time. The most effective way to compensate for them, and thereby maintain the efficient man-machine interaction, is through management.

Adaptation through management requires a continuous monitoring of effects, data collection, and analysis. It can be seen as adaptation by continuous redesign, hence working over and above what active adaptation may achieve. In other words, adaptation through design occurs at long intervals when the system is designed/redesigned. Adaptation during performance occurs continuously and rapidly but can only compensate for smaller deviations (and only for those that have been anticipated). Adaptation through management also occurs continuously, although with some delay. On the other hand, adaptation through management is able to cope with large deviations, even if they are completely unanticipated. Put differently, adaptation through management is the on-line use of human intelligence. The others are the use of off-line and “canned” intelligence, respectively.

DISCUSSION

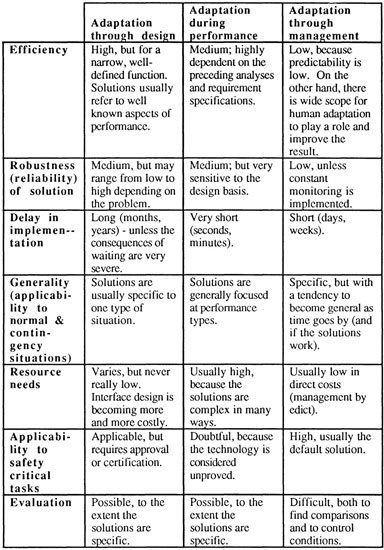

One way to summarize the issues discussed above is to consider the three different ways of achieving adaptation against a common set of criteria. The criteria have all been mentioned in the preceding:

° Efficiency of adaptation. This criterion refers to how efficient the adaptation is expected to be. It is closely related to how well it is possible to predict correctly the effects of the specific solution. Presumably, if an accurate prediction is possible, then the solution should be expected to be efficient - since it otherwise would not have been chosen. Conversely, if the predictability is low one may expect that the solution is less efficient.

° Robustness. This refers to how reliable and resistant the solution is, that is, how well it can withstand the deviations that may occur (working conditions, degraded information or noise, unexpected events, etc.).

° Delay in implementation. The time from when a deviation or undesired condition is noted until something is done about it is the delay in implementation. Another view on that is how easy it is to implement the solution, not in terms of the resources and efforts that actually go into doing it but in terms of the difficulties that must be overcome before the implementation can begin.

° Generality. This concerns the general applicability of solutions, that is, whether solutions address a single issue or have a wider scope. Generality also refers to whether a solution is applicable to normal and contingency situations alike or only to one of these categories.

° Resources required. The resources are those needed to implement the solution (to design and develop it) rather than the resources needed to use it.

° Applicability to safety critical tasks. Safety critical tasks play a special role in man-machine systems. On the one hand they are the occasions where specific improvements of the man-machine interaction may be most needed; on the other hand they usually are subject to a number of restrictions from either management or regulatory bodies.

° Evaluation. Evaluation is important in order to determine whether the coupling between man and machine works as expected and to gauge the efficiency of the adaptation. The evaluation can be considered with regard to how easy it is to accomplish (in terms of, e.g., money, manpower, time) and in terms of whether suitable methods are available. A specific issue in evaluation is the need to separate the effects of the specific solutions from the effects of training in their use.

The results of applying these criteria to the three types of adaptation are shown in Table 1.

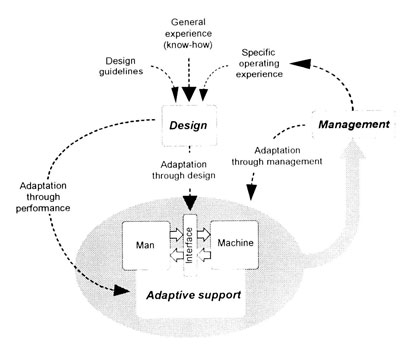

The classification provided earlier is only tentative. It does, however, serve to emphasize that there are no simple answers to how a more efficient man-machine interaction can be established, nor to which solutions are the best (or cheapest, or safest, etc.). As the discussion of adaptation through management pointed out, it is probably an over-simplification even to think of the three types of adaptation as distinct. It is more reasonable to assume that a proper solution will involve all three aspects. One way of indicating the coupling between the three types of adaptation is shown in Figure 1.

FIGURE 1. The coupling between the three types of adaptation

TABLE 1. Evaluation of the three types of adaptation

In scientific terms the coupling between man and machine is an issue where there are more questions than answers. We know something about basic human factors and basic ergonomics, but when we turn to a consideration of tasks where human cognition plays a major role, the lack of proven theories or methods is deplorable. Concerted efforts are increasing our knowledge bit by bit, but we are still far from having an adequate design basis. This is mainly because comprehensive theories for human action are few and far between. The situation is analogous to the state of human reliability analysis, as exposed by Dougherty (1990). There are many practitioners, and they all have their little flock of faithful followers. But when the methods are examined more closely they turn out to depend less on their own merits and more on the experience and know-how of those who use them. The same goes for design of efficient man-machine interaction when it goes beyond basic ergonomics. This does not imply that the current methods on the whole are ineffective. But it does mean that it may be difficult to generalize from them or to identify the common conceptual bases.

The coupling between man and machine is not simply a question of advancing human-computer interaction or of refining interface design. The essential coupling is to the process itself rather than to the support systems or the interface. It is a general experience that people who work with automated systems, such as flight management systems, often have great problems in understanding what the systems are doing (Wiener et al., 1991). In these cases the coupling to the underlying process is deficient and should therefore be improved. That can only be achieved if the interaction is considered in a larger context, rather than looked at in narrow terms of interface or support system design. Solutions that address specific problems tend, on the whole, to impair the coupling between man and machine rather than to improve it.

REFERENCES

Arbib, M. A. (1964). Brains, machines and mathematics. New York: McGraw-Hill.

Ashby, W. R. (1956). An introduction to cybernetics. London: Methuen & Co.

Asimov, M. (1962). Introduction to design. Englewood Cliffs, NJ: Prentice-Hall.

Dougherty, E. (1990). Human reliability analysis: Where shouldst thou turn? Reliability Engineering and System Safety, 29, 54–65.

Hollnagel, E. (1992). The design of fault tolerant systems: Prevention is better than cure. Reliability Engineering and System Safety, 36, 231–237.

Hollnagel, E., Cacciabue, P. C., & Rouhet, J.-C. (1992). The use of an integrated system simulation for risk analysis and reliability assessment. Paper presented at the Seventh International Symposium on Loss Prevention, Taormina, Italy.

Hollnagel, E., & Woods, D. D. (1983). Cognitive systems engineering: New wine in new bottles. International Journal of Man-Machine Studies, 18, 583–600.

Kautz, H., & Allen, J. (1986, October). Generalized plan recognition. Paper presented at AAAI-86, Philadelphia, PA.

Lerner, A. Y. (1975). Fundamentals of cybernetics. London: Plenum.

Lucas, D. (1992). Understanding the human factor in disasters. Interdisciplinary Science Reviews, 17, 1185–1190.

Newell, A. (1990). Unified theories of cognition. Cambridge, MA: Harvard University Press.

Newell, A., & Simon, H. A. (1972). Human problem solving. Englewood Cliffs, NJ: Prentice-Hall.

Peddie, H., Filz, A. Y., Arnott, J. L., & Newell, A. F. (1990, September). Extra-ordinary computer human operation (ECHO). Paper presented at the Second Joint GAF/RAF/USAF Workshop on Electronic Crew Teamwork, Ingolstadt, Germany.

Perrow, C. (1984). Normal accidents: Living with high-risk technologies. New York: Basic Books.

Rasmussen, J., & Vicente, K. J. (1987). Cognitive control of human activities and errors: Implications for ecological interface design (Tech. Rep. No. Risø-M-2660). Roskilde, Denmark: Risø National Laboratory.

Simon, H. A. (1972). The sciences of the artificial. Cambridge, MA: The MIT Press.

Smith, W., Hill, B., Long, J., & Whitefield, A. (1992, March). The planning and control of multiple task work: A study of secretarial office administration. Paper presented at the Second Interdisciplinary Workshop on Mental Models, Cambridge, UK.

Stassen, H. G. (1986). Decision demands and task requirements in work environments: What can be learned from human operator modeling? In E. Hollnagel, G. Mancini, & D. D. Woods (Eds.), Intelligent decision support in process environments (pp. 90–112). Berlin: Springer Verlag.

Taylor, F. W. (1911). The principles of scientific management. New York: Harper.

Weizenbaum, J. (1976). Computer power and human reason: From judgment to calculation. San Francisco: W. H. Freeman.

Wiener, E. L., Chidester, T. R., Kanki, B. G., Palmer, E. A., Curry, R. E., & Gregorich, S. E. (1991). The impact of cockpit automation on crew coordination and communication: I. Overview, LOFT evaluations, error severity, and questionnaire data (Tech. Rep. No. NCC2–581-177587). Moffett Field, CA: NASA, Ames Research Center.

Wreathall, J., & Reason, J. (1992). Human errors and disasters. Paper presented at the Fifth IEEE Conference on Human Factors and Power Plants, Monterey, CA.