15

“Human–like” System Certification and Evaluation

European Institute of Cognitive Sciences and Engineering

This chapter presents a novel view of certification and evaluation in the specific case of “human-like” systems (HLS). Such systems are knowledge intensive. Thus, knowledge acquisition is a key issue in understanding, implementing, and evaluating the related knowledge bases. Evaluation is incremental and situational. Experience on the MESSAGE and HORSES projects has led to the Situation Recognition/Analytical Reasoning model, which is proposed for use in knowledge acquisition and evaluation. This approach leads to the concept of Integrated Human-Machine Intelligence, where HLS certification is viewed as an incremental process of operational knowledge acquisition.

INTRODUCTION

In this paper, we will take the view that mankind distinguishes itself from other species because it invents and builds tools to perform very sophisticated tasks. These tools are becoming so complex that people no longer understand how they work, even if they use them routinely; for example, many people drive cars without knowing how a carburetor works. People need to trust these tools, so certification of these tools is an essential issue.

When can we declare that a tool is usable and safe for use? Certifying that a tool will be satisfactory in any situation where it is intended to be used, for a (very) large group of users, is a very serious enterprise. Usually, the difficulty is to define criteria or norms that will be socially acceptable to a wide range of domain experts and other people who will be connected to the use of the tool. Responsibility for the definition of such norms is taken by recognized national and international authorities. For instance, it is not unusual to see “well-known” norm labels on some home appliances. These labels guarantee that the appliance will work under well-specified conditions. Such norms evolve with time. Furthermore, it is often difficult to test the tool against preexisting norms when the tool is fairly innovative. In reality, the norms are usually redefined with respect to the tool. An interesting question is: Would it make sense to anticipate evaluation criteria (norms) early on during design? Some answers are already available in the human-computer interaction (HCI) literature (Nielsen, 1993). They relate to user criteria such as learnability, efficiency, memorability, errors, and satisfaction. In any case, it is very difficult and often impossible to know if a tool is usable until we actually use it and assess it.

Modern tools become more knowledge intensive in the sense that they include the combined expertise of a few selected people. The more a tool improves by incremental additions of knowledge from an increasing number of users (usually domain experts), the more it is perceived as “intelligent.” Completeness and consistency assessment are two major problems. However, the perceived complexity of the resulting envelope is the key factor to be grasped. The major difficulty comes from the fact that the perceived complexity is a function of the background of the user who performs the related task. Thus, the certification of resulting systems is extremely user specific. Certification is then necessarily incremental. Humans are evaluated through exams during their student and training periods, and throughout their lives humans continually evaluate themselves and are evaluated by others. Taking the viewpoint of Ford and Agnew (1992), expertise (i.e., knowledge) is socially situated, personally constructed, and “reality” relevant. This makes the process of validating tools that include expertise extremely difficult. Even if we take the computational view of model validation developed by Pylyshyn (1989), it is difficult to justify methods such as intermediate state evidence, complexity–equivalence, or cognitive penetrability when they are not situated (this makes them incremental). Indeed, users (even domain experts) usually have constructs different from those of the expert–designers.

The tools that we are studying here are complex (in structure and function) and difficult for a nonspecialist to understand. For instance, complex tools include modern airplanes, nuclear power plants, computers, electronic documentation, and so on. The evolution of such systems leads to the concept of human–like systems. It seems that tools evolve from physical to intellectual interactions. They tend to increase the distance between humans and the physical world. “Human-like” systems are electronic extensions of the capabilities of the user. They mediate between the mechanical parts of a system and share some of the intelligent functions with the user. Automated copilots, on-line “intelligent” user manuals, and “intelligent” notebooks are examples of such tools.

Turing’s classic paper (Turing, 1950) raised an issue that lies at the heart of cognitive science: Can a machine¹ display the same kind of intelligence as does a human being? User-friendly systems such as current microcomputers allow humans to extend their cognitive capabilities. Such tools do not qualify as being intelligent in the human sense, but they permit “intelligent” interaction with users. In artificial intelligence (AI), intelligent behavior is defined in the following manner: faced with a goal, a set of action principles must be used to pass from an initial situation to a final situation which satisfies that goal. In accordance with this model, one of the goals of AI is to build “intelligent” machines which interact with the environment (Boy, 1991a). A crucial question is: How is the AI of these tools perceived and understood by humans? In this perspective, the concept of an agent is essential. Agents can be both humans and machines. Modern work activities address a variety of issues concerning the distribution of tasks and responsibilities between human and machine agents. An initial model of interaction between human and machine agents was developed for the certification of modern commercial aircraft in the MESSAGE methodology (Boy & Tessier, 1985). The tools that were analyzed do not qualify as “intelligent” in the current AI sense. However, they include most of the latest advanced automation features, such as flight management tools for navigation control. Such features take over some very important cognitive tasks that were handled by humans in the past. These tasks have been replaced by new ones that are more information intensive, leading to new kinds of expertise.

Expertise is very difficult to elicit from experts (Feigenbaum & Barr, 1982; Leplat, 1985). What happens in the knowledge acquisition community is not so much the elicitation of an expert’s preexisting knowledge, but rather the support of the expert’s processes in overtly modeling the basis for his or her own skilled performance. Such a line of argument is reported by Gaines (1992), and strongly supported by Clancey (1990). In fact, “the shift from behaviorism to cognitive science has brought many changes in our methodologies, experimental techniques, and models of human activities” (Gaines, 1992, personal communication). Technology has followed the same track. Tools are less force intensive (sensory-motoric) and more information based. Furthermore, we will take the view that cognitive processes are not in agents but in the interaction between agents (Hegel, 1929). Providing an explanation for the fact that intelligence emerges from nonintelligent components, Minsky (1985) defends the interactionist view of intelligence. The interactionist approach is very recent. It is based on the principle that knowledge is acquired and structured incrementally through interactions with other actors or autonomous agents. This approach is sociological (versus psychological). Interaction and self-organization are the key factors for the construction of general concepts. There are three main currents: automata networks (McCulloch & Pitts, 1943; Rosenblatt, 1958; von Neumann, 1966), connectionism (McClelland et al., 1986; Rumelhart et al., 1986), and distributed AI (Hewitt, 1977; Lesser & Corkill, 1983; Minsky, 1985).

The problem of evaluating “human-like” systems also faces the general problem of measurement in psychology and cognitive science. Since we have adopted the view that intelligence is in the interactions, can we find measures that identify the quality of such interactions? Cognitive behaviors (like physical things) are perceived through their properties and attributes. Human factors measurements are usually grounded on analogy and inference. (1) Certification of a new tool may be accomplished by measuring analogies with an existing similar working tool already certified and explaining the differences. This approach is commonly used in aircraft cockpit certification. It does not allow drastic changes between the reference tool and the new tool. (2) Such abstract properties or attributes as intelligence are never directly measured but must be inferred from observable behavior; for instance, measuring temperature by feeling someone’s forehead, or by reading a thermometer to infer fever. An important question is: Who defines or ratifies such measures? Who can qualify for being a certification authority?

Interaction between a human being and “human-like” systems assumes the definition of a structure and a function that allow this interaction. The structure allowing interaction is usually called the user interface, cockpit, control panel, and so on. The function takes the form of procedures, checklists, and so forth. There is always a tradeoff between structure and function. User-friendly interfaces better extend human cognitive capabilities, and thus augment the intelligence of the interactions (Engelbart, 1963). For instance, hypertext systems are considered cognitive extensions of the associative memory of the user. Although procedures are always used when safety is a key factor in routine control, the better the user interface is designed (e.g., the better it ensures the transparency of the processes being controlled), the shorter and less strict the required procedures will be.

This paper proposes an approach to certification of “human-like” systems. It is based on the paradigm that agent models are essential in such assessment processes. The cockpit certification experience is taken as an example to show that highly interactive systems are necessary to incrementally store knowledge that will become useful at evaluation time. Furthermore, the concept of an agent is shown to be very useful in evaluation of cognitive human–machine interaction. The Situation Recognition/Analytical Reasoning (SRAR) model is useful for conceptualizing various types of agent behavior and interaction. In addition, the concept of Integrated Human-Machine Intelligence (IHMI) is useful for the validation of human-“human-like” system teams. The block representation has been developed to provide an appropriate language that supports contextual analysis of IHMI systems. In the balance of the chapter, we start a discussion of possible extensions of this view to certification problems in a variety of high-tech environments.

THE COCKPIT CERTIFICATION EXPERIENCE

A method has been developed to analyze and evaluate interactions between several agents including aircraft, flight crew, and air traffic control (Boy, 1983; Boy & Tessier, 1985), based on a system called MESSAGE (French acronym for “Model for Crew and Aircraft Sub-System Equipment Management”). MESSAGE is based on heuristics given by experts. In this sense, it is a knowledge-based system.

MESSAGE is a computerized analysis tool that provides the time-line of a mission and the evolution of indices representing the loads on the various agents. MESSAGE has been used to measure workload and performance indices, and to better understand information transfer and processing in crew-cockpit environments. Inputs are processing knowledge (in the form of rules) and data from the missions to be analyzed. Outputs are crew performance and criticality (tolerance function), task demands (static functions), the perceptions of each agent versus time, and the simulated mission. The data of the mission to be analyzed include continuous parameters recorded during a flight simulation and then preprocessed, and discrete events from video and audio records. The simulated mission is a recognized (sometimes smaller) sequence of the previous input mission augmented by new messages generated by MESSAGE. The processing rules are acquired and modified incrementally, taking into account the relevance and accuracy of the simulated mission and the significance of the computed indices versus time. This knowledge acquisition and refinement process must be performed by domain experts.

MESSAGE is based on a five-level agent model (Boy, 1983): the human–vehicle interface for information acquisition, the coordination and management of emitting and receiving channels, the situation recognition, the strategic decision, and the plan execution processes. This model is an extension of the classical information processing model of Newell and Simon (1972). The MESSAGE agent model uses a supervisor which manages three types of processes called channels, that is, receiving, emitting, and cognitive. The function of the supervisor is analogous to that of the blackboard of Nii (1986). Each channel exchanges information with the supervisor. The concept of automatic and controlled processes, introduced and observed experimentally by Schneider and Shiffrin (1977a-b), was implemented at the level of the supervisor in the MESSAGE system (Tessier, 1984). This allows the generation and execution of tasks either in parallel (automatisms) or in sequence (controlled acts). The automatic processes or automatisms call upon a type of knowledge representation which we will describe as situational representation. The controlled processes may be defined by an analytical representation.
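The distinction between automatisms executed in parallel and controlled acts executed in sequence can be illustrated with a minimal scheduling sketch. It is not the MESSAGE implementation: the `Task` record, the `schedule` function, the task names, and the durations are all hypothetical, and the sketch assumes that every automatism starts at time 0 while controlled acts simply queue one after another.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Task:
    name: str
    channel: str      # "receiving", "emitting", or "cognitive"
    duration: float   # illustrative duration in seconds
    automatic: bool   # True = automatism, False = controlled act


def schedule(tasks: List[Task]) -> Dict[str, Tuple[float, float]]:
    """Return {task name: (start, end)} for a hypothetical supervisor.

    Assumption: automatisms all start at time 0 and overlap freely;
    controlled acts are executed strictly one after another.
    """
    timeline: Dict[str, Tuple[float, float]] = {}
    clock = 0.0  # end time of the last controlled act
    for task in tasks:
        if task.automatic:
            timeline[task.name] = (0.0, task.duration)
        else:
            timeline[task.name] = (clock, clock + task.duration)
            clock += task.duration
    return timeline


tasks = [
    Task("scan_instruments", "receiving", 2.0, automatic=True),
    Task("decide_diversion", "cognitive", 3.0, automatic=False),
    Task("radio_callout", "emitting", 1.0, automatic=False),
]
timeline = schedule(tasks)
```

In this sketch the instrument scan overlaps the decision, whereas the radio callout cannot begin until the decision has ended.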

A “human-like” agent should be capable of anticipating the reactions of the environment as well as the consequences of its own actions. This anticipation will operate on the messages coming from either the environment (external inputs or observations) or the agent itself (internal inputs or inferred messages). A cockpit, for example, is a network of agents that relate to each other. The pilot interacts with a well-defined network of agents including his/her crew partners, air traffic control, and other airplanes. He/she knows complex relationships between these agents and constantly interprets from their behavior the overall behavior of the agent network. As a metaphor, the pilot is in a similar position to a manager dealing with his/her collaborators to carry out a job requiring a team effort. Usually, each crew member tries to get a vivid representation of the social field he/she is in. This notion of agent has been extended to various degrees of granularity that better describe the current situation. In this view, an agency (Minsky, 1986) is a vivid network of agents that can be human or machine channels (in the MESSAGE terminology). Through an analysis of audio and video recordings of the behavior of real airline flight crews performing in a high fidelity flight simulator, Hutchins and Klausen (1990) demonstrate that the expertise in this system resides not only in the knowledge and skills of the human actors, but in the organization of the tools in the work environment as well.

Because context changes all the time, one important issue brought up by the MESSAGE project is that the MESSAGE system is both a certification aid and a knowledge acquisition system. MESSAGE knowledge is case based and very context dependent. A new aircraft cannot be fully certified by using certification knowledge used for certification of other aircraft. New certification knowledge is being constructed during the certification process. MESSAGE brought up the first requirements for a knowledge representation (KR) that allows the capture of such contextual knowledge. In the next section, we develop the model that gives the foundations for such a KR.

THE SITUATION RECOGNITION ANALYTICAL REASONING MODEL

The term situation is used here to characterize the state of the environment with respect to an agent. In general, it refers to the state of the given world. A situation is described by a set of components which we will call world facts. For a generic world fact, at a given instant, it is possible to distinguish three types of situations (Boy, 1983). The real situation characterizes the true state of the world. It is often only partly available to the agent. The real situation is an attribute of the environment. It can be seen as the point of view of an external “objective” observer. The situation perceived by the agent characterizes an image of the world. It is the part of the world accessible to the agent. It is characterized by components which are incomplete, uncertain, and imprecise. A situation pattern is an element of the agent’s long-term memory. It is composed of conditions, each of which applies to a finite set of world facts. It is the result of a long period of learning. Situation patterns can be seen as situated knowledge chunks. The situation expected by the agent at a given time, t, is the set of situation patterns included in the short-term memory of the agent. It is often called the focus of attention. At each time interval, the vigilance of the agent is characterized by the number and pertinence of the situation patterns present in short-term memory.
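As a rough illustration of these definitions, a situation pattern can be sketched as a set of conditions over world facts and matched against a perceived situation that holds only part of the real one. The class names, the fact names, and the scoring rule (fraction of satisfied conditions) are assumptions made for this example; the chapter does not prescribe a matching formula.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

WorldFacts = Dict[str, float]  # fact name -> current value


@dataclass
class Condition:
    fact: str                       # world fact the condition applies to
    test: Callable[[float], bool]   # predicate on the value of that fact


@dataclass
class SituationPattern:
    name: str
    conditions: List[Condition]

    def match(self, perceived: WorldFacts) -> float:
        """Fraction of conditions satisfied by the perceived situation.

        Facts that the agent does not perceive count as unsatisfied,
        so an incomplete perception yields a partial match.
        """
        if not self.conditions:
            return 0.0
        hits = sum(
            1 for c in self.conditions
            if c.fact in perceived and c.test(perceived[c.fact])
        )
        return hits / len(self.conditions)


# Real situation (external observer) versus what the agent perceives.
real = {"P1": 45.0, "V3_open": 1.0}
perceived = {"P1": 45.0}  # the valve state is not accessible to the agent

pattern = SituationPattern(
    "low pressure with V3 open",
    [Condition("P1", lambda p: p < 50.0),
     Condition("V3_open", lambda v: v == 1.0)],
)
full_score = pattern.match(real)
partial_score = pattern.match(perceived)
```

Here the same pattern matches the real situation totally and the perceived situation only partially, because one of its world facts is missing from the agent's image of the world.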

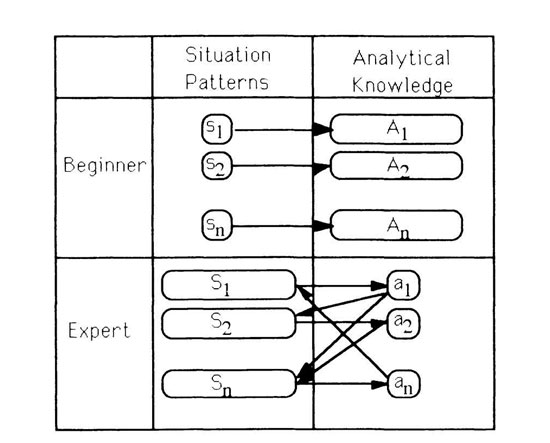

The Situation recognition / analytical reasoning (SRAR) model is an evolutionary model of the user (Figure 1). A chunk of knowledge is fired by the matching of a situation pattern with a perceived critical situation. This matching is either total or partial. After situation recognition, analytical reasoning is generally implemented. Let us describe SRAR in the fault identification domain. The Orbital Refueling System (ORS) is used in the space shuttle to refuel satellites and is controlled by the astronauts. A “human-like” system, called Human-ORS-Expert System (HORSES), was developed to perform interactive fault diagnosis (Boy, 1986). HORSES was tested in a series of experiments with users working in a simulated ORS environment. A malfunction generator runs concurrently and generates fault scenarios for the ORS at appropriate times in the simulations. The experiments produced data showing how users adapted to the task, and how their performance varied with different parameters. From these data, we developed the original SRAR model.

When a situation is recognized, it generally suggests how to solve an associated problem. We assume, and have experimentally confirmed in specific tasks such as fault identification (Boy, 1986, 1987a, 1987b), telerobotics (Boy & Mathé, 1989), and information retrieval (Boy, 1991b), that people use chunks of knowledge. It seems reasonable to envisage that situation patterns (i.e., situational knowledge) are compiled because they are the result of training. We have shown (Boy, 1987a, 1987b), in a particular case of fault diagnosis on a physical system, that the situational knowledge of an expert results mainly from the compilation, over time, of the analytical knowledge he relied on as a beginner. This situational knowledge is the essence of expertise. It corresponds to skill-based behavior in Rasmussen’s terminology (1986). “Decompilation,” that is, explanation of the intrinsic basic knowledge in each situation pattern, is a very difficult task, and is sometimes impossible. Such knowledge can be elicited only by an incremental observation process. Analytical knowledge can be decomposed into two types: procedures or know-how, and theoretical knowledge.

The chunks of knowledge are very different between beginners and experts. The situation patterns of beginners are simple, precise, and static, for example, “The pressure P1 is less than 50 psia.” Subsequent analytical reasoning is generally major and time-consuming. When a beginner uses an operation manual to make a diagnosis, his behavior is based on the precompiled engineering logic he has previously learned. In contrast, when he tries to solve the problem directly, the approach is very declarative and uses the first principles of the domain. Beginner subjects were observed to develop, with practice, a personal procedural logic (operator logic), either from the precompiled engineering logic or from a direct problem-solving approach. This process is called knowledge compilation. Conversely, the situation patterns of experts are sophisticated, fuzzy and dynamic, for example, “During fuel transfer, one of the fuel pressures is close to the isothermal limit and this pressure is decreasing.”

FIGURE 1. The situation recognition / analytical reasoning model.

Beginners have small, static, and crisp situation patterns associated with large analytical knowledge chunks. Experts have larger, dynamic, and fuzzy situation patterns associated with small analytical knowledge chunks. The number of beginner chunks is much smaller than the number of expert chunks.

This situation pattern includes many implicit variables defined in another context, for example, “during fuel transfer” means “in launch configuration, valves V1 and V2 closed, and V3, V4, V7 open.” Also, “a fuel pressure” is a more general statement than “the pressure P1.” The statement “isothermal limit” includes a dynamic mathematical model, that is, at each instant, actual values of fuel pressure are compared fuzzily (“close to”) to a time-varying limit [P_isoth = f(Quantity, Time)]. Moreover, experts take this situation pattern into account only if “the pressure is decreasing,” which is another dynamic and fuzzy pattern. It is obvious that experts have transferred part of analytical reasoning into situation patterns. This part seems to be concerned with dynamic aspects.
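The contrast between the beginner and expert patterns quoted above can be made concrete with a small sketch. Everything numeric here is an assumption: the source does not give the form of the isothermal limit, so `isothermal_limit` is a purely hypothetical linear stand-in, and the triangular membership in `close_to`, the `decreasing` ramp, and their tolerances are illustrative choices. The implicit “during fuel transfer” context is left out.

```python
from typing import Sequence


def beginner_pattern(p1_psia: float) -> bool:
    """Crisp, static pattern: 'The pressure P1 is less than 50 psia.'"""
    return p1_psia < 50.0


def isothermal_limit(quantity: float, time_s: float) -> float:
    """HYPOTHETICAL placeholder for the time-varying limit f(Quantity, Time)."""
    return 100.0 + 0.5 * quantity - 0.01 * time_s


def close_to(value: float, target: float, tolerance: float = 10.0) -> float:
    """Triangular fuzzy membership: 1 at the target, 0 beyond the tolerance."""
    return max(0.0, 1.0 - abs(value - target) / tolerance)


def decreasing(previous: float, current: float, scale: float = 5.0) -> float:
    """Fuzzy degree to which a pressure dropped between two samples."""
    return min(1.0, max(0.0, (previous - current) / scale))


def expert_pattern(prev: Sequence[float], now: Sequence[float],
                   quantity: float, time_s: float) -> float:
    """Degree to which ONE of the fuel pressures is close to the limit
    AND decreasing (fuzzy AND = min, fuzzy OR over pressures = max)."""
    limit = isothermal_limit(quantity, time_s)
    return max(
        min(close_to(p_now, limit), decreasing(p_prev, p_now))
        for p_prev, p_now in zip(prev, now)
    )


# Two fuel pressures sampled at consecutive instants (illustrative values).
degree = expert_pattern(prev=[120.0, 105.0], now=[120.0, 100.0],
                        quantity=0.0, time_s=0.0)
```

The beginner pattern returns a yes/no answer about one named sensor, while the expert pattern returns a degree of match over any fuel pressure and embeds both a model (the limit) and a trend (the decrease).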

SRAR is interesting in the context of certification because it provides a framework for analyzing interface situation patterns from incremental situational testing and analytical knowledge (usually in the form of operational procedures).

INTEGRATED HUMAN-MACHINE INTELLIGENCE

This research effort has led to the concept of integrated human-machine intelligence (IHMI). The IHMI certification process can be seen as (Shalin & Boy, 1989): (1) parsing the operator’s actions in order to understand what he/she is doing; (2) providing him/her with the appropriate level of information and proposing appropriate actions or procedures for him/her to execute. The IHMI model includes two loops: a short-term supervisory control loop and a long-term evaluation loop. A technical domain knowledge base includes descriptions of various objects relevant and necessary in the domain of expertise. The operational knowledge base includes available procedures necessary to handle various environmental situations that have already been encountered. The learning knowledge base includes necessary knowledge for upgrading operational procedures. If we assume that technical domain knowledge is a given, evaluation of an IHMI system (Boy & Gruber, 1990) is mainly concerned with operational knowledge. Such knowledge is very difficult both to construct and to validate. Its construction is necessarily incremental. A designer cannot imagine such knowledge in the first place. He/she can give guidelines for users to follow. However, the behavior of an IHMI system is discovered incrementally by operating it. An important question for the future is: Can we anticipate the behavior of such IHMI systems at design time? It seems that there is not yet enough knowledge to give general rules for this. We need to accumulate more research results and experience to get classes of behavior of IHMI systems.

Following this approach, we have started research on knowledge representation to analyze, design, and evaluate the functional part of IHMI systems. The type of knowledge that we are concerned with is composed of operational procedures. Since procedures are taken as the essential communication support between humans and “human-like” systems, we propose an appropriate knowledge representation (KR) which is tractable both for explaining human intelligence and implementing artificial intelligence. The KR currently in use was designed as a representation framework for operation manuals, procedures checklists, user guides (Boy, 1987b) and other on-line tools useful for controlling complex dynamic systems (Boy & Mathé, 1989). A body of procedures is thus represented by a network of knowledge blocks. Knowledge blocks are the basic representational structures for an alternative symbolic cognitive architecture.

The basic KR entity is a “knowledge block” (or “block” for short), which includes five characteristics: a goal, a procedure, preconditions, abnormal conditions, and a context (Boy & Caminel, 1989). Block components are its name, its hierarchical level, a list of preconditions, a list of actions, a list of goals with a list of associated blocks, and a list of abnormal conditions (ACs) with their lists of associated blocks. Depending on its level of abstraction, a procedure may be represented by a single block, a hierarchy of blocks, or a network of blocks. These features have been extensively analyzed, implemented, and tested by Mathé (1990, 1991). The block representation takes Peirce’s view that “when we speak of truth and falsity, we refer to the possibility of the proposition being refuted” (in Copleston, 1985, p. 306). When a block is refuted, that is, when it does not work in one situation, called an abnormal situation, an appropriate abnormal condition (or exception) is added to the block. In a well-known paper, “How to Make Our Ideas Clear,” Peirce asserts that “the essence of belief is the establishment of a habit, and different beliefs are distinguished by the different modes of action to which they give rise” (in Copleston, 1985, p. 306). Contextual and abnormal conditions of a block characterize positive and negative habits attached to the various actions of the block.
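The block components listed above map naturally onto a record type. The following is a minimal sketch under stated assumptions, not the representation implemented by the author or by Mathé: the field names, the use of predicates over a dictionary-valued situation, and the `applicable`, `next_block`, and `refute` methods are hypothetical, and goals are simplified to plain predicates without their associated blocks.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Situation = Dict[str, object]
Test = Callable[[Situation], bool]


@dataclass
class AbnormalCondition:
    description: str
    holds: Test
    recovery_block: Optional["Block"] = None  # block to switch to, if any


@dataclass
class Block:
    name: str
    level: int                                         # hierarchical level
    context: List[Test] = field(default_factory=list)
    preconditions: List[Test] = field(default_factory=list)
    actions: List[str] = field(default_factory=list)   # the procedure
    goals: List[Test] = field(default_factory=list)
    abnormal_conditions: List[AbnormalCondition] = field(default_factory=list)

    def applicable(self, situation: Situation) -> bool:
        """True when every context test and precondition holds."""
        return all(t(situation) for t in self.context + self.preconditions)

    def next_block(self, situation: Situation) -> Optional["Block"]:
        """Recovery block of the first abnormal condition that holds."""
        for ac in self.abnormal_conditions:
            if ac.holds(situation):
                return ac.recovery_block
        return None

    def refute(self, description: str, holds: Test,
               recovery_block: Optional["Block"] = None) -> None:
        """Record a situation in which the block failed as a new exception."""
        self.abnormal_conditions.append(
            AbnormalCondition(description, holds, recovery_block))


isolate = Block("isolate_leak", level=1, actions=["close V3"])
transfer = Block(
    "transfer_fuel", level=1,
    preconditions=[lambda s: s.get("valves_ready") is True],
    actions=["open V3", "start pump"],
)
before = transfer.next_block({"leak": True})   # no exception known yet
transfer.refute("leak detected", lambda s: s.get("leak") is True, isolate)
after = transfer.next_block({"leak": True})    # now routes to isolate
```

The `refute` call mirrors the incremental idea in the text: the block is not rewritten when it fails; it accumulates the abnormal situation as an exception that points to another block.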

CONCLUSION

One of the primary jobs of a theory is to help us look for answers to questions in the right places. Even if there is no formal theory of certification of “human–like” systems, this chapter gives some directions which come from experience in real–world domains. Humans typically “tailor” their perception and action to the social field. This tailoring occurs at all levels of interaction: people say the “right” words at the “right” moment; they remember things from past experience; they try to preserve themselves from danger; they sometimes display a cumbersome sense of humor, and so on. Is there any artificial system to date that has such capabilities? The answer is no. As shown in this chapter, an important issue is the search for intelligence in the interaction, in contrast to intelligence in the individual system. Consequently, the concept of IHMI is essential.

Situational knowledge is difficult to elicit. However, it is crucial in the certification of “human-like” systems. We have developed the block representation to capture this situational knowledge. The resulting cognitive architecture differs from other attempts (e.g., Newell et al., 1989) in the way situational knowledge is incrementally built by block context reinforcement and considering abnormal situations as a model of imperfection. Indeed, actual procedures show that the same action may have various effects depending on the situation and the expected social field, giving rise to interactions with different content, structures, and syntactic forms. For example, giving a tutorial will give rise to a different interaction than providing an operational checklist. A procedure for an expert will be different than one for a layman. This concept of context is an essential feature to analyze, design and evaluate “human-like” systems. Indeed, when a machine is capable of “human-like” interaction, it is necessary to ensure that its language is appropriate for its intended social field. It is thus necessary for computational systems to be capable of appropriately tailoring their interactions. This is especially important as computer systems often need to communicate with an increasingly varied user community, across an ever more extensive range of situations. This emerging feature will complicate the certification process in the traditional sense. In particular, finding measures that provide good assessments of the resulting IHMI is a context-dependent knowledge acquisition process. Thus, it makes sense to anticipate evaluation criteria (norms) at the outset of design with the reservation that these criteria will be revised during the design cycle as more situational knowledge is acquired.

REFERENCES

Boy, G.A. (1983). The MESSAGE system: A first step towards a computer–assisted analysis of human–machine interactions. Le Travail Humain, 46, 58–65. (in French).

Boy, G.A. (1986, Jan.). An expert system for fault diagnosis in orbital refueling operations. Paper presented at the AIAA 24th Aerospace Science Meeting, Reno, Nevada.

Boy, G.A. (1987a, May). Analytical representation and situational representation. Paper presented at the Seminar on Human Errors and Automation, ENAC, Toulouse. (in French).

Boy, G.A. (1987b). Operator assistant systems. International Journal of Man–Machine Studies, 27, 541–554.

Boy, G.A. (1991a). Intelligent assistant systems. London: Academic Press.

Boy, G.A. (1991b, December). Indexing hypertext documents in context. Paper presented at the Hypertext’91 Conference, San Antonio, Texas.

Boy, G.A., & Caminel, T. (1989, July). Situation pattern acquisition improves the control of complex dynamic systems. Paper presented at the Third European Workshop on Knowledge Acquisition for Knowledge–Based Systems, Paris, France.

Boy, G.A., & Gruber, T. (1990, March). Intelligent assistant systems: support for integrated human–machine systems. Paper presented at the AAAI Spring Symposium on Knowledge–Based Human–Computer Communication, Stanford, CA.

Boy, G.A., & Mathé, N. (1989, August). Operator assistant systems: an experimental approach using a telerobotics application. Paper presented at the IJCAI Workshop on Integrated Human–Machine Intelligence in Aerospace Systems, Detroit, Michigan, USA.

Boy, G.A. & Tessier, C. (1985, September). Cockpit Analysis and Assessment by the MESSAGE Methodology. Paper presented at the Second IFAC/IFIP/IFORS/IEA Conference on Analysis, Design and Evaluation of Man–Machine Systems, Varese, Italy.

Clancey, W.J. (1990). The frame of reference problem in the design of intelligent machines. In K. Van Lehn & A. Newell (Eds.), Architectures for Intelligence: The 22nd Carnegie Symposium of Cognition (pp. 103–107). Hillsdale, NJ: Lawrence Erlbaum Associates.

Copleston, F. (1985). A history of philosophy. Part IV. The Pragmatist Movement, Chapter XIV, The Philosophy of C.S. Peirce (pp. 304–329). New York: Doubleday.

Engelbart, D.C. (1963). A conceptual framework for the augmentation of man’s intellect. Unpublished manuscript.

Feigenbaum, E.A., & Barr, A. (1982). The handbook of artificial intelligence, Vols 1–2. Los Altos, CA: Kaufmann.

Ford, K.M., & Agnew, N.M. (1992, March). Expertise: Socially situated, personally constructed, and “reality” relevant. Paper presented at the AAAI 1992 Spring Symposium on the Cognitive Aspects of Knowledge Acquisition, Stanford University, California.

Gaines, B.R. (1992, March). The species as a cognitive agent. Paper presented at the AAAI 1992 Spring Symposium on the Cognitive Aspects of Knowledge Acquisition, Stanford University, California.

Hegel, G.W.F. (1929). Science of logic. London: George Allen & Unwin.

Hewitt, C. (1977). Viewing control structures as patterns of message passing. Artificial Intelligence, 8, 115–121.

Hutchins, E., & Klausen, T. (1990). Distributed cognition in an airline cockpit. San Diego, CA: UCSD, Department of Cognitive Science.

Leplat, J. (1985, September). The elicitation of expert knowledge. Paper presented at the NATO Workshop on Intelligent Decision Support in Process Environments, Rome, Italy.

Lesser, V., & Corkill, D. (1983). The distributed vehicle monitoring testbed: a tool for investigating distributed solving networks. AI Magazine, 4, 25–29.

Mathé, N. (1990). Assistance intelligente au contrôle de processus: Application à la télémanipulation spatiale [Intelligent support for process control: Application to space telemanipulation]. Unpublished doctoral dissertation, ENSAE, Toulouse, France.

Mathé, N. (1991). Procedures management and maintenance (Second ESA Grant Progress Report). NASA Ames Research Center, California.

McClelland, J.L., Rumelhart, D.E., & The PDP Research Group (1986). Parallel Distributed Processing. Exploration in the Microstructure of Cognition. Volume 2 : Psychological and Biological Models. Cambridge, MA: The MIT Press.

McCulloch, W., & Pitts, W. (1943). A logical calculus of the ideas immanent in nervous activity. Bulletin of Mathematical Biophysics, 5, 53–67.

Minsky, M. (1986). The society of mind. New York: Simon & Schuster.

Newell, A., & Simon, H.A. (1972). Human problem solving. Englewood Cliffs, NJ: Prentice Hall.

Newell, A., Rosenbloom, P.S., & Laird, J.E. (1989). Symbolic architectures of cognition. In M.I. Posner (Ed.), Foundations of Cognitive Science. Cambridge, MA: The MIT Press.

Nielsen, J. (1993). Usability engineering. London: Academic Press.

Nii, P. (1986). Blackboard systems. AI Magazine, 7, 38–53.

Pylyshyn, Z.W. (1989). Computing in Cognitive Science. In M.I. Posner (Ed.), Foundations of Cognitive Science (pp. 200–230). Cambridge, MA: The MIT Press.

Rasmussen, J. (1986). Information processing and human–machine interaction: An approach to cognitive engineering. Amsterdam: North Holland.

Rosenblatt, F. (1958). The perceptron: A probabilistic model for information storage and organization in the brain. Psychological Review, 65, 101–105.

Rumelhart, D.E., McClelland, J.L., & The PDP Research Group (1986). Parallel distributed processing. Exploration in the microstructure of cognition. Volume 1 : Foundations. Cambridge, MA: The MIT Press.

Schneider, W., & Shiffrin, R.M. (1977a). Controlled and automatic human information processing: I. Detection, search, and attention. Psychological Review, 84, 1–66.

Schneider, W., & Shiffrin, R.M. (1977b). Controlled and automatic human information processing: II. Perceptual learning, automatic attending, and a general theory. Psychological Review, 84, 127–190.

Shalin, V.L. & Boy, G.A. (1989, August). Integrated human-machine intelligence in aerospace systems. Paper presented at the IJCAI Workshop, Detroit, MI.

Tessier, C. (1984). MESSAGE: A human factors tool for flight management analysis. Unpublished doctoral dissertation, ENSAE, Toulouse, France (in French).

Turing, A.M. (1950). Computing machinery and intelligence. Mind, 59, 433–460.

von Neumann, J. (1966). The theory of self–reproducing automata. Urbana, IL: University of Illinois Press.

ACKNOWLEDGMENTS

Claude Tessier, Nathalie Mathé, and I developed some of these ideas together when I worked in the Automation Department of ONERA/CERT. I also would like to acknowledge very rich interactions I had with my colleagues at the NASA Ames Research Center both in the Human Factors Research Division and the Information Science Division.

¹ We will consider machines as a subset of tools.