Trust and Human Intervention in Automated Systems

University of Illinois

University of Illinois

Battle Human Resources Center

University of Toronto

As automation becomes increasingly common in industrial systems, the role of the operator becomes increasingly that of a supervisor. Nonetheless, operators are required to intervene in the control of automated systems, either to cope with emergencies or to improve the productivity of discrete manufacturing processes. Although there are many claims in the literature that the combination of human and computer is more effective than either alone, there is little quantitative modeling of what makes humans intervene, and hence little support for a scientific approach to the design of the role of operators in automated or hybrid systems. In this paper we describe a series of experiments which show that intervention is governed to a large extent by the operators’ trust in the efficacy of the automated systems, and their self confidence in their abilities as manual controllers. A quantitative model for the operator in continuous process control is described, and some preliminary data are introduced in the field of discrete manufacturing.

INTRODUCTION

Ever since the introduction of the idea of supervisory control by Sheridan (1976, 1987) there has been speculation about the role that trust plays in the relation of humans to automated systems. Despite this, very little has been done to develop quantitative theories of human-machine relations which could help a designer to allocate functions between human and machines in modern automated systems. It is important to note that the notion of “Fitts’ Lists” is no longer adequate, if indeed it ever was. It makes no sense to draw up lists describing which activities are best performed by humans and which best performed by machines. Today almost any function can be automated if one is prepared to pay the price. One should not approach function allocation as a once-and-for-all activity which is performed when the system is designed and implemented. Rather, one should see it as a dynamic process in which, from moment to moment, the human decides whether to intervene or to leave the system running under automation. Function allocation should be seen as part of adaptive behavior in the face of system and environmental dynamics (Rasmussen, 1993, personal communication).

Although one can find examples of design engineers who have expressed their intention to remove humans completely from automated systems and develop “lights out” factories, there is now a growing realization that it is important to include the abilities of humans as part of industrial processes (see Sanderson, 1989, for a review in the context of discrete manufacturing). The problem is that complex systems, whether for continuous process control or discrete manufacturing, are generally optimized for automatic control. Hence one does not want humans to intervene unless it is necessary. On the other hand, one certainly does want them to intervene if the plant develops faults, or if there is a window of opportunity which long term optimization misses but which humans, with their excellent pattern perception, can notice.

What then is it that makes operators decide to intervene manually, and then to return control to automatic controllers? What kind of model can account for such nonlinear control by operators? We believe that one way to approach this problem is through the concept of the psychological relationships between humans and their machines, in particular through the concept of trust between human and machine. Zuboff (1988) has drawn attention to the importance of trust in the successful introduction of automation in various settings, and Sheridan (e.g., 1987) has frequently mentioned its importance in supervisory control.

In this paper we will describe the current status of trust and self confidence as intervening variables in human operator decisions to intervene in the control of automated systems. We begin by reviewing the concept of trust as applied to the relation between humans and machines, and then describe some experiments in which operators had to decide when to intervene to compensate for faults in the automated controllers of a simulated process control plant. We show that a quantitative time series model can be developed to predict such intervention, and that such a model must include the operators’ trust of the machine and their self-confidence in their ability to control it manually. Finally we briefly describe recent work which shows that the results apply also to discrete manufacturing, suggesting that the model of the roles of trust and self-confidence can be generalized to many complex human-machine systems.

THE PSYCHOLOGY OF TRUST BETWEEN HUMANS AND MACHINES

A search through the literature of engineering psychology by Muir (1987, 1989) revealed almost no research on trust between humans and machines. She was able to show that models which had been proposed for the development of trust between humans could be applied to humans’ attitudes to machines (Muir, 1987, 1989, and in press). In particular she found that the suggestions of Rempel, Holmes, & Zanna (1985) and of Barber (1983) can provide a framework to describe the development of trust between humans and machines. She suggested that Barber’s dimensions of Persistence of Natural Laws, Technical Competence, and Fiduciary Responsibility, which Barber claimed underlie why one person trusts another, could be identified respectively with the reliability of machines, the predictability of machines’ behavior, and with the degree to which the behavior of machines is in accordance with their designer’s stated intentions for their properties. She likewise suggested that the dimensions of trust suggested by Rempel et al. (1985), namely predictability, dependability of disposition, and faith in the other’s motives, could be interpreted readily in the framework of machine performance. She suggested that inappropriate levels of trust in machines may lead to inappropriate strategies of control and function allocation. The development of trust and faith by an operator in a machine revealed the importance of predictability, reliability and extrapolation to faith in future performance of the Other which Rempel et al. applied to humans. In particular she found a very strong relation between an operator’s judgment of the “Competence” of a machine, defined as the extent to which it does its job properly, and the amount of trust placed in the machine.

We believe that there is good reason to think that the social psychology of trust can be applied to relations between humans and machines in general, mutatis mutandis, and that this is an area which deserves more research. To support this claim we will summarize work by Lee (1992) and Lee & Moray (1994), who have developed her ideas further.

Experiments On Trust And Intervention In Continuous Process Control

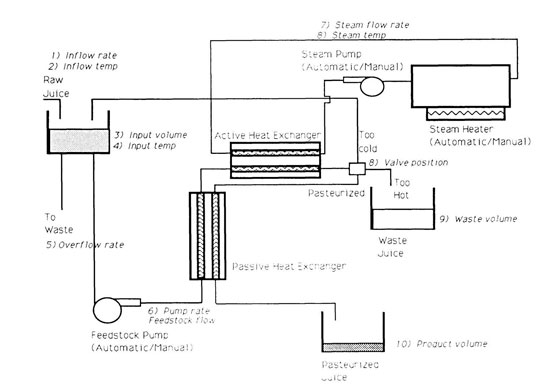

Muir’s work suggested that one should be able to understand the relation of humans and automated machines by asking for ratings of trust in the machines with which they work. In our laboratory, first at Toronto and then in Illinois, Muir, Lee, and Hiskes have pursued these ideas. We have used a combination of objective measures of system state and operator behavior together with subjective ratings by operators of their attitude towards the components of the plant over which they are exercising supervisory control. In addition we have measured their subjective ratings of self confidence in their manual control ability, the impact of faulty plant components, and productivity. The main testbed for the research has been a simulated pasteurizing plant (Figure 1).

FIGURE 1. The simulated pasteurizer and the state and control variables of each subsystem. (From Lee, 1992)

The task of the operators is to maximize production of pasteurized juice. A slightly variable input arrives through the pipe in the top left corner of the screen, and is held in the vat below. From here it is pumped by the feedstock pump through a passive and then an active heat exchanger. In the active heat exchanger it is heated by superheated steam.

The feedstock pump, the steam pump and the heater can be either manually or automatically controlled. Operators can change from manual to automatic control and back by typing appropriate commands on the keyboard, and when in manual mode can type in the required settings for the pumps or heater. The heat transfer equations are realistic, and changes in pump rates take several seconds to reach their target values. Heat is derived from a boiler (“heater”) by means of a steam pump. These three subsystems are the controllers, and can be run either automatically or under manual control. If they are adjusted appropriately the temperature of the feedstock leaving the active heat exchanger is between 75 and 85 degrees Celsius, and the pasteurized juice is passed through the pipe leading back to the passive heat exchanger where it gives up its energy to preheat the incoming feedstock, and is then collected in the output vat. If the temperature rises above 85 degrees Celsius the juice is spoiled and is passed to the waste vat. If it is below 75 degrees Celsius it must be reheated and it is recirculated to the input vat where it alters the temperature of the feedstock.
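The routing rule described above can be written down as a short sketch. The function name and the destination labels are hypothetical; only the 75 and 85 degree thresholds come from the description of the plant, and the treatment of the exact boundary values as acceptable is an assumption.

```python
def route_juice(outlet_temp_c: float) -> str:
    """Return where juice goes after leaving the active heat exchanger.

    Thresholds follow the plant description; the labels and the choice
    to treat exactly 75 and 85 degrees as acceptable are assumptions.
    """
    if outlet_temp_c > 85.0:
        return "waste"        # overheated juice is spoiled
    if outlet_temp_c < 75.0:
        return "recirculate"  # underheated juice returns to the input vat
    return "output"           # pasteurized juice goes to the output vat


if __name__ == "__main__":
    for temp in (70.0, 80.0, 90.0):
        print(temp, route_juice(temp))
```

Because recirculated juice alters the temperature of the feedstock in the input vat, an error in one direction feeds back into later control decisions, which is part of what makes manual control of the plant demanding.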

Muir trained her operators for many hours on a normal plant until they were very skilled at controlling it. She then exposed them to a variety of faults in the plant. Faults affected both trust and behavior. There was a strong tendency for operators to change to the manual mode of control when the plant became unreliable, a strong tendency for trust to decline progressively as the plant became more unreliable, and a strong tendency to monitor more frequently those subsystems which were identified by the operators as being the source of the unreliability. Reliable subsystems tended to be ignored, which may be a case of rational cognitive tunnel vision (Moray, 1981). Her work provides a strong basis for empirical work on trust between humans and machines.

The same pasteurizer, slightly modified and with improved controllers, was next used by Huey (1989) to study operator workload, but this work will not be reviewed in this paper. Finally Lee performed a series of experiments with an improved automated system, starting from the lead given by Muir, with the intention of developing a quantitative model of supervisory control (Lee, 1992; Lee & Moray, 1994).

Whereas Muir trained her operators to a high level of skill, Lee was interested in the acquisition of process control skills, in the effects of transient faults, and in developing a model which would predict the proportion of time spent in manual versus automatic control. His experiments consisted of giving each operator some 60 relatively short trials (each approximately 6 minutes long) and asking for a rating of trust after each. The first ten trials alternated between fully manual and fully automatic control, so that operators were certain to understand the dynamics of each. They then continued to operate the plant until on Trial 26 a transient fault occurred. This fault, as in Muir’s work, consisted of the feedstock pump suffering random disturbances so that when either the automatic controllers or the manual controllers called for a particular setting, the actual obtained speed might be randomly too high or too low. In the next trial the fault disappeared, and on Trial 40 it reappeared and remained for the rest of the experiment. In later experiments Lee used an experimental design in which faults occurred either only in manual mode or only in automatic mode. In all cases, operators were asked to rate their trust in the quality of the plant at the end of each run, and in later experiments also to rate their self confidence in their ability to run the plant in manual control mode.
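As an illustration of the first experiment's fault schedule and of the pump disturbance, consider the following minimal sketch. The trial numbers are taken from the description above. The function names, the uniform disturbance, and the `max_error` size are assumptions made for illustration; the text does not specify the distribution or magnitude of the disturbance.

```python
import random


def fault_active(trial: int) -> bool:
    """Transient fault on Trial 26, chronic fault from Trial 40 onward."""
    return trial == 26 or trial >= 40


def actual_pump_rate(commanded: float, trial: int, rng: random.Random,
                     max_error: float = 0.3) -> float:
    """Return the pump rate actually obtained for a commanded setting.

    On faulty trials the obtained rate is randomly too high or too low.
    The uniform disturbance and max_error are hypothetical choices.
    """
    if not fault_active(trial):
        return commanded
    return commanded * (1.0 + rng.uniform(-max_error, max_error))


if __name__ == "__main__":
    rng = random.Random(0)
    for trial in (25, 26, 27, 40, 60):
        print(trial, fault_active(trial), actual_pump_rate(50.0, trial, rng))
```

Note that the disturbance applies to the commanded setting regardless of whether the command came from the automatic controller or from the operator, as in the first experiment.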

Several striking results appeared which have been reported elsewhere (Lee, 1992; Lee & Moray, 1994). Even when the fault was chronically present, performance showed only a small decline of about 10% below the level it had attained prior to the occurrence of the fault. Operators adopted a wide variety of strategies to cope with the faults, and managed to maintain productivity at the cost of a very great increase in workload, which would probably be intolerable over a sustained period. Trust also declined (as would be expected from Muir’s earlier work) and then recovered slowly and to a much lesser degree than did performance, still being some 50% lower on the final trial than just before the chronic fault appeared. Furthermore, the fall and rise in trust did not appear to be instantaneous, but took several trials to recover after the transient fault and to reach its minimum after the chronic fault began. Lee (1992) identified the causal factors in trust using a multiple regression model, and found that trust varied as a function of the occurrence of a fault and the system performance level measured as % productivity. He then went on to develop a time series ARMAV model for the dynamics of trust, and found that trust could be predicted by the equation shown in Figure 2, which describes not just the causal factors but the dynamics of trust over trials.

FIGURE 2. A time series model for the dynamics of trust, and the related time series transfer function.

Subscripts t, t-1, refer to the current and immediately prior trials. Φ’s are backshift operators in the ARMAV model. A’s are weighting coefficients. a is a random noise element.
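To make the idea of trust dynamics concrete, the sketch below simulates a simplified first-order autoregressive form in which current trust depends on trust on the previous trial, on performance, and on the presence of a fault. This is not the fitted model of Figure 2: the functional form is a simplification, the noise term is omitted, and all coefficient values (`phi`, `a_perf`, `a_fault`, `trust0`) are invented for illustration.

```python
def simulate_trust(performance, fault, phi=0.6, a_perf=0.04,
                   a_fault=-1.5, trust0=7.0):
    """Simulate trust over trials with a first-order autoregressive sketch.

    trust[t] = phi * trust[t-1] + a_perf * performance[t] + a_fault * fault[t]

    All coefficients are hypothetical; the random noise term is left out.
    """
    trust = []
    previous = trust0
    for perf, is_fault in zip(performance, fault):
        current = phi * previous + a_perf * perf + a_fault * is_fault
        trust.append(current)
        previous = current
    return trust


if __name__ == "__main__":
    performance = [70.0] * 10          # constant % productivity
    fault = [0, 0, 1, 0, 0, 0, 0, 0, 0, 0]  # one transient fault
    for t, value in enumerate(simulate_trust(performance, fault), start=1):
        print(t, round(value, 2))
```

Because of the autoregressive term, a single faulty trial depresses trust for several subsequent trials, and a chronic fault drives trust toward a new lower level gradually rather than at once. This matches the qualitative pattern reported above, although the numbers themselves carry no empirical weight.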

The model accounted for up to 80% of the variance in the ratings of trust, a highly satisfactory result. A particularly interesting finding was that unlike Muir’s operators, Lee’s did not tend to change to manual mode when the automatic system became unreliable. This was at first puzzling because of the very strong effect which Muir obtained and the very stable level of training which her operators had reached. Lee found on close inspection that the failure to replicate was only apparent. Many of his operators were already using manual control at the moment when the fault occurred, and hence it was impossible to detect a switch into manual mode. His later experiments, in which faults initially occurred either in manual mode or in automatic mode, but not in both, revealed that what was actually happening was that operators tended to shift away from the mode in which they were operating at the time that the fault was noticed. As a result Lee actually found in his first experiment that there was a tendency to switch into automatic mode when the plant became unreliable, but this was because, unlike Muir’s operators, most were in manual control mode at the time the fault occurred.

These results suggested that there were more subjective intervening variables than just trust. In his later experiments Lee obtained ratings of self confidence in manual operations as well as trust in the automatic systems. He again was able to identify the variables which accounted for automatic control, and found that, as we expected, it was the difference between trust and self confidence that was the driving force in deciding which mode to use.

He again developed ARMAV models, one of which is shown in Figure 3. In his experiments the operators were able to choose to control any of the three control subsystems in either automatic or manual mode, whereas in Muir’s experiment only the feedstock pump could be manually controlled. Figure 3 shows the transfer function for the steam heater and steam pump.

FIGURE 3. The transfer function and equations for allocation of function.

$$\%\text{automatic}(t) = F_1\,\%\text{automatic}(t-1) + F_2\,\%\text{automatic}(t-2) + A_1\,\bigl(T(t) - SC(t)\bigr) + A_3\,(\text{Individual Bias}) + a(t)$$

where %automatic(t) is the percentage of time spent in automatic mode on trial t; T(t) is the rating of trust on trial t; SC(t) is the rating of self confidence on trial t; Individual Bias is a factor reflecting the tendency of some operators inherently to prefer using one mode of control rather than the other; and a(t) is a random noise element.
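The allocation equation can be evaluated directly, as in the following sketch. The structure follows the equation of Figure 3, but the coefficient values are placeholders rather than fitted estimates, and the clamping of the result to the range 0 to 100 is an added assumption so that the output remains a valid percentage.

```python
def percent_automatic(prev1: float, prev2: float, trust: float,
                      self_conf: float, bias: float,
                      f1: float = 0.5, f2: float = 0.2,
                      a1: float = 5.0, a3: float = 1.0,
                      noise: float = 0.0) -> float:
    """Predict the percentage of time in automatic mode on the current trial.

    prev1, prev2: % automatic on the two preceding trials.
    trust, self_conf: ratings on the current trial.
    Coefficients f1, f2, a1, a3 are hypothetical, not fitted values.
    """
    raw = (f1 * prev1 + f2 * prev2
           + a1 * (trust - self_conf)
           + a3 * bias + noise)
    # Clamping is an assumption added here; it is not part of the equation.
    return min(100.0, max(0.0, raw))


if __name__ == "__main__":
    # Trust exceeds self confidence, so the prediction leans toward automation.
    print(percent_automatic(50.0, 50.0, trust=6.0, self_conf=4.0, bias=5.0))
    # Self confidence exceeds trust, so the prediction leans toward manual.
    print(percent_automatic(50.0, 50.0, trust=4.0, self_conf=6.0, bias=5.0))
```

The two lagged terms give the model inertia: an operator who has been using automation tends to continue doing so unless the difference between trust and self confidence shifts.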

The ARMAV models again accounted for over 70% and sometimes as much as 80% of the variance in the use of automatic control. The use of automatic control can be plotted against the T-SC term and results in a very beautiful “threshold curve” reminiscent of classical psychophysical functions and fitted closely by a logistic function (see Figure 4).
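The shape of such a threshold curve can be sketched with a standard logistic function of the difference between trust and self confidence. The `slope` and `bias` parameters below are hypothetical and are not the fitted values underlying Figure 4.

```python
import math


def automation_share(trust_minus_sc: float, slope: float = 1.0,
                     bias: float = 0.0) -> float:
    """Percentage use of automation as a logistic function of T - SC.

    slope sets how sharp the threshold is; bias shifts the crossover
    point. Both are placeholder values chosen for illustration.
    """
    return 100.0 / (1.0 + math.exp(-slope * (trust_minus_sc - bias)))


if __name__ == "__main__":
    for diff in (-5, -2, 0, 2, 5):
        print(diff, round(automation_share(diff), 1))
```

With a zero bias the curve passes through 50% where trust equals self confidence; a nonzero bias would shift the crossover for an operator who inherently prefers one mode.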

As an example of the phenomena which Lee was able to model, Figure 5 shows the history of a single operator over a series of trials in one of his later experiments (Lee, 1992; Lee & Moray, 1992).

FIGURE 4. The use of automation as a function of the difference between Trust and Self Confidence

FIGURE 5. Control mode and system unreliability

Until Trial 16 the system is reliable. Trust is low and self confidence is high, and the operator is using manual control. After Trial 16 faults appear when the operator is in manual control. Self confidence declines, trust rises, and on Trial 21 the operator adopts 100% automation. On Trial 26 faults appear in the automated mode, but no longer in the manual mode. Trust falls, self confidence rises, and as they cross over the operator returns progressively to manual control.

CONCLUSIONS FROM THE WORK OF MUIR AND LEE

Lee found a rich set of data concerning individual strategies of control, in the pattern of intervention over time, and in what appear to be reflections of the mental models of operators. The results on trust support the initial work of Muir, including increased monitoring of unreliable components. It is important to note that we have shown that trust is indeed a genuine causal variable. From Muir’s results it was not quite certain whether plant unreliability caused trust to change, which in turn caused a change in the control mode, or whether the unreliability of the plant caused both trust to alter and the control mode to alter. The form of the regression equations and transfer functions found by Lee makes it quite clear that the subjective variables are indeed a causal factor, and are not just an effect of unreliability. Unreliability changes productivity, trust, and self confidence, and those variables in turn change the mode of control. It is not sufficient to use merely productivity and the presence of a fault to predict operator performance. Subjective relationships to the machines must be included.

The practical importance of this work is in relation to the specification of a role for humans in the supervisory control of automated systems. First, it shows that it is possible to develop a quantitative basis for predicting when operators will intervene in the control of systems. From this it follows that we have a better starting point to discuss the extent to which systems design should be optimized only with respect to automatic control, or how much provision should be made for human intervention. The latter implies the need for displays and command languages which are optimized for human information processing. A further consequence of this work is that we must begin to consider how to include the question of social relations with machines in training. If trust and self-confidence play such an important role in determining the nature of the interaction between humans and machines, we need to design training programs which will support an appropriate calibration of the strength of these relationships and feelings. This suggests training scenarios which will make operators think in terms of whether intervention is appropriate, or whether a desire to intervene may simply be a result of a misperception of how reliable the system is.

We have found that the first experience of unreliability in a system which has been very reliable has a devastating effect on some operators. They initially assume that the error must be due to something they have done, especially if the control mode is predominantly manual. Only when errors appear repeatedly do they accept the fact that the system is at fault. These dynamics of social interaction with the machines have never been taken into account in training. Finally, the work probably has applications of more generality. It is well known that people prefer “expert systems” which explain their intent and motivation. It is also well known that many such systems are abandoned once their designer departs, because people do not trust them. It appears from our work that there may be important research methodologies which can be brought to bear to understand this loss of trust, and thus lead to improved use of such expert systems, and it is therefore important to see how far our results can be generalized to other systems.

EXTENDING THE PARADIGM: THE DOMAIN OF DISCRETE MANUFACTURING

For this reason we have recently completed the first of a series of experiments using a simulated computer integrated manufacturing system (CIM) to investigate how operators decide to intervene to reschedule or otherwise tune a discrete system which is being monitored under supervisory control (Hiskes, 1994; Moray, Lee & Hiskes, 1994).

We have found changes in trust and in manual intervention with the appearance of unreliability in the CIM system that are very similar to those seen in the pasteurization plant. The psychology of decision making in discrete systems is a field much less well plowed than that of process control where there is a long history of modeling human intervention. (For work on continuous process control see Edwards & Lees, 1974; Crossman & Cooke, 1974; Bainbridge, 1981, 1992; Moray, Lootsteen & Pajak, 1986; Moray & Rotenberg, 1989. For an introduction to work on discrete manufacturing, see Sanderson, 1989; Sanderson & Moray, 1990.) There are many aspects of discrete systems which make them a difficult environment for the human operator. The nature of causality is different from that in process control, being “final” causality rather than “material” or “efficient” causality, purposeful rather than Newtonian. The subsystems of the plant are far less tightly coupled than is the case in continuous process control. Many states of the system are not readily observable: what feature of system performance is a sign now that the production run will be successfully completed in, say, 48 hours? Activity in different subsystems is asynchronous. The way in which faulty components make themselves apparent is quite different. All in all it is a fascinating new area for cognitive ergonomics, and one with very great practical implications for productivity and industrial efficiency.

Although for technical rather than conceptual reasons we have not been able to develop time series models for the discrete manufacturing case, the regression models are very similar, and include similar effects of faults, productivity, and the difference between trust and self confidence. Perhaps the most important result of this new work is that it proves that it is possible to generalize strong quantitative models relevant to the dynamic allocation of function from continuous to discrete complex industrial systems. This opens up the possibility of a widely applicable approach to supervisory control, one which we hope can lead to the fulfillment of the promise of Sheridan’s original insights.

REFERENCES

Bainbridge, L. (1981). Mathematical equations or processing routines? In J. Rasmussen & W.B. Rouse (Eds.), Human detection and diagnosis of system failures (pp. 259–286). New York: Plenum Press.

Bainbridge, L. (1992). Mental models in cognitive skill: the case of industrial process operation. In Y. Rogers, A. Rutherford, & P. Bibby (Eds.), Models in the mind (pp. 119–143). New York: Academic Press.

Barber, B. (1983). The logic and limits of trust. New Brunswick, NJ: Rutgers University Press.

Crossman, E.R., & Cooke, F.W. (1974). Manual control of slow response systems. In E. Edwards & F. Lees (Eds.), The human operator in process control (pp. 51–56). London: Taylor & Francis.

Edwards, E. & Lees, F. (1974). The human operator in process control. London: Taylor & Francis.

Hiskes, D. (1994). Fault detection and human intervention in discrete manufacturing systems. Unpublished doctoral dissertation, University of Illinois at Urbana-Champaign.

Huey, B.M. (1989). The effect of function allocation schemes on operator performance in supervisory control systems. Unpublished doctoral dissertation, George Mason University, Washington, D.C.

Lee, J.D. (1992). Trust, self confidence, and operators’ adaptation to automation. Unpublished doctoral dissertation, University of Illinois at Urbana-Champaign.

Lee, J.D., & Moray, N. (1992). Trust and the allocation of function in human-machine systems. Ergonomics, 35, 1243–1270.

Lee, J.D., & Moray, N. (1994). Trust, self confidence and operators’ adaptation to automation. International Journal of Human-Computer Studies, 40, 153–184.

Moray, N. (1981). The role of attention in the detection of errors and the diagnosis of failures in man-machine systems. In J. Rasmussen & W.B. Rouse (Eds.), Human detection and diagnosis of system failures (pp. 185–199). New York: Plenum Press.

Moray, N., Lee, J.D., & Hiskes, D. (1994, April). Why do people intervene in the control of automated systems? Paper presented at the First Automation and Technology Conference, Washington, DC.

Moray, N., Lootsteen, P., & Pajak, J. (1986). Acquisition of process control skills. IEEE Transactions on Systems, Man and Cybernetics, SMC-16, 497–504.

Moray, N., & Rotenberg, I. (1989). Fault management in process control: eye movements and action. Ergonomics, 32, 1319–1342.

Muir, B.M. (1987). Trust between humans and machines, and the design of decision aids. International Journal of Man-Machine Studies, 27, 527–539.

Muir, B.M. (1989). Operators’ trust in and percentage of time spent using the automatic controllers in a supervisory process control task. Unpublished doctoral dissertation, University of Toronto.

Muir, B.M. (in press). Trust in automation: Part 1 - Theoretical issues in the study of trust and human intervention in automated systems. Ergonomics.

Rempel, J.K., Holmes, J.G., & Zanna, M.P. (1985). Trust in close relationships. Journal of Personality and Social Psychology, 49, 95–112.

Sanderson, P.M. (1989). The human planning and scheduling role in advanced manufacturing systems: an emerging human factors role. Human Factors, 31, 635–666.

Sanderson, P.M. (1991). Towards the model human scheduler. International Journal of Human Factors in Manufacturing, 1, 195–219.

Sanderson, P.M., & Moray, N. (1990). The human factors of scheduling behavior. In W. Karwowski & M. Rahimi (Eds.), Ergonomics of hybrid automated systems II (pp. 399–406). Amsterdam: Elsevier.

Sheridan, T.B. (1976). Towards a general model of supervisory control. In T.B. Sheridan & G. Johannsen (Eds.), Monitoring behavior and supervisory control (pp. 271–282). New York: Plenum Press.

Sheridan, T.B. (1987). Supervisory control. In G. Salvendy (Ed.), Handbook of human factors (pp. 1243–1268). New York: Wiley.

Zuboff, S. (1988). In the age of the smart machine. New York: Basic Books.

ACKNOWLEDGMENTS

This work was supported in part by grants from the Natural Sciences and Engineering Research Council of Canada, and in part by a grant from the University of Illinois Research Board and the Beckman Bequest.