Reward Versus Nonreward

In the world outside the laboratory, 100% reward and 100% punishment must be very rare, if they exist at all. In buying radios or fruit, planning careers or weekends in the country, practicing tennis strokes or studying textbooks, hardly anything succeeds or fails every time. Even perfectly correct responses earn rewards only some of the time, often after long periods of persistence with little or no reward. Experiments on the effects of consequences that are only occasionally rewarding or punishing are therefore more ecologically valid, and more relevant to life outside the laboratory, than any other kind of research on learning.

OPERANT PROCEDURE

In the Skinner box, the subject operates the lever or key in bursts interspersed with pauses, and during the pauses it engages in other kinds of behavior. Responding is usually measured either as frequency (the number of responses in a session of, say, 60, 30, or 10 minutes) or as rate (responses per hour, per minute, per second, and so on). By contrast, the procedure in a straight alley or T-maze is called discrete trials because the experimenter controls the beginning of each trial and measures the latency or amplitude of the response separately on each trial.

Some experimenters have used discrete trials procedures in a Skinner box. They mount the lever on a device that inserts it into the box and withdraws it. The apparatus measures the latency between each insertion and the first response, and after each response the lever is withdrawn until the beginning of the next trial. In a discrete trials procedure the experimenter controls the beginning of each trial, whereas in an operant procedure the subject controls the frequency and rate of a series of responses.

Partial Reward During Acquisition

Skinner first introduced operant procedures in the 1930s and soon reported the now well-known fact that, in operant procedures, rats and pigeons maintain a robust rate of responding when only a small fraction of their responses receives contingent reward. This was an impressive finding at a time when most psychologists taught that all learning depended on the reinforcing effects of response contingent rewards and punishments. Recognizing that a mixture of reward and nonreward is also much more typical of the world outside the laboratory, Skinner and his followers promoted this as a special virtue of operant conditioning. But the finding that responding goes up as contingent reward goes down actually reverses the principles of Skinner’s theory and the whole family of reinforcement theories.

Innumerable experiments have replicated the effects of partial or intermittent reward in operant conditioning. Rate of responding increases as rate of reward decreases down to some very low rate of reward. The smaller the number of responses that actually get rewarded, the greater the rate of responding. At some point the animals get so few rewards that responding begins to decrease but still stays higher than responding with 100% reward. Eventually, if there are hardly any rewards at all, extinction sets in, but vigorous rates of responding can be supported by schedules that require more than 100 responses for every bit of food reward (Catania, 1984, pp. 161–164).

The superiority of low levels of reward appears only in extremely constrained training situations, such as a Skinner box, a prison, a mental hospital, or other similarly constrained environments. Even in a Skinner box, simply offering two alternative responses instead of one dramatically alters the situation. If there are two levers or two keys and one pays off every time while the other pays off only some of the time, rats and pigeons soon respond only to the one that pays off every time. Similarly, if both levers or keys pay off only part of the time, but one pays off more often than the other, rats and pigeons learn to respond more to the one that pays off more frequently.

Now that autoshaping, freefeeding, and misbehavior are well documented, along with the ethology of operant conditioning (chap. 8), the effects of partial reward in operant conditioning are easier to understand. To begin with, we now know that food evokes lever-pressing and key-pecking in the Skinner box without any contingency at all. After a stimulus situation becomes a feeding situation through association with feeding, rats, pigeons, and many other animals persist in prefeeding behavior between successive S*s. Food actually interferes with prefeeding under these conditions because the animals have to stop prefeeding in order to eat. The less food they get, the less feeding interrupts prefeeding. Of course, if there are too few S*s, then at some point there is too little association with feeding, and this produces extinction.

Extinction After Partial Reward

Because animals stop to eat when they find food, they have more time for lever-pressing and key-pecking when the apparatus delivers less food. More lever-pressing or key-pecking under lower rates of reward could simply mean that fewer rewards interfere less than more rewards do. Consequently, experimentally sound tests of the difference between partial and 100% reward must measure differences in extinction.
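The interference argument can be made concrete with simple arithmetic. The sketch below is a hypothetical time-budget model, not a model from the text: it assumes a fixed session length, a fixed time per lever press, a fixed time to eat each pellet, and a fixed-ratio schedule that delivers one pellet for every `ratio` presses. All parameter values are illustrative assumptions.

```python
def presses_per_session(session_s: float, press_s: float, eat_s: float, ratio: int) -> float:
    """Number of presses that fit in a session when every `ratio`-th press
    delivers a pellet that takes `eat_s` seconds to eat (hypothetical model)."""
    # Eating time is spread over the `ratio` presses that earn each pellet,
    # so the average cost of one press is press_s + eat_s / ratio seconds.
    seconds_per_press = press_s + eat_s / ratio
    return session_s / seconds_per_press


SESSION_S = 30 * 60  # hypothetical 30-minute session, in seconds
PRESS_S = 1.0        # hypothetical seconds per lever press
EAT_S = 10.0         # hypothetical seconds spent eating one pellet

for ratio in (1, 5, 20, 100):
    presses = presses_per_session(SESSION_S, PRESS_S, EAT_S, ratio)
    per_minute = presses / (SESSION_S / 60)
    print(f"1 pellet per {ratio:>3} presses: {per_minute:5.1f} presses/min, "
          f"{presses / ratio:6.1f} pellets")
```

With these made-up values the computed rate climbs from about 5.5 presses per minute at one pellet per press to about 54.5 at one pellet per 100 presses, purely because less session time goes to eating. The model deliberately leaves out the loss of association with feeding that eventually produces extinction at very low rates of reward, so it cannot show that eventual decline.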

Skinner soon demonstrated that after partial reward, lever-pressing and key-pecking are more resistant to extinction than after 100% reward. If resistance to extinction measures the strength of conditioning, then we must conclude that less reward is more reinforcing than more reward. But, this is the opposite of the principle that the strength of conditioning depends on response contingent reward. Skinner and his followers seem to avoid the antireinforcement implications of this major finding by attributing it to the superiority of operant procedures over other kinds of conditioning. It is certainly true that the most dramatic demonstrations of the positive effects of partial reward have appeared in the Skinner box, but the finding that less reward is more reinforcing than more reward remains the strongest evidence against all theories based on response contingent reinforcement.

Amount of Practice

Greater resistance to extinction after partial reward in a Skinner box is confounded with amount of practice. That is, in a Skinner box, the fewer rewards the animals get, the more lever-pressing or key-pecking responses they must make per reward. Experimental subjects therefore practice the criterion response many more times during acquisition or maintenance under partial reward schedules, and by the principles of operant conditioning this additional practice alone should create greater resistance to extinction. For this reason, the operant procedure is poorly suited for comparing the effects of different rates of reward.

DISCRETE TRIALS

The chief apparatus used to study partial reward with discrete trials has been the straight alley, in which speed of running from start to goal box measures response strength. The positive effects of partial reward contradict reinforcement theory even more clearly in discrete trials experiments. Also, behavior outside the laboratory is more like the discrete trials procedure. Human beings and other animals tend to make discrete responses in nature. They seldom repeat the same response over and over again the way rats press levers and pigeons peck keys in the Skinner box.

According to reinforcement theory, 50 trials of reward strengthen responding by a positive process of reinforcement, and 50 trials of extinction weaken it by a negative process of inhibition. Partial reward intersperses 50 trials of nonreward with 50 trials of reward during acquisition. To be consistent, reinforcement theory should predict that the 50 nonrewarded trials subtract in some way from the effect of the 50 rewarded trials. If the strengthening effect of reward is greater than the weakening effect of nonreward, then partially rewarded subjects should still improve with practice, but they should improve less than subjects that get 50 trials of reward without any negative effects of nonreward.
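The arithmetic behind this prediction can be sketched with a deliberately simple linear model. It is a hypothetical illustration, not Hull’s or any other published theory: each rewarded trial is assumed to add a fixed increment `alpha` of response strength and each nonrewarded trial to subtract a fixed decrement `beta`, with `alpha > beta > 0` as the paragraph supposes.

```python
def predicted_strength(rewarded: int, nonrewarded: int, alpha: float, beta: float) -> float:
    """Toy additive account: each reward adds alpha, each nonreward subtracts beta."""
    return alpha * rewarded - beta * nonrewarded


ALPHA, BETA = 1.0, 0.4  # hypothetical values chosen so that alpha > beta > 0

partial = predicted_strength(50, 50, ALPHA, BETA)  # 50 rewarded + 50 nonrewarded trials
control = predicted_strength(50, 0, ALPHA, BETA)   # the same 50 rewarded trials only

print(f"partial = {partial:.1f}, 100% control = {control:.1f}")
```

For any choice with `alpha > beta > 0`, the partial group ends at 50 * (alpha - beta), above zero but short of the control by 50 * beta. The model only formalizes what reinforcement theory predicts; the results described in the following paragraphs contradict that ordering.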

The earliest experiments with discrete trials controlled for total number of rewards but confounded percentage of rewarded trials with total number of trials. Table 11.1 shows how a typical experiment would start with Phase I consisting of 50 trials at 100% reward for all conditions and end with Phase III consisting of an extinction test for all conditions. In Phase II, the partial reward condition receives 50 rewarded trials randomly mixed with 50 nonrewarded trials, while the Control 1 condition receives the same number of rewarded trials without any nonrewarded trials. The surprising finding (for those who believed in reinforcement based on response contingent reward) was that the additional 50 unrewarded trials in the partial condition not only failed to subtract from the effect of the 50 rewarded trials, but actually added resistance to extinction.

TABLE 11.1

Comparing Partial With 100% Reward in a Discrete Trials Procedure

Experiments like Karn and Porter (1946), discussed in chapter 5, showed that total amount of experience in an experimental situation is critical. This alone could account for the advantage of partial reward in any experiment that failed to equate total number of trials. That is, if the 100% reward group got 50 rewarded trials and the 50% reward group got 50 rewarded trials plus 50 nonrewarded trials, then the 50% reward group got twice as many trials in the apparatus as the 100% reward group. Later experiments remedied this problem by comparing groups that had the same number of trials, one with 100% reward, others with 50% reward or less. This is illustrated by Control 2 in Table 11.1, which received 50 rewarded trials plus 50 more rewarded trials in order to equate total number of trials with the partial condition. In these comparisons, partially rewarded groups virtually always show more resistance to extinction than a 100% rewarded group. This result contradicts the implications of contingency theories more directly and more clearly than the original findings did. If resistance to extinction measures strength of conditioning, then 100 rewards must be less reinforcing than 50 rewards minus 50 nonrewards.
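The bookkeeping behind this confound can be laid out explicitly. The sketch below encodes the three conditions as this section describes them (Phase I: 50 trials at 100% reward for every condition; Phase II: the treatments; Phase III: extinction, not counted here) and totals trials and rewards. The data structure and names are illustrative assumptions, not a reproduction of Table 11.1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Condition:
    name: str
    phase2_rewarded: int     # rewarded trials in Phase II
    phase2_nonrewarded: int  # nonrewarded trials in Phase II


PHASE1_REWARDED = 50  # Phase I: 50 trials at 100% reward for all conditions

CONDITIONS = [
    Condition("Partial", 50, 50),
    Condition("Control 1", 50, 0),   # equates rewards with Partial
    Condition("Control 2", 100, 0),  # equates trials with Partial
]


def summarize(c: Condition) -> dict:
    """Trial and reward totals for Phases I and II of one condition."""
    phase2_trials = c.phase2_rewarded + c.phase2_nonrewarded
    return {
        "name": c.name,
        "phase2_trials": phase2_trials,
        "phase2_percent_reward": 100 * c.phase2_rewarded / phase2_trials,
        "total_trials": PHASE1_REWARDED + phase2_trials,
        "total_rewards": PHASE1_REWARDED + c.phase2_rewarded,
    }


for c in CONDITIONS:
    s = summarize(c)
    print(f"{s['name']:<10} Phase II: {s['phase2_trials']:>3} trials at "
          f"{s['phase2_percent_reward']:.0f}% reward; "
          f"total {s['total_trials']} trials, {s['total_rewards']} rewards")
```

Control 1 matches the partial condition on rewards (100 each) but not on trials; Control 2 matches it on trials (150 each) but receives 50 more rewards. Greater resistance to extinction in the partial condition than in Control 2 is therefore the sharper contradiction of reinforcement theory.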

Even the complex twists and turns of Hull’s reinforcement theory, with its two types of inhibition (described in chap. 10), cannot cope with the positive, apparently strengthening, effects of nonrewarded trials when they are interspersed with rewarded trials during acquisition in a discrete trials procedure. This has to be a major problem for reinforcement theories, because partial reward in a discrete trials experiment resembles learning in the world outside the laboratory more than any other laboratory procedure.

Generality

A series of proposals that might preserve reinforcement theory in the face of the paradoxical effects of partial reward have appeared over the years. The next section discusses two prominent examples, the discrimination hypothesis and the frustration hypothesis. Kimble (1961, pp. 291–320) and Hall (1976, pp. 281–297) review a larger sample.

When these proposals led to experiments, they extended the generality of the phenomenon. To test a hypothesis about massed and distributed practice, Weinstock (1954, 1958) compared 100% reward with partial reward for rats running in a straight alley for 300 trials at the rate of only one trial per day. If, as some said, the partial reward extinction effect depends on massed practice (V. Sheffield, 1949), then Weinstock’s extreme distribution of practice should wipe out the difference between 100% reward and partial reward. In both experiments, Weinstock demonstrated much greater resistance to extinction after partial reward. This finding, after such an extreme degree of spacing between trials, killed off the Sheffield hypothesis along with a number of other once-popular artifactual explanations of the partial reward extinction effect. One such explanation concerned hunger. All of the animals eat their full daily ration after training each day, so all are equally hungry on the first trial of each day. Because 100% animals get food reward on every trial, however, they must be less hungry than partial animals on later trials; on any trial after the first, partial reward animals are hungrier on average. With only one trial per day, all animals get only first trials, so all are equally hungry on every trial.

Crum, Brown, and Bitterman (1951) varied delay of reward to test an aftereffects hypothesis. Instead of a mixture of rewarded and nonrewarded trials, they varied the delay of reward so that one group received immediate reward on all trials and the other received immediate reward on half of its trials and reward delayed by 30 seconds on the other half. Crum et al. became the first to demonstrate that, in a discrete trials procedure, delayed reward has the same effect as nonreward. Note that with partially delayed reward, the partial animals also get rewarded on every trial, so they begin each trial having eaten just as many rewards as the 100% animals.
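The hunger confound, and why both Weinstock’s one-trial-per-day schedule and the partial delay procedure of Crum et al. remove it, can be checked with a small expected-value calculation. The sketch assumes, hypothetically, that hunger on a trial depends only on how many rewards the animal has already eaten that day, and that in the 50% condition each trial ends with food independently with probability 0.5; actual experiments typically used fixed, balanced sequences.

```python
def expected_rewards_eaten_before(trial: int, p_food: float) -> float:
    """Expected pellets eaten earlier the same day before `trial` (1-based),
    if each earlier trial ends with food with probability p_food (assumption)."""
    return (trial - 1) * p_food


# Probability that a trial ends with food under each hypothetical schedule.
SCHEDULES = {
    "100% reward": 1.0,
    "50% nonreward": 0.5,
    "50% delayed reward": 1.0,  # delayed trials still end with food
}

for trials_per_day in (1, 10):
    last = trials_per_day  # the day's final trial
    eaten = {name: expected_rewards_eaten_before(last, p) for name, p in SCHEDULES.items()}
    print(f"{trials_per_day} trial(s)/day, before trial {last}: {eaten}")
```

With one trial per day every schedule gives zero rewards eaten beforehand, so hunger cannot differ. With ten trials per day, the 50% nonreward group has eaten half as many rewards as the 100% group before the last trial, while the partially delayed group has eaten exactly as many.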

Discrimination

A popular explanation of the superiority of partial reward as an experimental artifact is the discrimination hypothesis. The difference between extinction and partial reward is less than the difference between extinction and 100% reward. During extinction after 100% reward, an animal should be able to tell immediately that reward has stopped completely. After acquisition with relatively few rewarded trials, it should take longer for an animal to detect that reward has stopped. At a low rate of reward, say one rewarded trial in every ten, randomly interspersed with unrewarded trials, it might take more than twenty unrewarded trials to detect the shift to extinction.
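One way to put numbers on this hypothesis is to ask how surprising a run of unrewarded trials would be under the acquisition schedule. The sketch below is a hypothetical ideal-observer criterion, not a model proposed in the text: it assumes each acquisition trial is rewarded independently with probability `p_reward`, so a particular run of k consecutive nonrewards has probability (1 - p_reward)^k, and it "detects" extinction at the shortest run whose probability falls to an assumed threshold of 5% or below.

```python
import math


def run_probability(p_reward: float, k: int) -> float:
    """Chance that k given consecutive acquisition trials are all unrewarded."""
    return (1.0 - p_reward) ** k


def trials_to_detect(p_reward: float, threshold: float = 0.05) -> int:
    """Shortest run of nonrewards whose chance under acquisition is <= threshold."""
    if p_reward >= 1.0:
        return 1  # under 100% reward, even one nonreward never occurs in acquisition
    return math.ceil(math.log(threshold) / math.log(1.0 - p_reward))


for p in (1.0, 0.5, 0.1):
    print(f"p_reward={p:.1f}: detect after {trials_to_detect(p)} nonrewards; "
          f"P(20 in a row during acquisition)={run_probability(p, 20):.3g}")
```

Under these assumptions the criterion is 1 nonreward after 100% reward, 5 after 50% reward, and 29 after 10% reward, in line with the estimate of "more than twenty" for one rewarded trial in ten. Real animals need not behave as ideal observers; the sketch only shows the direction and rough size of the difference the hypothesis relies on.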

There must be some truth in the discrimination hypothesis, but it is a very limited explanation. To begin with, discrimination only applies to laboratory conditions in which there is a shift from acquisition to extinction. Outside the laboratory, payoffs are not so neatly divided into periods of acquisition and extinction. If the whole phenomenon were only an artifact of the difference between acquisition and extinction under laboratory conditions, then it would be much less interesting. In addition, the discrimination hypothesis only applies to the effect of partial reward on resistance to extinction. Evidence later in this section shows that partial reward also leads to stronger responding during acquisition, even in discrete trial conditions when the effect in acquisition cannot be attributed to some artifact of operant procedures.

Early experimenters assumed that 100% reward was necessary at the beginning of acquisition. They virtually always began experiments with a series of 100% reward trials for all groups, as shown in Table 11.1. After an initial phase of acquisition at 100% reward, they divided subjects into groups that received various percentages of rewarded trials. Usually, there was only a short partial reward phase in these experiments before extinction of all groups. A brief partial reward phase seemed appropriate because experimenters assumed that the partial reward groups would fall behind during acquisition. They feared that after a long series of partially rewarded trials the partial groups would fall significantly behind the 100% groups, which would create difficulties for statistical comparisons during the extinction phase. That is, experimenters wanted the groups to be equal or nearly equal just before the start of extinction.

The assumption that partial reward during acquisition must retard performance remained untested for many years. Later, however, Capaldi, Ziff, and Godbout (1970) demonstrated successful, actually robust, acquisition in the runway when conditioning began with one or even a few unrewarded trials. Weinstock (1954, 1958) and Goodrich (1959) demonstrated that, after a substantial number of trials, partially rewarded rats ran faster than 100% rewarded rats during acquisition even with discrete trials. This result eliminates all of the proposed explanations that invoke an artifact of measuring performance during extinction. It also makes the phenomenon more general, hence more generally interesting. A true advantage of partial reward during acquisition indicates that it could be a useful training technique outside of the laboratory.

Frustration

Amsel (1958, 1962, 1992, 1994) proposed that the paradoxically positive effects of partial reward arise from the frustration that rats feel when they arrive at a formerly rewarding goal box and find it empty. According to Amsel, failing to find S* in a formerly rewarding goal box evokes a frustration response. The first effect of frustration is disruptive. During extinction, therefore, the frustration response should accelerate the decline in running. Under partial reward schedules, however, rats often receive reward for running after finding the goal box empty, that is, while experiencing the stimulus aftereffects of frustration. When they make disruptive responses that slow them down, they reach the goal box later and rewards (when present) are delayed. Frustration also energizes. When the energizing effect of frustration is channeled into more intense running, animals get rewarded sooner. Gradually, according to Amsel’s theory, the partially rewarded animals become conditioned to run faster under the influence of frustration.

The 100% animals experience frustration for the first time on the first trial of extinction. Because they experience frustration only during extinction, they show only its disruptive effects, and they extinguish rapidly. The rapid running habits that the partially rewarded rats learned during acquisition transfer readily to the frustrating experience of extinction, and they continue to run rapidly for many trials.

According to Amsel’s frustration hypothesis, the empty goal box is aversive. This agrees with the informal observations of many experimenters. After many trials of feeding in the goal box, rats become agitated when they find the goal box empty. They often scream out in the empty goal box and they can be difficult to handle. Prudent experimenters wear protective gloves on extinction days.

To test for the aversiveness of the empty goal box in extinction, Adelman and Maatsch (1955) ran rats in a straight alley for food reward and then extinguished them by three different procedures. One group received a normal extinction series in which they were confined in the empty goal box for 20 seconds after running through the alley. A second group was allowed to jump out of the empty goal box, thus escaping from the frustration of nonreward. The animals in the third group were allowed to escape from the empty goal box by running back into the alley. Adelman and Maatsch called this group the “recoil” group. Results of this experiment in terms of running time appear in Fig. 11.1. Allowing the jump group to escape from the empty goal box maintained running in extinction at the same level as food reward in acquisition.

Adelman and Maatsch (1955) and many others have explained the performance of the jump group in terms of the reward of getting out of the frustrating goal box. In a feed forward system, the chain of responses that got the rats to the goal box should be maintained by any strong response that is the last response in the goal box, whether the rats promptly find and eat food or promptly jump out of the box. Adelman and Maatsch also rewarded the recoil group with escape from the frustrating goal box, but that group actually extinguished faster than the normally confined group. Feed forward theory predicts faster extinction in the recoil group because that group gets to run back through the alley in the wrong direction. Consequently, the last thing these rats did in the alley near the goal box was to run back away from the goal box. Conditioning them to run back should considerably slow their forward movement on the next trials.

FIG. 11.1. The effect of three different treatments in the goal box during extinction. From Adelman and Maatsch (1955).

Because Amsel’s frustration energizes, partially rewarded rats should run faster during acquisition as well as during extinction. Weinstock (1958) and Goodrich (1959) confirmed this prediction when they ran rats under partial reward for an extended series of trials. This makes Amsel’s frustration hypothesis superior to hypotheses such as the discrimination hypothesis that only account for superior resistance to extinction.

Hall (1976, pp. 291–297) reviewed the evidence on Amsel’s account of partial reward and found some that supports and some that contradicts the frustration hypothesis, which nevertheless remains the most successful account that is compatible with response contingent reinforcement. Amsel (1992, 1994) summarized frustration theory in more detail and in the light of more recent experiments. This chapter presents only the basics of the theory and the evidence. Amsel’s frustration hypothesis is notable because it has interesting implications for life outside the laboratory. Learning to persist in the face of frustrating nonreward and to channel the tension into productive behavior would be a very useful skill.

The frustration hypothesis shares a basic weakness with all explanations based on inferences about pleasure and pain. Consider the defining operations of frustration. An empty goal box, by itself, cannot arouse frustration. Animals must experience the pleasure of food in the goal box before the empty goal box arouses the displeasure of frustration. Compare this with the defining operations of secondary reward. In the case of Sr the goal box is neutral by itself, but acquires positive value when animals experience the pleasure of food there. Eventually, the empty goal box becomes an Sr that rewards the animals for running.

How can the same empty goal box have a pleasurable secondary rewarding effect that maintains responding in extinction and, at the same time, have an aversive frustrating effect that disrupts responding in extinction? The problem here is that both secondary reward and frustration are based on projecting subjective human feelings of pleasure and frustration into the minds of rats and pigeons. Taken separately, each of these cognitive, hedonistic notions seems reasonable. The trouble with this sort of subjective, projective reasoning is that it can and often does lead to flatly contradictory conclusions. The feed forward principle, by contrast, predicts behavior by observing what the subject does rather than imagining how the subject might feel.

WHAT IS WRONG WITH 100% REWARD?

The superior performance of partially rewarded animals in an apparatus like the straight alley flatly contradicts traditional reinforcement theory, because according to that theory 100% reward should always be the best possible schedule. Any rate of reward below 100% should add less reinforcement and more inhibition than 100% reward. All reinforcement accounts of this phenomenon must therefore treat the superior effects of partial reward as an experimental artifact that distorts the true difference between 100% and partial reward. This chapter has taken up two of these artifactual accounts: discrimination between acquisition and extinction, and Amsel’s frustration hypothesis. Both attempt to explain why 100% reward really would be superior if it were not for some experimental artifact that distorts the picture.

And yet, all of the animals that serve as subjects in these experiments evolved in a world in which 100% reward is rare and partial reward, even sparsely distributed partial reward, is common. From an ecological point of view, there is something basically wrong-headed about a theory that treats the most unlikely condition found in nature as the normal case and the most common condition found in nature as the artifact. This wrong-headedness is even more serious if you want to apply the results of laboratory experiments to practical situations in the outside world.

Feed forward theories are based on switching between alternatives rather than positive strengthening through reinforcement and negative weakening through inhibition. In a feed forward theory, if 100% animals run slower than 50% animals, it must be because they are doing something else that interferes with running. The feed forward principle tells us to look for a disadvantage of 100% reward in terms of competing responses. What could this be?

Anticipatory Goal Responses

If the S* is food, and the criterion response, Rc, is a prefeeding response, then partial reward only increases the amount of prefeeding, because animals repeat anticipatory prefeeding responses until they get an Sd that evokes a feeding response. Foraging in burrows and fields is also a prefeeding response of rats; that is why it is so easy to train hungry rats to run in alleys with food incentives. Running through a maze or an alley and finding food in the dish at the end of the goal box involves a more extended and heterogeneous sequence of responses than pressing a lever over and over again. In this sense, rats running in mazes or straight alleys are much more like human beings practicing skilled sequences of behavior. In skilled behavior, the timing of each step in a sequence is critical. Anticipatory behavior disrupts the sequence and skill deteriorates.

Consider a tennis player who is ahead in a match against a weaker opponent. Becoming more and more confident, the player starts to imagine jumping over the net at the end of the match to shake hands with the defeated opponent and accept the congratulations of the crowd. Distracted from the game at hand, the player starts to miss shots and loses the match. Anticipation of reward disrupts the skill.

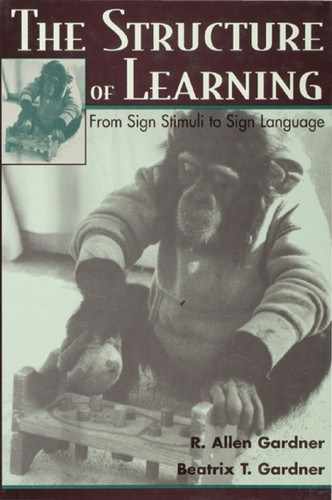

In the film, Behavioral Development of the Cross-Fostered Chimpanzee Washoe, there is a scene showing Washoe, when she was about 3 years old, stacking 1-inch children’s building blocks. At that time, Washoe had become fairly good at stacking these blocks. She could build towers of up to 12 blocks fairly easily (our limit was 10 blocks). Essentially, Washoe taught herself to build these towers after we showed her that it could be done. Challenged by the task itself, she needed no external reward. During the filming, Susan, then a graduate student and firm believer in Skinnerian reinforcement, started to tickle Washoe as a reward. Tickling seems to give infant chimpanzees more pleasure than just about anything else you can do for them. In the film, you can see Washoe stacking about four blocks rather carelessly with her eyes shifting from the blocks to Susan, and then starting to giggle in anticipation of the tickling. She starts to jump up and down and cover the most ticklish spots on her body. Actual stacking stops prematurely after only a few blocks, and Washoe even knocks over the small tower in her anticipatory excitement.

Anticipatory behavior like this seems to appear when rats run in mazes and straight alleys. Figure 5.5 (discussed in chap. 5 in connection with the goal gradient phenomenon) is a plot of running time in a straight alley and shows rats actually slowing down as they near the goal box. This is the typical finding when experimenters compare running near and far from the goal box. Goodrich (1959) replicated this finding and found that hungry rats slowed down considerably as they entered the goal box. Very likely, behavior in the goal box consisted of responses, such as seeking out the food dish and preparing to eat, that disrupted the full-speed-ahead running in the alley. It seems likely that if this prefeeding behavior appeared too early in the sequence, it would slow the rats down earlier in the alley.

Goodrich (1959) compared 100% reward with 50% reward and found that both groups slowed dramatically when they entered the goal box and approached the food dish at the end of the goal box. This is what we would expect if there is prefeeding behavior in the goal box that interferes with rapid running. Goodrich’s 100% animals reached the food dish faster than his 50% animals. This is what we would expect if the 100% animals made the terminal responses faster than the 50% animals. Meanwhile, Goodrich’s 100% animals ran slower in the alley than his 50% animals. This is what we would expect if the 100% animals were making more anticipatory goal responses in the alley. The terminal responses that they had learned so well with 100% reward interfered with running in the rest of the straight alley. Could this be the advantage of partial reward in skilled sequences of behavior that require different responses at different points in a sequence?

Chen and Amsel (1980) used conditioned taste aversion to study the effect of anticipatory goal responses in a runway. Chapter 6 described the evidence that, after experimenters pair a flavor with illness induced by injections of lithium chloride, rats reject the experimental flavor. Further evidence discussed in chapter 6 identified fractional anticipatory responses to the illness as the mechanism of rejection. Conditioned responses are most pronounced when the rats taste the experimental flavor, but these fractional anticipatory responses also interfere with a well-practiced predrinking response such as lever-pressing. Deaux and Patten (1964), as illustrated in Fig. 6.4, showed how anticipatory licking in a straight alley increases with 100% reward at the end of the alley.

In a feed forward system, it is anticipatory goal responses that slow the 100% animals in the runway and speed them up in the goal box, just as Goodrich (1959) found. After conditioned taste aversion, anticipatory licking should evoke anticipatory rejection, which should interfere with running. In a feed forward system, 50% reward markedly reduces, even eliminates, anticipatory goal responses in the alley. Conditioned taste aversion should then cause much slower running after 100% reward than after 50% reward.

Chen and Amsel (1980) tested this implication of feed forward by training thirsty rats to run in a straight alley with vinegar-flavored water in the goal box. Half of the animals received 100% reward and half 50% reward. After 30 trials in the alley, the experimenters paired vinegar-flavored water with illness by injecting half of the animals in each group with lithium chloride. For the control half of each group of rats, they paired vinegar-flavored water with an injection of a neutral saline solution. Next they ran all of the rats in the alley under extinction. These animals never received any water, flavored or unflavored, in the alley again. Figure 11.2 shows that both groups of 100% animals extinguished much faster than both groups of 50% animals, which is the usual effect of 50% reward. Illness paired with the flavor of the rewards hastened the extinction of the 100% group without affecting the extinction of the poisoned 50% group. This is just what we would expect if 100% reward induces much more anticipatory drinking in the alley than 50% reward. Indeed, Chen and Amsel’s results suggest that after 50% reward there may be hardly any anticipatory drinking to interfere with the habit of running in the alley.

Anticipatory Errors

In a feed forward system, the trouble with 100% reward is that terminal goal responses are learned too well. The advantage of the partially rewarded animals is that they concentrate on running and make fewer anticipatory goal responses in the alley. Ecologically significant skills outside the laboratory usually consist of a series of responses that must be made in a correct sequence and with correct timing. If 100% reward induces anticipatory errors, then partial reward is more appropriate for learning skilled sequences.

FIG. 11.2. Extinction in a straight alley after 100% and 50% reward. Vinegar-flavored rewards were paired with illness-inducing poison or neutral saline. Reprinted with permission from Chen and Amsel (1980). Copyright © 1980 by the American Association for the Advancement of Science.

The linear maze (discussed and illustrated in chap. 5, Figs. 5.3 and 5.4, in connection with the goal gradient phenomenon) is a way to observe anticipatory goal responses directly. As a first step in testing the hypothesis that 100% reward induces anticipatory errors, R. A. Gardner and Gamboni (1971) ran two groups of thirsty rats with water reward in a similar linear maze, illustrated in Fig. 11.3. For one group, All Same, all four correct choices were the same: LLLL for half of the animals in the group and RRRR for the other half. For a second group, Terminal Opposite, the first three choices were the same but the terminal choice was opposite: LLLR for half of the animals and RRRL for the other half. Figure 11.4 shows that the results were very similar to those of earlier experiments (illustrated in Fig. 5.4). The All Same group performed uniformly well in all four units of the linear maze. The Terminal Opposite group performed about as well as the All Same group in the unit closest to the goal box, but made many anticipatory errors in the earlier units. That is, the Terminal Opposite group made anticipatory left errors when the correct terminal choice was left and anticipatory right errors when the correct terminal choice was right. Figure 11.4 also shows that anticipatory errors depended on distance from the reward: the closer a unit was to the terminal unit, the greater the amount of anticipatory error.
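The scoring rule implied above can be sketched in a few lines of Python. This is an illustrative sketch, not Gardner and Gamboni's actual scoring procedure. It assumes that a wrong turn in one of the earlier units counts as "anticipatory" when it matches the correct terminal turn. The function names `classify_errors` and `percent_errors_by_unit` are hypothetical. Under this rule, an All Same animal cannot make an anticipatory error, because in that group every wrong turn is opposite to the terminal turn.

```python
from typing import List


def classify_errors(correct: str, choices: str) -> List[str]:
    """Label each choice point in one run through a linear maze.

    correct: the correct sequence of turns, e.g. "LLLR" (Terminal Opposite).
    choices: the turns the animal actually made, e.g. "LRLR".
    Returns one label per unit: "correct", "anticipatory", or "other".
    Assumption: an anticipatory error is a wrong turn in an earlier unit
    that matches the correct turn in the terminal unit.
    """
    if len(correct) != len(choices):
        raise ValueError("correct and choices must have the same length")
    terminal = correct[-1]
    last = len(correct) - 1
    labels = []
    for i, (want, made) in enumerate(zip(correct, choices)):
        if made == want:
            labels.append("correct")
        elif i < last and made == terminal:
            # Wrong turn that repeats the terminal turn too early in the sequence.
            labels.append("anticipatory")
        else:
            labels.append("other")
    return labels


def percent_errors_by_unit(correct: str, runs: List[str]) -> List[float]:
    """Percentage of runs with an error (of any kind) at each unit."""
    counts = [0] * len(correct)
    for run in runs:
        for i, label in enumerate(classify_errors(correct, run)):
            if label != "correct":
                counts[i] += 1
    return [100.0 * c / len(runs) for c in counts]


# Hypothetical runs for illustration only, not data from the experiment.
print(classify_errors("LLLR", "LRLR"))  # second unit scored as anticipatory
print(percent_errors_by_unit("LLLR", ["LRLR", "RRLR", "LLLR", "LLRR"]))
```

Keeping the label separate from the tally makes it easy to count all errors per unit, as in Fig. 11.4, or only the anticipatory ones.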

FIG. 11.3. Diagram of the linear maze used by R. A. Gardner and Gamboni (1971) and Gamboni (1973). Copyright © 1997 by R. Allen Gardner.

FIG. 11.4. Mean errors at each choice point in a linear maze, as in R. A. Gardner and Gamboni (1971). Copyright © 1997 by R. Allen Gardner.

After R. A. Gardner and Gamboni (1971) replicated earlier work with 100% water reward in the new apparatus, Gamboni (1973) studied the effect of partial delay of reward on anticipatory responses. Gamboni varied partial delay of reward rather than partial nonreward to take advantage of the technique discovered by Crum et al. (1951) and discussed earlier in this chapter. With partial delay of reward, every trial ends with water. All animals in all groups therefore remain equally thirsty throughout each day’s series of trials, and this eliminates differences in thirst as a possible source of bias.

Gamboni (1973) gave five groups of rats immediate reward on 100%, 75%, 50%, 25%, or 0% of their trials and delayed reward on the rest. Delayed reward consisted of 30 seconds of confinement in the goal box before access to the drinking cup.
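A partial delay schedule like this one can be sketched in Python to show that each group differs only in how long the animal waits, and not in whether it drinks. This sketch comes with several assumptions. The number of trials per block (`n_trials`) is a hypothetical parameter, because the text does not give it. The random ordering of immediate and delayed trials is also an assumption and is not Gamboni's documented sequencing. The only value taken from the text is the 30-second delay.

```python
import random
from typing import List

DELAY_SECONDS = 30  # confinement in the goal box before access to the cup (from the text)


def reward_schedule(percent_immediate: int, n_trials: int, seed: int = 0) -> List[int]:
    """Return the goal-box delay in seconds for each trial in a block.

    percent_immediate: share of trials with immediate reward (100, 75, 50, 25, or 0).
    n_trials: hypothetical block length, chosen so the percentage comes out whole.
    Every trial ends with water; only the delay before drinking differs.
    """
    n_immediate, remainder = divmod(percent_immediate * n_trials, 100)
    if remainder:
        raise ValueError("percent_immediate * n_trials must be divisible by 100")
    delays = [0] * n_immediate + [DELAY_SECONDS] * (n_trials - n_immediate)
    # Assumed: immediate and delayed trials are mixed in a seeded random order.
    random.Random(seed).shuffle(delays)
    return delays


for pct in (100, 75, 50, 25, 0):
    print(pct, reward_schedule(pct, n_trials=8))
```

Building the block from exact counts before shuffling keeps the stated percentage exact within every block. Drawing each trial independently at random would only approximate it.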

Figure 11.5 shows that the more often the animals received immediate reward, the fewer errors they made in the fourth and last unit before the reward. In the second and third units this pattern was reversed. The more often the animals received immediate reward, the more errors they made in these units, and these were now anticipatory errors. The anticipatory effect was more marked in the third unit, the one closest to the last unit. In the first unit, the one farthest from reward, the curve in Fig. 11.5 is flat, so delay of reward failed to affect that unit. Figure 11.6 shows the percentage of anticipatory errors for the first three units combined.

FIG. 11.5. Percentage of errors in Units 1, 2, 3, and 4. From Gamboni (1973). Copyright © 1973 by the American Psychological Association. Adapted with permission.

These results confirm the notion that partial reward improves learning and performance when the task consists of a sequence of responses. Immediate 100% reward at the end of a sequence strengthens the later responses in the sequence to the point where they anticipate and interfere with earlier responses in the sequence.

FIG. 11.6. Percentage of anticipatory errors in Units 1–3. From Gamboni (1973). Copyright © 1973 by the American Psychological Association. Adapted with permission.

SUMMARY

The superiority of partial reward over 100% reward in both acquisition and extinction contradicts both contingent reinforcement theory and cognitive expectancy theory. In early experiments it seemed as if the superiority of partial reward only appeared in extinction, and early writers attributed the partial reward extinction effect to artifacts of experimental extinction. Later experiments demonstrated that partial reward is also superior during acquisition. It is a general phenomenon rather than a laboratory curiosity. Amsel’s extension of Hull’s theory by means of a frustration principle is the most successful attempt so far to explain the superiority of partial reward in both acquisition and extinction within traditional reinforcement theory.

The defining operations of Amsel’s frustrating stimuli, however, are the same as the defining operations of secondary rewarding stimuli within the same theory. Attributing two clearly contradictory effects to the same experimental operations indicates that there must be some flaw in the reasoning. As usual, the error arises from basing theoretical principles on slippery foundations, such as subjective, projective notions of how human theorists would feel if they were rats in a maze.

The last section of this chapter describes a feed forward, contiguity interpretation of the effects of unrewarded trials. This interpretation emerges from experiments and direct ethological observations described in earlier chapters. Because feed forward conditioning takes place without building up habit strength or expectancy, it avoids the need for an inhibitory mechanism to counteract excitation. A feed forward system also offers a much simpler and more realistic way to deal with competing responses. It indicates, and experimental results confirm, that the positive effect of less frequent and less immediate reward depends on the disruptive effect of reward on sequential skill. This result has implications for learning skills outside the laboratory. The more frequent, the more immediate, and the more intense the end reward, the more it should interfere with skilled sequences in practical situations.