CHAPTER 3

Instrumental Conditioning

Plainly, classical conditioning is both a highly effective laboratory procedure and an essential means of learning for human and nonhuman animals in common situations outside of the laboratory. In the traditional view, classical conditioning may be sufficient for simple, obligatory, physiological responses like salivation, heart rate, and eyeblinks, but a larger class of learning requires a more advanced procedure. In this view, instrumental conditioning seems more appropriate for learning skills, particularly complex skills such as playing a violin, programming a computer, or conducting an experiment.

In classical conditioning the experimenter presents, or omits, the UCS according to a predetermined experimental procedure that is independent of anything that the learner does. In instrumental conditioning the experimenter presents the UCS if, and only if, the learner makes, or omits, some arbitrary response. From the point of view of the experimenter, the procedural difference is clear. Do these two procedures also represent two different principles of learning? This book takes a hard experimental look at that question.

B. F. Skinner was probably the strongest and most famous advocate of the position that there are two different principles of learning. To emphasize the distinction, Skinner introduced two new terms, respondent conditioning for the procedure that others called classical or Pavlovian conditioning, and operant conditioning for the procedure that others called instrumental conditioning. The procedures are the same under either name; the only difference is that the words respondent and operant (particularly operant) are closely associated with Skinner’s view of the learning process.

TYPICAL PROCEDURES

The terms for the principal types of instrumental conditioning refer to different kinds of contingency.

Reward

Thorndike (1898) published the first systematic, scientific accounts of instrumental conditioning. He trained cats to escape from specially designed boxes and rewarded them with food. The cats could see the food through the spaces between the vertical slats that made up the walls of the puzzle boxes. A door opened as soon as the cat pulled on a cord hanging from the top of the box or pressed on a latch. At first, hungry cats engaged in various natural but ineffective activities: reaching between the slats toward the food, scratching at the sides, moving all about the box. Eventually, each cat made the arbitrary response that operated the release mechanism. The door opened immediately, and the cat escaped and got the food. The first successful response appeared to be largely a matter of chance. On successive trials cats concentrated their activity near the release mechanism. Extraneous behavior gradually dropped out until they made the correct response as soon as they found themselves in the box.

The two most common types of apparatus for studying instrumental conditioning are mazes and Skinner boxes. In mazes, a hungry or thirsty learner, usually a laboratory rat and almost always a nonhuman animal, typically finds food or water in a goal box at the end of the maze. In Skinner boxes, a hungry or thirsty learner, usually a laboratory rat or pigeon, operates an arbitrary device, typically for food or water reward.

Escape

In escape, the instrumental response turns off a painful or noxious stimulus. In a typical experiment, the floor of the conditioning chamber is an electric grid and a low barrier divides the chamber into two compartments. At intervals the experimenter turns on the current, which delivers painful shocks to the rat’s feet. If the rat jumps over the barrier, the experimenter turns off the shock. In this way, the rat escapes the shock by making a correct response.

Avoidance

In avoidance, the learner can prevent or postpone a painful or noxious stimulus. In a typical experiment, a human subject places one hand on an electrode that delivers a mildly painful electric shock when the experimenter turns on the current. Three seconds before turning on the shock, the experimenter sounds a tone. If the learner raises his or her index finger within the 3-second warning period, the experimenter omits the shock on that trial. Thus, the subject avoids the shock by responding within the warning period.

A standard experiment compares escape and avoidance by testing rats one at a time in a chamber with a grid floor and a signal light mounted in the ceiling. For the escape group, the light comes on and is followed 10 seconds later by painful shock from the grid floor on every trial. As soon as the rat starts running, the shock stops. Thus, the rat can escape shock by running, but only after the shock begins. For the avoidance group, the light also comes on and is followed 10 seconds later by painful shock from the grid floor. The avoidance rat, however, can avoid the shock entirely by running before the end of the 10-second warning period.
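The two groups differ only in the contingency between running and shock. The Python sketch below states that contingency for a single trial. The function name `shock_delivered` and the choice to time the rat's running from light onset are illustrative assumptions, not details of any published apparatus.

```python
# Hypothetical sketch of the escape and avoidance contingencies described above.
WARNING_PERIOD_S = 10.0  # the light comes on 10 s before the shock (from the text)


def shock_delivered(group: str, run_onset_s: float) -> bool:
    """Return True if the rat receives shock on this trial.

    group       -- "escape" or "avoidance"
    run_onset_s -- seconds after light onset when the rat first starts running
    """
    if group == "escape":
        # Shock comes on every trial; running can only terminate it.
        return True
    if group == "avoidance":
        # Running before the warning period ends cancels the shock entirely;
        # running later still terminates the shock, as in escape.
        return run_onset_s >= WARNING_PERIOD_S
    raise ValueError(f"unknown group: {group!r}")
```

For example, a rat that starts running 4 seconds after the light comes on receives shock in the escape group but not in the avoidance group.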

Punishment

In this procedure, learners receive a painful or noxious stimulus if they make a specified response. Traditionally, punishment is the opposite of reward. In reward, the learner receives something positive that strengthens a certain response. In punishment, the learner receives something negative that weakens a certain response.

BASIC TERMS

Most of the terms of classical conditioning have parallel meanings in instrumental conditioning. A few terms of classical conditioning are inappropriate for instrumental conditioning and vice versa. The following definitions are mostly parallel to the definitions of the same terms introduced in chapter 2.

Unconditioned Stimulus

Of the four terms used to describe classical conditioning—UCS, CS, UCR, and CR—only UCS (referring to food, water, shock, etc., used in reward training and punishment training) appears in any significant number of descriptions of instrumental conditioning. CR appears occasionally. CS and UCR appear very rarely, if at all.

Response Measures

Experimenters record both the frequency of correct and incorrect choices in a maze and the amount of time required to run from the start box to the end of the maze. Running speed is a measure of latency in one sense, but it is also a measure of amplitude in another sense because the harder the animal runs the quicker it gets to the end of the maze. The frequency of correct responses is often expressed as the percentage or probability of a correct response.

In a Skinner box, experimenters measure the frequency (number of lever-presses in an experimental period) or the rate (average number of lever-presses per minute). Lever-presses must activate some device, usually an electric switch, attached to the lever. The animal must press the lever with sufficient force to move it, and for a sufficient distance to activate the switch. If the switch is too sensitive, the apparatus will record minor movements of the animal that happen to jiggle the lever. If the switch requires too much force or travel, the animal may rarely hit the lever hard enough or long enough to activate the recorder. Both force and distance are measures of amplitude in a Skinner box.

Experimenters sometimes measure latency of lever-pressing by retracting the lever from the box as soon as the animal presses it, then reinserting the lever and measuring the interval between reinsertion and the next response. In a maze-running apparatus, the experimenter usually places the animal in a starting box of some kind and starts the trial by opening the door that allows the animal to enter the maze. Experimenters often measure latency as the interval between the time the door opens and the time the animal leaves the start box.
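The frequency and rate measures, and the role of the switch threshold, can be sketched in a few lines of Python. The function names, the threshold parameter, and the list of press forces are hypothetical, invented only to illustrate the arithmetic.

```python
# Hypothetical sketch: which lever-presses a spring-loaded switch records,
# and the two Skinner-box measures named in the text (frequency and rate).


def recorded_presses(forces_g, switch_threshold_g):
    """Count presses whose force reaches the switch threshold (grams).

    Presses below the threshold move the lever without closing the switch,
    so the apparatus never records them.
    """
    return sum(1 for force in forces_g if force >= switch_threshold_g)


def response_rate(n_presses, session_minutes):
    """Average number of recorded lever-presses per minute."""
    if session_minutes <= 0:
        raise ValueError("session length must be positive")
    return n_presses / session_minutes


forces = [4.0, 12.5, 9.9, 10.0, 31.0]  # made-up forces, for illustration only
frequency = recorded_presses(forces, switch_threshold_g=10.0)
rate = response_rate(frequency, session_minutes=0.5)
```

Raising `switch_threshold_g` lowers the recorded frequency even when the animal's behavior is unchanged, which is why switch sensitivity matters.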

Response Variability

Variability is a basic dimension of all responses. We have to expect live animals to vary their behavior from moment to moment even under the highly constant conditions of the experimental chamber. Without some variability animals cannot learn anything new. The following example illustrates the insights that emerge from experiments that report variability.

The switch at the end of the lever in a Skinner box is usually spring-loaded, so that it returns to its resting position after the rat releases the lever. The experimenter can vary the amount of the spring loading, hence the amount of force that the rat must exert in order to operate the switch. A common loading requires the rat to exert about 10 grams of force. Less than 10 grams fails to operate the switch; more than 10 grams earns no additional reward.

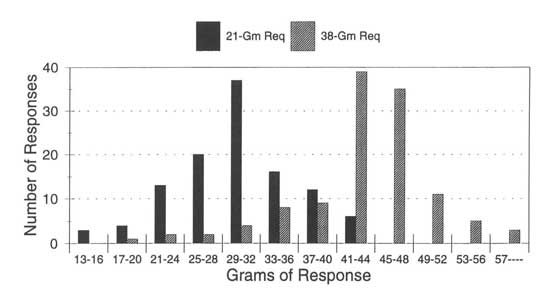

Figure 3.1 shows the results of an experiment that raised the force required to operate the reward mechanism first to 21 grams and then to 38 grams and also reported the force exerted on each lever-press. The black bars in Fig. 3.1 show the frequency of different amounts of force under the requirement of at least 21 grams to earn a reward. Under that condition, the rat mostly pressed the lever with 21 grams of force or more, but sometimes it pressed the lever with less than 21 grams and failed to earn a reward. The actual distribution of forces ranged from 13 grams to 44 grams in this condition.

The gray bars in Fig. 3.1 show what happened when the experimenters next raised the requirement to 38 grams. The distribution of forces shifted upward. Now, the rat pressed the lever with more than 38 grams of force most of the time. More often than not the rat still pressed the lever harder than necessary even though this required much more effort than before. Note that the 38-gram requirement could only affect the rat if 38 grams of force was already within the distribution of response forces under the 21-gram requirement. Response variability is necessary for all new learning.

FIG. 3.1. Force exerted by a rat to press a lever in a Skinner box. Black bars show the distribution of frequencies for a 21-gram minimum force requirement. Gray bars show the distribution for a 38-gram minimum requirement. Copyright © 1997 by R. Allen Gardner.
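The point about variability amounts to a simple proportion: a new force requirement can be rewarded only if some presses under the old requirement already reach it. The sketch below assumes a hypothetical list of press forces; the values are invented for illustration and are not the data plotted in Fig. 3.1.

```python
def fraction_meeting(forces_g, criterion_g):
    """Proportion of lever-presses at or above a force criterion (grams)."""
    if not forces_g:
        return 0.0
    return sum(force >= criterion_g for force in forces_g) / len(forces_g)


# Invented forces (grams) under a lower requirement; not the Fig. 3.1 data.
forces = [15, 22, 25, 30, 38, 41]

for criterion in (21, 38, 50):
    share = fraction_meeting(forces, criterion)
    # A share of zero means no press would ever meet the new requirement,
    # so it could never be rewarded and could not shift the distribution.
    print(f"{criterion} g: {share:.2f} of presses would be rewarded")
```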

Acquisition

Acquisition is a series of trials with the UCS.

Extinction

Extinction is a series of trials without the UCS.

Resistance to Extinction

Usually, the response weakens or ceases entirely during extinction. How slowly it declines is called resistance to extinction: the slower the decline, the greater the resistance. Resistance to extinction can be measured by any of the standard response measures, such as latency, amplitude, probability, or rate of responding. Some experiments measure resistance to extinction by the amount of responding during a fixed number of extinction trials, and others run extinction trials until the measure of responding drops below some criterion.

There are cases where resistance to extinction is the only measure that can test certain theoretical questions. Later chapters in this book take up these cases as they become relevant.
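The two ways of scoring resistance to extinction can be sketched as follows. The function names and the per-trial response counts are hypothetical, chosen only to show the arithmetic of each method.

```python
def responses_in_fixed_trials(per_trial, n_trials):
    """Total responding over the first n extinction trials."""
    return sum(per_trial[:n_trials])


def trials_to_criterion(per_trial, criterion):
    """1-based number of the first extinction trial on which responding
    falls below `criterion`, or None if it never does."""
    for trial, value in enumerate(per_trial, start=1):
        if value < criterion:
            return trial
    return None


# Invented responses per extinction trial, for illustration only.
extinction = [20, 15, 9, 4, 1]
```

Under either method, a larger number means greater resistance to extinction.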

Spontaneous Recovery

Extinguished responses can recover substantially, sometimes completely, after a rest. Spontaneous recovery can be tested with or without the UCS. With the UCS, it is called reacquisition; without the UCS it is called reextinction (see Fig. 10.1). Spontaneous recovery is a robust phenomenon that appears in virtually all experiments. Obviously, the CR is neither forgotten nor eliminated by extinction. Chapter 10 discusses the theoretical significance of spontaneous recovery.

GENERALITY

Instrumental conditioning is a much more versatile procedure than classical conditioning and most experiments in instrumental conditioning are less expensive and more convenient to perform. Consequently, instrumental experiments have included a much wider range of subjects, responses, and stimuli.

For example, Gelber conditioned paramecia to approach an arbitrary object. First, she sterilized a platinum wire and then dipped it into a dish of freely swimming paramecia. Then, she counted the number of paramecia that adhered to the sterilized wire. Next, she swabbed the wire with a fluid containing bacteria (the usual food of these animals) and dipped the wire into the dish again. She removed the wire again and counted the number of paramecia adhering to it. This number increased steadily over a series of 40 trials. In a series of experiments Gelber (1952, 1956, 1957, 1958, 1965) obtained and thoroughly replicated this evidence for instrumental conditioning. D. D. Jensen (1957) and Katz and Deterline (1958) disputed this result, but their experiments omitted safeguards and controls that Gelber included in her experiments. For example, Gelber, unlike her critics, stirred the liquid in the dish to scatter the paramecia between trials and used independent observers who reported that the paramecia swam actively toward the wire.

French (1940) sucked paramecia one at a time up into a capillary tube 0.6 mm in diameter. Paramecia apparently prefer to swim horizontally, which turns a capillary tube into a sort of trap. The lower end of the tube opened into a dish filled with a culture medium. The only way that a paramecium could escape was by swimming down to the open end of the tube. Half of French’s paramecia showed a significant improvement in performance in terms of time taken to swim down to the culture medium. Over a series of trials, French observed that his paramecia gradually improved by eliminating ineffective responses such as swimming upward until stopped by the surface and diving downward but stopping before reaching the mouth of the tube. Later, Huber, Rucker, and McDiarmid (1974) extensively replicated French’s experiment.

Much earlier, Fleure and Walton (1907) placed pieces of filter paper on the tentacles of sea anemones at 24-hour intervals. At first the tentacles grasped the filter paper and carried it to the mouth where it was swallowed and, later, rejected. After two to five trials, however, trained animals rejected the paper before carrying it to the mouth and the tentacles failed to grasp the paper at all in later trials.

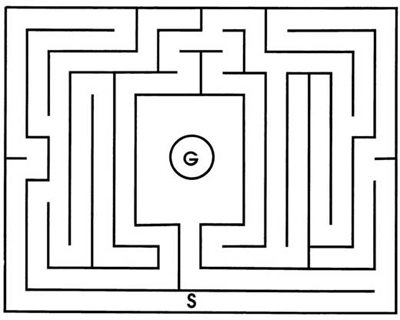

MAZE LEARNING

Hampton Court Palace just outside of London was an important royal residence from the 16th to the 18th century and remains a fine place to visit. Its most famous attraction is an elaborate maze with tall hedges for walls. Visitors can still try to solve the maze for themselves. An attendant sitting in a wooden tower above the maze used to direct people if they asked for help, but hard times have reduced the palace labor force and this post is usually vacant now. In the early 1900s, experimenters tested rats in mazes that copied the plan of the Hampton Court maze shown in Fig. 3.2. Hungry rats started from the same peripheral point on every trial and found food in the goal box at the center.

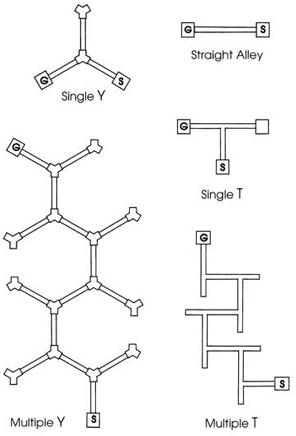

Replicas of mazes that wealthy people used to build for human amusement introduce complications that modern experimenters attempt to avoid when searching for general laws of learning. The earliest improvements consisted of a series of uniform T- or Y-shapes with one arm of the T or Y opening into the stem of the next unit and the other arm serving as a cul-de-sac. Gradually, even these were reduced to a single T or Y with only one choice.

Figure 3.3 shows the evolution of maze plans through the first half of the 20th century. The earlier, more elaborate mazes appear at the bottom of Fig. 3.3, the simpler, more modern mazes at the top. The single T or Y became the most popular maze after World War II. In many laboratories, even these simple mazes gave way to the straight alley runway in which learners, usually rats, run from one end to the other of a single alley with no choice except to run or to dawdle.

FIG. 3.2. Plan of the Hampton Court maze used with laboratory rats. The experimenter places the rat at S. The rat can find food by reaching G. From Small (1900).

FIG. 3.3. Modern maze plans used with laboratory rats. Copyright © 1997 by R. Allen Gardner.

SKINNER BOX

In the operant conditioning chamber or Skinner box, the learners, usually rats or pigeons, are deprived of food or water to make them hungry or thirsty. The chamber for rats usually has a lever protruding from one wall. The chamber for pigeons usually has a key placed at about the level of a pigeon’s beak. When a rat depresses its lever, or a pigeon pecks its key, the apparatus automatically delivers a small portion of a vital commodity, such as food or water. It may take some time before the first lever-press or key-peck, but after a few rewards the rate of responding rises rapidly.

The pattern of responding depends on the pattern of food or water delivery. If the chamber has more than one lever or key, each lever or key can deliver a different amount or rate of food or water. If the chamber has visual and auditory displays, each display can be correlated with a different amount or rate of food or water. With several levers or keys and several displays, experimenters can introduce virtually any level of complexity into the basic procedure.

The only limit on this procedure seems to be the ingenuity of the experimenters. One food-deprived rat learned to go through a doorway, climb a spiral staircase, push down a drawbridge, cross the bridge, climb a ladder, pedal a model railroad car over a track, climb a flight of stairs, play on a toy piano, run through a tunnel, step into an elevator, pull on a chain which lowered the elevator to the starting level, and finally press a lever and receive a small pellet of food (Bachrach & Karen, 1969). In another variant, pigeons learned to push a cube to a point below a suspended lure, climb on the cube, peck the lure, and then receive access to grain. The starting placement of the cube varied randomly from trial to trial and components of the sequence—pushing, climbing, pecking—were learned separately so that the pigeon had to put them together for the first time in test trials. The lure was a miniature yellow plastic banana, perhaps to suggest Köhler’s (1925/1959) famous demonstrations of insightful problem-solving by chimpanzees (Epstein, Kirshnit, Lanza, & Rubin, 1984).

Schedules

Variations in the temporal pattern of food or water delivery are called schedules of reinforcement. When a Skinner box delivers rewards after every correct response, the schedule is called continuous reinforcement. When the apparatus only delivers rewards after some fraction of correct responses, the schedule is called partial (or intermittent) reinforcement.

In modern laboratories, the term schedules of reinforcement almost always refers to the delivery of reward in a Skinner box or some analogous experimental situation. The schedules studied by Skinner and his followers fall into two basic types depending on whether the schedules deliver reward on the basis of time or responses since the last reward. Time-based schedules are called interval schedules; response-based schedules are called ratio schedules. Regular schedules are called fixed schedules; irregular schedules are called variable schedules.

Fixed Interval

In a fixed interval schedule, the reward follows the first response that occurs after some specified period of time has elapsed since the last reward. In a 1-minute fixed interval schedule, for example, at least 1 minute must elapse between rewards. After each reward a clock measures out 1 minute and at the end of that time sets the apparatus to deliver a reward for the next response. That rewarded response also restarts the clock to measure out the next interval. In experimental reports, the abbreviation for a 1-minute fixed interval schedule is “FI 60.”
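The fixed interval rule can be sketched as a small Python class that is told when each response occurs. The class name and the assumption that the clock starts at the beginning of the session are illustrative, not a description of any particular laboratory's equipment.

```python
class FixedInterval:
    """FI schedule: reward the first response made at least `interval_s`
    seconds after the previous reward."""

    def __init__(self, interval_s, start_s=0.0):
        self.interval_s = interval_s
        self.clock_start = start_s  # assumed: timing begins at session start

    def respond(self, t_s):
        """Register a response at time t_s (seconds); return True if rewarded."""
        if t_s - self.clock_start >= self.interval_s:
            self.clock_start = t_s  # the rewarded response restarts the clock
            return True
        return False


fi60 = FixedInterval(60)  # "FI 60"
outcomes = [fi60.respond(t) for t in (10, 59, 61, 100, 121, 200)]
```

Here the responses at 61, 121, and 200 seconds are rewarded; responses that come too soon after a reward earn nothing, however many there are.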

Variable Interval

In a variable interval schedule, the time between rewards varies unpredictably. In a 1-minute variable interval schedule, an average of 1 minute must elapse between rewards. After each reward a clock measures out a particular time interval, say 20, 40, 60, 80, or 100 seconds. An unpredictable program selects one of the intervals before each reward with the restriction that the selected intervals have a mean of 60 seconds. At the end of each interval, the clock sets the apparatus to deliver a reward for the next response. The next response also resets the clock to measure out the next interval. In experimental reports, the abbreviation for a 1-minute variable interval schedule is “VI 60.”
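A sketch of a VI 60 schedule, using the five example intervals from the text, might look like this. The way the "unpredictable program" keeps the mean at 60 seconds is an assumption: here each interval is used once per shuffled block of five, which is one simple way to satisfy the restriction, not necessarily the method any laboratory used.

```python
import random


class VariableInterval:
    """VI schedule: like FI, but each interval is drawn unpredictably from a
    list whose mean is the nominal value (60 s for "VI 60")."""

    def __init__(self, intervals_s=(20, 40, 60, 80, 100), seed=None):
        self.intervals_s = list(intervals_s)
        self.rng = random.Random(seed)
        self.block = []
        self.clock_start = 0.0
        self.current_s = self._draw()

    def _draw(self):
        # Assumed scheme: shuffle the full list and use it up before
        # reshuffling, so every block of five intervals averages exactly 60 s.
        if not self.block:
            self.block = self.intervals_s[:]
            self.rng.shuffle(self.block)
        return self.block.pop()

    def respond(self, t_s):
        """Register a response at time t_s (seconds); return True if rewarded."""
        if t_s - self.clock_start >= self.current_s:
            self.clock_start = t_s
            self.current_s = self._draw()
            return True
        return False
```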

Fixed Ratio

A fixed ratio schedule delivers a reward immediately after the last of a fixed number of responses, such as 15. The abbreviation for a fixed ratio of 15 responses is “FR 15.”
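A fixed ratio schedule only needs a response counter. The following sketch, with a hypothetical class name, rewards the 15th, 30th, 45th, and later responses of an FR 15 schedule.

```python
class FixedRatio:
    """FR schedule: reward the last of every `ratio` responses."""

    def __init__(self, ratio):
        if ratio < 1:
            raise ValueError("ratio must be at least 1")
        self.ratio = ratio
        self.count = 0  # responses since the last reward

    def respond(self):
        """Register one response; return True if it completes the ratio."""
        self.count += 1
        if self.count == self.ratio:
            self.count = 0
            return True
        return False


fr15 = FixedRatio(15)  # "FR 15"
rewarded = [n for n in range(1, 46) if fr15.respond()]
```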

Variable Ratio

A variable ratio schedule delivers a reward immediately after the last of a variable number of responses. A “VR 15” schedule could be produced by delivering a reward immediately after the 5th, 10th, 15th, 20th, or 25th response, with the actual number varying unpredictably from reward to reward.
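The VR 15 example can be sketched the same way, drawing each requirement from 5, 10, 15, 20, and 25 responses. As with the interval sketch, using each requirement once per shuffled block is an assumed way of keeping the mean at 15 while the order stays unpredictable.

```python
import random


class VariableRatio:
    """VR schedule: the number of responses per reward varies unpredictably
    around a mean (15 for the "VR 15" example in the text)."""

    def __init__(self, requirements=(5, 10, 15, 20, 25), seed=None):
        self.requirements = list(requirements)
        self.rng = random.Random(seed)
        self.block = []
        self.count = 0  # responses since the last reward
        self.required = self._draw()

    def _draw(self):
        # Assumed scheme: use each requirement once per shuffled block.
        if not self.block:
            self.block = self.requirements[:]
            self.rng.shuffle(self.block)
        return self.block.pop()

    def respond(self):
        """Register one response; return True if it earns a reward."""
        self.count += 1
        if self.count >= self.required:
            self.count = 0
            self.required = self._draw()
            return True
        return False


vr15 = VariableRatio(seed=2)
rewarded = [n for n in range(1, 76) if vr15.respond()]
gaps = [b - a for a, b in zip([0] + rewarded, rewarded)]
```

Because one block of requirements sums to 75, the first 75 responses always earn exactly five rewards, in an order that differs from seed to seed.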

There are many more schedules, including mixed types, that experimenters introduce for special purposes.

Maintenance Versus Conditioning

Each of Skinner’s schedules of reinforcement produces a characteristic pattern of responding in the Skinner box. Skinner and his followers have always pointed to the regularity and replicability of these patterns as evidence for the scientific lawfulness of operant conditioning. Closer inspection of these findings, however, leads to serious questions about the relevance of the patterns to laws of conditioning. The major effects look much more like momentary effects of receiving small units of food and water in patterns rather than long-term effects of conditioning. Because the characteristic effects of Skinner’s schedules of reinforcement are so closely tied to the procedure of the operant conditioning chamber, they may be irrelevant to behavior outside of the chamber.

Under fixed interval and fixed ratio schedules, each reward signals the beginning of a period without rewards. During these times animals shift to other behavior, such as grooming and exploring the apparatus, and this appears as a pause in lever-pressing or key-pecking. When the passage of time signals the approach of the next reward, the animals shift back to lever-pressing and key-pecking and this appears as a resumption of responding. The animals pause longer when the interval between rewards is greater. Skinner described the records of responding under fixed interval and fixed ratio schedules as “scalloped.” That is, they show a pause followed by slow responding followed by more and more rapid responding until the next reward, which is followed by the next pause.

In variable interval and variable ratio schedules, each reward also signals the beginning of a period in which reward is unlikely, but the length of the period of nonreward is less predictable. The pause after each reward is shorter and the overall rate of responding between rewards is steadier than in fixed schedules. Because responding is more uniform under variable interval schedules, they are often used for training as a preliminary to some other treatment, such as a test for stimulus generalization (Thomas, 1993) or the effects of drugs (Schaal, McDonald, Miller, & Reilly, 1996).

Actually, animals must always stop pressing the lever or pecking the key, briefly at least, to consume a reward so there is always some pause after each reward. After that, pauses and acceleration depend on the likelihood of the next reward.

Virtually all of the investigations of schedules of reinforcement have been conducted in a particular sort of experiment. In these experiments, the original conditioning consists of reward for each response. Then, the number of responses required for each reward is gradually increased to some intermediate number. After the animal reaches a reliable rate of responding for the intermediate schedule, the experimenters vary the schedule from day to day. Every time the experimenters change the schedule, they allow the animal some time to adapt and then, when the rate of responding seems to stabilize, they compare the rates induced by different schedules during these stable periods.

In most experiments each experimental subject goes through many shifts in schedule, cycling through the whole range of schedules many times. The results show that animals respond in characteristic patterns to each schedule regardless of the number of cycles or the number and type of other schedules in each cycle. That is to say, the effects of the schedules are localized and independent of each other. They look more like patterns maintained by the short-term effects of each reward than like the long-term effects of conditioning. They are at best only indirectly related to any basic laws of conditioning.

This book describes these four types of schedule for historic interest and also for reference so that readers can decode abbreviations such as “FR 10” and “VI 30” when they appear in professional descriptions of experimental procedure.

Time Schedules

Recently, experimenters have added two types of schedule that deliver rewards after a predetermined time, regardless of response. These are called fixed time and variable time schedules. In interval schedules, the first response after the end of a predetermined interval earns a reward. By contrast, in time schedules the apparatus delivers rewards precisely at the end of a predetermined interval whether or not the rat ever pressed the lever during the interval. Consequently, time schedules are noncontingent schedules. Strictly speaking, time schedules resemble classical conditioning more than instrumental conditioning. Yet, time schedules maintain robust rates of lever-pressing and key-pecking. If classical and instrumental conditioning are two distinct forms of learning suitable for two distinct forms of response, this finding is very puzzling. This book looks more closely at this puzzle and other puzzles like it in later chapters.
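The contrast between time schedules and interval schedules can be sketched by comparing reward times for the same session. In this hypothetical sketch the fixed time function does not even accept response times as input, which is exactly what makes it noncontingent; the function names are illustrative.

```python
def fixed_time_rewards(session_s, interval_s):
    """FT schedule: reward times depend only on the clock."""
    n = int(session_s // interval_s)
    return [interval_s * k for k in range(1, n + 1)]


def fixed_interval_rewards(response_times_s, interval_s):
    """FI schedule: each reward waits for the first response after the interval."""
    rewards, clock_start = [], 0.0
    for t in sorted(response_times_s):
        if t - clock_start >= interval_s:
            rewards.append(t)
            clock_start = t
    return rewards


# The same 5-minute session in which the animal never responds:
ft = fixed_time_rewards(300, 60)
fi = fixed_interval_rewards([], 60)
```

An animal that never presses the lever still receives five rewards under FT 60, but none under FI 60.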

DISCRIMINATION

Animals must learn to discriminate between different stimuli as well as to generalize from one stimulus to another. They must be able to respond differentially, depending on the stimulus situation. Laboratory procedures for studying discrimination learning have used two basic procedures: successive and simultaneous presentation.

Successive Procedure

Successive discrimination is the most common discrimination procedure that experimenters use in the Skinner box. A single stimulus, usually a light or a sound, serves as S+ and a second stimulus serves as S–. The two stimuli appear one at a time in an unpredictable sequence and the apparatus delivers S* after responses to S+ and no rewards or less frequent rewards after responses to S–. The experimenter scores results in terms of the differential frequency or rate of response during the positive and negative stimulus periods. In the Skinner box for pigeons, visual stimuli are often projected directly on the key that the pigeons peck as in Hanson’s experiments on the peak shift (chap. 13). Touch-sensitive video screens replace keys in many modern laboratories (Wright, Cook, Rivera, Sands, & Delius, 1988).

Simultaneous Procedure

In the simultaneous procedure, S+ and S– appear at the same time, usually side by side, and the subject chooses between them by responding to one side or the other. In a single-unit T- or U-maze, for example, one arm can be black and the other white. Experimenters prevent subjects from seeing into the goal boxes by placing curtains at the entrance to the goal boxes, or by making them turn a corner to enter the goal box (converting the T-shape to a U-shape as shown in Fig. 7.1). For half of the animals, white is S+ and black is S–, and for the other half black is S+ and white is S–. Reward is in the goal box at the end of the S+ arm and reward is absent from the goal box at the end of the S– arm. The experimenter alternates the left-right arrangement of S+ and S– in a counterbalanced and unpredictable sequence, and measures discrimination in terms of percentage choice of the S+ arm.
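One way to generate a counterbalanced but unpredictable left-right sequence is sketched below. The specific constraints are assumptions for illustration: equal numbers of trials with S+ on each side, and no more than three consecutive trials with S+ on the same side, so that a simple side habit cannot earn many rewards in a row. The function names are hypothetical.

```python
import random


def longest_run(seq):
    """Length of the longest run of identical items in seq."""
    best = run = 1 if seq else 0
    for prev, cur in zip(seq, seq[1:]):
        run = run + 1 if cur == prev else 1
        best = max(best, run)
    return best


def balanced_sequence(n_trials, max_run=3, seed=None):
    """Return a left/right sequence for S+ ("L" = S+ on the left).

    Assumed constraints: equal numbers of L and R, and no more than
    `max_run` consecutive trials with S+ on the same side.
    """
    if n_trials % 2:
        raise ValueError("n_trials must be even to balance left and right")
    rng = random.Random(seed)
    half = n_trials // 2
    while True:  # rejection sampling: reshuffle until the run limit holds
        seq = ["L"] * half + ["R"] * half
        rng.shuffle(seq)
        if longest_run(seq) <= max_run:
            return seq
```

Rejection sampling keeps the sketch short and works well for blocks of a few dozen trials; much longer sequences would call for a constructive method instead.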

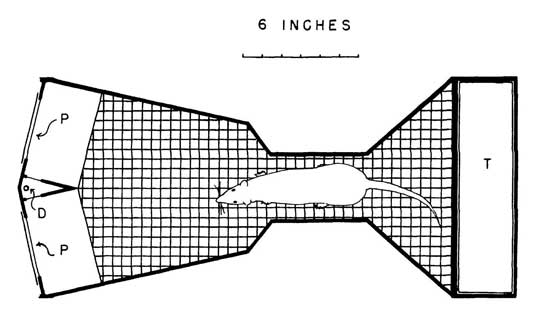

The diagram in Fig. 3.4 shows an automatic two-choice apparatus. Coate and R. A. Gardner (1965) designed this device to expose rats to visual stimuli projected on panels as the rats pushed the panels with their noses. Effective exposure is necessary, because rats are nocturnal animals with poor vision and they are much less interested in visual stimuli than the human beings who design the experiments.

In a Skinner box for pigeons there can be two keys—one green, the other red, for example—with the left-right arrangement of green and red alternated in a balanced but unpredictable sequence. An automatic device delivers rewards for pecking at the S+ key, but delivers no rewards or fewer rewards for pecking at the S– key.

Multiple Stimuli and Responses

Experimenters can present more than one S+ and more than one S–, or present more than one pair of stimuli to the same subject in the same discrimination task depending on the experimental objectives (Coate & R. A. Gardner, 1964; R. A. Gardner & Coate, 1965).

FIG. 3.4. An automatic apparatus used by Coate and R. A. Gardner (1965) to study simultaneous discrimination. At the beginning of a trial the apparatus is dark. When the rat steps on the treadle (T) S+ and S– appear side by side projected on the plastic panels (P) by two projectors (not shown) just beyond the panels. When the rat pushes the panel lighted with S+, a dipper (D) delivers a drop of water and both projector lights turn off again. When the rat pushes the panel with S– the projectors turn off, but the dipper does not deliver any water. The rat can start the next trial by stepping on the treadle again. Copyright © 1997 by R. Allen Gardner.

REINFORCEMENT AND INHIBITION VERSUS DIFFERENTIAL RESPONSE

In reinforcement theories, reward for responding to S+ builds up excitation for responding to S+ and nonreward builds up inhibition against responding to S–. As a result, the strength of the criterion response, Rc, to S+ is greater than the strength of Rc to S–. In a successive discrimination in the Skinner box, however, pigeons never just stand in front of the key, pecking it when S+ is lighted and waiting for S+ to reappear when S– is lighted. They engage in other behavior—preening, flapping their wings, walking about the box, and so forth—whether S+ or S– appears on the key. With discrimination training, they do more pecking when S+ is lighted and more other behaviors when S– is lighted. They seem to be learning one set of responses to S+ and a different set of responses to S–.

In a simultaneous discrimination, animals could be learning to approach S+ and avoid S–, or they could be learning two different habits. In an apparatus like the one in Fig. 3.4, the two stimuli appear side by side and there are only two possible spatial arrangements: S+ on the left and S– on the right, S+/S–, and S– on the left and S+ on the right, S–/S+. Instead of approaching S+ and avoiding S–, the animals could be learning to go left when they see the arrangement S+/S– and right when they see the arrangement S–/S+, as in a successive discrimination. Although this seems intuitively unlikely, several experiments indicate that rats and pigeons learn a simultaneous discrimination as two different spatial habits rather than as reinforcement of an approach to S+ and avoidance of S– (Pullen & Turney, 1977; Siegel, 1967; Wright & Sands, 1981).

SUMMARY

Instrumental conditioning is a versatile technique that has generated an enormous number and variety of learning tasks for laboratory studies. This chapter only introduces the most basic procedures and terms. Later chapters describe additional variations of this technique that experimenters have introduced for specific experimental problems. Later chapters also question the traditional claim that there are two principles of conditioning: one principle of conditioning by contiguity sufficient for classical conditioning, and a second principle of conditioning by contingency necessary for instrumental conditioning.