CHAPTER 4

Mechanisms of Conditioning

THEORY AND EXPERIMENT

In the 1970s, the John Hancock insurance company erected the tallest building ever attempted in the city of Boston up to that time. They had to level a portion of historical Copley Square to make room for it, and this dramatically altered the historical neighborhood. Some grand old structures look puny and insignificant beside the new monster. Soon after it was completed, windows began to fall out of their frames in the upper stories. The sight of the modernistic marvel with random boarded patches where windows should have been, and with the sidewalk cordoned off below to protect pedestrians, must have given some satisfaction to the many enemies of the Hancock Tower. It certainly troubled the owners and builders and cost them a lot of money and prestige.

What went wrong with the Hancock Tower? Well, modern skyscrapers actually sway in the wind, and the upper stories sway considerably more than the middle stories. The structure must be able to withstand the force of the wind and materials must be flexible enough to stretch with the forces of distortion and then return to their original shape. In the Hancock Tower, the rigidity of the glass windows was improperly matched with the flexibility of their metal frames, so many of the windows just popped out when the wind blew.

Grand disasters like the John Hancock Tower seldom happen in modern times because scientists have mastered most of the physical principles of structural engineering and also because they usually test new design features as they are invented and before a new structure goes up. This is a basic principle of modern technology: first the theory, next the laboratory tests, and only then the practical structure. But the practical test is the object of all of the theory and laboratory testing that went before.

In ancient times, trial and error in architecture was costly and builders had to be conservative. It was too dangerous to try anything very far beyond the last successful design. The great cathedrals of Europe were marvels of engineering in their day and they were based on principles of physics that scientists and engineers had discovered. But the buildings themselves were the laboratories and testing grounds for new principles.

The basic principles of conditioning and learning could yield immensely more profit than the grandest dreams of the most ambitious architects. Driving, reading, writing, athletics, computer repair and programming, and problem solving in every field all require skills that must be learned. Scientists study elementary forms of conditioning and learning to discover the laws of all skilled behavior. The strategy of analyzing the complex phenomena of the practical world into relatively simple components, in order to discover the most basic and most powerful laws of physics, chemistry, and biology, has paid handsomely in every field of modern life. This is the objective of the theories and experiments covered in this book.

This is why so many experiments concentrate on relatively simple animals and relatively simple learning tasks. The strategy is to discover the most basic principles and build step by step to the most complex forms of learning. This is a bottom-up strategy. The eventual objective is a practical outcome in the real world. Theories are about the real world and a theory fails in a fundamental way if it only works on paper or in a computer.

STIMULI AND RESPONSES

It should be clear, even from the brief reviews of experiments in chapters 1 through 3, that many quite different things are called stimuli and responses. Stimuli can be lights, sounds, pictures, songs, odors, tastes, an entire situation, electric shock, even time itself. Responses can be lever-presses, problem-solving, muscle twitches, glandular secretions, dilation of blood vessels, electrical impulses in a neuron, eyeblinks, even withholding response for a period of time.

Most experimenters write as if any reasonable reader must agree that they are correct in calling this or that in their experiment a stimulus and this or that other a response. By and large, reasonable readers do agree with the experimenters. The trouble comes when readers disagree in their interpretations. This section of the book defines stimuli and responses in terms of a general system that receives inputs from its environment and responds with outputs into its environment.

In modern times, computers as well as animals respond to stimuli and they also solve problems and modify their own behavior on the basis of past experience. Computers can do many things that once seemed to be exclusive abilities of living animals and even some things that seemed to be exclusively human abilities until quite recently. The complex behavior of humans and other animals challenges computer scientists the way flying birds once challenged engineers.

In the case of bird flight, biologists only discovered how birds fly after engineers discovered how to build machines that fly. To build flying machines, engineers had to master the same laws of physics that govern the flight of birds. In modern times, many scientists hope to discover how humans and other animals learn and think by studying computer science. Whether or not this is a sound strategy, the practical, bottom-up problem-solving approach of the engineer can be useful for defining biological problems.

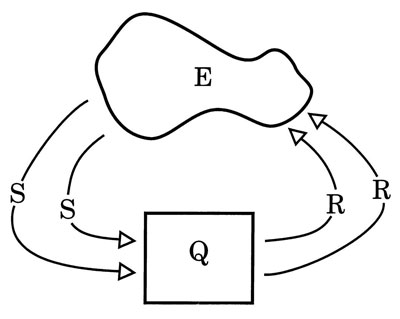

Some version of the diagram in Fig. 4.1 appears in many textbooks on computer science. It is a good place to start talking about stimuli and responses. Let’s call it the E-S-Q-R paradigm. The free-form figure represents the environment, E, and the box represents the system (a machine or an animal).

FIG. 4.1. The E-S-Q-R paradigm. Copyright © 1997 by R. Allen Gardner.

S stands for an input into the system. All inputs are stimuli and all stimuli are inputs. They come from the environment.

R stands for an output from the system. All outputs are responses and all responses are outputs. Outputs into the environment often change the environment, which may change the inputs from the environment, which may then change the outputs.

Q stands for the state of the system. In a digital computer, the state Q is the pattern of on-off switches at any given moment. Presumably, the state Q of a living animal is an analogous pattern of on-off elements in neural circuitry. The response to a given stimulus or input depends on the state Q of the system. At any given time, Q depends on the way the system was originally constructed and programmed and also on the past history of the system, which is written with many tiny on-off switches.
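The E-S-Q-R paradigm can be sketched as a small state machine. This is only an illustration under stated assumptions: the class name `System`, the dictionary layout of Q, and the responses "orient" and "ignore" are hypothetical and do not come from Fig. 4.1. The sketch shows the one point made above, that the response to a given S depends on the state Q, and that Q depends on the past history of inputs.

```python
from dataclasses import dataclass, field


@dataclass
class System:
    """Toy system: Q is a set of on-off switches keyed by stimulus name."""
    q: dict = field(default_factory=dict)  # state Q (hypothetical layout)

    def step(self, s: str) -> str:
        """Take input S, emit output R, and update state Q."""
        seen_before = self.q.get(s, False)
        # The response depends on both the input S and the current state Q.
        r = "ignore" if seen_before else "orient"
        # Past history is recorded by flipping a switch in Q.
        self.q[s] = True
        return r


system = System()
first = system.step("tone")
second = system.step("tone")
print(first, second)  # same S both times; R differs because Q changed
```

The same input produces different outputs on the two calls only because the first call altered Q.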

FOUR BASIC MECHANISMS

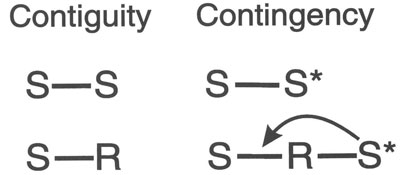

In roughly one century of theorizing about the basic mechanism of all learning, only four candidates have emerged. Considering the intelligence and knowledge of the theorists and the amount of thought and experimentation devoted to this question, the four mechanisms shown in Fig. 4.2 probably exhaust the possibilities.

FIG. 4.2. Four mechanisms of conditioning. Copyright © 1997 by R. Allen Gardner.

S-S represents conditioning by contiguity between two stimuli. By S-S contiguity, the dog associates an arbitrary stimulus Sa (such as a light or a tone) with another stimulus Sb (which could be food) because they have appeared together.

S-R represents conditioning by contiguity between a stimulus and a response. A dog makes the obligatory response of salivating to the food soon after hearing the tone Sa. This contiguity between tone and salivation associates salivation to the tone.

S-S* represents conditioning by contingency between a biologically significant stimulus, S* (such as food or painful shock), and the prior appearance of an Sa (such as a light or a tone). According to this mechanism the animal learns that Sa predicts S*. The symbol S* can refer to an appetitive S* such as food or an aversive S* such as painful shock. The difference between S-S* and S-S is that any two stimuli can be associated by S-S contiguity, but S-S* conditioning requires a biologically significant S* that the Sa predicts.

S-R-S* represents conditioning by contingency in which an S* is contingent on the performance of a particular response to an Sa. As shown in the diagram, the S* acts backward (arrow) to strengthen or weaken the S-R association. In the case of an appetitive S* like food, the effect is to strengthen the bond between the Sa and the response. In the case of an aversive S* like shock, the effect is to weaken the bond between the Sa and the response. In this mechanism, the S* is a consequence of the response to Sa; hence it is also called learning by consequences. Traditional theorists reserve this mechanism for instrumental conditioning.
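The backward action of S* in the S-R-S* mechanism can be written as a simple update rule. The sketch below is a minimal illustration, not a model proposed in the text: the function name `update_bond`, the 0-to-1 strength scale, and the fixed `step` size are all assumptions made for the example.

```python
def update_bond(strength: float, s_star: str, step: float = 0.1) -> float:
    """Return the new Sa-R bond strength after one S* consequence.

    `strength` and `step` are hypothetical quantities on a 0..1 scale.
    """
    if s_star == "appetitive":      # e.g., food: strengthen the bond
        strength += step
    elif s_star == "aversive":      # e.g., shock: weaken the bond
        strength -= step
    else:
        raise ValueError("s_star must be 'appetitive' or 'aversive'")
    # Keep the result inside the assumed 0..1 range.
    return min(1.0, max(0.0, strength))


bond = 0.5
after_food = update_bond(bond, "appetitive")
after_shock = update_bond(bond, "aversive")
```

Only the direction of change is taken from the description above; the size of the change is arbitrary.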

Common Sense

Most students and former students have already thought about many of the questions in this book. After all, learning is the main business of a student. Informally, most students have already considered the four mechanisms on this list. Students memorizing the vocabulary of a foreign language usually spend some time with a paired list of English and, say, French words, reading them in pairs one pair at a time as if forming S-S associations. People have argued that S-S association will not work. You have to say the words as you read them, preferably out loud if you really want to learn to speak the foreign language. That would be an S-R mechanism. On the other hand, cognitive psychologists who stress the mental representation of stimuli in the brain tend to stress S-S association. There are cognitive sports psychologists who claim that even athletes like tennis players can improve just by playing moves “in their heads” (e.g., Savoy, 1993).

Mechanisms that include a special biologically significant stimulus (S*) start with the notion that learning tends to be biologically adaptive. According to S-S* contingency, an arbitrary stimulus, Sa, becomes associated with a biologically significant stimulus, S*, such as food or painful shock. In this cognitive view, the learner learns that the Sa predicts the S*. The S-R-S* version of this is that the learner learns that S* is contingent on a particular response, Ra, to the Sa. If the S* is appetitive, like food or water, contingency strengthens the association between Sa and Ra, thus increasing the probability that Ra is the response to Sa. If the S* is aversive, like painful shock, the contingency weakens the association, decreasing the probability that Ra is the response to Sa. In S* theories learning is always motivated, and this agrees with common sense.

Parsimony

Since each of the four proposed mechanisms of learning seems to have some merit in some situations, a reasonable student might ask why we cannot use all four principles and apply each to the learning situations that seem to fit best. The trouble with this strategy is that it is unparsimonious. Why limit the number of conditioning mechanisms to four? Why not add three, four, five, or any number of additional mechanisms as the need arises? Why not have mixed cases in which more than one mechanism applies at the same time? Common sense looks at each case of learning—memorizing French nouns, studying college economics, skating on ice, absorbing social values—one at a time and this allows the common sense interpreter to find a new mechanism or combination of mechanisms for each case. There are no limits to the number of mechanisms that can agree with common sense. There can be as many different mechanisms as there are different types of learning. Common sense is unparsimonious.

Many large organizations are governed in this way. Anyone who has ever dealt with tax laws, or welfare laws, or the U.S. Army has had firsthand experience with unparsimonious rules. Large organizations set down rules. As exceptions and unexpected cases arise, they add rules. To satisfy politicians, clients, and interest groups, they add still more rules. Eventually, there are so many rules that the rules start to contradict each other. The mass of rules becomes so entangled that it is extremely difficult, if not impossible, to tell which rule or which mixed set of rules applies in any given situation. Decisions have to be made on a case-by-case basis by bureaucrats. Soon, rather than government by laws we have government by bureaucracy, often with hideous consequences for citizens and clients.

Scientists apply the principle of parsimony to avoid this bureaucratic result of common sense. The objective is the smallest set of principles that cover the largest set of findings. Much of the difference between scientific discovery and bureaucracy depends on this strategy.

IMPLICATIONS

There are too many mechanisms in Fig. 4.2. The scientist who obeys the rule of parsimony must eliminate some of them, ideally all except one. In this the scientist is like the detective who cannot solve the crime because there are too many likely suspects. Hunches, intuition, and common sense are helpful as a start, but they tend to produce too many likely suspects in scientific work as in detective work. Fortunately, neither the scientist nor the detective needs to depend on hunches, intuition, and common sense. Both can find out more facts about the suspects and the crime.

In the classic detective story, for example, the wife of the murder victim may be a prime suspect. She was abused by her husband for years and she stands to inherit a great deal of money. The detective finds that the wife is frail, confined to a wheelchair, and left-handed. If the victim was bludgeoned to death by a tall, right-handed assailant, this eliminates the wife as a suspect.

In the same way, each of the proposed mechanisms in Fig. 4.2 has necessary implications that can be checked against the evidence of basic experiments in classical conditioning. By comparing these implications against the evidence, scientists can narrow the list of possibilities.

S-S CONTIGUITY

This principle is familiar to most readers because it is based on traditional philosophical and psychological notions about the association of ideas in the mind. Even though S-S association is firmly grounded in folk wisdom and cognitive psychology, the history of science teaches us that an idea can be repeated by wise folk for hundreds, even thousands, of years and still be quite false.

Introspective reports of human beings contain vivid descriptions of memory by the association of ideas or images in the mind. Is this evidence? Remember the blind and blindfolded students in Dallenbach’s experiments. They reported vivid images of obstacles in their path, experienced as impressions on the skin of their faces. Yet, sound experiments described in chapter 1 revealed that personal impressions are as unreliable as any other kind of armchair philosophizing. The problem of this section of this chapter is to compare the implications of S-S association by contiguity with experimental evidence.

Pavlov argued for S-S contiguity. According to Pavlov, conditioning consists of a connection between the neural representations of two stimuli in the brain. In this view, a dog salivates to a tone as if it is the food, after associating the sound of the tone with sight of the food. Through conditioning, the tone becomes a substitute for the sight of the food. The principle of stimulus substitution was a fundamental part of Pavlov’s theory and it is widely popular as this chapter is being written, in spite of the well-known, long-standing, contrary evidence reviewed here.

The mechanism of S-S contiguity has clear implications. How do these compare with the evidence accumulated in a century of research on classical conditioning?

Fractional Anticipatory Response

If the CS becomes a substitute for the UCS, then the dog should respond to the tone in the same way that it responded to the food. As chapter 2 showed, however, a dog in Pavlov’s procedure responds to the food in one way and to the light in a very different way. At best the CR is a fraction of the UCR to the food. This fact only appears when experimenters like Zener (1937) report learning in ethologically rich detail.

If it existed, stimulus substitution would be maladaptive under most natural conditions. It is adaptive to associate smoke with fire, but it would be maladaptive to respond to smoke as if it were the same as fire. It is adaptive to associate the word apple with apples, but it would be maladaptive to try to eat the word, or peel it and slice it.

Length of the Interstimulus Interval

If conditioning connects the CS and the UCS by contiguity alone, then closer contiguity should make easier and stronger connections. Thus, a second implication of S-S contiguity is that the closer the contiguity in space and time the better the conditioning; the shortest possible separation should be the best. The shortest possible ISI is zero and this is called simultaneous conditioning. Chapter 2 describes the results of experiments that looked for the most favorable ISI. Although shorter intervals are generally more favorable than longer intervals, the most favorable ISI is much greater than zero. Indeed, the most favorable interval is long compared to the speed of neural conduction, at least three to four times longer than simple reaction time, and often longer than that. The offset is so much longer than necessary that it implicates some additional process beyond S-S contiguity.

Direction of the Interstimulus Interval

Sometimes in nature the effect of a variable is continuous over a wide range, but discontinuous at certain special points called cusps. A possible defense of S-S contiguity is that a simultaneous ISI is a special point. In this view, the sight of food overshadows the tone when both appear simultaneously. The sight of food is so exciting that the dog fails to notice the tone.

This version of S-S contiguity also implies that shorter ISIs should be more favorable than longer ISIs, even though the shortest possible intervals are unfavorable because of overshadowing. This allows for an offset between the CS and UCS, but still implies that the shorter the interval the greater the conditioning. Even allowing for overshadowing at the cusp of zero, S-S contiguity still implies that the ISI curve should be symmetrical: Short negative intervals (UCS before CS) should be as favorable as equally short positive intervals (CS before UCS). As the evidence reviewed in chapter 2 shows, however, the ISI curve is definitely asymmetrical. The ISI curves in Fig. 2.3 are typical. Instead of a cusp at zero, the curve is continuous with a maximum at about a half-second. Short positive intervals are plainly more favorable than short negative intervals.
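The symmetry implication can be made concrete with a short sketch. The exponential form, the time constant `tau`, and the overshadowing `window` below are hypothetical choices made only for illustration; they are not fitted to any data. The point is structural: if conditioning depends on contiguity alone, predicted strength is a function of the size of the gap between CS and UCS, so the sign of the ISI cannot matter.

```python
import math


def contiguity_strength(isi: float, tau: float = 1.0, window: float = 0.1) -> float:
    """Predicted conditioning under S-S contiguity alone (hypothetical form).

    isi > 0 means CS before UCS; isi < 0 means UCS before CS (seconds).
    """
    gap = abs(isi)            # contiguity sees only the size of the gap
    if gap < window:          # assumed overshadowing at and near zero
        return 0.0
    return math.exp(-gap / tau)   # shorter gaps predict stronger conditioning


forward = contiguity_strength(0.5)    # CS half a second before UCS
backward = contiguity_strength(-0.5)  # UCS half a second before CS
```

Any function of the absolute gap gives `forward == backward`, which is exactly the prediction that the asymmetrical ISI curves contradict.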

Many experiments on length and direction of favorable intervals contradict the temporal implications of the S-S mechanism. These contradictions should eliminate S-S contiguity from the list of suspects in Fig. 4.2.

S-S* CONTINGENCY

Pavlov’s basic theory can be preserved by claiming that a UCS such as food, shock, air puff to the eye, and so on, is a special biologically significant stimulus, an S*, while the CS is an arbitrary stimulus, an Sa. In this view, the arbitrary tone predicts the biologically significant food. By learning the contingency between Sa and S*, the dog can respond appropriately to the food before the food arrives. This S-S* contingency is also compatible with the finding that the CR is usually only a fractional part of the UCR. An animal can only profit from such a contingency, of course, if the Sa predicts food, shock, or air puffs. Thus, S-S* contingency is compatible with an offset between Sa and S* and the asymmetry of the ISI curve.

In this version of the traditional doctrine of the association of ideas, some ideas are more important than others. These privileged ideas are the UCSs of classical conditioning, while the lesser ideas are the arbitrary CSs. Conditioning takes place when a lesser stimulus predicts a privileged (biologically significant) stimulus, such as food or shock. There is a clear implication of this mechanism. If S-S* contingency is the mechanism of classical conditioning, then conditioning should be impossible without an S*. Before considering the evidence on this point, let us first consider the other mechanism of Fig. 4.2 that requires an S*.

S-R-S* CONTINGENCY

The ISI curve shows that Sa and S* need to be offset and that forward conditioning is much better than backward conditioning. Those facts agree with the principle of S* contingency. Even so, why should the most favorable ISI be so long, at least three to four times the length of simple reaction time? If the association of ideas takes place entirely within the brain, then it should be very rapid. Perhaps the ISI must be long enough to permit the learner to make an anticipatory response before the S* arrives.

In classical conditioning, the experimenter presents the UCS after the CS regardless of anything that the subject does between CS and UCS. Nevertheless, the CR can alter the UCS when it arrives and this alteration can be a significant consequence: If the dog’s mouth is wet with saliva when food arrives, the food will be easier to eat; if the college student’s eye is blinking when the air puff arrives, the air puff will feel less irritating; defensive responses such as leg withdrawal and heart rate acceleration may reduce the pain of shocks; and so on, through any list of common UCSs. It is easy to think of a reasonable source of reward for anticipatory responses in just about any type of classical conditioning experiment.

If classical conditioning depends on the consequences of response during the interval between Sa and S*, that might very well require an appreciably long ISI. The process would require enough time for the animal to respond and then to receive and process information about the consequence of the response. This interpretation of the ISI curve would imply that, in spite of traditional views, both classical and instrumental conditioning are based on S-R-S* contingency.

A procedure called omission contingency tests this implication of S-R-S* contingency. The traditional distinction between classical and instrumental conditioning depends on the difference between obligatory association by contiguity and arbitrary association by consequences. In classical conditioning, salivation, leg withdrawal, eyeblink, and so on, are supposed to be obligatory responses to the S*, or anticipatory fractions of obligatory responses. In contrast, the responses in instrumental conditioning, pressing levers, running mazes, playing tennis, driving cars, and so on, are supposed to be arbitrary responses that become conditioned by the arbitrary rewarding effect of an S*.

If, in classical conditioning, food rewards salivation then it should be possible to condition both increases and decreases in salivation by arbitrarily altering the consequences. Under the standard procedure, Sa, say a tone, appears and then food appears whatever the dog does. Under the omission contingency, food follows tone, if and only if the dog fails to salivate. If the dog salivates before the food, the food is omitted. An omission contingency rewards the dog with food for withholding salivation immediately after the CS.

At first, under omission contingency, a dog salivates when the food appears and then salivates after the tone but before the food, as in ordinary conditioning. Under an omission contingency, however, the experimenter omits the food on those trials in which the dog salivates before the food. When food is omitted, the dog is on extinction and salivation decreases. When salivation after the CS decreases through extinction, lack of salivation satisfies the omission requirement and the experimenter delivers food again. After trials with food, salivation again increases, food is again withheld, and salivation again decreases. This can go on indefinitely.
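The cycle described above can be traced with a toy simulation. This is a sketch under loud assumptions: the single `strength` variable, the `threshold`, and the `gain` and `loss` values are hypothetical, and the deterministic rule is far simpler than the behavior of any real dog. It only shows why an obligatory response on this schedule keeps alternating between conditioning and extinction instead of settling on withholding salivation.

```python
def run_omission(trials: int = 20, threshold: float = 0.5,
                 gain: float = 0.2, loss: float = 0.2):
    """Toy omission schedule: food follows the tone only if the dog
    does not salivate first. All numbers are hypothetical."""
    strength = 0.0          # tendency to salivate to the tone
    log = []
    for _ in range(trials):
        salivates = strength >= threshold
        food = not salivates                 # the omission contingency
        if food:
            # Tone followed by food: an ordinary conditioning trial.
            strength = min(1.0, strength + gain)
        else:
            # Tone without food: an extinction trial.
            strength = max(0.0, strength - loss)
        log.append((salivates, food))
    return log


log = run_omission()
food_trials = sum(food for _, food in log)
```

In this toy version, trials with food raise the tendency to salivate until the food is omitted, the omission lowers it until the food returns, and the alternation never stops.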

Dogs seem to be unable to learn to withhold salivation in order to obtain food under conditions in which a strictly noncontingent procedure yields robust increases in salivation (Konorski, 1948; Sheffield, 1965). This result indicates strongly that the responses that increase in classical conditioning are obligatory rather than arbitrary. If salivation were an arbitrary response for a hungry dog, then experimenters could use food as an arbitrary reward to condition dogs either to salivate or to refrain from salivating (for replication, see Gormezano, 1965, on aversive conditioning of rabbits; Gormezano & Hiller, 1972, on appetitive conditioning of rabbits; and Patten & Rudy, 1967, on appetitive conditioning of rats).

ROLE OF S*

Hedonism, the pleasure principle, is deeply embedded in Western culture. Learning to seek pleasure and avoid pain appears in the Old Testament and the New Testament, in the writings of Freud and the writings of Skinner, in the profit motive and the criminal code. Can such a strong cultural tradition fail to agree with experimental evidence?

Higher-Order Conditioning

An obvious problem with the pleasure principle is that adult human beings and many other animals learn all sorts of things without eating, drinking, or escaping from pain. Obviously, arbitrary stimuli such as money and scores on exams are removed from any immediate satisfaction of biological needs by several steps at least. How can these arbitrary stimuli evoke pleasure and pain? In S-S* and S-R-S* theories of learning, Pavlov’s principle of higher-order conditioning is supposed to bridge the gap between biological need and the arbitrary stimuli in daily life.

Experimenters have conditioned dogs to salivate at the arbitrary sound of a tone or the arbitrary lighting of a light by pairing the arbitrary stimulus with food. After conditioning a dog to salivate to a light, experimenters can try to condition the dog to salivate to a tone by pairing the light with a tone, that is, without pairing the tone with food. Pairing the CS of one phase of an experiment with a new CS in a second phase of the experiment is called second-order conditioning. Conditioning by pairing the CS of the second phase with still a third CS in a third phase, and so on, is called higher-order conditioning.

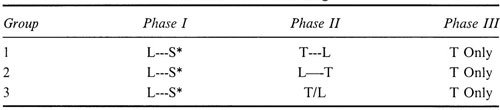

In higher-order conditioning, the first-order CS becomes a second-order UCS by pairing with the first-order UCS as shown in Table 4.1. By a series of intermediate CS-UCS steps, a still higher-order CS becomes a UCS even though the original biologically significant UCS never appears after the first phase of conditioning. The whole system of CS-UCS pairs, each one built on the one before, depends on the initial pairing of the first arbitrary CS with a biological UCS. Higher-order conditioning is vital to S* contingency. Without higher-order conditioning, contingency could apply only to behavior that is directly and immediately associated with an S* such as food, water, or escape from pain.

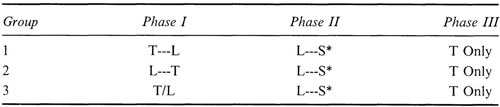

TABLE 4.1

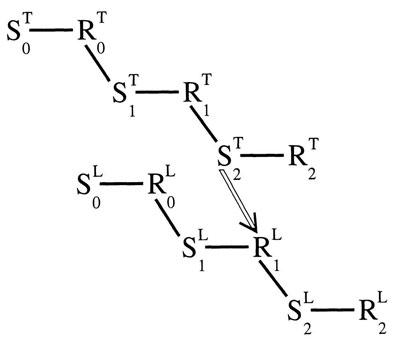

Second-Order Conditioning

Table 4.1 shows the standard paradigm for second-order conditioning. Tone (T) is Sa and light (L) is Sb. In Phase I (Monday) all three groups are treated the same; a shock (S*) follows the light. In Phase II (Tuesday) the groups are treated differently. For Group 1, the light follows the tone. For Group 2, the tone follows the light. For Group 3, both light and tone appear as often as for Groups 1 and 2, but they are unpaired. In Phase III (Wednesday) there is a test in which T appears alone for all three groups. Finally, there is a second parallel set of experimental groups (not shown in Table 4.1) in which the roles of T and L are reversed to show that Sa and Sb are arbitrary stimuli.

Groups 2 and 3 are control groups that receive the same amount of exposure to tone and light in Phase II as Group 1. For Group 2 the light precedes the tone and for Group 3 the sequence is random. These are both unfavorable temporal relations for conditioning an anticipatory response, as shown in Fig. 2.3 and in the section on backward conditioning later in this chapter. Groups 2 and 3 test for the effects of habituation and sensitization discussed in chapter 2. If the response of Group 1 in the test phase is greater than the response of Groups 2 and 3, then the experiment demonstrates second-order conditioning. The subjects in Group 2 should respond less than the subjects in Group 3 because Group 2 is a backward conditioning group.
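The decision rule in the paragraph above can be stated as a short check. The function name and the numbers are hypothetical; the values are placeholders chosen for illustration and are not results from any experiment.

```python
def shows_second_order(group1: float, group2: float, group3: float) -> bool:
    """True if paired Group 1 out-responds both control groups in the test."""
    return group1 > group2 and group1 > group3


# Hypothetical mean test responses to the tone, not data from any study.
example = {"group1": 8.0, "group2": 2.0, "group3": 3.0}
result = shows_second_order(**example)
print(result)
```

A response in Group 1 that merely equals the response of either control group would be attributed to habituation or sensitization instead of second-order conditioning.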

Pavlov and others (Brogden, 1939a, 1939c; Murphy & Miller, 1957; Razran, 1955) tried using a CS from one experiment as the UCS in a second experiment with the same animal, but with limited success. In one of Pavlov’s demonstrations the original CS was a metronome. When salivation to this CS was firmly established, a black square was presented briefly just before the metronome. On the 10th pairing of the black square and the metronome, there was a salivary response to the black square that was about half as strong as the response to the metronome. This is an example of second-order conditioning. Pavlov found third-order conditioning possible, but only with defensive reflexes such as responses to shock. He failed to establish any evidence for fourth-order conditioning in his laboratory (Pavlov, 1927/1960, pp. 34–35).

Evidence for second-order conditioning is acceptable, but unimpressive. Evidence for higher-order conditioning is weak at best. In Pavlov’s experiments and others since, the higher-order CRs typically had low amplitudes and long latencies, and soon faded out entirely. The evidence reviewed in chapter 2 shows that first-order classical conditioning is a versatile and powerful phenomenon of learning. The weakness of evidence for higher-order conditioning contradicts Pavlov’s principle of S* contingency as the mechanism of classical conditioning.

Preconditioning

By Pavlov’s principle of higher-order conditioning, arbitrary stimuli such as praise and money acquire their motivating S* properties by a chain of associations that begins with a biologically significant UCS. The UCS is the anchor that secures the chain of stimuli and responses to biological motivation. This implies that conditioning must be impossible without an S*. This implication can be tested directly by reversing the procedure of Table 4.1 and pairing the arbitrary tone and light in Phase I (Monday) before pairing light and shock in Phase II (Tuesday). The shock cannot start the chain of association if it appears after the pairing of light and tone. Any conditioning that occurs in Phase I of the experiment clearly indicates that S* and hence S* contingency is unnecessary for classical conditioning. This experimental paradigm, called preconditioning, appears in Table 4.2. Notice how it reverses Pavlov’s prescribed paradigm illustrated in Table 4.1.

In Table 4.2, tone (T) is Sa and light (L) is Sb. In Phase I (Monday) the groups are treated differently. For Group 1, the light follows the tone. For Group 2, the tone follows the light. For Group 3, both light and tone appear as often as for Groups 1 and 2, but they are unpaired. In Phase II (Tuesday) all three groups are treated the same; a shock (S*) follows the light. In Phase III (Wednesday) there is a test in which T appears alone for all three groups. Finally, there is a second parallel set of experimental groups (not shown in Table 4.2) in which the roles of T and L are reversed to show that Sa and Sb are arbitrary stimuli.

As in higher-order conditioning (Table 4.1) Groups 2 and 3 are control groups that receive the same amount of exposure to tone and light in Phase I as Group 1. They test for the effects of habituation and sensitization discussed in chapter 2. If the response of Group 1 in the test phase is greater than the response of Groups 2 and 3, then the experiment demonstrates preconditioning. By analogy with the usual training phase of classical conditioning, Group 2 is a backward conditioning group and the subjects in Group 2 should respond less than the subjects in Group 3.
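The relation between the two paradigms can be written out as data. The sketch below encodes the second-order design as the text describes it and derives the preconditioning design by exchanging Phases I and II. The dictionary layout and the names `SECOND_ORDER`, `PRECONDITIONING`, and `precondition` are assumptions made for illustration; they are not taken from the tables themselves.

```python
# Pairings are written (first, second); the unpaired control has no order.
SECOND_ORDER = {  # Table 4.1 as described in the text
    "Phase I":   {g: ("L", "S*") for g in (1, 2, 3)},
    "Phase II":  {1: ("T", "L"), 2: ("L", "T"), 3: "T and L unpaired"},
    "Phase III": {g: ("T",) for g in (1, 2, 3)},
}


def precondition(design: dict) -> dict:
    """Swap Phases I and II, leaving the test phase unchanged."""
    return {
        "Phase I": design["Phase II"],
        "Phase II": design["Phase I"],
        "Phase III": design["Phase III"],
    }


PRECONDITIONING = precondition(SECOND_ORDER)  # Table 4.2 as described
for phase, groups in PRECONDITIONING.items():
    print(phase, groups)
```

After the swap, the tone and light are paired before the shock has ever appeared, which is why the S* cannot start the chain of association in this design.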

TABLE 4.2

Preconditioning

In spite of the fact that it directly contradicts Pavlov’s theory, preconditioning is easy to demonstrate experimentally. Brogden (1939b) published the first clear demonstration of preconditioning using dogs as subjects, buzzer and tone as Sa and Sb, and shock as S*. There were earlier demonstrations of the same phenomenon (Bogoslovski, 1937; Cason, 1936; Kelly, 1934; Prokofiev & Zeliony, 1926), but these could be questioned by skeptics because they lacked necessary control groups. After World War II, several experiments confirmed and extended Brogden’s (1939b) findings (Bahrick, 1952; Bitterman, Reed, & Kubala, 1953; Brogden, 1947; Chernikoff & Brogden, 1949; Coppock, 1958; D. Hall & Suboski, 1995; Hoffeld, Thompson, & Brogden, 1958; Karn, 1947; Seidel, 1958; Silver & Meyer, 1954; Tait & Suboski, 1972). The preconditioning procedure is the reverse of the higher-order conditioning procedure prescribed by conventional learning theories, yet preconditioning is not only possible but also easier to demonstrate than higher-order conditioning.

Preconditioning should be impossible if classical conditioning depends on S* contingency. The light and tone are arbitrary stimuli. Neither one can be privileged over the other because either one can serve as the preconditioning stimulus in Phase I of Table 4.2 as long as one starts before the other. Control Group 2 shows that the one that comes first in Phase I acquires the power to evoke the UCR in Phase III. Prediction of S* is impossible in Phase I because the S* appears for the first time in Phase II. Therefore conditioning is possible without any S*. The evidence of preconditioning eliminates both S-S* and S-R-S* contingency as necessary mechanisms for classical conditioning.

A Hybrid Paradigm

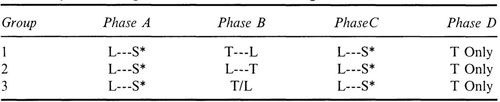

A latter-day revival of Pavlov’s teaching stimulated a series of experiments on second-order conditioning (Hittesdorf & Richards, 1982; Holland & Rescorla, 1975; Leyland, 1977; Nairne & Rescorla, 1981; Rashotte, Griffin, & Sisk, 1977; Rescorla, 1979; Rescorla & Cunningham, 1979; Rizley & Rescorla, 1972). These latter-day Pavlovians attributed the weakness of second-order conditioning in the experimental paradigm of Table 4.1 to extinction of the first-order CS in Phase II. To overcome this extinction, they inserted what they called “refresher” trials of light paired with shock just before the test trials. The refresher trials appear as Phase C in Table 4.3.

The trouble with the refresher Phase C is that it violates the operational definition of second-order conditioning. In Table 4.3, Phases A, B, and D are the same as Phases I, II, and III of the standard paradigm for second-order conditioning that appears in Table 4.1. At the same time, Phases B, C, and D are precisely the same as the standard paradigm for preconditioning that appears in Table 4.2.
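The overlap between the paradigms can be checked mechanically. The sketch below encodes each phase as a pairing of stimuli, inferred from the descriptions of Tables 4.1, 4.2, and 4.3 in the text. The tuple encoding and all names are illustrative assumptions rather than part of the original tables, and only the experimental group of each paradigm is represented.

```python
# Each phase is a pairing (first stimulus, second stimulus); None marks a test
# trial in which the first stimulus appears alone.
# T = tone, L = light, S* = shock. This encoding is an illustrative assumption.
SECOND_ORDER = [("L", "S*"), ("T", "L"), ("T", None)]     # Table 4.1, Phases I-III
PRECONDITIONING = [("T", "L"), ("L", "S*"), ("T", None)]  # Table 4.2, Phases I-III

HYBRID = {                # Table 4.3
    "A": ("L", "S*"),
    "B": ("T", "L"),
    "C": ("L", "S*"),     # the "refresher" trials
    "D": ("T", None),
}


def phases(*names):
    """Return the hybrid-paradigm phases with the given names, in order."""
    return [HYBRID[name] for name in names]


# Phases A, B, D reproduce the second-order paradigm;
# Phases B, C, D reproduce the preconditioning paradigm.
print(phases("A", "B", "D") == SECOND_ORDER)
print(phases("B", "C", "D") == PRECONDITIONING)
```

Under this encoding both comparisons hold, which is the sense in which the hybrid paradigm contains the defining operations of both procedures at once.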

TABLE 4.3

Hybrid Paradigm: Second-Order Conditioning With Refresher Trials

We know from many other experiments using the standard paradigm of Table 4.2 that Phases B, C, and D produce strong evidence of preconditioning without Phase A. Meanwhile, experiments using the standard paradigm of Table 4.1 show that Phases A, B, and D produce, at best, weak evidence of second-order conditioning. The need for refresher Phase C in the hybrid paradigm only confirms the failure of the standard paradigm of second-order conditioning. On the evidence then, Phase A is weak or ineffective without the refresher trials of Phase C. Phase A probably had little or no effect on the conditioning shown in Phase D. All or nearly all of the test results in Phase D should be attributed to Phase B followed by Phase C, but this is precisely the standard paradigm of preconditioning.

If, as Pavlov taught, classical conditioning must be anchored by the original pairing of CS with UCS, then second-order conditioning should be robust and preconditioning should be impossible. Rather than adding support for the power of second-order conditioning, the need for refresher trials confirms instead the usual finding that second-order conditioning is weak in comparison with preconditioning. The results of the hybrid paradigm add to the weight of evidence against S* contingency as the mechanism of classical conditioning.

There is a lesson in experimental design to be learned here. The interpretation of experimental results depends on experimental operations. It is probably impossible to design a perfect experiment that controls for all possible variables. It is possible, however, to design experiments that answer specific questions. When an experiment jumbles together the defining operations of two opposing principles, as in the case of the hybrid paradigm of Table 4.3, then the experiment fails to answer any specific question about either principle. Taken together with the results of the sound paradigms of Tables 4.1 and 4.2, however, these experimental findings support the conclusion that conditioning is both possible and robust without any S* contingency.

A Misnomer

In classical conditioning, the experimenter only manipulates the Ss and the S*s, their pairing and their timing. This led Pavlov and the latter-day Pavlovians who followed him to describe classical conditioning in terms of stimulus associations, either S-S or S-S*. In preconditioning, the UCR cannot appear until Phase II, which is the first time that the UCS appears. For this reason Pavlov’s followers usually called the experimental paradigm in Table 4.2 sensory preconditioning or sensory conditioning. The sensory label, however, fails to recognize the critical role of the control groups in defining the phenomenon. If all that happened in Phase I was that the subjects associated a tone with a light somewhere in their brains, then backward conditioning would be as easy as forward conditioning and the most favorable ISI would be very short, almost zero. In actual experiments, however, the control groups show instead that the offset between the arbitrary stimuli in Phase I is critical as in all classical conditioning.

The preconditioning experiments that have appeared so far have used constant ISIs, mostly in the range between 0.5 seconds and 5 seconds, which is a favorable range for aversive conditioning. These are surprisingly long units in neuroelectric time. The implications of the need for long ISIs appear earlier in this chapter and also in chapter 2. If ISIs of this length are necessary, it must be because something has to happen between Sa and Sb that is critical for conditioning; mere exposure to the stimuli is insufficient. Since the experimenter does nothing during the ISI, the critical thing that happens must be something that the subject does. The amount of time is long enough for the subject to make a response; indeed, it is long enough for three to four consecutive rounds of responses and stimuli. It is highly unlikely that preconditioning is based on sensory association. The success of the preconditioning paradigm is evidence for conditioning by S-R contiguity.

S-R CONTIGUITY

Experimental evidence eliminates three of the four prime suspects in Fig. 4.1. This leaves S-R contiguity as the only surviving possibility. As in any good detective story, it is insufficient to eliminate the other suspects. There must also be positive lines of evidence that implicate the remaining alternative. The comparison between Group 1 and Group 2 in preconditioning implicates S-R contiguity. Additional positive lines of evidence depend on an ethological alternative to S* contingency.

Sign Stimuli

Traditional learning theories are based on hedonism, the principle that pleasure and pain govern behavior. In this view, the S* is essential for learning because it causes pleasure or pain and the Sa becomes significant because it predicts the pleasure or pain of the S*. By contrast in ethology, S* is a sign stimulus that plays its vital role in behavior whether or not it evokes pleasure or pain. The essential property of a sign stimulus is that it evokes a response or a pattern of responses called an action pattern. In ethology what matters is what the S* makes the animal do rather than how good or how bad the S* makes the animal feel. An ethological S* acts forward rather than backward.

The three-spined stickleback, for example, is a traditional subject of ethological studies in the field and in the laboratory. The stickleback is a small fish commonly found in English streams and ponds. During the breeding season, male sticklebacks establish individual breeding territories which they defend vigorously against all other male sticklebacks.

How do these simple, little fish know that the invader is another male stickleback? Tinbergen noted that during the breeding season, the underside of males turns a fiery red color. To see if this is the cue that sets off territorial defense, Tinbergen placed individual males in laboratory fish tanks, gave them some time to establish themselves as territorial owners of the tanks, and then placed various objects in the tanks with the fish. The fish promptly attacked any red object. They usually ignored the same objects when they were painted some other color, even when the objects were exact replicas of living male sticklebacks, except for the color (Collias, 1990). Tinbergen also noticed that male sticklebacks living in tanks in front of the windows of the laboratory would launch furious attacks against the window side of their tanks when the fiery red vans of the Royal Mail Service rode past the windows (Tinbergen, 1953b, pp. 65–66). During the breeding season, the redness of objects that invade a territory is a sign stimulus that evokes attack from resident male sticklebacks.

Action Patterns

Ethological action patterns are true patterns in the sense that individual elements can vary while the pattern of behavior remains constant. Variations in the pattern of head-bobbing threat in different species of anoline lizards, for example, were first noted by Darwin. Within a species the extent and timing of the bobbing movements can vary significantly even though the species-specific pattern remains constant (Barlow, 1977). Birds weave string into their species-specific nests and sticklebacks threaten the red vans of the Royal Mail Service but we easily recognize the species-specific pattern of their behavior. These patterns appear in spite of variations in their elements. It is the patterns rather than the individual movements or muscle twitches that are constant in ethological action patterns.

TEMPORAL PATTERNS

The clue that points most directly to S-R contiguity as the mechanism of classical conditioning is the contrast between Group 1 and Group 2 in preconditioning. Why must one stimulus come before the other in classical conditioning and why such a long interval between stimuli? Clearly, something vital happens during the ISI. Since the experimenter does nothing during the ISI, the vital event or events must depend on something that the subject does.

Feedback Systems

The common combination of a furnace and a thermostat is a simple example of a machine that receives inputs from the environment and responds in a way that alters the environment, which in turn alters the input. When the temperature indoors drops below a set point, a switch is thrown that starts the furnace. When the heat from the furnace raises the temperature above another set point, the switch is thrown off, which stops the furnace. This is an effective way to keep the temperature of a home within a comfortable range. It is called a feedback system because the furnace cycles back and forth between on and off phases on the basis of information about cold and hot phases of the environment. Each change in input from the environment switches the machine back to the opposite phase. This feedback system is satisfactory for most homes and offices.

Thermostatic control by feedback is far from ideal, however. In most home furnaces, the fire cannot heat the house directly. It must first heat a chamber called the fire box. A second thermostat turns on the fan when the fire box reaches a suitable temperature. Otherwise, the fan would blow cold air into the house at first. The second thermostat keeps the fan blowing over the hot fire box after the fire goes out and until the fire box cools below another set point. There are better and worse systems, but all systems involve appreciable time lags of this sort. The result, as most people who live with similar systems know, is that there are appreciable fluctuations in the temperature of the house. Sometimes it is hotter and sometimes colder than desirable.
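The effect of these time lags can be illustrated with a small simulation. The sketch below is a toy model, not a description of any real heating system: the set points, heating rate, leak rate, and the `lag` parameter (the number of time steps between a change in the switch and a change in the heat delivered) are all hypothetical values chosen for illustration.

```python
def simulate_feedback(lag, steps=200, low=19.0, high=21.0,
                      outside=5.0, heat=1.0, leak=0.05):
    """Simulate an on/off thermostat with two set points and a delivery lag.

    All parameter values are hypothetical. `lag` stands in for the time the
    fire box takes to warm up and to cool down.
    """
    temp = 20.0
    furnace_on = False
    # Queue of past switch states: the heat delivered now reflects the
    # state of the switch `lag` steps ago.
    pending = [False] * lag
    history = []
    for _ in range(steps):
        # Feedback: the switch reacts only to the current indoor temperature.
        if temp < low:
            furnace_on = True
        elif temp > high:
            furnace_on = False
        pending.append(furnace_on)
        delivering = pending.pop(0)
        # Heat leaks outdoors in proportion to the temperature difference.
        temp += (heat if delivering else 0.0) - leak * (temp - outside)
        history.append(temp)
    return history


for lag in (0, 3):
    history = simulate_feedback(lag)
    print(lag, round(min(history), 1), round(max(history), 1))
```

In this toy model the indoor temperature swings past both set points, and the swing is wider with a lag than without one, because the furnace keeps delivering heat after the switch goes off and keeps delivering nothing after the switch goes on.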

Feed Forward Systems

It would be much better to place the thermostat outside of the house. Then, the signal would arrive at the furnace when the outside temperature fell below a set point, but before the inside temperature had the chance to fall in response to the outside temperature. The furnace action would then be ahead of the environment. Similarly, the outdoor thermostat could turn the furnace off when the outside temperature was rising, thus predicting a rise in indoor temperature and again allowing the furnace to get ahead of the environment. In a truly efficient system, the rate of rise and fall in the outdoor temperature would control the amount of furnace action. Such a system would be a feed forward system and it would keep the indoor temperature much more constant.

To deliver the amount of heat required to compensate for any predicted drop in temperature, a feed forward system would have to take into account the individual size and furnishings of each house, the number of residents and their activities at any time, and so on. Even though it would keep people more comfortable, a feed forward system is too expensive to be cost-effective for most families and offices, so far. There are industrial situations such as oil refineries, however, where a small deviation from the required temperature can create a very expensive mess. Relatively simple, feed forward systems are cost-effective in many complex and demanding industrial applications.
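The contrast between the two kinds of control can be sketched in a few lines. The model below is a deliberately simplified illustration: the outdoor temperature cycle, the leak rate, and the controller functions are hypothetical, and the feed forward controller responds to the level of the outdoor temperature rather than to its rate of change. It also assumes that the controller knows the leak rate of the house exactly, which is the kind of house-specific information that makes real feed forward systems expensive.

```python
import math


def outside_temperature(step):
    """Hypothetical outdoor temperature cycle in degrees Celsius."""
    return 5.0 + 5.0 * math.sin(2 * math.pi * step / 100)


def simulate(controller, steps=300, leak=0.05, start=20.0):
    """Run a toy house model and return the indoor temperature history."""
    temp = start
    history = []
    for step in range(steps):
        outside = outside_temperature(step)
        heat = controller(temp, outside)
        temp += heat - leak * (temp - outside)
        history.append(temp)
    return history


def make_feedback(low=19.0, high=21.0, power=1.5):
    """On/off controller that reacts only to the indoor temperature."""
    state = {"on": False}

    def controller(temp, outside):
        if temp < low:
            state["on"] = True
        elif temp > high:
            state["on"] = False
        return power if state["on"] else 0.0

    return controller


def make_feed_forward(target=20.0, leak=0.05):
    """Controller that reacts to the outdoor temperature before it is felt indoors.

    It supplies the heat the house is about to lose, which requires knowing
    the leak rate of this particular house in advance (an assumption here).
    """
    def controller(temp, outside):
        return max(0.0, leak * (target - outside))

    return controller


def spread(history):
    """Difference between the highest and lowest indoor temperature."""
    return max(history) - min(history)


print(round(spread(simulate(make_feedback())), 2))
print(round(spread(simulate(make_feed_forward())), 2))
```

In this idealized model the feed forward controller holds the indoor temperature essentially constant, while the feedback controller lets it cycle between its set points. A mismatch between the assumed and the actual leak rate would reintroduce error, which is why practical systems need detailed information about each installation.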

Machines that fit the E-S-Q-R paradigm are devices that synchronize themselves with the environment. A machine that responds to what has just happened can be useful, but a machine that responds to what is about to happen is much better. If the environment fluctuates in predictable cycles, machines can respond to what is about to happen. In modern times, machines can learn regularly repeating cycles and program themselves to anticipate the environment.

Living systems also respond to regularly repeating cycles in the environment. New England maple trees transplanted to California lose their leaves at about the same time as their conspecifics back in New England, as if in sympathy, even though winters are much milder in California. The trees are responding to changes in the proportion of daylight to darkness because that predicts temperature changes back in New England. Most species of animals come into breeding condition when changes in the day-night cycle predict favorable weather for breeding and raising their young. There are long seasonal cycles and shorter daily cycles. In the jet age, many human beings experience jet lag when suddenly transported to a point on the globe that is out of sync with the cycle of night and day back home.

There are also shorter cycles. Pavlov’s dogs could anticipate food fairly accurately when it regularly arrived 10, 20, or even 30 minutes after the onset of a CS. Human beings often estimate intervals of 10, 20, or 30 minutes with reasonable accuracy without consulting external clocks. There must be internal cycles of physiological events that permit animals to “tell time.” Indeed, a tone or a light evokes a whole series of responses in most animals including human beings. With dogs in the harness of a conditioning experiment, for example, the onset of a sound evokes heart rate and breathing rate changes. Slightly later, the head and ears orient toward the source of sound. Depending on what the dog sees or hears next, other responses follow, including a general damping of the physiological responses and return to a relaxed posture if no particular stimulus follows. Quite regular patterns of this sort appear in all of the animals studied and these could easily mark the time between the onset of the CS and the beginning of the UCR, or the time between any two arbitrary stimuli, Sa and Sb.

Anticipatory Responses

Anticipation seems to imply a cognitive, top-down principle whereby complex decisions in the brain result in commands to lower motor centers. On the contrary, however, relatively simple, bottom-up, feed forward systems can control temperature under the complex and demanding conditions of an oil refinery. Many common biological action patterns also depend on relatively simple, bottom-up, feed forward anticipation.

To jump, human beings and other animals must extend their leg muscles, but they cannot extend their leg muscles without first flexing them. Jumping is impossible without anticipatory leg flexion. To throw a ball or to hit it with a racket, athletes must swing an arm forward, but swinging forward is impossible or very weak without first swinging backward. These are only simple cases; almost any significant act consists of a series of movements that must be made in sequence. When serious athletes and musicians practice, they practice the rhythmic sequences of their performances; the more complex the sequences the more they must practice them.

Backward Conditioning

When the UCS comes before the CS, the ISI is negative and the procedure is called backward conditioning. If, as Pavlov and so many latter-day Pavlovians have insisted, the CS must be a signal that predicts the UCS, then backward conditioning should be impossible. Until quite recently, there was virtually unanimous agreement that backward conditioning is impossible.

In spite of the traditional view, a series of modern experiments has demonstrated robust backward conditioning (Ayres, Axelrod, Mercker, Muchnik, & Vigorito, 1985; Ayres et al., 1987; Keith-Lucas & Guttman, 1975; Spetch, Wilkie, & Pinel, 1981; Van Willigen et al., 1987). The result is easy to demonstrate experimentally. The secret is in the number of trials. Backward conditioning readily appears after only one presentation of CS and UCS and within the first few trials (Heth, 1976). With repeated trials, the almost universal procedure since Pavlov, backward conditioning disappears and a negative ISI usually reduces responding.

It makes little difference what the ISI is within the first few trials. All intervals seem to be equally favorable if the CS and the UCS are not too far apart. Pavlov and his students and practically everyone else in this field until recent times believed that the first few trials represented unreliable performance, which gradually stabilized to form the traditional curve relating ISI to strength of conditioning. The stable curve that they got after dozens, often hundreds, of trials was the true curve for them. If the CS is acting as a signal for the coming of the UCS, then conditioning should be stronger as the time between CS and UCS gets shorter up to some relatively brief interval, and then decline steeply to a level near zero when the CS comes after the UCS.

RHYTHMIC PATTERNS

Modern experiments on backward conditioning show that the typical ISI curve shown in Fig. 2.3 only appears after many trials. Why is repetition so important? It is misleading to think of the dog in Pavlov’s experiment in abstract terms, either responding or not responding. As we have seen in Zener’s rich descriptions, live dogs in an actual experiment do much more than salivate or stop salivating. They fidget and stretch and scratch themselves and even fall asleep in the harness between trials.

“Between trials” is the critical concept. From the dog’s point of view the experimental period is punctuated by the UCS and the CS, probably mostly by the UCS. Each appearance of the UCS is also a signal that the UCS, be it food or shock, will not appear for a while. Even when the time between trials varies randomly, the UCS is still unlikely to be followed immediately by a second UCS. So, the dog is free to stretch and scratch and so on, for a little while at least. If that is what the dog is doing when a backward CS arrives, then that is the response that gets conditioned to the CS by S-R contiguity. If the only CR that the experimenter measures is salivation or leg withdrawal, then increases in another response appear on the record as decreases in the CR. The result looks as though a backward ISI depresses or even inhibits conditioning. When experimenters measure other responses, however, it becomes clear that the backward CS is evoking other conditioned responses (Janssen, Farley, & Hearst, 1995).

The traditional ISI curve requires many trials because the dogs must learn the rhythm of the experimental procedure. By definition, only repeating temporal patterns can form rhythms. That is why the traditional curve fails to appear after only one or a very few trials. Backward conditioning is only “backward” if the experiment only measures a single type of response and that is an anticipatory response such as salivation or leg withdrawal. When UCSs follow each other at intervals in a rhythmically repeating pattern, then each UCS ends just before the beginning of an interval without any UCS. Responses, such as fidgeting or relaxing, that appear during the interval after a UCS become conditioned to a CS that appears during that interval. This conditioning is as “forward” as the conditioning of anticipatory responses to a CS that appears just before the UCS.

Delay Conditioning

Longer delays between CS and UCS further illustrate the role of temporal patterns in conditioning. Pavlov discovered delay conditioning in the following way. After conditioning dogs to salivate with an interstimulus interval of about 2 seconds between a metronome CS and food UCS, he increased the ISI to 6 seconds. At first the dogs salivated within 2 seconds of the metronome as they had with the original ISI. With repeated trials, the dogs salivated later until they were salivating about 4 seconds after the CS, just before the time that the food arrived with the new ISI. Pavlov repeated this process, gradually increasing the interval, in some cases increasing it to 30 minutes. Each time Pavlov increased the interval, the dogs at first salivated just before the time that the food used to arrive and then adjusted to the new rhythm so that again they were salivating just before the new arrival time.

Delay conditioning shows that time can serve as a stimulus. Most animals can judge intervals of time, on the basis of regularly repeating physiological cycles. Outside the laboratory, dogs must synchronize their behavior to the critical events of daily life. In the conditioning laboratory, dogs synchronize themselves to the rhythm of the experimental procedure. When the CS is reliably related to the time of arrival of the UCS, then the CS helps the dog to synchronize his behavior. If the food regularly arrives a fraction of a second after the CS, then the dog salivates almost immediately after the arrival of the CS. If the food regularly arrives 10 minutes after the CS, then the dog salivates about 10 minutes after the CS and does something else while waiting. The dog is always responding to the food or to the absence of food.

The early experiments that failed to show backward conditioning only measured CRs that appeared within a short time after the CS, usually about half a second, seldom more than one second. This is because these experimenters always defined the CR as a response to the CS, rather than as a response to the food. Figure 2.2 is typical of most conditioning records in showing that the latency of the CR is significantly longer than the latency of the OR or the UCR. This is only slow if the CR is supposed to be a response to the CS. As an anticipatory response to the UCS, the CR is well timed. An anticipatory response to the UCS would be ill timed indeed if it appeared 10 or 20 minutes before the UCS.

S-R SEQUENCES

Conditioning is a mechanism that synchronizes the animal to its environment. If food regularly follows a stimulus like a light, then the animal can salivate at a favorable time. If the food follows very soon after the light, then the dog can start salivating right away. If the food follows 20 minutes after the light, then the animal can salivate about 20 minutes after the light and do other things in the interim. Viewed in this way, conditioning permits human and nonhuman animals to get in step with the regularly repeating events of their lives (see also Akins, Domjan, & Gutiérrez, 1994; Kehoe, P. S. Horne, & A. J. Horne, 1993; Kehoe, P. S. Horne, Macrae, & A. J. Horne, 1993). This section shows how conditioning by S-R contiguity can accomplish this.

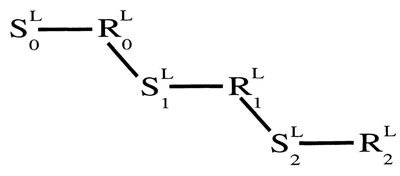

Most, probably all, stimuli evoke a series of reactions that appear in a regular pattern. Each response alters the external or internal environment in some way. Increases in breathing or heart rate create stimuli. Looking toward the source of the light or turning the ears in that direction also create new stimuli, and so on. The stimulus created by each response is the stimulus that evokes the next response in the chain. Different initiating stimuli (lights, tones, shocks) initiate distinctively different S—R chains, which could serve as timing devices in temporal conditioning. Animals could use this feed forward mechanism to anticipate future events.

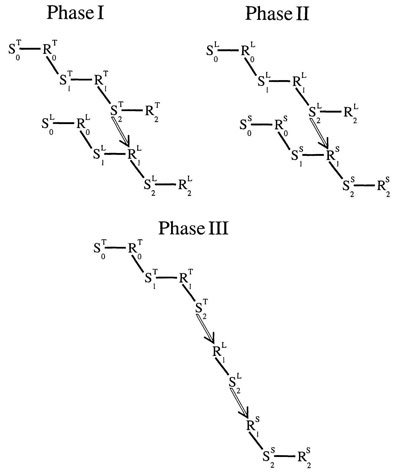

Simultaneous conditioning, when CS and UCS start at the same time, is very difficult if not impossible. The most favorable ISIs are a half a second or more. Simple reaction times are between one eighth and one sixth of a second. An ISI of half a second allows enough time for three or more units, of the form: S0—R0—S1—R1—S2—R2—S3—R3.
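The arithmetic behind this estimate can be written out as follows, taking the simple reaction times given above as the duration of one S-R unit (a simplifying assumption made here for illustration):

```latex
\[
\frac{0.5\ \text{s}}{\tfrac{1}{6}\ \text{s per unit}} = 3\ \text{units},
\qquad
\frac{0.5\ \text{s}}{\tfrac{1}{8}\ \text{s per unit}} = 4\ \text{units}.
\]
```

On these figures, an ISI of half a second leaves room for three to four consecutive S-R units.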

Figure 4.3 illustrates a chain of S—R units that might be evoked by a light. The illustrations in the remainder of this chapter show only three links in a chain. That is an arbitrary number chosen for convenience of illustration. There is time for at least three such links in the ISI (as explained in chap. 2). Very likely there are more than three links in most cases of conditioning.

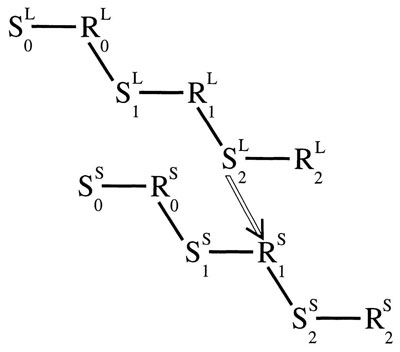

Figure 4.4 shows the same type of chain initiated by a light in Fig. 4.3 with a similar chain initiated slightly later by a shock. The links in the shock chain could be a startle posture followed by a sharp rise in breathing and heart rate, followed by agitation, followed by relaxation, and so on. In Fig. 4.4, S2 in the light chain comes just before R1 in the shock chain. In this way, the chain initiated by the light could mark the time that R1 in the shock chain usually happens. There is a hollow line drawn between S2 of the light chain and R1 of the shock chain to indicate conditioning. According to this view, R1 (from the shock chain) is conditioned to S2 (from the light chain) by S-R contiguity, alone. Once evoked, however, R1 of the shock results in S2 of the shock, which in turn evokes the rest of the shock chain. In this way, a part of the shock chain is evoked whenever the light chain is evoked.

The alternative is the S-S* view that the response to the shock becomes conditioned to the light because the arbitrary stimulus of the light predicts the arrival of the biologically significant shock. But, as the preconditioning experiments demonstrate, conditioning can occur between two arbitrary stimuli in Phase I of preconditioning before the experimenter introduces any biologically significant S*.

FIG. 4.3. A chain of S—R links initiated by a light. Copyright © 1997 by R. Allen Gardner.

FIG. 4.4. A conditioned S—R link between a light and a shock. Copyright © 1997 by R. Allen Gardner.

Figure 4.5 shows how the same process could take place with a tone and a light without any privileged, biologically significant stimulus, such as painful shock. With S-R contiguity, all that is necessary is a regularly repeating temporal pattern. An earlier link in one chain can always be conditioned to a later link in another chain. Perhaps, this is because an earlier S-R link is stronger than a later link; an R1 in the chain that starts earlier is always stronger than an R2 in the chain that starts later. The hollow line between S2 in the tone chain and R1 in the light chain represents the conditioned link between the two chains.

The alternative to this view is the S-S contiguity view that any two stimuli can be associated by contiguity. But, if this is true, why do the two stimuli have to be offset by an ISI as long as one half of a second? Unlike the S-S mechanism, the S-R contiguity mechanism is compatible with the need for a forward interval of at least a half second between Sa and Sb.

Now, the conditions in Fig. 4.5 are the conditions of Phase I of the preconditioning paradigm pairing a tone and a light before introducing any S*, and the conditions in Fig. 4.4 are the conditions of Phase II, which is when the experimenter first introduces S*.

Figure 4.6 puts together all three phases of the preconditioning experiment to show how the tone could evoke part of the shock chain by a conditioned link between the tone chain and the light chain followed by a conditioned link between the light chain and the shock chain, even though the tone and shock never appeared together.
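The linking of chains described here can also be sketched as a small program. This is only a toy model of the account in the text: the chain names, the choice of links running from S0-R0 to S3-R3, and the function `evoked` are illustrative assumptions, and the list it returns groups responses by chain rather than in strict temporal order.

```python
LAST_LINK = 3  # chains run from S0-R0 to S3-R3; an arbitrary, illustrative length


def evoked(chain, links, start=0):
    """List the responses evoked once `chain` is entered at response `start`.

    `links` maps (chain, stimulus index) to (other chain, response index):
    a conditioned link by which a stimulus in one chain evokes a response
    in another chain.
    """
    responses = []
    for i in range(start, LAST_LINK + 1):
        responses.append(f"{chain} R{i}")
        # Response Ri produces stimulus S(i+1), which may carry a conditioned
        # link to a response in another chain.
        link = links.get((chain, i + 1))
        if link is not None:
            other, j = link
            responses.extend(evoked(other, links, j))
    return responses


# Phase I: S2 of the tone chain becomes linked to R1 of the light chain.
phase_1 = {("tone", 2): ("light", 1)}
# Phase II: S2 of the light chain becomes linked to R1 of the shock chain.
phase_2 = {**phase_1, ("light", 2): ("shock", 1)}

print(evoked("tone", phase_1))
print(evoked("tone", phase_2))
```

After Phase I alone, the tone evokes part of the light chain but nothing from the shock chain. After Phase II, the same tone evokes the shock chain from R1 onward, although no link was ever formed directly between tone and shock.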

FIG. 4.5. A conditioned S—R link between a tone and a light. Copyright © 1997 by R. Allen Gardner.

FIG. 4.6. Conditioning by contiguity in the preconditioning paradigm. Copyright © 1997 by R. Allen Gardner.

Contrast With Stimulus Substitution

The S-R mechanism frees conditioning from Pavlov’s principle of stimulus substitution. In everyday life, stimulus substitution would inevitably lead to maladaptive behavior. It may be adaptive to begin salivating to Sa if food is coming in about half a second. And, it may also be adaptive to begin salivating to Sb if Sa is coming in half a second. But, a point must come when there is an Sn that actually predicts that food will not arrive for some time. That is, it is adaptive for a dog to begin salivating when it is just about to eat, but it would be maladaptive for a dog to begin salivating at the beginning of a hunt or on first detecting prey.

Guests dining at their favorite restaurant may very well begin salivating when their waiter brings their food to the table. Should they begin salivating before that? Should they salivate when they see the waiter go to the kitchen with their orders? Should they salivate when the waiter writes their orders? When they read the menu? When the hostess seats them? When they enter the restaurant? When they drive into the parking lot? When they see the restaurant down the street? When they start out from home? When they decide to go out for dinner? All of these stimuli certainly predict a good dinner, but most occur at inappropriate times for salivation. In fact, most of them predict a significantly long interval before the arrival of food. Not only the experimental evidence, but also the common sequences of learned behavior in daily life contradict Pavlov’s principle of higher-order conditioning.

The mechanism of S-R contiguity outlined here shows how the learner can make appropriately different responses to different stimuli in a predictable sequence. This mechanism can extend forward and backward in either direction and it can incorporate new links into established sequences. In the view of this book, that is the function of conditioning.

SUMMARY

Scientists are looking for the most basic unit of learning, the atom of conditioning. With this basic unit they can build up a system that applies to all forms of learning, to training, teaching, and even problem-solving strategies. In a century of research and theorizing, four basic mechanisms emerged as candidates for this role in classical conditioning, and these four probably exhaust the possibilities. These mechanisms are S-S, S-S*, S-R, and S-R-S*.

This chapter considers a mass of evidence from studies of the ISI. These studies show that, after repeated pairing, the most favorable interval between CS and UCS is long in neural time and asymmetrical; CS before UCS. These abundantly documented findings should eliminate one candidate, S-S.

The three remaining candidates each make use of the ISI. The S-S* mechanism needs the interval so that CS can signal the coming of UCS. The S-R-S* mechanism needs the interval so that the animal can do something that the S* can reward—something that increases the pleasure or decreases the pain of the S* when it comes. The S-R mechanism also needs the interval for the animal to make a response, but an S-R mechanism avoids the need for contingent pleasure or pain.

The evidence of omission experiments and preconditioning experiments eliminates S* contingency as necessary for classical conditioning. This should eliminate S-S* and S-R-S* as candidates.

According to S-R contiguity, it is a stimulus and a response that must be paired. The dog in Pavlov’s experiment first salivates after food arrives. With repeated feeding, the dog starts to salivate before the food arrives. If the tone precedes the food by a favorable interval, then the procedure induces the dog to salivate soon after the tone. According to S-R contiguity, the salivation becomes conditioned to the tone because it occurs soon after the tone. That contiguity alone is sufficient for conditioning. With S-R contiguity alone, an animal can synchronize its behavior to rhythmically repeating events in its environment.

There is a fifth mechanism, R-S* contingency, first proposed by Skinner (1938), that continues to receive some support (Mackintosh, 1983; Rescorla, 1987). The R-S* mechanism is only appropriate for instrumental conditioning, so this book deals with it in later chapters concerned with the mechanisms of instrumental conditioning.