CHAPTER 7: The Binary Search Tree ADT

© Ake13bk/Shutterstock

Chapter 5 introduced the Collection ADT with core operations add, remove, get, and contains. Chapter 6 presented the List ADT—a list is a collection that also supports iteration and indexing. In this chapter we present another variation of a collection. The distinguishing features of a binary search tree are that it maintains its elements in increasing order and it provides, in the general case, efficient implementations of all the core operations. How can it do this?

The binary search tree can be understood by comparing a sorted linked list to a sorted array:

Consider the steps needed to add the value 985 to each of these structures. For the linked list we must perform a linear search of the structure in order to find the point of insertion. That is an O(N) operation. However, once the insertion point is discovered, we can add the new value by creating a new node and rearranging a few references, an O(1) operation. On the other hand, the sorted array supports the binary search algorithm (see Sections 1.6 and 3.3, “Comparing Algorithms: Order of Growth Analysis” and “Recursive Processing of Arrays”) allowing us to quickly find the point of insertion, an O(log2N) operation. Even though we quickly find the insertion point we still need to shift all the elements “to the right” of the location one index higher in the array, an O(N) operation. In summation, the linked list exhibits a slow find but a fast insertion, whereas the array is the opposite, a fast find but a slow insertion.

Can we combine the fast search of the array with the fast insertion of the linked list? The answer is yes!—with a binary search tree. A binary search tree is a linked structure that allows for quick insertion (or removal) of elements. But instead of one link per node it uses two links per node as shown below on the right where we display the sorted list 5, 8, 12, 20, and 27 as a binary search tree, along with equivalent array and linked list representations.

As you will see in this chapter, the availability of two links allows us to embed a binary search within the linked structure, thus combining fast binary search with fast node insertion—the best of both worlds. The binary search tree provides us with a data structure that retains the flexibility of a linked list while allowing quicker [O(log2N) in the general case] access to any node in the list.

A binary search tree is a special case of a more general data structure, the tree. Many variations of the tree structure exist. We begin this chapter with an overview of tree terminology and briefly discuss general tree implementation strategies and applications. We then concentrate on the design and use of a binary search tree.

7.1 Trees

Each node in a singly linked list may point to one other node: the one that follows it. Thus, a singly linked list is a linear structure; each node in the list (except the last) has a unique successor. A tree is a nonlinear structure in which each node is capable of having multiple successor nodes, called children. Each of the children, being nodes in a tree, can also have multiple child nodes, and these children can in turn have many children, and so on, giving the tree its branching structure. The “beginning” of the tree is a unique starting node called the root.

Trees are useful for representing lots of varied relationships. Figure 7.1 shows four example trees. The first represents the hierarchical inheritance relationship among a set of Java classes, the second shows a naturally occurring tree—a tree of cellular organisms, the third is a game tree used to analyze choices in a turn-taking game, and the fourth shows how a simply connected maze (no loops) can be represented as a tree.

Trees are recursive structures. We can view any tree node as being the root of its own tree; such a tree is called a subtree of the original tree. For example, in Figure 7.1a the node labeled “Abstract List” is the root of a subtree containing all of the Java list-related classes.

There is one more defining quality of a tree—a tree’s subtrees are disjoint; that is, they do not share any nodes. Another way of expressing this property is to say that there is a unique path from the root of a tree to any other node of the tree. In the structure below these rules are violated. The subtrees of A are not disjoint as they both contain the node D; there are two paths from the root to the node containing D. Therefore, this structure is not a tree.

As you can already see, our tree terminology borrows from both genealogy; for example, we speak of “child” nodes, and from botany, for example, we have the “root” node. Computer scientists tend to switch back and forth between these two terminological models seamlessly. Let us expand our tree vocabulary using the tree of characters in Figure 7.2 as an example.

Figure 7.1 Example trees

The root of the tree is A (from the botany point of view we usually draw our trees upside down!). The children of A are B, F, and X. Since they are A’s children it is only natural to say that A is their parent. We also say that B, F, and X are siblings. Continuing with the genealogical trend, we can speak of a node’s descendants (the children of a node, and their children, and so on recursively) and the ancestors of a node (the parent of the node, and their parent, and so on recursively). In our example, the descendants of X are H, Q, Z, and P, and the ancestors of P are H, X, and A. Obviously, the root of the tree is the ancestor of every other node in the tree, but the root node has no ancestors itself.

Figure 7.2 Sample tree

Figure 7.3 Tree terminology

A node can have many children but only a single parent. In fact, every node (except the root) must have a single parent. This is a consequence of the “all subtrees of a node are disjoint” rule—you can see that in the “not a tree” example on page 423 that D has two parents.

If a node in the tree has no children, it is called a leaf. In our example tree in Figure 7.2 the nodes C, T, F, P, Q, and Z are leaf nodes. Sometimes we refer to the nonleaf nodes as interior nodes. The interior nodes in our example are A, B, X, and H.

The level of a node refers to its distance from the root. In Figure 7.2, the level of the node containing A (the root node) is 0 (zero), the level of the nodes containing B, F, and X is 1, the level of the nodes containing C, T, H, Q, and Z is 2, and the level of the node containing P is 3. The maximum level in a tree determines its height so our example tree has a height of 3. The height is equal to the highest number of links between the root and a leaf.

Tree Traversals

To traverse a linear linked list, we set a temporary reference to the beginning of the list and then follow the list references from one node to the other, until reaching a node whose reference value is null. Similarly, to traverse a tree, we initialize our temporaryreference to the root of the tree. But where do we go from there? There are as many choices as there are children of the node being visited.

Breadth-First Traversal

Let us look at two important related approaches to traversing a general tree structure: breadth-first and depth-first. We think of the breadth of a tree as being from side-to-side and the depth of a tree as being from top-to-bottom. Accordingly, in a breadth-first traversal we first visit the root of the tree.1 Next we visit, in turn, the children of the root (typically from leftmost to rightmost), followed by visiting the children of the children of the root and so on until all of the nodes have been visited. Because we sweep across the breadth of the tree, level by level, this is also sometimes called a level-order traversal.

Figure 7.4a shows that in a breadth-first traversal of our sample tree the order the nodes are visited is A B F X C T H Q Z P. But, how can we implement this traversal? As part of visiting a node of the tree we must store references to its children so that we can visit them later in the traversal. In our example, when visiting node A, we need to store references to nodes B, F, and X. When we next visit node B we need to store references to nodes C and T—but they must be stored “behind” the previously stored F and X nodes so that nodes are visited in the desired order. What ADT supports this first in, first out (FIFO) approach? The Queue ADT. Here is a breadth-first traversal algorithm:

Figure 7.4 Tree Traversals

The Breadth-First Traversal algorithm begins by making sure the tree is not empty—if it is, no processing occurs. It is almost always a good idea to check for the degenerate case of an empty tree like this at the start of tree processing. Once it is determined that the tree is not empty, the algorithm enqueues the root node of the tree. We could just visit the root node at this point, but by enqueuing it, we set up the queue for the processing loop that follows. That loop repeatedly pulls a node off the queue, visits it, and then enqueues its children, until it runs out of nodes to process—that is, until the queue is empty.

Table 7.1 shows a trace of the algorithm on the sample tree of Figure 7.2. Trace the algorithm yourself and verify the contents of the table.

Traversal of our collection structures are needed in case we must visit every node in the structure to perform some processing. Suppose, for example, we had a tree of bank account information and wanted to sum all the amounts of all the accounts. A breadth-first traversal of the tree in order to retrieve all the account information is as good as any other traversal approach in this situation.

Besides allowing us to visit every node, for certain applications the breadth-first approach can provide additional benefits. For example, if our tree represents choices in a game (see Figure 7.1c) a breadth-first approach could provide a fast way to determine our next move. It amounts to looking at all possible next moves, and if desired all possible responses, and so on one level at a time. The point is we can avoid getting stuck traveling deeply down a tree path that represents a never-ending sequence of moves if we use breadth-first search. Using trees to represent sequences of alternatives and their ramifications, such as those found in a game, and traversing such trees using the breadth-first search to make a good choice among the alternatives, is a common artificial intelligence technique.

Table 7.1 Breadth-First Search Algorithm Trace

| After Loop Iteration | Node | Visited So Far | Queue |

|---|---|---|---|

| 0 | A | ||

| 1 | A | A | B F X |

| 2 | B | A B | F X C T |

| 3 | F | A B F | X C T |

| 4 | X | A B F X | C T H Q Z |

| 5 | C | A B F X C | T H Q Z |

| 6 | T | A B F X C T | H Q Z |

| 7 | H | A B F X C T H | Q Z P |

| 8 | Q | A B F X C T H Q | Z P |

| 9 | Z | A B F X C T H Q Z | P |

| 10 | P | A B F X C T H Q Z P | null |

Depth-First Traversal

A depth-first traversal, as might be expected, is the counterpart of the breadth-first traversal. Rather than visiting the tree level by level, expanding gradually away from the root, with a depth-first traversal we move quickly away from the root, traversing as far as possible along the leftmost path, until reaching a leaf, and then “backing up” as little as needed before traversing again down to a leaf. A depth-first traversal of a maze tree (see Figure 7.1d) represents going as far as possible down one corridor of the maze before backing up a little and trying another path—this might be a good approach if we need to escape the maze as soon as possible and are willing to gamble on being lucky, as opposed to a breadth-first approach that is almost guaranteed to take a long time unless we are already very close to an exit.

Figure 7.4b shows the order in which we visit nodes with a depth-first traversal of our sample tree: A B C T F X H P Q Z.

The algorithm is similar to that of the breadth-first traversal. Again, as part of visiting a node of the tree, we must store references to its children so that they can be visited later in the traversal. In our example, when visiting node A, we again need to store references to nodes B, F, and X. Upon visiting node B we need to store references to nodes C and T— but they must be stored “ahead of” the previously stored F and X nodes so that nodes are visited in the desired order. What ADT supports this last in, first out (LIFO) approach? The Stack ADT. When pushing the children of a node onto the stack we will do so from “right to left” so that they are removed from the stack in the desired “left to right” order. Here is a depth-first traversal algorithm:

Tracing the algorithm on our sample tree is left as an exercise.

When we study graphs in Chapter 10 we will again use a queue to support a breadth-first traversal and a stack to support a depth-first traversal.

7.2 Binary Search Trees

As demonstrated in Figure 7.1, trees are very expressive structures, used to represent lots of different kinds of relationships. In this chapter, we concentrate on a particular form of tree: the binary tree. In fact, we concentrate on a particular type of binary tree: the binary search tree. The binary search tree provides an efficient implementation of a sorted collection.

Binary Trees

A binary tree is a tree where each node is capable of having at most two children. Figure 7.5 depicts a binary tree. The root node of this binary tree contains the value A. Each node in the tree may have zero, one, or two children. The node to the left of a node, if it exists, is called its left child. For instance, the left child of the root node of our example tree contains the value B. The node to the right of a node, if it exists, is its right child. The right child of the root node contains the value C.

In Figure 7.5, each of the root node’s children is itself the root of a smaller binary tree, or subtree. The root node’s left child, containing B, is the root of its left subtree, whereas the right child, containing C, is the root of its right subtree. In fact, any node in the tree can be considered the root node of a binary subtree. The subtree whose root node has the value B also includes the nodes with values D, G, H, and E.

Figure 7.5 A binary tree

Our general tree terminology also applies to binary trees. The level of a node refers to its distance from the root. In Figure 7.5, the level of the node containing A (the root node) is 0 (zero), the level of the nodes containing B and C is 1, the level of the nodes containing D, E, and F is 2, and the level of the nodes containing G, H, I, and J is 3. The maximum level in a tree determines its height.

For a binary tree the maximum number of nodes at any level N is 2N. Often, however, levels do not contain the maximum number of nodes. For instance, in Figure 7.5, level 2 could contain four nodes, but because the node containing C in level 1 has only one child, level 2 contains only three nodes. Level 3, which could contain eight nodes, has only four. We could make many differently shaped binary trees out of the 10 nodes in this tree. A few variations are illustrated in Figure 7.6. It is easy to see that the maximum number of levels in a binary tree with N nodes is N (counting level 0 as one of the levels). But what is the minimum number of levels? If we fill the tree by giving every node in each level two children until running out of nodes, the tree has ∟log2N∠ + 1 levels (Figure 7.6a).2 Demonstrate this fact to yourself by drawing “full” trees with 8 and 16 nodes. What if there are 7, 12, or 18 nodes?

The height of a tree is the critical factor in determining the efficiency of searching for elements. Consider the maximum-height tree shown in Figure 7.6c. If we begin searching at the root node and follow the references from one node to the next, accessing the node with the value J (the farthest from the root) is an O(N) operation—no better than searching a linear list! Conversely, given the minimum-height tree depicted in Figure 7.6a, to access the node containing J, we have to look at only three other nodes—the ones containing E, A, and G—before finding J. Thus, if the tree is of minimum height, its structure supports O(log2N) access to any element.

Figure 7.6 Binary trees

The arrangement of the values in the tree pictured in Figure 7.6a, however, does not lend itself to quick searching. Suppose we want to find the value G. We begin searching at the root of the tree. This node contains E, not G, so the search continues. But which of its children should be inspected next, the right or the left? There is no special order to the nodes, so both subtrees must be checked. We could use a breadth-first search, searching the tree level by level, until coming across the value we are searching for. But that is an O(N) search operation, which is no more efficient than searching a linear linked list.

Binary Search Trees

To support O(log2N) searching, we add a special property to our binary tree, the binary search property, based on the relationship among the values of its elements. We put all of the nodes with values smaller than or equal to the value in the root into its left subtree, and all of the nodes with values larger than the value in the root into its right subtree. Figure 7.7 shows the nodes from Figure 7.6a rearranged to satisfy this property. The root node, which contains E, references two subtrees. The left subtree contains all values smaller than or equal to E, and the right subtree contains all values larger than E.

Figure 7.7 A binary search tree

When searching for the value G, we look first in the root node. G is larger than E, so G must be in the root node’s right subtree. The right child of the root node contains H. Now what? Do we go to the right or to the left? This subtree is also arranged according to the binary search property: The nodes with smaller or equal values are to the left and the nodes with larger values are to the right. The value of this node, H, is greater than G, so we search to its left. The left child of this node contains the value F, which is smaller than G, so we reapply the rule and move to the right. The node to the right contains G—success.

A binary tree with the binary search property is called a binary search tree. As with any binary tree, it gets its branching structure by allowing each node to have a maximum of two child nodes. It gets its easy-to-search structure by maintaining the binary search property: The left child of any node (if one exists) is the root of a subtree that contains only values smaller than or equal to the node. The right child of any node (if one exists) is the root of a subtree that contains only values that are larger than the node.

Four comparisons instead of a maximum of ten does not sound like such a big deal, but as the number of elements in the structure increases, the difference becomes impressive. In the worst case—searching for the last node in a linear linked list—we must look at every node in the list; on average, we must search half of the list. If the list contains 1,000 nodes, it takes 1,000 comparisons to find the last node. If the 1,000 nodes were arranged in a binary search tree of minimum height, it takes no more than 10 comparisons— └log21000┘ + 1 = 10—no matter which node we were seeking.

Binary Tree Traversals

We discussed two well-known traversal approaches for the general tree structure, the breadth-first and depth-first traversals. Due to the special structure of binary trees, where each node has a left subtree and a right subtree, there are additional traversal orders available for binary trees. Three common ones are defined in this subsection.

Our traversal definitions depend on the relative order in which we visit a root and its subtrees. Here are three possibilities:

Preorder traversal. Visit the root, visit the left subtree, visit the right subtree

Inorder traversal. Visit the left subtree, visit the root, visit the right subtree

Postorder traversal. Visit the left subtree, visit the right subtree, visit the root

The name given to each traversal specifies where the root itself is processed in relation to its subtrees. You might note that these definitions are recursive—we define traversing a tree in terms of traversing subtrees.

We can visualize each of these traversal orders by drawing a “loop” around a binary tree as shown in Figure 7.8. Before drawing the loop, extend the nodes of the tree that have fewer than two children with short lines so that every node has two “edges.” Then draw the loop from the root of the tree, down the left subtree, and back up again, hugging the shape of the tree as you go. Each node of the tree is “touched” three times by the loop (the touches are numbered in Figure 7.8): once on the way down before the left subtree is reached; once after finishing the left subtree but before starting the right subtree; and once on the way up, after finishing the right subtree. To generate a preorder traversal, follow the loop and visit each node the first time it is touched (before visiting the left subtree). To generate an inorder traversal, follow the loop and visit each node the second time it is touched (in between visiting the two subtrees). To generate a postorder traversal, follow the loop and visit each node the third time it is touched (after visiting the right subtree). Use this method on the example tree in Figure 7.9 and see whether you agree with the listed traversal orders.

You may have noticed that an inorder traversal of a binary search tree visits the nodes in order from the smallest to the largest. Obviously, this type of traversal would be useful when we need to access the elements in ascending key order—for example, to print a sorted list of the elements. There are also useful applications of the other traversal orders. For example, the preorder traversal (which is identical to depth-first order) can be used to duplicate the search tree—traversing a binary search tree in preorder and adding the visited elements to a new binary search tree as you go, will recreate the tree in the same exact shape. Since postorder traversal starts at the leaves and works backwards toward the root, it can be used to delete the tree, node by node, without losing access to the rest of the tree while doing so—this is analogous to the way a tree surgeon brings down a tree branch by branch, starting way out at the leaves and working backwards toward the ground, and is especially important when using a language without automatic garbage collection. As another example, preorder and postorder traversals can be used to translate infix arithmetic expressions into their prefix and postfix counterparts.

Figure 7.8 Visualizing binary tree traversals

Figure 7.9 Three binary tree traversals

We mentioned that the preorder traversal results in visiting the nodes of the binary tree in a depth-first order. What about breadth-first (also known as level) order? We do not implement a breadth-first search for our binary search trees. However, an exercise asks you to explore and implement that option.

7.3 The Binary Search Tree Interface

In this section, we specify our Binary Search Tree ADT. As for stacks, queues, collections, and lists, we use the Java interface construct to formalize the specification.

Our binary search trees are defined to be similar to the lists of Chapter 6. Like the lists, our binary search trees will implement this text’s CollectionInterface and the Java Library’s Iterable interface. We make the same basic assumptions for our binary search trees as we did for our lists—they are unbounded, allow duplicate elements, and disallow null elements. In fact, our binary search trees act very much like sorted lists because the default iteration returns elements in increasing natural order. They can be used by some applications that require a sorted list.

So, what are the differences between the sorted list and the binary search tree? First, our binary search tree does not support the indexed operations defined for our lists. Second, we add two required operations (min and max) to the binary search tree interface that return respectively the smallest element in the tree and the largest element in the tree. Finally, as we have seen, binary search trees allow for multiple traversal orders so a getIterator method, that is passed an argument indicating which kind of iterator is desired—preorder, inorder, or postorder—and returns a corresponding Iterator object, is also required.

The Interface

Here is the interface. Some discussion follows.

//--------------------------------------------------------------------------- // BSTInterface.java by Dale/Joyce/Weems Chapter 7 // // Interface for a class that implements a binary search tree (BST). // // The trees are unbounded and allow duplicate elements, but do not allow // null elements. As a general precondition, null elements are not passed as // arguments to any of the methods. //--------------------------------------------------------------------------- package ch07.trees; import ch05.collections.CollectionInterface; import java.util.Iterator; public interface BSTInterface<T> extends CollectionInterface<T>, Iterable<T> { // Used to specify traversal order. public enum Traversal {Inorder, Preorder, Postorder}; T min(); // If this BST is empty, returns null; // otherwise returns the smallest element of the tree. T max(); // If this BST is empty, returns null; // otherwise returns the largest element of the tree. public Iterator<T> getIterator(Traversal orderType); // Creates and returns an Iterator providing a traversal of a "snapshot" // of the current tree in the order indicated by the argument. }

The BSTInterface extends both the CollectionInterface from the ch05.collections package and the Iterable interface from the Java library. Due to the former, classes that implement BSTInterface must provide add, get, contains, remove, isFull, isEmpty, and size methods. Due to the latter, they must provide an iterator method that returns an Iterator object that allows a client to iterate through the binary search tree.

The min and max methods are self-explanatory. We include them in the interface because they are useful operations and should be reasonably easy to implement in an efficient manner for a binary search tree.

The BSTInterface makes public an enum class Traversal that enumerates the three kinds of supported binary search tree traversals. The getIterator method accepts an argument from the client of type Traversal that indicates which tree traversal is desired. The method should return an appropriate Iterator object. For example, if a client wants to print the contents of a binary search tree named mySearchTree, which contains strings, using an inorder traversal the code could be:

Iterator<String> iter; iter = mySearchTree.getIterator(BSTInterface.Traversal.Inorder); while (iter.hasNext()) System.out.println(iter.next());

In addition to the getIterator method, a class that implements the BSTInterface must provide a separate iterator method, because BSTInterface extends Iterable. This method should return an Iterator that provides iteration in the “natural” order of the tree elements. For most applications this would be an inorder traversal, and we make that assumption in our implementation. Therefore, for the above example, an alternate way to print the contents of the tree using an inorder traversal is to use the for-each loop, available to Iterable classes:

for (String s: mySearchTree) System.out.println(s);

We intend the iterators created and returned by getIterator and iterator to provide a snapshot of the tree as it exists at the time the iterator is requested. They represent the state of the tree at that time and subsequent changes to the tree should not affect the results returned by the iterator’s hasNext and next methods. Because the iterators are using a snapshot of the tree it does not make sense for them to support the standard iterator remove method. Our iterators will throw an UnsupportedOperationException if remove is invoked.

To demonstrate the use of iterators with our binary search tree we provide an example application that first generates the tree shown in Figure 7.9 and then outputs the contents of the tree using each of the traversal orders. Next, the application demonstrates how to use the for-each loop, resulting in a repeat of the inorder traversal. Finally, it shows that adding elements to a tree after obtaining an iterator does not affect the results of the iteration. This example uses the binary search tree implementation developed in the upcoming chapter sections. Here is the code, and the example output follows:

//-------------------------------------------------------------------------- // BSTExample.java by Dale/Joyce/Weems Chapter 7 // // Creates a BST to match Figure 7.9 and demonstrates use of iteration. //-------------------------------------------------------------------------- package ch07.apps; import ch07.trees.*; import java.util.Iterator; public class BSTExample { public static void main(String[] args) { BinarySearchTree<Character> example = new BinarySearchTree<Character>(); Iterator<Character> iter; example.add('P'); example.add('F'); example.add('S'); example.add('B'); example.add('H'); example.add('R'); example.add('Y'); example.add('G'); example.add('T'); example.add('Z'); example.add('W'); // Inorder System.out.print("Inorder: "); iter = example.getIterator(BSTInterface.Traversal.Inorder); while (iter.hasNext()) System.out.print(iter.next()); // Preorder System.out.print(" Preorder: "); iter = example.getIterator(BSTInterface.Traversal.Preorder); while (iter.hasNext()) System.out.print(iter.next()); // Postorder System.out.print(" Postorder: "); iter = example.getIterator(BSTInterface.Traversal.Postorder); while (iter.hasNext()) System.out.print(iter.next()); // Inorder again System.out.print(" Inorder: "); for (Character ch: example) System.out.print(ch); // Inorder again System.out.print(" Inorder: "); iter = example.getIterator(BSTInterface.Traversal.Inorder); example.add('A'); example.add('A'); example.add('A'); while (iter.hasNext()) System.out.print(iter.next()); // Inorder again System.out.print(" Inorder: "); iter = example.getIterator(BSTInterface.Traversal.Inorder); while (iter.hasNext()) System.out.print(iter.next()); } }

Note that we recreate the tree in the figure within the application by adding the elements in “level order.” The implementation details, presented in the next several sections, should clarify that this approach will indeed create a model of the tree shown in the figure. Here is the output from the BSTExample application:

Inorder: BFGHPRSTWYZ Preorder: PFBHGSRYTWZ Postorder: BGHFRWTZYSP Inorder: BFGHPRSTWYZ Inorder: BFGHPRSTWYZ Inorder: AAABFGHPRSTWYZ

7.4 The Implementation Level: Basics

We represent a tree as a linked structure whose nodes are allocated dynamically. Before continuing, we need to decide exactly what a node of the tree is. In our earlier discussion of binary trees, we talked about left and right children. These children are the structural references in the tree; they hold the tree together. We also need a place to store the client’s data in the node and will continue to call it info. Figure 7.10 shows how to visualize a node.

Here is the definition of a BSTNode class that corresponds to the picture in Figure 7.10.

//--------------------------------------------------------------------------- // BSTNode.java by Dale/Joyce/Weems Chapter 7 // // Implements nodes holding info of class <T> for a binary search tree. //--------------------------------------------------------------------------- package support; public class BSTNode<T> { private T info; // The node info private BSTNode<T> left; // A link to the left child node private BSTNode<T> right; // A link to the right child node public BSTNode(T info) { this.info = info; left = null; right = null; } public void setInfo(T info){this.info = info;} public T getInfo(){return info;} public void setLeft(BSTNode<T> link){left = link;} public void setRight(BSTNode<T> link){right = link;} public BSTNode<T> getLeft(){return left;} public BSTNode<T> getRight(){return right;} }

Figure 7.10 Binary tree nodes

The careful reader may notice that the above class is similar to the DLLNode class introduced in the Variations section of Chapter 4. In fact, the two classes are essentially equivalent, with the left/right references of the BSTNode class corresponding to the back/forward references of the DLLNode class. Instead of creating a new node class for our Binary Search Tree ADT we could reuse the DLLNode class. Although reuse is good to take advantage of when possible, in this case we create an entirely new class. Our intent when creating the DLLNode class was to support doubly linked lists that are linear structures and not the same as binary search trees. Our intent when creating the BSTNode class is to support binary search trees. Furthermore, creating a new node class allows us to use appropriate method names for each structure, for example, getBack and setForward for a doubly linked list and getLeft and setRight for the binary search tree.

Now that the node class is defined, we turn our attention to the implementation. We will call our implementation class BinarySearchTree. It implements the BST-Interface and uses BSTNode. The relationships among our binary search tree classes and interfaces are depicted in Figure 7.24 in this chapter’s “Summary” section.

The instance variable root references the root node of the tree. It is set to null by the constructor. The beginning of the class definition follows:

//--------------------------------------------------------------------------- // BinarySearchTree.java by Dale/Joyce/Weems Chapter 7 // // Defines all constructs for a reference-based BST. // Supports three traversal orders Preorder, Postorder, & Inorder ("natural") //--------------------------------------------------------------------------- package ch07.trees; import java.util.*; // Iterator, Comparator import ch04.queues.*; import ch02.stacks.*; import support.BSTNode; public class BinarySearchTree<T> implements BSTInterface<T> { protected BSTNode<T> root; // reference to the root of this BST protected Comparator<T> comp; // used for all comparisons protected boolean found; // used by remove public BinarySearchTree() // Precondition: T implements Comparable // Creates an empty BST object - uses the natural order of elements. { root = null; comp = new Comparator<T>() { public int compare(T element1, T element2) { return ((Comparable)element1).compareTo(element2); } }; } public BinarySearchTree(Comparator<T> comp) // Creates an empty BST object - uses Comparator comp for order // of elements. { root = null; this.comp = comp; }

The class is part of the ch07.trees package. The reason for importing queues and stacks will become apparent as the rest of the class is developed. We call the variable that references the tree structure root, because it is a link to the root of the tree.

As we did with the sorted list implementation in Section 6.4, “Sorted Array-Based List Implementation,” we allow the client to pass a Comparator to one of the two constructors. In that case the argument Comparator is used when determining relative order of the tree elements. This provides a versatile binary search tree class that can be used at different times with different keys for any given object class. For example, name, student number, age, or test score average could be used as the key when storing/retrieving objects that represent a student. The other constructor has no parameter. Instantiating a tree with this constructor indicates the client wishes to use the “natural” order of the elements, that is, the order defined by their compareTo method. A precondition on this constructor requires that type T implements Comparable, ensuring the existence of the compareTo method.

Next we look at the observer methods isFull, isEmpty, min, and max:

public boolean isFull() // Returns false; this link-based BST is never full. { return false; } public boolean isEmpty() // Returns true if this BST is empty; otherwise, returns false. { return (root == null); } public T min() // If this BST is empty, returns null; // otherwise returns the smallest element of the tree. { if (isEmpty()) return null; else { BSTNode<T> node = root; while (node.getLeft() != null) node = node.getLeft(); return node.getInfo(); } } public T max() // If this BST is empty, returns null; // otherwise returns the largest element of the tree. { if (isEmpty()) return null; else { BSTNode<T> node = root; while (node.getRight() != null) node = node.getRight(); return node.getInfo(); } }

The isFull method is trivial—as we have seen before, a link-based structure need never be full. The isEmpty method is almost as easy. One approach is to use the size method: If it returns 0, isEmpty returns true; otherwise, it returns false. But the size method will count the nodes on the tree each time it is called. This task takes at least O(N) steps, where N is the number of nodes (as we see in Section 7.5, “Iterative Versus Recursive Method Implementations”). Is there a more efficient way to determine whether the list is empty? Yes, just see whether the root of the tree is currently null. This approach takes only O(1) steps.

Now consider the min method. Examine any of the binary search trees seen so far and ask yourself, where is the minimum element? It is the leftmost element in the tree. In any binary search tree, if you start at the root and move downward left as far as possible you arrive at the minimum element. This is a result of the binary search property—elements to the left are less than or equal to their ancestors. To find the smallest element one must move left down the tree as far as possible. This is equivalent to traversing a linked list (the linked list that starts at root and continues through the left links) until reaching the end. The code shows the linked list traversal pattern we have seen many times before— while not at the end of the list, move to the next node. The code for the max method is equivalent, although movement is to the right through the tree levels instead of left.

7.5 Iterative Versus Recursive Method Implementations

Binary search trees provide us with a good opportunity to compare iterative and recursive approaches to a problem. You may have noticed that trees are inherently recursive: Trees are composed of subtrees, which are themselves trees. We even use recursive definitions when talking about properties of trees—for example, “A node is the ancestor of another node if it is the parent of the node, or the parent of some other ancestor of that node.” Of course, the formal definition of a binary tree node, embodied in the class BSTNode, is itself recursive. Thus, recursive solutions will likely work well when dealing with trees. This section addresses that hypothesis.

First, we develop recursive and iterative implementations of the size method. The size method could be implemented by maintaining a running count of tree nodes (incrementing it for every add operation and decrementing it for every remove operation). In fact, that approach was used for our collections and lists. The alternative approach of traversing the tree and counting the nodes each time the number is needed is also viable, and we use it here.

After looking at the two implementations of the size method, we discuss the benefits of recursion versus iteration for this problem.

Recursive Approach to the size Method

As we have done in previous cases when implementing an ADT operation using recursion, we must use a public method to access the size operation and a private recursive method to do all the work.

The public method, size, calls the private recursive method, recSize, and passes it a reference to the root of the tree. We design the recursive method to return the number of nodes in the subtree referenced by the argument passed to it. Because size passes it the root of the tree, recSize returns the number of nodes in the entire tree to size, which in turn returns it to the client program. The code for size is very simple:

public int size()

// Returns the number of elements in this BST.

{

return recSize(root);

}

In the introduction to recursion in Chapter 3 it states that the factorial of N can be computed if the factorial of N – 1 is known. The analogous statement here is that the number of nodes in a tree can be computed if the number of nodes in its left subtree and the number of nodes in its right subtree are known. That is, the number of nodes in a nonempty tree is

1 + number of nodes in left subtree + number of nodes in right subtree

This is easy. Given a method recSize and a reference to a tree node, we know how to calculate the number of nodes in the subtree indicated by the node: call recSize recursively with the reference to the subtree node as the argument. That takes care of the general case. What about the base case? A leaf node has no children, so the number of nodes in a subtree consisting of a leaf is 1. How do we determine that a node is a leaf? The references to its children are null. Let us summarize these observations into an algorithm, where node is a reference to a tree node.

Let us try this algorithm on a couple of examples to be sure that it works (see Figure 7.11).

Figure 7.11 Two binary search trees

We call recSize with the tree in Figure 7.11a, using a reference to the node with element M as an argument. We evaluate the boolean expression and because the left and right children of the root node (M) are not null it evaluates to false. So we execute the else statement, returning

1 + recSize(reference to A) + recSize(reference to Q)

The result of the call to recSize(reference to A) is 1—when the boolean expression is evaluated using a reference to node A, both its left and right children are null, activating the return 1 statement. By the same reasoning, recSize(reference to Q) returns 1 and therefore the original call to recSize(reference to M) returns 1 + 1 + 1 = 3. Perfect.

One test is not enough. Let us try again using the tree in Figure 7.11b. The left subtree of its root is empty; we need to see if this condition proves to be a problem. It is not true that both children of the root (L) are null, so the else statement is executed again, this time returning

1 + recSize(null reference) + recSize(reference to P)

The result of the call to recSize(null reference) is . . . Oops! We do have a problem. If recSize is invoked with a null argument, a “null reference exception” is thrown when the method attempts to use the null reference to access an object. The method crashes when trying to access node.getLeft() with node equals null.

We need to check if the argument is null before doing anything else. As pointed out earlier, it is almost always a good idea to check for the degenerate case of an empty tree at the start of tree processing. If the argument is null then there are no elements so 0 is returned. Here is a new version of our algorithm:

Version 2 works correctly. It breaks the problem down into three cases:

The argument represents an empty tree—return 0.

The argument references a leaf—return 1.

The argument references an interior node—return 1 + the number of nodes in its subtrees.

Version 2 of the algorithm is good. It is clear, efficient, and correct. It is also a little bit redundant. Do you see why? The section of the algorithm that handles the case of a leaf node is now superfluous. If we drop that case (the first else clause) and let the code fall through to the third case when processing a leaf node, it will return “1 + the number of nodes in its subtrees,” which is 1 + 0 + 0 = 1. This is correct! The first else clause is unnecessary. This leads to a third and final version of the algorithm:

We have taken the time to work through the versions containing errors and unnecessary complications because they illustrate two important points about recursion with trees: (1) Always check for the empty tree first, and (2) leaf nodes do not need to be treated as separate cases. Here is the code:

private int recSize(BSTNode<T> node)

// Returns the number of elements in subtree rooted at node.

{

if (node == null)

return 0;

else

return 1 + recSize(node.getLeft()) + recSize(node.getRight());

}

Iterative Approach to the size Method

An iterative method to count the nodes on a linked list is simple to write:

count = 0;

while (list != null)

{

count++;

list = list.getLink();

}

return count;

However, taking a similar approach to develop an iterative method to count the nodes in a binary tree quickly runs into trouble. We start at the root and increment the count. Now what? Should we count the nodes in the left subtree or the right subtree? Suppose we decide to count the nodes in the left subtree. We must remember to come back later and count the nodes in the right subtree. In fact, every time we make a decision on which subtree to count, we must remember to return to that node and count the nodes of its other subtree. How can we remember all of this?

In the recursive version, we did not have to record explicitly which subtrees still needed to process. The trail of unfinished business was maintained on the system stack for us automatically. For the iterative version, that information must be maintained explicitly, on our own stack. Whenever processing a subtree is postponed, we push a reference to the root node of that subtree on a stack of references. Then, when we are finished with our current processing, we remove the reference that is on the top of the stack and continue our processing with it. This is the depth-first traversal algorithm discussed in Section 7.1, “Trees,” for general trees. Visiting a node during the traversal amounts to incrementing a count of the nodes.

Each node in the tree should be processed exactly once. To ensure this we follow these rules:

Process a node immediately after removing it from the stack.

Do not process nodes at any other time.

Once a node is removed from the stack, do not push it back onto the stack.

To initiate processing the tree root is pushed onto the stack (unless the tree is empty in which case we just return 0). We could just count the tree root node and push its children onto the stack, but pushing it primes the stack for processing. It is best to attempt to eliminate special cases if possible, and pushing the tree root node onto the stack allows us to treat it like any other node. Once the stack is initialized with the root, we repeatedly remove a node from the stack, add 1 to our count, and push the children of the node onto the stack. This guarantees that all descendants of the root are eventually pushed onto the stack—in other words, it guarantees that all nodes are processed.

Finally, we push only references to actual tree nodes; we do not push any null references. This way, when removing a reference from the stack, we can increment the count of nodes and access the left and right links of the referenced node without worrying about null reference errors. Here is the code:

public int size()

// Returns the number of elements in this BST.

{

int count = 0;

if (root != null)

{

LinkedStack<BSTNode<T>> nodeStack = new LinkedStack<BSTNode<T>>;

BSTNode<T> currNode;

nodeStack.push(root);

while (!nodeStack.isEmpty())

{

currNode = nodeStack.top();

nodeStack.pop();

count++;

if (currNode.getLeft() != null)

nodeStack.push(currNode.getLeft());

if (currNode.getRight() != null)

nodeStack.push(currNode.getRight());

}

}

return count;

}

Recursion or Iteration?

After examining both the recursive and the iterative versions of counting nodes, can we determine which is a better choice? Section 3.8, “When to Use a Recursive Solution,” discussed some guidelines for determining when recursion is appropriate. Let us apply these guidelines to the use of recursion for counting nodes.

Is the depth of recursion relatively shallow?

Yes. The depth of recursion depends on the height of the tree. If the tree is well balanced (relatively short and bushy, not tall and stringy), the depth of recursion is closer to O(log2N) than to O(N).

Is the recursive solution shorter or clearer than the nonrecursive version?

Yes. The recursive solution is shorter than the iterative method, especially if we count the code for implementing the stack against the iterative approach. Is the recursive solution clearer? In our opinion, yes. The recursive version is intuitively obvious. It is very easy to see that the number of nodes in a binary tree that has a root is 1 plus the number of nodes in its two subtrees. The iterative version is not as clear. Compare the code for the two approaches and see what you think.

Is the recursive version much less efficient than the nonrecursive version?

No. Both the recursive and the nonrecursive versions of

sizeare O(N) operations. Both have to count every node.

We give the recursive version of the method an “A”; it is a good use of recursion.

7.6 The Implementation Level: Remaining Observers

We still need to implement observer operations: contains, get, and the traversal-related iterator and getIterator, plus transformer operations add and remove. Note that nonrecursive approaches to most of these operations are also viable and, for some programmers, may even be easier to understand. These are left as exercises. We choose to use the recursive approach here because it does work well and many students need practice with recursion.

The contains and get Operations

At the beginning of this chapter, we discussed how to search for an element in a binary search tree. First check whether the target element searched for is in the root. If it is not, compare the target element with the root element and based on the results of the comparison search either the left or the right subtree.

This is a recursive algorithm since the left and right subtrees are also binary search trees. Our search terminates upon finding the desired element or attempting to search an empty subtree. Thus for the recursive contains algorithm there are two base cases, one returning true and one returning false. There are also two recursive cases, one with the search continuing in the left subtree and the other with it continuing in the right subtree.

We implement contains using a private recursive method called recContains. This method is passed the element being searched for and a reference to a subtree in which to search. It follows the algorithm described above in a straightforward manner.

public boolean contains (T target) // Returns true if this BST contains a node with info i such that // comp.compare(target, i) == 0; otherwise, returns false. { return recContains(target, root); } private boolean recContains(T target, BSTNode<T> node) // Returns true if the subtree rooted at node contains info i such that // comp.compare(target, i) == 0; otherwise, returns false. { if (node == null) return false; // target is not found else if (comp.compare(target, node.getInfo()) < 0) return recContains(target, node.getLeft()); // Search left subtree else if (comp.compare(target, node.getInfo()) > 0) return recContains(target, node.getRight()); // Search right subtree else return true; // target is found }

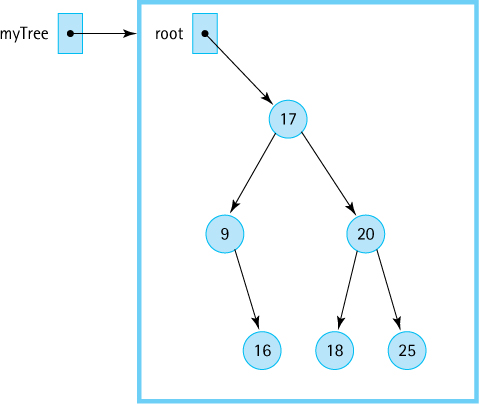

Figure 7.12 Tracing the contains operation

Here we trace this operation using the tree in Figure 7.12. In our trace we substitute actual arguments for the method parameters. It is assumed we can work with integers and are using their natural order. We want to search for the element with the key 18 in a tree myTree, so the call to the public method is

myTree.contains(18);

The contains method, in turn, immediately calls the recursive method:

return recContains(18, root);

Because root is not null and 18 > node.getInfo()—that is, 18 is greater than 17— the third if clause is executed and the method issues the recursive call:

return recContains(18, node.getRight());

Now node references the node whose key is 20, so because 18 < node.getInfo() the next recursive call is

return recContains(18, node.getLeft());

Now node references the node with the key 18, so processing falls through to the last else statement:

return true;

This halts the recursive descent, and the value true is passed back up the line of recursive calls until it is returned to the original contains method and then to the client program.

Next, we look at an example where the key is not found in the tree. We want to find the element with the key 7. The public method call is

myTree.contains(7);

followed immediately by

recContains(7, root)

Because node is not null and 7 < node.getInfo(), the first recursive call is

recContains(7, node.getLeft())

Now node is pointing to the node that contains 9. The second recursive call is issued

recContains(7, node.getLeft())

Now node is null, and the return value of false makes its way back to the original caller.

The get method is very similar to the contains operation. For both contains and get the tree is searched recursively to locate the tree element that matches the target element. However, there is one difference. Instead of returning a boolean value, a reference to the tree element that matches target is returned. Recall that the actual tree element is the info of the tree node; thus, a reference to the info object is returned. If the target is not in the tree null is returned.

public T get(T target) // Returns info i from node of this BST where comp.compare(target, i) == 0; // if no such node exists, returns null. { return recGet(target, root); } private T recGet(T target, BSTNode<T> node) // Returns info i from the subtree rooted at node such that // comp.compare(target, i) == 0; if no such info exists, returns null. { if (node == null) return null; // target is not found else if (comp.compare(target, node.getInfo()) < 0) return recGet(target, node.getLeft()); // get from left subtree else if (comp.compare(target, node.getInfo()) > 0) return recGet(target, node.getRight()); // get from right subtree else return node.getInfo(); // target is found }

The Traversals

The BSTExample.java application at the end of Section 7.3, “The Binary Search Tree Interface,” demonstrated our Binary Search Tree ADTs support for tree traversal. You may want to review that code and its output before continuing here.

Let us review our traversal definitions:

Preorder traversal. Visit the root, visit the left subtree, visit the right subtree.

Inorder traversal. Visit the left subtree, visit the root, visit the right subtree.

Postorder traversal. Visit the left subtree, visit the right subtree, visit the root.

Recall that the name given to each traversal specifies where the root itself is processed in relation to its subtrees.

Our Binary Search Tree ADT supports all three traversal orders through its getIterator method. As we saw in the BSTExample.java application, the client program passes getIterator an argument indicating which of the three traversal orders it wants. The getIterator method then creates the appropriate iterator and returns it. The returned iterator represents a snapshot of the tree at the time getIterator is invoked. The getIterator method accomplishes this by traversing the tree in the desired order, and as it visits each node it enqueues a reference to the node’s information in a queue of T. It then creates an iterator using the anonymous inner class approach we used before for our list iterators. The instantiated iterator has access to the queue of T and uses that queue to provide its hasNext and next methods.

The generated queue is called infoQueue. It must be declared final in order to be used by the anonymous inner class—anonymous inner classes work with copies of the local variables of their surrounding methods and therefore must be assured that the original variables will not be changed. As a result, the returned iterator cannot support the remove method. Because we are creating a snapshot of the tree for iteration, the removal of nodes during a traversal is not appropriate anyway. Here is the code for getIterator:

public Iterator<T> getIterator(BSTInterface.Traversal orderType) // Creates and returns an Iterator providing a traversal of a "snapshot" // of the current tree in the order indicated by the argument. // Supports Preorder, Postorder, and Inorder traversal. { final LinkedQueue<T> infoQueue = new LinkedQueue<T>(); if (orderType == BSTInterface.Traversal.Preorder) preOrder(root, infoQueue); else if (orderType == BSTInterface.Traversal.Inorder) inOrder(root, infoQueue); else if (orderType == BSTInterface.Traversal.Postorder) postOrder(root, infoQueue); return new Iterator<T>() { public boolean hasNext() // Returns true if iteration has more elements; otherwise returns false. { return !infoQueue.isEmpty(); } public T next() // Returns the next element in the iteration. // Throws NoSuchElementException - if the iteration has no more elements { if (!hasNext()) throw new IndexOutOfBoundsException("illegal invocation of next " + " in BinarySearchTree iterator. "); return infoQueue.dequeue(); } public void remove() // Throws UnsupportedOperationException. // Not supported. Removal from snapshot iteration is meaningless. { throw new UnsupportedOperationException("Unsupported remove attempted " + "on BinarySearchTree iterator. "); } }; }

As you can see, the queue holding the information for the iteration is initialized by passing it, along with a reference to the root of the tree, to a private method—a separate method for each traversal type. All that is left to do is to define the three traversal methods to store the required information from the tree into the queue in the correct order.

We start with the inorder traversal. We first need to visit the root’s left subtree, which contains all the values in the tree that are smaller than or equal to the value in the root node. Then the root node is visited by enqueuing its information in our infoQueue. Finally, we visit the root’s right subtree, which contains all the values in the tree that are larger than the value in the root node (see Figure 7.13).

Figure 7.13 Visiting all the nodes in order

Let us describe this problem again, developing our algorithm as we proceed. Our method is named inOrder and is passed arguments root and infoQueue. The goal is to visit the elements in the binary search tree rooted at root inorder; that is, first visit the left subtree inorder, then visit the root, and finally visit the right subtree inorder. As we visit a tree node we want to enqueue its information into infoQueue. Visiting the subtrees inorder is accomplished with a recursive call to inOrder, passing it the root of the appropriate subtree. This works because the subtrees are also binary search trees. When inOrder finishes visiting the left subtree, we enqueue the information of the root node and then call method inOrder to visit the right subtree. What happens if a subtree is empty? In this case the incoming argument is null and the method is exited—clearly there’s no point to visiting an empty subtree. That is our base case.

private void inOrder(BSTNode<T> node, LinkedQueue<T> q)

// Enqueues the elements from the subtree rooted at node into q in inOrder.

{

if (node != null)

{

inOrder(node.getLeft(), q);

q.enqueue(node.getInfo());

inOrder(node.getRight(), q);

}

}

The remaining two traversals are approached in exactly the same way, except that the relative order in which they visit the root and the subtrees is changed. Recursion certainly allows for an elegant solution to the binary tree traversal problem.

private void preOrder(BSTNode<T> node, LinkedQueue<T> q) // Enqueues the elements from the subtree rooted at node into q in preOrder. { if (node != null) { q.enqueue(node.getInfo()); preOrder(node.getLeft(), q); preOrder(node.getRight(), q); } } private void postOrder(BSTNode<T> node, LinkedQueue<T> q) // Enqueues the elements from the subtree rooted at node into q in postOrder. { if (node != null) { postOrder(node.getLeft(), q); postOrder(node.getRight(), q); q.enqueue(node.getInfo()); } }

7.7 The Implementation Level: Transformers

To complete our implementation of the Binary Search Tree ADT we need to create the transformer methods add and remove. These are the most complex operations. The reader might benefit from a review of the subsection “Transforming a Linked List” in Section 3.4, “Recursive Processing of Linked Lists,” because a similar approach is used here as was introduced in that subsection.

The add Operation

To create and maintain the information stored in a binary search tree, we must have an operation that inserts new nodes into the tree. A new node is always inserted into its appropriate position in the tree as a leaf. Figure 7.14 shows a series of insertions into a binary tree.

For the implementation we use the same pattern that we used for contains and get. A public method, add, is passed the element for insertion. The add method invokes the recursive method, recAdd, and passes it the element plus a reference to the root of the tree. The CollectionInterface requires that our add method return a boolean indicating success or failure. Given that our tree is unbounded the add method simply returns true—it is always successful.

Figure 7.14 Insertions into a binary search tree

public boolean add (T element)

// Adds element to this BST. The tree retains its BST property.

{

root = recAdd(element, root);

return true;

}

The call to recAdd returns a BSTNode. It returns a reference to the new tree—that is, to the tree that now includes element. The statement

root = recAdd(element, root);

can be interpreted as “Set the reference of the root of this tree to the root of the tree that is generated when the element is added to this tree.” At first glance this might seem inefficient or redundant. We always perform insertions as leaves, so why do we have to change the root of the tree? Look again at the sequence of insertions in Figure 7.14. Do any of the insertions affect the value of the root of the tree? Yes, the original insertion into the empty tree changes the value held in the root. In the case of all the other insertions, the statement in the add method just copies the current value of the root onto itself; however, we still need the assignment statement to handle the special case of insertion into an empty tree. When does the assignment statement occur? After all the recursive calls to recAdd have been processed and have returned.

Before beginning the development of recAdd, we reiterate that every node in a binary search tree is the root node of a binary search tree. In Figure 7.15a we want to insert a node with the key value 13 into our tree whose root is the node containing 7. Because 13 is greater than 7, the new node belongs in the root node’s right subtree. We have defined a smaller version of our original problem—insert a node with the key value 13 into the tree whose root is root.right. Note that to make this example easier to follow, in both the figure and the discussion here, we use the actual arguments rather than the formal parameters, so we use “13” rather than “element” and “root.right” rather than “node.getRight()“—”root.right” is a shorthand way of writing “the right attribute of the BSTNode object referenced by the root variable.”

We have a method to insert elements into a binary search tree: recAdd. The recAdd method is called recursively:

root.right = recAdd(13, root.right);

Of course, recAdd still returns a reference to a BSTNode; it is the same recAdd method that was originally called from add, so it must behave in the same way. The above statement says “Set the reference of the right subtree of the tree to the root of the tree that is generated when 13 is inserted into the right subtree of the tree.” See Figure 7.15b. Once again, the actual assignment statement does not occur until after the remaining recursive calls to recAdd have finished processing and have returned.

The latest invocation of the recAdd method begins its execution, looking for the place to insert 13 in the tree whose root is the node with the key value 15. The method compares 13 to the key of the root node; 13 is less than 15, so the new element belongs in the tree’s left subtree. Again, we have obtained a smaller version of the problem—insert a node with the key value 13 into the tree whose root is root.right.left; that is, the subtree rooted at 10 (Figure 7.15c). Again recAdd is invoked to perform this task.

Figure 7.15 The recursive add operation

Where does it all end? There must be a base case, in which space for the new element is allocated and 13 copied into it. This case occurs when the subtree being searched is null—that is, when the subtree we wish to insert into is empty. (Remember, 13 will be added as a leaf node.) Figure 7.15d illustrates the base case. We create the new node and return a reference to it to the most recent invocation of recAdd, where the reference is assigned to the right link of the node containing 10 (see Figure 7.15e). That invocation of recAdd is then finished; it returns a reference to its subtree to the previous invocation (see Figure 7.15f), where the reference is assigned to the left link of the node containing 15. This process continues until a reference to the entire tree is returned to the original add method, which assigns it to root, as shown in Figure 7.15g and h.

While backing out of the recursive calls, the only assignment statement that actually changes a value is the one at the deepest nested level; it changes the right subtree of the node containing 10 from null to a reference to the new node containing 13. All of the other assignment statements simply assign a reference to the variable that held that reference previously. This is a typical recursive approach. We do not know ahead of time at which level the crucial assignment takes place, so we perform the assignment at every level.

The recursive method for insertion into a binary search tree is summarized as follows:

Here is the code that implements this recursive algorithm:

private BSTNode<T> recAdd(T element, BSTNode<T> node) // Adds element to tree rooted at node; tree retains its BST property. { if (node == null) // Addition place found node = new BSTNode<T>(element); else if (comp.compare(element, node.getInfo()) <= 0) node.setLeft(recAdd(element, node.getLeft())); // Add in left subtree else node.setRight(recAdd(element, node.getRight())); // Add in right subtree return node; }

The remove Operation

The remove operation is the most complicated of the binary search tree operations and one of the most complex operations considered in this text. We must ensure that when we remove an element from the tree, the binary search tree property is maintained.

The setup of the remove operation is the same as that of the add operation. The private recRemove method is invoked by the public remove method with arguments equal to the target element to be removed and the subtree to remove it from. The recursive method returns a reference to the revised tree, just as it did for add. Here is the code for remove:

public boolean remove (T target) // Removes a node with info i from tree such that comp.compare(target,i) == 0 // and returns true; if no such node exists, returns false. { root = recRemove(target, root); return found; }

As with our recursive add approach, in most cases the root of the tree is not affected by the recRemove call, in which case the assignment statement is somewhat superfluous, as it is reassigning the current value of root to itself. If the node being removed happens to be the root node, however, then this assignment statement is crucial. The remove method returns the boolean value stored in found, indicating the result of the removal. The recRemove method sets the value of found to indicate whether the element was found in the tree. Obviously, if the element is not originally in the tree, then it cannot be removed.

The recRemove method receives a target and a reference to a tree node (essentially a reference to a subtree), finds and removes a node matching the target’s key from the subtree if possible, and returns a reference to the newly created subtree. We know how to determine whether the target is in the subtree; we did it for get. As with that operation, the recursive calls to recRemove progressively decrease the size of the subtree where the target node could be, until the node is located or it is determined that the node is not in the tree.

If located, we must remove the node and return a reference to the new subtree—this is somewhat complicated. This task varies according to the position of the target node in the tree. Obviously, it is simpler to remove a leaf node than to remove a nonleaf node. In fact, we can break down the removal operation into three cases, depending on the number of children linked to the node being removed:

Removing a leaf (no children). As shown in Figure 7.16, removing a leaf is simply a matter of setting the appropriate link of its parent to null.

Figure 7.16 Removing a leaf node

Figure 7.17 Removing a node with one child

Removing a node with only one child. The simple solution for removing a leaf does not suffice for removing a node with a child, because we do not want to lose all of its descendants in the tree. We want to make the reference from the parent skip over the removed node and point instead to the child of the node being removed (see Figure 7.17).

Removing a node with two children. This case is the most complicated because we cannot make the parent of the removed node point to both of the removed node’s children. The tree must remain a binary tree and the search property must remain intact. There are several ways to accomplish this removal. The method we use does not remove the node but rather replaces its

infowith theinfofrom another node in the tree so that the search property is retained. Then this other node is removed. Hmmm. That also sounds like a candidate for recursion. Let us see how this turns out.

Which tree element can be used to replace target so that the search property is retained? There are two choices: the elements whose keys immediately precede or follow the key of target—that is, the logical predecessor or successor of target. We elect to replace the info of the node being removed with the info of its logical predecessor—the node whose key is closest in value to, but less than or equal to, the key of the node to be removed.

Look back at Figure 7.14j and locate the logical predecessor of the interior nodes 5, 9, and 7. Do you see the pattern? The logical predecessor of the root node 5 is the leaf node 5, the largest value in the root’s left subtree. The logical predecessor of 9 is 8, the largest value in 9’s left subtree. The logical predecessor of 7 is 6, the largest value in 7’s left subtree. The replacement value is always in a node with either zero or one child. After copying the replacement value, it is easy to remove the node that the replacement value was in by changing one of its parent’s references (see Figure 7.18).

Examples of all three types of removals are shown in Figure 7.19.

It is clear that the removal task involves changing the reference from the parent node to the node to be removed. That explains why the recRemove method must return a reference to a BSTNode. Here we look at each of the three cases in terms of our implementation.

Figure 7.18 Removing a node with two children

Figure 7.19 Removals from a binary search tree

If both child references of the node to be removed are null, the node is a leaf and we just return null. The previous reference to this leaf node is replaced by null in the calling method, effectively removing the leaf node from the tree.

If one child reference is null, we return the other child reference. The previous reference to this node is replaced by a reference to the node’s only child, effectively jumping over the node and removing it from the tree (similar to the way we removed a node from a singly linked list).

If neither child reference is null, we replace the info of the node with the info of the node’s logical predecessor and remove the node containing the predecessor. The node containing the predecessor came from the left subtree of the current node, so we remove it from that subtree. We then return the original reference to the node (we have not created a new node with a new reference; we have just changed the node’s info reference).

Let us summarize all of this in algorithmic form as removeNode. Within the algorithm and the code, the reference to the node to be removed is node.

Now we can write the recursive definition and code for recRemove.

private BSTNode<T> recRemove(T target, BSTNode<T> node) // Removes element with info i from tree rooted at node such that // comp.compare(target, i) == 0 and returns true; // if no such node exists, returns false. { if (node == null) found = false; else if (comp.compare(target, node.getInfo()) < 0) node.setLeft(recRemove(target, node.getLeft())); else if (comp.compare(target, node.getInfo()) > 0) node.setRight(recRemove(target, node.getRight())); else { node = removeNode(node); found = true; } return node; }

Before we code removeNode, we look at its algorithm again. We can eliminate one of the tests by noticing that the action taken when the left child reference is null also takes care of the case in which both child references are null. When the left child reference is null, the right child reference is returned. If the right child reference is also null, then null is returned, which is what we want if both nodes are null.

Here we write the code for removeNode using getPredecessor as the name of an operation that returns the info reference of the predecessor of the node with two children.

private BSTNode<T> removeNode(BSTNode<T> node)

// Removes the information at node from the tree.

{

T data;

if (node.getLeft() == null)

return node.getRight();

else if (node.getRight() == null)

return node.getLeft();

else

{

data = getPredecessor(node.getLeft());

node.setInfo(data);

node.setLeft(recRemove(data, node.getLeft()));

return node;

}

}

Now we must look at the operation for finding the logical predecessor. The logical predecessor is the maximum value in node’s left subtree. Where is this value? The maximum value in a binary search tree is in its rightmost node. Therefore, starting from the root of node’s left subtree, just keep moving right until the right child is null. When this occurs, return the info reference of that node. There is no reason to look for the predecessor recursively in this case. A simple iteration moving as far rightward down the tree as possible suffices.

private T getPredecessor(BSTNode<T> subtree)

// Returns the information held in the rightmost node of subtree

{

BSTNode<T> temp = subtree;

while (temp.getRight() != null)

temp = temp.getRight();

return temp.getInfo();

}

That is it. We used four methods to implement the binary search tree’s remove operation! Note that we use both types of recursion in our solution: direct recursion (recRemove invokes itself) and indirect recursion (recRemove invokes removeNode, which in turn may invoke recRemove). Due to the nature of our approach, we are guaranteed to never go deeper than one level of recursion in this latter case. Whenever removeNode invokes recRemove, it passes a target element and a reference to a subtree such that the target matches the largest element in the subtree. Therefore, the element matches the rightmost element of the subtree, which does not have a right child. This situation is one of the base cases for the recRemove method, so the recursion stops there.

If duplicate copies of the largest element in the subtree are present, the code will stop at the first one it finds—the one closest to the root of the tree. The remaining duplicates must be in that element’s left subtree, based on the way we defined binary search trees and the way we implemented the add method. Thus, even in this case, the indirect recursion does not proceed deeper than one level of recursion.

7.8 Binary Search Tree Performance

A binary search tree is an appropriate structure for many of the same applications discussed previously in conjunction with other collection structures, especially those providing sorted lists. The special advantage of using a binary search tree is that it facilitates searching while conferring the benefits of linking the elements. It provides the best features of both the sorted array-based list and the linked list. Similar to a sorted array-based list, it can be searched quickly, using a binary search. Similar to a linked list, it allows insertions and removals without having to move large amounts of data. Thus, a binary search tree is particularly well suited for applications in which processing time must be minimized.

As usual, there is a trade-off. The binary search tree, with its extra reference in each node, takes up more memory space than a singly linked list. In addition, the algorithms for manipulating the tree are somewhat more complicated. If all of a list’s uses involve sequential rather than random processing of the elements, a tree may not be the best choice.

Text Analysis Experiment Revisited

Let us return to the text analysis project introduced in Chapter 5, the Vocabulary Density project, and see how our binary search tree compares to the array-based and sorted array-based list approaches presented previously. Because the text analysis statistics remain unchanged we do not repeat them here—see Table 5.1 for that information. Here we concentrate solely on the execution time using three structures: an array. a sorted array, and a binary search tree. Results of the updated experiment are shown in Table 7.2.

Table 7.2 Results of Vocabulary Density Experiment

| Source | File Size | Array | Sorted Array | Binary Search Tree |

|---|---|---|---|---|