Chapter 4

Content Creation 101

You have a vision in your head of how you want a certain projection to look, and now you need to create it. Sometimes you know exactly how to do so. Other times you may need to watch tutorials and teach yourself a new method. Don’t despair; we are all constantly learning new techniques, new software, and new creation methods. It’s part of the profession. Each time you learn a new way to reproduce a historical photo or a new method to sculpt a 3D model, you add to your quiver of creative arrows. By the end of a few designs you will be amazed at the new skills you’ve gained (and at the new gray hairs as well).

Making great content can be an arduous and painstaking task. Quite simply, the perfect content isn’t just sitting out there waiting for you to download it. You need to make beautiful and appropriate art while ensuring you aren’t overtaxing your resources and missing deadlines. The proper research and a strong conceptual design provide a foundation, and your cue list is your guide.

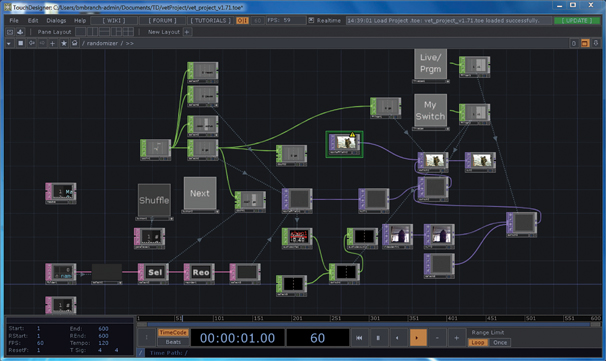

What is this content we are creating? As we’ve discussed previously, it can be anything from prerecorded live-action video to 2D or 3D animation to sensor-driven, data-driven, real-time, or algorithmic visuals to live video to user-generated texts to audience-manipulated avatars. As a designer, you must have broad knowledge of many types of possible content, across many mediums. This does not come without study and practice.

Our audiences have become expert multitaskers with the ability to work across multiple software packages on their desktops while rapidly flipping between many phone apps. Even though their attention may be split between tasks, it is still focused on a screen, which frames all of the content they see. Everything within these screens is their sole focus. This is also true of viewing movies at the cineplex or at home via a favorite streaming service. In the theatre, while the content might still be framed within a screen or dwell within some other type of architecture, it also exists within the built environment of the set and lives alongside the onstage performers.

Content unfolds in relation to a real-time performance and needs to complement the live action onstage, trading focus with it rather than competing with it. Audiences instinctively gravitate toward large projections and screens instead of the live action onstage if the content is too frenetic during a calm moment or is not composed to keep the audience’s focus on the live action. Pay careful attention to the scale and motion of the content so that it supports an integrated stage picture at any given time.

One of the more difficult aspects of content creation is the fact that our audience is savvy at judging whether an image is good or bad. Modern audiences are easily dismissive of visuals that don’t pass their critique or don’t seem to fit the context. If the content lives outside the world of the onstage action, either because of style, technical considerations, or display choice, the audience might experience cognitive dissonance, causing them to wrestle in their minds with two different worlds: the world of the play and the world of the content. Choices you make in designing the content for the show, as simple as adding a color filter to a video clip, have an enormous impact on the way it is perceived. Make sure the images match the style of the production, fitting into the genres, periods, and visual language of the world the director and other artists have created.

Though content creation is primarily about creativity and design, it also involves many technical tasks. Manipulating software interfaces, transcoding video files, and working with cameras are just some of the technical aspects of content creation that you need to consider and that this chapter explores.

2D and 3D Content in a 3D World

In order to fully understand how our content is perceived it is vital to understand how video, a 2D type of content, is viewed and fits into the three-dimensional world of the theatrical architecture. The design elements of scale, line, visual flow, and forced perspective are highly relevant to how the dimensionality of a composition is perceived and manipulated.

The first thing to consider is the creation of 2D content. Descriptive geometry, a method of representing three-dimensional objects in two dimensions, reminds us that an orthographic view (projection) is a type of parallel projection in which the imagined projection lines run perpendicular to the projection plane. To create forced perspective, the imaginary lines are no longer parallel to one another; they converge toward a vanishing point. This allows us to perceive dimension, depth, and scale.
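The difference between parallel (orthographic) and perspective projection can be shown numerically. The sketch below is illustrative only: it assumes a simple pinhole "eye" at the origin looking down the z axis with a picture plane at distance `f`, and the function names are hypothetical. Two rails that stay parallel in 3D keep their spacing in the orthographic view but shrink toward the center, the vanishing point, in the perspective view.

```python
# Minimal sketch: orthographic (parallel) vs. perspective projection of 3D points.
# Assumptions (illustrative only): a pinhole "eye" at the origin looking down +z,
# a picture plane at distance f, and an orthographic view that simply drops z.
from typing import Tuple

Point3 = Tuple[float, float, float]
Point2 = Tuple[float, float]


def orthographic(p: Point3) -> Point2:
    """Parallel projection: projector lines are perpendicular to the plane, so depth is discarded."""
    x, y, _z = p
    return (x, y)


def perspective(p: Point3, f: float = 1.0) -> Point2:
    """Perspective projection: projector lines converge at the eye, so farther points shrink toward the center."""
    x, y, z = p
    if z <= 0:
        raise ValueError("point must be in front of the eye (z > 0)")
    return (f * x / z, f * y / z)


if __name__ == "__main__":
    # Two parallel rails, 1 unit left and right of center and 1 unit below eye level,
    # running straight away from the viewer.
    for depth in (2.0, 4.0, 8.0, 16.0):
        left, right = (-1.0, -1.0, depth), (1.0, -1.0, depth)
        print(depth, orthographic(left), orthographic(right),
              perspective(left), perspective(right))
```

In the printed rows the orthographic coordinates never change, while the perspective coordinates halve each time the depth doubles, which is the convergence that forced perspective exploits.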

Unless you are working with stereographic 3D content where the audience wears 3D glasses, the content that the audience sees, whether 2D or 3D, is displayed in 2D on an actual 3D object (projection screen, LED panel, performer, set piece, etc.). The same rules of geometry that govern the creation of 2D content come into play in its display. This is further complicated by the audience’s viewing angle to the screen, which is determined by where they are located in physical space. It is complicated once more by the actual 3D forms of actors, set pieces, and so forth, and by where they are located in space in relation to the screen and the audience’s viewing angle.

That’s really hard to understand. Let’s try an experiment. Pretend your kitchen is a theatre. The kitchen table is the stage. Put a box of cereal on the stage (the table). The cereal box represents a screen. Even though it is kind of flat, it has some depth or dimension. It is a 3D object. The images on the cereal box are the content. They are in 2D, on a 3D object. Put the cereal box three feet away from the edge of the table. Place a pepper mill, your new leading lady, directly centered in front of the cereal box, about twelve inches away from it. Now place a chair directly across the table, perfectly in line with the cereal box and the pepper mill. Sit in the chair.

You are now sitting in what we call in the theatre the king’s seat. Congratulations. This is the best seat in the house. Everything looks great and perfectly aligned from here.

Now close your left eye. Then switch and close your right eye. Did you see how the pepper mill changed its perceived location in relationship to the content on the cereal box? That is the off-axis viewing angle of every seat in the house that is not directly in line with center stage. This is how your content, in relationship to real-world objects and performers, is perceived differently by members of the audience sitting in different seats in the house. This variability in how 2D content is perceived is vital to understand and consider when your content needs to relate in a very particular manner to actual 3D objects and performers within the theatrical architecture.
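The kitchen-table experiment can also be worked out with a little geometry: trace the sight line from the viewer’s eye through the performer and see where it lands on the screen. This is a hypothetical top-down sketch; the seat distance and eye spacing are assumed values, not measurements from the text, and the function name is illustrative.

```python
# Hypothetical sketch of the kitchen-table experiment: where does the "performer"
# (pepper mill) appear to sit against the "screen" (cereal box) from a given eye position?
# Assumptions: top-down 2D view, distances in inches measured from the viewer's
# eyes, and the eye offsets are illustrative, not measured values.


def apparent_position_on_screen(eye_x: float, object_x: float,
                                object_depth: float, screen_depth: float) -> float:
    """Trace the sight line from the eye (at depth 0) through the object and return
    the lateral position where it meets the screen plane."""
    if not 0 < object_depth < screen_depth:
        raise ValueError("object must sit between the viewer and the screen")
    t = screen_depth / object_depth  # how far past the object the sight line travels
    return eye_x + (object_x - eye_x) * t


# Assumed layout: eyes 18 in behind the near table edge, cereal box 36 in beyond
# that edge, pepper mill 12 in in front of the box and centered (x = 0).
SCREEN_DEPTH = 18 + 36   # 54 in
OBJECT_DEPTH = 18 + 24   # 42 in
HALF_EYE_SPACING = 1.25  # assumed: roughly half a typical adult eye separation

for label, eye_x in (("both eyes", 0.0), ("left eye only", -HALF_EYE_SPACING),
                     ("right eye only", HALF_EYE_SPACING)):
    x = apparent_position_on_screen(eye_x, 0.0, OBJECT_DEPTH, SCREEN_DEPTH)
    print(f"{label:>14}: mill lines up with x = {x:+.2f} in on the box")
```

The same function scales up to a theatre. Working in feet, a seat 20 ft off center and 40 ft from a performer who stands 6 ft in front of the screen gives `apparent_position_on_screen(20, 0, 40, 46)`, roughly -3.0: from that seat the performer appears to line up with a point about 3 ft away from where they appear from the king’s seat.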

Creating Content in Relation to the Theatrical Set and for Surfaces Other Than Projection Screens

We’ve covered this previously in Chapters 2 and 3, but it is worth repeating here. It is extremely important to know, as early as possible, what surfaces the content will be displayed on and how they fit into the overall set and stage picture. Ideally you will know these answers before you begin making content. The more you know about the final display and staging when creating content, the better the content will integrate into the final stage picture.

Figure 4.1 Subtle differences between parallel projection and forced perspective rendered from the same angle in a SketchUp model of The Survivor’s Way (Arizona State University Mainstage, 2012)

Source: Alex Oliszewski

Figure 4.2 Examples of king’s seat and off-center sight lines taken during construction of Forbidden Zones: The Great War, a devised new work conceived and directed by Lesley Ferris, codirected by Jeanine Thompson, with the MFA actors and designers. Set design by Cassandra Lentz. (The Ohio State University Mainstage, 2017)

Source: Alex Oliszewski

Here is a summary of key things to keep in mind:

- Is a projection or emissive screen better for the world of the play?

- Will the edges of the projection be blurred to make it look like the video is emerging from the architecture? Or will you create some sort of mask on the edges to blend it into the set? If so, be sure to keep important elements of the video away from the edges of the frame.

- Know the aspect ratio of the surface, such as 4:3, 16:9, or 16:10. This greatly influences the compositions you create. How does the aspect ratio of the video fit within the entire composition of the stage set?

- How will the performers interact with the content? What is their relationship to each other?
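Aspect ratio matters because content of one ratio placed on a surface of another either distorts or leaves empty bands. The sketch below computes a distortion-free fit and the leftover margins, which is also a quick way to see how much of the frame is actually usable before masking. The resolutions are illustrative assumptions and the function name is hypothetical.

```python
# Sketch: fit a video of one aspect ratio inside a surface of another without
# distortion ("letterbox"/"pillarbox") and report the unused border.
# Resolutions below are illustrative assumptions, not recommendations.


def fit_inside(src_w: int, src_h: int, dst_w: int, dst_h: int):
    """Scale (src_w, src_h) uniformly to fit within (dst_w, dst_h).
    Returns (scaled_w, scaled_h, border_x, border_y), where the borders are the
    empty margins on each side (left/right and top/bottom)."""
    scale = min(dst_w / src_w, dst_h / src_h)
    w, h = round(src_w * scale), round(src_h * scale)
    return w, h, (dst_w - w) / 2, (dst_h - h) / 2


# A 16:9 clip on a 4:3 surface leaves bands above and below.
print(fit_inside(1920, 1080, 1024, 768))
# A 4:3 clip on a 16:9 surface leaves bands at the sides.
print(fit_inside(1024, 768, 1920, 1080))
```

Whether those bands become projector black, a feathered mask, or part of a new composition is a design decision, but knowing their size in advance tells you where important elements can safely sit.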

Here is a summary of key things to know about surfaces that affect content:

- Is content projected or displayed via emissive displays?

- The reflective quality of the surface you project onto. The more reflective the surface, the more of the light that strikes it is bounced back. There is a delicate balance between too little and too much. Traditional projection screens are treated to have a particular screen gain, which determines how reflective they are. How reflective a surface is affects colors, contrast, and perceived brightness. The more reflective the surface, the narrower the viewing angle for the audience becomes.

- The color of the surface being projected onto. This affects colors, contrast, and perceived brightness of the projection. If you are projecting onto a black scrim or black wall, you need to know that before you begin creating content, as you want to anticipate how the surface absorbs black and dark colors in the content.

- The porousness of the surface being projected onto. The more porous the material, the more light travels through.

- Textures on projection surfaces. This creates an uneven surface that can break up and distort projected images.
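A common rule of thumb for comparing surfaces is that on-axis image brightness in foot-lamberts is roughly projector lumens times screen gain divided by image area in square feet. The sketch below applies that approximation; it assumes light is spread evenly and ignores ambient light, lens losses, and falloff. The projector output and the gain values for each surface are hypothetical examples.

```python
# Rough estimate of image brightness on a surface, using the common rule of thumb
#   foot-lamberts ~= projector lumens x screen gain / image area (sq ft).
# Assumptions: light spread evenly, viewer on-axis, no ambient light or losses.
# Projector output and gain values below are hypothetical examples.


def foot_lamberts(lumens: float, gain: float, width_ft: float, height_ft: float) -> float:
    """Approximate on-axis luminance of a projected image."""
    area = width_ft * height_ft
    if area <= 0:
        raise ValueError("image area must be positive")
    return lumens * gain / area


# The same hypothetical 10,000-lumen projector filling a 16 ft x 9 ft image:
for surface, gain in (("matte white screen", 1.0),
                      ("dark gray scenic wall", 0.3),
                      ("high-gain screen", 1.5)):
    print(f"{surface:>22}: ~{foot_lamberts(10_000, gain, 16, 9):.0f} fL")
```

Remember the trade-off noted above: a higher-gain surface reads brighter on-axis but narrows the viewing angle, so seats far off-axis may see noticeably less than this estimate.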

How Much Content Do You Need and How Long Does It Take to Create?

A good place to start in figuring out how long your content needs to be is the general rule that one script page takes about one minute of stage time. So, if you have ten pages of script that you want to support with moving images, you need roughly ten minutes of content. This rule does not always hold, however; pages may play faster or slower depending on the density of the text and the amount of stage action.

Saying that you will support the entire monologue on pages seven to eight is one thing; actually filling the amount of time the performer is going to take performing that text is another. It may be only two pages of text, but let’s say that the way it is played onstage takes ten minutes. Will that ten minutes of content be a single still image or moving images? If it is a moving image for the ten-minute sequence that is only two pages of the script, then you are responsible for 18,000 frames (30 frames per second × 60 seconds in a minute × 10 minutes). This can explode very quickly. If you have a total of thirty minutes of moving images, that number triples to 54,000 frames. That is a lot of video.
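The arithmetic above is easy to keep in a small helper so you can recalculate as rehearsals change the timing. This sketch simply encodes the page-per-minute rule of thumb and frames = frames per second × seconds; 30 fps matches the example in the text and is an assumption for your own show, and the function names are hypothetical.

```python
# Back-of-the-envelope content math using the rules of thumb above:
# about one script page per minute of stage time, and frames = fps x seconds.
# 30 fps is assumed, matching the example in the text; your show may differ.


def stage_minutes_from_pages(pages: float, minutes_per_page: float = 1.0) -> float:
    """Rough stage time for a run of script pages (adjust the rate after rehearsals)."""
    return pages * minutes_per_page


def frames_needed(minutes: float, fps: int = 30) -> int:
    """Total frames of moving image required to fill `minutes` of stage time."""
    return round(minutes * 60 * fps)


print(frames_needed(stage_minutes_from_pages(10)))  # 18000 (ten pages at the default rate)
print(frames_needed(10))  # 18000 (the two-page monologue that plays for ten minutes)
print(frames_needed(30))  # 54000 (thirty minutes of moving images)
```

The `minutes_per_page` parameter is there because, as the monologue example shows, the real playing time can differ wildly from the page count.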

The cue list and conversations with the director are the best way to determine how much content you actually need. Regardless of the type of content you are creating, it is time-consuming. Given the typically short window of time to create content for a theatrical production, you should know exactly what you are responsible for and about how long it will take to create the finished design. Reliably knowing the difference between a two-to-four-hour solution and a three-to-four-week solution can make or break you, allowing you to rule out certain types of content creation solely based on the time it will take to create.

Still images take less time to create than moving images, but there is still a good deal of time that can be spent on a single image. The amount of time varies depending on whether you take the photograph yourself, alter stock photography, or create the still image in some other way. You should plan on spending at least one to three hours per still image used in a production.

For video and animation, you can expect to spend anywhere between five and twenty hours in active production and postproduction for each minute of final video that you end up placing on the stage (this does not apply to twenty-second looping videos). A common industry rule of thumb is that one minute of video requires, on average, about three hours of editing time. This is for straightforward video without a lot of effects. If the video has a lot of effects or heavy animation, the editing time could be longer. The remaining time is spent on set recording the video or creating the animation. The more complicated the video shoot or the animation, the longer it takes to make.

If you plan on using live video of actors or are setting up a live camera rig to project something else that is happening in real time onstage, you should plan on at least two to six hours of time to set up all the equipment and write live camera cues in the media server. If you are creating precise composited images with the live video feed and other assets or applying a lot of real-time video effects, the time spent to create the look may be longer.

When creating abstract generative content, it usually takes one to five hours for one minute of stage time. Like creating an animation using many key frames, the more parameters and/or key frames you use with generative content, the longer it takes to create.
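The hour ranges in this section can be rolled into a rough budget for a whole cue list. The sketch below only adds up the low and high ends of those ranges; the sample show is hypothetical, and the live-video figure is treated as a per-rig minimum, as the text describes it. It is a planning aid, not a schedule.

```python
# Sketch of a production-time budget built from the hour ranges given above.
# The ranges are this section's rules of thumb; the sample cue list is hypothetical.

HOURS = {
    # content type: (low, high) hours per unit
    "still": (1, 3),        # per still image
    "video": (5, 20),       # per finished minute of video or animation (not short loops)
    "generative": (1, 5),   # per minute of stage time
    "live_setup": (2, 6),   # per live-camera setup (a minimum, effects add more)
}


def estimate_hours(items):
    """items: iterable of (content_type, quantity). Returns (low, high) total hours."""
    low = high = 0.0
    for kind, qty in items:
        lo, hi = HOURS[kind]
        low += lo * qty
        high += hi * qty
    return low, high


# Hypothetical show: 12 stills, 6 minutes of edited video,
# 4 minutes of generative content, and one live-camera rig.
show = [("still", 12), ("video", 6), ("generative", 4), ("live_setup", 1)]
low, high = estimate_hours(show)
print(f"Estimated {low:.0f} to {high:.0f} hours")
```

A spread this wide is itself useful information: if even the low estimate exceeds the time you have, some cues need a different content strategy.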

Organization of Assets

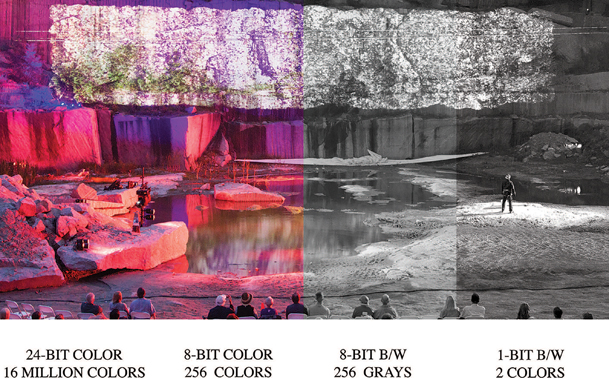

Each cue you create may draw on several different assets. For instance, you may have an animation cue of a sky, in which the sun slowly moves, the clouds move, and a bird flies through. In this case the sun, sky, clouds, and bird are independent assets that you create (or source) and that will all be part of the final cue. You might create all the assets in Adobe Illustrator and then import them into Adobe After Effects or the media server to animate.

Keeping track of all these assets and project files is vitally important, especially when working on shows where you have a lot of cues. If you download an image from the Internet with a filename of “1243Hg45567gf9.jpeg,” this will not be helpful to you later when you are flipping through the images on your hard drive trying to locate it. So give your files meaningful names that describe what the image actually is, such as “gray_and_white_cat2.jpeg,” so that at a glance you can easily find what you are seeking.

We also recommend organizing your files into folders based on specific cues. Subfolders help you keep a logical structure for your assets. This is especially important when multiple members of the team may need to use the same assets.
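These habits can be partly automated. The sketch below turns a plain description into a predictable filename and creates one folder per cue with a few subfolders. The folder layout (`cue_###` with `source`, `project`, and `renders` subfolders) and the helper names are hypothetical conventions chosen for illustration, not an industry standard; adapt them to whatever your team agrees on.

```python
# Sketch of the naming and folder habits described above. The folder layout
# (one folder per cue with "source", "project", and "renders" subfolders) and the
# helper names are hypothetical conventions, not a standard.
import re
import tempfile  # used only by the demo below
from pathlib import Path


def meaningful_name(description: str, extension: str) -> str:
    """Turn a human description into a predictable, searchable filename,
    e.g. "Gray and White Cat 2" -> "gray_and_white_cat_2.jpeg"."""
    slug = re.sub(r"[^a-z0-9]+", "_", description.lower()).strip("_")
    return f"{slug}.{extension.lstrip('.').lower()}"


def make_cue_folders(show_root: Path, cue_numbers,
                     subfolders=("source", "project", "renders")) -> None:
    """Create show_root/cue_<number>/<subfolder> for every cue; safe to rerun.
    Whole-number cues are zero-padded (5 -> cue_005) so folders sort in order."""
    for cue in cue_numbers:
        for sub in subfolders:
            (show_root / f"cue_{str(cue).zfill(3)}" / sub).mkdir(parents=True, exist_ok=True)


if __name__ == "__main__":
    print(meaningful_name("Gray and White Cat 2", ".JPEG"))
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp) / "my_show"
        make_cue_folders(root, [1, 2, "12.5"])
        for path in sorted(root.rglob("*")):
            print(path.relative_to(root))
```

Because `mkdir(..., exist_ok=True)` is used, the folder script can be rerun whenever cues are added without disturbing existing work.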

Backing Up Your Data

We cannot stress enough how important it is to back up all your files when working on a show. Hard drives fail. Data gets corrupted. If you don’t want to lose hours of work that you may not have the time to replicate, make sure to back up all your files. We recommend using cloud-based storage in addition to physical hard drives. Additional bonuses to cloud-based storage are:

- Other members of the design team can all access the files, which keeps everything in one place and organized.

- Some cloud storage services allow you to go back in time to rescue a previous version of a file. This can be helpful if you made a lot of changes to something that you no longer like or if a file becomes corrupt.

Do not rely on cloud services as your only backup.
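For the local half of a backup routine, a short script can copy a project folder to a timestamped location and confirm that every copied file matches the original. This is a minimal sketch using only the Python standard library; the destination layout and function names are hypothetical, and it complements rather than replaces cloud storage.

```python
# Minimal sketch of a timestamped local backup with verification, to complement
# (not replace) cloud storage. Paths and the folder naming scheme are hypothetical.
import hashlib
import shutil
import tempfile  # used only by the demo below
from datetime import datetime
from pathlib import Path


def file_digest(path: Path) -> str:
    """SHA-256 of a file, read in chunks so large video files don't fill memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def backup_project(project: Path, backup_root: Path) -> Path:
    """Copy `project` to backup_root/<name>_<timestamp> and verify every file."""
    stamp = datetime.now().strftime("%Y%m%d_%H%M%S")
    dest = backup_root / f"{project.name}_{stamp}"
    shutil.copytree(project, dest)  # raises if dest exists, so nothing is overwritten
    for src in project.rglob("*"):
        if src.is_file():
            copy = dest / src.relative_to(project)
            if file_digest(src) != file_digest(copy):
                raise IOError(f"backup mismatch: {src}")
    return dest


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        project = Path(tmp) / "my_show"
        project.mkdir()
        (project / "notes.txt").write_text("cue list draft")
        print("backed up to", backup_project(project, Path(tmp) / "backups"))
```

Pointing `backup_root` at an external drive gives you a second physical copy alongside whatever cloud service the team uses.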

The Basics of Design

This section provides an introduction to core design principles that are vital to designers. We don’t have room here to go in-depth in any one area. Set some time aside to visit the local arts library and/or to search online resources for detailed analysis about the basics of design. Having a firm foundation in the basic principles of design provides a strong starting point for content creation.

Style

Styles arise from a deliberate combination of visual elements and principles or rules of image making used in a work or individual composition. It can also refer to specific recognized types of design, which can be used as templates for predictable results. Skeuomorphism, flat, minimalist, Victorian, mid-century modern, classical, and contemporary are examples of established styles. Style can be broken down into component parts, such as line, shape, composition, color, contrast, negative space, texture, rendering techniques, and pattern, among many possible others.

In theatrical design, a show’s style is guided by, and emerges from, the storytelling needs of a performance. Theatre designers collaborate with others and in turn need to be able to match their work to various styles and situations. Simply choosing to include projections in a show helps to define its overall style. Regardless of how those projections are incorporated, whether as an extension of the environment or set apart on a screen, a designer should always know why and how his or her projections affect the style of the show.

Some projection design tasks, such as the use of supertitles, have predictable styles. Supertitles demand to be legible and usually should not upstage the primary action taking place on the stage. Because of this, they are typically made of white text projected on a dark background just outside of the stage’s framing. This way they can be both large enough to be legible and within the peripheral vision of the audience.

Figure 4.5 Example of the mid-century modern style and architecture of the Stahl House, designed by Pierre Koenig

Source: Ovs

Line

Whether explicitly visible or implied, lines are marks or bands that define space. Lines can be straight, curved, textured, jagged, smooth, or any number of other variations. Straight lines feel different from curved lines, and delicate lines convey something different than bold lines. Lines can be defined by the negative space between elements just as they can be deliberately placed objects. Line type and orientation help define the spatial, emotional, and physical qualities of an environment. Vertical lines can define height and suggest architecture and other man-made structures. Slightly curving horizontal lines evoke nature, balance, and organic forms. Diagonal lines convey movement and can be stimulating or even overwhelming when repeated or patterned. Flowing lines that meander about can draw the eye along a path or help frame other elements in an image.

Theatre projections tend to be as large as, if not larger than, the performers onstage and in turn tend to help define the qualities of the environment. Lines can readily be used to define horizons and any variety of skyline. When working with a scenic designer, note how he or she is using lines in the shapes and surfaces that the projections and displays will be on and near.

In projection design, 3D effects are often achieved through the use of forced perspective and 3D animation techniques that use combinations of lines and shapes to create an illusion of depth and dimension. By combining horizontal, vertical, and diagonal lines set to specific vanishing points, you can create illusions of depth and expand the perceived volume of the content in the shared theatrical space.
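Every set of parallel lines in a scene converges to its own vanishing point, determined only by the lines’ direction. The sketch below computes that point for a simple pinhole viewer (an assumed, illustrative camera setup rather than any particular software’s camera model) and shows points far along a line approaching it. Lines running straight away from the viewer share a central vanishing point (one-point perspective); diagonal lines get their own.

```python
# Sketch: where a family of parallel 3D lines converges in a perspective image.
# Assumes a pinhole viewer at the origin looking down +z with picture-plane
# distance f (an illustrative setup, not a specific software's camera model).


def vanishing_point(direction, f=1.0):
    """Image-plane point that all lines with this 3D direction converge toward."""
    dx, dy, dz = direction
    if dz == 0:
        raise ValueError("lines parallel to the picture plane have no finite vanishing point")
    return (f * dx / dz, f * dy / dz)


def project(point, f=1.0):
    """Perspective-project a 3D point (z > 0) onto the picture plane."""
    x, y, z = point
    return (f * x / z, f * y / z)


# Lines running straight away from the viewer share the central vanishing point;
# lines angled 45 degrees to the right converge on a point off to the side.
print(vanishing_point((0, 0, 1)))  # (0.0, 0.0)
print(vanishing_point((1, 0, 1)))  # (1.0, 0.0)

# Points ever farther along a line through (2, -1, 1) with direction (1, 0, 1)
# approach that second vanishing point.
for t in (1, 10, 100, 1000):
    print(project((2 + t, -1, 1 + t)))
```

Laying out horizontal, vertical, and diagonal guide lines toward points computed this way keeps a forced-perspective composition internally consistent.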


For More Info 4.1 Vanishing Points

Refer to “2D and 3D Content in a 3D World” in Chapter 4.

Figure 4.6 Example of different line types. Digital media designs by Alex Oliszewski. Upper and lower left images from The House of the Spirits by Caridad Svich. Lighting design by Anthony Jannuzzi. Scene design by Brunella Provvidente. Costume design by Anastasia Schneider. Arizona State University Mainstage, 2012. Upper right image from Forbidden Zones: The Great War. Set design by Cassandra Lentz. Lighting design by Kelsey Gallagher. Costume design by Julianne Nogar. The Ohio State University Mainstage, 2017. Lower right image from Big Love by Charles Mee. Directed by Kim Weild. Set design by Jeannie Bierne. Lighting design by Troy Buckey. Costume design by Maci Hosler. (Arizona State University Mainstage, 2010)

Source: Alex Oliszewski

Shape

Shapes are made from enclosing an area with lines or otherwise defining it with color, texture, or contrast values. Shapes can be geometric, abstract, organic, evocative of a silhouette, stylized, or nonrepresentational.

One of the most common shapes found in conjunction with projections is that of a rectangular screen. Learning how to mask and feather the edges of the projections is key to creating projections that fade into their environments. Being able to frame video or even obfuscate the edges of the rectangular projection area with feathered edges or shapes that merge into an environment’s line and form helps the projections seem more integrated with the shapes of the surfaces you’re projecting on.
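A feathered edge is simply an opacity value that falls from fully opaque in the interior to transparent at the frame edge. The sketch below computes that value for any pixel using a smoothstep ramp, which is one common choice among several; media servers and compositing applications provide their own mask and feather controls, and this function is only an illustration of the idea.

```python
# Sketch of a feathered rectangular mask: alpha is 0 at the frame edge, ramps
# smoothly to 1 over `feather` pixels, and stays 1 in the interior. A smoothstep
# ramp is one common choice; media servers and compositing apps offer their own.


def smoothstep(t: float) -> float:
    """Clamp t to [0, 1] and ease it in and out."""
    t = max(0.0, min(1.0, t))
    return t * t * (3 - 2 * t)


def feather_alpha(x: float, y: float, width: int, height: int, feather: float) -> float:
    """Opacity multiplier for pixel (x, y) in a width x height frame (feather > 0)."""
    edge_distance = min(x, y, width - 1 - x, height - 1 - y)
    return smoothstep(edge_distance / feather)


# Alpha along the middle row of a 1920-wide frame with a 100-pixel feather.
for x in (0, 25, 50, 75, 100, 960):
    print(x, round(feather_alpha(x, 540, 1920, 1080, 100), 3))
```

Multiplying each pixel’s color by this alpha fades the rectangle into projector black; wider feathers help the image dissolve into textured or irregular scenery.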

Composition

Arrangements of shapes, lines, and forms are described as compositions. An object’s relationship to another object creates meaning. Designers have developed a set of principles that interpret and codify these meanings into component parts that can be defined and used with predictable results. Commonly listed principles of compositional design include balance, unity, variety, pattern, repetition, scale and proportion, rhythm, emphasis, space, and eye path.

When discussing composition there are a number of terms that come up quite often. These include contrast, alignment, proximity, and repetition. How objects are aligned with one another, above and below, beside and away, indicates the objects’ relationship to one another within a composition.

Rule of Thirds

The rule of thirds is a method for creating compositions. It involves mentally or literally overlaying lines that divide an image into a grid of three equal rows and three equal columns, and it favors placing points of emphasis where those lines intersect. This offsets the subject and is usually more visually pleasing than placing emphasis directly in the center of the image.

Figure 4.7 Example of shape types. A Brief Anniversary of Time by Lance Gharavi. Digital media design by Daniel Fine. Lighting/costume/set design by Anastacia Schneider. (Arizona State University, Marston Theatre, 2012)

Source: Matthew Ragan

Figure 4.8 Example of composition from The House of the Spirits by Caridad Svich. Digital media design by Alex Oliszewski. Lighting design by Anthony Jannuzzi. Scene design by Brunella Provvidente. (Arizona State University Mainstage, 2012)

Source: Alex Oliszewski

Figure 4.9 Example of rule of thirds between staging and projections from Good Kids by Naomi Iizuka. Digital media design by Alex Oliszewski. Lighting design by Josh Poston. Set design by Brad Steinmetz. Costume design by Travis Bihn. (The Ohio State University, 2015)

Source: Alex Oliszewski

Figure 4.10 Example of negative space from A Brief Anniversary of Time by Lance Gharavi. Digital media design by Daniel Fine. Lighting/costume/set design by Anastacia Schneider. (Arizona State University, Marston Theatre, 2012)

Source: Matthew Ragan

The rule of thirds translates nicely into digital media design but must be considered in three dimensions. When designing for the theatre there is a wide range of sight lines to consider that affect how an audience sees your compositions in relation to the set. On a thrust stage, you may find that elements of your design end up in different thirds depending on where in the house you sit.
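For the flat image itself, the rule-of-thirds guides are easy to compute for any canvas size. This sketch returns the two vertical and two horizontal guide lines and their four intersections; the 1920 × 1080 canvas is just an example, and the function name is hypothetical. As noted above, these points describe the image only, and where they land relative to the set still depends on the seat.

```python
# Sketch: the rule-of-thirds guide lines and their four intersections
# for a given canvas size. The resolution below is only an example.


def thirds(width: float, height: float):
    """Return (vertical line xs, horizontal line ys, intersection points)."""
    xs = (width / 3, 2 * width / 3)
    ys = (height / 3, 2 * height / 3)
    return xs, ys, [(x, y) for y in ys for x in xs]


xs, ys, points = thirds(1920, 1080)
print("vertical lines at x =", xs)
print("horizontal lines at y =", ys)
print("intersections:", points)
```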

Negative Space

Negative space is a term that refers to empty space between and surrounding shapes and areas of detail or texture. This empty space can itself have a form and offer interesting or even hidden meanings.

Negative space is particularly useful when you want projections to blend into an environment. Projecting a bright white rectangular border around a projection reveals the edges and artificial nature of the images and their method of display. Conversely, the projections seem to emerge from the environment if you surround them with projector/video black or otherwise blend them into the existing light and ambient projection surfaces.

Unity

Unity in a design is achieved when each component element and subject of a composition are visually and aesthetically related to one another. Balance, alignment, repetition, color, juxtaposition, and so forth can be used to achieve unity in a composition. Unified compositions include only those visual elements that are needed to fulfill the goals of a design.

Onstage, digital media sometimes sacrifices unity for image complexity and detail. When you find yourself needing to work toward a design that is more unified with other design areas, we recommend that you simplify and look for ways to balance visual elements across all design areas.

Figure 4.11 Example of unity between the projection, lighting, the set, and the staging from Forbidden Zones: The Great War. Digital media design by Alex Oliszewski. Set design by Cassandra Lentz. Lighting design by Kelsey Gallagher. Costume design by Julianne Nogar. (The Ohio State University Mainstage, 2017)

Source: Alex Oliszewski

Figure 4.12 Example of variety from a workshop of Beneath: A Journey Within by Lance Gharavi. Digital media design by Daniel Fine. Costume and set design by Brunella Provvidente. Lighting design by Michael Bateman. (Arizona State University, Marston Theatre, 2016)

Source: Daniel Fine

Variety

Variety is the use of differences between elements. It works with unity to create visual interest; without variety, an image can become visually uninteresting. Seek a balance between unity and variety. Design elements need to be alike enough to seem to belong together as part of a cohesive whole, yet varied enough to be visually interesting. Some ways to create visual variety within a unified design are to change the size, shape, color, texture, value, line, and so forth of individual elements.

Balance

Balance describes the visual weight, distribution, and proportion that elements such as textures, objects, colors, and empty space have in relation to one another. Many small objects can be grouped and arranged to balance one or two larger objects. Balance can be symmetrical, asymmetrical, or radial, and it can follow any number of compositional principles, such as the rule of thirds.

Onstage, performers and often set pieces are constantly moving. This shifting of onstage elements changes the balance of the projections, both within the projected image itself and in relation to the physical world of the set and actors.

Color

Color is extremely important in design. Certain colors are often associated with specific emotions, times of day, and even specific storytelling contexts. Reds, yellows, and oranges are warm colors associated with fires, mornings, spring, and high-energy situations. Cool colors, such as green, blue, and purple, evoke plants, water, nighttime, and winter and are calmer and more constrained.


For More Info 4.2

Refer to “The Basics of Digital Content: CMYK and RGB Color, Bit Depth, and Alpha Channels” in Chapter 4.

Figure 4.13 Example of symmetrical, asymmetrical, and radial balance. Top left: Good Kids by Naomi Iizuka. Digital media design by Alex Oliszewski. Lighting design by Josh Poston. Set design by Brad Steinmetz. Costume design by Travis Bihn. (The Ohio State University, 2015) Top right: Workshop of Beneath: A Journey Within by Lance Gharavi. Digital media design by Daniel Fine. Costume and set design by Brunella Provvidente. Lighting design by Michael Bateman. (Arizona State University, Marston Theatre, 2016) Bottom: The Survivor’s Way by Alex Oliszewski. Digital media design by Alex Oliszewski and Daniel Fine. Light design by Adam Vachon. (Arizona State University, 2012)

Source: Alex Oliszewski

Any color on surfaces you are projecting onto changes the perceptions of the colors you are using. For instance, you may find it difficult, if not impossible, to project vibrant red outlines on a dark green surface. Create real-world tests using the actual projectors and sample surfaces provided by the scenic designer whenever possible.
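A very simplified way to build intuition for this is to treat the surface as a filter: each red, green, and blue channel of the projected light is multiplied by how much of that channel the surface reflects. The sketch below does exactly that. It ignores ambient light, screen gain, and projector black level, and the reflectance values are made-up examples, so it is a thinking aid only; it does not replace real tests with the actual projector and surface samples.

```python
# Very simplified model of projecting onto a colored surface: each RGB channel of
# the projected light is multiplied by how much of that channel the surface
# reflects. Ignores ambient light, gain, and projector black level; the surface
# reflectance values are hypothetical examples, not measurements.


def reflected(projected_rgb, surface_reflectance):
    """Per-channel product of projected light (0-1) and surface reflectance (0-1)."""
    return tuple(round(p * r, 3) for p, r in zip(projected_rgb, surface_reflectance))


VIBRANT_RED = (1.0, 0.0, 0.0)
WHITE_LIGHT = (1.0, 1.0, 1.0)
WHITE_SURFACE = (0.9, 0.9, 0.9)          # hypothetical reflectances
DARK_GREEN_SURFACE = (0.08, 0.35, 0.1)   # hypothetical reflectances

print(reflected(VIBRANT_RED, WHITE_SURFACE))       # (0.9, 0.0, 0.0)
print(reflected(VIBRANT_RED, DARK_GREEN_SURFACE))  # (0.08, 0.0, 0.0): the red nearly vanishes
print(reflected(WHITE_LIGHT, DARK_GREEN_SURFACE))  # "white" comes back tinted green
```

Even this crude model shows why a red outline on a dark green surface loses almost all of its energy: the surface reflects very little of the only channel the red light contains.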

Texture

Texture is an actual or implied tactile quality to a surface. It can be real and emerge from physical qualities of a surface, such as the layering of paint or the weaving of textiles, or it can be mimicked through the application of patterned highlights and shadows to create the impression of texture. Textures are visible because they cause patterns of shadow and light that the eye interprets as a tactile quality to a surface. Any number of textures can be digitally added to content to give the impression that the image is on a textured surface.

The texture of a surface changes how it takes and reflects a projection. Just as matte and glossy surfaces scatter light that strikes them differently, so do different textured surfaces. Test any textured surfaces you intend to project on early in the design process to ensure that they reflect the content appropriately.

Emphasis

Emphasis creates focus in a composed image. It draws your attention to a certain element. Areas of emphasis might be defined by a juxtaposed color, convergence of lines, or an area of contrast that draws the focus of the eye.

Contrast

In design, contrast refers to how noticeably elements differ from one another. Juxtaposing different design elements is key to establishing meanings between them. A large heading followed by small text and a bright white spot in a sea of dark blue are examples of high contrast. Contrast is used to grab attention and direct the eye to specific information. Contrasted objects become the focus and push less important visual details into the background.

Figure 4.14 Example of texture from Soot & Spit by Charles Mee. Directed by Kim Weild. Digital media design by Boyd Branch. Lighting design by Adam Vachon. Set design by Brunella Provvidente. Costume design by Haley Peterson. (Arizona State University Mainstage, 2014)

Source: Boyd Branch

Scale and Proportion

Scale refers to the size of a visual element in relationship to another. Through intentional sizing of visual elements in a composition, scale can be used to manipulate the implied size of an object. In film, scale is used by juxtaposing close-up and wide-angle shots of a subject to create moments of intimacy or distance between the subject and the audience.

Proportion is the relative size of all the various parts that make up an object in relationship to the whole. For instance, if you draw a human body and want all the parts to be in correct proportion, a foot that is bigger than the torso would be out of proportion.

In theatrical projection and digital media design, compositions must be considered in relation to the human scale of performers. The scale of projections in relation to the performers defines the flexibility of the compositions you are able to make. This is one element of compositions that remains a constant concern when working in live performance. Additionally, the scale of the projections needs to fit within the architecture of the set and the venue.
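Relating the scale of content to the human scale of performers often comes down to simple arithmetic: how many pixels correspond to a foot on the actual surface. The sketch below assumes the video fills the full width of the surface; the surface width, resolution, and performer height are hypothetical, as are the function names.

```python
# Sketch: how many pixels an image of a performer needs to appear at a given scale
# on a surface, assuming the video fills the surface's full width. Surface size,
# resolution, and performer height below are hypothetical.


def pixels_per_foot(surface_width_ft: float, horizontal_pixels: int) -> float:
    """Pixel density of the image on the physical surface."""
    return horizontal_pixels / surface_width_ft


def pixels_for_height(real_height_ft: float, surface_width_ft: float,
                      horizontal_pixels: int, scale: float = 1.0) -> float:
    """Pixel height for a figure `real_height_ft` tall shown at `scale` x life size."""
    return real_height_ft * scale * pixels_per_foot(surface_width_ft, horizontal_pixels)


# A 6 ft performer on a 20 ft wide, 1920-pixel-wide projection:
print(pixels_for_height(6, 20, 1920))           # 576.0 (life size)
print(pixels_for_height(6, 20, 1920, scale=3))  # 1728.0 (three times life size)
```

In this example a figure at three times life size needs 1728 pixels of height, more than a 1080-pixel-tall frame holds, so the image would have to be cropped or the surface enlarged; checking this early prevents surprises in tech.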

When using live or prerecorded video to magnify an actor, small details of a performance are made visible. Scale can also be manipulated to play against the size of the human body onstage. Performers can be embodied through digital media and rescaled so that they become larger or smaller than life. This allows digital avatars to perform at scales far removed from their onstage human form, the environment, and the audience.

Repetition and Pattern

Repetition describes the reuse of shapes, lines, colors, arrangements, ratios, and so forth of any single element or group of visual elements in a composition. Repetitions are perceived as patterns when their elements seem to loop back into one another. Patterns are regular, consistently repeating elements of a design.

The use of repetition is a powerful tool when working toward a unified design between different mediums and especially in creating meaning and context between images and real-world objects onstage.

Figure 4.16 Example of scale: projections in relation to actors from Everybody’s Talkin’: The Music of Harry Nilsson by Steve Gunderson and Javier Velasco. Digital media design by Daniel Fine. Set design by Sean Fanning. Lighting design by Philippe Bergman. Costume design by Gregg Barnes. (San Diego Rep, 2015)

Source: Daniel Fine

Figure 4.17 Example of repetition and pattern from Everybody’s Talkin’: The Music of Harry Nilsson by Steve Gunderson and Javier Velasco. Digital media design by Daniel Fine. Set design by Sean Fanning. Lighting design by Philippe Bergman. Costume design by Gregg Barnes. (San Diego Rep, 2015)

Source: Daniel Fine

Typography

A large portion of graphic design deals with the art and technical specifications of arranging text. All of the elements of design discussed earlier should be considered when rendering words. The art form focuses on ensuring the legibility and easy comprehension of text. The terms leading, kerning, tracking, hierarchy, justification, pica, serif, along with many others, all come from typography and its established techniques for formatting and manipulating text.

The use of rendered text is common in theatre and often prioritizes practical needs over abstraction or artistic detail. The most common uses of text in theatre productions are to establish time and location, as films often do, and to display supertitles.

For More Info 4.3 Supertitles

See “Style” in Chapter 4.

The Basics of Digital Content

The following section provides an introduction to the core concepts of digital content, that is to say, what lies just above the surface of the ones and zeros. This information is the foundation for understanding how to create, manipulate, and display digital content. It is not meant to go in depth in any one area, but rather to give you an overview of how the technical aspects of the digital realm affect content. A working knowledge of these digital basics is vital to your success as a digital media designer.

Bits and Bytes

A bit is the smallest unit of information in computing and digital video and can hold one of two values. These values are typically written as 1 and 0 but can represent any pair of opposing states, such as on/off.

A byte is a group of eight bits that function as a unit. Each added bit doubles the number of possible combinations (2, 4, 8, 16, 32, 64, and so forth), so a single byte can represent 256 distinct values.
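Because each added bit doubles the number of possible combinations, n bits can represent 2 to the nth power distinct values. The short Python sketch below simply tabulates that doubling; the function name `values_for_bits` is our own, chosen for illustration.

```python
def values_for_bits(bits: int) -> int:
    """Number of distinct values a group of `bits` binary digits can represent."""
    return 2 ** bits

# Each added bit doubles the count: 1 bit -> 2 values, 8 bits (one byte) -> 256 values.
for bits in range(1, 9):
    print(f"{bits} bit(s): {values_for_bits(bits)} values")
```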

Figure 4.18 Example of typography from Count of Monte Cristo by Frank Wildhorn and Jack Murphy. Digital media design by Daniel Fine. Set design by Rory Scanlon. Lighting design by Michael Kraczek. Costume design by La Beene. (Brigham Young University Mainstage, 2015)

Source: Daniel Fine

Bits and bytes are important to understand because they are the building blocks of computational information, especially in terms of rendering visual media.

Pixels, Rasters, and Resolution

The smallest visual element of a digital image is called a pixel. In digital media, pixels are made up of bits. Two primary pieces of information are used to create a pixel: a chrominance (chroma, or color) value and a luma (intensity) value. If you take all the chroma out of an image, you are left with a grayscale (black-and-white) image. Remove the luma and you have only black.
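To make "taking the chroma out" concrete, the following sketch computes a luma value from an 8-bit RGB pixel and copies it into all three channels, leaving a gray pixel of similar perceived brightness. It assumes the Rec. 709 (HD video) luma weights; other standards weight the channels differently, and real video pipelines handle gamma and signal-range details that this simplified example ignores. The function names are hypothetical.

```python
# Rec. 709 luma weights (an assumption here; Rec. 601 and others use different weights).
KR, KG, KB = 0.2126, 0.7152, 0.0722

def luma(r: int, g: int, b: int) -> float:
    """Approximate luma (brightness) of an 8-bit RGB pixel."""
    return KR * r + KG * g + KB * b

def remove_chroma(r: int, g: int, b: int) -> tuple[int, int, int]:
    """Discard color but keep brightness: every channel is set to the pixel's luma."""
    y = round(luma(r, g, b))
    return (y, y, y)

print(remove_chroma(255, 0, 0))   # pure red becomes a dark gray
print(remove_chroma(0, 255, 0))   # pure green becomes a light gray
```

Green contributes most to perceived brightness, which is why pure green turns into a much lighter gray than pure red.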

Images formed on monitors or in the light of video projectors are made up of groupings of individual pixels. Digital files, digital display devices, and digital image-capturing devices all rely on pixels arranged in a grid, commonly referred to as a raster.

The number of pixels you have in a raster is defined in terms of resolution. The more pixels there are in a raster the greater the resolution. An image’s resolution is represented and determined by two values that correspond to the number of pixels in a grid:

- The number of pixels along a row in the width (x) of an image

- The number of pixels along a column in the height (y) of an image

To calculate the number of pixels in an image, multiply (×) the number of pixels wide by the number of pixels high. For instance, one of the most common resolutions currently used in commercial video equipment is 1920 × 1080. This means that the video image is 1920 pixels wide and 1080 pixels high. Think of 1920 × 1080 as a shorthand way of expressing the total number of pixels (resolution) per image, as determined by the equation 1920(w) × 1080(h) = 2,073,600 total pixels in each frame of video.

Beware that there are many different ways of describing the resolution of an image. Still camera companies use the term megapixel to describe one million pixels, meaning that the foregoing example resolution would be a 2-megapixel image. Projection and emissive display companies use terms such as HD, 4K, and others that imply a resolution but are imprecise. Whenever possible, describe resolution in exact width and height.
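The pixel arithmetic above, including the conversion to megapixels, fits in a few lines of Python. This is a minimal sketch; the helper names are illustrative.

```python
def total_pixels(width: int, height: int) -> int:
    """Resolution expressed as a total pixel count: width x height."""
    return width * height

def megapixels(width: int, height: int) -> float:
    """Total pixels expressed in millions, as still camera makers do."""
    return total_pixels(width, height) / 1_000_000

w, h = 1920, 1080
print(f"{w} x {h} = {total_pixels(w, h):,} pixels")   # 1920 x 1080 = 2,073,600 pixels
print(f"about {megapixels(w, h):.1f} megapixels")      # about 2.1 megapixels
```

Note that 2.07 million pixels is usually rounded down to "2 megapixels" in marketing, which is exactly the kind of imprecision the paragraph above warns about.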

Tip 4.1 Tricks for Making the Resolution You Have Look the Best

- Problem: Individual pixels are large, visible, and distracting.

- Solution: Try moving the projector ever so slightly out of focus. This can help bleed the edges of the pixels together and actually look better from a distance than a perfectly in-focus projection.

- Problem: The media server is having difficulty maintaining smooth playback of content when you start compositing multiple high-resolution media files.

- Solution 1: After you have locked down the exact placement and timing of the content, return to the content creation software and render the composited video down to a single piece of high-resolution media.

- Solution 2: If the media server allows, try turning down the resolution throughput of some of the content to anywhere between 15 percent and 33 percent. Depending on how close the audience is sitting to the display screen or projected content you can get substantial performance gains with little or no perceived loss in quality. This option is great if you have limited time for re-rendering or if you are trying to improve quality on the fly during a rehearsal.

Note: Always check the manual and user forums for the media server you are using to ensure you have encoded the content in the recommended codec(s).

Pixels Are Data

Pixels are recorded/stored/displayed as data. There is a wide range of file formats that are available for storing digital image data. The standard in digital photography and video is a raster-based storage method using pixels.

If an image is black and white (with no shades of gray), only one bit is needed for each pixel in the image: white is represented by a 1 and black by a 0.
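As a minimal sketch of a one-bit image, the tiny black-and-white raster below is stored as rows of 1s and 0s. Because each pixel needs only one bit, eight pixels fit into a single byte. The packing order used here (first pixel in the highest bit) is an assumption for illustration, not the layout of any particular file format.

```python
# A 4 x 8 black-and-white image: 1 = white, 0 = black (one bit per pixel).
image = [
    [0, 1, 1, 0, 0, 1, 1, 0],
    [1, 0, 0, 1, 1, 0, 0, 1],
    [1, 0, 0, 1, 1, 0, 0, 1],
    [0, 1, 1, 0, 0, 1, 1, 0],
]

def pack_row(bits: list[int]) -> bytes:
    """Pack a row of 0/1 pixels into bytes, eight pixels per byte (first pixel = highest bit)."""
    out = bytearray()
    for start in range(0, len(bits), 8):
        chunk = bits[start:start + 8]
        chunk = chunk + [0] * (8 - len(chunk))  # pad a short final chunk with black
        value = 0
        for bit in chunk:
            value = (value << 1) | bit
        out.append(value)
    return bytes(out)

packed = b"".join(pack_row(row) for row in image)
print(f"{len(image) * len(image[0])} pixels stored in {len(packed)} bytes")
```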

Figure 4.19 Chart of different resolutions and aspect ratios

Source: Alex Oliszewski, adapted from Shutter Stock figure 162928304

For More Info 4.4 Color and Alpha

See “CMYK and RGB Color, Bit Depth, and Alpha Channels” in Chapter 4.

Representing the 16 million or so colors that modern digital displays can show requires more data per pixel: typically 8 bits for each of the red, green, and blue channels, or 24 bits in total. Using 1920 × 1080 resolution, there is a total of 2,073,600 pixels. If each of those pixels requires only red, green, and blue color data, then there are 6,220,800 (2,073,600 × 3) color values, or 6,220,800 bytes at 8 bits per value, that need to be rendered in the raster of a single image. Begin animating a 1920 × 1080 raster at the rate of 30 frames a second and you are up to 186,624,000 (6,220,800 × 30) bytes of data to be processed per second.
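The arithmetic in the preceding paragraph can be checked with a short Python sketch that keeps track of units. It assumes uncompressed RGB at 8 bits per channel (24 bits per pixel, which is where the roughly 16.7 million colors come from) and ignores alpha, audio, and file overhead; the function names are hypothetical.

```python
def bytes_per_frame(width: int, height: int, channels: int = 3, bits_per_channel: int = 8) -> int:
    """Size of one uncompressed frame in bytes."""
    return width * height * channels * bits_per_channel // 8

def bytes_per_second(width: int, height: int, fps: int = 30, **kwargs) -> int:
    """Raw data rate of uncompressed video in bytes per second."""
    return bytes_per_frame(width, height, **kwargs) * fps

colors = 2 ** (3 * 8)                  # 16,777,216 possible colors at 8 bits per channel
frame = bytes_per_frame(1920, 1080)    # 6,220,800 bytes per frame
rate = bytes_per_second(1920, 1080)    # 186,624,000 bytes per second at 30 fps
print(f"{colors:,} colors; {frame:,} bytes per frame; {rate / 1_000_000:.1f} MB per second")
```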

The encoding method you use affects how large the final video file ends up being. To give a sense of what this means in terms of file size, consider the foregoing 1920 × 1080 example rendered with Apple’s ProRes 422 codec, which uses 4:2:2 chroma subsampling and compression designed to preserve high image quality: it ends up being approximately 18.9 MB per second. Add higher bit depths, alpha channels, layer information, and so forth, and the amount of data per pixel increases even more.

While greater resolution correlates with greater detail in an overall image it also means more information. This translates to larger files. While 4K and 8K video are wonderful, the amount of data involved makes these high resolutions more technically demanding to work with and store. This large mass of data explains the long hours experienced waiting for a video to render into the proper display format.
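To put rough numbers on how quickly the data grows, the sketch below compares uncompressed 8-bit RGB data rates at 30 frames per second. It assumes the UHD rasters commonly sold as 4K (3840 × 2160) and 8K (7680 × 4320); DCI cinema 4K is slightly wider at 4096 × 2160. Real files are compressed, so treat these figures as the raw pixel data a codec starts from, not as actual file sizes.

```python
# Assumed resolutions: "4K" and "8K" here mean the UHD rasters.
RESOLUTIONS = {
    "HD": (1920, 1080),
    "4K UHD": (3840, 2160),
    "8K UHD": (7680, 4320),
}

def raw_mb_per_second(width: int, height: int, fps: int = 30, bytes_per_pixel: int = 3) -> float:
    """Uncompressed 8-bit RGB data rate in megabytes (10^6 bytes) per second."""
    return width * height * bytes_per_pixel * fps / 1_000_000

hd_pixels = 1920 * 1080
for name, (w, h) in RESOLUTIONS.items():
    print(f"{name:7} {w * h // hd_pixels:>2}x the pixels of HD, "
          f"~{raw_mb_per_second(w, h):,.0f} MB/s uncompressed at 30 fps")
```

Doubling both width and height quadruples the pixel count, so each step from HD to 4K to 8K multiplies the data by four.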

Pixels in Displays

Pixels come in a wide variety of shapes and sizes across the spectrum of digital video technologies. In displays, square pixels rule: almost all display devices dictate the use of square pixels. Non-square pixels are specific to certain video camera recording methods, and you need to convert them to square pixels for display. When working with digital video, always work with square pixels unless the camera or video capture device you are using demands otherwise. If you are creating content that is not camera-based, always choose square pixels in the creation software. Remember this when you first configure your compositions in software packages such as After Effects or Final Cut Pro.

It is best to use square pixels from the beginning rather than converting pixels afterward, as the conversion process alters your artwork. The elements that make up each of a physical display’s pixels are driven by the bytes of data recorded in a digital image file. If the shapes of the pixels in the display and the content match, the images will look as you intended.

For More Info 4.5 Pixels in Projectors

See “Calculating Pixel Size/PPI, and Approximate Perceived Pixel Size” in Chapter 5.

Pixelization

Pixelization is a term used to describe when jagged and stairstep-type shapes of individual pixels are visible in an image. Pixelization can be used as a stylistic and aesthetic choice but is often highly undesirable. It most commonly results from the use of heavy video compression techniques or the upscaling of low-resolution content into high-resolution rasters. In general, it is always a best practice to work in a high resolution. If you need a smaller resolution you can easily lower it, but you can’t raise it without risking pixelization.
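The stairsteps that appear when content is upscaled can be illustrated with the simplest scaling method, nearest-neighbor, which copies each source pixel into a block of identical pixels. Software usually offers smoother interpolation methods, such as bilinear or bicubic, which trade visible blocks for softness, but no method can recover detail that was never recorded. This is a toy sketch on a grid of numbers, not how any particular media server scales images.

```python
def upscale_nearest(image: list[list[int]], factor: int) -> list[list[int]]:
    """Enlarge a raster by an integer factor using nearest-neighbor sampling.

    No new detail is created: each original pixel becomes a factor x factor block.
    """
    return [
        [image[y // factor][x // factor] for x in range(len(image[0]) * factor)]
        for y in range(len(image) * factor)
    ]

small = [
    [0, 9],
    [9, 0],
]
for row in upscale_nearest(small, 3):
    print(" ".join(str(v) for v in row))
```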

Pixelization also commonly occurs when a projection display is configured so that its pixels are large enough for audience members to see. It can occur on any type of digital display but is surprisingly common in theatrical video projections.

Figure 4.20 Example of projected pixels (top) vs. LED computer monitor pixels (bottom)

Source: Alex Oliszewski and Pg8p at English Wikipedia

Depending on a show’s design aesthetics, you need to decide if this type of artifact is appropriate. Theatrical projections are used at large physical scales, and this often means that individual pixels in a projector’s raster end up being visible. You will certainly come across situations where the distinctly digital quality of visible pixels is undesirable and becomes a dramaturgical problem that needs to be addressed.

Figure 4.21 Example of upscaling content to the point of seeing pixelization. Lower left: 250 percent. Lower right: 750 percent. Image from There Is No Silence, a devised work by the MFA Acting Cohort and Jennifer Schlueter with Max Glenn. Conceived and directed by Jeanine Thompson. Digital media design by Alex Oliszewski. Image by Vita Berezina-Blackburn. 3D models by Sheri Larrimer and Jeremy Baker. Costume design by Natalie Cagle. Lighting design by Andy Baker. Scene design by Brad Steinmetz. (The Ohio State University, 2014)

Source: Matt Hazard

Raster vs. Vector

Raster and vector-based file formats are the two primary methods of creating digital images.

Raster-based graphics files are not infinitely scalable. Within a raster, you have only the information recorded per each pixel. Zooming out of a raster image removes pixel information as multiple pixels are combined into the same space. Zooming into an image requires the interpolation of new information to fill in pixels that didn’t exist. This leads to pixelization or distortions and degradations in image quality. Raster-based image formats include .bmp, .gif, .jpeg, and .png. All digital video formats are based on raster graphic image files.

Vector graphics rely on math and do not use a raster (or pixel-based) method to store or manipulate image data. Vectors rely on points, lines, and curves within a digital mathscape that is converted into a raster of pixels only when they are being displayed. This allows vector images to be infinitely scalable as all pixel data is rendered via math and not via pixel creation. Because the pixels are redrawn via math each time the scale changes, vector images do not become pixelated. Put another way, vector graphics always seem to have smooth lines along their edges no matter how far you zoom in or out.

Due to the versatility of scaling, vector graphics are commonly used in fonts, logos, and images that need to be scaled to many different, sometimes extreme, sizes. If you know you are going to change the scale of images in the media server, work with vectors. Vector-based image formats include .ai, .eps, .cdr, and .odg among others.
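The raster/vector distinction can be sketched in a few lines: below, a circle is stored only as a vector description (a center and a radius in relative units from 0 to 1) and is turned into pixels only when a raster size is chosen. Rendering at a larger size recomputes every pixel from the math instead of enlarging existing pixels. This toy rasterizer is illustrative and does not reflect how any specific vector format or application renders shapes.

```python
def rasterize_circle(size: int, cx: float = 0.5, cy: float = 0.5, r: float = 0.4) -> list[str]:
    """Render a circle described in relative units (0..1) onto a size x size grid.

    A pixel is filled ('#') if its center falls inside the circle.
    """
    rows = []
    for y in range(size):
        row = ""
        for x in range(size):
            px, py = (x + 0.5) / size, (y + 0.5) / size  # pixel center in relative units
            row += "#" if (px - cx) ** 2 + (py - cy) ** 2 <= r * r else "."
        rows.append(row)
    return rows

# The same vector description, rendered at two raster sizes.
for size in (8, 16):
    print("\n".join(rasterize_circle(size)), end="\n\n")
```

The 16 × 16 version has smoother edges not because anything was enlarged, but because the circle's math was evaluated at more points.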

CMYK and RGB Color, Bit Depth, and Alpha Channels

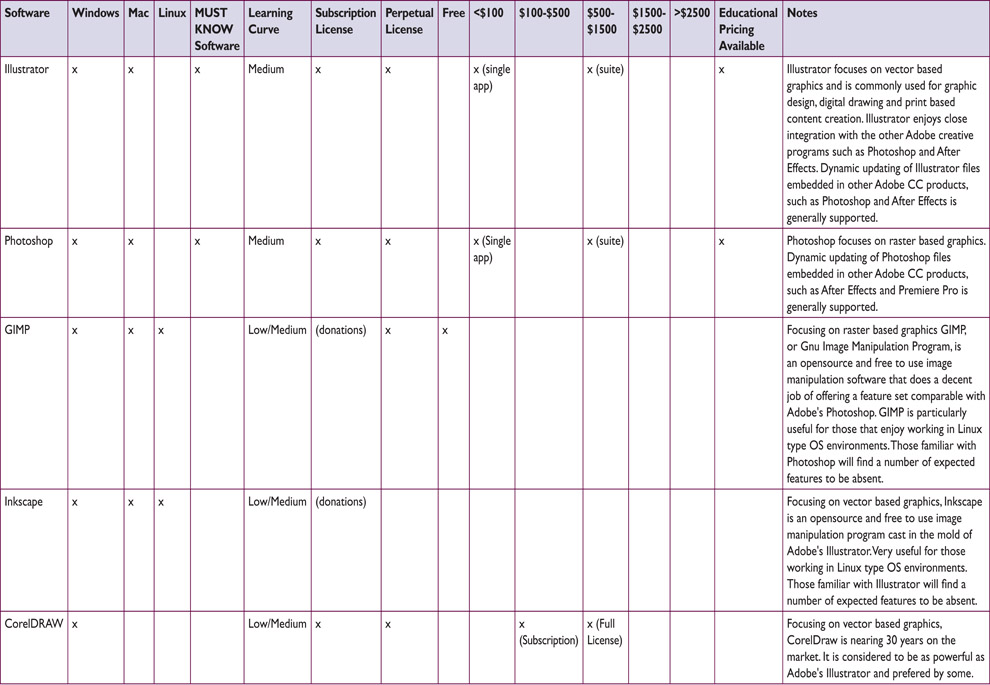

The two basic models of color are CMYK and RGB. Bit depth is the number of bits used to create color in a single pixel. Each bit can store one of two values, so every added bit doubles the number of possible values. The greater the bit depth, the greater the range of different possible colors per pixel.
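Bit depth is often quoted per channel (for example, 8-bit) or per pixel (for example, 24-bit for three 8-bit channels). Assuming three color channels and no alpha, the following sketch shows how quickly the number of possible colors grows with each added bit.

```python
def colors_per_pixel(bits_per_channel: int, channels: int = 3) -> int:
    """Distinct colors an RGB pixel can represent at a given bit depth per channel."""
    return 2 ** (bits_per_channel * channels)

for depth in (1, 8, 10):
    print(f"{depth}-bit per channel: {2 ** depth:,} levels per channel, "
          f"{colors_per_pixel(depth):,} colors per pixel")
```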

CMYK

Figure 4.23 Composite of different bit depths. Terra Tractus by Projects for a New Millennium. Digital media design by Daniel Fine and Matthew Ragan. Lighting by Jamie Burnett.

Source: Alex Oliszewski

In pigment-based color theory, used in printing and color mixing, there are three primary colors: cyan (blue), magenta (red), and yellow. These three colors are subtractive primaries and, when mixed together, theoretically form black (represented in CMYK by K, for “key”). The theory is that these three hues are the parents of all other colors. No other colors can be mixed together to create these three. However, these three primary colors can mix together to create three secondary colors:

- Orange (yellow + red)

- Green (blue + yellow)

- Purple or violet (red + blue)

A third level of six tertiary colors is created by mixing a primary and its nearest secondary color together:

- Yellow-orange (yellow + orange)

- Red-orange (red + orange)

- Red-violet (red + violet)

- Blue-violet (blue + violet)

- Blue-green (blue + green)

- Yellow-green (yellow + green)

These twelve colors can then be mixed together to create endless varieties of color.

Figure 4.25 Primary, secondary, and tertiary color wheel

Source: Mallory Maria Prucha

In print, actual black pigments are often used to reduce the amount of ink or other material needed and to produce a truer black or darker color than mixing physical pigments would otherwise allow.

RGB

Red, green and blue (RGB) are the three primary colors of light. In the light model, these three colors use an additive system and when mixed together create white. Black is created by the absence of light. Like the CMYK model, these three primary colors can be combined to create all other colors. The RGB model is used in lighting and digital imaging technologies, such as projectors, televisions, and cameras.
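Additive mixing can be modeled very simply by adding the red, green, and blue values of overlapping lights. The sketch below assumes 8-bit values and clips each channel at 255, which is a simplification of how overlapping projectors or lights actually combine on a surface.

```python
def mix_light(*colors: tuple[int, int, int]) -> tuple[int, ...]:
    """Additively mix 8-bit RGB lights, clipping each channel at full intensity (255)."""
    return tuple(min(255, sum(c[i] for c in colors)) for i in range(3))

RED, GREEN, BLUE = (255, 0, 0), (0, 255, 0), (0, 0, 255)
print(mix_light(RED, GREEN))        # (255, 255, 0): yellow
print(mix_light(GREEN, BLUE))       # (0, 255, 255): cyan
print(mix_light(RED, GREEN, BLUE))  # (255, 255, 255): white
print(mix_light())                  # (0, 0, 0): no light is black
```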

Hue, Saturation, and Value

There are a number of different properties of color. Three of the most important for a digital media designer to be aware of are hue, saturation, and value (HSV). Each of these three properties can typically be adjusted independently on its own scale.

Hue is the actual color. We all perceive color differently, so there is not an exact science to working with hues. You can think of different hues as having variations in tint, shade, and intensity. Hue is sometimes described in terms of temperature, with reds and oranges being warmer and blues and greens being cooler.

Saturation is the purity of a hue and is sometimes referred to as chroma. The more saturated a hue is, the richer it appears. As a color becomes desaturated it appears duller and less vibrant. At a saturation of 100 (full) a hue is completely pure. At a saturation of 0 a hue appears gray.

Value refers to the lightness or darkness of a hue and controls its intensity of light. At a value of 100 (full) a hue is at its brightest. As the value approaches 0 the hue becomes darker, until at 0 it appears black.
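Python’s standard `colorsys` module converts between RGB and HSV, which makes it easy to see saturation and value act independently of hue. Note that `colorsys` uses a 0 to 1 scale rather than 0 to 100, and that graphics applications may label these controls differently (HSB, for instance) or use related models such as HSL. The `adjust_hsv` helper is our own illustration.

```python
import colorsys

def adjust_hsv(rgb, saturation=None, value=None):
    """Convert an 8-bit RGB color to HSV, optionally replace S and/or V (0-100), convert back."""
    r, g, b = (c / 255 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)  # colorsys works on a 0..1 scale
    if saturation is not None:
        s = saturation / 100
    if value is not None:
        v = value / 100
    return tuple(round(c * 255) for c in colorsys.hsv_to_rgb(h, s, v))

burnt_orange = (200, 100, 0)
print(adjust_hsv(burnt_orange, saturation=0))  # same value, no saturation: a gray
print(adjust_hsv(burnt_orange, value=50))      # same hue and saturation, darker
print(adjust_hsv(burnt_orange, value=0))       # value 0: black
```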

RGB(A): Alpha Channels

In digital files, it is common to include what is called an alpha channel to allow for the layering of rasters into a composite image. Images and videos that include alpha channels are referred to as having transparency. Alpha channels record the opacity, or conversely the transparency, of each pixel. An alpha channel can be thought of as its own channel, like red, green, or blue, because of its similar role in defining how a pixel is rendered. In this way, a pixel on a layer above another raster can be turned on, turned off, or made partially transparent, controlling how much of the pixel from a lower layer is visible.
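The most common way an alpha value is used, often called the "over" operation, is a weighted mix of a foreground pixel and the pixel beneath it. This sketch assumes straight (not premultiplied) alpha, an opaque background, and channel values from 0 to 1; real compositing software and media servers add further options.

```python
def over(fg: tuple[float, float, float], alpha: float,
         bg: tuple[float, float, float]) -> tuple[float, ...]:
    """Composite a foreground pixel over an opaque background pixel.

    alpha = 1.0 is fully opaque (only the foreground shows);
    alpha = 0.0 is fully transparent (only the background shows).
    """
    return tuple(alpha * f + (1 - alpha) * b for f, b in zip(fg, bg))

white, blue = (1.0, 1.0, 1.0), (0.0, 0.0, 1.0)
print(over(white, 1.0, blue))  # (1.0, 1.0, 1.0): the foreground hides the background
print(over(white, 0.0, blue))  # (0.0, 0.0, 1.0): the background shows through
print(over(white, 0.5, blue))  # (0.5, 0.5, 1.0): a 50 percent blend
```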

Figure 4.27 Hue scale

Figure 4.28 Saturation and value scale

Source: Alex Oliszewski, based on the Munsell color system

Adobe Photoshop’s proprietary layered image file format (.psd) relies on the use of alpha channels to allow for the pixel-perfect blending of elements into composited images. Image file types that can include an alpha channel include:

- .tif/.tiff

- .png

The HAP Alpha codec is currently a gold standard for video with alpha channels. Working with alpha channels increases the computational complexity of an image and creates larger files that can have longer render times.

Digital Color Space

Color spaces have different bit depths and volumes of color. The colors available originate from a three- or four-dimensional mathematical color model, which establishes a coordinate system that defines every possible color that can be displayed within a given color space. This full range of any given color space is called a gamut.

Figure 4.29 RGB(A) image, with checkerboard background representing the transparent alpha channel and the same image composited with foreground and background assets

Source: Alex Oliszewski

In general, CMYK and sRGB (the s stands for standard and is commonly omitted) are the two most commonly used digital color spaces. The CMYK color space is used in the print industry to prepare artwork for printing. Because RGB is based on emitted light, it produces a larger color volume than CMYK. As a digital media designer working with RGB light technologies, you should work in an RGB color space. The CMYK color space has a more restricted gamut than RGB, and images converted from RGB to CMYK normally need some form of color correction to ensure that blacks remain black and colors don’t seem to flatten out.

The number of specialized RGB color spaces available is dauntingly large. The most popular are Adobe RGB, ColorMatch RGB, and ProPhoto RGB. These color spaces are widely used in digital photography in relation to various types of printing technologies and are rarely relevant to our work in the theatre. However, they deserve further research if you have not heard of them before.

If the color space of the display you are designing on is different from the one you are designing for, it may be hard to anticipate how content will actually be seen. Each file format and display device has its own idiosyncrasies when it comes to the gamut, or volume of colors, it is able to represent and display.

As a rule of thumb, you should be aware of the color space you are designing in and how it differs from the color space you will actually be displaying in. Figure 4.30 was created using Apple’s OS X ColorSync utility on a computer used to design projection content. In these images, ColorSync shows how the color space of the designer’s display monitor compares to the color space of a projector used to display content. Notice how, in all but a small section of blue, the video projector has a noticeably smaller color space. This means that any images the designer creates that use colors outside of the projector’s color space are compressed into the color volume of the projector. Compressing all that color information into the smaller projector volume generally causes the overall images to have reduced color contrast and potentially lose some detail and image quality. Depending on how color compression is applied, you may also encounter banding artifacts in gradients and areas of color that pass through the compressed color ranges.

We recommend using a utility such as ColorSync or otherwise researching the color space of the display and design devices you are working with in order to properly plan the image creation workflow. It is possible to reconfigure your design displays to represent any given projector or display device. However, it is difficult in the theatre to quantify everything required to simulate how color will be perceived by the audience. Knowing the theories behind the use and combination of color and how human perception automatically adjusts color to certain lighting situations is very useful.

Figure 4.30 3D visualization of RGB color space (gray form) in comparison to CMYK color space (colored form) as rendered by the Apple ColorSync application

Source: Alex Oliszewski

Chroma (Color) Subsampling, or 4:2:0 vs. 4:2:2 vs. 4:4:4

As noted earlier, digital media files can be quite large and computationally difficult to work with. Because human vision is less sensitive to fine detail in color than in brightness, engineers developed chroma subsampling, a method that produces decent-quality images while reducing the amount of color information needed to encode them, which in turn boosts computing performance. It allows each pixel in a raster to have its own luminance data but groups pixels together to share chrominance data. This sharing of chrominance across pixels reduces the computational difficulty of displaying large numbers of pixels in each frame of video.

Subsampling is notated as a three-part ratio (four-part if alpha is present), written J:a:b, that describes how many chrominance samples are stored within a conceptual sampling region J pixels wide and two rows high.

- J = total number of horizontal pixels in the sampling area, usually 4.

- a = number of chrominance samples (Cr, Cb) in the first row of J pixels.

- b = number of changes in chrominance samples (Cr, Cb) between the first and second rows of J pixels.

- If alpha is present, it is represented as a fourth number that is relative to J.

The most common levels of chroma sampling are described in order of low to high quality as:

- 4:2:0, every 2 × 2 block of 4 pixels shares the same chroma data.

- 4:2:2, every horizontal pair of pixels shares the same chroma data.

- 4:4:4, no chroma subsampling is used at all.
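To see what each ratio saves, the sketch below counts the samples stored in the conventional sampling region J pixels wide and two rows high. It assumes one luma sample per pixel, two chroma components (Cb and Cr), 8 bits per sample, and no alpha; it is an accounting exercise, not a codec.

```python
def samples_per_region(j: int, a: int, b: int) -> dict:
    """Count samples stored for a J x 2 pixel region under J:a:b chroma subsampling."""
    luma = j * 2           # every pixel keeps its own luma sample
    chroma = (a + b) * 2   # a samples in row 1, b new ones in row 2, times two components (Cb, Cr)
    return {"luma": luma, "chroma": chroma, "bytes_per_pixel": (luma + chroma) / (j * 2)}

for scheme in ((4, 4, 4), (4, 2, 2), (4, 2, 0)):
    s = samples_per_region(*scheme)
    print(f"{':'.join(map(str, scheme))}: {s['chroma']} chroma samples, "
          f"{s['bytes_per_pixel']} bytes per pixel at 8 bits per sample")
```

Under these assumptions, 4:2:2 stores two-thirds of the data of 4:4:4, and 4:2:0 stores half.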

Different video codecs use different levels of chroma subsampling. When choosing a video codec, pay attention to how much chroma subsampling it applies to your images. As a rule of thumb, try to work with content and sensors that are at least 4:2:2. 4:4:4 is prized when you apply chroma keying to digital content or need to maintain the highest levels of image quality.

Because we work with large-scale projections and displays in theatre, heavy chroma subsampling can lead to certain types of digital distortion. When groups of pixels act as a single homogeneous unit, they can make projected pixels stand out and create a pixelated effect.

Contrast and Dynamic Range

Contrast is the range between the darkest black (dark) and brightest white (light) in an image. Images with high contrast include elements across a full range of tones, from dark black shadows to bright white highlights. Low-contrast images have a small range between the brightest and darkest areas and appear flatter, with less depth, than a similar image with higher contrast.

Dynamic range is a way of describing the possible contrast levels on a camera or display and is defined by measuring the maximum and minimum values of the blacks and whites it is able to represent. When working with digital cameras, projectors, emissive displays, and so forth, you want to have the highest dynamic range possible in order to display the greatest variations in tone. Fine details and subtle textures in an image are quickly lost with reduced levels of dynamic range.
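Contrast and dynamic range are often expressed as a ratio between the brightest and darkest measurable levels, or in photographic stops, where each stop is a doubling of light. The sketch below shows both calculations; the luminance figures used are hypothetical, not measurements of any real device.

```python
import math

def contrast_ratio(max_luminance: float, min_luminance: float) -> float:
    """Ratio of the brightest to the darkest level (for example, in nits, cd/m^2)."""
    return max_luminance / min_luminance

def stops(max_luminance: float, min_luminance: float) -> float:
    """Dynamic range in photographic stops: each stop is a doubling of light."""
    return math.log2(contrast_ratio(max_luminance, min_luminance))

# Hypothetical measurements, for illustration only.
print(f"{contrast_ratio(100, 0.1):.0f}:1, {stops(100, 0.1):.1f} stops")
```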

Regardless of how large the dynamic range is on digital devices, it is bound to be restricted in some way due to technical limitations, and no display technology yet matches the range of contrast that human eyes are capable of seeing.

Both contrast and dynamic range are important aspects of all phases of digital design because every display and capture sensor has its own dynamic range. When you are projecting digital camera content you will deal with dynamic range in every step of the process. The camera recording the scene has a maximum dynamic range, and so do the editing software and the editing display. Once in the theatre, the projector has its own dynamic range. The way contrast and dynamic range are actually perceived in the projected image is also affected by the projection surface.

Compositing

Figure 4.31 Comparison of dynamic range. Left: low dynamic range. Right: high dynamic range.

Source: Alex Oliszewski

Compositing refers to combining different visuals together. Oftentimes compositing is used to create an illusion that different elements are all part of the same image. The most common example of this is when a subject shot against a greenscreen is composited into another image. Compositing can also refer to how different layers of images/video are blended together. Digital editing tools have different blend/composite modes to choose from to achieve desired results. Most media servers have a limited set of blend modes compared to video and image manipulation software packages, such as Photoshop or After Effects, but do allow for compositing of images and videos. By leveraging the media server’s ability to composite you can keep assets more flexible and save time throughout the production process.
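Blend modes are per-pixel formulas. As an illustration, the sketch below implements two widely used modes, multiply and screen, using their common textbook definitions on channel values from 0 to 1. Individual applications and media servers may differ in details such as gamma and alpha handling, so treat this as a sketch rather than any program’s exact implementation.

```python
def multiply(a: float, b: float) -> float:
    """Multiply blend: the result is never brighter than either layer (white is neutral)."""
    return a * b

def screen(a: float, b: float) -> float:
    """Screen blend: the result is never darker than either layer (black is neutral)."""
    return 1 - (1 - a) * (1 - b)

def blend_pixels(top, bottom, mode):
    """Apply a per-channel blend mode to two RGB pixels with values in 0..1."""
    return tuple(mode(t, b) for t, b in zip(top, bottom))

gray, orange = (0.5, 0.5, 0.5), (1.0, 0.5, 0.0)
print(blend_pixels(gray, orange, multiply))  # (0.5, 0.25, 0.0): darker
print(blend_pixels(gray, orange, screen))    # (1.0, 0.75, 0.5): lighter
```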

For More Info 4.6 Compositing

See “Render vs. Real Time” in Chapter 4.

Types of Content

Content creation covers a wide array of designs and styles, and it also encompasses the many different types of content that can be sourced, captured, scanned, developed, shot, keyframed, edited, coded, and rendered. This section breaks down content creation into the categories of still images, moving images (video), generative art, data, and interactive digital media as content. For the majority of shows you design, you may end up using some combination of these different types of content.

The categories are arranged in order of complexity, from less complex still images to more complex algorithmic, computer-generated images. This ordering is by no means definitive, as a still image may require hundreds of hours of labor, while a computer-generated animation may take just ten minutes to create by writing three lines of code. Content creation is a craft like many others: the more creativity, work, thought, and time you put into it, the better the end product becomes.

Custom Content

Most productions require custom content, specific to the production’s style and design approach. Custom content is any type of asset (photo, video, illustration, text, etc.) that you personally create, cocreate, or hire/commission a designer/artist to create. Nearly every aspect of content creation is a field in itself. As a digital media designer for theatre, when you create content you take on the roles of photographer, graphic designer, animator, film director, editor, and more. Each of these roles has a cadre of tools and techniques that take many years to master. But this isn’t a new concept; we’ve mentioned it before, and you’ve still kept reading. So congratulations: you are up for the challenge.

Some working designers specialize in creating one type of content, while others know many methods. It is best to be well versed in as many image and video creation techniques as you can. If you focus on one style or type of content, you may wait longer between jobs or you may work often, depending on the need for, or interest in, the type of content you specialize in.

Found Content

To find content that already exists, all you need is a simple Internet search with the correct keywords. A growing number of content creators freely share their work on the Internet for all to use under Creative Commons licensing. If you do some digging with Creative Commons search engines, you may find content that is free to use as long as you follow the guidelines posted by the original creators.

It is rare, however, to use found content as is. You almost always need to alter it in order to match the specific style of the show. Everything in the show should intentionally fit into the style of the production.

Stock Content

Most designers have a database of their own stock content that they have created and/or purchased the rights to use, which might include large banks of textures, particle effects, photographs, graphics, videos, environments, and so forth. A digital media designer uses this bank of content in the same way a sound designer might use a large database of prerecorded sounds to pull from when creating a sound design.

There are many websites that sell stock photography, graphics, and video footage ranging from very affordable to extremely expensive. If you are primarily relying on stock content you need to have a budget for purchasing, because rarely is high-quality footage free.

More often than not, stock footage is used when the script calls for historical and/or period content. It certainly can be a lot cheaper to purchase footage of the Great Wall of China than to fly to China for a video shoot. But this doesn’t mean you should be using the stock footage completely unchanged. Imagine sitting in a theatre watching a show that had only unaltered found and stock footage. How would you feel? Is this an artist at work or merely a good researcher who found a bunch of content? If the show requires a lot of stock photos and videos, consider applying filters and color treatments, altering the framing, and so forth. This helps make them all live in the same world and feel like they are part of a unified design.

If you are doing any kind of large professional work and/or touring work, the producers may require a chain of rights proving that you have the permission to use anything you did not make. When purchasing stock content, make sure to buy the correct clearance for your needs. Stock houses have different prices and clearances for educational vs. commercial work, and so forth. Some licenses are perpetual and others are for a single use. Keep a record of all the purchases, permissions, and clearances you obtain.

Still Images

We are all familiar with still images in terms of photographs, as we see them and even create them on a daily basis every time we take a selfie or a photo of our lunch. This section introduces the basics of still images in the categories of photography, graphic design, text, and collage. This knowledge expands upon the design and digital basics that you have already learned in previous sections.

Photography

Of the types of still images used in design, photography is one of the best ways to create content that provides a lot of information, such as context, mood, and period, in one single frame. The phrase “a picture is worth a thousand words” holds true onstage.

The history of photography tells a story of innovation and meaning-making that has evolved over time with different image-making technologies. Today’s photographic technology extends beyond what our naked eyes can see, revealing the depths of the oceans, the far reaches of space, and even the details of an atom. Each historic evolution in photography has left its mark on how we see and understand particular periods of time. By finding proper period photographs and making new images in the same style, you can create onstage the feeling of a specific moment in time.

Photographs don’t always have to convey realism. They can be abstract and used to great effect in creating mood or atmosphere. For example, a close-up of an ant struggling to walk on tree bark might be exactly the right abstraction to underscore the tension in a given scene.

The Ubiquity of the Photograph

Photography is one of the most prevalent art forms in use today, especially with the abundance of cell phone cameras. This means that the eyes of our audience are strongly attuned to pulling information from photographs. Since the first photographic images made their way to the public, people have been obsessed with them. This is fortunate, because photography’s popularity has left us with a vast array of resources and styles to draw from for our designs.

For More Info 4.7

See “Cameras” in Chapter 5.

The Basics of Photography and Still Images

Creating the right photo for a moment may require you to shoot the photograph yourself, thus making you a photographer. You also need to edit and manipulate that photo. The practice of making still images bleeds over into video creation in nearly every way, as video is just a series of still images played back in sequence. So, we begin with a foundation on the basics of photography and photographic still images.

Types of Shots/Framing

There are a number of shots that make up the foundation of photography practices. These shots all apply to video as well. They include the following:

- Wide/long shot
  - Camera is far away from subject (and/or wide-angle lens is used)
  - Establishes context
  - Emphasizes surroundings
- Medium shot
  - Typically of a person
  - From the waist up
  - Subject and setting typically take up the same amount of space in the frame
  - Leaves space for hand gestures
- Bust (medium close shot)
  - Typically of a person
  - From the chest up
- Close-up
  - Shows detail of subject
  - Face or detail of subject fills the entire frame
- Extreme close-up
  - Shows a fine detail of subject

Figure 4.33 Examples of types of shots

Source: Alex Oliszewski

Angle

Camera angles are created by where the camera is placed to take a shot. How the audience understands the subject is directly related to angle. There are a few basic angles you should know. They include:

- High angle (camera looking down)
  - Looks down at subject
  - Used to make the subject feel small in the environment
  - Used to indicate that the character in frame has less power
- Low angle (camera looking up)
  - Looks up at subject
  - Used to make the subject feel big
  - Used to indicate that the character in frame has more power
- Eye level (camera lens is level with the actor’s eyes)
  - Used to indicate the character has the same amount of power as the viewer
  - Can establish point of view
- Dutch/tilted angle (camera is tilted to left or right)
  - Used to give a sense that things are out of balance or to show tension

Lighting

Lighting can be available light (the sun, a streetlamp, etc.), created artificially by the photographer using lighting instruments, or a combination of both. The brightness, color, color temperature, and direction of the light all work together to affect the visual appearance of an image. The way in which light hits a subject creates highlights and shadows, which together form contrast. Lighting helps to create style and mood.

Usually, soft, diffused light is more flattering for human subjects. When you are using available light, shooting on an overcast day provides a softer, more even light since the sunlight is diffused by the clouds. A popular time to shoot is just after sunrise or just before sunset. This is known as the magic or golden hour, when the sun sits low in the sky and appears redder, creating longer, softer shadows.

For More Info 4.8 How to Adjust the Way a Camera Handles Light

See “Camera Basics” in Chapter 5. For tips regarding three-point lighting and lighting for video see “Video Lighting” in Chapter 4. See also “Lighting for Live Cameras” in Chapter 5.

Lighting plays an important role in digital media design, especially when projecting atmospheric images to convey time of day. For example, if it is supposed to be morning in a scene onstage, you need to make sure that the lighting in the images looks like morning light.

Sharpness

Sharpness refers to the clarity of detail along the edges of shapes and objects in an image. It is affected by many factors, including sensor size, lens type, and ISO. Perceived sharpness is also partly subjective and can vary from person to person.

Noise/Grain

Noise is random variation in information, such as color and brightness, that appears in an image but is not present in the actual subject that was photographed. It is introduced by the camera’s sensor during the capture process, usually when shooting at higher ISO settings or in low light. Film grain is similar to noise, but it comes from small, random particles in the film stock itself.

Noise and grain usually are not a desired effect when photographing. However, adding noise or grain to an image in postproduction is popular. It can be used to help mute an image or add a gritty realism and texture to otherwise clean footage.
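Editing packages implement grain in their own ways. As a minimal sketch of the basic idea, assuming a grayscale image stored as rows of 8-bit values (0 to 255), you can add a small random offset drawn from a Gaussian distribution to every pixel and clamp the result back into range. The `add_grain` function and its `strength` parameter are hypothetical, not any particular software’s filter.

```python
import random


def add_grain(pixels, strength=12.0, seed=None):
    """Return a copy of an 8-bit grayscale image with synthetic grain added.

    pixels: list of rows, each a list of ints in 0..255 (a hypothetical
    minimal image format used only for this sketch).
    strength: standard deviation of the Gaussian noise, in 8-bit code values.
    """
    rng = random.Random(seed)  # seeded so a look can be reproduced exactly
    grainy = []
    for row in pixels:
        new_row = []
        for value in row:
            noisy = value + rng.gauss(0.0, strength)
            # Clamp back into the legal 8-bit range and round to an integer.
            new_row.append(max(0, min(255, round(noisy))))
        grainy.append(new_row)
    return grainy


if __name__ == "__main__":
    flat_gray = [[128] * 8 for _ in range(4)]
    for row in add_grain(flat_gray, strength=10.0, seed=1):
        print(row)
```

Raising `strength` gives a grittier texture; a fixed `seed` makes the same grain pattern repeat every time the content is rendered.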

Moiré Patterns

Moiré patterns are not normally our friends. They most typically occur in digital media content when a physical grid or other repetitive texture or detail, such as a pinstripe suit, nearly lines up with the pixels in an image raster. When the spacing of the camera sensor’s pixels nearly matches the spacing of the pinstripes (or other detail), the two interfere and can produce a pixelated wavy pattern or a distorting rainbow-type effect. In video, this distortion is exacerbated and can also create a flickering, zebra-like effect.

By changing the zoom factor on your subject, you may be able to make these patterns less visible. Some picture and video editing software packages offer specific filters that are designed to mitigate moiré patterns in an image.
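To see why near-alignment matters, here is a simplified one-dimensional sketch. It assumes the camera samples a smooth (cosine) stripe pattern exactly once per pixel; real sensors are two-dimensional and add lens blur and color filtering, so treat it as an illustration only. The function names are hypothetical. When stripes repeat every 1.1 pixels, the samples drift in and out of step with the stripes and trace a slow beat roughly every 11 pixels, which is the wavy moiré band. Zooming in so each stripe spans more than two pixels removes the beat.

```python
import math


def apparent_period(stripe_period_px):
    """Period (in pixels) that a stripe pattern appears to have after being
    sampled once per pixel. Returns math.inf when the pattern looks flat."""
    f = 1.0 / stripe_period_px   # true stripe frequency, cycles per pixel
    alias = abs(f - round(f))    # frequency folded back by per-pixel sampling
    return math.inf if alias < 1e-12 else 1.0 / alias


def sample_stripes(stripe_period_px, width):
    """Brightness (0..1) of a cosine stripe pattern sampled at each pixel."""
    return [0.5 + 0.5 * math.cos(2 * math.pi * x / stripe_period_px)
            for x in range(width)]


if __name__ == "__main__":
    for period in (1.1, 1.3, 2.5, 4.0):   # larger period = zoomed in further
        print(f"stripes every {period} px -> pattern repeats every "
              f"{apparent_period(period):.2f} px")
```

For example, `apparent_period(1.1)` is about 11 pixels, while `apparent_period(4.0)` is simply 4, the true stripe spacing.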

This type of distortion also occurs when projecting on scrim or other surfaces that have grid-like structures on them that are similar in shape and size to the raster of pixels being cast on them. If you come across this image artifact in your work you can either attempt to change the size of the projections or throw the projectors slightly out of focus to mitigate a moiré pattern. Otherwise you need to recapture the images after eliminating the offending textures or use different capture settings.

Figure 4.37 Example of moiré effect created by the overlapping of two scrims

Source: Alex Oliszewski

Collage

In the traditional art world, a collage is a picture or image whose composition is created by assembling different real-world materials or artifacts. A collage can be a powerful way to convey a lot of meaning in a single image or to bring a certain type of energy to a moment. When using collage imagery onstage, be careful: the visual elements can easily become busy, and they still need to balance with the stage design and action.

Figure 4.38 Example of collage projected onto a set from Soot & Spit by Charles Mee. Directed by Kim Weild. Digital media design by Boyd Branch. Lighting design by Adam Vachon. Set design by Brunella Provvidente. Costume design by Haley Peterson. Arizona State University Mainstage, 2014.

Source: Boyd Branch

Moving Images

Moving images are a sequence of still images shown one after another. Our eyes and brain work together to interpret these sequences as continuous movement, an effect traditionally attributed to persistence of vision. When the still images are shown at a fast-enough rate, persistence of vision tricks our brain into perceiving the separate images as continuous motion. It is also what causes the trails of light we see when we close our eyes after looking at a bright light source.

This section looks at the basic process of creating moving images (digital video). A firm foundation in still image creation serves you well as you begin to make moving images. The elements that go into making a good still image are the building blocks to video. While there are a number of design considerations in creating video, there are also technical aspects to master.

Video Basics

Before you can master the art of making video, you have to understand the basic elements of video. Knowing the components of video ensures that the content you create is delivered, played back, and viewed by an audience the way you intend.

Analog vs. Digital

Moving images started out as an analog format in the late nineteenth century, with experimenters like Eadweard Muybridge photographing motion as sequences of still images. In the middle of the twentieth century the analog format continued with the invention of videotape. Analog video records moving images as continuous signals, carried by the shifting values of luma (brightness) and chrominance (color). These signals are stored as fluctuations in the magnetization of a magnetic layer affixed to a tape. Digital video, on the other hand, records imagery as discrete numbers. In 8-bit digital video, each value is a whole number between 0 and 255, so the darkest possible value would be recorded as 0 and the brightest as 255.
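A minimal sketch of that difference: a continuous brightness level (here assumed to run from 0.0 for black to 1.0 for white) is mapped to one of the 256 whole numbers available in 8 bits. It assumes full-range encoding; many broadcast formats reserve some codes at the top and bottom of the range, which this sketch ignores. The function names are hypothetical.

```python
def quantize_8bit(level):
    """Map a continuous brightness level (0.0 = black, 1.0 = white) to an
    8-bit code value from 0 to 255 (full-range encoding assumed)."""
    level = max(0.0, min(1.0, level))   # clip anything outside the legal range
    return round(level * 255)


def dequantize_8bit(code):
    """Map an 8-bit code value back to a level between 0.0 and 1.0."""
    return code / 255


if __name__ == "__main__":
    for level in (0.0, 0.25, 0.5, 1.0):
        print(level, "->", quantize_8bit(level))
```

Any analog level between two codes is rounded to the nearer one; that rounding is the price of turning a continuous signal into exact, copyable numbers.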

Two of the major differences between analog and digital video are degradation and storage. When you make a copy of analog video (for instance, a VHS copy of a VHS tape), the copy is degraded. It has slight variations in signal strength compared to the original, because the recording/copying process introduces fluctuations in the signals that distort the image. When digital video is copied, there is no physical signal to reproduce; what is copied is a set of numbers. There is no degradation because each number is copied exactly, so no noise is introduced to distort the image.
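The copying difference can be illustrated with a toy model (an assumption, not a physical simulation of tape): each analog dub adds a little random error to every sample, so errors accumulate generation after generation, while a digital copy transfers the same integers and stays identical to the original. The function names and the Gaussian noise model are hypothetical.

```python
import random


def analog_copy(signal, rng, noise=0.01):
    """Toy analog dub: each sample picks up a small random error (assumed
    Gaussian), so the copy drifts slightly away from its source."""
    return [s + rng.gauss(0.0, noise) for s in signal]


def digital_copy(codes):
    """A digital copy transfers the same integers, so every value is exact."""
    return list(codes)


def max_error(a, b):
    """Largest absolute difference between two equal-length sequences."""
    return max(abs(x - y) for x, y in zip(a, b))


if __name__ == "__main__":
    rng = random.Random(42)                 # fixed seed for a repeatable run
    original = [i / 10 for i in range(11)]  # a simple brightness ramp, 0.0 to 1.0
    codes = [round(v * 255) for v in original]
    analog, digital = original, codes
    for generation in range(1, 6):
        analog = analog_copy(analog, rng)
        digital = digital_copy(digital)
        print(f"generation {generation}: analog error {max_error(original, analog):.4f}, "
              f"digital error {max_error(codes, digital)}")
```

The analog error tends to grow with each generation, while the digital error stays at zero no matter how many copies are made.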

Figure 4.39 Comparison between interlaced and progressive scanning

Source: Alex Oliszewski and Kien Hoang

Analog video has a particular quality of image, movement, resolution, degradation, and audio. This is also true of each of the different types of digital video. Knowing how to create content that looks and feels like a particular type of analog or digital video is a skill you can learn and apply as needed.

Interlaced/Progressive

Interlaced scan (indicated with an “i,” e.g., 60i) and progressive scan (indicated with a “p,” e.g., 24p) refer to methods for capturing, displaying, and transmitting video. Interlaced scanning divides each frame of video into two separate fields and draws the image in two steps or passes. The first pass scans all the odd-numbered horizontal lines, and the second pass scans all the even-numbered horizontal lines. In 60i video, the second pass is drawn 1/60th of a second after the first. This interval depends on the field rate; in 50i video, for instance, the second field is drawn 1/50th of a second after the first.
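The field structure can be sketched in a few lines, assuming a frame is represented as a list of scan lines from top to bottom. `split_fields` separates the odd-numbered lines (first pass) from the even-numbered lines (second pass), `weave` reassembles them, and `field_interval` gives the delay between the two passes. All three names are hypothetical.

```python
def split_fields(frame):
    """Split a frame (a list of scan lines, top to bottom) into two fields.

    Lines are numbered from 1, so the odd field holds lines 1, 3, 5, ...
    (list indices 0, 2, 4, ...) and the even field holds lines 2, 4, 6, ...
    """
    return frame[0::2], frame[1::2]


def weave(odd_field, even_field):
    """Interleave two fields back into a full frame."""
    frame = []
    for i in range(max(len(odd_field), len(even_field))):
        if i < len(odd_field):
            frame.append(odd_field[i])
        if i < len(even_field):
            frame.append(even_field[i])
    return frame


def field_interval(fields_per_second):
    """Seconds between the first and second field of an interlaced frame."""
    return 1 / fields_per_second


if __name__ == "__main__":
    frame = ["line 1", "line 2", "line 3", "line 4", "line 5", "line 6"]
    odd, even = split_fields(frame)
    print(odd)    # lines 1, 3, 5: drawn on the first pass
    print(even)   # lines 2, 4, 6: drawn on the second pass
    print(field_interval(60), field_interval(50))
```

Because the two fields are captured at slightly different moments, fast motion can show comb-like edges when the fields are woven back together, one reason progressive formats are usually preferred for projection content.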

In progressive scan video, all the lines of a frame are drawn in a single pass, in sequential order, starting with the first horizontal line at the top and ending with the last line at the bottom.

Frame Rates and Standards

Frame rate refers to the frequency at which consecutive frames of video (or film) are recorded and displayed. Frame rate is also referred to as frames per second (FPS). Typically, the greater the frame rate, the smoother and more realistic action looks when played back. When playing back video in a media server you should target 30–60 FPS for smooth playback. Once you dip below 30 FPS you may start to notice choppiness/staggering in the video. For fast-moving, real-time, generated animations it is common to aim for 60–90 FPS. Slow motion effects often rely on 120 FPS or higher.
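Frame rate also sets a time budget: at a given FPS each frame is on screen for 1000/FPS milliseconds, which is how long a real-time system has to produce the next one. Slow motion comes from capturing at a high frame rate and playing back at a lower one. A small sketch of both calculations, with hypothetical function names:

```python
def frame_time_ms(fps):
    """How long each frame stays on screen, in milliseconds."""
    return 1000 / fps


def slow_motion_factor(capture_fps, playback_fps):
    """How many times slower motion appears when footage captured at
    capture_fps is played back at playback_fps."""
    return capture_fps / playback_fps


if __name__ == "__main__":
    for fps in (24, 30, 60, 90, 120):
        print(f"{fps:>3} FPS -> {frame_time_ms(fps):.2f} ms per frame")
    print(slow_motion_factor(120, 30))   # 4.0: action plays at quarter speed
```

At 60 FPS each frame lasts about 16.7 ms; footage shot at 120 FPS and played back at 30 FPS runs four times slower than real time.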

NTSC (National Television System Committee) and PAL (Phase Alternating Line) are analog color encoding systems developed as television standards. NTSC is primarily used in the United States. In digital video, you shouldn’t have to worry about NTSC or PAL because these standards are being phased out and replaced with digital standards. However, digital standards and frame rates are based on the legacy of NTSC and PAL systems, so it is good to have an understanding of what they mean.

NTSC is in use by countries that have an electrical frequency of 60 Hz, with a corresponding frame rate of 60i or 30p and 480 lines of resolution (720 × 480). PAL, on the other hand, is used by countries that have an electrical frequency of 50 Hz, with a corresponding frame rate of 50i or 25p and 576 lines of resolution (720 × 576).
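The nominal rates above are rounded. Color NTSC actually runs at 30000/1001 frames per second (about 29.97p, or 59.94i), a legacy that survives in digital frame rates today, while PAL runs at exactly 25p/50i. The sketch below stores the figures from this section as exact fractions; the `STANDARDS` table and `field_rate` function are hypothetical helpers for illustration.

```python
from fractions import Fraction

# Legacy standard-definition figures from this section, with NTSC's frame
# rate stored as its exact color value (30000/1001) rather than the rounded 30.
STANDARDS = {
    "NTSC": {"mains_hz": 60, "frame_rate": Fraction(30000, 1001), "size": (720, 480)},
    "PAL": {"mains_hz": 50, "frame_rate": Fraction(25), "size": (720, 576)},
}


def field_rate(standard):
    """Interlaced field rate: two fields per frame."""
    return 2 * STANDARDS[standard]["frame_rate"]


if __name__ == "__main__":
    for name, spec in STANDARDS.items():
        w, h = spec["size"]
        print(f"{name}: {w} x {h}, {float(spec['frame_rate']):.3f}p, "
              f"{float(field_rate(name)):.2f}i, {spec['mains_hz']} Hz mains")
```

Keeping the rate as a fraction avoids the small rounding drift that would build up over a long show if 29.97 were treated as exactly 30.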