CHAPTER SIX

HOW TO AVOID COMMODITIZATION

What causes commoditization? Is it the inevitable end-state of all companies in competitive markets? Can companies take action at any point in their development that can arrest its onset? Once the tide of commoditization has swept through an industry, can the flow reverse back toward proprietary, differentiated, profitable products? How can I respond to this?

Many executives have resigned themselves to the belief that, no matter how miraculous their innovations, their inevitable fate is to be “commoditized.” These fears are grounded in painful experience. Here’s a frightening example: The first one-gigabyte 3.5-inch disk drives were introduced to the world in 1992 at prices that enabled their manufacturers to earn 60 percent gross margins. These days, disk drive companies are struggling to eke out 15 percent margins on drives that are sixty times better. This isn’t fair, because these things are mechanical and microelectronic marvels. How many of us could mechanically position the head so that it stored and retrieved data in circular tracks that are only 0.00008 inch apart on the surface of disks, without ever reading data off the wrong track? And yet disk drives of this genre are regarded today as undifferentiable commodities. If products this precise and complicated can be commoditized, is there any hope for the rest of us?

It turns out that there is hope. One of the most exciting insights from our research about commoditization is that whenever it is at work somewhere in a value chain, a reciprocal process of de-commoditization is at work somewhere else in the value chain.1 And whereas commoditization destroys a company’s ability to capture profits by undermining differentiability, de-commoditization affords opportunities to create and capture potentially enormous wealth. The reciprocality of these processes means that the locus of the ability to differentiate shifts continuously in a value chain as new waves of disruption wash over an industry. As this happens, companies that position themselves at a spot in the value chain where performance is not yet good enough will capture the profit.

Our purpose in this chapter is to help managers understand how these processes of commoditization and de-commoditization work, so that they can detect when and where they are beginning to happen. We hope that this understanding can help those who are building growth businesses to do so in a place in the value chain where the forces of de-commoditization are at work. We also hope it helps those who are running established businesses to reposition their firms in the value chain to catch these waves of de-commoditization as well. To return to Wayne Gretzky’s insight about great hockey playing, we want to help managers develop the intuition for skating not to where the money presently is in the value chain, but to where the money will be.2

The Processes of Commoditization and De-commoditization

The process that transforms a profitable, differentiated, proprietary product into a commodity is the process of overshooting and modularization we described in chapter 5. At the leftmost side of the disruption diagram, the companies that are most successful are integrated companies that design and assemble the not-good-enough end-use products. They make attractive profits for two reasons. First, the interdependent, proprietary architecture of their products makes differentiation straightforward. Second, the high ratio of fixed to variable costs that often is inherent in the design and manufacture of architecturally interdependent products creates steep economies of scale that give larger competitors strong cost advantages and create formidable entry barriers against new competitors.

This is why, for example, IBM, as the most integrated competitor in the mainframe computer industry, held a 70 percent market share but made 95 percent of the industry’s profits: It had proprietary products, strong cost advantages, and high entry barriers. For the same reasons, from the 1950s through the 1970s, General Motors, with about 55 percent of the U.S. automobile market, garnered 80 percent of the industry’s profits. Most of the firms that were suppliers to IBM and General Motors, in contrast, had to make do with subsistence profits year after year. These firms’ experiences are typical. Making highly differentiable products with strong cost advantages is a license to print money, and lots of it.3

We must emphasize that the reason many companies don’t reach this nirvana or remain there for long is that it is the not-good-enough circumstance that enables managers to offer products with proprietary architectures that can be made with strong cost advantages versus competitors. When that circumstance changes—when the dominant, profitable companies overshoot what their mainstream customers can use—then this game can no longer be played, and the tables begin to turn. Customers will not pay still-higher prices for products they already deem too good. Before long, modularity rules, and commoditization sets in. When the relevant dimensions of your product’s performance are determined not by you but by the subsystems that you procure from your suppliers, it becomes difficult to earn anything more than subsistence returns in a product category that used to make a lot of money. When your world becomes modular, you’ll need to look elsewhere in the value chain to make any serious money.

The natural and inescapable process of commoditization occurs in six steps:

- As a new market coalesces, a company develops a proprietary product that, while not good enough, comes closer to satisfying customers’ needs than any of its competitors. It does this through a proprietary architecture, and earns attractive profit margins.

- As the company strives to keep ahead of its direct competitors, it eventually overshoots the functionality and reliability that customers in lower tiers of the market can utilize.

- This precipitates a change in the basis of competition in those tiers, which . . .

- . . . precipitates an evolution toward modular architectures, which . . .

- . . . facilitates the dis-integration of the industry, which in turn . . .

- . . . makes it very difficult to differentiate the performance or costs of the product versus those of competitors, who have access to the same components and assemble according to the same standards. This condition begins at the bottom of the market, where functional overshoot occurs first, and then moves up inexorably to affect the higher tiers.

Note that it is overshooting—the more-than-good-enough circumstance—that connects disruption and the phenomenon of commoditization. Disruption and commoditization can be seen as two sides of the same coin. A company that finds itself in a more-than-good-enough circumstance simply can’t win: Either disruption will steal its markets, or commoditization will steal its profits. Most incumbents eventually end up the victim of both, because, although the pace of commoditization varies by industry, it is inevitable, and nimble new entrants rarely miss an opportunity to exploit a disruptive foothold.

There can still be prosperity around the corner, however. The attractive profits of the future are often to be earned elsewhere in the value chain, in different stages or layers of added value. That’s because the process of commoditization initiates a reciprocal process of de-commoditization. Ironically, this de-commoditization—with the attendant ability to earn lots of money—occurs in places in the value chain where attractive profits were hard to attain in the past: in the formerly modular and undifferentiable processes, components, or subsystems.4

To visualize the reciprocal process, remember the steel minimills from chapter 2. As long as the minimills were competing against integrated mills in the rebar market, they made a lot of money because they had a 20 percent cost advantage relative to the integrated mills. But as soon as they drove the last high-cost competitor out of the rebar market, the low-cost minimills found themselves slugging it out against equally low-cost minimills in a commodity market, and competition among them caused pricing to collapse. The assemblers of modular products generally receive the same reward for victory as the minimills did whenever they succeed in driving the higher-cost competitors and their proprietary architectures out of a tier in their market: The victorious disruptors are left to slug it out against equally low-cost disruptors who are assembling modular components procured from a common supplier base. Lacking any basis for competitive differentiation, only subsistence levels of profit remain. A low-cost strategy works only as long as there are higher-cost competitors left in the market.5

The only way that modular disruptors can keep profits healthy is to carry their low-cost business models up-market as fast as possible so that they can keep competing at the margin against higher-cost makers of proprietary products. Assemblers of modular products do this by finding the best performance-defining components and subsystems and incorporating them in their products faster than anyone else.6 The assemblers need the very best performance-defining components in order to race up-market where they can make money again. Their demand for improvements in performance-defining components, as a result, throws the suppliers of those components back to the not-good-enough side of the disruption diagram.

Competitive forces consequently compel suppliers of these performance-defining components to create architectures that, within the subsystems, are increasingly interdependent and proprietary. Hence, the performance-defining subsystems become de-commoditized as the result of the end-use products becoming modular and commoditized.

Let us summarize the steps in this reciprocal process of de-commoditization:

- The low-cost strategy of modular product assemblers is only viable as long as they are competing against higher-cost opponents. This means that as soon as they drive the high-cost suppliers of proprietary products out of a tier of the market, they must move up-market to take them on again in order to continue to earn attractive profits.

- Because the mechanisms that constrain or determine how rapidly they can move up-market are the performance-defining subsystems, these elements become not good enough and are flipped to the left side of the disruption diagram.

- Competition among subsystem suppliers causes their engineers to devise designs that are increasingly proprietary and interdependent. They must do this as they strive to enable their customers to deliver better performance in their end-use products than the customers could if they used competitors’ subsystems.

- The leading providers of these subsystems therefore find themselves selling differentiated, proprietary products with attractive profitability.

- This creation of a profitable, proprietary product is the beginning, of course, of the next cycle of commoditization and de-commoditization.

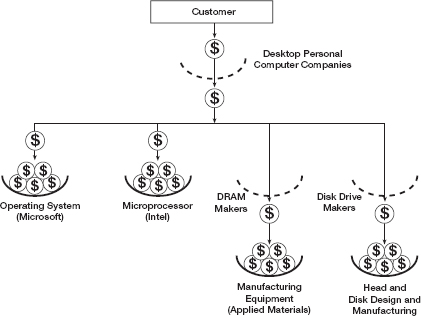

Figure 6-1 illustrates more generally how this worked in the product value chain of the personal computer industry in the 1990s. Starting at the top of the diagram, money flowed from the customer to the companies that designed and assembled computers; as the decade progressed, however, less and less of the total potential profit stayed with the computer makers—most of it flowed right through these companies to their suppliers.7

As a result, quite a bit of the money that the assemblers got from their customers flowed over to Microsoft and lodged there. Another chunk flowed to Intel and stopped there. Money also flowed to the makers of dynamic random access memory (DRAM), such as Samsung and Micron, but not much of it stopped at those stages in the value chain in the form of profit. It flowed through and accumulated instead at firms like Applied Materials, which supplied the manufacturing equipment that the DRAM makers used. Similarly, money flowed right through the assemblers of modular disk drives, such as Maxtor and Quantum, and tended to lodge at the stage of value added where heads and disks were made.

FIGURE 6-1

Where the Money Was Made in the PC Industry’s Product Value Chain

What is different about the baskets in the diagram that held money, versus those through which the money seemed to leak? The tight baskets in which profit accumulated for most of this period were products that were not yet good enough for what their immediate customers in the value chain needed. The architectures of those products therefore tended to be interdependent and proprietary. Firms in the leaky-basket situation could only hang onto subsistence profits because the functionality of their products tended to be more than good enough. Their architectures therefore were modular.

If a company supplies a performance-defining but not-yet-good-enough input for its customers’ products or processes, it has the power to capture attractive profit. Consider the DRAM industry as an example. While the architecture of their own chips was modular, DRAM makers could not be satisfied even with the very best manufacturing equipment available. In order to succeed, DRAM makers needed to make their products at ever-higher yields and ever-lower costs. This rendered the functionality of equipment made by firms such as Applied Materials not good enough. The architecture of this equipment became interdependent and proprietary as a consequence, as the equipment makers strove to inch closer to the functionality that their customers needed.

It is important never to conclude that an industry such as disk drives or DRAMs is inherently unprofitable, whereas others such as microprocessors or semiconductor manufacturing equipment are inherently profitable. “Industry” is usually a faulty categorization scheme.8 What makes an industry appear to be attractively profitable is the circumstance in which its companies happen to be at a particular point in time, at each point in the value-added chain, because the law of conservation of attractive profits is almost always at work (see the appendix to this chapter). Let’s take a deeper look at the disk drive industry to see why this is so.

For most of the 1990s, in the market tiers where disk drives were sold to makers of desktop personal computers, the capacity and access times of the drives were more than adequate. The drives’ architectures consequently became modular, and the gross margins that the nonintegrated assemblers of 3.5-inch drives could eke out in the desktop PC segment declined to around 12 percent. Nonintegrated disk drive assemblers such as Maxtor and Quantum dominated this market (their collective market share exceeded 90 percent) because integrated manufacturers such as IBM could not survive on such razor-thin margins.

The drives had adequate capacity, but the assemblers could not be satisfied even with the very best heads and disks available, because if they maximized the amount of data they could store per square inch of disk space, they could use fewer disks and heads in the drives—which was a powerful driver of cost. The heads and disks, consequently, became not good enough and evolved toward complex, interdependent subassemblies. Head and disk manufacturing became so profitable, in fact, that many major drive makers integrated backward into making their own heads and disks.9

But it wasn’t the disk drive industry that was marginally profitable—it was the modular circumstance in which the 3.5-inch drive makers found themselves. The evidence: The much smaller 2.5-inch disk drives used in notebook computers tended not to have enough capacity during this same era. True to form, their architectures were interdependent, and the products had to be made by integrated companies. As the most integrated manufacturer and the one with the most advanced head and disk technology in the 1990s, IBM made 40 percent gross margins in 2.5-inch drives and controlled 80 percent of that market. In contrast, IBM had less than 3 percent of the unit volume in drives sold to the desktop PC market, where its integration rendered it uncompetitive.10

At the time we first published our analysis of this situation in 1999, it appeared that the capacity of 2.5-inch disk drives was becoming more than good enough in the notebook computer application as well, presaging the onset of commoditization in what had been a beautiful business for IBM.11 We asserted that IBM, as the most integrated drive maker, actually was in a very attractive position if it played its cards right. It could skate to where the money would be by using the advent of modularity to decouple its head and disk operations from its disk drive design and assembly business. If IBM began to sell its most advanced heads and disks to competing 2.5-inch disk drive makers, aggressively putting them into the business of assembling modular 2.5-inch drives, it could eventually de-emphasize the assembly of drives and focus on the more profitable head and disk components. In so doing, IBM could continue to enjoy the most attractive levels of profit in the industry. In other words, on the not-good-enough side of the disruption diagram, IBM could fight in the war and win. On the more-than-good-enough side, a better strategy is to sell bullets to the combatants.12

IBM had made similar moves several years earlier in its computer business, through its decisions to decouple its vertical chain and to sell its technology, components, and subsystems aggressively in the open market. Simultaneously it created a consulting and systems integration business in the high end and moved to de-emphasize the design and assembly of computers. By skating to those points in the value-added chain where complex, nonstandard integration needed to occur, IBM achieved a remarkable, and remarkably profitable, transformation of a huge company in the 1990s.

The bedrock principle bears repeating: The companies that are positioned at a spot in a value chain where performance is not yet good enough will capture the profit. That is the circumstance where differentiable products, scale-based cost advantages, and high entry barriers can be created.

To the extent that an integrated company such as IBM can flexibly couple and decouple its operations, rather than irrevocably sell off operations, it has greater potential to thrive profitably for an extended period than does a nonintegrated firm such as Compaq. This is because the processes of commoditization and de-commoditization are continuously at work, causing the place where the money will be to shift across the value chain over time.

Core Competence and the ROA-Maximizing Death Spiral

Firms that are being commoditized often ignore the reciprocal process of de-commoditization that occurs simultaneously with commoditization, either a layer down in subsystems or next door in adjacent processes. They miss the opportunity to move where the money will be in the future, and get squeezed—or even killed—as different firms catch the growth made possible by de-commoditization. In fact, powerful but perverse investor pressure to increase returns on assets (ROA) creates strong incentives for assemblers to skate away from where the money will be. And having failed to recognize their modular, commoditized circumstance, the firms turn to attribute-based core competence theory to make decisions they may later regret.

How can firms that assemble modular products meet investors’ demands that they improve their return on assets or capital employed? They cannot improve the numerator of the ROA ratio because differentiating their product or producing it at lower costs than competitors is nearly impossible. Their only option is to shrink the denominator of the ROA ratio by getting rid of assets. This would be difficult in an interdependent world that demanded integration, but the modular architecture of the product actually facilitates dis-integration. We will illustrate how this happens using a disguised example of the interactions between a component supplier and an assembler of modular personal computers. We’ll call the two firms Components Corporation and Texas Computer Corporation (TCC), respectively.

Components Corporation begins by supplying simple circuit boards to TCC. As TCC wrestles with investor pressure to get its ROA up, Components Corp. comes up with an interesting proposition: “We’ve done a good job making these little boards for you. Let us supply the whole motherboard for your computers. We can easily beat your internal costs.”

“Gosh, that would be a great idea,” TCC’s management responds. “Circuit board fabrication isn’t our core competence anyway, and it is very asset intensive. This would reduce our costs and get all those assets off our balance sheet.” So Components Corp. takes on the additional value-added activity. Its revenues increase smartly, and its profitability improves because it is utilizing its manufacturing assets better. Its stock price improves accordingly. As TCC sheds those assets, its revenue line is unaffected. But its bottom line and its return on assets improve—and its stock price improves accordingly.

A short time later Components Corp. approaches TCC’s management again. “You know, the motherboard really is the guts of the computer. Let us assemble the whole computer for you. Assembling those products really isn’t your core competency, anyway, and we can easily beat your internal costs.”

“Gosh, that would be a great idea,” TCC’s management responds. “Assembly isn’t our core competence anyway, and if you did our product assembly, we could get all those manufacturing assets off our balance sheet.” Again, as Components Corp. takes on the additional value-added activity, its revenues increase smartly and its profitability improves because it is utilizing its manufacturing assets better. Its stock price improves accordingly. And as TCC sheds its manufacturing assets, its revenue line is unaffected. But its bottom line and its return on assets improve—and its stock price improves accordingly.

A short time later Components Corp. approaches TCC’s management again. “You know, as long as we’re assembling your computers, why do you need to deal with all the hassles of managing the inbound logistics of components and the outbound logistics of customer shipments? Let us deal with your suppliers and deliver the finished products to your customers. Supply chain management really isn’t your core competence, anyway, and we can easily beat your internal costs.”

“Gosh, that would be a great idea,” TCC’s management responds. “This would help us get those current assets off our balance sheet.” As Components Corp. takes on the additional value-added activity, its revenues increase smartly and its profitability improves because it is pulling higher value-added activities into its business model. Its stock price improves accordingly. And as TCC sheds its current assets, its revenue line is unaffected. But its profitability improves—and its stock price gets another bounce.

A short time later Components Corp. approaches TCC’s management again. “You know, as long as we’re dealing with your suppliers, how about you just let us design those computers for you? The design of modular products is little more than vendor selection anyway, and since we have closer relationships with vendors than you do, we could get better pricing and delivery if we can work with them from the beginning of the design cycle.”

“Gosh, that would be a great idea,” TCC’s management responds. “This would help us cut fixed and variable costs. Besides, our strength really is in our brand and our customer relationships, not in product design.” As Components Corp. takes on the additional value-added activity, its revenues increase further and its profitability improves because it is pulling higher value-added activities into its business model. Its stock price improves accordingly. And as TCC sheds cost, its revenue line is unaffected. But its profitability improves—and its stock price gets another nice little pop—until the analysts realize that the game is over.

Ironically, in this Greek tragedy Components Corp. ends up with a value chain that is actually more highly integrated than TCC’s was when this spiral began, but often with the pieces reconfigured to allow Components Corp. to deliver against the new basis of competition, which is speed to market and the ability to responsively configure what is delivered to customers in ever-smaller segments of the market. Each time TCC off-loaded assets and processes to Components Corp., it justified its decision in terms of its own “core competence.” It did not occur to TCC’s management that the activities in question weren’t Components Corporation’s core competencies, either. Whether or not something is a core competence is not the determining factor of who can skate to where the money will be.
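The arithmetic that makes each of TCC’s decisions feel good can be sketched in a few lines of Python. Every figure below is invented purely for illustration and does not come from the disguised case; the `Firm` class, the `outsource` helper, and the amounts of assets shed and costs saved at each step are all hypothetical. The sketch assumes the simplest definition of ROA, profit divided by total assets, and assumes that revenue is untouched by each hand-off, as in the story.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Firm:
    """A hypothetical assembler; all figures are in arbitrary money units."""
    revenue: float
    profit: float
    assets: float

    @property
    def roa(self) -> float:
        # Return on assets: profit (numerator) over assets (denominator).
        return self.profit / self.assets


def outsource(firm: Firm, assets_shed: float, cost_saving: float) -> Firm:
    """Hand one activity to a supplier: revenue is unchanged, assets shrink,
    and profit rises by whatever the supplier saves the firm (assumed)."""
    return Firm(
        revenue=firm.revenue,
        profit=firm.profit + cost_saving,
        assets=firm.assets - assets_shed,
    )


# Illustrative starting point for the assembler (assumed numbers).
tcc = Firm(revenue=1000.0, profit=50.0, assets=500.0)

# (activity handed over, assets shed, cost saved) -- all assumed numbers.
steps = [
    ("motherboards", 100.0, 5.0),
    ("product assembly", 150.0, 5.0),
    ("supply chain management", 100.0, 5.0),
    ("product design", 50.0, 5.0),
]

history = [tcc]
print(f"{'start':<26} revenue={tcc.revenue:7.0f}  ROA={tcc.roa:6.1%}")
for activity, shed, saving in steps:
    tcc = outsource(tcc, shed, saving)
    history.append(tcc)
    print(f"{activity:<26} revenue={tcc.revenue:7.0f}  ROA={tcc.roa:6.1%}")
```

Under these assumed numbers, revenue never moves while ROA climbs at every step, almost entirely because the denominator shrinks. Nothing in the ratio records that the activities leaving the balance sheet are the ones the supplier is using to build its next business.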

This story illustrates another instance of asymmetric motivations: the component supplier is motivated to integrate forward into the very pieces of value-added activity that the modular assembler is motivated to get out of. It is not a story of incompetence. It is a story of perfectly rational, profit-maximizing decisions, which is why the ROA-maximizing death spiral traps many companies that find themselves assembling modular products in a too-good world. At the same time, it offers another avenue for creating new-growth businesses, in addition to the disruptive opportunities described in chapter 2. The assembler rids itself of assets, but it retains its revenues and often temporarily improves its bottom-line profit margins when it decides to outsource its back-end operations to contract suppliers. It feels good. When the supplier takes on the same pieces of business that the assembler was motivated to get out of, it also feels good, because it increases the back-end supplier’s revenues, profits, and stock price. For many suppliers, eating their way up the value chain creates opportunities to design subsystems with increasingly optimized internal architectures that become key performance drivers of the modular products that their customers assemble.

This is how Intel became a vendor of chipsets and motherboards, which constitute a much more critical proportion of a computer’s added value and performance than did the bare microprocessor. Nypro, Inc., a custom injection molder of precision plastic components whose history we will examine later in this book, has followed a similar growth strategy and has become a major manufacturer of ink-jet printer cartridges, computers, handheld wireless devices, and medical products. Nypro’s ability to precision-mold complex structures is interdependent with its abilities to simplify assembly.

Bloomberg L.P. has done the same thing, eating its way up Wall Street’s value chain. It started by providing simple data on securities prices and subsequently integrated forward, automating much of the analytics. Bloomberg has disruptively enabled an army of people to access insights that formerly only highly experienced securities analysts could derive. Bloomberg has continued to integrate forward from the back end, so that portfolio managers can now execute most trades from their Bloomberg terminals over a Bloomberg-owned electronic communications network (ECN) without needing a broker or a stock exchange. Issuers of certain government securities can now even auction their securities to institutional investors within Bloomberg’s proprietary system. Back-end suppliers such as First Data and State Street enjoy a similar position vis-à-vis commercial banks. Venerable Wall Street institutions are being disrupted and hollowed out—and they don’t even realize it because outsourcing the asset-intensive back end is a compelling mandate that feels good once the front end has become modular and commoditized.

Core competence, as it is used by many managers, is a dangerously inward-looking notion. Competitiveness is far more about doing what customers value than doing what you think you’re good at. And staying competitive as the basis of competition shifts necessarily requires a willingness and ability to learn new things rather than clinging hopefully to the sources of past glory. The challenge for incumbent companies is to rebuild their ships while at sea, rather than dismantling themselves plank by plank while someone else builds a new, faster boat with what they cast overboard as detritus.

What can growth-hungry managers do in situations like this? In many ways, the process is inevitable. Assemblers of modular products must, over time, shed assets in order to reduce costs and improve returns—financial market pressure leaves managers with few alternatives. However, knowing that this is likely to happen gives those same managers the opportunity to own or acquire, and manage as separate growth-oriented businesses, the component or subsystem suppliers that are positioned to eat their way up the value chain. This is the essence of skating to where the money will be.13

Good Enough, Not Good Enough, and the Value of Brands

Executives who seek to avoid commoditization often rely on the strength of their brands to sustain their profitability, but brands become commoditized and de-commoditized, too. Brands are most valuable when they are created at the stages of the value-added chain where things aren’t yet good enough. When customers aren’t yet certain whether a product’s performance will be satisfactory, a well-crafted brand can step in and close some of the gap between what customers need and what they fear they might get if they buy the product from a supplier of unknown reputation. The role of a good brand in closing this gap is apparent in the price premium that branded products are able to command in some situations. By similar logic, however, the ability of brands to command premium prices tends to atrophy when the performance of a class of products from multiple suppliers is manifestly more than adequate.

When overshooting occurs, the ability to command attractive profitability through a valuable brand often migrates to those points in the value-added chain where things have flipped into a not-yet-good-enough situation. These often will be the performance-defining subsystems within the product, or the retail interface when it is the speed, simplicity, and convenience of getting exactly what you want that is not good enough. These shifts define the opportunities in branding.

For example, in the early decades of the computer industry, investment in complex and unreliable mainframe computer systems was an unnerving task for most managers. Because IBM’s servicing capability was unsurpassed, the brand of IBM had the power to command price premiums of 30 to 40 percent over comparable equipment. No corporate IT director got fired for buying IBM. Hewlett-Packard’s brand commanded similar premiums.

How did the brands of Intel and Microsoft Windows subsequently steal the valuable branding power from IBM and Hewlett-Packard in the 1990s? It happened when computers came to pack good-enough functionality and reliability for mainstream business use, and modular, industry-standard architectures became predominant in those tiers of the market. At that point, the microprocessor inside and the operating system became not good enough, and the locus of the powerful brands migrated to those new locations.

The migration of branding power in a market that is composed of multiple tiers is a process, not an event. Accordingly, the brands of companies with proprietary products typically create value mapping upward from their position on the improvement trajectory—toward those customers who still are not satisfied with the functionality and reliability of the best that is available. But mapping downward from that same point—toward the world of modular products where speed, convenience, and responsiveness drive competitive success—the power to create profitable brands migrates away from the end-use product, toward the subsystems and the channel.14

This has happened in heavy trucks. There was a time when the valuable brand, Mack, was on the truck itself. Truckers paid a significant premium for Mack the bulldog on the hood. Mack achieved its preeminent reliability through its interdependent architecture and extensive vertical integration. As the architectures of large trucks have become modular, however, purchasers have come to care far more whether there is a Cummins or Caterpillar engine inside than whether the truck is assembled by Paccar, Navistar, or Freightliner.

Apparel is another industry in which the power to brand has begun to migrate to a different stage of the value-added chain. As elsewhere, it has happened because a changed basis of competition has redefined what is not good enough. A generation ago most of the valuable brands were on the products. Levi’s brand jeans and Gant brand shirts, for example, enjoyed strong and profitable market shares because many of the competing products were not nearly as sturdily made. These branded products were sold in department stores, which trumpeted their exclusive ability to sell the best brands in clothing.

Over the past fifteen years, however, the quality of clothing from a wide range of manufacturers has become assured, as producers in low-labor-cost countries have improved their capabilities to produce high-quality fabrics and clothing. The basis of competition in the apparel industry has changed as a consequence. Specialized retailers have stolen a significant share of market from the broad-line department stores because their focused merchandise mix allows the customers they target to find what they want more quickly and conveniently. What is not good enough in certain tiers of the apparel industry has shifted from the quality of the product to the simplicity and convenience of the purchasing experience. Much of the ability to create and maintain valuable brands, as a consequence, has migrated away from the product and to the channel because, for the present, it is the channel that addresses the piece of added value that is not yet good enough.15 We don’t even question who makes the dresses in Talbot’s, the sweaters for Abercrombie & Fitch, or the jeans at Gap and Old Navy. Much of the apparel sold in those channels carries the brand of the channel, not the manufacturer.16

A View of the Automobile Industry’s Future Through the Lenses of This Model

Most of our examples of commoditization and de-commoditization have been drawn from the past. To show how this theory can be used to look into the future, this section discusses how this transformation is under way in the automobile industry, initiating a massive transfer of the ability to make attractive profits in the future away from automobile manufacturers and toward certain of their suppliers. Even the power to cultivate valuable brands is likely to migrate to the subsystems. This transformation will probably take a decade or two to fully accomplish, but once you know what to look for, it is easy to see that the processes already are irreversibly under way.

The functionality of many automobiles has overshot what customers in the mainstream markets can utilize. Lexus, BMW, Mercedes, and Cadillac owners will probably be willing to pay premium prices for more of everything for years to come. But in market tiers populated by middle- and lower-price-point models, car makers find themselves having to add more and better features just to hang onto their market share, and they struggle to convince customers to pay higher prices for these improvements. The reliability of models such as Toyota’s Camry and Honda’s Accord is so extraordinary that the cars often go out of style long before they wear out. As a result, the basis of competition—what is not good enough—is changing in many tiers of the auto market. Speed to market is important. Whereas it used to take five years to design a new-model car, today it takes two. Competing by customizing features and functions to the preferences of customers in smaller market niches is another fact of life. In the 1960s, it was not unusual for a single model’s sales to exceed a million units per year. Today the market is far more fragmented: Annual volumes of 200,000 units are attractive. Some makers now promise that you can walk into a dealership, custom-order a car, and have it delivered in five days—roughly the response time that Dell Computer offers.

In order to compete on speed and flexibility, automakers are evolving toward modular architectures for their mainstream models. Rather than uniquely designing and knitting together individual components procured from hundreds of suppliers, most auto companies now procure subsystems from a much narrower base of “tier one” suppliers of braking, steering, suspension, and interior cockpit subsystems. Much of this consolidation in the supplier base has been driven by the cost-saving opportunities that it affords—opportunities that often were identified and quantified by analytically astute consulting firms.

The integrated American automakers have been forced to dis-integrate in order to compete with the speed, flexibility, and reduced overhead cost structure that this new world demands. General Motors, for example, spun off its component operations into a separate publicly traded company, Delphi Automotive, and Ford spun off its component operations as Visteon Corporation. Hence, the same thing is happening to the auto industry that happened to computers: Overshooting has precipitated a change in the basis of competition, which precipitated a change in architecture, which forced the dominant, integrated firms to dis-integrate.

At the same time, the architecture is becoming progressively more interdependent within most of the subsystems. The models at lower price points in the market need improved performance from their subsystems in order to compete against higher-cost models and brands in the tiers of the market above them. If Kia and Hyundai used their low-cost Korean manufacturing base to conquer the subcompact tier of the market and then simply stayed there, competition would vaporize profits. They must move up, and once their architectures have become modular the only way to do this is to be fueled by ever-better subsystems.

The newly interdependent architectures of many subsystems are forcing the tier-one suppliers to be less flexible at their external interface. Automobile designers increasingly need to conform their designs to the specifications of the subsystems, just as desktop computer makers need to conform the designs of their computers to the external interfaces of Intel’s microprocessor and Microsoft’s operating system. As a consequence, we would expect the ability to earn attractive profits to migrate away from the auto assemblers, toward the subsystem vendors.17

In chapter 5 we recounted how IBM’s PC business outsourced its microprocessor to Intel and its operating system to Microsoft, in order to be fast and flexible. In the process, IBM hung on to where the money had been—design and assembly of the computer system—and put into business the two companies that were positioned where the money would be. General Motors and Ford, with the encouragement of their consultants and investment bankers, have just done the same thing. They had to decouple the vertical stages in their value chains in order to stay abreast of the changing basis of competition. But they have spun off the pieces of value-added activity where the money will be, in order to stay where the money has been.18

These findings have pervasive implications for managers seeking to build successful new-growth businesses and for those seeking to keep current businesses robust. The power to capture attractive profits will shift to those activities in the value chain where the immediate customer is not yet satisfied with the performance of available products. It is in these stages that complex, interdependent integration occurs—activities that create steeper scale economics and enable greater differentiability. Attractive returns shift away from activities where the immediate customer is more than satisfied, because it is there that standard, modular integration occurs. We hope that in describing this process in these terms, we might help managers to predict more accurately where new opportunities for profitable growth through proprietary products will emerge. These transitions begin on the trajectories of improvement where disruptors are at work, and proceed up-market tier by tier. This process creates opportunities for new companies that are integrated across these not-good-enough interfaces to thrive, and to grow by “eating their way up” from the back end of an end-use system. Managers of industry-leading businesses need to watch vigilantly in the right places to spot these trends as they begin, because the processes of commoditization and de-commoditization both begin at the periphery, not the core.

Appendix: The Law of Conservation of Attractive Profits

Having described these cycles of commoditization and de-commoditization in terms of products, we can now state the more general phenomenon that we call the law of conservation of attractive profits. Our friend Chris Rowen, CEO of Tensilica, pointed out to us the existence of this law, whose appellation was inspired by the laws of conservation of energy and matter that we so fondly remember studying in physics class. Formally, the law of conservation of attractive profits states that in the value chain there is a requisite juxtaposition of modular and interdependent architectures, and of reciprocal processes of commoditization and de-commoditization, that exists in order to optimize the performance of what is not good enough. The law states that when modularity and commoditization cause attractive profits to disappear at one stage in the value chain, the opportunity to earn attractive profits with proprietary products will usually emerge at an adjacent stage.19

We’ll first illustrate how this law operates by examining handheld devices such as the RIM BlackBerry and the Palm Pilot, which constitute the latest wave of disruption in the computing industry. The functionality of these products is not yet adequate, and as a consequence their architectures are interdependent. This is especially true for the BlackBerry, because its “always on” capability mandates extraordinarily efficient use of power. Because of this, the BlackBerry engineers cannot incorporate a one-size-fits-all Intel microprocessor into their device. Such a processor has far more capability than is needed. Rather, they need a modular microprocessor design—a system-on-a-chip that is custom-configured for the BlackBerry—so that they do not have to waste space, power, or cost on functionality that is not needed.

The microprocessor must be modular and conformable in order to permit engineers to optimize the performance of what is not good enough, which is the device itself. Note that this is the opposite situation from that of a desktop computer, where it is the microprocessor that is not good enough. The architecture of the computer must therefore be modular and conformable in order to allow engineers to optimize the performance of the microprocessor. Thus, one side or the other must be modular and conformable to allow for optimization of what is not good enough through an interdependent architecture.

In similar ways, application software programs that are written to run on Microsoft’s Windows operating systems need to be conformed to Windows’ external interface; the Linux operating system, on the other hand, is modular and conformable to optimize the performance of software that runs on it.

We have found this “law” to be a useful way to visualize where the money will migrate in the value chain in a number of industries. It is explored in greater depth in a forthcoming book by Clayton Christensen, Scott Anthony, and Erik Roth, Seeing What’s Next (Boston: Harvard Business School Press, 2004).

This law also has helped us understand the juxtaposition of modular products with interdependent services, because services provided with the products can go through similar cycles of commoditization and de-commoditization, with consequent implications for where attractive profitability will migrate.

We noted previously that when the functionality and reliability of a product become more than good enough, the basis of competition changes. What becomes not good enough are speed to market and the rapid and responsive ability to configure products to the specific needs of customers in ever-more-targeted market segments. The customer interface is the place in the value chain where the ability to excel on this new dimension of competition is determined. Hence, companies that are integrated in a proprietary way across the interface to the customer can compete on these not-good-enough dimensions more effectively (and be rewarded with better margins) than can those firms that interface with their customers only in an arm’s-length, “modular” manner. Companies that integrate across the retail interface to the customer, in this circumstance, can also earn above-average profits.

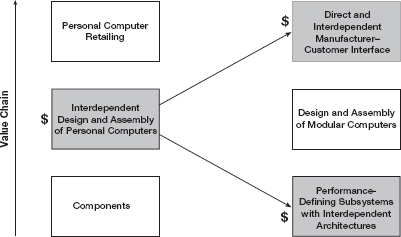

We would therefore not say that Dell Computer is a nonintegrated company, for example. Rather, Dell is integrated across the not-good-enough interface with the customer. The company is not integrated across the more-than-good-enough modular interfaces among the components within its computers. Figure 6-2 summarizes in a simplified way how the profitable points of proprietary integration have migrated in the personal computer industry.

On the left side of the diagram, which represents the earliest years of the desktop computer industry when product functionality was extremely limited, Apple Computer, with its proprietary architecture and integrated business model, was the most successful firm and was attractively profitable. The firms that supplied the bare components and materials to Apple, and the independent, arm’s-length retailers that sold the computers, were not in nearly as attractive a position. In the late 1990s, the processes of commoditization and de-commoditization had transferred the points at which proprietary integration could build proprietary competitive advantage to

FIGURE 6-2

The Shifting Locus of Advantage in the PC Industry’s Process Value Chain

We believe that this is an important factor that explains why Dell Computer was more successful than Compaq during the 1990s. Dell was integrated across an important not-good-enough interface, whereas Compaq was not. We also would expect that a proper cost accounting would show that Dell’s profits from retailing operations are far greater than the profits from its assembly operations.

Notes

1. There are two ways to think of a product or service’s value chain. It can be conceptualized in terms of its processes, that is, the value-added steps required to create or deliver it. For example, the processes of design, component manufacture, assembly, marketing, sales, and distribution are generic processes in a value chain. A value chain can also be thought of in terms of components, or the “bill of materials” that go into a product. For example, the engine block, chassis, braking systems, and electronic subassemblies that go into a car are components of a car’s value chain. It is helpful to keep both ways of thinking about a value chain in mind, since value chains are also “fractal”—that is, they are equally complex at every level of analysis. Specifically, for a given product that goes through the processes that define its value chain, various components must be used. Yet every component that is used has its own sequence of processes through which it must pass. The complexity of analyzing a product’s value chain is essentially irreducible. The question is which level of complexity one wishes to focus on.

2. This discussion builds heavily on Professor Michael Porter’s five forces framework and his characterization of the value chain. See Michael Porter, Competitive Strategy (New York: Free Press, 1980) and Competitive Advantage (New York: Free Press, 1985). Analysts often use Porter’s five forces framework to determine which firms in a value-added system can wield the greatest power to appropriate profit from others. In many ways, our model in chapters 5 and 6 provides a dynamic overlay on his five forces model, suggesting that the strength of these forces is not invariant over time. It shows how the power to capture an above-average portion of the industry’s profit is likely to migrate to different stages of the value chain in a predictable way in response to the phenomena we describe here.

3. As a general observation, when you examine what seems to be the heyday of most major companies, it was (or is) a period when the functionality and reliability of their products did not yet satisfy large numbers of customers. As a result, they had products with proprietary architectures, and made them with strong competitive cost advantages. Furthermore, when they introduced new and improved products, the new products could sustain a premium price because functionality was not yet good enough and the new products came closer to meeting what was needed. This can be said for the Bell telephone system; Mack trucks; Caterpillar earthmoving equipment; Xerox photocopiers; Nokia and Motorola mobile telephone handsets; Intel microprocessors; Microsoft operating systems; Cisco routers; the IT consulting businesses of EDS or IBM; the Harvard Business School; and many other companies.

4. In the following text we will use the term subsystem to mean, generally, an assembly of components and materials that provides a piece of the functionality required for an end-use system to be operational.

5. Once again, we see linkages to Professor Michael Porter’s notion that there are two viable “generic” strategies: differentiation and low cost (see chapter 2, note 12). Our model describes the mechanism that causes neither of these strategies to be sustainable. Differentiability is destroyed by the mechanism that leads to modularization and dis-integration. Low-cost strategies are viable only as long as the population of low-cost competitors does not have sufficient capacity to supply what customers in a given tier of the market demand. Price is set at the intersection of the supply and demand curves—at the cash cost of the marginal producer. When the marginal producer is a higher-cost disruptee, then the low-cost disruptors can make attractive profits. But when the high-cost competitors are gone and the entire market demand can be supplied by equally low-cost suppliers of modular products, then what was a low-cost strategy becomes an equal-cost strategy.

6. Not all the components or subsystems in a product contribute to the specific performance attributes of value to customers. Those that drive the performance that matters are the “performance-defining” components or subsystems. In the case of a personal computer, for example, the microprocessor, the operating system, and the applications have long been the performance-defining subsystems.

7. Analysts’ estimates of how much of the industry’s money stayed with computer assemblers and how much “leaked” through to back-end or subsystem suppliers are summarized in “Deconstructing the Computer Industry,” BusinessWeek, 23 November 1992, 90–96. As we note in the appendix to this chapter, we would expect that much of Dell’s profit comes from its direct-to-customer retailing operations, not from product assembly.

8. With just a few seconds’ reflection, it is easy to see that the investment management industry suffers from the problem of categorization along industry-defined lines that are irrelevant to profitability and growth. Hence, investment managers create funds for “technology companies” and other funds for “health care companies.” Within those portfolios are disruptors and disruptees, companies on the verge of commoditization and those on the verge of de-commoditization, and so on. Michael Mauboussin, chief investment strategist at Credit Suisse First Boston, recently wrote an article on this topic. It builds on the model of theory building that we have summarized in the introduction of this book, and its application to the world of investing is very insightful. See Michael Mauboussin, “No Context: The Importance of Circumstance-Based Categorization,” The Consilient Observer, New York: Credit Suisse First Boston, 14 January 2003.

9. Those of our readers who are familiar with the disk drive industry might see a contradiction between our statement that much of the money in the industry was earned in head and disk manufacturing and the fact that the leading head and disk makers, such as Read-Rite and Komag, have not prospered. They have not prospered because most of the leading disk drive makers—particularly Seagate—integrated into their own head and disk making so that they could capture the profit instead of the independent suppliers.

10. IBM did have profitable volume in 3.5-inch drives, but it was at the highest-capacity tiers of that market, where capacity was not good enough and the product designs therefore had to be interdependent.

11. A more complete account of these developments has been published in Clayton M. Christensen, Matt Verlinden, and George Westerman, “Disruption, Disintegration and the Dissipation of Differentiability,” Industrial and Corporate Change 11, no. 5 (2002): 955–993. The first Harvard Business School working papers that summarized this analysis were written and broadly circulated in 1998 and 1999.

12. We have deliberately used present- and future-tense verbs in this paragraph. The reason is that at the time this account was first written and submitted to publishers, these statements were predictions. Subsequently, the gross margins in IBM’s 2.5-inch disk drive business deteriorated significantly, as this model predicted they would. However, IBM chose to sell off its entire disk drive business to Hitachi, giving to some other company the opportunity to sell the profitable, performance-enabling components for this class of disk drives.

13. We have written elsewhere that the Harvard Business School has an extraordinary opportunity to execute exactly this strategy in management education, if it will only seize it. Harvard writes and publishes the vast majority of the case studies and many of the articles that business school professors have used as components in courses whose architecture is of interdependent design. As on-the-job management training and corporate universities (which are nonintegrated assemblers of modular courses) disrupt traditional MBA programs, Harvard has a great opportunity to flip its business model through its publishing arm and sell not just case studies and articles as bare-bones components but also value-added subsystems as modules. These should be designed to make it simple for management trainers in the corporate setting to custom-assemble great material, deliver it exactly when it is needed, and teach it in a compelling way. (See Clayton M. Christensen, Michael E. Raynor, and Matthew Verlinden, “Skate to Where the Money Will Be,” Harvard Business Review, November 2001.)

14. This would suggest, for example, that Hewlett-Packard’s branding power would be strong mapping upward to not-yet-satisfied customers from the trajectory of improvement on which its products are positioned. And it suggests that the HP brand would be much weaker, compared with the brands of Intel and Microsoft, mapping downward from that same point to more-than-satisfied customers.

15. We are indebted to one of Professor Christensen’s Harvard MBA students, Alana Stevens, for many of these insights, which she developed in a research paper entitled “A House of Brands or a Branded House?” Stevens noted that branding power is gradually migrating away from the products to the channels in a variety of retailing categories. Manufacturers of branded food and personal care products such as Unilever and Procter & Gamble, for example, fight this battle of the brands with their channels every day, because many of their products are more than good enough. In Great Britain, disruptive channel brands such as Tesco and Sainsbury’s have decisively won this battle after first starting at the lower price points in each category and then moving up. In the United States, branded products have clung more tenaciously to shelf space, but often at the cost of exorbitant slotting fees. The migration of brands in good-enough categories is well under way in channels such as Home Depot and Staples. Where the products’ functionality and reliability have become more than good enough and it is the simplicity and convenience of purchase and use that is not good enough, then the power to brand has begun migrating to the channel whose business model is delivering on this as-yet-unsatisfied dimension.

Procter & Gamble appears to be following a sensible strategy by launching a series of new-market disruptions that simultaneously provide needed fuel for its channels’ efforts to move up-market, and preserve P&G’s power to keep the premium brand on the product. Its Dryel brand do-it-yourself home dry cleaning system, for example, is a new-market disruption because it enables individuals to do for themselves something that, historically, only a more expensive professional could do. Do-it-yourself dry cleaning is not yet good enough, so the power to build a profitable brand is likely to reside in the product for some time. What is more, just as Sony’s solid-state electronics products enabled discount merchandisers to compete against appliance stores, so P&G’s Dryel gives Wal-Mart a vehicle to move up-market and begin competing against dry cleaning establishments. P&G is doing the same thing with its introduction of its Crest brand do-it-yourself teeth whitening system, a new-market disruption of a service that historically could only be provided by professionals. We thank one of Professor Christensen’s former students, David Dintenfass, a global brand manager at Procter & Gamble, for pointing this out to us.

16. As we have shared these hypotheses with students, some of the more stylishly dressed among them have asked whether this applies also to the highest-fashion brands, such as Gucci, and in product categories such as cosmetics. Those who know us probably have observed that dressing ourselves in fashionable, branded merchandise just isn’t a job that we have been trying to get done in our lives. We confess, therefore, to having no intuition about the world of high fashion. It will probably persist profitably forever. Who are we to know?

17. Remaining competitive at the level of the process-defined value chain that the current auto assemblers dominate is likely to require that they move toward new distribution structures—an integration of supply chains and customer interfaces in a way that effectively exploits the modularity of the product itself. How this can be done and its performance implications are explored at length in the Deloitte Research study “Digital Loyalty Networks,” available for download at <http://www.dc.com/research>, or upon request from [email protected].

18. Those of our readers who believe in the efficiency of capital markets and the abilities of investors to diversify their portfolios will see no tragedy in these decisions. After these divestitures the shareholders of the two auto giants found themselves owning stock in companies that design and assemble cars, and in companies that supply performance-enabling subsystems. It is because we are writing this book for the benefit of managers in firms like General Motors and Ford that we characterize these decisions as unfortunate.

19. We say “usually” here because there are exceptions (most, but not all, of which prove the rule). We note in the text of this chapter, for example, that two modular stages of added value can be juxtaposed—as DRAM memory chips fit in modular personal computers. And there are instances where two interdependent architectures need to be integrated, such as when enterprise resource planning software from companies such as SAP needs to be interleaved into companies’ interdependent business processes. The fact that neither side is modular and configurable is what makes SAP implementations so technically and organizationally demanding.