CHAPTER FIVE

GETTING THE SCOPE OF THE BUSINESS RIGHT

Which activities should a new-growth venture do internally in order to be as successful as possible as fast as possible, and which should it outsource to a supplier or a partner? Will success be best built around a proprietary product architecture, or should the venture embrace modular, open industry standards? What causes the evolution from closed and proprietary product architectures to open ones? Might companies need to adopt proprietary solutions again, once open standards have emerged?

Decisions about what to in-source and what to procure from suppliers and partners have a powerful impact on a new-growth venture’s chances for success. A widely used theory to guide this decision is built on categories of core and competence. If something fits your core competence, you should do it inside. If it’s not your core competence and another firm can do it better, the theory goes, you should rely on them to provide it.1

Right? Well, sometimes. The problem with the core-competence/not-your-core-competence categorization is that an activity that seems noncore today might become an absolutely critical competence to have mastered in a proprietary way in the future, and vice versa.

Consider, for example, IBM’s decision to outsource the microprocessor for its PC business to Intel, and its operating system to Microsoft. IBM made these decisions in the early 1980s in order to focus on what it did best—designing, assembling, and marketing computer systems. Given its history, these choices made perfect sense. Component suppliers to IBM historically had lived a miserable, profit-free existence, and the business press widely praised IBM’s decision to outsource these components of its PC. The decision dramatically reduced the cost and time required for development and launch. And yet in the process of outsourcing what it did not perceive to be core to the new business, IBM put into business the two companies that subsequently captured most of the profit in the industry.

How could IBM have known in advance that such a sensible decision would prove so costly? More broadly, how can any executive who is launching a new-growth business, as IBM was doing with its PC division in the early 1980s, know which value-added activities are those in which future competence needs to be mastered and kept inside?2

Because evidence from the past can be such a misleading guide to the future, the only way to see accurately what the future will bring is to use theory. In this case, we need a circumstance-based theory to describe the mechanism by which activities become core or peripheral. Describing this mechanism and showing how managers can use the theory is the purpose of chapters 5 and 6.

Integrate or Outsource?

IBM and others have demonstrated—inadvertently, of course—that the core/noncore categorization can lead to serious and even fatal mistakes. Instead of asking what their company does best today, managers should ask, “What do we need to master today, and what will we need to master in the future, in order to excel on the trajectory of improvement that customers will define as important?”

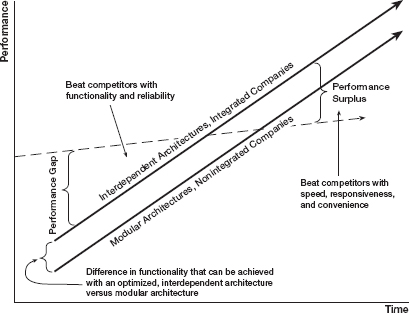

The answer begins with the job-to-be-done approach: Customers will not buy your product unless it solves an important problem for them. But what constitutes a “solution” differs across the two circumstances in figure 5-1: whether products are not good enough or are more than good enough. The advantage, we have found, goes to integration when products are not good enough, and to outsourcing—or specialization and dis-integration—when products are more than good enough.

FIGURE 5-1

Product Architectures and Integration

To explain, we need to explore the engineering concepts of interdependence and modularity and their importance in shaping a product’s design. We will then return to figure 5-1 to see these concepts at work in the disruption diagram.

Product Architecture and Interfaces

A product’s architecture determines its constituent components and subsystems and defines how they must interact—fit and work together—in order to achieve the targeted functionality. The place where any two components fit together is called an interface. Interfaces exist within a product, as well as between stages in the value-added chain. For example, there is an interface between design and manufacturing, and another between manufacturing and distribution.

An architecture is interdependent at an interface if one part cannot be created independently of the other part—if the way one is designed and made depends on the way the other is being designed and made. When there is an interface across which there are unpredictable interdependencies, then the same organization must simultaneously develop both of the components if it hopes to develop either component.

Interdependent architectures optimize performance, in terms of functionality and reliability. By definition, these architectures are proprietary because each company will develop its own interdependent design to optimize performance in a different way. When we use the term interdependent architecture in this chapter, readers can substitute as synonyms optimized and proprietary architecture.

In contrast, a modular interface is a clean one, in which there are no unpredictable interdependencies across components or stages of the value chain. Modular components fit and work together in well-understood and highly defined ways. A modular architecture specifies the fit and function of all elements so completely that it doesn’t matter who makes the components or subsystems, as long as they meet the specifications. Modular components can be developed in independent work groups or by different companies working at arm’s length.

Modular architectures optimize flexibility, but because they require tight specification, they give engineers fewer degrees of freedom in design. As a result, modular flexibility comes at the sacrifice of performance.3

Pure modularity and interdependence are the ends of a spectrum: Most products fall somewhere between these extremes. As we shall see, companies are more likely to succeed when they match product architecture to their competitive circumstances.

Competing with Interdependent Architecture in a Not-Good-Enough World

The left side of figure 5-1 indicates that when there is a performance gap—when product functionality and reliability are not yet good enough to address the needs of customers in a given tier of the market—companies must compete by making the best possible products. In the race to do this, firms that build their products around proprietary, interdependent architectures enjoy an important competitive advantage over competitors whose product architectures are modular, because the standardization inherent in modularity takes too many degrees of design freedom away from engineers, so they cannot optimize performance.

To close the performance gap with each new product generation, competitive forces compel engineers to fit the pieces of their systems together in ever-more-efficient ways in order to wring the most performance possible out of the technology that is available. When firms must compete by making the best possible products, they cannot simply assemble standardized components, because from an engineering point of view, standardization of interfaces (meaning fewer degrees of design freedom) would force them to back away from the frontier of what is technologically possible. When the product is not good enough, backing off from the best that can be done means that you’ll fall behind.

Companies that compete with proprietary, interdependent architectures must be integrated: They must control the design and manufacture of every critical component of the system in order to make any piece of the system. As an illustration, during the early days of the mainframe computer industry, when functionality and reliability were not yet good enough to satisfy the needs of mainstream customers, you could not have existed as an independent contract manufacturer of mainframe computers because the way the machines were designed depended on the art that would be used in manufacturing, and vice versa. There was no clean interface between design and manufacturing. Similarly, you could not have existed as an independent supplier of operating systems, core memory, or logic circuitry to the mainframe industry because these key subsystems had to be interdependently and iteratively designed, too.4

New, immature technologies are often drafted into use as sustaining improvements when functionality is not good enough. One reason why entrant companies rarely succeed in commercializing a radically new technology is that breakthrough sustaining technologies are rarely plug-compatible with existing systems of use.5 There are almost always many unforeseen interdependencies that mandate change in other elements of the system before a viable product that incorporates a radically new technology can be sold. This makes the new product development cycle tortuously long when breakthrough technology is expected to be the foundation for improved performance. The use of advanced ceramic materials in engines, the deployment of high-bandwidth DSL lines at the “last mile” of the telecommunications infrastructure, the building of superconducting electric motors for ship propulsion, and the transition from analog to digital to all-optical telecommunications networks could only be accomplished by extensively integrated companies whose scope could encompass all of the interdependencies that needed to be managed. This is treacherous terrain for entrants.

For these reasons it wasn’t just IBM that dominated the early computer industry by virtue of its integration. Ford and General Motors, as the most integrated companies, were the dominant competitors during the not-good-enough era of the automobile industry’s history. For the same reasons, RCA, Xerox, AT&T, Standard Oil, and US Steel dominated their industries at similar stages. These firms enjoyed near-monopoly power. Their market dominance was the result of the not-good-enough circumstance, which mandated interdependent product or value chain architectures and vertical integration.6 But their hegemony proved only temporary, because ultimately, companies that have excelled in the race to make the best possible products find themselves making products that are too good. When that happens, the intricate fabric of success of integrated companies like these begins to unravel.

Overshooting and Modularization

One symptom that these changes are afoot—that the functionality and reliability of a product have become too good—is that salespeople will return to the office cursing a customer: “Why can’t they see that our product is better than the competition? They’re treating it like a commodity!” This is evidence of overshooting. Such companies find themselves on the right side of figure 5-1, where there is a performance surplus. Customers are happy to accept improved products, but they’re unwilling to pay a premium price to get them.7

Overshooting does not mean that customers will no longer pay for improvements. It just means that the type of improvement for which they will pay a premium price will change. Once their requirements for functionality and reliability have been met, customers begin to redefine what is not good enough. What becomes not good enough is that customers can’t get exactly what they want exactly when they need it, as conveniently as possible. Customers become willing to pay premium prices for improved performance along this new trajectory of innovation in speed, convenience, and customization. When this happens, we say that the basis of competition in a tier of the market has changed.

The pressure of competing along this new trajectory of improvement forces a gradual evolution in product architecture, as depicted in figure 5-1—away from the interdependent, proprietary architectures that had the advantage in the not-good-enough era toward modular designs in the era of performance surplus. Modular architectures help companies to compete on the dimensions that matter in the lower-right portions of the disruption diagram. Companies can introduce new products faster because they can upgrade individual subsystems without having to redesign everything. Although standard interfaces invariably force compromise in system performance, firms have the slack to trade away some performance with these customers because functionality is more than good enough.

Modularity has a profound impact on industry structure because it enables independent, nonintegrated organizations to sell, buy, and assemble components and subsystems.8 Whereas in the interdependent world you had to make all of the key elements of the system in order to make any of them, in a modular world you can prosper by outsourcing or by supplying just one element. Ultimately, the specifications for modular interfaces will coalesce as industry standards. When that happens, companies can mix and match components from best-of-breed suppliers in order to respond conveniently to the specific needs of individual customers.

As depicted in figure 5-1, these nonintegrated competitors disrupt the integrated leader. Although we have drawn this diagram in two dimensions for simplicity, technically speaking these competitors are hybrid disruptors: they compete with a modified metric of performance on the vertical axis of the disruption diagram, in that they strive to deliver rapidly exactly what each customer needs. Yet, because their nonintegrated structure gives them lower overhead costs, they can also profitably pick off low-end customers with discount prices.

From Interdependent to Modular Design—and Back

The progression from integration to modularization plays itself out over and over as products improve enough to overshoot customers’ requirements.9 When wave after wave of sequential disruptions sweep through an industry, this progression repeats itself within each wave. In the original mainframe value network of the computer industry, for example, IBM enjoyed unquestioned dominance in the first decade with its interdependent architectures and vertical integration. In 1964, however, it responded to cost, complexity, and time-to-market pressure by creating a more modular design starting with its System 360. Modularization forced IBM to back away from the frontier of functionality, shifting from the left to the right trajectory of performance improvement in figure 5-1. This created space at the high end for competitors such as Control Data and Cray Research, whose interdependent architectures continued to push the bleeding edge of what was possible.

Opening its architecture was not a mistake for IBM: The economics of competition forced it to take these steps. Indeed, modularity reduced development and production costs and enabled IBM to custom-configure systems for each customer. This created a major new wave of growth in the industry. Another effect of modularization, however, was that nonintegrated companies could begin to compete effectively. A population of nonintegrated suppliers of plug-compatible components and subsystems such as disk drives, printers, and data input devices enjoyed lower overhead costs and began disrupting IBM en masse.10

This cycle repeated itself when minicomputers began their new-market disruption of mainframes. Digital Equipment Corporation initially dominated that industry with its proprietary architecture when minicomputers really weren’t very good, because its hardware and operating system software were interdependently designed to maximize performance. As functionality subsequently approached adequacy, however, other competitors such as Data General, Wang Laboratories, and Prime Computer that were far less integrated but much faster to market began taking significant share.11 As happened in mainframes, the minicomputer market boomed because of the better and less-expensive products that this intensified competition created.

The same sequence occurred in the personal computer wave of disruption. During the early years, Apple Computer—the most integrated company with a proprietary architecture—made by far the best desktop computers. They were easier to use and crashed much less often than computers of modular construction. Ultimately, when the functionality of desktop machines became good enough, IBM’s modular, open-standard architecture became dominant. Apple’s proprietary architecture, which in the not-good-enough circumstance was a competitive strength, became a competitive liability in the more-than-good-enough circumstance. Apple as a consequence was relegated to niche-player status as the growth explosion in personal computers was captured by the nonintegrated providers of modular machines.

The same transition is likely to occur before long in the next two waves of disruptive computer products: notebook computers and hand-held wireless devices. The companies that are most successful in the beginning are those with optimized, interdependent architectures. Companies whose strategy is prematurely modular will struggle to be performance-competitive during the early years, when performance is the basis of competition. Later, architectures and industry structures will evolve toward openness and dis-integration.

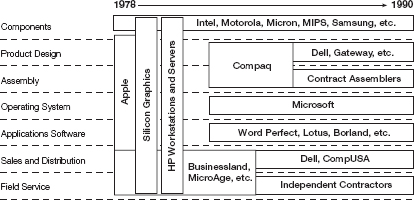

Figure 5-2 summarizes these transitions in the personal computer industry in a simplified way, showing how the proprietary systems and vertically integrated company that was strongest during the industry’s initial not-good-enough years gave way to a nonintegrated, horizontally stratified population of companies in its later years. It almost looks like the industry got pushed through a bologna slicer. The chart would look similar for each of the value networks in the industry. In each instance, the driver of modularization and dis-integration was not the passage of time or the “maturation” of the industry per se.12 What drives this process is this predictable causal sequence:

- The pace of technological improvement outstrips the ability of customers to utilize it, so that a product’s functionality and reliability that were not good enough at one point overshoot what customers can utilize at a later point.

- This forces companies to compete differently: The basis of competition changes. As customers become less and less willing to reward further improvements in functionality and reliability with premium prices, those suppliers that get better and better at conveniently giving customers exactly what they want when they need it are able to earn attractive margins.

- As competitive pressures force companies to be as fast and responsive as possible, they solve this problem by evolving the architecture of their products from being proprietary and interdependent toward being modular.

- Modularity enables the dis-integration of the industry. A population of nonintegrated firms can now outcompete the integrated firms that had dominated the industry. Whereas integration at one point was a competitive necessity, it later becomes a competitive disadvantage.13

FIGURE 5-2

The Transition from Vertical Integration to Horizontal Stratification in the Microprocessor-Based Computer Industry

Figure 5-2 is simplified, in that the integrated business model did not disappear overnight—rather, it became less dominant as the trajectory of performance improvement passed through each tier of each market and the modular model gradually became more dominant.

We emphasize that the circumstances of performance gaps and performance surpluses drive the viability of these strategies of architecture and integration. This means, of course, that if the circumstances change again, the strategic approach must also change. Indeed, since 1990 there has been some reintegration in the computer industry. We describe one factor that drives reintegration in the next section, and return to it in chapter 6.

The Drivers of Reintegration

Because the trajectory of technological improvement typically outstrips the ability of customers in any given tier of the market to utilize it, the general current flows from interdependent architectures and integrated companies toward modular architectures and nonintegrated companies. But remember, customers’ needs change too. Usually this happens at a relatively slower pace, as suggested by the dotted lines on the disruption diagram. On occasion there can be a discontinuous shift in the functionality that customers demand, essentially shifting the dotted line in figure 5-1 upward. This flips the industry back toward the left side of the diagram and resets the clock into an era in which integration once again is the source of competitive advantage.

For example, in the early 1980s Apple Computer’s products employed a proprietary architecture involving extensive interdependence within the software and across the hardware–software interface. By the mid-1980s, however, a population of specialized firms such as WordPerfect and Lotus, whose products plugged into Microsoft’s DOS operating system through a well-defined interface, had arisen to end Apple’s dominance in software. Then in the early 1990s, the dotted lines of functionality that customers needed in PC software seemed to shift up as customers began demanding the ability to transfer graphics and spreadsheet files into word-processing documents, and so on. This created a performance gap, flipping the industry to the not-good-enough side of the world, where fitting interdependent pieces of the system together became competitively critical again.

In response, Microsoft interdependently knitted its Office suite of products (and later its Web browser) into its Windows operating system. This helped it stretch so much closer to what customers needed than the population of focused firms could that the nonintegrated software companies, including WordPerfect and Lotus with its 1-2-3 spreadsheet, vaporized very quickly. Microsoft’s dominance did not arise from monopolistic malfeasance. Rather, its integrated value chain under not-good-enough conditions enabled it to make products whose performance came closer to what customers needed than nonintegrated competitors could achieve under those conditions.14

Today, however, things may be poised to flip again. As computing becomes more Internet-centric, operating systems with modular architectures (such as Linux) and modular programming languages (such as Java) constitute hybrid disruptions relative to Microsoft. This modularity is enabling a population of specialized firms to begin making incursions into this industry.

In a similar way, fifteen years ago in optical telecommunications the bandwidth available over a fiber was more than good enough for voice communication; as a consequence, the industry structure was horizontally stratified, not vertically integrated. Corning made the optical fiber, Siemens cabled it, and other companies made the multiplexers, the amplifiers, and so on. As the screams for more bandwidth intensified in the late 1990s, the dotted line in figure 5-1 shifted up, and the industry flipped into a not-good-enough situation. Corning found that it could not even design its next generation of fiber if it did not interdependently design the amplifier, for example. It had to integrate across this interface in order to compete, and it did so. Within a few years, there was more than enough bandwidth over a fiber, and the rationale for being vertically integrated disappeared again.

The general rule is that companies will prosper when they are integrated across interfaces in the value chain where performance, however it is defined at that point, is not good enough relative to what customers require at the next stage of value addition. There are often several of these points in the complete value-added chain of an industry. This means that an industry will rarely be completely nonintegrated or integrated. Rather, the points at which integration and nonintegration are competitively important will predictably shift over time.15 We return to this notion in greater detail in chapter 6.
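The general rule can be expressed as a simple decision procedure. The Python sketch below is a deliberately crude illustration under a loudly stated assumption: it pretends that "performance" at each interface in the value chain can be reduced to one number and compared with one requirement set by the next stage of value addition, which real markets rarely allow. The `Interface` class, the `integration_points` function, and all of the numbers are hypothetical, not data from any industry.

```python
from dataclasses import dataclass


@dataclass
class Interface:
    """A hypothetical interface between two stages of a value-added chain."""
    name: str
    delivered: float  # performance available across this interface (arbitrary units)
    required: float   # performance the next stage of value addition needs


def integration_points(chain: list[Interface]) -> list[str]:
    """Return the interfaces across which the rule of thumb says to integrate.

    Integrate where performance is not yet good enough (delivered < required);
    where performance is more than good enough, arm's-length supply is viable.
    """
    return [i.name for i in chain if i.delivered < i.required]


# Illustrative numbers only: one performance-limiting interface, two with surplus.
chain = [
    Interface("fiber/amplifier", delivered=0.6, required=1.0),
    Interface("design/manufacturing", delivered=1.4, required=1.0),
    Interface("manufacturing/distribution", delivered=1.2, required=1.0),
]
print(integration_points(chain))  # ['fiber/amplifier']
```

Because the recommendation depends only on the gap at each interface, raising `required` at an interface (the dotted line shifting upward, as happened with bandwidth in the late 1990s) moves that interface back onto the integrate list, while improvements in `delivered` eventually move it off again.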

Aligning Your Architecture Strategy to Your Circumstances

In a modular world, supplying a component or assembling outsourced components are both appropriate “solutions.” In the interdependent world of inadequate functionality, attempting to provide one piece of the system doesn’t solve anybody’s problem. Knowing this, we can predict the failure or success of a growth business based on managers’ choices to compete with modular architectures when the circumstances mandate interdependence, and vice versa.

Attempting to Grow a Nonintegrated Business When Functionality Isn’t Good Enough

It’s tempting to think you can launch a new-growth business by providing one piece of a modular product’s value. Managers often see specialization as a less daunting path to entry than providing an entire system solution. It costs less and allows the entrant to focus on what it does best, leaving the rest of the solution to other partners in the ecosystem. This works in the circumstances in the lower-right portions of the disruption diagram. But when functionality and reliability are inadequate, the lower hurdle that partnering or outsourcing seems to present usually proves illusory, and it causes many growth ventures to fail. Modularity often is not technologically or competitively possible during the early stages of many disruptions.

To succeed with a nonintegrated, specialist strategy, you need to be certain you’re competing in a modular world. Three conditions must be met in order for a firm to procure something from a supplier or partner, or to sell it to a customer. First, both suppliers and customers need to know what to specify—which attributes of the component are crucial to the operation of the product system, and which are not. Second, they must be able to measure those attributes so that they can verify that the specifications have been met. Third, there cannot be any poorly understood or unpredictable interdependencies across the customer–supplier interface. The customer needs to understand how the subsystem will interact with the performance of other pieces of the system so that it can be used with predictable effect. These three conditions—specifiability, verifiability, and predictability—constitute an effective modular interface.
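The three conditions work as a conjunctive test: an interface supports arm's-length procurement only if all three hold, and a single failure is enough to argue for integrating across it. The Python sketch below encodes that test as a checklist. The `InterfaceAssessment` class and its fields are hypothetical names chosen for illustration, and answering each question in practice requires judgment that a boolean cannot capture.

```python
from dataclasses import dataclass


@dataclass
class InterfaceAssessment:
    """Hypothetical yes/no answers about one customer-supplier interface."""
    specifiable: bool  # both sides know which attributes matter and which do not
    verifiable: bool   # those attributes can be measured to confirm specs are met
    predictable: bool  # no poorly understood interdependencies across the interface


def missing_conditions(a: InterfaceAssessment) -> list[str]:
    """Name the conditions for an effective modular interface that fail."""
    checks = {
        "specifiability": a.specifiable,
        "verifiability": a.verifiable,
        "predictability": a.predictable,
    }
    return [name for name, ok in checks.items() if not ok]


def is_modular(a: InterfaceAssessment) -> bool:
    """An interface is effectively modular only if all three conditions hold."""
    return not missing_conditions(a)


# Hypothetical interface: specs can be written and measured, but how the
# component will interact with the rest of the system is not yet understood.
early_stage = InterfaceAssessment(specifiable=True, verifiable=True, predictable=False)
print(is_modular(early_stage))          # False
print(missing_conditions(early_stage))  # ['predictability']
```

Returning the list of failed conditions, rather than a bare yes or no, reflects the chapter's point that the reason an interface is not modular indicates where a single organization's boundaries need to span it.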

When product performance is not good enough—when competition forces companies to use new technologies in nonstandard product architectures to stretch performance as far as possible—these three conditions often are not met. When there are complex, reciprocal, unpredictable interdependencies in the system, a single organization’s boundaries must span those interfaces. People cannot efficiently resolve interdependent problems while working at arm’s length across an organizational boundary.16

Modular Failures in Interdependent Circumstances

In 1996 the United States government passed legislation to stimulate competition in local telecommunication services. The law mandated that independent companies be allowed to sell services to residential and business customers and then to plug into the switching infrastructure of the incumbent telephone companies. In response, many nonintegrated competitive local exchange carriers (CLECs) such as Northpoint Communications attempted to offer high-speed DSL access to the Internet. Corporations and venture capitalists funneled billions of dollars into these companies.

The vast majority of CLECs failed. This is because DSL service was in the interdependent realm of figure 5-1. There were too many subtle and unpredictable interdependencies between what the CLECs did when they installed service on a customer’s premises and what the telephone company had to do in response. It wasn’t necessarily the technical interface that was the problem. The architecture of the telephone companies’ billing system software, for example, was interdependent—making it very difficult to account and bill for the cost of a “plugged-in” CLEC customer. The fact that the telephone companies were integrated across these interdependent interfaces gave them a powerful advantage. They understood their own network and IT system architectures and could consequently deploy their offerings more quickly with fewer concerns about the unintended consequences of reconfiguring their own central office facilities.17

Similarly, in the eagerly anticipated wireless-access-to-data-over-the-Internet industry, most European and North American competitors tried to enter as nonintegrated specialists, providing one element of the system. They relied prematurely on industry standards such as the Wireless Application Protocol (WAP) to define the interfaces between the handset device, the network, and the new content being developed. Companies within each link in the value chain were left to their own devices to determine how best to exploit the wireless Internet. Almost no revenues and billions in losses have resulted. The “partnering” theology that had become de rigueur among telecommunications investors and entrepreneurs who had watched Cisco succeed by partnering turned out to be misapplied in a different circumstance in which it couldn’t work, with tragic consequences.

Appropriate Integration

In contrast, Japan’s NTT DoCoMo and J-Phone have approached the new-market disruptive opportunity of the wireless Internet with far greater integration across stages of the value chain. These growth ventures already claim tens of millions of customers and billions in revenue.18 Although they do not own every upstream or downstream connection in the value chain, DoCoMo and J-Phone carefully manage the interfaces with their content providers and handset manufacturers. Their interdependent approach allows them to surmount the technological limitations of wireless data and to create user interfaces, a revenue model, and a billing infrastructure that make the customer experience as seamless as possible.19

The DoCoMo and J-Phone networks comprise competing, proprietary systems. Isn’t this inefficient? Executives and investors indeed are often eager to hammer out the standards before they invest their money, to preempt wasteful duplication of competing standards and the possibility that a competitor’s approach might emerge as the industry’s standard. This works when functionality and reliability and the consequent competitive conditions permit it. But when they do not, then having competing proprietary systems is not wasteful.20 Far more is wasted when huge sums are spent on an architectural approach that does not fit the basis of competition. True, one system ultimately may define the standard, and those whose standards do not prevail may fall by the wayside after their initial success, or they may become niche players. Competition of this sort inspired Adam Smith and Charles Darwin to write their books.

Parenthetically, we note that in some of its ventures abroad, such as in its partnership with AT&T Wireless in the United States, DoCoMo has followed its partners’ strategy of adopting industry standards with less vertical integration and has stumbled badly, just like its American and European counterparts. It’s not DoCoMo that makes the difference. It’s employing the right strategy in the right circumstances that makes the difference.

Being in the Right Place at the Right Time

We noted earlier that the pure forms of interdependence and modularity are the extremes on a continuum, and companies may choose strategies anywhere along the spectrum at any point in time. A company may not necessarily fail if it starts with a prematurely modular architecture when the basis of competition is functionality and reliability. It will simply suffer from an important competitive disadvantage until the basis of competition shifts and modularity becomes the predominant architectural form. This was the experience of IBM and its clones in the personal computer industry. The superior performance of Apple’s computers did not preclude IBM from succeeding. IBM just had to fight its performance disadvantage because it opted prematurely for a modular architecture.

What happens to the initial leaders when they overshoot, after having jumped ahead of the pack with performance and reliability advantages that were grounded in proprietary architecture? The answer is that they need to modularize and open up their architectures and begin aggressively to sell their subsystems as modules to other companies whose low-cost assembly capability can help grow the market. Had good theory been available to provide guidance, for example, there is no reason why the executives of Apple Computer could not have modularized their design and begun selling their operating system with its interdependent applications to other computer assemblers, preempting Microsoft’s development of Windows. Nokia appears today to be facing the same decision. We sense that adding even more features and functions to standard wireless handsets is overshooting what its less-demanding customers can utilize; and a dis-integrated handset industry that utilizes Symbian’s operating system is rapidly gaining traction. The next chapter will show that a company can begin with a proprietary architecture when disruptive circumstances mandate it, and then, when the basis of competition changes, open its architecture to become a supplier of key subsystems to low-cost assemblers. If it does this, it can avoid the traps of becoming a niche player on the one hand and the supplier of an undifferentiated commodity on the other. The company can become capitalism’s equivalent of Wayne Gretzky, the hockey great. Gretzky had an instinct not to skate to where the puck presently was on the ice, but instead to skate to where the puck was going to be. Chapter 6 can help managers steer their companies not to the profitable businesses of the past, but to where the money will be.

There are few decisions in building and sustaining a new-growth business that scream more loudly for sound, circumstance-based theory than those addressed in this chapter. When the functionality and reliability of a product are not good enough to meet customers’ needs, then the companies that will enjoy significant competitive advantage are those whose product architectures are proprietary and that are integrated across the performance-limiting interfaces in the value chain. When functionality and reliability become more than adequate, so that speed and responsiveness are the dimensions of competition that are not now good enough, then the opposite is true. A population of nonintegrated, specialized companies whose rules of interaction are defined by modular architectures and industry standards holds the upper hand.

At the beginning of a wave of new-market disruption, the companies that initially will be the most successful will be integrated firms whose architectures are proprietary because the product isn’t yet good enough. After a few years of success in performance improvement, those disruptive pioneers themselves become susceptible to hybrid disruption by a faster and more flexible population of nonintegrated companies whose focus gives them lower overhead costs.

For a company that serves customers in multiple tiers of the market, managing the transition is tricky, because the strategy and business model that are required to successfully reach unsatisfied customers in higher tiers are very different from those that are necessary to compete with speed, flexibility, and low cost in lower tiers of the market. Pursuing both ends at once and in the right way often requires multiple business units—a topic that we address in the next two chapters.

Notes

1. We are indebted to a host of thoughtful researchers who have framed the existence and the role of core and competence in making these decisions. These include C. K. Prahalad and Gary Hamel, “The Core Competence of the Corporation,” Harvard Business Review, May–June 1990, 79–91; and Geoffrey Moore, Living on the Fault Line (New York: HarperBusiness, 2002). It is worth noting that “core competence,” as the term was originally coined by C. K. Prahalad and Gary Hamel in their seminal article, was actually an apology for the diversified firm. They were developing a view of diversification based on the exploitation of established capabilities, broadly defined. We interpret their work as consistent with a well-respected stream of research and theoretical development that goes all the way back to Edith Penrose’s 1959 book The Theory of the Growth of the Firm (New York: Wiley). This line of thinking is very powerful and useful. As it is used now, however, the term “core competence” has become synonymous with “focus”; that is, firms that seek to exploit their core competence do not diversify—if anything, they focus their business on those activities that they do particularly well. It is this “meaning in use” that we feel is misguided.

2. IBM arguably had much deeper technological capability in integrated circuit and operating system design and manufacturing than did Intel or Microsoft at the time IBM put these companies into business. It probably is more correct, therefore, to say that this decision was based more on what was core than what was competence. The sense that IBM needed to outsource was based on the correct perception of the new venture’s managers that they needed a far lower overhead cost structure to become acceptably profitable to the corporation and needed to be much faster in new-product development than the company’s established internal development processes, which had been honed in a world of complicated interdependent products with longer development cycles, could handle.

3. In the past decade there has been a flowering of important studies on these concepts. We have found the following ones to be particularly helpful: Rebecca Henderson and Kim B. Clark, “Architectural Innovation: The Reconfiguration of Existing Product Technologies and the Failure of Established Firms,” Administrative Science Quarterly 35 (1990): 9–30; K. Monteverde, “Technical Dialog as an Incentive for Vertical Integration in the Semiconductor Industry,” Management Science 41 (1995): 1624–1638; Karl Ulrich, “The Role of Product Architecture in the Manufacturing Firm,” Research Policy 24 (1995): 419–440; Ron Sanchez and J. T. Mahoney, “Modularity, Flexibility and Knowledge Management in Product and Organization Design,” Strategic Management Journal 17 (1996): 63–76; and Carliss Baldwin and Kim B. Clark, Design Rules: The Power of Modularity (Cambridge, MA: MIT Press, 2000).

4. The language we have used here characterizes the extremes of interdependence, and we have chosen the extreme end of the spectrum simply to make the concept as clear as possible. In complex product systems, there are varying degrees of interdependence, which differ over time, component by component. The challenges of interdependence can also be dealt with to some degree through the nature of supplier relationships. See, for example, Jeffrey Dyer, Collaborative Advantage: Winning Through Extended Enterprise Supplier Networks (New York: Oxford University Press, 2000).

5. Many readers have equated in their minds the terms disruptive and breakthrough. It is extremely important, for purposes of prediction and understanding, not to confuse the terms. Almost invariably, what prior writers have termed “breakthrough” technologies have, in our parlance, a sustaining impact on the trajectory of technological progress. Some sustaining innovations are simple, incremental year-to-year improvements. Other sustaining innovations are dramatic, breakthrough leapfrogs ahead of the competition, up the sustaining trajectory. For predictive purposes, however, the distinction between incremental and breakthrough technologies rarely matters. Because both types have a sustaining impact, the established firms typically triumph. Disruptive innovations usually do not entail technological breakthroughs. Rather, they package available technologies in a disruptive business model. New breakthrough technologies that emerge from research labs are almost always sustaining in character, and almost always entail unpredictable interdependencies with other subsystems in the product. Hence, there are two powerful reasons why the established firms have a strong advantage in commercializing these technologies.

6. Professor Alfred Chandler’s The Visible Hand (Cambridge, MA: Belknap Press, 1977) is a classic study of how and why vertical integration is critical to the growth of many industries during their early period.

7. Economists’ concept of utility, or the satisfaction that customers receive when they buy and use a product, is a good way to describe how competition in an industry changes when this happens. The marginal utility that customers receive is the incremental addition to satisfaction that they get from buying a better-performing product. The increased price that they are willing to pay for a better product will be proportional to the increased utility they receive from using it—in other words, the marginal price improvement will equal the improvement in marginal utility. When customers can no longer utilize further improvements in a product, marginal utility falls toward zero, and as a result customers become unwilling to pay higher prices for better-performing products.

8. Sanchez and Mahoney, in “Modularity, Flexibility and Knowledge Management in Product and Organization Design,” were among the first to describe this phenomenon.

9. The landmark work of Professors Carliss Baldwin and Kim B. Clark, cited in note 3, describes the process of modularization in a cogent, useful way. We recommend it to those who are interested in studying the process in greater detail.

10. Many students of IBM’s history will disagree with our statement that competition forced the opening of IBM’s architecture, contending instead that the U.S. government’s antitrust litigation forced IBM open. The antitrust action clearly influenced IBM, but we would argue that government action or not, competitive and disruptive forces would have brought an end to IBM’s position of near-monopoly power.

11. Tracy Kidder’s Pulitzer Prize–winning account of product development at Data General, The Soul of a New Machine (New York: Avon Books, 1981), describes what life was like as the basis of competition began to change in the minicomputer industry.

12. MIT Professor Charles Fine has written an important book on this topic as well: Clockspeed (Reading, MA: Perseus Books, 1998). Fine observed that industries go through cycles of integration and nonintegration in a sort of “double helix” cycle. We hope that the model outlined here and in chapter 6 both confirms and adds causal richness to Fine’s findings.

13. The evolving structure of the lending industry offers a clear example of these forces at work. Integrated banks such as J.P. Morgan Chase have powerful competitive advantages in the most complex tiers of the lending market. Integration is key to their ability to knit together huge, complex financing packages for sophisticated and demanding global customers. Decisions about whether and how much to lend cannot be made according to fixed formulas and measures; they can only be made through the intuition of experienced lending officers.

Credit scoring technology and asset securitization, however, are disrupting and dis-integrating the simpler tiers of the lending market. In these tiers, lenders know and can measure precisely those attributes that determine whether borrowers will repay a loan. Verifiable information about borrowers—such as how long they have lived where they live, how long they have worked where they work, what their income is, and whether they’ve paid other bills on time—is combined to make algorithm-based lending decisions. Credit scoring took root in the 1960s in the simplest tier of the lending market, in department stores’ decisions to issue their own credit cards. Then, unfortunately for the big banks, the disruptive horde moved inexorably up-market in pursuit of profit—first to general consumer credit card loans, then to automobile loans and mortgage loans, and now to small business loans. The lending industry in these simpler tiers of the market has largely dis-integrated. Specialist nonbank companies have emerged to provide each slice of added value in these tiers of the lending industry. Whereas integration is a big advantage in the most complex tiers of the market, in overserved tiers it is a disadvantage.

14. Our conclusions support those of Stan J. Liebowitz and Stephen E. Margolis in Winners, Losers & Microsoft: Competition and Antitrust in High Technology (Oakland, CA: Independent Institute, 1999).

15. Another good illustration of this is the push being made by Apple Computer, at the time of this writing, to be the gateway to the consumer for multimedia entertainment. Apple’s interdependent integration of the operating system and applications creates convenience, which customers value at this point because convenience is not yet good enough.

16. Specifiability, measurability, and predictability constitute what an economist would term “sufficient information” for an efficient market to emerge at an interface, allowing organizations to deal with each other at arm’s length. A fundamental tenet of capitalism is that the invisible hand of market competition is superior to that of managerial oversight as a coordinating mechanism between actors in a market. This is why, when a modular interface becomes defined, an industry will dis-integrate at that interface. However, when specifiability, measurability, and predictability do not exist, efficient markets cannot function. It is under these circumstances that managerial oversight and coordination perform better than market competition as a coordinating mechanism.

This is an important underpinning of the award-winning findings of Professor Tarun Khanna and his colleagues, which show that in developing economies, diversified business conglomerates outperform focused, independent companies, whereas the reverse is true in developed economies. See, for example, Tarun Khanna and Krishna G. Palepu, “Why Focused Strategies May Be Wrong for Emerging Markets,” Harvard Business Review, July–August 1997, 41–51; and Tarun Khanna and Jan Rivkin, “Estimating the Performance Effects of Business Groups in Emerging Markets,” Strategic Management Journal 22 (2001): 45–74.

A bedrock set of concepts in understanding why organizational integration is critical when the conditions of modularity are not met is developed in the transaction cost economics (TCE) school of thought, which traces its origins to the work of Ronald Coase (R. H. Coase, “The Nature of the Firm,” Economica 4 [1937]: 386–405). Coase argued that firms were created when it got “too expensive” to negotiate and enforce contracts between otherwise “independent” parties. More recently, the work of Oliver Williamson has proven seminal in the exploration of transaction costs as a determinant of firm boundaries. See, for example, O. E. Williamson, Markets and Hierarchies (New York: Free Press, 1975); “Transaction Cost Economics,” in The Economic Institutions of Capitalism, ed. O. E. Williamson (New York: Free Press, 1985), 15–42; and “Transaction-Cost Economics: The Governance of Contractual Relations,” in Organizational Economics, ed. J. B. Barney and W. G. Ouchi (San Francisco: Jossey-Bass, 1986). In particular, TCE has been used to explain the various ways in which firms might expand their operating scope: through unrelated diversification (C. W. L. Hill et al., “Cooperative Versus Competitive Structures in Related and Unrelated Diversified Firms,” Organization Science 3, no. 4 [1992]: 501–521); related diversification (D. J. Teece, “Economies of Scope and the Scope of the Enterprise,” Journal of Economic Behavior and Organization 1 [1980]: 223–247; and D. J. Teece, “Toward an Economic Theory of the Multiproduct Firm,” Journal of Economic Behavior and Organization 3 [1982]: 39–63); or vertical integration (K. Arrow, The Limits of Organization [New York: W. W. Norton, 1974]; B. Klein et al., “Vertical Integration, Appropriable Rents, and the Competitive Contracting Process,” Journal of Law and Economics 21 [1978]: 297–326; and K. R. Harrigan, “Vertical Integration and Corporate Strategy,” Academy of Management Journal 28, no. 2 [1985]: 397–425).

More generally, this line of research is known as the “market failures” paradigm for explaining changes in firm scope (K. N. M. Dundas and P. R. Richardson, “Corporate Strategy and the Concept of Market Failure,” Strategic Management Journal 1, no. 2 [1980]: 177–188). Our hope is that we have advanced this line of thinking by elaborating more precisely the considerations that give rise to the contracting difficulties that lie at the heart of the TCE school.

17. Even if the incumbent local exchange carriers (ILECs) didn’t understand all the complexities and unintended consequences better than CLEC engineers, organizationally they were much better positioned to resolve any difficulties, since they could appeal to organizational mechanisms rather than have to rely on cumbersome and likely incomplete ex ante contracts.

18. See Jeffrey Lee Funk, The Mobile Internet: How Japan Dialed Up and the West Disconnected (Hong Kong: ISI Publications, 2001). This really is an extraordinarily insightful study from which a host of insights can be gleaned. In his own language, Funk shows that another important reason why DoCoMo and J-Phone were so successful in Japan is that they followed the pattern that we describe in chapters 3 and 4 of this book. They initially targeted customers who were largely non-Internet users (teenaged girls) and helped them get done better a job that they had already been trying to do: have fun with their friends. Western entrants into this market, in contrast, envisioned sophisticated offerings to be sold to current customers of mobile phones (who primarily used them for business) and current users of the wire-line Internet. An internal perspective on this development can be found in Mari Matsunaga, The Birth of I-Mode: An Analogue Account of the Mobile Internet (Singapore: Chuang Yi Publishing, 2001). Matsunaga was one of the key players in the development of i-mode at DoCoMo.

19. See “Integrate to Innovate,” a Deloitte Research study by Michael E. Raynor and Clayton M. Christensen, available at <http://www.dc.com/vcd>.

20. Some readers who are familiar with the different experiences of the European and American mobile telephony industries may take issue with this paragraph. Very early on, the Europeans coalesced around a prenegotiated standard called GSM, which enabled mobile phone users to use their phones in any country. Mobile phone usage took off more rapidly and achieved higher penetration rates than in America, where several competing standards were battling it out. Many analysts have drawn the general conclusion from the Europeans’ strategy of quickly coalescing around a standard that it is always advisable to avoid the wasteful duplication of competing mutually incompatible architectures. We believe that the benefits of a single standard have been largely exaggerated, and that other important differences between the United States and Europe which contributed significantly to the differential adoption rates have not been given their due.

First, the benefits of a single standard appear to have manifested themselves largely in terms of supply-side rather than demand-side benefits. That is, by stipulating a single standard, European manufacturers of network equipment and handsets were able to achieve greater scale economies than companies manufacturing for the North American markets. This might well have manifested itself in the form of lower prices to consumers; however, the relevant comparison is not the cost of mobile telephony in Europe versus North America, because these services were not competing with each other. The relevant comparison is with wireline telephony in each respective market. And here it is worth noting that wireline local and long distance telephony services are much more expensive in Europe than in North America, and as a result, wireless telephony was a much more attractive substitute for wireline in Europe than in North America. The putative demand-side benefit of transnational usage has not, to our knowledge, been demonstrated in the usage patterns of European consumers. Consequently, we would be willing to suggest that a far more powerful cause of the relative success of mobile telephony in Europe was not that schoolgirls from Sweden could use their handsets when on holiday in Spain, but rather the relative improvement in ease of use and cost provided by mobile telephony versus the wireline alternative.

Second, and perhaps even more important, European regulation mandated that “calling party pays” with respect to mobile phone usage, whereas North American regulators mandated that “mobile party pays.” In other words, in Europe, if you call someone’s mobile phone number, you pay the cost of the call; to the recipient, it’s free. In North America, if someone calls you on your mobile phone, it’s on your dime. As a result, Europeans were far freer in giving out their mobile phone numbers, hence increasing the likelihood of usage. For more on this topic, see Strategis Group, “Calling Party Pays Case Study Analysis; ITU-BDT Telecommunication Regulatory Database”; and ITU Web site: <http://www.itu.int/ITU-D/ict/statistics>.

Teasing out the effects of each of these contributors (the GSM standard, lower relative price versus wireline, and calling-party-pays regulation), as well as others that might be adduced, is not a trivial task. But we would suggest that the impact of the single standard is far less than typically implied, and certainly is not the principal factor in explaining higher mobile phone penetration rates in Europe versus North America.