
Designing an efficient method of loading game asset data for HTML5 games is essential in creating a good user experience for players. When games can be played immediately in the browser with no prior downloading there are different considerations to make, not only for the first time play experience but also for future plays of the game. The assets referred to by this chapter are not the usual HTML, CSS, JavaScript, and other media that make up a web site, but are the game assets required specifically for the game experience. The techniques mentioned in this chapter go beyond dealing with the standard image and sound data usually handled by the browser and aim at helping you consider assets such as models, animations, shaders, materials, UIs, and other structural data that is not represented in code. Whether this data is in text or binary form (the “Data Formats” section will discuss both) it somehow needs to be transferred to the player’s machine so that the JavaScript code running the game can turn it into something amazing that players can interact with.

This chapter also discusses various considerations game developers should make regarding the distribution of their game assets and optimizations for loading data. Structuring a good loading mechanism and understanding the communication process between the client’s browser and server are essential for producing a responsive game that can quickly be enjoyed by millions of users simultaneously. Taking advantage of the techniques mentioned in the “Asset Hosting” section is essential for the best first impressions of the game, making sure it starts quickly the first time. The tips in the “Caching Data” section are primarily focussed on improving performance for future runs, making an online, connected game feel like it is sitting on the player’s computer, ready to run at any time. The final section on “Asset Grouping” is about organizing assets in a way that suits the strengths and weaknesses of browser-based data loading.

The concepts covered by each section are a flavor of what you will need to do to improve your loading times. Although the concepts are straightforward to understand, the complexity lies in the details of the implementation with respect to your game, and which services or APIs you choose. Each section outlines the resources that are essential to discover the APIs in more detail. Many of the concepts have been implemented as part of the open source Turbulenz Engine, which is used throughout this chapter as a real world example of the techniques presented. Figure 2-1 shows the Turbulenz Engine in action. The libraries not only prove that the concepts work for published games, but also show how to handle the capabilities and quirks of different browsers in a single implementation. By the end of the chapter you should have a good idea of which quick improvements to make and what new approaches are worth investigating.

Figure 2-1. Polycraft is a complete 3D, HTML5 game built by Wonderstruck Games (http://wonderstruckgames.com) using the Turbulenz Engine. With 1000+ assets equating to ~50 MB of data when uncompressed, loading and processing assets efficiently is essential for a smooth gaming experience. The recommendations in this chapter come from our experiences of developing games such as Polycraft for the Web. The development team at Turbulenz hope that by sharing this information, other game developers will also be able to harness the power of the web platform for their games.

Caching content to manage the trade-off between loading times and resource limitations has always been a game development concern. Whether it be transferring information from optical media, hard disks, or memory, the amount of bandwidth, latency, and storage space available dictates the strategy required. The browser presents another environment with its own characteristics and so an appropriate strategy must be chosen. There is no guarantee that previous strategies will “just work” in this space.

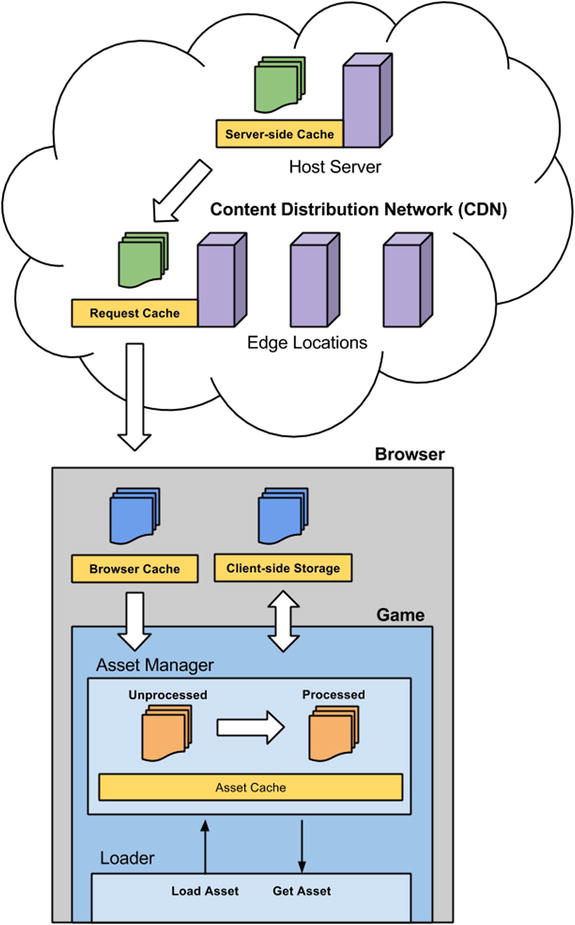

In the world of browser-based gaming, caching can occur in two places: on the server side and on the client side. On the server side, the type of cache depends on the server configuration and the infrastructure behind it, for example whether a content distribution network (CDN) is being used to host the files. On the client side, it depends on the browser configuration and ultimately on the game, which decides what to do with the data it receives. The more of these resources you have control over, the more optimizations you can make. On some occasions, certain features won’t be available, so it’s always worth having a fallback solution. Figure 2-2 shows a typical distribution configuration. The server-side and request caches on the remote host servers, either on disk or in memory, ensure that when a request comes in, it is handled as quickly as possible. The browser cache and web storage, typically from the local disk, reduce the need to rely on a remote machine. The asset cache, an example of holding data (processed or unprocessed) in memory until required, represents the game’s own ability to manage the limited available memory, avoiding the need to request it from local disk or remote host server.

Figure 2-2. Possible locations on the server and client side where game assets can be cached, from being stored on disk by a remote host server to being stored in memory already processed and ready to use by the game

The most prevalent client-side caching approach is HTTP caching in the browser. When the browser requests a file over HTTP, it takes time to download the file. Once the file has been downloaded, the browser can store it in its local cache. This means for subsequent requests for that file the browser will refer to its local cached copy. This technique eliminates the server request and the need to download the file. It has the added bonus that you will receive fewer server requests, which may save you money in hosting costs. This technique takes advantage of the fact that most game assets are static content, changing infrequently.

When an HTTP server sends a file to a client, it can optionally include metadata in the form of headers, such as the file encoding. More fine-grained control of HTTP caching in the browser requires the server to be configured to provide headers with caching information for the static assets. These headers tell the browser when it can use the locally cached file from disk instead of downloading it again. If the cached file doesn’t exist or the local cache has been cleared, then the browser will download the file. As far as the game is concerned, there is no difference in this process except that the cached file should load quicker. The behavior of headers is categorized as conditional and unconditional. Conditional means that the browser will use the header information to decide whether to use the cached version or not. It may then issue a conditional request to the server and download the file only if it has changed. Unconditional means that if the header conditions are met and the file is already in the cache, then the browser will return the cached copy without making any request to the server. These headers give you varying levels of control over how browsers download and cache static assets from your game.

The HTTP/1.1 specification allows you to set the following headers for caching.

- Unconditional:

- Expires: HTTP-DATE. The timestamp after which the browser will make a server request for the file even if it is stored in the local cache. This can be used to stop additional requests from being made.

- Cache-Control: max-age=DELTA-SECONDS. The time in seconds, counted from the initial request, for which the browser will cache the response file and hence not make another request. This allows the same behavior as the Expires header, but is specified in a different way. The max-age directive overrides the Expires header, so only one or the other should be used.

- Conditional:

- Last-Modified: HTTP-DATE. The timestamp indicating when the response file was last modified. This is typically the last-modified time of the file on the filesystem, but can be a different representation of a last modified date, for example the last time a file was referenced on the server, even if not modified on disk. Since this is a conditional header, it depends on how the browser uses it to decide whether or not a request is made. If the file is in cache and the HTTP-DATE was long ago, the browser is unlikely to re-request the file.

- ETag: ENTITY-TAG. An identifier for the response. This can typically be a hash or some other method of file versioning. The ETag can be sent alongside a request. The server can use the ETag to see if the version of the file on the client matches the version on the server. If the file hasn’t changed, the server returns an HTTP response with a status code of 304 Not Modified. This tells the client that a new copy of the file is not required. For more information on ETags, see http://en.wikipedia.org/wiki/HTTP_ETag.

The ability to control caching settings is not always available from every server and behaviors will differ depending on the server. It is worth referring to the documentation to discover how to enable and set these headers.
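As a rough illustration of how these headers fit together, the following sketch picks response headers by URL path and decides whether a conditional request can be answered with 304 Not Modified. It assumes the staticmax directory convention used later in this chapter. The function names cachingHeadersFor and isNotModified, the one-year max-age, and the choice of no-cache for everything else are illustrative assumptions, not settings taken from any particular server.

```javascript
// Hypothetical helpers: names and values are assumptions for illustration.
var ONE_YEAR_IN_SECONDS = 365 * 24 * 60 * 60;

// Choose caching headers for a response based on the requested path.
function cachingHeadersFor(urlPath, etag) {
    if (urlPath.indexOf('/staticmax/') === 0) {
        // Uniquely named static assets: unconditional, long-lived caching.
        return { 'Cache-Control': 'public, max-age=' + ONE_YEAR_IN_SECONDS };
    }
    // Everything else may be cached, but must be revalidated using the ETag.
    return { 'Cache-Control': 'no-cache', 'ETag': etag };
}

// Decide whether a conditional request can be answered with 304 Not Modified.
// Header names are assumed to be lower-cased. This is a simplification:
// a real If-None-Match header can also hold a list of tags or "*".
function isNotModified(requestHeaders, currentEtag) {
    return requestHeaders['if-none-match'] === currentEtag;
}
```

A server handler built on these helpers would send the file with the chosen headers, or an empty 304 response when isNotModified returns true.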

HTTP Caching Example

Since the caching works per URL, you will need to serve your asset files in a way that can take advantage of these headers. One approach is to uniquely name each file and set the expires header/max-age to be as long as possible. This will give you a unique URL for each version of the asset file, allowing you to control the headers individually. The unique name could be a hash based on the file contents, which can be done automatically as part of an offline build process. If this hash is deterministic, the same asset used by different versions of your games can be given the same unique URL. If the source asset changes, a new hash is generated, which can also be used to manage versioning of assets.

This approach exhibits the following behaviors:

- You can host assets for different versions of your game (or different games entirely) in the same location. This can save storage space on the server and make the process of deploying your game more efficient as certain hashed assets may have previously been uploaded.

- When a player loads a new version of your game for the first time, if the files shared between versions are already in the local cache, no downloading is required. This speeds up the loading time for game builds with few asset changes, reducing the impact of updating the game for users. Updates are therefore less expensive and this encourages more frequent improvements.

- Since the file requested is versioned via the unique name, changing the request URL can update the file. This has the benefit that the file is not replaced and hence if the game needs to roll back to using an older version of the file, only the request URL needs to change. No additional requests are made and no files need to be re-downloaded, having rolled back (provided the original file is still in local cache).

- Offline processing tools for generating the asset files can use the unique filename to decide if they need to rebuild a file from source. This can improve the iterative development process and help with asset management.
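The content-based naming described above could be produced by a build step along the following lines. This is a minimal sketch for Node.js: the function name hashedAssetName is hypothetical, and MD5 with URL-safe base64 encoding is used only as an example of a deterministic digest, not as a statement of what any particular build tool does.

```javascript
var crypto = require('crypto'); // Node.js built-in module

// Derive a URL-safe, content-based filename for a processed asset.
// Identical contents always produce the same name.
function hashedAssetName(contents, extension) {
    var digest = crypto.createHash('md5').update(contents).digest('base64');
    // Make the base64 digest URL-safe and drop the '=' padding.
    var urlSafe = digest.replace(/\+/g, '-').replace(/\//g, '_').replace(/=+$/, '');
    return urlSafe + extension;
}
```

Because the name depends only on the contents, a build tool can skip uploading any file whose hashed name already exists on the server.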

Loading HTTP Cached Assets

Once a game is able to cache static assets in this way, it will need a process to be able to manage which URLs to request. This is a case of matching the name of an asset with a given version of that asset. In this example, you can assume that the source path for an asset can be mapped directly to the latest required version of that asset. The asset contents can be changed, but the source path remains the same, so no code changes are required to update assets. If the game requires a shader called shaders/debug.cgfx, it will need to know the unique hash so it can construct the URL to request. At Turbulenz, this is done by creating a logical mapping between source path and asset filename, and storing the information in a mapping table. A mapping table is effectively a lookup table, loaded by the game and stored as a JavaScript object literal; see Listing 2-1.

Listing 2-1. An Example of a Mapping Table

var urlMapping = {

"shaders/debug.cgfx" : "2Hohp_autOW0WbutP_NSUw.json",

"shaders/defaultrendering.cgfx" : "4HdTZBhuheSPYHe1vmygYA.json",

"shaders/standard.cgfx" : "5Yhd75LjDeV3WEvRsKnGSQ.json"

};

Each source path represents an asset the game can refer to. Using the source path and not the source filename avoids naming conflicts and allows you to structure your assets like a file system. If two source assets generate identical output, the resulting hash will be the same, avoiding the duplication of asset data. This gives you some flexibility when importing asset names from external tools such as 3D editors.

In this example, the shaders for 3D rendering are referenced by their source path, which maps to a processed JSON formatted object representation of the shader. Since the resulting filename is unique, there is no need to maintain a hierarchical directory structure to store files. This allows the server to apply the caching headers to all files in a given directory, in this case a directory named staticmax, which contains all files that should be cached for the longest time period; see Listing 2-2.

Listing 2-2. A Simplified Example of Loading a Static Asset Cached as Described Above

/**

* Assumed global values:

*

* console - The console to output error messages for failure to load/parse assets.

*/

/**

* The prefix prepended to the asset name from the mapping table.

* This is effectively the location of the asset directory.

* This will eventually be the URL of the hosting server/CDN.

*/

var urlPrefix = 'staticmax/';

/**

* The mapping of the shader source path to the processed asset.

* If an asset is not yet loaded this mapping will be undefined.

*/

var shaderMapping = {};

/**

* The function that will make the asynchronous request for the asset.

* The callback will return with the status code and response it receives from the server.

*/

function requestStaticAssetFn(srcName, callback) {

// If there is no mapping, a URL request cannot be made.

var assetName = urlMapping[srcName];

if (!assetName) {

return false;

}

function httpRequestCallback() {

// When the readyState is 4 (DONE), call the callback

if (xhr.readyState === 4) {

var xhrResponseText = xhr.responseText;

var xhrStatus = xhr.status;

if (callback) {

callback(xhrResponseText, xhrStatus);

}

xhr.onreadystatechange = null;

xhr = null;

callback = null;

}

}

// Construct the URL to request the asset

var requestURL = urlPrefix + assetName;

// Make the request using XHR

var xhr = new window.XMLHttpRequest();

xhr.open('GET', requestURL, true);

if (callback) {

xhr.onreadystatechange = httpRequestCallback;

}

xhr.send(null);

return true;

}

/**

* Generate the callback function for this particular shader.

* The function will also process the shader in the callback.

*/

function shaderResponseCallback(shaderName) {

var sourceName = shaderName;

return function shaderResponseFn(responseText, status) {

// If the server returns 200, then the asset is included as responseText and can be processed.

if (status === 200) {

try {

shaderMapping[sourceName] = JSON.parse(responseText);

}

catch (e) {

console.error("Failed to parse shader with error: " + e);

}

}

else {

console.error("Failed to load shader with status: " + status);

}

};

}

/**

* Actual request for the asset.

* The request should be made if there is no entry for shaderName in the shaderMapping.

* This allows the mapping to be set to null to force the shader to be re-requested.

*/

var shaderName = "shaders/debug.cgfx";

if (!shaderMapping[shaderName]) {

if (!requestStaticAssetFn(shaderName, shaderResponseCallback(shaderName))) {

console.error("Failed to make a request for: " + shaderName);

}

}

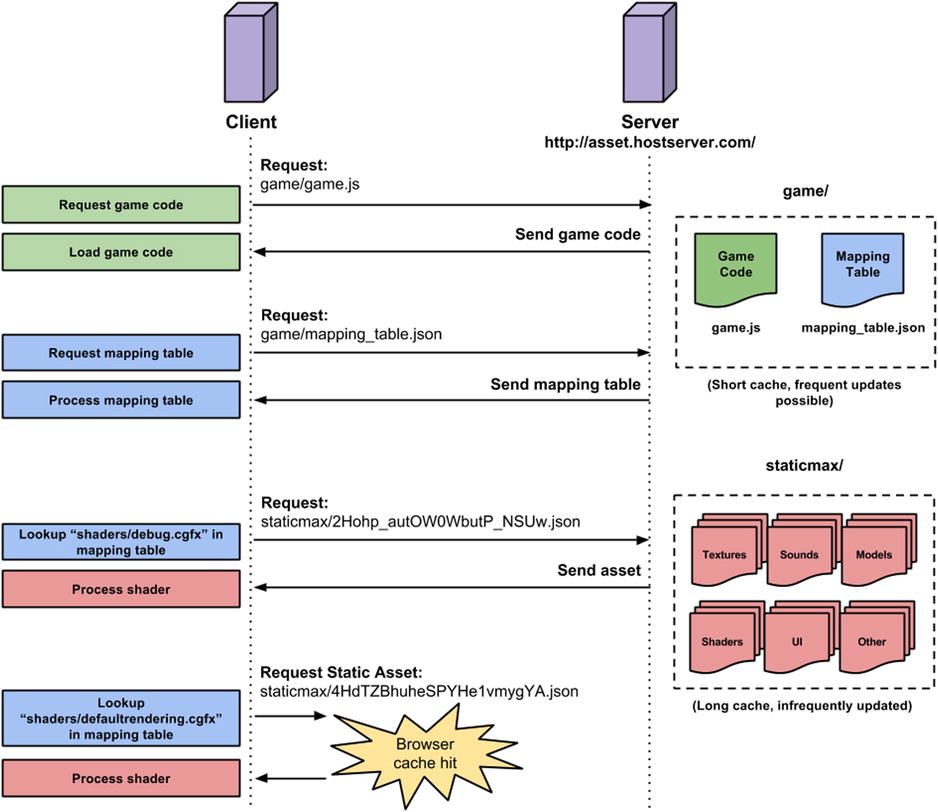

For a live server, the urlPrefix will be something like "http://asset.hostserver.com/game/staticmax/". This example shows how it may work for a text-based shader, but this technique could be applied to other types of assets. It is also worth noting that this example doesn’t handle the many different response codes that are possible during asset loading, such as 404s, 500s, etc. It is assumed that this code will be part of a much more complex asset handling and automatic retry system. Figure 2-3 shows an example of how the process may play out: loading and running the game, loading the latest mapping table, requesting a shader that is sent from the server, and finally, requesting a shader that ends up being resolved by the browser cache. For a more complete example of this, see the Request Handler and Shader Manager classes in the open source Turbulenz Engine (https://github.com/turbulenz/turbulenz_engine).

Figure 2-3. An example of the communication process between a server and the client when using a mapping table. Game code and mapping table data served with short cache times provide the ability to request static assets served with long cache times that take advantage of HTTP caching behaviours
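To give a flavor of what a retry layer around Listing 2-2 might look like, here is a minimal sketch. It wraps any request function with the same signature as requestStaticAssetFn (returning false if no request could be made, and calling back with the response text and status). The name requestWithRetry, the option names, and the policy of retrying only on status 0 and 5xx responses are assumptions made for illustration; they are not taken from the Turbulenz Engine.

```javascript
// Hypothetical retry wrapper: option names and retry policy are assumptions.
function requestWithRetry(requestFn, srcName, options, callback) {
    var maxAttempts = options.maxAttempts || 3;
    var delayMs = options.delayMs || 500;
    // Allow the scheduler to be replaced (for example, in tests).
    var schedule = options.schedule || setTimeout;
    var attempt = 0;

    function tryOnce() {
        attempt += 1;
        var started = requestFn(srcName, function onResponse(responseText, status) {
            if (status === 200) {
                callback(null, responseText);
                return;
            }
            // Treat network failures (status 0) and server errors (5xx) as
            // temporary. Client errors such as 404 will not fix themselves.
            var retryable = (status === 0 || status >= 500);
            if (retryable && attempt < maxAttempts) {
                // Wait a little longer after each failed attempt.
                schedule(tryOnce, delayMs * attempt);
            } else {
                callback(new Error('Failed to load ' + srcName +
                                   ' with status: ' + status), null);
            }
        });
        if (!started) {
            callback(new Error('No request could be made for: ' + srcName), null);
        }
    }

    tryOnce();
}
```

The callback here follows an error-first convention, so the caller can distinguish a permanent failure from a successfully loaded asset without inspecting status codes itself.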

At this point it is worth mentioning a little bit about client-side storage. The techniques described for HTTP caching are about using a cache to avoid a full HTTP request. There is another option for storing data that sits between server requests and memory that could potentially achieve this if used correctly. Client-side storage usually refers to a number of different APIs that allow web sites to store data on a local machine; these are Web Storage (sometimes referred to as Local Storage), IndexedDB, Web SQL Database, and FileSystem. They ultimately promise one thing: persistent storage between accesses to a web site. Before you get excited and start preparing to save all your game data here, it is important to understand what each API provides, the limitations, the pros and cons, and the availability. Two very good articles that explain all of this very well are at www.html5rocks.com/en/tutorials/offline/whats-offline/ and www.html5rocks.com/en/tutorials/offline/storage/.

What is important is how these APIs are potentially useful for games. Web Storage allows the setting of key/value pairs on a wide range of devices, but has limited storage capacity and requires data to be stored as strings. At the other end of the scale, file access via the Filesystem API allows applications to request persistent storage much larger than the limitations of Web Storage with the ability to save and load binary content, but is less widely supported across browsers. If you have asset data that makes sense to be cached by one of these APIs, then it may potentially save you loading time.

Ideally a game would be able to use client-side storage to save all static asset data so that it can be accessed quickly without server requests, even while offline. However, support is not consistent across browsers, and at the time of writing it is difficult to write a generic storage library that utilizes whichever API is available. For example, if you wanted to store binary data for quick access, then it would be possible via “Blobs” for IndexedDB, Web SQL Database, and FileSystem API, but would require data to be base64-encoded as plain text for Web Storage. The amount of storage available and the cost of processing this data would differ depending on which API was used; for example, Web Storage is synchronous and will block during loading, unlike the others, which are asynchronous. If you are happy to acknowledge that only users of browsers that support a given API would have the performance improvements, then client-side storage may still be useful for your game.

One thing client-side storage is potentially useful for across all available APIs is the storing and loading of small bits of non-critical data, such as player preferences or storing temporary data such as save game information if the game gets temporarily disconnected from the cloud. If storing this data locally means that HTTP requests are made less frequently or not at all, then this can certainly speed up loading and saving for your game. In the long term, client-side storage is essential in being able to provide offline solutions to HTML5 games. If your game only partially depends on being able to connect to online services, then players should be able to play it without having a persistent Internet connection. Client-side storage solutions will be able to provide locally cached game assets and temporary storage mechanisms for player data that can be synchronized with the cloud when the player is able to get back online.
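For small, non-critical data such as player preferences, a thin wrapper with a fallback can hide whether Web Storage is available. The sketch below assumes a storage object exposing the standard getItem and setItem methods (such as window.localStorage); the name createPreferenceStore and its get/set interface are hypothetical, and values are assumed to be JSON-serializable.

```javascript
// Hypothetical wrapper: the name and interface are assumptions for illustration.
// Pass a Web Storage object (for example window.localStorage), or null.
function createPreferenceStore(storage) {
    // In-memory copy used when storage is missing, full, or disabled.
    var memory = {};

    return {
        set: function (key, value) {
            // Web Storage only stores strings, so serialize to JSON first.
            var text = JSON.stringify(value);
            memory[key] = text;
            if (storage) {
                try {
                    storage.setItem(key, text);
                } catch (e) {
                    // Quota exceeded or storage disabled: keep the in-memory copy.
                }
            }
        },
        get: function (key, defaultValue) {
            var text = null;
            if (storage) {
                try {
                    text = storage.getItem(key);
                } catch (e) {
                    text = null;
                }
            }
            if (text === null || text === undefined) {
                text = memory.hasOwnProperty(key) ? memory[key] : null;
            }
            if (text === null) {
                return defaultValue;
            }
            try {
                return JSON.parse(text);
            } catch (e) {
                return defaultValue;
            }
        }
    };
}
```

Because Web Storage is synchronous, a wrapper like this is best kept to small values that are read rarely, such as settings loaded once at startup.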

Having loaded an asset from an HTTP request, cache, or client-side storage, the game then has the opportunity to process the asset appropriately. If the asset is in JSON form, then at this point you might typically parse the data and convert it to the object format. Once parsed, the JSON is no longer needed and can be de-referenced for the garbage collector to clean up. This technique helps the application minimize its memory footprint by avoiding duplicating the data representation of an object in memory. If, however, the game frequently requests common assets in this way, the cost of reprocessing assets, even from local cache, can accumulate. By holding a reference to commonly requested assets, the game can avoid making a request entirely at the cost of caching the data in memory. The trade-off between loading speed and memory usage should be measured per asset to find out which common assets would benefit from this approach.

An example of such an asset might be the contents of a menu screen. During typical gameplay, the game may choose to unload the processed data to save memory for game data, but to have a responsive menu, it may choose to process the compressed representation because it is faster than requesting the asset again. Another case where this is convenient is when two different assets request a shared dependency independently of each other, such as a texture used by two different models. If assets are referred to by a key (in this example, the path to an asset, such as “textures/wall.png”), then the game could use a generic asset cache in memory that has the ability to release assets if they are not being used. Different heuristics can be used to decide if an asset should be released from such a cache, such as size. In the case of texture assets, where texture memory is limited, that cache can be used as a buffer to limit the storage of assets that are used less frequently. Releasing these assets will involve freeing them from the texture memory on the graphics card. Listing 2-3 shows an example of such a memory cache.

Listing 2-3. A Memory Cache with a Limited Asset Size That Prioritizes Cached Assets That Have Been Requested Most Recently

/**

* Assumed global values:

*

* Observer - A class to notify subscribers when a callback is made.

* See https://github.com/turbulenz/turbulenz_engine for an example.

* TurbulenzEngine - Required for the setTimeout function.

* Used to make callbacks asynchronously.

* requestTexture - A function responsible for requesting the texture asset.

* drawTexture - A function that draws a given texture.

*/

/**

* AssetCache - A class to manage a cache of a fixed size.

*

* When the number of unique assets exceeds the cache size an existing asset is removed.

* The cache prioritizes cached assets that have been requested most recently.

*/

var AssetCache = (function () {

function AssetCache() {}

AssetCache.prototype.exists = function (key) {

return this.cache.hasOwnProperty(key);

};

AssetCache.prototype.isLoading = function (key) {

// See if the asset has a cache entry and if it is loading

var cachedAsset = this.cache[key];

if (cachedAsset) {

return cachedAsset.isLoading;

}

return false;

};

AssetCache.prototype.get = function (key) {

// Look for the asset in the cache

var cachedAsset = this.cache[key];

if (cachedAsset) {

// Set the current hitCounter for the asset

// This indicates it is the last requested asset

cachedAsset.cacheHit = this.hitCounter;

this.hitCounter += 1;

// Return the asset. This is null if the asset is still loading

return cachedAsset.asset;

}

return null;

};

AssetCache.prototype.request = function (key, params, callback) {

// Look for the asset in the cache

var cachedAsset = this.cache[key];

if (cachedAsset) {

// Set the current hitCounter for the asset

// This indicates it is the last requested asset

cachedAsset.cacheHit = this.hitCounter;

this.hitCounter += 1;

if (!callback) {

return;

}

if (cachedAsset.isLoading) {

// Subscribe the callback to be called when loading is complete

cachedAsset.observer.subscribe(callback);

} else {

// Call the callback asynchronously, like a request response

TurbulenzEngine.setTimeout(function requestCallbackFn() {

callback(key, cachedAsset.asset, params);

}, 0);

}

return;

}

var cacheArray = this.cacheArray;

var cacheArrayLength = cacheArray.length;

if (cacheArrayLength >= this.maxCacheSize) {

// If the cache exceeds the maximum cache size, remove an asset

var cache = this.cache;

var oldestCacheHit = this.hitCounter;

var oldestKey = null;

var oldestIndex;

var i;

// Find the oldest cache entry

for (i = 0; i < cacheArrayLength; i += 1) {

if (cacheArray[i].cacheHit < oldestCacheHit) {

oldestCacheHit = cacheArray[i].cacheHit;

oldestIndex = i;

}

}

// Reuse an existing cachedAsset object to avoid object re-creation

cachedAsset = cacheArray[oldestIndex];

oldestKey = cachedAsset.key;

// Call the onDestroy function if the cachedAsset is loaded

if (this.onDestroy && !cachedAsset.isLoading) {

this.onDestroy(oldestKey, cachedAsset.asset);

}

delete cache[oldestKey];

// Reset the cachedAsset for the new entry

cachedAsset.cacheHit = this.hitCounter;

cachedAsset.asset = null;

cachedAsset.isLoading = true;

cachedAsset.key = key;

cachedAsset.observer = Observer.create();

this.cache[key] = cachedAsset;

} else {

// Create a new entry (up to the maxCacheSize)

cachedAsset = this.cache[key] = cacheArray[cacheArrayLength] = {

cacheHit: this.hitCounter,

asset: null,

isLoading: true,

key: key,

observer: Observer.create()

};

}

this.hitCounter += 1;

var that = this;

var observer = cachedAsset.observer;

if (callback) {

// Subscribe the callback to be called when the asset is loaded

observer.subscribe(callback);

}

this.onLoad(key, params, function onLoadedAssetFn(asset) {

if (cachedAsset.key === key) {

// Check the cachedAsset hasn't been re-allocated during loading

cachedAsset.cacheHit = that.hitCounter;

cachedAsset.asset = asset;

cachedAsset.isLoading = false;

that.hitCounter += 1;

// Notify all callbacks that the asset has been loaded

cachedAsset.observer.notify(key, asset, params);

} else {

if (that.onDestroy) {

// Destroy assets that have been removed from the cache during loading

that.onDestroy(key, asset);

}

// Notify the original observer that asset was not saved in the cache

observer.notify(key, null, params);

}

});

};

AssetCache.create = // Constructor function

function (cacheParams) {

if (!cacheParams.onLoad) {

return null;

}

var assetCache = new AssetCache();

assetCache.maxCacheSize = cacheParams.size || 64;

assetCache.onLoad = cacheParams.onLoad;

assetCache.onDestroy = cacheParams.onDestroy;

assetCache.hitCounter = 0;

assetCache.cache = {};

assetCache.cacheArray = [];

return assetCache;

};

AssetCache.version = 2;

return AssetCache;

})();

/**

* Usage:

*

* A textureCache is created to store up to 100 textures in the cache.

* The onLoad and onDestroy functions give the game control

* of how the assets are created and destroyed.

*

* In the render loop the texture is fetched from the cache.

* It will be rendered if it exists, otherwise will be requested.

*

* The render loop is unaware of how the texture is obtained, only that

* it will be requested as soon as possible.

*/

var textureCache = AssetCache.create({

size: 100,

onLoad: function loadTextureFn(key, params, loadedCallback) {

requestTexture(key, loadedCallback);

},

onDestroy: function destroyTextureFn(key, texture) {

if (texture) {

texture.destroy();

}

}

});

function renderLoopFn() {

var textureURL = "textures/wall.png";

var texture = textureCache.get(textureURL);

if (texture) {

drawTexture(texture);

}

else if (!textureCache.isLoading(textureURL)) {

textureCache.request(textureURL);

}

}

In this example, an asset cache is created with a limited size of 100. Imagine that you only have the memory allowance to store 100 textures uncompressed in graphics memory at a given time. The game may require more than this number over its lifetime, but not at a single point in time. The aim is to provide the game with the set of textures it requires most often, which are available as it needs them. In addition, you want to avoid re-requesting a texture where possible. By allowing an asset cache to manage the texture storage, the game is able to access the texture quickly while it is in memory and make a request if it is not.

In this example, the textureCache.get function will be called in the render loop every time a texture is required to draw on screen. If the function returns “null”, then the texture is not yet in the cache (or not available). If the texture is not already loading, then the game will request it. The request function will in turn call the onLoad function that the game provides for the AssetCache. This gives the game control over how it loads the asset that the cache will store. In this case, it simply calls a requestTexture function, which will request and allocate memory for the texture. It is assumed that this function will locate the texture using the quickest method, whether that be from an HTTP request, the browser cache, or client-side storage. The onLoad function also provides a callback to the requestTexture function to return the loaded asset. In the case where a request to the textureCache is made but exceeds the 100-texture limit, the cache will find the least recently requested texture and call the onDestroy function, destroying the texture and releasing the memory. If the game attempts to get a texture that has since been removed from the cache, a request will be made and the process will start again.

This allows the game to access many different textures without having to worry about filling up texture memory with unused textures. Other heuristics such as texture size could be used in conjunction with this approach to ensure the best use of texture memory. The “Leaderboards” sample, which can potentially require hundreds or thousands of textures for avatar images, is a more complete example. It is included as part of the open source Turbulenz Engine (see https://github.com/turbulenz/turbulenz_engine).
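One way a size heuristic might be combined with the recency ordering of Listing 2-3 is to evict the least recently requested entries until a byte budget is met, rather than counting entries. The sketch below is an assumption-laden illustration, not part of the AssetCache class: the function name selectAssetsToEvict and the sizeInBytes field are hypothetical, and entries are assumed to carry the same cacheHit counter used in the listing.

```javascript
// Hypothetical helper: entries are assumed to look like
// { key: string, cacheHit: number, sizeInBytes: number }.
// Returns the keys to evict so that the total size fits within the budget.
function selectAssetsToEvict(entries, budgetInBytes) {
    var total = 0;
    var i;
    for (i = 0; i < entries.length; i += 1) {
        total += entries[i].sizeInBytes;
    }

    // Consider the least recently requested entries first.
    var byAge = entries.slice().sort(function (a, b) {
        return a.cacheHit - b.cacheHit;
    });

    var evicted = [];
    for (i = 0; i < byAge.length && total > budgetInBytes; i += 1) {
        evicted.push(byAge[i].key);
        total -= byAge[i].sizeInBytes;
    }
    return evicted;
}
```

A cache using this approach would call its onDestroy function for each returned key before accepting a new texture.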

When building complex HTML5 games with an ever-increasing demand for content, inevitably the amount of asset data required will increase. The previous topic discussed methods to quickly access this data, but what about the cost of the data itself? How it is stored in memory and the processing costs play a part in how quickly the game will be able to load. Memory limitations restrict you from storing all data uncompressed and ready for use, and processing costs have a direct impact on the time to prepare the data.

The processing of data can be native functionality provided by the browser, a feature of the hardware such as the GPU, or code written in JavaScript and executed by the virtual machine itself. Choosing the appropriate format for the data on a given browser/platform helps you make use of the processing and storage functionality available, avoiding long load times and reducing storage cost.

Anyone who has written web content will be familiar with browser support for file formats such as JPEG, PNG, and GIF. In addition, some browsers support additional file formats such as WebP, which provides smaller file sizes for equivalent quality. The browser is usually responsible for loading these types of images, some of which can be used by the Canvas (2D) and WebGL (2D/3D) APIs. By using WebGL as the rendering API for your game, you may have the option to load other image formats and pass the responsibility of processing and storing the data to the graphics card. This is possible with WebGL if certain compressed texture formats are supported by the hardware. When passing a format that the graphics card cannot store directly, such as JPEG, to WebGL via the gl.texImage2D function, the image must first be decompressed before it is uploaded to the graphics card. If a compressed texture format such as DXT is supported, then the gl.compressedTexImage2D function can be used to upload and store the texture without decompressing. Not only can you reduce the amount of memory required to store a texture on the graphics card (and hence fit more textures of equivalent quality into memory), but you can also defer the job of decompressing the texture until the shader uses it. Loading textures can be quicker because they are simply being passed as binary data to the graphics card.
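To put rough numbers on the memory saving, the sketch below compares the storage needed for a single mip level of an uncompressed 8-bit RGBA texture with the DXT equivalents. The DXT formats encode each 4x4 block of pixels in 8 bytes (DXT1) or 16 bytes (DXT3 and DXT5). The function names are hypothetical and mipmaps are ignored for simplicity.

```javascript
// Bytes for one mip level of an uncompressed RGBA texture (8 bits per channel).
function rgba8SizeInBytes(width, height) {
    return width * height * 4;
}

// Bytes for one mip level of a DXT-compressed texture.
// DXT encodes 4x4 pixel blocks: 8 bytes per block for DXT1,
// 16 bytes per block for DXT3 and DXT5.
function dxtSizeInBytes(width, height, format) {
    var bytesPerBlock = (format === 'DXT1') ? 8 : 16;
    var blocksWide = Math.max(1, Math.ceil(width / 4));
    var blocksHigh = Math.max(1, Math.ceil(height / 4));
    return blocksWide * blocksHigh * bytesPerBlock;
}
```

For a 1024x1024 texture this gives 4,194,304 bytes uncompressed, 524,288 bytes as DXT1, and 1,048,576 bytes as DXT5, so the same texture memory can hold four to eight times as many textures.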

In the WebGL 1.0 specification, support for compressed texture formats such as DXT1, DXT3, and DXT5, which use the S3 compression algorithm, is provided by an extension that you must check for. Listing 2-4 is a simplified example that checks whether the extension is supported and then checks for the format you require. If the format is available, you will be able to create a compressed texture from your image data. The assumed variables are listed in the comment at the top.

Listing 2-4. Checking if the Compressed Textures Extension Is Supported and How to Check if a Required Format is Available

/**

* gl - The WebGL context

* textureData - The data that will be used to create the texture

* pixelFormat - The pixel format of your texture data that you want to use as a compressed texture

* textureWidth - The width of the texture (power of 2)

* textureHeight - The height of the texture (power of 2)

*/

/**

* Request the extension to determine which pixel formats if any are available

* The request for the extension only needs to be done once in the game.

*/

var internalFormat;

var ctExtension = gl.getExtension('WEBGL_compressed_texture_s3tc');

if (ctExtension) {

switch (pixelFormat) {

case 'DXT1_RGB':

internalFormat = ctExtension.COMPRESSED_RGB_S3TC_DXT1_EXT;

break;

case 'DXT1_RGBA':

internalFormat = ctExtension.COMPRESSED_RGBA_S3TC_DXT1_EXT;

break;

case 'DXT3_RGBA':

internalFormat = ctExtension.COMPRESSED_RGBA_S3TC_DXT3_EXT;

break;

case 'DXT5_RGBA':

internalFormat = ctExtension.COMPRESSED_RGBA_S3TC_DXT5_EXT;

break;

}

}

if (internalFormat === undefined) {

// If the pixel format is not supported, fall back to an option that creates an uncompressed texture.

return "Compressed pixelFormat not supported";

}

var texture = gl.createTexture();

gl.bindTexture(gl.TEXTURE_2D, texture);

gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR);

gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);

gl.compressedTexImage2D(

gl.TEXTURE_2D,

0,

internalFormat,

textureWidth,

textureHeight,

0,

textureData);

This simple example does not cover all aspects of using the extension (including checking browser-specific names for the extension and robust error handling), but it should give an indication of how the extension is used. For more information about best practices for using compressed textures, see the Graphics Device implementation that is part of the open source Turbulenz Engine (https://github.com/turbulenz/turbulenz_engine). It is also worth noting that more compression formats may be supported in the future, so check up-to-date documentation.

There are a few key considerations from looking at this example. The first is what to do when a given pixel format is not supported. As mentioned throughout this chapter, the best way to load an asset is to not load it at all, so checking the available formats should happen before the data is even loaded. This allows the game to select the best option for the given platform. In the event that the WebGL extension or any of the required formats are not supported, the game will have to provide a fallback option. This could be by choosing to load one of the browser-supported file formats and accepting the cost of decompressing or, alternatively, if the file format is not supported by the browser, reading the file and decompressing the data in JavaScript. This may not be as bad as it sounds if the task is executed by Web Workers running in the background while other data loads. Remember that the result will be an uncompressed pixel format, which will take up more memory. If you have control of the compression/decompression, you may be able to choose to use a lower bit depth per pixel (e.g. 16-bit instead of 32-bit), which may affect quality but reduce storage. The advice is to experiment with different combinations for your game and instrument the loading time in different browsers on different platforms. Only then will you be able to get a true idea of what best suits your content as a fallback option.
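The idea of checking the available formats before any texture data is requested can be sketched as follows. This is a minimal sketch rather than the approach of any particular engine: chooseTextureUrl and the urls object (with dds and png properties) are hypothetical names, and it assumes the game hosts both a DXT-compressed DDS file and a browser-decodable fallback for each texture.

```javascript
/**
 * Hypothetical helper: decide which encoding of a texture to request
 * before any texture data has been downloaded.
 * gl   - The WebGL context (or null if WebGL is unavailable)
 * urls - Assumed shape: { dds: '...', png: '...' }
 */
function chooseTextureUrl(gl, urls) {
    // The extension query is cheap; a real game would do it once and cache the result
    var ext = gl ? gl.getExtension('WEBGL_compressed_texture_s3tc') : null;
    if (ext && urls.dds) {
        return { url: urls.dds, compressed: true };
    }
    // Fall back to a format the browser can decompress itself
    return { url: urls.png, compressed: false };
}
```

Only the chosen URL is then requested, so a client without the extension never downloads the DDS file.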

Another consideration is how texture data is loaded onto the client when the browser doesn’t support the file format natively, and the cost of doing so. DDS is typically used as a container format for DXT compressed textures and needs to be loaded and parsed to extract the texture data. The files are usually requested as binary data via XHR with the arraybuffer response type, and the Uint8Array constructor can then be used to create a byte array that can be manipulated. Once the loader has parsed the container format, the pixel data, which exists as a byte array, can be passed directly to WebGL to use as texture data. The Turbulenz Engine includes a JavaScript implementation of such a DDS loader to provide this functionality. In most cases, the binary data is just being passed to WebGL so there is little processing cost in JavaScript, which makes this a quick way to load texture data.
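To give a feel for how little work the container parsing involves, here is a minimal sketch of reading the fixed-size DDS header from an arraybuffer response. It is not the Turbulenz loader; parseDDSHeader is a hypothetical name, and the sketch assumes a plain DDS file (it ignores the extended header used when the four-character code is DX10, cube maps, and all header flags).

```javascript
var DDS_MAGIC = 0x20534444;  // The characters 'DDS ' read as a little-endian uint32
var DDS_HEADER_BYTES = 128;  // 4-byte magic number + 124-byte header

function parseDDSHeader(arrayBuffer) {
    if (arrayBuffer.byteLength < DDS_HEADER_BYTES) {
        return null;
    }
    var view = new DataView(arrayBuffer);
    if (view.getUint32(0, true) !== DDS_MAGIC) {
        return null;
    }
    // The four-character code (e.g. 'DXT1') lives in the pixel format block
    var fourCC = String.fromCharCode(
        view.getUint8(84), view.getUint8(85),
        view.getUint8(86), view.getUint8(87));
    return {
        height: view.getUint32(12, true),
        width: view.getUint32(16, true),
        mipMapCount: Math.max(1, view.getUint32(28, true)),
        fourCC: fourCC,
        // A view onto the same buffer, so no pixel data is copied
        data: new Uint8Array(arrayBuffer, DDS_HEADER_BYTES)
    };
}
```

The data view returned here is the kind of byte array that can be handed to gl.compressedTexImage2D once the four-character code has been mapped to an internal format.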

For some textures in your game, you may want to include mipmaps. This common practice adds processing costs for image formats that don’t include mipmap data, such as PNG and JPEG. If the game requires mipmapped textures created from these formats, they will be generated on the fly, adding to the total load time. The advantage of loading a file format that supports mipmaps such as DDS is that they can be generated offline with more control over the level of quality. This does add to the total size of the file (around 33%, as each mipmap is a quarter the size of the previous level); however, these files tend to compress well with gzip, which can be enabled as a server compression option (discussed later).
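The "around 33%" figure can be checked with a little arithmetic. The sketch below assumes DXT block compression, where every 4x4 block of pixels is stored in 8 bytes (DXT1) or 16 bytes (DXT3/DXT5); the function names are hypothetical.

```javascript
// Size in bytes of one mip level of a DXT-compressed texture
function dxtLevelBytes(width, height, bytesPerBlock) {
    var blocksWide = Math.max(1, Math.ceil(width / 4));
    var blocksHigh = Math.max(1, Math.ceil(height / 4));
    return blocksWide * blocksHigh * bytesPerBlock;
}

// Size in bytes of the full mip chain, from the base level down to 1x1
function dxtMipChainBytes(width, height, bytesPerBlock) {
    var total = 0;
    var w = width;
    var h = height;
    for (;;) {
        total += dxtLevelBytes(w, h, bytesPerBlock);
        if (w === 1 && h === 1) {
            break;
        }
        w = Math.max(1, w >> 1);
        h = Math.max(1, h >> 1);
    }
    return total;
}

var baseBytes = dxtLevelBytes(256, 256, 8);      // 32768 bytes for DXT1
var chainBytes = dxtMipChainBytes(256, 256, 8);  // 43704 bytes with mipmaps
```

For a 256x256 DXT1 texture the chain is 43704 / 32768, or roughly 1.33 times the size of the base level alone.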

The decision about the exact format to use for texture data is often subjective when it comes to quality. The more compression, the smaller the file size and the faster the load times, but the more visual artifacts can be seen. Think carefully about options for pixel formats, the use of an alpha channel, the pixel depth, and the compression depending on the image content. The choice of which S3 compression algorithm to use (DXT1, DXT3, DXT5) is like choosing between PNGs for images with alpha and JPEGs for images where artifacting is less obvious: it depends on what they support best. Quick loading is the goal, while still attempting to maintain an acceptable level of quality.

Similar to texture formats, balancing the data size and quality of audio files will affect the overall load time. Games are among the power-users of audio on the Web when it comes to effects, music, experience, and interactivity. The many variations of sound effects and music tracks can easily add up and eclipse the total size of the other types of data combined (even mesh data). This of course depends on the game, and you should keep track of how much audio data you are transferring during development. It is therefore important to consider different options for loading sounds that best fit your game.

If you are using sound within your game, you should be familiar with HTML5 Audio and the Web Audio API as the options available for browser audio support. Support for both the APIs and audio codecs has been hampered by the complex issue of patents surrounding some audio formats, which has resulted in an uneven landscape of support across different browser vendors. The upshot is that audio support in games is not just a matter of selecting the optimal format for your sound data, but also of choosing based on availability and licensing. Familiar formats such as MP3 and AAC are supported by the latest versions of the majority of browsers, but cannot be guaranteed due to some browser manufacturers intentionally avoiding licensing issues. Formats such as OGG and WAV are also supported, but again not by all browser manufacturers. At the time of writing, the reality is that documenting the current support of audio formats would quickly be out of date and wouldn’t help address the practicalities of efficiently loading audio for games. The best advice is to look at the following references to help understand the current support for desktop and mobile browsers and to apply the suggestions in this section to your choice of formats: (http://en.wikipedia.org/wiki/HTML5_Audio and https://developer.mozilla.org/en-US/docs/HTML/Supported_media_formats).

Transferring audio data is similar to transferring other types of binary data. It can either be loaded directly by the browser by specifying it in an <audio> tag or requested via XHR. The latter gives the game control over when the sound file is loaded and what to do with it when it is received. Because the Web Audio API is not available in every browser, most games should have a fallback to HTML5 Audio. As with texture formats, testing for support and then deciding which format to use is preferable to loading all data upfront. This does mean that you will probably be required to host multiple encodings of the same audio data on your server. This is the price of compatibility, unlike textures, where the choice is based mainly on performance. See Listing 2-5.

Listing 2-5. A Simplified Example of Loading an Audio File

/**

* soundName - The name of the sound to load

* getPreferredFormat - A function to determine the preferred format to use for a sound with a given name.

* The algorithm for this decision is up to the game.

* audioContext - The audio context instance for the Web Audio API

* bufferCreated - The callback function for when the buffer has been successfully created

* bufferFailed - The callback function for when the audio decode has failed

*/

// Check once at the start of the game which audio types are supported

var supported = {

ogg: false,

mp3: false,

wav: false,

mp4: false

};

var audio = new Audio();

if (audio.canPlayType('audio/ogg')) {

supported.ogg = true;

}

if (audio.canPlayType('audio/mpeg')) {

supported.mp3 = true;

}

if (audio.canPlayType('audio/wav')) {

supported.wav = true;

}

if (audio.canPlayType('audio/mp4')) {

supported.mp4 = true;

}

// Audio element is thrown away after the support query

audio = null;

var soundPath = getPreferredFormat(soundName, supported);

var xhr = new window.XMLHttpRequest();

xhr.onreadystatechange = function () {

if (xhr.readyState === 4) {

var xhrStatus = xhr.status;

var response = xhr.response;

if (xhrStatus === 200) {

if (audioContext.decodeAudioData) {

audioContext.decodeAudioData(response, bufferCreated, bufferFailed);

} else {

var buffer = audioContext.createBuffer(response, false);

bufferCreated(buffer);

}

}

xhr.onreadystatechange = null;

xhr = null;

}

};

xhr.open('GET', soundPath, true);

xhr.responseType = 'arraybuffer';

xhr.send(null);

In Listing 2-5, the getPreferredFormat function is specified by the game to best decide which format to use. The choice should be based on the knowledge the game has about the required sound and how it is used. For example, if the file is a short sound effect (a few seconds in length) that needs to be played quickly, such as a bullet fire sound, then selecting an uncompressed WAV file might be the best option, because unlike most MP3/OGG/AAC files it doesn’t need to be decoded before use. It depends on the size and length of the sound. This example is unlikely to hold true for music files, which are usually minutes, not seconds, in length and hence are much larger when uncompressed.
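One possible shape for getPreferredFormat is sketched below. Everything beyond the function name and its two parameters is an assumption made for illustration: the soundInfo table, the three-second threshold, and the sounds/ path layout are hypothetical, and a real game would generate this metadata as part of its asset build.

```javascript
// Hypothetical per-sound metadata: length and the encodings hosted on the server
var soundInfo = {
    'bullet_fire': { seconds: 0.4, formats: ['wav', 'ogg', 'mp3'] },
    'level_music': { seconds: 180, formats: ['ogg', 'mp3', 'mp4'] }
};

var SHORT_SOUND_SECONDS = 3; // Assumed cut-off between an effect and music

function getPreferredFormat(soundName, supported) {
    var info = soundInfo[soundName];
    if (!info) {
        return null;
    }
    // Short effects prefer uncompressed WAV; long sounds prefer compressed formats
    var order = info.seconds <= SHORT_SOUND_SECONDS ?
        ['wav', 'ogg', 'mp3', 'mp4'] :
        ['ogg', 'mp3', 'mp4', 'wav'];
    for (var i = 0; i < order.length; i += 1) {
        var format = order[i];
        if (supported[format] && info.formats.indexOf(format) !== -1) {
            return 'sounds/' + soundName + '.' + format;
        }
    }
    return null; // No hosted encoding is playable in this browser
}
```

The order of preference within each list is a judgment call and is worth tuning against measured decode times on your target platforms.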

Since the Web Audio API requires compressed audio files to be decompressed before use, storage of the uncompressed audio can quickly become an issue, especially on mobile where resources are more limited. Depending on the hardware, this decoding can be expensive for large files, so loading all large data files, such as music, upfront and then decoding is considered a bad idea. One option is to only load the music immediately required and wait until later to load other music.

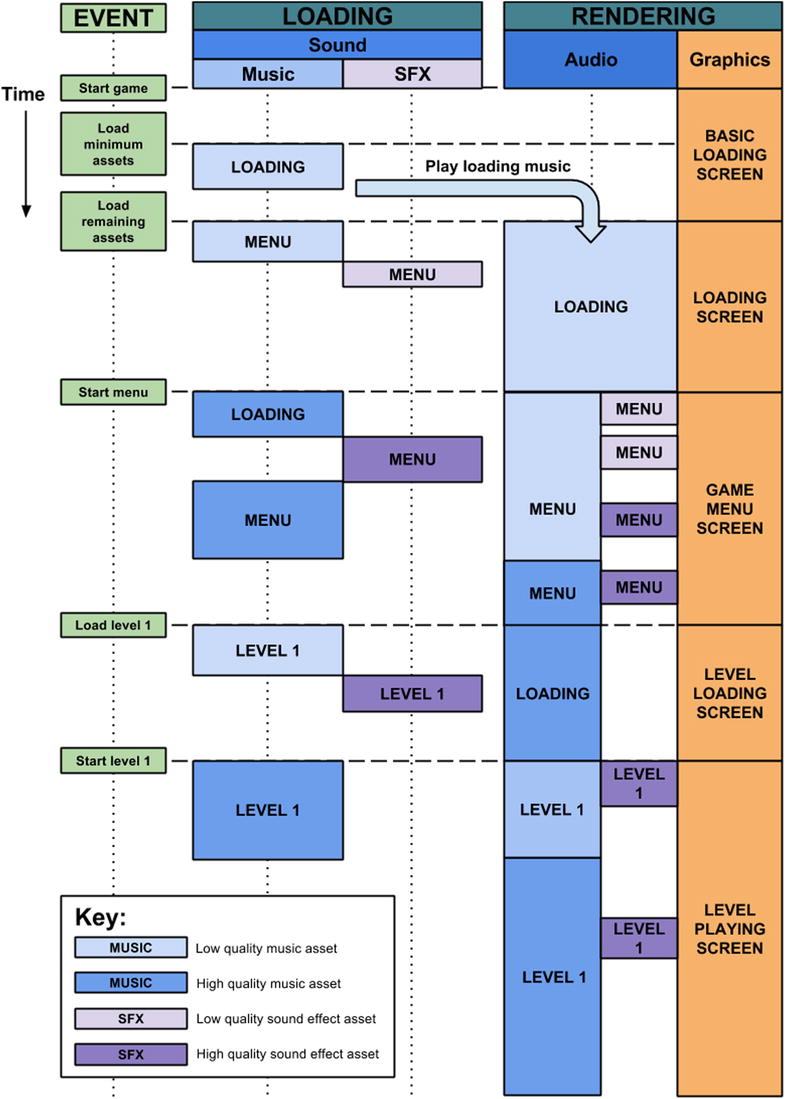

If the sound needs to be played immediately, then an alternative approach is required for larger audio files. It is possible to combine streaming HTML5 Audio sounds with the Web Audio API, but at the time of writing support is limited and unstable, although it should improve as media streaming matures. In the absence of such features, one possible approach to speed up loading times is to load a smaller, lower-quality version of an audio file and start playing it, then replace it with a higher-quality version once that has loaded. The ability to seek and cross-fade two sources in the Web Audio API should make this a seamless transition. This type of functionality could be written at an audio library level. Figure 2-4 shows an example of loading and playing audio data with respect to user-driven events within the game.

Figure 2-4. A timeline showing when audio assets are loaded and when they are played. By loading lower-quality audio assets first, sound effects and music can be played sooner and long load times can be avoided. Loading higher-quality sound effects and music can be deferred, leaving the game to decide when is the most appropriate time to process them

Having dealt with the issues of quality, format support, and encoding, the main choice that impacts loading is how late to defer it, assuming that a connection to the asset data server will still be available. Games providing a combination of MP3, OGG, and WAV audio files should cover the question of browser compatibility. A time will come when the available choices outweigh the limitations, and at that point selecting an optimal audio format will be more important.

Other Formats

On the web, JSON is commonly used as a data-interchange format for sites. JSON data has the benefit that, once parsed, it can easily be manipulated as a JavaScript object. This makes it a simple way to transfer text data such as “string tables” for localization, where looking up the data is convenient because it is already in the appropriate format for use. It lends itself to defining extensible data formats where manipulating parts of the data is easy to do by setting properties on objects.

The downside is that although JSON.parse is a native function in modern browsers, the cost of processing large assets with complex data structures is high, so much so that it can take anything from a few milliseconds to a few seconds to process, depending on the data. This has an impact on loading time, but also on the performance of the game. If the processing takes longer than 16.6ms in a 60fps game, then it can affect the frame rate, which makes background loading problematic. It is possible to use Web Workers to ensure that the processing is done in a separate thread, which can help reduce the impact of parsing.
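A minimal sketch of moving JSON.parse into a Web Worker follows. The names parseMessage, createJsonWorker, and parseJsonInBackground are hypothetical, and the worker is built from a Blob URL purely to keep the example in one file; a separate worker script would work equally well. Note that the parsed object still has to be copied back to the main thread, which is not free, so it is worth measuring before adopting this for every asset.

```javascript
// Pure function so it can run inside the worker (and be tested without one)
function parseMessage(data) {
    var reply = { id: data.id };
    try {
        reply.value = JSON.parse(data.text);
    } catch (err) {
        reply.error = String(err);
    }
    return reply;
}

// Browser only: build a worker whose source embeds parseMessage
function createJsonWorker() {
    var source = parseMessage.toString() +
        '\nself.onmessage = function (e) {' +
        ' self.postMessage(parseMessage(e.data)); };';
    var blob = new Blob([source], { type: 'application/javascript' });
    return new Worker(URL.createObjectURL(blob));
}

// Send text to the worker and call back with (error, value) for the matching id
function parseJsonInBackground(worker, id, text, callback) {
    function onMessage(event) {
        if (event.data.id !== id) {
            return; // Reply to a different request
        }
        worker.removeEventListener('message', onMessage);
        callback(event.data.error || null, event.data.value);
    }
    worker.addEventListener('message', onMessage);
    worker.postMessage({ id: id, text: text });
}
```

In the browser, a single worker created with createJsonWorker() at start-up can be shared by every call to parseJsonInBackground, provided each request uses a unique id.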

Some data, however, lends itself to using a binary format. Accessing this is possible in JavaScript with the help of the arraybuffer XHR transfer type and typed arrays such as Uint8Array, Int32Array, Float64Array, and Float32Array. This allows you to intentionally transfer floating-point values at a given precision or define a fixed size for your data. If you use a Uint8Array view on a transferred arraybuffer, you effectively have a byte array for your file format to manipulate as you require. This allows you to write your own binary data parsers in JavaScript. The DataView interface is designed specifically for doing this and for handling the endianness of the data. For more information on how to use these interfaces for manipulating binary data, see www.html5rocks.com/en/tutorials/webgl/typed_arrays/.
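As an illustration of a hand-written binary parser, the sketch below reads an invented layout with DataView: a little-endian uint32 vertex count followed by three little-endian float32 values per vertex. The layout and the name parseVertexBlob are assumptions for the example, not an existing file format.

```javascript
function parseVertexBlob(arrayBuffer) {
    var view = new DataView(arrayBuffer);
    // The second argument (true) requests little-endian reads,
    // regardless of the endianness of the machine running the code
    var vertexCount = view.getUint32(0, true);
    var positions = new Float32Array(vertexCount * 3);
    for (var i = 0; i < positions.length; i += 1) {
        positions[i] = view.getFloat32(4 + i * 4, true);
    }
    return { vertexCount: vertexCount, positions: positions };
}
```

Reading through DataView keeps the parser correct on any platform; creating a Float32Array view directly on the buffer avoids the copy but uses the platform's native byte order and requires the data to start at a 4-byte-aligned offset.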

In addition to structuring your own binary file data to be used in JavaScript, proposals are emerging to standardize many of the common formats that are used by games. One example is the Khronos proposal for glTF, a format for transferring and compressing 3D assets that is designed to work with WebGL (www.khronos.org/news/press/khronos-collada-now-recognized-as-iso-standard). Another example is the webgl-loader project that aims to provide mesh compression for WebGL (https://code.google.com/p/webgl-loader/). Proposals like these combine text and binary data and aim to provide a strategy to deliver complex mesh data in a format optimized for web delivery. At the time of writing there is no standardized approach, but it is worth being aware of the data formats that are specifically designed to help deliver certain file content to the Web.

When running your game on your local machine, the performance of loading the asset data from a file or in most cases from a locally run web server can appear to be very quick. As soon as you start loading from a server hosted on a local network and then eventually from where your game will be hosted online, it becomes apparent that what you considered an acceptable load time is no longer acceptable in the real world. There are many factors involved when downloading assets; considering how best to host them is key to having consistent behavior for all players of your game. The decisions are not only about where they are hosted, but how they are hosted. Web sites employ many strategies for delivering content quickly to millions of users all over the globe. Since HTML5 games operate as web sites/web apps, the same strategies can be applied to make loading your assets as quick as possible. There are many other considerations for asset hosting, such as cost and storage size, that should not be ignored, but for now let’s consider performance.

The larger the file, the longer it takes to transfer over a fixed bandwidth, so anything that reduces the file size without modifying the data within the asset can be a benefit. The previous section discussed compression techniques specific to the type of data, but there is also the option of generalized compression algorithms. The majority of browsers support receiving content such as HTML, CSS, and JavaScript gzip compressed, so compressing large text-based data, such as JSON objects, in the same way is a good idea.

There are many choices of web server technology available to use for hosting, such as Apache, IIS, and nginx. Most servers support enabling gzip as a configuration option and usually allow you to specify which file types to apply it to when a request comes in with the header Accept-Encoding: gzip. If a server has it turned on, it should serve the asset gzipped with the header Content-Encoding: gzip. There is usually an associated CPU cost of encoding and decoding the gzip on server and client side, but this assumes that the cost is less than the transfer cost of a larger file. Server-side compression costs can usually be eliminated if the response is cached with the gzipped file. Also, not all gzip compression utilities are considered equal. At Turbulenz, we have found that 7-Zip provides very good gzip compression, which can be done offline before uploading the assets to the server. In this case, the server should be configured to serve up the pre-compressed version of the file when gzip is requested. It is worth trying different compression tools to find which one works best for you. For more information about enabling HTTP compression, see http://en.wikipedia.org/wiki/HTTP_compression.

Gzip compression is considered essential for uncompressed text formats, but for compressed formats such as PNG it can result in files that are larger than the original compressed file. However, some types of compressed data do benefit from additional gzip compression, such as DXT texture data. The preferred approach should be to compress the file offline and compare with and without compression. If the resulting file sizes are similar, then the added decompression overhead may make loading times slower, and it is probably not worth compressing. If the compressed file size is much smaller, it might be worth considering. If you have limited hosting space, it is worth doing a few tests before choosing which version to upload and serve.
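The offline comparison can be automated as part of an asset build. The following sketch uses the zlib module built into Node.js rather than 7-Zip, so the exact sizes will differ from the tool mentioned above; gzipSaving, worthGzipping, and the ratio threshold are hypothetical names and values to adapt to your own pipeline.

```javascript
var zlib = require('zlib');

// Compare the size of a buffer before and after gzip at maximum compression
function gzipSaving(buffer) {
    var gzipped = zlib.gzipSync(buffer, { level: 9 });
    return {
        originalBytes: buffer.length,
        gzippedBytes: gzipped.length,
        ratio: gzipped.length / buffer.length
    };
}

// Only serve the gzipped version if it is meaningfully smaller,
// e.g. maxRatio = 0.9 demands at least a 10% saving
function worthGzipping(buffer, maxRatio) {
    return gzipSaving(buffer).ratio <= maxRatio;
}
```

Running this over each asset (for example with a buffer from fs.readFileSync) gives a per-file decision that can be recorded alongside the asset when it is uploaded.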

The latency of a request (the time it takes the server to receive, process, and respond) has an impact on how quickly the game will load, regardless of whether the asset is actually transferred or not. Assuming a player is accessing the game for the first time and can’t take advantage of any of the client-side caching techniques previously described, what can be done to reduce this latency? Since the latency is related to how far the client is from the server, the logical solution is to reduce the distance from the player to the host server. As an example, assume that the latency to the server is 100ms (not including transfer time) and that your game needs to transfer 100 assets. In the worst case, that’s 10,000ms (10 seconds) just to query the host server about each asset (assuming a single server and requests made one at a time). In reality, browsers can make more than one request at a time to a given server, but there is a limit to how many requests can be made. If, however, the base latency can be reduced, then savings can be made for every asset request. If the player’s bandwidth available to transfer a given asset is the same for two different servers, then the performance benefit will be with the one with lower latency. Having host servers in multiple locations around the world helps reduce the start time for all your players. It’s easy to forget this if you only test run the game from a single location.
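The arithmetic in this example can be captured in a deliberately simplified model that ignores transfer time and treats requests as being issued in rounds. The function name is hypothetical, and the figure of six parallel requests per server is an assumption that varies between browsers.

```javascript
// Rough latency-only cost of requesting a number of assets from one server
function estimateLatencyCost(assetCount, latencyMs, parallelRequests) {
    var rounds = Math.ceil(assetCount / parallelRequests);
    return rounds * latencyMs;
}

var sequential = estimateLatencyCost(100, 100, 1); // 10000ms
var parallel = estimateLatencyCost(100, 100, 6);   // 1700ms
var nearby = estimateLatencyCost(100, 20, 6);      // 340ms
```

Even with parallel requests, cutting the base latency from 100ms to 20ms reduces the estimate by the same factor of five, which is why the location of the server matters for every asset.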

Using a Content Distribution Network

Providing geolocated assets in multiple locations around the world would require you to run multiple servers and distribute assets among those locations. Luckily, CDNs exist to provide this type of service. The infrastructure provided by companies running these networks can serve internet content up to millions of users with more hosting servers than would be feasible for an individual games company to provide. Pricing, performance, and interfaces vary depending on the provider, so it is worth investigating before making a decision.

As a model for game asset hosting, CDNs can effectively be configured to cache requests made for certain assets server-side, with a given set of request headers to edge locations around the world, which are closer to the players. The advantage is you don’t need to distribute your assets to these locations; that task is usually handled by the CDN. Another advantage to CDNs is that they can help provide geolocated DNS lookups. If you request an asset from cdn.company.com, some CDN services will use Anycast to connect you to the host that is identified as being closest to the machine that made the request. This is usually managed by the provider’s DNS service and the route with the lowest latency may not always be the geographically closest. This allows all requests for an asset to be made to a single URL, but then handled by different servers. It is worth noting that the behaviours of CDNs in terms of configuration differ between providers, so this is just one example of how a CDN can be used.

Imagine that, similar to the local server approach, you are able to set a long cache time for assets that you consider static and that are rarely modified. When you first upload the asset to your server and request it via the CDN (which is configured to respect the cache times of the asset in the same way the client would), the CDN edge location requests the file from your server. From that point onwards, any identical requests (with the same request headers) will be served from the CDN and not from your asset server. Your server should now have a manageable number of requests from the CDN edge locations, and the actual players will only be loading assets from the edge locations with the lowest latency for them. If you want to force the CDN to re-request the asset, you would need a different type of request to be made or the cache time to be shorter. If the files are hashed as described in the mapping table section, a new hash indicates a changed file, and hence there is no need to update the CDN edge locations. This assumes that the cost of storing more data is low, but the cost of invalidating the cache and asking the CDN to re-request the files is high.

To replace an existing file with a modified version, a unique id is generated and the new file is uploaded with the id in the URL. The mapping file is also modified to include a reference to the id and uploaded. Unlike the static asset, the mapping table has a shorter cache time before the CDN will re-request it from the game asset server. This allows it to quickly propagate to each of the edge locations when clients request it. Once a client receives a new mapping table, it will request the new asset from the nearest edge location, which will in turn request it from the game asset server. This will need to happen for all edge locations. Once the new asset has been requested and propagated to all edge locations, then all players can access the new asset. The time it takes for this to happen is effectively the “server-side cache” length (i.e., the time from having uploaded the new asset and mapping table to the time when all users can get the new version of the game asset). Because the old mapping table can still be accessed, both versions can be requested. This has the advantage that it can be used to stagger the roll-out of a new update, by selecting to only serve the new mapping table to a percentage of clients. In this scenario, some players will be given the new game with new assets and some the old game with old assets.

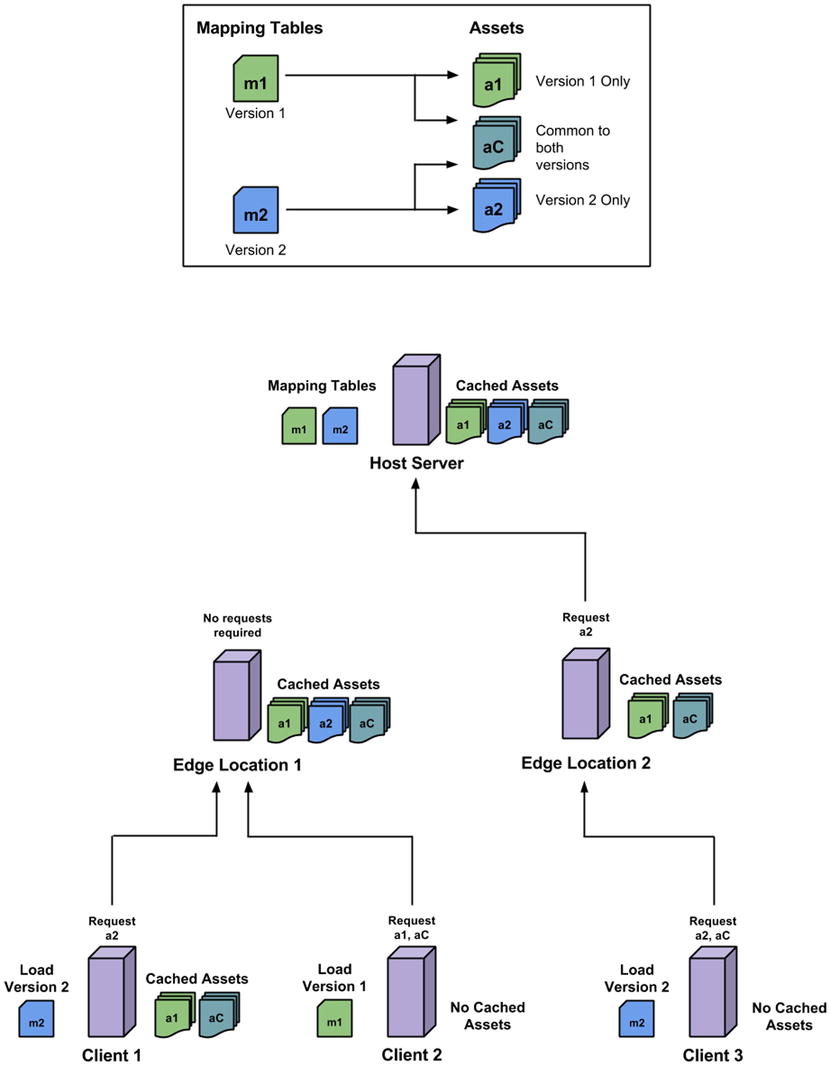

Figure 2-5 shows an example scenario in which clients are requesting different mapping tables and assets from a range of edge locations. It also shows the data that needs to be requested by the clients and the edge locations to satisfy the requirements of the game. Client 1 wants to load version 2 of the game. From the mapping table m2, it requires the group of assets known as a2 and aC. Since the client has already played version 1 of the game, it has both a1 and aC in its cache. This means that it will only need to request a2 in order to play the game. It does so by requesting the assets from Edge Location 1. That server has already downloaded the assets from the Host Server, and therefore no additional requests to the host are required. Client 2 wants to load version 1 of the game. From mapping table m1, it requires the group of assets known as a1 and aC. Since the client has not played the game it will have to request both a1 and aC. It requests these from its preferred server, Edge Location 1. Again, the server already has a1 and aC cached, so it does not need to make any requests to the Host Server. Client 3 wants to load version 2 of the game. From mapping table m2, it requires a2 and aC, which it must request because there are no cached assets. This time, the preferred server is Edge Location 2. This server does have the common assets aC, but does not have a2. The server can quickly respond with aC, but it needs to request a2 from the Host Server. This will only happen once for a single client per edge location. After that, all other clients requesting from that server will be served with the cached assets. This simplified example shows how shared content and the distribution among edge locations can reduce the number of requests when serving multiple versions of a game, resulting in less required data transfer.

Figure 2-5. A mapping table strategy combined with a CDN allows you to serve multiple versions of a game with minimal transfer cost between machines. The examples show different scenarios where each client requests assets as referenced by a given version of a mapping table. The requests made vary on the local availability of assets in cache
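The client-side half of the scenario in Figure 2-5 can be sketched in a few lines. The mapping table shape used here (an assets object from logical name to uniquely named file), the function names, and the cdn.example.com host are all hypothetical; the names m1, m2, a1, a2, and aC follow the figure.

```javascript
// Hypothetical mapping tables for two versions of the game
var m1 = { version: 1, assets: { 'hero/model': 'a1', 'common/ui': 'aC' } };
var m2 = { version: 2, assets: { 'hero/model': 'a2', 'common/ui': 'aC' } };

// Turn a logical asset name into the uniquely named URL served by the CDN
function resolveAssetUrl(mappingTable, cdnBase, logicalName) {
    var file = mappingTable.assets[logicalName];
    return file ? cdnBase + '/' + file : null;
}

// List the files a client still has to request, given what it has cached
function assetsToRequest(mappingTable, cachedFiles) {
    var needed = [];
    Object.keys(mappingTable.assets).forEach(function (logicalName) {
        var file = mappingTable.assets[logicalName];
        if (cachedFiles.indexOf(file) === -1 && needed.indexOf(file) === -1) {
            needed.push(file);
        }
    });
    return needed;
}

var client1 = assetsToRequest(m2, ['a1', 'aC']); // ['a2']
var client2 = assetsToRequest(m1, []);           // ['a1', 'aC']
```

Because a changed asset gets a new file name, the shared file aC keeps its cached copy on both the client and the edge location when version 2 is rolled out.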

Servers play an important role in getting content to your players. You don’t need to be a large game developer to experience a runaway success with your game. The last thing you will want is to have hosting and performance issues impeding the playability of your game. A good hosting strategy will allow another service to do the heavy lifting while you focus on providing exciting content.

Understanding how files are loaded by the browser and how best to process them is important for a quick-loading game, but reasoning at a higher level about whether to load them at all is the most effective way of reducing load times. As the game developer, you know when you need an asset, how frequently it is used, and what its dependencies are. For example, to render a simple model you will likely require shaders for all the materials used, one or more textures for the material, and possibly other associated meshes with their own shader and texture requirements. If you are aware of the dependencies between the assets, you can consider grouping them into a single request instead of making separate HTTP requests for each asset. This can reduce the total number of requests the browser has to handle and ensures that all asset content is available at the same time. Once again there is a trade-off with the ability to process assets in parallel, but this section assumes that the cost of retrieving the assets separately is higher.

One option is to group assets by type (e.g. sounds, textures, shaders). The advantage is that if you can identify a commonality between them, you may be able to group them in a way that reduces redundant data. The most obvious example is the use of spritesheets to group a number of 2D character animations. In a single texture there would be no duplication of the start frame of the animation, for example. Depending on the compression method, you may also see improvements from compressing assets together compared to compressing them separately. Another advantage is the ability to easily replace one set of assets with another, for example a texture pack for a model that provides a different skin for the same mesh without having to replace the mesh. This might be useful if you have customizable characters. One disadvantage of grouping by type is that you can easily end up waiting for a single asset type to be processed before the game can progress. Players may end up waiting longer for the game to load before they have a visual/audible indication that progress is being made.

Another option is to group by dependency (e.g. any assets that need to co-exist to be used). One example is grouping a model with associated assets specific to that mesh (e.g. shaders, textures, animation data, sounds). The advantage is that you could be animating the model while loading other models in the background. It is important to make sure that background loading of assets doesn’t have an impact on the performance of rendering the model, but that topic deserves its own chapter. One disadvantage of this approach is that shared assets may need to be duplicated or the dependency tree ends up encapsulating the majority of the game assets, losing the granularity of loading in small chunks. To avoid duplicating shared assets, it might make sense to group common assets together, which can then be loaded first before any other group.

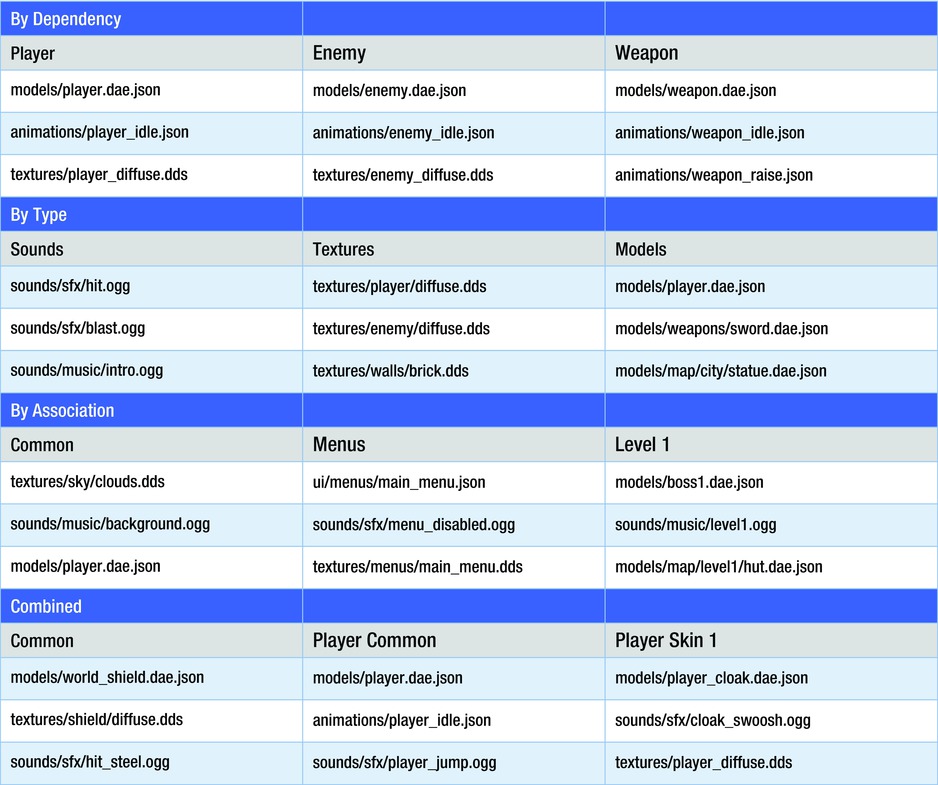

Another option is to group by association. One example would be grouping by game level. If the game only requires certain sounds, textures, and other data for a specific level, then it might make sense to only load the data when the level is being loaded. This is especially true if players have to unlock levels or buy additional content. Grouping in this way assumes that levels are mainly independent of each other and that the game will have the ability to access that data at a later point. These high level associations depend massively on the design of the game and how it is played, but by thinking about grouping at this granularity you can make more impactful decisions on load time. Figure 2-6 presents a few scenarios in which grouping may occur.

Figure 2-6. An example of grouping assets by various scenarios. Combining the options based on your own assets can help reduce the total number of requests required to load your game

If you want to group assets into a single file while preserving some structure, the tar archive format is one option. Tar archives files of different types by concatenating them into a single byte stream, which can then be compressed. This lets you fetch a group of assets, with its file structure intact, in a single HTTP request, and leaves you free to choose the compression technique used to transfer it. Since the resulting file is binary data, it can be transferred and processed as an arraybuffer. Each file in the archive is preceded by a header that, when read as binary data, describes the file, for example its name, size, and type. This gives the loading code the option to choose which files to process, if and when they are required. There is an example implementation of a JavaScript-based tar loader in the open source Turbulenz Engine (https://github.com/turbulenz/turbulenz_engine).
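To give a feel for what processing the headers involves, here is a minimal sketch of walking an uncompressed tar archive held in an arraybuffer. It is not the Turbulenz implementation; `listTarEntries` and `readString` are hypothetical names. It assumes the common 512-byte header layout (name at byte 0, octal size at byte 124, type flag at byte 156) and ignores extensions such as the ustar path prefix and very large file sizes.

```javascript
// Read a NUL-terminated ASCII field from a tar header.
function readString(bytes, offset, length) {
  let text = "";
  for (let i = offset; i < offset + length && bytes[i] !== 0; i++) {
    text += String.fromCharCode(bytes[i]);
  }
  return text;
}

// Walk the 512-byte blocks of an uncompressed tar archive and return
// one entry per file, without copying the file data.
function listTarEntries(buffer) {
  const bytes = new Uint8Array(buffer);
  const entries = [];
  let offset = 0;

  while (offset + 512 <= bytes.length) {
    const name = readString(bytes, offset, 100);
    if (name === "") break; // an empty header block marks the end of the archive

    // The size field is stored as an octal number in ASCII.
    const size = parseInt(readString(bytes, offset + 124, 12).trim(), 8) || 0;
    // "0" (or an empty flag) is a regular file, "5" is a directory.
    const typeflag = readString(bytes, offset + 156, 1) || "0";

    const dataStart = offset + 512;
    entries.push({
      name,
      size,
      typeflag,
      data: bytes.subarray(dataStart, dataStart + size), // a view, not a copy
    });

    // File data is padded up to the next 512-byte boundary.
    offset = dataStart + Math.ceil(size / 512) * 512;
  }
  return entries;
}
```

The buffer could come from an XMLHttpRequest with `responseType` set to `"arraybuffer"`, or from `fetch` followed by `response.arrayBuffer()`. The loading code can then inspect each entry's `name` and decide whether to process its `data` immediately or defer it.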

Conclusion

You should now be familiar with the different areas that affect the loading times of HTML5 games. We hope that applying the techniques mentioned for caching, data compression, hosting, and data arrangement to your game content helps you achieve performance improvements in your HTML5 game.

The Turbulenz team has worked hard in the area of optimization to make sure that not only our own games, but games of other developers, including those using the Turbulenz Engine, take advantage of these techniques. Surely there will be many more cost-saving measures in the future as the technology progresses, which we hope to exchange with other developers to ensure that HTML5 and the Web continue to be a powerful and exciting medium for distributing games.

Acknowledgements

I would personally like to thank Michael Braithwaite, David Galeano, Joe Kilner, Blake Maltby, and Duncan Tebbs for their insights into the inner workings of the Turbulenz Engine, their in-depth knowledge of browser technologies, and their feedback. I would also like to thank the rest of the Turbulenz engineering team and Wonderstruck team for constantly pushing the boundaries of what is possible with HTML5 and for making great browser games, which are the driving force for much of the content of this chapter.