First up are our static pages. While we handled these the idiomatic way earlier, we can also rewrite our requests, handle specific 404 error pages better, and so on by using the http.ServeFile function, as shown in the following code:

```go
// Inside customRouter's ServeHTTP(rw http.ResponseWriter, r *http.Request)
path := r.URL.Path

// Anchored, so only paths that begin with each prefix match.
// (These could also be compiled once, outside the handler.)
staticPatternString := "^/static/(.*)"
templatePatternString := "^/template/(.*)"
dynamicPatternString := "^/dynamic/(.*)"

staticPattern := regexp.MustCompile(staticPatternString)
templatePattern := regexp.MustCompile(templatePatternString)
dynamicDBPattern := regexp.MustCompile(dynamicPatternString)

if staticPattern.MatchString(path) {
	// /static/test -> staticPath + "test.html"
	page := staticPath + staticPattern.ReplaceAllString(path, "${1}") + ".html"
	http.ServeFile(rw, r, page)
}
```

Here, we simply map every request that starts with /static/ to a file of the same name with the .html extension appended. In our case, we've named our test file (the 80 KB example file) test.html, so all requests for it go to /static/test.

We've prepended this with staticPath, a constant defined earlier in the code. In our case, it's /var/www/, but you'll want to modify it as necessary.

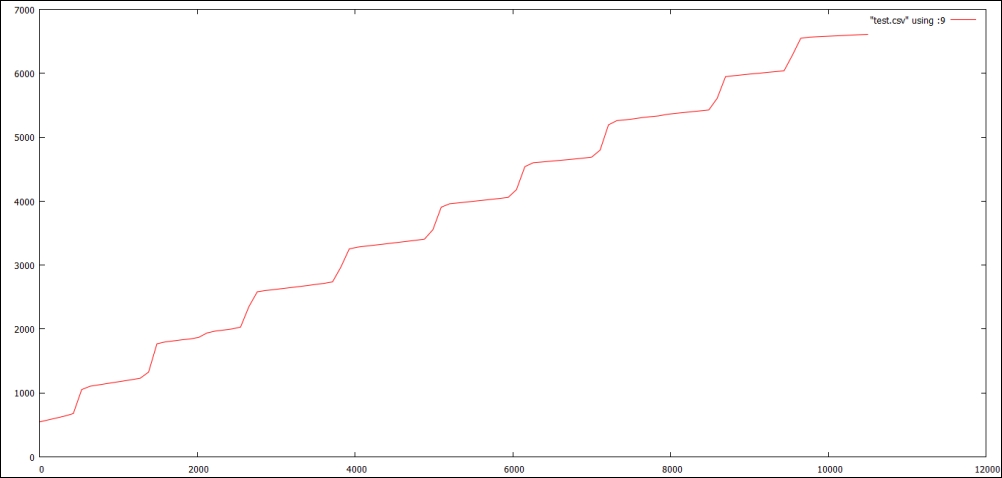

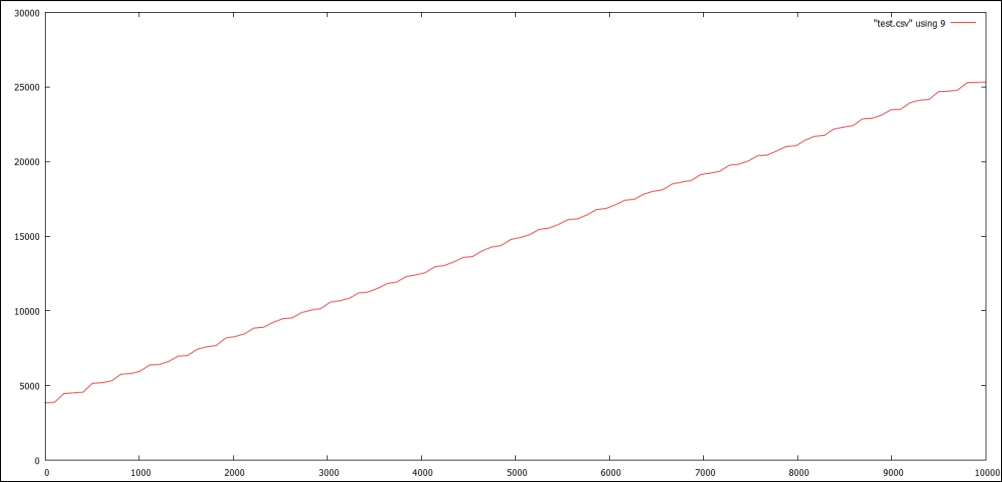

So, let's see what kind of overhead is imposed by introducing some regular expressions, as shown in the following graph:

How about that? Not only is there no overhead imposed, it appears that the FileServer functionality itself is heavier and slower than a direct http.ServeFile() call. Why is that? Among other reasons, not explicitly naming the file to open and serve appears to impose an additional OS call, one that can cascade as requests mount up, at the expense of concurrency and performance.

Tip

Sometimes it's the little things

Other than strictly serving flat pages here, we're actually doing one other task per request using the following line of code:

```go
fmt.Println(r.URL.Path)
```

While this may ultimately have no measurable impact on your final performance, we should take care to avoid unnecessary logging or related activities that impose seemingly minimal performance costs, which can become much larger ones at scale.

In our next phase, we'll measure the impact of reading and parsing a template. To effectively match the previous tests, we'll take our HTML static file and impose some variables on it.

If you recall, our goal here is to mimic real-world scenarios as closely as possible. A real-world web server will certainly handle a lot of static file serving, but today, dynamic calls make up the vast bulk of web traffic.

Our data structure will resemble the simplest of data tables without having access to an actual database:

```go
type WebPage struct {
	Title    string
	Contents string
}
```

We'll want to take any data of this form and render a template with it. Remember that Go distinguishes public from private identifiers by capitalization rather than by keywords. Names that begin with an uppercase letter are exported (public), and names that begin with a lowercase letter are unexported (private).

If you find that the template fails to render but you see no explicit errors in the console, check your field naming. Referencing an unexported (lowercase) field from an HTML (or text) template causes rendering to stop at that point. Execute() returns an error describing the problem, so if you ignore that return value, nothing is printed.

Now, we'll take that data and apply it to a template for any calls to a URL that begins with /template/. We could certainly do something more useful with the wildcard portion of that regular expression, so let's make it part of the title, using the following code:

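```go
} else if templatePattern.MatchString(path) {
	// Use the wildcard portion of the URL as part of the title
	urlVar := templatePattern.ReplaceAllString(path, "${1}")
	page := WebPage{Title: "This is our URL: " + urlVar, Contents: "Enjoy our content"}
	// Reads and parses the template file on every request
	tmp, err := template.ParseFiles(staticPath + "template.html")
	if err != nil {
		http.Error(rw, err.Error(), http.StatusInternalServerError)
		return
	}
	if err := tmp.Execute(rw, page); err != nil {
		log.Println(err)
	}
}
```

Requesting localhost:9000/template/hello should render a template whose body contains the following markup: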

```html
<h1>{{.Title}}</h1>
<p>{{.Contents}}</p>
```

This produces the following output:

One thing to note about templates is that they are not compiled into your binary; they are read and parsed at runtime. Because this handler rereads template.html on every request, you can modify the template after starting your server, and the changes are reflected on the next request.

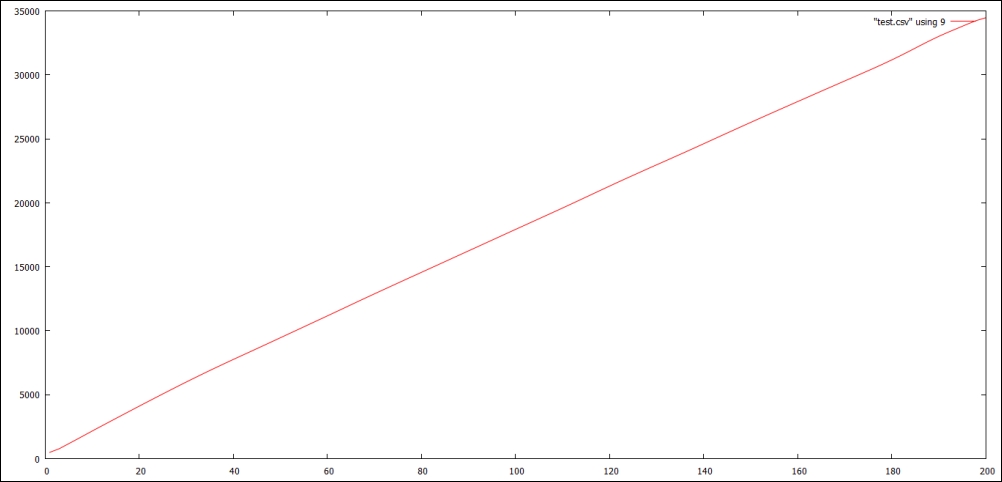

This is noteworthy as a potential performance factor. Let's run our benchmarks again, with template rendering as the added complexity to our application and its architecture:

Yikes! What happened? We've gone from easily hitting 10,000 concurrent requests to barely handling 200.

To be fair, we introduced an intentional stumbling block, one not all that uncommon in the design of any given CMS.

You'll notice that we're calling the template.ParseFiles() function on every request, which reads the file from disk and parses it each time. This is the sort of seemingly cheap call that can really add up when you start stacking the requests.

It may then make sense to move the file operations outside of the request handler, but we'll need to do more than that. To eliminate the overhead and a blocking call, we need to keep an internal cache of the parsed template for requests to use.

Most importantly, all of our template creation and parsing should happen outside the actual request handler if you want to keep your server non-blocking, fast, and responsive. Here's another take:

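```go
var customHTML string

// Parsed once in main() and shared, read-only, by every request
var customTemplate *template.Template

var page WebPage

var templateSet bool

func main() {
	var cr customRouter

	// Read and parse the template once, before any requests arrive
	fileName := staticPath + "template.html"
	cH, err := os.ReadFile(fileName)
	if err != nil {
		log.Fatal(err)
	}
	customHTML = string(cH)

	// Assign the package-level page (:= would declare an unused local)
	page = WebPage{Title: "This is our URL: ", Contents: "Enjoy our content"}

	customTemplate, err = template.New("Hey").Parse(customHTML)
	if err != nil {
		log.Fatal(err)
	}
	// ... the rest of main(), which starts the server with cr, follows
```

Even though we call Parse() before any request arrives, we can still supply URL-specific values at request time through the data we pass to the Execute() method. Execute() does not carry the overhead of reading and parsing the template.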

When we move this outside of the customRouter struct's ServeHTTP() method, we're back in business. This is the kind of response we'll get with these changes:

Finally, we need to bring in our biggest potential bottleneck, which is the database. As mentioned earlier, we'll simulate random traffic by generating a random integer between 1 and 10,000 to specify the article we want.

Randomization isn't just useful on the frontend; we also want to work around any query caching within MySQL itself, limiting optimizations that happen outside our server.
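Here is a sketch of picking the article ID, using a hypothetical randomArticleID helper. rand.Intn(n) returns a value in [0, n), so adding 1 gives the 1 to 10,000 range. The second helper, also hypothetical, shows why a generator seeded with the same constant before every draw isn't random at all: it yields the same ID each time.

```go
package main

import (
	"fmt"
	"math/rand"
)

// randomArticleID returns a pseudo-random article ID in [1, maxID].
// rand.Intn(n) returns a value in [0, n), so we add 1. The top-level
// math/rand functions are safe for concurrent use by many handlers.
func randomArticleID(maxID int) int {
	return rand.Intn(maxID) + 1
}

// firstDrawAfterSeed builds a new generator from seed and takes one
// draw, mimicking "reseed, then draw" on every request.
func firstDrawAfterSeed(seed int64, maxID int) int {
	return rand.New(rand.NewSource(seed)).Intn(maxID) + 1
}

func main() {
	for i := 0; i < 3; i++ {
		fmt.Println("random:  ", randomArticleID(10000))
	}
	for i := 0; i < 3; i++ {
		fmt.Println("reseeded:", firstDrawAfterSeed(9, 10000))
	}
}
```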

We could build our own connection to MySQL in native Go, but as is often the case, a few third-party packages make this process far less painful. Given that the database here (and its associated libraries) is tertiary to the primary exercise, we won't be too concerned with the particulars.

Two mature MySQL driver libraries are as follows:

- Go-MySQL-Driver (https://github.com/go-sql-driver/mysql)

- MyMySQL (https://github.com/ziutek/mymysql)

For this example, we'll go with the Go-MySQL-Driver. We'll quickly install it using the following command:

```shell
go get github.com/go-sql-driver/mysql
```

Both of these implement the driver interface for Go's standard database/sql package, which provides a standardized way to connect to a SQL source and iterate over rows.

One caveat applies if you've used SQL libraries in other languages but never Go's database/sql package. In other languages, an Open() method typically implies an open connection. In Go, sql.Open() may simply validate its arguments and return a *sql.DB handle without connecting to the database at all. This means that calling sql.Open() alone may not report connection errors such as username/password issues; those surface on the first query, or when you call Ping() on the handle.

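One advantage of this (or disadvantage, depending on your vantage point) is that connections to your database may not be left open between requests to your web server. The impact of opening and reopening connections is negligible in the grand scheme. That said, a *sql.DB handle is a pool of connections that is safe for concurrent use by multiple goroutines, so in production code you'd typically call sql.Open() once at startup and share the handle.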
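You can observe the lazy behavior of sql.Open() without a MySQL server. The sketch below registers a hypothetical refusingDriver, a stand-in for a real driver such as Go-MySQL-Driver, whose every connection attempt fails the way bad credentials would. sql.Open() returns no error; only Ping() reaches the driver and reports the failure.

```go
package main

import (
	"database/sql"
	"database/sql/driver"
	"errors"
	"fmt"
)

// refusingDriver is a hypothetical stand-in for a real driver such as
// github.com/go-sql-driver/mysql. Every real connection attempt fails,
// the way it would with a bad username or password.
type refusingDriver struct{}

func (refusingDriver) Open(dsn string) (driver.Conn, error) {
	return nil, errors.New("access denied (simulated)")
}

func init() {
	sql.Register("refusing", refusingDriver{})
}

// openThenPing reports the error from sql.Open and from Ping separately.
func openThenPing(driverName, dsn string) (openErr, pingErr error) {
	db, err := sql.Open(driverName, dsn)
	if err != nil {
		return err, nil
	}
	defer db.Close()
	return nil, db.Ping()
}

func main() {
	openErr, pingErr := openThenPing("refusing", "test:test@/master")
	fmt.Println("sql.Open error:", openErr) // nil: no connection attempted yet
	fmt.Println("Ping error:    ", pingErr) // the simulated credential failure
}
```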

As we're requesting pseudo-random articles, we'll build a small MySQL helper function that fetches an article by ID, as shown in the following code:

```go
func getArticle(id int) WebPage {
	// sql.Open only prepares a handle; errors such as bad credentials
	// surface on the first query
	database, err := sql.Open("mysql", "test:test@/master")
	if err != nil {
		fmt.Println("DB error!!!", err)
		return WebPage{}
	}
	// Release this handle's pooled connections when we're done
	defer database.Close()

	var articleTitle string
	// Query for the requested ID (not a hard-coded 1)
	err = database.QueryRow("SELECT article_title FROM articles WHERE article_id=? LIMIT 1", id).Scan(&articleTitle)
	switch {
	case err == sql.ErrNoRows:
		fmt.Println("No rows!")
	case err != nil:
		fmt.Println(err)
	}

	return WebPage{Title: articleTitle}
}
```

We will then call the function directly from our ServeHTTP() method, as shown in the following code:

}else if dynamicDBPattern.MatchString(path) {

rand.Seed(9)

id := rand.Intn(10000)

page = getArticle(id)

customTemplate.Execute(rw,page)

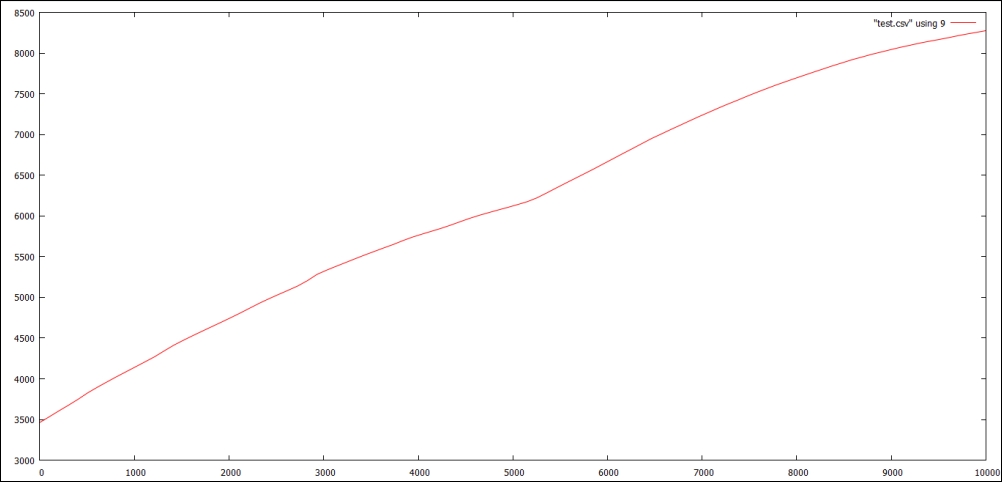

}How did we do here? Take a look at the following graph:

Slower, no doubt, but we held up to all 10,000 concurrent requests, entirely from uncached MySQL calls.

Given that we couldn't hit 1,000 concurrent requests with a default installation of Apache, this is nothing to sneeze at.