While we're here, we should take a moment to talk about defer. Go has an elegant implementation of the defer control mechanism. If you've used defer (or something functionally similar) in other languages, this will seem familiar: it's a useful way of delaying the execution of a statement until the surrounding function returns.

For the most part, this is syntactic sugar that allows you to see related operations together, even though they won't execute together. If you've ever written something similar to the following pseudocode, you'll know what I mean:

```
x = file.open('test.txt')

int longFunction() {
    …
}

x.close();
```

You probably know the kind of pain that can come from large "distances" separating related bits of code. In Go, you can instead write code like the following:

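```go
package main

import (
	"log"
	"os"
)

func main() {
	file, err := os.Create("defer.txt")
	if err != nil {
		log.Fatal(err)
	}
	// Close is written right next to Create, but it runs only when main returns.
	defer file.Close()

	for {
		// ...a long stretch of work that uses file would go here...
		break
	}
}
```

The main benefit is clearer, more readable code, and that's a pretty big plus in itself; defer also guarantees that the deferred call runs however the function exits, including early returns and panics. Deferred calls are executed in the reverse of the order in which they are deferred, that is, last-in, first-out. You should also note that the arguments of a deferred call are evaluated when the defer statement executes, not when the call finally runs, so the data it sees may be in an unexpected state.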

For example, refer to the following code snippet:

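```go
package main

import "fmt"

func main() {
	aValue := new(int)

	// The argument *aValue is evaluated right now, while it is still 0.
	defer fmt.Println(*aValue)

	for i := 0; i < 100; i++ {
		*aValue++
	}
}
```

This prints 0, not 100: *aValue is evaluated when the defer statement executes, before the loop runs, and at that point it still holds the zero value for an integer.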

Note

Defer is not the same as deferred (or futures/promises) in other languages. We'll talk about Go's implementations and alternatives to futures and promises in Chapter 2, Understanding the Concurrency Model.

In many concurrent and parallel applications written in other languages, both soft and hard threads are managed at the operating system level. This is comparatively inefficient and expensive, because the OS is responsible for context switching among multiple processes. When an application or process can manage its own threads and scheduling, it can run faster. The threads granted to our application and Go's scheduler have fewer OS attributes that need to be considered during context switching, resulting in less overhead.

If you think about it, this is intuitive: the more balls you have to juggle, the slower it is to manage them all. Go removes the natural inefficiency of this mechanism by using its own scheduler.

There's really only one quirk to this, one that you'll learn very early on: if the main thread never yields control, your goroutines will behave in unexpected ways (or won't run at all).

Another way to look at this is that the main thread must block before the other goroutines can be relied on to run concurrently. Let's modify our example to log each operation to a file, which demonstrates this quirk, as shown in the following code:

```go
package main

import (
	"fmt"
	"os"
	"time"
)

type Job struct {
	i    int
	max  int
	text string
}

// outputText prints j.text until j.i reaches j.max, accumulating it into
// a string, then writes that string to a file named after the text.
func outputText(j *Job) {
	fileName := j.text + ".txt"
	fileContents := ""
	for j.i < j.max {
		time.Sleep(1 * time.Millisecond)
		fileContents += j.text
		fmt.Println(j.text)
		j.i++
	}
	// os.WriteFile (Go 1.16+) replaces the deprecated ioutil.WriteFile.
	err := os.WriteFile(fileName, []byte(fileContents), 0644)
	if err != nil {
		panic("Something went awry: " + err.Error())
	}
}

func main() {
	hello := &Job{text: "hello", max: 3}
	world := &Job{text: "world", max: 5}

	go outputText(hello)
	go outputText(world)
	// main returns here without waiting, which ends the program (and both
	// goroutines), most likely before either file is written.
}
```

In theory, all that has changed is that we're now using a file operation to log each operation to a distinct file (in this case, hello.txt and world.txt). However, if you run this, most likely no files are created.

In our last example, we used a sync.WaitGroup struct to force the main thread to delay execution until the asynchronous tasks were complete. While this works (and elegantly), it doesn't really explain why our asynchronous tasks fail. As mentioned before, you can also use blocking code to prevent the main thread from completing before its asynchronous tasks.
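One way to block, sketched here under the assumption that each goroutine can signal on a channel when it finishes: the collecting function receives once per goroutine, so it cannot return until every job is done. The work and runAll functions are hypothetical stand-ins for the outputText jobs, not code from the text.

```go
package main

import "fmt"

// work simulates a job and reports its name on done when finished.
func work(name string, done chan<- string) {
	// ...the real job (for example, writing a file) would go here...
	done <- name
}

// runAll starts one goroutine per name and blocks until all have reported.
func runAll(names ...string) []string {
	done := make(chan string)
	for _, n := range names {
		go work(n, done)
	}
	finished := make([]string, 0, len(names))
	for range names {
		finished = append(finished, <-done) // blocks until a goroutine sends
	}
	return finished
}

func main() {
	for _, name := range runAll("hello", "world") {
		fmt.Println("finished:", name)
	}
}
```

The order of the finished names is not guaranteed; only that both arrive before runAll returns.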

Since the Go scheduler manages context switching, each goroutine must yield control back to the main thread for the scheduler to run all of these asynchronous tasks. There are two ways to do this manually. One method, and probably the ideal one, is the WaitGroup struct in the sync package. Another is the Gosched() function in the runtime package.

The Gosched() function yields the processor, allowing other goroutines to run; the current goroutine then resumes automatically. Consider the following code as an example:

package main

import(

"runtime"

"fmt"

)

func showNumber(num int) {

fmt.Println(num)

}

func main() {

iterations := 10

for i := 0; i<=iterations; i++ {

go showNumber(i)

}

//runtime.Gosched()

fmt.Println("Goodbye!")

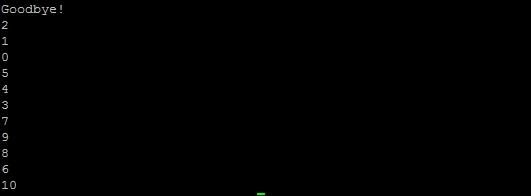

}Run this with runtime.Gosched() commented out and the underscore before "runtime" removed, and you'll see only Goodbye!. This is because there's no guarantee as to how many goroutines, if any, will complete before the end of the main() function.

As we learned earlier, you can explicitly wait for a fixed number of goroutines before ending the execution of the application. However, Gosched() provides (in most cases) the same basic functionality. Remove the comment before runtime.Gosched(), and you should typically see 0 through 10 printed before Goodbye!, although neither the order nor the completion of every goroutine is guaranteed.

Just for fun, try running this code on a multicore server and modify your max processors using runtime.GOMAXPROCS(), as follows:

```go
func main() {
	runtime.GOMAXPROCS(2)
	// ...
}
```

Also, move your runtime.Gosched() call to the very end of main() so that all goroutines have a chance to run before main ends.

Got something unexpected? That's not unexpected! You may end up with a totally jostled execution of your goroutines, as shown in the following screenshot:

Although it's not entirely necessary to demonstrate how juggling your goroutines with multiple cores can be vexing, this is one of the simplest ways to show exactly why it's important to have communication between them (and the Go scheduler).

You can debug the parallelism of this with GOMAXPROCS set above 1 by adding a timestamp to each goroutine's output, as follows:

```go
// Requires the strconv and time packages.
tstamp := strconv.FormatInt(time.Now().UnixNano(), 10)
fmt.Println(num, tstamp)
```

This is also a good place to see concurrency and compare it to parallelism in action. First, add a 10-millisecond delay to the showNumber() function, as shown in the following code snippet:

```go
// showNumber prints its number with a nanosecond timestamp, then pauses
// for 10 ms. It needs "fmt", "strconv", and "time" imported.
func showNumber(num int) {
	tstamp := strconv.FormatInt(time.Now().UnixNano(), 10)
	fmt.Println(num, tstamp)
	time.Sleep(time.Millisecond * 10)
}
```

Then, remove the go keyword from the showNumber() call and restrict Go to a single thread with GOMAXPROCS(1), as shown in the following code snippet:

```go
runtime.GOMAXPROCS(1) // one thread executing Go code at a time
iterations := 10
for i := 0; i <= iterations; i++ {
	showNumber(i) // a plain call: each one finishes before the next starts
}
```

As expected, you get 0 through 10 with 10-millisecond delays between them, followed by Goodbye! as output. This is straight, serial computing.

Next, let's keep GOMAXPROCS at 1 for a single thread, but restore the goroutine as follows:

```go
go showNumber(i)
```

This is the same process as before, except that everything now executes within the same general timeframe, demonstrating the concurrent nature of execution. Now, go ahead and change GOMAXPROCS to two and run it again. As before, the timestamps are nearly identical, but the order changes because the goroutines are now running in parallel.

Goroutines aren't (necessarily) thread-based, but they feel like they are. At runtime, Go's scheduler multiplexes goroutines onto the available OS threads. This is exactly why the scheduler needs to know what's running, what needs to finish before the application's life ends, and so on. If the code has two threads to work with, that's what it will use.
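A rough sketch of that multiplexing, assuming only the standard library: runMany (a hypothetical helper) limits Go to two threads executing Go code at once, starts 10,000 goroutines, and still sees every one of them complete. The goroutine count is arbitrary.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
	"sync/atomic"
)

// runMany starts n goroutines with at most maxProcs threads executing
// Go code at once, and returns how many of them ran.
func runMany(n, maxProcs int) int64 {
	prev := runtime.GOMAXPROCS(maxProcs)
	defer runtime.GOMAXPROCS(prev) // restore the previous setting
	var wg sync.WaitGroup
	var count int64
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			atomic.AddInt64(&count, 1)
		}()
	}
	wg.Wait()
	return atomic.LoadInt64(&count)
}

func main() {
	fmt.Println(runMany(10000, 2), "goroutines ran") // 10000 goroutines ran
}
```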

So what if you want to know how many threads are available to your code?

GOMAXPROCS is both an environment variable and a function in the runtime package; the function reports (and optionally changes) the maximum number of OS threads that can execute Go code simultaneously. To find out what's available, you can write a quick application similar to the following code:

```go
package main

import (
	"fmt"
	"runtime"
)

// listThreads queries the current GOMAXPROCS setting; 0 leaves it unchanged.
func listThreads() int {
	threads := runtime.GOMAXPROCS(0)
	return threads
}

func main() {
	runtime.GOMAXPROCS(2)
	fmt.Printf("%d thread(s) available to Go.\n", listThreads())
}
```

Building and running this yields the following output:

2 thread(s) available to Go.

Passing 0 to GOMAXPROCS means no change is made; the call simply reports the current setting. (Since Go 1.5, the default setting is the number of logical CPUs available.) You can pass a larger number, even one greater than the number of cores, but that won't give you more hardware parallelism than the machine has; the more common use is to limit your application to fewer processors than are available.

The GOMAXPROCS() call itself returns an integer that represents the previous setting. In this case, we first set it to two and then called it with zero (no change), which returned two.

It's also worth noting that increasing GOMAXPROCS can sometimes decrease the performance of your application.

Because context switching carries a penalty, especially in larger applications and at the operating system level, increasing the number of threads means goroutines may be spread across more than one thread, and some of the lightweight advantage of goroutines can be lost.

If you have a multicore system, you can test this pretty easily with Go's internal benchmarking functionality. We'll take a closer look at this functionality in Chapter 5, Locks, Blocks, and Better Channels, and Chapter 7, Performance and Scalability.

The runtime package has a few other very useful functions for inspecting the environment, such as NumCPU, NumGoroutine, CPUProfile, and BlockProfile. These aren't just handy for debugging; they also help you work out how best to use your resources. This package also plays well with the reflect package, which deals with metaprogramming and program self-analysis. We'll touch on that in more detail in Chapter 9, Logging and Testing Concurrency in Go, and Chapter 10, Advanced Concurrency and Best Practices.
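As a small illustration, assuming only NumCPU, NumGoroutine, and GOMAXPROCS from the runtime package, the following sketch gathers a snapshot of what the runtime reports. The report type and snapshot function are hypothetical.

```go
package main

import (
	"fmt"
	"runtime"
)

// report collects a few values the runtime exposes about its environment.
type report struct {
	CPUs       int // logical CPUs usable by this process
	MaxProcs   int // current GOMAXPROCS setting
	Goroutines int // goroutines that currently exist
}

func snapshot() report {
	return report{
		CPUs:       runtime.NumCPU(),
		MaxProcs:   runtime.GOMAXPROCS(0),
		Goroutines: runtime.NumGoroutine(),
	}
}

func main() {
	fmt.Printf("%+v\n", snapshot())
}
```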