11

Looking Forward in Cloud Build

Up until now, we have reviewed Cloud Build’s foundations, its common use cases, and the patterns for applying Cloud Build in practice.

However, Cloud Build – both at the time of publication and beyond – continues to expand its functionality and integrate more deeply with the rest of the Google Cloud ecosystem.

One example is Cloud Deploy, an API for opinionated CI/CD pipelines that uses Cloud Build to execute those pipelines.

In this chapter, we will review some of the areas to look forward to as far as Cloud Build is concerned, specifically covering the following topic:

- Implementing continuous delivery with Cloud Deploy

Implementing continuous delivery with Cloud Deploy

Previously, in Chapter 8, Securing Software Delivery to GKE with Cloud Build, we reviewed how you could roll out new versions of applications to Google Kubernetes Engine using Cloud Build. When you define these rollouts using Cloud Build, very little opinion is built into Cloud Build. Ultimately, users are responsible for defining each build step, connecting the build steps into a full build, and potentially defining bespoke build triggers for each build.

One of the key things that users will need to consider is how to promote an application between environments. Environments and their respective functions can include the following examples:

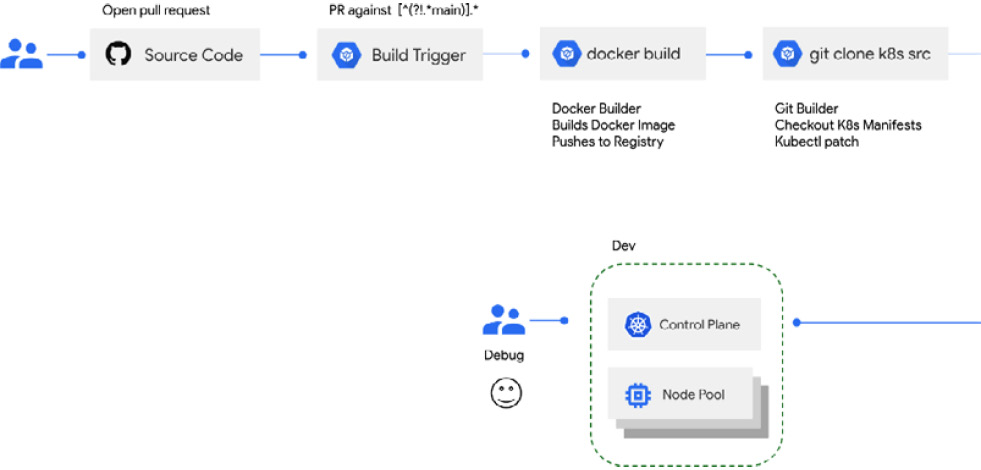

- Dev: The development environment in which applications can run, where developers can test how their app depends on other services in the cluster that they do not own. Rather than pulling all of these dependencies into their local environment, such as a local Kubernetes cluster, developers can deploy to this environment instead:

Figure 11.1 – Deploying to a dev environment

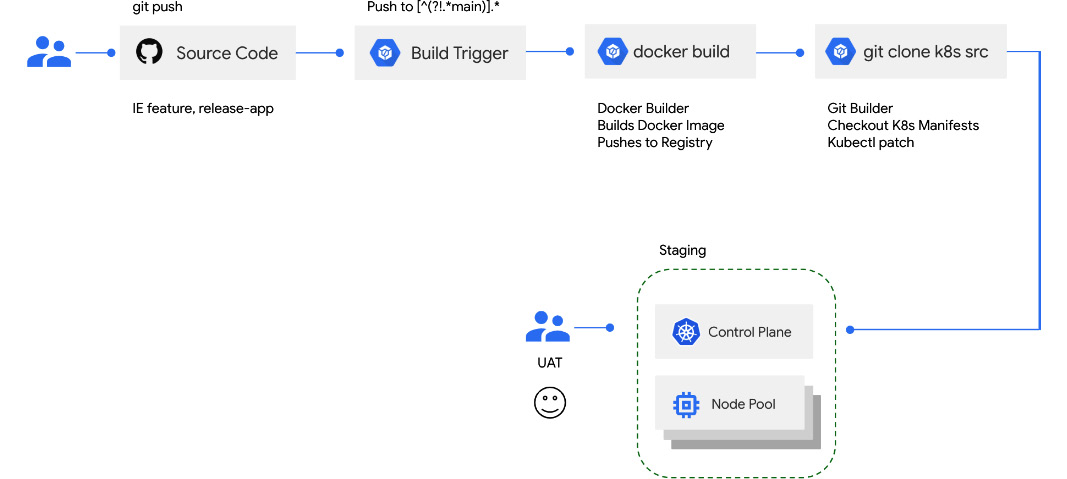

- Staging: Staging environments are typically nearly identical to what will eventually run in production. Ideally, bugs and issues have already been identified in earlier-stage environments: not only dev but potentially also a dedicated QA or testing environment where more complex test suites, such as integration tests, have already passed. In this environment, you may want to perform tests that are closer to the experience of end users, such as smoke tests. You may also want to practice chaos engineering in environments of this kind, testing the resiliency of your entire system when various components of your stack suffer random outages:

Figure 11.2 – Promoting a release to staging

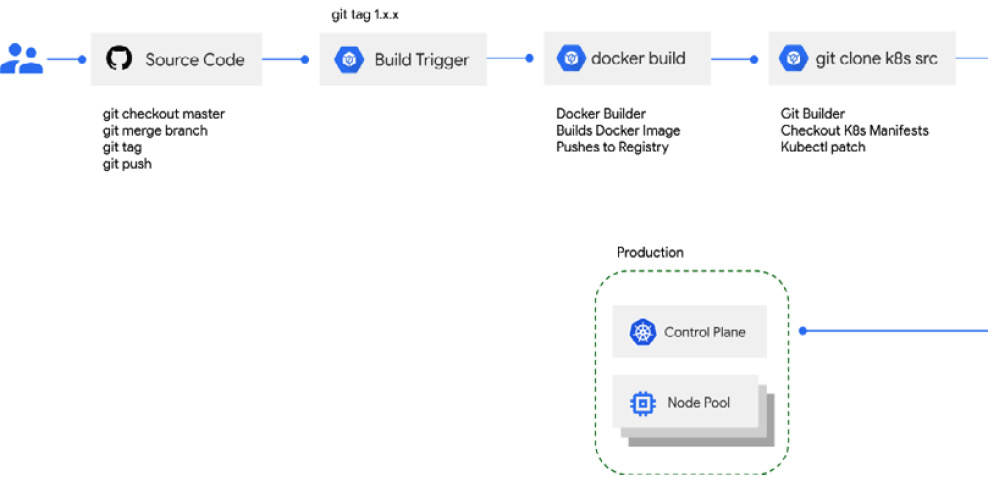

- Prod: Finally, your applications end up in production, where they serve your end users. The rollout to production itself may not be simple, as you may use different strategies both for creating the new version of your app and for shifting traffic to that new version:

Figure 11.3 – Promoting a release to production

Defining everything needed to promote an application successfully through these environments on your own in Cloud Build can be challenging. On top of that, if developers each define their own builds to accomplish this, configurations can drift and silos can form across your organization.

Therefore, to simplify this increasingly critical process for users, Google has released Cloud Deploy – an opinionated model for running templated CI/CD pipelines that is built to roll out an application across multiple target environments that represent the different stages of the software delivery cycle. At the time of publication, Cloud Deploy focuses on serving this use case for users deploying applications to Google Kubernetes Engine (GKE) and Anthos clusters.

Cloud Deploy exposes an API that orchestrates software rollouts, which run on Cloud Build workers under the hood. Rather than asking users to define each build step along the way, Cloud Deploy exposes resources for declarative pipelines, abstracting away the complex orchestration of the underlying Cloud Build resources.

In Cloud Deploy, instead of interfacing with builds and build steps, users interface with a combination of Kubernetes resources and Cloud Deploy-specific resources. From a Kubernetes perspective, users author standard Kubernetes API resources, with no changes required for Cloud Deploy.

However, to tell Cloud Deploy which Kubernetes configurations to read, you author a Skaffold configuration. Skaffold is an open source tool that wraps tools such as kubectl, manifest rendering tools such as Helm or Kustomize, and build tools such as Buildpacks to deploy Kubernetes configurations to a cluster.

The following is an example of a skaffold.yaml file, which defines the Skaffold profiles to map environments to the respective Kubernetes configuration that will be deployed to them:

```yaml
# For demonstration purposes only; using this configuration requires setup beyond the scope of this example
apiVersion: skaffold/v2beta16
kind: Config
profiles:
- name: dev
  deploy:
    kubectl:
      manifests:
      - config/dev.yaml
- name: staging
  deploy:
    kubectl:
      manifests:
      - config/staging.yaml
- name: prod
  deploy:
    kubectl:
      manifests:
      - config/prod.yaml
```

Next, users define a DeliveryPipeline, which is a Cloud Deploy-specific resource. Together with the skaffold.yaml file, it defines the template for delivering a Kubernetes configuration to multiple environments.

A DeliveryPipeline resource may look similar to the following configuration in YAML:

```yaml
# For demonstration purposes only; using this configuration requires setup beyond the scope of this example
apiVersion: deploy.cloud.google.com/v1
kind: DeliveryPipeline
metadata:
  name: app
description: deploys to dev, staging, and prod
serialPipeline:
  stages:
  - targetId: dev
    profiles:
    - dev
  - targetId: staging
    profiles:
    - staging
  - targetId: prod
    profiles:
    - prod
```

Note that the DeliveryPipeline resource lists the targets to which the manifests defined in the skaffold.yaml file will be deployed. The order of its stages also defines the order in which the manifests are applied to each environment, representing the progression of changes through environments such as dev, staging, and prod.

Finally, note that each stage also references a profile, which corresponds to a profile, and therefore to specific manifests, defined in the skaffold.yaml configuration file. This allows users to vary configuration, such as environment variables, between different environments.
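Because skaffold.yaml is what binds each profile to its manifests, the same profiles could instead point at a manifest rendering tool such as Kustomize rather than plain kubectl manifests. The following is a sketch, assuming a hypothetical repository layout in which overlays/dev, overlays/staging, and overlays/prod each contain a kustomization.yaml that patches a shared set of base manifests (for example, to set different environment variables per environment):

```yaml
# Sketch only: overlays/dev, overlays/staging, and overlays/prod are
# hypothetical Kustomize overlay directories, each with a kustomization.yaml
apiVersion: skaffold/v2beta16
kind: Config
profiles:
# Profile names are unchanged, so the DeliveryPipeline stages still match
- name: dev
  deploy:
    kustomize:
      paths:
      - overlays/dev
- name: staging
  deploy:
    kustomize:
      paths:
      - overlays/staging
- name: prod
  deploy:
    kustomize:
      paths:
      - overlays/prod
```

Since the profile names stay the same, the DeliveryPipeline would not need to change; only the way each environment's manifests are produced does.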

Users must then create a Target resource for each GKE cluster, referencing the cluster by its fully qualified resource name. The following Target resources map to the dev, staging, and prod environments defined in the DeliveryPipeline resource:

```yaml
# For demonstration purposes only; using this configuration requires setup beyond the scope of this example
apiVersion: deploy.cloud.google.com/v1
kind: Target
metadata:
  name: dev
description: development cluster
gke:
  cluster: projects/PROJECT_ID/locations/us-central1/clusters/dev
---
apiVersion: deploy.cloud.google.com/v1
kind: Target
metadata:
  name: staging
description: staging cluster
gke:
  cluster: projects/PROJECT_ID/locations/us-central1/clusters/staging
---
apiVersion: deploy.cloud.google.com/v1
kind: Target
metadata:
  name: prod
description: prod cluster
gke:
  cluster: projects/PROJECT_ID/locations/us-central1/clusters/prod
```

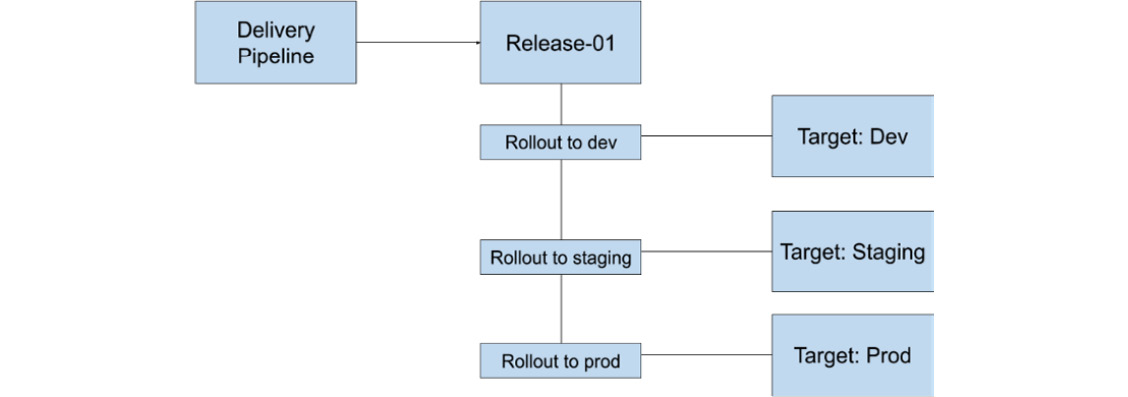

With their targets defined, users can use their DeliveryPipeline template to create an individual Release, which references that DeliveryPipeline and also takes in a skaffold.yaml configuration file.

The following command shows how users can kick off the deployment of a new version of their application to their targets by creating a Release:

```shell
$ gcloud deploy releases create release-01 \
    --project=PROJECT_ID \
    --region=us-central1 \
    --delivery-pipeline=app \
    --images=app-01=gcr.io/PROJECT_ID/image:imagetag
```

This resource compiles down into a Rollout for each Target.
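The --images flag maps an image name used in the Kubernetes manifests (app-01 here) to a concrete image reference. As a sketch, assuming config/dev.yaml contains a simple Deployment (the Deployment, label, and container names below are hypothetical), the manifest would reference the placeholder name, which Cloud Deploy replaces with gcr.io/PROJECT_ID/image:imagetag when it renders the release:

```yaml
# Sketch of a hypothetical config/dev.yaml; "app-01" in the image field is
# the placeholder that --images=app-01=... substitutes at render time
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: app
  template:
    metadata:
      labels:
        app: app
    spec:
      containers:
      - name: app
        image: app-01
```

Keeping a placeholder in the manifests means the same files can be reused for every release; only the image passed to each Release changes.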

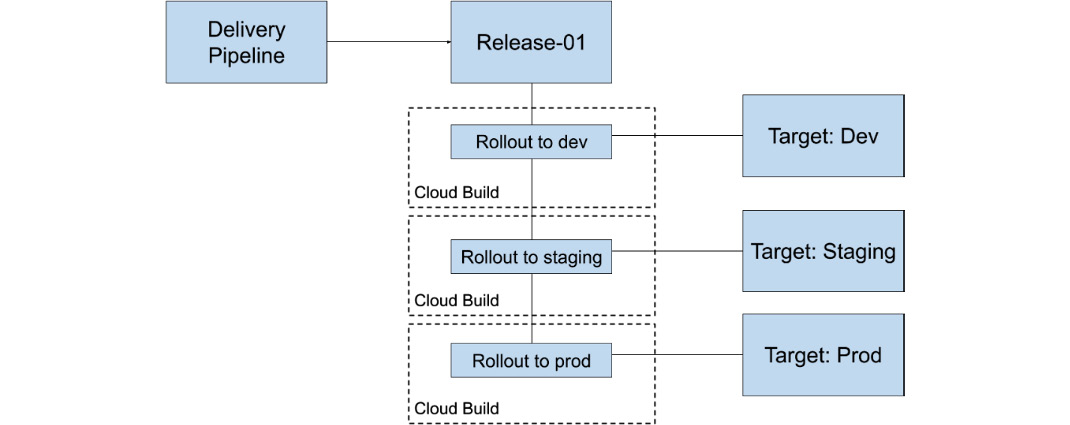

This is where Cloud Deploy differs starkly from using Cloud Build alone. Cloud Deploy lets you promote an artifact through each Rollout, and it provides simple rollback mechanisms when deploying that artifact to each Target environment:

Figure 11.4 – The relationship structure when creating a Release

With the fundamental usage of Cloud Deploy covered, let’s now review how Cloud Deploy uses Cloud Build to implement its functionality.

The relationship between Cloud Build and Cloud Deploy

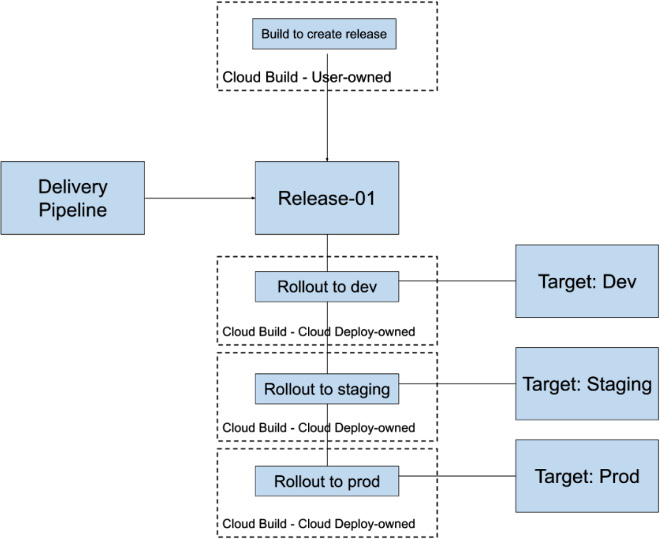

Each Rollout from a given Release in Cloud Deploy runs Skaffold in a build with a single build step to deploy the appropriate resources to its target environment.

Figure 11.5 – The relationship structure when a Release and Rollouts execute

However, users do not need to navigate to Cloud Build to monitor and understand which stage their release is at. Instead, the Cloud Deploy console provides a pipeline visualization that surfaces this information:

Figure 11.6 – A pipeline visualization in Cloud Deploy

You can use the pipeline visualization to navigate to each rollout and roll back or promote the release to kick off the next rollout.

Because each rollout to a target is its own Cloud Build build, you can also require approval before rolling out to a target. This is useful for environments such as production, where users may want to enforce two-person approval of releases.
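In Cloud Deploy, this requirement is declared on the Target itself through its requireApproval field. The following sketch revises the prod Target from earlier (PROJECT_ID remains a placeholder) so that any rollout to it waits for approval before proceeding:

```yaml
# Sketch only: the prod Target from earlier, now gated on approval
apiVersion: deploy.cloud.google.com/v1
kind: Target
metadata:
  name: prod
description: prod cluster
# Rollouts to this target pause until a user approves them
requireApproval: true
gke:
  cluster: projects/PROJECT_ID/locations/us-central1/clusters/prod
```

A user with permission to approve rollouts (for example, one granted the Cloud Deploy Approver role) can then approve the pending rollout from the Cloud Deploy console or with gcloud deploy rollouts approve.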

Creating a release can also be done in its own Cloud Build build step, as demonstrated in the following config:

```yaml
...
- name: gcr.io/google.com/cloudsdktool/cloud-sdk
  entrypoint: gcloud
  args:
    [
      "deploy", "releases", "create", "release-${SHORT_SHA}",
      "--delivery-pipeline", "PIPELINE",
      "--region", "us-central1",
      "--annotations", "commitId=${SHORT_SHA}",
      "--images", "app-01=gcr.io/PROJECT_ID/image:${COMMIT_SHA}"
    ]
...
```

This build step can run in its own build, or it can be appended to a build whose earlier build steps perform CI and build the container image that will be released via Cloud Deploy.
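As a sketch of the second option, the following cloudbuild.yaml builds and pushes the image and then creates the release. It assumes the build is started by a trigger (so that COMMIT_SHA and SHORT_SHA are populated) and that skaffold.yaml and the manifests sit at the repository root; PROJECT_ID, the image path, and PIPELINE are placeholders, as in the step above, and any test steps are omitted:

```yaml
# Sketch only: PROJECT_ID, the image path, and PIPELINE are placeholders
steps:
# Build the container image, tagged with the full commit SHA
- name: gcr.io/cloud-builders/docker
  args: ["build", "-t", "gcr.io/PROJECT_ID/image:${COMMIT_SHA}", "."]
# Push the image now so it exists before the release references it
- name: gcr.io/cloud-builders/docker
  args: ["push", "gcr.io/PROJECT_ID/image:${COMMIT_SHA}"]
# Create a Cloud Deploy release that points at the pushed image
- name: gcr.io/google.com/cloudsdktool/cloud-sdk
  entrypoint: gcloud
  args:
    [
      "deploy", "releases", "create", "release-${SHORT_SHA}",
      "--delivery-pipeline", "PIPELINE",
      "--region", "us-central1",
      "--annotations", "commitId=${SHORT_SHA}",
      "--images", "app-01=gcr.io/PROJECT_ID/image:${COMMIT_SHA}"
    ]
```

The image is pushed in an explicit step rather than through the build's top-level images field, because images listed there are pushed only after all steps complete, which would be after the release step has already run.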

Figure 11.7 – The relationship structure when kicking off a release using Cloud Build

Cloud Deploy, which launched to General Availability in January 2022, is a prime example of an opinionated set of resources that orchestrates the work that Cloud Build can perform. It provides a distinct way to implement patterns while also surfacing a visualization of what can be complex but related tasks.

Cloud Deploy also reflects a common pattern: users running multiple GKE environments and clusters. This extends to users running Kubernetes clusters both in Google Cloud and elsewhere, such as in their own data centers or co-located environments.

Summary

As we look to what’s next for Cloud Build, we can appreciate how abstraction caters to different personas and users. We reviewed how Cloud Deploy provides an abstraction that simplifies writing CI/CD pipelines for GKE on top of Cloud Build features such as builds and approvals. Cloud Build will continue to expand the number of use cases and patterns it supports, enhancing the features we have reviewed in this book and building on the newer focus areas covered in this chapter.