1 Background

Database systems are fundamental for the information society. Every day, an inestimable amount of data is produced, collected, stored and processed: online shopping, sending emails, using social media, or seeing your physician are just some of the day-to-day activities that involve data management. A properly working database management system is hence crucial for a smooth operation of these activities. In this chapter, we introduce the principles and properties that a database system should ful fill. Database management systems and their components as well as data modeling are the other two basic concepts treated in this chapter.

1.1 Database Properties

As data storage plays such a crucial role in most applications, database systems should guarantee a correct and reliable execution in several use cases. From an abstract perspective, we desire that a database system fulfill the following properties:

Data management. A database system not only stores data, it must just as well support operations for retrieval of data, searches for data and updates on data. To enable interoperability with external applications, the database system must provide communication interfaces or application programming interfaces for several communication protocols or programming languages. A database system should also support transactions: A transaction is a sequence of operations on data in a database that must not be interrupted. In other words, the database executes operations within a transaction according to the “all or nothing” principle: Either all operations succeed to their full extent or none of the operations is executed (and the subsequence of operations that was already executed is undone).

Scalability. The amount of data processed daily with modern information technology is tremendous. Processing these data can only be achieved by distribution of data in a network of database servers and a high level of parallelization. Database systems must flexibly react and adapt to a higher workload.

Heterogeneity. When collecting data or producing data (as output of some program), these data are usually not tailored to being stored in a relational table format. While the data in relational format are called structured and have a fixed schema which prescribes the structure of the data, data often come in different formats. Data that have a more flexible structure than the table format are called semi-structured; these can be tree-like structures (as used in XML documents) or – more generally – graph structures. Furthermore, data can be entirely unstructured (like arbitrary text documents).

Efficiency. The majority of database applications need fast database systems. Online shopping and web searches rely on high-performance search and retrieval operations. Likewise, other database operations like store and update must be executed in a speedy fashion to ensure operability of database applications.

Persistence. The main purpose of a database system is to provide a long-term storage facility for data. Some modern database applications (like data stream processing) just require a kind of selective persistence: only some designated output data have to be stored onto long-term storage devices, whereas the majority of the data is processed in volatile main memory and discarded afterwards.

Reliability. Database systems must prevent data loss. Data stored in the database system should not be distorted unintentionally: data integrity must be maintained by the database system. Storing copies of data on other servers or storage media (a mechanism called physical redundancy or replication) is crucial for data recovery after a failure of a database server.

Consistency. The database system must do its best to ensure that no incorrect or contradictory data persist in the system. This involves the automatic verification of consistency constraints (data dependencies like primary key or foreign key constraints) and the automatic update of distributed data copies (the replicas).

Non-redundancy. While physical redundancy is decisive for the reliability of a database system, duplication of values inside the stored data sets (that is, logical redundancy) should best be avoided. First of all, logical redundancy wastes space on the storage media. Moreover, data sets with logical redundancy are prone to different forms of anomalies that can lead to erroneous or inconsistent data. Normalization is one way to transform data sets into a non-redundant format.

Multi-User Support. Modern database systems must support concurrent accesses by multiple users or applications. Those independent accesses should run in isolation and not interfere with each other so that a user does not notice that other users are accessing the database system at the same time. Another major issue with multi-user support is the need for access control: data of one user should be protected from unwanted accesses by other users. A simple strategy for access control is to only allow users access to certain views on the data sets. A well-defined authentication mechanism is crucial to implement access control.

| A database system should manage large amounts of heterogeneous data in an efficient, persistent, reliable, consistent, non-redundant way for multiple users. |

Database systems often do not satisfy all of these requirements or only to the certain extent. When choosing a database system for a specific application, clarifying all mandatory requirements and weighing the pros and cons of the available systems is the first and foremost task.

1.2 Database Components

The software component that is in charge of all database operations is the database management system (DBMS). Several other systems and components interact with the DBMS as shown in Figure 1.1. The DBMS relies on the operating system and the file system of the database server to store the data on disk. The DBMS also relies on the operating system to be able to use the network interfaces for communication with external applications or other database servers.

The low-level file system (or the operating system) does not have knowledge on internal structure or meaning of stored data, it just handles the stored data as arbitrary records. Hence, the purpose of the database management system is to provide the users with a higher-level interface and more structured data storage and retrieval operations. The DBMS operates on data in the main memory; more precisely it handles data in a particular portion of the main memory (called page buffer) that is reserved for the DBMS. The typical storage unit on disk is a “block” of data; often this data block is called a memory page. The basic procedure of loading stored data from disk into main memory consists of the following steps:

1.the DBMS retrieves a query or command accessing some page not contained in the database buffer (a page fault occurs);

2.the DBMS locates a page on disk containing some of the relevant data (possibly using indexes or “scanning” the table);

3.the DBMS copies this page into its page buffer;

4.as the page usually contains more data than needed by the query or command, the DBMS locates the relevant values (for example, certain attributes of a tuple) inside the page and processes them;

5.if data modified are by a command, the DBMS modifies the values inside the page accordingly;

6.the DBMS eventually writes pages containing modified values back from the page buffer onto disk.

Due to a different organization and size of main memory and disk storage, data management has to handle different kinds of addresses at which a page can be located. On disk, each page has a physical disk address that consists of the disk’s name, the cylinder number, the track number and the number of the page inside the track. Records inside the page can be addressed by an additional offset. Once a page is loaded into main memory, it receives a physical main memory address. The main memory might however be too small to hold all pages needed by an application. Virtual addresses (also called logical addresses) can be used to make accesses from inside an application independent from the actual organization of pages in memory or on disk. This indirection must be handled with a look-up table that translates a virtual address of a page into its current physical address (on disk or in main memory). Moreover, records in pages can contain references (that is, pointers that contain an address) to records in the same page or other pages. When using physical addresses for the pointers, pointer swizzling is the process of converting the disk address of a pointer into a main memory address when the referenced page is loaded into main memory. Hence, main memory management is the interface between the underlying file system and the database management system.

While data storage management is the main task of a DBMS, data management as a whole involves many more complex processes. The DBMS itself consists of several subcomponents to execute theses processes; the specific implementation of these components may vary from database system to database system. Some important components are the following:

Authentication Manager. Users have to provide an identification and a credential (like a user name and a password) when establishing a connection to the database.

Query Parser. The query parser reads the user-supplied query string. It checks whether the query string has a valid syntax. If so, the parser breaks the query up into several commands that are needed internally to answer the query.

Authorization Controller. Based on the authenticated user identity and the access privileges granted to the users by the database administrator, the authorization controller checks whether the accessing user has sufficient privileges to execute the query.

Command Processor. All the subcommands (into which a user’s query is broken) are executed by the command processor.

File Manager. The file manager is aware of all the resources (in particular, disk space) that the database management system may use. With the help of the file manager, the required data parts (the memory pages containing relevant data) are located inside the database files stored on disk. When storing modified data back to disk from the main memory, the file manager finds the correct disk location for writing the data; the basic unit for memory-to-disk transfer is again a memory page.

Buffer Manager. The buffer manager is in charge of loading the data into the main memory and handling the data inside the main memory buffer. From the memory pages inside the buffer it retrieves those values needed to execute the database operations. The buffer manager also initiates the writing of modified memory pages back to disk.

Transaction Manager. Multiple concurrent transactions must be executed by the database system in parallel. The transaction manager takes care of a correct execution of concurrent transaction. When transaction can acquire locks on data (for exclusive access on the data), the transaction manager handles locking and unlocking of data. The transaction manager also ensures that all transactions are either committed (successfully completed) or rolled back (operations of a transaction executed so far are undone).

Scheduler. The scheduler orders read and write operations (of several concurrent transactions) in such a way that the operation from different transactions are interleaved. One criterion for a good scheduler is serializability of the obtained ordering of operations; that is, a schedule that is equivalent (regarding values that are read and written by each transaction) to a non-interleaved, serial execution of the transactions. Variants of schedulers are locking schedulers (that include lock and unlock operations on the data that are read and written) and non-locking schedulers (that usually order operations depending on the start time of transactions).

Recovery Manager. To prepare for the case of server failures, the recovery manager can set up periodical backup copies of the database. It may also use transaction logs to restart the database server into a consistent state after a failure.

1.3 Database Design

Database design is a phase before using a database system – even before deciding which system to use. The design phase should clearly answer basic questions like: Which data are relevant for the customers or the external applications? How should these relevant data be stored in the database? Which are usual access patterns on the stored data? For conventional database systems (with a more or less fixed data schema) changing the schema on a running database system is complex and costly; that is why a good database design is essential for these systems. Nevertheless, for database systems with more flexible schemas (or no schema at all), the design phase is important, too: identifying relationships in the data, grouping data that are often accessed together, or choosing good values for row keys or column names are all beneficial for a good performance of the database system. Hence, database design should be done with due care and following design criteria like the following.

Completeness. All aspects of the information needed by the accessing applications should be covered.

Soundness. All information aspects and relationships between different aspects should be modeled correctly.

Minimality. No unnecessary or logically redundant information should be stored; in some situations however it might be beneficial to allow some form of logical redundancy to obtain a better performance.

Readability. No complex encoding should be used to describe the information; instead the chosen identifiers (like row keys or column names) should be self explanatory.

Modifiability. Changes in the structure of the stored data are likely to occur when running a database system over a long time. While for schema-free database systems these changes have to be handled by the accessing applications, for database systems with a fixed schema a “schema evolution” strategy has to be supported.

Modularity. The entire data set should be divided into subsets that form logically coherent entities in order to simplify data management. A modular design is also advantageous for easy changes of the schema.

There are several graphical languages for database design. We briefly review the Entity-Relationship Model (ERM) and the Unified Modeling Language (UML). We introduce the notation by using the example of a library: readers can borrow books from the library. Other modeling strategies may also be used. For example, XML document can be pictured by a tree; graph structures for graph databases can be depicted by nodes and edges each annotated with a set of properties. These modeling strategies will be deferred to later sections of the book when the respective data models (XML or graph data) are introduced.

1.3.1 Entity-Relationship Model

Entity-Relationship (ER) diagrams have a long history for conceptual modeling – that is, grouping data into concepts and describing their semantics. In particular, ER models have been used in database design to specify which real-world concepts will be represented in the database system, what properties of these concepts will be stored and how different concepts related to each other. We will introduce ER modeling with the example of a library information system. The basic modeling elements of ER diagrams are:

Entities. Entities represent things or beings. They can range from physical objects, over non-physical concepts to roles of persons. They are drawn as rectangles with their entity names written into the rectangle.

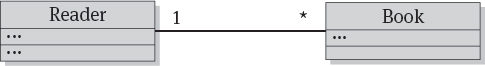

For our library example, we first of all need the two entities Reader and Book:

Relationships. Relationships describe associations between entities. Relationships are diamond-shaped with links to the entities participating in the relationship. In our example, BookLending is a relationship between readers and books:

Attributes. Attributes describe properties of entities and relationships; they carry the information that is relevant for each entity or relationship. Attributes have an oval shape and are connected to the entity or relationship they belong to. A distinction is made between single-valued, multi-valued or composite attributes.

Simple single-valued attributes can have a single value for the property; for example, the title of a book:

Multi-valued attributes can have a set of values for the property; for example, the set of authors of a book:

Composite attributes are attributes that consist of several subattributes; for example, the publisher information of a book consists of the name of the publisher and the city where the publisher’s office is located:

Moreover, key attributes are those attributes the values of which serve as unique identifiers for the corresponding entity. Key attributes are indicated by underlining them. For example, an identifier for each copy of book is is a unique value issued by the library (like the library signature of the book and a counter for the different copies of a book):

Cardinalities. Relationships can come in different complexities. In the simplest case, these relationships are binary (that is, a relationship between two entities). Then, these binary relationships can be distinguished into 1:1, 1:n and n:m relationship:

–a 1:1 relationship links an instance of one entity to exactly one instance of the other entity; an example is a marriage between two persons

–a 1:n relationship links an instance of one entity to multiple instances of the other entity; for example, a book copy can only be lent to a single reader at a time, but a reader can borrow multiple books at the same time

–a n:m relationship is an arbitrary relationship without any restriction on the cardinalities

Such cardinalities are annotated to the relationship links in the ER diagram. In our example, we have the case of a 1:n relationship between books and readers.

The Enhanced Entity-Relationship Modeling (EERM) language offers some advanced modeling elements. Most prominently, the “is-a” relationship is included in EERM to express specializations of an entity. The “is-a” relationship is depicted by a triangle pointing from the more specialized to the more general entity. For example, a novel can be a specialization of a book:

Attributes of the more general entity will also be attributes of the more specialized entity; in other words, attributes are inherited by the specialized entities.

The overall picture of our library example is shown in Figure 1.2. The entity Reader is identified by the key attribute ReaderID (a unique value issued by the library) and has a name and an email address as additional attributes. The entity Book is identified by the BookID, has its title and its year of publication as single-valued attributes, its list of authors as a multi-valued attribute and the publisher information as a composite attribute. Books and readers are linked by a 1:n relationship which has the return date for the book as an additional attribute.

1.3.2 Unified Modeling Language

The Unified Modeling Language (UML) is a widely adopted modeling language – in particular in the object-oriented domain – and it is a standard of the Object Management Group (OMG; see Section 9.1.4). As such it cannot only model entities (also known as classes) and their relationships (also known as associations) but also other object oriented concepts like methods, objects, activities and interactions.

| Web resources: –UML resource page: http://www.uml.org/ –specification: http://www.omg.org/spec/UML/ |

The UML standard consists of several diagram types that can each illustrate a different aspect of the modeled application. These diagrams can specify the application structure (like class diagrams, object diagrams or component diagrams) or the application behavior (like activity diagrams, use case diagrams or sequence diagrams). These diagrams can be used to model an application at different abstraction levels throughout the entire design and implementation process.

From the database point of view, we will confine ourselves to the class diagram which is used to express the general structure of the stored data and is hence closely related to the Entity-Relationship diagram. We briefly review the most important notation elements.

Classes, attributes and methods. Classes describe concepts or things and are hence equivalent to entities of ER Modeling. A class is drawn as a rectangle that is split into three parts. The upper part contains the class name, the middle part contains the attributes (describing state), and the lower part contains method declarations (describing behavior). The Reader class might for example contain methods to borrow and return a book (describing the behavior that a reader can have in the library) in addition to the attributes ID, name and email address (describing the state of each reader object by the values that are stored in the attributes):

Reader

readerID

name

borrowBook()

returnBook()

Types and visibility. As UML is geared towards object-oriented software design, attributes, parameters and return values can also be accompanied by a type declaration. For example, while the readerID would be an integer, the other attributes would be strings; the methods have the appropriate parameters of type Book (a user-defined type) and the return value void (as we don’t expect any value to be returned by the methods). Attributes and methods can also have a visibility denoting if they can be accessed from other classes or only from within the same class. While + stands for public access without any restriction, # stands for protected access only from the same class or its subclasses, ~ stands for access from classes within the same package, and - stands for private access only from within the same class.

Reader

- readerID: int

- name: String

- email: String

~ borrowBook(b: Book): void

~ returnBook(b: Book): void

To model multi-valued attributes, a collection type (like array or list) can be used. For example, we can model the authors as a list of strings:

Book

bookID: int

title: String

year: int

authors: List〈String〉

Associations. Associations between classes are equivalent to relationships between entities. In the simplest case of a binary association (that is, an association between two classes), the association is drawn as a straight line between the classes. To model composite attributes, an association to a new class for the composite attribute containing the subattributes is used:

In more complex cases – for instance, when the association should have additional attributes, or when an association links more than two classes – an association class must be attached to the association. In the library example, we need an explicit association class to model the return date:

Advanced cases like directed associations, aggregation or composition may also be used to express different semantics of an association. These kinds of associations have their own notational elements.

Multiplicities. Similar to the cardinalities in ERM, we can specify complexities of an association. These multiplicities are annotated on the endpoints of the association. In general, arbitrary sequences or ranges of integers are allowed; a special symbol is the asterisk * which stands for an arbitrary number. Again, we model the association between books in such a way that a book can only be lent to a single reader at a time, but a reader can borrow multiple books at the same time:

Specialization. A specialization in UML is depicted by a triangular arrow tip pointing from the subclass to the superclass. A subclass inherits from a superclass all attributes and all method definition; however, a subclass is free to override the inherited methods.

Interfaces and implementation. Interfaces prescribe attributes and methods for the classes implemeting them; methods can however only be declared in the interface but must be defined in the implementing classes. Interfaces have their name written in italic (and optionally have the stereotype <<interface>> written above their interface name). The implementing classes are connected to it by a dashed line with a triangular arrow tip. For example, the reader class may implement a Person class with a name attribute:

The overall UML class diagram in Figure 1.3 is equivalent to the previous ER diagram for our library example.

UML is particularly important for the design of object databases (that directly store objects out of an object-oriented program). But due to the widespread use of UML in software engineering, it also suggests itself as a general-purpose database design language.

1.4 Bibliographic Notes

A wealth of text books is available on the principles of database management systems and data modeling. Profound text books with a focus on relational database management systems include the books by Jukic [Juk13], Connolly and Begg [CB09] and Garcia-Molina, Ullman and Widom [GMUW08].

ER diagrams have a long history for the design of relational databases and the ER model has been unified by Chen in his influential article [Che76]. With a focus on the theory of information system design, Olivé [Oli07] provides a row of UML examples; whereas Halpin and Morgan [HM10] cover conceptual modeling for relational databases with both ER and UML diagrams. For a profound background on UML refer to the text books by Booch, Rumbaugh and Jacobson [BRJ05] and Larman [Lar05]. Last but not least, a general introduction to requirements engineering can be found in the text book by van Lamsweerde [vL09].