10 Distributed Database Systems

For several decades, centralized database management systems – running on a single database server – have been predominant. There are several reasons for this:

– complexity of a single-server system was lower and administration easier;

– the prevalent use case was to evaluate short queries (frequent reads) on a coherent set of data, whereas data modifications happened only rarely (infrequent writes);

– network communication speed was slow and hence sending data between different servers was too costly;

– parallelization required rewriting a query into subqueries and recombining the results and this overhead diminished the positive effects of a parallel execution of subqueries.

Whenever there were more demanding requirements (like increased data volume or more frequent writes), the obvious reaction was to equip the single database server with higher capacity in terms of processor speed, memory size or disk space. This way of improving a single server is often termed scaling up or vertical scaling. However, the amount of data and queries a single database server can handle is limited and a single server is always a single point of failure for which a crash might turn out to be extremely costly. Hence, scaling out or horizontal scaling (connecting several cheaper servers in a network) is now seen as a viable – and in some cases the only – option to improve the throughput and latency of a database system at the cost of coordination and synchronization of the database servers. This disadvantage however pays off for large scale systems or global enterprises with several data centers – in particular, due to increased network communication speed. In this chapter we survey the principles of distributed database systems with a focus on failure models and epidemic protocols.

10.1 Scaling horizontally

The ability of a database system to flexibly scale out by distributing data in a server network is called horizontal scalability. Moreover, these servers can work independently: the individual servers have their own processors, memory and disk systems and only communicate with other servers by a network connection. This architecture is supposedly cheaper than one powerful centralized machine. Historically, it is sometimes called a shared-nothing architecture (in contrast to systems where servers share components – like a shared-disk storage or shared-memory). The most common use case today is a distributed database on a shared-nothing architecture; in other words, a distributed database management system (DDBMS) that runs on a network of independent servers. Due to this independence, the servers need not be large, expensive ones but instead may consist of cheaper commodity hardware so that each server can easily be replaced by a new one. In a distributed database data is spread across several database servers.

| A distributed database is a collection of data records that are physically distributed on several servers in a network while logically they belong together. |

A distributed DBMS can become beneficial not only when handling large volumes of data, but also when aiming for improved availability and reliability in smaller scaled systems. Important features of a DDBMS (as identified in [DHJ+07]) are the following:

Load balancing: User queries and other processes should be assigned to the servers in the network such that all servers have approximately the same load (that is, the same amount of processing tasks); an imbalanced load – and in particular, hotspots consisting of those servers that usually execute a lot more tasks than other servers – can lead to a lower performance of the system because the DDBMS does not make use of all the resources available.

Flexible scalability: Servers may flexibly leave and join the network at any time so that the DDBMS can be reconfigured according to the current storage or performance demands. The term membership churn is used to describe the leaving and joining of database servers in the network.

Heterogeneous nodes: The DDBMS may run on a network of servers where some servers might have more capabilities than others. With such support for such heterogeneous nodes, the DDBMS can for example be stepwise migrated onto more performant nodes without the need to upgrade all nodes at once.

Symmetric configuration: Every node is configured identically to the others; hence, each node has the ability to replace a failed node. In particular, user queries can be handled by any server in the system.

Decentralized control: Peer-to-peer algorithms for data management improve failure tolerance of a DDBMS because they avoid the case of a distinguished node which would be the single point of failure for the system.

10.2 Distribution Transparency

To a user, the distributed DBMS should appear as if he was interacting with a single centralized server. In particular, the user must be allowed to send his query to only one node of the system and the distributed DBMS adapts and redirects his query to one or more data nodes. Hence, for a user it must basically be transparent how the DBMS internally handles data storage and query processing in a distributed manner. This is the notion of distribution transparency which has several more aspects that are relevant for database systems:

Access transparency: The distributed database system provides a uniform query and management interface to users independent of the structure of the network or the storage organization.

Location transparency: The distribution of data in the database system (and hence the exact location of each data item) is hidden from the user. The user can query data without having to specify which data item is to be retrieved from which database server in the network.

Replication transparency: If several copies of a data item are stored on different servers (for recovery and availability reasons), the user should not be aware of this and should not have to care about which copies he is accessing. Replication implies that the problem of data consistency has to be handled: the distributed database system should ensure that the different copies are updated regularly so that users can access any copy and still retrieve correct data. Fragmentation transparency: If a large data set has to be split into several data items (usually called fragments, partitions or shards), the distributed database system does this splitting internally and the user can query the database as if it contained the entire unfragmented data set. In particular, to answer a user query, subqueries are redirected to different servers and the data items relevant to the query are recombined by the database system.

Migration transparency: If some data items have to be moved from one server to another, this should not affect how a user accesses the data. Concurrency transparency: When multiple users access the database system, their operation must not interfere or lead to incorrect data in the database system. Concurrency is much more diffcult to manage for a distributed system than for a centralized one. A major problem is how to resolve conflicts due to the distributed nature of the system: A conflict occurs, for example, when two users concurrently try to each write to a different copy of a replicated data item on two different servers.

Failure transparency: As a distributed database system is more complex than a centralized one, many more failure cases can arise. The distributed database system should hence do its best to continue processing user requests even in the presence of failures.

10.3 Failures in Distributed Systems

In a distributed system with several independent components connected by a network, parts of the network may fail. From a technical perspective, failures can for example be the following:

Server failure: A database server may fail to process messages it receives for example due to a faulty network component; or the server fully crashes and has to be restarted. Servers may also be delayed in processing messages (due to overload) or may send incorrect messages due to errors while processing data.

Message failures: When messages are transmitted over communication links in the network, messages may be delayed or lost during times of high congestion of the network. Even if a message is eventually transmitted, a receiving component may fail to handle a delayed message due to a timeout. At times messages may also be duplicated due to faulty components.

Link failure: A communication link between two servers may be unable to transmit messages – or it might corrupt or duplicate messages. Hence, a link failure can cause a message failure.

Network partition: A network is partitioned when it is split into two or more subnetworks that are unable to communicate because all communication links between them are broken. As a special case, a network is also called partitioned when one of the subnetworks consists of just one single server.

In a more abstract setting, failures of nodes (that is, servers) in a network can be categorized as follows:

Crash failures: A crash failure is a permanent failure of a server and corresponds to aborting a communication protocol. That is, once the server crashed, it will never resume operation so that communication with it is not possible any longer.

Omission failures: An omission failure corresponds to not taking (in other words omitting) some action; for example, an omission failure occurs when some server fails to send a message it should be sending according to some communication protocol.

Commission failures: A commission failure corresponds to taking an action that is not correct according to (and hence is a deviation from) a communication protocol. A server might for example send an incorrect or unnecessary message.

Crash failures are a special case of omission failures (because a crashed server fails without recovering but until the crash the server acts according to protocol). The union of omission and commission failures is called Byzantine failures (based on the article [LSP82]) – hence components of the distributed system may fail in arbitrary ways. In contrast, the term non-Byzantine failures usually refers to omission failures (like crash failures and message loss) but in addition explicitly also covers duplication and reordering of messages.

Distributed DBMSs have to provide a high level of fault tolerance: even in the presence of failures, the unaffected servers should continue to process user queries. A distributed system may in general be devised based on a certain failure model which describes the set of failures that the system can tolerate. Two common failure models are:

Fail-stop model: All server failures are crash failures that permanently render the server unavailable and hence remove it from the set of available servers.

Fail-recover model: A server may halt but it may later resume execution – for example after a restart. There are two particular cases for resuming execution: the server may resume execution in the state before it was halted (it can hence remember its internal state and all the messages is has processed previously) or it may start from scratch (and hence it forgets any previous state it was in or any messages it has processed).

10.4 Epidemic Protocols and Gossip Communication

Due to the many properties (like failure tolerance and scalability) that a distributed database system should have, the propagation of information (like membership lists or data updates) in the network of database servers is diffcult to manage. In the simplest scenario, whenever new information is received by one server, the server sends a notification to all the other servers he knows. However the initiating server might not be aware of all servers currently in the network and some of his messages might be lost due to network failures. Moreover, the network might quickly change due to insertions or removals of servers.

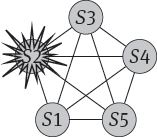

As the more flexible alternative, the database servers can be seen as participants in a peer-to-peer network where there is no central coordinator. These peers can coordinate themselves by communicating pairwise. From a database perspective, epidemic protocols are a category of peer-to-peer algorithms, where information (for example data updates) is spread like an infection all over the server network. Another application of epidemic algorithms is membership of peers in the network: each server has to maintain a list of names of those servers that are part of the network and hence are possible communication partners. This membership list can then be kept up-to-date by an epidemic algorithm: servers exchange their membership lists in a peer-to-peer fashion so that the information which servers are part of the network slowly spreads over the entire network.

The notion of epidemic protocols has its roots in an article on updates in distributed databases ([DGH+88]). With an epidemic algorithm, servers in the network pass on a message like an infection. In the above membership example, a message could for example be the notification that some new server has joined the network so that all servers that receive this message can update their membership list accordingly. In analogy to epidemiology, servers that have received a new message that they want to pass on to others are called infected nodes; nodes that so far have not received the new message are called susceptible nodes. Nodes that already have received the message but are no longer willing to pass it on are called removed nodes.

There are three different communication modes that can be applied in epidemic algorithms:

push-only: An infected server contacts another server and passes on all the new messages it has received. That is, to spread the infection, the infected server has to find another server that is susceptible.

pull-only: A susceptible server contacts another server and asks for new messages. To spread the infection, the susceptible server has to find an infected server.

push-pull: One server contacts another server and both exchange their new messages. After this exchange, both servers have the same state (for example an identical membership list). Both servers are susceptible and infected at the same time.

A term that is often used as a synonym for epidemic message exchange across servers is gossiping; the term expresses that messages spread in a server network like rumors in human communication. It has its background in the graph-theoretical analysis of the gossip problem [Ber73].

Two variants of epidemic algorithms for database updates discussed in [DGH+88] are anti-entropy and rumor spreading. They have the following properties:

Anti-entropy: Anti-entropy is a periodic task that is scheduled for a fixed time span; for example, anti-entropy can be configured to run once every minute. With anti-entropy, one server chooses another server (from its local membership list) at random to exchange new messages in one of the communication modes described above. Anti-entropy is called a simple epidemic because any server is either susceptible or infective (there are no removed servers) and the infection process does not degrade over time or due to some probabilistic decision.

Rumor spreading: With rumor spreading, the infection can be triggered by the arrival of a new message (in which case the server becomes infective); or it can be run periodically. With rumor spreading, the infection proceeds in several rounds. In each round, a server chooses a set of communication partners; the number of communication partners chosen is called the fan-out. Rumor spreading is the case of a complex epidemic because infection of other servers is a dynamic process: the amount of infections decreases with every round as the number of removed servers grows. This decrease of infections can be varied as follows:

probabilistic: After each exchange with another server, the server stops being infective with a certain probability.

counter-based: After a certain number k of exchanges, the server stops being infective. There are two extreme cases: in the infect-and-die case, the number k is equal to the fan-out – that is, the server runs one round of infection and then stops; in the infect-forever case, the number k is infinite and the server never stops.

blind: The server becomes removed without taking the feedback of communication partners is into account. In particular, in the probabilistic case, he decides to become removed with a certain probability after each exchange; in the counter-based case, the server becomes removed after a number k of exchanges.

feedback-based: The server becomes removed if it notices that the communication partners already have received the new message. In particular, in the probabilistic case, whenever the infective server notices that the communication partner already knows the message he wants to spread, then he stops being infective with a certain probability; in the counter case, the being infective stops after k exchanges with a server that already knows the message.

One problem that can occur with epidemic algorithms is the case of isolated subnets: message exchanges only takes place inside subnets but there are no exchanges between the subnets so that the sets of messages between the subnets always differ. This is the case of a logical partition where the communication links are working but nevertheless the subnets do not communicate (in contrast to a physical network partition where some communication links might be broken). This is in particular a problem when an epidemic algorithm is used for membership lists: then the servers only consider the other servers in their subnet as being members of the network – without ever becoming aware of the other subnets. A solution around this is to use a set of seed servers: a set of servers with which every server joining the network starts the message exchange.

10.4.1 Hash Trees

A major issue with epidemic protocols is how two servers can identify those messages in which they differ. For a large amount of messages, a complete comparison of all messages is not feasible as this would slow down the epidemic process tremendously: the entire message list of one server has to be sent to the other server and the server has to go through the two message lists sequentially to find missing messages. A simple improvement is to use a list of hash values: comparison of hash values (which are shorter than the entire messages) is faster; but on the downside, the hash values have to be computed and still the list of hash values has to be compared sequentially.

Hence, a much more efficient way of comparison has to be found. This is possible with a hash tree (or Merkle tree [Mer87]): a hash tree starts with a hash of each message in a leave node, and then iteratively concatenates hashes, hashes the concatenations again and combines the hash values into a tree structure (see Figure 10.1). For the inner nodes, the closer a hash value is to the root, the more leaves (and hence messages) it covers. The last hash value at the root of the tree is called the top hash.

Now, with a hash tree, message list comparison is improved: first of all, when the two top hashes are identical, the two message lists are identical, too – based on the assumption that no collisions occur that result in identical hash values for different inputs. Hence, the case that there are no new messages to spread can be determined by just sending the value of the top hash to the other server that compares the sent one with his own top hash. However, if the top hashes differ, we go the the next level of the tree and compare the hash values there. Whenever we encounter an inner node that has identical hash values in the two hash trees under comparison, then we know that the messages below this inner node are identical; nor further comparisons are necessary for the subtree starting at this node. On the other hand, as long as hash values differ for an inner node, we have to go one level deeper and compare the hash values of the child nodes. When we reach a leaf node with different hash values, we have identified a message on which the two message lists differ. An important precondition for this to work is that both servers use the same sorting order for the messages. If the sorting order differs in the to-be-compared trees, hashes are combined in a different manner and the comparison of the hash values reveals many differences although the message lists are identical. That is, in order to avoid unnecessary hash comparisons, we have to ensure identical root hashes for identical message list. This can for example be done as follows:

–Let the two servers agree on a sorting order, sort all messages according to this order and then compute the Merkle tree just before the comparison. This process obviously introduces a delay due to sorting and the on-the-fly computation of the hash trees.

–Another option is to make each server precompute Merkle trees for any possible sorting order of the messages. For a comparison of their trees, the servers then just have to find those two trees with the same sorting order. This option only makes sense for small message lists because computing all possible Merkle trees as well as updating them whenever a new message arrives costs time; and storing all trees requires a lot of storage space.

Merkle trees are also used for record comparison in extensible record stores or key-value stores. Some of these stores execute a major compaction (see Section 8.2.4) before computing the Merkle tree; in this way, unnecessary records (that are masked by delete markers) are removed and the records are sorted based on the order configured for the keys and based on the timestamps.

10.4.2 Death Certificates

There is a drawback with the decentralized control of peer-to-peer networks when it comes to withdrawing information. With peer-to-peer algorithms in general – and epidemic protocols in particular – it becomes quite complicated to delete messages. The problem is the following: if a message is deleted locally (in one message list), then further message exchanges with the peer servers might reintroduce the deleted message. As an example consider membership lists: when a server leaves the network, it does not suffice to delete the server’s entry from some membership lists; for the deletion to become effective, the server’s entry has to be deleted from all membership lists at the same time. However due to the peer-to-peer nature of the server network this will be impossible to achieve. That is why explicit delete messages (so-called death certificates) have to represent the withdrawal of information. Whenever a death certificate is received by a server, it will delete the corresponding original message (for example, it will remove the server for which the death certificate was received from the local membership list) but it will keep the death certificate. In this way the deletion of messages can spread with the usual epidemic behavior: whenever a message exchange between peers takes place all death certificates are exchanged, too, such that messages for which a death certificate exists are immediately deleted and will not be exchanged. This in effect leads to a local deletion of messages while avoiding any reintroduction of deleted messages.

Death certificates are effective for the deletion of messages, but they have another disadvantage: because death certificates themselves cannot be deleted (in order to avoid reintroductions of messages), over time they can pile up and occupy all the storage space of the servers. In practical settings the following options can help alleviate this problem:

Time-to-live values: One option to avoid this overabundance of death certificates is to attach a time-to-live value to each certificate. Whenever this time span has passed, a server can delete the death certificate. It could however still happen that some servers have not received a death certificate during its time-to-live; these servers can then cause a reintroduction of the to-be-deleted message. The time-to-live value must hence be high enough to keep the probability of reintroduction tolerably small. Yet in practice, if many deletions take place, even with a time-to-live value there may be too many death certificates around that lead to a performance degradation of the epidemic behavior.

Dormant certificates: Another option is to permanently keep death certificates only at some few servers; these are then so-called dormant certificates [DGH+88]. All other servers can delete the certificates after some time. Only in case of a reintroduction the corresponding dormant death certificate is reactivated and spread again to the other servers.

10.5 Bibliographic Notes

The standard textbook by Tanenbaum and van Steen [TvS06] gives a general overview of distributed systems. The textbook by Özsu and Valduriez [ÖV11] provides an in-depth treatment of distributed database systems with a focus on approaches for the relational data model. A textbook focusing on failure tolerance and consensus is the one by Attiya and Welch [AW04].

Gossiping and epidemic algorithms have raised scientific interest for quite some time. Starting with a purely mathematical treatment (the gossip problem [Ber73]), one of the first practical approaches was presented in [DGH+88]. Several variants have been studied since then – for example in [LLSG92, LM99, JVG+07, KvS07, BGFvS09]. Hash trees have been applied by Merkle [Mer87] for authentication of digital signatures.