One of the benefits of asynchronous e-learning is the potential to tailor training to just what the individual learner needs. If someone has already worked with a spreadsheet and knows the basics of cells and formulas, why should she spend time going through all of the introductory lessons on cells and formulas? Unlike the classroom, where learning mostly proceeds in a lock-step group pace and sequence, individualized instruction should in theory adjust instruction to each learner.

As we mentioned in Chapter 1, in the United States we invest over $50 billion a year in organizational training. But that figure does not incorporate the most expensive element of any training program—the time of the learners. If we can save learning time by tailoring instruction to each person's needs, there is a great potential for economic payoff in efficient instruction.

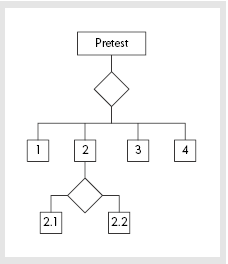

The ability to individualize instruction is called adaptive learning. You are probably most familiar with adaptive learning in the form of pretests that assign learners to the courses or lessons that match their skills. Depending on the size and scope of the course, the learner will typically complete a pretest that includes fifteen to twenty test questions followed by an assignment to specific lessons. However, in most cases, once they start, all learners work through the same lesson, regardless of their rate of learning. Everyone sees the same sequence of examples and works the same number of practice exercises. We know that students learn at different rates and, if we could track learning dynamically in the lesson, we could adjust the amount of instruction to learner needs. However, taking frequent tests throughout a course or lesson is too time-consuming. Therefore, dynamic adaptive instruction has generally not been implemented.

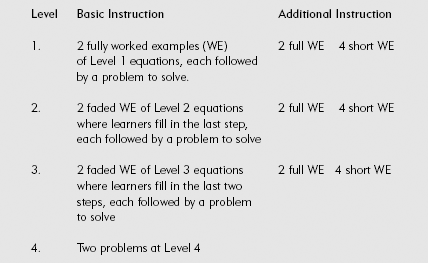

In Chapters 8, 9, and 10 we recommended that you use a series of gradually faded worked examples so that, as learners progress through a lesson, they receive completion examples that require them to fill in more and more steps. However, an implicit assumption behind this method is that all learners develop expertise at a linear rate that matches the progressively faded worked examples. A more accurate approach would adjust the sequence from worked examples through faded worked examples to full problems based on actual measures of learning. Some learners may need many fully worked examples to build a schema. Others will be ready for full practice exercises after only one or two worked examples. e-Learning offers a mechanism for tailoring instruction to each learner. However, for ongoing diagnosis of learning, we need a testing method that can quickly and accurately assess expertise as learners progress. Kalyuga and Sweller (2004, 2005) have recently reported a new rapid testing method that can serve this purpose.

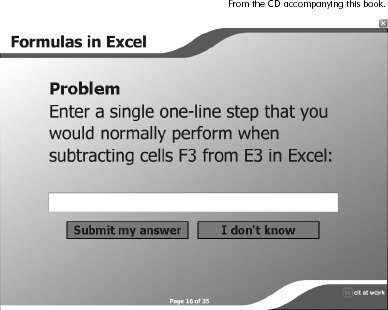

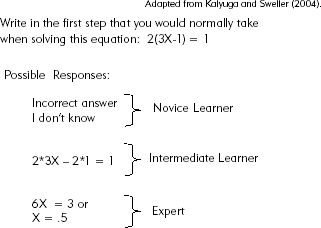

What if, instead of requiring learners to provide complete problem solutions, as in traditional tests, the test required them to write down only the first step they would take to solve a problem? Because experts have acquired and automated many schemas for solving problems in their domain, they can often jump from a problem statement directly to a solution with few or even no intermediate steps. In contrast, someone with a lower level of expertise might know the general approach to take but will need to invest considerable effort to write out several intermediate steps to solve the same problem. Of course, a novice may not even attempt the problem, or may write out an incorrect step. We illustrate this idea in Figure 11.1 with one algebra question from a rapid diagnostic test and several possible responses. Test takers are instructed to respond quickly by writing down the first step they would normally take to solve the problem. Experts who are very familiar with these types of equations are likely to draw on their existing schemas and immediately jump to the solution or the final stages of the solution. Novices are more likely to acknowledge they don't know or to write out something incorrect. Learners with intermediate expertise will respond with a correct, intermediate step leading to the solution.

Figure 11.1. Alternative First Steps to Solve an Algebra Problem Among Learners of Diverse Experience.

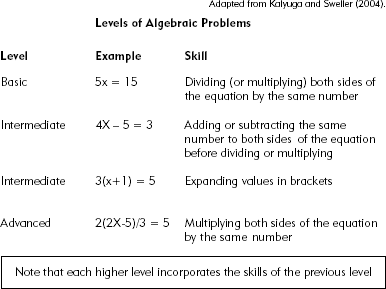
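The three kinds of first-step response described above can be sketched as a small classifier. This is only an illustration under our own assumptions, not the scoring scheme used in the research: the `Level` names, the `classify_first_step` function, and the sample equation 3x + 6 = 12 are hypothetical and are not taken from Figure 11.1. The sketch also assumes that a response can be matched against a known solution path by simple string comparison.

```python
from enum import Enum


class Level(Enum):
    NOVICE = 0        # no attempt, or an incorrect first step
    INTERMEDIATE = 1  # a correct intermediate step
    EXPERT = 2        # jumps straight to the final answer


def _normalize(text):
    """Ignore spacing and letter case when comparing steps."""
    return text.replace(" ", "").lower()


def classify_first_step(response, intermediate_steps, final_answer):
    """Classify one first-step response against a known solution path.

    intermediate_steps: correct steps that come before the final answer.
    final_answer: the fully solved form of the problem.
    """
    answer = _normalize(response)
    if answer == _normalize(final_answer):
        return Level.EXPERT
    if answer in {_normalize(step) for step in intermediate_steps}:
        return Level.INTERMEDIATE
    return Level.NOVICE


# Hypothetical item: solve 3x + 6 = 12 for x.
steps = ["3x = 12 - 6", "3x = 6"]
print(classify_first_step("x = 2", steps, "x = 2"))       # Level.EXPERT
print(classify_first_step("3x = 6", steps, "x = 2"))      # Level.INTERMEDIATE
print(classify_first_step("don't know", steps, "x = 2"))  # Level.NOVICE
```

A real test would need a more forgiving way to recognize equivalent steps than exact string matching, which is used here only to keep the sketch short.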

Since learners are only writing out one step to a problem solution, to obtain a comprehensive picture of their range of skills, you would need to include problems at varying levels of complexity. For example, in Figure 11.2 we show four levels of algebraic problems along with their associated solution rules. If you work out the sample we label "advanced," you will see that its solution incorporates all of the rules of the problems that precede it. If you were to construct a fast test to monitor which rules your learners had acquired, you could develop a four-question test asking the learners to write out the first step they would take to solve each of these problems. Learners who miss the basic problem will most likely not be able to solve any of the more advanced problems, since they all incorporate the rule in Problem 1. Likewise, learners who solve Problem 1 but miss Problem 2 probably know Rule 1 but not Rule 2. By asking learners to write out the first step only, you can save a lot of testing time. By including several problems that incorporate the range of skills involved, you can infer your learners' skill levels.
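The inference described above can be sketched in a few lines. The function name `infer_mastered_rules` is hypothetical, and the sketch rests on the assumption stated in the text: the problems are ordered from basic to advanced, and each problem incorporates the rules of the ones before it. It is deliberately conservative in that it stops counting at the first missed problem.

```python
def infer_mastered_rules(first_step_correct):
    """Estimate how many rules a learner has acquired.

    first_step_correct: booleans ordered from the basic problem to the
    advanced one; True means the first step for that problem was correct.
    Returns the number of consecutive problems answered correctly from
    the start, which we take as the number of rules mastered.
    """
    mastered = 0
    for correct in first_step_correct:
        if not correct:
            break  # later problems build on this rule, so stop here
        mastered += 1
    return mastered


# Solves Problem 1 but misses Problem 2: probably knows Rule 1 only.
print(infer_mastered_rules([True, False, False, False]))  # 1
# Misses the basic problem: no rules credited.
print(infer_mastered_rules([False, False, False, False]))  # 0
```

Ignoring a correct answer that follows a miss is a design choice in this sketch; a lucky guess on a harder item then never places a learner above a rule she has not shown.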

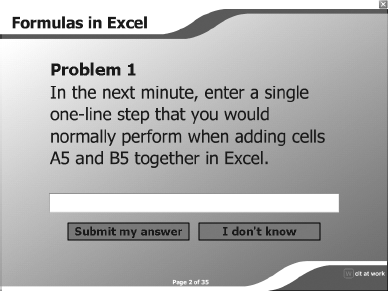

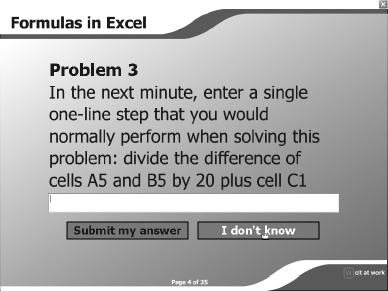

For example, in Figures 11.3 and 11.4 you can see Questions 1 and 3 from our Excel demonstration e-lesson pretest on the CD. The first question involves a simple addition operation, whereas the third question requires constructing a formula that involves four different variables. Depending on which questions were answered accurately, the learner is branched to the appropriate topic in the lesson. You can try this out on the load-managed web-based lesson on our CD.

In order for a rapid testing method like this one to be practical, we need to know that it does give us an accurate picture of the learner's skills. One way to verify the value of such a rapid test is to compare it with test scores from a traditional test measuring the same skills.
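One common way to make such a comparison is to correlate each learner's rapid test score with her score on the traditional test. The sketch below computes a Pearson correlation coefficient; choosing this statistic is our assumption for illustration and is not a description of the analysis used in any particular study. The function name `pearson_r` is hypothetical.

```python
from math import sqrt


def pearson_r(rapid_scores, traditional_scores):
    """Pearson correlation between two equally long lists of scores.

    Each position holds the two scores of the same learner.
    """
    if len(rapid_scores) != len(traditional_scores):
        raise ValueError("each learner needs a score on both tests")
    n = len(rapid_scores)
    if n < 2:
        raise ValueError("at least two learners are required")

    mean_rapid = sum(rapid_scores) / n
    mean_trad = sum(traditional_scores) / n

    covariance = sum(
        (r - mean_rapid) * (t - mean_trad)
        for r, t in zip(rapid_scores, traditional_scores)
    )
    spread_rapid = sum((r - mean_rapid) ** 2 for r in rapid_scores)
    spread_trad = sum((t - mean_trad) ** 2 for t in traditional_scores)

    if spread_rapid == 0 or spread_trad == 0:
        raise ValueError("scores on one test do not vary")
    return covariance / sqrt(spread_rapid * spread_trad)
```

A coefficient close to 1 would suggest that the rapid test ranks learners in much the same way as the longer test; a value near 0 would suggest that the two tests measure different things.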

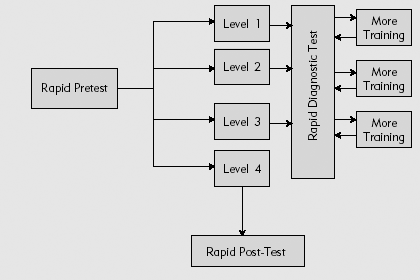

Adaptive online training can be used to tailor instructional content and methods to each learner's level of expertise as they start a course and as they progress through the lessons. Such a program would use results from a rapid pretest to assign learners to the appropriate lesson or topic within a lesson. As the learner progresses through the training in a lesson or lesson topic, the instructional materials are tailored based on her learning.

For example, suppose after taking a lesson pretest, Marcy is assigned to Topic B since she demonstrated an understanding of the prerequisite skill in Topic A. Marcy receives some instruction on Topic B followed by a rapid diagnostic test. If she passes the diagnostic test, she moves on to Topic C. Otherwise, she receives additional training on Topic B and repeats the testing-training cycle until she demonstrates competency in Topic B. In this way, each learner moves through the lessons at his or her own pace and progresses only after demonstrating competency in each skill.
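The testing-training cycle in this example can be sketched as a simple loop. All names here (`run_course`, `instruct`, `passes_test`) are hypothetical, and the `max_cycles` cap is our own addition so that the sketch cannot loop forever; the text itself places no limit on the number of cycles.

```python
def run_course(topics, start_index, instruct, passes_test, max_cycles=5):
    """Move a learner through topics, advancing only after a passed test.

    topics: topic names in teaching order.
    start_index: position of the topic assigned by the pretest.
    instruct(topic, cycle): delivers instruction; cycle 1 is the basic
        instruction, later cycles are additional training.
    passes_test(topic, cycle): True if the rapid diagnostic test is passed.
    Returns a log of (topic, cycle) pairs in the order they occurred.
    """
    log = []
    for topic in topics[start_index:]:
        for cycle in range(1, max_cycles + 1):
            instruct(topic, cycle)
            log.append((topic, cycle))
            if passes_test(topic, cycle):
                break  # competency shown, go on to the next topic
        else:
            # Assumed safeguard: hand the learner over to other support.
            raise RuntimeError(f"no pass on topic {topic!r} after {max_cycles} cycles")
    return log


# Simulated learner placed in Topic B who needs two cycles on B, one on C.
cycles_needed = {"B": 2, "C": 1}
log = run_course(
    ["A", "B", "C"],
    start_index=1,
    instruct=lambda topic, cycle: None,
    passes_test=lambda topic, cycle: cycle >= cycles_needed[topic],
)
print(log)  # [('B', 1), ('B', 2), ('C', 1)]
```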

To consider a more specific adaptive scenario, our demonstration asynchronous Excel lesson on formulas included three topics on how to construct formulas for addition and subtraction, multiplication and division, and a combination of both. Three rapid pretest items like the ones shown in Figures 11.3 and 11.4 are used to assign the learner to the appropriate topic in the lesson. In that topic the learner views a worked example and one or two completion examples, ending with a full problem assignment. At that point the learners are tested again using an item like the one shown in Figure 11.5. If they respond correctly, they move to the next topic. Otherwise, they are assigned additional worked examples. You can try out our adaptive instruction when you view the asynchronous Excel lesson example on the CD.
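The branching in this scenario might be sketched as follows. This is not the code behind the lesson on the CD. The function names are hypothetical, and two rules are our assumptions: the learner starts at the first topic whose pretest item she missed, and a learner who answers all three items correctly skips the lesson.

```python
TOPICS = [
    "addition and subtraction",
    "multiplication and division",
    "combined operations",
]


def first_topic(pretest_correct):
    """Return the index of the starting topic, or None to skip the lesson.

    pretest_correct: one boolean per pretest item, in topic order.
    """
    for index, correct in enumerate(pretest_correct):
        if not correct:
            return index
    return None


def next_activity(topic_index, passed_test):
    """Decide what follows the rapid test at the end of a topic."""
    if not passed_test:
        return ("additional worked examples", topic_index)
    if topic_index + 1 < len(TOPICS):
        return ("start topic", topic_index + 1)
    return ("lesson complete", None)


start = first_topic([True, False, False])
print(TOPICS[start])                # multiplication and division
print(next_activity(start, False))  # ('additional worked examples', 1)
print(next_activity(start, True))   # ('start topic', 2)
```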

Dynamic rapid testing sounds good, but we need evidence that it works as intended. In the next section, we summarize very recent research reported by Kalyuga and Sweller (2005) that compared the learning from this type of adaptive training to learning of a comparison group that did not receive adaptive training.

In this research, the highly structured domain of algebraic equations allowed the researchers to cleanly develop rapid assessments and instructional treatments with faded worked examples matched to learner expertise. Our Excel formula lesson likewise involved sufficient structure in topics to permit us to easily apply the adaptive plan. We will need further research to determine how to best adapt this methodology to less structured learning goals. Since the rapid testing method is very new, we will need more research, including experiments and field trials, before recommending it for all types of instructional goals. In the next paragraphs, we discuss the benefits and drawbacks of adaptive learning.

From a practical perspective, adaptive testing always requires a greater investment of resources than alternative designs that incorporate the same learning methods for everyone. First, the diagnostic tests must be built. Second, the additional instruction must be built for those learners who do not reach competency after completing the basic instruction. The potential benefits of pretests are efficiency gains for trainee populations with diverse prior knowledge. The pretest will allow learners to bypass training topics that they already know. If you have a sufficiently large population of learners with diverse skill sets, you can likely make a strong economic case for diagnostic pretesting.

The potential benefits of dynamic in-lesson assessment are efficiency in learning for faster learners as well as confidence in equivalent skill achievement among learners requiring different levels of instructional support. The dynamic in-lesson test will ensure that learners do not progress until they have mastered prerequisite skills. If you have a trainee population with sufficiently diverse instructional support requirements and it's critical that all learners reach a similar performance level, you can likely make an economic case for dynamic assessment at the topic or lesson level. Naturally, the more fine-grained your adaptive learning, the more development work is involved. If you work at the lesson level, your diagnostic test items will be fewer than if you work at the topic level. Since e-learning lessons should be relatively short, you might gain most cost benefit at the lesson level.

The research on rapid testing is quite recent. However, based on results to date, we conclude that:

- Rapid testing can give as valid an assessment of learning as full tests.
- Greater efficiency in training can be promoted by tailoring instructional methods and content to diverse levels of learner expertise.
- Rapid testing techniques may be applied to pretests and/or internal diagnostic tests.

You should consider adaptive instruction when:

- The instruction will be delivered via asynchronous e-learning,
- You have a large audience of widely varied skill levels for whom significant time will be saved by pretesting, and/or
- You have a large audience with varied instructional support requirements, all of whom must reach a common level of a critical competency.

Depending on your situation, you may want to use the rapid pretest only, the rapid diagnostic test only, or a combination of both. As you can see, the development of the test items and the extra instructional support will add considerably to the cost of the courseware and should be undertaken only when you anticipate sufficient return on investment. Whether using diagnostic assessment or not, you should consider the expertise reversal effect when designing more advanced lessons.

Chapter 11: Use Rapid Testing to Adapt e-Learning to Learner Expertise. Topics include an introduction to rapid testing, an example of a rapid test, a discussion of potential applications of rapid tests, as well as learner control.

The asynchronous Load-Managed Excel Web-Based Lesson demonstrates our adaptation of rapid testing to a lesson on construction of formulas in Excel.

The lesson begins with a three-item rapid pretest. Each item corresponds to a topic on formula formatting in the lesson.

Each lesson topic ends with a rapid diagnostic test. If the response is incorrect, the learner receives additional completion examples and another rapid test item.

The synchronous Virtual Classroom Example mentions (but does not show) a pretest used to assign learners to individual class sessions.

In Parts II and III, we summarized a number of proven techniques for reducing extraneous cognitive load and increasing germane load for novice and for experienced learners. In Part IV we have seen that, when it comes to experienced learners, many of the techniques that improve learning and learning efficiency in novice learners either have no effect or, in some cases, actually have a negative effect.

In the last part of the book we include two chapters that summarize and integrate all of the guidelines we have generated throughout the book. Chapter 12 organizes cognitive load management guidelines into an instructional application context. It presents no new research or guidelines; instead, it integrates the guidelines we've discussed throughout the book in a context appropriate for individuals responsible for the design, development, evaluation, and delivery of training products.