Data backups and data security are the most important aspects of any organization. It is even more important to design and implement business continuity plans to tackle data loss because of various factors. While Elasticsearch is not a database and it does not provide the backup and security functionalities that you can get in databases, it still offers some way around this. Let's learn how you can create cost effective and robust backup plans for your Elasticsearch clusters.

In this chapter, we will cover the following topics:

- Introducing backup and restore mechanisms

- Securing an Elasticsearch cluster

- Load balancing using Nginx

In Elasticsearch, you can implement a backup and restore functionality in two different ways depending on the requirements and efforts put in. You can either create a script to create manual backups and restoration or you can opt for a more automated and functionality-rich Backup-Restore API offered by Elasticsearch.

A snapshot is the backup of a complete cluster or selected indices. The best thing about snapshots is that they are incremental in nature. So, only data that has been changed since the last snapshot will be taken in the next snapshot.

Life was not so easy before the release of Elasticsearch Version 1.0.0. This release not only introduced powerful aggregation functionalities to Elasticsearch, but also brought in the Snapshot Restore API to create backups and restore them on the fly. Initially, only a shared file system was supported by this API, but gradually it has been possible to use this API on AWS to create backups on AWS buckets, Hadoop to create backups inside Hadoop clusters, and Microsoft Azure to create backups on Azure Storage with the help of plugins. In the upcoming section, you will learn how to create backups using a shared file system repository. To use cloud and Hadoop plugins, have a look at the following URLs:

https://github.com/elastic/elasticsearch-cloud-aws#s3-repository

https://github.com/elastic/elasticsearch-hadoop/tree/master/repository-hdfs

https://github.com/elastic/elasticsearch-cloud-azure#azure-repository

Creating snapshots using file system repositories requires the repository to be accessible from all the data and master nodes in the cluster. For this, we will be creating an network file system (NFS) drive in the next section.

NFS is a distributed file system protocol, which allows you to mount remote directories on your server. The mounted directories look like the local directory of the server, therefore using NFS, multiple servers can write to the same directory.

Let's take an example to create a shared directory using NFS. For this example, there is one host server, which can also be viewed as a backup server of Elasticsearch data, two data nodes, and three master nodes.

The following are the IP addresses of all these nodes:

- Host Server: 10.240.131.44

- Data node 1: 10.240.251.58

- Data node 1: 10.240.251.59

- Master Node 1: 10.240.80.41

- Master Node 2: 10.240.80.42

- Master Node 3: 10.240.80.43

The very first step is to install the nfs-kernel-server package after updating the local package index:

sudo apt-get update sudo apt-get install nfs-kernel-server

Once the package is installed, you can create a directory that can be shared among all the clients. Let's create a directory:

sudo mkdir /mnt/shared-directory

Give the access permission of this directory to the nobody user and the nogroup group. They are a special reserved user and group in the Linux operating system that do not need any special permission to run things:

sudo chown –R nobody:nogroup /mnt/shared-directory

The next step is to configure the

NFS Exports, where we can specify with which machine this directory will be shared. For this, open the /etc/exports file with root permissions:

sudo nano /etc/exports

Add the following line, which contains the directory to be shared and the space-separated client IP lists:

/mnt/shared-directory 10.240.251.58(rw,sync,no_subtree_check) 10.240.251.59(rw,sync,no_subtree_check) 10.240.80.41(rw,sync,no_subtree_check) 10.240.80.42(rw,sync,no_subtree_check) 10.240.80.43(rw,sync,no_subtree_check)

Once done, save the file and exit.

The next step is to create an NFS table, which holds the exports of your share by running the following command:

sudo exportfs –a

Now start the NFS service by running this command:

sudo service nfs-kernel-server start

After this, your shared directory is available to the clients you have configured on your host machine. It's time to do the configurations on the client machines.

First of all, your need to install the NFS client after updating the local package index:

sudo apt-get update sudo apt-get install nfs-common

Now, create a directory on the client machine that will be used to mount the remote shared directory:

sudo mkdir /mnt/nfs

Mount the shared directory (by specifying the nfs server host ip:shared directory name) on the client machine by using the following command:

sudo mount 10.240.131.44:/mnt/shared-directory /mnt/nfs

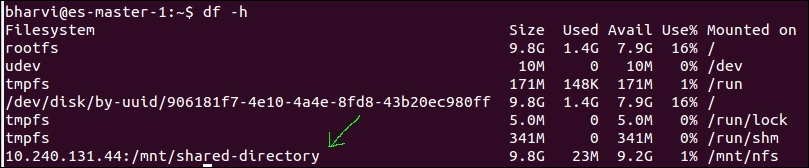

To check whether the mount is successfully done, you can use the following command:

df -h

You will see an extra drive mounted on your system, as shown in the following screenshot, which shows the mounted shared directory:

Please note that mounting the directories/devices using the mount command only mounts them temporarily. For a permanent mount, open the /etc/fstab file:

sudo nano /etc/fstab

host.domain.com:/mnt/shared-directory /mnt/nfs/ nfs auto,noatime,nolock,bg,nfsvers=4,sec=krb5p,intr,tcp,actimeo=1800 0 0

Perform similar steps on all the data and master nodes to mount the shared directory on all of them using NFS.

The following subsections cover the various steps that are performed to create a snapshot.

Add the following line inside the elasticsearch.yml file, to register the path.repo setting on all the master and data nodes:

path.repo: ["/mnt/nfs"]

After this, restart the nodes one by one to reload the configuration.

Register the shared file system repository with the name es-backup:

curl -XPUT 'http://localhost:9200/_snapshot/es-backup' -d '{ "type": "fs", "settings": { "location": "/mnt/nfs/es-backup", "compress": true } }'

In preceding request, the location parameter specifies the path of the snapshots and the compress parameter turns on the compression of the snapshot files. Compression is applied only to the index metadata files (mappings and settings) and not to the data files.

You can create multiple snapshots of the same cluster within a repository. The following is the command that is used to create a snapshot_1 snapshot inside the es-snapshot repository:

curl -XPUT 'http://localhost:9200/_snapshot/es-backup/snapshot_1?wait_for_completion=true'

The wait_for_completion parameter tells whether the request should return immediately after snapshot initialization (defaults to true) or wait for snapshot completion. During snapshot initialization, information about all previous snapshots is loaded into the memory, which means that in large repositories, it may take several seconds (or even minutes) for this command to return even if the wait_for_completion parameter is set to false.

By default, a snapshot of all the open and started indices in the cluster is created. This behavior can be changed by specifying the list of indices in the body of the snapshot request:

curl -XPUT 'http://localhost:9200/_snapshot/es-backup/snapshot_1?wait_for_completion=true' -d '{ "indices": "index_1,index_2", "ignore_unavailable": "true", "include_global_state": false }'

In the preceding request, the indices parameter specifies the names of the indices that need to be included inside the snapshot. The ignore_unavailable parameter, if set to true, enables a snapshot request to not fail if any index is not available in the snapshot creation request. The third parameter, include_global_state, when set to false, avoids the global cluster state to be stored as a part of the snapshot.

To get the details of a single snapshot, you can run the following command:

curl -XPUT 'http://localhost:9200/_snapshot /es-backup/snapshot_1

Use comma-separated snapshot names to get the details of more than one snapshot:

curl -XPUT 'http://localhost:9200/_snapshot /es-backup/snapshot_1

To get the details of all the snapshots, use _all in the end, like this:

curl -XPUT 'http://localhost:9200/_snapshot /es-backup/_all

Restoring a snapshot is very easy and a snapshot can be restored to other clusters too, provided the cluster in which you are restoring is version compatible. You cannot restore a snapshot to a lower version of Elasticsearch.

While restoring snapshots, if the index does not already exist, a new index will be created with the same index name and all the mappings for that index, which was there before creating the snapshot. If the index already exists, then it must be in the closed state and must have the same number of shards as the index snapshot. The restore operation automatically opens the indexes after a successful completion:

Example: restoring a snapshot

To take an example of restoring a snapshot from the es-backup repository and the snapshot_1 snapshot, run the following command against the _restore endpoint on the client node:

curl -XPOST localhost:9200/_snapshot/es-backup/snapshot_1/_restore

This command will restore all the indices of the snapshot.

Elasticsearch offers several options while restoring the snapshots. The following are some of the important ones.

There might be a scenario in which you do not want to restore all the indices of a snapshot and only a few indices. For this, you can use the following command:

curl -XPOST 'localhost:9200/_snapshot/es-backup/snapshot_1/_restore' -d '{ "indices": "index_1,index_2", "ignore_unavailable": "true" }'

Elasticsearch does not have any option to rename an index once it has been created, apart from setting aliases. However, it provides you with an option to rename the indices while restoring from the snapshot. For example:

curl -XPOST 'localhost:9200/_snapshot/es-backup/snapshot_1/_restore' -d '{ "indices": "index_1", "ignore_unavailable": "true", "rename_replacement": "restored_index" }'

Partial restore is a very useful feature. It comes in handy in scenarios such as creating snapshots, if the snapshots can not be created for some of the shards. In this case, the entire restore process will fail if one or more indices does not have a snapshot of all the shards. In this case, you can use the following command to restore such indices back into cluster:

curl -XPOST 'localhost:9200/_snapshot/es-backup/snapshot_1/_restore' -d '{ "partial": true }'

Note that you will lose the data of the missing shard in this case, and those missing shards will be created as empty ones after the completion of the restore process.

During restoration, many of the index settings can be changed, such as the number of replicas and refresh intervals. For example, to restore an index named my_index with a replica size of 0 (for a faster restore process) and a default refresh interval rate, you can run this command:

curl -XPOST 'localhost:9200/_snapshot/es-backup/snapshot_1/_restore' -d '{ "indices": "my_index", "index_settings": { "index.number_of_replicas": 0 }, "ignore_index_settings": [ "index.refresh_interval" ] }'

The indices parameter can contain more than one comma separated index_name.

Once restored, the replicas can be increased with the following command:

curl -XPUT 'localhost:9200/my_index/_settings' -d ' { "index" : { "number_of_replicas" : 1 } }'

To restore a snapshot to a different cluster, you first need to register the repository from where the snapshot needs to be restored to a new cluster.

There are some additional considerations that you need to take in this process:

- The version of the new cluster must be the same or greater than the cluster from which the snapshot had been taken

- Index settings can be applied during snapshot restoration

- The new cluster need not be of the same size (the number of nodes and so on) as the old cluster

- An appropriate disk size and memory must be available for restoration

- The plugins that create additional mapping types must be installed on both the clusters (that is, attachment plugins); otherwise, the index will fail to open due to mapping problems.

Manual backups are simple to understand, but difficult to manage with growing datasets and the number of machines inside the cluster. However, you can still give a thought to creating manual backups in small clusters. The following are the steps needed to be performed to create backups:

- Shut down the node.

- Copy the data to a backup directory. You can either take a backup of all the indices available on a node by navigating to the

path_to_data_directory/cluster_name/nodes/0/directory and copy the complete indices folder or can take a backup of the individual indices too. - Start the node.