CHAPTER 2

Sixty Years of Event Processing

Event processing technologies over the past sixty years up to today and likely developments in the future:

- Discrete event simulation

- Networks

- Active databases

- Middleware, SOA, and the enterprise service bus

- Event driven architectures

Event processing in one form or another is not new. It has been going on for the past sixty years or more. If we look at the history of event processing, we can see a natural development from the use of events in earlier applications to the twenty-first-century uses of events in communications, information processing, and enterprise management. History gives us a perspective on the origins of Complex Event Processing (CEP) and how the CEP techniques evolved and will evolve in the future.

This chapter gives a brief review of how event processing has been a basis for developing various technologies over the past sixty years. These developments happened very rapidly, one after the other, and they are all established technologies today—big business, as it happens! Chapters 5 and 9 describe a longer-term view of future developments in event processing.

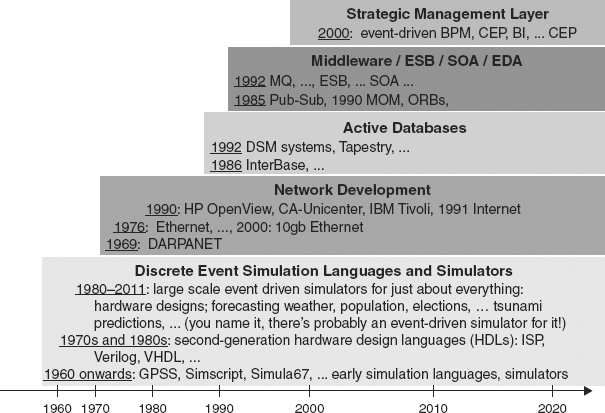

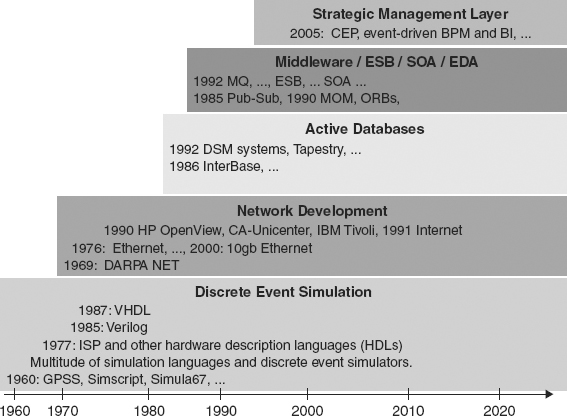

Four technology movements have arisen during the past sixty years, all of them based upon processing different types of events and for very different purposes. They are Discrete Event Simulation, Networks, Databases (among which Active Databases made explicit use of event processing in their design), and the Middleware movement—which includes many developments such as event driven service-oriented architectures (SOA), enterprise service buses (ESBs), and event driven architectures (EDAs). And the ascent of newer event-based ideas continues with business processes, business intelligence systems, and the like. Figure 2.1 shows the timeline over which these technologies developed.1 So modern event processing as we know it in the business world is not new. But there are some new ideas and concepts in its application to the management of enterprises, concerning (1) the types of events being processed, (2) the concepts and techniques being used, and (3) the objectives and goals. Some of those new ideas and concepts make up CEP.

FIGURE 2.1 The Rise of Event Driven Technologies over the Past 60 Years

We should clarify the meaning of event here. There are two meanings. First, an event is something that happens. That’s the dictionary definition.

But there’s a second meaning. In using computers to process events with various kinds of analysis and predictions, we represent events as data (sometimes called “objects”) in the computer. We refer to those data as events, also. So essentially, event has a second meaning, the data representing the things that happen.2

We use the word event in both meanings. But we can always tell from the context which meaning of the word we are using. Programmers call words with two meanings overloaded—so we’re going to overload the word event!

There’s another distinction that is perhaps worth mentioning: the distinction sometimes drawn between actual events and virtual events. It is made in the world of modeling and computer simulation to distinguish between events in an actual system and their simulations in the computer model.

An example of a virtual event is an event that is thought of as happening—like using your imagination to visualize the events that will happen if you don’t stop at a red light. Suppose, for example, there’s a car crossing on green, and you imagine a crash! But since you stopped at the red light, those events didn’t happen—you imagined them as virtual events. And you used them in decision making.

Event driven simulation refers to the use of events to drive the steps in computing with models to predict the behavior of systems—anything from a design for a controller for traffic lights, or a model for a manufacturing line, to a plan for battlefield operations. This is one of the earliest uses of events and event driven computing. Rooted in World War II, this area of computer programming took off in the 1950s. People started to use computers—a recent invention at the time—to predict how designs of devices such as controllers would behave before they were actually manufactured. The idea was to save costs involved in letting mistakes in designs go forward to the manufacture of the design and also to shorten the time taken in the design-to-manufacture cycle.

Computer simulation started out very simply as a haphazard activity in which people wrote programs, usually in machine code, to predict the behavior of a design or model. The activity quickly expanded to other things, such as predicting how the operations of a company could be improved to grow sales, and later on to much more ambitious undertakings like forecasting the weather.3

During the 1950s, the techniques for building computer simulations quickly became understood and formalized. The core idea is to use a computer program that models—or mimics—an actual system. The system could be anything from a factory production line to a company’s sales plans to voter behavior in an election. Simulation refers to executing the model on input data. It turned out that many simulations used events as a way to organize their computations. The event driven method is now widely adopted.

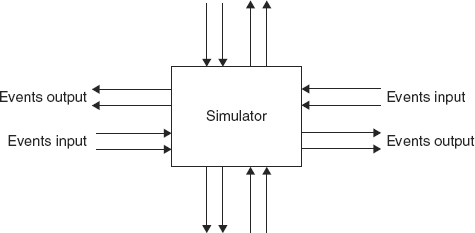

This became known as discrete event simulation, because events are used to control the progress of the computation that is simulating the system and to move that computation forward one step at a time (see Figures 2.2 and 2.3). Each step involves running the model on data representing the current state of the system—a discrete step. Events containing data are input to the next step. As a result, the model is run on those data and produces a new set of output events that contain data defining a new state. These events are input to the next step, and so on. So an event driven simulation produces a step-by-step succession of sets of events that represent states of the system being simulated.
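To make the step-by-step idea concrete, here is a minimal Python sketch of one common way to organize a discrete event simulator: pending events are kept in a queue ordered by simulated time, the earliest one is repeatedly taken and fed to the model, and the output events the model produces are scheduled in turn. The traffic-light model, the `simulate` function, and its parameters are hypothetical illustrations, not taken from any particular simulation language.

```python
import heapq
from dataclasses import dataclass, field

@dataclass(order=True)
class Event:
    time: float
    seq: int                              # tie-breaker: equal times keep scheduling order
    kind: str = field(compare=False)      # what happened (not used for ordering)

def simulate(initial, model, until):
    """Next-event loop: take the earliest pending event, run the model on it,
    and schedule the output events the model produces."""
    queue, trace, seq = [], [], 0
    for time, kind in initial:
        heapq.heappush(queue, Event(time, seq, kind))
        seq += 1
    while queue and queue[0].time <= until:
        event = heapq.heappop(queue)
        trace.append((event.time, event.kind))
        for delay, kind in model(event):          # model output: (delay, kind) pairs
            heapq.heappush(queue, Event(event.time + delay, seq, kind))
            seq += 1
    return trace

# Hypothetical model: a traffic-light controller cycling green -> yellow -> red.
CYCLE = {"green": ("yellow", 30), "yellow": ("red", 5), "red": ("green", 25)}

def traffic_light(event):
    next_kind, duration = CYCLE[event.kind]
    return [(duration, next_kind)]

print(simulate([(0, "green")], traffic_light, until=60))
# → [(0, 'green'), (30, 'yellow'), (35, 'red'), (60, 'green')]
```

Because the queue is ordered by time, the simulator jumps directly from one event to the next instead of ticking through every instant.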

FIGURE 2.2 Event Driven Simulation Cell

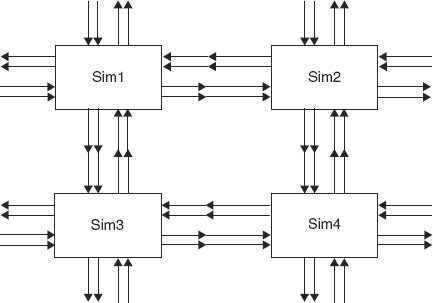

FIGURE 2.3 A Simulation Grid Showing Event Flows between the Cells

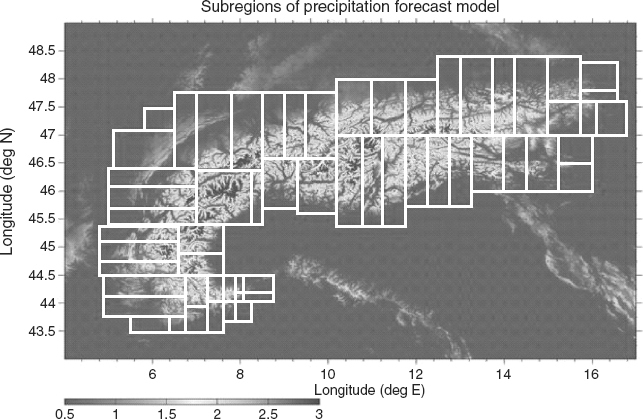

A discrete event simulator is often structured into cooperating simulators for specific parts of the system being modeled. The whole simulation is driven by events. For example, weather simulators are commonly made up of a grid of three-dimensional cells. Each cell is a simulator for the weather in a specific small area, usually covering a surface area less than fifty kilometers square and going up to several thousand feet. A cell takes events from neighboring cells as inputs, uses those data to compute a change of state for the component as a function of the inputs, and outputs its results to its neighboring cells. In such a simulator, a cell is an event processing finite state machine. Figure 2.2 shows a single cell. It waits and does nothing until it receives input events, so it is essentially “driven by events.”
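A single cell can be sketched as a small event-driven state machine. In this hypothetical Python sketch the cell's state is one temperature value and its transition function simply moves that value halfway toward the mean of its inputs; real weather cells encode far richer physics and local geography.

```python
class Cell:
    """A simulation cell as an event-driven state machine: it stays idle until
    input events arrive, then updates its state and emits output events."""

    def __init__(self, name, temperature, neighbors=()):
        self.name = name
        self.temperature = temperature    # the cell's state (hypothetical)
        self.neighbors = list(neighbors)  # names of adjacent cells
        self.inbox = []                   # input events not yet processed

    def receive(self, event):
        self.inbox.append(event)

    def step(self):
        """Process all pending input events and return the output events."""
        if not self.inbox:
            return []                     # no input: do nothing
        inputs, self.inbox = self.inbox, []
        # Hypothetical transition function: move halfway toward the inputs' mean.
        mean = sum(e["temperature"] for e in inputs) / len(inputs)
        self.temperature = (self.temperature + mean) / 2
        # One output event per neighbor, carrying the new state.
        return [{"from": self.name, "to": n, "temperature": self.temperature}
                for n in self.neighbors]

cell = Cell("A", 10.0, neighbors=["B", "C"])
print(cell.step())   # → []
cell.receive({"from": "B", "temperature": 20.0})
cell.receive({"from": "C", "temperature": 30.0})
print(cell.step())
```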

The outputs from each cell are communicated as inputs to its immediate neighboring cells, and so on. The overall simulator is made up of a grid of cells (see Figure 2.3), each one driven by events from its neighbors and computing continuously as new inputs arrive. So events are flowing continuously in and out of the cells of the simulation. Each cell will have a different function and state from the other cells.

Figure 2.4 shows a grid structure for a simulator for weather forecasts over the Austrian Alps. Each cell will encode the unique geographic characteristics of its locality.

FIGURE 2.4 Grid Use in Simulating Alpine Weather Conditions over Austria

In general, event driven simulators use various scheduling methods to run each component of the simulation. A round robin scheduler is often used to ensure fair scheduling, so that each cell gets to run. As event driven simulation developed, many different types of events were used in simulations; in some cases they contained complex data, timing information, and details concerning their origin and destination.
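The following hypothetical Python sketch runs a small grid of cells under a simple round-robin scheduler: in each round every cell, taken in a fixed cyclic order, gets one turn to consume its pending input events, and events sent during a round are delivered at the end of that round. The cell behavior (a disturbance that spreads to neighbors) is chosen only so that the effect of scheduling is easy to check.

```python
def neighbors(r, c, rows, cols):
    """Yield the 4-connected neighbors of grid cell (r, c)."""
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        if 0 <= r + dr < rows and 0 <= c + dc < cols:
            yield (r + dr, c + dc)

def run_round_robin(rows, cols, source, rounds):
    """Return {cell: round in which the cell first received an event}."""
    inbox = {(r, c): [] for r in range(rows) for c in range(cols)}
    inbox[source].append("disturbance")
    reached = {}
    order = sorted(inbox)                         # fixed cyclic order of turns
    for rnd in range(rounds):
        outbox = []
        for cell in order:                        # every cell gets one turn per round
            events, inbox[cell] = inbox[cell], []
            if not events:
                continue                          # idle until events arrive
            if cell not in reached:
                reached[cell] = rnd
                outbox.extend((n, "disturbance") for n in neighbors(*cell, rows, cols))
        for target, event in outbox:              # deliver at the end of the round
            inbox[target].append(event)
    return reached

reached = run_round_robin(3, 3, source=(0, 0), rounds=5)
print(reached[(2, 2)])   # → 4
```

With this delivery rule, the round in which a cell is first reached equals its grid distance from the source; delivering events immediately instead would make the result depend on the order in which cells take their turns.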

Commercial companies began to see the potential of discrete event simulation, and their use of it escalated as the savings in production costs became obvious.

By the 1960s, most large manufacturing or aerospace companies had their own internal event driven simulation languages. Some of the better known early simulation languages are listed in Figure 2.1. Simula67 is perhaps the best known of them, notably because it was not only a simulation language but also became known in the 1980s for its object-oriented use of modules—the programming language analogy of simulation cells.

Today, event driven simulation is big business. The design of almost any product is subjected to some kind of simulation before it is produced. For example, designs for a new computer chip are subjected to CPU years of simulation at every design level, from the instruction set level to the gate level, before they reach the production phase. Indeed, the further along the production sequence of “design—simulate—prototype—test—manufacture” an error survives, the more expensive it is to correct. And today in many other technology areas we can find the use of large-scale event driven simulations, from weather forecasting to the petroleum industry’s use of simulations in oil field exploration.

Some of the techniques in CEP were first developed at Stanford University to aid in analyzing the output from event driven simulations. These included the use of event patterns and event abstraction. At that time event driven simulators produced a single stream of events ordered by the time at which they were computed by the simulator. Many of the simulators did not show which events actually happened simultaneously in the actual system, and none of the simulators showed which events caused others in the system being modeled. The viewer had to figure all of that out!

The Stanford simulation analyzer did something new. Instead of leaving the user to analyze manually a simulation output stream of low-level events, all jumbled together, it applied CEP techniques to organize the events in the stream. The stream was analyzed for patterns of low-level events that signified more meaningful higher-level events, and the analyzer produced a corresponding output stream of those higher-level events.

The higher-level events were much easier to understand. These events were much closer to the concepts humans would use to design or talk about the systems being simulated—for example, CPU instructions rather than gate-level events. Also there were far fewer of them, which made them easier to analyze. The number of events produced by a simulation decreases exponentially as the level of the events increases. Errors tended to be magnified in the higher-level events and were, therefore, much easier to detect. For example, a CPU instruction might fail to complete, whereas the corresponding design error at the gate level could be a single wrong connection among all the connections between two sets of 128 terminal pins, which is much more difficult to detect.
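This kind of abstraction can be illustrated with a toy example. In the Python sketch below the low-level events are hypothetical (time, instruction, phase) records standing in for much finer-grained gate-level events. A pattern of three phases in order is abstracted into one higher-level "completed" event, and an instruction whose pattern never completes becomes a "failed" event, which is far easier to spot than its low-level symptoms.

```python
# Hypothetical low-level events: (time, instruction_id, phase), time-ordered.
PATTERN = ("fetch", "decode", "execute")

def abstract(stream):
    """Group low-level events by instruction and emit one higher-level event
    per instruction: 'completed' if its phases match PATTERN in order,
    'failed' otherwise."""
    phases = {}
    for _time, instruction, phase in stream:
        phases.setdefault(instruction, []).append(phase)
    return [(instruction, "completed" if tuple(seen) == PATTERN else "failed")
            for instruction, seen in phases.items()]

low_level = [(1, "i1", "fetch"), (2, "i2", "fetch"), (3, "i1", "decode"),
             (4, "i2", "decode"), (5, "i1", "execute")]
print(abstract(low_level))   # → [('i1', 'completed'), ('i2', 'failed')]
```

For simplicity the sketch examines the whole stream at once; an analyzer working on a live stream would also need a timeout to decide when an incomplete pattern counts as a failure.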

It was quickly realized at Stanford that these event processing techniques had wide application outside of the simulation area.

Event processing technology was also developed as a fundamental part of networking, starting with the ARPANET in 1969. But there was no recognition in the early days that there was such a thing as an event processing technology within networking. It was just there, as a part of networking. Of course, this was a very different kind of event processing from what goes on in discrete event simulation.

The emphasis was on using very simple types of events to build communications between computers over unreliable transmission lines. Communication was by means of packets. A packet is essentially a sequence of bits that encode a small amount of data, together with the packet’s origin and destination in the network. Computers communicate by sending packets to each other over the transmission line. The sending and receiving of packets are events—things that happen. To ensure that a packet arrives at the other end, the computers engage in a protocol of acknowledging receipt of each packet or resending it if an acknowledgement is not received within a time limit. If a packet is lost during transmission, it will be resent until it does arrive. The communication protocols are methods of processing events to ensure certain properties (e.g., reliability of communication). Note that there may be several “hops” between the sender and receiver, that is, intermediate computers that pass the packet onward.
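The acknowledge-and-resend protocol can be sketched as a simple stop-and-wait loop. This is a simplified illustration, not the algorithm of any particular network protocol: loss is modeled by a hypothetical `channel()` function that reports whether a transmission got through, and a missing acknowledgement is treated as a timeout.

```python
def send_reliably(packets, channel, max_tries=10):
    """Stop-and-wait sketch: send a packet, wait for its acknowledgement, and
    resend after a timeout if either the packet or its ack is lost.
    `channel()` returns True if a transmission gets through (hypothetical)."""
    delivered, log = [], []
    for seq, payload in enumerate(packets):
        for attempt in range(1, max_tries + 1):
            log.append(("send", seq, attempt))
            if channel():                                   # packet arrived
                if not delivered or delivered[-1][0] != seq:
                    delivered.append((seq, payload))        # receiver drops duplicates
                if channel():                               # ack arrived
                    log.append(("ack", seq, attempt))
                    break
            log.append(("timeout", seq, attempt))           # no ack in time: resend
        else:
            raise RuntimeError(f"packet {seq} unacknowledged after {max_tries} tries")
    return [payload for _, payload in delivered], log

# Scripted losses: first packet lost once, then an ack lost once.
outcomes = iter([False, True, True, True, False, True, True])
data, log = send_reliably(["a", "b"], lambda: next(outcomes))
print(data)   # → ['a', 'b']
```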

At the lowest level, then, event processing in a communications network is essentially the processing of very small messages. To send a human communication—say an email—the message is broken down into a set of packets, each packet is transmitted using the protocol, and the set is reassembled at the other end. One can imagine a continuous activity in the network consisting of events involved in sending and receiving lots of packets. This activity obeys standard communications protocols such as TCP/IP (the Internet protocol suite, named after its Transmission Control Protocol and Internet Protocol, http://www.yale.edu/pclt/COMM/TCPIP.HTM). The standards definitions for network protocols are layered into hierarchies of functions, such as the Open Systems Interconnection (OSI) seven-layer model.4 These functions define how an operation such as email is built up out of processing low-level events involving packets.
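Breaking a message into packets and reassembling it at the other end can be sketched as follows. The packet layout here (sequence number, total count, data chunk) is a hypothetical simplification; TCP, for instance, numbers the bytes of a stream rather than tagging each packet with a total count.

```python
import random

def packetize(message: bytes, size: int):
    """Split a message into (sequence number, total packets, chunk) tuples."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)] or [b""]
    return [(seq, len(chunks), chunk) for seq, chunk in enumerate(chunks)]

def reassemble(packets):
    """Rebuild the message from packets that may arrive in any order."""
    total = packets[0][1]
    by_seq = {seq: chunk for seq, _, chunk in packets}
    if len(by_seq) != total:
        raise ValueError(f"have {len(by_seq)} of {total} packets")
    return b"".join(by_seq[seq] for seq in range(total))

message = b"Meeting moved to 3pm; see you there."
packets = packetize(message, 8)
random.Random(0).shuffle(packets)       # simulate out-of-order arrival
print(reassemble(packets) == message)   # → True
```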

The concept of an event hierarchy is central to CEP, where the idea is taken further than the messaging standards by requiring that a means of computing events at one level of the hierarchy from sets of events at a lower level must be part of the event hierarchy definition. So whenever activity in a network results in a sequence of low-level events, the hierarchy definition can be used to compute the corresponding sequence of higher-level events. This gives the capability to view network activity in terms of more understandable higher-level events.
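A computable event hierarchy can be sketched as an ordered list of functions, each computing the events at one level from the events at the level below. The two levels in this Python sketch (packets to delivered messages, and messages to per-sender activity) and the event fields are hypothetical examples, not a definition from any standard.

```python
from collections import defaultdict

def packets_to_messages(packets):
    """Level 1 -> 2: a message event is computed once all its packets arrive."""
    seen, done, messages = defaultdict(set), set(), []
    for p in packets:
        seen[p["msg"]].add(p["seq"])
        if p["msg"] not in done and len(seen[p["msg"]]) == p["total"]:
            done.add(p["msg"])
            messages.append({"msg": p["msg"], "sender": p["sender"]})
    return messages

def messages_to_activity(messages):
    """Level 2 -> 3: summarize delivered messages per sender."""
    counts = defaultdict(int)
    for m in messages:
        counts[m["sender"]] += 1
    return [{"sender": s, "messages": n} for s, n in counts.items()]

# The hierarchy definition: an ordered list of level-to-level computations.
HIERARCHY = [packets_to_messages, messages_to_activity]

def compute_levels(events, hierarchy):
    """Return the events at every level, lowest first."""
    levels = [events]
    for compute in hierarchy:
        levels.append(compute(levels[-1]))
    return levels

packets = [
    {"msg": "m1", "sender": "alice", "seq": 0, "total": 2},
    {"msg": "m2", "sender": "alice", "seq": 0, "total": 1},
    {"msg": "m3", "sender": "bob",   "seq": 0, "total": 2},   # m3 never completes
    {"msg": "m1", "sender": "alice", "seq": 1, "total": 2},
]
print(compute_levels(packets, HIERARCHY)[2])   # → [{'sender': 'alice', 'messages': 2}]
```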

We will deal with computable event hierarchies in a later chapter. It will show how different computable hierarchies can be used to define more than one kind of higher-level event resulting from the same lower-level events in a system. We call these higher-level events views. For example, events in networks can be viewed at a higher level in terms of security concerns or performance concerns. Activity in financial trading systems can be viewed in terms of compliance to regulations, or in terms of profit and loss or economic impact. And an airline’s day-to-day operations can be viewed as equipment utilization events, as airplane load factor events, or as profitability events. The applications of the event hierarchy concept to systems that are essentially event driven in nature are very far reaching.
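The idea of different views over the same lower-level events can be sketched in a few lines. Both functions below read the same hypothetical network events; one computes security events and the other performance events. The event fields and thresholds are illustrative assumptions.

```python
from collections import Counter

# Hypothetical low-level network events shared by both views.
events = [
    {"host": "h1", "kind": "login_failed", "latency_ms": 40},
    {"host": "h1", "kind": "login_failed", "latency_ms": 35},
    {"host": "h2", "kind": "request",      "latency_ms": 900},
    {"host": "h1", "kind": "login_failed", "latency_ms": 50},
    {"host": "h3", "kind": "request",      "latency_ms": 120},
]

def security_view(events, max_failures=3):
    """Higher-level security events: hosts with repeated failed logins."""
    failures = Counter(e["host"] for e in events if e["kind"] == "login_failed")
    return [{"event": "possible_intrusion", "host": h, "failures": n}
            for h, n in failures.items() if n >= max_failures]

def performance_view(events, limit_ms=500):
    """Higher-level performance events: hosts answering too slowly."""
    slow = sorted({e["host"] for e in events if e["latency_ms"] > limit_ms})
    return [{"event": "slow_host", "host": h} for h in slow]

print(security_view(events))      # → [{'event': 'possible_intrusion', 'host': 'h1', 'failures': 3}]
print(performance_view(events))   # → [{'event': 'slow_host', 'host': 'h2'}]
```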

It is likely that these event based ideas, pioneered in the Stanford CEP project of the 1990s, will become part of new standards definitions for networks and other event driven systems in the future. Standards definitions should be executable by anyone who has a simulator and wants to see how a standard works in a given case. One has only to consider recent developments and issues in networking to see why such standards are needed.

Networks that developed in the 1960s and 1970s expanded rapidly, first as research networks, such as the ARPANET, and later into public networks and finally the Internet. Today these networks play the role of information highways fundamental to the running of our society. They now include dynamic networks in which the member elements vary at any time, such as the newer satellite-based networks that support mobile devices such as cell phones. The number of protocols used in processing the communications (e.g., CDMA, GSM, 3G, 4G) has exploded, and new ones appear frequently. The communication activity results in events at many different levels of viewing. These are all event driven systems to which event hierarchy definitions can be applied.

Today, society depends upon the event processing that goes on in the Internet and mobile satellite networks. This event processing has become extremely complicated; in fact, it is not well understood. There are so many levels of issues involved that the event traffic has probably taken on a life of its own. What started out in the ARPANET as an experiment in network messaging to control and direct event traffic has been compounded with many other concerns, such as levels and quality of service, security, theft and hijacking of events, cyber attacks, and so on. In the early days, people didn’t think of such things. Now the question is how to redesign the Internet to deal with all the modern concerns! It would seem that CEP facilities must be built into next generation networks to enable real-time event pattern analysis of the traffic. As for the mobile networks, one can only wait to see what scams arise in both the administration and the misuse of event processing there.

Event processing technology was also developed in the use of databases in the 1980s. Traditional databases are passive, in the sense that they wait for queries and respond to them. Active databases are capable of invoking other applications in response to events, such as changes to the data they hold. For example, an active inventory database might initiate an action with an inventory control system to reorder an item if the database detects that the inventory level of that item has fallen below a specified level.

Active databases extend traditional databases with a layer of event processing rules called event-condition-action (E-C-A) rules together with event detection mechanisms. An E-C-A rule has the form

on event if Boolean-condition then action

It responds to the event, called its trigger, by evaluating the Boolean condition and executing the action if the condition is true. An event can be specified as a state change of interest—for example, a change in an inventory, in which case the condition would be the inventory level being below a specified level.
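An E-C-A rule maps naturally onto a record holding an event type, a condition, and an action. The following Python sketch is a toy in-memory "active" inventory table, not the rule language of any actual active database; the `Rule` and `ActiveTable` names and the reorder threshold of 10 are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Rule:
    """on <event> if <condition> then <action>"""
    event: str
    condition: Callable[[dict], bool]
    action: Callable[[dict], None]

class ActiveTable:
    """Toy 'active' inventory table: each update raises an event that is
    checked against the registered E-C-A rules."""

    def __init__(self):
        self.stock = {}
        self.rules = []

    def add_rule(self, rule):
        self.rules.append(rule)

    def update(self, item, level):
        self.stock[item] = level
        event = {"type": "stock_changed", "item": item, "level": level}
        for rule in self.rules:
            # Trigger on the event, evaluate the condition, then act.
            if rule.event == event["type"] and rule.condition(event):
                rule.action(event)

orders = []
db = ActiveTable()
db.add_rule(Rule(event="stock_changed",
                 condition=lambda e: e["level"] < 10,         # hypothetical threshold
                 action=lambda e: orders.append(e["item"])))  # "reorder" the item
db.update("widget", 25)
db.update("widget", 7)
print(orders)   # → ['widget']
```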

Active databases are another use of event processing. In this case, the idea was to extend the capabilities of the standard databases of the 1980s to bring them in line with the increasing use of real-time technology. One cannot say that they involve the kinds of volumes of event activity that occur in simulations or networking, but a part of their activity is driven by events. Also, they are of interest in building rule-based event processing systems because they exposed general problems, particularly with the consistency of sets of E-C-A rules and with race conditions between rules that might negate each other’s state updates. Approaches to dealing with these problems apply to building and executing sets of E-C-A rules in general. So active databases are one of the precursors to the modern event processing that we shall be dealing with.

The use of E-C-A rules can be found in many applications of CEP. But the notion of a single event trigger has been extended to the use of event patterns containing several events. The complete pattern must match in order to trigger the rule. Of course, a single event trigger is a special case.

Example 2.1: Detecting Possible Misuse of Credit Cards

If credit card C is used at Location L at time T

and then C is used at Location L1 at time T1

and Travel between L and L1 takes more than T1 – T

then alert: C may be stolen.

Example 2.1 illustrates an E-C-A rule in which the trigger is a pattern containing two card use events and the condition compares the travel time between the two locations with the time between the uses. The action, taken when the pattern matches and the condition is true, is to issue an alert.
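Example 2.1 can be sketched in Python as follows. The travel-time table, the hours-based timestamps, and the choice to compare each use only with the same card's previous use are assumptions made for illustration; a real system would estimate travel times from geography.

```python
# Hypothetical minimum travel times (hours) between pairs of locations.
MIN_TRAVEL_HOURS = {
    frozenset({"Boston", "London"}): 7.0,
    frozenset({"Boston", "New York"}): 1.0,
}

def min_travel(a, b):
    """Minimum travel time from a to b; unknown pairs never trigger an alert."""
    if a == b:
        return 0.0
    return MIN_TRAVEL_HOURS.get(frozenset({a, b}), 0.0)

def detect_misuse(uses):
    """uses: time-ordered (card C, location L, time T in hours) events.
    Pattern: C used at L at T, then C used at L1 at T1.
    Condition: travel between L and L1 takes more than T1 - T.
    Action: alert that C may be stolen."""
    last_use = {}                     # card -> (location, time) of previous use
    alerts = []
    for card, location, time in uses:
        if card in last_use:
            prev_location, prev_time = last_use[card]
            if min_travel(prev_location, location) > time - prev_time:
                alerts.append(f"alert: card {card} may be stolen")
        last_use[card] = (location, time)
    return alerts

uses = [("C1", "Boston", 0.0), ("C2", "Boston", 1.0),
        ("C1", "London", 2.0), ("C2", "New York", 3.0)]
print(detect_misuse(uses))   # → ['alert: card C1 may be stolen']
```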

Middleware technology started in the late 1980s and exploded in a variety of directions in the 1990s and 2000s. It started as an attempt to modularize the network layers and messaging protocols that were becoming the critical infrastructure that wired business applications together. The idea was to hide the messy details of the events and the various event transmission protocols by means of which messages flowed back and forth between users or between users and business applications. Middleware attempted to hide the events at the level of message transmission by providing higher-level communication functions in an application programming interface, or API. Users did not need to know anything about how their communications and requests were handled.

Thus the development of middleware can be regarded as containing the roots of a hierarchical approach to events and event processing. But there was a long way yet to go!

The perceived advantage of middleware in the beginning was that users no longer needed to worry about how their interactions were implemented in terms of message flows in the underlying network. Businesses could use middleware easily to link applications such as payroll, sales, accounting, inventory, and databases together across multiple geographic locations. Interactions between users and applications could be distributed across the enterprise, perhaps on a worldwide scale.

As middleware applications broadened, a variety of problems arose such as lower quality of service, loss of information, time lags, and the like, which led to many diverse server architectures being developed under the middleware cover to solve these problems. The event processing could involve many different levels of events, from heartbeats to message delivery protocols, but none of this concerns us. Today the use of middleware spans diverse areas from wireless networks to health care to business.

Two main categories of middleware were developed in the early days: synchronous communication, based upon remote procedure call (RPC), and asynchronous communication, based upon messages, called message-oriented middleware or MOM.

The synchronous API gave the ability to send a request for a service (the remote call) and wait until a reply was received (the service answer). This procedure call paradigm was distributed across the enterprise, and it fit right in with the object-oriented programming ideas prevalent at the time. A registry of services was also provided, along with the ability to register a service. The classic example of this is Common Object Request Broker Architecture, or CORBA.5 Although the RPC paradigm could be viewed as event communication in terms of function call (one event) and function response (another event), one of the main criticisms was that it was too slow and cumbersome.
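The synchronous request/reply style can be imitated within a single Python process, with a thread standing in for the remote server and queues standing in for the network. The service registry, the `rpc` helper, and the credit-rating service are hypothetical; the point is only that the caller blocks until the reply event arrives.

```python
import queue
import threading

registry = {}                           # service name -> function (hypothetical)

def register(name, fn):
    registry[name] = fn

def server(request_queue):
    """Stand-in for the remote side: serve requests until a None sentinel."""
    while (message := request_queue.get()) is not None:
        name, args, reply_box = message
        reply_box.put(registry[name](*args))      # the reply event

def rpc(request_queue, name, *args):
    """Synchronous call: send the request event, then block until the reply."""
    reply_box = queue.Queue(maxsize=1)
    request_queue.put((name, args, reply_box))   # the call event
    return reply_box.get()                       # the caller waits here

register("credit_rating", lambda customer: {"alice": "AA"}.get(customer, "unknown"))
request_queue = queue.Queue()
worker = threading.Thread(target=server, args=(request_queue,), daemon=True)
worker.start()
print(rpc(request_queue, "credit_rating", "alice"))  # → AA
request_queue.put(None)                              # shut the server down
worker.join()
```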

Perhaps the simplest form of the asynchronous MOM middleware was the publish/subscribe paradigm, often called pub-sub. Applications can act as publishers, as subscribers, or indeed as both. A middleware product that implements pub-sub provides an API that lets an application publish a message on a particular topic. Other applications can receive messages on this topic by simply subscribing to the topic through the API. Applications that have subscribed to a topic will receive all the messages that are published on that topic. This is a form of one-to-many communication. It is intrinsically asynchronous, because a publisher does not wait to get responses from subscribers, and subscribers can be doing other things while messages on their subscribed topics are being published.

The event flow in pub-sub middleware can be viewed abstractly as flowing directly from a publisher to all the subscribers to that topic. In actual fact, messages are sent first to servers hidden somewhere in the middleware, and from there to the subscribers. Other common middleware paradigms for transporting events include point-to-point event transmission and message queuing.
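Here is a minimal in-process sketch of pub-sub in Python. The `Broker` class plays the part of the hidden middleware server: publishers hand it a message and return immediately, and it copies the message into a queue for each subscriber to the topic. The class and topic names are hypothetical, and a real product would add networking, persistence, and delivery guarantees.

```python
import queue
from collections import defaultdict

class Broker:
    """Stand-in for the middleware server between publishers and subscribers."""

    def __init__(self):
        self._subscribers = defaultdict(list)   # topic -> subscriber queues

    def subscribe(self, topic):
        q = queue.Queue()
        self._subscribers[topic].append(q)
        return q                                # subscriber reads at its own pace

    def publish(self, topic, message):
        for q in self._subscribers[topic]:      # one-to-many; no replies awaited
            q.put_nowait(message)

broker = Broker()
trader = broker.subscribe("IBM")
auditor = broker.subscribe("IBM")
broker.publish("IBM", {"price": 120.5})
broker.publish("MSFT", {"price": 300.0})        # no subscribers: nobody receives it
print(trader.get_nowait(), auditor.get_nowait())
# → {'price': 120.5} {'price': 120.5}
```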

The choice between them boils down to the particular cost/performance details of the kind of high-level event processing that will be hosted upon that choice. Each of these three kinds of middleware can emulate the other two kinds.
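As one illustration of such emulation, the Python sketch below builds point-to-point messaging on top of a minimal pub-sub bus by giving each endpoint a private topic. The `PubSub` and `PointToPoint` classes and the `p2p/` topic naming are hypothetical, and the emulation holds only as long as no one else subscribes to those private topics.

```python
from collections import defaultdict

class PubSub:
    """Minimal pub-sub bus: handlers registered per topic (hypothetical API)."""

    def __init__(self):
        self.handlers = defaultdict(list)

    def subscribe(self, topic, handler):
        self.handlers[topic].append(handler)

    def publish(self, topic, message):
        for handler in self.handlers[topic]:
            handler(message)

class PointToPoint:
    """Point-to-point messaging emulated on pub-sub: each endpoint subscribes
    to a private topic named after itself, so a message published there
    reaches exactly one receiver."""

    def __init__(self, bus):
        self.bus = bus

    def register(self, endpoint, handler):
        self.bus.subscribe(f"p2p/{endpoint}", handler)

    def send(self, endpoint, message):
        self.bus.publish(f"p2p/{endpoint}", message)

inbox_a, inbox_b = [], []
p2p = PointToPoint(PubSub())
p2p.register("a", inbox_a.append)
p2p.register("b", inbox_b.append)
p2p.send("a", "hello a")
print(inbox_a, inbox_b)   # → ['hello a'] []
```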

Middleware concepts did not really contribute to CEP. But middleware provided a fertile ground for CEP applications—to ensure correct functioning of the middleware, perhaps under imperfect conditions—and functioned as a medium that encouraged applications of CEP. For example, content-based routing of messages was an early application of some of the first principles in CEP. This is the routing of messages on middleware using both the content of the message (e.g., keywords within the message) and the history of patterns of activity in the messaging. It was an early application of event patterns. So in fact, middleware acted as an early application area for CEP, and as middleware morphed into more highfalutin’ products such as ESBs, SOAs, and EDAs, the opportunities for CEP continued to expand.
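Content-based routing that uses both message content and recent messaging history might look like this in a simplified form. The keywords, destination names, and the history rule (three refund requests among a sender's last five messages) are assumptions chosen for illustration.

```python
from collections import defaultdict, deque

class ContentRouter:
    """Route each message by (1) keywords in its content and (2) a pattern
    over the sender's recent history (hypothetical rules)."""

    def __init__(self, history_size=5, refund_limit=3):
        # Per-sender history of the word sets of recent messages.
        self.history = defaultdict(lambda: deque(maxlen=history_size))
        self.refund_limit = refund_limit

    def route(self, sender, text):
        words = set(text.lower().split())
        self.history[sender].append(words)
        refunds = sum("refund" in past for past in self.history[sender])
        if refunds >= self.refund_limit:
            return "fraud-review"            # history-based pattern
        if "refund" in words:
            return "billing"                 # content-based
        if words & {"outage", "down", "error"}:
            return "support"
        return "general"

router = ContentRouter()
print([router.route("bob", "Refund please") for _ in range(3)])
# → ['billing', 'billing', 'fraud-review']
```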

Middleware is often synonymous with the concept of an enterprise service bus (ESB). There is considerable variation in the philosophy of what an ESB actually is or contains. Obviously, the spirit is that an ESB is a high-level application-to-application communications bus, analogous to the hardware bus that carries signals between hardware components in a computer. Alternative views are that it is middleware, based upon de facto standards such as J2EE or .NET, that encapsulates and hides network details and offers services such as document routing. Some philosophies claim that ESBs contain software products for business activity monitoring (BAM) and for building service-oriented architectures (SOAs). So a commercial ESB may bundle together a lot of event driven messaging, often including Web services, SOAP, and XML messaging. Some ESBs go as far as to say they offer CEP in the form of event interpretation, correlation and pattern matching. Much of this is playing into the currently fashionable market for SOA, with a trend toward EDA.6

However, if we ignore the marketing hype, the upshot is that various flavors of middleware now represent a fourth technology development involving event processing. An ESB is based upon event driven network communications at a lower level that is hidden from application programmers. It provides a messaging interface that makes it easier to connect applications into distributed message-driven communicating architectures and to allow such application architectures to evolve as new applications enter the enterprise.

Chaos in the Marketing of Information Systems

Different approaches to designing, building, and managing information systems have proliferated over the past fifteen years, so much so that a new collection of techno-marketing jargon now presents a confusion of competing and overlapping categories or products. It is a minefield of marketing banners to the uninitiated buyer of information technology. There are no guidelines or standards that products in these various categories must satisfy, neither for the features they provide, nor for the problems they solve, nor for how they perform.

Some marketing banners specifically target event processing. And each of the other banners has been “souped up” at some point in time with the addition of event processing. So we’ll review each marketing banner briefly and point out how and where event processing might apply.

First, there are the SOA arena (service-oriented architectures), the BPM arena (business process management), and more recent arrivals in the area of event processing, including event driven architectures (EDA). There is much marketing hype associated with each of these three-letter acronyms.

SOA and EDA have often been presented as competing or conflicting, and religious wars have threatened to break out from time to time.7 However, the truth is that at the conceptual level they are complementary, and both have a role to play in the design and management of IT systems. And recently, complex event processing (CEP) has been added to these areas.

SOA is an outgrowth of the object-oriented programming movement of the 1990s. The basic idea is to modularize sets of related services—the business analogy to functions in object-oriented (O-O) programming—into separate modules. For example, a pizza business would be a module that provided sets of services for customers, such as menu browsing, ordering, paying, and delivery tracking.

SOA did not start out having anything to do with event processing. It was a movement toward structuring enterprise software systems. But commercial opportunities helped proliferate the specialized kinds of services that could be included in SOA, and consequently many different flavors of SOA appeared on the marketplace, including event driven SOA. Today, it is a total minefield to the newcomer.

Those who wish to navigate the SOA minefield in the hope of finding a solution to their enterprise IT problems had better start by first mastering the simple ideas that are at the root of this marketing nightmare. And simple they are!

SOA has three top-level defining concepts: services, modularity, and remote access.

What is a service? Think of it as something you want to use: a weather report or a credit rating. Send the weather service your ZIP code and you get back a report for your local area. In its purest and oldest form, a service is a function—you call it, requesting data, and you get an answer.

Modularity deals with grouping sets of related services into server modules. A server module consists of two parts: (1) an interface that specifies the services that are provided and contains metadata defining how they behave, and (2) a separate implementation of those services. An implementation (or module body) can be anything from, say, pure Java code to an adapter that maps the services in the interface to other services provided in other modules or even on the Internet.

An interface containing metadata tells a user what the services do and how to use them. The interface is the users’ (i.e., the consumers’) view of the services. On the other hand, the separate implementation is hidden from users and can be changed without the users knowing. So, one of the goals of SOA is that users of services don’t have to understand how they work, which is pretty much the way drivers of cars operate these days. Of course, the implementation has to be truthful to the interface metadata (i.e., when you step on the brake pedal, the car should stop).
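The separation between an interface and a hidden, replaceable implementation can be sketched with Python's `abc` module. The weather service, its ZIP code, and its forecast text are hypothetical; the docstring plays the role of interface metadata.

```python
from abc import ABC, abstractmethod

class WeatherService(ABC):
    """Interface (the consumers' view). Metadata: report(zip_code) returns a
    short forecast for that ZIP code's area."""

    @abstractmethod
    def report(self, zip_code: str) -> str: ...

class TableWeather(WeatherService):
    """One implementation: a local lookup table (hypothetical data)."""

    def __init__(self):
        self.table = {"94305": "Sunny, 22C"}

    def report(self, zip_code):
        return self.table.get(zip_code, "No data")

class CachingAdapter(WeatherService):
    """Another implementation: an adapter that delegates to another service
    and caches its answers."""

    def __init__(self, upstream: WeatherService):
        self.upstream, self.cache = upstream, {}

    def report(self, zip_code):
        if zip_code not in self.cache:
            self.cache[zip_code] = self.upstream.report(zip_code)
        return self.cache[zip_code]

def consumer(service: WeatherService) -> str:
    return service.report("94305")     # depends only on the interface

print(consumer(TableWeather()), consumer(CachingAdapter(TableWeather())))
```

`consumer` gets the same answer from either implementation, which is what lets a module body change without its users knowing.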

Consistency between an interface and its implementation is something that is ignored in most SOA presentations. In practice, if you purchase an SOA system, inconsistencies can be a source of surprises for the user—but that’s nothing new in software, is it?

Actually, a modern interface design should contain other information not normally required in SOA. It should also specify which services are required by the server module itself—that is, the services that may be used to implement the services that are provided. The reason for required service specifications has to do with architectural design issues that are beyond the scope of our book. Think of them as a privacy statement—“when you use one of our provided services, we will only send your data to these required services.”8
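One way to make required services explicit is sketched below: a module declares what it `provides` and `requires`, and at construction time it is handed only the required services. The `Module` base class, the `LoanDesk` example, and the interest rates are hypothetical.

```python
class Module:
    """Base for a server module whose interface lists both the services it
    provides and the services it requires (hypothetical convention)."""
    provides = ()
    requires = ()

    def __init__(self, available):
        missing = set(self.requires) - set(available)
        if missing:
            raise LookupError(f"required services unavailable: {sorted(missing)}")
        # The implementation is handed only the services it declared.
        self.required = {name: available[name] for name in self.requires}

class LoanDesk(Module):
    provides = ("quote",)
    requires = ("credit_rating",)     # the "privacy statement": data goes only here

    def quote(self, customer):
        rating = self.required["credit_rating"](customer)
        return 0.05 if rating == "AA" else 0.09

available = {
    "credit_rating": lambda customer: "AA" if customer == "alice" else "B",
    "marketing_list": lambda customer: None,   # available, but never handed to LoanDesk
}
desk = LoanDesk(available)
print(desk.quote("alice"), desk.quote("bob"))   # → 0.05 0.09
```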

In the early years, a SOA module interface was an object written in a programming language like Java, but these days it is likely to be the user interface of a website. For example, services related to using stock market feeds, such as computing various averages and statistics over time windows (e.g., VWAP, the volume-weighted average price), are provided by a market feed website.
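As an example of such a windowed statistic, VWAP over the trades in a time window is the sum of price times volume divided by the total volume. The Python sketch below maintains it over a sliding window; the window semantics (a trade expires once it is `window` seconds old) and the class name are assumptions.

```python
from collections import deque

class SlidingVWAP:
    """VWAP = sum(price * volume) / sum(volume) over trades in a time window."""

    def __init__(self, window):
        self.window = window          # window length in seconds (assumed units)
        self.trades = deque()         # (time, price, volume), oldest first
        self.pv = 0.0                 # running sum of price * volume
        self.volume = 0               # running sum of volume

    def add(self, time, price, volume):
        """Record a trade and return the VWAP over the current window."""
        self.trades.append((time, price, volume))
        self.pv += price * volume
        self.volume += volume
        # Expire trades that are `window` seconds old or older.
        while self.trades and self.trades[0][0] <= time - self.window:
            _, old_price, old_volume = self.trades.popleft()
            self.pv -= old_price * old_volume
            self.volume -= old_volume
        return self.pv / self.volume if self.volume else None

vwap = SlidingVWAP(window=60)
print(vwap.add(0, 10.0, 100))    # → 10.0
print(vwap.add(30, 11.0, 300))   # → 10.75
print(vwap.add(70, 12.0, 100))   # → 11.25 (the trade at t=0 has left the window)
```

Keeping running sums makes each update cheap; over a long-running feed, recomputing the sums from the trades in the window now and then would guard against accumulated floating-point error.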

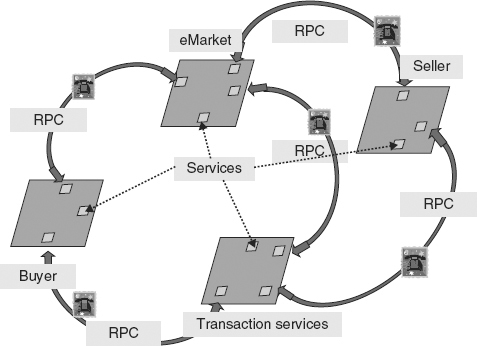

The remote access principle of SOA requires that services should operate in a distributed computing environment. SOA must therefore provide users with an ability to access services remotely. The remote access principle has different paradigms. Access in traditional SOA9 was by remote procedure call (RPC), also called request/reply (R/R). Figure 2.5 is a schematic view of a traditional SOA containing interfaces for eMarketplace services, users (buyers and sellers) and access to services by RPC.

FIGURE 2.5 An SOA Containing Various Services for Stock Trading

RPC involves a synchronizing handshake between user and service. A user sends data to a service (e.g., the remote call to a function) and blocks, waiting for a reply (e.g., the value of the function on those data). One can regard the call and reply as two events. But the user is not free to do anything else until the reply.
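The blocking behavior can be seen in a few lines of Python. This is a local simulation, not a network RPC library: a thread stands in for the remote service, a queue carries the request event, and a one-slot reply queue carries the reply event. `square_service` and `rpc_call` are hypothetical names, and the 50 ms sleep is an assumed delay.

```python
import queue
import threading
import time


def square_service(requests: queue.Queue) -> None:
    """Hypothetical remote service: replies with x * x for each request event."""
    while True:
        item = requests.get()
        if item is None:  # shutdown signal
            return
        x, reply_box = item
        time.sleep(0.05)  # simulated network and processing delay
        reply_box.put(x * x)  # the reply event


def rpc_call(requests: queue.Queue, x: int) -> int:
    """Request/reply: send a request event, then block until the reply event arrives."""
    reply_box: queue.Queue = queue.Queue(maxsize=1)
    requests.put((x, reply_box))  # the request event
    return reply_box.get()  # the caller is stuck here and can do nothing else


if __name__ == "__main__":
    requests: queue.Queue = queue.Queue()
    server = threading.Thread(target=square_service, args=(requests,))
    server.start()
    start = time.monotonic()
    print(rpc_call(requests, 7), f"after {time.monotonic() - start:.2f}s blocked")
    requests.put(None)
    server.join()
```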

An everyday example of RPC is the telephone call. This may be a little more conversational than a straight request/reply call, but it is a remote access and requires synchronization. And as we all know, phone calls can often waste a lot of the caller’s time. We’ve all had the experience of phoning an airline for a service such as booking a flight and first having to deal with an automated menu of services we don’t want before reaching a human who can provide the service or answer the question we do want. Much of that time is wasted for the user. Nice for the airline, which can employ fewer humans, at the expense of the user!

Event processing (EP) at the level of business events now enters the picture with a new conceptual paradigm for remote access. A user no longer needs to access a service by RPC. Instead, a user can access services by sending and receiving events, asynchronously.

The upside of event driven service access is that it is much more versatile and efficient than RPC. It allows all actors—users and services—to multitask. Instead of a phone call, you send an event—let’s say over the Internet. In an event driven SOA, a call to an airline is executed by the user sending a request event, “Need Service.” A protocol reply is, “Here is the menu of services.” The user chooses to send the event answer, “Human contact needed, callback number N.” Response event: “Your wait time will be exactly 15 minutes; make sure your callback number is free in 15 minutes. You will receive only one callback event.” Now the user is free to use the 15-minute wait time to do other things instead of hanging on the end of a phone line.
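The airline exchange above can be sketched with Python's `asyncio`, assuming that each party has an inbox queue and that the promised 15-minute wait is scaled down to 0.15 seconds. The event names and the callback number are made up for illustration. The point to notice is the loop in `user`: while the callback event is pending, the user keeps doing other work instead of holding the line.

```python
import asyncio

Event = dict


async def airline(inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
    """Hypothetical airline service that reacts to one user's events."""
    while True:
        event: Event = await inbox.get()
        if event["type"] == "need_service":
            await outbox.put({"type": "menu", "options": ["book flight", "human contact"]})
        elif event["type"] == "human_needed":
            wait = 0.15  # scaled-down stand-in for "exactly 15 minutes"
            await outbox.put({"type": "wait_time", "seconds": wait})
            await asyncio.sleep(wait)
            await outbox.put({"type": "callback", "number": event["callback"]})
            return  # exactly one callback event, as promised


async def user(to_airline: asyncio.Queue, from_airline: asyncio.Queue, log: list) -> Event:
    await to_airline.put({"type": "need_service"})
    menu = await from_airline.get()
    log.append(f"menu offered: {menu['options']}")
    await to_airline.put({"type": "human_needed", "callback": "555-0100"})
    notice = await from_airline.get()
    log.append(f"promised wait: {notice['seconds']}s")
    # Do not hang on the line: wait for the callback event in the background.
    callback = asyncio.create_task(from_airline.get())
    while not callback.done():
        log.append("doing other work")
        await asyncio.sleep(0.03)
    return callback.result()


async def main() -> tuple:
    to_airline: asyncio.Queue = asyncio.Queue()
    from_airline: asyncio.Queue = asyncio.Queue()
    log: list = []
    service = asyncio.create_task(airline(to_airline, from_airline))
    result = await user(to_airline, from_airline, log)
    await service
    return result, log


if __name__ == "__main__":
    result, log = asyncio.run(main())
    print(result)
    print(log)
```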

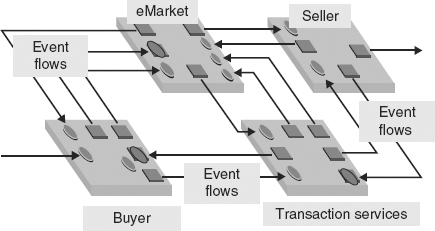

Perhaps it is true that communications have been driven by events since the earliest days of networks. First, there were network protocols like TCP. Then the level of events in communications gradually rose with the advent of pub-sub middleware, message queues, and so on. Nowadays, the communication between users and services in SOA can be carried out entirely by means of events. This gives us event driven SOA (ED-SOA). This is depicted schematically in Figure 2.6.

FIGURE 2.6 SOA with Asynchronous Event Driven Services

Event driven communication decouples the handshake by using events and protocols. So event driven SOA is an evolution of traditional SOA in which the communication between users and services is by events instead of RPC. Services are triggered by, and react to, events instead of procedure calls. Usually, the implementations of services use communication standards such as WSDL, SOAP, and HTTP for remote access.
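A minimal in-process publish/subscribe bus is enough to show services being triggered by events rather than by calls. This sketch stands in for real middleware (it has no networking, persistence, or WSDL/SOAP bindings), and the `inventory` and `shipping` services are hypothetical. Note that `publish` only enqueues: the sender does not wait for, or even know about, the services that react.

```python
from collections import defaultdict, deque
from typing import Callable

Event = dict
Handler = Callable[[Event], None]


class EventBus:
    """Minimal in-process publish/subscribe channel standing in for real middleware."""

    def __init__(self) -> None:
        self._subscribers: defaultdict = defaultdict(list)
        self._pending: deque = deque()

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event: Event) -> None:
        # Publishing only enqueues: the sender neither blocks nor knows the receivers.
        self._pending.append(event)

    def run(self) -> None:
        """Deliver queued events until none remain (handlers may publish more)."""
        while self._pending:
            event = self._pending.popleft()
            for handler in list(self._subscribers[event["type"]]):
                handler(event)


def wire_services(bus: EventBus, trace: list) -> None:
    """Two hypothetical services, each triggered by an event rather than a call."""

    def inventory(event: Event) -> None:
        trace.append(f"inventory reserved {event['item']}")
        bus.publish({"type": "stock_reserved", "item": event["item"]})

    def shipping(event: Event) -> None:
        trace.append(f"shipping scheduled {event['item']}")

    bus.subscribe("order_placed", inventory)
    bus.subscribe("stock_reserved", shipping)


if __name__ == "__main__":
    bus, trace = EventBus(), []
    wire_services(bus, trace)
    bus.publish({"type": "order_placed", "item": "widget"})
    bus.run()
    print(trace)
```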

There is always a downside, of course. The increased versatility has magnified opportunities for everyone—users, services and, of course, crooks. Scalability has become another issue. We’re talking about real-time operations with, in some cases, very large numbers of events (e.g., 200,000 trading events per second in stock market operations). The management and business intelligence issues are becoming more and more challenging. This is where BPM and CEP come into play.

Now, beware! The next idea is to organize the service modules and access to them into architectures. The simple programming ideas quickly got complicated by the need to add new dimensions to the original three concepts, such as distributed architecture. And then came the smokescreen of commercial interests.

When you read further in the SOA literature, you may be told that “service component architectures (SCA) are part of SOA,” or “a SOA must use protocol standards like XML and WSDL and SOAP,” or “SOA is really just Web Services,” or “SOA must contain load balancing and central service catalogues,” or . . .

Indeed, these are all good and useful things that can play their part in various implementation approaches to organizing and building SOAs.10 But, at the conceptual level, there are only three principal concepts in SOA: services, modularity, and remote access. The rest are packaging and implementation details. The concept “architecture” itself does not yet have a widely accepted definition. And despite efforts toward architectures for SOA,11 there is no widely accepted reference architecture for SOA.12

Figure 2.6 is an illustration of a typical event driven architecture (EDA). Its architecture is a set of interfaces defining the various provided and required services, together with the communication between those interfaces. The communication is by means of sending and receiving events. The communication links are variously called pathways, connections, and channels. These terms all have definitions that vary in complexity and have not been accepted universally within the event processing community. We can think of them simply as event flows. The activity in the architecture is driven by events.

Event driven architecture (EDA) and SOA are related in two ways.

First, event driven access to services is an implementation paradigm for SOA—not a competing technology. ED-SOA is a very good way to organize distributed services over any kind of IT infrastructure. And we’re talking real-time use. Pretty much any modern enterprise’s IT operations are organized as an ED-SOA, especially if they use the Internet or commercial networks such as SWIFT. This includes financial services, stock markets, cellular service providers, automated supply chains and inventory systems, on-demand manufacturing, Supervisory Control and Data Acquisition (SCADA) control systems (e.g., for power stations, dams), banking, and retailing—the list is endless. In fact, it is difficult to think of anything that isn’t event driven, completely or in parts, these days. Of course, there’s legacy in most IT infrastructures, so in practice what you see in most system architectures is a mix of event driven and request/reply.

And the EDA field is expanding. New event driven communication protocols are being developed all the time to support new applications, such as mobile ad hoc wireless networking to enable wireless mesh computing (e.g., the OLPC project). These technology developments are all event driven.

Secondly, SOA can be taken as a design paradigm for event driven applications. In software systems we’ve seen this happening, first in the middleware products and more recently in the enterprise service bus offerings. But SOA designs of event driven systems have been around for nearly fifty years in hardware designs—long before SOA, in fact! That is why I have used a hardware diagram in Figure 2.6. Typically, all the components in a hardware architecture have interfaces that present services, that is, input events that request a service and output events that deliver the service. And their implementations are hidden. An adder requires two input events and then delivers sum-and-carry output events (i.e., its service). Registers, ALUs, and so on all define similar services with interfaces presenting their services (inputs and outputs). And the communication between the interfaces of these components is by triggering events flowing down the connecting wires, timed by a controller. That is, the architecture is a wiring up of the interfaces of the components. It is simple, it is SOA, it is disciplined design, and it is much less error-prone than software. Indeed, one might well ask, why can’t software be more like hardware!
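The hardware style of design can be imitated in software. In this sketch, an illustrative assumption and not a model of any real circuit simulator, a `Component` has named input and output ports, fires when all of its input events have arrived, and sends its output events down wires to other components. A full adder is then built purely by wiring two half adders and an OR gate together. Unlike real hardware, there is no clock or controller here; components fire as soon as their inputs are complete.

```python
from itertools import product
from typing import Callable


class Component:
    """Event-driven component: fires once every input port has received an event.

    Its interface is just named input and output ports; `fn` is the hidden
    implementation that maps input values to output values.
    """

    def __init__(self, inputs: list, outputs: list, fn: Callable[..., tuple]) -> None:
        self.inputs = tuple(inputs)
        self.outputs = tuple(outputs)
        self._fn = fn
        self._received: dict = {}
        self._wires: dict = {port: [] for port in self.outputs}

    def connect(self, out_port: str, target: "Component", in_port: str) -> None:
        """A wire from one of our output ports to another component's input port."""
        self._wires[out_port].append((target, in_port))

    def receive(self, port: str, value: int) -> None:
        self._received[port] = value
        if len(self._received) < len(self.inputs):
            return  # still waiting for the other input events
        args = [self._received[p] for p in self.inputs]
        self._received = {}
        for out_port, out_value in zip(self.outputs, self._fn(*args)):
            for target, in_port in self._wires[out_port]:
                target.receive(in_port, out_value)  # output event travels down the wire


def half_adder() -> Component:
    return Component(["a", "b"], ["sum", "carry"], lambda a, b: (a ^ b, a & b))


def probe(results: dict, key: str) -> Component:
    """A sink with one input and no outputs that records what arrives."""
    def record(value: int) -> tuple:
        results[key] = value
        return ()
    return Component(["in"], [], record)


def full_add(a: int, b: int, carry_in: int) -> tuple:
    """A full adder built purely by wiring component interfaces together."""
    results: dict = {}
    ha1, ha2 = half_adder(), half_adder()
    or_gate = Component(["a", "b"], ["out"], lambda x, y: (x | y,))
    ha1.connect("sum", ha2, "a")
    ha1.connect("carry", or_gate, "a")
    ha2.connect("sum", probe(results, "sum"), "in")
    ha2.connect("carry", or_gate, "b")
    or_gate.connect("out", probe(results, "carry"), "in")
    # Inject the three input events.
    ha1.receive("a", a)
    ha1.receive("b", b)
    ha2.receive("b", carry_in)
    return results["sum"], results["carry"]


if __name__ == "__main__":
    for a, b, c in product((0, 1), repeat=3):
        print(a, b, c, "->", full_add(a, b, c))
```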

To summarize thus far, SOA and EDA are complementary. EDA is the paradigm for communications in SOAs, and SOA is the design methodology for EDAs. In the future, we would like to be able to define EDA as follows:

Principle 7

An event driven architecture (EDA) is a distributed service-oriented architecture (SOA) in which all communication is by events and all services are independent, concurrent, reactive event driven processes (i.e., they react to input events and produce output events).
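Principle 7 can be read almost directly as code. The sketch below uses Python's `asyncio` and assumes that each process owns a FIFO inbox and shares no state with the others. It runs two independent reactive processes: a hypothetical large-trade filter and a process that turns large trades into alert events. The 10,000-share threshold and the event fields are invented for illustration, and `None` serves as an end-of-stream event.

```python
import asyncio
from typing import Callable, Optional

Event = dict
React = Callable[[Event], list]


async def reactive_process(react: React, inbox: asyncio.Queue, outboxes: list) -> None:
    """An independent process: no shared state, it only reacts to input events."""
    while True:
        event: Optional[Event] = await inbox.get()
        if event is None:  # end-of-stream marker, passed downstream
            for out in outboxes:
                await out.put(None)
            return
        for produced in react(event):  # input event -> zero or more output events
            for out in outboxes:
                await out.put(produced)


def large_trades(trade: Event) -> list:
    """Hypothetical filter: pass on trades of at least 10,000 shares."""
    return [trade] if trade["volume"] >= 10_000 else []


def to_alert(trade: Event) -> list:
    """Hypothetical higher-level output event derived from a large trade."""
    return [{"type": "large_trade_alert", "symbol": trade["symbol"], "volume": trade["volume"]}]


async def run_pipeline(trades: list) -> list:
    feed, middle, alerts_q = asyncio.Queue(), asyncio.Queue(), asyncio.Queue()
    processes = [
        asyncio.create_task(reactive_process(large_trades, feed, [middle])),
        asyncio.create_task(reactive_process(to_alert, middle, [alerts_q])),
    ]
    for trade in trades:
        await feed.put(trade)
    await feed.put(None)
    alerts = []
    while (event := await alerts_q.get()) is not None:
        alerts.append(event)
    await asyncio.gather(*processes)
    return alerts


if __name__ == "__main__":
    sample = [{"symbol": "IBM", "volume": 500}, {"symbol": "MSFT", "volume": 20_000}]
    print(asyncio.run(run_pipeline(sample)))
```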

Many systems out there today cannot conform to this standard, since many of their components are not event driven13 and they were not designed with SOA principles in mind. Which brings up another question: Is there a test for whether a system is SOA or EDA? This seems to be about as difficult as asking if a system has a good design or not. Some systems are clearly SOA (e.g., built in Java using OO techniques and JMS). Others are clearly not SOA at all (e.g., lumps of COBOL code). But there’s a large space of systems in between. Perhaps someone might design a questionnaire for users: “Is the system you are using SOA/EDA?” It might contain questions like (with a scale of satisfaction from 1 to 10):

- Do you find services are organized in logical groups?

- Is their documentation easy to understand?

- Do the results from services agree with their documentation?

- Do you need to understand how services are implemented in order to use them?

- Can you find the service you’re looking for easily?

- Are you happy with your service response time?

- Do you get surprising results when you request a service?

Finally, the proposed definition of EDA above has to be viewed as a philosophy for the future. The spirit of the philosophy is this: “think hardware designs. Then think hardware designs in which the numbers of components and the pathways connecting them can vary at runtime. And then, think multilayered designs.” That kind of thinking leads us to dynamic, multilayered, event driven architectures.
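A small sketch hints at the dynamic, multilayered flavor of this philosophy. Here a hypothetical `Fabric` lets components be connected to and disconnected from channels while events are flowing, and an upper-layer `SpikeDetector` turns low-level trade events into higher-level `price_spike` events. The 5 percent threshold and the channel names are assumptions for illustration only.

```python
from collections import defaultdict
from typing import Callable

Event = dict
Component = Callable[[Event], list]  # returns a list of (channel, event) pairs to emit


class Fabric:
    """Hypothetical wiring fabric whose pathways can be changed while it runs."""

    def __init__(self) -> None:
        self._routes: defaultdict = defaultdict(list)

    def connect(self, channel: str, component: Component) -> None:
        self._routes[channel].append(component)

    def disconnect(self, channel: str, component: Component) -> None:
        self._routes[channel].remove(component)

    def emit(self, channel: str, event: Event) -> None:
        for component in list(self._routes[channel]):
            for out_channel, out_event in component(event):
                self.emit(out_channel, out_event)  # output may feed a higher layer


class SpikeDetector:
    """Upper-layer component: turns low-level trade events into 'price_spike' events."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold  # fractional rise, e.g. 0.05 for 5 percent
        self._last: dict = {}

    def __call__(self, trade: Event) -> list:
        prev = self._last.get(trade["symbol"])
        self._last[trade["symbol"]] = trade["price"]
        if prev is not None and trade["price"] > prev * (1 + self.threshold):
            spike = {"type": "price_spike", "symbol": trade["symbol"],
                     "from": prev, "to": trade["price"]}
            return [("business", spike)]
        return []


def collector(store: list) -> Component:
    """A sink component that records every event it receives."""
    def sink(event: Event) -> list:
        store.append(event)
        return []
    return sink


if __name__ == "__main__":
    fabric, spikes = Fabric(), []
    fabric.connect("business", collector(spikes))
    detector = SpikeDetector(0.05)
    fabric.connect("trades", detector)  # a component added at runtime
    for price in (100.0, 110.0):
        fabric.emit("trades", {"symbol": "IBM", "price": price})
    fabric.disconnect("trades", detector)  # and removed again
    print(spikes)
```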

Summary: Event Processing, 1950–2010

We have outlined four different event processing technologies that developed during the period 1950–2010. Most of these areas are continuing to develop and expand today. Each of these technologies (discrete event simulation, networks, active databases, and middleware) uses different types of events, uses them in different ways, and serves completely different purposes and goals. But each of them led to problems that created a demand for solutions involving CEP concepts and applications. Some of the most important requirements were for new kinds of real-time analysis tools and for more sophisticated triggering and real-time control logic in enterprise business processes.

As a result of the explosion in the use of electronic communications, a new layer of event processing technology centered on enterprise management has been developing: the strategic management layer, illustrated in Figure 2.7. This latest layer is still developing, and its eventual contents remain to be seen. So far it includes a new category of event processing products and event analysis tools, targeted at strategic enterprise management, that are now coming into play. This event processing technology for management uses CEP as an enabling technology, one of many technologies employed in managing the enterprise. As the event processing problems to be dealt with increase in complexity, more and more CEP techniques will be needed. Not the least of the issues these tools will need to tackle is security.

FIGURE 2.7 Historical and Future Developments of Event Processing Technologies

The rest of this book is about the strategic management layer and the roles of CEP.

Notes

1 The timeline in Figure 2.1 is not linear. More event processing developments took place in later years.

2 We give definitions of both meanings of event in Chapter 3, “What Is an Event?”.

3 Robin Stewart, “Computers Meet Weather Forecasting,” Weather Forecasting by Computer, October 1, 2008. www.robinstewart.com/personal/learn/wfbc/computers.html

4 Rachelle Miller, “The OSI Model: An Overview.” SANS Institute Reading Room. www.sans.org/reading_room/whitepapers/standards/osi-model-overview_543

5 Ciaran McHale, “Corba Explained Simply.” www.ciaranmchale.com/corba-explained-simply

6 A survey of 125 banks and other financial institutions published by Gartner in August 2010 found that 40 percent identify SOA+EDA as their primary architectural approach for new applications, whereas 26 percent identify SOA, 10 percent EDA, 18 percent three-tier, and 6 percent monolithic.

7 Do a Google search for “SOA 2.0” for a recent view of this.

8 The motivation for required service specifications is to enable implementations to be changed without introducing inconsistencies, so-called plug-and-play; see Luckham, The Power of Events (Boston: Addison-Wesley, 2002), Section 4.6.1.

9 By traditional SOA, we refer to the state of SOA thinking about the time of the initial release of CORBA in 1991.

10 Although I have never had complete confidence in Wikipedia, the entries on SOA as of 2009 were as good a documentation of the state-of-concept chaos in the SOA implementation world as I have found.

11 www.oasis-open.org/committees/tc_home.php?wg_abbrev=soa-rm

12 “Service-Oriented Architecture,” Wikipedia, as of August 2009. http://en.wikipedia.org/wiki/Service-oriented_architecture

13 See the definition of EDA in this book’s Glossary of Terminology.