CHAPTER 3

First Concepts in Event Processing

This chapter introduces some of the most basic concepts in event processing:

- Events

- Event clouds

- Layers of events

- Event streams

- Three terms in event processing: EP, ESP, and CEP

- Patterns of events

- Event hierarchies

- Complex event processing

Complex event processing (CEP) is the logical and obvious next step in the applications of event processing that are described in Chapter 2 and illustrated in Figure 2.7. CEP is a set of concepts and principles for processing high-level events—business events, for example. It describes the kind of modern event processing that is needed to support the management levels of today’s business enterprise. There are many different ways to implement these concepts by means of tools and applications.

This chapter is about some of the basic concepts of CEP, how they were first used in the early commercial applications, and examples of how they are being applied commercially today. We also introduce two other common terms used in referring to the processing of events today, EP and ESP.

New Technology Begets New Problems

By the late 1980s, communication by higher-level events had become the basis for running enterprises everywhere: in business, in government, and in the military. Any large enterprise had linked its applications across the networks from office to office, sometimes around the globe. It now operated on top of what was referred to as “the IT layer,” a layered system of software and hardware for transporting communications at the level of business operations while hiding its underlying details from its users. Business and management level events (e.g., trading orders, sales reports, inventory updates, schedule changes, or just plain email) were entering the IT layer of the enterprise from all corners of the globe, from external sources as well as from its own internal offices, and in all the different formats used by the business software applications of the time.

Today, enterprises are no longer processing a simple stream of events—a single one-after-the-other sequence of events ordered by their time of arrival. This has become a cell-phone world! In some cases, businesses are now dealing with hundreds or thousands of event feeds simultaneously, coming from many different sources and all jumbled together. Essentially the modern information-driven enterprise is operating in a veritable cloud of higher-level events, some of which are relevant to business decision making.

As the use of information technology increased, new concerns began to appear. Not only did one need to keep the enterprise IT layer running smoothly, but one was faced with trying to understand what was happening in it. The event cloud obviously contained a lot of information, called business intelligence, that would be useful in managing the enterprise. But the cloud did not come with any explanation; events simply arrived! And in some applications, say in arbitrage trading across multiple markets, events arrived at rates of many thousands of trading events per second. Prior to CEP, attempts to extract business intelligence from the event cloud amounted to storing the events in data warehouses and later on trying to do data mining, thus losing any ability to take right-now action!

What kinds of information might one try to extract from the cloud of events flowing through the IT layer of an enterprise? Here are a few examples of the kinds of questions a real-time enterprise might want to answer:

1. Are our business processes running correctly and on time? This question might apply, for example, to supply chain operations or online retail websites or airline operations, or various areas of trading and finance where time is critical.

2. How will the current weather conditions in the southeastern United States impact our flight schedules in Chicago during the next 48 hours?

3. Is our information at risk? Has someone installed spyware on our IT layer? Is anyone trying to steal data from us?

4. Are our financial traders violating their permissions?1

5. Are our accounting processes complying with government regulations?

6. Is there an opportunity developing right now between different financial markets for our trading programs to make a profit?

7. Are our trucks making deliveries and pickups within today’s target schedule limits? If not, can we reschedule some deliveries or pickups right now to catch up?

8. Are our call centers servicing our customer requests in good time? How are our customers reacting?

Every business has questions similar to these that it would love to have answers to. They range across many different aspects of business, from managing the enterprise to detecting market opportunities as they happen, to protecting the enterprise or ensuring that its processes conform to policies. All kinds of possible uses of the information in the event cloud have arisen. And the emphasis is on right now—getting the answers as the events cross the IT layer.

These are the kinds of problems that created a market for CEP.

The most basic concept in CEP is the event. So we start by asking the obvious question: what is an event?

We already mentioned that there are two definitions of the word event, and we use both of them in most of our discussions about event processing. So let’s clear this up once and for all by going over it in detail.

First there is the everyday use that is found in a dictionary:

Definition 1

An event is anything that happens or is thought of as happening.

Examples:

- A key stroke

- A stock trade

- A simulated event using a model of a system

- A crash landing in a flight simulator

- A dream or imagined happening

- A natural occurrence such as an earthquake

- A social or historical happening such as the abolition of slavery, the battle of Waterloo, the Russian revolution, or the 1929 stock market crash

The dictionary definition is quite subtle because, as we discussed before, it not only allows that an event can be something that actually happens in the real world, but also that it can be imaginary and does not really happen. Our example is driving a car and approaching a red light. You might well imagine the events that would happen if you didn’t stop! But since you do stop, the events you imagined don’t happen. Those events were imagined as happening, but never did actually happen. Nonetheless, they are events. In the simulation world these kinds of events are called virtual events.2

Definition 2

An event is an object that represents or records an activity that happens, or is thought of as happening.

Examples:

- A weather prediction output by a weather simulator

- A purchase confirmation that records a purchase

- A signal resulting from a computer mouse click

- A stock ticker message that reports a stock trade

- An RFID sensor reading

This second meaning, an event object, describes events that are processed in computer systems. Computer systems such as simulations use event objects to represent or record events (activities) that happen or could happen. Often the purpose of this kind of event processing is to predict what might happen in the real world. As our examples show, these objects can be any kind of data structure from binary strings to records and other complex data types.

For example, a weather prediction is an object that can be read, shared with others, or input into a travel scheduler. It represents an event that may or may not happen. A computer operating system reacts to events such as a mouse click or a keyboard stroke. Clicking a mouse results in an event internal to the computer that is processed by the operating system. And a sensor reacting to an RFID tag results in an event object that is a set of electrical signals from the sensor.

Now, we have a choice to make about how we talk about events. We could choose to talk about both events and event objects—that is, we can distinguish between an activity that takes place and an object that represents that activity by always calling the first “an event” and the second “an event object.” But this quickly gets tiresome. So we will use the word “event” in both meanings and rely upon the context to make clear which meaning is intended. Programmers would say that we overload the word event. But occasionally, for absolute clarity, we’ll use “event object” where needed.

Events are not just messages—a popular misconception! Yes, one form of an event might look like a message. But it contains additional information. An event describes not only the activity it represents, but when it happened, how long it took, and how it is related to other activities. So an event describes an activity together with its timing and causal relationships to other activities. We speak of events as being related in the same way as the activities they represent.

An event has a time associated with it—called its time stamp—that is the time at which the activity happened. If the activity took some time to happen, then an event that represents it will have a time interval associated with it—its start time and its end time. And some events may cause other events, if their activities cause one another. So events may be related by causality, time, and other relationships.

Note that in practice, some event processing systems may not include time stamps in their events. We would think of such systems as being deficient.
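As an informal illustration only, an event object that carries a time stamp, an optional time interval, and links to its causes might be sketched in Python as follows. The class name `Event`, its field names, and the sample events are our assumptions for this sketch; they are not the representation described in the later chapter.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Event:
    """Hypothetical event object: an activity plus its timing and causes."""
    name: str
    start: datetime                       # time stamp: when the activity began
    end: Optional[datetime] = None        # set only if the activity took time
    caused_by: List["Event"] = field(default_factory=list)

    @property
    def duration(self) -> timedelta:
        # An instantaneous event has zero duration.
        return (self.end or self.start) - self.start


login = Event("login", datetime(2024, 1, 5, 9, 0))
transfer = Event(
    "transfer",
    start=datetime(2024, 1, 5, 9, 2),
    end=datetime(2024, 1, 5, 9, 3),
    caused_by=[login],
)
```

Here `transfer` records both a time interval and a causal link back to `login`, which is exactly the information a bare message would lack.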

We will describe data structures of events and how their causal and timing relationships are represented in a later chapter. For now, here are some examples.

Example 3.1

Event1: I send you an email at 13.00 Pacific standard time.

Event2: You send me a reply at 17.00 Eastern standard time.

Event1 causes Event2.

In fact, we could be more precise by giving the times of both events in Pacific standard time, as well as their relationship:

Event1 at 13.00 PST causes Event2 at 14.00 PST.
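The conversion to a common time zone in Example 3.1 can be checked with a few lines of Python. This is a minimal sketch: the calendar date is arbitrary (the example gives only times of day), and the fixed offsets assume standard time is in effect for both zones.

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets for standard time (assumed: no daylight saving in effect).
PST = timezone(timedelta(hours=-8), "PST")
EST = timezone(timedelta(hours=-5), "EST")

# The calendar date is arbitrary; the example gives only times of day.
event1 = datetime(2024, 1, 15, 13, 0, tzinfo=PST)   # email sent
event2 = datetime(2024, 1, 15, 17, 0, tzinfo=EST)   # reply sent

event2_in_pst = event2.astimezone(PST)
print(event2_in_pst.strftime("%H.%M %Z"))   # 14.00 PST
print(event2 - event1)                      # 1:00:00
```

Once both time stamps are expressed in the same zone, it is plain that the reply follows the original email by one hour, which is consistent with Event1 causing Event2.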

Example 3.2

Event1: A black car approaches a traffic light as it turns from green to yellow and decides to enter the intersection anyway.

Event2: At the same time, a white car approaches the red light on the cross street and decides not to stop as the light turns from red to green.

Event3: The two cars collide in the intersection.

Event4: Both drivers report not being injured.

Event1 and Event2 cause Event3.

Well, we said it was an event-driven world! The point in Example 3.2 is that the first two events, taken together, caused the third event. A pattern of two events caused the collision. Patterns of events, and how to specify them, are one of the fundamental concepts in CEP. Again, we can be precise by giving the event times, since it is crucial that Event1 and Event2 happened very close together in time.

But what caused Event4? Some would say it has no cause, since it is a report of the absence of other events, namely injuries! Others would say that Event3 caused Event4 because the report of no injuries would only have been given because of the collision. I’ll leave it to you to decide. Causality between events is not always cut and dried. It is sometimes debatable and sometimes unknown.

Definition 3

Event Processing (EP) is any processing by computer of event objects.

Event processing (EP) is a very general term. It may refer to event-driven simulation—say, to make weather forecasts—or to the scanning of event feeds from airports and other entry points to the United States by Homeland Security to detect the entry of possible terrorists, or indeed any other kind of processing of event objects.

Complex Event Processing (CEP) is a subset of EP. We will describe the concepts of CEP later in this chapter.

What kind of event environment does today’s enterprise have to deal with? Well, nowadays most “wired-in” enterprises are using the Internet, cell phones, private networks, and other sources of events to do business, so they have to deal with a veritable cloud of events, as illustrated in Example 3.3.

Example 3.3: The Global Event Cloud

The term global event cloud3 is often used to refer to the set of all the events entering an enterprise, together with the timing, causality, and other relationships between those events. The event cloud enters the enterprise through its own IT layers and communications as well as through a lot of outside sources that the enterprise is using to conduct business.

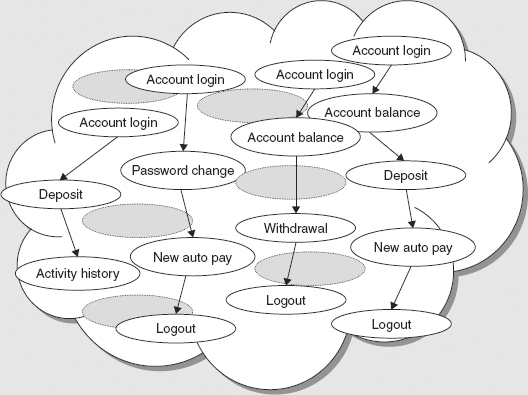

Figure 3.1 shows an event cloud of the customer actions on an online banking website. Each linear sequence of events represents a customer’s actions in accessing an account and making various banking transactions in the order they were done.

FIGURE 3.1 An Event Cloud in an Online Banking Website

Example 3.3 assumes that a customer must perform banking actions one at a time. There may be hundreds of customers on the website at any one time. Example 3.4 illustrates how monitoring the event cloud can help.

Example 3.4: Detecting Patterns of Suspicious Activity in the Event Cloud

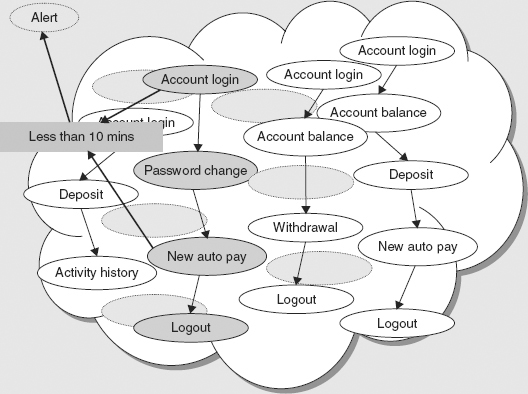

Figure 3.2 shows a result of monitoring the event cloud from the online banking website for patterns of events that might result from unusual activity. One of the event sequences involves an account login, a password change, and then the installation of a new automatic payment order to transfer money out of the account, all happening within a short period of time—under ten minutes.

FIGURE 3.2 A Pattern of Unusual Account Activity

Each of these events by itself is normal. But the order in the sequence is suspicious. Why change the password just before withdrawing money? If the action is fraudulent, it will deny the legitimate account holder access, making him or her unable to see what has happened and stop the transfer of money from the account. Detecting this pattern of events in the website might trigger an alert, which could itself trigger other rules to take further action, such as contacting the account holder or temporarily blocking the account from further activity.
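To make the pattern in Example 3.4 concrete, here is a minimal Python sketch that scans a jumbled set of website events for a login, then a password change, then a new payment order on the same account, all in under ten minutes. The action names, the tuple layout, and the function `suspicious_accounts` are assumptions made for this illustration, not part of any banking system or event processing product.

```python
from collections import defaultdict
from datetime import datetime, timedelta

PATTERN = ("login", "password_change", "new_payment_order")  # assumed action names
WINDOW = timedelta(minutes=10)


def suspicious_accounts(events):
    """Return accounts whose events contain PATTERN, in order, in under WINDOW.

    `events` is an iterable of (account, action, timestamp) tuples.
    """
    by_account = defaultdict(list)
    for account, action, ts in sorted(events, key=lambda e: e[2]):
        by_account[account].append((action, ts))

    flagged = set()
    for account, actions in by_account.items():
        for i, (action, start) in enumerate(actions):
            if action != PATTERN[0]:
                continue
            step = 1  # index of the next pattern element we are waiting for
            for later_action, ts in actions[i + 1:]:
                if ts - start >= WINDOW:
                    break
                if later_action == PATTERN[step]:
                    step += 1
                    if step == len(PATTERN):
                        flagged.add(account)
                        break
    return flagged


t0 = datetime(2024, 1, 5, 9, 0)
events = [
    ("acct-1", "login", t0),
    ("acct-2", "login", t0 + timedelta(minutes=1)),
    ("acct-1", "password_change", t0 + timedelta(minutes=2)),
    ("acct-2", "balance_check", t0 + timedelta(minutes=3)),
    ("acct-1", "new_payment_order", t0 + timedelta(minutes=6)),
    ("acct-3", "login", t0),
    ("acct-3", "password_change", t0 + timedelta(minutes=5)),
    ("acct-3", "new_payment_order", t0 + timedelta(minutes=30)),
]
print(suspicious_accounts(events))   # {'acct-1'}
```

Only `acct-1` is flagged: `acct-2` never changes its password, and `acct-3` completes the sequence too slowly to fall inside the window.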

Fraud detection is an expanding market area for commercial applications of event pattern processing today. Unfortunately, it turns out that the methods of detection being employed at the moment are often clumsily hardwired—e.g., some are little more than very long conditional branches of computer code that test all the various special cases of fraud that have been experienced. This makes the detection system inflexible and difficult to change, and of course, subject to inefficiencies and errors. Inflexibility is the friend of the crook! Adoption of high-level event pattern languages supported by event processing rules engines makes fraud detection systems much more flexible and easier to update as new scams appear.

Levels of Events and Event Analysis

Clouds of events form the operating environment of every modern business enterprise today. Although one might think of the cloud of events on the IT layer as one big jumbled mass, it turns out that the events can be organized into some very simple structures. One way to organize events is by layers or levels.

The layering idea goes back to the earlier use of event layers in defining industry standards for communication over networks by means of events. The messaging standards, for example, define six or seven separate layers of events.4 The lowest level is the physical electrical media that actually transport events called packets. Packets are just sequences of binary bits encoding the data and network information. There are lots of packets corresponding to one higher level event—like, say, sending an email message.

Levels of events are also used extensively in design methods, such as designing hardware chips, and in discrete event simulation of hardware designs.

The idea of levels of events has applications to business events too. It gives us one way to break down high-level business events into component lower-level events. One example is a very high-level business event, like a merger of two companies. That event will depend upon lots of lower-level business events: arranging financing and settling with stockholders, merging departments, eliminating jobs, unifying product lines, organizing marketing and sales strategies, and so on. The breakdown into lower-level component events is essential to scheduling and carrying out the high-level event, as well as to tracking the progress of the merger and keeping it on schedule.

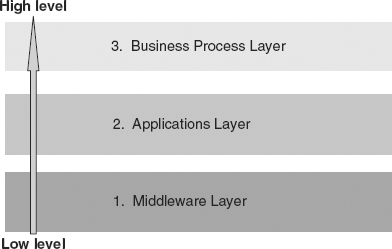

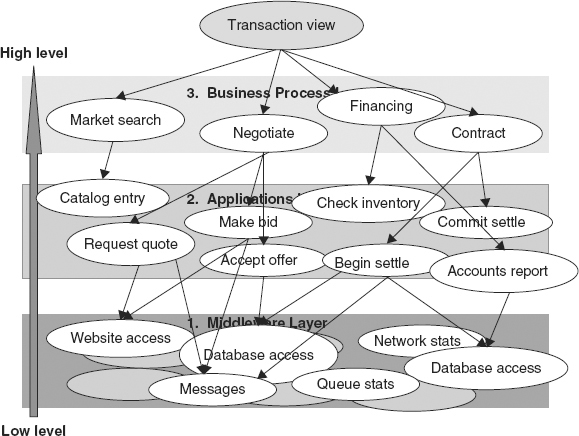

There are lots of other reasons for using layers in structuring everyday business events. A business transaction such as a sale or trade will be broken down into lower-level events used to execute that sale or trade, such as the delegation of contracting and accounting and financing. And these events in turn involve lower-level events, such as the use of various business software applications. There are even lower-level events, most of which are IT events rather than business events. Some of the typical layers in processing business events are shown in Figure 3.3—note that this is a small sample; there are other levels in between the ones shown.

FIGURE 3.3 Some of the Typical Layers of Events in Business Processing

Event layers are useful in building analysis tools that help us to track the steps in our business processes as they are running. We can see with the help of graphical interfaces how a business transaction depends upon lower-level operations. Business process analysis tools work just like the network management tools of earlier times. They can show us how different transactions that are being executed at the same time might be competing for resources, or what we can do to improve the times required for those transactions and identify which lower-level operations might be causing errors. We can get the statistical breakdown of events at each level, as well.

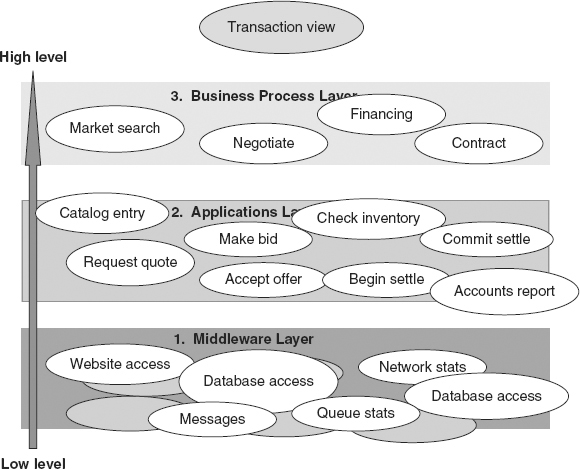

Figure 3.4 shows business events and lower-level events at each of the levels.

FIGURE 3.4 Layers of Business Events and Lower-Level Component Events

A high-level business transaction executed using application software tools breaks down into a series of process steps, such as a market search followed by a negotiation followed by setting up financing and finalized by negotiating a contract (see Figure 3.5). These events are all at the business process level in our hierarchy.

FIGURE 3.5 Dependencies between Events Created by Business Processes and Lower-Level Events Resulting from Actions by the Processes

Each of the business process level events is again composed of sets of lower-level events signifying the use of various applications. Market search might involve several catalogue searches, for example. A negotiation may be composed of requests for quotes, bids, counterbids, and finally acceptance of an offer. Financing may involve a lot of accounting operations, use of spreadsheets, and so on. Figure 3.5 illustrates a few of the possible dependencies between events at each of the three levels in our illustrations.

Level-wise breakdown of business process events lets us analyze the steps involved in creating any high-level event, perhaps to see how we could have done it better or to see what might have caused a process to hang up or go wrong. Also, if we have defined the dependencies between events at different levels, we have the ability to track and analyze our processes right down to the use of individual software applications, databases, and perhaps lower-level events corresponding to distributed communications as well, if necessary—perhaps if something goes wrong.

For example, if a transaction fails for something as mundane as a message going astray and not being answered, knowing how the level-wise breakdown is done is crucial in finding out the causes of the error. Analysis using this level-wise breakdown of higher events into sets of lower events was one of the first applications of event processing analytical tools.

Of course, business managers are interested mainly in the events at the business process layer. And if it is necessary to analyze what happened at the lower-level events, that task is handed off to the IT department! But the breakdown is there for everyone to use.

While there are several examples of industrial standards for event hierarchies—for example, in defining messaging protocol standards—very little has been done to define business event hierarchies. Business event hierarchies can be defined by sets of rules that specify the breakdown of higher-level events into sets of lower-level events.
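One simple way to picture such rules is as a table that maps each higher-level event type to the lower-level event types it breaks down into. The sketch below uses the event names from the business transaction example in this chapter; the dictionary layout and the helper functions `leaf_events` and `parents_of` are our own assumptions, not an established standard.

```python
# Each rule maps a higher-level event type to the lower-level event types
# it breaks down into. The names follow the chapter's example; the
# structure itself is an assumption.
BREAKDOWN_RULES = {
    "business_transaction": ["market_search", "negotiation", "financing", "contract"],
    "market_search": ["catalogue_search"],
    "negotiation": ["request_for_quote", "bid", "counterbid", "acceptance"],
    "financing": ["accounting_operation", "spreadsheet_use"],
}


def leaf_events(event_type, rules=BREAKDOWN_RULES):
    """Expand an event type down to the lowest-level types the rules define."""
    children = rules.get(event_type)
    if not children:
        return [event_type]
    leaves = []
    for child in children:
        leaves.extend(leaf_events(child, rules))
    return leaves


def parents_of(event_type, rules=BREAKDOWN_RULES):
    """Trace upward: which higher-level events depend on this one?"""
    return [parent for parent, children in rules.items() if event_type in children]


print(leaf_events("negotiation"))
# ['request_for_quote', 'bid', 'counterbid', 'acceptance']
print(parents_of("bid"))
# ['negotiation']
```

Reading the rules downward gives the breakdown of a high-level event; reading them upward tells us which business process a stray low-level event belongs to.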

Remark on Standards for Business Events

The need to have precisely defined standards for business and management-level event processing will be one of the next challenges that commercial event processing software will have to face.

Standardization of event representations, event processing terminology, and eventually, event hierarchies will become a necessity in the future for specific business areas. Doubtless, it will be a somewhat chaotic activity focused on narrow areas of business activity, with localized standards between groups of companies gradually becoming more widely accepted.

There will be a variety of driving forces behind such efforts. One will be a need to integrate different event-driven business systems to facilitate collaborative business agreements, mergers and acquisitions, and so on. Another might be standards efforts arising among consumers of event processing products. Additionally, the event processing vendors might agree on some standards for event processing in specific markets. There is also a distinct possibility of mergers and acquisitions in the vendor space resulting in a few large vendors of event processing, which would certainly lead to partial de facto standardization.

We hazard a guess that event processing standards defining layers of events will be seen in the next ten years in such areas as stock market analysis and trading, real-time inventory control for specific markets, retail stores management, consumer relations, airline operations, manufacturing, online retailing and marketing, to name a few possibilities.

Many areas of opportunity will develop in which event processing standards will be beneficial to all of the actors. This much is obvious. What is not clear is how and from where the standards efforts will come—the vendors, the consumers, or all of the parties. Such efforts, when resulting from collaborations, are known to be arduous and time-consuming, and they always deliver less than one would hope. Nevertheless, they are worthwhile, particularly when one considers the alternative of complete chaos.

The glossary of event processing terminology provided at the back of this book can be considered a small start in the direction of business event processing standards.

Some of the first commercially successful CEP applications processed streams of events. They did not use information about how the events were related, such as which events caused others or whether some events were higher level than others. Only data contained in the events were used. So events such as stock trades were used simply as messages.

These CEP applications were stock trading applications. They processed events in the order in which they arrived in the event feeds. They may have been receiving events from several different markets at the same time, so some events were clearly unrelated, while others may have had close trade relationships. The advantage was simplicity of implementing the event processing. It allowed high volumes of events to be processed in near real time. The disadvantage was that sometimes information that could be used to advantage in trading was lost, such as which pairs of events tended to occur together in small time windows.

Definition: An event stream is a linearly ordered sequence of events occurring within a specified time.

Note: The concept of event cloud includes the concept of an event stream as a special case in which the events fall nicely into a linear order, one after the other. The time between each of the events in order for the events to be considered a stream is left open (i.e., to be determined by the observer). Event clouds and event streams can be finite.

Common examples of an event stream are a stream of readings from a sensor or a ticker tape of stock market news. These kinds of event feeds are a sequence of events that arrive at a processor one event at a time. This is called the order of arrival ordering.

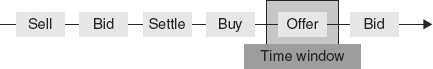

When events are processed in their order of arrival, this is called event streams processing (ESP). Typically, stock trading feeds or sensor data are processed in their order of arrival. For example, stock trading feeds reporting buy, offer, bid, or sale trades in stocks are the result of merging lots of events from different exchanges into a single stream. Figure 3.6 shows a stock market feed of trading events. The events are ordered by when they were entered into the feed. This is not necessarily the order in which the trades were executed.

FIGURE 3.6 A Stream of Stock Market Trading Events

Early trading algorithms processed trades in order of arrival. These event processors had to deal with high numbers of events in a short time window, say ten minutes. They had to make computations on the data in the events and produce a stream of results. Some trading algorithms were programmed to react in milliseconds when they detected trading situations indicated by patterns of events that had been defined beforehand.

So in these early financial trading applications, one of the requirements was that the event processor could handle the rate at which events arrived in stock trading feeds. Thus a name for a subset of EP and of CEP was coined: event streams processing (ESP). The intention was to imply that a tool that did ESP could handle high-speed event feeds.

There was another reason for the appearance of the acronym ESP. Some of the event processing vendors’ marketeers feared that the “complex” in CEP would arouse fears in their customers, associated with the idea of impenetrable and faulty software. This is quite understandable, one must say! So some vendors used the term ESP as a marketing ploy for a year or two. But they soon began to realize that they needed to explore other markets where the event clouds were perhaps more complex than streams.

There are many event processing applications, outside of stock trading, where orderings of events, such as their creation order or their causal order, are not taken into account, even though the processing could be more efficient and yield more accurate results if they were. This is because the implementations of event processing are not as sophisticated as they should be. Consider Example 3.5.

Example 3.5: Event Streams Processing

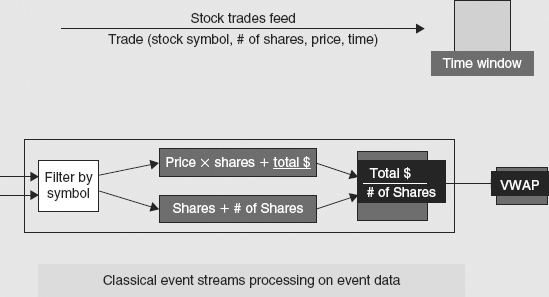

A common component in stock market event processing is the Volume Weighted Average Price (VWAP) computation. Figure 3.7 shows a typical event feed as a sequence of stock trade events where each event contains data such as the stock symbol, the number of shares of that stock traded, the price, and the time. As events arrive, they are processed during a time window that may be a few minutes up to perhaps an hour. The VWAP computation shows two feeds being processed simultaneously.

FIGURE 3.7 Event Streams Flow through Processing Functions to Compute VWAP

First, a filtering operation is applied to split the two feeds into a set of streams of trade report events, one for each stock symbol. This is a basic event processing operation. These streams of report events for trades in each stock symbol are sent to two functions. One computes total dollar amounts over a time window, while the other just adds up the total volume of the trades in the same time window. The results are a stream of dollar amounts and a stream of share volumes for each stock symbol. These two streams are fed into a third computation that divides the dollar amounts by the share volumes. The result is a stream of complex events giving the VWAP for each symbol. Only simple techniques of event processing are involved: filtering stock feeds by symbol and moving events between computations. The complexity of the algorithm lies in the functions that are applied to the events. So the real “smarts” are in the computations performed on the data in the events. The events themselves are treated simply as carriers of data. Actual financial trading applications will involve networks of such computations in which VWAP is just one node, but the event processing is still very simple.
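The three steps just described (split by symbol, total the dollar amounts and share volumes, then divide) can be sketched in a few lines of Python for a single time window. The function name `vwap_by_symbol`, the tuple layout, and the symbols `AAA` and `BBB` are hypothetical and chosen only for this illustration.

```python
from collections import defaultdict


def vwap_by_symbol(trades):
    """Compute VWAP per symbol for the trades in one time window.

    `trades` is an iterable of (symbol, shares, price) tuples.
    """
    dollars = defaultdict(float)   # total dollar amount per symbol
    volume = defaultdict(int)      # total share volume per symbol
    for symbol, shares, price in trades:   # split the feed by symbol
        dollars[symbol] += shares * price
        volume[symbol] += shares
    # Divide dollar amounts by share volumes, skipping empty symbols.
    return {s: dollars[s] / volume[s] for s in dollars if volume[s] > 0}


window = [
    ("AAA", 100, 10.0),
    ("BBB", 50, 20.0),
    ("AAA", 300, 12.0),
]
print(vwap_by_symbol(window))   # {'AAA': 11.5, 'BBB': 20.0}
```

For `AAA` the window holds 400 shares worth 4,600 dollars, giving a VWAP of 11.5. Note that the events serve purely as carriers of data here; no timing or causal relationship between them is used.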

In a later chapter we will discuss another way to look at the VWAP events—as higher level abstractions of time windows of stock trade events.

Today, algorithmic trading is an increasingly important market for event processing applications. This is particularly true in arbitrage trading on electronic markets, in which the execution of trading orders is automated because the times involved are on the order of milliseconds. The algorithms used at trading companies are often closely guarded secrets.

In general, an enterprise has to deal with many event streams simultaneously, and the events in these streams can be connected or related in many ways. Events in the same stream are related by their arrival time in that stream. They may have different timing relationships as well—their creation time, for example, which is not necessarily the same as their order in the stream. Events in different streams may also be related; some of the events in one stream may be the causes of events in other streams.

If knowing how events are related is important for the kind of event processing that needs to be done, then the streams cannot be treated separately, or shuffled into a single stream. The processing must deal with the event cloud and the event relationships that exist in the cloud, such as timing and causality.

The order of arrival of events can be misleading for many applications. It may not be the order in which events were created, or happened. The time at which an event was created is called its creation time. An event A can be created earlier than another event B according to the local times5 in which they were both created, but B can arrive at the processor before A does.
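A small Python sketch shows how the two orderings can disagree. The event names, the time stamps, and the tuple layout are invented for the illustration, and all times are assumed to have been converted to UTC already.

```python
from datetime import datetime, timezone

# (name, creation_time, arrival_time), all hypothetical, already in UTC.
utc = timezone.utc
received = [
    ("B", datetime(2024, 1, 5, 9, 0, 2, tzinfo=utc), datetime(2024, 1, 5, 9, 0, 3, tzinfo=utc)),
    ("A", datetime(2024, 1, 5, 9, 0, 1, tzinfo=utc), datetime(2024, 1, 5, 9, 0, 5, tzinfo=utc)),
]

arrival_order = [name for name, _, arrived in sorted(received, key=lambda e: e[2])]
creation_order = [name for name, created, _ in sorted(received, key=lambda e: e[1])]

print(arrival_order)    # ['B', 'A']
print(creation_order)   # ['A', 'B']
```

A processor that looks only at arrival order would conclude that B came first, even though A was created a second earlier.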

Consider, for example, medical reports of incidents of a disease that may be suspected as a precursor to an epidemic outbreak. These may be coming from doctors’ offices all over the country. Some reports are made at the same time (in parallel, so to speak). And the reports from different offices are independent because there is no relationship between the doctors or the patients. The reports arrive at the Health Department as an event cloud. They may be shuffled into one big stream at the Department’s information office, but the timing information in the reports would not be ignored, and it certainly isn’t the time at which the reports arrived.

Many industrial event processing problems cannot be simplified by treating the incoming events as a stream ordered by their time of arrival. Common relationships between events are usually not linear. For example, their creation times are often “all over the place”; that is, they do not form a nice orderly sequence. Some events can happen at the same time, while others happen hours apart. The times of some events may need to be adjusted to a common time zone because they happened in widely separate locations.

Causality between events is usually nonsequential too. Take your email folder as an example. A message in it caused another message, if the second one is a reply to the first one. You can get causal chains, message M1 caused Message M2, which caused M3, and the like—that is, a reply to a reply to a message. But your message folder is not likely to be one single long chain. It is far more likely to contain lots of short chains of three or four messages and replies.
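The email folder can be sketched in Python by recording, for each message, which message it replies to, and then following those links backward. The message identifiers, the `reply_to` table, and the function `causal_chain` are hypothetical; the sketch also assumes that each message has at most one reply.

```python
# Hypothetical folder: message id -> id of the message it replies to (or None).
reply_to = {
    "M1": None,
    "M2": "M1",
    "M3": "M2",
    "M4": None,
    "M5": "M4",
    "M6": None,
}


def causal_chain(message_id, reply_to):
    """Follow 'is a reply to' links back to the message that started the chain."""
    chain = [message_id]
    while reply_to.get(chain[-1]) is not None:
        chain.append(reply_to[chain[-1]])
    return list(reversed(chain))


# A message ends a chain if no other message replies to it.
replied_to = {parent for parent in reply_to.values() if parent is not None}
chains = [causal_chain(m, reply_to) for m in reply_to if m not in replied_to]

print(chains)   # [['M1', 'M2', 'M3'], ['M4', 'M5'], ['M6']]
```

Six messages yield three separate short chains rather than one long sequence, which is why causality cannot be read off the order of the folder.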

Example 3.6: Airline Baggage Handling

One industry that was an early adopter of event processing was the airline industry and, more generally, the transportation sector, which includes railways and shipping. Adoption within a company is not a single decision, since it usually involves substantial investment not only in the event processing software but also in integrating the new technology into the company’s operational structure.

Adoption usually starts with a toe-in-the-water proof of concept experiment.

One of the early proof of concept experiments was in airline baggage handling. This is both an airport and an airline responsibility. The airport starts with the problem, but at certain points the airline should take over.

The motivation, of course, was money: quite simply, the cost to the airline of a misdirected bag. More than 42 million items of baggage were mishandled or delayed in 2007, an increase of 25 percent on the previous year, costing airlines and airports an estimated $3.8 billion.⁶ The biggest cause was baggage being mishandled when passengers transferred flights. The next major cause was failure to load baggage (16 percent), followed by ticketing error, passenger bag switch, security, and “other,” which together accounted for 14 percent of the total.

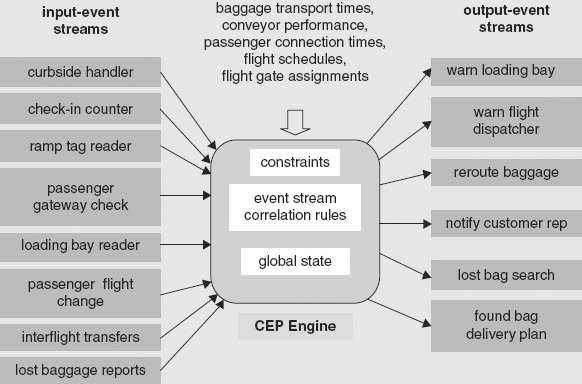

Ideally, an event monitoring system to tackle this problem needs to process many different event feeds resulting from diverse monitoring sources. These include (1) the various pathways that the baggage takes through an airport to be loaded onto the flights and its subsequent handling at connection points and at the final destination; (2) the progress of passengers through the airport, security checkpoints, and final boarding on the flights, as well as at changes of flights; and (3) the progress of the flights. Essentially, such a system is operating in a cloud of airport and airline events, not a simple event stream.

The input-event feeds shown in Figure 3.8 include the baggage tag readers at curbside, ticket counter, and at various points on the pathway transporting baggage from the counter to flight. Similar feeds could be positioned at passenger check-in gates and so on. In total, the events from the input streams form a cloud. Events in different streams are related by time and by common data elements such as passenger names. The information in the input cloud should be checked for consistency—but currently, it is not! A prime example is consistency between the routing of baggage and the routing of a passenger from the ticket counter to the gateway to board a flight. A passenger and a bag are linked together at the ticket counter. At that point the system should know, if it has enough smarts, which flight both should be on. But inconsistencies can and do occur en route through the airport.

FIGURE 3.8 Input- and Output-Event Streams and State Data in Event Processing for Baggage Handling

Typically, a bag can get lost for different reasons—for example, simply falling off a conveyor and failing to arrive, or being misdirected and arriving at an incorrect flight. A passenger can fail to board a flight or get rerouted or delayed by security. The system should detect these situations and issue notifications. More advanced systems might replan baggage handling according to changes in the passenger schedule.

In practice, current baggage handling systems handle only some of these event streams and detect just a few of the situations we have mentioned. Modern airport systems use handheld RFID scanners at points along the baggage routes. But a passenger can disappear into a black hole of security checks or simply get distracted by shopping.

Detecting simple mishandling errors is the first issue. But heading off errors before they occur requires detecting patterns of baggage mishandling in the input-event cloud as they are happening. Patterns of events can be used to detect situations where the handling of a bag is likely to result in an error.

Example 3.6 and Figure 3.8 show an idealized view of an event processing system for airline baggage handling. It receives many input streams, shown on the left. The system contains an event pattern rules engine (that is, a CEP engine). It also has smarts about airport operations, such as baggage transport times within the airport, how the conveyors are performing, flight schedules and schedule updates, gate assignments, and so on. And it outputs various notifications and warnings, shown on the right in Figure 3.8. Examples of the outputs are warnings to airline flight dispatchers about late baggage, advisories to plan rerouting of baggage, notifications to airline customer reps that baggage has missed a flight, and plans for delivery of found baggage that had been lost.

Some examples of simple event pattern monitoring rules in baggage handling systems are:

Counter checks:

if bag.tag.flight_number = N and N.destination = passenger_ticket.destination

then accept bag

else raise error and reject bag.

if flight.departure_time - passenger.check_in_time < bag.transit_time

action notify (passenger, “insufficient time”)

The counter checks are aids to airline counter agents who deal with passengers and baggage. They are real-time event monitoring rules. The first rule compares the destination of the flight on a baggage tag with the destination on the passenger’s ticket when it is issued at the ticket counter. The second is a rule that will warn passengers that their bags may not reach their flight in time to be loaded.
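The two counter checks might be written in Python roughly as follows. This is a sketch, not the code of any deployed system: the `Flight` and `BagCheckIn` types, their field names, and the outcome labels are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Flight:
    number: str
    destination: str
    departure_time: datetime


@dataclass(frozen=True)
class BagCheckIn:
    """Hypothetical event raised when a bag is tagged at the counter."""
    tag_flight_number: str
    ticket_destination: str
    check_in_time: datetime
    transit_time: timedelta  # estimated counter-to-aircraft time


def counter_checks(event, flights):
    """Apply both counter rules; return a list of (action, detail) outcomes."""
    flight = flights.get(event.tag_flight_number)
    # Rule 1: the tagged flight must go where the ticket says.
    if flight is None or flight.destination != event.ticket_destination:
        return [("reject", "tag does not match ticket")]
    outcomes = [("accept", flight.number)]
    # Rule 2: warn if the bag cannot reach the flight before departure.
    if flight.departure_time - event.check_in_time < event.transit_time:
        outcomes.append(("notify_passenger", "insufficient time"))
    return outcomes


flights = {"UA101": Flight("UA101", "SFO", datetime(2011, 3, 1, 12, 0))}
transit = timedelta(minutes=45)
on_time = BagCheckIn("UA101", "SFO", datetime(2011, 3, 1, 10, 0), transit)
late = BagCheckIn("UA101", "SFO", datetime(2011, 3, 1, 11, 30), transit)
wrong = BagCheckIn("UA101", "LAX", datetime(2011, 3, 1, 10, 0), transit)

print(counter_checks(on_time, flights))
print(counter_checks(late, flights))
print(counter_checks(wrong, flights))
```

The rules return outcomes instead of acting directly, so the same checks could drive an agent's screen, a log, or a downstream rule.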

Trolley loading check:

if bag.tag.flight_number = trolley.destination.flight_number

then load bag

else send bag to rerouting

This is an example of a rule that could avoid some baggage handling errors. It could be applied at many points in an airport—for example, when bags are in transit between connecting flights. The event feeds could be generated by handheld RFID tag readers during unloading and loading.
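A minimal sketch of the trolley rule, applied to a feed of tag reads, could look like this. The dictionary keys and the flight numbers are hypothetical; a real RFID feed would carry more fields.

```python
def trolley_check(tag_read, trolley_flight_number):
    """Decide what to do with one scanned bag at a trolley."""
    if tag_read["flight_number"] == trolley_flight_number:
        return ("load", tag_read["bag"])
    return ("reroute", tag_read["bag"])


# Hypothetical tag reads from a handheld scanner, in scan order.
scans = [
    {"bag": "B1", "flight_number": "UA101"},
    {"bag": "B2", "flight_number": "UA202"},
]

decisions = [trolley_check(scan, "UA101") for scan in scans]
print(decisions)  # [('load', 'B1'), ('reroute', 'B2')]
```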

Baggage handling systems using CEP rules have been in operation at some airports for a few years. The event processing in these systems can involve quite sophisticated CEP principles. They are being continually improved by updating the patterns of events that they use to monitor the event inputs.

We may expect to see proactive flight management event patterns like the following example in future systems:

Security bag loading check:

if bag.tag.passenger_name = X and loaded(bag) and not boarded (X)

and gate.closed

=> action set flight.status = hold until unloaded(bag)

This rule would take a bag off a flight after it had been loaded if the passenger was a “no show.”
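Unlike the earlier rules, this one depends on several events and on the absence of one of them (the passenger boarding), so a sketch needs to keep state across events. The `FlightMonitor` class and its event names below are assumptions made for illustration only.

```python
class FlightMonitor:
    """Tracks one flight and reports bags loaded without their passenger."""

    def __init__(self):
        self.loaded = {}       # bag id -> passenger name
        self.boarded = set()   # passengers who have boarded
        self.gate_closed = False

    def on_event(self, kind, **data):
        """Record one event and return the resulting flight status."""
        if kind == "bag_loaded":
            self.loaded[data["bag"]] = data["passenger"]
        elif kind == "bag_unloaded":
            self.loaded.pop(data["bag"], None)
        elif kind == "passenger_boarded":
            self.boarded.add(data["passenger"])
        elif kind == "gate_closed":
            self.gate_closed = True
        return self.status()

    def unaccompanied_bags(self):
        """Bags matching: loaded(bag) and not boarded(X) and gate.closed."""
        if not self.gate_closed:
            return []
        return sorted(b for b, p in self.loaded.items() if p not in self.boarded)

    def status(self):
        return "hold" if self.unaccompanied_bags() else "clear"


monitor = FlightMonitor()
monitor.on_event("bag_loaded", bag="B1", passenger="X")
monitor.on_event("bag_loaded", bag="B2", passenger="Y")
monitor.on_event("passenger_boarded", passenger="Y")
status_at_close = monitor.on_event("gate_closed")
bags_at_close = monitor.unaccompanied_bags()
status_after_unload = monitor.on_event("bag_unloaded", bag="B1")
print(status_at_close, bags_at_close, status_after_unload)
```

The pattern can only match once the gate closes, because until then the passenger might still board. The gate closing is what bounds the wait for the missing event.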

More recently, event processing systems are also being employed to deal with other dimensions of airline operations such as crew scheduling and aircraft maintenance operations.

Complex Event Processing and Systems That Use It

Complex event processing (CEP) does not mean “very complicated processing of events.” It is simple processing applied to events that might sometimes be very complex, but usually not as complex as some of the events we face in daily life!

The term CEP for complex event processing was coined in the late 1990s at Stanford to codify what was going on in the Stanford event processing project and to distinguish that work from research in networks and other areas. It entered the public domain of event processing terminology in 2002.⁷ The acronym stuck as the name for products that process high-level events in business transactions and generally in many areas of enterprise management. Other terms such as “business event processing” and “real-time business intelligence” are also often used.

CEP refers to (1) a set of concepts and principles for processing events and (2) methods of implementing those concepts. Some concepts are well known from other kinds of software systems. Other concepts are only just beginning to enter the state of general practice. For example, one of the key concepts deals with how to specify patterns of events and the elements of computer languages needed for defining event patterns (i.e., the expressive power of pattern languages). Another is how to build efficient event pattern-detection engines. A third area of CEP deals with strategies for using event patterns in business event processing, for example, building systems of business processes triggered by event patterns and monitoring their execution and performance, both when they run correctly and when errors occur. Finally, there are concepts that deal with how to define and use hierarchical abstraction in processing multiple levels of events for different applications within the enterprise.

CEP is best understood in the context of how and where its concepts and techniques are used in an event-processing system.

Event processing systems involve many different technologies that include CEP. They usually deal with several event feeds concurrently from different sources and with different types of events. Event arrival rates from a feed can vary from a few events per hour to thousands of events per second. The processing techniques that are applied vary widely depending upon the goals of the system.

An event processing system may flow the events in a continuous stream through a variety of processing steps that apply various technologies. The processing time of each step is an important consideration.

The commonest first step is to apply very simple criteria to get rid of most of the uninteresting events (i.e., events that cannot contribute to the goals of the processing). This is called filtering. For example, events might be filtered by whether they contain names on some list (say names of stocks), or by who sent them or their date of origin. These criteria are called filters. The main requirements of filters are that they take very little time to execute and that they will not eliminate events that might be relevant.
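A filtering step of this kind can be sketched as a chain of cheap predicates applied to each event. The field names, the stock symbols, and the sender name below are hypothetical.

```python
def symbol_filter(symbols):
    """Keep events whose stock symbol is on a watch list."""
    wanted = frozenset(symbols)  # set lookup keeps the test cheap
    return lambda event: event.get("symbol") in wanted


def sender_filter(senders):
    """Keep events that come from a known sender."""
    trusted = frozenset(senders)
    return lambda event: event.get("sender") in trusted


def filter_events(events, filters):
    """Yield only the events that pass every filter, in input order."""
    for event in events:
        if all(keep(event) for keep in filters):
            yield event


feed = [
    {"symbol": "IBM", "sender": "nyse", "price": 160.0},
    {"symbol": "XYZ", "sender": "nyse", "price": 3.1},
    {"symbol": "IBM", "sender": "unknown", "price": 159.9},
]
filters = [symbol_filter({"IBM", "ORCL"}), sender_filter({"nyse"})]
kept = list(filter_events(feed, filters))
print(kept)  # [{'symbol': 'IBM', 'sender': 'nyse', 'price': 160.0}]
```

Because the filters are ordinary values in a list, the list can be replaced while the system runs. Events that fail a filter are simply not passed on; they are never modified.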

The remaining events after filtering are processed by more complicated techniques. In stock trading, as an example, a next step might be to apply various statistical algorithms to the data in the events. Another processing step, say in medical systems, might be to group events together by some commonality, such as “all reports of malaria cases from West Africa,” and then to apply summarization methods over time windows, say week by week over the past eight weeks. These kinds of steps will result in new events being created that contain the summary data.
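The grouping and summarization step for the medical example might look like the following sketch. The report fields, the fixed seven-day windows counted back from an end date, and the `WeeklySummary` event type are assumptions chosen for illustration.

```python
from collections import Counter
from datetime import date


def weekly_summaries(reports, disease, region, end, weeks=8):
    """Group matching reports into 7-day windows counted back from `end`
    and return one new summary event per window (window 0 is most recent)."""
    counts = Counter()
    for report in reports:
        if report["disease"] != disease or report["region"] != region:
            continue
        age_days = (end - report["date"]).days
        if 0 <= age_days < 7 * weeks:
            counts[age_days // 7] += 1
    return [
        {"type": "WeeklySummary", "disease": disease, "region": region,
         "weeks_ago": w, "cases": counts[w]}
        for w in range(weeks)
    ]


reports = [
    {"disease": "malaria", "region": "West Africa", "date": date(2011, 3, 1)},
    {"disease": "malaria", "region": "West Africa", "date": date(2011, 2, 27)},
    {"disease": "malaria", "region": "West Africa", "date": date(2011, 2, 20)},
    {"disease": "malaria", "region": "West Africa", "date": date(2010, 12, 1)},
    {"disease": "influenza", "region": "West Africa", "date": date(2011, 3, 1)},
]
summaries = weekly_summaries(reports, "malaria", "West Africa", end=date(2011, 3, 1))
print([s["cases"] for s in summaries])  # [2, 1, 0, 0, 0, 0, 0, 0]
```

The summaries are new events. The original reports are read but left untouched, and the report from December falls outside the eight-week span and is not counted.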

One of the cardinal principles of an event processing system is that events are immutable (see the following discussion). In every processing step they may be copied or ignored, but never altered.

One of the first CEP concepts that may be applied at the earlier processing steps is event pattern detection. Patterns of events are critical to many areas of event processing, from stock trading and sales analysis to fraud detection and security. We will have a lot more to say about event patterns and how to specify them!

Another CEP concept is event abstraction. Event-abstraction methods allow us to build systems that can analyze vast numbers of low-level events and create high-level events that summarize (or abstract) information contained in those events. These high-level events are called aggregated events, enriched events, or abstract events, depending upon the kinds of techniques that are used. The result is far fewer events, but ones that provide views of the information contained in the event sources that are meaningful to humans. High-level events are more suitable for specialized analysis that may draw on technologies such as:

- Artificial intelligence and heuristic programming

- Statistics and probability theory

- Game theory and decision analysis

- Event-driven simulation

- Semantic analysis

- Other technologies

A processing system may cycle many times between applying CEP and applying other technologies, so all of the technologies are interwoven, so to speak.

Here’s a brief outline of some of the main concepts of CEP. Some of them will be quite familiar, since they have been common elements of many software systems. Other CEP concepts are new and need explaining, which will be done in later chapters.

- Immutability of events. In CEP all events are immutable. An event cannot be altered or deleted. It can be dropped from any further consideration in an event-processing system, which in effect is the same as deletion (e.g., as a result of filtering). But if an event makes any contribution to the processing, then that original event must always be retrievable if needed. Copies can be created that include changes to data contents or other attributes. Such modified copies are new events. There are strong reasons for immutability. See the discussion following this list.

- Adaptation. The input events are transformed into formats required by an event processing system. This is a preprocessing step that is in general use in any event processing system. Adaptation is often nontrivial, since some event processing systems require many different adaptors to cope with the different types of event input.

- Filtering. Filtering out irrelevant events is a first step toward reducing incoming events to manageable proportions. It should be a fast, small computation step. Criteria for irrelevancy are often decided in advance. But a CEP processor should be flexible enough that the criteria can be adjusted at runtime. Filtering criteria depend upon the goals of the event processing. Filtering is often combined with adaptation. The simplest CEP techniques are used in recognizing irrelevant events or sets of events. There may be several different filtering steps applied in sequence. Many irrelevant events still get through, because filters are usually configured to err on the side of letting through anything that might be useful.

- Prioritization. Prioritization of events as they arrive is an important early step. High-priority events are the ones that require immediate response from the business. They are usually delivered on reserved or private networks and media specially set up to carry the critical operational information. In fact, prioritization may be set up to take place before or after filtering, or sometimes the two operations are applied alternately on the input-event feeds. High-priority events must be dealt with first.

- Computation on event data. The data contained in events are used in computations. This step may involve computations carried out in parallel on the contents of the events. CEP allows any kind of algorithm to be applied at this step. In fact, a set of algorithms may be applied concurrently and the resulting new events put back into the event cloud for the next step.

- Event pattern detection. The event input is searched for the presence of patterns of events. An event pattern is a template that can match many different sets of multiple events, or, in the special case of single event patterns, it may match many different single events. It may require particular data to be present in events, or the events in a matching set to be related in various ways; for example, some events may be required to be causes of other events in the set, or to happen within a specific time window. An event pattern may also require some types of events to be absent. CEP suggests minimal requirements on the power of expression of a pattern language.

- Exception detection and handling. The event processing may also result in the detection of errors and anomalies, called exceptions. In CEP exceptions are detected by the presence or absence of events and patterns of events—for example, absence of an event pattern is detected when that pattern fails to match within defined time bounds. When exceptions occur, they are handled by creating new events that signify the specific error that has happened. Note that the absence of an event or event pattern often indicates an error within the system that is the source of the event input.

- Event pattern abstraction. Whenever a pattern of events is detected, the processing may take the action of creating new events that contain properties of, and data in, the set of events that matched that pattern. For example, a new event may contain a summary of the important data contained in several of the events in the set, or it may be a report signifying that a match of the pattern happened. One purpose of the new events is to summarize the important details of the matching events and to filter out or omit what is not important. Another purpose is to make sense for humans of large numbers of low-level events. The new events abstract the matched set of events.

- Event pattern–triggered processes. Reactive processes triggered by patterns of events are used for automating the actions a business or enterprise must take in response to specified patterns of events. Business processes must be set up to react to expected patterns of events. Understanding the enterprise’s operations is part of planning in advance and defining the reactive processes. This step requires planning for patterns of events that may happen and having solutions ready to take action. Typically, such systems are rule-based, each rule being an event pattern trigger and a business-process response to the trigger. It is important that the set of rules can be easily changed while the event processing system is operating.

- Computable event hierarchies. Events that happen in a particular kind of business operation or a specific technology usually fall into layers. Some events are low level, and some are higher-level events. The difference is that higher-level events are composed from sets of lower-level events. For example, a completed sale might consist of several events, such as negotiating a price, checking a customer’s credit rating, processing a down payment, updating inventory, and so on. The sale is at a higher abstraction level than the steps in negotiating it. Events within a specific area of activity can often be categorized into several layers. Some of the goals of event hierarchies are (1) to focus information for individual users and (2) to reduce the number of events that need to be processed at higher levels.
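Several of the concepts in this list (pattern detection, absence within a time bound, exception handling by creating new events) can be combined in one short sketch. The pattern here is "a bag is checked in, and no load event follows within a deadline." The event fields, the use of minutes as plain numbers, and the `bag_not_loaded` event type are all assumptions made for the example.

```python
def detect_missing_load(events, deadline, now):
    """Return new exception events for bags that were checked in but not
    loaded within `deadline` minutes. Times are minutes since some origin."""
    checked, loaded = {}, {}
    for event in events:
        if event["type"] == "bag_checked":
            checked[event["bag"]] = event
        elif event["type"] == "bag_loaded":
            loaded[event["bag"]] = event

    exceptions = []
    for bag, check in sorted(checked.items()):
        load = loaded.get(bag)
        on_time = load is not None and load["time"] - check["time"] <= deadline
        expired = now - check["time"] > deadline
        if expired and not on_time:
            # A new event signifies the error and stays traceable to its cause.
            exceptions.append({"type": "bag_not_loaded", "bag": bag,
                               "time": now, "caused_by": (check,)})
    return exceptions


events = [
    {"type": "bag_checked", "bag": "B1", "time": 0},
    {"type": "bag_checked", "bag": "B2", "time": 5},
    {"type": "bag_loaded", "bag": "B1", "time": 20},
    {"type": "bag_checked", "bag": "B3", "time": 50},
]
alerts = detect_missing_load(events, deadline=30, now=60)
print([a["bag"] for a in alerts])  # ['B2']
```

B3 has not been loaded either, but its deadline has not yet passed, so the absence is not yet an exception. This is why an absence pattern needs a time bound.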

There are several industry standard event hierarchies today, examples being the famous Open Systems Interconnection (OSI)⁸ seven-layer hierarchy of messaging operations, or the four-layer TCP/IP network protocol suite. Such classifications promote common terminology and interoperability of event processing systems, diagnostic systems, reuse of rule libraries, and so on. Event hierarchies are important in delivering relevant views of business operations to different role players in the enterprise, as we shall see.

In CEP, event hierarchies are computable. We’ll explain what this term means and its uses later. It is true that, as yet, little has been done in exploring the use of hierarchical classifications in commercial CEP. This is a point at which CEP theory goes beyond current commercial practice.
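Pending the fuller explanation in later chapters, one plausible reading of "computable" is that the higher-level events can be produced from the lower-level ones by explicit rules. Under that assumption, the sale example from the list above might be sketched as follows; the step names and the `sale_completed` event type are hypothetical.

```python
# Lower-level steps that together make up one higher-level sale event.
REQUIRED_STEPS = frozenset({
    "price_agreed",
    "credit_checked",
    "down_payment_processed",
    "inventory_updated",
})


def abstract_sales(low_level_events):
    """Create one 'sale_completed' event for each sale whose required
    lower-level steps have all been observed."""
    steps_by_sale = {}
    for event in low_level_events:
        steps_by_sale.setdefault(event["sale"], []).append(event)

    high_level = []
    for sale, steps in sorted(steps_by_sale.items()):
        if REQUIRED_STEPS <= {step["type"] for step in steps}:
            # The members field keeps the link down to the lower layer.
            high_level.append({"type": "sale_completed", "sale": sale,
                               "members": tuple(steps)})
    return high_level


low_level = [
    {"type": "price_agreed", "sale": "S1"},
    {"type": "credit_checked", "sale": "S1"},
    {"type": "price_agreed", "sale": "S2"},
    {"type": "down_payment_processed", "sale": "S1"},
    {"type": "inventory_updated", "sale": "S1"},
]
sales = abstract_sales(low_level)
print([(s["sale"], len(s["members"])) for s in sales])  # [('S1', 4)]
```

Five low-level events yield one high-level event, which illustrates both stated goals: a focused view for the user and fewer events to process at the higher level.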

Of course, there are interesting open questions where CEP concepts and techniques may need to be extended to process information in new types of event sources. One example is what kinds of event processing are needed to take advantage of public event sources such as the social networks Twitter and Facebook (see Example 3.7). These sources are sometimes the carriers of unexpected and important information, for example, (1) how various items are selling, or are viewed by the public; and (2) rumors and early indications of possible epidemic illnesses and outbreaks or public health breakdowns. Another example of a potentially rich event source is cell phone communications.

To deal with event processing in new event sources as they continue to arise, we may need to apply CEP in ways that have not yet been explored. The definition and use of probabilistic event patterns is one area that is yet to be explored both in theory and in event processing systems. And of course, there are always questions of privacy that perhaps go beyond the domain of technology such as CEP.

Example 3.7: Beware of What You Tweet!

An individual with a good medical insurance plan from his workplace reported a serious back injury to the insurance company. He claimed it necessitated his taking leave from work, and thus he required insurance compensation for loss of wages and medical costs.

During the processing of these claims, an insurance company adjuster Googled the individual and discovered Facebook and Twitter accounts in the individual’s name. The Twitter account revealed the claimant tweeting about his golf game during the time he was supposedly off work injured. Needless to say, the insurance company did not pay, and the individual was faced with fraud charges.

While this example resulted from a human-directed search, it may be expected that event processing applications in the area of fraud may do similar searches for information on the Web and other sources automatically in the future.

Discussion: Immutability of Events

Once an activity has happened, it is history. We cannot alter history, much as most of us would like to! An event object represents an activity that happened—it signifies that activity.

If we could alter the event object by changing its data parameters or its attributes, it would no longer signify that activity. It then becomes questionable as to what activity the altered event object would signify, if any at all. An altered event, therefore, has no known significance. And we cannot trace it back to anything that had significance, because that traceability to the original event is lost.

An event processor that alters the input events in situ is doing object processing, not event processing. The objects resulting from the processing have no significance, real or imaginary.

On the other hand, if a processor creates a new event from its input event by changing some of the data parameters or attributes of the input, and maintains traceability to the original event, then the new event does have significance. It is the result of operations on an event with significance. If the operations signify real-world activities, then the new event will also signify an activity.
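In code, this discipline can be approximated with an event type that rejects in-place changes and a helper that builds a modified copy pointing back to its original. The sketch below uses Python's frozen dataclasses; the `Event` type, the `derive` helper, and the field names are assumptions for illustration.

```python
from dataclasses import FrozenInstanceError, dataclass
from typing import Optional


@dataclass(frozen=True)
class Event:
    name: str
    data: tuple                       # (key, value) pairs, so contents are fixed too
    source: Optional["Event"] = None  # the event this one was derived from


def derive(original, name, **changes):
    """Create a new event from `original` with changed or added data,
    keeping a reference back to the original for traceability."""
    merged = dict(original.data)
    merged.update(changes)
    return Event(name, tuple(sorted(merged.items())), source=original)


e1 = Event("email_received", (("topic", "S"),))
e2 = derive(e1, "email_tracked", avg_hours=4)

try:
    e1.name = "altered"  # in-place alteration is refused
    altered = True
except FrozenInstanceError:
    altered = False

print(altered, e2.source is e1, e2.data)
```

The original event is still available, unchanged, through `e2.source`, so anything concluded from the new event can be traced back to what actually happened.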

Example 3.8: Enriching Events for Record Tracking

Suppose event E1(M, S, C, T) signifies that I received an email M on a topic S from a correspondent C at time T. I may be interacting with several correspondents on the same topic, a back and forth of arguments and data and counterarguments. And I may want to keep track of the timeliness with which various correspondents respond. A trace of the previous email on topic S with correspondent C will be contained in M. So I have a tracking system that creates a new tracking event E2 from E1 by adding to the data contained in E1, say,

E2(M, S, C, Avrg, T, T + ΔT)

where Avrg is the average time it takes C to respond to all my emails on topic S using the email trace in M, and T + ΔT is the time at which E2 is created.

I use the E2 events described in Example 3.8 to decide which correspondent to interact with next, depending upon who is likely to respond the most quickly. E2 is created by enriching the data in E1. It is traceable back to E1 and related to it by an enrichment operation. E2 signifies the average response time of correspondent C to a particular topic S at a particular time of day T. Of course, it doesn’t encode how much I agree or disagree with the correspondent, but I could add another enrichment parameter for that too!

The point of this example is that E2 is a different event from E1, created at a different time, and traceable back to E1. If, instead, I altered E1 by adding the Avrg to the parameters, it would cease to have any significance in the real world. It would no longer signify the email that C sent to me at time T. It would be an event only obliquely associated with that email. I would be doing bookkeeping in situ. I could still make sense of the altered events, but only with a dedicated event traceability system that depended upon the kinds of bookkeeping operations I was performing on them.
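The enrichment in Example 3.8 can be sketched with two immutable record types. The representation of the email trace in M as pairs of (time I sent, time C replied), the use of hours as plain numbers, and the `enrich` function are assumptions; the example in the text does not specify them.

```python
from typing import NamedTuple, Tuple


class E1(NamedTuple):
    M: Tuple[Tuple[float, float], ...]  # trace: (sent, replied) times in hours
    S: str                              # topic
    C: str                              # correspondent
    T: float                            # time the email was received


class E2(NamedTuple):
    M: Tuple[Tuple[float, float], ...]
    S: str
    C: str
    Avrg: float     # average response time of C on topic S
    T: float
    created: float  # T + delta_t, the creation time of E2
    source: E1      # traceability back to the original event


def enrich(e1, delta_t):
    """Create a new tracking event from e1; e1 itself is not changed."""
    delays = [replied - sent for sent, replied in e1.M]
    avrg = sum(delays) / len(delays)
    return E2(e1.M, e1.S, e1.C, avrg, e1.T, e1.T + delta_t, e1)


e1 = E1(M=((0.0, 2.0), (5.0, 9.0)), S="budget", C="alice", T=9.0)
e2 = enrich(e1, delta_t=0.5)
print(e2.Avrg, e2.created, e2.source is e1)  # 3.0 9.5 True
```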

This chapter describes basic concepts in event processing (EP) in general and outlines the concepts in complex event processing (CEP) in particular. It describes the concepts: event, event stream, event cloud, patterns of events, levels of events, and computable event hierarchies. The next chapter will outline the stages by which modern event processing developed.

But the reader may now be wondering, what is it all good for? Chapter 5 will deal with the current markets for CEP products and services, the size and growth of these markets, and how CEP is being applied. Later chapters deal with the basic concepts and strategies for their application in event processing in more depth. There is a final chapter (Chapter 9) on our vision of the future of event processing.

Notes

1 Carrick Mollenkamp and David Gauthier-Villars, “France to Fault Société Generale’s Controls in Report,” Wall Street Journal (Eastern Edition), February 4, 2008, A3. For more information on Jérôme Kerviel, see Nicola Clark, “Rogue Trader at Société Générale Gets 3 Years,” The New York Times, October 5, 2010. www.nytimes.com/2010/10/06/business/global/06bank.html?partner=rss&emc=rss

2 See also the terminology “virtual reality,” http://en.wikipedia.org/wiki/Virtual_reality

3 This terminology was coined in The Power of Events, section 2.1.2, p. 28.

4 Actually, these standards define services (functions) at each level. But operationally, calls to implementations of these functions would result in layers of events.

5 Comparing creation times of events may require adjustments for differences between the timers or clocks local to where those events were created.

6 The figures come from the fourth annual SITA Baggage Report compiled by specialist baggage tracking company SITA, whose technology is used by 400 airlines and ground handling companies around the world. www.sita.aero/about-sita

7 With the publication of The Power of Events.

8 Rachelle Miller, “The OSI Model: An Overview,” SANS Institute Reading Room. www.sans.org/reading_room/whitepapers/standards/osi-model-overview_543