7

Control Charts for Variables

- 7-1 Introduction and chapter objectives

- 7-2 Selection of characteristics for investigation

- 7-3 Preliminary decisions

- 7-4 Control charts for the mean and range

- 7-5 Control charts for the mean and standard deviation

- 7-6 Control charts for individual units

- 7-7 Control charts for short production runs

- 7-8 Other control charts

- 7-9 Risk-adjusted control charts

- 7-10 Multivariate control charts

- Summary

| Symbols | |||

| μ | Process (or population) mean | ki | Standardized value for range of sample number i |

| σ | Process (or population) standard deviation | σ0 | Target or standard value of process standard deviation |

| Estimate of process standard deviation | Standard deviation of the sample mean | ||

| Sample average | |||

| R | Sample range | Sm | Cumulative sum at sample number m |

| s | Sample standard deviation | w | Span, or width, in calculation of moving average |

| n | Sample or subgroup size | ||

| Xi | ith observation | Mean of sample means | |

| W | Relative range | Mean of sample ranges | |

| g | Number of samples or subgroups | Gt | Geometric moving average at time t |

| Target or standard value of process mean | Mt | Arithmetic moving average at time t | |

| Hotelling's T2 multivariate statistic | |||

| Zi | Standardized value for average of sample number i | MR | Moving range |

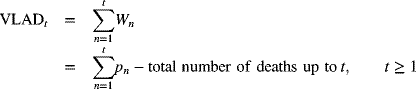

| pn | Predicted pre-operative mortality risk for patient n | Wn | Risk-adjusted weight function for patient n |

7-1 Introduction and Chapter Objectives

In Chapter 6 we introduced the fundamentals of control charts. In this chapter we look at the details of control charts for variables—quality characteristics that are measurable on a numerical scale. Examples of variables include length, thickness, diameter, breaking strength, temperature, acidity, viscosity, order-processing time, time to market a new product, and waiting time for service. We must be able to control the mean value of a quality characteristic as well as its variability. The mean gives an indication of the central tendency of a process, and the variability provides an idea of the process dispersion. Therefore, we need information about both of these statistics to keep a process in control.

Let's consider Figure 7-1. A change in the process mean of a quality characteristic (say, length of a part) is shown in Figure 7-1a, where the mean shifts from μ0 to μ1. It is, of course, important that this change be detected because if the specification limits are as shown in Figure 7-1a, a change in the process mean would change the proportion of parts that do not meet specifications. Figure 7-1b shows a change in the dispersion of the process; the process standard deviation has changed from σ0 to σ1, with the process mean remaining stationary at μ0. Note that the proportion of the output that does not meet specifications has increased. Control charts aid in detecting such changes in process parameters.

Figure 7-1 Changes in the mean and dispersion of a process.

Variables provide more information than attributes. Attributes deal with qualitative information such as whether an item is nonconforming or what the number of nonconformities in an item is. Thus, attributes do not show the degree to which a quality characteristic is nonconforming. For instance, if the specifications on the length of a part are 40 ± 0.5 mm and a part has length 40.6 mm, attribute information would indicate as nonconforming both this part and a part of length 42 mm. The degree to which these two lengths deviate from the specifications is lost in attribute information. This is not so with variables, however, because the numerical value of the quality characteristic (length, in this case) is used in creating the control chart.

The cost of obtaining variable data is usually higher than that for attributes because attribute data are collected by means such as go/no-go gages, which are easier to use and therefore less costly. The total cost of data collection is the sum of two components: the fixed cost and the variable unit cost. Fixed costs include the cost of the inspection equipment; variable unit costs include the cost of inspecting units. The more units inspected, the higher the variable cost, whereas the fixed cost is unaffected. As the use of automated devices for measuring quality characteristic values spreads, the difference in the variable unit cost between variables and attributes may not be much. However, the fixed costs, such as investment costs, may increase.

In health care applications, the severity of illness of patients, and consequently the pre-operative mortality risk for surgical patients in, say, an intensive care unit, may vary from patient to patient. Risk-adjusted control charts are introduced in this context. Some of the charts discussed are the risk-adjusted cumulative sum chart, the risk-adjusted sequential probability ratio test, the risk-adjusted exponentially weighted moving-average chart, and the variable life-adjusted display chart.

7-2 Selection of Characteristics for Investigation

In small organizations as well as in large ones, many possible product and process quality characteristics exist. A single component usually has several quality characteristics, such as length, width, height, surface finish, and elasticity. In fact, the number of quality characteristics that affect a product is usually quite large. Now multiply such a number by even a small number of products and the total number of characteristics quickly increases to an unmanageable value. It is normally not feasible to maintain a control chart for each possible variable.

Balancing feasibility and completeness of information is an ongoing task. Accomplishing it involves selecting a few vital quality characteristics from the many candidates. Selecting which quality characteristics to maintain control charts on requires giving higher priority to those that cause more nonconforming items and that increase costs. The goal is to select the “vital few” from among the “trivial many.” This is where Pareto analysis comes in because it clarifies the “important” quality characteristics.

When nonconformities occur because of different defects, the frequency of each defect can be tallied. Table 7-1 shows the Pareto analyses for various defects in an assembly. Alternatively, the cost of producing the nonconformity could be collected. Table 7-1 shows that the three most important defects are the inside hub diameter, the hub length, and the slot depth.

Table 7-1 Pareto Analysis of Defects for Assembly Data

| Defect Code | Defect | Frequency | Percentage |
| --- | --- | --- | --- |
| 1 | Outside diameter of hub | 30 | 8.82 |
| 2 | Depth of keyway | 20 | 5.88 |
| 3 | Hub length | 60 | 17.65 |
| 4 | Inside diameter of hub | 90 | 26.47 |
| 5 | Width of keyway | 30 | 8.82 |
| 6 | Thickness of flange | 40 | 11.77 |
| 7 | Depth of slot | 50 | 14.71 |
| 8 | Hardness (measured by Brinell hardness number) | 20 | 5.88 |

Using the percentages given in Table 7-1, we can construct a Pareto diagram like the one shown in Figure 7-2. The defects are thus shown in a nonincreasing order of occurrence. From the figure we can see that if we have only enough resources to construct three variable charts, we will choose inside hub diameter (code 4), hub length (code 3), and slot depth (code 7).

Figure 7-2 Pareto diagram for assembly data.
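The ranking behind the Pareto diagram can be sketched in a few lines of Python. The frequencies are copied from Table 7-1; the function name `pareto_order` and the cumulative-percentage column are illustrative additions, not part of the text.

```python
# Frequencies copied from Table 7-1: defect code -> (description, frequency).
defects = {
    1: ("Outside diameter of hub", 30),
    2: ("Depth of keyway", 20),
    3: ("Hub length", 60),
    4: ("Inside diameter of hub", 90),
    5: ("Width of keyway", 30),
    6: ("Thickness of flange", 40),
    7: ("Depth of slot", 50),
    8: ("Hardness", 20),
}

def pareto_order(table):
    """Return (code, name, percent, cumulative percent) rows, largest first."""
    total = sum(freq for _, freq in table.values())
    # sorted() is stable, so tied defects keep their original code order.
    ranked = sorted(table.items(), key=lambda item: item[1][1], reverse=True)
    rows, cumulative = [], 0.0
    for code, (name, freq) in ranked:
        percent = 100.0 * freq / total
        cumulative += percent
        rows.append((code, name, percent, cumulative))
    return rows

rows = pareto_order(defects)
top_three = [code for code, *_ in rows[:3]]  # candidates for variable charts

for code, name, percent, cumulative in rows:
    print(f"{code}  {name:<26} {percent:6.2f}  {cumulative:6.2f}")
```

With these data the three leading defect codes are 4, 3, and 7, which together account for roughly 59% of all recorded defects.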

Once quality characteristics for which control charts are to be maintained have been identified, a scheme for obtaining the data should be set up. Quite often, it is desirable to measure process characteristics that have a causal relationship to product quality characteristics. Process characteristics are typically controlled directly through control charts. In the assembly example of Table 7-1, we might decide to monitor process variables (cutting speed, depth of cut, and coolant temperature) that have an impact on hub diameter, hub length, and slot depth. Monitoring process variables through control charts implicitly controls product characteristics.

7-3 Preliminary Decisions

Certain decisions must be made before we can construct control charts. Several of these were discussed in detail in Chapter 6.

Selection of Rational Samples

The manner in which we sample the process deserves our careful attention. The sampling method should maximize differences between samples and minimize differences within samples. This means that separate control charts may have to be kept for different operators, machines, or vendors.

Lots from which samples are chosen should be homogeneous. As mentioned in Chapter 6, if our objective is to determine shifts in process parameters, samples should be made up of items produced at nearly the same time. This gives us a time reference and will be helpful if we need to determine special causes. Alternatively, if we are interested in the nonconformance of items produced since the previous sample was selected, samples should be chosen from items produced since that time.

Sample Size

Sample sizes are normally between 4 and 10, and it is quite common in industry to have sample sizes of 4 or 5. The larger the sample size, the better the chance of detecting small shifts. Other factors, such as cost of inspection or cost of shipping a nonconforming item to the customer, also influence the choice of sample size.

Frequency of Sampling

The sampling frequency depends on the cost of obtaining information compared to the cost of not detecting a nonconforming item. As processes are brought into control, the frequency of sampling is likely to diminish.

Choice of Measuring Instruments

The accuracy of the measuring instrument directly influences the quality of the data collected. Measuring instruments should be calibrated and tested for dependability under controlled conditions. Low-quality data lead to erroneous conclusions. The characteristic being controlled and the desired degree of measurement precision both have an impact on the choice of a measuring instrument. In measuring dimensions such as length, height, or thickness, something as simple as a set of calipers or a micrometer may be acceptable. On the other hand, measuring the thickness of silicon wafers may require complex optical sensory equipment.

Design of Data Recording Forms

Recording forms should be designed in accordance with the control chart to be used. Common features for data recording forms include the sample number, the date and time when the sample was selected, and the raw values of the observations. A column for comments about the process is also useful.

7-4 Control Charts for the Mean and Range

Development of the Charts

- Step 1: Using a pre-selected sampling scheme and sample size, record on the appropriate forms the measurements of the quality characteristic selected.

- Step 2: For each sample, calculate the sample mean and range using the following formulas:

(7-1)

(7-2)

(7-2)

where Xi represents the ith observation, n is the sample size, Xmax is the largest observation, and Xmin is the smallest observation.

- Step 3: Obtain and draw the centerline and the trial control limits for each chart. For the

-

chart, the centerline

-

chart, the centerline  is given by

(7-3)

is given by

(7-3)

where g represents the number of samples. For the R-chart, the centerline

is found from(7-4)

is found from(7-4)

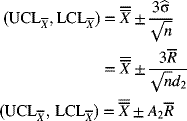

Conceptually, the 3σ control limits for the

-chart are

-chart areRather than compute σ

from the raw data, we can use the relation between the process standard deviation σ (or the standard deviation of the individual items) and the mean of the ranges

from the raw data, we can use the relation between the process standard deviation σ (or the standard deviation of the individual items) and the mean of the ranges  . Multiplying factors used to calculate the centerline and control limits are given in Appendix A-7. When sampling from a population that is normally distributed, the distribution of the statistic W = R/σ (known as the relative range) is dependent on the sample size n. The mean of W is represented by d2 and is tabulated in Appendix A-7. Thus, an estimate of the process standard deviation is

. Multiplying factors used to calculate the centerline and control limits are given in Appendix A-7. When sampling from a population that is normally distributed, the distribution of the statistic W = R/σ (known as the relative range) is dependent on the sample size n. The mean of W is represented by d2 and is tabulated in Appendix A-7. Thus, an estimate of the process standard deviation isThe control limits for an

-chart are therefore estimated as

-chart are therefore estimated aswhere

and is tabulated in Appendix A-7. Equation (7-7) is the working equation for determining the

and is tabulated in Appendix A-7. Equation (7-7) is the working equation for determining the  -chart control limits, given

-chart control limits, given  .

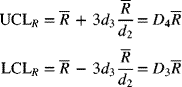

.The control limits for the R-chart are conceptually given by

(7-8)

Since R = σW, we have σR = σσw. In Appendix A-7, σw is tabulated as d3. Using eq. (7-6), we get

The control limits for the R-chart are estimated as

where

Equation (7-9) is the working equation for calculating the control limits for the R-chart. Values of D4 and D3 are tabulated in Appendix A-7.

- Step 4: Plot the values of the range on the control chart for range, with the centerline and the control limits drawn. Determine whether the points are in statistical control. If not, investigate the special causes associated with the out-of-control points (see the rules for this in Chapter 6) and take appropriate remedial action to eliminate special causes.

Typically, only some of the rules are used simultaneously. The most commonly used criterion for determining an out-of-control situation is the presence of a point outside the control limits.

An R-chart is usually analyzed before an $\bar{X}$-chart to determine out-of-control situations. An R-chart reflects process variability, which should be brought into control first. As shown by eq. (7-7), the control limits for an $\bar{X}$-chart involve the process variability and hence $\bar{R}$. Therefore, if an R-chart shows an out-of-control situation, the limits on the $\bar{X}$-chart may not be meaningful.

Let's consider Figure 7-3. On the R-chart, sample 12 plots above the upper control limit and so is out of control. The $\bar{X}$-chart, however, does not show the process to be out of control. Suppose that the special cause is identified as a problem with a new vendor who supplies raw materials and components. The task is to eliminate the cause, perhaps by choosing a new vendor or requiring evidence of statistical process control at the vendor's plant.

Figure 7-3 Plot of sample values on $\bar{X}$- and R-charts.

- Step 5: Delete the out-of-control point(s) for which remedial actions have been taken to remove special causes (in this case, sample 12) and use the remaining samples (here they are samples 1–11 and 13–15) to determine the revised centerline and control limits for the $\bar{X}$- and R-charts.

These limits are known as the revised control limits. The cycle of obtaining information, determining the trial limits, finding out-of-control points, identifying and correcting special causes, and determining revised control limits then continues. The revised control limits will serve as trial control limits for the immediate future until the limits are revised again. This ongoing process is a critical component of continuous improvement.

A point of interest regarding the revision of R-charts concerns observations that plot below the lower control limit, when the lower control limit is greater than zero. Such points that fall below LCLR are, statistically speaking, out of control; however, they are also desirable because they indicate unusually small variability within the sample which is, after all, one of our main objectives. It is most likely that such small variability is due to special causes.

If the user is convinced that the small variability does indeed represent the operating state of the process during that time, an effort should be made to identify the causes. If such conditions can be created consistently, process variability will be reduced. The process should be set to match those favorable conditions, and the observations should be retained for calculating the revised centerline and the revised control limits for the R-chart.

- Step 6: Implement the control charts.

The $\bar{X}$- and R-charts should be implemented for future observations using the revised centerline and control limits. The charts should be displayed in a conspicuous place where they will be visible to operators, supervisors, and managers. Statistical process control will be effective only if everyone is committed to it, from the operator to the chief executive officer.
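Steps 2 through 5 can be sketched in Python as follows. The measurements are hypothetical (eight subgroups of five part lengths in mm, invented for illustration), the factors A2, D3, and D4 are the standard tabulated values for n = 5, and the helper names `xbar_r_limits` and `out_of_control` are not from the text. Only Rule 1 (a point outside the control limits) is checked.

```python
# Tabulated control chart factors for subgroup size n = 5.
A2, D3, D4 = 0.577, 0.0, 2.114

def xbar_r_limits(samples):
    """Trial centerlines and 3-sigma limits, each as an (LCL, CL, UCL) tuple."""
    means = [sum(s) / len(s) for s in samples]    # eq. (7-1)
    ranges = [max(s) - min(s) for s in samples]   # eq. (7-2)
    xbarbar = sum(means) / len(means)             # eq. (7-3)
    rbar = sum(ranges) / len(ranges)              # eq. (7-4)
    return {
        "means": means,
        "ranges": ranges,
        "xbar": (xbarbar - A2 * rbar, xbarbar, xbarbar + A2 * rbar),  # eq. (7-7)
        "r": (D3 * rbar, rbar, D4 * rbar),                            # eq. (7-9)
    }

def out_of_control(values, limits):
    """Indices of points plotting outside the limits (Rule 1 only)."""
    lcl, _, ucl = limits
    return [i for i, v in enumerate(values) if v < lcl or v > ucl]

# Hypothetical data: subgroup index 4 has an unusually large spread.
samples = [
    [40.0, 40.2, 39.8, 40.1, 39.9],
    [40.1, 39.9, 40.0, 40.2, 39.8],
    [39.9, 40.1, 40.3, 40.0, 40.2],
    [40.0, 39.8, 40.1, 39.9, 40.2],
    [39.2, 40.9, 40.0, 39.5, 40.4],
    [40.2, 40.0, 39.9, 40.1, 39.8],
    [39.8, 40.0, 40.1, 39.9, 40.2],
    [40.1, 40.3, 39.9, 40.0, 40.2],
]

trial = xbar_r_limits(samples)
r_flags = out_of_control(trial["ranges"], trial["r"])    # Step 4: R-chart first
x_flags = out_of_control(trial["means"], trial["xbar"])

# Step 5: after the special cause has been removed, drop the flagged
# subgroups and recompute the centerlines and limits.
kept = [s for i, s in enumerate(samples) if i not in r_flags]
revised = xbar_r_limits(kept)
print(r_flags, x_flags, revised["r"], revised["xbar"])
```

In this made-up data set the R-chart flags one subgroup while the $\bar{X}$-chart flags none, which mirrors the situation described for Figure 7-3. Dropping a subgroup is appropriate only once its special cause has been identified and corrected.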

Variable Sample Size

So far, our sample size has been assumed to be constant. A change in the sample size has an impact on the control limits for the $\bar{X}$- and R-charts. It can be seen from eqs. (7-7) and (7-9) that an increase in the sample size n reduces the width of the control limits. For an $\bar{X}$-chart, the width of the control limits from the centerline is inversely proportional to the square root of the sample size. Appendix A-7 shows the pattern: the control chart factors A2 and D4 decrease with an increase in sample size, whereas D3 is zero for small samples (n ≤ 6) and increases thereafter, so the R-chart limits also move closer to the centerline.
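The effect of sample size on the factors can be checked numerically. The sketch below assumes the standard tabulated values of d2 and d3 for a normal population (rounded to three decimals) and recomputes A2, D3, and D4 from their definitions; the function name `chart_factors` is illustrative.

```python
from math import sqrt

# d2 and d3 for a normal population, keyed by sample size n (tabulated values).
D2_D3 = {
    2: (1.128, 0.853), 3: (1.693, 0.888), 4: (2.059, 0.880),
    5: (2.326, 0.864), 6: (2.534, 0.848), 7: (2.704, 0.833),
    8: (2.847, 0.820), 9: (2.970, 0.808), 10: (3.078, 0.797),
}

def chart_factors(n):
    """Return (A2, D3, D4) computed from d2 and d3 for sample size n."""
    d2, d3 = D2_D3[n]
    a2 = 3 / (d2 * sqrt(n))
    lower = max(0.0, 1 - 3 * d3 / d2)   # D3, truncated at zero
    upper = 1 + 3 * d3 / d2             # D4
    return a2, lower, upper

for n in sorted(D2_D3):
    a2, lower, upper = chart_factors(n)
    print(f"n={n:2d}  A2={a2:.3f}  D3={lower:.3f}  D4={upper:.3f}")
```

The printed values show A2 and D4 shrinking as n grows, while D3 stays at zero through n = 6 and then rises, so the band between the R-chart limits narrows relative to $\bar{R}$.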

Standardized Control Charts

When the sample size varies, the control limits on an $\bar{X}$- and an R-chart will change, as discussed previously. With fluctuating control limits, the rules for identifying out-of-control conditions we discussed in Chapter 6 become difficult to apply, with the exception of Rule 1 (which assumes a process to be out of control when an observation plots outside the control limits). One way to overcome this drawback is to use a standardized control chart. When we standardize a statistic, we subtract its mean from its value and divide this value by its standard deviation. The standardized values then represent the deviation from the mean in units of standard deviation. They are dimensionless and have a mean of zero. The control limits on a standardized chart are at ±3 and are therefore constant. It's easier to interpret shifts in the process from a standardized chart than from a chart with fluctuating control limits.

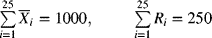

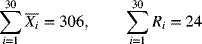

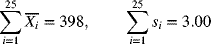

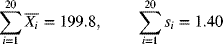

Let the sample size for sample i be denoted by ni, and let ![]() and si denote its average and standard deviation, respectively. The mean of the sample averages is found as

and si denote its average and standard deviation, respectively. The mean of the sample averages is found as

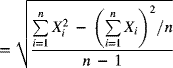

An estimate of the process standard deviation, ![]() , is the square root of the weighted average of the sample variances, where the weights are 1 less the corresponding sample sizes. So,

, is the square root of the weighted average of the sample variances, where the weights are 1 less the corresponding sample sizes. So,

Now, for sample i, the standardized value for the mean, Zi, is obtained from

where ![]() and

and ![]() are given by eqs. (7-10) and (7-11), respectively. A plot of the Zi-values on a control chart, with the centerline at 0, the upper control limit at 3, and the lower control limit at −3, represents a standardized control chart for the mean.

are given by eqs. (7-10) and (7-11), respectively. A plot of the Zi-values on a control chart, with the centerline at 0, the upper control limit at 3, and the lower control limit at −3, represents a standardized control chart for the mean.

To standardize the range chart, the range Ri for sample i is first divided by the estimate of the process standard deviation, ![]() , given by eq. (7-11), to obtain

, given by eq. (7-11), to obtain

The values of ri are then standardized by subtracting its mean d2 and dividing by its standard deviation d3 (Nelson 1989). The factors d2 and d3 are tabulated for various sample sizes in Appendix A-7. So, the standardized value for the range, ki, is given by

These values of ki are plotted on a control chart with a centerline at 0 and upper and lower control limits at 3 and −3, respectively.
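The standardization can be sketched in Python for subgroups of unequal size. The data are hypothetical, the d2 and d3 values are the standard tabulated ones for n = 3, 4, and 5, and the function name `standardize` is illustrative rather than taken from the text.

```python
from math import sqrt
from statistics import mean, variance

# d2 and d3 by sample size (tabulated values for a normal population).
D2_D3 = {3: (1.693, 0.888), 4: (2.059, 0.880), 5: (2.326, 0.864)}

def standardize(samples):
    """Return standardized means Z_i and standardized ranges k_i."""
    sizes = [len(s) for s in samples]
    means = [mean(s) for s in samples]
    # Weighted mean of the sample averages, eq. (7-10).
    grand_mean = sum(n * x for n, x in zip(sizes, means)) / sum(sizes)
    # Pooled estimate of the process standard deviation, eq. (7-11).
    pooled_var = (sum((n - 1) * variance(s) for n, s in zip(sizes, samples))
                  / sum(n - 1 for n in sizes))
    sigma_hat = sqrt(pooled_var)
    z = [(x - grand_mean) / (sigma_hat / sqrt(n)) for n, x in zip(sizes, means)]
    k = []
    for n, s in zip(sizes, samples):
        d2, d3 = D2_D3[n]                      # factors for this sample's size
        r = (max(s) - min(s)) / sigma_hat      # range in units of sigma_hat
        k.append((r - d2) / d3)
    return z, k

# Hypothetical subgroups with sizes 4, 3, 5, and 4.
samples = [
    [10.2, 9.8, 10.0, 10.1],
    [9.9, 10.3, 10.0],
    [10.1, 9.7, 10.0, 10.2, 9.9],
    [10.0, 10.2, 9.8, 10.1],
]
z, k = standardize(samples)
signals = [i for i, (zi, ki) in enumerate(zip(z, k)) if abs(zi) > 3 or abs(ki) > 3]
print(z, k, signals)
```

Both lists are compared against the same constant limits of ±3, which is what makes the run rules from Chapter 6 usable again when sample sizes differ.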

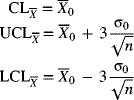

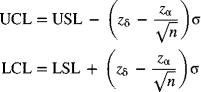

Control Limits for a Given Target or Standard

Management sometimes wants to specify values for the process mean and standard deviation. These values may represent goals or desirable standard or target values. Control charts based on these target values help determine whether the existing process is capable of meeting the desirable standards. Furthermore, they also help management set realistic goals for the existing process.

Let ![]() and σ0 represent the target values of the process mean and standard deviation, respectively. The centerline and control limits based on these standard values for the

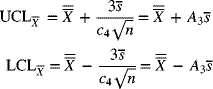

and σ0 represent the target values of the process mean and standard deviation, respectively. The centerline and control limits based on these standard values for the ![]() -chart are given by

-chart are given by

Let ![]() . Values for A are tabulated in Appendix A-7. Equation (7-15) may be rewritten as

. Values for A are tabulated in Appendix A-7. Equation (7-15) may be rewritten as

For the R-chart, the centerline is found as follows, Since ![]() , we have

, we have

where d2 is tabulated in Appendix A-7. The control limits are

where D2 = d2 + 3d3 (Appendix A-7) and σR = d3σ.

Similarly,

where D1 = d2 − 3d3 (Appendix A-7).
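As a rough numerical illustration, the target-based limits can be computed directly from the definitions of A, D1, and D2. The target values below (40 mm and 0.2 mm, with n = 5) are hypothetical, the function name `target_limits` is illustrative, and D1 is truncated at zero in the code, which is how the factor is usually tabulated when d2 − 3d3 is negative.

```python
from math import sqrt

# d2 and d3 by sample size (tabulated values for a normal population).
D2_D3 = {4: (2.059, 0.880), 5: (2.326, 0.864)}

def target_limits(x0, sigma0, n):
    """Return (LCL, CL, UCL) tuples for the X-bar and R charts given targets."""
    d2, d3 = D2_D3[n]
    a = 3 / sqrt(n)                      # factor A
    big_d1 = max(0.0, d2 - 3 * d3)       # D1, truncated at zero (assumption noted above)
    big_d2 = d2 + 3 * d3                 # D2
    xbar_chart = (x0 - a * sigma0, x0, x0 + a * sigma0)
    r_chart = (big_d1 * sigma0, d2 * sigma0, big_d2 * sigma0)
    return xbar_chart, r_chart

# Hypothetical targets: mean 40 mm, standard deviation 0.2 mm, subgroups of 5.
xbar_chart, r_chart = target_limits(x0=40.0, sigma0=0.2, n=5)
print(xbar_chart)
print(r_chart)
```

Points from the existing process can then be plotted against these limits to judge whether the stated targets are realistic.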

We must be cautious when we interpret control charts based on target or standard values. Sample observations can fall outside the control limits even though no special causes are present in the process. This is because these desirable standards may not be consistent with the process conditions. Thus, we could waste time and resources looking for special causes that do not exist.

On an $\bar{X}$-chart, plotted points can fall outside the control limits because a target process mean is specified as too high or too low compared to the existing process mean. Usually, it is easier to meet a desirable target value for the process mean than it is for the process variability. For example, adjusting the mean diameter or length of a part can often be accomplished by simply changing controllable process parameters. However, correcting for R-chart points that plot above the upper control limit is generally much more difficult.

An R-chart based on target values can also indicate excessive process variability without special causes present in the system. Therefore, meeting the target value σ0 may involve drastic changes in the process. Such an R-chart may be implying that the existing process is not capable of meeting the desired standard. This information enables management to set realistic goals.

Interpretation and Inferences from the Charts

The difficult part of analysis is determining and interpreting the special causes and selecting remedial actions. Effective use of control charts requires operators who are familiar with not only the statistical foundations of control charts but also the process itself. They must thoroughly understand how the different controllable parameters influence the dependent variable of interest. The quality assurance manager or analyst should work closely with the product design engineer and the process designer or analyst to come up with optimal policies.

In Chapter 6 we discussed five rules for determining out-of-control conditions. The presence of a point falling outside the 3σ limits is the most widely used of those rules. Determinations can also be made by interpreting typical plot patterns. Once the special cause is determined, this information plus a knowledge of the plot can lead to appropriate remedial actions.

Often, when the R-chart is brought to control, many special causes for the $\bar{X}$-chart are eliminated as well. The $\bar{X}$-chart monitors the centering of the process because $\bar{X}$ is a measure of the center. Thus, a jump on the $\bar{X}$-chart means that the process average has jumped, and an increasing trend indicates that the process center is gradually increasing. Process centering usually takes place through adjustments in machine settings or such controllable parameters as proper tool, proper depth of cut, or proper feed. On the other hand, reducing process variability to allow an R-chart to exhibit control is a difficult task that is accomplished through quality improvement.

Once a process is in statistical control, its capability can be estimated by calculating the process standard deviation. This measure can then be used to determine how the process performs with respect to some stated specification limits. The proportion of nonconforming items can be estimated. Depending on the characteristic being considered, some of the output may be reworked, while some may become scrap. Given the unit cost of rework and scrap, an estimate of the total cost of rework and scrap can be obtained. Process capability measures are discussed in more detail in Chapter 9. From an R-chart that exhibits control, the process standard deviation can be estimated as

$$\hat{\sigma} = \frac{\bar{R}}{d_2}$$

where $\bar{R}$ is the centerline and d2 is a factor tabulated in Appendix A-7. If the distribution of the quality characteristic can be assumed to be normal, then given some specification limits, the standard normal table can be used to determine the proportion of output that is nonconforming.
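A minimal sketch of this calculation, assuming a normally distributed characteristic: the process standard deviation is estimated from the R-chart centerline, and the normal distribution gives the tail areas beyond the specification limits. The numbers (centerline 40.0 mm, $\bar{R}$ = 0.4652, specifications 40 ± 0.5 mm, n = 5) are hypothetical, and `proportion_nonconforming` is an illustrative name.

```python
from statistics import NormalDist

def proportion_nonconforming(center, rbar, d2, lsl, usl):
    """Estimate sigma from R-bar/d2 and return (sigma_hat, fraction out of spec)."""
    sigma_hat = rbar / d2
    process = NormalDist(mu=center, sigma=sigma_hat)
    below = process.cdf(lsl)          # fraction below the lower specification
    above = 1 - process.cdf(usl)      # fraction above the upper specification
    return sigma_hat, below + above

# Hypothetical in-control process with subgroups of size 5 (d2 = 2.326).
sigma_hat, p_out = proportion_nonconforming(
    center=40.0, rbar=0.4652, d2=2.326, lsl=39.5, usl=40.5
)
print(f"sigma_hat = {sigma_hat:.3f}, nonconforming = {100 * p_out:.2f}%")
```

Here the specification limits sit 2.5 estimated standard deviations from the process center, so a little over 1% of output would be expected to fall outside them. Multiplying that fraction by the production volume and the unit cost of rework or scrap gives the cost estimate mentioned above.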

Control Chart Patterns and Corrective Actions

A nonrandom identifiable pattern in the plot of a control chart might provide sufficient reason to look for special causes in the system. Common causes of variation are inherent to a system; a system operating under only common causes is said to be in a state of statistical control. Special causes, however, could be due to periodic and persistent disturbances that affect the process intermittently. The objective is to identify the special causes and take appropriate remedial action.

Western Electric Company engineers have identified 15 typical patterns in control charts. Your ability to recognize these patterns will enable you to determine when action needs to be taken and what action to take (AT&T 1984). We discuss 9 of these patterns here.

Natural Patterns

A natural pattern is one in which no identifiable arrangement of the plotted points exists. No points fall outside the control limits, the majority of the points are near the centerline, and few points are close to the control limits. Natural patterns are indicative of a process that is in control; that is, they demonstrate the presence of a stable system of common causes. A natural pattern is shown in Figure 7-8.

Figure 7-8 Natural pattern for an in-control process on an $\bar{X}$-chart.

Sudden Shifts in the Level

Many causes can bring about a sudden change (or jump) in pattern level on an $\bar{X}$- or R-chart. Figure 7-9 shows a sudden shift on an $\bar{X}$-chart. Such jumps occur because of changes, intentional or otherwise, in such process settings as temperature, pressure, or depth of cut. A sudden change in the average service level, for example, could be a change in customer waiting time at a bank because the number of tellers changed. New operators, new equipment, new measuring instruments, new vendors, and new methods of processing are other reasons for sudden shifts on $\bar{X}$- and R-charts.

Figure 7-9 Sudden shift in pattern level on an $\bar{X}$-chart.

Gradual Shifts in the Level

Gradual shifts in level occur when a process parameter changes gradually over a period of time. Afterward, the process stabilizes. An $\bar{X}$-chart might exhibit such a shift because the incoming quality of raw materials or components changed over time, the maintenance program changed, or the style of supervision changed. An R-chart might exhibit such a shift because of a new operator, a decrease in worker skill due to fatigue or monotony, or a gradual improvement in the incoming quality of raw materials because a vendor has implemented a statistical process control system. Figure 7-10 shows an $\bar{X}$-chart exhibiting a gradual shift in the level.

Figure 7-10 Gradual shift in pattern level on an $\bar{X}$-chart.

Trending Pattern

Trends differ from gradual shifts in level in that trends do not stabilize or settle down. Trends represent changes that steadily increase or decrease. An $\bar{X}$-chart may exhibit a trend because of tool wear, die wear, gradual deterioration of equipment, buildup of debris in jigs and fixtures, or gradual change in temperature. An R-chart may exhibit a trend because of a gradual improvement in operator skill resulting from on-the-job training or a decrease in operator skill due to fatigue. Figure 7-11 shows a trending pattern on an $\bar{X}$-chart.

Figure 7-11 Trending pattern on an $\bar{X}$-chart.

Cyclic Patterns

Cyclic patterns are characterized by a repetitive periodic behavior in the system. Cycles of low and high points will appear on the control chart. An $\bar{X}$-chart may exhibit cyclic behavior because of a rotation of operators, periodic changes in temperature and humidity (such as a cold-morning startup), periodicity in the mechanical or chemical properties of the material, or seasonal variation of incoming components. An R-chart may exhibit cyclic patterns because of operator fatigue and subsequent energization following breaks, a difference between shifts, or periodic maintenance of equipment. Figure 7-12 shows a cyclic pattern for an $\bar{X}$-chart. If samples are taken too infrequently, only the high or the low points will be represented, and the graph will not exhibit a cyclic pattern. If control chart users suspect cyclic behavior, they should take samples frequently to investigate the possibility of a cyclic pattern.

Figure 7-12 Cyclic pattern on an $\bar{X}$-chart.

Wild Patterns

Wild patterns are divided into two categories: freaks and bunches (or groups). Control chart points exhibiting either of these two properties are, statistically speaking, significantly different from the other points. Special causes are generally associated with these points.

Freaks are caused by external disturbances that influence one or more samples. Figure 7-13 shows a control chart exhibiting a freak pattern. Freaks are plotted points too small or too large with respect to the control limits. Such points usually fall outside the control limits and are easily distinguishable from the other points on the chart. It is often not difficult to identify special causes for freaks. You should make sure, however, that there is no measurement or recording error associated with the freak point. Some special causes of freaks include sudden, very short-lived power failures; the use of a new tool for a brief test period; and the failure of a component.

Figure 7-13 Freak pattern on an $\bar{X}$-chart.

Bunches, or groups, are clusters of several observations that are decidedly different from other points on the plot. Figure 7-14 shows a control chart pattern exhibiting bunching behavior. Possible special causes of such behavior include the use of a new vendor for a short period of time, the use of a different machine for a brief time period, and the use of a new operator for a short period.

Figure 7-14 Bunching pattern on an $\bar{X}$-chart.

Mixture Patterns (or the Effect of Two or More Populations)

A mixture pattern is caused by the presence of two or more populations in the sample and is characterized by points that fall near the control limits, with an absence of points near the centerline. A mixture pattern can occur when one set of values is too high and another set too low because of differences in the incoming quality of material from two vendors. A remedial action would be to have a separate control chart for each vendor. Figure 7-15 shows a mixture pattern. On an $\bar{X}$-chart, a mixture pattern can also result from overcontrol. If an operator chooses to adjust the machine or process every time a point plots near a control limit, the result will be a pattern of large swings. Mixture patterns can also occur on both $\bar{X}$- and R-charts because of two or more machines being represented on the same control chart. Other examples include two or more operators being represented on the same chart, differences in two or more pieces of testing or measuring equipment, and differences in production methods of two or more lines.

Figure 7-15 Mixture pattern on an $\bar{X}$-chart.

Stratification Patterns

A stratification pattern is another possible result when two or more population distributions of the same quality characteristic are present. In this case, the output is combined, or mixed (say, from two shifts), and samples are selected from the mixed output. In this pattern, the majority of the points are very close to the centerline, with very few points near the control limits. Thus, the plot can be misinterpreted as indicating unusually good control. A stratification pattern is shown in Figure 7-16. Such a plot could have resulted from plotting data for samples composed of the combined output of two shifts, each different in its performance. It is possible for the sample average (which is really the average of parts chosen from both shifts) to fluctuate very little, resulting in a stratification pattern in the plot. Remedial measures in such situations involve having separate control charts for each shift. The method of choosing rational samples should be carefully analyzed so that component distributions are not mixed when samples are selected.

Figure 7-16 Stratification pattern on an $\bar{X}$-chart.

Interaction Patterns

An interaction pattern occurs when the level of one variable affects the behavior of other variables associated with the quality characteristic of interest. Furthermore, the combined effect of two or more variables on the output quality characteristic may be different from the individual effect of each variable. An interaction pattern can be detected by changing the scheme for rational sampling. Suppose that in a chemical process the temperature and pressure are two important controllable variables that affect the output quality characteristic of interest. A low pressure and a high temperature may produce a very desirable effect on the output characteristic, whereas a low pressure by itself may not have that effect. An effective sampling method would involve controlling the temperature at several high values and then determining the effect of pressure on the output characteristic for each temperature value. Samples composed of random combinations of temperature and pressure may fail to identify the interactive effect of those variables on the output characteristic. The control chart in Figure 7-17 shows interaction between variables. In the first plot, the temperature was maintained at level A; in the second plot, it was held at level B. Note that the average level and variability of the output characteristic change for the two temperature levels. Also, if the R-chart shows the sample ranges to be small, information regarding the interaction could be used to establish desirable process parameter settings.

Figure 7-17 Interaction pattern between variables on an $\bar{X}$-chart.

Control Charts for Other Variables

The control chart patterns described in this section also occur in control charts besides $\bar{X}$- and R-charts. When found in other types of control charts, these patterns may indicate different causes than those we discussed in this section, but similar reasoning can be used to determine them. Furthermore, both the preliminary considerations and the steps for constructing control charts described earlier also apply to other control charts.

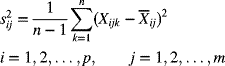

7-5 Control Charts for the Mean and Standard Deviation

Although an R-chart is easy to construct and use, a standard deviation chart (s-chart) is preferable for larger sample sizes (equal to or greater than 10, usually). As mentioned in Chapter 4, the range accounts for only the maximum and minimum sample values and consequently is less effective for large samples. The sample standard deviation serves as a better measure of process variability in these circumstances. The sample standard deviation is given by

$$s = \sqrt{\frac{\sum_{i=1}^{n} (X_i - \bar{X})^2}{n - 1}}$$

If the population distribution of a quality characteristic is normal with a population standard deviation denoted by σ, the mean and standard deviation of the sample standard deviation are given by

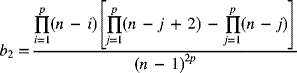

respectively, where c4 is a factor that depends on the sample size and is given by

$$c_4 = \sqrt{\frac{2}{n-1}}\;\frac{\Gamma(n/2)}{\Gamma\left((n-1)/2\right)}$$

Values of c4 are tabulated in Appendix A-7.

No Given Standards

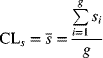

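The centerline of a standard deviation chart is

$$CL_s = \bar{s} = \frac{\sum_{i=1}^{g} s_i}{g}$$

where g is the number of samples and si is the standard deviation of the ith sample. The upper control limit is

$$UCL_s = \bar{s} + 3\sigma_s = \bar{s} + 3\sigma\sqrt{1 - c_4^2}$$

In accordance with eq. (7-41), an estimate of the population standard deviation σ is

$$\hat{\sigma} = \frac{\bar{s}}{c_4} \qquad (7\text{-}26)$$

Substituting this estimate of $\sigma$ in the preceding expression yields

$$UCL_s = \bar{s} + \frac{3\bar{s}\sqrt{1 - c_4^2}}{c_4} = B_4\bar{s}$$

where $B_4 = 1 + 3\sqrt{1 - c_4^2}/c_4$ and is tabulated in Appendix A-7. Similarly,

$$LCL_s = \bar{s} - \frac{3\bar{s}\sqrt{1 - c_4^2}}{c_4} = B_3\bar{s}$$

where $B_3 = \max\left[0,\; 1 - 3\sqrt{1 - c_4^2}/c_4\right]$ and is also tabulated in Appendix A-7. Thus, the 3σ control limits are

$$UCL_s = B_4\bar{s}, \qquad LCL_s = B_3\bar{s}$$

The centerline of the chart for the mean $\bar{X}$ is given by

$$CL_{\bar{X}} = \bar{\bar{X}} = \frac{\sum_{i=1}^{g} \bar{X}_i}{g}$$

The control limits on the $\bar{X}$-chart are

$$\bar{\bar{X}} \pm 3\sigma_{\bar{X}} = \bar{\bar{X}} \pm \frac{3\sigma}{\sqrt{n}}$$

Using eq. (7-26) to obtain $\hat{\sigma}$, we find the control limits to be

$$UCL_{\bar{X}} = \bar{\bar{X}} + \frac{3\bar{s}}{c_4\sqrt{n}} = \bar{\bar{X}} + A_3\bar{s}, \qquad LCL_{\bar{X}} = \bar{\bar{X}} - \frac{3\bar{s}}{c_4\sqrt{n}} = \bar{\bar{X}} - A_3\bar{s}$$

where $A_3 = 3/(c_4\sqrt{n})$ and is tabulated in Appendix A-7.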
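As an illustration of the no-standards case, the following minimal Python sketch computes trial limits for the s-chart and the $\bar{X}$-chart from equal-size subgroups. It assumes normally distributed data and computes c4 from the gamma function rather than reading it from Appendix A-7. The function names `c4` and `xbar_s_limits` are hypothetical, chosen only for this example.

```python
import math


def c4(n):
    """Factor c4 for subgroup size n, assuming a normal population."""
    return math.sqrt(2.0 / (n - 1)) * math.gamma(n / 2.0) / math.gamma((n - 1) / 2.0)


def xbar_s_limits(samples):
    """Return (LCL, CL, UCL) for the s-chart and the X-bar chart.

    `samples` is a list of subgroups, each a list of n observations.
    """
    n = len(samples[0])
    g = len(samples)
    means = [sum(s) / n for s in samples]
    sds = [
        math.sqrt(sum((x - m) ** 2 for x in s) / (n - 1))
        for s, m in zip(samples, means)
    ]
    s_bar = sum(sds) / g          # centerline of the s-chart
    x_dbar = sum(means) / g       # centerline of the X-bar chart

    c = c4(n)
    spread = 3.0 * math.sqrt(1.0 - c * c) / c
    b3 = max(0.0, 1.0 - spread)   # lower factor, truncated at zero
    b4 = 1.0 + spread
    a3 = 3.0 / (c * math.sqrt(n))

    return {
        "s": (b3 * s_bar, s_bar, b4 * s_bar),
        "xbar": (x_dbar - a3 * s_bar, x_dbar, x_dbar + a3 * s_bar),
    }


# Illustrative (made-up) data: two subgroups of size 5.
limits = xbar_s_limits([[1, 2, 3, 4, 5], [2, 3, 4, 5, 6]])
print(limits)
```

In practice far more than two subgroups would be used. The tiny data set here only keeps the arithmetic easy to check by hand.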

The process of constructing trial control limits, determining special causes associated with out-of-control points, taking remedial actions, and finding the revised control limits is similar to that explained in the section on $\bar{X}$- and R-charts. The s-chart is constructed first. Only if it is in control should the $\bar{X}$-chart be developed, because the standard deviation of $\bar{X}$ is dependent on $\bar{s}$. If the s-chart is not in control, any estimate of the standard deviation of $\bar{X}$ will be unreliable, which will in turn create unreliable control limits for $\bar{X}$.

Given Standard

If a target standard deviation is specified as σ0, the centerline of the s-chart is found by using eq. (7-22) as

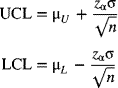

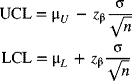

The upper control limit for the s-chart is found by using eq. (7-23) as

$$UCL_s = c_4\sigma_0 + 3\sigma_s = c_4\sigma_0 + 3\sigma_0\sqrt{1 - c_4^2} = B_6\sigma_0$$

where $B_6 = c_4 + 3\sqrt{1 - c_4^2}$ and is tabulated in Appendix A-7. Similarly, the lower control limit for the s-chart is

$$LCL_s = c_4\sigma_0 - 3\sigma_0\sqrt{1 - c_4^2} = B_5\sigma_0$$

where $B_5 = \max\left[0,\; c_4 - 3\sqrt{1 - c_4^2}\right]$ and is tabulated in Appendix A-7. Thus, the control limits for the s-chart are

$$UCL_s = B_6\sigma_0, \qquad LCL_s = B_5\sigma_0$$

If a target value for the mean is specified as $\bar{X}_0$, the centerline is given by

$$CL_{\bar{X}} = \bar{X}_0$$

Equations for the control limits will be the same as those given by eq. (7-16) in the section on $\bar{X}$- and R-charts:

$$UCL_{\bar{X}} = \bar{X}_0 + A\sigma_0, \qquad LCL_{\bar{X}} = \bar{X}_0 - A\sigma_0$$

where $A = 3/\sqrt{n}$ and is tabulated in Appendix A-7.
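When standards are given, the limits depend only on the subgroup size and the target values. The sketch below, with the hypothetical function name `standard_given_limits`, computes the factors B5, B6, and A directly from c4 instead of looking them up. It assumes a normal population and targets supplied by the user.

```python
import math


def c4(n):
    """Factor c4 for subgroup size n, assuming a normal population."""
    return math.sqrt(2.0 / (n - 1)) * math.gamma(n / 2.0) / math.gamma((n - 1) / 2.0)


def standard_given_limits(n, xbar0, sigma0):
    """Return (LCL, CL, UCL) for the s-chart and X-bar chart with given standards."""
    c = c4(n)
    root = math.sqrt(1.0 - c * c)
    b5 = max(0.0, c - 3.0 * root)   # lower factor, truncated at zero
    b6 = c + 3.0 * root
    a = 3.0 / math.sqrt(n)
    return {
        "s": (b5 * sigma0, c * sigma0, b6 * sigma0),
        "xbar": (xbar0 - a * sigma0, xbar0, xbar0 + a * sigma0),
    }


# Illustrative targets (assumed values, not from the text).
print(standard_given_limits(n=10, xbar0=50.0, sigma0=2.0))
```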

7-6 Control Charts for Individual Units

For some situations in which the rate of production is low, it is not feasible for a sample size to be greater than 1. Additionally, if the testing process is destructive and the item is expensive, the sample size might be chosen to be 1. Furthermore, if every manufactured unit from a process is inspected, the sample size is essentially 1. Service applications in marketing and accounting often have a sample size of 1.

In a control chart for individual units, for which the value of the quality characteristic is represented by X, the variability of the process is estimated from the moving range (MR), found from two successive observations. The moving range of two observations is simply the result of subtracting the lesser value from the greater. Moving ranges are correlated because they use common rather than independent values in their calculations. That is, the moving range of observations 1 and 2 correlates with the moving range of observations 2 and 3. Because they are correlated, the pattern of the MR-chart must be interpreted carefully. Nor can we assume, as we have in previous control charts, that X-values in a chart for individuals will be normally distributed. So we must first check the distribution of the individual values. To do this, we might conduct an initial analysis using frequency histograms to identify the shape of the distribution, its skewness, and its kurtosis. Alternatively, we could conduct a test for normality. This information will tell us whether we can make the assumption of a normal distribution when we establish the control limits.

No Given Standards

An estimate of the process standard deviation is given by

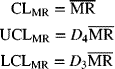

where $\overline{MR}$ is the average of the moving ranges of successive observations. Note that if we have a total of g individual observations, there will be g − 1 moving ranges. The centerline and control limits of the MR-chart are

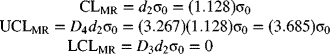

For n = 2, D4 = 3.267 and D3 = 0, so the control limits become

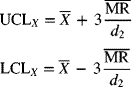

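The centerline of the X-chart is

$$CL_X = \bar{X}$$

The control limits of the X-chart are

$$UCL_X = \bar{X} + \frac{3\overline{MR}}{d_2}, \qquad LCL_X = \bar{X} - \frac{3\overline{MR}}{d_2}$$

where (for n = 2) Appendix A-7 gives d2 = 1.128.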
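The calculations for the no-standards X- and MR-charts can be sketched in a few lines of Python. The example assumes moving ranges of two successive observations, so d2 = 1.128 and D4 = 3.267, with D3 = 0. The function name `individuals_limits` and the data are hypothetical.

```python
D2 = 1.128   # d2 for n = 2
D4 = 3.267   # D4 for n = 2 (D3 = 0, so the lower MR limit is 0)


def individuals_limits(x):
    """Return trial (LCL, CL, UCL) for the MR-chart and the X-chart."""
    # g observations give g - 1 moving ranges of successive values.
    moving_ranges = [abs(b - a) for a, b in zip(x, x[1:])]
    mr_bar = sum(moving_ranges) / len(moving_ranges)
    x_bar = sum(x) / len(x)
    sigma_hat = mr_bar / D2   # estimate of the process standard deviation
    return {
        "mr": (0.0, mr_bar, D4 * mr_bar),
        "x": (x_bar - 3.0 * sigma_hat, x_bar, x_bar + 3.0 * sigma_hat),
        "sigma_hat": sigma_hat,
    }


# Illustrative (made-up) individual measurements.
print(individuals_limits([10, 12, 11, 13, 12]))
```

Because the X-chart limits rest on a normality assumption, a histogram or normality test of the individual values would normally precede this calculation.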

Given Standard

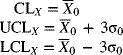

The preceding derivation is based on the assumption that no standard values are given for either the mean or the process standard deviation. If standard values are specified as $\bar{X}_0$ and σ0, respectively, the centerline and control limits of the X-chart are

$$CL_X = \bar{X}_0, \qquad UCL_X = \bar{X}_0 + 3\sigma_0, \qquad LCL_X = \bar{X}_0 - 3\sigma_0$$

Assuming n = 2, the MR-chart for standard values has the following centerline and control limits:

$$CL_{MR} = d_2\sigma_0 = 1.128\sigma_0, \qquad UCL_{MR} = D_4 d_2\sigma_0 = 3.685\sigma_0, \qquad LCL_{MR} = D_3 d_2\sigma_0 = 0$$

One advantage of an X-chart is the ease with which it can be understood. It can also be used to judge the capability of a process by plotting the upper and lower specification limits on the chart itself. However, it has several disadvantages compared to an $\bar{X}$-chart. An X-chart is not as sensitive to changes in the process parameters. It typically requires more samples to detect parametric changes of the same magnitude. The main disadvantage of an X-chart, though, is that the control limits can become distorted if the individual items don't fit a normal distribution.

7-7 Control Charts for Short Production Runs

Organizations, both manufacturing and service, are faced with short production runs for several reasons. Product specialization and being responsive to customer needs are two important reasons. Consider a company that assembles computers based on customer orders. There is no guarantee that the next 50 orders will be for a computer with the same hardware and software features.

$\bar{X}$- and R-Charts for Short Production Runs

Where different parts may be produced in the short run, one approach is to use the deviation from the nominal value as the modified observations. The nominal value may vary from part to part. So, the deviation of the observed value Oi from the nominal value N is given by

$$X_i = O_i - N$$

The procedure for the construction of the $\bar{X}$- and R-charts is the same as before using the modified observations, Xi. Different parts are plotted on the same control chart so as to have the minimum information (usually, at least 20 samples) required to construct the charts, even though for each part there are not enough samples to justify construction of a control chart.

Several assumptions are made in this approach. First, it is assumed that the process standard deviation is approximately the same for all the parts. Second, it is assumed that a nominal value is specified for each part. When a nominal value is not specified (which is often the case for characteristics that have one-sided specifications, such as breaking strength), the process average based on historical data may have to be used instead.
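The deviation-from-nominal transformation is simple to sketch in code. The example below assumes each subgroup is tagged with a part identifier and that a nominal value is known for every part. The function name `short_run_subgroups`, the part labels, and the data are all hypothetical.

```python
def short_run_subgroups(subgroups, nominals):
    """Return subgroup means and ranges of deviations from nominal.

    `subgroups` is a list of (part_id, [observed values]) in production order;
    `nominals` maps each part_id to its nominal value N.
    """
    means, ranges = [], []
    for part, observed in subgroups:
        # Modified observations: X_i = O_i - N for the part being produced.
        deviations = [o - nominals[part] for o in observed]
        means.append(sum(deviations) / len(deviations))
        ranges.append(max(deviations) - min(deviations))
    return means, ranges


nominals = {"A": 10.0, "B": 25.0}
subgroups = [("A", [10.5, 9.5, 10.0]), ("B", [25.5, 25.0, 24.5])]
means, ranges = short_run_subgroups(subgroups, nominals)
print(means, ranges)
```

The resulting means and ranges from all parts can then be pooled and charted with the usual $\bar{X}$- and R-chart formulas, which is reasonable only if the parts share approximately the same process standard deviation.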

Z-MR Chart

When data on individual units are obtained for the quality characteristic, one approach is to construct a standardized control chart for individuals (Z-chart) and a moving-range (MR) chart. The standardized value is given by

$$Z = \frac{\text{individual value} - \text{process mean}}{\text{process standard deviation}}$$

The moving range is calculated from the standardized values using a span of length 2. Depending on how each group (part or product) is defined, the process standard deviation for group i is estimated by

$$\hat{\sigma}_i = \frac{\overline{MR}_i}{d_2}$$

where $\overline{MR}_i$ represents the average moving range for group i and d2 is a control chart factor found from Appendix A-7. Minitab provides several options for selecting computation of the process mean and process standard deviation. For each group (part or product), the mean of the observations in that group could be used as an estimate of the process mean for that group. Alternatively, historical values of estimates may be specified as an option.

In estimating the process standard deviation for each group (part or product), Minitab provides options for defining groups as follows: by runs; by parts, where all observations on the same part are combined in one group; constant (combine all observations for all parts in one group); and relative to size (transform the original data by taking the natural logarithm and then combine all into one group).

The relative-to-size option assumes that variability increases with the magnitude of the quality characteristic. The natural logarithm transformation stabilizes the variance. A common estimate ($\hat{\sigma}$) of the process standard deviation is obtained from the transformed data. The constant option that pools all data assumes that the variability associated with all groups is the same, implying that product or part type or characteristic size has no influence. This option must be used only if there is enough information to justify the assumption. It produces a single estimate ($\hat{\sigma}$) of the common process standard deviation. The option of pooling by parts assumes that all runs of a particular part have the same variability. It produces an estimate ($\hat{\sigma}_i$) of the process standard deviation for each part group. Finally, the option of pooling by runs assumes that part variability may change from run to run. It produces an estimate of the process standard deviation for each run, independently.
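To make the by-parts option concrete, here is a rough Python sketch of the standardization step. It is not a reproduction of Minitab's implementation. It simply assumes that each part's mean is estimated by the average of that part's observations, that each part's standard deviation is estimated by its average moving range (span 2) divided by d2 = 1.128, and that every part has at least two observations with some variation. The function name `z_mr_by_part` and the data are hypothetical.

```python
from collections import defaultdict

D2 = 1.128  # d2 for moving ranges of span 2


def z_mr_by_part(data):
    """Return standardized values and their moving ranges.

    `data` is a list of (part_id, value) pairs in production order.
    """
    groups = defaultdict(list)
    for part, value in data:
        groups[part].append(value)

    # Per-part estimates of the process mean and standard deviation.
    stats = {}
    for part, values in groups.items():
        mean = sum(values) / len(values)
        mrs = [abs(b - a) for a, b in zip(values, values[1:])]
        sigma_hat = (sum(mrs) / len(mrs)) / D2  # fails if a part never varies
        stats[part] = (mean, sigma_hat)

    z = [(value - stats[part][0]) / stats[part][1] for part, value in data]
    mr_z = [abs(b - a) for a, b in zip(z, z[1:])]
    return z, mr_z


data = [("A", 10), ("A", 12), ("A", 11), ("B", 100), ("B", 104), ("B", 102)]
z, mr_z = z_mr_by_part(data)
print(z)
print(mr_z)
```

Since the standardized values have a nominal mean of 0 and standard deviation of 1, the given-standard formulas with σ0 = 1 suggest a Z-chart centerline of 0 with limits at ±3.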

7-8 Other Control Charts

In previous sections we have examined commonly used control charts. Now we look at several other control charts. These charts are specific to certain situations. Procedures for constructing $\bar{X}$- and R-charts and interpreting their patterns apply to these charts as well, so they are not repeated here.

Cumulative Sum Control Chart for the Process Mean

In Shewhart control charts such as the $\bar{X}$- and R-charts, a plotted point represents information corresponding to that observation only. It does not use information from previous observations. On the other hand, a cumulative sum chart, usually called a cusum chart, uses information from all of the prior samples by displaying the cumulative sum of the deviation of the sample values (e.g., the sample mean) from a specified target value.

The cumulative sum at sample number m is given by

$$S_m = \sum_{i=1}^{m} (\bar{X}_i - \mu_0) \qquad (7\text{-}42)$$

where $\bar{X}_i$ is the sample mean for sample i and μ0 is the target mean of the process.

Cusum charts are more effective than Shewhart control charts in detecting relatively small shifts in the process mean (of magnitude $0.5\sigma_{\bar{X}}$ to about $2\sigma_{\bar{X}}$). A cusum chart uses information from previous samples, so the effect of a small shift is more pronounced. For situations in which the sample size n is 1 (say, when each part is measured automatically by a machine), the cusum chart is better suited than a Shewhart control chart to determining shifts in the process mean. Because of the magnified effect of small changes, process shifts are easily found by locating the point where the slope of the plotted cusum pattern changes.

There are some disadvantages to using cusum charts, however. First, because the cusum chart is designed to detect small changes in the process mean, it can be slow to detect large changes in the process parameters. A decision criterion designed to do well under a specific situation will not necessarily perform equally well under different situations. Details on modifying the decision process for a cusum chart to detect large shifts can be found in Hawkins, Lucas (1976, 1982), and Woodall and Adams (1993). Second, the cusum chart is not an effective tool in analyzing the historical performance of a process to see whether it is in control or to bring it in control. Thus, these charts are typically used for well-established processes that have a history of being stable.

Recall that for Shewhart control charts the individual points are assumed to be uncorrelated. Cumulative values are, however, related. That is, Si−1 and Si are related because $S_i = S_{i-1} + (\bar{X}_i - \mu_0)$. It is therefore possible for a cusum chart to exhibit runs or other patterns as a result of this relationship. The rules for describing out-of-control conditions based on the plot patterns of Shewhart charts may therefore not be applicable to cusum charts. Finally, training workers to use and maintain cusum charts may be more costly than for Shewhart charts.

Cumulative sum charts can model the proportion of nonconforming items, the number of nonconformities, the individual values, the sample range, the sample standard deviation, or the sample mean. In this section we focus on their ability to detect shifts in the process mean.

Suppose that the target value of a process mean when the process is in control is denoted by μ0. If the process mean shifts upward to a higher value μ1, an upward drift will be observed in the value of the cusum Sm given by eq. (7-42) because the old lower value μ0 is still used in the equation even though the X-values are now higher. Similarly, if the process mean shifts to a lower value μ2, a downward trend will be observed in Sm. The task is to determine whether the trend in Sm is significant so that we can conclude that a change has taken place in the process mean.

In the situation where individual observations (n = 1) are collected from a process to monitor the process mean, eq. (7-42) becomes

$$S_m = \sum_{i=1}^{m} (X_i - \mu_0) = S_{m-1} + (X_m - \mu_0)$$

where S0 = 0.
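The running sum is easy to compute, as the following minimal Python sketch shows. The function name `cusum` and the data are hypothetical. The same function applies to sample means or to individual observations, since only the deviations from the target are accumulated.

```python
from itertools import accumulate


def cusum(values, target):
    """Return the cumulative sums S_1, ..., S_m of deviations from the target."""
    return list(accumulate(v - target for v in values))


# Illustrative (made-up) observations with a target mean of 10.
print(cusum([10, 11, 9, 12, 13], target=10))  # prints [0, 1, 0, 2, 5]
```

In this small example the last two observations sit above the target, and the cusum rises steadily, which is the kind of slope change the chart is meant to reveal.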

Tabular Method

Let us first consider the case of individual observations (Xi) being drawn from a process with mean μ0 and standard deviation σ. When the process is in control, we assume that $X_i \sim N(\mu_0, \sigma)$. In the tabular cusum method, deviations above μ0 are accumulated with a statistic S+, and deviations below μ0 are accumulated with a statistic S−. These two statistics, S+ and S−, are labeled one-sided upper and lower cusums, respectively, and are given by

$$S_m^+ = \max\left[0,\; X_m - (\mu_0 + K) + S_{m-1}^+\right] \qquad (7\text{-}44)$$

$$S_m^- = \max\left[0,\; (\mu_0 - K) - X_m + S_{m-1}^-\right] \qquad (7\text{-}45)$$

where S0+ = S0− = 0.

The parameter K in eqs. (7-44) and (7-45) is called the allowable slack in the process and is usually chosen as halfway between the target value μ0 and the shifted value μ1 that we are interested in detecting. Expressing the shift (δ) in standard deviation units, we have $\delta = |\mu_1 - \mu_0|/\sigma$, leading to

$$K = \frac{|\mu_1 - \mu_0|}{2} = \frac{\delta\sigma}{2} \qquad (7\text{-}46)$$

Thus, examining eqs. (7-44) and (7-45), we find that Sm+ and Sm− accumulate deviations from the target value μ0 that are greater than K. Both are reset to zero upon becoming negative. In practice, K = kσδ, where k is in units of standard deviation. In eq. (7-46), k = 0.5.

A second parameter in the decision-making process using cusums is the decision interval H, to determine out-of-control conditions. As before, we set H = hσ, where h is in standard deviation units. When the value of Sm+ or Sm− plots beyond H, the process will be considered to be out of control. When k = 0.5, a reasonable value of h is 5 (in standard deviation units), which ensures a small average run length for shifts of the magnitude of one standard deviation that we wish to detect (Hawkins 1993). It can be shown that for a small value of β, the probability of a type II error, the decision interval is given by

Thus, if sample averages are used to construct cusums in the above procedures, σ2 will be replaced by σ2/n in eq. (7-47), assuming samples of size n.

To determine when the shift in the process mean was most likely to have occurred, we will monitor two counters, N+ and N−. The counter N+ notes the number of consecutive periods that Sm+ is above zero, whereas N− tracks the number of consecutive periods that Sm− is above zero. When an out-of-control condition is detected, one can count backward from this point to the time period when the cusum was above zero to find the first period in which the process probably shifted. An estimate of the new process mean may be obtained from

$$\hat{\mu} = \mu_0 + K + \frac{S_m^+}{N^+} \quad \text{if } S_m^+ > H$$

or from

$$\hat{\mu} = \mu_0 - K - \frac{S_m^-}{N^-} \quad \text{if } S_m^- > H$$
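The tabular procedure can be sketched as a short Python routine. This is an illustrative sketch rather than a definitive implementation. It takes the slack K and the decision interval H directly in the units of the data, uses the usual recursions S+ = max[0, X − (μ0 + K) + previous S+] and S− = max[0, (μ0 − K) − X + previous S−], and, on a signal, estimates the new mean as μ0 + K + S+/N+ (or μ0 − K − S−/N−). The function name `tabular_cusum` and the data are hypothetical.

```python
def tabular_cusum(x, mu0, K, H):
    """Run a two-sided tabular cusum on individual observations.

    Returns one row per period: (m, S_plus, N_plus, S_minus, N_minus, signal),
    where signal is None or (direction, estimated_mean, first_period_of_run).
    """
    s_plus = s_minus = 0.0
    n_plus = n_minus = 0
    rows = []
    for m, xm in enumerate(x, start=1):
        s_plus = max(0.0, xm - (mu0 + K) + s_plus)
        s_minus = max(0.0, (mu0 - K) - xm + s_minus)
        # Counters track consecutive periods with a positive cusum.
        n_plus = n_plus + 1 if s_plus > 0 else 0
        n_minus = n_minus + 1 if s_minus > 0 else 0

        signal = None
        if s_plus > H:
            signal = ("up", mu0 + K + s_plus / n_plus, m - n_plus + 1)
        elif s_minus > H:
            signal = ("down", mu0 - K - s_minus / n_minus, m - n_minus + 1)
        rows.append((m, s_plus, n_plus, s_minus, n_minus, signal))
    return rows


# Made-up data: target 10, sigma assumed to be 1, so K = 0.5 and H = 5.
for row in tabular_cusum([10, 10, 10, 12, 12, 12, 12, 12], mu0=10, K=0.5, H=5.0):
    print(row)
```

With these made-up data the upper cusum first exceeds H at period 7, after four consecutive positive periods, so the run began at period 4 and the estimated new mean is 10 + 0.5 + 6.0/4 = 12.0.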

V-Mask Method

In the V-mask approach, a template known as a V-mask, proposed by Barnard (1959), is used to determine a change in the process mean through the plotting of cumulative sums. Figure 7-23 shows a V-mask, which has two parameters, the lead distance d and the angle θ of each decision line with respect to the horizontal. The V-mask is positioned such that point P coincides with the last plotted value of the cumulative sum and line OP is parallel to the horizontal axis. If the values plotted previously are within the two arms of the V-mask (that is, between the upper decision line and the lower decision line), the process is judged to be in control. If any value of the cusum lies outside the arms of the V-mask, the process is considered to be out of control.

Figure 7-23 V-mask for making decisions with cumulative sum charts.

In Figure 7-23, notice that a strong upward shift in the process mean is visible for sample 5. This shift makes sense given the fact that the cusum value for sample 1 is below the lower decision line, indicating an out-of-control situation. Similarly, the presence of a plotted value above the upper decision line indicates a downward drift in the process mean.

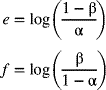

Determination of V-Mask Parameters

The two parameters of a V-mask, d and θ, are determined based on the levels of risk that the decision maker is willing to tolerate. These risks are the type I and type II errors described in Chapter 6. The probability of a type I error, α, is the risk of concluding that a process is out of control when it is really in control. The probability of a type II error, β, is the risk of failing to detect a change in the process parameter and concluding that the process is in control when it is really out of control. Let $\Delta$ denote the amount of shift in the process mean that we want to be able to detect and $\sigma_{\bar{X}}$ denote the standard deviation of $\bar{X}$. Next, consider the equation

$$\delta = \frac{\Delta}{\sigma_{\bar{X}}}$$

where δ represents the degree of shift in the process mean, relative to the standard deviation of the mean, that we wish to detect. Then, the lead distance for the V-mask is given by

$$d = \frac{2}{\delta^2}\,\ln\!\left(\frac{1-\beta}{\alpha}\right) \qquad (7\text{-}51)$$

If the probability of a type II error, β, is selected to be small, then eq. (7-51) reduces to

$$d = -\frac{2}{\delta^2}\,\ln\alpha$$

The angle of each decision line with respect to the horizontal is obtained from

$$\theta = \tan^{-1}\!\left(\frac{\Delta}{2k}\right)$$

where k is a scale factor representing the ratio of a vertical-scale unit to a horizontal-scale unit on the plot. The value of k should be between $\sigma_{\bar{X}}$ and $2\sigma_{\bar{X}}$, with a preferred value of $2\sigma_{\bar{X}}$.
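As a rough numerical illustration of how the two V-mask parameters follow from the chosen risks, the sketch below assumes the commonly used forms $d = (2/\delta^2)\ln[(1-\beta)/\alpha]$ and $\theta = \tan^{-1}[\Delta/(2k)]$, with $\delta = \Delta/\sigma_{\bar{X}}$. The function name `v_mask_parameters` and its argument names are hypothetical, and the input values are made up for illustration.

```python
import math
from typing import Tuple


def v_mask_parameters(
    alpha: float, beta: float, shift: float, sigma_xbar: float, scale_k: float
) -> Tuple[float, float]:
    """Return (lead distance d, angle theta in degrees) for a V-mask.

    shift is the change in the process mean to be detected, sigma_xbar is
    the standard deviation of the sample mean, and scale_k is the ratio of
    a vertical-scale unit to a horizontal-scale unit on the plot.
    """
    delta = shift / sigma_xbar                        # standardized shift
    d = (2.0 / delta**2) * math.log((1.0 - beta) / alpha)
    theta = math.degrees(math.atan(shift / (2.0 * scale_k)))
    return d, theta


if __name__ == "__main__":
    # Made-up values: detect a shift of 1.0 when sigma_xbar = 0.5,
    # using the preferred scale factor k = 2 * sigma_xbar.
    d, theta = v_mask_parameters(0.05, 0.10, shift=1.0, sigma_xbar=0.5, scale_k=1.0)
    print(f"d = {d:.3f}, theta = {theta:.2f} degrees")
```

Note that a smaller α lengthens the lead distance d, which makes the mask less likely to signal when the process is in control.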

One measure of a control chart's performance is the average run length (ARL). (We discussed ARL in Chapter 6.) This value represents the average number of points that must be plotted before an out-of-control condition is indicated. For a Shewhart control chart, if p represents the probability that a single point will fall outside the control limits, the average run length is given by

$$\text{ARL} = \frac{1}{p}$$

For 3σ limits on a Shewhart $\bar{X}$-chart, the value of p is about 0.0026 when the process is in control. Hence, the ARL for an $\bar{X}$-chart exhibiting control is

$$\text{ARL} = \frac{1}{0.0026} \approx 385$$

The implication of this is that, on average, if the process is in control, every 385th sample statistic will indicate an out-of-control state. The ARL is usually larger for a cusum chart than for a Shewhart chart. For example, for a cusum chart with comparable risks, the ARL is around 500. Thus, if the process is in control, on average, every 500th sample statistic will indicate an out-of-control situation, so there will be fewer false alarms.
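The Shewhart ARL calculation is easy to check numerically. The short sketch below assumes ARL = 1/p for independent points and a normally distributed statistic. The function names are hypothetical. It also shows that the figure of 385 comes from rounding p to 0.0026; the unrounded two-sided normal tail probability beyond 3σ gives an ARL of roughly 370.

```python
import math


def shewhart_arl(p: float) -> float:
    """Average run length when each point signals independently with probability p."""
    return 1.0 / p


def two_sided_tail_probability(z: float) -> float:
    """P(|Z| > z) for a standard normal variable Z."""
    return math.erfc(z / math.sqrt(2.0))


if __name__ == "__main__":
    print(shewhart_arl(0.0026))                           # rounded p, as in the text
    print(shewhart_arl(two_sided_tail_probability(3.0)))  # unrounded p for 3-sigma limits
```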

Table 7-8 Cumulative Sum of Data for Calcium Content

| Sample, i | Deviation of Sample Mean from Target | Cumulative Sum, Si | Sample, i | Deviation of Sample Mean from Target | Cumulative Sum, Si |
|---|---|---|---|---|---|
| 1 | −1.0 | −1.0 | 9 | −0.1 | −1.4 |
| 2 | −0.5 | −1.5 | 10 | −0.2 | −1.6 |
| 3 | 0.1 | −1.4 | 11 | 0.4 | −1.2 |
| 4 | 0.3 | −1.1 | 12 | 1.3 | 0.1 |
| 5 | 1.0 | −0.1 | 13 | −0.3 | −0.2 |
| 6 | −0.6 | −0.7 | 14 | 0.3 | 0.1 |
| 7 | 0.5 | −0.2 | 15 | 0.1 | 0.2 |
| 8 | −1.1 | −1.3 | | | |
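The cumulative-sum column of Table 7-8 is simply a running total of the deviations from target. A minimal sketch that reproduces it from the tabulated deviations is given below. The variable names are hypothetical, and the rounding to one decimal place is only there to suppress floating-point noise.

```python
from itertools import accumulate

# Deviations of the sample means from target, samples 1 through 15 (Table 7-8).
deviations = [-1.0, -0.5, 0.1, 0.3, 1.0, -0.6, 0.5, -1.1,
              -0.1, -0.2, 0.4, 1.3, -0.3, 0.3, 0.1]

# Running total S_i = S_(i-1) + deviation_i, starting from zero.
cusum = [round(s, 1) for s in accumulate(deviations)]

if __name__ == "__main__":
    for i, (dev, s) in enumerate(zip(deviations, cusum), start=1):
        print(f"{i:2d}  {dev:5.1f}  {s:5.1f}")
```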

Designing a Cumulative Sum Chart for a Specified ARL

The average run length can be used as a design criterion for control charts. If a process is in control, the ARL should be long, whereas if the process is out of control, the ARL should be short. Recall that δ is the degree of shift in the process mean, relative to the standard deviation of the sample mean, that we are interested in detecting; that is, $\delta = \Delta/\sigma_{\bar{X}}$. Let L(δ) denote the desired ARL when a shift in the process mean is on the order of δ. An ARL curve is a plot of δ versus its corresponding average run length, L(δ). For a process in control, when δ = 0, a large value of L(0) is desirable. For a specified value of δ, we may have a desirable value of L(δ). Thus, two points on the ARL curve, [0, L(0)] and [δ, L(δ)], are specified. The goal is to find the cusum chart parameters d and θ that will satisfy these desirable goals.

Bowker and Lieberman (1987) provide a table (see Table 7-9) for selecting the V-mask parameters d and θ when the objective is to minimize L(δ) for a given δ. It is assumed that the decision maker has a specified value of L(0) in mind. Table 7-9 gives values for $(k/\sigma_{\bar{X}})\tan\theta$ and d, and the minimum value of L(δ) for a specified δ. We use this table in Example 7-9.

Table 7-9 Selection of Cumulative Sum Control Charts Based on Specified ARL

| δ = Deviation from Target Value (standard deviations) | Parameter | L(0) = 50 | L(0) = 100 | L(0) = 200 | L(0) = 300 | L(0) = 400 | L(0) = 500 |
|---|---|---|---|---|---|---|---|
| 0.25 | $(k/\sigma_{\bar{X}})\tan\theta$ | 0.125 | 0.195 | 0.248 | | | |
| | d | 47.6 | 46.2 | 37.4 | | | |
| | L(0.25) | 28.3 | 74.0 | 94.0 | | | |
| 0.50 | $(k/\sigma_{\bar{X}})\tan\theta$ | 0.25 | 0.28 | 0.29 | 0.28 | 0.28 | 0.27 |
| | d | 17.5 | 18.2 | 21.4 | 24.7 | 27.3 | 29.6 |
| | L(0.5) | 15.8 | 19.0 | 24.0 | 26.7 | 29.0 | 30.0 |
| 0.75 | $(k/\sigma_{\bar{X}})\tan\theta$ | 0.375 | 0.375 | 0.375 | 0.375 | 0.375 | 0.375 |
| | d | 9.2 | 11.3 | 13.8 | 15.0 | 16.2 | 16.8 |
| | L(0.75) | 8.9 | 11.0 | 13.4 | 14.5 | 15.7 | 16.5 |
| 1.0 | $(k/\sigma_{\bar{X}})\tan\theta$ | 0.50 | 0.50 | 0.50 | 0.50 | 0.50 | 0.50 |
| | d | 5.7 | 6.9 | 8.2 | 9.0 | 9.6 | 10.0 |
| | L(1.0) | 6.1 | 7.4 | 8.7 | 9.4 | 10.0 | 10.5 |
| 1.5 | $(k/\sigma_{\bar{X}})\tan\theta$ | 0.75 | 0.75 | 0.75 | 0.75 | 0.75 | 0.75 |
| | d | 2.7 | 3.3 | 3.9 | 4.3 | 4.5 | 4.7 |
| | L(1.5) | 3.4 | 4.0 | 4.6 | 5.0 | 5.2 | 5.4 |
| 2.0 | $(k/\sigma_{\bar{X}})\tan\theta$ | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 |
| | d | 1.5 | 1.9 | 2.2 | 2.4 | 2.5 | 2.7 |
| | L(2.0) | 2.26 | 2.63 | 2.96 | 3.15 | 3.3 | 3.4 |

L(0) = expected run length when the process is in control.

Source: A. H. Bowker and G. J. Lieberman, Engineering Statistics, 2nd ed., 1987. Reprinted by permission of Pearson Education, Inc., Upper Saddle River, NJ.

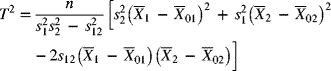

Cumulative Sum for Monitoring Process Variability

Cusum charts may also be used to monitor process variability, as discussed by Hawkins (1981). Assuming that Xi ∼ N(μ0, σ), the standardized value Yi is obtained first as Yi = (Xi − μ0)/σ. A new standardized quantity (Hawkins 1993) is constructed as follows:

$$v_i = \frac{\sqrt{|Y_i|} - 0.822}{0.349}$$

where it is suggested that the vi are sensitive to both variance and mean changes. For an in-control process, vi is distributed approximately N(0, 1). Two one-sided standardized cusums are constructed as follows to detect scale changes:

$$S_i^{+} = \max\left[0,\; v_i - k + S_{i-1}^{+}\right] \qquad (7\text{-}56)$$

$$S_i^{-} = \max\left[0,\; -k - v_i + S_{i-1}^{-}\right]$$

where $S_0^{+} = S_0^{-} = 0$. The values of h and k are selected using guidelines similar to those discussed in the section on cusum for the process mean. When the process standard deviation increases, the values of $S_i^{+}$ in eq. (7-56) will increase. When $S_i^{+}$ exceeds h, we will detect an out-of-control condition. Similarly, if the process standard deviation decreases, values of $S_i^{-}$ will increase.
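The scale cusum can be sketched as follows. This is an illustration under stated assumptions, not a definitive implementation: it uses the transformation $v_i = (\sqrt{|Y_i|} - 0.822)/0.349$ attributed to Hawkins, the recursions $S_i^{+} = \max[0, v_i - k + S_{i-1}^{+}]$ and $S_i^{-} = \max[0, -k - v_i + S_{i-1}^{-}]$ with both cusums starting at zero, and the hypothetical function name `scale_cusum`.

```python
import math
from typing import List, Tuple


def scale_cusum(
    x: List[float], mu0: float, sigma: float, k: float
) -> List[Tuple[float, float]]:
    """Return the (S_plus, S_minus) pair after each individual observation."""
    s_plus = s_minus = 0.0
    history = []
    for xi in x:
        y = (xi - mu0) / sigma                         # standardized observation
        v = (math.sqrt(abs(y)) - 0.822) / 0.349        # approx. N(0, 1) in control
        s_plus = max(0.0, v - k + s_plus)              # grows when spread increases
        s_minus = max(0.0, -k - v + s_minus)           # grows when spread decreases
        history.append((s_plus, s_minus))
    return history


if __name__ == "__main__":
    # Made-up observations far from the target of 10 (sigma = 2): S_plus climbs.
    for pair in scale_cusum([18.0, 18.0, 18.0], mu0=10.0, sigma=2.0, k=0.5):
        print(pair)
```

An out-of-control signal would be declared at the first observation for which either cusum exceeds the chosen h.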

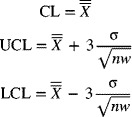

Moving-Average Control Chart

As mentioned previously, standard Shewhart control charts are quite insensitive to small shifts, and cumulative sum charts are one way to alleviate this problem. A control chart using the moving-average method is another. Such charts are effective for detecting shifts of small magnitude in the process mean. Moving-average control charts can also be used in situations for which the sample size is 1, such as when product characteristics are measured automatically or when the time to produce a unit is long. It should be noted that, by their very nature, moving-average values are correlated.

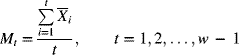

Suppose that samples of size n are collected from the process. Let the first t sample means be denoted by $\bar{X}_1, \bar{X}_2, \ldots, \bar{X}_t$. (One sample is taken for each time step.) The moving average of width w (i.e., w samples) at time step t is given by

$$M_t = \frac{\bar{X}_t + \bar{X}_{t-1} + \cdots + \bar{X}_{t-w+1}}{w} \qquad (7\text{-}58)$$

At any time step t, the moving average is updated by dropping the oldest mean and adding the newest mean. The variance of each sample mean is

$$\mathrm{Var}(\bar{X}) = \frac{\sigma^2}{n}$$

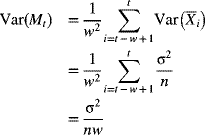

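where σ² is the population variance of the individual values. The variance of Mt is

$$\mathrm{Var}(M_t) = \frac{1}{w^2}\sum_{i=t-w+1}^{t}\mathrm{Var}(\bar{X}_i) = \frac{\sigma^2}{nw}$$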

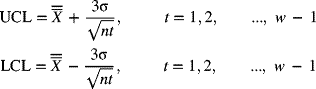

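The centerline and control limits for the moving-average chart are given by

$$\text{CL} = \bar{\bar{X}}, \qquad \text{UCL} = \bar{\bar{X}} + \frac{3\sigma}{\sqrt{nw}}, \qquad \text{LCL} = \bar{\bar{X}} - \frac{3\sigma}{\sqrt{nw}} \qquad (7\text{-}60)$$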

From eq. (7-60), we can see that as w increases, the width of the control limits decreases. So, to detect shifts of smaller magnitudes, larger values of w should be chosen.

For the startup period (when t < w), the moving average is given by

$$M_t = \frac{\sum_{i=1}^{t}\bar{X}_i}{t}$$

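The control limits for this startup period are

$$\text{UCL} = \bar{\bar{X}} + \frac{3\sigma}{\sqrt{nt}}, \qquad \text{LCL} = \bar{\bar{X}} - \frac{3\sigma}{\sqrt{nt}}$$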

Since these control limits change at each sample point during this startup period, an alternative procedure would be to use the ordinary $\bar{X}$-chart for t < w and use the moving-average chart for t ≥ w.
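The moving-average calculations, including the startup period, can be sketched as below. The sketch assumes limits of the form centerline ± 3σ/√(n · span), where span equals t during startup (t < w) and w afterward, and it assumes that the centerline and σ are supplied by the user. The function name `moving_average_chart` and the data are hypothetical.

```python
import math
from typing import List, Tuple


def moving_average_chart(
    xbars: List[float], center: float, sigma: float, n: int, w: int
) -> List[Tuple[float, float, float]]:
    """Return (M_t, LCL_t, UCL_t) for each time step t = 1, 2, ..."""
    rows = []
    for t in range(1, len(xbars) + 1):
        span = min(t, w)                           # fewer than w means at startup
        m_t = sum(xbars[t - span:t]) / span        # average of the last `span` means
        half_width = 3.0 * sigma / math.sqrt(n * span)
        rows.append((m_t, center - half_width, center + half_width))
    return rows


if __name__ == "__main__":
    # Made-up sample means with n = 4, sigma = 2, and width w = 3.
    for row in moving_average_chart([10.0, 12.0, 11.0, 13.0], 11.0, 2.0, n=4, w=3):
        print(row)
```

The limits narrow over the first w time steps and stay constant from then on, which is the behavior that motivates using an ordinary chart during startup.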

Exponentially Weighted Moving-Average or Geometric Moving-Average Control Chart

The preceding discussion showed that a moving-average chart can be used as an alternative to an ordinary $\bar{X}$-chart to detect small changes in process parameters. The moving-average method is basically a weighted-average scheme. For sample t, the sample means $\bar{X}_t, \bar{X}_{t-1}, \ldots, \bar{X}_{t-w+1}$ are each weighted by 1/w [see eq. (7-58)], while the sample means for time steps less than t − w + 1 are weighted by zero. Along similar lines, a chart can be constructed based on varying weights for the prior observations. More weight can be assigned to the most recent observation, with the weights decreasing for less recent observations. A geometric moving-average control chart, also known as an exponentially weighted moving-average (EWMA) chart, is based on this premise. One of the advantages of a geometric moving-average chart over a moving-average chart is that the former is more effective in detecting small changes in process parameters. The geometric moving average at time step t is given by

$$G_t = r\bar{X}_t + (1-r)\,G_{t-1} \qquad (7\text{-}63)$$

where r is a weighting constant (0 < r ≤ 1) and G0 is $\bar{\bar{X}}$. By using eq. (7-63) repeatedly, we get

$$G_t = r\sum_{i=0}^{t-1}(1-r)^i\,\bar{X}_{t-i} + (1-r)^t\,G_0 \qquad (7\text{-}64)$$

Equation (7-64) shows that the weight associated with the ith mean back from time t, $\bar{X}_{t-i}$, is $r(1-r)^i$. The weights decrease geometrically as the sample mean becomes less recent. The sum of all the weights is 1. Consider, for example, the case for which r = 0.3. This implies that, in calculating Gt, the most recent sample mean $\bar{X}_t$ has a weight of 0.3, the next most recent observation $\bar{X}_{t-1}$ has a weight of (0.3)(1 − 0.3) = 0.21, the next observation $\bar{X}_{t-2}$ has a weight of $0.3(1-0.3)^2 = 0.147$, and so on. Here, G0 has a weight of $(1-0.3)^t$. Since these weights appear to decrease exponentially, eq. (7-64) describes what is known as the exponentially weighted moving-average model.
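The weighting pattern for r = 0.3 can be verified with a short calculation. The sketch below assumes weights of the form $r(1-r)^i$ for the mean i steps back and $(1-r)^t$ for G0. The function name `ewma_weights` is hypothetical.

```python
from typing import List, Tuple


def ewma_weights(r: float, t: int) -> Tuple[List[float], float]:
    """Return the weights on the t most recent sample means (newest first)
    and the weight carried by the starting value G0."""
    weights = [r * (1.0 - r) ** i for i in range(t)]
    g0_weight = (1.0 - r) ** t
    return weights, g0_weight


if __name__ == "__main__":
    weights, g0_weight = ewma_weights(0.3, 5)
    print([round(w, 3) for w in weights])        # geometric decay of the weights
    print(round(sum(weights) + g0_weight, 12))   # the weights sum to 1
```

Because the weights on the sample means form a geometric series summing to $1 - (1-r)^t$, adding the weight on G0 always brings the total to exactly 1.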

If the sample means $\bar{X}_1, \bar{X}_2, \ldots, \bar{X}_t$ are assumed to be independent of each other and if the population standard deviation is σ, the variance of Gt is given by

$$\sigma_{G_t}^2 = \frac{\sigma^2}{n}\left(\frac{r}{2-r}\right)\left[1-(1-r)^{2t}\right] \qquad (7\text{-}65)$$

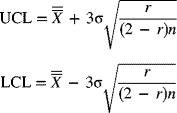

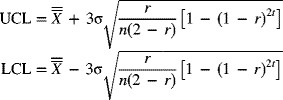

For large values of t, the term $(1-r)^{2t}$ becomes negligible, and the standard deviation of Gt is

$$\sigma_{G_t} = \sigma\sqrt{\frac{r}{(2-r)\,n}}$$

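The upper and lower control limits are

$$\text{UCL} = \bar{\bar{X}} + 3\sigma\sqrt{\frac{r}{(2-r)\,n}}, \qquad \text{LCL} = \bar{\bar{X}} - 3\sigma\sqrt{\frac{r}{(2-r)\,n}}$$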

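For small values of t, the control limits are found using eq. (7-65) to be

$$\text{UCL} = \bar{\bar{X}} + 3\sigma\sqrt{\frac{r\left[1-(1-r)^{2t}\right]}{(2-r)\,n}}, \qquad \text{LCL} = \bar{\bar{X}} - 3\sigma\sqrt{\frac{r\left[1-(1-r)^{2t}\right]}{(2-r)\,n}}$$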
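Putting the recursion and the time-dependent limits together, a geometric moving-average chart can be sketched as follows. The sketch assumes $G_t = r\bar{X}_t + (1-r)G_{t-1}$ with G0 set to the centerline, and 3σ limits based on the variance $(\sigma^2/n)[r/(2-r)][1-(1-r)^{2t}]$. The function name `ewma_chart` and the data are hypothetical.

```python
import math
from typing import List, Tuple


def ewma_chart(
    xbars: List[float], center: float, sigma: float, n: int, r: float
) -> List[Tuple[float, float, float]]:
    """Return (G_t, LCL_t, UCL_t) for each time step t = 1, 2, ..."""
    g = center                                     # G0 starts at the centerline
    rows = []
    for t, xbar in enumerate(xbars, start=1):
        g = r * xbar + (1.0 - r) * g               # geometric moving average
        sd = sigma * math.sqrt(
            r * (1.0 - (1.0 - r) ** (2 * t)) / ((2.0 - r) * n)
        )
        rows.append((g, center - 3.0 * sd, center + 3.0 * sd))
    return rows


if __name__ == "__main__":
    # Made-up sample means with n = 4, sigma = 2, and r = 0.5.
    for row in ewma_chart([12.0, 12.0], center=10.0, sigma=2.0, n=4, r=0.5):
        print(row)
```

The limits widen with t and approach the large-t limits as $(1-r)^{2t}$ goes to zero, so the exact form matters mainly for the first few samples.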

A geometric moving-average control chart is based on a concept similar to that of a moving-average chart. By choosing an adequate set of weights, however, where recent sample means are more heavily weighted, the ability to detect small changes in process parameters is increased. If the weighting factor r is selected as