CHAPTER 8

Sound

Sounds for games serve a myriad of roles. Along with creating the signature for a project, music helps to create mood, build tension, introduce mirth, and telegraph conflict. Ambient sounds help construct immersion, and clever audio effects for interfaces communicate a sense of panache, futuristic technology, dark themes, or wacky comedy. The addition of sound, an unseen component in such a visual medium, helps players use their imagination.

Well-designed audio can deeply enhance the gameplay experience, whereas a poor design can damage it. For games that have a long play time, where players may be immersed for 10–20 hours or more, one of the challenges for the audio designers is coming up with enough interesting variation in the sounds to avoid boring repetitions while also keeping file sizes in check.

The art form of designing audio has increased in sophistication as games have improved and developed over the years. Just as movies have used this medium to draw in the audience and help them enjoy a deeper experience, so have games and their designers taken advantage of this rich component to breathe more life into their adventures.

- Organization and planning

- Music

- Ambient sound

- Sound effects

- Speech

- Sound-based computer games

Organization and Planning

Video games typically have hundreds or thousands of digital files generated during their production. Half the battle is keeping track of all that work as it's planned for, produced, and then catalogued for the production. As with any asset that is planned for inclusion in your game, you should determine the need for each sound, where it will appear in the game, how many variations might be needed, what type of sound it is, and so on.

Sound files can quickly increase the size of the game, so the challenge to the audio designers (and most games have fairly small budgets set aside for audio) is to plan for exactly what sounds are needed.

Charting Work

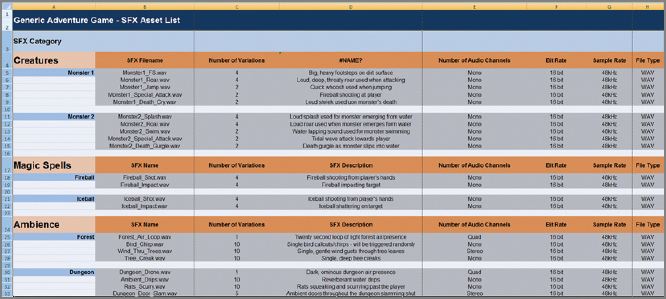

The sample chart in Figure 8.1, provided by Brad Beaumont from Soundelux DMG in Hollywood, California, shows how files can be labeled, sorted, and catalogued for your project.

FIGURE 8.1 This sample breakdown shows how files are labeled and described along with their technical information.
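As a rough illustration of this kind of tracking, a sound list can be kept as simple structured records. The following is only a sketch: the field names, categories, and filenames are hypothetical and aren't taken from the chart in Figure 8.1.

```python
from dataclasses import dataclass


@dataclass
class SoundAsset:
    """One row of a hypothetical sound-asset list."""
    filename: str        # assumed naming scheme: type prefix + description
    category: str        # e.g. "music", "ambient", "sfx", "dialogue", "interface"
    location: str        # where in the game the sound plays
    description: str
    sample_rate_hz: int
    variations: int = 1  # how many alternate takes are planned


def by_category(assets):
    """Group assets by category, sorted by filename within each group."""
    groups = {}
    for asset in sorted(assets, key=lambda a: a.filename):
        groups.setdefault(asset.category, []).append(asset)
    return groups


catalog = [
    SoundAsset("sfx_gun_blast", "sfx", "battlefield", "Rifle shot", 48000, variations=4),
    SoundAsset("amb_forest_loop", "ambient", "forest", "Wind and crickets", 48000),
    SoundAsset("ui_button_click", "interface", "main menu", "Button click", 48000),
    SoundAsset("sfx_explosion", "sfx", "battlefield", "Large explosion", 48000, variations=3),
]
grouped = by_category(catalog)
```

Sorting and grouping the list this way makes it easier to see at a glance which categories still have gaps and how many files each one will add to the build.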

What Sounds Will You Need?

As you begin work on the sound design for your game, you should sort out what types of sound you're looking for:

When gamers achieve a goal, they usually hear reward audio, such as a large crescendo, and see a showy, elaborate animation, like fireworks or starbursts filling the screen.

Music If your project requires music, will there be a basic background track along with different types of music to telegraph to the player when the game gets intense or calmer, or when there is a reward?

Dialogue If there is dialogue, be sure to script the project completely.

Voice-Overs Do you need recorded dialogue that plays over a scene to help explain different aspects of the game or introduce a new part of the gameplay?

Ambient Sounds What types of ambient sounds will you need? Identify all the environments: forest, city, farm, undersea, outer space, and so on. Then begin breaking down what sounds each location will need.

Special Effects These include gun blasts, magic spells, and so on.

Interface Sound Effects List each sound you'll need for button clicks and rollovers.

Be as thorough as you can, and list every single thing in the game from beginning to end that will require any type of audio. Yes, your list will be long; but the better you plan for all of the asset development and production in your project, the smoother and more rewarding the final game will be.

As you make your list, also think about how often the gamer will be listening to specific sounds. If you see in your list specific sounds that might be played more frequently than others, consider creating variations that the game engine can play randomly so that hearing the same gun blast or explosion over and over again doesn't become a bore.
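One simple way an engine might play variations randomly is to pick any variation except the one that was just heard. The sketch below assumes a hypothetical `VariationPicker` class; real engines and audio middleware offer their own randomization features.

```python
import random


class VariationPicker:
    """Pick a random variation, never repeating the previous one if avoidable."""

    def __init__(self, variations, rng=None):
        if not variations:
            raise ValueError("need at least one variation")
        self.variations = list(variations)
        self.rng = rng or random.Random()
        self.last = None

    def next(self):
        # Exclude the variation played last time; fall back to the full
        # list when there is only one variation to choose from.
        choices = [v for v in self.variations if v != self.last] or self.variations
        self.last = self.rng.choice(choices)
        return self.last


# Hypothetical filenames for four takes of the same gun blast.
picker = VariationPicker(["gun_blast_01", "gun_blast_02", "gun_blast_03", "gun_blast_04"])
shots = [picker.next() for _ in range(6)]
```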

Music

Scores, soundtracks, and music for games started as simple analog waveforms, similar to what had been used for phonograph records and cassettes. One of the first games to use music as part of the gameplay was an arcade tour de force called Journey, released by Bally Midway in 1983. The game showcased music by the band Journey and featured a series of mini-games where players could travel to different planets with the band members to search for their missing instruments.

The majority of early games featured seriously simple soundtracks, often generated from synthesizers (synthesized means using an algorithm to make sound), that tended to be more annoying than fun. Games now lean toward a fully orchestrated soundtrack similar to what viewers experience in a movie.

Movie makers learned some time ago that music could help complement the story being told. Anyone who has seen the movie Jaws should remember quite well the threatening, frightening cello sequence that telegraphs the approach of the shark. The opening title sequences for movies like Star Wars could bring audiences to their feet, cheering, as the fully orchestrated score filled the theatre while the opening credits for the film marched boldly into the galaxy.

In games as in movies, music helps create the mood and can build anticipation and suspense.

Game designers strive to create worlds and experiences for gamers that are immersive, challenging, fun, and designed to entice the player to return again and again. The signature sounds for games, along with stirring scores, have elevated the entire gameplay experience using many of the same techniques devised for scoring feature films.

Audio Producers and Composers

For the most part, game crews have an audio producer who can act as the supervisor, overseeing the design and development of musical scores or soundtracks, ambient sounds, and special effects audio. Every project is different. You create a prototype first and work with the plans and the producer to continue fine-tuning the vision of the game.

For large games with big scores, much of that work is delegated to professional composers and musicians who are brought on board a project as independent contractors. To get started, the composer will work with the audio producer to get an understanding of the project scope. Music can create such an emotional impact that it's inadequate to simply hand the composer an asset list of scenes or events they need to write for.

The composer needs to understand the world being produced for the game in order to create effective music.

The composer needs to see and understand the world they're writing for. Is it scary, bleak, intense, damaging, and overwhelming, or light, carefree, and childlike? Using descriptive language to explain the look and feel of the game, and the impact the project needs to have, is one way to begin communicating with the composer.

Sometimes there is a script to work from. In addition, providing the composer with concept art, sample animations, animatics, storyboards, and cinematics, or even letting them play what has been developed so far, allows these professionals to immerse themselves in the spirit of the project.

According to composer Garry Schyman (BioShock 1 and 2 and Dante's Inferno), seen on the left in Figure 8.2, one of the techniques used to implement the audio is layering. For example, a section of the game may start with a simple ambient layer of sound or music that can build in complexity as more audio elements are layered onto it.

An effective soundtrack for a game tends to be invisible. Its presence enhances but never overtakes the play experience, and effective layering is all but undetectable.

The layering can include additional sounds (music or background noises such as crickets, wind, and thunder) or increased instrumentation. In Figure 8.3, Schyman is seen working with a full orchestra as they record his score for the game Dante's Inferno.

Each project has unique requirements. According to Schyman, AAA games are like feature films in that they usually have original music recorded with an orchestra, whereas smaller games are like TV shows that can work with music recorded using just a keyboard and software synthesizer. Generally, a AAA game will require 50–150 minutes of music created for the project.

FIGURE 8.2 Noted composer Garry Schyman helps bring a more cinematic experience to the composition of music for video games by writing original scores and working with musicians to record the tracks.

FIGURE 8.3 As with movie scores, music for games can involve an entire orchestra in a recording session to lay down the tracks.

AAA is an industry term for a game that is high quality and produced with a large budget, such as Halo, World of Warcraft, or Gears of War. The average budget to make a AAA game is about $30 million. These games can take around 18 months to produce, with a crew of approximately 120 people.

A lot of indie games are made with small crews of two or three people doing all the work, with a turnaround time from a few weeks to a few months.

Midsize games employ crews of about 30–65 people and generally take about 9 months to make. Indie game production has grown over the past few years due to increased online distribution systems and the mobile game market.

When music is created for games, the composer will produce what are known as stems, or segments, that the audio producer can then program to play at specific moments during gameplay. Some of the tools used for this are added to the engine from third-party software creators, with programs such as FMOD (www.fmod.org) and the WaveWorks Interactive Sound Engine (Wwise) (www.audiokinetic.com). These allow the audio producer to tell the game engine when to turn on or turn off specific pieces of audio (the stems).

Stems can also be created as variations. In other words, the composer may create different versions of music that range from subtle to providing a very strong presence. Usually the composer produces high, medium, and low versions. By playing these in loops or layering them, the audio producer can build a unique sound experience for each aspect of the game. As soon as stems are delivered for a project, the audio producer will begin to implement them into the game, so that even as a vertical slice is being created, the correct audio is in place. Temp tracks are occasionally used in the vertical slice; however, whenever possible, the more finished audio is always the first choice to see how it works with gameplay. It's best to get the ambient sound down first and then add positional audio for things like waterfalls and streams.
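The idea of turning stems on and off to build a layered score can be sketched in a few lines. This is only an illustration: the stem names, the thresholds, and the single 0.0–1.0 "intensity" value are assumptions, and it isn't how FMOD or Wwise expose this feature.

```python
# Hypothetical stems, each switched on once gameplay intensity reaches
# its threshold. Names and threshold values are made up for illustration.
LAYERS = [
    (0.0, "ambient_bed"),
    (0.4, "strings"),
    (0.8, "percussion"),
]


def active_layers(intensity):
    """Return the stems that should be playing at this intensity (0.0 to 1.0)."""
    return [name for threshold, name in LAYERS if intensity >= threshold]


calm = active_layers(0.1)
tense = active_layers(0.5)
frantic = active_layers(0.9)
```

Because the lower layers keep playing as new ones are added, the music gains complexity without an obvious cut from one track to another.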

Audio helps create realism and an immersive environment.

Ambient sounds are the background noises, the atmospheric audio, that can be heard in a particular scene or location. For example, if you're playing a game where your character is walking through a forest, you may hear crickets, creaking tree boughs, wind rustling the leaves, birds chirping, and other nature sounds. Those ambient sounds, when layered into the game, help create a more immersive environment.

A marker is a code used to program where in the environment a sound will play.

Positional audio plays during gameplay only when players move over or near where a marker is placed to activate the sound. For example, should your character see a waterfall in the distance, you might hear the sound of the rushing water as if it were far away. As you move your character closer to the waterfall, the sound will get louder, helping to add to the illusion of realism.
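The waterfall example can be approximated by scaling a sound's volume according to the player's distance from its marker. The sketch below uses a simple linear falloff; the function name, the radii, and the linear curve are all assumptions, and real engines typically offer several falloff curves.

```python
import math


def positional_gain(listener, marker, audible_radius, full_volume_radius=1.0):
    """Return a volume multiplier between 0.0 and 1.0.

    The sound is silent beyond audible_radius, at full volume within
    full_volume_radius, and fades linearly in between.
    """
    distance = math.dist(listener, marker)
    if distance >= audible_radius:
        return 0.0
    if distance <= full_volume_radius:
        return 1.0
    return (audible_radius - distance) / (audible_radius - full_volume_radius)


waterfall = (0.0, 0.0)
far_away = positional_gain((40.0, 0.0), waterfall, audible_radius=50.0)
close_by = positional_gain((5.0, 0.0), waterfall, audible_radius=50.0)
```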

In addition to creating an immersive environment, audio can add to gameplay. Music and sounds may draw players toward another area in the game. Heightened audio coming from another region may prompt them to explore.

Music and sounds also give cues that enhance gameplay.

Music can change tempo to create more anticipation during gameplay and raise the player's adrenaline level a bit. It can also slow down significantly to indicate the player is in a safe area.

Signature sounds from games are also used in marketing.

The music that the composer creates for a project can also be used in marketing campaigns. The use of these original, signature scores is one of the ways the distributor helps to build audience recognition.

Breaking Down Music Types

The following is a typical list of how music might be broken down for various parts of gameplay. All music in games is triggered by precise cues programmed for the engine to read and act on:

Scripted Music This is the most basic, overriding music in the game. It runs during gameplay and then quits until another command is given.

Incidental Music These tend to be short spurts of music, about 5–15 seconds each, that run randomly in the background to help fill gaps of time if the player is moving in a remote area of the game.

Location Music As the name indicates, these pieces of music are tied to specific locations in a game. As the player enters a city, that city may have a piece of signature music that plays. As noted previously, if the player is approaching a specific area, the location music may trigger to let the player know something important is up ahead and draw them toward that place. Location music can also be cued to notify players if a location is hostile.

Battle Music When players enter into battle during gameplay, the audio tends to consist of three unique segments: an intro, a loop that can play for as long as the battle runs, and an outro sequence when fighting stops.
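The intro, loop, and outro structure of battle music behaves like a small state machine. The following sketch assumes a hypothetical `BattleMusic` class that the game calls when a battle starts or ends and whenever the current segment finishes playing.

```python
class BattleMusic:
    """Track which battle segment should play: intro, loop, or outro."""

    def __init__(self):
        self.state = "idle"

    def on_battle_start(self):
        # A new fight (re)starts the intro, even if an outro was playing.
        if self.state in ("idle", "outro"):
            self.state = "intro"

    def on_battle_end(self):
        # Fighting stopped: switch to the outro segment.
        if self.state in ("intro", "loop"):
            self.state = "outro"

    def on_segment_finished(self):
        """Advance when the current segment ends; return the next one or None."""
        if self.state in ("intro", "loop"):
            self.state = "loop"   # the loop repeats for as long as the battle runs
        elif self.state == "outro":
            self.state = "idle"   # battle music is over
        return None if self.state == "idle" else self.state
```

The loop state simply re-enters itself, which is what lets the same short piece of music cover a battle of any length.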

Audio and music that change according to what is happening during gameplay are referred to as adaptive audio.

Just as specific locations may have their own sound signatures, characters sometimes have their own music as well.

Ambient Sound

Ambient sound systems help to breathe life into projects. These are the gusts of wind heard blowing in the background, falling rain, crickets in the marsh, and the patter of feet from people walking past your character on the street. Because many games are nonlinear, the audio can't necessarily progress in one set path. If the character turns and goes back the way they came, then the audio needs to reflect that. Again, the more overlapping or layered these sounds are, the more immersive a game can become.

To create effective sound for nonlinear games, most audio is created in short loops, about 5–30 seconds long, that can be triggered by the game engine depending on factors such as

- Physically where the player is in the world

- What the player is doing

- Circumstances such as events or other characters the player encounters

In the simplest of games, the ambient soundscape can be described as utilizing a variety of steady loops creating the overall tone of the setting (such as the steady dripping of leaky pipes in a sewer system), as well as one-shots (the occasional rat scurrying by, or a distant pipe creaking). Multiple files of one-shots can be created and played randomly throughout a game, instead of being specifically triggered by locations or events. This randomness also plays an important part in breathing life into the game and avoiding too much repetition, which can become boring in long games.
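Random one-shots over a steady loop can be sketched as a list of play times separated by random gaps. The function name, the gap range, and the filenames here are hypothetical; an engine would normally make these decisions while the game runs rather than plan them in advance.

```python
import random


def schedule_one_shots(one_shots, duration_s, min_gap_s, max_gap_s, rng=None):
    """Return (time_in_seconds, sound) pairs spread randomly over duration_s."""
    rng = rng or random.Random()
    events = []
    t = rng.uniform(min_gap_s, max_gap_s)
    while t < duration_s:
        events.append((t, rng.choice(one_shots)))
        t += rng.uniform(min_gap_s, max_gap_s)
    return events


# One minute in the sewer: the dripping loop plays throughout (not shown),
# while these one-shots fire every 5 to 15 seconds.
sewer_events = schedule_one_shots(
    ["rat_scurry", "pipe_creak_distant"], duration_s=60, min_gap_s=5, max_gap_s=15
)
```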

Sampling

The sampling rate determines the range of sound frequencies that can be captured, which is frequently referred to as the bandwidth. The sampled sound is usually viewed as a waveform.

Sampling rate, as the term is used in audio production, is the number of samples taken per second to digitize a sound.

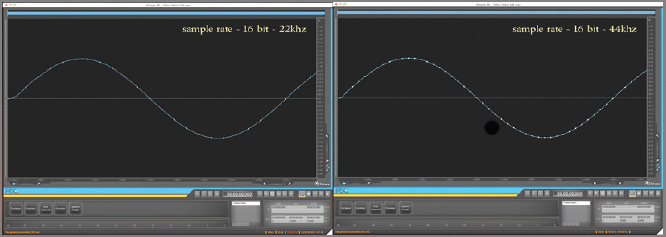

Figure 8.4 shows two sampling rates for the same audio signal. The audio signal is a 1 kilohertz (kHz) sine wave (a fairly common signal used for calibrating audio hardware and software). The screenshots of the different sample rates were taken while these audio files were opened using a program called iZotope RX (www.izotope.com/products/audio/rx/).

The little white square dots that occur along the waveform in each image mark where the audio signal was sampled (to put it in visual terms, it's the same principle of frames per second that is used when discussing film/video: the aperture closing and taking a snapshot of an image in time).

FIGURE 8.4 The two different images depict the same audio signal captured using two separate sample rates.

When comparing the two images, you can see the 44 kHz sample rate is sampling the waveform twice as many times as the 22 kHz sample rate in the same amount of time, allowing for more fidelity of the original signal.
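The comparison can be reproduced numerically by sampling the same 1 kHz sine wave at two rates. This sketch assumes the exact rates are 22,050 Hz and 44,100 Hz (the common values usually rounded to 22 kHz and 44 kHz); the function name is hypothetical.

```python
import math


def sample_sine(freq_hz, sample_rate_hz, duration_s):
    """Return the sample values of a sine wave captured at sample_rate_hz."""
    count = round(sample_rate_hz * duration_s)
    return [
        math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
        for n in range(count)
    ]


# The same 20 milliseconds of a 1 kHz tone at two sample rates.
low = sample_sine(1000, 22050, 0.02)
high = sample_sine(1000, 44100, 0.02)

# The higher rate takes twice as many snapshots in the same amount of time.
ratio = len(high) / len(low)
```

Every second sample of the higher-rate version lands at the same instant as a sample in the lower-rate version; the extra samples in between are the added detail.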

The majority of audio for games is recorded at 96 kHz, but generally it's down-sampled to 48 kHz for the final format.

The sample rate is measured in hertz (Hz) or in thousands of hertz (kilohertz: kHz). The chart in Figure 8.4 shows that the higher the sampling rate, the more nuances become available in the sampled sound. When you begin to alter the sampling rate, you're fishing for a unique sound.

The purpose of recording original sound at 96 kHz is to have those sounds at higher sample rates so they can be manipulated at a higher quality in the editing/design process. For example, if you slow the speed of a sound, a recording done with a higher sample rate will yield a much better result because a higher-resolution “snapshot” was taken of the audio. Voice-over/dialogue is often recorded at 48 kHz. More often than not, SFX (special effects), dialogue, and music are down-sampled to a combination of different sample rates, usually 48 kHz or lower, to conserve disk space and limit strain on system memory. For many games, those 48 kHz sounds are compressed using a wide variety of licensed and proprietary audio codecs.
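To give a feel for what down-sampling from 96 kHz to 48 kHz involves, the sketch below halves the sample rate by averaging each pair of neighboring samples. This is a deliberately crude stand-in and the function name is hypothetical; audio tools use proper filtering and resampling algorithms rather than this shortcut.

```python
def downsample_by_two(samples):
    """Halve the sample rate by averaging each adjacent pair of samples.

    A trailing unpaired sample, if any, is dropped.
    """
    return [
        (samples[i] + samples[i + 1]) / 2
        for i in range(0, len(samples) - 1, 2)
    ]


recorded = [0.0, 2.0, 4.0, 6.0]          # four samples at the original rate
delivered = downsample_by_two(recorded)  # two samples at half the rate
```

The result holds half as many values, which is where the savings in disk space and memory come from.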

The foley process was first used in film and television and has been adapted for games. Many companies that produce foley sounds do so for all of these industries.

Foley and Remote Recording

Occasionally, audio designers will go to recording sessions held in a foley studio. This type of studio is a controlled environment, and a huge range of sounds can be captured—for example, breaking glass, smashing bricks, or stomping feet on various surfaces such as sand, grass, pavement, gravel, wood, broken china, or glass. If your game has a character who needs to walk through a post-apocalyptic world, recording sounds of someone walking over broken bricks and wood and glass really heightens the illusion of what is happening in this environment and adds to the play experience.

Unlike foley sessions, which are controlled, other original sounds can be acquired from remote recording sessions, which are specifically geared to capture ambient sounds. Designers travel to places all over the world to record natural or manmade sounds on location for their games. Weapon fire for shooters is often captured this way, by recording the sounds of specific gunfire on ranges.

Some typical remote recording sessions may include

- Missile launches

- Shooting ranges

- Zoos (all types of animals)

- Factories (capturing the ambient sounds of the machines and people working)

- City streets and shopping malls

- Sporting events

Figure 8.5 shows a remote recording session taking place on a ranch, where the sounds of cattle were being recorded.

FIGURE 8.5 Notice that the microphone used by the remote-recording engineer has a fuzzy covering over it. The purpose of the covering is to help prevent the sound of wind from whistling too much over the mic.

Because audio for games is used in a digital format, it's quite easy to look at massive sound-file libraries to find effects for your game. Recording original audio costs money, so game designers choose carefully when they want to tap a library for sounds and when they want to attempt original recordings. If the designer has made the choice to record original sounds, then something unique, sound-wise, is being sought.

Original Sounds

Original sounds can be created by blending and manipulating sounds. Brad Beaumont cleverly manipulates and mixes audio to achieve desired results. For one project, Beaumont needed to come up with the sound for a chainsaw wielded by an otherworldly spirit. In Figure 8.6, you see Beaumont working with the computer, blending different tracks to create new sounds.

FIGURE 8.6 Brad Beaumont from Soundelux, Design Music Group, creates original sound effects for video games.

Even as original sounds are created for unique projects by recording audio or manipulating existing sounds, designers will occasionally play sound backward to obtain entirely new material to work with.

Beaumont started with the basic sound of the chainsaw. To begin altering the sound, searching for the elusive quality that would marry with the chainsaw, Beaumont turned to more organic sounds to blend with the saw's mechanical roaring. After working with a few combinations, he settled on a blending of a real chainsaw and the guttural growling of an adult walrus.

As Beaumont works with the sound, he does so with dual-playback systems. One is very high end, allowing him to hear all the nuances of the sound. The other one is a consumer-grade speaker system. As he listens to the sounds, he searches for the sweet spot in between, where the sound works best. He does so because, as most game designers are aware, home audio systems can vary dramatically.

Editing Software and File Formats

The majority of editors work with the digital audio workstation Pro Tools, which is a multitrack system. Other favorite systems are Logic from Apple, Sound Forge, GarageBand, and WaveLab.

MP3 audio files get their name from the group that defined the format, the Moving Picture Experts Group (MPEG); MP3 is short for MPEG Audio Layer III. This file type compresses well and is standard for most home playback systems.

File formats can vary, of course, but most audio for games is delivered as MP3s, which are lossy audio files. A lossy file is compressed, which helps keep overall file sizes down for the game. One of the things a game maker wants to avoid is letting the size of the game get too large, and audio files tend to really add up, size-wise. For example, one second of audio from a compact disc uses about the same amount of space as 15,000 words of ASCII text (that is, about a 60-page book).
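The way uncompressed audio adds up can be checked with simple arithmetic: sample rate times bytes per sample times number of channels times duration. The function name below is hypothetical, and the bit depths and channel counts in the examples are assumptions chosen for illustration (compact disc audio is 44.1 kHz, 16-bit stereo).

```python
def pcm_bytes(sample_rate_hz, bit_depth, channels, seconds):
    """Size in bytes of uncompressed (PCM) audio."""
    return sample_rate_hz * (bit_depth // 8) * channels * seconds


# One second of compact disc audio: 44.1 kHz, 16-bit, stereo.
cd_second = pcm_bytes(44100, 16, 2, 1)            # 176,400 bytes

# One minute of stereo audio at a recording rate and at a delivery rate
# (the 24-bit and 16-bit depths are assumed for this example).
recorded_minute = pcm_bytes(96000, 24, 2, 60)     # 34,560,000 bytes
delivered_minute = pcm_bytes(48000, 16, 2, 60)    # 11,520,000 bytes
```

Multiplied across hundreds or thousands of files, figures like these are why lossy compression and lower delivery sample rates matter.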

Many games also use the Waveform Audio File Format (WAV or WAVE), which is common with more expansive projects. The WAV format is native to the PC, and a defining feature is that it stores sound in what are known as chunks. Most WAV files contain only two chunks: the format chunk and the data chunk. WAV files can hold compressed audio, as MP3s do; however, they can also contain uncompressed audio.
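The chunk structure can be seen by walking through a WAV file's bytes. The sketch below uses Python's standard `wave` module to build a tiny silent file in memory and then lists its chunks; the `list_chunks` helper is a hypothetical name written for this example, and it does no validation beyond checking the file header.

```python
import io
import struct
import wave


def list_chunks(wav_bytes):
    """Return (chunk_id, size_in_bytes) for each chunk in a WAV file."""
    riff, _total_size, form = struct.unpack("<4sI4s", wav_bytes[:12])
    if riff != b"RIFF" or form != b"WAVE":
        raise ValueError("not a RIFF/WAVE file")
    chunks = []
    offset = 12
    while offset + 8 <= len(wav_bytes):
        chunk_id, chunk_size = struct.unpack("<4sI", wav_bytes[offset:offset + 8])
        chunks.append((chunk_id.decode("ascii"), chunk_size))
        # Skip the 8-byte chunk header and the body; odd-sized chunks
        # are followed by one byte of padding.
        offset += 8 + chunk_size + (chunk_size % 2)
    return chunks


# Build 10 milliseconds of 16-bit mono silence at 48 kHz in memory.
buffer = io.BytesIO()
with wave.open(buffer, "wb") as wav:
    wav.setnchannels(1)
    wav.setsampwidth(2)
    wav.setframerate(48000)
    wav.writeframes(b"\x00\x00" * 480)

chunks = list_chunks(buffer.getvalue())
```

For this file the result is the format chunk (stored under the ID `fmt `) followed by the data chunk, matching the two-chunk layout described above.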

Two organizations that focus on audio are the Game Audio Network Guild (GANG, www.audiogang.org) and the Interactive Audio Special Interest Group (IASIG, www.iasig.org).

An excellent website to visit is www.designingsound.org, which provides background information on creating, recording, and manipulating sound. In addition, there are forums where visitors can ask questions of a special guest each month. Another good site to visit for learning resources is www.gamessound.com.

Sound Effects

Building elaborate scores and immersive ambient sounds is indeed a major component of designing audio for games. When your game is in development and then production, audio work is going on all the time. Along with music and ambient sound, special effects need to be created for explosions, weapons, unique engines (lots of games have fighter planes, spaceships, and cars), and game interfaces.

As navigation is fine-tuned, the designer will want to create unique sounds for button clicks, page turns, inventory opening and closing, and many other events.

Weapons

For the movie Star Wars, audio designer Ben Burtt created the unique lightsaber sound by recording idling interlock motors in an old movie projector combined with television interference.

Sounds for weapons in games can come from remote recording sessions (traveling to a location where weapons such as guns can be fired, and recording them) or from manipulating existing audio files to get the desired effect. For alien-type weapons, it often works well to record machinery with humming sounds. Many designers mix that highly technical/mechanical sound with organic sounds like animal roars, high winds, or crashing seas to obtain the perfect effect.

Interfaces

Interfaces for games can be truly expansive, with dozens of interactive elements, or just simple spaces to sign in. In most games, when a player clicks any part of the interface that has been designed to be interactive, they usually experience some type of animated effect along with a unique sound that has been designed to accompany that procedure.

Initially, a simple clicking sound was fairly standard. Clever designers were quick to learn that this was another area where signature audio could be created.

If your game is futuristic with a high-tech-looking interface, that simple click will make the hard work of developing the visuals seem almost pedestrian or even comical. The challenge to the audio designer is to create an audio effect that will complement the graphics.

As with the creation of scores and ambient sounds, the more the designer understands the game, the more effective the sound is. Providing the designer with not only what the interface looks like but also how it functions is critical for successful design. Usually, the designer gets a QuickTime movie showing the look and functionality of the interface; however, whenever possible, they get to play the game themselves to get a sense of the timing and importance of any interactive element.

Speech

Dialogue is an example of diegetic sound: sound that is meant to seem real and to originate inside the game world. Non-diegetic sound seems to come from outside that world, such as narrator commentary.

Dialogue for games, as for any other type of entertainment medium, is planned and scripted. Setting up recording sessions is time consuming and expensive. The better prepared you are for recording, the less likely you are to have to re-record. In most cases, some sections of games need to be re-recorded because of changes in the gameplay or story; but because of the cost and time involved, you want to minimize the need to re-record.

Dialogue

Often, designers record themselves or others on the team so they have temp tracks to work with. Temp tracks are an important part of game creation, because they give the designer the opportunity to build a section of the game and test the gameplay where that audio is needed before investing time and money in recording final dialogue.

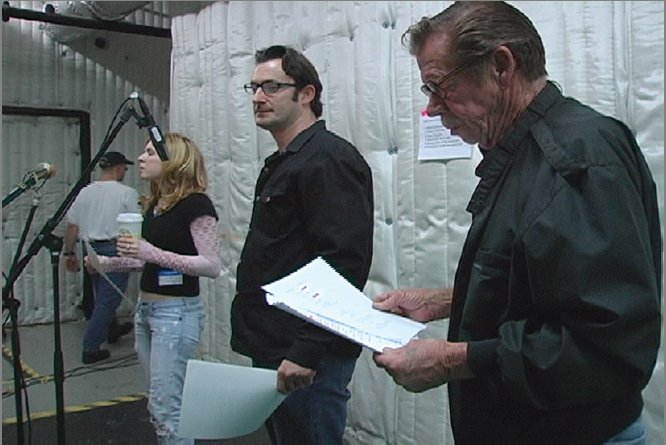

Final dialogue is usually recorded in a controlled environment to avoid picking up background audio that will diminish the performance and quality of the recording. Usually, actors are hired to create the final vocal performances, as seen in Figure 8.7.

FIGURE 8.7 An ensemble cast of actors in a room set up to record their performances. The walls are draped with soundproofing material to help block out external audio that will spoil the performance.

As I noted previously, games have a history of terrible dialogue. To create a more realistic experience, many games now use writers to create dialogue. The goal is natural language and delivery.

Cinematics

For audio work on cinematics and some cutscenes, the approach is more linear. These elements are designed and produced much as they would be for film or television. The experience for the gamer is to see a finished, polished, short movie that is scripted from start to end. All dialogue, music, and other sounds are planned to unfold in a controlled way.

Sound-Based Computer Games

One area that has also been growing, in terms of audio production for games, is sound-based games. These are made for the visually impaired; however, anyone can play and enjoy them.

When approaching the design for a game of this type, the designer should be aware that all information communicated to the player happens aurally with an auditory interface. The majority of games we have looked at in this book have dealt with the creation of visual graphical interfaces. An auditory interface uses recorded dialogue to provide information to the player about how to play the game.

It can become boring to continually listen to a recorded voice saying “turn right, turn left, jump, pick up,” and so on. These types of games still need that all-important gameplay to function well; so once the initial instructions are provided to the player, different audio cues along with music and recorded dialogue can be used to clue the player when to turn, jump, pick up, and so on.

To further enhance the gameplay, these audio cues can change during gameplay: for example, if a puzzle is getting more difficult, then those cues can play more quickly or increase in pitch to up the excitement.
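A cue that speeds up and rises in pitch as difficulty increases can be sketched as a mapping from a difficulty value to two playback settings. The function name, the 0.0–1.0 difficulty scale, and the default ranges below are assumptions for illustration; the only fixed fact is that raising a sound by 12 semitones (one octave) doubles its frequency.

```python
def cue_settings(difficulty, base_interval_s=2.0, min_interval_s=0.5, max_semitones=12):
    """Return (seconds_between_cues, pitch_ratio) for a difficulty of 0.0 to 1.0."""
    difficulty = max(0.0, min(1.0, difficulty))
    # Cues repeat faster as difficulty rises.
    interval = base_interval_s - difficulty * (base_interval_s - min_interval_s)
    # Each semitone multiplies the frequency by 2 ** (1 / 12).
    pitch_ratio = 2 ** (difficulty * max_semitones / 12)
    return interval, pitch_ratio


easy = cue_settings(0.0)     # slow cues at the original pitch
hardest = cue_settings(1.0)  # fast cues, one octave higher
```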

These games tend to have large, simple, brightly colored graphics for players who have some visual capabilities. The gameplay is really quite fun. You can find examples at www.audiogames.net. These games encompass a wide variety of gameplay styles, including word, strategy, puzzle, card, educational, racing, role-playing games, adventure, trivia, and arcade.

Sound has become an integral part of game design, enhancing gameplay without overpowering the experience. The techniques for developing sound for games have paralleled those of films by using multiple techniques and taking advantage of full orchestral sound to record expansive and sweeping scores.

The remarkable difference between the two media is that many games, unlike film, aren't linear, so designers need to work in unique ways to keep the experience fresh and exciting without producing endless mind-numbing loops.

The future for audio development continues to grow as technology embraces more and more of what artificial intelligence (AI) will bring to the table. Improvements with AI can mean the audio will be even more reactive to what is happening during gameplay. For example, if a character picks up a heavy object, then the sound from their footsteps will alter. The field of sound for games will improve and grow as the worlds for the games become larger and technology allows for greater diversity in how sound can be delivered during gameplay.

ADDITIONAL EXERCISES

- Sit in your own home, or travel to a mall or restaurant, and close your eyes. Try to identify as many different sounds as you can. Doing this exercise should show you how many sounds are around you on a daily basis that you usually take for granted.

- Prepare a list of ambient sounds that could be included in your own game design. Select certain sounds that would be heard more than others to create variations.

- Adapt the list of sounds you created in exercise 2, based on time of day. For example, what sounds are different from day to night: more crickets or owls, or stronger winds? What changes would you need to account for to match weather changes? For example, if there is rain in your game, what materials would that rain strike in your environment: tin roofs, broad leaves on trees, or a windshield? How would rain sound different in those various environments?

REVIEW QUESTIONS

- Audio segments created for games are referred to as______.

- Stems

- Chunks

- Layers

- Bytes

- True or false. A remote recording session is held in a controlled environment.

- How does layering work?

- Sounds are digitally synthesized.

- Mechanical sounds and organic sounds are blended together.

- Multiple microphones are used in a foley session to capture more sound.

- Audio segments are added one over another to build complexity.

- True or false. Location music plays when a player enters a specific region in a game.

- What does an audio marker do?

- Provides players with more points whenever a specific piece of music turns on

- Programs the computer to turn on or turn off specific audio files

- Uses a visible kiosk or similar structure in the game to allow the player to generate music

- Uses a unique programming trick where the screen changes color depending on what sounds are playing