CHAPTER 4

COMPUTING MULTIPLE QUERIES

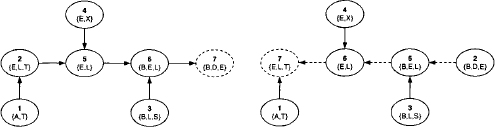

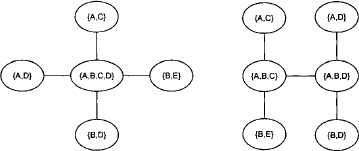

The foregoing chapter introduced three local computation algorithms for the solution of single-query inference problems, among which the generalized collect algorithm was shown to be the most universal and efficient scheme. We therefore take this algorithm as the starting point for our study of the second class of local computation procedures that solve multi-query inference problems. Clearly, the most simple approach to compute multiple queries is to execute the collect algorithm repeatedly for each query. Since we already gave a general definition of a covering join tree that takes an arbitrary number of queries into account, it is not necessary to take a new join tree for the answering of a second query on the same knowledgebase. Instead, we redirect and renumber the join tree in such a way that its root node covers the current query and execute the collect algorithm. This procedure is repeated for each query. In Figure 3.15, arrows indicate the direction of the message-passing for a particular root node and therefore reflect the restrictions imposed on the node numbering. It is easy to see that changing the root node only affects the arrows between the old and the new root node. This is shown in Figure 4.1 where we also observe that a valid node numbering for the redirected join tree is obtained by inverting the numbering on the path between the old and the new root node. Hence, it is possible to solve a multi-query inference problem by repeated execution of the collect algorithm on the same join tree with only a small preparation between two consecutive runs. Neither the join tree nor its factorization have to be modified.

Figure 4.1 The left-hand join tree is rooted towards the node with label {B, D, E}. If then node {E, L, T} is elected as new root node, only the dashed arrows between the old and the new root are affected. Their direction is inverted in the right-hand figure and the new node numbering is obtained by inverting the numbering between the old and the new root.

During a single run of the collect algorithm on a covering join tree (V, E, λ, D), exactly |E| messages are computed and transmitted. Consequently, if a multi-query inference problem with n ![]() queries is solved by repeated application of collect on the same join tree, n|E| messages are computed and exchanged. It will be illustrated in a short while that most of these messages are identical and their computation can therefore be avoided by an appropriate caching policy. The arising algorithm is called Shenoy-Shafer architecture and will be presented in Section 4.1. It reduces the number of computed messages to 2|E| for an arbitrary number of queries. However, the drawback of this approach is that all messages have to be stored until the end of the total message-passing. This was the main reason for the development of more sophisticated algorithms that aim at a shorter lifetime of messages. These algorithms are called Lauritzen-Spiegelhalter architecture, HUGIN architecture and idempotent architecture and will be introduced in Section 4.3 to Section 4.5. But these alternative architectures require more structure in the underlying valuation algebra and are therefore less generally applicable than the Shenoy-Shafer architecture. More concretely, they presuppose some concept of division that will be defined in Section 4.2. Essentially, there are three requirements for introducing a division operator, namely either separativity, regularity or idempotency. These considerations require more profound algebraic insights that are not necessary for the understanding of division-based local computation algorithms. We therefore delay their discussion to the appendix of this chapter. A particular application of division in the context of valuation algebras is scaling or normalization. If, for example, we want to interpret arithmetic potentials from Instance 1.3 as discrete probability distributions, or set potentials from Instance 1.4 as Dempster-Shafer belief functions, normalization becomes an important issue. Based on the division operator, scaling or normalization can be introduced on a generic, algebraic level as explored in Section 4.7. Moreover, it is then also required that the solution of inference problems gives scaled results. We therefore focus in Section 4.8 on how the local computation architectures of this chapter can be adapted to deliver scaled results directly.

queries is solved by repeated application of collect on the same join tree, n|E| messages are computed and exchanged. It will be illustrated in a short while that most of these messages are identical and their computation can therefore be avoided by an appropriate caching policy. The arising algorithm is called Shenoy-Shafer architecture and will be presented in Section 4.1. It reduces the number of computed messages to 2|E| for an arbitrary number of queries. However, the drawback of this approach is that all messages have to be stored until the end of the total message-passing. This was the main reason for the development of more sophisticated algorithms that aim at a shorter lifetime of messages. These algorithms are called Lauritzen-Spiegelhalter architecture, HUGIN architecture and idempotent architecture and will be introduced in Section 4.3 to Section 4.5. But these alternative architectures require more structure in the underlying valuation algebra and are therefore less generally applicable than the Shenoy-Shafer architecture. More concretely, they presuppose some concept of division that will be defined in Section 4.2. Essentially, there are three requirements for introducing a division operator, namely either separativity, regularity or idempotency. These considerations require more profound algebraic insights that are not necessary for the understanding of division-based local computation algorithms. We therefore delay their discussion to the appendix of this chapter. A particular application of division in the context of valuation algebras is scaling or normalization. If, for example, we want to interpret arithmetic potentials from Instance 1.3 as discrete probability distributions, or set potentials from Instance 1.4 as Dempster-Shafer belief functions, normalization becomes an important issue. Based on the division operator, scaling or normalization can be introduced on a generic, algebraic level as explored in Section 4.7. Moreover, it is then also required that the solution of inference problems gives scaled results. We therefore focus in Section 4.8 on how the local computation architectures of this chapter can be adapted to deliver scaled results directly.

4.1 THE SHENOY-SHAFER ARCHITECTURE

The initial situation for the Shenoy-Shafer architecture and all other local computation schemes of this chapter is a covering join tree for the multi-query inference problem according to Definition 3.8. Join tree nodes are initialized using the identity element, and the knowledgebase factors are combined to covering nodes as specified by equation (3.39). Further, we shall see that local computation algorithms for the solution of multi-query inference problems exchange messages in both directions of the edges. It is therefore no longer necessary to direct join trees. Let us now reconsider the above idea of executing the collect algorithm repeatedly for each query on the same join tree. Since only the path from the old to the new root node changes between two consecutive runs, only the messages of these region are affected. All other messages do not change but are nevertheless recomputed.

Example 4.1 Assume that the collect algorithm has been executed on the left-hand join tree of Figure 4.1. Then, the root node is changed and collect is restarted for the right-hand join tree. It is easy to see that the messages ![]() and

and ![]() already existed in the first run of the collect algorithm. Only the node numbering changed but not their content.

already existed in the first run of the collect algorithm. Only the node numbering changed but not their content.

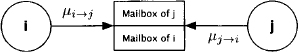

A technique to benefit from already computed messages is to store them for later reuse. The Shenoy-Shafer architecture (Shenoy & Shafer, 1990) organises this caching by installing mailboxes between neighboring nodes which store the exchanged messages. In fact, this is similar to the technique applied in Section 3.10.2 to improve the space complexity of the collect algorithm. This time however, we assume two mailboxes between every pair of neighboring nodes since messages are sent in both directions. Figure 4.2 schematically illustrates this concept.

Figure 4.2 The Shenoy-Shafer architecture assumes mailboxes between neighboring nodes to store the exchanged messages for later reuse.

Then, the Shenoy-Shafer algorithm can be described by the following rules:

| R1: | Node i sends a message to its neighbor j as soon as it has received all messages from its other neighbors. Leaves can send their messages right away. |

| R2: | When node i is ready to send a message to neighbor j, it combines its initial node content with all messages from all other neighbors. The message is computed by projecting this result to the intersection of the result’s domain and the receiving neighbor’s node label. |

The algorithm stops when every node has received all messages from its neighbors. In order to specify this algorithm formally, a new notation is introduced that determines the domain of the valuation described in Rule 2. If i and j are neighbors, the domain of node i at the time where it sends a message to j is given by

(4.1) ![]()

where d(![]() i) = ωi is the domain of the node content of node i.

i) = ωi is the domain of the node content of node i.

- The message sent from node i to node j is

Theorem 4.1 At the end of the message-passing in the Shenoy-Shafer architecture, node i can compute

(4.3) ![]()

Proof: The important point is that the messages ![]() do not depend on the actual schedule used to compute them. Due to this fact, any node

do not depend on the actual schedule used to compute them. Due to this fact, any node ![]() can be selected as root node. Then, the edges are directed towards this root and the nodes are renumbered. The message-passing towards the root corresponds to the collect algorithm and the proposition for node i follows from Theorem 3.7.

can be selected as root node. Then, the edges are directed towards this root and the nodes are renumbered. The message-passing towards the root corresponds to the collect algorithm and the proposition for node i follows from Theorem 3.7.

Answering the queries of the inference problem from this last result demands one additional projection per query. Since each query xi is covered by some node ![]() , we obtain the answer for this query by computing:

, we obtain the answer for this query by computing:

(4.4) ![]()

Example 4.2 We execute the Shenoy-Shafer architecture on the join tree of Figure 4.3. There are 6 edges, thus 12 messages to be sent and stored in mailboxes. These messages are:

Figure 4.3 Since messages are sent in all directions in the Shenoy-Shafer architecture, join trees do not need to be rooted anymore.

At then end of the message-passing, the nodes compute:

Since the Shenoy-Shafer architecture accepts arbitrary valuation algebras, we may in particular apply it to formalisms based on variable elimination. This specialization is sometimes called cluster-tree elimination. In this context, a cluster refers to a join tree node together with the knowledgebase factors that are assigned to it. Further, we could use the join tree from the graphical representation of the fusion algorithm if we are, for example, only interested in queries that consist of single variables. Under this even more restrictive setting, the Shenoy-Shafer architecture is also called bucket-tree elimination (Kask et al., 2001). Answering more general queries with the bucket-tree elimination algorithm is again possible if neutral elements are present. However, we continue to focus our studies on the more general and efficient version of the Shenoy-Shafer architecture introduced above.

4.1.1 Collect & Distribute Phase

For a previously fixed node numbering, Theorem 4.1 implies that

This shows that the collect algorithm is extended by a single message coming from the child node in order to obtain the Shenoy-Shafer architecture. In fact, it is always possible to schedule a first part of the messages in such a way that their sequence corresponds to the execution of the collect algorithm. The root node ![]() will then be the first node which has received the messages of all its neighbors. It suggests itself to name this first phase of the Shenoy-Shafer architecture collect phase or inward phase since the messages are propagated from the leaves towards the root node. It furthermore holds that

will then be the first node which has received the messages of all its neighbors. It suggests itself to name this first phase of the Shenoy-Shafer architecture collect phase or inward phase since the messages are propagated from the leaves towards the root node. It furthermore holds that

![]()

for this particular scheduling. This finally delivers the proof of correctness for the particular implementation proposed in Section 3.10.2 to improve the space complexity of the collect algorithm. In fact, the collect phase of the Shenoy-Shafer architecture behaves exactly in this way. Messages are no more combined to the node content but stored in mailboxes, and the combination is only computed to obtain the message for the child node. Then, the result is again discarded, which guarantees the lower space complexity of equation (3.49). The messages that remain to be sent after the collect phase of the Shenoy-Shafer architecture constitute the distribute phase. Clearly, the root node will be the only node that may initiate this phase since it possesses all necessary messages after the collect phase. Next, the parents of the root node will be able to send their messages and this propagation continues until the leaves are reached. In fact, nodes can send their messages in the inverse order of their numbering. Therefore, the distribute phase is also called outward phase.

Lemma 4.1 It holds that

Proof: We have

The second equality follows from equation (4.5).

The next lemma states that the eliminator ![]() (see Definition 3.12) of each node

(see Definition 3.12) of each node ![]() will be filled after the collect phase:

will be filled after the collect phase:

Lemma 4.2 It holds that

(4.7) ![]()

Proof: Assume X contained in the right-hand part of the equation. Thus, ![]() but X

but X ![]() . From equation (4.6) can be deduced that

. From equation (4.6) can be deduced that ![]() . Since

. Since

![]()

![]() . This proves that

. This proves that

![]()

Assume that X is contained in the left-hand part. So, X ![]() but

but ![]() which in turn implies that

which in turn implies that ![]() and consequently

and consequently ![]() . Thus,

. Thus, ![]() and equality must hold.

and equality must hold.

The particular scheduling into a collect and distribute phase allows us to describe the intrinsically distributed Shenoy-Shafer architecture by a sequential algorithm. First, Algorithm 4.1 performs the complete message-passing. It does not return any value but ensures that all ![]() and

and ![]() are stored in the corresponding mailboxes. To answer a query

are stored in the corresponding mailboxes. To answer a query ![]() we then call Algorithm 4.2 which presupposes that the query is covered by some node in the join tree. The message-passing procedure can therefore be seen as a preparation step to the actual query answering.

we then call Algorithm 4.2 which presupposes that the query is covered by some node in the join tree. The message-passing procedure can therefore be seen as a preparation step to the actual query answering.

Algorithm 4.1 Shenoy-Shafer Architecture: Message-Passing

Algorithm 4.2 Shenoy-Shafer Architecture: Query Answering

The implementation of Algorithm 4.2 corresponds to Theorem 4.1, but can easily be changed using equation (4.5). The combination of the node content with all parent messages has already been computed for the creation of the child message in the collect phase. If this partial result is still available, it is sufficient to combine it with the message obtained from the child node. Doing so, Algorithm 4.2 executes at most one combination. Clearly, under this modification, the collect phase becomes again equal to the original description of the collect algorithm in Section 3.10 since we again store the combination of the parent messages with the original node content for each node. This saves a lot of redundant computation time in the query-answering procedure since the combination of the original node factor with all arrived messages is already available, for the prize of an additional factor per node whose space is again bounded by the node label. Hence, we generally refer to such a situation as a space-far-time trade. As memory seems to be the more sensitive resource in many practical applications, it always depends on the context if a space-for-time trade is also a good deal. In the following, we discuss some further optimization issues.

4.1.2 The Binary Shenoy-Shafer Architecture

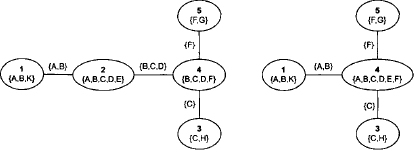

In a binary join tree, each node has at most three neighbors or, if we reconsider directed join trees, at most two parents. (Shenoy, 1997) remarked that binary join trees generally allow better performance for the Shenoy-Shafer architecture. The reason is that nodes with more than three neighbors compute a lot of redundant combinations. Figure 4.4 shows a non-binary join tree that covers the same knowledgebase factors and queries as the join tree of Figure 4.3. Further, this join tree has one node less such that only 10 messages instead of 12 are sent during the Shenoy-Shafer architecture. We list the messages sent by node 5:

Figure 4.4 Using non-binary join trees creates redundant combinations.

It is easy to see that some combinations of messages must be computed more than once. For comparison, let us also list the messages sent by node 5 in Figure 4.3 that only has three neighbors. Here, no combination is computed more than once:

A second reason for dismissing non-binary join trees is that some computations may take place on larger domains than actually necessary. Comparing the two join trees in Figure 4.5, we observe that the treewidth of the binary join tree is smaller which naturally leads to a better time complexity. On the other hand, the binary join tree contains more nodes that again increases the space requirement. But this increase is only linear since the node separators will not grow. It is therefore generally a good idea to use binary join trees for the Shenoy-Shafer architecture. Finally, we state that any join tree can be transformed into a binary join tree by adding a sufficient number of new nodes. A corresponding join tree binarization algorithm is given in (Lehmann, 2001). Further ways to improve the performance of local computation architectures by aiming to cut down on messages during the propagation are proposed by (Schmidt & Shenoy, 1998) and (Haenni, 2004).

Figure 4.5 The treewidth of non-binary join trees is sometimes larger than necessary.

4.1.3 Performance Gains due to the Identity Element

It was foreshadowed multiple times in the context of the generalized collect algorithm that initializing join tree nodes with the identity element instead of neutral elements also increases the efficiency of local computation. In fact, the improvement is absolute and concerns both time and space resources. This effect also occurs when dealing with multiple queries. To solve a multi-query inference problem, we first ensure that each query is covered by some node in the join tree. In most cases, however, queries are very different from the knowledgebase factor domains. Therefore, their covering nodes often still contain the identity element after the knowledgebase factor distribution. In such cases, the performance gain caused by the identity element becomes important. Let us reconsider the knowledgebase of the medical example from Instance 2.1 and assume the query set {{A, B, S, T}, {D, S, X}}. We solve this multi-query inference problem using the Shenoy-Shafer architecture and first build a join tree that covers the eight knowledgebase factors and the two queries. Further, we want to ensure that all computations take place during the run of the Shenoy-Shafer architecture, which requires that each join tree node holds at most one knowledgebase factor. Otherwise, a non-trivial combination would be executed prior to the Shenoy-Shafer architecture as a result of equation (3.39). A binary join tree that fulfills all these requirements is shown in Figure 4.6. Each colored node holds one of the eight knowledgebase factors, and the other (white) nodes store the identity element. We observe that the domain of each factor directly corresponds to the corresponding node label in this particular example.

Figure 4.6 A binary join tree for the medical inference problem with queries {A, B, S, T} and {D, S, X}. The colored nodes indicate the residence of knowledgebase factors.

We now execute the collect phase of the Shenoy-Shafer architecture and send messages towards node 14. In doing so, we are not interested in the actual value of the messages but only in the domain size of the total combination in equation (4.2) before the projection is computed. Since domains are bounded by the node labels, we color each node where the total label has been reached. The result of this process is shown in Figure 4.7. Interestingly, only two further nodes compute on their maximum domain size. All others process valuations with smaller domains. For example, the message sent by node 11 consists of its node content (i.e. the identity element), the message from node 1 (i.e. a valuation with domain {A}) and the message from node 2 (i.e. a valuation of domain {A, T}). Combining these three factors leads to a valuation of domain {A, T} ![]() {A, B, S, T}. Therefore, this node remains uncolored in Figure 4.7. If alternatively neutral elements were used for initialization, each node content would be blown up to the label size, or, in other words, all nodes would be colored.

{A, B, S, T}. Therefore, this node remains uncolored in Figure 4.7. If alternatively neutral elements were used for initialization, each node content would be blown up to the label size, or, in other words, all nodes would be colored.

Figure 4.7 The behavior of node domains during the collect phase. Only the colored nodes deal with valuations of maximum domain size.

4.1.4 Complexity of the Shenoy-Shafer Architecture

The particular message scheduling that separates the Shenoy-Shafer architecture into a collect and a distribute phase suggests that executing the message-passing in the Shenoy-Shafer architecture doubles the effort of the (improved) collect algorithm. We may therefore conclude that the Shenoy-Shafer architecture adopts a similar time and space complexity as the collect algorithm in Section 3.10.2. There are 2|E| = 2(|V|| - 1) messages exchanged in the Shenoy-Shafer architecture. Each message requires one projection and consists of the combination of the original node content with all messages obtained from all other neighbors. This sums up to |ne(i)| - 1 combinations for each message. Clearly, the number of neighbors is always bounded by the degree of the join tree, i.e. we have |ne(i)| - 1 ≤ deg - 1. In total, the number of operations executed in the message-passing of the Shenoy-Shafer architecture is therefore bounded by

![]()

Thus, we obtain the following time complexity bound for the message-passing:

It is clear that we additionally require |ne(i)| - 1 ≤ deg combinations and one projection to obtain the answer for a given query after the message-passing. But since every query must be covered by some node in the join tree, it is reasonable to bound the number of queries by the number of join tree nodes. The additional effort for query answering does therefore not change the above time complexity. Note also that the factor deg is a constant when dealing with binary join trees and disappears from equation (4.8), possibly at the expense of a higher number of nodes.

Regarding space complexity, we recall that the number of messages sent in the Shenoy-Shafer architecture equals two times the number of messages in the improved collect algorithm. Therefore, both algorithms have the same space complexity

(4.9) ![]()

4.1.5 Discussion of the Shenoy-Shafer Architecture

Regarding time complexity, the improvement brought by the Shenoy-Shafer architecture compared to the repeated application of the collect algorithm as proposed in the introduction of this chapter is obvious. If we compute projections to all node labels in the join tree, we need |V| executions of the collect algorithm, which result in an overall complexity of

![]()

Since the degree of a join tree is generally much smaller than its number of nodes, the Shenoy-Shafer architecture provides a considerable speedup. Both approaches further share the same asymptotic space complexity.

The Shenoy-Shafer architecture is the most general local computation scheme for the solution of multi-query inference problems since it can be applied to arbitrary valuation algebras without restrictions of any kind. Further, it provides the best possible asymptotic space complexity one can expect for a local computation procedure. Concerning the time complexity, further architectures for the solution of multi-query inference problems have been proposed that eliminate the degree from equation (4.8), even if the join tree is not binary. However, it was shown in (Lepar & Shenoy, 1998) that the runtime gain of these architectures compared to the binary Shenoy-Shafer architecture is often insignificant. Moreover, these alternative architectures generally have a worse space complexity, which recommends the application of the Shenoy-Shafer architecture whenever memory is the more problematic resource. On the other hand, the computation of messages becomes much easier in these architectures, and they also provide more efficient methods for query answering.

4.1.6 The Super-Cluster Architecture

A possible improvement that applies to the Shenoy-Shafer architecture consists in a time-for-space trade called super-cluster scheme or, if applied to variable systems, super-bucket scheme (Dechter, 2006; Kask et al., 2001). If we recall that the space complexity of the Shenoy-Shafer architecture is determined by the largest separator in the join tree, we may simply merge those neighboring nodes that have a large separator in-between. Doing so, the node labels grow but large separators disappear as shown in Example 4.3. Clearly, it is again subject to the concrete complexity predictors f and g if this time-for-space trade is also a good deal.

Example 4.3 The join tree shown on the left-hand side of Figure 4.8 has treewidth 5 and separator width 3. The separators are shown as edge labels. The largest separator {B,C, D} is between node 2 and 4. Merging the two nodes leads to the join tree on the right-hand side of this of Figure 4.8. Now, the treewidth became 6 but the separator width is only 2.

Figure 4.8 Merging neighboring nodes with a large separator in-between leads to better space complexity at the expense of time complexity.

The subsequent studies of alternative local computation schemes are essentially based on the assumption that time is the more sensitive resource. These additional architectures then simplify the message-passing by exploiting a division operator in the underlying valuation algebra which therefore requires more algebraic structure. The background for the existence of a division operation will next be examined.

4.2 VALUATION ALGEBRAS WITH INVERSE ELEMENTS

A particular important class of valuation algebras are those that contain an inverse element for every valuation ![]()

![]() . Hence, these valuation algebras posses the notion of division, which will later be exploited for a more efficient message caching policy during local computation. Let us first give a formal definition of inverse elements in a valuation algebra (Φ, D), based on the corresponding notion in semigroup theory.

. Hence, these valuation algebras posses the notion of division, which will later be exploited for a more efficient message caching policy during local computation. Let us first give a formal definition of inverse elements in a valuation algebra (Φ, D), based on the corresponding notion in semigroup theory.

Definition 4.1 Valuations ![]() are called inverses, if

are called inverses, if

We directly conclude from this definition that inverses necessarily have the same domain since the first condition implies that ![]() and from the second we obtain

and from the second we obtain ![]() . Therefore,

. Therefore, ![]() must hold. It is furthermore suggested that inverse elements are not necessarily unique.

must hold. It is furthermore suggested that inverse elements are not necessarily unique.

In Appendix D.1 of this chapter, we are going to study under which conditions valuation algebras provide inverse elements. In fact, the pure existence of inverse elements according to the above definition is not yet sufficient for the introduction of division-based local computation architectures since it only imposes a condition of combination but not on projection. For this purpose, (Kohlas, 2003) distinguishes three stronger algebraic properties that all imply the existence of inverses. Separativity is the weakest requirement among them. It allows the valuation algebra to be embedded into a union of groups that naturally include inverse elements. However, the price we pay is that full projection gets lost in the embedding algebra (see Appendix A.3 of Chapter 1 for valuation algebras with partial projection). Alternatively, regularity is a stronger condition than separativity and allows the valuation algebra to be decomposed into groups directly such that full projection is conserved. Finally, if the third and strongest requirement called idempotency holds, every valuation turns out to be the inverse of itself. The algebraic study of division demands notions from semigroup theory, although the understanding of these semigroup constructions is not absolutely necessary, neither for computational purposes nor to retrace concrete examples of valuation algebras with inverses. We continue with a first example of a (regular) valuation algebra that provides inverse elements. Many further instances with division can be found in the appendix of this chapter.

![]() 4.1 Inverse Arithmetic Potentials

4.1 Inverse Arithmetic Potentials

The valuation algebra of arithmetic potentials provides inverse elements. For ![]() with d(p) = s and x

with d(p) = s and x ![]() we define

we define

It is easy to see that p and p−1 satisfy equation (4.10) and therefore are inverses. In fact, we learn in the appendix of this chapter that arithmetic potentials form a regular valuation algebra.

Idempotency is a very strong and important algebraic condition under which each valuation becomes the inverse of itself. Thus, division becomes trivial in the case of an idempotent valuation algebra. Idempotency can be seen as a particular form of regularity, but it also has an interest in itself. There is a special local computation architecture discussed in Section 4.5 that applies only to idempotent valuation algebras but not to formalisms with a weaker notion of division. Finally, idempotent valuation algebras have many interesting properties that become important in later sections.

4.2.1 Idempotent Valuation Algebras

The property of idempotency is defined as follows:

Definition 4.2 A valuation algebra (Φ, D) is called idempotent if for all ![]() and t

and t ![]() , it holds that

, it holds that

(4.11) ![]()

In particular, it follows from this definition that ![]() Thus, choosing Ω =

Thus, choosing Ω = ![]() satisfies the requirement for inverses in equation (4.10) which means that each element becomes the inverse of itself. Comparing the two definitions 4.1 and 4.2, we remark that the latter also includes a statement about projection, which makes it considerably stronger. A similar condition will occur in the definition of separativity and regularity given in the appendix of this chapter that finally enables division-based local computation.

satisfies the requirement for inverses in equation (4.10) which means that each element becomes the inverse of itself. Comparing the two definitions 4.1 and 4.2, we remark that the latter also includes a statement about projection, which makes it considerably stronger. A similar condition will occur in the definition of separativity and regularity given in the appendix of this chapter that finally enables division-based local computation.

Lemma 4.3 The neutrality axiom (A7) always holds in a stable and idempotent valuation algebra.

Proof: From stability and idempotency we derive

![]()

Then, by the labeling axiom and the definition of neutral elements,

![]()

which proves the neutrality axiom.

![]() 4.2 Indicator Functions and Idempotency

4.2 Indicator Functions and Idempotency

The valuation algebra of indicator functions is idempotent. Indeed, for i ![]() with

with ![]() and

and ![]() we have

we have

![]()

This holds because ![]()

![]() 4.3 Relations and Idempotency

4.3 Relations and Idempotency

Is is not surprising that also the valuation algebra of relations is idempotent. For ![]() with d(R) = s and

with d(R) = s and ![]() we have

we have

![]()

Besides their computational importance addressed in this book, idempotent valuation algebras are fundamental in algebraic information theory. An idempotent valuation algebra with neutral and null elements is called information algebra (Kohlas, 2003) and allows the introduction of a partial order between valuations to express that some knowledge pieces are more informative than others. This establishes the basis of an algebraic theory of information that is treated exhaustively in (Kohlas, 2003). See also Exercise D.4 at the end of this chapter.

Definition 4.3 An idempotent valuation algebra with neutral and null elements is called information algebra.

We now focus on local computation schemes which exploit division to improve the message scheduling and time complexity of the Shenoy-Shafer architecture.

4.3 THE LAURITZEN-SPIEGELHALTER ARCHITECTURE

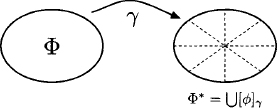

The Shenoy-Shafer architecture answers multi-query inference problems on any valuation algebra and therefore represents the most general local computation scheme. Alternatively, research in the field of Bayesian networks has led to another architecture called the Lauritzen-Spiegelhalter architecture (Lauritzen & Spiegelhalter, 1988), which can be applied if the valuation algebra provides a division operator. Thus, the starting point of this section is a multi-query inference problem with factors taken from a separative valuation algebra. As explained above, separativity is the weakest algebraic condition that allows the introduction of a division operator in the valuation algebra. Essentially, a separative valuation algebra ![]() can be embedded into a union of groups

can be embedded into a union of groups ![]() Φ*, D

Φ*, D![]() where each element of Φ* has a well-defined inverse. On the other hand, it is shown in Appendix D.1.1 that projection is only partially defined in this embedding. We therefore need to impose a further condition on the inference problem to avoid non-defined projections during the local computation process. The knowledgebase factors {

where each element of Φ* has a well-defined inverse. On the other hand, it is shown in Appendix D.1.1 that projection is only partially defined in this embedding. We therefore need to impose a further condition on the inference problem to avoid non-defined projections during the local computation process. The knowledgebase factors {![]() 1, …,

1, …, ![]() n} may be elements of Φ* but the objective function

n} may be elements of Φ* but the objective function ![]() must be element of Φ which guarantees that

must be element of Φ which guarantees that ![]() can be projected to any possible query. The same additional constraint is also assumed for the HUGIN architecture (Jensen et al., 1990) of Section 4.4.

can be projected to any possible query. The same additional constraint is also assumed for the HUGIN architecture (Jensen et al., 1990) of Section 4.4.

Remember, the inward phase of the Shenoy-Shafer architecture is almost equal to the collect algorithm with the only difference that incoming messages are not combined with the node content but kept in mailboxes. This is indispensable for the correctness of the Shenoy-Shafer architecture since otherwise, node i would get back its own message sent during the inward phase as part of the message obtained in the outward phase. In other words, some knowledge would be considered twice in every node, and this would falsify the final result. In case of a division operation, we can divide this doubly treated message out, and this idea is exploited by the Lauritzen-Spiegelhalter architecture and by the HUGIN architecture.

The Lauritzen-Spiegelhalter architecture starts executing the collect algorithm towards root node m. We define by Ψ′ the content of node i just before sending its message to the child node. According to Section 3.10 we have

(4.12) ![]()

Then, the message for the child node ch(i) is computed as

![]()

This is the point where division comes into play. As soon as node i has sent its message, it divides the message out of its own content:

This is repeated up to the root node. Thereupon, the outward propagation proceeds in a similar way. A node sends a message to all its parents when it has received a message from its child. In contrast, however, no division is performed during the outward phase. So, if node j is ready to send a message towards i and its current content is ![]() , the message is

, the message is

![]()

which is combined directly with the content of the receiving node i.

Theorem 4.2 At the end of the Lauritzen-Spiegelhalter architecture, node i contains ![]() provided that all messages during an execution of the Shenoy-Shafer architecture can be computed.

provided that all messages during an execution of the Shenoy-Shafer architecture can be computed.

The proof of this theorem is given in Appendix D.2.1. It is important to note that the correctness of the Lauritzen-Spiegelhalter architecture is conditioned on the existence of the messages in the Shenoy-Shafer architecture, since the computations take place in the separative embedding Φ* where projection is only partially defined. However, (Schneuwly, 2007) weakens this requirement by proving that the existence of the inward messages is in fact sufficient to make the Shenoy-Shafer architecture and therefore also the Lauritzen-Spiegelhalter architecture work. Furthermore, in case of a regular valuation algebra, all factors are elements of Φ and consequently all projections exist. Theorem 4.2 can therefore be simplified by dropping this assumption. A further interesting issue with respect to this theorem concerns the messages sent in the distribute phase. Namely, we may directly conclude that

We again summarize this local computation scheme in the shape of a pseudo-code algorithm. As in the Shenoy-Shafer architecture, we separate the message-passing procedure from the actual query answering process.

Algorithm 4.3 Lauritzen-Spiegelhalter Architecture: Message-Passing

Algorithm 4.4 Lauritzen-Spiegelhalter Architecture: Query Answering

We next illustrate the Lauritzen-Spiegelhalter architecture using the medical knowledgebase. In Instance 2.1 we expressed the conditional probability tables of the Bayesian network that underlies this example in the formalism of arithmetic potentials. It is known from Instance 4.1 that arithmetic potentials form a regular valuation algebra. We are therefore not only allowed to apply this architecture, but we do not even need to care about the existence of messages as explained before.

Example 4.4 Reconsider the covering join tree for the medical knowledgebase of Figure 3.15. During the collect phase, the Lauritzen-Spiegelhalter sends exactly the same messages as the generalized collect algorithm. These messages are given in the Examples 3.20 and 3.22. Whenever a node has sent a message, it divides the latter out of its node content. Thus, the join tree nodes hold the following factors at the end of the inward phase:

The root node does not perform a division since there is no message to divide out. As a consequence of the collect algorithm, it holds that

![]()

We then enter the distribute phase and send messages from the root down to the leaves. These messages are always combined to the node content of the receiver:

- Node 6 obtains the message

and updates its node content to

The second equality follows from the combination axiom. For the remaining nodes, we only give the messages that are obtained in the distribute phase. - Node 5 obtains the message

- Node 4 obtains the message

- Node 3 obtains the message

- Node 2 obtains the message

- Node 1 obtains the message

At the end of the message-passing, each node directly contains the projection of the objective function ![]() to its own node label. This example is well suited to once more highlight the idea of division. Consider node 4 of Figure 3.15: During the collect phase, it sends a message to node 5 that consists of its initial node content. Later, in the distribute phase, it receives a message that consists of the relevant information from all nodes in the join tree, including its own content sent during the collect phase. It is therefore necessary that this particular piece of information has been divided out since otherwise, it would be considered twice in the final result. Dealing with arithmetic potentials, the reader may easily convince himself that combining a potential with itself leads to a different result.

to its own node label. This example is well suited to once more highlight the idea of division. Consider node 4 of Figure 3.15: During the collect phase, it sends a message to node 5 that consists of its initial node content. Later, in the distribute phase, it receives a message that consists of the relevant information from all nodes in the join tree, including its own content sent during the collect phase. It is therefore necessary that this particular piece of information has been divided out since otherwise, it would be considered twice in the final result. Dealing with arithmetic potentials, the reader may easily convince himself that combining a potential with itself leads to a different result.

4.3.1 Complexity of the Lauritzen-Spiegelhalter Architecture

The collect phase of the Lauritzen-Spiegelhalter architecture is almost identical to the unimproved collect algorithm. In a nutshell, there are |V| - 1 transmitted messages and each message is combined to the content of the receiving node. Further, one projection is required to obtain a message that altogether gives 2(|V| - 1) operations. In addition to the collect algorithm, the collect phase of the Lauritzen-Spiegelhalter architecture computes one division in each node. We therefore obtain 3(|V| - 1) operations that all take place on the node labels. To compute a message in the distribute phase, we again need one projection and, since messages are again combined to the node content, we end with an overall number of 5(|V| - 1) operations for the message-passing in the Lauritzen-Spiegelhalter architecture. Finally, query answering is simple and just requires a single projection per query. Thus, the time complexity of the Lauritzen-Spiegelhalter architecture is:

(4.15) ![]()

In contrast to the Shenoy-Shafer architecture, messages do not need to be stored until the end of the complete message-passing, but can be discarded once they have been sent and divided out of the node content. But we again keep one factor in each node whose size is bounded by the node label. This gives us the following space complexity that is equal to the unimproved version of the collect algorithm:

(4.16) ![]()

The Lauritzen-Spiegelhalter architecture performs division during its collect phase. One can easily imagine that a similar effect can be achieved by delaying the execution of division to the distribute phase. This essentially is the idea of a further multi-query local computation scheme called HUGIN architecture. It is then possible to perform division on the separators between the join tree nodes that increases the performance of this operation. The drawback is that we again need to remember the collect messages until the end of the algorithm.

4.4 THE HUGIN ARCHITECTURE

The HUGIN architecture (Jensen et al., 1990) is a modification of Lauritzen-Spiegelhalter to make the divisions less costly. It postpones division to the distribute phase such that the collect phase again corresponds to the collect algorithm with the only difference that every message ![]() is stored in the separator between the neighboring nodes i and j. These separators have the label sep(i) = λ(i)

is stored in the separator between the neighboring nodes i and j. These separators have the label sep(i) = λ(i) ![]() λ(j) according to Definition 3.12. They can be seen as components between join tree nodes, comparable to the mailboxes in the Shenoy-Shafer architecture. A graphical illustration is shown in Figure 4.9. Subsequently, we denote by S the set of all separators. In the following distribute phase, the messages are computed as in the Lauritzen-Spiegelhalter architecture, but have to pass through the separator lying between the sending and receiving nodes. The separator becomes activated by the crossing message and holds it back in order to divide out its current content. Finally, the modified message is delivered to the destination, and the original message is stored in the separator.

λ(j) according to Definition 3.12. They can be seen as components between join tree nodes, comparable to the mailboxes in the Shenoy-Shafer architecture. A graphical illustration is shown in Figure 4.9. Subsequently, we denote by S the set of all separators. In the following distribute phase, the messages are computed as in the Lauritzen-Spiegelhalter architecture, but have to pass through the separator lying between the sending and receiving nodes. The separator becomes activated by the crossing message and holds it back in order to divide out its current content. Finally, the modified message is delivered to the destination, and the original message is stored in the separator.

Figure 4.9 Separator in the HUGIN architecture.

Formally, the message sent from node i to ch(i) during the collect phase is

This message is stored in the separator sep(i). In the distribute phase, node ch(i) then sends the message

towards i. This message arrives at the separator where it is altered to

(4.17) ![]()

The message ![]() is sent to node i and combined with the node content. This formula also shows the advantage of the HUGIN architecture over the Lauritzen-Spiegelhalter architecture. Divisions are performed in the separators exclusively and these have in general smaller labels than the join tree nodes. In equation (4.14) we have seen that the distribute messages in the Lauritzen-Spiegelhalter architecture correspond already to the projection of the objective function to the separator. This is also the case for the messages that are sent in the distribute phase of the HUGIN architecture and that are stored in the separators. However, the message emitted by the separator does not have this property anymore since division has been performed.

is sent to node i and combined with the node content. This formula also shows the advantage of the HUGIN architecture over the Lauritzen-Spiegelhalter architecture. Divisions are performed in the separators exclusively and these have in general smaller labels than the join tree nodes. In equation (4.14) we have seen that the distribute messages in the Lauritzen-Spiegelhalter architecture correspond already to the projection of the objective function to the separator. This is also the case for the messages that are sent in the distribute phase of the HUGIN architecture and that are stored in the separators. However, the message emitted by the separator does not have this property anymore since division has been performed.

Theorem 4.3 At the end of the HUGIN architecture, each node i ![]() V stores

V stores ![]() and every separator between i and j the projection

and every separator between i and j the projection ![]() provjded that all messages during an execution of the Shenoy-Shafer architecture can be computed.

provjded that all messages during an execution of the Shenoy-Shafer architecture can be computed.

The HUGIN algorithm imposes the identical restrictions on the valuation algebra as the Lauritzen-Spiegelhalter architecture and has furthermore been identified as an improvement of the latter that makes the division operation less costly. It is therefore not surprising that the above theorem underlies the same existential condition regarding the messages of the Shenoy-Shafer architecture as Theorem 4.2. The proof of this theorem can be found in Appendix D.2.2.

Algorithm 4.5 HUGIN Architecture: Message-Passing

We again summarize this local computation scheme by a pseudo-code algorithm. Here, the factor ![]() refers to the content of the separator between node i and ch(i). However, since both theorems 4.2 and 4.3 make equal statements about the result at the end of the message-passing, we can reuse Algorithm 4.4 for the query answering in the HUGIN architecture and just redefine the message-passing procedure:

refers to the content of the separator between node i and ch(i). However, since both theorems 4.2 and 4.3 make equal statements about the result at the end of the message-passing, we can reuse Algorithm 4.4 for the query answering in the HUGIN architecture and just redefine the message-passing procedure:

Example 4.5 We illustrate the HUGIN architecture in the same setting as Lauritzen-Spiegelhalter architecture in Example 4.4. However, since the collect phase of the HUGIN architecture is identical to the generalized collect algorithm, we directly take the messages from Examples 3.20 and 3.22. The node contents after the collect phase corresponds to Example 4.4 but without the inverses of the messages. Instead, all messages sent during the collect phase are assumed to be stored in the separators. In the distribute phase, messages are sent from the root node down to the leaves. When a message traverses a separator that stores the upward message from the collect phase, the latter is divided out of the traversing message and the result is forwarded to the receiving node. Let us consider these forwarded messages in detail:

- Node 6 obtains the message

Note that the division was performed while traversing the separator node. Then, node 6 updates its content to

The second equality follows from the combination axiom. For the remaining nodes, we only give the messages that are obtained in the distribute phase. - Node 5 obtains the message

- Node 4 obtains the message

- Node 3 obtains the message

- Node 2 obtains the message

- Node 1 obtains the message

These messages consist of the messages from Example 4.4 from which the collect message is divided out. We therefore see that the division only takes place on the separator size and not on the node label itself.

4.4.1 Complexity of the HUGIN Architecture

Division is more efficient in the HUGIN architecture since it takes place on the separators which are generally smaller than the node labels. On the other hand, we additionally have to keep the inward messages in the memory that is not necessary in the Lauritzen-Spiegelhalter architecture. Both changes do not affect the overall complexity such that HUGIN is subject to the same time and space complexity bounds as derived for the Lauritzen-Spiegelhalter architecture in Section 4.3.1. From this point of view, the HUGIN architecture is generally preferred over Lauritzen-Spiegelhalter. An analysis for the time-space trade-off between the HUGIN and Shenoy-Shafer architecture based on Bayesian networks has been drawn by (Allen & Darwiche, 2003), and we again refer to (Lepar & Shenoy, 1998) where all three multi-query architectures are juxtaposed. We are now going to discuss one last local computation scheme for the solution of multi-query inference problems that applies to idempotent valuation algebras. In some sense, and this already foreshadows its proof, this scheme is identical to the Lauritzen-Spiegelhalter architecture where division is simply ignored because it has no effect in an idempotent valuation algebra.

4.5 THE IDEMPOTENT ARCHITECTURE

Section 4.2.1 introduces idempotency as a strong algebraic property under which division becomes trivial. Thus, the Lauritzen-Spiegelhalter as well as the HUGIN architecture can both be applied to inference problems with factors from an idem-potent valuation algebra. Clearly, if division in these architectures does not provoke any effect, we can ignore its execution altogether. This leads to the most simple local computation scheme called idempotent architecture (Kohlas, 2003).

In the Lauritzen-Spiegelhalter architecture, the message sent from i to ch(i) during the collect phase is divided out of the node content. Since idempotent valuations are their own inverses, this reduces to a simple combination. Hence, instead of dividing out the emitted message, it is simply combined to the content of the sending node. By idempotency, this combination has no effect. Thus, consider the collect message

This message is divided out of the node of i:

The last equality follows from idempotency. Consequently, the idempotent architecture consists in the execution of the collect algorithm towards a previously fixed root node m followed by a simple distribute phase where incoming messages are combined to the node content without any further action. In particular, no division needs to be performed. This derives the idempotent architecture from Lauritzen-Spiegelhalter, but it also follows in a similar way from the HUGIN architecture (Pouly, 2008). To sum it up, the architectures of Lauritzen-Spiegelhalter and HUGIN both simplify to the same scheme where no division needs to be done.

Theorem 4.4 At the end of the idempotent architecture, node i contains ![]() .

.

This theorem follows from the correctness of the Lauritzen-Spiegelhalter architecture and the above considerations. Further, there is no need to condition the statement on the existence of the Shenoy-Shafer messages since idempotent valuation algebras are always regular. This is shown in Appendix D.1.3. For the pseudo-code algorithm, we may again restrict ourselves to the message-passing part and refer to Algorithm 4.4 for the query answering procedure.

Algorithm 4.6 Idempotent Architecture: Message-Passing

Example 4.6 If we again call in the same setting as for the Lauritzen-Spiegelhalter and HUGIN architecture in Example 4.4 and 4.5 for the illustration of the idempotent architecture, things become particularly simple (as it is often the case with the idem-potent architecture). The collect phase executed the generalized collect algorithm whose messages can be found in Example 3.20 and 3.22. Then, the distribute phase just continues in exactly the same way:

- Node 6 obtains the message

and updates its node content to

The second equality follows from the combination axiom. Since corresponds to a projection of

corresponds to a projection of  6

6  it follows from idempotency that

it follows from idempotency that

This explains the third equality. For the remaining nodes, we only give the messages that are obtained in the distribute phase. - Node 5 obtains the message

- Node 4 obtains the message

- Node 3 obtains the message

- Node 2 obtains the message

- Node 1 obtains the message

Observe that no division has been performed anywhere.

4.5.1 Complexity of the Idempotent Architecture

The idempotent architecture is absolutely symmetric concerning the number of executed operations. There are 2(|V| - 1) exchanged messages, thus 2(|V| - 1) projections and 2(|V| - 1) combinations to perform. No division is performed anywhere in this architecture. Further, each message can directly be discarded once it has been combined to the node content of its receiver. Thus, although we obtain the same bounds for the time and space complexity as in the Lauritzen-Spiegelhalter and HUGIN architecture, the idempotent architecture can be considered as getting the best of both worlds. Since no division is performed, the idempotent architecture even outperforms the HUGIN scheme and does not need to remember any messages. Thus, if time is the more sensitive resource and idempotency holds, the idempotent architecture becomes the best choice to solve multi-query inference problems by local computation. Moreover, if we are dealing with a valuation algebra where both complexities are polynomial, the idempotent architecture provides a specialized query answering procedure for queries that are not covered by the join tree. This procedure is presented in the following section.

4.6 ANSWERING UNCOVERED QUERIES

The concept of a covering join tree ensures that all queries from the original inference problem can be answered after the completed distributed phase. But if later a new query arrives that is not covered by any of the join tree nodes, it is generally necessary to construct a new join tree and to execute the local computation architecture from scratch. Alternatively, there are updating methods described in (Schneuwly, 2007) that modify the join tree to cover the new query. Then, only a part of the complete message-passing has to be re-processed. However, a third option that is especially suited for formalisms with a polynomial complexity exists in the idempotent case. This method is a consequence of an interesting property of the idempotent architecture that is concerned with a particular factorization of the objective function. Initially, the original factorization of the objective function is given in the definition of the inference problem. Then, a second factorization is obtained from Lemma 3.8 with the property that the domain of each factor is covered by some specific node of the join tree. In case of idempotency, we obtain a third factorization of the objective function from the node contents after local computation:

Lemma 4.4 In an idempotent valuation algebra we have

(4.18) ![]()

Proof: The proof of this lemma is based on the correctness of the Lauritzen-Spiegelhalter architecture, which can always be executed when idempotency holds. At the beginning, we clearly have

![]()

During the collect phase, each node computes the message for its child node and divides it out of its node content. Then, the message is sent to the child node where it is combined to the node content. Thus, each message that is divided out of some node content is later combined to the content of another node. We therefore conclude that the above equation still holds at the end of the collect phase in the Lauritzen-Spiegelhalter architecture. Formally, equation (4.13) defines the node content at the end of the collect phase in the Lauritzen-Spiegelhalter architecture and we thus have

![]()

By idempotency it further holds that

![]()

and therefore

But the marginal of ![]() with respect to λ(i)

with respect to λ(i) ![]() λ(ch(i)) corresponds exactly to the message sent from node ch(i) to node i during the distribute phase of the Lauritzen-Spiegelhalter architecture, see equation (4.14). We therefore have by the correctness of the Lauritzen-Spiegelhalter architecture

λ(ch(i)) corresponds exactly to the message sent from node ch(i) to node i during the distribute phase of the Lauritzen-Spiegelhalter architecture, see equation (4.14). We therefore have by the correctness of the Lauritzen-Spiegelhalter architecture

As a consequence of this result, we may consider local computation as a transformation of the join tree factorization of Lemma 3.8 into a new factorization where each factor corresponds to the projection of the objective function to some node label. The following theorem provides a further generalization of this statement.

Theorem 4.5 For i = 1, …, r and

it holds for an idempotent valuation algebra that

(4.20) ![]()

Proof: We proceed by induction: For i = 1 the statement follows from Lemma 4.4 and from the domain axiom. For i + 1 we observe that ![]() and obtain using the induction hypothesis and the combination axiom

and obtain using the induction hypothesis and the combination axiom

From the running intersection property we further derive λ(i)![]() λ(ch(i)) = λ(i)

λ(ch(i)) = λ(i)![]() yi+1 and obtain by idempotency

yi+1 and obtain by idempotency

We next observe that combining the node content of two neighboring nodes always results in the objective function projected to their common domain.

Lemma 4.5 For 1 ≤ i, j ≤ r and j ![]() ne(i) we have

ne(i) we have

![]()

Proof: We remark that if i and j are neighbors, then either i = ch(j) or j = ch(i). It is therefore sufficient to prove the following statement:

(4.21) ![]()

It follows from Theorem 4.5, idempotency and the combination axiom that

We further conclude from equation (4.19) that

![]()

and finally obtain

This lemma states that if a new query x ![]() d(

d(![]() ) arrives that is not covered by the join tree, it can nevertheless be answered if we find two neighboring nodes whose union label covers the query, i.e.

) arrives that is not covered by the join tree, it can nevertheless be answered if we find two neighboring nodes whose union label covers the query, i.e. ![]() . We then compute

. We then compute

![]()

Thus, we neither need a new join tree nor a new propagation to answer this query. However, it is admittedly a rare case that two neighboring nodes can be found that cover some new query. The following lemma therefore generalizes this procedure to paths connecting two arbitrary nodes in the join tree.

Lemma 4.6 Let (p1, …, pk) be a path from node p1 to node pk with pi ![]() V for 1 ≤ i ≤ k. We then have for an idempotent valuation algebra

V for 1 ≤ i ≤ k. We then have for an idempotent valuation algebra

(4.22)

Proof: We proceed by induction over the length of the path: For i = 2 we have p1 ![]() ne(p2) and the statement follows from Lemma 4.5. For a path (p1, …, pk+1) we merge the nodes {p1, …, pk} to a single node called z to which all neighbors of p1 to pk are connected. The label of node z is

ne(p2) and the statement follows from Lemma 4.5. For a path (p1, …, pk+1) we merge the nodes {p1, …, pk} to a single node called z to which all neighbors of p1 to pk are connected. The label of node z is ![]() . Clearly, the running intersection property is still satisfied in the new tree. Since pk+1

. Clearly, the running intersection property is still satisfied in the new tree. Since pk+1 ![]() ne(z) we may again apply Lemma 4.5 and obtain,

ne(z) we may again apply Lemma 4.5 and obtain,

![]()

The last equality follows from the induction hypothesis.

It then follows immediately from this lemma and the transitivity of projection that

(4.23)

This allows us to express the following yet rather inefficient procedure to answer a query x ![]() d(

d(![]() ) that is covered by the union label of two arbitrary join tree nodes:

) that is covered by the union label of two arbitrary join tree nodes:

1. Find two nodes i, j ![]() V such that x

V such that x ![]() .

.

2. Combine all node contents on the unique path between i and j.

3. Project the result to x.

We here assume for simplicity that the query is split over only two nodes. If more than two nodes are necessary to cover the query, it is easily possible to generalize Lemma 4.6 to arbitrary subtrees of the join tree instead of only paths. Clearly, this simple procedure is very inefficient and almost amounts to a naive computation of the objective function itself, due to the combination of all node factors on the path between i and j in the second step. However, there is a more intelligent way to perform these computations that only requires combining the content of two neighboring nodes. This is the statement of the following lemma:

Lemma 4.7 In an idempotent valuation algebra, it holds that

![]()

Proof: It follows from the transitivity axiom, Lemma 4.6, the combination axiom and the running intersection property that

We now give an algorithm similar to the above procedure that exploits Lemma 4.7 to avoid the combination of all node factors on the path between node p1 and pk.

Algorithm 4.7 Specialized Query Answering

Theorem 4.6 Algorithm 4.7 outputs ![]() .

.

Proof: Let us first show that the projection of ![]() in the loop is well-defined. After repetition i of the loop we have:

in the loop is well-defined. After repetition i of the loop we have:

In loop ![]() is projected to

is projected to ![]() . Hence, this projection is feasible. We next prove that

. Hence, this projection is feasible. We next prove that

holds at the end of the loop. The statement of the theorem then follows by the transitivity of projection. For k = 2 it follows from Lemma 4.6 that

![]()

The second equality follows by initialization and the third from the domain axiom. This proves the correctness of the algorithm for paths of length 2. We now assume that equation (4.25) holds for paths of length k. For a path of length k + 1, the last repetition of the loop computes

The first equality follows from the induction hypothesis, the second from transitivity and the third from lemma 4.7. This proves the correctness of equation (4.25 and the statement of the theorem follows by the transitivity of projection.

Example 4.7 We want to answer the new query × = {A, B} in the join tree of Figure 4.3 after a complete run of the idempotent architecture. We immediately see that this query is not covered by the join tree, but it is for example covered by the union label of the two nodes 1 and 6, i.e. x ![]() . The path between these two nodes has already been shown in Figure 4.10. The following three steps are executed by Algorithm 4.7:

. The path between these two nodes has already been shown in Figure 4.10. The following three steps are executed by Algorithm 4.7:

Figure 4.10 The path between node 1 and node 6 in the join tree of Figure 4.3.

Step 1: ![]()

Step 2: ![]()

Step 3: ![]()

Finally, the algorithm returns

A closer look on Algorithm 4.7 or more precisely on equation (4.24) shows that we may extract a lot more information from the intermediate results

![]()

for 1 ≤ i ≤ k. In fact, it is not only possible to compute the final query ![]() as stated in Theorem 4.6, but we actually obtain all possible queries that are subsets of λ(p1) ∪ λ(pi) by just one additional projection per query. This is the statement of the following complement to Theorem 4.6.

as stated in Theorem 4.6, but we actually obtain all possible queries that are subsets of λ(p1) ∪ λ(pi) by just one additional projection per query. This is the statement of the following complement to Theorem 4.6.

Lemma 4.8 Let (p1, …, pk) be a path from node p1 to node pk. Algorithm 4.7 can be adapted to compute ![]() for all x

for all x ![]() λ(p1) ∪ λ(pi) for i = 2, …, k.

λ(p1) ∪ λ(pi) for i = 2, …, k.

Example 4.8 From the intermediate results of Example 4.7 we may compute all possible queries that are subsets of {A, T, E, L, T} and {A, T, B, E, L} by just one additional projection per query. This includes in particular the binary queries {A, E}, {A, L} and {T, B} which are not covered by the join tree in Figure 4.3.

A further extension of Algorithm 4.7 provides for a previously fixed node ![]() a pre-compilation of the join tree such that all possible queries, that are subsets of λ(i) ∪ λ(j) for all nodes j

a pre-compilation of the join tree such that all possible queries, that are subsets of λ(i) ∪ λ(j) for all nodes j ![]() V, can be computed by just one additional projection per query. To do so, we start with the selected node i and compute for each neighbor k

V, can be computed by just one additional projection per query. To do so, we start with the selected node i and compute for each neighbor k ![]() ne(i) the projection of

ne(i) the projection of ![]() to λ(i) ∪ λ(k). This intermediate result is stored in the neighbor node k. Then, the process continues for all neighbors of node k that have not yet been considered. If l

to λ(i) ∪ λ(k). This intermediate result is stored in the neighbor node k. Then, the process continues for all neighbors of node k that have not yet been considered. If l ![]() ne(k) is such a neighbor of k, we compute the projection to λ(i) ∪ λ(l) by reusing the intermediate result stored in k. This is possible due to Lemma 4.7:

ne(k) is such a neighbor of k, we compute the projection to λ(i) ∪ λ(l) by reusing the intermediate result stored in k. This is possible due to Lemma 4.7:

![]()

At the end of this process, each node j ![]() V contains

V contains ![]() which allows us to answer all queries that are subsets of λ(i) ∪ λ(j) by just one additional projection. These projections setup another factorization of the objective function

which allows us to answer all queries that are subsets of λ(i) ∪ λ(j) by just one additional projection. These projections setup another factorization of the objective function ![]() as a consequence of Lemma 4.6.

as a consequence of Lemma 4.6.

Algorithm 4.8 Compilation for Specialized Query Answering

4.6.1 The Complexity of Answering Uncovered Queries

We first point out that finding the path between two nodes in a directed tree is particularly easy. We simply compute the intersection of the two paths that connect both nodes with the root node. Due to equation (4.24) the loop statement of Algorithm 4.7 computes a factor of domain ![]() . Its number of repetitions is bounded by the longest possible path in the join tree, which has at most |V| nodes. We therefore conclude that Algorithm 4.7 adopts a time complexity of

. Its number of repetitions is bounded by the longest possible path in the join tree, which has at most |V| nodes. We therefore conclude that Algorithm 4.7 adopts a time complexity of

(4.26) ![]()

Since only one combination of two node contents is kept in memory at every time, we obtain for the space complexity

(4.27) ![]()

Algorithm 4.8 combines the node content of each node with the content of one of its neighbors. This clearly results in the same time complexity. But in contrast, we store one such factor in each node, which gives us a space complexity of

(4.28) ![]()

for Algorithm 4.8. At first glance, the complexities of these algorithms seem very bad because they are controlled by the double of the treewidth. Especially for formalism with exponential time or space behaviour, it could therefore be more efficient to construct a new join tree that also covers the new queries and execute the complete local computation process from scratch. But whenever we add new queries to the inference problem, we risk obtaining a join tree with a larger treewidth. This makes it difficult to directly compare the two approaches. The definition of a covering join tree ensures that every query is covered by the label of a join tree node. Here, we only require that every query is covered by the union of two arbitrary node labels. We will see in Chapter 9 that important applications exist where this is always satisfied. Imagine for example that our query set consists of all possible queries of two variables {X, Y} with ![]() and X ≠ Y. Building a covering join tree for such an inference problem always leads a join tree whose largest node label contains all variables. We then compute the objective function directly and the effect of local computation disappears. On the other hand, it is always guaranteed that such queries are covered by the union label of two join tree nodes. We can therefore ignore the query set in the join tree construction process and obtain the best possible join tree that can be found for the current knowledgebase. Later, queries are answered by the above procedures which clearly is more efficient than computing the objective function directly. Chapter 9 shows that such particular query sets frequently occur when local computation is used for the solution of path problems in sparse networks. Then, the query {X, Y} for example represents the shortest distance between two network hosts X and Y, and the query set of all possible pairs of variables models the so-called all-pairs shortest path problem.

and X ≠ Y. Building a covering join tree for such an inference problem always leads a join tree whose largest node label contains all variables. We then compute the objective function directly and the effect of local computation disappears. On the other hand, it is always guaranteed that such queries are covered by the union label of two join tree nodes. We can therefore ignore the query set in the join tree construction process and obtain the best possible join tree that can be found for the current knowledgebase. Later, queries are answered by the above procedures which clearly is more efficient than computing the objective function directly. Chapter 9 shows that such particular query sets frequently occur when local computation is used for the solution of path problems in sparse networks. Then, the query {X, Y} for example represents the shortest distance between two network hosts X and Y, and the query set of all possible pairs of variables models the so-called all-pairs shortest path problem.

We have now seen several important concepts that are all related to a division operator in the valuation algebra: namely, two local computation architectures that directly exploit division and the particularly simple idempotent architecture, including a specialized procedure to answer uncovered queries. In addition, the presence of inverse elements allows us to introduce the notion of scaling or normalization on an algebraic level and also to derive variations of local computation architectures that directly compute scaled results. This is the subject of the next section.

4.7 SCALING AND NORMALIZATION

Scaling or normalization is an important notion in a couple of valuation algebra instances. If, for example, we want to use arithmetic potentials to represent discrete probability distributions, it is semantically important that all values sum up to one. Only in this case are we allowed to talk about probabilities. Similarly, normalized set potentials from Instance 1.4 adopt the semantics of belief functions (Shafer, 1976). As mentioned above, the algebraic background for the introduction of a generic scaling operator is a separative valuation algebra. It is shown in this section how scaling can be introduced potentially in every valuation algebra that fulfills this mathematical property. Nevertheless, we emphasize that there must also be a semantical reason that demands a scaling operator, and this cannot be treated on a purely algebraic level.

Following (Kohlas, 2003), we define the scaling or normalization of ![]() by

by

It is important to note that because separativity is assumed, scaled valuations do not necessarily belong to Φ but only to the separative embedding Φ*. For simplicity and because this holds for all instances presented in this book, we subsequently assume that ![]() for all

for all ![]() . This ensures that all projections of

. This ensures that all projections of ![]() to t

to t ![]() to t

to t ![]() are defined, although we are dealing with a valuation algebra with partial projection. We refer to (Kohlas, 2003) for a more general study of scaling without this additional assumption. However, to gain further insights into scalable valuation algebras, we require some results derived for separative valuation algebras in Appendix D.1.1. This is necessary to obtain the following equation and for the proof of Lemma 4.9. Nevertheless, it is possible to understand these statements without knowledge of separative valuation algebras.

are defined, although we are dealing with a valuation algebra with partial projection. We refer to (Kohlas, 2003) for a more general study of scaling without this additional assumption. However, to gain further insights into scalable valuation algebras, we require some results derived for separative valuation algebras in Appendix D.1.1. This is necessary to obtain the following equation and for the proof of Lemma 4.9. Nevertheless, it is possible to understand these statements without knowledge of separative valuation algebras.

Combining both sides of equation (4.29) with ![]() leads to

leads to

according to the theory of separative valuation algebras. A valuation and its scale are contained in the same group. The following lemma lists some further elementary properties of scaling. It is important to note that these properties only apply if the scale ![]() of a valuation

of a valuation ![]() is again contained in the original algebra Φ. This is required since scaling is only defined for elements in Φ.

is again contained in the original algebra Φ. This is required since scaling is only defined for elements in Φ.

Lemma 4.9

(4.33) ![]()