CHAPTER 9

SPARSE MATRIX TECHNIQUES

We learned in Section 7.2 that the solution spaces of linear equation systems form a valuation algebra and even an information algebra. This hints at an application of local computation for solving systems of linear equations. However, in contrast to many other inference formalisms studied in this book, the valuations here are infinite structures and therefore not directly suitable for computational purposes. On the other hand, the equations themselves are finite constructs and provide a linguistic description of the solution spaces. It is thus reasonable for linear systems to perform the computations not on the valuation algebra directly but on the associated language level. This is the topic of the present chapter. Whereas solving systems of linear equations is an old and classical subject of numerical mathematics, looking at it from the viewpoint of local computation is new and leads to simple and clear insights especially for computing with sparse matrices. It is often the case that the matrix of a system of linear equations contains many zeros. Such matrices are called sparse, and this sparsity can be exploited to economize memory and computing time. However, it is well-known that zero entries may get lost during the solution process if care is not taken. Matrix entries that change from a zero to a non-zero value are called fill-ins and they destroy the advantages of sparsity. Therefore, much effort has been spent on developing method that maintain sparsity. We claim that local computation offers a very simple and easy to understand method for controlling fill-ins and thus for maintaining sparsity. This is not only true for ordinary, real-valued linear equations, or linear equations over a field, but also for linear equations over semirings. We have seen in Chapter 6 that path problems induce semiring fixpoint equation systems, where the sparsity often comes from an underlying graph. Independently, local computation maintains the sparsity in all these applications.

An interesting aspect that arises from studying equation systems in the context of valuation algebras is the necessity to distinguish between factorizations and decompositions. The generic local computation methods from Chapter 3 and 4 solve inference problems that consist of a knowledgebase of valuations which factor the objective function. Where do these valuations come from? A key note behind the theory of valuation algebras is that information exists in pieces and comes from different sources, which indicates that factorizations occur naturally. This is for example the case in the semiring valuation systems of Chapter 5, where factorizations are often the only mean to express the objective function, due to the exponential complexity behind these formali{ms. In contrast, linear systems are polynomial, and it is often more realistic that a total linear system exists, from which the knowledgebase must be artificially fabricated. We then speak about a decomposition rather than a factorization. We will continue the discussion of factorizations and decompositions throughout this chapter and show that both perspectives are equally important when dealing with linear systems.

In the beginning two sections of this chapter, we treat ordinary systems of linear equations by examining arbitrary systems in Section 9.1 and the important case of systems with symmetric, positive definite matrices in Section 9.2. As an application, the method of least squares is shown to fit into the local computation framework. Formally, this is very similar to the problems that we are going to treat in Chapter 10; only the semantics of the two systems are different. This uncovers a first application of the valuation algebra from Instance 1.6 in Chapter 1. The remaining sections are devoted to the solution of linear fixpoint equation systems over semirings. Section 9.3 focuses on the local computation based solution of arbitrary fixpoint equation systems with values from quasi-regular semirings. The application of this theory to path problems over Kleene algebras is compiled in Section 9.3.3.

9.1 SYSTEMS OF LINEAR EQUATIONS

We first give a short review of Gaussian variable elimination.

9.1.1 Gaussian Variable Elimination

Consider a system of linear equations with real-valued coefficients,

We make no assumptions about the number m of equations or the number n of unknowns and neither about the rank of the matrix of the system. So this system of linear equations may have no solution, exactly one solution or infinitely many solutions. In any case, the solutions form an affine space as explained in Section 7.2. The computational task is to decide whether the system has a solution or not. If it has exactly one solution, then this solution must be determined. If it has infinitely many solutions, then the solution space must be determined. The usual way to solve systems of linear equations is by Gaussian variable elimination. Assuming that the element a1,1 is different from zero, an elimination step based on a1,1 proceeds by first solving the first equation for X1 in terms of the remaining variables,

(9.2) ![]()

Then, the variable X1 is replaced by the right-hand expression in all other equations. After rearranging terms this results in the new system of linear equations

This is called a pivoting step with a1,1 as pivot element. Variable X1 is called the pivot variable and the first equation, where the pivot element is selected from, is called the pivot equation. It eliminates variable X1 and reduces the system of equations to a new system with one less variable and one less equation. The new system is equivalent to the old one in the following sense:

1. If (x1, x2, …, xn) is a solution to the original system, then (x2, …, xn) is a solution of the new system.

2. If (x2, …, xn) is a solution of the new system, then (x1, x2, …, xn) with

![]()

is a solution to the original system.

This procedure is called Gaussian variable elimination and can be repeated on the new system. In order to execute a pivot step on a variable Xi there must be at least one equation with a non-zero coefficient of Xi. If there is no such equation, Xi can be eliminated from the system without further actions. In fact, in this case Xi has zero coefficients in all equations which is tantamount to say that Xi does not appear in the system. So, Gaussian elimination permits to reduce the system stepwise by eliminating one variable after the other until only one variable remains. Several cases may arise during the elimination of variables: assume that the variables X1 to Xp have been successfully eliminated. Then, the following three cases may arise:

1. All coefficients on the left-hand side of the new system vanish, but on the right-hand side there is at least one element different from zero. In this case the system has no solution.

2. All coefficients on both sides of an equation vanish. Then, this equation can be eliminated. If no equations remain after elimination, then the variables Xp+1 to Xn can take arbitrary values.

3. There remains a non-vanishing system of linear equations with at least one equation less than the system before pivoting on Xp. Then, a next pivot step can be executed.

The first case identifies contradictions in the system. For this, we do not necessarily need to continue the pivoting process until all coefficients on the left-hand side of the system vanish. Instead, we may stop the process when the first equation arises, where all coefficient on the left-hand side vanish, but the right-hand side value is different from zero. These three cases solve the linear system in the following sense:

1. If case 1 occurs, the system has no solution.

2. In case 2 a certain number of variables X1, …, Xp can be expressed linearly by the remaining variables Xp+1, …, Xn. This determines the solution space.

3. All variables up to and including Xn-1 can be eliminated. Then Xn gets a fixed value in the last system, and a unique solution exists for the system. It can be found by backward substitution of the values of Xn, Xn-1, …

Note that which of the above cases arises does not depend on the order in which variables are eliminated.

The equations in the system after the first pivot step clearly show that original zero coefficients of certain variables may easily become non-zero in a pivot step. This is called a fill-in. The number of fill-ins depends on the choice of the pivot element or, in other words, there may be many fill-ins if the pivot element is not carefully chosen. Selection of the pivot element is partially a question of selecting elimination sequences of variables, but also of selecting the pivot equation. In the next section, we show how local computation allows to fix an elimination sequence such that fill-ins can be controlled and bounded. It should, however, be emphasized that limiting fill-ins is not the only consideration in selecting pivot elements. Numerical stability and the control of numerical errors are other important aspects that may be in contradiction to the minimization of fill-ins. The reader should keep this aspect in mind, although we here only focus on the limitation of fill-ins.

9.1.2 Fill-ins and Local Computation

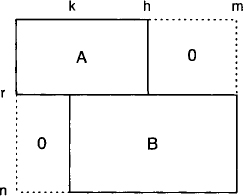

To discuss a concrete scenario we assume that in our system of linear equations (9.1) we have aj,i = 0 for all j = 1, …, r and i = h, …, n and also for j = r + 1, …, m and i = 1, …, k for some r < m and k < h < n. This corresponds to a decomposition of the system into a first subsystem of r equations, where only the variables X1 to Xh occur with non-zero coefficients and a second subsystem of m, − r equations where only the variables Xk+1 to Xn occur. The corresponding matrix decomposition is illustrated in Figure 9.1. We denote by A the r × h matrix of the first subsystem for the variables X1 to Xh and by B the (m − r) × (n − k) matrix of the second subsystem for the variables Xk+1 to Xn. It is already clear that if we select the pivot elements for the variables X1 to Xk in the first subsystem, then pivoting does not change the second subsystem, and in the first subsystem only the coefficients of the variables X1 to Xh change. So the zero-elements outside the two subsystems are maintained. This is how fill-ins are controlled by a decomposition.

Figure 9.1 A first decomposition of a system of linear equations into two subsystems and the associated zero-pattern of the coefficients.

If we want to eliminate the variables X1 to Xk, we have to distinguish two cases:

1. First, assume that r < k, i.e. there are less equations than variables to be eliminated. Then, we may eliminate at most r variables. Let us eliminate the variables in the order of their numbering X1, …, Xr. Then, it either turns out that the first subsystem has no solution, which implies that the whole system has no solution, or X1, …, Xr are at the end of the elimination process linearly expressed by the remaining variables Xr+1, …, Xh of the subsystem. We have for i = 1, …, r,

![]()

Here, the first variables Xr+1 to Xk can freely be chosen because they do not appear in the remaining second part of the system, whereas the last variables Xk+1 to Xh are determined by the second part of the system which can be solved independently of the first part.

2. In the second case, if k ≤ r, the elimination of the variables X1, X2, … may already show that the system has no solution. Otherwise, the variables X1 to Xk are eliminated from at least k equations of the first subsystem and there remain at most r − k equations containing only the variables Xk+1 to Xh. This system is added to the second subsystem which still contains only the variables Xk+1 to Xn. We thus obtain a new linear system for the variables Xk+1 to Xn. The eliminated variables X1, …, Xk are linearly expressed by the variables Xk+1, …, Xh. Once the second subsystem is solved for Xk+1, …, Xn we can backward substitute the solution into the expressions for X1, …, Xk.

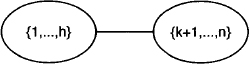

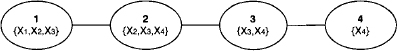

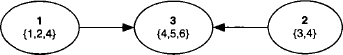

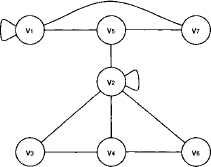

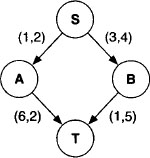

This describes a simple local computation scheme: the decomposition of the system can be represented by the two-node join tree of Figure 9.2. The variables of the first subsystem are covered by the label of the left-hand node and the variables of the second subsystem by the label of the right-hand node. The message sent from the left to the right node is obtained from eliminating all variables outside the intersection of the node labels. These are the variables X1 to Xk. The message is either empty or consists of the remaining system of the first subsystem after eliminating the variables X1 to Xk. If the elimination of the variables in the first subsystem already shows that the total system has no solution, then no message needs to be sent. Otherwise, the arriving message is simply added to the subsystem of the receiving node. Then, the variable elimination is continued in the new system until either a solution is found in the sense of the previous subsection, or it is seen that no solution exists. In the first case, assume that the variables Xk+1 to Xl are expressed by the remaining variables Xl+1, …, Xn. Note that since we did not make any assumption about the rank of the matrix, we cannot be sure that all this holds for all variables Xk+1 to Xh, but only for some l ≤ h. Then, the expression of the variables Xk+1 to Xl may be backward substituted into the expressions of the variables X1 to Xr if r < k, or to Xk obtained in the process of variable elimination in the first subsystem.

Figure 9.2 The join tree corresponding to the decomposition of Figure 9.1.

Let us point out more explicitly how the above scheme is connected to the local computation framework. We know from Section 7.2 that solution spaces of linear systems form a valuation algebra. Sets of equations provide a formal description of solution spaces and can therefore be considered as a representation of the latter. The domain of a set of equations consists of all variables that have at least one non-zero coefficient in this system. In the above description, we combined sets of equations by simple union. The original system therefore corresponds to the objective function and the knowledgebase factors are subsets of equations, whose union builds up the total system. This corresponds to the decomposition view presented in the introduction of this chapter. When producing such a decomposition from an existing system, we should keep the factor domains as small as possible. Otherwise, we will need large join tree nodes to cover these factors, which results in a bad complexity. In the case at hand, we can provide a factorization with minimum granularity where each factor contains exactly one equation. Then, the above procedure can be generalized as follows: we assume a covering join tree (V, E, λ, D) for the knowledgebase where each node i ![]() V contains a subsystem of linear equations whose variables are covered by the node label λ(i). This join tree decomposition reflects a certain pattern of zeros in the original total system. Note also that these systems may be empty on certain nodes, which mirrors the assignment of identity elements in Section 3.6. If we then execute the collect algorithm, the messages correspond to the description above. At the end of the message-passing, the root node contains the total system from which all variables outside the root label have been eliminated. We determine the remaining variables from this system and, if it exists, build the total solution by backward substitution. The depicted variable elimination process clearly maintains the pattern of zeros represented by the join tree decomposition. Consequently, join tree decompositions control fill-ins in general sparse systems of linear equations. This process will be formulated more precisely in Section 9.1.3 for the important case of regular systems that always provide a unique solution. There, we also point out that backward substitution in fact corresponds to the construction of solution configurations according to Section 8.2.1, see also Exercise H.1.

V contains a subsystem of linear equations whose variables are covered by the node label λ(i). This join tree decomposition reflects a certain pattern of zeros in the original total system. Note also that these systems may be empty on certain nodes, which mirrors the assignment of identity elements in Section 3.6. If we then execute the collect algorithm, the messages correspond to the description above. At the end of the message-passing, the root node contains the total system from which all variables outside the root label have been eliminated. We determine the remaining variables from this system and, if it exists, build the total solution by backward substitution. The depicted variable elimination process clearly maintains the pattern of zeros represented by the join tree decomposition. Consequently, join tree decompositions control fill-ins in general sparse systems of linear equations. This process will be formulated more precisely in Section 9.1.3 for the important case of regular systems that always provide a unique solution. There, we also point out that backward substitution in fact corresponds to the construction of solution configurations according to Section 8.2.1, see also Exercise H.1.

To identify the complexity of this approach, we consider a system that gives the most of work, i.e. a regular system of n variables and n equations. Eliminating one variable from this system takes a time complexity of ![]() (n2) and eliminating all variables is thus possible in

(n2) and eliminating all variables is thus possible in ![]() (n3). Backward substitution takes linear time for one variable and is repeated n times which result in

(n3). Backward substitution takes linear time for one variable and is repeated n times which result in ![]() (n2). Altogether, the time complexity of Gaussian elimination without taking care of fill-ins is

(n2). Altogether, the time complexity of Gaussian elimination without taking care of fill-ins is ![]() (n3). If local computation is applied, each join tree node contains a system of at most ω* + 1 variables, where ω* denotes the treewidth of the inference problem derived from the total system. The effort of eliminating one variable is

(n3). If local computation is applied, each join tree node contains a system of at most ω* + 1 variables, where ω* denotes the treewidth of the inference problem derived from the total system. The effort of eliminating one variable is ![]() ((ω* + 1)2), and a complete run of the collect algorithm that eliminates all variables takes

((ω* + 1)2), and a complete run of the collect algorithm that eliminates all variables takes

Backward substitution is performed n times with the equations that result from the variable elimination process and which contain at most ω* variables. We therefore obtain a time complexity of ![]() (nω*) for the complete backward substitution process. Because each node stores a matrix whose domain is bounded by the treewidth, we obtain the same bound as (9.3) for the space complexity. If the treewidth is small with respect to n, then big savings may be expected.

(nω*) for the complete backward substitution process. Because each node stores a matrix whose domain is bounded by the treewidth, we obtain the same bound as (9.3) for the space complexity. If the treewidth is small with respect to n, then big savings may be expected.

9.1.3 Regular Systems

In this section we examine more closely and more precisely the local computation scheme and the related structure of fill-ins for regular systems AX = b, where A is a regular n × n matrix. This assumption means that there is a unique solution to this system. In particular, we look at the fusion algorithm of Section 3.2.1 for computing the solution to this system. We choose an arbitrary elimination sequence for the n variables. By renumbering the variables and applying corresponding permutations of the columns of the matrix A, we may without loss of generality assume that the elimination sequence is (X1, X2, …, Xn). Further, by a well-known theorem of linear numerical analysis, it is also always possible to permute the rows of the matrix A such that in the sequence of the elimination of the variables X1, …, Xn all diagonal elements are different form zero (Schwarz, 1997). Again, without loss of generality, we may assume that the rows of A are already in the required order. This means that the following process of variable elimination works fine.

In the first step variable X1 is eliminated. Since by assumption a1,1 ≠ 0 we may solve the first equation for X1

![]()

where

for i = 2, …, n. Using this linear expression, we replace the variable X1 in the remaining equations to obtain

![]()

for j = 2, …, n with

Let us define

![]()

for j, i = 2, …, n. Then, the new system can be written as

![]()

By assumption ![]() is different from zero, variable X2 can be eliminated by solving the first equation of the new system and so forth. In the k-th step of this elimination process, k = 1, …, n, we similarly have

is different from zero, variable X2 can be eliminated by solving the first equation of the new system and so forth. In the k-th step of this elimination process, k = 1, …, n, we similarly have

where

for i = k + 1, …, n. Further, with

for j, i = k + 1, …, n, where

we get the system

![]()

In this process we define ![]() . For k = n − 1 it remains a simple system

. For k = n − 1 it remains a simple system

![]()

with one unknown that can easily be solved. By backward substitution for k = n − 2, …, 1, using equation (9.6), we obtain the solution of the regular system.

In order to study the control of fill-ins by local computation, we consider the join tree induced by the above execution of the fusion algorithm as described in Section 3.2.2. To each eliminated variable Xi corresponds a node i ![]() V in the join tree (V, E, λ, D) with a certain label λ(i)

V in the join tree (V, E, λ, D) with a certain label λ(i) ![]() D = P({1, …, n}). Let us now interpret the fusion algorithm as a message-passing scheme in this join tree according to Section 3.5. To start with, consider node 1 where the variable X1 is eliminated. Let

D = P({1, …, n}). Let us now interpret the fusion algorithm as a message-passing scheme in this join tree according to Section 3.5. To start with, consider node 1 where the variable X1 is eliminated. Let

![]()

define the index set of all equations which contain the variable X1. Only these equations are changed when variable X1 is eliminated. Further, let

![]()

be the set of variables that occur in the subset of equations with indices in ![]() (1). Note that the first equation is in

(1). Note that the first equation is in ![]() (1), since by assumption a1,1 ≠ 0. Hence, also the variable X1 is in the label λ(1) of node 1. When we now look at the elimination process of variable X1 according to the equations (9.4) and (9.5) above, then we remark that r1,i = 0 for i ∉ λ(1) and lj,1 = 0 for j ∉

(1), since by assumption a1,1 ≠ 0. Hence, also the variable X1 is in the label λ(1) of node 1. When we now look at the elimination process of variable X1 according to the equations (9.4) and (9.5) above, then we remark that r1,i = 0 for i ∉ λ(1) and lj,1 = 0 for j ∉ ![]() (1) and also

(1) and also

![]()

This shows that zeros in the first row and the first column of A are maintained when eliminating variable X1. Further, it exhibits the locality of the process by showing that only equations which contain variable X1 will change. The message sent to the child node ch(1) of node 1 in the join tree is determined by the coefficients

![]()

for j ![]()

![]() (1) − {1} and i

(1) − {1} and i ![]() λ(1) − {1}. These coefficients specify the new system of equations after elimination of variable X1. This process is repeated for variables Xk and nodes k for k = 1, …, n − 1. For node k of the join tree, where the variable Xk is eliminated, we define as above

λ(1) − {1}. These coefficients specify the new system of equations after elimination of variable X1. This process is repeated for variables Xk and nodes k for k = 1, …, n − 1. For node k of the join tree, where the variable Xk is eliminated, we define as above

![]()

for the equations containing variable Xk after k − 1 elimination steps and

![]()

for the variables contained in these equations. Again, by assumption, ![]() ≠ 0. Hence, the k-th. equation belongs to

≠ 0. Hence, the k-th. equation belongs to ![]() (k) and the variable Xk belongs to λ(k). So, we may eliminate variable Xk from the k-th equation. Similar to the first step, we remark that rk,i = 0 for i ∉ λ(k) and lj,k = 0 for j ∉

(k) and the variable Xk belongs to λ(k). So, we may eliminate variable Xk from the k-th equation. Similar to the first step, we remark that rk,i = 0 for i ∉ λ(k) and lj,k = 0 for j ∉ ![]() (k) and also

(k) and also

![]()

The message sent from node k to its child ch(k) is given by the coefficients

![]()

for j ![]()

![]() (k) − {k} and i

(k) − {k} and i ![]() λ(k) − {k}. The process stops at node n. This discussion permits to clarify how the zero-pattern of the matrix A is controlled and maintained by this fusion algorithm.

λ(k) − {k}. The process stops at node n. This discussion permits to clarify how the zero-pattern of the matrix A is controlled and maintained by this fusion algorithm.

Lemma 9.1 The k-th equation of the system AX = b is covered by some node i ≤ k of the join tree (V, E, λ, D). More precisely, there exists an index i ≤ k such that

![]()

Proof: Let i be the least index of the variables occurring in the k-the equation. Since the k-th equation contains no variables with indices h < i, we conclude that k ∉ ![]() (h) for h ≤ i and hence ak,l(i) = ak,l for l = 1, …, n and ak,i(i) = ak,i ≠ 0. So, the k-th equation belongs to

(h) for h ≤ i and hence ak,l(i) = ak,l for l = 1, …, n and ak,i(i) = ak,i ≠ 0. So, the k-th equation belongs to ![]() (i) and is therefore covered by λ(i).

(i) and is therefore covered by λ(i).

This result allows us to assign each equation k to some node i ≤ k of the join tree, and we have ak,h = 0 for h ∉ λ(i). This zero-pattern is maintained through the variable elimination process due to the remarks of the analysis above, i.e. ![]() = 0 for h ∉ λ(i) and l ≤ i.

= 0 for h ∉ λ(i) and l ≤ i.

To complete the description of the fusion algorithm in the case of a regular system of linear equations, we summarize it as a message-passing scheme: The system of equations assigned to the node k of the join tree is denoted by ![]() k for k = 1, …, n. Some of these systems may be empty. A system

k for k = 1, …, n. Some of these systems may be empty. A system ![]() k determines the affine space ψk of its solutions. If the system is empty, the affine space consists of all possible vectors. In other words, ψk is then the neutral valuation in the information algebra of affine spaces. The problem of finding the solution to the system AX = b can then be written for k = 1, …, n as the inference problem

k determines the affine space ψk of its solutions. If the system is empty, the affine space consists of all possible vectors. In other words, ψk is then the neutral valuation in the information algebra of affine spaces. The problem of finding the solution to the system AX = b can then be written for k = 1, …, n as the inference problem

![]()

Given the uniqueness of the solution, each such projection is just a number.

Now, the fusion algorithm is described in terms of the information elements ψk as follows: at step 1, node 1 sends the message

![]()

to its child node ch(1). This is done by transforming the systems of equations ![]() 1 according to the above procedure, i.e. by solving the first equation with respect to X1 and replacing the variable X1 in the remaining equations of

1 according to the above procedure, i.e. by solving the first equation with respect to X1 and replacing the variable X1 in the remaining equations of ![]() 1. The resulting system is denoted by

1. The resulting system is denoted by ![]() 1→ch(1) and represents the message μ1→ch(1). The message is combined to the content of node ch(1) to get

1→ch(1) and represents the message μ1→ch(1). The message is combined to the content of node ch(1) to get

![]()

This new system is simply represented by

![]()

More generally, let ψi(k) be the information element on node i before step k of the fusion algorithm. We therefore have ψi(1) = ψi. The associated system of equations is denoted by ![]() i(k). At step k, node k sends the message

i(k). At step k, node k sends the message

![]()

to node ch(k) by solving the first equation of the system ![]() k(k) with respect to Xk and replacing the variable Xk in the remaining equations, which gives the system

k(k) with respect to Xk and replacing the variable Xk in the remaining equations, which gives the system ![]() k→ch(k) representing the message. This message is added to the system in the child node ch(k) to yield

k→ch(k) representing the message. This message is added to the system in the child node ch(k) to yield

![]()

All other nodes remain unchanged, i.e. ![]() for i ≠ ch(k). This process is repeated for k = 1, …, n − 1. At the end, the root node n contains the valuation

for i ≠ ch(k). This process is repeated for k = 1, …, n − 1. At the end, the root node n contains the valuation ![]() ↓(Xn) which is represented by the system

↓(Xn) which is represented by the system

This is the end of the fusion algorithm interpreted as message-passing scheme, or equivalently, of the collect algorithm as described in Section 3.8, executed on the particular join tree that is induced by the fusion algorithm.

Since we are dealing with an idempotent valuation algebra, we can use the corresponding architecture from Section 4.5 for the distribute phase to compute the complete solution ![]() →{Xi} for all i = 1, …, n. We first transform equation (9.10) on the node n into the equivalent form

→{Xi} for all i = 1, …, n. We first transform equation (9.10) on the node n into the equivalent form

from which we determine the solution value

![]()

This in fact defines the single-point affine space ![]() →{Xn} = {xn}. Observe now that if n − 1 is a neighbor to node n such that ch(n − 1) = n, it follows by the construction of the join tree from the fusion algorithm that λ(n − 1)

→{Xn} = {xn}. Observe now that if n − 1 is a neighbor to node n such that ch(n − 1) = n, it follows by the construction of the join tree from the fusion algorithm that λ(n − 1) ![]() λ(n) = λ(n − 1) − {Xn-1} = {Xn}. So, the message sent from node n to node n − 1 in the distribute phase is

λ(n) = λ(n − 1) − {Xn-1} = {Xn}. So, the message sent from node n to node n − 1 in the distribute phase is

![]()

This means that equation (9.11) is added to the system ![]() containing the equation

containing the equation

besides possibly a second system that represents the message from node n − 1 to n in the collect phase. This second system is redundant with the equation (9.11) and can be eliminated. Finally, the solution xn from equation (9.11) can be introduced into (9.12) to yield the solution value for Xn-1 determining the affine space ![]() →{Xn-1}. In the general step of the distribute phase, node k receives a message

→{Xn-1}. In the general step of the distribute phase, node k receives a message

![]()

from its child node ch(k). By the induction assumption this message is a single-point affine space given by the solution values x1 for i ![]() λ(k) − {k}. The system of equations on node k contains the equation

λ(k) − {k}. The system of equations on node k contains the equation

![]()

The solution values contained in the message are introduced into this equation to get the solution value for Xk,

![]()

So, by induction, distribute corresponds to the construction of the solution values of the variables Xk on the nodes k of the join tree by backward substitution. This is in fact a consequence of the theory of solution construction developed in Chapter 8 applied to context valuation algebras. In Exercise H.4 we proposed to define solution sets in context valuation algebras by their associated set of models. The computations in the relational algebra of solution sets performed by the solution construction algorithms of Section 8.2 therefore correspond to computations in the context valuation algebra itself. Hence, the above execution of the idempotent distribute phase coincides with the application of the generic solution construction procedure from Section 8.2.3. We continue this discussion in Section 9.2 and Section 9.3.2, where other valuation algebra are studied that do not belong to the class of context valuation algebras. There, the solution construction procedures will be applied explicitly.

This completes the picture of the solution process as a message-passing procedure on the join tree induced by the fusion algorithm. In particular, the discussion shows that locality as represented by the join tree is also maintained during the distribute phase. It is important to understand that a similar process can be executed on an arbitrary join tree, where λ(k) ![]() λ(ch(k)) = λ(k) − {Xk} does not necessarily hold. Here, we have chosen the very particular join tree of the fusion algorithm for illustration purposes, i.e. exactly one variable is eliminated from the equation system between two neighboring join tree nodes. It follows a numerical example:

λ(ch(k)) = λ(k) − {Xk} does not necessarily hold. Here, we have chosen the very particular join tree of the fusion algorithm for illustration purposes, i.e. exactly one variable is eliminated from the equation system between two neighboring join tree nodes. It follows a numerical example:

Example 9.1 Consider a regular 4 × 4 matrix A and a corresponding vector b,

We solve the linear system AX = b by elimination of the variables X1, X2 and X3 in this order. Eliminating X1 yields the following new matrix A(1) and the new right-hand side vector b(1):

We remember also the non-zero r- and l-elements r1,2 = 1 and l2,1 = 2. Next, we eliminate X2 and obtain

![]()

In addition we have r2,3 = −1 and l3,2 = −2. It remains to eliminate X3 giving

![]()

with l4,3 = 2/3 and r3,4 = ![]() . We can now solve the equation

. We can now solve the equation

![]()

for X4 and obtain the solution value x4 = 14.

Figure 9.3 shows the join tree induced by the fusion algorithm described above. We may assign the first two equations to the left-most node and the last two equations to the second node from the left. Eliminating the variable X1 generates the equation

Figure 9.3 The join tree induced by the variable elimination in Example 9.1.

![]()

as the message sent to the next node. There, this equation is added to the two equations already hold by this node. This yields the linear system defined by the matrix A(1) and the vector b(1). Next, eliminating the variable X2 generates the system described by the matrix A(2) and the vector b(2) as the message to be sent from the second to the third node. Since the system contained in the third node is empty, the node content becomes equal to the received message. Finally, eliminating the variable X3 generates the equation (1/3)X4 = 14/3 as the message sent to the fourth node. Again, the content of node 4 is an empty system such that the received message directly becomes the new node content.

Now, the distribute phase starts by solving the equation of node 4, which gives us the solution value x4 = 14 as remarked above. Node 4 sends this solution value back to node 3, where it can substitute the variable X4. Using the first equation of the system defined by A(2) and b(2) on this node, we can solve for X3 to obtain X3 = 2/3 − (1/3)x4 = −4. This is the value sent back to node 2, where it can be used to determine the solution value of X2 using the first equation of the system on this node, x2 = 1 + x3 = −3. Finally, this values is sent back to node 1, where we get from the first equation x1 = 2 − x2 = 5. This constitutes the totality of the unique solution of the system AX = b. Note that in the whole process, at each step only the variables belonging to the label of the current join tree node are involved. This shows the locality of the solution process.

In the next section we study a variant of this process, which has advantages if the system of equations AX = b must be solved for different vectors b. By memorizing certain intermediate factors derived from the matrix A in the first run of the above algorithm, we obtain a factorization of A into an upper and a lower triangular matrix. If later another equation system with the same matrix must be solved, only the computations with respect to the new vector must be repeated. This considerably simplifies the computations of the involved local computation messages.

9.1.4 LDR-Decomposition

Let us review the process of Gaussian variable elimination described in Section 9.1.3. From the recursive definition in equation (9.8) we derive

![]()

for 1 ≤ k ≤ i, if we define vk,i = ![]() . This implies that

. This implies that

If we set lk,k = 1, we may also write

This can also be expressed by the matrix product

![]()

where L is a lower triangular und R an upper triangular matrix,

and

This representation is called LV-decomposition of the matrix A. We remark that the vk,i used here are closely related to the elements rk,i in equation (9.7). We have

Therefore, if we define the diagonal matrix D with elements dk,k = 1/vk,k on the diagonal and zeros otherwise, and further the upper triangular matrix

then we have the representation

![]()

This is called LDR-decomposition of the matrix A.

We may neglect the right-hand side of the system AX = b in the fusion algorithm described in the previous Section 9.1.3 and only compute the elements lk,i, rk,i and ![]() that depend on the matrix A. If we relate these elements to the join tree induced by the fusion algorithm, we remind that rj,i = 0 for i ∉ λ(j) and lk,j = 0 for j ∉

that depend on the matrix A. If we relate these elements to the join tree induced by the fusion algorithm, we remind that rj,i = 0 for i ∉ λ(j) and lk,j = 0 for j ∉ ![]() (j). Thus, the two matrices L and R maintain the zero-pattern described by the join tree. The computation of the LDR-decomposition in the fusion algorithm can be interpreted as a compilation of the matrix A, which can then be used to solve AX = b for different vectors b. This will be shown next.

(j). Thus, the two matrices L and R maintain the zero-pattern described by the join tree. The computation of the LDR-decomposition in the fusion algorithm can be interpreted as a compilation of the matrix A, which can then be used to solve AX = b for different vectors b. This will be shown next.

Given some vector b and the LV- or LDR-decomposition of the matrix A, we solve the system AX = b by first solving LY = b, yielding the solution y, and then VX = DRX = y. This solution process is simple, since both matrices L and V are triangular: We first solve the system LY = b by executing the collect algorithm on the join tree. In fact, starting with node 1 we directly obtain the solution value y1 = b1 from the first equation Y1 = b1. We introduce this value into the equations j ![]()

![]() (1) − {1} and obtain the new system

(1) − {1} and obtain the new system

![]()

Defining ![]() = bj − lj,1b1, the message sent to the child node ch(1) is the set

= bj − lj,1b1, the message sent to the child node ch(1) is the set

![]()

This defines the new lower triangular system after elimination of variable Y1. In the k-th step, the equation for Yk on the node k is Yk = ![]() . The solution value yk = bk(k-1) is introduced into the equations j

. The solution value yk = bk(k-1) is introduced into the equations j ![]()

![]() (k) − {k} to obtain

(k) − {k} to obtain

![]()

So, the message sent to the child node ch(k) is the set

![]()

with bk(k) = bj(k-1) − lj,kbk(k-1). In this way, the solution yk for k = 1, …, n is obtained. It is important to remark that in the general case, the collect algorithm only computes the solution for the variable Yn in the root node, and the values for the remaining variables are found by backward substitution in the distribute phase. In the case at hand, we are dealing with triangular systems and therefore obtain all solutions y of the system LY = b already from the collect algorithm.

In a second step, we execute the distribute algorithm to solve the system VX = DRX = y, respectively RX = D−1y. The root node n contains the equation Xn = yn/dn,n from which we determine the solution value xn = yn/dn,n. This value is sent to node n − 1 and replaces there the variable Xn in the equations from RX = D−1y that are contained in the node n − 1. More precisely, we compute for

![]()

for j ![]() λ(n − 1), according to equation (9.12). In the general case, node k receives a message from node ch(k), which consist of

λ(n − 1), according to equation (9.12). In the general case, node k receives a message from node ch(k), which consist of

![]()

for j ![]() ≤(k). Altogether, this permits to solve the triangular system of equations on the node k for k = n, …, 1.

≤(k). Altogether, this permits to solve the triangular system of equations on the node k for k = n, …, 1.

We again point out that the solution of AX = b for an arbitrary, regular matrix A required in the previous section a complete run of the collect and distribute algorithm. But if we dispose of an LDR-decomposition of A, we may solve the system LY = b with a single run of the collect algorithm, because the involved matrix L is lower triangular. Likewise, we directly obtain the solution to the system DR = y by a single run of the distribute algorithm, because the matrix DR is upper triangular. This symmetry between the application of collect and distribute is worth noting.

We apply this compilation approach to Example 9.1 above:

Example 9.2 In Example 9.1 we determined the elements of the matrices L and R,

We remark how the zero-pattern controlled by the join tree of Figure 9.3 is still reflected, both in the matrix L as well as in the matrix R. With the diagonal matrix below we obtain the LDR-decomposition A = LDR:

In the first step, we solve the system LY = b, where b is given in Example 9.3. We again assign the first two equations of this system to the first join tree node and the last two equations to the second node to control fill-ins. In the first node, we obtain y1 = 2 and introduce this value into the second equation, whose right-hand side becomes b2(2) = b2 − l2,1y1 = −1. This value is sent to node 2, where now the solution value y2 = b2(1) = −1 is obtained. Also, node 2 computes the message b3(3) = b3 − l3,2y2 = 2 and sends it to node 3. Node 3 computes y3 = 2 and sends the message b4(3) = b4 − l4,3y3 = 14/3 to node 4, where we finally obtain y4 = 14/3.

The solution of RX = D−1y by the distribute algorithm, using the values y computed in the collect phase, is initiated by the root node 4. We obtain on node 4 the value x4 = y4/d4,4 = 14. This value is sent to node 3, where we obtain x3 = y3/d3,3 − r3,4x4 = −4 and send this message to node 2. Continuing this process, we obtain x2 = y2/d2,2 − r2,3x3 = −3 in node 2 and x1 = y1/d1,1 − r1,2x2 = 5 in node 1. This terminates the distribute phase. We note that on all nodes j only variables from the node label λ(j) are involved.

This ends our first discussion of regular systems. An important special case of regular systems is provided by symmetric, positive definite systems. They allow to refine the present approach as shown in the following section.

9.2 SYMMETRIC, POSITIVE DEFINITE MATRICES

The method of LDR-decomposition presented in the previous section becomes especially interesting in the case of linear systems with symmetric, positive definite matrices. Such systems arise frequently in electrical network analysis, analysis of structural systems and hydraulic problems. Further, they arise in the classical method of least squares, which will be discussed in Section 9.2.5 as an application of the results developed here. In all these cases, the exploitation of sparsity is important. However, it turns out that in the case of systems of linear equations with symmetric, positive definite matrices, the underlying algebra of affine solution spaces is of less interest and can be replaced by another valuation algebra. We first introduce this algebra, which interestingly is closely related to the valuation algebra of Gaussian potentials introduced in Instance 1.6. The reason for this is elucidated in Section 9.2.5, where systems of linear equations with symmetric, positive definite matrices are related to statistical problems.

9.2.1 Valuation Algebra of Symmetric Systems

To put the discussion into the general framework of valuation algebras, we consider variables X1, …, Xn together with the associated index set r = {1, …, n}. Vectors of variables X, as well as matrices A and vectors b then refer to certain subsets s ⊆ r. For instance, a system AX = b is said to be an s-system, if X is the variable vector whose components Xi have indices in s ⊆ r, A is a symmetric, positive definite s × s matrix and b is an s-vector. Such systems are fully determined by the pair (A, b) where A is an s × s matrix, b an s-vector for some s ⊆ r. These are the elements of the valuation algebra to be identified. Let Φs be the set of all pairs (A, b) relative to some subset s ⊆ r and define

![]()

By convention, we define for the empty set ![]() . The label of a pair (A, b) is defined as d(A, b) = s, if (A, b)

. The label of a pair (A, b) is defined as d(A, b) = s, if (A, b) ![]() Φs.

Φs.

In order to define the operations of combination and projection for elements in Φ, we first consider variable elimination in the system AX = b. Suppose (A, b) ![]() Φs, i.e. the system is over variables in s, and assume t ⊆ s. We want to eliminate the variables in the index set s − t. For this purpose, we decompose A as follows:

Φs, i.e. the system is over variables in s, and assume t ⊆ s. We want to eliminate the variables in the index set s − t. For this purpose, we decompose A as follows:

![]()

The system AX = b can then be written as

![]()

Solving the first part for X↓s − t and substituting this in the second part leads to the reduced system for X↓t

![]()

Here we use the fact that any diagonal submatrix of a symmetric, positive definite matrix is regular. We define

and remark that ![]() is still symmetric, positive definite.

is still symmetric, positive definite.

Theorem 9.1 If x is the unique solution of AX = b, then x↓t is the unique solution to ![]() . Conversely, if y is the unique solution of

. Conversely, if y is the unique solution of ![]() and

and

![]()

then (x, y) is the unique solution of AX = b.

Proof: The proof is straightforward, using the definitions of ![]() . Since x is a solution of AX = b, it holds that

. Since x is a solution of AX = b, it holds that

![]()

hence

![]()

This proves the first part of the theorem. The second part follows from the first subsystem above, if x↓s-t is replaced by x and x↓t by y and if it is multiplied on both sides by (A↓s-t,s-t)−1.

This theorem justifies to define the operation of projection for elements in Φ by

for t ⊆ d(A, b). It is well-known in matrix algebra that ![]() may also be written as

may also be written as

![]()

A proof can for example be found in (Harville, 1997).

Next, consider two systems A1X1 = b1 and A2X2 = b2. What is a sensible way to combine these two systems into a new system? Clearly, taking simply the union of both systems as we did so far with systems of linear equations makes no sense, because the matrix of the combined system will no more be symmetric. Moreover, the combined system will most of the time have no solution anymore. We propose alternatively to add the matrices and the right-hand vectors component-wise. More precisely, we define for d(A1, b1) = s and d(A2, b2) = t,

At this point, the proposed definition is rather ad hoc. It will be justified by the success of local computation and even more strongly by a semantical interpretation given in the following Section 9.2.5. For the time being, the question is whether (Φ, D) together with the operations of labeling, projection and combination satisfies the axioms of a valuation algebra. Instead of verifying the axioms directly, we consider the mapping (A, b) ![]() (A, u), where u = A−1b. Let ψ be the set of all pairs (A, u), where A is a symmetric, positive definite s × s matrix and u an s-vector. This mapping is a surjection between Φ and ψ. We next look at how combination and projection in Φ is mapped to ψ. Let (A1, b1) and (A2, b2) belong to Φ with labels s and t, respectively. Then, by the definition of combination above, (A1, b1)⊗(A2, b2) = (A, b) with

(A, u), where u = A−1b. Let ψ be the set of all pairs (A, u), where A is a symmetric, positive definite s × s matrix and u an s-vector. This mapping is a surjection between Φ and ψ. We next look at how combination and projection in Φ is mapped to ψ. Let (A1, b1) and (A2, b2) belong to Φ with labels s and t, respectively. Then, by the definition of combination above, (A1, b1)⊗(A2, b2) = (A, b) with

![]()

Hence, (A1, b1) ⊗ (A2, b2) maps to (A, u) with

where u1 = A1−1b1 and u1 = A2−1b2. Consequently, a combination of two elements in Φ is mapped as follows to an element in ψ

![]()

with

![]()

and

![]()

Turning to projection, let (A, b) be an element in Φ with domain s, which is mapped to (A, u) with u = A−1b. If t ⊆ s, then (A, b)↓t represents the system ![]() with the unique solution u↓t as a consequence of Theorem 9.1. This means that (A, b)↓t maps to

with the unique solution u↓t as a consequence of Theorem 9.1. This means that (A, b)↓t maps to

![]()

where

![]()

We thus have the following projection rule in ψ:

![]()

We observe that combination and projection in ψ correspond exactly to the operations of Gaussian potentials introduced in Instance 1.6 and we therefore know that ![]() ψ, D

ψ, D![]() forms a valuation algebra. Moreover, due to the introduced mapping,

forms a valuation algebra. Moreover, due to the introduced mapping, ![]() ψ, D

ψ, D![]() and

and ![]() ψ, D

ψ, D![]() are isomorphic which implies that

are isomorphic which implies that ![]() Φ, D

Φ, D![]() is a valuation algebra. We proved the following theorem:

is a valuation algebra. We proved the following theorem:

Theorem 9.2 The algebra of linear systems with symmetric, positive definite matrices is isomorphic to the valuation algebra of Gaussian potentials.

Let us have a closer look at the combination of (A1, b1) and (A2, b2) with d(A1, b1) = s and d(A2, b2) = t. If X denotes an (s ∪ t) variable vector, these valuations represent the systems A1X↓s = b1 and A2X↓t = b2 with symmetric, positive definite matrices. We consider the system AX = b which is represented by (A1, b1) ⊗ (A2, b2) such that

![]()

In order to compute the solution to the system AX = b, we proceed in two steps by first projecting to t, i.e. eliminating the variables X↓s-t, and then solving the system ![]() . This is justified by Theorem 9.1. We decompose the matrices and vectors according to the sets s − t, s

. This is justified by Theorem 9.1. We decompose the matrices and vectors according to the sets s − t, s ![]() t and t − s and write the system as

t and t − s and write the system as

Eliminating the variables X↓s − t means to solve the first subsystem for the variables X↓s-t and to substitute the solution into the other equations, which gives the system

![]()

Note that this system is represented by either

(9.16) ![]()

This illustrates the combination axiom and shows how local computation can be applied to symmetric, positive definite systems. If the second representation is used, then the variables X↓s-t in the first system are eliminated and the result is combined to the second system. It would be reasonable to apply the local computation methods of Section 9.1.2 and 9.1.3 for the variable elimination process. This however is not possible because idempotency does not hold anymore in this algebra. Symmetric, positive definite systems only form a valuation algebra but not an information algebra. For local computation, this means that we must apply the Shenoy-Shafer architecture. However, Theorem 9.1 offers an alternative possibility based on solution construction which comes near to idempotency. This is exploited in the following section, and variable elimination and LDR-decomposition with symmetric systems is examined in Section 9.2.3.

9.2.2 Solving Symmetric Systems

We now return to local computation as a means to control fill-ins. Note that zero-patterns in a symmetric matrix are symmetric too. Consequently, symmetric decompositions of the matrix A as shown in Figure 9.1 are not possible. This implies that the underlying valuation algebra of affine spaces introduced in Section 7.2 and used so far is no longer of interest. In other words, combination as intersection or join of affine spaces does not really reflect the natural decomposition of symmetric systems. Instead, this is achieved by adding symmetric, positive definite matrices as showed in the foregoing section. The corresponding algebra will now be used to control fill-ins.

Consider a join tree (V, E, λ, D) with the labeling function λ: V → D = P(r) for r = {1, …, n}. We number the nodes from 1 to m = |V| and assume that any node i ![]() V in this join tree covers a pair

V in this join tree covers a pair ![]() i = (Ai, bi) with ωi = d(

i = (Ai, bi) with ωi = d(![]() i). They consist of a symmetric, positive definite ωi × ωi matrix Ai and an ωi-vector bi. We further assume that ωi

i). They consist of a symmetric, positive definite ωi × ωi matrix Ai and an ωi-vector bi. We further assume that ωi ![]() λ(i) and ω1 ∪ … ∪ ωm = r. In other words,

λ(i) and ω1 ∪ … ∪ ωm = r. In other words, ![]() i are elements of the valuation algebra introduced in Section 9.2.1 and (V, E, λ, D) is a covering join tree for the factorization

i are elements of the valuation algebra introduced in Section 9.2.1 and (V, E, λ, D) is a covering join tree for the factorization

according to Definition 3.8. As usual, the nodes of the join tree are numbered such that if node j is on the path form node i to node m, then i < j. We will see in Section 9.2.4 how such decompositions can be produced, and it will be shown in 9.2.5 that factorizations of symmetric, positive definite systems may also occur naturally in certain applications. The objective function ![]() represents the symmetric, positive definite system AX = b, where

represents the symmetric, positive definite system AX = b, where

![]()

and

![]()

The join tree exhibits the sparsity of the matrix A. We have aj,h = 0, if j and h do not belong both to some ωi. Its zero-pattern is denoted by the set

Given a factorized system ![]() = (A, b) =

= (A, b) = ![]() 1 ⊗ … ⊗

1 ⊗ … ⊗ ![]() m and some covering join tree, we next focus on the solution process using local computation. As mentioned above, this algebra is fundamentally different from the algebra of affine spaces and does not directly yield solution sets. Instead, we aim at first executing a local computation scheme and later build the solution x = A−1b using a generic solution construction algorithm from Chapter 8. Hence, we start by introducing a suitable notion of configuration extension sets for symmetric, positive define systems, motivated by Theorem 9.1: For a t-vector of real numbers x,

m and some covering join tree, we next focus on the solution process using local computation. As mentioned above, this algebra is fundamentally different from the algebra of affine spaces and does not directly yield solution sets. Instead, we aim at first executing a local computation scheme and later build the solution x = A−1b using a generic solution construction algorithm from Chapter 8. Hence, we start by introducing a suitable notion of configuration extension sets for symmetric, positive define systems, motivated by Theorem 9.1: For a t-vector of real numbers x, ![]() = (A, b) and t ⊆ d(

= (A, b) and t ⊆ d(![]() ) we define

) we define

For later reference we prove the following property:

Lemma 9.2 Assume ![]() 1 = (A, b1) and

1 = (A, b1) and ![]() 2 = (A, b2) with d(

2 = (A, b2) with d(![]() 1) = d(

1) = d(![]() 2) = s and t ⊆ u ⊆ s. If

2) = s and t ⊆ u ⊆ s. If

![]()

then it holds for all t-vectors x that

![]()

Proof: By equation (9.19) and ![]() we have for i = 1, 2

we have for i = 1, 2

The statement of the lemma then follows directly from:

Based on this lemma, we show that the definition of equation (9.19) complies with the general definition of configuration extension sets in Section 8.1.

Theorem 9.3 Solution extension sets in valuation algebras of symmetric, positive definite systems satisfy the property of Definition 8.1, i.e. for all ![]() = (A, b)

= (A, b) ![]() Φ with t ⊆ u ⊆ s = d(

Φ with t ⊆ u ⊆ s = d(![]() ) and all t-vectors x we have

) and all t-vectors x we have

![]()

Proof: If x is the partial solution to the system ![]() = (A, b), then the above statement follows directly from Theorem 9.1. In the following proof, we consider an arbitrary t-vector x and determine a new system

= (A, b), then the above statement follows directly from Theorem 9.1. In the following proof, we consider an arbitrary t-vector x and determine a new system ![]() 1 = (A, b1) to which x is the partial solution. The statement then holds for x with respect to this new system, and if

1 = (A, b1) to which x is the partial solution. The statement then holds for x with respect to this new system, and if ![]() 1 is chosen in such a way that Lemma 9.2 applies, then the statement also holds for x with respect to the original system

1 is chosen in such a way that Lemma 9.2 applies, then the statement also holds for x with respect to the original system ![]() . Hence, let x be an arbitrary t-vector. We consider the new system

. Hence, let x be an arbitrary t-vector. We consider the new system ![]() 1 = (A, b1) with

1 = (A, b1) with

![]()

and determine ![]() such that x is the solution to

such that x is the solution to ![]() , i.e.

, i.e.

![]()

and therefore

![]()

Since x is the solution to (A, b1)→t, it follows from Theorem 9.1 that (y, x) with

![]()

is the solution to the system (A, b1). We thus have

![]()

as a consequence of Lemma 9.2. Then, since (y,x) is the solution to (A,b1), it follows from Theorem 9.1 that (y↓u−t, x) is the solution to (A, b1)↓u. Hence,

![]()

by Lemma 9.2. Finally, since ![]() is the solution to (A, b1)↓u it again follows from Theorem 9.1 that (y↓u−t,x) is the solution to (A, b1). We have

is the solution to (A, b1)↓u it again follows from Theorem 9.1 that (y↓u−t,x) is the solution to (A, b1). We have

![]()

Altogether, this show that for an arbitrary t-vector x we have

![]()

Next, we specialize general solution sets from Definition 8.2 to symmetric, positive definite systems. For ![]() = (A, b)

= (A, b) ![]() Φ and t =

Φ and t = ![]() , it follows from equation (9.19) that

, it follows from equation (9.19) that

(9.20) ![]()

This shows that c![]() indeed corresponds to the singleton set of the unique solution x = A−1b to the symmetric, positive definite system AX = b. We therefore conclude that the generic solution construction procedure of Section 8.2.1 could be applied to build c

indeed corresponds to the singleton set of the unique solution x = A−1b to the symmetric, positive definite system AX = b. We therefore conclude that the generic solution construction procedure of Section 8.2.1 could be applied to build c![]() based on the results of a multi-query local computation scheme. However, the same is also possible using the results of a single-query architecture only. This follows by verifying the property of Theorem 8.3:

based on the results of a multi-query local computation scheme. However, the same is also possible using the results of a single-query architecture only. This follows by verifying the property of Theorem 8.3:

Lemma 9.3 Symmetric, positive definite systems satisfy the property that for all ψ1, ψ2 ![]() Φ with d(ψ1) = s, d(ψ2) = t, s ⊆ u ⊆ s ∪ t and u-vector x we have

Φ with d(ψ1) = s, d(ψ2) = t, s ⊆ u ⊆ s ∪ t and u-vector x we have

![]()

Proof: We observe the following identities for ψ1 = (A1, b1) and ψ2 = (A2, b2):

![]()

and similarly

![]()

It then follows that

Hence, given the results of a single-query local computation architecture, we may apply Algorithm 8.4 to build the unique solution x = A−1b of a factorized system ![]() = (A, b) =

= (A, b) = ![]() 1 ⊗ … ⊗

1 ⊗ … ⊗ ![]() m with

m with ![]() i = (Ai, bi) for i = 1, …, m. At this point, we should remember that the valuation algebra of symmetric, positive definite systems is isomorphic to the valuation algebra of Gaussian potentials and does not provide neutral elements; see Instance 3.5. Hence, the domain ωi = d(

i = (Ai, bi) for i = 1, …, m. At this point, we should remember that the valuation algebra of symmetric, positive definite systems is isomorphic to the valuation algebra of Gaussian potentials and does not provide neutral elements; see Instance 3.5. Hence, the domain ωi = d(![]() i) of node i

i) of node i ![]() V in the join tree does not necessarily correspond to the node label λ(i). We only have ωi ⊆ λ(i). Consequently, we must choose the generalized collect algorithm from Section 3.10 to explain the message-passing view of this local computation based solution. The run of this algorithm followed by the solution construction process is delineated next, using the notation of equation (3.42) from Section 3.10.

V in the join tree does not necessarily correspond to the node label λ(i). We only have ωi ⊆ λ(i). Consequently, we must choose the generalized collect algorithm from Section 3.10 to explain the message-passing view of this local computation based solution. The run of this algorithm followed by the solution construction process is delineated next, using the notation of equation (3.42) from Section 3.10.

At step i = 1, …, m −1, node i sends the message

![]()

to its child node ch(i), where the message is combined with the current node content

![]()

The domain of the node content updates to:

![]()

For all other nodes j ≠ ch(i) we set

![]()

with

![]()

for all i = 1, …, m. We now add an additional step that is not part of the general collect algorithm. When node i computes the message for its child node, it has to eliminate the variables

from its node content. This equality is due to Lemma 4.2. The eliminated variables satisfy the system

For later use in the solution construction phase, we store in node i the matrices on the right-hand side of the equation. This is the only modification with respect to the generalized collect algorithm. If we repeat this process up to i = m, we obtain on node m the pair

![]()

as a consequence of Theorem 3.7. The content of node m at the end of the collect phase represents the symmetric system

![]()

Solving this system gives us the partial solution set ![]() , as a consequence of Lemma 9.20 and Lemma 8.1. We now follow the solution construction algorithm of Section 8.2.3 starting in the root node m. At step i = m −1, …, 1 node i

, as a consequence of Lemma 9.20 and Lemma 8.1. We now follow the solution construction algorithm of Section 8.2.3 starting in the root node m. At step i = m −1, …, 1 node i ![]() V receives the partial solution set

V receives the partial solution set

![]()

from its child node ch(i). Using the matrices stored in (9.22), node i computes

(9.23) ![]()

to obtain the partial solution x↓λ(i) = ![]() with respect to its proper node label. At the end of the solution construction process, each node i

with respect to its proper node label. At the end of the solution construction process, each node i ![]() λ(i) contains the solution x to the system AX = b projected to its node label, and the complete solution x can simply be obtained by assembling these partial solutions. This shows how factorized, symmetric, positive definite systems are solved using local computation. The sparsity reflected by the join tree is maintained all the time. A very similar scheme for the computation of solutions in quasi-regular semiring systems will be presented in Section 9.3.2. Here, we next focus on the adaption of LDR-decomposition to symmetric, positive definite systems.

λ(i) contains the solution x to the system AX = b projected to its node label, and the complete solution x can simply be obtained by assembling these partial solutions. This shows how factorized, symmetric, positive definite systems are solved using local computation. The sparsity reflected by the join tree is maintained all the time. A very similar scheme for the computation of solutions in quasi-regular semiring systems will be presented in Section 9.3.2. Here, we next focus on the adaption of LDR-decomposition to symmetric, positive definite systems.

9.2.3 Symmetric Gaussian Elimination

Consider again a system AX = b, where A is a symmetric, positive definite n × n matrix, and X and b are vectors with corresponding dimensions. Since positive definite systems are always regular, we could apply the local computation scheme based on the LDR-decomposition from Section 9.1.3, if we have to solve this system multiple times for different vectors b. However, here we are dealing with a different valuation algebra such that parts of this discussion must be revisited. Moreover, the approach presented in this section does not only exploit the sparsity of the system, but also benefits from the symmetry in the matrix A. Because this matrix is regular, we can use any variable elimination sequence in the Gaussian method to solve the system. As in Section 9.1.3, we renumber the variables such that they are eliminated in the order X1, …, Xn. We only need to permute the columns and the rows of the matrix A accordingly. By the symmetry of the matrix, the same permutation must be used for both columns and rows. In addition, it follows by symmetry that the LDR-decomposition satisfies R = LT such that

![]()

For positive definite matrices, D has positive diagonal entries. It also holds that

![]()

where G = LD1/2. This decomposition is due to Choleski (Forsythe & Moler, 1967).

The symmetry of the matrix A can be exploited for the elimination of variables, where it is now sufficient to store the lower (or upper) half of the matrix A and all derived matrices. Then, equation (9.9) still applies: For j, i = k + 1, …, n we have

and according to equation (9.8) also

![]()

Only the elements ![]() for k ≤ j ≤ i must be computed due to the symmetry in the matrix A. We again remark that in a symmetric, positive definite matrix the diagonal elements dk,k are positive in each step k = 1, …, n. They can thus be used as pivot elements (Schwarz, 1997). Once the decomposition A = LDLT is found, the solution to the system is obtained by solving the triangular systems LY = b, DZ = Y and LTX = Z, or alternatively by solving LY = b and LTX = D−1Y.

for k ≤ j ≤ i must be computed due to the symmetry in the matrix A. We again remark that in a symmetric, positive definite matrix the diagonal elements dk,k are positive in each step k = 1, …, n. They can thus be used as pivot elements (Schwarz, 1997). Once the decomposition A = LDLT is found, the solution to the system is obtained by solving the triangular systems LY = b, DZ = Y and LTX = Z, or alternatively by solving LY = b and LTX = D−1Y.

We now return to local computation to avoid fill-ins in the set of zero elements Z given in equation (9.18). Since we are still dealing with a valuation algebra without neutral elements, we again assume a covering join tree for the factorization of equation (9.17) and further that the join tree edges are directed towards the root node m. As usual, the nodes are numbered such that if node j is on the path form node i to node m, then i < j. Each node i ![]() V contains a factor

V contains a factor ![]() i with ωi = d(

i with ωi = d(![]() i) ⊆ λ(i). If we execute the collect algorithm as in Section 9.2.2, the computation of each message μi→ch(i) requires to eliminate the variables in

i) ⊆ λ(i). If we execute the collect algorithm as in Section 9.2.2, the computation of each message μi→ch(i) requires to eliminate the variables in

![]()

See equation 9.21. This allows us to determine a corresponding variable elimination sequence. Because the matrix A is regular, we may without loss of generality assume that the variables are numbered such that X1, …, Xn is a variable elimination sequence that corresponds to the sequence of messages. Note that a possible renumbering of variables matches a permutation of the rows and columns of the matrix A and the vector b.

We claim that the factors ![]() remain zero for all elimination steps k, if (j, h)

remain zero for all elimination steps k, if (j, h) ![]() Z.

Z.

Theorem 9.4 For all (j, h) ![]() Z and k = 0, …, n − 1 we have

Z and k = 0, …, n − 1 we have ![]() and lj,h = 0.

and lj,h = 0.

Proof: We prove the theorem by induction: For k = 0 the proposition holds by the definition of Z. We note also that lj,1 = 0 for all (j, 1) ![]() Z. This follows from equation (9.24). So, let us assume that the proposition holds for k − 1 and also that lj,k = 0 for all (j, k)

Z. This follows from equation (9.24). So, let us assume that the proposition holds for k − 1 and also that lj,k = 0 for all (j, k) ![]() Z. From equation (9.8) follows that

Z. From equation (9.8) follows that ![]() = 0, if (j, h)

= 0, if (j, h) ![]() Z. Then, by equation (9.24) it also follows that lj,k+1 = 0 for (j, k + 1)

Z. Then, by equation (9.24) it also follows that lj,k+1 = 0 for (j, k + 1) ![]() Z.

Z.

The theorem states that the non-zero pattern defined by the join tree decomposition of the matrix A is maintained in the LDLT factorization of A.

We next observe that all diagonal, square submatrices of a lower triangular matrix are lower triangular too. So, for s ⊆ r = {X1, …, Xn} the submatrix L↓s,s is lower triangular as well as L↓t-s,t-s and ![]() with s

with s ![]() t = r. This situation is schematically represented in Figure 9.4.

t = r. This situation is schematically represented in Figure 9.4.

Figure 9.4 Square submatrices of a lower triangular matrix are lower triangular too.

Subsequently, we assume that the factors ![]() or the l-elements are still available from an earlier run of the collect algorithm, see equation (9.24). This means that each node i

or the l-elements are still available from an earlier run of the collect algorithm, see equation (9.24). This means that each node i ![]() V contains the matrix

V contains the matrix ![]() which is lower triangular as remarked above. Using these matrices, we now discuss how the system AX = b can be solved by LDLT-decomposition and local computation. In a first step, we solve the system LY = b by a similar collect phase as in the foregoing Section 9.2.2. Thus, we directly explain the computations performed by node i

which is lower triangular as remarked above. Using these matrices, we now discuss how the system AX = b can be solved by LDLT-decomposition and local computation. In a first step, we solve the system LY = b by a similar collect phase as in the foregoing Section 9.2.2. Thus, we directly explain the computations performed by node i ![]() V in the step i = 1, …, m − 1. At step i of the generalized collect algorithm, node i computes the message to its child node by eliminating the variables

V in the step i = 1, …, m − 1. At step i of the generalized collect algorithm, node i computes the message to its child node by eliminating the variables

![]()

(see equation (9.21)) from the system

![]()

From the decomposition

we obtain

![]()

Note that due to the triangularity of the involved matrix, it is a simple stepwise process to compute ![]() . This solution is introduced into the second part of the system which gives after rearrangement

. This solution is introduced into the second part of the system which gives after rearrangement

![]()

We define the vector

where u is the union of all λ(k) for k = i, …, m. So, node i sends the message

![]()

to its child node. The child node combines the message with its content ![]() :

:

We thus obtain for the domain of the updated node content:

![]()

The content of all other nodes does not change at step i. At the end of the collect algorithm, the root node m contains the system

![]()

from which we obtain

![]()

This clearly shows how the collect algorithm operates locally on the join tree of the decomposition (9.17). At the end of the collect algorithm, each node i = 1, …, m-1 contains the partial solution y↓λ(i)-λ(ch(i)), and the root node contains y↓λ(m). Remark also that no solution extension phase is necessary, because the involved matrices are all lower triangular. The total solution y could be obtained from Lemma 8.4, although this is not necessary for the second part of the process.

As in Section 9.2.2 we now solve the system DLTX = Y by solution extension. The root node m solves the system

![]()

and finds the partial solution

These computations are simple because DLT is upper triangular. Assume now that node i = m−1, …, 1 obtains the partial solution ![]() from its child node. Then, node i computes equation (9.22) with A replaced by DLT and b by y:

from its child node. Then, node i computes equation (9.22) with A replaced by DLT and b by y:

and obtains

![]()

However, there is actually no need to compute the inverse matrices that occur in this formula explicitly. Instead, x↓λ(i)-λ(ch(i)) corresponds to the solution to the system

and solving this system is simple because only triangular matrices are involved. This process is repeated for i = m − 1, …, 1. At the end of the solution construction process, each node i ![]() V contains the partial solution x↓λ(i) which can then be aggregated to the total solution x of AX = b.

V contains the partial solution x↓λ(i) which can then be aggregated to the total solution x of AX = b.

We given an example of this process:

Example 9.3 Consider the join tree of Figure 9.5 where the nodes are labeled with index sets. This is suitable because we are going to solve two systems with different variable vectors X on the same join tree. The variables are already numbered according to an elimination sequence that corresponds to this join tree, if node 3 is taken as root. The symmetric, positive definite matrices A1, A2 and A3 are then written as

Figure 9.5 The join tree of Example 9.3.

Similarly, we define the vectors b1, b2 and b3 associated with the three matrices. This defines three valuation ![]() i = (Ai, bi) for i = 1,2,3, which combine to the total system

i = (Ai, bi) for i = 1,2,3, which combine to the total system ![]() =

= ![]() representing the valuation

representing the valuation ![]() = (A, b) with

= (A, b) with

![]()

and

![]()

Note also that all join tree nodes are filled in this simple example, i.e. ωi = λ(z). We further observe that the matrix A has the following non-zero structure

where × denotes a non-zero element.

If we execute the collect algorithm on the join tree of Figure 9.5, we first eliminate the variables X1 and X2 from the system in node 1 to obtain the message sent from node 1 to node 3. Then, variable X3 is eliminated for the message of node 2 to node 3. Finally, on node 3 the variables X4 and X5 are eliminated. This gives the lower triangular matrix L with the following non-zero structure:

We see that this matrix maintains the zero-pattern captured by the join tree and exhibited in the matrix A. Note also that the submatrices like L↓{1,2,4},{1,2,4} and L↓{3,4},{3,4} are still lower triangular.

Let us now consider the computations of the collect phase for solving LY = b in more detail. In the first step, we eliminate the variables Y1 and Y1 to obtain the message that is sent from node 1 to node 3. We thus have

![]()

Solving this system is very simple because the matrix L↓{1,2},{1,2} is lower triangular. In fact, from

![]()

we obtain

![]()

This solution is introduced into the fourth equation on node 3 to obtain

![]()

The message of node 2 to node 3 determines the value of Y3, which then allows to compute the solution values of variables Y4, Y5 and Y6. This builds up the entire solution y of the system LY = b and concludes the collect phase.

The solution to the system AX = b is then obtained by solving DLTX = y by solution extension. The root node obtains from equation (9.25) the partial solution x↓{x4, x5, x6} and sends the message x↓{X4} to the nodes 1 and 2. The two receiving nodes compute the partial solutions with respect to their proper node label by equation (9.26). For example, node 1 solves the system

![]()

Finally, the total solution x is build from x↓{X4, X5, X6}, x↓{X1, x2} and x↓{X3}.