CHAPTER 3

COMPUTING SINGLE QUERIES

The foregoing chapter introduced the major computational task when dealing with valuation algebras, the inference or projection problem. Also, we gained a first insight into the different semantics that inference problems can adopt under specific valuation algebra instances. The logical next step consists now in the development of algorithms to solve general inference problems. In order to be generic, these algorithms must only be based on the valuation algebra operations without any further assumptions about the concrete, underlying formalism. But before this step, we should also raise the question of whether such algorithms are necessary at all, or, in other words, why a straightforward computation of inference problems is inadequate in most cases. To this end, remember that inference problems are defined by a knowledgebase whose factors combine to the objective function which has to be projected on the actual queries of interest. Naturally, this description can directly be understood as a trivial procedure for the computation of inference problems. However, the reader may have guessed that the complexity of this simple procedure puts us a spoke in our wheel. If the knowledgebase consists of ![]() valuations, then, as a consequence of the labeling axiom, computing the objective function

valuations, then, as a consequence of the labeling axiom, computing the objective function ![]() results in a valuation of domain

results in a valuation of domain ![]() . In many valuation algebra instances the size of valuations grows at least exponentially with the size of their domain. And even if the domains grow only polynomially, this growth may be prohibitive. Let us be more concrete and analyse the amount of memory that is used to store a valuation. In Definition 3.3 this measure will be called weight function. Since indicator functions, arithmetic potentials and all their connatural formalisms (see Chapter 5) can be represented in tabular form, their weight is bounded by the number of table entries which corresponds to the number of configurations of the domain. Clearly, this is exponential in the number of variables. Even worse is the situation for set potentials and all instances of Section 5.7 whose weights behave super-exponentially in the worst case. If we further assume that the time complexity of the valuation algebra operations is related to the weight of their factors, we may come to the following conclusion: it is in most cases intractable to compute the objective function

. In many valuation algebra instances the size of valuations grows at least exponentially with the size of their domain. And even if the domains grow only polynomially, this growth may be prohibitive. Let us be more concrete and analyse the amount of memory that is used to store a valuation. In Definition 3.3 this measure will be called weight function. Since indicator functions, arithmetic potentials and all their connatural formalisms (see Chapter 5) can be represented in tabular form, their weight is bounded by the number of table entries which corresponds to the number of configurations of the domain. Clearly, this is exponential in the number of variables. Even worse is the situation for set potentials and all instances of Section 5.7 whose weights behave super-exponentially in the worst case. If we further assume that the time complexity of the valuation algebra operations is related to the weight of their factors, we may come to the following conclusion: it is in most cases intractable to compute the objective function ![]() explicitly. Second, the domain acts as the crucial point for complexity when dealing with valuation algebras. Consequently, the solution of inference problems is intractable unless algorithms are used which confine the domain size of all intermediate results. This essentially is the promise of local computation that organizes the computations in such a way that domains do not grow significantly. Before we start the discussion of local computation methods, we would like to point out that not all valuation algebras are subject to the above complexity concerns. There are indeed instances that have a pure polynomial behaviour, and this also seems to be the reason why these formalisms have rarely been considered in the context of local computation. However, we will introduce a family of such formalisms in Chapter 6 and also show that there are good reasons to apply local computation to polynomial formalisms.

explicitly. Second, the domain acts as the crucial point for complexity when dealing with valuation algebras. Consequently, the solution of inference problems is intractable unless algorithms are used which confine the domain size of all intermediate results. This essentially is the promise of local computation that organizes the computations in such a way that domains do not grow significantly. Before we start the discussion of local computation methods, we would like to point out that not all valuation algebras are subject to the above complexity concerns. There are indeed instances that have a pure polynomial behaviour, and this also seems to be the reason why these formalisms have rarely been considered in the context of local computation. However, we will introduce a family of such formalisms in Chapter 6 and also show that there are good reasons to apply local computation to polynomial formalisms.

This first chapter about local computation techniques focuses on the solution of single-query inference problems. We start with the simplest and most basic local computation scheme called fusion or bucket elimination algorithm. Then, a graphical representation of the fusion process is introduced that brings the fundamental ingredient of local computation to light: the important concept of a join tree. On the one hand, join trees allow giving more detailed information about the complexity gain of local computation compared to the trivial approach discussed above. On the other hand, they represent the required structure for the introduction of a second and more general local computation scheme for the computation of single queries called collect algorithms. Finally, we are going to reconsider the inference problem of the discrete Fourier transform from Section 2.3 and show in a case study that applying these schemes reveals the complexity of the fast Fourier transform. This shows that applying local computation may also be worthwhile for polynomial problems.

The fusion or bucket elimination algorithm is a simple local computation scheme that is based on variable elimination instead of projection. Therefore, we first investigate how the operation of projection in a valuation algebra can (almost) equivalently be replaced by the operation of variable elimination.

3.1 VALUATION ALGEBRAS WITH VARIABLE ELIMINATION

The axiomatic system of a valuation algebra given in Chapter 1 is based on a universe of variables r whose finite subsets form the set of domains D. This allows us to replace the operation of projection in the definition of a valuation algebra by another primitive operation called variable elimination which is sometimes more convenient as for the introduction of the fusion algorithm. Thus, let ![]() be a valuation algebra with D = P(r) and Φ being the set of all possible valuations with domains in D. For a valuation

be a valuation algebra with D = P(r) and Φ being the set of all possible valuations with domains in D. For a valuation ![]()

![]() Φ and a variable X

Φ and a variable X ![]() d(

d(![]() ), we define

), we define

Some important properties of variable elimination, that follow from this definition and the valuation algebra axioms, are pooled in the following lemma:

Lemma 3.1

Proof:

1. By the labeling axiom

![]()

2. By Property 1 and the transitivity axiom

3. Since Y ![]() x and Y

x and Y ![]() y we have x

y we have x ![]() (x

(x ![]() y) - {Y}

y) - {Y} ![]() ×

× ![]() y. Hence, we obtain by application of the combination axiom

y. Hence, we obtain by application of the combination axiom

According to equation (3.3) variables can be eliminated in any order with the same result. We may therefore define the consecutive elimination of a non-empty set of variables s = {X1, …, Xn} ![]() d(

d(![]() ) unambiguously by

) unambiguously by

(3.5) ![]()

This, on the other hand, permits expressing any projection by the elimination of all unrequested variables. For x ![]() d{

d{![]() }, we have

}, we have

To sum it up, equation (3.1) and (3.6) allow switching between projection and variable elimination ad libitum. Moreover, we may, as an alternative to definitions in Chapter 1, define the valuation algebra framework based on variable elimination instead of projection (Kohlas, 2003). Then, the properties in the above lemma replace their counterparts based on projection in the new axiomatic system, and projection can later be derived using equation (3.6) and the additional domain axiom (Shafer, 1991).

3.2 FUSION AND BUCKET ELIMINATION

The fusion (Shenoy, 1992b) and bucket elimination (Dechter, 1999) algorithms are the two simplest local computation schemes for the solution of single-query inference problems. They are both based on successive variable elimination and correspond essentially to traditional dynamic programming (Bertele & Brioschi, 1972). Applied to the valuation algebra of crisp constraints, bucket elimination is also known as adaptive consistency (Dechter & Pearl, 1987). We will see in this section that fusion and bucket elimination perform exactly the same computations and are therefore equivalent. On the other hand, their description is based on two different but strongly connected graphical structures that bring the two important perspectives of local computation to the front: the structural buildup of inference problems that makes local computation possible and the concept that determines its complexity. This section introduces both algorithms separately and proves their equivalence such that later considerations about complexity apply to both schemes at once. In doing so, we provide a slightly more general introduction which is not limited to queries consisting of single variables, as it is often the case for variable elimination schemes.

3.2.1 The Fusion Algorithm

Let ![]() be a valuation algebra with the operation of projection replaced by variable elimination. We further assume an inference problem given by its knowledgebase

be a valuation algebra with the operation of projection replaced by variable elimination. We further assume an inference problem given by its knowledgebase ![]() and a single query

and a single query ![]() where

where ![]() denotes the joint valuation

denotes the joint valuation ![]() . The domain d(

. The domain d(![]() ) must correspond to a set of variables {X1, …, Xn} with n = |d(

) must correspond to a set of variables {X1, …, Xn} with n = |d(![]() )|. The fusion algorithm (Shenoy, 1992b; Kohlas, 2003) is based on the important property that eliminating a variable X

)|. The fusion algorithm (Shenoy, 1992b; Kohlas, 2003) is based on the important property that eliminating a variable X ![]() d(

d(![]() ) only affects the knowledgebase factors whose domains contain X. This is the statement of the following theorem:

) only affects the knowledgebase factors whose domains contain X. This is the statement of the following theorem:

Theorem 3.1 Consider a valuation algebra ![]() and a factorization

and a factorization ![]() with

with ![]() i

i ![]() and 1 ≥ i ≤ m. For X

and 1 ≥ i ≤ m. For X ![]() d(

d(![]() ) we have

) we have

(3.7)

Proof: This theorem is a simple consequence of Lemma 3.1, Property 3. Since combination is associative and commutative, we may always write

We therefore conclude that eliminating variable X only requires combining the factors whose domains contain X. According to the labeling axiom, this creates a new factor of domain

(3.8) ![]()

that generally is much smaller than the total domain d(![]() ) of the objective function. The same considerations now apply successively to all variables that do not belong to the query x

) of the objective function. The same considerations now apply successively to all variables that do not belong to the query x ![]() d(

d(![]() ). This procedure is called fusion algorithm (Shenoy, 1992b) and can formally be defined as follows: let Fusy({

). This procedure is called fusion algorithm (Shenoy, 1992b) and can formally be defined as follows: let Fusy({![]() 1, …,

1, …, ![]() m}) denote the set of valuations after fusing {

m}) denote the set of valuations after fusing {![]() 1, …,

1, …, ![]() m} with respect to variable Y

m} with respect to variable Y ![]() d(

d(![]() 1)

1) ![]() …

… ![]() d(

d(![]() m),

m),

where

Using this notation, the result of Theorem 3.1 can equivalently be expressed as:

(3.10) ![]()

By repeated application and the commutativity of variable elimination we finally obtain the fusion algorithm for the computation of a query x; ![]() d(

d(![]() ). If {Y1, …, Yk} = d(

). If {Y1, …, Yk} = d(![]() ) - x are the variables to be eliminated, we have

) - x are the variables to be eliminated, we have

This is a generic algorithm for solving the single-query inference problem over an arbitrary valuation algebra as introduced in Chapter 1. Algorithm 3.1 provides a summary of the complete fusion process which stands out by its simplicity and compactness. Also, Example 3.1 expands the complete computation of fusion for the inference problem of Instance 2.1.

Algorithm 3.1 The Fusion Algorithm

Example 3.1 To exemplify the fusion algorithm, we reconsider the inference problem of the Bayesian network from Instance 2.1. The knowledgebase consists of eight valuations with domains: d(![]() 1) = {A}, d(

1) = {A}, d(![]() 2) = {A, T}, d(

2) = {A, T}, d(![]() 3) = {L, S}, d(

3) = {L, S}, d(![]() 4) = {B, S}, d(

4) = {B, S}, d(![]() 5) = {E,L,T}, d(

5) = {E,L,T}, d(![]() 6) = {E, X}, d(

6) = {E, X}, d(![]() 7) = {B,D,E} and d(

7) = {B,D,E} and d(![]() 8) = {S}. We choose {A, B, D} as query and eliminate all variables from {E, L, S, T, X} in the elimination sequence (X, S, L, T, E):

8) = {S}. We choose {A, B, D} as query and eliminate all variables from {E, L, S, T, X} in the elimination sequence (X, S, L, T, E):

Elimination of variable X:

![]()

Elimination of Variable S:

![]()

Elimination of Variable L:

![]()

Elimination of Variable T:

![]()

Elimination of Variable E:

![]()

We next present a graphical representation of the fusion process proposed by (Shafer, 1996). For this purpose, we maintain a set of domains that is initialized with the domains of all knowledgebase factors l = {d(![]() 1), …, d(

1), …, d(![]() m)}. In parallel, we build up a labeled graph (V, E, λ, D) which is assumed to be empty at the beginning, i.e. V =

m)}. In parallel, we build up a labeled graph (V, E, λ, D) which is assumed to be empty at the beginning, i.e. V = ![]() and E =

and E = ![]() . When variable Xi is eliminated during the fusion process, we remove all domains from the set l that contain variable Xi and compute their union

. When variable Xi is eliminated during the fusion process, we remove all domains from the set l that contain variable Xi and compute their union

We then add the new domain si - {Xi} to the set l which altogether updates to

![]()

This corresponds to the i-th step of the fusion algorithm where variable Xi ![]() d(

d(![]() ) - x is eliminated. Then, a new node i is constructed with label λ(i) = sj. This node is tagged with a color and added to the graph. We then go through all other colored graph nodes: if the label of such a node v

) - x is eliminated. Then, a new node i is constructed with label λ(i) = sj. This node is tagged with a color and added to the graph. We then go through all other colored graph nodes: if the label of such a node v ![]() V contains the variable Xi, then its color is removed and a new edge {i, v} is added to the graph. This whole process is repeated for all variables d(

V contains the variable Xi, then its color is removed and a new edge {i, v} is added to the graph. This whole process is repeated for all variables d(![]() ) - x to be eliminated. Finally, one last finalization step has to be performed, which corresponds to the combination in equation (3.11): at the end of the variable elimination process, the set l will not be empty. It consists either of the query variables that are never eliminated, or, if the query is empty, it contains an empty set. We therefore add a last, colored node to the graph whose domain corresponds to the union of all remaining elements in the domain set. Then, we link all nodes that are still tagged with a color to this new node and remove all node colors. Algorithm 3.2 gives a summery of the whole construction process and returns a labeled graph (V, E, λ, D). Since all node labels created during the graph construction process consist of the variables from d(

) - x to be eliminated. Finally, one last finalization step has to be performed, which corresponds to the combination in equation (3.11): at the end of the variable elimination process, the set l will not be empty. It consists either of the query variables that are never eliminated, or, if the query is empty, it contains an empty set. We therefore add a last, colored node to the graph whose domain corresponds to the union of all remaining elements in the domain set. Then, we link all nodes that are still tagged with a color to this new node and remove all node colors. Algorithm 3.2 gives a summery of the whole construction process and returns a labeled graph (V, E, λ, D). Since all node labels created during the graph construction process consist of the variables from d(![]() ), we may always set D = P(d(λ)). The yet mysterious name of this algorithm will be explained subsequently.

), we may always set D = P(d(λ)). The yet mysterious name of this algorithm will be explained subsequently.

Algorithm 3.2 Join Tree Construction

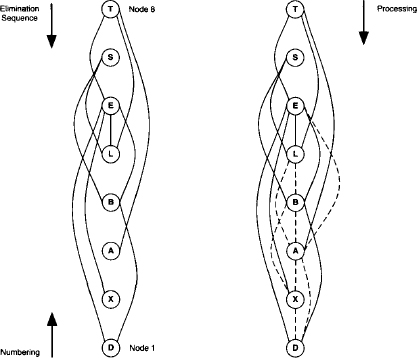

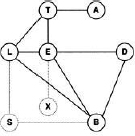

Example 3.2 We give an example of the graphical representation of the fusion algorithm based on the factor domains of the Bayesian network example from Instance 2.1. At the beginning, we have l = {{A}, {A, T}, {L, S}, {B, S}, {E, L, T}, {E, X}, {B, D, E}, {S}}. The query of this inference problem is {A, B, D} and the variables {E, L, S, T, X} have to be eliminated. We choose the elimination sequence (X, S, L, T, E) as in Example 3.1 and proceed according to the above description. Colored nodes are represented by a dashed border.

- Elimination of variable X:

- Elimination of variable S:

- Elimination of variable L:

- Elimination of variable T:

- Elimination of variable E:

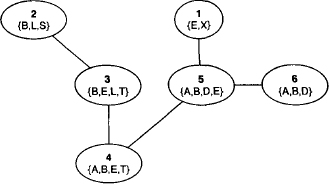

- End of variable elimination: after the elimination of variable E, only the last added node {A, B, E, D} is colored. This, however, is due to the particular structure of our knowledgebase, and it is quite possible that multiple colored nodes still exist after the elimination of the last variable. We next enter the finalization step and add a last node whose label corresponds to the union of the remaining elements in the domain list, i.e. {A} and {A, B, D}. This node is then connected to the colored node and all colors are removed. The result of the construction process is shown in Figure 3.1.

Figure 3.1 Finalization of the graphical fusion process.

3.2.2 Join Trees

Let us investigate the graphical structure that results from the fusion process in more detail. We first remark that each node can be given the number of the eliminated variable. For example, in Figure 3.1 the node labeled with {B, E, L, T} has number 3 because it was introduced during the elimination of the third variable that corresponds to L in the elimination sequence. This holds for all nodes except the one added in the finalization step. We therefore say that variable Xi has been eliminated in node i for 1 ≤ i ≤ |d(![]() ) - x|. If we further assign number |d(

) - x|. If we further assign number |d(![]() ) - x| + 1 to the finalization node, we see that if i < j implies that node j has been introduced after node i.

) - x| + 1 to the finalization node, we see that if i < j implies that node j has been introduced after node i.

Lemma 3.2 At the end of the fusion algorithm the graph G is a tree.

Proof: During the graph construction process, only colored nodes are connected, which always causes one of them to lose its color. It is therefore impossible to create cycles. But it may well be that different (unconnected) colored nodes exist after the variable elimination process. Each of them is part of an independent tree, and each tree contains exactly one colored node. In the finalization step, we add a further node and connect it with all the remaining colored nodes. The total graph G must therefore be a tree to which all nodes are connected.

This tree further satisfies the running intersection property which is sometimes also called Markov property or simply join tree property. Accordingly, such trees are named join trees, junction trees or Markov trees.

Definition 3.1 A labeled tree (V, E, λ, D) satisfies the running intersection property if for two nodes i, j ![]() V and X

V and X ![]() λ(i)

λ(i) ![]() λ(j), X

λ(j), X ![]() λ(k) for all nodes k on the path between i and j.

λ(k) for all nodes k on the path between i and j.

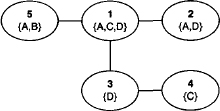

Example 3.3 Figure 3.2 reconsiders the tree G2 from Example 2.1 equipped with a labeling function λ for r = {A, B, C, D}. The labels are: λ(1) = {A, C, D}, λ(2) = {A, D}, λ(3) = {D}, λ(4) = {C} and λ(5) = {A, B}. This labeled tree is not a join tree since C ![]() λ(1)

λ(1) ![]() λ(4) but C

λ(4) but C ![]() λ(3). However, adding variable C to node label λ(3) will result in a join tree.

λ(3). However, adding variable C to node label λ(3) will result in a join tree.

Figure 3.2 This labeled tree is not a join tree since variable C is missing in node 3.

Lemma 3.3 At the end of the fusion algorithm the graph G is a join tree.

Proof: We already know that G is a tree. Select two nodes s′ and s″ and ![]() . We distinguish two cases: If X does not belong to the query, then X is eliminated in some node. We go down from s′ along to later nodes, until we arrive at the node where X is eliminated. Let k′ be the number of this node. Similarly, we go down from s″ to later nodes until we arrive at the elimination node of X. Assume the number of this node to be k". But X is eliminated in exactly one node. So k′ = k″ and the path from s′ to s″ goes from s′ to k′ = k″ and from there to s". X belongs to all nodes of the paths from s′ to k′ = k″ and s″ to k′ = k", hence to all nodes of the path from s′ to s". The second possibility is that X belongs to the query. Then, X has never been eliminated but belongs to the label of the node added in the finalization step. Thus, the two paths meet in this node and the same argument applies.

. We distinguish two cases: If X does not belong to the query, then X is eliminated in some node. We go down from s′ along to later nodes, until we arrive at the node where X is eliminated. Let k′ be the number of this node. Similarly, we go down from s″ to later nodes until we arrive at the elimination node of X. Assume the number of this node to be k". But X is eliminated in exactly one node. So k′ = k″ and the path from s′ to s″ goes from s′ to k′ = k″ and from there to s". X belongs to all nodes of the paths from s′ to k′ = k″ and s″ to k′ = k", hence to all nodes of the path from s′ to s". The second possibility is that X belongs to the query. Then, X has never been eliminated but belongs to the label of the node added in the finalization step. Thus, the two paths meet in this node and the same argument applies.

Example 3.4 In Figure 3.1, variable E is eliminated in node 5 but appears already in node 3, therefore it must also belong to the label of node 4. On the other hand, variable B belongs to the query and is therefore part of the node label introduced in the finalization step. This is node number 6. But variable B also appears in node 2 and therefore does so in the nodes 3 to 5.

3.2.3 The Bucket Elimination Algorithm

We reconsider the same setting for the introduction of bucket elimination: let ![]() be a valuation algebra with the operation of projection replaced by variable elimination. The knowledgebase of the inference problem is {

be a valuation algebra with the operation of projection replaced by variable elimination. The knowledgebase of the inference problem is {![]() 1, …,

1, …, ![]() m}

m} ![]() Φ and x

Φ and x ![]() d(

d(![]() ) = {X1, …, Xn} denotes the single query. Similarly to the fusion algorithm, the bucket elimination scheme (Dechter, 1999) is based on the important observation of Theorem 3.1 that eliminating a variable only affects the valuations whose domain contain this variable. We again choose an elimination sequence (Y1, …, Yk) for the variables in d(

) = {X1, …, Xn} denotes the single query. Similarly to the fusion algorithm, the bucket elimination scheme (Dechter, 1999) is based on the important observation of Theorem 3.1 that eliminating a variable only affects the valuations whose domain contain this variable. We again choose an elimination sequence (Y1, …, Yk) for the variables in d(![]() ) - x and imagine a bucket for each of these variables. These buckets are ordered with respect to the variable elimination sequence. We then partition the valuations in the knowledgebase as follows: all valuations whose domain contains the first variable Y1 are placed in the first bucket, which we subsequently denote by bucket1. This process is repeated for all other buckets, such that each valuation is placed in the first bucket that mentions one of its domain variables. It is not possible that a valuation is placed in more than one bucket, but there may be valuations whose domain is a subset of the query and therefore do not fit in any bucket. Bucket elimination then proceeds as follows: at step i = 1, …, k - 1, bucketi is processed by combining all valuations in this bucket, eliminating variable Yi from the result and placing the obtained valuation in the first of the remaining buckets that mentions one of its domain variables. At then end, we combine all valuations contained in bucketk with the remaining valuations of the knowledgebase for which no bucket was found in the initialization process.

) - x and imagine a bucket for each of these variables. These buckets are ordered with respect to the variable elimination sequence. We then partition the valuations in the knowledgebase as follows: all valuations whose domain contains the first variable Y1 are placed in the first bucket, which we subsequently denote by bucket1. This process is repeated for all other buckets, such that each valuation is placed in the first bucket that mentions one of its domain variables. It is not possible that a valuation is placed in more than one bucket, but there may be valuations whose domain is a subset of the query and therefore do not fit in any bucket. Bucket elimination then proceeds as follows: at step i = 1, …, k - 1, bucketi is processed by combining all valuations in this bucket, eliminating variable Yi from the result and placing the obtained valuation in the first of the remaining buckets that mentions one of its domain variables. At then end, we combine all valuations contained in bucketk with the remaining valuations of the knowledgebase for which no bucket was found in the initialization process.

In the first step of the bucket elimination process, bucket1 computes

and moves the result into the next bucket that contains one of its domain variables. This corresponds exactly to equation (3.9) whereas

![]()

In other words, the first step of the bucket elimination process corresponds to the first step of the fusion algorithm, and the same holds also naturally for the remaining steps. Bucket elimination therefore computes equation (3.11) that implies its correctness and the equivalence to the fusion algorithm.

Theorem 3.2 Fusion and bucket elimination with identical elimination sequences perform exactly the same computations.

Example 3.5 We repeat the computations of Example 3.1 and 3.2 from the perspective of bucket elimination. The knowledgebase consists of eight valuations with domains: d(![]() 1) = {A}, d(

1) = {A}, d(![]() 2) = {A, T}, d(

2) = {A, T}, d(![]() 3) = {L, S}, d(

3) = {L, S}, d(![]() 4) = {B, S}, d(

4) = {B, S}, d(![]() 5) = {E, L, T}, d(

5) = {E, L, T}, d(![]() 6) = {E, X}, d(

6) = {E, X}, d(![]() 7) = {B, D, E} and d(

7) = {B, D, E} and d(![]() 8) = {S}. The query is {A, B, D}. We choose the same elimination sequence (X, S, L, T, E), construct a bucket for each variable in the elimination sequence and partition the valuations accordingly. Since d(

8) = {S}. The query is {A, B, D}. We choose the same elimination sequence (X, S, L, T, E), construct a bucket for each variable in the elimination sequence and partition the valuations accordingly. Since d(![]() 1)

1) ![]() x,

x, ![]() 1 is not contained in any bucket.

1 is not contained in any bucket.

Initialization:

bucketX:![]() 6

6

bucketS:![]() 3,

3, ![]() 4,

4, ![]() 8

8

bucketL:![]() 5

5

bucketT:![]() 2

2

bucketE:![]() 7

7

Elimination of bucket X:

bucketS:![]() 3,

3, ![]() 4,

4, ![]() 8

8

bucketL:![]() 5

5

bucketT:![]() 2

2

bucketE:![]() 7,

7, ![]()

Elimination of bucket S:

bucketL:![]() 5, (

5, (![]() 3

3 ![]()

![]() 4

4 ![]()

![]() 8)-S

8)-S

bucketT:![]() 2

2

bucketE:![]() 7,

7, ![]()

Elimination of bucket L:

bucketT:![]() 2, (

2, (![]() 5

5 ![]() (

(![]() 3

3 ![]()

![]() 4

4 ![]()

![]() 8)-S)-L

8)-S)-L

bucketE:![]() 7,

7, ![]()

Elimination of bucket N:

bucketE:![]() 7,

7, ![]() , (

, (![]() 2

2 ![]() (

(![]() 5

5 ![]() (

(![]() 3

3 ![]()

![]() 4

4 ![]()

![]() 8)-S)-L)-T

8)-S)-L)-T

Elimination of bucket E:

(![]() 7

7 ![]()

![]()

![]() (

(![]() 2

2 ![]() (

(![]() 5

5 ![]() (

(![]() 3

3 ![]()

![]() 4

4 ![]()

![]() 8)-S)-L)-T)-E

8)-S)-L)-T)-E

Combining this with valuation ![]() 1 confirms the result of Example 3.2.

1 confirms the result of Example 3.2.

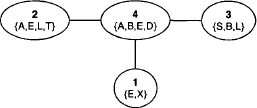

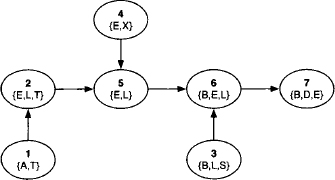

Similar to the fusion algorithm, we give a graphical representation of the bucket elimination process. We first create a graph node for each bucket and label it with the corresponding variable. If during the elimination of bucketX a valuation is added to bucketY, then an edge {X, Y} is added to the graph. Since the elimination of each bucket generates exactly one new valuation that is added to the bucket of a variable which follows later in the elimination sequence, the resulting graph will always be a tree called bucket-tree. The bucket-tree of Example 3.5 is shown in Figure 3.3.

Figure 3.3 The bucket-tree of Example 3.5.

Based on the bucket-tree, we can derive the join tree that underlies the bucket elimination process. We revisit each bucket at the time of its elimination and compute the union domain of all valuations contained in this bucket. This domain is assigned as label to the corresponding node in the bucket tree. Finally, we add a new node with the query of the inference problem as label to the modified bucket-tree and connect this node with the node that belongs to the last variable in the elimination sequence. It follows from Theorem 3.2 and Lemma 3.3 that the resulting graph is a join tree, i.e. we obtain the same join tree as for the fusion algorithm.

Example 3.6 At the time of elimination, the buckets of Example 3.5 contain valuations over the following variables:

bucketX:{E, X}

bucketS:{B, L, S}

bucketL:{B, E, L, T}

bucketT:{A, B, E, T}

bucketE:{A, B, D, E}

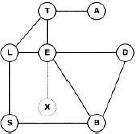

We next assign these domains as labels to the corresponding nodes in the bucket-tree. Finally, we create a new node with the query domain {A, B, D} and connect it to the node that refers to the last variable in the elimination sequence. The resulting graph is shown in Figure 3.4. If we further replace the variables from the bucket-tree by their position in the elimination sequence and assign number k + 1 to the node with the query domain, we obtain the join tree of Figure 3.1.

Figure 3.4 The join tree obtained from the bucket-tree of Figure 3.3.

Our presentation of the bucket elimination algorithm differs in two points from the usual way that it is introduced. First, we consider arbitrary query sets with possibly more than one variable. Consequently, there may be valuations that cannot be assigned to any bucket as shown in Example 3.5, and this makes it necessary to add the additional query node when deriving the join tree from the bucket-tree. The second difference concerns the elimination sequence. In the literature, buckets are normally processed in the inverse order to the elimination sequence. This however is only a question of terminology. Before we turn towards a complexity analysis of the fusion or bucket elimination algorithm, we reconsider the computational task of Example 1.2 used to illustrate the potential benefit of local computation in the introduction of this book.

Example 3.7 The formalism used in Example 1.2 in the introduction consists of mappings from configurations to real numbers. Combination refers to point-wise multiplication and projection to summing up the values of the eliminated variables. In other words, this formalism corresponds to the valuation algebra of arithmetic potentials. The knowledgebase is given by the set of function {f1, …, f100} and the query is {X101, X102}. We recall that an explicit computation of the objective function is impossible. Instead, we apply the fusion or bucket elimination algorithm to this inference problem with the elimination sequence (X1, …, X100). This leads to the join tree shown in Figure 3.5, and the executed computations correspond to equation (I.3). Thus, the domains of all intermediate factors that occur during the computations contain at most three variables.

Figure 3.5 The join tree for Example 1.2.

3.2.4 First Complexity Considerations

Making a statement about the complexity of local computation for arbitrary valuation algebras is impossible since we do not have information about the form of valuations. Remember, the valuation algebra framework describes valuations only by their operational behaviour. We therefore restrict ourselves first to a particular class of valuations and show later how the corresponding complexity considerations can be generalized. More concretely, we focus on valuations that are representable in tabular form such as indicator functions from Instance 1.1 or arithmetic potentials from Instance 1.3. In fact, we shall study in Chapter 5 the family of semiring valuation algebras which all share this common structure of mapping configurations to specific values. Thus, given the universe of variables with finite frames, we denote by ![]() the size of the largest variable frame. Then, the space (subsequently called weight) of a valuation

the size of the largest variable frame. Then, the space (subsequently called weight) of a valuation ![]() with domain d(

with domain d(![]() ) = s is bounded by

) = s is bounded by ![]() and similar statements can be made about the time complexity of combination and projection.

and similar statements can be made about the time complexity of combination and projection.

When during the fusion process variable Xi ![]() d(

d(![]() )-x is eliminated, all valuations are combined whose domain contains this variable according to equation (3.9). Then, variable Xi is eliminated. Thus, if mi valuations contain variable Xi before iteration i of the fusion algorithm, its elimination requires mi, - 1 combinations and one variable elimination (projection). The domain si of the valuation resulting from the combination sequence is given in equation (3.12). We may therefore say that during the elimination of variable Xi, the time complexity of each operation is bounded by

)-x is eliminated, all valuations are combined whose domain contains this variable according to equation (3.9). Then, variable Xi is eliminated. Thus, if mi valuations contain variable Xi before iteration i of the fusion algorithm, its elimination requires mi, - 1 combinations and one variable elimination (projection). The domain si of the valuation resulting from the combination sequence is given in equation (3.12). We may therefore say that during the elimination of variable Xi, the time complexity of each operation is bounded by ![]() which gives us the following total time complexity for the fusion or bucket elimination process:

which gives us the following total time complexity for the fusion or bucket elimination process:

In the graphical representation of the fusion algorithm, the elimination of variable Xi introduces a new join tree node with label λ(i) = si. The size of these labels are bounded by the largest node label ![]() . A related measure called treewidth will be introduced in Definition 3.2 below. Further, we observe that the fusion process reinserts a valuation into its current knowledgebase after the elimination of each variable. This valuation was called ψ in equation (3.9). We may therefore say that Σ mi ≤ m + |d(

. A related measure called treewidth will be introduced in Definition 3.2 below. Further, we observe that the fusion process reinserts a valuation into its current knowledgebase after the elimination of each variable. This valuation was called ψ in equation (3.9). We may therefore say that Σ mi ≤ m + |d(![]() ) - x| ≤ m + |d(

) - x| ≤ m + |d(![]() )| where m denotes the size of the original knowledgebase of the inference problem and x the query. Putting things together, we obtain the following simplified expression for the time complexity:

)| where m denotes the size of the original knowledgebase of the inference problem and x the query. Putting things together, we obtain the following simplified expression for the time complexity:

Slightly better is the space complexity. During the elimination of variable Xi a sequence of combinations is computed that creates an intermediate result with domain si, from which the variable Xi is eliminated. In case of valuations with a tabular representation, the intermediate result does not need to be computed explicitly. This will be explained in more detail in Section 5.6. Here, we content ourselves with the rather intuitive idea that each value (or table entry) of this intermediate valuation can be computed separately and directly projected. This omits the computation of the complete intermediate valuation and directly gives the result after eliminating variable Xi from the combination. The domain size of this valuation is si - {Xi} ≤ ω - 1, and its size is therefore bounded by ![]() . Further, we have just seen that the number of eliminations is at most |d(

. Further, we have just seen that the number of eliminations is at most |d(![]() )| which gives us a total space complexity of

)| which gives us a total space complexity of

It is important to remark that both complexities depend on the shape of the join tree, respectively on its largest node label called treewidth. This measure varies under different elimination sequences as illustrated in the following example.

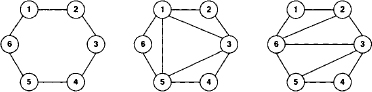

Example 3.8 Figure 3.6 shows two different join trees for the domain list of Example 3.2 obtained by varying the elimination sequence. In order to get comparable results, we take the empty set as query and eliminate all variables using Algorithm 3.2. The left-hand join tree is obtained from the elimination sequence (T, S, E, L, B, A, X, D) and has ω = 6. The right-hand join tree has ω = 3 due to the clever choice of the elimination sequence (A, T, S, L, B, E, X, D). After a certain number of variable elimination steps only a single valuation remains in both examples from which one variable after the other is eliminated. This uninteresting part is omitted in the join trees of Figure 3.6. A third join tree with ω = 4 can be obtained by eliminating the remaining variables in Example 3.2. This corresponds to the elimination sequences that start with (X, S, L, T, E)

Figure 3.6 Different elimination sequences produce different join trees and therefore influence the size of the largest node label.

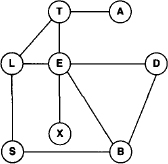

The complexity of solving an inference problem with the fusion algorithm therefore depends on the variable elimination sequence that produces a join tree whose largest node label should be as small as possible. This follows from equation (3.13) and (3.14). In order to understand how elimination sequences and the treewidth measure are related, we consider the primal graph of the inference problem introduced in Section 2.1. Since a primal graph contains a node for every variable, an elimination sequence can be represented by a particular node numbering. Here, we number the nodes with respect to the inverse elimination sequence as shown on the left-hand side of Figure 3.7. Such graphs are called ordered graphs, and we define the width of a node in an ordered graph as the number of its earlier neighbors. Likewise, we define the width of an ordered graph as the maximum node width. Next, we construct the induced graph of the ordered primal graph by the following procedure: process in the reversed order of the node numbering (i.e. according to the elimination sequence) and add edges to ensure for each node that its earlier neighbors are directly connected. We define the induced width of an elimination sequence (or ordered graph) as the width of its induced graph and refer to the induced width of a graph ω* as the minimum width over all possible elimination sequences (orderings) (Dechter & Pearl, 1987). Since a primal graph is associated with each inference problem, we may also speak about the induced width of an inference problem.

Figure 3.7 A primal graph and its induced graph.

Example 3.9 The left-hand part of Figure 3.7 shows the primal graph of Example 3.2 ordered with respect to the elimination sequence (T, S, E, L, B, A, X, D). Node E has width 4 since four neighbors posses a smaller node number. Then, the right-hand part of Figure 3.7 shows its induced graph. Here, node E has 5 neighbors {A, B, D, L, X} with a smaller number which also determines the width of this elimination sequence. Observe that this corresponds to the largest node label of the join tree on the left-hand side of Figure 3.6, if we add the variable E that has been eliminated in this node.

The following theorem has been proved by (Arnborg, 1985):

Theorem 3.3 The largest node label in a join tree equals the induced width of the elimination sequence plus one.

This relationship allows us subsequently to focus only on join trees when dealing with complexity issues. It is then common to use a second but equivalent terminology called treewidth, which has been introduced by (Robertson & Seymour, 1983; Robertson & Seymour, 1986):

Definition 3.2 The treewidth of a join tree (V, E, λ, D) is given by

and we refer to the minimum treewidth over all join trees created from all possible variable elimination sequences as the treewidth of the inference problem.

Since treewidth and induced width are equal according to the above theorem, we reuse the notation ω* for the treewidth of an inference problem. It is very important to distinguish properly between the two notions treewidth of a join tree and treewidth of an inference problem. The first refers to a concrete join tree whereas the second captures the best join tree over all possible elimination sequences of a given inference problem. The decrement in equation (3.15) ensures that inference problems whose primal graphs are trees have treewidth one. Besides induced width and treewidth, there are other equivalent characterization in the literature. Perhaps most common among them is the notion of a partial k-tree (van Leeuwen, 1990).

Example 3.10 The value ω from Example 3.8 refers to the incremented treewidth of the join tree. We therefore have in this example three join trees of treewidth 2, 3 and 5 and conclude that the treewidth of the medical inference problem with empty query is at most 2. In fact, it is equal to 2 because one of the knowledgebase factors has a domain of three variables, which makes it impossible to get a lower treewidth.

Returning to the task of identifying the complexity of the fusion and bucket elimination algorithm, we obtain a complexity bound for the solution of a single-query inference problem by including the treewidth ω* of the latter into equation (3.13) and (3.14):

This is called a parameterized complexity (Downey & Fellows, 1999) with the treewidth of the inference problem as parameter. Naturally, the question arises how the minimum treewidth over all possible variable elimination sequences can be found, the more so as it determines the complexity of applying the fusion and bucket elimination algorithm. Concerning this important question we only have bad news. It has been shown by (Arnborg et al., 1987) that finding the smallest treewidth of a graph is NP-complete. In fact, the task of deciding if the primal graph of the inference problem has a treewidth below a certain constant k can be performed in O(|d(![]() )| · g(k)) where g(k) is a very bad exponential function in k (Bodlaender, 1998; Bodlaender, 1993). Fortunately, there are good heuristics for this task that deliver join trees with reasonable treewidths as we will see in Section 3.7. It is thus important to distinguish the two ways of specifying the complexity of local computation schemes. Given a concrete join tree or an elimination sequence with treewidth ω, we insert this value into equation (3.16) and obtain the achieved complexity of a concrete run of the fusion algorithm. On the other hand, using the treewidth ω* of the inference problem in these formulae denotes the best complexity over all possible join trees or variable elimination sequences that could be achieved for the solution of this inference problem by the fusion or bucket elimination algorithm. But since we are generally not able to exactly determine this value, this often remains an unsatisfied wish. However, it is nevertheless common to specify the complexity of local computation schemes by the treewidth of the inference problem and we will also go along with this.

)| · g(k)) where g(k) is a very bad exponential function in k (Bodlaender, 1998; Bodlaender, 1993). Fortunately, there are good heuristics for this task that deliver join trees with reasonable treewidths as we will see in Section 3.7. It is thus important to distinguish the two ways of specifying the complexity of local computation schemes. Given a concrete join tree or an elimination sequence with treewidth ω, we insert this value into equation (3.16) and obtain the achieved complexity of a concrete run of the fusion algorithm. On the other hand, using the treewidth ω* of the inference problem in these formulae denotes the best complexity over all possible join trees or variable elimination sequences that could be achieved for the solution of this inference problem by the fusion or bucket elimination algorithm. But since we are generally not able to exactly determine this value, this often remains an unsatisfied wish. However, it is nevertheless common to specify the complexity of local computation schemes by the treewidth of the inference problem and we will also go along with this.

3.2.5 Some Generalizing Complexity Comments

At the beginning of our complexity considerations we limited ourselves to a family of valuation algebras whose elements are representable in tabular form. These formalisms share the property that the size of valuations grows exponentially with the size of their domains. Thus, the space of a valuation ![]() with domain s = d(

with domain s = d(![]() ) is bounded by O(d|s|) where d denotes the size of the largest variable frame. Similar statements were made about the time complexity of combination and projection. This view is too restrictive, since there are many other valuation algebra instances that do not keep with this estimation. On the other hand, there are also examples where it is unreasonable to measure time and space complexity by the same function as it has been done in equation (3.16). Examples of such formalisms will be given in Chapter 6. However, a general assumption we are allowed to make is that the space of a valuation and the execution time for the operations shrink under projection. Remember, the idea of local computation is to confine the domain size of intermediate factors during the computations. If this assumption could not be made, local computation would hardly be a reasonable approach.

) is bounded by O(d|s|) where d denotes the size of the largest variable frame. Similar statements were made about the time complexity of combination and projection. This view is too restrictive, since there are many other valuation algebra instances that do not keep with this estimation. On the other hand, there are also examples where it is unreasonable to measure time and space complexity by the same function as it has been done in equation (3.16). Examples of such formalisms will be given in Chapter 6. However, a general assumption we are allowed to make is that the space of a valuation and the execution time for the operations shrink under projection. Remember, the idea of local computation is to confine the domain size of intermediate factors during the computations. If this assumption could not be made, local computation would hardly be a reasonable approach.

Definition 3.3 Let ![]() be a valuation algebra. A function

be a valuation algebra. A function ![]() is called weight function if for all

is called weight function if for all ![]()

![]() Φ and x

Φ and x ![]() d(

d(![]() ) we have

) we have ![]() .

.

Weight functions are still too general to be used for complexity considerations. In many cases however, we can give a predictor for the weight function which is only based on the valuation’s domain. Such weight predictable valuation algebras share the property that the weight of a valuation can be estimated from its domain only.

Definition 3.4 A weight function ω of a valuation algebra ![]() is called weight predictor if there exists a function

is called weight predictor if there exists a function ![]() such that for all

such that for all ![]()

![]() Φ

Φ

![]()

We give some examples of weight functions and weight predictors for the valuation algebras introduced in Chapter 1:

Example 3.11

- A weight predictor for the valuation algebra of arithmetic potentials that also applies to indicator functions and all formalisms of Section 5.3 has already been used in equation (3.16):

where s = d(![]() ) denotes the domain of valuation

) denotes the domain of valuation ![]() and

and

![]()

the largest variable frame. Observe that in this particular case, we could also use the weight function directly as weight predictor since it depends only on the valuation’s domain.

- A reasonable and often used weight function for relations is ω(

) = |d(

) = |d( )| · card(

)| · card( ), where card(

), where card( ) stands for the number of tuples in

) stands for the number of tuples in  . Regrettably, this is not a weight predictor itself since the number of tuples cannot be deduced from the relation’s domain.

. Regrettably, this is not a weight predictor itself since the number of tuples cannot be deduced from the relation’s domain. - Finally, a weight predictor for the valuation algebra of set potentials and all formalisms of Section 5.7 is

(3.18) ![]()

Observe that we presuppose binary variables in this formula.

Subsequently, we will use weight predictors for the analysis of the time and space complexity of local computation. This allows us to make more general complexity statements that can then be specialized to a specific formalism by choosing an appropriate weight predictor. Further, we use two different weight predictors f and g for time and space complexity. This accounts for the observation that time and space complexity cannot necessarily be estimated by the same function, but both satisfy the fundamental property that they are non-increasing under projection. We obtain for the general time complexity of the fusion and bucket elimination algorithm:

This follows directly from equation (3.16). Remember that the assumption of valuations with a tabular representation allowed us to derive a lower space complexity in equation (3.16). This optimization cannot be performed for arbitrary valuation algebras. For the elimination of variable Xi we therefore need to compute the combinations first, which results in an intermediate factor with domain size |λ(i)| ≤ ω* + 1. Then, the variable Xi can be eliminated and the result is added to the knowledgebase. Altogether, this gives us the following general space complexity of the fusion and bucket elimination algorithm:

Clearly, for the case of applying the fusion algorithm to arithmetic potentials, indicator functions and all other formalism of Section 5.3, we may choose the same weight predictor of equation (3.17) for both time and space complexity. Moreover, the space complexity can then be improved to the one given in equation (3.16). This shows the difficulties of making general complexity statements for local computation applied to unspecified valuation algebras.

3.2.6 Limitations of Fusion and Bucket Elimination

The fusion or bucket elimination algorithm is a generic local computation scheme to solve single-query inference problems in a simple and natural manner. Nevertheless, it has some drawbacks compared to other local computation procedures that will be introduced below. Here, we address two important points of criticism:

- The fusion or bucket elimination algorithm can be applied to each formalism that satisfies the valuation algebra axioms from Section 1.1. But we have also seen in Appendix A.2 of Chapter 1 that there exists a more general definition of the axiomatic framework which is based on general lattices instead of variable systems. Fusion and bucket elimination require variable elimination and can therefore not be generalized to valuation algebras on arbitrary lattices.

- Time and space complexity of the fusion algorithm are bounded by the treewidth of the inference problem, which makes the application difficult when join trees have large labels. Experiments from constraint programming (Larrosa & Morancho, 2003) show that the space requirement is particularly sensitive, for which reason inference is often combined with search methods that only require linear space. However, search methods need more structure than offered by the valuation framework. We refer to (Rossi et al., 2006) for a survey of such trading or hybrid methods in constraint programming. Looking at equation (3.20), we observe that the function arguments of the two terms distinguish in a constant number. We will see later that this is a consequence of the particular join trees that emerge from the fusion and bucket elimination process. Further, we know that the first term vanishes when dealing with particular valuation algebras. It will be shown in Section 5.3 that this also includes constraint systems. To make local computation more space efficient for such formalisms, we therefore aim at reducing the argument of the second term in equation (3.20).

In the subsequent sections, we present an alternative picture of local computation that finally leads to a second algorithm for the solution of single-query inference problems that does not depend on variables anymore and which may also provide a better space performance. The essential difference with respect to the fusion algorithm is that the join tree construction is decoupled from the actual inference process. Instead, a suitable join tree for the current inference problem is initially constructed, and local computation is then described as a message-passing process where nodes act as virtual processors and collaborate by exchanging messages. The key advantage of this approach is that we no longer depend on the very particular join trees that are obtained from the fusion algorithm. To study the requirements for a join tree to serve as local computation base, we first introduce a specialized class of valuation algebras that provide neutral information pieces. It will later be shown in Section 3.10 that the existence of such neutral elements is not mandatory for the description of local computation as message-passing scheme. But if they exist, the discussion simplifies considerably. Moreover, neutral elements are also interesting in other contexts that will be discussed in later parts of this book.

3.3 VALUATION ALGEBRAS WITH NEUTRAL ELEMENTS

Following the view of a valuation algebra as a generic representation of knowledge or information, some instances may exist that contain pieces that express vacuous information. In this case, such a neutral element must exist for every set of questions S![]() D and must therefore be contained in every subsemigroup Φs of Φ. If we then combine those pieces with already existing knowledge of the same domain, we do not gain new information. Hence, there is an element

D and must therefore be contained in every subsemigroup Φs of Φ. If we then combine those pieces with already existing knowledge of the same domain, we do not gain new information. Hence, there is an element ![]() for every subsemigroup Φs such that

for every subsemigroup Φs such that ![]()

![]() = es

= es ![]()

![]() = for all

= for all ![]()

![]() Φs. We further add a neutrality axiom to the valuation algebra system which states that a combination of neutral elements leads to a neutral element with respect to the union domain.

Φs. We further add a neutrality axiom to the valuation algebra system which states that a combination of neutral elements leads to a neutral element with respect to the union domain.

Definition 3.5 A valuation algebra ![]() has neutral elements, if for every domain s

has neutral elements, if for every domain s ![]() D there exists an element es

D there exists an element es ![]() Φs such that

Φs such that ![]()

![]() es = es

es = es ![]()

![]() =

= ![]() for all

for all ![]()

![]() Φs. These elements must satisfy the following property:

Φs. These elements must satisfy the following property:

(A7) Neutrality: For x,y ![]() D,

D,

(3.21) ![]()

If neutral elements exist, they are unique within the corresponding subsemigroup. In fact, suppose the existence of another element ![]() with the identical property that

with the identical property that ![]() for all

for all ![]()

![]() Φs. Then, since es and

Φs. Then, since es and ![]() behave neutrally among each other,

behave neutrally among each other, ![]() . Furthermore, valuation algebras with neutral elements allow for a simplified version of the combination axiom that was already proposed in (Shenoy & Shafer, 1990).

. Furthermore, valuation algebras with neutral elements allow for a simplified version of the combination axiom that was already proposed in (Shenoy & Shafer, 1990).

Lemma 3.4 In a valuation algebra with neutral elements, the combination axiom is equivalently expressed as:

(A5) Combination: For ![]() with

with ![]() ,

,

Proof: Equation (3.22) follows directly from the combination axiom with z = x. On the other hand, let ![]() . Since

. Since ![]() we derive from equation (3.22) and Axiom (A1) that

we derive from equation (3.22) and Axiom (A1) that

The last equality holds because

![]()

Note that this result presupposes the distributivity of the lattice D.

The following lemma shows that neutral elements also behave neutral with respect to valuations on larger domains:

Lemma 3.5 For ![]()

![]() Φ with d(

Φ with d(![]() ) = x and y

) = x and y ![]() x it holds that

x it holds that

(3.23) ![]()

Proof: From the associativity of combination and the neutrality axiom follows

![]()

The presence of neutral elements allows furthermore to extend valuations to larger domains. This operation is called vacuous extension and may be seen as a dual operation to projection. For ![]()

![]() Φ and d(

Φ and d(![]() )

) ![]() y we define

y we define

Before we consider some concrete examples of valuation algebras with and without neutral elements, we introduce another property called stability that is closely related to neutral elements. In fact, stability can either be seen as the algebraic property that enables undoing the operation of vacuous extension, or as a strengthening of the domain axiom (A6).

3.3.1 Stable Valuation Algebras

The neutrality axiom (A7) determines the behaviour of neutral elements under the operation of combination. It states that a combination of neutral elements will always result in a neutral element again. However, a similar property can also be requested for the operation of projection. In a stable valuation algebra, neutral elements always project to neutral elements again (Shafer, 1991). This property seems natural but there are indeed important examples which do not fulfill stability.

Definition 3.6 A valuation algebra with neutral elements ![]() is called stable if the following property is satisfied for all x,y

is called stable if the following property is satisfied for all x,y ![]() D with x

D with x ![]() y,

y,

(A8) Stability:

(3.25) ![]()

Stability is a very strong condition which also implies other valuation algebra properties: together with the combination axiom, it can, for example, be seen as a strengthening of the domain axiom. Also, it is possible to revoke vacuous extension under stability. These are the statements of the following lemma:

Lemma 3.6

1. The property of stability with the combination axiom implies the domain axiom.

1. If stability holds, we have for ![]()

![]() Φ with d(

Φ with d(![]() ) = x

) = x ![]() y,

y,

Proof:

1. For ![]()

![]() Φ with d(

Φ with d(![]() ) = x we have

) = x we have

![]()

2. For ![]()

![]() Φ with d(

Φ with d(![]() ) = x

) = x ![]() y we derive from the combination axiom

y we derive from the combination axiom

![]()

![]() 3.1 Neutral Elements and Indicator Functions

3.1 Neutral Elements and Indicator Functions

In the valuation algebras of indicator functions, the neutral element for the domain s ![]() D is given by es(x) = 1 for all x

D is given by es(x) = 1 for all x ![]() Ωs. We then have for

Ωs. We then have for ![]()

![]() Φs

Φs

![]()

Also, the neutrality axiom holds, since for s,t ![]() D with

D with ![]() we have

we have

![]()

Finally, the valuation algebra of indicator functions is also stable. For s,t ![]() D with s

D with s ![]() t and x

t and x ![]() Ωs it holds that

Ωs it holds that

![]()

![]() 3.2 Neutral Elements and Arithmetic Potentials

3.2 Neutral Elements and Arithmetic Potentials

Arithmetic potentials and indicator functions share the same definition of combination. We may therefore conclude that arithmetic potentials also provide neutral elements. However, in contrast to indicator functions, arithmetic potentials are not stable. We have for s,t ![]() D with s

D with s ![]() t and ×

t and × ![]() Ωs

Ωs

![]()

![]() 3.3 Neutral Elements and Set Potentials

3.3 Neutral Elements and Set Potentials

In the valuation algebra of set potentials, the neutral element es for the domain s ![]() D is given by es(A) = 0 for all proper subsets A

D is given by es(A) = 0 for all proper subsets A ![]() Ωs and es(Ωs) = 1. Indeed, it holds for

Ωs and es(Ωs) = 1. Indeed, it holds for ![]()

![]() Φ with d(

Φ with d(![]() ) = s that

) = s that

![]()

The second equality follows since for all other values of C we have es(C) = 0. Also, the neutrality axiom holds which will be proved explicitly in Section 5.8.1. Further, set potentials are stable. For s,t ![]() D and s

D and s ![]() t it holds that

t it holds that

![]()

The second equality holds because et(Ωt) = 1 is the only non-zero summand.

![]() 3.4 Neutral Elements and Relations

3.4 Neutral Elements and Relations

For s ![]() D the neutral element in the valuation algebra of relations is given by es = Ωs. Similar to indicator functions these neutral elements are also stable. However, there is an important issue regarding neutral elements in the relational algebra. Since variable frames are often very large or even infinite (i.e. all possible strings), the neutral elements can sometimes not be expressed explicitly.

D the neutral element in the valuation algebra of relations is given by es = Ωs. Similar to indicator functions these neutral elements are also stable. However, there is an important issue regarding neutral elements in the relational algebra. Since variable frames are often very large or even infinite (i.e. all possible strings), the neutral elements can sometimes not be expressed explicitly.

![]() 3.5 Neutral Elements and Density Functions

3.5 Neutral Elements and Density Functions

Equation (1.15) introduced the combination of density functions as simple multiplication. Therefore, the definition ![]() would clearly meet the requirements for a neutral element. However, this function es is not a density since its integral will not be finite

would clearly meet the requirements for a neutral element. However, this function es is not a density since its integral will not be finite

![]()

Hence, densities form a valuation algebra without neutral elements. This holds in particular also for Gaussian potentials in Instance 1.6.

Before we continue with the interpretation of the fusion algorithm as a message-passing scheme in Section 3.5, we introduce a second family of elements that may be contained in a valuation algebra. As neutral elements express neutral knowledge, null elements express contradictory knowledge with respect to their domain.

3.4 VALUATION ALGEBRAS WITH NULL ELEMENTS

Some valuation algebras contain elements that express incompatible, inconsistent or contradictory knowledge according to questions s ![]() D. Such valuations behave absorbingly for combination and are therefore called absorbing elements or null elements. Hence, in a valuation algebra with null elements there is an element zs

D. Such valuations behave absorbingly for combination and are therefore called absorbing elements or null elements. Hence, in a valuation algebra with null elements there is an element zs ![]() Φs such that zs

Φs such that zs ![]()

![]() =

= ![]()

![]() zs = zs for all

zs = zs for all ![]()

![]() Φs. We call a valuation

Φs. We call a valuation ![]()

![]() Φs consistent, if and only if

Φs consistent, if and only if ![]() ≠ zs. It is furthermore a very natural claim that a projection of some consistent valuation produces again a consistent valuation. This requirement is captured by the following additional axiom.

≠ zs. It is furthermore a very natural claim that a projection of some consistent valuation produces again a consistent valuation. This requirement is captured by the following additional axiom.

(A9) Nullity: For x, y ![]() D, x

D, x ![]() y and

y and ![]()

![]() Φy,

Φy,

![]()

if, and only if, ![]() = zy.

= zy.

In other words, if some valuation projects to a null element, then it must itself be a null element. Thus, contradictions can only be derived from contradictions. We further observe that according to the above definition, null elements absorb only valuations of the same domain. In case of stable valuation algebras, however, this property also holds for any other valuation.

Lemma 3.7 In a stable valuation algebra with null elements we have for all ![]()

![]() Φ with d(

Φ with d(![]() ) = x and y

) = x and y ![]() D

D

(3.27) ![]()

Proof: We remark first that from equation (3.26) it follows

![]()

Hence, we conclude from the nullity axiom that ![]() and derive

and derive

![]()

Let us search for null elements in the valuation algebra instances of Chapter 1.

![]() 3.6 Null Elements and Indicator Functions

3.6 Null Elements and Indicator Functions

In the valuation algebras of indicator functions the null element for the domain s ![]() D is given by zs(x) = 0 for all x

D is given by zs(x) = 0 for all x ![]() Ωs. We then have

Ωs. We then have

![]()

Since projection refers to maximization, it is easy to see that null elements, and only null elements project to null elements.

![]() 3.7 Null Elements and Arithmetic Potentials

3.7 Null Elements and Arithmetic Potentials

Again, since their combination rules are equal, arithmetic potentials and indicator functions share the same definition of null elements: for s ![]() D we define zs(x) = 0 for all x

D we define zs(x) = 0 for all x ![]() Ωs. The absorption property for combination follows directly from Instance 3.6. Further, projection is defined as summation for arithmetic potentials and since the values are non-negative, it is again only the null element that projects to a null element.

Ωs. The absorption property for combination follows directly from Instance 3.6. Further, projection is defined as summation for arithmetic potentials and since the values are non-negative, it is again only the null element that projects to a null element.

![]() 3.8 Null Elements and Relations

3.8 Null Elements and Relations

Since we have already identified the null elements in the valuation algebra of indicator functions, it is simple to give way to relations. Due to equation (1.9) null elements are simply empty relations with respect to their domain.

![]() 3.9 Null Elements and Set Potentials

3.9 Null Elements and Set Potentials

In the valuation algebra of set potentials, the null element zs for the domain s ![]() r is defined by zs(A) = 0 for all A

r is defined by zs(A) = 0 for all A ![]() Ωs. These elements behave absorbingly for combination, and are the only elements that project to null elements, which follows again from their non-negative values. Although this is rather easy to see, we will give a formal proof in Section 5.8.2.

Ωs. These elements behave absorbingly for combination, and are the only elements that project to null elements, which follows again from their non-negative values. Although this is rather easy to see, we will give a formal proof in Section 5.8.2.

![]() 3.10 Null Elements and Density Functions

3.10 Null Elements and Density Functions

In the valuation algebra of density functions, the null element for the domain s is given by zs(x) = 0 for all x ![]() Ωs. The properties of null elements follow again from the fact that density functions are non-negative.

Ωs. The properties of null elements follow again from the fact that density functions are non-negative.

![]() 3.11 Null Elements and Gaussian Densities

3.11 Null Elements and Gaussian Densities

In the introduction of density functions we also mentioned that the family of Gaussian densities forms a subalgebra of the valuation algebra of density functions. Although we are going to study this formalism extensively in Chapter 10, we already point out that Gaussian densities do not possess null elements due to the requirement for a positive definite concentration matrix.

Null elements play an important role in the semantics of inference problems. Imagine that we solve an inference problem with the empty set as single query. After the execution of local computation, we find a null element as answer to this query:

![]()

We therefore conclude from Axiom (A9) that the objective function ![]() must itself be a null element, or, in other words, that the knowledgebase is inconsistent. This is an important issue in constraint programming since it allows to check the satisfiability of a constraint system by local computation. A similar argumentation has, for example, been used in Instance 2.4.

must itself be a null element, or, in other words, that the knowledgebase is inconsistent. This is an important issue in constraint programming since it allows to check the satisfiability of a constraint system by local computation. A similar argumentation has, for example, been used in Instance 2.4.

This closes our intermezzo of special elements in a valuation algebra. We now return to the solution of single-query inference problems by local computation techniques and give an alternative picture of the fusion algorithm in terms of a message-passing scheme. This presupposes a valuation algebra with neutral elements, although it will later be shown in Section 3.10 that this assumption can be avoided.

3.5 LOCAL COMPUTATION AS MESSAGE-PASSING SCHEME

Let us reconsider the join tree resulting from the graphical representation of the fusion algorithm. We then observe that for all knowledgebase factor domains d(![]() i), there exists a node v

i), there exists a node v ![]() V in the join tree which covers d(

V in the join tree which covers d(![]() i), i.e. d(

i), i.e. d(![]() i)

i) ![]() λ(v). This is a simple consequence of equation (3.12), which defines the label of the new nodes added during the elimination of a variable. More precisely, if Xj

λ(v). This is a simple consequence of equation (3.12), which defines the label of the new nodes added during the elimination of a variable. More precisely, if Xj ![]() d(

d(![]() i) is the first variable in the elimination sequence, then d(

i) is the first variable in the elimination sequence, then d(![]() i)

i) ![]() λ(j). This is a consequence of the particular node numbering defined in the fusion process. We may therefore assign factor

λ(j). This is a consequence of the particular node numbering defined in the fusion process. We may therefore assign factor ![]() i to the join tree node j

i to the join tree node j ![]() V. This process is repeated for all factors in the knowledgebase of the inference problem, which is illustrated in Example 3.12. Remember also that the query x

V. This process is repeated for all factors in the knowledgebase of the inference problem, which is illustrated in Example 3.12. Remember also that the query x ![]() d(

d(![]() ) of the inference problem always corresponds to the label of the root node since the latter contains all variables that have not been eliminated. A join tree that allows such a factor distribution will later be called a covering join tree in Definition 3.8. The process of distributing knowledgebase factors over join tree nodes may assign multiple factors to one node, whereas other nodes go away without an assigned valuation. In Example 3.12, only the root node does not contain a knowledgebase factor, but if we had eliminated more variables, then inner nodes also would exist that do not posses a knowledgebase factor. If we further assume a valuation algebra with neutral elements, we may assign the neutral element eλ(i) to all nodes i

) of the inference problem always corresponds to the label of the root node since the latter contains all variables that have not been eliminated. A join tree that allows such a factor distribution will later be called a covering join tree in Definition 3.8. The process of distributing knowledgebase factors over join tree nodes may assign multiple factors to one node, whereas other nodes go away without an assigned valuation. In Example 3.12, only the root node does not contain a knowledgebase factor, but if we had eliminated more variables, then inner nodes also would exist that do not posses a knowledgebase factor. If we further assume a valuation algebra with neutral elements, we may assign the neutral element eλ(i) to all nodes i ![]() V which do not hold a knowledgebase factor. On the other hand, if a node contains multiple knowledgebase factors, they are combined. The result of this process is a join tree where every node i

V which do not hold a knowledgebase factor. On the other hand, if a node contains multiple knowledgebase factors, they are combined. The result of this process is a join tree where every node i ![]() V contains exactly one valuation Ψi with d(Ψi)

V contains exactly one valuation Ψi with d(Ψi) ![]() λ(i). This valuation either corresponds to a single knowledgebase factor, to a combination of multiple knowledgebase factors or to a neutral element, as shown in Example 3.12.

λ(i). This valuation either corresponds to a single knowledgebase factor, to a combination of multiple knowledgebase factors or to a neutral element, as shown in Example 3.12.

Example 3.12 The knowledgebase factor domains in the medical inference problem of Instance 2.1 are: d(![]() 1) = {A}, d(

1) = {A}, d(![]() 2) = {A,T}, d(

2) = {A,T}, d(![]() 3) = {L,S}, d(

3) = {L,S}, d(![]() 4) = {B,S}, d(

4) = {B,S}, d(![]() 5) = {E,L,T}, d(

5) = {E,L,T}, d(![]() 6) = {E,X}, d(

6) = {E,X}, d(![]() 7) = {B,D,E} and d(

7) = {B,D,E} and d(![]() 8) = {S}. The single query to compute is x = {A, B, D}. These factors are distributed over the nodes of the covering join tree in Figure 3.8 that results from the graphical fusion process (see Figure 3.1). The node factors of this join tree become: Ψ1 =