Animation for multimedia and new media

by Alan Peacock

INTRODUCTION

In interactive media, animation makes an important contribution to the user’s experience. It contributes to the apparent responsiveness of the system and to the user’s feeling of agency (their ability to act meaningfully within the artefact), to the engagement of the user and their feeling of reward. In its visual qualities and its pace, animation plays a significant part in creating the atmosphere or tone of the multimedia artefact.

Animation in multimedia has its own particular concerns and issues, some of which differ from those of animation within the filmic tradition of cinema and television display. In this chapter ideas about animation in interactive multimedia are explored first through a brief review of what animation contributes to interactivity, and then through a detailed discussion of particular issues, ideas and processes.

The design and realization of animated elements of a multimedia artefact involve many of the same aesthetic and technical issues as those discussed elsewhere in this book, but bring with them, also, issues that are particular to multimedia. Some of those issues are to do with different delivery means. Designing for a CD-ROM, for example, can be very different to designing for the networked distribution of the World Wide Web. These different delivery modes affect not only what can be made successfully, but also the strategies and processes used for making and for the ideas development and evaluation stages of any multimedia work.

Other issues are to do with the very nature of interactive media. These issues are unique to the new media of interactive narrative, and animation has a particularly important role to play in the forms and structures it takes. Animation is integrated into interactive multimedia in several ways:

Fig.4.1 Animated objects are part of the everyday experience of using computers.

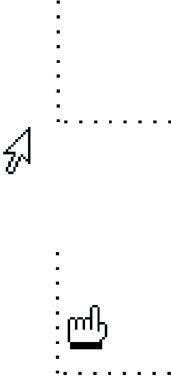

• as the cursor tracking about the screen and changing to indicate the presence of a link or a different function

• as a rollover image which changes to give user feedback

• as on-screen movement of objects within the display

• as an embedded animated diagram or movie

• as scrolling screens and changing world views

• as a way of enabling the user’s sense of agency and empowerment (these ideas are explored later)

• as a way of convincing the user that the system is working and ‘reliable’.

When you have finished reading this chapter you will have a basic understanding of:

• How animation is a key element of interactive media.

• The main forms of animation used in, and as, interactive multimedia.

• Some of the ways animated elements are used in multimedia and on the web.

• Some of the technical issues that affect animation in multimedia and on the web.

MOTIVATION AND RESPONSIVENESS: ANIMATED OBJECTS IN MULTIMEDIA

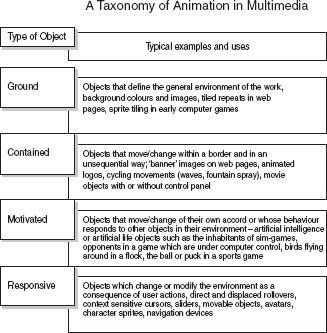

In any animation there are two sorts of visual objects: ‘ground’ objects, on which other objects play, and ‘figure’ objects. Ground objects are often static, or have contained movement (the swirling of water, the waving of trees, the drift of clouds), and these contrast with the ‘figure’ objects which are (or appear to be) ‘motivated’. That is to say, their movement has apparent purpose, either in an anthropomorphic sense, in that we can understand their actions and the motives for them, or in the way in which there is a play of cause and effect. It is these motivated objects, representational images of people, mice, ducks, cats, steam trains, that ‘come to life’ and are animated in moving image sequences. Even if the animated world exaggerates or contradicts the rules of cause and effect as we know them from our day-to-day experiences, we quickly learn and accommodate the difference and derive pleasure from it.

In multimedia there is an extended range of objects, and a blurring of the distinction between them. Again there are ‘ground’ objects, either as static images or as contained animations cycling away, and there are ‘motivated’ objects which move around or change independently. In addition there are ‘responsive’ objects, things that move or change in response to the viewer’s actions and interventions, such as a button that changes colour when the mouse is clicked on it.

It is in the presence of ‘responsive’ objects that the particular concerns of animation in multimedia are most present and most clear. This is not only because their appearance or movement changes with the actions of the user, but also because the intervention of the user may have other, deeper consequences. The act of sliding a marker along a scale may adjust the speed at which (the image of) a motor car moves on screen, to the point where its behaviour becomes unstable and it crashes. Here the user’s actions have determined not only the appearance of the control device but also the ‘motivation’ of a separate object, and have affected events that follow.

Fig 4.2 This screen shows the types of objects found in interactive media.

The outline shapes of the playing area are ground objects; the ‘puck’, centre left, is a motivated object whose behaviour is controlled by a set of rules about how its movement changes when it collides with the side walls, obstacles and the player’s ‘bat’.

The ‘bat’ itself is a responsive object, directly controlled by the user’s actions.

Responsive objects are navigation tools; they are ways of forming a story from the choices available. They need to be readily recognizable for what they are, and they need to have a way of indicating that they are working, of giving feedback to the user. The affordance of a responsive object is the way it is distinguished from other objects, and feedback is given in the way the object’s appearance changes or through associated actions. Feedback, even at the level of something moving, or changing, is a form of animation.

Fig. 4.3 Context sensitive cursors are a simple form of animation.

Applying these ideas of objects to interactive media (both stand-alone CD-based works and web pages), allows us to identify certain features of the way animation is used and to recognize what is particular about animation in multimedia.

Fig. 4.4 Animation, as the change of a cursor or icon, is an integral part of interactive multimedia.

CHANGING MOVES: ANIMATION AND INTERACTION

It is difficult to think of interactive media that does not have elements of animation. Indeed some of the main applications used to author interactive media are used also to create animations. Packages like Macromedia Director and Flash have developed from tools for creating animations to become powerful multimedia authoring environments which combine timeline animation with feature-rich programming languages. Web authoring packages integrate simple animation in the form of rollover buttons and independently positionable layered graphics, often using a timeline to define movement.

Fig. 4.5 In contemporary operating systems and interfaces image-flipping animation is used to provide feedback.

In interactive media the user’s actions produce change, or movement, in the screen display. At one level this takes the form of a cursor which moves, and in moving corresponds to the movement of their hand holding the mouse. At other levels actions, such as the clicking of a mouse button, have a cause and effect relationship with on-screen events triggering some, controlling others. Interactive media responds to the user’s actions and seems to ‘come alive’. This ‘coming alive’ quality of multimedia is an important part of the experience of interactivity – and ‘coming alive’ is itself a definition of ‘animation’.

Visual elements in interactive media may be animated in their own right – moving around the screen independently of the user’s actions, or sequentially displaying a series of images that suggest or imply a consistency and continuity of movement or change (fig. 4.5).

In interactive media, animation contributes significantly to the user experience in many ways and at a number of levels. It contributes to the apparent responsiveness of the system and to the user’s feeling of agency (their ability to act meaningfully within the artefact), to the engagement of the user and their feeling of reward, and in the qualities and timing of movement it helps define the atmosphere or tone of the multimedia artefact.

The definition of ‘changes or moves’ we have used here includes also the development of virtual worlds that allow the users to experience moving around within a believable environment. Virtual worlds represent a special kind of animation, they provide convincing experiences of ‘being there’, of presence in a place, and of moving within a world rather than watching it from the outside.

ANIMATION: AN EVERYDAY EXPERIENCE

Graphical User Interfaces (GUIs) are the dominant form of interaction with computers. In these, simple ‘change or move’ animation is part of the ordinary experience of using a computer. The visual display of contemporary computers is constantly changing: it seems alive with movement and change, and in this it embodies a believable intelligence. Icons in toolboxes change to indicate the currently selected tool or option, menu lists drop down from toolbars. Context sensitive cursors replace the simple pointer with crosshairs, hourglasses, rotating cogs, a wristwatch with moving hands. The cursor on my word-processor page blinks purposefully whenever I pause in writing this text. Status cursors appear when a mouse button is pressed to indicate the current action or its possibilities. Images of sheets of paper fly from one folder to another.

Our computers, left alone for five minutes, start to display scrolling text messages, Lissajous loops, flying toasters or company logos. We click on an icon representing a program, a folder, a file and drag it around the screen from one place to another. Our simplest and most profound actions are represented in animations. All this ‘move and change’ animation has become commonplace, almost invisible in our familiarity with it.

In interactive multimedia animation is an integral part of the communication. Movement brings emphasis to some sections; it structures and organizes the flow of information; it provides visual interest, pace and feedback. Used well, it promotes the overall meanings of the artefact effectively.

Fig 4.6 Sprite animation draws on separate images that depict each ‘frame’ of the animation. Each of these ‘sprite images’ is displayed in turn at the sprite’s screen location.

An animated object may be made up of several sprites. In the example here the changing smoke trail could be separate to the image of the model train. In sprite-based multimedia these objects may have behaviours associated with them.

EARLY GAMES AND HYPERTEXT

At the end of the twentieth century the increasing accessibility of computer technologies, both economically and in terms of the knowledge required to create content for them and use them, contributed to the emergence of new media forms, and animation became a defining mode within them. Outside of commercial applications, the emergent computer industries in both leisure and military sectors adopted visual display technologies that became increasingly capable of ‘realistic’ graphics.

Early graphics were often crude blocks or lines, but they carried enough information to allow a viewer, willing to suspend disbelief, to accept the ‘reality’ of what was depicted. An important element of these early graphics, and a major feature of their ‘believability’, was change and movement – in short, animation. The on-screen location of the ‘sprite’ images used to construct the display can be changed in computer programming code relatively easily. If this happens fast enough (above 12 frames a second in interactive media) the changes are read as continuous action. Updating the location of a graphic object creates an apparent continuity of movement; changing its image creates a continuity of ‘behaviour’.
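The two kinds of continuity described above can be sketched in a few lines of code. This is a minimal illustration only, not the code of any particular game or authoring package: the `Sprite` class, its field names and the image names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Sprite:
    """A sprite: a set of pre-drawn images plus a screen location (hypothetical structure)."""
    images: list[str]   # names of the separate 'sprite images'
    x: int
    y: int
    frame: int = 0      # index of the image currently displayed

    def step(self, dx: int, dy: int) -> None:
        # Updating the location creates an apparent continuity of movement.
        self.x += dx
        self.y += dy
        # Changing the image creates a continuity of 'behaviour'.
        self.frame = (self.frame + 1) % len(self.images)

    def current_image(self) -> str:
        return self.images[self.frame]


# Hypothetical three-image cycle for a model train moving left to right.
train = Sprite(images=["train_a", "train_b", "train_c"], x=0, y=100)
for _ in range(4):
    train.step(dx=8, dy=0)
```

Calling `step` once per screen update, at 12 or more updates a second, would give the continuous action the text describes.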

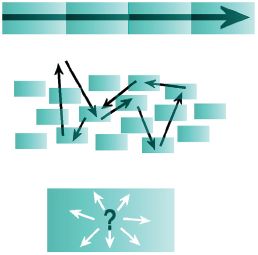

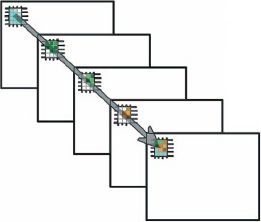

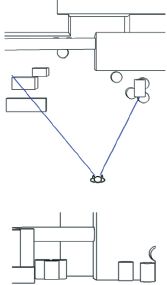

Fig 4.7 Narrative forms can be shown diagrammatically. Here the unisequential mode of the cinema-type animation is shown in the top diagram. Separate episodes follow a single consistent line that does not vary from showing to showing.

The middle diagram represents the narrative form or shape of interactive multimedia where links between sections are set and determined by the author. In this form of multimedia the user chooses from among fixed options, and although their personal pathway may be unique they remain limited to the prescribed links.

The lower diagram illustrates the kind of narrative form that comes with navigable 3D worlds. In these worlds the user is free to go anywhere they wish within the rules of the world.

Some graphic objects – the ball in Pong, the spaceships in Space Invaders – were motivated objects: they apparently moved or changed of their own accord and in increasingly complex ways. Other graphic objects were responsive, moving and changing in accordance with the player’s actions – the paddles in Pong, the weapon in Space Invaders. Between these two sorts of graphic objects existed a play of cause and effect; the actions of the user manipulating the controls of a responsive object could bring about a change in a motivated object. Firing the weapon in Space Invaders at the right moment meant one of the alien ships would disintegrate in a shower of pixels, getting the paddle in Pong in the right place sent the ball careering back across the screen to the goal of your opponent. In the interplay of these graphic objects a new narrative, a narrative of interactivity, began to emerge.
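The interplay of a responsive and a motivated object might be sketched as follows. This is a simplified, assumed model of a Pong-like court (the dimensions, function names and bounce rules are illustrative and are not taken from the original game): the paddle simply follows the player's input, while the ball moves by its own rules and is affected by where the paddle happens to be.

```python
def move_paddle(paddle_y: int, user_dy: int, height: int = 200, paddle_len: int = 40) -> int:
    """Responsive object: follows the player's input, clamped to the court."""
    return max(0, min(height - paddle_len, paddle_y + user_dy))


def move_ball(x, y, vx, vy, paddle_y, width=300, height=200, paddle_len=40):
    """Motivated object: moves by its own rules, bouncing off walls and the paddle."""
    x, y = x + vx, y + vy
    if y <= 0 or y >= height:      # top and bottom walls reverse vertical travel
        vy = -vy
    if x >= width:                 # far wall reverses horizontal travel
        vx = -vx
    if x <= 0 and paddle_y <= y <= paddle_y + paddle_len:
        vx = -vx                   # the paddle was in the right place
    return x, y, vx, vy


# The same ball, with the paddle in the right place and in the wrong place.
hit = move_ball(10, 50, -10, 0, paddle_y=40)
miss = move_ball(10, 50, -10, 0, paddle_y=100)
```

In the first call the ball is sent back across the court; in the second it carries on past the paddle, which is the cause-and-effect relationship the paragraph describes.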

Elsewhere, ideas about the nature of information (put forward by Vannevar Bush and others) were being used to develop computer systems. Those ideas, about associational thinking, about threaded pathways between pieces of information, predate computing technologies and the forms of Random Access storage (on chip and disk) that are associated with them. As computing technologies became more accessible there were a number of people exploring ideas about what Ted Nelson came to call ‘hypertext’ (1968). A hypertext is ‘navigable’; it does not have a fixed and determined order but is determined by the choices and decisions of the user. Hypertext led to ‘hypermedia’ or ‘multimedia’ where the information sources included, alongside written word texts and the links between sections, images, both moving and static, and sound, as music, spot effects and voice.

The shape of a hypermedia narrative may be relatively undetermined; it may have no distinct starting point and no clear ending or sense of closure. This means that there is a very different relationship between the author and the work, and between the audience and the work, than those in traditional, unisequential media. In an important sense the author no longer determines the work and, in an equally important sense, the audience now contributes to the work.

WWW

The World Wide Web is a particular implementation of hypermedia, a particular kind of interactive multimedia. In its earliest form the underlying HTML (HyperText Markup Language), devised by Tim Berners-Lee and others at CERN in the early 1990s, handled only written word text; it did not include images or many of the features later web browsers would implement. Marc Andreessen developed Mosaic, a browser that included the <IMG SRC …..> tag to identify, locate, retrieve and display an image in either .JPEG or .GIF formats.

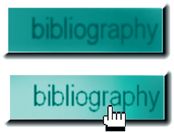

Fig 4.8 Affordance is the visual qualities of a link object that allow it to be recognized.

Here, the bevelled edges and the text name make the object readily identifiable.

The animated change from the arrow to pointy finger cursor is used in web browsers to indicate the presence of a hypertext link. Animation, movement and change, offers a high order of affordance as it makes multimedia objects readily distinguishable.

Affordance is a relative quality – it depends on context and surrounding imagery. An animated cursor is highly visible on an otherwise static background but may be less visible if there is a lot of on-screen movement.

Berners-Lee’s development of embedding URLs in code so that a link could represent rather than state its destination, accompanied by Andreessen’s integration of images, made HTML technologies into a readily accessible form of hypermedia – opening up the Internet to the general public.

It quickly became a convention that a link on a web page is indicated not only by a visual affordance of some kind – text coloured differently to adjacent words, overt ‘button’ design – but by a change in the cursor from arrow to ‘pointy finger’. This simple context-responsive animation provides effective feedback to the user. It reinforces the affordance of the underlining and different colouring that have come to conventionally represent a hyperlink in WWW browsers. Further developments in HTML technologies and especially the integration of JavaScript into browsers meant that image flipping could be used for rollovers within web pages. This gave web page designers a simple form of animation, one which gives a distinct flavour to the user experience because the screen image reacts and changes as the user interacts with it.
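The logic of an image-flipping rollover is small enough to sketch directly. In a browser this would be written in JavaScript and attached to mouse events; the version here is a language-neutral model in Python, and the state names and image file names are hypothetical.

```python
# Hypothetical image files for the three states of one button.
BUTTON_IMAGES = {
    "up": "button_up.gif",
    "over": "button_over.gif",
    "down": "button_down.gif",
}


def rollover_image(cursor_over: bool, mouse_down: bool) -> str:
    """Pick the image to display for the button's current state."""
    if not cursor_over:
        return BUTTON_IMAGES["up"]
    return BUTTON_IMAGES["down"] if mouse_down else BUTTON_IMAGES["over"]
```

Each time the cursor enters, leaves or clicks on the button, the displayed image is swapped for the one returned, so that the screen appears to react to the user.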

Web pages may contain images and these can function as links. Usually and conventionally an image-link is marked by an outlining border in the ‘link’ colour. But this border is a parameter that the designer can alter – setting it to 0 means there is no visible border, and no visible indication that the image is a link. While some images may have a high degree of affordance others may only be identified as a link by the ‘arrow to pointy finger’ animation when the cursor trespasses on the image. In a similar vein a web page may contain image maps. These are images (GIF or JPEG) which have associated with them a ‘map’ of hotspot areas which can act as links or may trigger other scripted actions. The image does not necessarily have any affordance that identifies the hotspot areas. The possibility of action is indicated solely by the context sensitive cursor change from arrow to pointy finger. These techniques enable an artist or designer to embed ‘hidden’ links or actions in an image so that the reader has to search them out through a process of active discovery and exploration. The simple animation of the cursor becomes the tool of discovery.
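The way an image map drives the cursor change can be modelled as a simple hit test. The sketch below assumes rectangular hotspots only; the coordinates, link targets and function names are invented for illustration, and a real browser performs this test internally from the map defined in the page.

```python
from typing import NamedTuple, Optional


class Hotspot(NamedTuple):
    name: str
    left: int
    top: int
    right: int
    bottom: int
    href: str


# Hypothetical hotspot rectangles laid over a single image.
HOTSPOTS = [
    Hotspot("bicycles", 10, 120, 90, 180, "bicycles.html"),
    Hotspot("diver", 150, 20, 200, 100, "diver.html"),
]


def hotspot_at(x: int, y: int, hotspots=HOTSPOTS) -> Optional[Hotspot]:
    """Return the hotspot under the cursor, or None if there is none."""
    for spot in hotspots:
        if spot.left <= x <= spot.right and spot.top <= y <= spot.bottom:
            return spot
    return None


def cursor_for(x: int, y: int) -> str:
    """Arrow over plain image, 'pointy finger' over a hotspot."""
    return "pointer" if hotspot_at(x, y) else "arrow"
```

Because nothing in the image itself marks the rectangles, the value returned by `cursor_for` is the only feedback the user receives while exploring.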

Web pages may contain other animated media, also. These usually require an additional ‘plug-in’ that extends the capabilities of the browser and extends the depth of interactivity that the user may experience. Common animation media that may be incorporated into web pages are Shockwave (a special highly compressed file from Macromedia Director), Flash (vector graphics), QuickTime (Apple’s proprietary technology that allows the playback of linear movies) and various plug-ins that facilitate 3D worlds (such as Cosmo Player and Cult3D). These media forms range from contained objects (QuickTime movies, AVIs) through to objects which are themselves separate instances of interactive media (Shockwave, Flash, VRML).

The web is a networked technology and this has considerable implications for the design of animated media components. There are issues of file size and bandwidth to consider, alongside such things as the diversity of browsers, plug-ins and hardware specifications of the computer on which the work is to be viewed.

Figs 4.10–4.12 Web pages may include image maps. The active areas (here the bicycles, diver and canoeists in fig. 4.10) are revealed when the map is explored by moving the cursor over the image. Any active areas are hotspots (shown in fig. 4.11). Their presence is shown by the arrow cursor changing to the pointy finger above a hot spot area (fig. 4.12).

The use of JavaScript allows the rollover event to trigger actions elsewhere; another image may change, a sound might play.

Virtual worlds

Some of the early computer games and military simulations placed the user in a virtual environment. There, although objects existed as pre-determined datasets (a tank, a space craft), what happened after the user entered the world was entirely the consequence of the user’s interaction with the virtual world, its objects and rules. The early games and simulations, such as David Braben’s Elite, took the form of outline vector graphics. These simple images allowed for a fast redraw of the screen and so a ‘believable’ frame rate and an apparent continuity of action/effect and movement within the virtual world (fig. 4.13).

Fig. 4.13 Vector graphics draw and redraw quickly – for this reason they were used for early ‘3D’ graphics in games and simulators. The green outlined shape on a black background became a strong referent meaning ‘computer imagery’.

As computer processing and graphic display power have increased so these applications have developed from vector graphics to volumetric rendered 3D spaces using texture mapping to create solid surfaces. This is typified in games like Doom, Quake, Tomb Raider, Golden Eye. These forms of interactive multimedia are included in this section because they fall within the concept of ‘change and move’ used to define animation within multimedia. The animation here, of course, is of the world view of the user in that world. This is a special form of animation because the user or viewer is the centre of the animated world. They are an active participant in it rather than a viewer of it. What is seen on the screen is the product of the world, its objects and the user’s position and angle of view. Data about each of those is used to generate the image frame-by-frame, step-by-step, action by action. This is not animation as contained and held at one remove by a cinema screen, a television display, a computer monitor window. This is a believable world come alive around us, and generated for us uniquely, each time we visit.
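How a frame is generated from the user's position and angle of view can be suggested with a deliberately crude, flat (top-down) sketch. It is not how any of the games named above render their worlds: it only places an object horizontally on screen by mapping its angular offset from the viewer's heading linearly across the screen width. The function name, field of view and screen width are assumptions.

```python
import math


def screen_x(viewer, heading_deg, obj, fov_deg=90.0, screen_width=640):
    """Horizontal pixel position of obj for this viewer, or None if out of view."""
    dx, dy = obj[0] - viewer[0], obj[1] - viewer[1]
    bearing = math.degrees(math.atan2(dy, dx))
    # Angle between the viewer's heading and the object, folded into -180..180.
    offset = (bearing - heading_deg + 180) % 360 - 180
    if abs(offset) > fov_deg / 2:
        return None
    # Map the angular offset linearly across the width of the screen.
    return round((offset / fov_deg + 0.5) * screen_width)


ahead = screen_x(viewer=(0, 0), heading_deg=0, obj=(10, 0))
behind = screen_x(viewer=(0, 0), heading_deg=0, obj=(-10, 0))
turned = screen_x(viewer=(0, 0), heading_deg=20, obj=(10, 0))
```

Recomputing this for every object whenever the viewer moves or turns is what makes the world view itself the animation.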

ANIMATION IN iTV (interactive TeleVision)

Interactive media technologies are being adopted and adapted for interactive television. Many set-top boxes that receive and decode iTV signals contain a virtual web browser or similar technology. Move and change animation, in the form of cursors and rollovers, is a key component of the responsiveness of this technology. As with the web there are many issues of bandwidth, download and image resolution for the iTV designer to deal with. This new medium provides further challenges and opportunities for animation and for animation designers.

APPROACHES AND WORKING METHODS

Animation and interactivity blur together not only in the user’s experiences but in the ways in which authoring packages work. Various software packages such as Macromedia Director, Flash and Dreamweaver are used to author interactive content for both stand-alone CD and web-based delivery. Although these are not the only authoring software packages available for creating interactive media content, each has been adopted widely in the industry and each illustrates a particular approach to multimedia content creation.

Director is, at heart, a sprite animation package that uses a timeline to organize its content. Sprites are drawn from a ‘cast’ and placed on a ‘stage’. The attributes of the sprite (location, cast member used, etc.) are controlled through a score. In the score time runs left-to-right in a series of frames grouped together as columns of cells. These cells represent the content of that frame for a number of sprite ‘channels’ which run horizontally. These ideas are discussed again later and illustrated in figures 4.15, 4.16 and 4.30.

Fig 4.14 Vector draw and animation packages, like Flash, can produce in-betweened or morphed shapes.

In this example of a circle morphed into an eight point star, the colour properties of the object have also been morphed or in-betweened across the colour space from cyan to yellow.

Used in animations this process can result in fluid changes of both representational and abstract forms.

The timeline supports the use of keyframes to in-between sprite movements and changes to other attributes. This saves the laborious process of frame-by-frame detailing each sprite’s position and properties. It is a fairly straightforward process to create sprite animations working at the score level.

Sprites are bitmap objects which can be created in Director’s own Paint area but more often are prepared beforehand in a dedicated graphics application like Photoshop, PaintShop Pro or Illustrator. The prepared images are imported into Director and become cast members. These can be dragged onto the stage and positioned as required, or called by code to become the content of a sprite.

Director 8 supports real time rotation and distortion of sprites. Elsewhere, in similar authoring packages and earlier versions of Director it is necessary to create individual images for each small difference in a ‘cast’ member as in the tumbling hourglass that illustrates the first page of this chapter.

Within Director sits Lingo, a complete object oriented programming language. Lingo provides the opportunity for fine control of sprites, sound, movies and other media. It allows for elaborate conditional statements to monitor and respond to the user’s actions. Using Lingo the multimedia designer can craft rich interactive experiences for the user. However, it is not necessary to be a programmer to create interactivity in Director as there is a library of pre-set behaviours that enable basic interactivity.

Flash is inherently a vector animation package. Like Director it uses a timeline to organize and sequence events. This timeline is not the same as the Director score in that it contains only a single channel to start with. In Flash an object which is to be controlled separately has to be placed on an individual layer. As more objects are added more layers are added and the timeline comes to resemble Director’s score. Flash layers do not only hold vector or bitmap objects – they may contain other Flash movies which in turn have objects with their own movement and behaviours.

Later versions of Flash use a programming language called ActionScript to support interactivity and programmed events. ActionScript is a version of ECMAScript, a standard definition that includes also JavaScript and JScript. Like Director, Flash uses some ready-made script elements to enable non-programmers to create interactivity.

Flash graphics are vector-based and this means that they can be in-betweened in both their location and also ‘morphed’ in various ways. Morphing involves a procedure that recalculates the position and relationship of the vector control points. This means that objects can be rotated, stretched, and can be made to dynamically alter shape across a period of time. Because the shapes are vectors they remain true shapes and forms without the unwanted distortions that come when bitmaps are rotated, stretched, sheared, etc.
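The reason vector shapes survive rotation undistorted is that only their control points are recalculated; the shape is then redrawn from those points. A minimal sketch of that recalculation, assuming a shape described simply as a list of (x, y) points (the function name and representation are illustrative, not Flash's internal format):

```python
import math


def rotate(points, degrees, centre=(0.0, 0.0)):
    """Return the control points rotated about centre by the given angle."""
    angle = math.radians(degrees)
    cx, cy = centre
    rotated = []
    for x, y in points:
        dx, dy = x - cx, y - cy
        rotated.append((
            cx + dx * math.cos(angle) - dy * math.sin(angle),
            cy + dx * math.sin(angle) + dy * math.cos(angle),
        ))
    return rotated


# A hypothetical square, rotated a quarter turn about the origin.
square = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0)]
turned_square = rotate(square, 90)
```

A bitmap, by contrast, has to have every pixel resampled onto the new grid, which is where the unwanted distortions come from.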

Web pages were originally static layouts. Early versions of HTML (the page description language that is used to create web pages) did not support movable or moving elements. Movement was confined to animated GIFs, rollovers and the cursor change that indicated a link. Later versions of HTML (the so-called Dynamic HTML) support the creation of independent layers within the page. These layers have their own attributes of location, size and visibility and can be made to move by restating their position within a screen update. This provides a simple way of moving elements around the screen. However, it remains slow (frame rates below 10 a second are common) and relatively clumsy in visual terms.

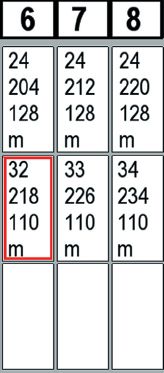

Figs 4.15 and 4.16 The timeline (fig. 4.15), a graphic representation of sprites and their properties, is a common tool in multimedia authoring software. Usually time flows left-to-right and the vertical axis describes what is happening on screen frame-by-frame.

In this example the red dots represent keyframes and the red bars show in-betweening actions of the sprites.

The cells on the grid hold information about the sprite’s location on screen, its contents and associated behaviours. These can usually be viewed in an ‘expanded’ display – an example is shown in fig. 4.16.

In this extended timeline display the first line in each cell is the number of the image drawn from the sprite bank, next come horizontal and vertical screen co-ordinates and then the properties of the sprite – here ‘m’ means a matte display. In the topmost cells a single image moves across the screen in 8 pixel steps. In the next line of cells sequential images change frame-by-frame to create an animated change and move across the screen in 8 pixel steps. Again the red boxed line indicates a ‘keyframe’.
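The contents of such cells can be generated programmatically. The sketch below builds one channel of cells in the order the caption describes (image number, horizontal and vertical co-ordinates, then the display property); the function name, the image numbers and the starting positions are hypothetical.

```python
def score_cells(first_image, image_count, start_x, y, frames, step=8, ink="m"):
    """Build the cells of one sprite channel: (image number, x, y, property)."""
    cells = []
    for frame in range(frames):
        image = first_image + (frame % image_count)   # cycle through the images
        cells.append((image, start_x + frame * step, y, ink))
    return cells


# Top channel: a single image moving across the screen in 8 pixel steps.
channel_1 = score_cells(first_image=1, image_count=1, start_x=0, y=100, frames=4)
# Next channel: sequential images that change while also moving in 8 pixel steps.
channel_2 = score_cells(first_image=10, image_count=3, start_x=0, y=160, frames=4)
```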

Dreamweaver and similar web authoring packages use a timeline and keyframe concept to organize layered elements. Although these web authoring packages will generate JavaScript code for things like rollovers and mouseclick actions, they rarely provide access to the depths and power of the language. This limits what can be done in web pages in terms of interactivity unless the designer or artist is willing to learn how to program in JavaScript or JScript. As Director and Flash files can be exported in forms suitable for embedding in web pages, the restricted animation and interaction possibilities of web authoring packages are not a limit to the creativity of designers and artists.

The graphics in a web page are external assets that have to be prepared beforehand in dedicated image creation software. There are two dominant image formats used in web pages, .GIF and .JPG (a third, .PNG, is supported in browsers but not widely used). In broad terms .JPG files are best used for photographic-type images. This format uses a lossy compression routine but preserves the visual quality of photographic-type images. The .GIF format is best used for images with areas of flat colour. This format uses a lossless compression routine (LZW) that works well on, and preserves the visual quality of, this type of image. The .GIF format also supports a transparent colour option which means that images need not be contained in a rectangle. The GIF89a format supports image-flipping animation. This animation format is a pre-rendered bitmap mode; it holds a copy of all the images in the animation and displays them sequentially.

The timelines and keyframes

At the heart of multimedia authoring packages like these is a tool for organizing events across time – in most instances this is presented as a timeline of some kind.

The use of a timeline to construct a representation of actions makes creating animation (and interactive media) a kind of extended drawing practice. The timeline is a visualization of events that can be run or tested out at any point in its development. This means that ideas about movement, pace, timing, visual communication can be tried out and developed through a kind of intuitive test-and-see process. This playing with versions is like sketching where ideas are tried out.

The majority of timeline tools include ‘keyframes’ and ‘in-betweening’ routines. In terms of a sprite object that moves across the screen from the left edge to the right edge there need only be two keyframes. The first will hold the left-edge co-ordinates of the sprite’s position on screen – here 0, 100 (0 pixels in from the left on the horizontal axis, 100 pixels down from the top on the vertical axis). The second keyframe holds the sprite’s co-ordinates at the right edge of the screen, here 800, 100 (800 pixels in from the left on the horizontal axis, 100 pixels down from the top on the vertical axis). The number of frames between the first keyframe and the second is used to decide the frame-by-frame ‘in-between’ movement of the sprite. So, if there are 20 frames between the keyframes the sprite will move 800 pixels/20 = 40 pixels between each frame; if there are 50 frames then 800 pixels/50 = 16 pixels between each frame.
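The arithmetic of in-betweening can be written as a short linear interpolation. This is a sketch of the general technique rather than the routine used by any particular package, and it assumes the sprite starts at x = 0 so that it travels the full 800 pixels used in the division above; `in_between` is a hypothetical name.

```python
def in_between(start, end, frames):
    """Positions from the first keyframe to the second, one per frame step."""
    (x0, y0), (x1, y1) = start, end
    return [
        (x0 + (x1 - x0) * i / frames, y0 + (y1 - y0) * i / frames)
        for i in range(frames + 1)
    ]


fast = in_between((0, 100), (800, 100), frames=20)   # 40 pixels per frame
slow = in_between((0, 100), (800, 100), frames=50)   # 16 pixels per frame
```

Only the two keyframe positions and the frame count are stored; every intermediate position is calculated, which is what saves the frame-by-frame detailing.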

Keyframes in multimedia can hold other properties also – the sprite’s rotation, transparency, the size of the bounding rectangle it is mapped into. With vector objects keyframes may be used to ‘morph’ the shape. Morphing can apply to several of the object’s attributes. Its shape can be morphed because the curves that make up the shape are recalculated between frames and the shape is redrawn. Colour values can be ‘morphed’ because they can be stepped through a palette or colour range. The line and fill attributes of objects can also be used for sequential changes across a range of frames (fig. 4.17).

Fig. 4.17 Interactive multimedia can be thought of as a series of looping episodes, each likely to contain animated elements. The user’s actions ‘jump’ between the loops creating a ‘personal’ version of the story.

Used for a simple linear animation the timeline represents the process from the first frame to the last frame of the sequence. For interactive multimedia the timeline is the place where the conditions and separate sequences of the work are determined and represented. An interactive artefact can be thought of as a set of looping sequences; within each loop there are conditions that allow a jump to another (looping) sequence or a change to what is happening in the current one. Those conditions are things like ‘when the mouse is clicked on this object go to …’, or, ‘if the count of this equals ten then do …’. The authoring of these conditions, the programming of them in effective code that does what the designer or artist intends, is a large part of the challenge of creating interactive multimedia.

Some technical considerations

Animation in multimedia, in all its forms, is generated rather than pre-rendered. The presence, location and movement of elements are determined by the unique actions and histories of the user. This means that the designer of multimedia has to take aboard technical issues that impact on the quality of the animation. Those qualities are, essentially, visual and temporal. Visual includes such things as the colour depth and pixel size of sprites. Temporal includes things such as the achievable frame rate, the system’s responsiveness to user actions. The designer’s or artist’s original idea may need to be redefined in terms of what can be done within the systems that the multimedia application will be shown on.

Technological advances in processor speed, graphics capabilities (including dedicated graphics co-processors) and the increasing power of base specification machines would suggest that these problems are less relevant than they once were. Unfortunately, while the power of readily available computers has risen exponentially, so too have users’ expectations and designers’ visions. A good example of this is the shift in the ‘standard’ screen display. VGA, once seen as a high resolution standard, is set at 640 × 480 pixels (a total of 307 200 pixels) and usually thought of in the context of a 14 inch (36 cm) monitor. Nowadays it is more common to have an 800 × 600 pixel display (480 000 pixels) on 15 or 17 inch monitors and this is giving way to resolutions of 1024 × 768 (786 432 pixels) and above. Displays of 1280 × 1024 (over 1 million pixels) will soon be common. This is coupled with a similar advancement in colour depth from VGA’s 8-bit (256 colour) display to contemporary 24-bit colour depths. Taken together these factors mean that the graphics handling capabilities of ordinary computer systems, things like the speed at which a buffered image can be assembled and displayed, remain an issue for animation in multimedia and on the web. While processor speed has increased massively since the VGA standard was established, the usual graphics display has nearly tripled in its pixel count and in its colour depth.

The expectations of the audience have increased considerably. When VGA first came out any movement on screen seemed almost magical. Carefully developed demonstrations meticulously optimized for the machine’s capabilities would show checker-patterned bouncing balls, with shadows, in a 320 × 240 pixel window. Contemporary audiences expect much more, and future audiences will continue to expect more of the quality of animation in their multimedia applications.

The quality of animation in multimedia, the movement and change of parts of the visual display, is affected by a number of factors. These are primarily technical but impact on the aesthetic qualities of the work. For smooth movement the display needs to maintain a frame rate of at least 12 frames per second and preferably twice that. While pre-rendered animation media can use advanced codecs (compression/decompression algorithms) to reduce both file size and the required media transfer bandwidth, a responsive multimedia animation is generated on the fly. This means that the system has to:

1. take note of user actions

2. calculate the consequences of the user actions in terms of sprite attributes (location, etc.) or in terms of vectors

3. locate and draw on assets (sprites) held in RAM or on disk, or recalculate vectors

4. assemble and render a screen image (place assets in z-axis order, place bitmaps, draw out vector effects, calculate any effects such as transparency, logical colour mixing) within the monitor refresh time

5. display that image.

Fig. 4.18 In a sprite animation package, like Director, the separate screen elements overlay each other. The value of the displayed pixel is the result of calculating which object sits in front of the others and how its logical mixing affects the values of underlying sprite images.

If all that is happening on the screen is a system cursor moving over a static image, smooth and regular movement is not a problem. The Operating System handles the cursor display and screen refresh. But more complex tasks can result in image tear (as the monitor refresh rate and the speed at which a new display is calculated fall out of synch) or a juddering custom cursor that is not convincingly tied in to mouse movements.

Using a large number of sprites, and using large sprites, using compound transparencies and logical mixing (Director’s ink effects), all have an overhead in terms of the third and fourth steps. Fetching bitmap sprites from memory takes time, fetching them from external asset sources such as a hard disk or CD-ROM takes longer. The larger the sprites are, and the more of them there are, the longer it takes to get them to the buffer where the screen image is being assembled and rendered.

The calculation of a final pixel value for the screen display can be thought of as a single line rising through all the elements of the display from the backmost to the foremost. If the elements simply overlay each other then the calculation of the pixel’s value is straightforward, it is the value of the pixel in that position on the foremost element. If, on the other hand, the foremost element, and any other underlying ones, has a logical colour mixing property, then the calculation is more complex, and it takes longer to determine the colour value of the display pixel.
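The back-to-front calculation can be sketched as follows. This is a simplified model under stated assumptions: each element contributes an RGB value and an opacity between 0 and 1 at the pixel in question, and a plain weighted blend stands in for logical colour mixing. Director’s actual ink effects are more varied and are not reproduced here, and the name `composite_pixel` is hypothetical.

```python
def composite_pixel(layers):
    """Work out one display pixel from a stack of overlapping elements.

    layers is a list of (rgb, opacity) pairs ordered from the backmost
    element to the foremost; rgb is a tuple of three 0-255 values and
    opacity runs from 0.0 (invisible) to 1.0 (fully covering).
    """
    result = (0, 0, 0)  # assumed black stage behind everything
    for rgb, opacity in layers:
        result = tuple(
            round(opacity * new + (1 - opacity) * old)
            for new, old in zip(rgb, result)
        )
    return result


# Simple overlay: the foremost opaque element decides the pixel.
print(composite_pixel([((255, 255, 0), 1.0), ((255, 0, 0), 1.0)]))

# Mixed element in front: every underlying layer has to be visited.
print(composite_pixel([((0, 0, 255), 1.0), ((255, 0, 0), 0.2)]))
```

In the opaque case a real renderer could stop at the foremost element. Once any element mixes with what lies beneath it, the whole stack has to be evaluated for that pixel, which is where the extra time goes.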

Although we are talking a few milliseconds for each ‘delay’ in fetching assets and in calculating pixel values these can still add up considerably. To refresh a screen to give a believable continuity of movement of 12 f.p.s. means updating the display every 0.083 seconds (every 83 milliseconds). To achieve full motion halves the time available.
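The time budget per frame is simple arithmetic, sketched here in Python. The per-step costs in the example are invented purely for illustration and are not measurements of any real system; the function names are likewise hypothetical.

```python
def frame_budget_ms(fps):
    """Time available to prepare each frame, in milliseconds."""
    return 1000.0 / fps


def fits_budget(step_costs_ms, fps):
    """True if the summed per-frame costs fit inside one frame interval."""
    return sum(step_costs_ms) <= frame_budget_ms(fps)


# Hypothetical costs: input handling, asset fetch, compositing, display.
costs = [2.0, 25.0, 30.0, 5.0]
print(round(frame_budget_ms(12)), fits_budget(costs, 12))
print(round(frame_budget_ms(24)), fits_budget(costs, 24))
```

With these illustrative costs (62 ms in total) the work fits the 83 ms budget at 12 f.p.s. but not the budget of roughly 42 ms at 24 f.p.s.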

A fairly ordinary multimedia screen may include a background image (800 × 600), possibly 10–15 other sprites (buttons with rollover states, static images, calculated animation images), a text box, possibly a Quicktime movie or AVI file, a custom cursor image dynamically placed at the cursor’s current co-ordinates. And the machine may also be handling sound (from the Quicktime or AVI, spot sounds on button rollovers).

To achieve continuous smooth movement needs careful design bearing in mind all the factors that may impair the display rate. When designing for a point-of-sales display or for in-house training programmes, where the specification of the display machine is known, things can be optimized for the performance parameters of the display machine. Elsewhere, when working on multimedia that will be distributed on CD-ROM, the diversity of machines used by the wider ‘general public’ makes the design process more difficult. It is quite usual to specify a ‘reference platform’, a minimum specification on which the software will run as intended, and ensure that all testing and evaluation takes place on a computer with that specification. That way the designer or artist gains some control over how their multimedia and animations will behave.

In web pages, where the animated multimedia forms happen within the environment of a browser, there is the additional computing overhead of the browser itself. When animating layers using (D)HTML/Javascript processes the usual display refresh speed is between 12 and 18 f.p.s. Shockwave files may run faster and Flash, using vector graphics, can achieve a much higher rate still.

But for web pages the big issue is, and for some time to come will remain, download time. The speed of connection determines how quickly the media assets of the HTML page become available. It is generally thought that users will wait 10 seconds for a page to appear. Designing web animated graphics (rollovers, GIF animations, etc.) that will download in that time on an ordinary connection is an important consideration of the web page realization process.
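A rough download estimate can be made before any graphics are produced. The sketch below assumes a nominal connection speed of 56 000 bits per second as an example of an ‘ordinary’ dial-up connection; that figure is an assumption, and the calculation ignores latency, protocol overhead and line quality, so real downloads will be slower.

```python
def download_seconds(file_bytes, bits_per_second):
    """Idealized transfer time for a file of the given size."""
    return file_bytes * 8 / bits_per_second


def max_bytes(seconds, bits_per_second):
    """Largest total page weight that arrives within the time limit."""
    return int(seconds * bits_per_second / 8)


ASSUMED_SPEED = 56_000  # bits per second, nominal; an illustrative value

print(max_bytes(10, ASSUMED_SPEED))          # page budget for a 10 second wait
print(download_seconds(4_000, ASSUMED_SPEED))  # one small animated GIF
```

The budget covers everything on the page (HTML, images, animations), so each rollover image or GIF animation takes its share of the total.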

Figs 4.19–4.25 Various forms of rollover showing display and alert states.

Fig. 4.19 The red torus comes into focus, which conveys a very literal message to the user about where their actions are centred.

Fig. 4.20 The indented surface suggests a plasticity and materiality that relates on-screen actions to real world tactile sensations.

Fig. 4.21 The button lights up, making the text clearer.

Fig. 4.22 The plastic duck dances with the rollover facing right then left and then right again when the cursor moves away.

ROLLOVERS, BUTTONS AND OTHER RESPONSIVE OBJECTS

The rollover is a common animated element within multimedia. The ‘come to life’ change that happens as the mouse is moved over a screen object confirms the responsiveness of the application. At one level this is a form of phatic communication; it lets us know the thing is still working, that communication is happening. At another level rollovers contribute to our feeling of agency and to our understanding of what we can do and where we can do it within the application.

This section takes a broad view of what rollovers are in order to discuss some of the principles and issues involved in sprite animation as it is used in interactive multimedia.

Rollovers are responsive objects. They change when the user interacts with them. Other responsive objects include buttons that change when clicked on, objects that can be dragged around the screen, and text links. In the case of a rollover the change happens when the cursor enters the ‘hotspot’ that the object makes in the screen display. In the case of buttons and text links the change happens when the cursor is above the object and the mouse button is clicked. In some instances the responsive object includes both modes – the object changes when the cursor enters its area, and changes again when the mouse button is clicked (and may change again while any action initiated by the mouse click takes place). These changes give a rich feedback to the user about the application’s responsiveness, its recognition of their actions, and the user’s agency within the work. Rollovers may be clearly marked (having a visual affordance that differentiates them from the surrounding image) and may be used as overt navigation devices. The user makes decisions about what to do after recognizing and reading them.

Fig. 4.23 When the picnic hamper is rolled over, it takes on colour and increasing detail; this kind of visual change provides clear affordance about actions and opportunities.

Fig. 4.24 The hand of playing cards fans open to suggest either a flawed flush, or a run with a pair, and this play with meaning contributes to the overall tone or atmosphere of the page.

Fig. 4.25 The button has a bevelled edge effect which makes it stand proud of the background surface; on rollover the reversed bevel creates a recess effect, giving a ‘soft’ version of real world actions.

The category of Rollover includes also any ‘hotspot area’ in the display image. These are the active areas of image maps in web pages, and transparent or invisible objects in authoring packages like Director. The active area may only be recognized because of a change to the cursor or because an action is triggered somewhere else in the display. Hotspots, and rollover objects without clear affordance, make navigation a process of exploration rather than route following.

The change that happens with a rollover may be displaced or direct. That is the change may happen where the cursor is, in which case it is direct, or may happen in some other part of the display, in which case it is displaced. In some instances the change may happen simultaneously in more than one place – the area where the cursor is may light up to indicate what option is selected while an image elsewhere changes which is the content of that option.

Buttons are a particular kind of active area in multimedia applications. They have a high level of affordance which clearly distinguishes them from the surrounding image, and are used to indicate choices available to the user. Their depiction in the ‘soft world’ of the interface is sometimes a representation of a similar device in the ‘hard world’ of physical devices. This correspondence between the hard and soft objects means that such buttons have an ‘iconic’ mode of representation – the soft button looks like the hard physical object. So, a button may have a 3D appearance that gives it the appearance of standing proud of the surrounding surface. Some graphics editors (notably PaintShop Pro) have tools that ‘buttonize’ an image by darkening and lightening the edges to give a bevelled appearance to the image. When the mouse is clicked over these ‘iconic’ buttons it is usual that the change of state of the object simulates a real world action. The button appears to be pressed into the surface, it changes colour, or appears to light up, and often a sound sample provides aural feedback as a distinct ‘click’. These literal, or iconic, buttons have many uses especially when a simple and clear navigation is required. Because of their iconic image quality they are suitable for use with audiences who are not familiar with using computers, or for applications that have to work readily with a wide range of people.

The ‘button’ is a multistate object: the current state is dependent on the user’s current or past actions. So, we can think of this kind of object as having these states or features:

• display – its appearance as part of the display, and to which it returns when not activated (usually)

• alert – its appearance when the cursor enters its bounding or hotspot area

• selected – its appearance when ‘clicked on’ to cause the action it represents to be initiated

• active – its appearance while the action it represents happens (and while it remains in the display if a change of display occurs)

• hotspot – while not a part of its appearance this is the part of the object’s display area that is ‘clickable’.
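The states listed above can be modelled as a small state machine. The sketch below is one possible reading, not the behaviour of any particular authoring package: the event names (`cursor_enter`, `mouse_down` and so on) and the exact transitions are assumptions made for illustration.

```python
# (current state, event) -> next state; unknown pairs leave the state unchanged.
TRANSITIONS = {
    ("display", "cursor_enter"): "alert",
    ("alert", "cursor_leave"): "display",
    ("alert", "mouse_down"): "selected",
    ("selected", "mouse_up"): "active",
    ("active", "action_done"): "display",
}


def next_state(state, event):
    """Return the button state that follows the given event."""
    return TRANSITIONS.get((state, event), state)


state = "display"
for event in ["cursor_enter", "mouse_down", "mouse_up", "action_done"]:
    state = next_state(state, event)
    print(event, "->", state)
```

Each state would be paired with its own image (or animation). The hotspot does not appear in the table because it decides whether `cursor_enter` and `cursor_leave` are raised at all.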

Not all responsive objects make use of all these modes. When GIF or JPEG images are used on a web page, for instance, it is the bounding rectangle of the image that is defined as the ‘hotspot’ area. This can mean that when a GIF with a transparent background is used to have an irregular shaped graphic object, the arrow to pointy finger cursor change that indicates the link, and the clickable area, do not overlay and correspond to the image exactly. By using an image map in a web page an irregular area can be defined as a hotspot separate from the image’s bounding rectangle. Similar issues affect sprites used as rollovers in packages like Macromedia’s Director, where the rectangular bounding box of the sprite can contain transparent areas. Lingo, the programming language underpinning Director, has a special command that makes only the visible image area of a sprite the active hotspot for a rollover.
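The difference between a bounding-rectangle hotspot and a visible-area hotspot can be shown with a small sketch. The sprite here is a hypothetical diamond shape described by a text mask (`#` for visible pixels, `.` for transparent ones); the function names are invented and this is not how Lingo or a browser implements the test.

```python
SPRITE = {
    "left": 10,  # screen x of the bounding rectangle's left edge
    "top": 20,   # screen y of the bounding rectangle's top edge
    "mask": [
        "..##..",
        ".####.",
        "######",
        ".####.",
        "..##..",
    ],
}


def in_bounding_box(sprite, x, y):
    """Hotspot test using the whole rectangle, transparent parts included."""
    col, row = x - sprite["left"], y - sprite["top"]
    return 0 <= row < len(sprite["mask"]) and 0 <= col < len(sprite["mask"][0])


def on_visible_pixel(sprite, x, y):
    """Hotspot test that only counts the visible part of the image."""
    if not in_bounding_box(sprite, x, y):
        return False
    col, row = x - sprite["left"], y - sprite["top"]
    return sprite["mask"][row][col] == "#"


# The top-left corner is inside the rectangle but over a transparent pixel.
print(in_bounding_box(SPRITE, 10, 20), on_visible_pixel(SPRITE, 10, 20))
```

At the corner position the rectangle test reports a hit while the visible-pixel test does not, which is exactly the mismatch between cursor change and image described above.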

Fig. 4.26 In web pages and in multimedia authoring, images used as rollovers need to be the same size otherwise they will be stretched or squashed to fit.

More things about rollovers and buttons

An important issue, in practically all situations, is making sure that images used for rollovers are the same pixel size. Web pages and multimedia authoring packages will usually resize an image to fit the pixel dimensions of the first image used. This has two effects: firstly images may be stretched, squashed and otherwise distorted, and so the designer loses control of the visual design, and secondly there is a computing overhead in the rescaling of the image which may affect responsiveness and should be avoided.

Web authoring packages like Macromedia Dreamweaver make it relatively straightforward to create rollovers with ‘display’ and ‘alert’ states using two images. Usually these rollovers indicate a potential link, and the following mouseclick selects that link and sets in action the display of another page.

Rollovers can be used much more imaginatively than as two state links. Advanced use of Javascript in web pages and underlying programming languages like Lingo in Director, opens up rich possibilities for the artistic use of activated objects. These include randomizing the image used in the alert or selected states so that the user’s experience is continually different, causing unexpected changes to other parts of the display than the current cursor position – creating displaced rollover effects – and playing spot sound effects or music along with, or instead of, image changes.

It is not necessary to return an activated object to its display state when the mouse leaves the hotspot area. By leaving the object in its alert state a lasting change happens that persists until the whole display changes. This can give the user strong feelings of agency, of effective intervention in the application. Blank or wholly transparent images which change on rollover can become parts of a picture the user completes through discovery and play.

Fig. 4.27 Flash uses a 4-state object as a button. The ‘hotspot’ area (the outline grey image on the right) is defined separately from the display, alert and selected state images.

Flash uses self-contained movie (symbol) objects for buttons. The Flash button/symbol editor has four boxes for images which will be used as a button; the first three are used for images, the fourth is a map area used to define the ‘hotspot’ of the symbol/button. The three images define the display, alert and selected states. Having an independently defined hotspot area means the designer or artist working with Flash is readily able to create irregular shaped images with a corresponding hotspot.

Activated objects do not have to be static images. In web pages an animated GIF may be used for the display state and another animated GIF for the alert state on rollover. In Flash the symbol/button images can be, themselves, Flash movies. A particularly effective approach is to use an animation for the display state and a static image for the rollover, alert, state – this has the effect of the user’s actions stopping or arresting change in one part of the screen. Similarly in a package like Director where the contents of a sprite are defined in relation to a set of assets (in Director this is known as the cast) relatively straightforward programming can be used to change the cast member currently displayed in the sprite. This would create an illusion of animation. By changing a variable within the code, the order in which the cast members are displayed can be reversed, randomized, changed to another set of images, or whatever the designer’s imagination dreams up.
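The idea of swapping the asset shown in a sprite can be sketched in Python rather than Lingo. The cast member names and the function `member_for_frame` are hypothetical; the point is only that a single variable (here `step`) controls the order of display.

```python
import random


def member_for_frame(cast, frame, step=1):
    """Pick the cast member to show on a given frame.

    step=1 plays the cast in order, step=-1 plays it in reverse;
    the sequence wraps around so that it loops.
    """
    return cast[(frame * step) % len(cast)]


cast = ["tap1", "tap2", "tap3"]

forward = [member_for_frame(cast, f) for f in range(4)]
backward = [member_for_frame(cast, f, step=-1) for f in range(4)]
shuffled = [random.choice(cast) for _ in range(4)]  # randomized display

print(forward)
print(backward)
print(shuffled)
```

Changing `step`, or replacing `cast` with another list of images, alters the animation without touching the sprite itself.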

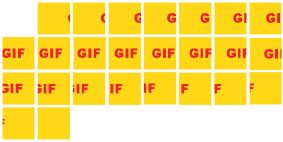

GIF ANIMATION

Animated GIFs are a special form of the .GIF file format used in web pages. The principle and ideas involved in GIF animation illustrate a number of points about animation in multimedia generally as well as specifically for the web.

Animated GIFs are basically a form of image-flipping animation where one image overlays another in turn. The rate at which the images flip is an attribute of the file format and is independent of other surrounding media. This means that animated GIFs can be used for a slideshow (slow rate of change) or for character animations (fast rate of change). Another file level attribute is ‘loop’; this can be set to continuous so that the animation plays over and again, and, if made to have a seamless repeat will appear to have no particular beginning or end. ‘Loop’ can also be set to fixed numbers; a setting of 1 causes the animation to play through once and stop on its last frame.

GIF animations are pre-rendered bitmaps that have a colour depth of up to 256 colours (8-bit depth). The compression algorithm used in the .GIF file format makes it especially useful for images with areas of flat colour – it is not very good at compressing photographic type images or images which are highly dithered. All the images that make up the animation sit within a bank of images that are displayed sequentially. The file size of the GIF animation is a product of four factors. Three of those – image resolution (X, Y pixel size), the number of frames, and colour depth (2, 4, 5, 6, 7 or 8-bit) – are calculable by the designer. The fourth, the consequence of the compression algorithm, is not predictable without a detailed understanding of how the routine works. In general terms, large areas of continuous colour in horizontal rows compress very well; areas with a lot of changes between pixels will not compress well (fig. 4.28).

Fig. 4.28 GIF animations are pre-rendered bitmaps. The individual ‘frames’ are displayed sequentially to create the illusion of movement.

For example, the red letters ‘GIF’ scroll horizontally across a yellow background and disappear. They form an animation which is 100 × 100 pixels image size, which lasts for 25 frames, and which has a 4-bit colour depth (16 colour palette). This information can be used to calculate the ‘raw’ file size of the animation:

• 100 × 100 pixels = 10 000 pixels for each frame.

• 25 frames × 10 000 pixels = 250 000 pixels.

• 4-bit colour depth means 4 × 250 000 = 1 000 000 bits.

• Divide by 8 to translate to bytes means 1 000 000/8 = 125 000 bytes = 125 kilobytes for the raw file size.

Note: this calculation is not truly accurate: there are in fact 1024 bytes in a kilobyte – but for ready reckoning 1000 is an easier number for most of us to use.
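The same calculation can be written as a short function, which makes it easy to try other sizes, frame counts and colour depths. The function name `raw_gif_bytes` is invented for this sketch, and the result is the uncompressed pixel data only, not the size of an actual .GIF file.

```python
def raw_gif_bytes(width, height, frames, bit_depth):
    """Uncompressed size of the pixel data for an image-flipping animation."""
    pixels = width * height * frames
    bits = pixels * bit_depth
    return (bits + 7) // 8  # round up to whole bytes


size = raw_gif_bytes(100, 100, 25, 4)
print(size)          # bytes
print(size / 1000)   # 'ready reckoning' kilobytes
print(size / 1024)   # kilobytes counted as 1024 bytes
```

For the scrolling ‘GIF’ example this gives 125 000 bytes, or about 122 kilobytes when a kilobyte is counted as 1024 bytes.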

Using the optimization tools in JASC Animation Shop 3.02 this animation is reduced to just under 4 kb (a 30:1 compression) and this is fairly standard for an animation that includes simple flat colour areas.

Some GIF animation optimizers will offer a temporal (delta) compression option. This form of compression is particularly suited to moving image work such as animation as it works by saving a reference frame (usually the first) and then saving only the differences between that frame and the next.
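The principle of temporal compression can be illustrated with a toy encoder. This is a sketch of the general idea only, assuming each frame is a flat list of palette indices of equal length; it is not the algorithm used by any particular GIF optimizer, and the function names are hypothetical.

```python
def delta_encode(frames):
    """Keep the first frame whole, then only the pixels that change."""
    reference = list(frames[0])
    deltas = []
    previous = reference
    for frame in frames[1:]:
        changes = [
            (i, new) for i, (old, new) in enumerate(zip(previous, frame))
            if old != new
        ]
        deltas.append(changes)
        previous = frame
    return reference, deltas


def delta_decode(reference, deltas):
    """Rebuild every frame from the reference frame and the changes."""
    frames = [list(reference)]
    for changes in deltas:
        frame = list(frames[-1])
        for i, value in changes:
            frame[i] = value
        frames.append(frame)
    return frames


# A single 'red' pixel (1) moving across a 'yellow' row (0).
frames = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]
reference, deltas = delta_encode(frames)
print(deltas)
print(delta_decode(reference, deltas) == frames)
```

When little changes between frames the lists of differences are short, which is why this approach suits animation with a static background.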

Using and making GIF animations

In web pages, GIF animations are flexible and can be used effectively in many situations. Used as the active state of a rollover they mean that a button or non-linked area of the screen can awaken, ‘come to life’, when the cursor passes over it. And when the cursor moves out of the area the activity may stop, suggesting a quieted state, or the animation may continue, suggesting something brought to life. This coming to life of the ‘soft’ surface of a button that slowly recovers from the cursor contact may be simply decorative, providing richer visual interest than a static image. By placing a static image that is the same as the final image of a ‘loop once’ GIF animation on the screen, the move from static to moving image can be made apparently seamless. This ‘continuity’ of the visual display supports the user’s experience of a responsive system and gives to their actions an appropriate agency within the work.

A wide range of software supports the creation of GIF animations. Some (like JASC’s Animation Shop, and GIF animator) are dedicated software packages that import images made elsewhere and render and optimize the GIF animation. This sort of package will often also include some sort of generator for moving text, transitions and special effects. These features allow the user to create frames within the package by adjusting the parameters of a predetermined set of features. Typically the moving text generator tools will allow the user to create text that scrolls across from left or right, up or down, which spins in to the frame, with or without a drop shadow, is lit from the front or back by a moving light. Transitions and effects will offer dissolves, fades, ripples of various kinds.

While dedicated packages like these are very good at optimizing file sizes, care should be taken when using the generator tools. Firstly, because GIF animations are bitmaps adding a few frames for a transition soon increases the file; secondly, the effects add detail in both colour and pixel distribution, and this can increase the file size of the animation considerably. Taken together these factors mean the file will take longer to download. For many users this is something they are paying for in their online charges.

Many image editing and creation software packages (Photoshop, Fireworks, Paintshop Pro) either support the direct creation of animated GIFs or have associated tools that integrate with the main application. In some of these packages particular animation tools such as onion-skins, or layer tools, can be used to provide guides to drawing or positioning sequential images. These tools allow for a previsualization of the animation on screen, but when creating an animated GIF, the same as with any animation, it is a good idea to sketch out the idea and some simple images on paper first. We all feel less precious about thumbnail sketches than we do about on-screen imagery so when working with paper we are more ready to start again, to throw poor ideas away, to rethink what we want to say and how well we are saying it.

MOVABLE OBJECTS

In many multimedia applications the user can drag image objects around the screen. These objects may be static or animated, large or small, move singly or in multiples around the screen. Some may move relative to the mouse action, others may move independent of it because their movements do not correspond completely to the user’s actions.

This category of movable objects includes the custom cursors. In most multimedia applications there are commands to turn off the display of the system cursor, to read the current X-axis and Y-axis co-ordinates of the cursor, and to place a sprite at a particular set of co-ordinates. A custom cursor is simply a sprite that is mapped to the screen co-ordinates of the mouse. By continuously updating the current cursor X-axis and Y-axis values for the sprite it appears to behave like a cursor.

Fig. 4.30 Sprite images that are to be used for sequential animation should be placed in numbered order.

The registration point of a sprite, the point that places it at the screen co-ordinates, is usually in the centre of the bounding rectangle (fig. 4.31), but it can be changed to any point within the area (fig. 4.32).

If the image content of the sprite is changed in some way the cursor will appear to animate separately from its own movement. For example, the image asset used for the sprite may change through a sequence that appears to have a continuity within its changes – a dripping tap for example, or sand running through an hourglass.

A sprite has a ‘reference point’ which is the place mapped to the X, Y co-ordinates. Where this reference point is located (sprite centre, upper left, etc.) varies between applications but most allow the designer to alter it. In Director, for example, the reference point for bitmap graphics is initially the centre of the bounding rectangle of the cast member when it is created. The reference point tool in the Paint window allows this to be redefined. For drawn objects, in Director, the original reference point is the upper left corner of the bounding box.
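The relationship between cursor position, reference point and sprite placement can be sketched as follows. It assumes that a sprite is drawn from its top-left corner and that the reference point is given as an offset from that corner; the function names are hypothetical and do not correspond to commands in Director or any other package.

```python
def centre_reference(width, height):
    """Reference point at the centre of the bounding rectangle."""
    return (width // 2, height // 2)


def sprite_top_left(cursor_xy, reference_xy):
    """Top-left position that puts the reference point on the cursor."""
    cursor_x, cursor_y = cursor_xy
    ref_x, ref_y = reference_xy
    return (cursor_x - ref_x, cursor_y - ref_y)


cursor = (400, 300)  # current mouse co-ordinates, read once per frame

# A 32 x 32 pixel custom cursor with a centred reference point.
print(sprite_top_left(cursor, centre_reference(32, 32)))

# The same sprite with the reference point moved to the upper left corner.
print(sprite_top_left(cursor, (0, 0)))
```

Repeating this placement every frame with the latest mouse co-ordinates is what makes the sprite behave like a cursor. Moving the reference point changes which part of the image acts as the pointer’s ‘tip’.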

Some multimedia programming languages allow for ‘collision detection’ algorithms which determine when a sprite overlaps, encloses or is enclosed by another. Using these it is possible to create movable objects that are dragged around and affect the movements and behaviours of other objects. A good example of this is an image puzzle where fragments of an image have to be dragged into place to complete the image. Using movable objects and collision detection to determine when an object overlies another, this is relatively straightforward.
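A simple form of collision detection works on bounding rectangles alone. The sketch below assumes rectangles written as (left, top, right, bottom) in screen co-ordinates and uses invented function names; authoring packages provide their own tests, which may differ in detail.

```python
def overlaps(a, b):
    """True if the two rectangles share any area."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def encloses(outer, inner):
    """True if inner lies wholly within outer."""
    return (outer[0] <= inner[0] and outer[1] <= inner[1]
            and outer[2] >= inner[2] and outer[3] >= inner[3])


# Image puzzle: a target slot and a piece being dragged towards it.
slot = (100, 100, 200, 200)
piece_far = (300, 300, 380, 380)
piece_near = (150, 150, 230, 230)
piece_home = (110, 110, 190, 190)

print(overlaps(slot, piece_far))    # no contact yet
print(overlaps(slot, piece_near))   # partly over the slot
print(encloses(slot, piece_home))   # wholly inside: could snap into place
```

In a puzzle the overlap test might trigger a highlight on the slot, while the enclosure test might be used to decide that the piece has been placed.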

Having movable objects (animateable things) on screen allows the user to feel empowered. The user’s experience is that their effect on the world of the artefact is not only what happens when they click on a button but has greater depth and consequence. Moving things around, altering the visual display permanently or for the moment, engages them at a deeper level, deepens the feeling of agency, and involves them in the world of the artefact more intensely.

ANIMATED WORLDS

There is a form of animation that is very different to any we have discussed so far. It is a truly animated medium, and potentially the most pure form of animation because it ‘brings to life’ not a representation of something contained in a frame of some sort, but a complete world which has no frames because the user is inside that world. What we are discussing here is ‘Virtual Reality’.

Virtual Reality exists as a number of technologies of varying complexity, expense and accessibility. The forms to be discussed in detail, here, are ones that are readily accessible. They do not involve the rare, expensive and complex technologies employed for a work such as Char Davies’ Osmose where the user enters a truly immersive Virtual Reality (for more information about this important VR artwork see http://www.immersence.com/osmose.htm). Such works involve Head Mounted Display units, elaborate 6-axis tracking and navigation devices and immense computer processing power. VR, of the kind we are concerned with, works within the display conditions of ordinary computer workstations or games consoles, using the mouse/keyboard or controlpad for navigation.

Importantly, the kinds of VR discussed are accessible in terms of making things because they can be realized on ordinary desktop computers. The first is the panoramic image typified by Apple’s QTVR (QuickTime Virtual Reality) and similar products. The second is the volumetric 3D virtual reality typified by games such as Tomb Raider and Quake. These two forms also exemplify the two dominant approaches to computer graphics – the first is inherently bitmap, the second essentially vector driven.

QTVR is a bitmap medium. It uses an image with a high horizontal resolution to form continuous 360-degree images. When viewed in a smaller framing window the image can be rotated from left to right and with some limited tilt. An anamorphic process, inherent in the software, manipulates the image in such a way it appears ‘correct’ in the viewing window.

Figs 4.33 and 4.34 Panoramic images, used in QTVR or similar applications, are stitched together or rendered as 360-degree sweeps with anamorphic distortion to compensate for the ‘bend’ in the image. This distortion is visible along the lower edge of fig. 4.33. The display area is smaller (fig. 4.34).

Fig. 4.35 The user’s experience of a panoramic image is as if they stand at the centre of the scene and spin on the spot.

The visible ‘window’ onto the scene can be thought of as a ‘field of view’ – and it remains ‘cinematic’ in its framing rectangle.

Limited zoom in and out is possible, but because the image is inherently a bitmap, zooming in is essentially a magnification of the existing pixels, which leads to an unrealistic, pixellated image.
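The effect of magnifying pixels can be sketched in a few lines. This is a minimal illustration only, assuming the simplest possible zoom (each pixel is repeated, with no smoothing); it is not a description of how QTVR itself resamples the image. The function name `magnify` and the tiny sample image are hypothetical.

```python
def magnify(pixels, factor):
    """Enlarge a bitmap by repeating every pixel `factor` times
    horizontally and vertically (pixel replication, no smoothing).

    `pixels` is a list of rows, each row a list of pixel values.
    """
    return [
        [value for value in row for _ in range(factor)]
        for row in pixels
        for _ in range(factor)
    ]


# A 2 x 2 image zoomed by 2 becomes a 4 x 4 image of larger 'blocks'.
small = [[1, 2],
         [3, 4]]
zoomed = magnify(small, 2)
for row in zoomed:
    print(row)
```

No new detail is created by the zoom: the enlarged image holds exactly the same information as the original, which is why it looks blocky.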

QTVR is generally used as a photographic-type medium. A series of photographs of a scene are taken and these are ‘stitched’ together into the panoramic format by software which compensates for overlapping areas and differential lighting conditions. Specialist photographic hardware (calibrated tripod heads and motorized assemblies) supports professional practice, but good results can be obtained by using an ordinary hand-held camera if thought is given to planning. Apart from Apple’s QTVR format and software there are several applications for the Windows platform.

Once the panoramic image set has been created the file can be viewed. When the user moves the cursor to the left edge the image pans in that direction; moving to the right edge causes the pan to reverse. Key presses typically allow the user to zoom in and zoom out within the limits of the image. Of course, as it is a bitmap medium, the act of zooming in can result in a degraded, pixellated image. Some limited tilt up and down is available, accessed by moving the cursor to the top and bottom of the image respectively. This navigation puts the user at the centre of the scene, able to rotate and with some limited movement in and out of the image field.
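The idea of a viewing window that pans around a continuous 360-degree image can be sketched as follows. This is a simplified illustration, not Apple’s implementation: it assumes the panorama is a flat strip whose full width represents 360 degrees, and it ignores tilt, zoom and the anamorphic correction. The function and parameter names are hypothetical.

```python
def visible_columns(pan_degrees, fov_degrees, panorama_width):
    """Return the panorama pixel columns shown in the viewing window.

    The panorama is treated as a strip covering 360 degrees, so the
    column numbers wrap around from the right edge back to the left.
    """
    pixels_per_degree = panorama_width / 360.0
    window_width = round(fov_degrees * pixels_per_degree)
    centre = round((pan_degrees % 360.0) * pixels_per_degree)
    start = centre - window_width // 2
    # The modulo makes the strip behave like a closed loop.
    return [(start + i) % panorama_width for i in range(window_width)]


# A 3600-pixel-wide panorama viewed through a 90-degree window.
columns = visible_columns(pan_degrees=0, fov_degrees=90, panorama_width=3600)
print(len(columns), columns[0], columns[-1])
```

Because the columns wrap around, the user can keep panning in one direction indefinitely and never reach an edge, which is what gives the sense of spinning on the spot.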

The photographic quality of the images and their responsiveness creates a genuine ‘feel of presence’ for the user and this is exploited in the usual kind of subject matter – landscapes, hotel rooms, archaeological excavations, great sights and places of interest. Panoramic worlds belong in the tradition of spectacle and curiosity like the dioramas and stereoscopic slide viewing devices of the Victorians.

Panoramic images are remarkably easy to use and to work with. Typing ‘QTVR’ into a web search engine will produce many examples.

QTVR itself includes tools to create ‘hotspots’, and to link panoramas together to create walkthroughs from a sequence of panoramic images. The richness of this experience, when compared to a slideshow sequence of consecutive images, comes when you stop to look around and choose to investigate a small detail.

Hugo Glendenning has worked with dance and theatre groups to create staged tableaux photographed as panoramas and constructed as interlinked nodes which can be explored to discover an underlying story. The imagery is of rooms and city streets, and its strangely compelling air of fragile disturbance and mystery is carried effectively through the panning, zooming navigation of images in which we seek narratives of meaning (see http://www.moose.co.uk/userfiles/hugog/ for more about this artist’s work).

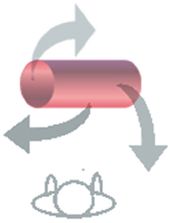

Fig. 4.36 QTVR objects place the user in the opposite viewing position to that in panoramas. Here the viewer is outside the object which is tumbled in space by their actions.

QTVR objects reverse the relationship between viewer and viewed found in panoramas. Where in panoramas the user sits or stands at the centre and looks out at the world as they rotate, with objects the viewer looks into the space the object occupies. The user’s actions control the spin and tumble of the object. This mode of animated imagery is a powerful tool for communicating the design features of small objects such as hairdryers, table lamps and chairs. There comes a point, though, where there is an increasing discrepancy between the supposed mass of the object and the ease with which its orientation is manipulated. After that point the believability of the object reduces and we perceive not the object but a representation, a toy. This of course returns us to an important feature of all animation – the ‘believability’ of the object as contained in its behaviour when moving. QTVR files can be viewed on any computer with a recent version of Apple’s QuickTime player software. They are easily embedded in web pages. Other similar applications may require Java or a proprietary plug-in to make the panorama navigable.

Believability is at the very centre of 3D navigable worlds. These worlds are generated on the fly in response to the user’s actions and the visual image quality of these worlds is defined by the need to keep shapes, forms and textures simple enough to enable real time rendering. These are the virtual realities of Quake, Super Mario World and Tomb Raider. The narrative of these animations is a free-form discovery where the world exists without fixed choices or paths and the user can go anywhere they wish and, within the rules of the world, do whatever they want. These worlds are animated by the user’s actions – if the user stands still in the world nothing changes. If, and as, they move, the world comes alive in the changing point of view the user experiences and in their (virtual) movement through the world.

At the heart of these generated animations is the engine which tracks where the user is in the virtual world and the direction they are looking and, drawing on the world model, renders as an image what they can see. To make the world believable the frame rate must be above 12 f.p.s., and preferably closer to 24 f.p.s., and there cannot be any noticeable ‘lag’ between the user’s actions and the display changing. Because the calculations involved are complex and computer intensive the virtual 3-dimensional models used are often simplified, low polygon forms. Bitmap textures applied to the surface provide surface detail and an acceptable level of realism much of the time.
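The core calculation such an engine repeats for every frame can be sketched as below. This is a minimal illustration under stated assumptions, not the method of any particular game engine: the avatar can only turn about the vertical axis, a heading of 0 degrees means looking along the positive z axis, and a simple perspective projection is used. All function and parameter names are hypothetical. The second function shows the time available per frame at the frame rates mentioned above.

```python
import math


def project_point(point, avatar_pos, heading_degrees, fov_degrees,
                  screen_w, screen_h):
    """Return the (x, y) screen position of a world point, or None if
    the point lies behind the viewer.

    `point` and `avatar_pos` are (x, y, z) tuples with y as height.
    """
    # Express the point relative to the avatar's position.
    dx = point[0] - avatar_pos[0]
    dy = point[1] - avatar_pos[1]
    dz = point[2] - avatar_pos[2]
    # Rotate about the vertical axis so the view direction becomes +z.
    h = math.radians(heading_degrees)
    cam_x = dx * math.cos(h) - dz * math.sin(h)
    cam_z = dx * math.sin(h) + dz * math.cos(h)
    if cam_z <= 0:
        return None  # behind the viewer, so not drawn
    # The horizontal field of view fixes how strongly distance shrinks things.
    focal = (screen_w / 2) / math.tan(math.radians(fov_degrees) / 2)
    screen_x = screen_w / 2 + focal * cam_x / cam_z
    screen_y = screen_h / 2 - focal * dy / cam_z
    return screen_x, screen_y


def frame_budget_ms(frames_per_second):
    """Milliseconds available to compute and draw one frame."""
    return 1000.0 / frames_per_second


# A point 10 units straight ahead lands in the centre of a 640 x 480 screen.
print(project_point((0, 0, 10), (0, 0, 0), 0, 90, 640, 480))
print(frame_budget_ms(12), frame_budget_ms(24))
```

Every visible point of every object has to pass through a calculation of this kind within the frame budget, which is why low polygon models are favoured: fewer points mean less work per frame.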

Making navigable worlds is not difficult so long as you can use 3D modeling software. Many packages offer an export as VRML (Virtual Reality Modeling Language) filter that saves the datafile in the .wrl file format. Any bitmap images used as textures need to be saved as .jpg files in the same directory. The world can then be viewed using a web browser VRML viewer plug-in like Cosmo Player. 3D Studio Max will export as VRML objects which you have animated in the working file so that your VRML .wrl can have flying birds, working fountains, opening doors, all moving independently and continuously in the virtual world.
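A .wrl file is plain text, so a very small world can also be written by hand or generated by a script. The sketch below builds the text of a single textured box in VRML 2.0 (VRML97) syntax. It is an illustration only and is far simpler than what a 3D package would export; the function name and the texture file name `stone.jpg` are hypothetical.

```python
def vrml_textured_box(texture_file, size=(2.0, 2.0, 2.0)):
    """Return the text of a minimal VRML97 world: one textured box.

    `texture_file` is the name of a .jpg saved in the same directory
    as the .wrl file.
    """
    sx, sy, sz = size
    lines = [
        "#VRML V2.0 utf8",  # header line expected at the top of the file
        "Shape {",
        "  appearance Appearance {",
        f'    texture ImageTexture {{ url "{texture_file}" }}',
        "  }",
        f"  geometry Box {{ size {sx} {sy} {sz} }}",
        "}",
        "",
    ]
    return "\n".join(lines)


world = vrml_textured_box("stone.jpg")
print(world)
# To try it in a VRML viewer, save the text next to the texture file:
# with open("box.wrl", "w") as f:
#     f.write(world)
```

The bitmap texture is referred to by file name only, which is why the .jpg has to sit in the same directory as the .wrl file.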

Fig 4.37 In a navigable 3D world the screen image (far side) is calculated from information about where the user’s avatar (in-world presence) is placed, the direction they are looking in, their field of view, and the properties of the objects they look at (near side).

This immersive view of the world is readily convincing and disbelief is willingly suspended. Both aspects of the experience are extended by the strong sense of agency and presence that characterizes the ‘free-form’ exploration of these worlds.

3D navigable worlds of these kinds are used extensively for training simulators and for games. They can be seen to be the ultimate animation, the ultimate ‘coming alive’, as they create a world the viewer enters, a reality the viewer takes part in and which is no longer framed as separate by the darkened cinema auditorium or the domestic monitor/TV.

These immersive animations are a long way from early hand-drawn animated films. They mark animation as a medium that has, technologically and in terms of narrative content, matured well beyond the filmic tradition of cinema and television which nurtured it through the twentieth century.

CONCLUSION

In this chapter we have discussed the contribution that animation makes to our experience of multimedia and new media. We noted that in contemporary computer systems and applications animation is an ordinary part of the user’s experiences – in things like image-flipping icons, rollovers, buttons, animated and context sensitive cursors that give feedback to the user.

We considered, also, the kinds of ‘object’ that are found in multimedia artefacts, noting that there are similarities with traditional filmic forms of animation and important differences. In the main those differences rest in ‘responsive’ objects which react to and can be controlled by the user’s actions. This makes the overall narrative of the experience, and of the artefact, modifiable. The responsiveness of objects culminates in the ‘navigable worlds’ of Virtual Realities which are a special form of animation in that we, the user, sit inside the world they represent rather than viewing it at one remove.

We looked at important underlying ideas and processes involved in the forms of animation used in multimedia and the Web. We considered some of the factors that affect delivery, display and responsiveness. And we looked at the concepts of the timeline and keyframe as ways of thinking about, organizing and realizing multimedia artefacts. Those ideas underpin the sprite-based and vector-based authoring applications which are used to create multimedia and new media content in areas as diverse as educational materials, artworks, advertising, and online encyclopaedias.

Animation in multimedia and new media has an exciting future. Indeed it can be argued that it is with the development of powerful and readily accessible computers that animation has found a new cultural role. Not only have these technologies provided tools for creative invention, they also bring new opportunities for that creativity, new forms of distribution and new sites for its display in the form of interactive artworks, computer games, websites, and a hundred thousand things we have not dreamt of. Yet.