CHAPTER 5

Privacy and Security in Healthcare

This chapter covers Domain 5, “Privacy and Security in Healthcare,” of the HCISPP certification. After you read and study this chapter, you should be able to:

• Identify key information security objectives and attributes

• Understand common information security definitions and concepts

• Know fundamental privacy terms and principles used in information protection

• Comprehend the interdependence of privacy and security in healthcare organizations

• Categorize sensitive health information according to US and international guidelines

• Define privacy and security terms as they apply to healthcare

• Distinguish methods for reducing or mitigating the sensitivity of healthcare information

The importance of understanding and applying proper privacy and security controls on healthcare information is foundational to your success as a healthcare information privacy and security professional. The healthcare industry is highly regulated in the United States as is the protection of personal data in most other countries. As we move from our discussion of the regulatory environments that impact our healthcare organizations, we will examine specific information security and privacy definitions and concepts that regulations and ultimately our policies and procedures are built upon. In this chapter, you will learn to understand security objectives and attributes, including the principles of confidentiality, integrity, and availability. You’ll also learn about accountability, which is often a major part of the discussion of security as it relates to healthcare organizations. You will also learn general security definitions as they apply to healthcare.

As with most healthcare organizations, your organization likely faces a multitude of challenges, not the least of which is the need to apply a reasonable standard of due care and due diligence to safeguard the confidentiality, integrity, and availability of patient healthcare information. Whether the intent is to improve patient care, to protect the sensitive information we need to serve our customers, or to ensure compliance with regulatory requirements, the challenges are significant.

This chapter addresses security- and privacy-related topics together. This is in part because it also includes an overview of the relationship between information security and privacy. Privacy involves controlling access to personal information and the control a person can have over information discovery and sharing. Security is the set of administrative, technical, and physical mechanisms that protect information from unauthorized access, alteration, and loss. In short, privacy is about what we protect, and security is about how we protect it.1

Privacy and security are important to everyone involved in healthcare, including health facility employees, patients, family members, and caregivers. They also matter to anyone who works for organizations that play peripheral roles in patient care, such as workers at a clearinghouse for healthcare information who never provide patient healthcare directly. These workers also have obligations to ensure that the privacy and security of patient data are maintained in accordance with their employers’ policies and applicable regulations.

Guiding Principles of Information Security: Confidentiality, Integrity, and Availability

Data security has three guiding principles: confidentiality, integrity, and availability (CIA).2 In general, it does not matter where you work, where you live, or what organizations you support; these principles remain the same. In addition to understanding CIA, you need to understand the importance of accountability, another central concept akin to CIA.

The CIA model is driven by the implementation of a combination of technical, administrative, and physical information protection controls. You should understand the relationship between CIA components in general and within your organization. Depending on a variety of concerns, including your role in the organization, the organization’s mission and size, applicable regulatory authorities, and sensitivity of information, one component may be emphasized over the others. For example, as a system administrator, providing integrity and availability may be more appropriate to your job description than providing confidentiality.

The prevailing illustration used for the CIA triad is an equilateral triangle that indicates the “weight” of each component as being equal to the others. The reality of these relationships, however, depends on situational factors. This is an important concept, because the emphasis placed on these three factors represents the assessment and balance of choices for security and privacy tools within your organization. So, for example, in your organization, confidentiality may be considered more of a priority than the other two factors, which means you’ll have increased focus on access controls and encryption. Or if data availability takes precedence, you may invest more in technical solutions for disaster recovery.

Figure 5-1 shows a comparison of the CIA triad in two scenarios. In the solid line triangle, each component is weighted equally. The triangle comprising dotted lines depicts an emphasis on confidentiality in implementing security and privacy controls.

Figure 5-1 The CIA triad is often depicted as a triangle that implies the relationship of the three components.

Confidentiality

Confidentiality relates to protecting sensitive proprietary information or personally identifiable information (PII) from unauthorized disclosure. The objective is to control access to a limited amount of data so that only those with proper authorization are allowed to access it. Security controls are implemented to protect confidentiality and avoid unauthorized disclosure. In healthcare, a breach in confidentiality could include an employee calling a media outlet to “anonymously” inform them that a public figure has been admitted to a rehabilitation facility. A similar example of a confidentiality breach, though beyond the scope of healthcare, also occurs when a fan magazine publishes photos of famous people enjoying personal time at a beach or other locale.

Although confidentiality is an important aspect in protecting information in any organization, healthcare information, in particular, warrants a higher level of protection and enforcement—consider, for example, an unauthorized disclosure that an individual has HIV, a psychiatric disorder, or another health concern.

Healthcare data confidentiality requirements are recognized internationally. For example, in the United States, the National Institute of Standards and Technology (NIST) has issued directives regarding CIA for healthcare information, and within the scope of confidentiality it states the following in a 2008 publication:

The confidentiality impact level is the effect of unauthorized disclosure of health care delivery services on the ability of responsible agencies to provide and support the delivery of health care to its beneficiaries will have only a limited adverse effect on agency operations, assets, or individuals. Special Factors Affecting Confidentiality Impact Determination: Some information associated with health care involves confidential patient information subject to the Privacy Act and to HIPAA [Health Insurance Portability and Accountability Act]. The Privacy Act Information provisional impact levels are documented in the Personal Identity and Authentication information type. Other information (e.g., information proprietary to hospitals, pharmaceutical companies, insurers, and care givers) must be protected under rules governing proprietary information and procurement management. In some cases, unauthorized disclosure of this information such as privacy-protected medical records can have serious consequences for agency operations. In such cases, the confidentiality impact level may be moderate.3

In Canada, the Canadian Privacy Act, Section 63, states the following:

Subject to this Act, the Privacy Commissioner and every person acting on behalf or under the direction of the Commissioner shall not disclose any information that comes to their knowledge in the performance of their duties and functions under this Act.4

In healthcare, confidentiality is not only important to protect individuals from medical and financial identity theft, but research shows that a breach of confidentiality can impact patient care. In some cases, where breaches are all too common, some patients are worried their private information will fall into the wrong hands.5 As mentioned in Chapter 4, providers understand that patients who fear their information might be disclosed in an unauthorized manner may delay seeking care or withhold information.

Specific circumstances require additional confidentiality considerations. For example, patient care information related to HIV, behavioral health, substance abuse, and children’s health often has even more restrictive confidentiality requirements than other types of information.

Whether in the United States under HIPAA or in the European Union under the General Data Protection Regulation (GDPR), confidentiality requirements often continue even after an employee’s role has changed or after an employee leaves a position or an organization. Most healthcare regulatory requirements clearly state that even when an individual no longer has access to the information, he or she is still required to keep the information confidential. For example, in the European Union, the Data Protection Directive, Article 28, Section 7, states the following:

Member States shall provide that the members and staff of the supervisory authority, even after their employment has ended, are to be subject to a duty of professional secrecy with regard to confidential information to which they have access.6

By law, data collectors are responsible, in the United States and in most other nations, for maintaining the confidentiality of the information forever, even if the patient discloses the information. Of course, the patient can give consent for specific disclosures, but generally, regulations do not permit the healthcare organization to disclose the information outside of legal allowances. Further, the organization must protect the information from disclosure until it can legally and properly destroy it.

Integrity

Integrity is a security objective intended to protect information from unauthorized editing, alteration, or amendment. Imagine a scenario in which malicious code (such as a software virus) is introduced into a medical device through a compromised software application. If, for example, that medical device is responsible for dosing medications to a patient, and the virus causes all decimal points to move one place to the right, the resulting tenfold dosage change can be significant, and potentially life-threatening, to the patient (for example, a delivered dose of 0.5 ml may have a disastrous effect if only 0.05 ml is indicated). The integrity of healthcare data is important for patient safety and for many other reasons.

Data integrity is achieved by protecting the accuracy, quality, and completeness of the information. Integrity of information is maintained by assuring that any changes made to data are authorized and correct or not made at all. Security controls for integrity follow the data flow through the lifecycle of the information. When you examine the process of data collection and use in a healthcare setting, the data often changes format, and various data elements are combined, parsed out, or even aggregated. Throughout this process, however, the integrity of the data must remain intact. A patient name or date of birth, for instance, must remain the same even if it is collected as Jane Doe, December 14, 1970, at admissions and then changed to Doe, Jane, 12/14/70, after it gets transcribed into the billing system. Although this is an exceedingly simplified example, maintaining data integrity across data flow is one reason for the existence of standards such as ICD-10, which the US Centers for Disease Control and Prevention (CDC) maintains in its US clinical modification (ICD-10-CM) for coding patient encounters, and Health Level Seven (HL7), a set of international standards for transmitting health information across organizations and systems. Using standard data sets and transaction codes helps to assure data integrity.
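The Jane Doe example above can be sketched in code. The following is a minimal illustration, assuming only the two name layouts and two date layouts mentioned in the example; the function names are hypothetical and not part of any standard. Each system's representation is normalized to one canonical form before the values are compared.

```python
from datetime import date, datetime


def normalize_name(raw: str) -> tuple[str, str]:
    """Return (family, given) in upper case from 'Given Family' or 'Family, Given'."""
    raw = raw.strip()
    if "," in raw:
        family, given = [part.strip() for part in raw.split(",", 1)]
    else:
        given, family = raw.rsplit(" ", 1)
    return family.upper(), given.strip().upper()


def normalize_dob(raw: str) -> date:
    """Parse either date layout from the example into a single date value."""
    # Caution: with a two-digit year, Python's %y maps 69-99 to 1969-1999 and
    # 00-68 to 2000-2068, so '12/14/70' is read as 1970. That ambiguity is
    # itself an integrity risk in real records.
    for layout in ("%B %d, %Y", "%m/%d/%y"):
        try:
            return datetime.strptime(raw.strip(), layout).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {raw!r}")


def same_patient(name_a: str, dob_a: str, name_b: str, dob_b: str) -> bool:
    """True when both representations describe the same name and birth date."""
    return (normalize_name(name_a) == normalize_name(name_b)
            and normalize_dob(dob_a) == normalize_dob(dob_b))
```

Calling `same_patient("Jane Doe", "December 14, 1970", "Doe, Jane", "12/14/70")` compares the admissions and billing forms of the record. A production system would rely on a standard such as HL7 rather than ad hoc parsing like this.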

To accomplish integrity, several methods are used, including error checking and validation procedures. The following list includes some generic data integrity approaches that apply, some sample technical methods, and the security improvements that are addressed. The list is adapted from data integrity guidance from the NIST SP 1800-11 (Draft), Data Integrity: Recovering from Ransomware and Other Destructive Events:7

• Corruption testing This procedure includes the use of extract-transform-load (ETL) data testing applications, reliable backups, and TCP/IP checksum testing to examine unauthorized changes. The process includes logging and auditing for a retrospective review of data. The testing uses file hashing and encryption algorithms to identify cybersecurity events and data alteration.

• Secure storage This process includes encrypted backups and immutable (unchangeable) storage solutions with write-once, read-many (WORM) properties. Technical processes, such as redundant storage—namely RAID—are storage configuration solutions that satisfy secure storage and data integrity protection.

• Logging A significant component of data integrity is to collect and enable the review of access to data and user activity. In this case, logging is used in alerting and analysis to discover any unusual or unauthorized activity and in legal discovery and e-forensics. Logs can be generated from individual systems. Several analysis tools can be used, such as security information and event management (SIEM) applications and network data capture systems.

• Backup capability Data integrity is preserved through procedures that enable data to be replicated and recovered periodically. Related to secure storage, backup tools support full, incremental, and differential schedules for backup. Another approach to backups is mirroring, which is similar to a full backup except that an exact copy of the data is stored separately, matching the source. Other backup procedures store files in one encrypted storage repository.
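The file hashing mentioned under corruption testing can be illustrated with a short sketch. It assumes a simple workflow that the list above does not spell out: record a SHA-256 digest for each file while it is known to be good, then recompute the digests later and report any file whose digest changed or that has gone missing. The function names are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large files do not have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_baseline(paths) -> dict[str, str]:
    """Record the known-good digest of each file."""
    return {str(p): sha256_of(Path(p)) for p in paths}


def find_altered(baseline: dict[str, str]) -> list[str]:
    """Return the files that are missing or whose content no longer matches."""
    altered = []
    for name, expected in baseline.items():
        path = Path(name)
        if not path.is_file() or sha256_of(path) != expected:
            altered.append(name)
    return sorted(altered)
```

A hash comparison only detects a change; it does not say who made it or whether it was authorized, which is why the list pairs it with logging and backups. The baseline itself must also be stored where an attacker cannot rewrite it.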

In healthcare, information integrity has a strong association with patient care and patient safety. While unauthorized disclosure (confidentiality) may lead to an unauthorized individual having access to healthcare data, and while the unavailability of data may hinder care, the fact remains that if the data we have on a patient is not accurate, it can in fact lead to death. For example, an unconscious patient who is allergic to a specific medication cannot warn staff about the allergy during treatment. In such a case, the availability of accurate data can save the patient’s life.

Availability

Information is valuable only if it is accessible and timely. The data can be accurate and kept private, but if it is not available when it is needed, this third part of the CIA triad has failed. Availability of data is generally described as proper access at the time the information is needed. In healthcare, we can readily understand the harm of failing to protect PII or to keep patient records accurate. But experiencing network downtime with no contingency operations plan in place would mean the information is not available at the point of care. If the provider does not have the ability to access the information he or she needs, patient care is affected and patient safety risks increase.

Paper-based health records and manual procedures can exacerbate the availability issue, because they may not be as easily accessed or enacted as digitally stored information. Not having availability of information can result in improper diagnosis, inefficient or redundant tests, and in some cases adverse drug-to-drug interactions. A major assurance of availability from an information security and privacy perspective is reached through implementation of business continuity and disaster recovery procedures. These focus areas require the use of administrative, technical, and physical controls to oversee high-availability system architectures, reliable backups, secondary operating locations, and practiced recovery procedures.

Availability also relates to having only the necessary information available. Having too much information available or having unorganized raw data can pose a security issue. Privacy and security frameworks such as the DPD, GDPR, and HIPAA, for instance, address the issue of having relevant information versus having more information than is needed. Consider an example: A provider who requires a relevant prior MRI image when treating an orthopedic injury must certainly have the most recent MRI on the affected body part to compare against the latest image. However, that provider would be overwhelmed by having to search through all the images on record for unrelated care of that patient. If nothing else, the search would be time consuming and wasteful.

In addition, by limiting availability, we can prevent unauthorized disclosures or data breaches simply by not sharing unused or extra data in the transaction. For illustration, consider an example from the past. There was a time in the United States when credit card numbers were printed in their entirety on receipts. This was useful for identification purposes and convenient for the payer when proving a purchase or seeking a refund. But eventually the practice ceased, because proof of purchase could be determined in more discreet ways, such as using only the last four to six digits on the card with the other digits masked. The practice of including the entire credit card number introduced too much risk of data loss and identity theft. This is a good example of the security impact of unnecessarily disclosing too much information.
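The receipt example can be sketched as a small masking function. This is an illustration only; the function name and the choice of `*` as the masking character are assumptions, and the card number used in the usage note is a widely published test value, not a real account.

```python
def mask_card_number(card_number: str, visible: int = 4) -> str:
    """Replace all but the last `visible` digits with asterisks."""
    # Ignore spaces or dashes so different input layouts mask the same way.
    digits = [ch for ch in card_number if ch.isdigit()]
    if visible < 0 or len(digits) <= visible:
        raise ValueError("need more digits than the number left visible")
    hidden = len(digits) - visible
    return "*" * hidden + "".join(digits[hidden:])
```

For example, `mask_card_number("4111 1111 1111 1111")` keeps only the trailing `1111` readable. The same idea applies to health data: a record shared for billing rarely needs every identifier that the clinical system holds.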

Accountability

While generally not considered part of the CIA principles, accountability is often included as a high-level security principle. Within the healthcare environment, compliance standards often treat accountability as a basic principle. Accountability in information security and privacy refers to the determination of who is responsible for proper and improper information access and use. For example, a clinician treating a patient who enters data, thereby editing the patient record, is accountable for the actions they take to enter the information. The clinician should be responsible for ensuring that the information is accurate when it is entered. Accountability intersects with the CIA principles. The individual with access should ensure the integrity of the data entered while relying on the availability of the system and preserving confidentiality, so that the information is available only to those with proper authorization.

An organization must also demonstrate accountability for the information it collects and uses. Data use actions must be logged and audited to various degrees to prove that measures of accountability are in place. Accountability incorporates tracking actions and identification of responsible parties in retrospect after cybersecurity incidents and data breach recovery. Auditing information disclosure reports enables us to view and remediate any disclosures that may have been unauthorized, or at least to prove to government regulators that disclosures are tracked as required. Nonrepudiation also applies to accountability. By providing protections such as digital signatures and encryption algorithms, an organization can ensure that the sender of an electronic message cannot deny sending it and that the receiver cannot deny receiving it. In this way, nonrepudiation assists organizations by providing ways to prove accountability.
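One way to make logged data use actions harder to alter after the fact is to chain each audit entry to the one before it with a hash. The sketch below is an illustrative technique rather than something this chapter or any regulation prescribes, and its field names are hypothetical. It provides tamper evidence only; nonrepudiation as described above additionally needs digital signatures tied to each user's private key.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: dict) -> str:
    """Hash the event together with the previous entry's hash."""
    payload = json.dumps(event, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(log: list, user: str, action: str, record_id: str, when: str) -> None:
    """Add an audit entry that records who did what to which record, and when."""
    event = {"user": user, "action": action, "record_id": record_id, "when": when}
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash,
                "hash": _entry_hash(prev_hash, event)})


def verify_log(log: list) -> bool:
    """Recompute the chain; any edited, removed, or reordered entry breaks it."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

An auditor who holds a trusted copy of the latest hash can rerun `verify_log` to confirm that earlier entries were not changed silently.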

Understanding Security Concepts

The concepts that shape information security can seem abstract and complex. To help you understand the approaches and practices of information security, this section describes some basic approaches and methods to security that you should be familiar with. These concepts are central to information security practices and compliance, and many progressive security processes are based on them.

You should be familiar with three basic aspects of security that will help you better understand the information that follows in this and other chapters: security controls, defense-in-depth, and security categorization. Understanding these important concepts may also improve your ability to do a good job in supporting and providing security and privacy in your organization.

Security Controls Security controls include management controls (often administrative, such as policies or procedures), operational controls (the processes we follow to do things), and technical controls (hardware and software implementations to assist in securing computer-based resources). The organization’s cost considerations and interrelationships between security controls have a great deal of influence on the ability of the organization to deliver on its mission.

Defense-in-Depth Defense-in-depth consists of implementation of various defensive controls working together for your systems or applications to protect the overall security of organizational assets. In the IT world, examples of defense-in-depth include the integrated use of antivirus and antimalware software, firewalls, encryption, intrusion detection and prevention systems, and biometric authentication. An example of defense-in-depth in your home is an alarm system for the house that includes smoke detectors; carbon monoxide detectors, which may or may not be separate from the smoke detectors; cameras that you can check remotely over the Internet; and smartphone apps that enable you to control lighting, entry doors, and so on. Figure 5-2 demonstrates the defense-in-depth principle, and although it may not depict a system used in larger organizations, it provides a basic understanding of how the layers of the system must rely on one another to be effective.

Figure 5-2 Simplified defense-in-depth

Security Categorization Security categorization enables you to determine the level of security required for a system based on the information (or data) type the system uses or maintains. This book specifically addresses working with healthcare information, which includes sensitive information such as protected health information (PHI) and electronic protected health information (ePHI), personal health records (PHRs), personally identifiable information (PII), and a number of other terms, depending on where you work (which can include the specific nation, continent, province, or state).

In determining the security categorization of a system, an application, or an organization overall, you must first identify, or categorize, the type of information or data involved. You then review the security controls required, including those you have implemented and those that should be implemented (referred to as “planned”). Organizations must determine the category, or categories, of information stored or used in their system, such as the healthcare information categories discussed in NIST SP 800-60, Vol. 2 Rev. 1, Section D.14.4. A similar system is shown in Figure 5-3. This method for security categorization can be adapted to fit any organization.

Figure 5-3 Categorizing information for security

Note that one system may include multiple types of data—for example, your health information system may also store insurance data, billing information, employee records, and so on, which may be categorized differently than PHI and PII. To categorize a variety of data, based on the information type, the organization sets a “provisional impact level.” Here’s an example of how a healthcare security categorization system process might work:

If Confidentiality = Medium, Integrity = High, and Availability = Medium, the overall security impact may be considered High if data integrity carries the most weight. An example in which data integrity might be weighted higher than the other information protection categorizations might involve a medical device like the linear accelerator used in precision radiology treatment of cancer. The data that informs the actions of the device must be protected from tampering, arguably against all other considerations so the patient does not get more or less radiation and the treatment is in the exact right area. This initial categorization can be accomplished by an individual with knowledge of the data system. However, the next step should be done by representatives that serve different functions within the organization, such as IT, biomedical engineering, administration, patient care services, and so on.
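The example above can be expressed as a short calculation. The sketch assumes the high-water-mark rule described in NIST FIPS 199 and FIPS 200, under which the highest of the three ratings becomes the overall level; that rule produces the same result as the example. NIST labels the middle level "Moderate"; the labels below follow this chapter's wording, and the function name is hypothetical.

```python
# Rank the labels so they can be compared; wording follows the chapter's example.
LEVELS = {"Low": 1, "Medium": 2, "High": 3}


def overall_impact(confidentiality: str, integrity: str, availability: str) -> str:
    """Return the highest of the three ratings (the high-water mark)."""
    ratings = (confidentiality, integrity, availability)
    return max(ratings, key=lambda rating: LEVELS[rating])
```

With the values from the example, `overall_impact("Medium", "High", "Medium")` yields the provisional level that the review team then uses to select controls.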

The next part of the process involves two phases: the first phase involves reviewing the controls based on the provisional impact of High. In the second phase, the organization adjusts the controls based on its organization and systems. The organization also determines which controls are already in place (such as access control policies) and which ones are planned (such as training for system administrators).

In the final part, the organization finalizes the security categorization. Outside that process, the organization goes through the security controls and implements and documents how and if each control meets the criteria established.

Although this process may appear cumbersome, it is necessary to ensure that the organization adheres to security standards and can prove it to patients, government agencies, and other stakeholders. Healthcare organizations must develop strategies for protecting sensitive data. Security categorization supports developing processes, procedures, and policies as part of the organization’s strategic vision. Categorization leads to effective implementation of established security standards. The result is useful documentation of the organization’s security approach that guides the implementation of security controls in a tiered fashion, based on the systems operated and the data protected.

Identity and Access Management

An organization’s identity and access management (IAM) strategy defines and manages the roles and access privileges of network users and the circumstances in which users are granted or denied access privileges. NIST guidelines describe IAM as a set of critical cybersecurity controls that function together to ensure the right people and things have the right access to the right resources at the right time.8 A successful healthcare IAM strategy must incorporate information use requirements with a complex technical environment and progressively demanding compliance requirements.

Although many technologies are available to help an organization create a general IAM strategy, each organization’s IAM strategy must align with its particular requirements, especially with regard to accessing PHI, PHRs, and PII. Following are a few examples of IAM:

• Password-management tools These can be used to create complex passwords on request, and they provide a secure, encrypted repository for current passwords for retrieval as needed.

• Privileged account management systems For users and accounts with elevated levels of permissions, or for administrative accounts, these systems provide management assistance, often with automation, and an auditable record of account use.

• Provisioning (and deprovisioning) software As employees are hired, this software maps permissions and access to various resources based on users’ roles or organizational policy (provisioning). User permissions and access are reviewed periodically, and when they’re no longer needed (such as upon employee termination), the software supports removing the user’s permissions and access (deprovisioning).

• Certificate management Certificates are digital keys used to secure data through encryption. Certificate management includes approval, issuance, inventory, distribution, controlling, suspension, and retirement. Certificates held by subscribers are documented by a registration authority, or responsible certificate authority, which is normally an external entity that issues trusted digital keys based on the authority’s initial root certificate.

• Single sign-on (SSO) applications This access management solution enables secure authentication to more than one system managed by an organization using a single user credential. The underlying identity framework is usually the Lightweight Directory Access Protocol (LDAP) and the necessary LDAP credential databases to coordinate information services.

• Multifactor authentication (MFA) This additional layer of identification and access protection enhances the use of a password and user ID as credentials. MFA requires two or more pieces of evidence, to include something you have (such as a key fob or smartcard), something you know (such as a passcode or PIN), or something you are (such as facial recognition).
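The MFA rule in the last item of the list can be sketched as a simple check. The sketch assumes the common reading that the pieces of evidence must come from at least two different categories, so a password plus a PIN (both "something you know") would not qualify. The evidence names and the mapping table are hypothetical.

```python
# Map each kind of evidence to its factor category (illustrative values only).
FACTOR_CATEGORY = {
    "password": "know",
    "pin": "know",
    "key_fob": "have",
    "smartcard": "have",
    "fingerprint": "are",
    "facial_recognition": "are",
}


def satisfies_mfa(verified_evidence) -> bool:
    """True when the verified evidence spans at least two factor categories."""
    categories = {
        FACTOR_CATEGORY[item]
        for item in verified_evidence
        if item in FACTOR_CATEGORY  # unknown evidence types are ignored
    }
    return len(categories) >= 2
```

For instance, a password combined with a key fob passes the check, while a password combined with a PIN does not.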

IAM helps the organization administer and secure credentials, privileges, and authentication processes in a consistent way. The various systems and tools that can be used to provide IAM can scale to the size of the organization and distribute access across multiple business and clinical entities. Centralized IAM models use Active Directory, which holds user credentials in one repository. Federated architectures use applications such as PingFederate to distribute user credentials across multiple systems with authentication requirements. Federated IAM may use single sign-on (SSO) to help reduce administrative fatigue from having to provide credentials for each system. The SSO solution would provide a level of trust that would be shared with each individual system even though user credentials are not stored in each. In any case, an IAM system provides a capability to guard against excessive authorization levels and protect against compromised user credentials.

The IAM security controls and mechanisms that accompany security processes constitute a valuable information protection resource to monitor and enforce policies and procedures in the healthcare organization. The processes of granting access, creating access roles, reviewing access periodically, and terminating access were once accomplished manually, instance by instance, using rosters and spreadsheets, which made for a very labor-intensive and frustrating process. Today, many IAM systems are available to help organizations automate and improve security by reducing human error and neglect due to the monotony of the required tasks.
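The grant, review, and terminate cycle that IAM systems automate can be sketched with a small role-based model. Everything here is hypothetical, including the role names, the permission strings, and the in-memory dictionary standing in for a directory service; commercial IAM products expose their own interfaces.

```python
# Hypothetical mapping of roles to the permissions each role should carry.
ROLE_PERMISSIONS = {
    "nurse": {"ehr:read", "ehr:write_vitals"},
    "billing_clerk": {"billing:read", "billing:write"},
}


def provision(directory: dict, user_id: str, role: str) -> None:
    """Grant a new user exactly the permissions defined for the role."""
    directory[user_id] = set(ROLE_PERMISSIONS[role])


def deprovision(directory: dict, user_id: str) -> set:
    """Remove all access for a user and return what was revoked."""
    return directory.pop(user_id, set())


def review_access(directory: dict, active_roles: dict) -> list:
    """Flag users whose permissions exceed their current role, or who have no role."""
    flagged = []
    for user_id, permissions in directory.items():
        allowed = ROLE_PERMISSIONS.get(active_roles.get(user_id), set())
        if not permissions <= allowed:
            flagged.append(user_id)
    return sorted(flagged)
```

Running `review_access` against the current staff roster surfaces accounts that should have been deprovisioned, which is the kind of periodic check that once required rosters and spreadsheets.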

Access Control

The ability to use a technical asset is defined as access. The term usually refers to a technical capability such as reading, creating, modifying, or deleting a file; executing a program; or using an external connection. The term access is often mistakenly confused with “authorization” and “authentication.” Access control protects sensitive information and is made up of all of the actions and controls put in place to regulate viewing, storing, copying, modifying, transferring, and deleting information. Controlling access also includes limitations of time and situation. For instance, an end user may be allowed to access a system at a predetermined time, but not afterward, or for certain reasons, but not others. An example of situational access is provided in an emergency room, where physicians are given some access to a patient’s behavioral health records at that point of care, but the same physicians would not have access to those records in a primary care setting.
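The time and situation limits described above can be sketched as a single decision function. The roles, care settings, record types, and access window are hypothetical values chosen to mirror the emergency room example; a real policy would be defined by the organization and enforced by its access control system.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class AccessRequest:
    role: str
    care_setting: str
    record_type: str
    at: time


# Hypothetical access window: requests outside it are denied.
WINDOW_START, WINDOW_END = time(7, 0), time(19, 0)


def is_permitted(request: AccessRequest) -> bool:
    """Apply a time limit first, then a situational rule for sensitive records."""
    if not (WINDOW_START <= request.at <= WINDOW_END):
        return False
    if request.record_type == "behavioral_health":
        # Situational access: only physicians at the emergency point of care.
        return request.role == "physician" and request.care_setting == "emergency"
    return request.role in {"physician", "nurse"}
```

The same physician is allowed to see behavioral health records in the emergency setting and denied in primary care, because the decision depends on the situation as well as the identity.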

Access control includes the following:

• Authorization Policies and procedures for determining which permissions an end user will have and when (that is, a user’s level of access).

• Authentication The process used to verify the identity of end users and validate that they are who they claim to be.

• Identification The act of indicating who a person is—in computing technologies, a person is usually identified by a username or identity code (for example, firstname, lastname). (Note that the identification does not include combining this username with a password, which is considered authentication, and combines a simple identity code with a verification code, or password.)

• Nonrepudiation Use of identification and authentication methods and tools in a digital transaction to provide indisputable or assured proof of who the sender and/or the recipient of the data is.
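The distinction among identification, authentication, and authorization in the list above can be sketched in a few lines of Python. This is a minimal illustration only: the in-memory `USERS` table, the username, the password, and the permission names are all hypothetical, and a production system would rely on its directory service or IAM platform rather than code like this.

```python
import hashlib
import hmac
import os


def hash_password(password, salt):
    # Derive a salted hash so the password itself is never stored.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


_salt = os.urandom(16)
USERS = {  # hypothetical user store
    "nurse.jones": {
        "salt": _salt,
        "pw_hash": hash_password("correct horse", _salt),
        "permissions": {"read_chart"},
    }
}


def identify(username):
    """Identification: look up who the person claims to be."""
    return USERS.get(username)


def authenticate(username, password):
    """Authentication: verify the claim with something the user knows."""
    record = identify(username)
    if record is None:
        return False
    candidate = hash_password(password, record["salt"])
    return hmac.compare_digest(candidate, record["pw_hash"])


def authorize(username, permission):
    """Authorization: check what the identified user is permitted to do."""
    record = identify(username)
    return record is not None and permission in record["permissions"]
```

Keeping the three checks in separate functions mirrors the definitions: a username alone identifies, the password check authenticates, and the permission lookup authorizes.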

Organizations implement access controls to permit legitimate use by authorized personnel while preventing unauthorized use. Keep in mind that “legitimate use” can change depending on the situations at hand, however, so access control must occur at various levels and at key intervals of access. For instance, access control can be implemented at the operating-system level to safeguard files and storage media, and at the database level to guard against unauthorized access and potential corruption of data. In addition, well-designed applications and web services typically enforce several independent access controls to layer the protection, even if an SSO technology is used.

Access control is an effort to prevent unauthorized use, but it also allows for sharing of sensitive information at an acceptable level of risk to the organization. Without access controls in place, most organizations would consider it too risky to share PHI or PII, and that would prevent or degrade patient care in a digitized healthcare environment. For access control to be effective, an authentication process must support the controls.

Identifying personnel properly and granting them appropriate access levels can involve two types of authentication: The first, MFA, is present when at least two conditions are satisfied independently by the same individual who wants to access a network, system, or application. In many contexts, MFA and 2FA are synonymous based on how many conditions must be satisfied. When an organization implements proper MFA, the process is recognized as strong authentication. The second type, computer-based access controls, or logical access controls, determine who (a person) or what (a process) can have access to a category of data or a computing system. These controls may be built into the operating system; may be incorporated into applications, programs, or major utilities (for example, database management systems or communications systems); or may be implemented through add-on security packages. Logical access controls may be implemented internally in the computer system being protected or they may be implemented in external devices.
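To make the idea of two independently satisfied conditions concrete, the sketch below pairs a password result with a one-time code. It assumes the second factor is a time-based one-time password built on the HOTP construction from RFC 4226, which is one common choice and not the only one. The function names and the 30-second time step are choices made for this example.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password as specified in RFC 4226."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, now=None, step: int = 30) -> str:
    """Time-based variant: the counter is the number of elapsed time steps."""
    now = time.time() if now is None else now
    return hotp(secret, int(now // step))


def verify_mfa(password_ok: bool, secret: bytes, submitted_code: str, now=None) -> bool:
    """Both factors must succeed independently for access to be granted."""
    return password_ok and hmac.compare_digest(totp(secret, now), submitted_code)
```

The secret would be provisioned to the user's authenticator device at enrollment; a correct password with a wrong code, or the reverse, is rejected.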

Logical access controls can help protect the integrity and availability of the following:

• Software applications that include EHR, as well as underlying operating systems, such as Windows and Unix, from unauthorized changes and misuses

• Sensitive information through management and limitation of access by people and system processes, which reduces risk of unauthorized access or disclosure

Access controls can be administered globally for access to an entire network or at a single system level. A single system access control could be implemented to restrict access to one or two people for the ER pharmaceutical cabinet, for example. Access controls augment and enforce the administrative and physical controls that work together to protect sensitive information and, in the case of the pharmaceutical cabinet, access to controlled assets.

Access control models are used to enforce authentication and authorization guidelines, and the controls used are automated. (Imagine how difficult it would be to check the credentials of each end user manually every time they desired access to data or to a system!) The common models for access control are mandatory, discretionary, role-based, rules-based, and context-based. Some hybrid models combine features of each, but these are the most prevalent structures and approaches. You will recognize some overlap and common features in each and how they work together.

Mandatory Access Control

In mandatory access control (MAC), a central authority, such as the organization’s chief information officer (CIO), creates the access control policy, which is implemented by the IT department. The actual access control is enforced at the hardware or operating system level as a technical control. Most often, MAC is used in organizations that handle classified information, such as the military and, in some cases, a healthcare organization, where rigid, centralized controls are used in some applications and networked resources. In this model, individual system or data owners cannot change the level of access allowed.

A MAC model depends on proper security categorization of information, because the access policy in this case most likely will be determined by the sensitivity of the information. In healthcare organizations, information confidentiality is vital, so the central authority can determine who is allowed access to information. Improperly categorized information can lock out individuals who may need access, or it may allow access to those who have no need to know the information. Neither case is desired. On the positive side, having a central authority enforce the access control makes standard, equitable policies possible.
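A minimal sketch of the MAC idea follows: access is decided by comparing a centrally assigned clearance against the sensitivity label on the information, and neither users nor data owners can override the result. The level names, usernames, and resources are hypothetical.

```python
from enum import IntEnum


class Level(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Both tables are maintained only by the central authority (assumed names).
CLEARANCES = {"billing.clerk": Level.INTERNAL, "attending.md": Level.RESTRICTED}
LABELS = {"cafeteria_menu": Level.PUBLIC, "psych_notes": Level.RESTRICTED}


def mac_can_read(user, resource):
    """Allow a read only when the user's clearance meets the resource label."""
    label = LABELS.get(resource)
    if label is None:
        return False  # uncategorized information is denied by default
    return CLEARANCES.get(user, Level.PUBLIC) >= label
```

The sketch also shows why categorization matters: if `psych_notes` were mislabeled as `PUBLIC`, every user would pass the check.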

Discretionary Access Control

A discretionary access control (DAC) model is used if access control is more decentralized or delegated to the owner of an individual system or to the owner of the data itself. Privileges are granted by the system owner or data owner to whomever he or she considers authorized to access the information. DAC is more flexible than MAC, but it introduces greater risk. For instance, once someone has access to view a file, the system owner has little control over whether that person decides to copy it and share it with others.
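In contrast to MAC, a DAC decision rests with the owner. The following sketch, with a hypothetical `Record` class and usernames, lets only the owner add entries to the record's access list.

```python
class Record:
    """A data object whose owner decides who else may use it."""

    def __init__(self, owner):
        self.owner = owner
        self.acl = {owner: {"read", "write"}}

    def grant(self, grantor, grantee, permission):
        # Only the owner has the discretion to extend access.
        if grantor != self.owner:
            raise PermissionError("only the owner may grant access")
        self.acl.setdefault(grantee, set()).add(permission)

    def can(self, user, permission):
        return permission in self.acl.get(user, set())
```

Note what the model does not cover: once a grantee can read the record, nothing in the access list stops that person from copying the content elsewhere, which is the added risk the paragraph above describes.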

Role-Based Access Control

Role-based access control (RBAC) is probably the most prevalent type of nondiscretionary access control model, in which system owners determine the level of access based on a user’s or group’s job function or organizational role, versus individual attributes. Role-based access is implemented to match access to data or systems according to the functional or structural role an individual plays within the organization. For example, a doctor who works in the emergency department will have the same access to data granted to another doctor in the emergency department, but this access would not be granted for a doctor who works in the ophthalmology department. In some cases, there may be one type of access control for physicians in pediatrics and another for nurses in the same department. An advantage to role-based access controls (RBACs) is that when a new doctor is assigned to the emergency department, the menus, access, and capabilities of another doctor in the department can be copied if the new doctor is to have identical privileges.
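The emergency department example can be expressed as a small role table. The role names, permission names, and users below are hypothetical; the point is that permissions attach to roles and a new physician inherits them simply by being assigned the role.

```python
ROLE_PERMISSIONS = {  # hypothetical roles and permissions
    "ed_physician": {"view_ed_chart", "order_ed_labs"},
    "ed_nurse": {"view_ed_chart"},
    "ophthalmology_physician": {"view_eye_clinic_chart"},
}

USER_ROLES = {
    "dr.adams": {"ed_physician"},
    "dr.baker": {"ophthalmology_physician"},
}


def assign_role(user, role):
    """Onboard a user by role rather than by individual permission."""
    if role not in ROLE_PERMISSIONS:
        raise ValueError(f"unknown role: {role}")
    USER_ROLES.setdefault(user, set()).add(role)


def rbac_allows(user, permission):
    """A user holds a permission if any assigned role carries it."""
    return any(permission in ROLE_PERMISSIONS[role]
               for role in USER_ROLES.get(user, ()))


# A newly assigned emergency physician receives the same access as dr.adams.
assign_role("dr.cruz", "ed_physician")
```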

Rules-Based Access Control

Rules-based access control (RuBAC) uses rules, policies, or attributes to set predetermined conditions for user access. RuBAC is a policy-based control in which rules enable access and permissions for particular users, actions, objects, or contexts. RuBAC differs from RBAC in that RuBAC depends on established rules to grant access, rather than user roles. In RuBAC, for example, a rule could allow network or resource access to some IP addresses but block others. Rules require more administrative design and maintenance, as specific combinations of attributes are built to allow precise levels and conditions for access. Consider the scenario, for example, in which a newly hired physician is granted the same access as other physicians with the same role under RBAC. RuBAC can permit additional controls to increase or decrease access according to differences in the job functions or dynamic needs for access, such as time of day or location from which the physician desires information. RuBAC uses properties that are described to the access control engine and become preset rules.
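A rule of the kind described above, combining source address and time of day, might look like the following sketch. The network range and the shift hours are assumptions chosen for illustration.

```python
from datetime import time
from ipaddress import ip_address, ip_network

# Hypothetical preset rules: an allowed clinic network and permitted hours.
ALLOWED_NETWORKS = [ip_network("10.20.0.0/16")]
SHIFT_START, SHIFT_END = time(7, 0), time(19, 0)


def rule_allows(source_ip, at):
    """Grant access only from an allowed network during permitted hours."""
    on_network = any(ip_address(source_ip) in net for net in ALLOWED_NETWORKS)
    in_shift = SHIFT_START <= at <= SHIFT_END
    return on_network and in_shift
```

In practice such a rule would typically be layered on top of a role check, so that two physicians with the same role can still receive different results depending on where and when they request access.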

Context-Based Access Control

For context-based access control (CBAC), controls are not established at the user level but are based on settings within the firewall that control traffic flow based on application layer protocol session information. CBACs demonstrate that access controls are not always connected to a person. They are used to manage access by systems, services, and other computing assets. CBAC limits traffic using access control lists (ACLs), which implement packet examination at the network layer or at the transport layer. CBACs can inspect data at the network or transport layer but can also examine application layer protocol information.
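The following heavily simplified sketch illustrates the session-aware idea behind CBAC: the filter remembers sessions opened from inside the network and admits inbound traffic only when it matches one of them. It is not a representation of any vendor's implementation, and the class name, addresses, and protocol labels are hypothetical.

```python
class StatefulFilter:
    """Tracks sessions opened from the inside and admits only matching replies."""

    def __init__(self, allowed_protocols):
        self.allowed_protocols = set(allowed_protocols)
        self.sessions = set()

    def outbound(self, src, sport, dst, dport, protocol):
        # The application-layer protocol must be one the policy permits.
        if protocol not in self.allowed_protocols:
            return False
        self.sessions.add((src, sport, dst, dport))
        return True

    def inbound(self, src, sport, dst, dport):
        # A reply is accepted only if it mirrors a recorded outbound session.
        return (dst, dport, src, sport) in self.sessions
```

No user identity appears anywhere in the decision, which is the point made above: the control governs traffic between systems rather than the actions of a person.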

Data Encryption

Encryption is another mechanism that can be used for logical access control, for both data in transit and at rest. This technical security control gives an organization the ability to limit who has access to sensitive data and to protect information confidentiality. Strictly speaking, encryption does not focus on protecting or providing data integrity or availability; however, cryptographic algorithms, called hashing algorithms, do provide for data integrity. Sensitive data, such as PHI and PII, must be encrypted under the prevailing information security regulations and standards, such as HIPAA and ISO 27001, to name just two. There are two basic states in which data can (and should) be encrypted: when it is at rest and when it is in transit.
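The integrity role of hashing algorithms mentioned above can be shown with Python's standard `hashlib` module. The record contents are made up for the example; SHA-256 is used here as one widely available hashing algorithm.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hexadecimal string."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical record and the digest saved when it was written.
original = b"patient=12345;allergy=penicillin"
stored_digest = fingerprint(original)

# Any later change to the record, however small, yields a different digest.
tampered = b"patient=12345;allergy=none"
record_is_intact = fingerprint(original) == stored_digest
tampering_detected = fingerprint(tampered) != stored_digest
```

The digest does not hide the record's content, which is why hashing supports integrity while encryption supports confidentiality.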

Data at rest refers to data that is stored on any media and is not currently being processed or transmitted. If data at rest has been encrypted, media that is lost or stolen and recovered by someone unauthorized to view the data will be unusable or unreadable to the person who finds it, because that person does not hold the key needed to decrypt it. In this case, the only people who can access the data are those who possess the cryptographic key to decrypt it. The principal concept is that the data is protected from unauthorized access and disclosure by being rendered unusable to anyone other than someone with authorized access. This type of encryption can be applied in two common ways: encrypt the entire volume of information on the disk with the same encryption key, or encrypt at the file level. Encrypting the entire volume of the disk has benefits in that human error is minimized, because users may forget or neglect to encrypt data if any part of the process is manual or discretionary, as file-level encryption can be. An advanced form of encryption of data at rest includes encryption of database files within the file at a table, column, row, or even individual cell level. At this advanced level, you will encounter the tokenization process, where the identifying data is encrypted and the encrypted value replaces the identifiable data within the file or database. A re-identifying key is stored separately to enable reinsertion of the column-, row-, or cell-level data in the original file or database. However, to satisfy most leading security standards and regulations, data at rest encryption for disk level and file level is sufficient.
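The tokenization process can be sketched as follows. Note one deliberate simplification: this example substitutes random tokens for the identifying values instead of encrypting them, and the separately held `TokenVault` mapping stands in for the re-identifying key. The class name, token format, and sample values are hypothetical.

```python
import secrets


class TokenVault:
    """Maps identifying values to tokens; stored apart from the working data."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value):
        # Reuse the existing token so the same patient always maps the same way.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token):
        """Re-identify a value; only holders of the vault can do this."""
        return self._token_to_value[token]


vault = TokenVault()
row = {"mrn": "MRN-0042", "diagnosis": "J45.909"}
# Replace only the identifying cell; the clinical value stays usable.
protected_row = {**row, "mrn": vault.tokenize(row["mrn"])}
```

Anyone who obtains `protected_row` without the vault sees a diagnosis code but cannot tie it to a patient.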

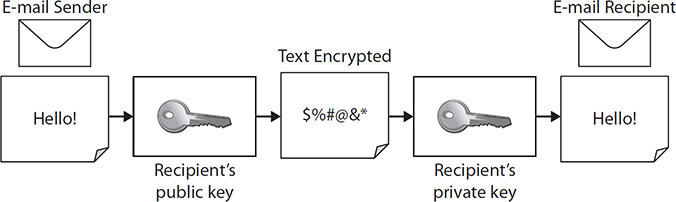

Data in transit is data that is moving from one place to another: some sensitive data is sent in an e-mail message, while other data travels over a network connection. During these transfers, PHI and PII must be protected by encryption. The cryptographic key process is similar whether the data is at rest or in transit. To achieve encryption of data in transit, an encryption key is attached to a digital certificate related to public and private keys for each sender and recipient. The private key is secret, but the public key is not and can be used by anyone to encrypt the message. Only the private key of the intended recipient can decipher the message. These two keys are different, but they are related by a mathematical algorithm that makes this process possible. A certificate is the mechanism used to uniquely identify an encryption key and associate it with the asset owner, which assures confidentiality and prevents unauthorized disclosure.
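The mathematical relationship between the two keys can be seen in a toy RSA example. This sketch uses the tiny textbook primes 61 and 53 purely to show the mechanics; it is not secure, it omits padding, and real systems use vetted cryptographic libraries with keys thousands of bits long. RSA is assumed here as one example of a public key algorithm.

```python
# Toy key generation with very small primes (illustration only, not secure).
p, q = 61, 53
n = p * q                  # modulus shared by both keys
phi = (p - 1) * (q - 1)
e = 17                     # public exponent
d = pow(e, -1, phi)        # private exponent (modular inverse, Python 3.8+)

PUBLIC_KEY = (e, n)        # may be shared with anyone
PRIVATE_KEY = (d, n)       # kept secret by the recipient


def encrypt(message: int, public_key=PUBLIC_KEY) -> int:
    """Anyone holding the public key can encrypt."""
    exponent, modulus = public_key
    return pow(message, exponent, modulus)


def decrypt(ciphertext: int, private_key=PRIVATE_KEY) -> int:
    """Only the holder of the private key can decrypt."""
    exponent, modulus = private_key
    return pow(ciphertext, exponent, modulus)
```

The keys differ, yet each undoes the other because `e * d` leaves a remainder of 1 when divided by `phi`.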

It is important to note that the leading standard for encryption keys is included in NIST publication FIPS 140-2, Security Requirements for Cryptographic Modules. If an encryption key has been tested and certified under FIPS 140-2, it can be used to provide safe harbor protection under HIPAA in the United States. Keep in mind the distinction between HIPAA safe harbor and the former DPD safe harbor provisions, as described in Chapter 4. There are important resources that provide guidelines for implementation and use of encryption for data at rest and in transit. You will not need them on a daily basis, but you will use them to ensure that your configurations and solutions from product suppliers or developers meet your legal and local policy guidelines for encryption. The following are a few to consult:

• NIST SP 800-175B, Guideline for Using Cryptographic Standards in the Federal Government: Cryptographic Mechanisms

• NIST SP 800-111, Guide to Storage Encryption Technologies for End-User Devices

• NIST SP 800-52 Rev. 2, Guidelines for the Selection, Configuration, and Use of Transport Layer Security (TLS) Implementations

• NIST SP 800-77, Guide to IPsec VPNs

• NIST SP 800-113, Guide to SSL VPNs

• ISO/IEC 18033-x, Information technology – Security techniques – Encryption algorithms (This publication has multiple parts, indicated after the hyphen: Part 1 covers general techniques and more specific technologies are covered in later parts.)

Figure 5-4 depicts a simple e-mail transfer using encryption for data in transit. It illustrates components of public key infrastructure (PKI), which consists of all technology, personnel, and policies that work together to create, manage, and share digital certificates.10 The encrypted information can be decrypted, allowing access only by those possessing the appropriate cryptographic key. This is especially useful if strong physical access controls cannot be provided, such as for laptops or mobile media devices. This ensures that if information is encrypted on a laptop computer and the laptop is stolen, the information cannot be accessed, because it is rendered unreadable without the decryption key. Encryption can provide strong access control, but it must be accompanied by effective key management.

Figure 5-4 The transfer of encrypted data in transit

Training and Awareness

An active employee training and awareness program is one of the most cost-effective security controls that any organization can implement. Training and awareness can help prevent the breaches caused by employee mistakes and reduce complacency around handling PHI and PII. Training and awareness practices must be used in tandem to conduct a comprehensive workforce information security program.

Security training teaches employees skills and techniques that can help them be more successful at keeping healthcare information private and secure. Often, classes are delivered based on the end users’ level of access, whether they are system administrators or standard end users, for example.

Training is most effective when it’s delivered regularly. In fact, many required training courses are mandated for annual or more frequent recurrence, sometimes by law. This training is tracked, and compliance is reported to relevant leadership or to the appropriate government agencies. HIPAA, for example, requires that a healthcare organization provide training to all workforce members on relevant security policies and procedures.

Training can be especially effective when it’s offered on an ad hoc or as-needed basis. A class on how to encrypt an e-mail containing PHI may be helpful as a weekly in-service topic for a specific audience, for example. The more often ad hoc trainings are offered on a variety of timely topics, the more effective they are at influencing proper information security behaviors.

Training can address many levels, from basic security practices to advanced or specialized skills, and often focuses on role-based access requirements. For example, a system administrator who manages a server farm with a specific operating system and desktop/laptop environment may need security-relevant training to perform her role. A network administrator who manages a network that uses specific vendor products also requires security training that is specific to the systems he manages, but this training would be quite different from that of the server administrator. Although these two examples are specific to technology, training is also important for employees with regard to specific duties performed in nontechnology roles. An example of such training could include payment card industry (PCI) requirements for staff who work in billing or cashiers in the insurance support areas of the healthcare organization.

Security awareness is the preferred outcome of training activities. Awareness is often incorporated into basic security training and can involve any method that encourages employee awareness of best security practices. Most awareness programs include marketing and communication of relevant information through information security campaigns using posters displayed in prominent areas, newsletters, banners that pop up during login, or announcements made in staff meetings, for example. Although delivery of security information could also be viewed as a form of ad hoc training, the targeted nature of the messages and the audience provide a distinction.

Awareness improves when all employees or certain groups of employees are regularly informed of proper security practices at work, such as how to create appropriate passwords, what to do in the event of a virus or other security issue, or how and when to notify appropriate staff of a potential security violation. Many organizations require annual reviews of policies or procedures to test employee awareness. Awareness also improves employee security practices, such as logging off a computer system when it’s not in use.

Sanction Policy

Even the best training and awareness programs cannot prevent every employee-caused security incident. To address employee violations of the organization’s privacy and security practices and policies, a sanction policy is required. A sanction policy is a set of prescribed actions that management can take with regard to employee security violations.

Although some incidents are so serious that immediate termination is appropriate, the majority of incidents are accidental by nature. Therefore, the policy should outline progressive levels of discipline and provide for management discretion whenever possible. To facilitate this, the organization must categorize the types of infractions and match them against the various types of penalties to be considered. In the end, a good sanction policy will be fair and consistent, not only with regard to who commits a violation but also with regard to other organizational policies for human resource types of disciplinary actions.

As a part of the organization’s training and awareness program, data from the sanction policy enforcement process is invaluable in determining trending issues and reasons for breaches, as well as for aggregate reporting of outcomes. Research has demonstrated that informing employees specifically about the sanction policy and actions related to it helps improve employee compliance with information protection policies in healthcare settings.11

Logging and Monitoring

Within the information security environment are many different event logs and types of logging. Basically, a log is a record that is generated by the processing of events on the network and on systems, applications, and end user devices. Each specific event is recorded. The logs that relate to potential security events, such as failed login attempts or denied access incidents at the firewall, can be helpful tools. One of the principal duties of an information security and privacy professional is to review logs actively. The information gained from logs is invaluable in supporting performance improvement, detecting abnormalities from a security perspective, supporting forensics, and responding to legal requests for historical data.
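As a small illustration of reviewing logs for security events, the sketch below counts failed login attempts per user. The log line format, event names, and threshold are invented for the example; real systems each have their own formats.

```python
from collections import Counter

# Hypothetical log lines: timestamp, event name, then key=value fields.
LOG_LINES = [
    "2024-06-01T08:00:01 LOGIN_FAILED user=jdoe src=10.1.1.5",
    "2024-06-01T08:00:09 LOGIN_FAILED user=jdoe src=10.1.1.5",
    "2024-06-01T08:00:15 LOGIN_OK user=asmith src=10.1.1.9",
    "2024-06-01T08:00:21 LOGIN_FAILED user=jdoe src=10.1.1.5",
    "2024-06-01T08:01:02 LOGIN_FAILED user=asmith src=10.1.1.9",
]


def failed_logins(lines):
    """Count failed login events per user."""
    counts = Counter()
    for line in lines:
        parts = line.split()
        if len(parts) >= 3 and parts[1] == "LOGIN_FAILED":
            fields = dict(p.split("=", 1) for p in parts[2:] if "=" in p)
            counts[fields.get("user", "unknown")] += 1
    return counts


def users_over_threshold(lines, threshold=3):
    """Return users whose failed attempts meet or exceed the threshold."""
    return sorted(u for u, n in failed_logins(lines).items() if n >= threshold)
```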

Monitoring and tracking the health and status of the system and its operations may require specialized training for staff members to understand what information is being collected, how to determine whether a potential violation or suspected event may have occurred, and how to identify issues up to and including vulnerabilities to a specific system or application. In short, logging and monitoring are functions of generating performance and security data and acting on any events that trigger alerts.

Reviewing logs is a daunting task, because the sheer volume and complexity of logs have increased over time. Manual review approaches have become infeasible even as the need to monitor the computing environment has increased in importance. Monitoring today requires automated methods, in which rules and/or parameters are set to distinguish normal network behavior from potential incidents or events. For instance, a good monitoring process would provide an alert when a simultaneous logon by the same end user occurs on the network inside the computing domain and on the organization’s virtual private network. This incident would indicate the likelihood of a spoofed or stolen set of credentials. Although automated monitoring is the norm, administrators require the appropriate training and knowledge to set up the monitoring tools and to understand the complex rules of behavior, what normal behavior is, and what should trigger an alert.
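The simultaneous logon rule described above could be approximated as follows. The sketch treats two logons by the same user from different sources within a short window as "simultaneous"; the five-minute window, the event tuple layout, and the source labels are assumptions for illustration.

```python
from datetime import datetime, timedelta


def concurrent_logons(events, window=timedelta(minutes=5)):
    """Return users seen on both the internal network and the VPN within the window.

    events: iterable of (timestamp, user, source), where source is
    "internal" or "vpn" (hypothetical labels).
    """
    alerts = set()
    last_seen = {}  # (user, source) -> most recent logon time
    for ts, user, source in sorted(events):
        other = "vpn" if source == "internal" else "internal"
        previous = last_seen.get((user, other))
        if previous is not None and ts - previous <= window:
            alerts.add(user)
        last_seen[(user, source)] = ts
    return alerts


events = [
    (datetime(2024, 6, 1, 9, 0), "jdoe", "internal"),
    (datetime(2024, 6, 1, 9, 2), "jdoe", "vpn"),        # suspicious overlap
    (datetime(2024, 6, 1, 9, 0), "asmith", "internal"),
    (datetime(2024, 6, 1, 14, 0), "asmith", "vpn"),     # hours apart
]
alerts = concurrent_logons(events)
```

Choosing the window is exactly the kind of tuning decision that requires an administrator who understands what normal behavior looks like in the environment.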

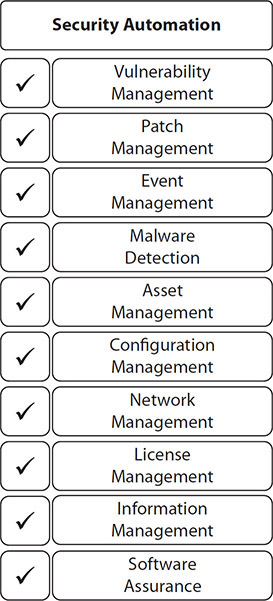

A growing trend within logging and monitoring, because of improved automated tools and process in security automation, is information security continuous monitoring (ISCM). NIST SP 800-137, Information Security Continuous Monitoring (ISCM) for Federal Information Systems and Organizations, defines ISCM as the ongoing awareness of information security, vulnerabilities, and threats to support organizational risk management decisions. More important is that the directive outlines domains of potential automation and best practice philosophies around the domains, as depicted in Figure 5-5. Although this publication only introduces these domains, the key point is that logging and monitoring in these domains is increasingly automated and continuous.

Figure 5-5 Security automation domains

Vulnerability Management

A vulnerability provides a description of a potential risk that exists within the organization and its information assets based on the state of a computing asset. The vulnerability makes it possible for a mistake by an internal employee or an attack to expose a security weakness. We often think of vulnerabilities when we assess systems and applications being up to date from a security perspective. But along with software and other technical controls, a vulnerability can be related to the lack of physical and administrative controls. For example, an unlocked office door could allow physical access by an unauthorized person, and the lack of a privilege access review process could lead to credential theft or misuse.

The measure of vulnerabilities is a central component of evaluating risk, particularly the likelihood of an event happening. Vulnerabilities are simply indicators that alert risk managers to consider actions and to balance risk, factoring in the likelihood of occurrence versus cost implications. Commonly, the cumulative impact of vulnerabilities increases concern and drives risk management priorities. In some cases, a single vulnerability could be significant enough that risk management actions are elevated to the highest priority. Figure 5-6 shows the interrelationships of vulnerability and risk.

Figure 5-6 Relationships among threats, vulnerabilities, safeguards, and assets

If we implement the proper safeguards, we can reduce vulnerabilities and mitigate the risks of most threats. Vulnerability management can never eliminate all vulnerabilities, however. Some threats will exploit a vulnerability that we are unable to manage by a security control or safeguard. For example, if a user is using an obsolete operating system that is no longer supported by the manufacturer, operating system upgrades and security patches are no longer feasible. If the outdated system is valuable or important to the business or clinical practice, the vulnerability may have to be accepted, and compensating controls such as network segmentation and limited access may be the best approaches to mitigating the risk, though they will not eliminate it.

The better an organization manages its implementation of safeguards, the less the chance that a vulnerability will affect its systems. In reality, however, there will always be new vulnerabilities leading to risk. The role of a healthcare information security and privacy professional is to design, implement, and enforce the security controls or safeguards that reduce the risk of vulnerability exposure. To measure and evaluate whether the proper safeguards are in place and operating effectively and efficiently, a security controls assessment is a necessary part of your role.

The practice of vulnerability management includes the process of patching systems and applications with updated code or software changes. This patch management process is a technical control that is vital to maintaining a properly safeguarded network and computing environment. Operating systems and various applications all are designed and implemented with a secure configuration when they are introduced to the marketplace. However, vulnerability management, specifically patch management, is a dynamic, ongoing process that addresses current identified vulnerabilities in established application code. Sometimes the vulnerability is found through an exploitation, such as a hacking attempt or the introduction of malicious code. Other times, the vulnerability is discovered by software developers who bring the vulnerability to light before anyone exploits it (the preferred scenario, of course). In such cases, the patch required to fix the vulnerability is coded, developed, tested, and distributed to the marketplace as quickly as possible in hopes of preventing malicious activity.

You may have heard of a vulnerability being “in the wild.” That indicates an active exploit in a production environment of one or more organizations. This is contrasted with creating a hack or exploit in a lab or research condition. “In the wild” exploits increase the criticality of patches, because the likelihood of further exploits for other organizations is increased significantly.

The process of patch management has benefited from security automation. Patches (once tested and validated) can be automatically distributed across a local area network and automatically updated on all networked resources. With some exceptions for types of operating systems, application compatibility, and patient safety concerns (see the following Exam Tip), this is efficient and effective.
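A minimal sketch of the automated check behind such a process appears below. It compares installed versions against required versions and skips hosts that are exempt from automatic patching. The package names, version numbers, host names, and the exemption list are all hypothetical.

```python
# Hypothetical minimum patched versions and an exemption list.
REQUIRED = {"os": (10, 4, 2), "ehr_client": (7, 1, 0)}
EXEMPT_HOSTS = {"infusion-pump-gw"}  # held back pending patient safety review


def parse_version(text):
    """Turn a dotted version string such as '10.4.2' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


def hosts_needing_patch(inventory):
    """Return sorted (host, package) pairs that are below the required version."""
    pending = []
    for host, packages in inventory.items():
        if host in EXEMPT_HOSTS:
            continue  # exempt hosts are handled through a manual process
        for name, installed in packages.items():
            required = REQUIRED.get(name)
            if required and parse_version(installed) < required:
                pending.append((host, name))
    return sorted(pending)


inventory = {
    "ws-101": {"os": "10.4.2", "ehr_client": "7.0.9"},
    "ws-102": {"os": "10.3.9"},
    "infusion-pump-gw": {"os": "9.0.0"},
}
pending = hosts_needing_patch(inventory)
```

Exempt hosts still carry the vulnerability, so they would normally be tracked separately and protected by compensating controls.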

Segregation of Duties

The segregation of duties is a sort of checks and balances system implemented to reduce the risk of accidental or deliberate misuse of information. It involves processes and controls that help create and maintain a separation of security roles and responsibilities within an organization to ensure that the integrity of security processes is not jeopardized, and to ensure that no single person has the ability to disrupt a critical computing process or security function. A segregation of duties policy, for example, prevents an individual from making system changes and then changing the audit logs so that there is no record of the changes. Or, for example, in another area of the healthcare organization’s operations, an individual who is permitted to request a payment to a vendor or customer should not be the same person who issues the check for that payment.
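The vendor payment example can be enforced in software with a simple check that the person approving a payment is not the person who requested it. The `Payment` class and usernames are hypothetical.

```python
class Payment:
    """A payment request that must be approved by someone other than the requester."""

    def __init__(self, requester, amount):
        self.requester = requester
        self.amount = amount
        self.approved_by = None

    def approve(self, approver):
        # Segregation of duties: requesting and issuing are separate roles.
        if approver == self.requester:
            raise PermissionError("requester cannot approve their own payment")
        self.approved_by = approver

    @property
    def is_approved(self):
        return self.approved_by is not None
```

The same pattern applies to the audit log example: the account that makes a system change should not be able to modify the log that records it.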

Vulnerabilities are not always technical in nature. The ability of one individual to cause unauthorized changes or disruptions, inadvertently or intentionally, is an example of a nontechnical vulnerability to the overall information security program.

Least Privilege (Need to Know)

The term “least privilege” may be more familiar to many of us as the “need to know” principle. Least privilege refers to each user having only the permissions, rights, and privileges necessary to perform his or her assigned duties, and no more. In addition, users of information should be granted access only to the information they need to perform their duties. Least privilege does not mean that all users have extremely limited functional access. Some employees will be granted significant access to data if it is required for their position. Following this principle can decrease the risk of and limit the damage resulting from accidents, errors, or unauthorized use of system resources.

Many of the concepts within information security (and privacy) relate to avoiding too much access or too much disclosure, where such access or disclosure is not needed. Generally, least privilege or minimal use concepts must support every information protection program. It is an imperative to protect information from individuals who have no need for it, have no reason to use it, or no longer need it. Limited access policies go hand-in-hand with least privilege policies. These policies require that only as much information as needed should be disclosed, transferred, used, and stored, and as soon as the information is no longer required, it should be destroyed.

One of the distinctive elements of providing information protection in a healthcare environment is that least privilege concerns can be highly dynamic. For example, a physician may have access to records one day because she is dealing with a specific patient’s case or a specific responsibility, but the next day that access may be restricted if her duties change. This commonly happens as physicians see patients in emergency situations, such as when a behavioral health patient arrives in the emergency room. Another example is that sometimes, for peer review under medical records management processes, physicians may be granted temporary access to pediatric records, where their normal clinical duties would not include children under the age of 18. This process is important within a healthcare setting for continuity of patient care.
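The dynamic nature of least privilege described above can be modeled with access grants that expire. In this sketch the 12-hour default duration, the class name, and the identifiers are assumptions; the recorded reason supports later review of why access was given.

```python
from datetime import datetime, timedelta


class TemporaryGrants:
    """Time-limited access to a specific record for a specific user."""

    def __init__(self):
        self._grants = {}  # (user, record_id) -> (expiry, reason)

    def grant(self, user, record_id, now, duration=timedelta(hours=12), reason=""):
        # Record why access was given so that it can be reviewed later.
        self._grants[(user, record_id)] = (now + duration, reason)

    def allowed(self, user, record_id, now):
        entry = self._grants.get((user, record_id))
        return entry is not None and now < entry[0]


grants = TemporaryGrants()
admitted = datetime(2024, 6, 1, 22, 0)
grants.grant("dr.nguyen", "bh-record-77", admitted, reason="ER point of care")
```

Access lapses on its own once the duration passes, so no one has to remember to revoke it when the physician's duties change.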

Business Continuity

Business continuity, or continuity of operations, includes all the actions taken to enable a healthcare organization to perform clinical and business services with minimal to no interruption or degradation. The organization must be able to perform certain activities in an effort to continue its mission should an unforeseen event occur. The ability to deliver medical care, for example, could be affected by electrical outages, weather-related events, community-based events such as riots, accidents, or even a serious outbreak of a virus that affects staffing levels. The healthcare organization must plan for and have procedures in place for such events.

Few industries are required to function 24/7, but in healthcare, this is a must. Not only is this affected by regulatory pressures, but many governments at the national, provincial, and state levels also require reporting procedures when a healthcare organization is not functioning. For our purposes, we concentrate on information asset functioning, so network or application downtime is the primary issue. In the United States, healthcare networks are included in the NIST publication Framework for Improving Critical Infrastructure Cybersecurity, which includes rigid guidelines to help assure continuity of operations.

As with all security controls, business continuity plans also include time-related elements that help ensure that a facility can recover from an issue quickly. Having a continuity of operations plan (COOP) for disasters is a preventive aspect of security control, and monitoring network activity is a detection function. Once a disaster happens, the optimal method for business continuity is a redundant system or an alternative source of recovery. For example, if an electrical outage occurs, a healthcare organization may switch temporarily to power provided by generators running diesel fuel. This helps ensure that patient care and business processes are not likely to be interrupted. When network resources are not available, because of power outages or for another reason, manual processes should be implemented. As an example, a lab system that processes samples using bar code technology may also have a manual process that uses hand-written intake forms. Personnel should be trained and able to implement the manual forms in the event that the bar code–scanning system is unavailable.

Disaster Recovery

Disaster recovery is another important security control that supports business continuity. The ability to recover systems, specifically information technology systems, is a vital aspect of operations in any business, but in healthcare organizations, especially, it can mean the difference between life and death.

Power outages, weather events, or other incidents can cause damage to the data center where the systems are housed. Even if the system itself is not damaged, restarting or configuring the system is an important consideration in a disaster recovery plan. Once a system has been remediated (and after the threat is no longer present), a process for bringing the system back online is needed. This is true for every system or application. For example, if a database server is corrupted by an attack, the server must obviously be tested and evaluated prior to putting it back into the production environment.

The best COOP, specifically for system recovery, includes a plan for a scheduled, prioritized system restart. Clinical systems and business systems would be restarted first. Then additional systems could be started, while the impacts of the restarts are monitored. Depending on the duration of the outage or system failure, a significant amount of new and sensitive healthcare information may have been collected. That data will need to be integrated into the entirety of the patient record and related business records.
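A scheduled, prioritized restart of this kind might be represented as a simple tiered list. The sketch below is only an illustration: the system names and tier numbers are hypothetical, and a real plan would come from the organization's own business impact analysis.

```python
# Hypothetical inventory: tier 1 covers clinical and business systems,
# tier 2 covers the additional systems that are started afterward.
systems = [
    {"name": "staff_intranet", "tier": 2},
    {"name": "ehr", "tier": 1},
    {"name": "patient_billing", "tier": 1},
    {"name": "cafeteria_ordering", "tier": 2},
]


def restart_order(inventory):
    """Return system names sorted by tier, then by name for a stable order."""
    ranked = sorted(inventory, key=lambda s: (s["tier"], s["name"]))
    return [s["name"] for s in ranked]


for position, name in enumerate(restart_order(systems), start=1):
    print(position, name)
```

Keeping the order in data rather than in staff memory makes it easier to review the plan in advance and to monitor the impact of each restart step before moving to the next tier.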

System Backup and Recovery

System backup processes and recovery capabilities are fundamental components of a healthcare organization’s resiliency. To illustrate, a University of Texas researcher estimated that large hospitals’ losses can reach $1 million per hour during an unplanned outage of their EHR.12 Having secondary or duplicate copies of data, high-availability systems, and alternate operating locations are all part of a robust resiliency program to ensure the availability of data and reduce downtime.

Events such as ransomware attacks or natural disasters may cause primary systems or sources of data to be unusable. System backups and recovery plans alleviate the impact of these risks. Backups are needed for other reasons as well, such as for audits, forensics, and other regulatory information requests. For the most part, we will concentrate on the use of backups and recovery as part of a security control to maintain the CIA triad. Factors that determine the strategies for system backup and recovery include the sensitivity of the information, the risk tolerance of the organization, and operational requirements.

Backup Storage Approaches

There are several types of backup approaches with distinct purposes. With advancing technology such as cloud platforms and cloud storage solutions, you will encounter variations in these approaches. Learning the traditional basic backup cycles is a good start, however, so we will cover the three most traditional forms here (full, differential, and incremental backups):

• Full backups A full backup is a complete copy of the entire system, including the entire database, operating system, boot files, and an exact copy of the system drive. Usually, the first backup copy is a full backup. Subsequent backups are usually not full backups. Storage requirements are a significant concern if previous backups are not deleted. To be safe, full backups are good practice before any major upgrades or migrations of systems occur. This backup can be used to fully restore the last known good copy of the system files. Creating a full backup is the slowest of the options presented here, but restoring from one is the most straightforward because only a single backup set is needed.

• Differential backups This process backs up all the changes made since the last full backup, so each subsequent differential copy contains every modification accumulated since that full backup. Differential backups typically occur each day until another full backup occurs. They help to compress the time it takes to restore a system, but because each copy grows until the next full backup, storage costs can become a problem. To restore from a differential backup, you would normally use the latest differential copy and the full backup copy together.

• Incremental backups An incremental backup copies only the files, data, and system information that have changed since the previous backup, whether that backup was full or incremental. You can run incremental backups more often because of the relatively small storage requirements and the cost-effectiveness of the solutions. Restoration from incremental backups requires the last full backup plus every incremental backup made since then, applied in order, which makes it the slowest restore of the three.
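The difference between the three approaches is easiest to see at restore time. The following sketch selects which backup sets are needed to restore to the latest state. It assumes the standard definitions (a differential holds all changes since the last full backup, while an incremental holds only changes since the previous backup of any kind) and assumes each cycle uses a single kind of backup after the full one. The `restore_chain` function and the day labels are hypothetical.

```python
def restore_chain(backups):
    """Return the labels of the backup sets needed to restore the latest state.

    `backups` is a chronological list of (label, kind) pairs, where kind is
    "full", "differential", or "incremental".
    """
    full_positions = [i for i, (_, kind) in enumerate(backups) if kind == "full"]
    if not full_positions:
        raise ValueError("at least one full backup is required to restore")

    last_full = full_positions[-1]
    chain = [backups[last_full][0]]
    later = backups[last_full + 1:]

    differentials = [label for label, kind in later if kind == "differential"]
    incrementals = [label for label, kind in later if kind == "incremental"]

    if differentials:
        # Only the newest differential is needed; it already holds every
        # change made since the full backup.
        chain.append(differentials[-1])
    else:
        # Every incremental since the full backup is needed, in order.
        chain.extend(incrementals)
    return chain


differential_cycle = [("sun", "full"), ("mon", "differential"), ("tue", "differential")]
incremental_cycle = [("sun", "full"), ("mon", "incremental"), ("tue", "incremental")]

print(restore_chain(differential_cycle))
print(restore_chain(incremental_cycle))
```

The differential cycle needs two sets regardless of how many days have passed, whereas the incremental chain grows by one set per backup, which is the trade-off between storage cost and restore time.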

Backup Storage Locations

Related to the ability to restore backups and operate during a business disruption is the location of backups. A risk assessment and business requirements evaluation will help determine which location approach the organization should use. The correct choice depends on how much downtime the organization can accept, any pertinent regulatory requirements, and which solution or combination of solutions offers the best options regarding data availability.

The most secure locations for data storage during a catastrophic event are offsite locations, particularly because onsite data centers may be unusable or destroyed. There are three types of offsite configurations:

• Hot site A hot site is a computing environment that is almost identical to the onsite environment. This is the best choice for critical systems. To minimize disruption of services, the hot site runs concurrently with the main production environment. A hot site is the costliest of the location options, because it continuously operates and secures data assets. System backups at a hot site may occur via two main approaches. If the hot site is intended to serve as a high-availability solution, backups are continuous or arrive as a secondary data flow in real time; in this scenario, hot site systems provide a redundant capability to maintain uptime at a level close to 99.999 percent. Otherwise, and more commonly, the hot site receives daily backups so that it can be made operational in a very short amount of time, usually measured in a few hours. A good hot site would be located far enough away from the source site to be outside of any shared risks, such as earthquakes, hurricanes, and so on.

• Warm site Warm sites are locations with adequate equipment, such as hardware and software, that can be accessed fairly quickly when needed. The warm site normally has electricity, telecommunications, and networking infrastructure at a minimum. Backups are stored there in case they are needed for recovery. In the event of a disaster or cyber incident that causes disruption at the source site, the warm site would be brought to full power. There would be a short delay before critical systems return to production levels, because immediate recovery is not expected.

• Cold site The least costly option is a cold site. A cold site includes the bare minimum and may store nothing but backups, or backups could be transported or transferred to the cold site when required. A cold site would take the longest to power up and bring to production levels.
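To put the 99.999 percent uptime figure for a high-availability hot site in perspective, the short calculation below converts an availability percentage into the downtime it permits over a period. It is a worked-arithmetic sketch; the function name and the 365-day year are assumptions made for illustration.

```python
def allowed_downtime_minutes(availability_percent, period_days=365):
    """Return the minutes of downtime permitted at a given availability level."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - availability_percent / 100)


for level in (99.9, 99.99, 99.999):
    minutes = allowed_downtime_minutes(level)
    print(f"{level}% availability allows about {minutes:.2f} minutes per year")
```

At 99.999 percent, the permitted downtime is a little over five minutes in a full year, which helps explain why only a concurrently running hot site, rather than a warm or cold site, can realistically meet that target.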

Configuration, or Change Management

To help ensure integrity within the parameters of the CIA triad, organizations establish a configuration, or change management, process. This process is part of an organization’s overall information governance approach and a valuable security control measure to ensure consistency in how changes are made to the network, systems, and applications. The goal of change management is to establish standard procedures for managing changes efficiently to control risk and minimize disruption to IT services and business operations.

A change management process is a critical best practice that’s included in the Information Technology Infrastructure Library (ITIL) framework. ITIL recognizes the value of an efficient, organized methodology for taking new products or updates of existing products from design to operations without adding risk.13

In the realm of healthcare, a change management process may affect a laboratory department that, for example, wants to purchase a new information system for processing lab results. The new system would require network access and must interface with current systems, including the EHR. Imagine the result if the acquisition and implementation of this new system did not include a formal, management-level review of the operating system, interfacing requirements, physical installation environment, and other significant factors. Without a change management system in place to ensure that the new acquisition is consistent with existing systems, results could be disastrous: the new system might fail to be interoperable with existing systems, chiefly the EHR, or the system may not work at all.

Other considerations for change management could involve required changes to perimeter security defenses, such as border firewalls. If a networked system needed to communicate with external entities across the Internet, its data traffic would exit the organization’s network through the firewall via distinct ports using specific protocols. All traffic outside of the ports and protocols approved in the firewall’s access control list (ACL) would be blocked. Special-purpose computing systems, such as medical device systems, may require the use of a port not on the current ACL. To request or ultimately make changes to perimeter defenses, a formal change management process is required.
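The default-deny behavior of a firewall ACL, and the role of change approval in modifying it, can be sketched in a few lines. This is a conceptual model only and does not reflect any vendor's firewall syntax: the rule set, function names, and the approval flag are hypothetical, with port 104 (commonly associated with DICOM imaging traffic) used as the example medical device port.

```python
# Hypothetical approved (protocol, port) pairs; real rule sets are
# organization-specific and usually also constrain source and destination.
APPROVED_RULES = frozenset({("tcp", 443), ("tcp", 22)})


def is_permitted(acl, protocol, port):
    """Default deny: traffic passes only if its protocol and port are on the ACL."""
    return (protocol.lower(), port) in acl


def apply_change(acl, protocol, port, approved):
    """Return an updated ACL only when the change request was formally approved."""
    if not approved:
        return acl
    return acl | {(protocol.lower(), port)}


# A medical device needs a port that is not on the current ACL.
print(is_permitted(APPROVED_RULES, "tcp", 104))

# An unapproved request leaves the ACL unchanged; an approved one adds the rule.
unchanged = apply_change(APPROVED_RULES, "tcp", 104, approved=False)
updated = apply_change(APPROVED_RULES, "tcp", 104, approved=True)
print(is_permitted(unchanged, "tcp", 104))
print(is_permitted(updated, "tcp", 104))
```

Returning a new rule set instead of editing the existing one in place keeps the previous configuration available, which supports the rollback and audit expectations of a formal change management process.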

Incident Response

Incident response is effective as a corrective measure that occurs after a security incident. A successful incident response strategy can limit the duration and impact of an exploited vulnerability. Today, as data breaches seem almost inevitable no matter how well you implement security processes and controls, the one security control that may make the most difference is your incident response strategy. NIST SP 800-61 Rev. 2, Computer Security Incident Handling Guide, provides direction for establishing a solid incident response program that accomplishes detection, analysis, prioritization, and handling of security events, such as data breaches. According to Stroz Friedberg (Aon), a leading global cybersecurity service firm with specific expertise in incident response, “The way an organization responds can be the difference between exacerbating the reputational and financial damages from a breach, and mitigating them.”14 A solid incident response strategy will also account for significant regulatory requirements regarding notification, such as HIPAA and HITECH in the United States and, internationally, the European Union’s General Data Protection Regulation (GDPR), which replaced the Data Protection Directive (DPD).

Understanding Privacy Concepts

As an HCISPP, you are expected to have a solid understanding of privacy concepts. Some concepts are used independently of security controls. However, as the healthcare industry becomes ever more digitally based versus paper based, the integration of privacy and security controls also increases. The significance of privacy cannot be overstated. Worldwide, the emphasis on privacy as a human right can lead to enforcement under criminal law. In this section, we examine how various privacy frameworks incorporate privacy concepts. Another current aspect of privacy is the ability to provide privacy in the face of social media, public surveillance, and information sharing.

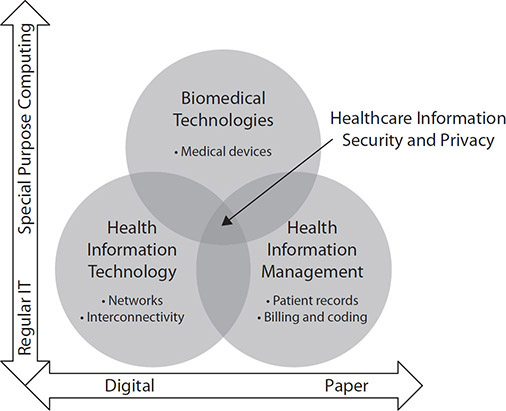

Within healthcare, the roles and responsibilities of those who are charged with protecting information converge around distinct roles that may or may not have previously involved working with digital or electronic information. Some roles originate from traditional privacy or legal roles in health information management, with a shift from paper-based information storage to digital storage; others come from IT support backgrounds, such as local area networking, application management, and end-user support, where new concerns over protected health information are relevant. Still others may come from the clinical engineering or biomedical technology professions, where the interconnectivity of medical devices to internal and external networks is rapidly evolving. Figure 5-7 depicts the intersection.

Figure 5-7 Convergence of healthcare competencies with information privacy and security responsibilities

For these previously distinct and somewhat separated communities, this section provides a primer in privacy compliance for those with stronger backgrounds in security, and in security compliance for those with stronger backgrounds in privacy. It offers guidance to those who have a responsibility for complying with information privacy in healthcare, as well as those with traditional privacy or legal compliance roles in healthcare, who have increasing roles in protecting digital information through information security management.

The following concepts and definitions are found in the leading privacy frameworks and regulations. Minor differences may exist, particularly where terms are combined to make one principle rather than two distinct principles in the framework. Refer back to Chapter 4 to review these terms in the context of the framework that advocates them.

US Approach to Privacy