CHAPTER 5

VIRTUAL ROOTKITS

Virtual computing, or virtualization, is a computing environment that simulates or acts like a real computing system, hence, the name virtual machine or virtual system. Most enterprises are moving into virtualization today because it makes their systems more agile and simpler to manage. Small and medium-size businesses are also finding virtualization cheaper than traditional hardware-based computer systems. It boils down to lowering operating costs. Even home users such as myself use virtualization for our computing needs.

The pace at which virtualization is being adopted has also made it very popular with attackers. Instead of malware avoiding a virtual system altogether out of fear that it is really a test machine designed to analyze malware, modern malware employs additional checks to determine whether the virtual environment is a test machine. If it is, then the malware simply stops executing and removes itself. And if it isn’t, then it continues with its directive.

Modern malware that makes its living in virtualization or on virtualized systems is called virtual rootkit malware, or, more simply, virtual rootkits. Virtual rootkits represent the bleeding edge of rootkit technology. Hardware and software support for virtualization has improved by leaps and bounds in recent years, paving the way for an entirely new attack vector for rootkits. The technical mechanisms that make virtualization work also lend the technology to subversion in stealthy ways not previously possible. To make matters worse, virtualization technology can be extremely complex and thus difficult to understand, making it challenging to educate users on the threat. One could say virtualization technology in its current state is a perfect storm.

To better understand the virtual rootkit threat, we’ll cover some of the broad technical details of how virtualization works and the most important components that are targeted by virtual rootkits. These topics include virtualization strategies, virtual memory management, and hypervisors. After covering the technology itself, we’ll discuss various virtual rootkit techniques, such as escaping from a virtual environment and even hijacking the hypervisor. We conclude the chapter with some in-depth analysis of three virtual rootkits: SubVirt, Blue Pill, and Vitriol.

Overview of Virtual Machine Technology

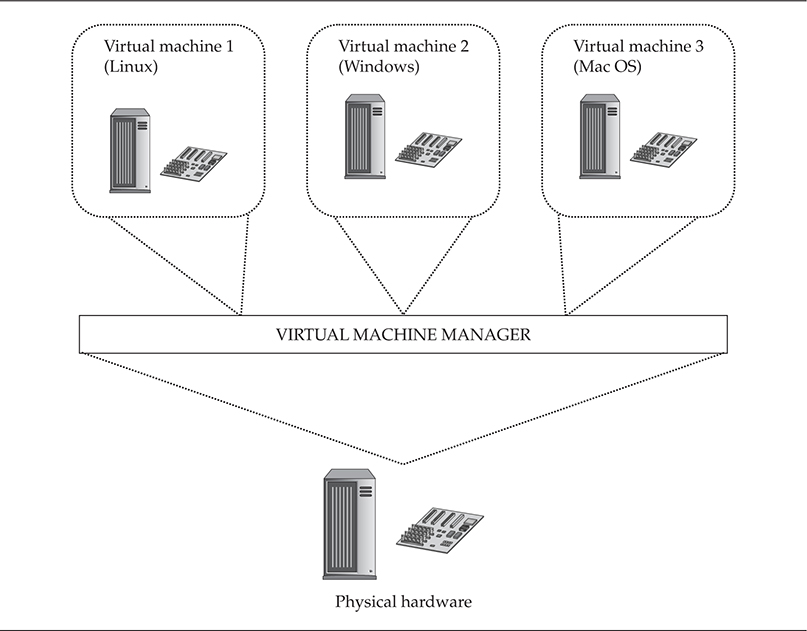

Virtualization technology has redefined modern computing for servers and workstations alike. Virtualization allows a single computer to share its resources among multiple operating systems executing simultaneously. Prior to virtualization, a computer was limited to running one instance of the operating system at a time (unless one counts mainframes as the first example of virtualization). This is a waste of resources because the underlying architecture is capable of supporting multiple instances simultaneously. An obvious benefit to this parallelization and sharing of resources is increased productivity in server environments, such as web and file servers. Since system administrators can now run multiple web servers on a single computer, they’re able to do more work with fewer resources. Virtualization’s parallel computing value also means fully utilizing and maximizing the hardware and computing capability of the physical machine. The virtualization market also extends to individual users’ personal computers, allowing them to multitask across several different types of operating systems (Linux, OS X, and so on). Figure 5-1 illustrates the concept of virtualization of system resources to run multiple operating systems.

Figure 5-1 Virtualization of system resources

There are two widely accepted classes of virtual machines: process virtual machines and system virtual machines (also called hardware virtual machines). We’ll briefly touch on process virtual machines but focus primarily on system virtual machines.

Types of Virtual Machines

The process virtual machine, also known as an application virtual machine, is normally installed on an OS and can virtually support a single process. Examples of the process virtual machine are the Java Virtual Machine and the .NET Framework. This type of virtual machine (VM) provides an execution environment (often called a sandbox) for the running process to use and manages system resources on behalf of the process.

Process virtual machines are much simpler in design than hardware virtual machines, the second major class of virtual machine technology. Rather than simply providing an execution environment for a single process, hardware virtual machines provide low-level hardware emulation for multiple operating systems, known as guest operating systems, to use simultaneously. This means the VM mimics x86 architecture, providing all of the expected hardware and assembly instructions. This emulation or virtualization can be implemented in “bare-metal” hardware (meaning on the CPU chip) or in software on top of an existing running operating system known as the host operating system. The operator of this emulation is known as the hypervisor (or virtual machine manager, VMM).

The Hypervisor

The hypervisor is the hardware virtual machine component that handles system-level virtualization for all VMs running on the host system. It manages the resource mapping and executions between the physical and virtual hardware, allowing two or more operating systems to share system resources. The hypervisor handles system resource sharing, virtual machine isolation, and all of the core responsibilities for the subordinate virtual machines. Each virtual machine inside a system virtual machine runs a complete operating system, for example, Windows 10 or Red Hat Enterprise Linux.

There are two types of hypervisors: Type I (native) and Type II (hosted). Type I hypervisors run directly on the system hardware, whereas Type II hypervisors are implemented in software on top of the host operating system. As seen in Figure 5-2, Type II hypervisors have kernel-mode components that sit on the same level as the operating system and handle isolating the virtual machines from the host operating system. These types of hypervisors provide hardware emulation services, so the virtual machines think they are working directly with the physical hardware. Type II hypervisors include well-known products such as VMware Workstation and Oracle VirtualBox.

Figure 5-2 Type II hypervisors

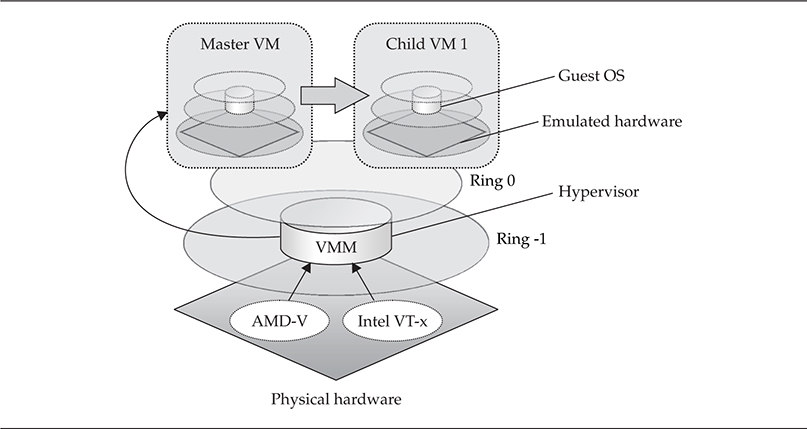

Type I hypervisors, illustrated in Figure 5-3, operate beneath the operating system in a special privilege level called ring –1. Another name for these hypervisors is bare-metal hypervisors because they rely on virtualization support provided in hardware by the manufacturer (in the form of special registers and circuits), as well as on special instructions in the CPU. Type I hypervisors typically are faster due to virtualization support embedded in the hardware itself. Examples of Type I hypervisors include Citrix XenServer, Microsoft Hyper-V, and VMware vSphere. Note that Figure 5-3 is a generic illustration of a Type I hypervisor. It is not representative of all Type I hypervisor implementations available, as details of specific vendor solutions would require dozens of illustrations.

The general idea of a Type I hypervisor is to compress the protection rings downward, so that Child VMs can execute on top of hardware just like the Master VM does. VM separation and system integrity are maintained by special communication between the hypervisor and the Master VM. As shown in Figure 5-3, the hypervisor is supported by hardware-level virtualization support from either Intel or AMD.

The hypervisor is the most important component in virtualization technology. It runs beneath all of the individual guest operating systems and ensures system integrity. The hypervisor must literally maintain the illusion so the guest operating systems think they’re interacting directly with the system hardware. This requirement is critical for virtualization technology and also the source of much controversy and debate in the computer security world.

Many debates and discussions have arisen around the inability of the hypervisor to remain transparent and segregated from the subordinate operating systems. The hypervisor cannot possibly maintain a complete virtualization illusion, and the guest operating system (or installed applications) will always be able to determine if it is in a virtual environment. This remains true despite efforts by AMD and Intel to provide the hypervisor with capabilities to better hide itself from some of the detection techniques released by the research community (such as timing attacks and specialized CPU instructions).

Virtualization Strategies

Three main virtualization strategies are used by most virtualization technologies available today. These strategies differ fundamentally in their integration with the operating system and underlying hardware.

The first strategy is known as virtual machine emulation and requires the hypervisor to emulate real and virtual hardware for the guest operating systems to use. The hypervisor is responsible for “mapping” the virtual hardware that the guest operating system can access to the real hardware installed on the computer. The key requirement with emulation is ensuring that the necessary privilege level is available and validated when guest operating systems need to use privileged CPU instructions. This arbitration is handled by the hypervisor. Products that use emulation include VMware, Bochs, Parallels, QEMU, and Windows Virtual PC. A critical point here is this strategy requires the hypervisor to “fool” the guest operating systems into thinking they are using real hardware.

The second strategy is known as paravirtualization. This strategy relies on the guest operating system itself being modified to support virtualization internally. This removes the requirement for the hypervisor to “arbitrate” special CPU instructions, as well as the need to “fool” the guest operating systems. In fact, the guest operating systems realize they’re in a virtual environment because they’re assisting in the virtualization process. Popular products that implement this strategy are Xen and Oracle VirtualBox.

The final strategy is OS-level virtualization, in which the operating system itself completely manages the isolation and virtualization. It essentially makes multiple copies of itself and then isolates those copies from each other. The best example of this technique is Oracle Solaris Zones.

Understanding these three strategies is important to appreciating how virtual rootkits take advantage of the complexities involved with each implementation strategy. Keep in mind these are just the popular virtualization strategies. Many other strategies exist in the commercial world, in the research community, and in classified government institutions. Each one of those implementations brings its own unique strengths and weaknesses to the virtual battlefield.

Virtual Memory Management

An important example of the hypervisor’s responsibility to abstract physical hardware into virtual usable hardware is found in virtual memory management. Virtual memory is not a concept unique to virtualization. All modern operating systems take advantage of abstracting physical memory into virtual memory, so the system can support scalable multiprocessing (run multiple processes at once). For example, all processes running on 32-bit, non-PAE Windows NT–based platforms are assigned 2GB of virtual memory. However, the system may only have 512MB of physical RAM installed on the system. To make this “overbooking” feasible, the operating system’s memory manager handles translating a process’s virtual address space to a physical address in conjunction with a page file that is written to disk. In the same vein, the hypervisor must translate the underlying physical addresses used by guest operating systems to real physical addresses in the hardware. Thus, there is an additional layer of abstraction when managing memory.
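The extra layer of abstraction described above can be pictured as two table lookups in sequence. The following is a minimal sketch, assuming simple single-level page tables represented as dictionaries and 4KB pages; the table contents and function names are hypothetical and do not reflect how any particular hypervisor stores its mappings.

```python
PAGE_SIZE = 4096  # 4KB pages, a common x86 page size


def translate(page_table, address):
    """Map an address through one page table (page number -> frame number)."""
    page, offset = divmod(address, PAGE_SIZE)
    if page not in page_table:
        raise LookupError(f"page fault at {address:#x}")
    return page_table[page] * PAGE_SIZE + offset


def guest_virtual_to_host_physical(guest_table, hypervisor_table, guest_virtual):
    """Apply the guest OS mapping first, then the hypervisor mapping."""
    # Stage 1: the guest OS translates a process address to what it
    # believes is a physical address.
    guest_physical = translate(guest_table, guest_virtual)
    # Stage 2: the hypervisor translates that address to real hardware.
    return translate(hypervisor_table, guest_physical)


# Hypothetical mappings, for illustration only.
guest_page_table = {0x10: 0x2}        # guest virtual page 0x10 -> guest frame 0x2
hypervisor_page_table = {0x2: 0x7F}   # guest frame 0x2 -> host frame 0x7F

host_address = guest_virtual_to_host_physical(
    guest_page_table, hypervisor_page_table, 0x10ABC
)
print(hex(host_address))
```

The guest never sees the second table, which is why a guest address that looks “physical” to the guest OS is still one step removed from the hardware.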

The virtual memory manager is a critical component of virtualization design, and different vendors take varying approaches to managing system memory. VMware isolates the memory address spaces for the host operating system from those belonging to the guest operating system, so the guest cannot ever touch an address in the host. Other solutions utilize a combination of hardware and software solutions to manage memory allocation. In the end, these solutions amount to a limited defense against advanced rootkits that are able to violate the isolation between guest and host operating systems.

Virtual Machine Isolation

Another of the hypervisor’s critical responsibilities is to isolate the guest operating systems from each other. Any files or memory space used by one virtual machine should not be visible to any other virtual machine. The sum of techniques and components used to achieve this separation is known as virtual machine isolation. The hypervisor runs beneath all of the guest operating systems and/or on bare hardware, so it is able to intercept requests to system resources and control visibility. Virtual memory management and I/O request mediation are necessities for isolating VMs, along with instruction set emulation and controlling privilege escalation (such as SYSENTER, call gates, and so on).

VM isolation also implies isolating the guest operating systems from the host operating system. This separation is critical for integrity, stability, and performance reasons. It turns out virtualization technology is pretty good at protecting individual guest operating systems from each other. As you’ll see shortly, this is not the case for protecting the underlying host operating system from its subordinate guest operating systems. As was stated earlier, within the industry it is widely accepted that virtualization technology has failed to maintain the boundary between the “real world” and the “virtual world.”

Virtual Machine Rootkit Techniques

We’ll now shift our focus from virtualization technology itself to how this technology is exploited by three classes of virtual rootkits. First, let’s take a quick trip through history to see how malware has evolved to this point.

Rootkits in the Matrix: How Did We Get Here?!

Virtualization technology has become the staging ground for an entire new generation of malware and rootkits. In Chapter 4, we covered the taxonomy of malware defined by Joanna Rutkowska. To facilitate the discussion of how malware and rootkits have evolved and overcome threats, we’ll generalize Joanna’s model to describe the sophistication level of the four types of malware as it applies to rootkits:

• Type 0/user-mode rootkits Not sophisticated

• Type I/static kernel-mode rootkits Moderately sophisticated but easily detected

• Type II/dynamic kernel-mode rootkits Sophisticated but always on a level playing field with detectors

• Type III/virtual rootkits Highly sophisticated and constantly evolving battleground

The trend we are seeing with rootkits and malware in general (and as evidenced by the malware taxonomy just described) is that as technology becomes more sophisticated, so do offensive and defensive security measures. This means that defenders have to work harder to detect rootkits, and rootkit authors have to work harder to write more sophisticated rootkits. Part of this struggle is a result of increasing complexity in technology convergence (i.e., virtualization), but it is also a direct result of the constant battle between malware authors and computer defenders. In the taxonomy, each type of malware can be thought of as a generation of malware that grew out of needing to find a better infection method. Rootkit authoring and detection technologies have now found their home in virtualization.

And so the war continues to be waged. An analogy was made between this escalation of rootkit technologies into the virtualization world and the struggle of humankind for true awareness in the movie The Matrix. In 2006, Joanna Rutkowska released a virtual rootkit known as Blue Pill. The rootkit was named after the blue pill in the movie, which Morpheus offers to Neo when he’s facing the decision either to reenter The Matrix (remain ignorant of the real world) or to take the red pill to escape the virtual world and enter the real world (Joanna also released a tool to detect virtual environments, aptly named the Red Pill). The analogy is that the victim operating system “swallows the blue pill,” that is, the virtual rootkit, and is now inside the “matrix,” which is controlled by the virtual machine. Consequently, the Red Pill is able to detect the virtual environment. The analogy falls short, however, because the tool does not actually allow the OS to escape from the VM as the red pill allows Neo to escape The Matrix.

Although the security implications of virtualization had been studied for some time, Joanna’s research helped raise the issue to the mainstream research community, and a number of tools and papers have since been released.

What Is a Virtual Rootkit?

A virtual rootkit is a rootkit that is coded and designed specifically for virtualization environments. Its goal is the same as the traditional rootkits we have discussed so far in this book (i.e., gain persistence on the machine using stealthy tactics), and the components are largely the same, but the technique is entirely different. The primary difference is the rootkit’s target has shifted from directly modifying the operating system to subverting it transparently inside a virtual environment. In short, the virtual rootkit contains functionality to detect and optionally escape from the virtual environment (if it is deployed within one of the guest VMs), as well as completely hijack the native (host) operating system by installing a malicious hypervisor beneath it.

The virtual rootkit moves the battlefield from being at the same level as the operating system to being beneath the operating system (hence, it is Type III malware as discussed earlier). Whereas traditional rootkits must determine stealthy ways to alter the operating system without its knowledge (and without triggering third-party detection tools), the virtual rootkit achieves its goals without having to touch the operating system at all. It leverages virtualization support in hardware and software to insert itself beneath the operating system.

Types of Virtual Rootkits

For the purposes of this book, we will define three classes of virtual rootkits (the last two definitions in the list have already been defined by other security researchers in the community):

• Virtualization-aware malware (VAM) This is your “common” malware that has added functionality to detect the virtual environment and behave differently (terminate, stall) or attack the VM itself.

• Virtual machine-based rootkits (VMBRs) This is a traditional type of rootkit that has the ability to envelop the native OS inside a VM without its knowledge; this is achieved by modifying existing virtualization software.

• Hypervisor virtual machine (HVM) rootkits This rootkit leverages hardware virtualization support to replace the underlying hypervisor completely with its own custom hypervisor and then envelop the currently running operating systems (hosts and guests) on-the-fly.

Virtualization-aware malware is more of an annoyance than a real threat. This type of malware simply alters its behavior when a virtual environment is detected, for example, terminating its own process or just stalling execution as if it has no malicious intent. Many common viruses, worms, and Trojans fall into this category. The goal of this polymorphic behavior is primarily to fool analysts who use virtualization environments to analyze malware in a sandboxed environment. By behaving benignly when a virtual machine is detected, the malware can slip past unsuspecting analysts. This technique is easily overcome using debuggers, as the analyst is able to disable the polymorphic behavior and uncover the malware’s true capabilities. Honeypots are also commonly used to sandbox malware and to condense resources to run multiple “light” VMs; thus, they are often both intentionally and unintentionally targeted by virtualization-aware malware.

VMBRs were first defined by Tal Garfinkel, Keith Adams, Andrew Warfield, and Jason Franklin at Stanford University (http://www.cs.cmu.edu/~jfrankli/hotos07/vmm_detection_hotos07.pdf) and comprise a class of virtual rootkits that are able to move the host OS into a VM by altering the system boot sequence to point to the rootkit’s hypervisor, which is loaded by a stealthy kernel driver in the target OS. The example illustrated in this book is SubVirt, which requires a modified version of VMware or Virtual PC to run. The operating systems themselves (Windows XP and Linux) were also modified to make the proof-of-concept rootkit. The VMBR is more sophisticated in design and capability than VAM, but it still lacks the autonomy and extreme stealthiness of HVM rootkits. It also has flaws inherent to the lack of full virtualization in native x86 architecture. This means a laundry list of CPU instructions (sgdt, sidt, sldt, str, popf, movm, just to name a few) is not trapped by the hypervisor and can be executed in user mode to detect the VMBR. Because these instructions are not considered privileged by Intel, the CPU does not natively trap them. Therefore, emulation software (such as a VMBR) cannot intercept the very instructions that can reveal its presence.

HVM rootkits were the most advanced virtual rootkits known at that time. They are capable of installing a custom, super-lightweight hypervisor that hoists the native OS into a VM on-the-fly (that is, transparently to the OS itself)—and does so with utmost stealth. The HVM virtual rootkit relies on hardware virtualization support provided by AMD and Intel to achieve its goals. The hardware support comes in the form of additional CPU-level instructions that can be executed by software (such as the OS or an HVM rootkit) to quickly and efficiently set up a hypervisor and run guest operating systems in an isolated virtual environment.

The argument in the security community is whether the host operating system (or underlying hypervisor) can detect this subversion (or whether detection is even relevant, assuming everything may be virtualized in the near future). Now, we’ll discuss how virtual malware can detect and escape the virtual environment.

Detecting the Virtual Environment

Detecting a virtual environment is an important capability for both malware and malware detectors. Think about it: If you were in “the matrix,” wouldn’t you want to know about it? You might just rethink how you act if you knew someone was stealthily watching your every move.

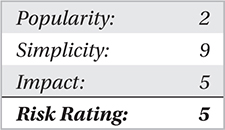

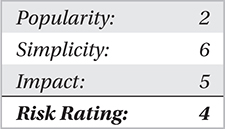

The risk ratings in this chapter approximate the likelihood that the discussed techniques are used by malware in the wild, even though a technique itself (such as VM breakout) may not really be an attack. Because virtual rootkits are rarely seen in the wild, the risk ratings are very low.

Detecting VM Artifacts

Because virtual machines use system resources, they leave traces of their existence in locations throughout the system. The authors of “On the Cutting Edge: Thwarting Virtual Machine Detection” (http://handlers.sans.org/tliston/ThwartingVMDetection_Liston_Skoudis.pdf) describe four main areas to examine for indicators of a virtual environment:

• Artifacts in processes, the file system, and the registry, for example, the VMware hypervisor process.

• Artifacts in system memory. OS structures normally loaded at certain locations in memory are loaded in different locations when virtualized; strings present in memory indicate a virtual hypervisor is running.

• The presence of virtual hardware used by virtual machines, such as VMware’s virtual network adapters and USB drivers.

• CPU instructions specific to virtualization, for instance, nonstandard x86 instructions added to increase virtualization performance, such as Intel VT-x’s VMXON/VMXOFF.

By searching for any of these artifacts, malware and VM detectors alike can discover that they are inside a virtual environment.
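As a rough illustration of the first and third areas in the list above, the sketch below checks a list of process names and network adapter MAC addresses against a small set of indicators. It is a minimal sketch, assuming the caller has already collected the process and adapter inventory; the function name is hypothetical, and the indicator sets are short illustrative samples (guest-tool process names and VMware-assigned MAC prefixes), not a complete signature database.

```python
# Illustrative indicator sets; real tools use much larger, version-specific lists.
VM_PROCESS_NAMES = {"vmtoolsd.exe", "vmwaretray.exe", "vboxservice.exe"}
VMWARE_MAC_PREFIXES = {"00:05:69", "00:0c:29", "00:1c:14", "00:50:56"}


def find_vm_artifacts(process_names, mac_addresses):
    """Return a list of indicators found in the supplied inventory."""
    hits = []
    for name in process_names:
        # Process names are compared case-insensitively.
        if name.lower() in VM_PROCESS_NAMES:
            hits.append(f"process:{name}")
    for mac in mac_addresses:
        # Accept both 00-0C-29-... and 00:0c:29:... notations.
        normalized = mac.lower().replace("-", ":")
        if normalized[:8] in VMWARE_MAC_PREFIXES:
            hits.append(f"mac:{mac}")
    return hits


# Hypothetical inventory, for illustration only.
found = find_vm_artifacts(
    ["explorer.exe", "vmtoolsd.exe"],
    ["00-0C-29-AA-BB-CC", "3c:22:fb:00:00:01"],
)
print(found)
```

An empty result proves little, since artifacts of this kind are easy to rename or hide; that is one reason later tools moved to instruction-level checks.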

VM Anomalies and Transparency

Although detection methods are useful, the fundamental issue under scrutiny here is how a virtual machine fails to achieve transparency. A fundamental goal of virtualization technology is to emulate the underlying hardware transparently. In other words, the guest operating systems should not realize, for performance and abstraction reasons, that they are in a virtual environment. However, although transparency is a goal for performance reasons, the actual goal is to achieve good enough transparency such that performance is not impacted by the emulation. In other words, a virtual machine was never intended to be, and arguably cannot be, completely transparent.

And here’s the pinch: because virtualization technology itself is detectable, any malware or detection utility that employs or relies on the technology will be detectable to its adversary. Thus, it is a lose-lose situation for both detectors and malware: if a detector uses a VM to analyze malware, the malware can always detect that it is in a VM; likewise, if a virtual rootkit tries to trap a host operating system in a virtual environment by installing its own hypervisor, the host OS will always be able to detect the change.

So what are the underlying indicators of a virtual environment? Many of these indicators relate to design issues—such as how to emulate a certain CPU instruction—but most are an artifact of physical limitations and performance issues inherent to the hypervisor acting as a proxy between “real hardware” and “virtual hardware.”

A very good summary of these virtualization anomalies—logical discrepancies, resource discrepancies, and timing discrepancies—is provided in “Compatibility Is Not Transparency: VMM Detection Myths and Realities,” by Garfinkel et al. (https://www.usenix.org/legacy/event/hotos07/tech/full_papers/garfinkel/garfinkel.pdf).

Logical discrepancies are implementation differences between the true x86 instruction set architecture of the CPU manufacturer (Intel, AMD, and so on) and the virtualization provider such as VMware or Virtual PC. The discrepancy here is simple: to emulate the hardware, companies like VMware and Microsoft have to “reinvent the wheel,” and they don’t always get it just right, and they go about it in different ways. Thus, distinguishing their implementations from true x86 support is not hard. One example is the limited ability of VMs to emulate the x86 SIDT instruction faithfully.

Resource discrepancies are evident in a virtual environment simply because the virtual machine and hypervisor must consume resources themselves. These discrepancies manifest primarily in the CPU caches, main memory, and hard drive space. A common VM detection technique involves benchmarking the storage requirements of a nonvirtualized environment and using any deviations as indicators of a virtual environment.

The same technique holds for the third VM anomaly, timing discrepancies. Under nonvirtualized operating constraints, certain system instructions execute in a predictable amount of time. When emulated, those same instructions take only fractions of a second longer, but the discrepancy is still easily detectable. An example from Garfinkel et al.’s paper mentioned previously is the intrinsic performance hit on the system due to the hypervisor’s virtual memory manager processing an increased number of page faults. These page faults are a direct result of management overhead by the virtual machine to implement such critical features as VM isolation (i.e., protecting the memory space of the host OS from the guest OS). A well-known timing attack to detect VMs involves executing two x86 instructions in parallel (CPUID and NOP) and measuring the divergence of execution times over a period of time. Most VM technologies fall into predictable ranges of divergence, whereas nonvirtualized environments do not.
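The comparison at the heart of such a timing check can be sketched independently of how the timings are collected. The following is a minimal sketch, assuming two lists of cycle counts have already been gathered (one for an instruction the hypervisor must intercept, one for a cheap baseline instruction); the ratio of medians stands in for “divergence,” and the threshold of 10 and all sample numbers are made-up illustrations, not measurements.

```python
from statistics import median


def timing_ratio(trapping_samples, baseline_samples):
    """Ratio of median cost: intercepted instruction vs. baseline instruction."""
    return median(trapping_samples) / median(baseline_samples)


def looks_virtualized(trapping_samples, baseline_samples, threshold=10.0):
    """Flag the environment when the ratio exceeds a hypothetical threshold."""
    return timing_ratio(trapping_samples, baseline_samples) > threshold


# Synthetic cycle counts, for illustration only.
baseline = [100, 102, 98, 101, 99]
native_run = [200, 210, 190, 205, 195]          # modest overhead
emulated_run = [2500, 2600, 2400, 2550, 2450]   # each call detours through a hypervisor

print(looks_virtualized(native_run, baseline))
print(looks_virtualized(emulated_run, baseline))
```

Using the median rather than the mean keeps a single interrupt or cache miss from skewing the result; a real detector would also need a calibrated threshold for the hardware in question.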

Now we’ll explore some tools that can be used to detect the presence of a virtual environment. Unless otherwise noted, these tools only detect VMware and Virtual PC VMs. For a more comprehensive list of detection methodologies for other VMs, including Parallels, Bochs, Hydra, and many others, see http://www.symantec.com/avcenter/reference/Virtual_Machine_Threats.pdf.

Red Pill by Joanna Rutkowska: Logical Discrepancy Anomaly Using SIDT

The Red Pill was released by Joanna Rutkowska in 2004 after observing some anomalies in testing the SuckIt rootkit inside VMware versus on a “real” host. As it turns out, the rootkit (which hooked the IDT) failed to load in VMware because of how VMware handled the SIDT (store IDT) x86 instruction. Because multiple operating systems could be running in a VM, and there was only one IDT register to store the IDT when the SIDT instruction was issued, the VM had to swap the IDTs out and store one of them in memory. Although this broke the rootkit’s functionality, it happened to reveal one of the many implementation quirks in VMs that made them easily detectable; hence, Red Pill was born.

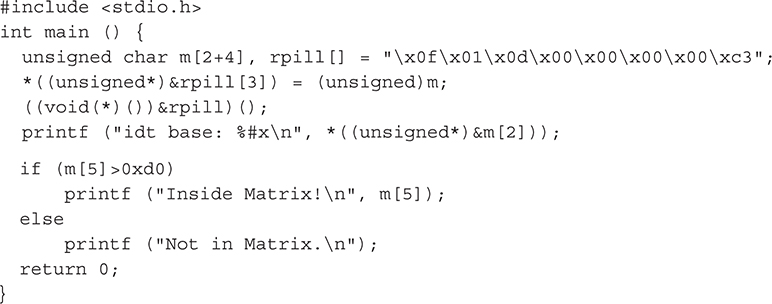

Red Pill issued the SIDT instruction inside a VM and tested the returned address of the IDT against known values for Virtual PC and VMware Workstation. Based on the return value, Red Pill could detect if it was inside a VM. The following code is the entire program in C:

Note the SIDT instruction was included as hex opcodes (the byte representation of CPU instructions and operands) in the source code to increase its portability. To have the compiler generate the opcodes instead, you would simply use inline assembly code (e.g., MOV eax,4) rather than raw opcodes.
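The decision Red Pill makes on the SIDT result can be sketched in a few lines. The following is a Python rendering of that logic only, not Rutkowska’s C program: it assumes the 6 bytes that SIDT stores in 32-bit mode (a 2-byte limit followed by a 4-byte base address) have already been captured, and it applies the 0xd0 high-byte comparison mentioned in the Nopill discussion later in this chapter. The function names and sample byte strings are hypothetical.

```python
import struct

IDT_HIGH_BYTE_THRESHOLD = 0xD0  # comparison value taken from the chapter text


def parse_sidt(raw):
    """Split a 6-byte SIDT result into (limit, base), little-endian."""
    if len(raw) != 6:
        raise ValueError("SIDT stores exactly 6 bytes in 32-bit mode")
    limit, base = struct.unpack("<HI", raw)
    return limit, base


def redpill_verdict(raw):
    """Return True when the IDT base looks relocated, as inside a VM."""
    _, base = parse_sidt(raw)
    # Only the most significant byte of the base address is examined.
    return (base >> 24) > IDT_HIGH_BYTE_THRESHOLD


# Hypothetical captured values, for illustration only.
native_sample = struct.pack("<HI", 0x07FF, 0x8003F400)
vm_sample = struct.pack("<HI", 0x07FF, 0xFFC18000)

print(redpill_verdict(native_sample))
print(redpill_verdict(vm_sample))
```

Because the verdict rests on a single byte compared against a fixed value, it inherits the multiprocessor weakness that later tools set out to address.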

Nopill by Danny Quist and Val Smith (Offensive Computing): Logical Discrepancy Anomaly Using SLDT

Not long after the Red Pill was released, two researchers at Offensive Computing noted some grave limitations with its approach and released a whitepaper with improved proof-of-concept code called Nopill. Namely, the SIDT approach failed on multicore and multiprocessor systems because each processor had an IDT assigned to it and the resulting byte signatures could vary drastically (thus, the two hardcoded values Red Pill used were unreliable). Red Pill also had difficulties with false positives on nonvirtualized, multiprocessor systems.

Their improvement was to use the x86 Local Descriptor Table (LDT), a per-process data structure used for memory access protections, to achieve the same goal. By issuing the SLDT x86 instruction, Nopill was able to more reliably detect VMs on multiprocessor systems. The signature used by Nopill was based on the fact that the Windows OS did not utilize LDT (thus its location would be 0x00) and GDT structures, but VMware had to provide virtual support for them anyway. Thus, the location of each structure would vary predictably on a virtualized system versus a nonvirtualized system. The code for Nopill is shown here:

As shown in the source code, Nopill actually read all of the IDT, GDT, and LDT structures but only considered the LDT for VM detection. For the IDT and GDT structures, Nopill issued the appropriate x86 instruction to store the table information in a memory location (SIDT or SGDT) and then examined the resulting table address to see if it was greater than the magic address location 0xd0 (shown to be the predictable location of the relocated table in virtual environments). It then read the LDT to determine if the code was executing in a VM. If the address of the LDT was not 0x00 for both entries, then a VM must have relocated the table because Windows does not use the LDT (and thus it would be 0x00).
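The flow just described can be approximated as follows. This is a minimal Python sketch of the decision logic, not the Nopill source: it assumes the raw bytes stored by SIDT, SGDT, and SLDT have already been captured, and it reads “not 0x00 for both entries” as “both LDT bytes are nonzero,” which is an interpretation. The function names and sample values are hypothetical.

```python
import struct

RELOCATION_THRESHOLD = 0xD0  # "magic" high-byte value from the chapter text


def table_base(raw):
    """Extract the 4-byte base address from a 6-byte SIDT/SGDT result."""
    _, base = struct.unpack("<HI", raw)
    return base


def nopill_report(idt_raw, gdt_raw, ldt_raw):
    """Examine all three tables, but base the VM verdict on the LDT alone."""
    return {
        "idt_relocated": (table_base(idt_raw) >> 24) > RELOCATION_THRESHOLD,
        "gdt_relocated": (table_base(gdt_raw) >> 24) > RELOCATION_THRESHOLD,
        # Assumption: a VM is reported only when both LDT bytes are nonzero.
        "in_vm": ldt_raw[0] != 0x00 and ldt_raw[1] != 0x00,
    }


# Hypothetical captured values, for illustration only.
native = nopill_report(
    struct.pack("<HI", 0x07FF, 0x8003F400),
    struct.pack("<HI", 0x03FF, 0x8003F000),
    bytes([0x00, 0x00]),
)
guest = nopill_report(
    struct.pack("<HI", 0x07FF, 0xFFC18000),
    struct.pack("<HI", 0x03FF, 0xFFC07000),
    bytes([0x58, 0x40]),
)
print(native)
print(guest)
```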

ScoopyNG by Tobias Klein (Trapkit): Resource and Logical Discrepancies

From 2006 onward, Tobias Klein of the Trapkit.de website released a series of tools to test various detection methods. These tools—Scoopy, Scoopy Doo, and Jerry—were streamlined into a single tool called ScoopyNG in 2008.

Scoopy Doo originally looked for basic resource discrepancies present in VMware virtual environments by searching for known VMware-issued MAC addresses and other virtual hardware. However, this proved to be less reliable than assembly-level techniques, so it was discontinued.

The ScoopyNG tool uses seven different tests (from multiple researchers) to determine whether the code is being run inside a VM. It detects VMware VMs on single-processor and multiprocessor systems and uses the following techniques:

• Test 1 In VM if IDT base address is at known location.

• Test 2 In VM if LDT base address is not 0x00.

• Test 3 In VM if GDT base address is at known location.

• Test 4 In VM if STR MEM instruction returns 0x00,0x40.

• Test 5 In VM if special assembly instruction 0x0a (version) returns VMware magic value 0x564D5868 (ASCII VMXh).

• Test 6 In VM if special assembly instruction 0x14 (memsize) returns VMware magic value 0x564D5868 (ASCII VMXh).

• Test 7 In VM if an exception test triggers a VMware bug.

Tests 1–3 are well known and have been covered already. Test 4 was based on research by Alfredo Andres Omella of S21Sec in 2006 (http://charette.no-ip.com:81/programming/2009-12-30_Virtualization/www.s21sec.com_vmware-eng.pdf). It issues an x86 instruction called store task register (STR) and examines the returned segment selector, which points to the task state segment (TSS). Omella noticed that the returned value differed between virtualized and nonvirtualized environments. Although this check is not portable across multicore and multiprocessor environments, it is another test to add to the growing list of assembly instructions that reveal implementation defects.

Tests 5 and 6 were based on research by Ken Kato (http://www.tdeig.ch/visag/Backdoor.pdf) into VMware “I/O backdoors” used by the VM to allow guest and host operating systems to communicate with each other (e.g., for copy and paste). Remember, these ports are not real; they are virtual ports. One such port was found at 0x5658. In the following code, the port is queried with various parameters and the results checked against known magic values (which are simply unique values that identify the existence of the product by their presence):

The x86 instruction IN is trapped by the VM and emulated to perform the operation. It reads the parameters to the IN instruction, located inside the EAX (magic value VMXh) and ECX (operand 0x14 means get memory size) registers, and then returns a value. If the returned value matches VMXh, then the code is executing inside a VM.
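To make the request and response shape concrete, here is a small Python model of the backdoor query. Python cannot execute the IN instruction, so the port is represented by a hypothetical callable (`port_in`) that receives the register values and returns the value the check inspects. The two fake ports are stand-ins for a VMware guest and for hardware with no backdoor; only the magic value, the port number, and the two command numbers come from the text.

```python
VMWARE_MAGIC = 0x564D5868   # ASCII "VMXh"
VMWARE_PORT = 0x5658        # backdoor I/O port
CMD_GET_VERSION = 0x0A      # Test 5
CMD_GET_MEMSIZE = 0x14      # Test 6


def magic_as_ascii(value):
    """Render the 32-bit magic value as its four ASCII characters."""
    return value.to_bytes(4, "big").decode("ascii")


def backdoor_present(port_in, command):
    """Issue one modeled backdoor query and compare the reply to the magic."""
    registers = {"eax": VMWARE_MAGIC, "ecx": command, "edx": VMWARE_PORT}
    try:
        reply = port_in(registers)
    except PermissionError:
        # Modeled stand-in for the fault raised on bare metal when
        # unprivileged code touches an I/O port.
        return False
    return reply == VMWARE_MAGIC


def fake_vmware_port(registers):
    """Hypothetical guest: answers only well-formed requests."""
    if registers["eax"] == VMWARE_MAGIC and registers["edx"] == VMWARE_PORT:
        return VMWARE_MAGIC
    return 0


def fake_bare_metal_port(registers):
    """Hypothetical native machine: the port access is refused."""
    raise PermissionError("I/O port access denied")
```

Calling `backdoor_present(fake_vmware_port, CMD_GET_MEMSIZE)` follows the same path as Test 6: load the registers, perform the trapped read, and compare the result with VMXh.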

The final test, Test 7, was based on research by Derek Soeder of eEye (http://eeyeresearch.typepad.com/blog/2006/09/another_vmware_.html). This test was based on advanced architecture concepts, but in a nutshell, it relies on a bug in VMware that incorrectly handles a CPU protection fault. In short, the emulation is incorrect and issues execution transfer before the fault is issued. A “real” processor would issue the fault first. Thus, Test 7 causes a fault to occur and then checks the resulting CPU register values for evidence of the bug.

Vrdtsc by Bugcheck: Timing Discrepancies

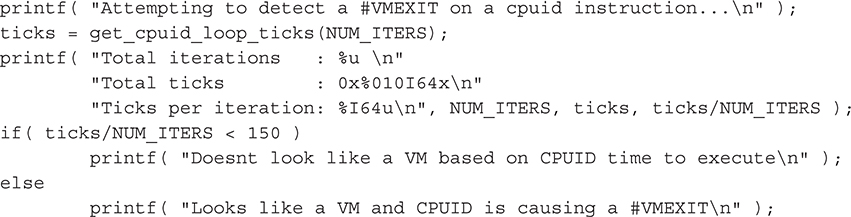

The tool Vrdtsc, written by the Canadian researcher Bugcheck in 2006, performs various timing tests to see if the code is executing in a virtual environment. This tool works against hardware-assisted virtual environments, such as Intel’s VT-x technology. The tool performs two tests using two different Intel instructions: CPUID and RDTSC (read time-stamp counter).

The first test issues 10 million CPUID instructions and measures how many processor ticks (units of time at the processor level) the requests took. On a nonvirtualized machine, each request should take roughly 50–150 ticks, but in a VM with Intel VT-x hardware support, each would take roughly 5000–8000 ticks. The code is shown here:

The second test issues the RDTSC instruction 10 million times, computes the elapsed time from the tick counts, and also records the start and end times using the time() function. If the total execution time is greater than 10 seconds, or the RDTSC instruction takes more than 15 ticks (the time it would take on a nonvirtualized machine), the tool reports that it is inside a VM.
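The two timing checks reduce to simple threshold comparisons, which the following Python sketch models. It is not the Vrdtsc source, and Python cannot issue CPUID or RDTSC directly, so `average_cost_ns` only shows the measurement pattern (time many repetitions, then divide) using wall-clock nanoseconds instead of processor ticks. The 15-tick and 10-second limits come from the text. The CPUID cutoff of 1000 ticks is a hypothetical value placed between the two ranges quoted above.

```python
import time

CPUID_VM_CUTOFF_TICKS = 1000   # hypothetical: between 50-150 and 5000-8000
RDTSC_NATIVE_MAX_TICKS = 15    # from the text
MAX_TOTAL_SECONDS = 10         # from the text


def cpuid_test_reports_vm(avg_ticks):
    """First test: CPUID is far slower when every call exits to a hypervisor."""
    return avg_ticks > CPUID_VM_CUTOFF_TICKS


def rdtsc_test_reports_vm(avg_ticks, total_seconds):
    """Second test: flag a VM if either the tick or wall-clock limit is exceeded."""
    return total_seconds > MAX_TOTAL_SECONDS or avg_ticks > RDTSC_NATIVE_MAX_TICKS


def average_cost_ns(operation, iterations):
    """Average wall-clock cost of one call to `operation`, in nanoseconds."""
    start = time.perf_counter_ns()
    for _ in range(iterations):
        operation()
    return (time.perf_counter_ns() - start) / iterations
```

Averaging over millions of iterations is what makes the approach workable: a single measurement is too noisy, but the cost of trapping into a hypervisor on every instruction shows up clearly in the mean.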

At this point, we have looked at numerous methods to detect the presence of a virtual machine. It should be obvious by now that this issue has been researched enough to conclusively prove true transparency in a virtual environment is impossible. We’ll now turn our attention to how rootkits escape the virtual environment.

Escaping the Virtual Environment

Once a piece of malware has detected that it is trapped in a virtual environment, it may want to escape into the host operating system rather than simply terminate its process. Typically, escaping the VM requires using an exploit to cause a service or the entire VM itself to crash, resulting in the malware escaping the virtual cage. One such example is a directory traversal vulnerability in VMware file-sharing services that causes the service to provide root-level directory access to the host OS file system (http://www.coresecurity.com/content/advisory-vmware). A directory traversal attack is a well-known technique in the world of penetration testing, in which unauthorized access to a file or folder is obtained by leveraging a weakness in an application’s ability to interpret user input. A thorough testing of VM stability through fuzzing techniques is presented in a paper by Tavis Ormandy (http://taviso.decsystem.org/virtsec.pdf). Fuzzing is a testing technique, also used in penetration testing, that supplies malformed input to an application in order to uncover flaws that could lead to unauthorized system access.

However, perhaps the most abused feature of VMware is the undocumented ComChannel interface used by VMware Tools, a suite of productivity components that allows the host and guest operating systems to interact (e.g., share files). ComChannel is the most publicized example of VMware’s use of so-called undocumented backdoor I/O features. At SANSfire 2007, Ed Skoudis and Tom Liston demonstrated a variety of tools built on ComChannel:

• VMChat

• VMCat

• VMDrag-n-Hack

• VMDrag-n-Sploit

• VMFtp

All of these tools used exploit techniques to cause unintended/unauthorized access to the host operating system from within the guest operating system over the ComChannel link. The first tool, VMChat, actually performed a DLL injection over the ComChannel interface into the host operating system. Once the DLL was inside the host’s memory space, a backdoor channel was opened that allowed bidirectional communication between the host and the guest.

As it turned out, Ken Kato (previously mentioned in the “Detecting the Virtual Environment” section) had been researching the ComChannel issue for some years in his “VM Back” project (http://chitchat.at.infoseek.co.jp/vmware/).

Although these tools represent serious issues in VM isolation and protection, they do not represent the most critical threat in virtualization technology. The third class of malware, hypervisor-replacing virtual malware, represents this threat.

Hijacking the Hypervisor

The ultimate goal for an advanced virtual rootkit is to subvert the hypervisor itself—the brains controlling the virtual environment. If the rootkit could insert itself beneath guest operating systems, it would control the entire system.

This is exactly what HVM rootkits achieve in a few deceptively hard steps:

1. Install a kernel driver in the target operating system.

2. Find and initialize the hardware virtualization support (AMD-V or Intel VT-x).

3. Load the malicious hypervisor code into memory from the driver.

4. Create a new VM to place the host operating system inside.

5. Bind the new VM to the rootkit’s hypervisor.

6. Launch the new VM, effectively switching the host into a guest mode from which it cannot escape.

This process occurs entirely on-the-fly, with no reboot required (although in the case of the SubVirt rootkit, a reboot is required for the rootkit to load initially, after which the process is achieved without a reboot).

However, before we get into the details of these steps, we have to explore a concept that was briefly mentioned in Chapter 4: Ring –1.

Ring –1

To achieve these goals, the virtual rootkit must leverage a concept created by the hardware virtualization support offered by the two main CPU manufacturers, Intel and AMD. This concept is Ring –1. Recall the diagram of the x86 CPU rings of privilege from Chapter 4. They range from Ring 0 (most privileged—the OS runs in this mode) to Ring 3 (user applications run in this mode). Ring 0 used to be the most privileged ring, but now Ring –1 contains a hardware-level hypervisor that has even more privilege than the operating system.

In order for the CPU manufacturers to implement this Ring –1 (thereby adding native hardware support for virtualization software), they added several new CPU instructions, registers, and processor control flags. AMD named their additions AMD-V Secure Virtual Machine (SVM), and Intel named their technology Virtualization Technology extensions, or VT-x. Let’s take a quick look at the similarities between them.

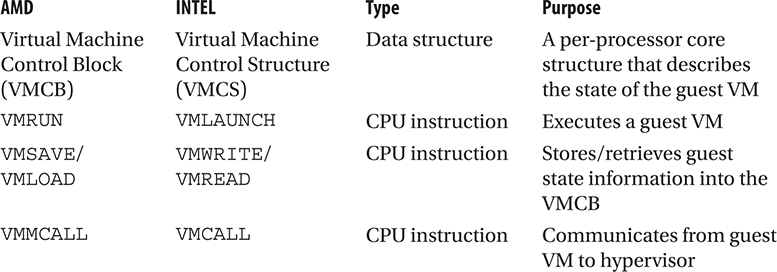

AMD-V SVM/Pacifica and Intel VT-x/Vanderpool

To understand how HVM rootkits leverage these hardware-based virtualization technologies, having a firm grasp of the capabilities these extensions add to the x86 instruction set is important. The following table summarizes the major commands and data structures added by these extensions. These extensions are used by Blue Pill and Vitriol.

This is not an exhaustive list of the additions to the x86 instruction set, but in actuality, the number is reasonably small. The relatively light nature of this hardware support is solely for performance reasons.

Virtual Rootkit Samples

SubVirt is an example of a VMBR, whereas Blue Pill and Vitriol are HVM rootkits. Most virtual rootkits coming out today are variations on these three.

• SubVirt, developed by Samuel T. King and Peter M. Chen at the University of Michigan in coordination with Yi-Min Wang, Chad Verbowski, Helen J. Wang, and Jacob R. Lorch at Microsoft Research, targets Intel x86 technology. It was tested on Windows XP using Virtual PC and Gentoo Linux using VMware.

• Blue Pill by Joanna Rutkowska of Invisible Things Lab targets AMD-V SVM/Pacifica technology. It was tested on x64 Vista.

• Vitriol by Dino Dai Zovi of Matasano Security targets Intel VT-x. It was tested on Mac OS X.

SubVirt: Virtual Machine-Based Rootkit (VMBR)

SubVirt inserts itself beneath the host operating system, creating a new hypervisor. It relies on x86 architecture rather than specific virtualization technology like SVM and loads itself by altering the system boot sequence. The authors also implemented malicious services to showcase how the rootkit could cause damage after installing itself. Even though SubVirt targeted both Windows XP and Linux, we’ll only cover the Windows aspects of the VMBR.

To modify the boot sequence, SubVirt requires and assumes the attacker has gained root privileges on the system and is able to copy the VMBR to persistent storage on the target system. Although this is an acceptable assumption, this requirement does expose the rootkit to certain offline attacks and limits the tool’s applicability in some cases. The VMBR is copied to the first active partition on Windows XP.

The boot sequence is then modified to first execute the VMBR instead of the OS boot loader. It does this by overwriting the sectors on disk to which the BIOS transfers control. To maneuver around antivirus, HIDS/HIPS, and personal firewall solutions that may alert users to this activity on Windows XP, SubVirt uses a kernel driver to register a LastChanceShutdown callback routine. This routine is called by the operating system kernel when the system is shutting down, at which point most processes have been terminated and the file system itself has been unloaded. As a second level of protection, this malicious kernel driver is a low-level driver that hooks beneath the file-system driver and most antivirus-type products. Thus, none of those higher-level drivers will ever see SubVirt. As a third layer of protection, this low-level kernel driver hooks the low-level disk driver’s write() routine, allowing only its VMBR to be written to boot blocks on the disk.

Once the system is rebooted, the BIOS transfers execution to the VMBR, which then loads a custom “attack” operating system that hosts malicious services and a hypervisor. The hypervisor controls and boots the encapsulated host operating system (referred to as the “target OS” by the authors), and the attack operating system provides malicious services and low-level protection to malware operating inside the target OS (without its knowledge).

The goal of the VMBR is to support the malware running inside the target OS, which has now been pushed into a VM. It does this by hosting three classes of malicious services, which the authors define as

• Services that do not communicate with the target OS at all. Examples include spam relays, botnets, and phishing web servers.

• Services that observe data or events from the target OS. Examples include a keylogger and network packet sniffer.

• Services that deliberately alter execution of the target OS, such as dropping packets or erasing suspect data.

The VMBR is able to offer these services without the target OS knowing because the VMBR is able to trap instructions issued by the target OS to hardware and alter those instructions’ functionality. This is what a normal VM emulator does, but in this case, it is for malicious intent.

We’ll briefly cover the sample services in each of these three classes implemented in the SubVirt code base.

Phishing Web Server Service

The phishing web server within the SubVirt VMBR was developed to characterize malicious code that has no interaction with the target operating system. A phishing website is a decoy of a real website intended to guide users into divulging personally identifiable information (PII). This information often contains names, addresses, Social Security numbers, credit card information, or usernames and passwords. This phishing site uses a thttpd web server in the attack operating system. The virtual network settings are modified to redirect all traffic to the target except inbound TCP packets bound for port 8080, which are directed to the phishing server. The VMBR environment does not contain any state or event information that is visible from within the target operating system.

Keystroke Logger Service

Like the phishing web server, the keystroke logger in the SubVirt VMBR is an optional service. A keystroke logger is an application that collects and stores all keystrokes. Because this one runs in the rootkit’s attack OS, beneath the virtual machine, it can collect keystroke data without the target OS’s knowledge. This is accomplished by having the keyboard emulation module within the VMBR copy all keystrokes and send them to the attack OS. Once the keystrokes are received, a custom program in the attack OS searches them for possible passwords, and the keystrokes are then forwarded to the target OS.

Sensitive Information File Scanner Service

The authors also developed a module to scan the target OS file system for files that may contain sensitive information, such as user credentials or private keys.

Defense Countermeasure Service

This service implemented by the authors represents the most aggressive service that falls under the third class of services offered—actively modifying the target OS. This service actually modifies an exported kernel function in Windows XP that handles mapping executable files into a process’s address space (i.e., the Windows loader). If the modified function detects the Red Pill program, it sets a breakpoint on the SIDT instruction in the program. Thus, when Red Pill runs and calls SIDT, it breaks execution into the hypervisor (because the hypervisor traps the instruction for emulation), which subsequently falsifies the results to fool Red Pill.

Maintaining Control Through Reboots and Shutdowns

We have seen several stealthy capabilities of the SubVirt rootkit, such as modifying the boot sequence by hooking low in the disk driver stack and installing services to transparently monitor or fool the target OS. The VMBR adds an even stealthier feature to prevent the system from truly shutting down. If the system were to shut down, the VMBR would be susceptible to offline attacks, such as the BIOS passing control to a bootable CD-ROM or USB drive, where detection utilities could detect and clean SubVirt.

To protect itself from system reboots, the VMBR resets the virtual hardware rather than the physical hardware. This provides the target OS with the illusion that the devices have been reset, when, in actuality, the physical system is still running. To prevent system shutdown (such as when a user pushes the power off button), the VMBR has the capability to use Advanced Configuration and Power Interface (ACPI) sleep states to make the system appear to physically shut down. This induces a low-power mode on the system, where power is still applied to RAM, but most moving parts are turned off.

SubVirt Countermeasures

The authors suggested several ways to thwart their rootkit. The first way is to validate the boot sequence using hardware such as a Trusted Platform Module (TPM) that holds hashes of authorized boot devices. During boot-up, the BIOS hashes the boot sequence items and compares them against the known hashes to make sure no malware is present. A second method is to boot using removable media and scan the system with forensic tools like Helix Live-CD and rootkit detectors such as Strider Ghostbuster. A final method is to utilize a secure boot process that involves a preexisting hypervisor validating the various system components.
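The first countermeasure, hashing each boot item and comparing it against a known value, can be illustrated with a short Python sketch. This is a simplified model under stated assumptions: it uses SHA-256 from the standard library and a plain dictionary of expected hashes, whereas a real TPM accumulates measurements in hardware registers. The component names and byte strings are hypothetical.

```python
import hashlib


def measure(component):
    """Return the SHA-256 hex digest of one boot component's bytes."""
    return hashlib.sha256(component).hexdigest()


def validate_boot_sequence(components, known_hashes):
    """Return the names of boot items whose hash is unknown or has changed."""
    failures = []
    for name, data in components:
        if known_hashes.get(name) != measure(data):
            failures.append(name)
    return failures


# Hypothetical boot items and their recorded "known good" hashes.
clean_mbr = b"original boot sector bytes"
clean_loader = b"original OS loader bytes"
known = {"mbr": measure(clean_mbr), "loader": measure(clean_loader)}
```

Because SubVirt must overwrite the boot sectors to load first, a check of this kind would flag the modified `mbr` entry before control ever reaches the VMBR.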

The general weaknesses of the SubVirt approach include

• It must modify the boot sector of the hard drive to install, making it susceptible to a class of offline detection techniques.

• It targets x86 architecture, which is not fully virtualized (some instructions such as SIDT run in unprivileged mode), making it susceptible to all detection techniques previously discussed.

• It uses a “heavy hypervisor” (VMware and Virtual PC) that attempts to emulate instructions and provide virtual hardware, making it susceptible to detection through hardware fingerprinting, as discussed previously.

Blue Pill: Hypervisor Virtual Machine (HVM) Rootkit

Blue Pill was released at Black Hat USA in 2006 and has since grown beyond its original proof-of-concept scope. It is now a stable research project, supported by multiple developers, and has been ported to other architectures. We’ll cover the original Blue Pill, which is based on AMD64 SVM extensions.

The host operating system is moved on-the-fly into the virtual machine using AMD64 Secure Virtual Machine (SVM) extensions. This is a critical feature that other virtual rootkits such as SubVirt do not have. SVM is an instruction set added to the AMD64 instruction set architecture (ISA) to provide hardware support to hypervisors. After the rootkit envelops the host OS inside a VM, it monitors the guest OS for commands from malicious services.

Blue Pill first detects the virtual environment and then injects a “thin hypervisor” beneath the host operating system, encapsulating it inside a virtual machine. The author defines a “thin hypervisor” as one that transparently controls the target machine. This should raise a red flag immediately, since we have already covered the intractable nature of providing transparent virtualization, as argued by Garfinkel et al. in their paper found at http://www.cs.cmu.edu/~jfrankli/hotos07/vmm_detection_hotos07.pdf. This is a sticking point between the researchers at Invisible Things Lab and the research community in general.

Blue Pill is loaded in the following manner:

1. Load a kernel-mode driver.

2. Enable SVM support by setting the SVME bit of a special CPU register, the Extended Feature Enable Register (EFER) Model Specific Register (MSR), to 1.

3. Allocate and initialize a special data structure called the Virtual Machine Control Block (VMCB), which will be used to “jail” the host operating system after the Blue Pill hypervisor takes over.

4. Copy the hypervisor into a hidden spot in memory.

5. Save the address of the host processor information in a special register called VM_HSAVE_PA MSR.

6. Modify the VMCB data structure to contain logic that allows the guest to transfer execution back to the hypervisor.

7. Set up the VMCB to look like the saved state of the target VM you are about to jail.

8. Jump to hypervisor code.

9. Execute the VMRUN instruction, passing the address of the “jailed” VM.

Once the VMRUN instruction is issued, the CPU runs in unprivileged guest mode. The only time the CPU execution level is elevated is when a VMEXIT occurs, that is, when the guest VM executes an instruction or triggers an event that the hypervisor has chosen to intercept. The Blue Pill hypervisor handles these exits.
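Step 2 of the loading sequence is a single bit flip in a model-specific register, which the following Python sketch models with a dictionary standing in for the MSRs. This is an illustration, not Blue Pill code. The register numbers and bit positions are taken from AMD’s public architecture documentation rather than from this chapter, and the `SVMDIS` check is included to model a system on which SVM has been disabled. The function name and the sample EFER value are hypothetical.

```python
EFER_MSR = 0xC0000080    # Extended Feature Enable Register
EFER_SVME = 1 << 12      # SVM enable bit within EFER
VM_CR_MSR = 0xC0010114   # SVM control register
VM_CR_SVMDIS = 1 << 4    # when set, SVME cannot be turned on


def try_enable_svm(msrs):
    """Set EFER.SVME in the modeled MSR table unless SVM is disabled.

    Returns True if the bit was set. On real hardware the failing case
    would raise a fault instead of returning False.
    """
    if msrs.get(VM_CR_MSR, 0) & VM_CR_SVMDIS:
        return False
    msrs[EFER_MSR] = msrs.get(EFER_MSR, 0) | EFER_SVME
    return True
```

The refusal path is the interesting one for defenders: if the platform keeps SVM disabled, the remaining steps of the sequence never get a chance to run.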

Blue Pill has several capabilities that contribute to its stealthiness:

• The “thin hypervisor” does not attempt to emulate hardware or instruction sets, so most of the detection methods discussed previously do not work.

• There is very little impact to performance.

• Blue Pill installs on-the-fly and no reboot is needed.

• Blue Pill uses “Blue Chicken” to deter timing detection by briefly uninstalling the Blue Pill hypervisor if a timing instruction call is detected.

Some limitations of Blue Pill include

• It’s not persistent—a reboot removes it.

• Researchers have shown that Translation Lookaside Buffer (TLB), branch prediction, counter-based clock, and General Protection (GP) exceptions can detect Blue Pill side effects. These are all processor-specific structures/capabilities that Blue Pill cannot control directly but are directly affected because Blue Pill uses system resources just like any other software.

Vitriol: Hardware Virtual Machine (HVM) Rootkit

The Vitriol rootkit was released at the same time as Joanna Rutkowska’s Blue Pill at Black Hat USA 2006. This rootkit is the yin to Blue Pill’s yang, because it targets Intel VT-x hardware virtualization support, whereas Blue Pill targets AMD-V SVM support.

As previously mentioned, Intel VT-x support provides hardware-level CPU instructions that the VT-x hypervisor uses to raise and lower the execution level of the CPU. There are two execution levels in VT-x terminology: VMX root (Ring 0) and VMX non-root (a “less privileged” Ring 0). Guest operating systems are launched and run in VMX non-root mode, and a VM exit occurs when they execute certain privileged instructions, such as those that perform I/O. When this occurs, the CPU is elevated to VMX root.

This technology is not remarkably different from AMD-V SVM support: both technologies achieve the same goal of a hardware-level hypervisor with full virtualization support. They are also exploited in similar ways by Blue Pill and Vitriol. Both virtual rootkits

• Install a kernel driver into the target OS

• Access the low-level virtualization support instructions (such as VMXON in VT-x)

• Create memory space for the malicious hypervisor

• Create memory space for the new VM

• Migrate the running OS into the new VM

• Entrench the malicious hypervisor by trapping all commands from the new VM

Vitriol implements all of these steps in three main functions to trap the host OS inside a VM without its knowledge:

• Vmx_init() Detects and initializes VT-x

• Vmx_fork() Pushes the running host OS into a VM and slides the hypervisor beneath it

• On_vm_exit() Processes VMEXIT requests and performs emulation

The last function also provides the typical rootkit-like capabilities: filtering device access, hiding processes and files, reading and modifying network traffic, and logging keystrokes. All of these capabilities are implemented beneath the operating system in the rootkit’s hypervisor.
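The general shape of an exit handler, as opposed to Vitriol’s actual code, can be modeled in a few lines of Python. In this sketch the guest’s registers are a dictionary, each exit reason maps to a handler function, and after emulation the instruction pointer is advanced so the guest resumes at the next instruction. The exit reason numbers follow Intel’s public documentation; the function names, the register dictionary, and the zeroed CPUID result are hypothetical.

```python
EXIT_REASON_CPUID = 10            # basic exit reason for CPUID
EXIT_REASON_IO_INSTRUCTION = 30   # basic exit reason for IN/OUT


def emulate_cpuid(guest):
    """Hypothetical handler: return fixed values instead of real CPUID output."""
    guest["eax"], guest["ebx"], guest["ecx"], guest["edx"] = 0, 0, 0, 0


def dispatch_vm_exit(exit_reason, guest, handlers):
    """Run the handler for one exit, then skip the guest past the instruction."""
    handler = handlers.get(exit_reason)
    if handler is None:
        return False  # a real hypervisor must handle every exit it enables
    handler(guest)
    guest["rip"] += guest["instruction_length"]
    return True
```

Because every intercepted instruction passes through a dispatcher like this, the code that runs here decides what the guest operating system sees, which is why both rootkits and hypervisor-based detectors concentrate their logic at this point.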

Virtual Rootkit Countermeasures

As corporate infrastructures and data centers continue to trade in bare-metal servers for virtual ones, the threat of virtual rootkits and malicious software will continue to grow. Since the original hysteria surrounding Blue Pill’s (and the less-hyped Vitriol’s) release in 2006, AMD and Intel have made revisions to their virtualization technologies to counter the threat, even though no source code was released until 2007, a year later. As mentioned in a whitepaper by Crucial Security (http://megasecurity.org/papers/hvmrootkits.pdf), AMD’s revision 2 release for the AMD64 processor included the ability to require a cryptographic key to enable and disable the SVM virtualization technology. As you’ll recall, this is one of the prerequisites to Blue Pill loading: the ability to enable SVM programmatically by setting the SVME bit of the EFER MSR to 1. That is, Blue Pill would not be able to execute if it could not enable or disable SVM in its code.

While the debate continues on the questionable “100 percent undetectable” nature of HVM rootkits, this does not change the fact that these rootkits exist and represent a growing threat.

Summary

Looking back at the types of virtual rootkits, all three types must be able to determine if they are in a virtual environment. Virtualization-aware malware, however, is a dying breed. As the authors of SubVirt point out, malware will eventually have no choice but to run in virtual environments because data centers and large commercial and government organizations are continuing to migrate their traditionally physical assets into virtual assets. Malware authors have to accept the possibility that they are being watched in a virtual environment because the gain in possible host systems outweighs the risk of being discovered and analyzed. In essence, the issue of VM detection has become mostly moot for malware.

For the remaining types of virtual malware that represent advanced virtual rootkits (VMBR and HVM rootkits), researchers and the Blue Pill authors have hotly debated whether the detection methods discussed (timing, resource, and logical anomalies) are actually detecting Blue Pill itself or simply the presence of SVM virtualization. The argument boils down to whether you assume the future of computing is 100 percent virtualization. If that is the case, then the fact that a host operating system detects it is in a VM is irrelevant. The Blue Pill authors take this position and compare the discoveries of current VM detection techniques to declaring the presence of network activity on a system as evidence of a botnet.

Aside from that debate, there are some countermeasures suggested by the Blue Pill authors that would prevent all HVM rootkits (as well as SubVirt):

• Disable virtualization support in the BIOS if it is not needed.

• Adopt a futuristic, hardware-based hypervisor that allows only cryptographically signed virtual machine images to load.

• Add a “Hardware Red Pill” or “SVMCHECK”: a hardware-supported instruction that requires a unique password to load a VM/hypervisor.

Virtualization creates a unique challenge for both rootkit authors and rootkit detectors alike. Rest assured that we have not seen the end of this debate.

To end on a positive note, hypervisors are being used for reasons other than subversion (or their intended purpose). Two hypervisor-based rootkit detectors have been released: Hypersight by North Security Labs (http://www.softpedia.com/get/Security/Security-Related/Hypersight-Rootkit-Detector.shtml) and Paladin by students at Rutgers University (http://www.cs.rutgers.edu/~iftode/intrusion06.pdf). Essentially these tools do the same thing Blue Pill does, except their intent is to detect and prevent virtual and traditional rootkits from loading at all.