Chapter 15

Web Applications and Mobile Apps

In This Chapter

- Testing websites and web applications

- Uncovering flaws in mobile apps

- Protecting against SQL injection and cross-site scripting

- Preventing login weaknesses

- Manually analyzing software flaws

- Countering web abuse

- Analyzing source code

Websites and web applications are common targets for attack because they’re everywhere and often open for anyone to poke and prod. Basic websites used for marketing, contact information, document downloads, and so on are especially easy for the bad guys to play around with. Commonly used web platforms, such as WordPress and related content management systems, are especially vulnerable to attack because of their prevalence and a lack of testing and patching. For criminal hackers, websites that provide a front end to complex applications and databases that store valuable information, such as credit card and Social Security numbers, are especially attractive. This is where the money is, both literally and figuratively.

Why are websites and applications so vulnerable? The consensus is that poor software development and testing practices are to blame. Sound familiar? It should; this same problem affects operating systems and practically all aspects of computer systems, including automobiles and related Internet of Things (IoT) systems. It’s the side effect of relying on software compilers to perform error checking, of questionable user demand for higher-quality software, and of emphasizing time-to-market and usability over security.

This chapter presents security tests to run on your websites, applications, and mobile apps. Given all the custom configuration possibilities and system complexities, you can test for literally thousands of software vulnerabilities. In this chapter, I focus on the ones I see most often using both automated scanners and manual analysis. I also outline countermeasures to help minimize the chances that someone with ill intent can carry out these attacks against what are likely considered your most critical business systems.

Choosing Your Web Security Testing Tools

Good web security testing tools can help ensure that you get the most from your work. As with many things in life, I find that you get what you pay for when it comes to testing for web security holes. This is why I mostly use commercial tools in my work when testing websites and web applications for vulnerabilities.

These are my favorite web security testing tools:

- Acunetix Web Vulnerability Scanner (www.acunetix.com) for all-in-one security testing, including a port scanner and an HTTP sniffer

- AppSpider (www.rapid7.com/products/appspider) for all-in-one security testing, including excellent capabilities for authenticated scanning

- Netsparker (www.netsparker.com) for all-in-one security testing that often uncovers vulnerabilities the other tools do not

- Web Developer (http://chrispederick.com/work/web-developer) for manual analysis and manipulation of web pages

Yes, you must do manual analysis. You definitely want to use a scanner, because scanners find around half of the issues. For the other half, you need to do much more than just run automated scanning tools. Remember that you have to pick up where scanners leave off to truly assess the overall security of your websites and applications. You have to do some manual work not because web vulnerability scanners are faulty, but because poking and prodding web systems simply requires good old-fashioned hacker trickery and your favorite web browser.

You can also use general vulnerability scanners, such as Nexpose and LanGuard, as well as exploit tools, such as Metasploit, when testing websites and applications. You can use these tools to find (and exploit) weaknesses at the web server level that you might not otherwise find with standard web-scanning tools and manual analysis. Google can be beneficial for rooting through web applications and looking for sensitive information as well. Although these non–application-specific tools can be beneficial, it’s important to know that they won’t drill down as deep as the tools I mention in the preceding list.

Seeking Out Web Vulnerabilities

Attacks against vulnerable websites and applications via Hypertext Transfer Protocol (HTTP) make up the majority of all Internet-related attacks. Most of these attacks can be carried out even if the HTTP traffic is encrypted (via HTTPS, also known as HTTP over SSL/TLS) because the communications medium has nothing to do with these attacks. The security vulnerabilities actually lie within the websites and applications themselves or the web server and browser software that the systems run on and communicate with.

Many attacks against websites and applications are just minor nuisances and might not affect sensitive information or system availability. However, some attacks can wreak havoc on your systems, putting sensitive information at risk and even placing your organization out of compliance with state, federal, and international information privacy and security laws and regulations.

You don’t necessarily have to perform manual analysis of your websites and applications every time you test, but you need to do it periodically — at least once or twice a year. Don’t let anyone tell you otherwise!

Directory traversal

I start you out with a simple directory traversal attack. Directory traversal is a really basic weakness, but it can turn up interesting — sometimes sensitive — information about a web system. This attack involves browsing a site and looking for clues about the server’s directory structure and sensitive files that might have been loaded intentionally or unintentionally.

Perform the following tests to determine information about your website’s directory structure.

Crawlers

A spider program, such as the free HTTrack Website Copier (https://httrack.com), can crawl your site to look for every publicly accessible file. To use HTTrack, simply load it, give your project a name, tell HTTrack which website(s) to mirror, and after a few minutes, possibly hours (depending on the size and complexity of the site), you’ll have everything that’s publicly accessible on the site stored on your local drive in C:\My Web Sites. Figure 15-1 shows the crawl output of a basic website.

Figure 15-1: Using HTTrack to crawl a website.

Complicated sites often reveal a lot more information that should not be there, including old data files and even application scripts and source code.

Look at the output of your crawling program to see what files are available. Regular HTML and PDF files are probably okay because they’re most likely needed for normal web usage. But it wouldn’t hurt to open each file to make sure it belongs there and doesn’t contain sensitive information you don’t want to share with the world.
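Sifting through a large mirror by hand gets tedious. The following Python sketch walks a local mirror (such as an HTTrack project folder) and lists files whose extensions suggest backups, database dumps, archives, or include files that rarely belong on a public site. The extension list and the function name are hypothetical starting points, not an exhaustive rule set, so tune them for your environment and still open anything flagged to confirm what it contains.

```python
from pathlib import Path

# Hypothetical starting list of extensions that rarely belong on a public site.
SUSPICIOUS_EXTENSIONS = {
    ".bak", ".old", ".orig", ".tmp", ".swp", ".log",
    ".sql", ".db", ".dbf", ".mdb",
    ".zip", ".tar", ".gz", ".7z",
    ".inc", ".config", ".ini",
}


def flag_suspicious_files(mirror_root: str) -> list[str]:
    """List mirrored files (relative to mirror_root) with a suspicious extension."""
    root = Path(mirror_root)
    flagged = []
    for path in sorted(root.rglob("*")):
        # Path.suffix is only the last extension, so backup.tar.gz is checked as .gz.
        if path.is_file() and path.suffix.lower() in SUSPICIOUS_EXTENSIONS:
            flagged.append(str(path.relative_to(root)))
    return flagged
```

For example, flag_suspicious_files(r"C:\My Web Sites\myproject") would return relative paths such as old_site.bak; the project folder name here is hypothetical.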

Google, the search engine company that many love to hate, can also be used for directory traversal. In fact, Google’s advanced queries are so powerful that you can use them to root out sensitive information, critical web server files and directories, credit card numbers, webcams — basically anything that Google has discovered on your site — without having to mirror your site and sift through everything manually. It’s already sitting there in Google’s cache waiting to be viewed.

The following are a couple of advanced Google queries that you can enter directly into the Google search field:

- site:hostname keywords: This query searches for any keyword you list, such as SSN, confidential, credit card, and so on. An example would be:

  site:www.principlelogic.com speaker

- filetype:file-extension site:hostname: This query searches for specific file types on a specific website, such as doc, pdf, db, dbf, zip, and more. These file types might contain sensitive information. An example would be:

  filetype:pdf site:www.principlelogic.com

Other advanced Google operators include the following:

- allintitle searches for keywords in the title of a web page.

- inurl searches for keywords in the URL of a web page.

- related finds pages similar to this web page.

- link shows other sites that link to this web page.
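If you run these queries regularly, a small helper can generate them for each hostname you’re responsible for. This Python sketch only builds the query strings and standard Google search URLs for you to open in a browser; it doesn’t fetch any results, and the function names are hypothetical.

```python
from urllib.parse import urlencode


def build_queries(hostname: str, keywords: list[str], file_types: list[str]) -> list[str]:
    """Combine the site: and filetype: patterns for one hostname."""
    # Keywords are quoted so multiword terms such as "credit card" stay together.
    queries = [f'site:{hostname} "{keyword}"' for keyword in keywords]
    queries += [f"filetype:{ext} site:{hostname}" for ext in file_types]
    return queries


def search_url(query: str) -> str:
    """Turn a query into a Google search URL you can paste into a browser."""
    return "https://www.google.com/search?" + urlencode({"q": query})


for query in build_queries("www.principlelogic.com", ["confidential", "SSN"], ["pdf", "xls"]):
    print(search_url(query))
```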

Specific definitions and more can be found at www.googleguide.com/advanced_operators.html. Many web vulnerability scanners also perform checks against the Google Hacking Database (GHDB) site www.exploit-db.com/google-hacking-database.

Looking at the bigger picture of web security, Google hacking is pretty limited, but if you’re really into it, check out Johnny Long’s book, Google Hacking for Penetration Testers (Syngress).

Countermeasures against directory traversals

You can employ three main countermeasures against having files compromised via malicious directory traversals:

- Don’t store old, sensitive, or otherwise nonpublic files on your web server. The only files that should be in your /htdocs or DocumentRoot folder are those that are needed for the site to function properly. These files should not contain confidential information that you don’t want the world to see.

- Configure your robots.txt file to prevent search engines, such as Google, from crawling the more sensitive areas of your site.

- Ensure that your web server is properly configured to allow public access to only those directories that are needed for the site to function. Minimum privileges are key here, so provide access to only the files and directories needed for the web application to perform properly.

  Check your web server’s documentation for instructions on controlling public access. Depending on your web server version, these access controls are set in:

  - The httpd.conf file and the .htaccess files for Apache (See http://httpd.apache.org/docs/current/configuring.html for more information.)

  - Internet Information Services Manager for IIS

The latest versions of these web servers have good directory security by default so, if possible, make sure you’re running the latest versions.
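To check that a robots.txt file says what you think it says, you can feed it to the robots.txt parser in Python’s standard library and ask which paths a crawler is allowed to fetch. The robots.txt content and the example.com URLs below are hypothetical. Keep in mind that robots.txt is itself publicly readable and only well-behaved crawlers honor it, so it complements, rather than replaces, the server access controls above.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt asking all crawlers to skip two sensitive areas.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /reports/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ("/index.html", "/admin/login.php", "/reports/q3.pdf"):
    allowed = parser.can_fetch("Googlebot", "https://www.example.com" + path)
    print(f"{path}: {'crawlable' if allowed else 'blocked'}")
```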

Finally, consider using a search engine honeypot, such as the Google Hack Honeypot (http://ghh.sourceforge.net). A honeypot draws in malicious users so you can see how the bad guys are working against your site. Then, you can use the knowledge you gain to keep them at bay.

Input-filtering attacks

Websites and applications are notorious for taking practically any type of input, mistakenly assuming that it’s valid, and processing it further. Not validating input is one of the greatest mistakes that web developers can make.

Several attacks that insert malformed data — often, too much at one time — can be run against a website or application, which can confuse the system and make it divulge too much information to the attacker. Input attacks can also make it easy for the bad guys to glean sensitive information from the web browsers of unsuspecting users.

Buffer overflows

One of the most serious input attacks is a buffer overflow that specifically targets input fields in web applications.

For instance, a credit-reporting application might authenticate users before they’re allowed to submit data or pull reports. The login form uses the following code to grab user IDs with a maximum input of 12 characters, as denoted by the maxlength attribute:

<form name="Webauthenticate" action="https://www.your_web_app.com/login.cgi" method="POST">

…

<input type="text" name="inputname" maxlength="12">

…

</form>

A typical login session would involve a valid login name of 12 characters or fewer. However, maxlength is enforced only by the browser, so it can be changed to something huge, such as 100 or even 1,000. Then an attacker can enter bogus data in the login field. What happens next is anyone’s call: the application might hang, overwrite other data in memory, or crash the server.

A simple way to manipulate such a variable is to step through the page submission by using a web proxy, such as those built in to the commercial web vulnerability scanners I mention or the free Burp Proxy (https://portswigger.net/burp/proxy.html).

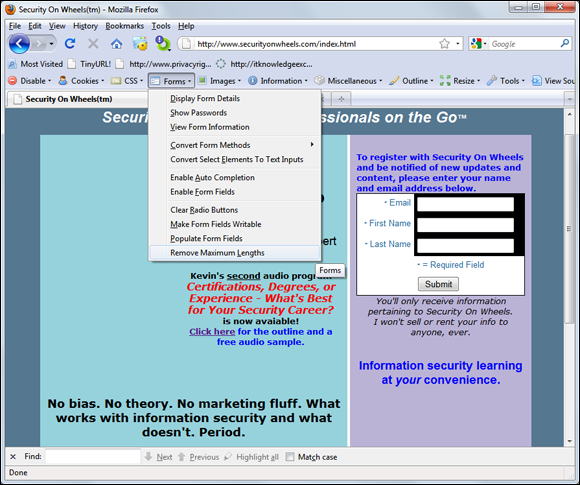

All you have to do is change the field length of the variable before your browser submits the page, and it will be submitted using whatever length you give. You can also use the Web Developer extension to remove maximum form lengths defined in web forms, as shown in Figure 15-2.

Figure 15-2: Using Firefox Web Developer to reset form field lengths.

URL manipulation

An automated input attack manipulates a URL and sends it back to the server, telling the web application to do various things, such as redirect to third-party sites, load sensitive files off the server, and so on. Local file inclusion is one such vulnerability. It occurs when the web application accepts URL-based input and returns the specified file’s contents to the user, as in the following example of an attempted breach of a Linux server’s passwd file:

https://www.your_web_app.com/onlineserv/Checkout.cgi?state=detail&language=english&imageSet=/../..//../..//../..//../..///etc/passwd

It’s important to note that most recent application platforms such as ASP.NET and Java are pretty good about not allowing such manipulation of the URL variables, but I do still see this vulnerability periodically.
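On the server side, a common defense against local file inclusion is to resolve the requested path and confirm that it still sits inside the directory the application is meant to serve. This is a minimal Python sketch, assuming an imageSet-style parameter maps to files under a single base directory; resolve_inside is a hypothetical helper, not part of any framework.

```python
import os
from typing import Optional


def resolve_inside(base_dir: str, requested: str) -> Optional[str]:
    """Return the real path for `requested` under base_dir, or None if it escapes."""
    base = os.path.realpath(base_dir)
    # Strip leading separators so the input can't replace base_dir with an absolute
    # path, then let realpath collapse "..", doubled slashes, and symlinks.
    candidate = os.path.realpath(os.path.join(base, requested.lstrip("/\\")))
    if os.path.commonpath([base, candidate]) != base:
        return None  # the request climbed out of base_dir
    return candidate


attack = "/../..//../..//../..//../..///etc/passwd"
print(resolve_inside("/srv/www/images", attack))  # None
```

Checking the resolved path, rather than searching the raw input for "../", also catches doubled slashes and symlinks that simple string filters miss.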

The following links demonstrate another example of URL trickery called URL redirection:

http://www.your_web_app.com/error.aspx?URL=http://www.bad~site.com&ERROR=Path+’OPTIONS’+is+forbidden.

http://www.your_web_app.com/exit.asp?URL=http://www.bad~site.com

In both situations, an attacker can exploit this vulnerability by sending the link to unsuspecting users via e-mail or by posting it on a website. When users click the link, they can be redirected to a malicious third-party site containing malware or inappropriate material.
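A common fix for redirects like these is to validate the URL parameter against an allowlist before redirecting. The following Python sketch accepts same-site relative paths and http(s) URLs whose host is on a hypothetical allowlist; the function and set names are illustrative, and a real application would apply this check in whatever framework handles its redirects.

```python
from urllib.parse import urlparse

# Hypothetical allowlist of hosts this application may send users to.
ALLOWED_REDIRECT_HOSTS = {"www.your_web_app.com"}


def is_safe_redirect(target: str) -> bool:
    """Accept same-site relative paths or http(s) URLs to allowlisted hosts only."""
    parsed = urlparse(target)
    if not parsed.scheme and not parsed.netloc:
        # Relative path; reject "/\host", which some browsers treat like "//host".
        return target.startswith("/") and not target.startswith("/\\")
    # "//host" lands here with an empty scheme and is rejected.
    return parsed.scheme in ("http", "https") and parsed.hostname in ALLOWED_REDIRECT_HOSTS
```

With this check, exit.asp?URL=http://www.bad~site.com would be refused, while URL=/account or URL=https://www.your_web_app.com/home would still work.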

Hidden field manipulation

Some websites and applications embed hidden fields within web pages to pass state information between the web server and the browser. Hidden fields are represented in a web form as <input type="hidden">. Because of poor coding practices, hidden fields often contain confidential information (such as product prices on an e-commerce site) that should be stored only in a back-end database. Users shouldn’t see hidden fields — hence the name — but the curious attacker can discover and exploit them with these steps:

1. View the HTML source code.

   To see the source code in Internet Explorer and Firefox, you can usually right-click on the page and select View source or View Page Source.

2. Change the information stored in these fields.

   For example, a malicious user might change the price from $100 to $10.

3. Repost the page back to the server.

   This step allows the attacker to obtain ill-gotten gains, such as a lower price on a web purchase.
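The first step, finding the hidden fields in a page’s source, can be automated with the HTML parser in Python’s standard library. This sketch simply collects the name and value of every input element whose type is hidden; the class and function names are hypothetical, and fetching the page is left out.

```python
from html.parser import HTMLParser


class HiddenFieldFinder(HTMLParser):
    """Collect name/value pairs from <input type="hidden"> elements."""

    def __init__(self) -> None:
        super().__init__()
        self.hidden: dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values keep their case.
        if tag != "input":
            return
        attributes = dict(attrs)
        if (attributes.get("type") or "").lower() == "hidden":
            self.hidden[attributes.get("name") or ""] = attributes.get("value") or ""


def find_hidden_fields(page_html: str) -> dict[str, str]:
    finder = HiddenFieldFinder()
    finder.feed(page_html)
    finder.close()
    return finder.hidden


sample = '<form><input type="hidden" name="price" value="100.00"><input name="qty" value="1"></form>'
print(find_hidden_fields(sample))  # {'price': '100.00'}
```

A field such as price turning up here is exactly the kind of value that should live on the server instead.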

Such vulnerabilities are becoming rare, but, as with URL manipulation, the possibility exists, so it pays to keep an eye out.

Several tools, such as the proxies that come with commercial web vulnerability scanners or Burp Proxy, can easily manipulate hidden fields. Figure 15-3 shows the WebInspect SPI Proxy interface and a web page’s hidden field.

Figure 15-3: Using WebInspect to find and manipulate hidden fields.

If you come across hidden fields, you can try to manipulate them to see what can be done. It’s as simple as that.

Code injection and SQL injection

Similar to URL manipulation attacks, code-injection attacks manipulate specific system variables. Here’s an example:

http://www.your_web_app.com/script.php?info_variable=X

Attackers who see this variable can start entering different data into the info_variable field, changing X to something like one of the following lines:

http://www.your_web_app.com/script.php?info_variable=Y

http://www.your_web_app.com/script.php?info_variable=123XYZ

This is a rudimentary example, but the web application might nonetheless respond in a way that gives attackers more information than you want them to have, such as detailed errors or access to data fields they’re not authorized to see. The invalid input might also cause the application or the server to hang. Similar to the case study earlier in the chapter, hackers can use this information to determine more about the web application and its inner workings, which can ultimately lead to a serious system compromise.

I once used a web application to manage some personal information that did just this. Because a "name" parameter was part of the URL, anyone could gain access to other people’s personal information by changing the "name" value. For example, if the URL included "name=kbeaver", a simple change to "name=jsmith" would bring up J. Smith’s home address, Social Security number, and so on. Ouch! I alerted the system administrator to this vulnerability. After a few minutes of denial, he agreed that it was indeed a problem and proceeded to work with the developers to fix it.

Code injection can also be carried out against back-end SQL databases, an attack known as SQL injection. Malicious attackers insert SQL statements, such as CONNECT, SELECT, and UNION, into URL requests to attempt to connect and extract information from the SQL database that the web application interacts with. SQL injection is made possible by a combination of applications that don’t properly validate input and informative errors returned from database servers and web servers.

Two general types of SQL injection are standard (also called error-based) and blind. Error-based SQL injection is exploited based on error messages returned from the application when invalid information is input into the system. Blind SQL injection happens when error messages are disabled, requiring the hacker or automated tool to guess what the database is returning and how it’s responding to injection attacks.
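To see why unvalidated input is so dangerous, compare a query built by string concatenation with a parameterized one. This Python sketch uses an in-memory SQLite database as a stand-in for whatever back-end database your application uses; the table, accounts, and fake SSNs are hypothetical. Parameterized queries are a widely used countermeasure that goes beyond the input filtering discussed later in this chapter.

```python
import sqlite3

# Hypothetical table and accounts; the SSNs are fake placeholders.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, ssn TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)",
                 [("kbeaver", "000-00-0001"), ("jsmith", "000-00-0002")])


def lookup_vulnerable(name: str):
    # String concatenation: the input becomes part of the SQL statement itself.
    return conn.execute("SELECT name, ssn FROM users WHERE name = '" + name + "'").fetchall()


def lookup_parameterized(name: str):
    # Placeholder: the driver passes the input as data, never as SQL.
    return conn.execute("SELECT name, ssn FROM users WHERE name = ?", (name,)).fetchall()


payload = "x' OR '1'='1"
print(lookup_vulnerable(payload))     # every row comes back
print(lookup_parameterized(payload))  # [] because no user is literally named that
```

The payload turns the vulnerable query’s WHERE clause into name = 'x' OR '1'='1', which is true for every row.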

You’re definitely going to get what you pay for when it comes to scanning for and uncovering SQL injection flaws with a web vulnerability scanner. As with URL manipulation, you’re much better off running a web vulnerability scanner to check for SQL injection, which allows an attacker to inject database queries and commands through the vulnerable page to the back-end database. Figure 15-4 shows numerous SQL injection vulnerabilities discovered by the Netsparker vulnerability scanner.

Figure 15-4: Netsparker discovered SQL injection vulnerabilities.

When you discover SQL injection vulnerabilities, you might be inclined to stop there and not try to exploit the weakness. That’s fine. However, I prefer to see how far I can get into the database system. I recommend using any SQL injection capabilities built into your web vulnerability scanner if possible so you can demonstrate the flaw to management.

I cover database security more in depth in Chapter 16.

Cross-site scripting

Cross-site scripting (XSS) is perhaps the most well-known and widespread web vulnerability. It occurs when a web page displays user input that isn’t properly validated, so script code (typically JavaScript) supplied by an attacker runs in the browser. A criminal hacker can take advantage of the absence of input filtering and cause a web page to execute malicious code on any user’s computer that views the page.

For example, an XSS attack can display the user ID and password login page from another rogue website. If users unknowingly enter their user IDs and passwords in the login page, the user IDs and passwords are entered into the hacker’s web server log file. Other malicious code can be sent to a victim’s computer and run with the same security privileges as the web browser or e-mail application that’s viewing it on the system; the malicious code could provide a hacker with full Read/Write access to browser cookies, browser history files, or even permit the download/installation of malware.

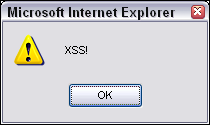

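A simple way to test for XSS is to enter the following script into a form field or URL parameter and submit it:

<script>alert('XSS')</script>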

If a window pops up that reads XSS, as shown in Figure 15-5, the application is vulnerable. The XSS-Me Firefox Add-on (https://addons.mozilla.org/en-US/firefox/addon/xss-me/) is a novel way to test for this vulnerability as well.

Figure 15-5: Script code reflected to the browser.

There are many more variations for exploiting XSS, such as those requiring user interaction via the JavaScript onmouseover event handler. As with SQL injection, you really need to use an automated scanner to check for XSS. Both Netsparker and Acunetix Web Vulnerability Scanner do a great job of finding XSS. However, they often tend to find different XSS issues, a detail that highlights the importance of using multiple scanners when you can. Figure 15-6 shows some sample XSS findings in Acunetix Web Vulnerability Scanner.

Figure 15-6: Using Acunetix Web Vulnerability Scanner to find cross-site scripting in a web application.
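At its simplest, a scanner’s reflected-XSS check submits a unique script payload and looks for it in the response. This Python sketch shows only that comparison step, assuming you’ve already fetched the response body some other way; the function names are hypothetical, and real scanners also test attribute, event-handler, and JavaScript-string contexts. Servers can encode characters differently than html.escape does (for example, &#39; instead of &#x27;), so "not reflected" here is not proof that a page is safe.

```python
import html
import secrets


def make_probe() -> str:
    """Build a script payload with a random marker so a match can't be a coincidence."""
    return f"<script>alert('xss-{secrets.token_hex(4)}')</script>"


def reflection_status(probe: str, response_body: str) -> str:
    """Classify how (or whether) the probe came back in the server's response."""
    if probe in response_body:
        return "reflected unencoded: likely vulnerable"
    if html.escape(probe) in response_body:
        return "reflected but HTML-encoded"
    return "not reflected"
```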

Countermeasures against input attacks

Websites and applications must filter incoming data. It’s as simple as that. The sites and applications must check and ensure that the data entered fits within the parameters of what the application is expecting. If the data doesn’t match, the application should generate an error or return to the previous page. Under no circumstances should the application accept the junk data, process it, and reflect it back to the user.

Secure software coding practices can eliminate all these issues if they’re made a critical part of the development process. Developers should know and implement these best practices:

- Never present static values that the web browser and the user don’t need to see. Instead, this data should be implemented within the web application on the server side and retrieved from a database only when needed.

- Filter out <script> tags from input fields.

- Disable detailed web server and database-related error messages if possible.
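Here’s a minimal Python sketch of two of these practices applied to the 12-character login field shown earlier: an allowlist check on the server (which, unlike maxlength, the user can’t edit) and HTML encoding on output. The character rule is a hypothetical policy, and encoding output with html.escape is a complementary technique to the script-tag filtering in the list, not a replacement for checking input.

```python
import html
import re

# Hypothetical rule for the login field: 1 to 12 letters, digits, dots, underscores, or hyphens.
USER_ID_PATTERN = re.compile(r"[A-Za-z0-9._-]{1,12}")


def validate_user_id(value: str) -> str:
    """Enforce on the server what maxlength only suggests in the browser."""
    if not USER_ID_PATTERN.fullmatch(value):
        raise ValueError("Invalid user ID")  # generic message, no detail about why
    return value


def render_greeting(name: str) -> str:
    # Encode on output so any markup that slipped through is displayed as text.
    return "<p>Welcome, " + html.escape(name) + "</p>"
```

Rejecting anything outside the expected characters and length means a 1,000-character login name or a <script> tag never reaches the rest of the application.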

Default script attacks

Poorly written web programs, such as Hypertext Preprocessor (PHP) and Active Server Pages (ASP) scripts, can allow hackers to view and manipulate files on a web server and do other things they’re not authorized to do. These flaws are also common in content management systems (CMSs) that are used by developers, IT staff, and marketing professionals to maintain a website’s content. Default script attacks are common because so much poorly written code is freely accessible on websites. Hackers can also take advantage of various sample scripts that install on web servers, especially older versions of Microsoft’s IIS web server.

To test for script vulnerabilities, you can peruse scripts manually or use a text search tool (such as the search function built in to the Windows Start menu or the grep command in Linux) to find any hard-coded usernames, passwords, and other sensitive information. Search for admin, root, user, ID, login, signon, password, pass, pwd, and so on. Sensitive information embedded in scripts like this is rarely necessary and is often the result of poor coding practices that give precedence to convenience over security.
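If you’d rather script the keyword search, the following Python sketch scans script files under a directory for the keywords listed above and reports each matching line. The set of script extensions and the matching rule are assumptions you’ll want to adjust, and the function name is hypothetical.

```python
import re
from pathlib import Path

# Keywords from the text. A letter on either side blocks a match, so "db_password"
# and "$pwd" are found but "width" and "valid" are not. camelCase names such as
# "loginName" are missed, so treat this as a first pass.
KEYWORDS = ["admin", "root", "user", "id", "login", "signon", "password", "pass", "pwd"]
KEYWORD_RE = re.compile(r"(?<![A-Za-z])(?:" + "|".join(KEYWORDS) + r")(?![A-Za-z])",
                        re.IGNORECASE)
# Assumed set of script extensions; add whatever your site uses.
SCRIPT_SUFFIXES = {".php", ".asp", ".aspx", ".cgi", ".pl", ".js", ".inc"}


def find_sensitive_lines(root: str):
    """Yield (file, line number, text) for script lines that mention a keyword."""
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or path.suffix.lower() not in SCRIPT_SUFFIXES:
            continue
        text = path.read_text(encoding="utf-8", errors="replace")
        for number, line in enumerate(text.splitlines(), start=1):
            if KEYWORD_RE.search(line):
                yield str(path), number, line.strip()
```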

Countermeasures against default script attacks

You can help prevent attacks against default web scripts as follows:

- Know how scripts work before deploying them within a web environment.

- Make sure that all default or sample scripts are removed from the web server before it goes live.

- Keep any content management system software updated, especially WordPress, as it tends to be a big target for attackers.

- Don’t use publicly accessible scripts that contain hard-coded confidential information. They’re a security incident in the making.

- Set file permissions on sensitive areas of your site/application to prevent public access.

Unsecured login mechanisms

Many websites require users to log in before they can do anything with the application. These login mechanisms often don’t handle incorrect user IDs or passwords gracefully. They often divulge too much information that an attacker can use to gather valid user IDs and passwords.

To test for unsecured login mechanisms, browse to your application and log in

- Using an invalid user ID with a valid password

- Using a valid user ID with an invalid password

- Using an invalid user ID and invalid password

After you enter this information, the web application will probably respond with a message similar to Your user ID is invalid or Your password is invalid. The web application might return a generic error message, such as Your user ID and password combination is invalid and, at the same time, return different error codes in the URL for invalid user IDs and invalid passwords, as shown in Figures 15-7 and 15-8.

Figure 15-7: URL returns an error when an invalid user ID is entered.

Figure 15-8: The URL returns a different error when an invalid password is entered.

In either case, this is bad news because the application is telling you not only which parameter is invalid, but also which one is valid. This means that malicious attackers now know a good username or password — their workload has been cut in half! If they know the username (which usually is easier to guess), they can simply write a script to automate the password-cracking process, and vice versa.

You should also take your login testing to the next level by using a web login cracking tool, such as Brutus (www.hoobie.net/brutus/index.html), as shown in Figure 15-9. Brutus is a very simple tool that can be used to crack both HTTP and form-based authentication mechanisms by using both dictionary and brute-force attacks.

Figure 15-9: The Brutus tool for testing for weak web logins.

An alternative, and better maintained, tool for cracking web passwords is THC-Hydra (www.thc.org/thc-hydra).

Most commercial web vulnerability scanners have decent dictionary-based web password crackers, but none (that I’m aware of) can do true brute-force testing like Brutus can. As I discuss in Chapter 8, your password-cracking success is highly dependent on your dictionary lists. Here are some popular sites that house dictionary files and other miscellaneous word lists:

- ftp://ftp.cerias.purdue.edu/pub/dict

- http://packetstormsecurity.org/Crackers/wordlists

- www.outpost9.com/files/WordLists.html
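Why do dictionary lists matter so much? A brute-force attack over a character set of size |C| must try up to |C|^k candidates for each length k, so the total is the sum of |C|^k over every length tried, which grows exponentially. This small Python calculation makes the point; the guess rate is a hypothetical figure, since real rates against web logins vary widely.

```python
def keyspace(charset_size: int, max_length: int, min_length: int = 1) -> int:
    """Count every candidate of min_length..max_length characters from a charset."""
    return sum(charset_size ** length for length in range(min_length, max_length + 1))


def worst_case_hours(candidates: int, guesses_per_second: float) -> float:
    """Time to exhaust the candidates at a steady guess rate."""
    return candidates / guesses_per_second / 3600


lowercase_to_8 = keyspace(26, 8)
print(lowercase_to_8)                                # 217180147158
print(round(worst_case_hours(lowercase_to_8, 10)))  # 6032782 hours at a hypothetical 10 guesses/second
```

A good word list, by contrast, tries the passwords people actually choose first, which is why cracking success depends so heavily on it.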

You might not need a password-cracking tool at all because many front-end web systems, such as storage management systems and IP video and physical access control systems, still use the default passwords they shipped with. These default passwords are usually “password,” “admin,” or nothing at all. Some passwords are even embedded right in the login page’s source code, such as the network camera source code shown in lines 207 and 208 in Figure 15-10.

Figure 15-10: A network camera’s login credentials embedded directly in its HTML source code.

Countermeasures against unsecured login systems

You can implement the following countermeasures to prevent people from attacking weak login systems in your web applications:

- Any login errors that are returned to the end user should be as generic as possible, saying something similar to Your user ID and password combination is invalid.

  The application should never return error codes in the URL that differentiate between an invalid user ID and an invalid password.

  If a URL message must be returned, the application should keep it as generic as possible. Here’s an example:

  www.your_web_app.com/login.cgi?success=false

  This URL message might not be convenient to the user, but it helps hide the mechanism and the behind-the-scenes actions from the attacker.

- Use CAPTCHA (or reCAPTCHA) on web login forms to help prevent password-cracking attempts.

- Employ an intruder lockout mechanism on your web server or within your web applications to lock user accounts after 10–15 failed login attempts. This chore can be handled via session tracking or via a third-party web application firewall add-on, as I discuss in the later section “Putting up firewalls.”

- Check for and change any vendor default passwords to something that’s easy to remember yet difficult to crack.
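Here’s a minimal in-memory Python sketch of the first and third countermeasures: one generic error for every failure and a lockout after a fixed number of failed attempts. The class name, account data, lockout message, and PBKDF2 iteration count are hypothetical, and a production system would also need persistent storage and a timed or administrative unlock, which this sketch omits.

```python
import hashlib
import hmac
import os

GENERIC_ERROR = "Your user ID and password combination is invalid."
MAX_FAILURES = 10     # low end of the 10-15 range mentioned above
ITERATIONS = 100_000  # illustrative PBKDF2 cost, not a tuned recommendation


def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)


class LoginGuard:
    """Hypothetical in-memory demo: generic errors plus a per-user-ID lockout counter."""

    def __init__(self, accounts: dict[str, str]) -> None:
        self._salts = {user: os.urandom(16) for user in accounts}
        self._hashes = {user: hash_password(pw, self._salts[user]) for user, pw in accounts.items()}
        self._dummy_salt = os.urandom(16)
        self._failures: dict[str, int] = {}

    def login(self, user_id: str, password: str) -> str:
        # Failures are counted for any submitted ID, real or not, so a lockout
        # doesn't reveal which IDs exist.
        if self._failures.get(user_id, 0) >= MAX_FAILURES:
            return "This account is locked. Please contact the help desk."
        # Hash even for unknown IDs so both paths do similar work.
        candidate = hash_password(password, self._salts.get(user_id, self._dummy_salt))
        expected = self._hashes.get(user_id)
        if expected is not None and hmac.compare_digest(candidate, expected):
            self._failures.pop(user_id, None)
            return "Welcome!"
        self._failures[user_id] = self._failures.get(user_id, 0) + 1
        return GENERIC_ERROR
```

Because a wrong password and an unknown user ID produce the same message, the login page no longer tells an attacker which half of the credentials was right.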

Performing general security scans for web application vulnerabilities

I want to reiterate that both automated and manual testing need to be performed against your web systems. You’re not going to see the whole picture by relying on just one of these methods. I highly recommend using an all-in-one web application vulnerability scanner such as Acunetix Web Vulnerability Scanner or AppSpider to help you root out web vulnerabilities that would be unreasonable if not impossible to find otherwise. Combine the scanner results with a malicious mindset and the hacking techniques I describe in this chapter, and you’re on your way to finding the web security flaws that matter.

Minimizing Web Security Risks

Keeping your web applications secure requires ongoing vigilance in your ethical hacking efforts and on the part of your web developers and vendors. Keep up with the latest hacks, testing tools, and techniques and let your developers and vendors know that security needs to be a top priority for your organization. I discuss getting security buy-in in Chapter 20.

The following deliberately vulnerable applications are great for practicing your web hacking skills:

- OWASP WebGoat Project (www.owasp.org/index.php/Category:OWASP_WebGoat_Project)

- Foundstone’s SASS Hacme Tools (www.mcafee.com/us/downloads/free-tools/index.aspx)

I highly recommend you check them out and get your hands dirty!

Practicing security by obscurity

The following forms of security by obscurity — hiding something from obvious view using trivial methods — can help prevent automated attacks from worms or scripts that are hard-coded to attack specific script types or default HTTP ports:

- To protect web applications and related databases, use different machines to run each web server, application, and database server.

  The operating systems on these individual machines should be tested for security vulnerabilities and hardened based on best practices and the countermeasures described in Chapters 12 and 13.

- Use built-in web server security features to handle access controls and process isolation, such as the application-isolation feature in IIS. This practice helps ensure that if one web application is attacked, it won’t necessarily put any other applications running on the same server at risk.

- Use a tool for obscuring your web server’s identity, essentially anonymizing your server. An example is Port 80 Software’s ServerMask (www.port80software.com/products/servermask).

- If you’re running a Linux web server, use a program such as IP Personality (http://ippersonality.sourceforge.net) to change the OS fingerprint so the system looks like it’s running something else.

- Change your web application to run on a nonstandard port. Change from the default HTTP port 80 or HTTPS port 443 to a high port number, such as 8877, and, if possible, set the server to run as an unprivileged user, that is, something other than system, administrator, root, and so on.

Putting up firewalls

Consider using additional controls to protect your web systems, including the following:

- A network-based firewall or IPS that can detect and block attacks against web applications. This includes commercial firewalls from companies such as WatchGuard (www.watchguard.com) and Palo Alto Networks (www.paloaltonetworks.com).

- A host-based web application IPS, such as SecureIIS (www.eeye.com/products/secureiis-web-server-security) or ServerDefender (www.port80software.com/products/serverdefender), or a Web Application Firewall (WAF) from vendors such as Barracuda Networks (www.barracuda.com/products/webapplicationfirewall) and Fortinet (www.fortinet.com/products/fortiweb/index.html).

These programs can detect web application and certain database attacks in real time and cut them off before they have a chance to do any harm.
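The following is a deliberately tiny Python sketch of the signature-matching idea behind these products: a WSGI middleware (the hypothetical class SimpleWAF) that decodes the query string and returns 403 Forbidden when it matches one of a few example patterns for SQL injection and cross-site scripting. The patterns are illustrative assumptions, nothing like the rule sets that commercial IPSs and WAFs ship with, and they're easy to evade. Use it to understand the concept, not as a substitute for those products.

```python
import re
from urllib.parse import unquote_plus

# A few example signatures (assumptions for illustration). Real IPS/WAF rule sets
# are far larger, updated continuously, and much harder to evade.
SIGNATURES = [
    re.compile(r"<\s*script", re.IGNORECASE),                     # script-tag XSS
    re.compile(r"\bunion\b.+\bselect\b", re.IGNORECASE),          # UNION-based SQL injection
    re.compile(r"'\s*or\s*'?\d+'?\s*=\s*'?\d+", re.IGNORECASE),   # ' OR 1=1 style tautology
]


def is_suspicious(query_string):
    """Percent-decode the query string and report whether any signature matches."""
    decoded = unquote_plus(query_string)
    return any(sig.search(decoded) for sig in SIGNATURES)


class SimpleWAF:
    """Hypothetical WSGI middleware that blocks requests matching a signature."""

    def __init__(self, app):
        self.app = app

    def __call__(self, environ, start_response):
        if is_suspicious(environ.get("QUERY_STRING", "")):
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Request blocked\n"]
        return self.app(environ, start_response)  # clean request: pass it through


def hello_app(environ, start_response):
    """Stand-in for the real web application being protected."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello\n"]


if __name__ == "__main__":
    from wsgiref.simple_server import make_server
    make_server("127.0.0.1", 8000, SimpleWAF(hello_app)).serve_forever()
```

Run locally, a request for http://127.0.0.1:8000/?id=42 gets the Hello page, whereas ?id=1'+OR+'1'='1 gets a 403. Real products also inspect request bodies, headers, and cookies, normalize multiple encodings, and look for anomalies rather than relying on a handful of regular expressions.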

Analyzing source code

Software development is where many software security holes begin and should end but rarely do. If you feel confident in your security testing efforts to this point, you can dig deeper to find security flaws in your source code — things that might never be discovered by traditional scanners and hacking techniques but that are problems nonetheless. Fear not! It’s actually much simpler than it sounds. No, you won’t have to go through the code line by line to see what’s happening. You don’t even need development experience (although it does help).

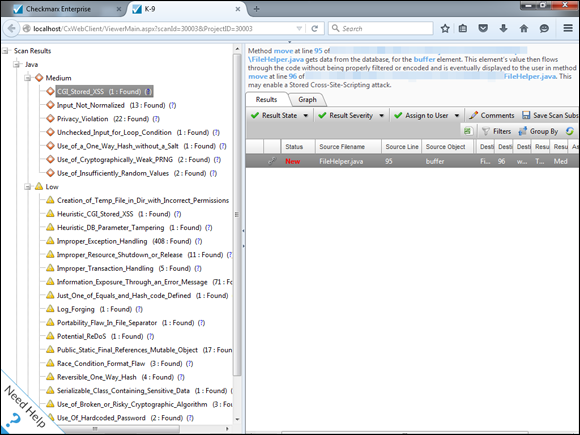

To do this, you can use a static source code analysis tool, such as those offered by Klocwork (www.klocwork.com) and Checkmarx (www.checkmarx.com). Checkmarx’s CxSuite is a standalone tool that’s reasonably priced and very comprehensive in its testing of both web applications and mobile apps — something that’s hard to find among source code analysis vendors.

As shown in Figure 15-11, with CxSuite, you simply load the Enterprise Client, log in to the application (default credentials are admin@cx/admin), run the Create Scan Wizard to point it to the source code and select your scan policy, click Next, click Run, and you’re off and running.

Figure 15-11: Using CxSuite to do an analysis of an open source Android mobile app.

When the scan completes, you can review the findings and recommended solutions, as shown in Figure 15-12.

Figure 15-12: Reviewing the results of an open source Android e-mail app.

As you can see, what was seemingly a safe and secure e-mail app doesn’t appear to be all that. You never know until you check the source code!

CxDeveloper is pretty much all you need, bundled into one simple package, to analyze and report on vulnerabilities in your C#, Java, and mobile source code. Checkmarx, like a few others, also offers a cloud-based source code analysis service. If you can get over any hurdles associated with uploading your source code to a third party in the cloud, such services can offer a more efficient and mostly hands-free option for source code analysis.
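Commercial analyzers such as CxSuite rely on far more sophisticated data-flow analysis, but the core idea of static analysis (examining code without running it to find dangerous patterns) can be shown in a few lines. The following Python sketch uses the standard library's ast module to parse Python source and flag calls to execute() or executemany() whose SQL argument is built at run time with +, %, .format(), or an f-string, a classic setup for SQL injection. The function name find_sql_injection_risks and the sample code are hypothetical, and the check is purely syntactic: it doesn't track where the data comes from, so expect both false positives and misses.

```python
import ast

# DB-API cursor methods that execute SQL (the "sinks" this check cares about).
SQL_SINKS = {"execute", "executemany"}


def _is_dynamic_string(node):
    """True if the expression builds a string at run time: +, %, .format(), or an f-string."""
    if isinstance(node, ast.JoinedStr):
        return True
    if isinstance(node, ast.BinOp) and isinstance(node.op, (ast.Add, ast.Mod)):
        return True
    return (isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr == "format")


def find_sql_injection_risks(source, filename="<source>"):
    """Return sorted (line, message) pairs for SQL sinks given dynamically built strings."""
    findings = []
    for node in ast.walk(ast.parse(source, filename)):
        if (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Attribute)
                and node.func.attr in SQL_SINKS
                and node.args
                and _is_dynamic_string(node.args[0])):
            findings.append((node.lineno, f"{node.func.attr}() receives dynamically built SQL"))
    return sorted(findings)


# Hypothetical code under review: one parameterized query and two risky ones.
SAMPLE = '''
def get_user(cur, user_id):
    cur.execute("SELECT * FROM users WHERE id = " + user_id)

def get_user_safe(cur, user_id):
    cur.execute("SELECT * FROM users WHERE id = ?", (user_id,))

def search(cur, term):
    cur.execute(f"SELECT * FROM items WHERE name LIKE '%{term}%'")
'''

if __name__ == "__main__":
    for line, message in find_sql_injection_risks(SAMPLE, "sample.py"):
        print(f"sample.py:{line}: {message}")
```

Run as a script, it reports lines 3 and 9 of the sample and stays quiet about the parameterized query on line 6, which is exactly the distinction you want your developers to internalize.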

The bottom line with web application and mobile app security is that if you can show your developers and quality assurance analysts that security begins with them, you can really make a difference in your organization’s overall information security.

Uncovering Mobile App Flaws

In addition to running a tool such as CxSuite to check for mobile app vulnerabilities, you'll want to look for several other things, including:

- Cryptographic database keys that are hard-coded into the app

- Improper handling of sensitive information, such as storing personally identifiable information (PII) locally where the user and other apps can access it

- Login weaknesses, such as being able to get around login prompts

- Allowing weak or blank passwords
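The first item on that list lends itself to a quick automated first pass before the manual work. The following Python sketch walks a directory of app source code (or decompiled output) and flags lines that look like hard-coded secrets. The file extensions, the regular expressions, and the names scan_text and scan_tree are all assumptions made for illustration; heuristics like these produce false positives and miss obfuscated or encrypted keys, so every hit still needs a human look.

```python
import re
from pathlib import Path

# Heuristic patterns (assumptions for illustration); expect false positives and misses.
PATTERNS = {
    "hard-coded key/secret assignment": re.compile(
        r"""(?i)\b\w*(?:key|secret|passw(?:or)?d|token)\w*\s*[:=]\s*["']([^"']{8,})["']"""),
    "AWS-style access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "long hex string (possible raw key)": re.compile(r"\b[0-9a-fA-F]{32,}\b"),
}

# File types commonly found in app source or decompiled output (assumed list).
SOURCE_SUFFIXES = {".java", ".kt", ".smali", ".swift", ".m", ".xml", ".json", ".js", ".plist"}


def scan_text(text):
    """Return (line_number, finding_name) pairs for one file's contents."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, name))
    return hits


def scan_tree(root):
    """Yield (path, line_number, finding_name) for every matching line under root."""
    for path in sorted(Path(root).rglob("*")):
        if path.is_file() and path.suffix.lower() in SOURCE_SUFFIXES:
            for lineno, name in scan_text(path.read_text(errors="ignore")):
                yield path, lineno, name


if __name__ == "__main__":
    import sys
    for path, lineno, name in scan_tree(sys.argv[1] if len(sys.argv) > 1 else "."):
        print(f"{path}:{lineno}: {name}")
```

The remaining items on the list (local PII storage, login bypasses, and weak or blank passwords) depend on how the app behaves at run time, which is why they call for the manual analysis described next.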

Note that these checks are mostly uncovered via manual analysis and may require tools such as wireless network analyzers, forensics tools, and web proxies that I talk about in Chapter 9 and Chapter 11, respectively. As with IoT, the important thing is that you’re testing the security of your mobile apps. Better for you to find the flaws than for someone else!

Inevitably, when performing web security assessments, I stumble across