Analysis of Numerical Errors

Adrian Peralta-Alvaa and Manuel S. Santosb, aResearch Division, Federal Reserve Bank of Saint Louis, St Louis, MO, USA, bDepartment of Economics, University of Miami, Coral Gables, FL, USA

Abstract

This paper provides a general framework for the quantitative analysis of stochastic dynamic models. We review the convergence properties of some numerical algorithms and available methods to bound approximation errors. We then address the convergence and accuracy properties of the simulated moments. We study both optimal and non-optimal economies. Optimal economies generate smooth laws of motion defining Markov equilibria, and can be approximated by recursive methods with contractive properties. Non-optimal economies, however, lack existence of continuous Markov equilibria, and need to be simulated by numerical methods with weaker approximation properties.

Keywords

Dynamic stochastic economy; Markov equilibrium; Numerical solution; Approximation error; Accuracy; Simulation-based estimation; Consistency

JEL Classification Codes

C63; E60

1 Introduction

Numerical methods are essential to assess the predictions of non-linear economic models. Indeed, a vast majority of economic models lack analytical solutions, and hence researchers must rely on numerical algorithms—which contain approximation errors. At the heart of modern quantitative analysis is the presumption that the numerical method mimics well the original model statistics. In practice, however, matters are not so simple and there are many situations in which researchers are unable to control for undesirable propagating effects of numerical errors.

In static economies it is usually easy to bound the size of the error. But in infinite-horizon models we have to realize that numerical errors may cumulate in unexpected ways. Cumulative errors can be bounded in models where equilibria may be approximated by contraction operators. But if the contraction property is missing then the most that one can hope for is to establish asymptotic properties of the numerical solution as we refine the approximation.

Model simulations are mechanically performed in macroeconomics and other disciplines, but there is much to be learned about laws of large numbers that can justify the convergence of the simulated moments, and the propagating effects of numerical errors in these simulations. Numerical errors may bias stationary solutions and parameter estimates from simulation-based estimation.

Hence, simulation-based estimation must cope with changes in parameter values affecting the dynamics of the system. Indeed, the estimation process encompasses a continuum of invariant distributions indexed by a vector of parameters. Therefore, simulation-based estimation needs fast and accurate algorithms that can sample the parameter space. Asymptotic properties of these estimators such as consistency and normality are much harder to establish than in traditional data-based estimation in which there is a unique stochastic distribution given by the data-generating process.

This chapter is intended to survey theoretical work on the convergence properties of numerical algorithms and the accuracy of simulations. More specifically, we shall review the established literature with an eye toward a better understanding of the following issues: (i) convergence properties of numerical algorithms and accuracy tests that can bound the size of approximation errors, (ii) accuracy properties of the simulated moments from numerical algorithms and laws of large numbers that can justify model simulation, and (iii) calibration and simulation-based estimation.

We study these issues along with a few illustrative examples. We focus on a large class of dynamic general equilibrium models of wide application in economics and finance. We break the analysis into optimal and non-optimal economies. Optimal economies satisfy the welfare theorems. Hence, equilibria can be computed by associated optimization problems, and under regular conditions these equilibria admit Markovian representations defined by continuous (or differentiable) policy functions. Non-optimal economies may lack the existence of Markov equilibria over the natural state space—or such equilibria may not be continuous. One could certainly restore the Markovian property by expanding the state space, but the non-continuity of the equilibrium remains. These technical problems limit the application of standard algorithms which assume continuous or differentiable approximation rules. (Differentiability properties of the solution are instrumental to characterize the dynamics of the system and to establish error bounds.)

We here put together several results for the computation and simulation of upper semicontinuous correspondences. The idea is to build reliable algorithms and laws of large numbers that can be applied to economies with market frictions and heterogeneous agents as commonly observed in many macroeconomic models.

Section 2 lays out an analytical setting conformed by several equilibrium conditions that include feasibility constraints and first-order conditions. This simplified framework is appropriate for computation. We then consider three illustrative examples: a growth model with taxes, a consumption-based asset-pricing model, and an overlapping generations economy. We show how these economies can readily be mapped into our general framework of analysis.

Our main theoretical results are presented in Sections 3 and 4. Each section starts with a review of some numerical solution methods, and then goes into the analysis of associated computational errors and convergence of the simulated statistics. Section 3 deals with models with continuous Markov equilibria. There is a vast literature on the computation of these equilibria, and here we only deal with the bare essentials. We nevertheless provide a more comprehensive study of the associated error from numerical approximations. Some regularity conditions, such as differentiability or contraction properties, may become instrumental to validate error bounds. These conditions are also needed to establish accuracy and consistency properties of simulation-based estimators.

Section 4 is devoted to non-optimal economies. For this family of models, Markov equilibria on the natural state space may fail to exist, and standard computational methods—which iterate over continuous functions—may produce inaccurate solutions. We present a reliable algorithm based on the iteration over candidate equilibrium correspondences. The algorithm has good convergence and approximation properties, and its fixed point contains a Markovian correspondence that generates all competitive equilibria. Hence, competitive equilibria still admit a recursive representation. But this representation may only be obtained in an enlarged state space (which includes the shadow values of asset holdings), and may not be continuous. The non-continuity of the equilibrium solution precludes application of standard laws of large numbers. This is problematic because we need an asymptotic theory to justify the simulation and estimation of economies with frictions. We discuss some important accuracy results as well as an extended version of the law of large numbers which entails that the sample moments from numerical approximations must approach those of some invariant distribution of the model as the error in the approximated equilibrium correspondence vanishes.

Section 5 presents several numerical experiments. We first study a standard business cycle model. This optimal planning problem becomes handy to assess the accuracy of the computed solutions using the Euler equation residuals. We then introduce some non-optimal economies in which simple Markov equilibria may fail to exist: an overlapping generations economy and an asset-pricing model with endogenous constraints. These examples make clear that standard solution methods would result in substantial computational errors that may drastically change the ergodic sets and corresponding equilibrium dynamics. The equilibrium solutions are then computed by a reliable algorithm introduced in Section 4. This algorithm can also be applied to some other models of interest with heterogeneous agents such as a production economy with taxes and individual rationality constraints, and a consumption-based asset-pricing model with collateral requirements. There are cases in which the solution of this robust algorithm approaches a continuous policy function, and hence we have numerical evidence of existence of a unique equilibrium. Uniqueness of equilibrium guarantees the existence and continuity of a simple Markov equilibrium—which simplifies the computation and simulation of the model. Uniqueness of equilibrium is hard to check using standard numerical methods.

We conclude in Section 6 with further comments and suggestions.

2 Dynamic Stochastic Economies

Our objective is to study quantitative properties of stochastic sequences ![]() that emerge as equilibria of our model economies. These equilibrium sequences arise from the solution of non-linear equation systems, the intertemporal optimization behavior of individual agents, as well as the economy’s aggregate constraints, and the exogenously given sequence of shocks

that emerge as equilibria of our model economies. These equilibrium sequences arise from the solution of non-linear equation systems, the intertemporal optimization behavior of individual agents, as well as the economy’s aggregate constraints, and the exogenously given sequence of shocks ![]() . Our framework of analysis encompasses both competitive and non-competitive economies, with or without a government sector, and incomplete financial markets.

. Our framework of analysis encompasses both competitive and non-competitive economies, with or without a government sector, and incomplete financial markets.

Time is discrete, ![]() , and

, and ![]() is a history of shocks up to period

is a history of shocks up to period ![]() , which is governed by a time-invariant Markov process. For convenience, let us decompose the economic variables of interest as

, which is governed by a time-invariant Markov process. For convenience, let us decompose the economic variables of interest as ![]() . Vector

. Vector ![]() represents predetermined variables, such as capital stocks and portfolio holdings. Future values of these variables will be determined endogenously by current and future actions. Vector

represents predetermined variables, such as capital stocks and portfolio holdings. Future values of these variables will be determined endogenously by current and future actions. Vector ![]() denotes all other current endogenous variables such as consumption, investment, asset prices, interest rates, and so on. Further more, sometimes vector

denotes all other current endogenous variables such as consumption, investment, asset prices, interest rates, and so on. Further more, sometimes vector ![]() may include the shock

may include the shock ![]() .

.

The dynamics of state vector ![]() will be captured by a system of non-linear equations:

will be captured by a system of non-linear equations:

![]() (1)

(1)

Function ![]() may incorporate technological constraints and individual budget constraints. Likewise, present and future values of vector

may incorporate technological constraints and individual budget constraints. Likewise, present and future values of vector ![]() are linked by the non-linear system:

are linked by the non-linear system:

![]() (2)

(2)

where ![]() is the expectations operator conditioning on information at time

is the expectations operator conditioning on information at time ![]() . Conditions describing function

. Conditions describing function ![]() may correspond to individual optimality conditions (such as Euler equations), short-sales and liquidity requirements, endogenous borrowing constraints, individual rationality constraints, and market clearing conditions.

may correspond to individual optimality conditions (such as Euler equations), short-sales and liquidity requirements, endogenous borrowing constraints, individual rationality constraints, and market clearing conditions.

We now present three different examples to illustrate that standard macro models can readily be mapped into this framework.

2.1 A Growth Model with Taxes

The economy is made up of a representative household and a single firm. The exogenously given stochastic process ![]() is an index of total factor productivity. For given sequences of rental rates,

is an index of total factor productivity. For given sequences of rental rates, ![]() , wages,

, wages, ![]() , profits redistributed by the firm,

, profits redistributed by the firm, ![]() , government lump-sum transfers,

, government lump-sum transfers, ![]() , and tax functions,

, and tax functions, ![]() , the household solves the following optimization problem:

, the household solves the following optimization problem:

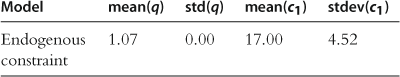

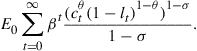

(3)

(3)

Here, ![]() denotes consumption,

denotes consumption, ![]() denotes the amount of labor supplied, and

denotes the amount of labor supplied, and ![]() denotes holdings of physical capital. Parameter

denotes holdings of physical capital. Parameter ![]() is the discount factor and

is the discount factor and ![]() is the capital depreciation rate. Taxes

is the capital depreciation rate. Taxes ![]() may be non-linear functions of income variables (such as capital or labor income) and of the aggregate capital stock

may be non-linear functions of income variables (such as capital or labor income) and of the aggregate capital stock ![]() . Households take the sequences of tax functions as given—contingent upon the history of realizations

. Households take the sequences of tax functions as given—contingent upon the history of realizations ![]() .

.

For a given sequence of technology shocks ![]() , factor prices, and output taxes

, factor prices, and output taxes ![]() , the representative firm seeks to maximize one-period profits by selecting the optimal amount of capital and labor

, the representative firm seeks to maximize one-period profits by selecting the optimal amount of capital and labor

![]()

All tax revenues are rebated back to the representative household as lump-sum transfers ![]() .

.

For a given sequence of tax functions ![]() and transfers

and transfers ![]() , a competitive equilibrium for this economy is conformed by stochastic sequences of factor prices and profits

, a competitive equilibrium for this economy is conformed by stochastic sequences of factor prices and profits ![]() , and sequences of consumption, capital and labor allocations

, and sequences of consumption, capital and labor allocations ![]() , such that: (i)

, such that: (i) ![]() solve the above optimization problem of the household; and

solve the above optimization problem of the household; and ![]() maximizes one-period profits for the firm; (ii) the supplies of capital and labor are equal to the quantities demanded:

maximizes one-period profits for the firm; (ii) the supplies of capital and labor are equal to the quantities demanded: ![]() , and

, and ![]() ; and (iii) consumption and investment allocations are feasible

; and (iii) consumption and investment allocations are feasible

![]() (4)

(4)

Getting back to our general framework, we observe that capital is the only predetermined endogenous variable: ![]() , while consumption and hours worked are the endogenous variables

, while consumption and hours worked are the endogenous variables ![]() . Function

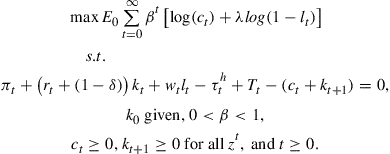

. Function ![]() is thus given by (4). We also have the intertemporal equilibrium conditions:

is thus given by (4). We also have the intertemporal equilibrium conditions:

(5)

(5)

![]() (6)

(6)

It follows that ![]() is defined by equation systems (5) and (6) over constraint (3).

is defined by equation systems (5) and (6) over constraint (3).

2.2 An Asset-Pricing Model with Financial Frictions

The economy is populated by a finite number of agents, ![]() . At each node

. At each node ![]() , there exist spot markets for the consumption good and a fixed set

, there exist spot markets for the consumption good and a fixed set ![]() of securities. For convenience we assume that the supply of each security is equal to unity. Among these securities, we may include a one-period real bond which is a promise to one unit of the consumption good at all successor nodes

of securities. For convenience we assume that the supply of each security is equal to unity. Among these securities, we may include a one-period real bond which is a promise to one unit of the consumption good at all successor nodes ![]() . Our general stylized framework above can embed several financial frictions such as incomplete markets, collateral requirements, and short-sale constraints.

. Our general stylized framework above can embed several financial frictions such as incomplete markets, collateral requirements, and short-sale constraints.

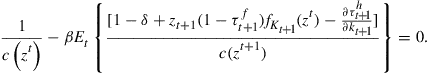

Each agent ![]() maximizes the intertemporal objective

maximizes the intertemporal objective

(7)

(7)

where ![]() , and

, and ![]() is strictly increasing, strictly concave, and continuously differentiable with derivative

is strictly increasing, strictly concave, and continuously differentiable with derivative ![]() . At each node

. At each node ![]() the agent receives

the agent receives ![]() units of the consumption good contingent on the present realization

units of the consumption good contingent on the present realization ![]() . Securities are specified by the current vector of prices,

. Securities are specified by the current vector of prices, ![]() , and the vectors of dividends

, and the vectors of dividends ![]() promised to deliver at future information sets

promised to deliver at future information sets ![]() for

for ![]() . The vector of security prices

. The vector of security prices ![]() is non-negative, and the vector of dividends

is non-negative, and the vector of dividends ![]() is positive and depends only on the current realization of the vector of shocks

is positive and depends only on the current realization of the vector of shocks ![]() .

.

For a given price process ![]() , each agent

, each agent ![]() can choose desired quantities of consumption and security holdings

can choose desired quantities of consumption and security holdings ![]() subject to the following sequence of budget constraints

subject to the following sequence of budget constraints

![]() (8)

(8)

(9)

(9)

for all ![]() . Note that (9) imposes non-negative holdings of all securities. Let

. Note that (9) imposes non-negative holdings of all securities. Let ![]() be the associated vector of multipliers to this non-negativity constraint.

be the associated vector of multipliers to this non-negativity constraint.

A competitive equilibrium for this economy is a collection of vectors ![]() such that: (i) each agent

such that: (i) each agent ![]() maximizes the objective (7) subject to constraints (8) and (9); and (ii) markets clear:

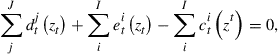

maximizes the objective (7) subject to constraints (8) and (9); and (ii) markets clear:

(10)

(10)

(11)

(11)

for ![]() , all

, all ![]() .

.

It is not hard to see that this model can be mapped into our analytical framework. Again, the vector of exogenous shocks ![]() defines sequences of endowments and dividends,

defines sequences of endowments and dividends, ![]() . Without loss of generality, we have assumed that the space of asset holdings is given by

. Without loss of generality, we have assumed that the space of asset holdings is given by ![]() . Asset holdings

. Asset holdings ![]() are the predetermined variables corresponding to vector

are the predetermined variables corresponding to vector ![]() , whereas consumption

, whereas consumption ![]() and asset prices

and asset prices ![]() are the current endogenous variables corresponding to vector

are the current endogenous variables corresponding to vector ![]() .

.

Function ![]() is simply given by the vector of individual budget constraints (8) and (9). Function

is simply given by the vector of individual budget constraints (8) and (9). Function ![]() is defined by the first-order conditions for intertemporal utility maximization over the equilibrium conditions for the aggregate good and financial markets (10) and (11). Observe that all constraints hold with equality as we introduce the associated vectors of multipliers

is defined by the first-order conditions for intertemporal utility maximization over the equilibrium conditions for the aggregate good and financial markets (10) and (11). Observe that all constraints hold with equality as we introduce the associated vectors of multipliers ![]() for the non-negativity constraints.

for the non-negativity constraints.

2.3 An Overlapping Generations Economy

We study a version of the economy analyzed by Kubler and Polemarchakis (2004). The economy is subject to an exogenously given sequence of shocks ![]() , with

, with ![]() for all

for all ![]() . At each date,

. At each date, ![]() new individuals appear in the economy and stay present for

new individuals appear in the economy and stay present for ![]() periods. Thus, agents are defined by their individual type

periods. Thus, agents are defined by their individual type ![]() , and the specific date-event in which they initiate their life span

, and the specific date-event in which they initiate their life span ![]() . There are

. There are ![]() goods, and each individual receives a positive stochastic endowment

goods, and each individual receives a positive stochastic endowment ![]() at every node

at every node ![]() while present in the economy. Endowments are assumed to be Markovian—defined by the type of the agent,

while present in the economy. Endowments are assumed to be Markovian—defined by the type of the agent, ![]() , age,

, age, ![]() , and the current realization of the shock,

, and the current realization of the shock, ![]() . Preferences over stochastic consumption streams

. Preferences over stochastic consumption streams ![]() are represented by an expected utility function

are represented by an expected utility function

(12)

(12)

Again, we impose a Markovian structure on preferences—assumed to depend on ![]() , and the current realized value

, and the current realized value ![]() .

.

At each date-event ![]() agents can trade one-period bonds that pay one unit of numeraire good

agents can trade one-period bonds that pay one unit of numeraire good ![]() regardless of the state of the world next period. These bonds are always in zero net supply, and

regardless of the state of the world next period. These bonds are always in zero net supply, and ![]() is the price of a bond that trades at date-event

is the price of a bond that trades at date-event ![]() . An infinitely lived Lucas tree may also be available from time zero. The tree produces a random stream of dividends

. An infinitely lived Lucas tree may also be available from time zero. The tree produces a random stream of dividends ![]() of consumption good 1. Then,

of consumption good 1. Then, ![]() is the market value of the tree, and

is the market value of the tree, and ![]() the holdings of bonds and shares of the tree for agent

the holdings of bonds and shares of the tree for agent ![]() . Shares cannot be sold short.

. Shares cannot be sold short.

Each individual consumer ![]() faces the following budget constraints for periods

faces the following budget constraints for periods ![]() ,

,

![]()

![]() (13)

(13)

![]() (14)

(14)

![]() (15)

(15)

Note that (14) insures that stock holdings must be non-negative, whereas (15) insures that debts must be honored in the terminal period.

As before, a competitive equilibrium for this economy is conformed by sequences of prices, ![]() , consumption allocations,

, consumption allocations, ![]() , and asset holdings

, and asset holdings ![]() for all agents over their corresponding ages, such that: (i) each agent maximizes her expected utility subject to individual budget constraints, (ii) the goods markets clear: consumption allocations add up to the aggregate endowment at all possible date-events, and (iii) financial markets clear: bond holdings add up to zero and share holdings add up to one.

for all agents over their corresponding ages, such that: (i) each agent maximizes her expected utility subject to individual budget constraints, (ii) the goods markets clear: consumption allocations add up to the aggregate endowment at all possible date-events, and (iii) financial markets clear: bond holdings add up to zero and share holdings add up to one.

Now, our purpose is to clarify how to map this model into the analytical framework developed before. The vector of exogenous shocks is ![]() , which defines the endowment and dividend processes

, which defines the endowment and dividend processes ![]() . As predetermined variables we have

. As predetermined variables we have ![]() ; that is, the portfolio holdings for all agents

; that is, the portfolio holdings for all agents ![]() alive at every date-event

alive at every date-event ![]() . And as current endogenous variables we have

. And as current endogenous variables we have ![]() the consumption allocations and the prices of both the bond and the Lucas tree for every date-event

the consumption allocations and the prices of both the bond and the Lucas tree for every date-event ![]() .

.

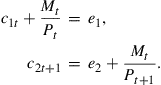

For the sake of the presentation, let’s consider a version of the model with one consumption good and two agents that live for two periods. Function ![]() is simply given by the vector of individual budget constraints (13). Function

is simply given by the vector of individual budget constraints (13). Function ![]() is defined by: (i) the individual optimality conditions for bonds

is defined by: (i) the individual optimality conditions for bonds

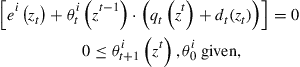

![]() (16)

(16)

(ii) if the Lucas tree is available, the Euler equation

![]() (17)

(17)

where ![]() is the multiplier on the short-sales constraints (14); and (iii) market clearing conditions. It is evident that many other constraints may be brought up into the analysis, such as a collateral restriction along the lines of Kubler and Schmedders (2003) that set up a limit for negative holdings of the bond based on the value of the holdings of the tree.

is the multiplier on the short-sales constraints (14); and (iii) market clearing conditions. It is evident that many other constraints may be brought up into the analysis, such as a collateral restriction along the lines of Kubler and Schmedders (2003) that set up a limit for negative holdings of the bond based on the value of the holdings of the tree.

3 Numerical Solution of Simple Markov Equilibria

For the above models, fairly general conditions guarantee the existence of stochastic equilibrium sequences. But even if the economy has a Markovian structure (i.e., the stochastic process driving the exogenous shocks and conditions (1) and (2) over the constraints is Markovian), equilibrium sequences may depend on some history of shocks. Equilibria with this type of path dependence are not amenable to numerical or statistical methods.

Hence, most quantitative research has focused on models where one can find continuous functions ![]() such that the sequences

such that the sequences

![]() (18)

(18)

generate a competitive equilibrium. Following Krueger and Kubler (2008), a competitive equilibrium that can be generated by equilibrium functions of the form (18) will be called a simple Markov equilibrium.

For frictionless economies the second welfare theorem applies—equilibrium allocations can be characterized as solutions to a planner’s problem. Using dynamic programming arguments, well-established conditions on primitives insure the existence of a simple Markov equilibrium (cf., Stokey et al., 1989). Matters are more complicated in models with real distortions such as taxes, or with financial frictions such as incomplete markets and collateral constraints. Section 4 details the issues involved. In this section we will review some results on the accuracy of numerical methods for simple Markov equilibria. First, we discuss some of the algorithms available to approximate equilibrium function ![]() . Then, we study methods to determine the accuracy of such approximations. Finally, we discuss how the approximation error in the policy function may propagate over the simulated moments affecting the estimation of parameter values.

. Then, we study methods to determine the accuracy of such approximations. Finally, we discuss how the approximation error in the policy function may propagate over the simulated moments affecting the estimation of parameter values.

3.1 Numerical Approximations of Equilibrium Functions

We can think of two major families of algorithms approximating simple Markov equilibria. The first group approximates directly the equilibrium functions (1) and (2) using the Euler equations and constraints (a variation of this method is Marcet’s original parameterized expectations algorithm that indirectly gets the equilibrium function via an approximation of the expectations function below, e.g., see Christiano and Fisher (2000)). These numerical algorithms may use local approximation techniques (perturbation methods), or global approximation techniques (projection methods). Projection methods require finding the fixed point of an equations system which may be highly non-linear. Hence, projection methods offer no guarantee of global convergence and uniqueness of the solution.

Another family of algorithms is based on dynamic programming (DP). The DP algorithms are reliable and have desirable convergence properties. However, their computational complexity increases quite rapidly with the dimension of the state space, especially because maximizations must be performed at each iteration. In addition, DP methods cannot be extended to models with distortions where the welfare theorems do not apply. For instance, in the above examples in Section 2, for most formulations, the growth model with taxes, the asset-pricing model with various added frictions, and the overlapping generations economy cannot be solved directly by DP methods. In all these economies, an equilibrium solution cannot be characterized by a social planning problem.

3.1.1 Methods Based on the Euler Equations

Simple Markov equilibria are characterized by continuous functions ![]() that satisfy

that satisfy

![]() (19)

(19)

![]() (20)

(20)

for all ![]() . Of course, in the absence of an analytical solution the system must be solved by numerical approximations.

. Of course, in the absence of an analytical solution the system must be solved by numerical approximations.

As mentioned above, two basic approaches are typically used to obtain approximate functions ![]() . Perturbation methods—pioneered by Judd and Guu (1997)—take a Taylor approximation around a point with known solution or quite close to the exact solution. This point typically corresponds to the deterministic steady state of the model, that is, an equilibrium where

. Perturbation methods—pioneered by Judd and Guu (1997)—take a Taylor approximation around a point with known solution or quite close to the exact solution. This point typically corresponds to the deterministic steady state of the model, that is, an equilibrium where ![]() for all

for all ![]() . Projection methods—developed by Judd (1992)—aim instead at more global approximations. First, a finite-dimensional space of functions is chosen that can approximate arbitrarily well continuous mappings. Common finite-dimensional spaces include finite elements (tent maps, splines, polynomials defined in small neighborhoods), or global bases such as polynomials or other functions defined over the whole domain

. Projection methods—developed by Judd (1992)—aim instead at more global approximations. First, a finite-dimensional space of functions is chosen that can approximate arbitrarily well continuous mappings. Common finite-dimensional spaces include finite elements (tent maps, splines, polynomials defined in small neighborhoods), or global bases such as polynomials or other functions defined over the whole domain ![]() . Second, let

. Second, let ![]() be elements of this finite-dimensional space evaluated at the nodal points

be elements of this finite-dimensional space evaluated at the nodal points ![]() defining these functions. Then, nodal values

defining these functions. Then, nodal values ![]() are obtained as solutions of non-linear systems conformed by equations (19) and (20) evaluated at some pre determined points of the state space

are obtained as solutions of non-linear systems conformed by equations (19) and (20) evaluated at some pre determined points of the state space ![]() . It is assumed that this non-linear system has a well-defined solution—albeit in most cases the existence of the solution is hard to show. And third, rules for the optimal placement of such pre determined points exist for some functional basis; e.g., Chebyshev polynomials that have some regular orthogonality properties could be evaluated at the Chebyshev nodes in the hope of minimizing oscillations.

. It is assumed that this non-linear system has a well-defined solution—albeit in most cases the existence of the solution is hard to show. And third, rules for the optimal placement of such pre determined points exist for some functional basis; e.g., Chebyshev polynomials that have some regular orthogonality properties could be evaluated at the Chebyshev nodes in the hope of minimizing oscillations.

3.1.2 Dynamic Programming

For economies satisfying the conditions of the second welfare theorem, equilibria can be computed by an optimization problem over a social welfare function subject to aggregate feasibility constraints. Then, one can find prices that support the planner’s allocation as a competitive equilibrium with transfer payments. A competitive equilibrium is attained when these transfers are equal to zero. Therefore, we need to search for the appropriate individual weights in the social welfare function in order to make these transfers equal to zero.

Matters are simplified by the principle of optimality: the planner’s intertemporal optimization problem can be summarized by a value function ![]() satisfying Bellman’s functional equation

satisfying Bellman’s functional equation

![]() (21)

(21)

![]()

Here, ![]() is the intertemporal discount factor,

is the intertemporal discount factor, ![]() is the one-period return function, and

is the one-period return function, and ![]() is a correspondence that captures the feasibility constraints of the economy. Note that our vectors

is a correspondence that captures the feasibility constraints of the economy. Note that our vectors ![]() and

and ![]() now refer to allocations only, while in previous decentralized models these vectors may include prices, taxes, or other variables outside the planning problem.

now refer to allocations only, while in previous decentralized models these vectors may include prices, taxes, or other variables outside the planning problem.

Value function ![]() is therefore a fixed point of Bellman’s equation (21). Under mild regularity conditions (cf., Stokey et al., 1989) it is easy to show that this fixed point can be approximated by the following DP operator. Let

is therefore a fixed point of Bellman’s equation (21). Under mild regularity conditions (cf., Stokey et al., 1989) it is easy to show that this fixed point can be approximated by the following DP operator. Let ![]() be the space of bounded functions. Then, operator

be the space of bounded functions. Then, operator ![]() is defined as

is defined as

![]() (22)

(22)

![]()

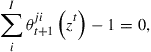

Operator ![]() is actually a contraction mapping with modulus

is actually a contraction mapping with modulus ![]() . It follows that

. It follows that ![]() is a unique solution of the functional equation (21), and can be found as the limit of the sequence recursively defined by

is a unique solution of the functional equation (21), and can be found as the limit of the sequence recursively defined by ![]() for an arbitrarily given initial function

for an arbitrarily given initial function ![]() . This iterating procedure is called the method of successive approximations, and operator

. This iterating procedure is called the method of successive approximations, and operator ![]() is called the DP operator.

is called the DP operator.

By the contraction property of the DP operator, it is possible to construct reliable numerical algorithms discretizing (22). For instance, Santos and Vigo-Aguiar (1998) establish error bounds for a numerical DP algorithm preserving the contraction property. The analysis starts with a set of piecewise-linear functions defined over state space X on a discrete set of nodal points with grid size ![]() . Then, a discretized version

. Then, a discretized version ![]() of operator

of operator ![]() is obtained by solving the optimization problem (22) at each nodal point. For piecewise-linear interpolation, operator

is obtained by solving the optimization problem (22) at each nodal point. For piecewise-linear interpolation, operator ![]() is also a contraction mapping. Hence, given any grid size

is also a contraction mapping. Hence, given any grid size ![]() , and any initial value function

, and any initial value function ![]() the sequence of functions

the sequence of functions ![]() converges to a unique solution

converges to a unique solution ![]() . Moreover, the contraction property of operator

. Moreover, the contraction property of operator ![]() can help bound the distance between such limit

can help bound the distance between such limit ![]() , and the

, and the ![]() application of this operator,

application of this operator, ![]() . Finally, it is important to remark that this approximation scheme will converge to the true solution of the model as the grid size

. Finally, it is important to remark that this approximation scheme will converge to the true solution of the model as the grid size ![]() goes to zero; that is,

goes to zero; that is, ![]() will be sufficiently close to the original value function

will be sufficiently close to the original value function ![]() for some small

for some small ![]() —as a matter of fact, convergence is of order

—as a matter of fact, convergence is of order ![]() . Of course, once a numerical value function

. Of course, once a numerical value function ![]() has been secured it is easy to obtain good approximations for our equilibrium functions

has been secured it is easy to obtain good approximations for our equilibrium functions ![]() from operator

from operator ![]() .

.

What slows down the DP algorithm is the maximization process at each iteration. Hence, functional interpolation—as opposed to discrete functions just defined over a set of nodal points—facilitates the use of some fast maximization routines. Splines and high-order polynomials may also be operative but these approximations may damage the concavity of the computed functions; moreover, for some interpolations there is no guarantee that the discretized operator is a contraction. There are other procedures to speed up the maximization process. Santos and Vigo-Aguiar (1998) use a multigrid method which can be efficiently implemented by an analysis of the approximation errors. Another popular method is policy iteration—contrary to popular belief this latter algorithm turns out to be quite slow for very fine grids (Santos and Rust, 2004).

3.2 Accuracy

As already pointed out, the quantitative analysis of non-linear models primarily relies on numerical approximations ![]() . Then, care must be exercised so that numerical equilibrium function

. Then, care must be exercised so that numerical equilibrium function ![]() is close enough to the actual decision rule

is close enough to the actual decision rule ![]() ; more precisely, we need to insure that

; more precisely, we need to insure that ![]() , where

, where ![]() is a norm relevant for the problem at hand, and

is a norm relevant for the problem at hand, and ![]() is a tolerance estimate.

is a tolerance estimate.

We now present various results for bounding the error in numerical approximations. Error bounds for optimal decision rules are available for some computational algorithms such as the above DP algorithm. It should be noted that these error bounds are not good enough for most quantitative exercises in which the object of interest is the time series properties of the simulated moments. Error bounds for optimal decision rules quantify the period-by-period bias introduced by a numerical approximation. This error, however, may grow in long simulations. A simple example below illustrates this point where the error of the simulated statistics gets large even when the error of the decision rule can be made arbitrarily small. Hence, the last part of this section considers some of the regularity conditions required for desirable asymptotic properties of the statistics from numerical simulations.

3.2.1 Accuracy of Equilibrium Functions

Suppose that we come up with a pair of numerical approximations ![]() . Is there a way of assessing the magnitude of the approximation error without actual knowledge of the solution of the model:

. Is there a way of assessing the magnitude of the approximation error without actual knowledge of the solution of the model: ![]() ?

?

To develop intuition on key ideas behind existing accuracy tests, let us define the Euler equation residuals for functions ![]() as

as

![]() (23)

(23)

![]() (24)

(24)

Note that an exact solution of the model will have Euler equation residuals equal to zero at all possible values of the state ![]() . Hence, “small” Euler equation residuals should indicate that the approximation error is also “small.” The relevant question, of course, is what we mean by “small.” Furthermore, we are circumventing other technical issues since first-order conditions may not be enough to characterize optimal solutions.

. Hence, “small” Euler equation residuals should indicate that the approximation error is also “small.” The relevant question, of course, is what we mean by “small.” Furthermore, we are circumventing other technical issues since first-order conditions may not be enough to characterize optimal solutions.

Den Haan and Marcet (1994) appeal to statistical techniques and propose testing for orthogonality of the Euler equation residuals over current and past information as a measure of accuracy. Since orthogonal Euler equation residuals may occur in spite of large deviations from the optimal policy, Judd (1992) suggests to evaluate the size of the Euler equation residuals over the whole state space as a test for accuracy. Moreover, for strongly concave infinite-horizon optimization problems Santos (2000) demonstrates that the approximation error of the policy function is of the same order of magnitude as the size of the Euler equation residuals, and the constants involved in these error bounds can be related to model primitives.

These theoretical error bounds are based on worse-case scenarios and hence they are usually not optimal for applied work. In some cases, researchers may want to assess numerically the approximation errors in the hope of getting more operative estimates (cf. Santos, 2000). Besides, for some algorithms it is possible to derive error bounds from their approximation procedures. This is the case of the DP algorithm (Santos and Vigo-Aguiar, 1998) and in some models with quadratic-linear approximations (Schmitt-Grohe and Uribe, 2004).

The logic underlying numerical estimation of error bounds from the Euler equation residuals goes as follows (Santos, 2000). We start with a model under a fixed set of parameter values. Then, Euler equation residuals are computed for several numerical equilibrium functions. We need sufficient variability in these approximations in order to obtain good and robust estimates. This variability is obtained by considering various approximation spaces or by changing the grid size. Let ![]() be the approximation with the lowest Euler equation residuals, which would be our best candidate for the true policy function. Then, for each available numerical approximation

be the approximation with the lowest Euler equation residuals, which would be our best candidate for the true policy function. Then, for each available numerical approximation ![]() we compute the approximation constant

we compute the approximation constant

![]() (25)

(25)

Here, ![]() is the max norm in the space of functions. From the available theory (cf. Santos, 2000), the approximation error of the policy function is of the same order of magnitude as that of the Euler equation residuals. Then, the values of

is the max norm in the space of functions. From the available theory (cf. Santos, 2000), the approximation error of the policy function is of the same order of magnitude as that of the Euler equation residuals. Then, the values of ![]() should have bounded variability (unless the approximation

should have bounded variability (unless the approximation ![]() is very close to

is very close to ![]() ). Indeed in many cases

). Indeed in many cases ![]() hovers around certain values. Hence, any upper bound

hovers around certain values. Hence, any upper bound ![]() for these values would be a conservative estimate for the constant involved in these error estimates. It follows that the resulting assessed value,

for these values would be a conservative estimate for the constant involved in these error estimates. It follows that the resulting assessed value, ![]() , can be used to estimate an error bound for our candidate solution:

, can be used to estimate an error bound for our candidate solution:

![]() (26)

(26)

Note that in this last equation we contemplate the error between our best policy function ![]() and the true policy function

and the true policy function ![]() .

.

Therefore, worst-case error bounds are directly obtained from constants given by the theoretical analysis. These bounds are usually very conservative. Numerical estimation of these bounds should be viewed as a heuristic procedure to assess the actual value of the bounding constant. From the available theory we know that the error of the equilibrium function is of the same order of magnitude as the size of the Euler equation residuals. That is, the following error bound holds:

![]() (27)

(27)

We thus obtain an estimate ![]() for constant

for constant ![]() from various comparisons of approximated equilibrium functions.

from various comparisons of approximated equilibrium functions.

3.2.2 Accuracy of the Simulated Moments

Researchers usually focus on long-run properties of equilibrium time series. The common belief is that equilibrium orbits will stabilize and converge to a stationary distribution. Stationary distributions are simply the stochastic counterparts of steady states in deterministic models. Computation of the moments of an invariant distribution for a non-linear model is usually a rather complicated task—even for analytical equilibrium functions. Hence, laws of large numbers are invoked to compute the moments of an invariant distribution from the sample moments.

The above one-period approximation error (27) is just a first step to control the cumulative error of numerical simulations. Following Santos and Peralta-Alva (2005), our goal now is to present some regularity conditions so that the error from the simulated statistics converges to zero as the approximated equilibrium function approaches the exact equilibrium function. The following example illustrates that certain convergence properties may not always hold.

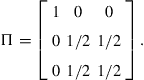

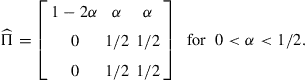

Let us now perturb ![]() slightly so that the new stochastic matrix is the following

slightly so that the new stochastic matrix is the following

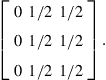

As ![]() , the sequence of stochastic matrices

, the sequence of stochastic matrices ![]() converges to

converges to

Hence, ![]() is the only possible long-run distribution for the system. Moreover,

is the only possible long-run distribution for the system. Moreover, ![]() is a transient state, and

is a transient state, and ![]() is the only ergodic set. Consequently, a small perturbation on a transition probability

is the only ergodic set. Consequently, a small perturbation on a transition probability ![]() may lead to a pronounced change in its invariant distributions. Indeed, small errors may propagate over time and alter the existing ergodic sets.

may lead to a pronounced change in its invariant distributions. Indeed, small errors may propagate over time and alter the existing ergodic sets.

Santos and Peralta-Alva (2005) show that certain continuity properties of the policy function suffice to establish some generalized laws of large numbers for numerical simulations. To provide a formal statement of their results, we need to lay down some standard concepts and terminology.

For ease of presentation, we restrict attention to exogenous stochastic shocks of the form

![]()

where ![]() is an iid shock. The distribution of this shock

is an iid shock. The distribution of this shock ![]() is denoted by probability measure

is denoted by probability measure ![]() on a measurable space

on a measurable space ![]() . Then, as it is standard in the literature (cf. Stokey et al., 1989) we define a new probability space comprising all infinite sequences

. Then, as it is standard in the literature (cf. Stokey et al., 1989) we define a new probability space comprising all infinite sequences ![]() . Let

. Let ![]() be the countably infinite Cartesian product of copies of E. Let

be the countably infinite Cartesian product of copies of E. Let ![]() be the

be the ![]() -field in

-field in ![]() generated by the collection of all cylinders

generated by the collection of all cylinders ![]() where

where ![]() for

for ![]() . A probability measure

. A probability measure ![]() can be constructed over the finite-dimensional sets as

can be constructed over the finite-dimensional sets as

![]()

Measure ![]() has a unique extension on

has a unique extension on ![]() . Hence, the triple

. Hence, the triple ![]() denotes a probability space. Now, for every initial value

denotes a probability space. Now, for every initial value ![]() and sequence of shocks

and sequence of shocks ![]() , let

, let ![]() be the sample paths generated by the policy functions

be the sample paths generated by the policy functions ![]() , so that

, so that ![]() for all

for all ![]() .

.

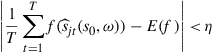

Let ![]() be the sample path generated from an approximate policy function

be the sample path generated from an approximate policy function ![]() . Averaging over these sample paths we get sequences of simulated statistics

. Averaging over these sample paths we get sequences of simulated statistics ![]() as defined by some function

as defined by some function ![]() . Let

. Let ![]() be the expected value under an invariant distribution

be the expected value under an invariant distribution ![]() of the original equilibrium function

of the original equilibrium function ![]() . Santos and Peralta-Alva (2005) establish the following result:

. Santos and Peralta-Alva (2005) establish the following result:

Therefore, for a sufficiently good numerical approximation ![]() and for a sufficiently large

and for a sufficiently large ![]() the sequence

the sequence ![]() approaches (almost surely) the expected value

approaches (almost surely) the expected value ![]() of the invariant distribution

of the invariant distribution ![]() of the original equilibrium function

of the original equilibrium function ![]() .

.

Note that this theorem does not require uniqueness of the invariant distribution for each numerical policy function. This requirement would be rather restrictive: numerical approximations may contain multiple steady states. For instance, consider a polynomial approximation of the policy function. As is well understood, the fluctuating behavior of polynomials may give rise to several ergodic sets. But according to the theorem, these multiple distributions from these approximations will eventually be close to the unique invariant distribution of the model. Moreover, if the model has multiple invariant distributions, then there is an extension of Theorem 1 in which the simulated statics of computed policy functions ![]() become close to those of some invariant distribution of the model for

become close to those of some invariant distribution of the model for ![]() large enough [see

large enough [see ![]() .

. ![]() .].

.].

The existence of an invariant distribution is guaranteed under the so-called Feller property (cf. Stokey et al., 1989). The Feller property is satisfied if equilibrium function ![]() is a continuous mapping on a compact domain or if the domain is made up of a finite number of points. (These latter stochastic processes are called Markov chains.) There are several extensions of these results to non-continuous mappings and non-compact domains (cf. Futia, 1982; Hopenhayn and Prescott, 1992; Stenflo, 2001). These papers also establish conditions for uniqueness of the invariant distribution under mixing or contractive conditions. The following contraction property is taken from Stenflo (2001): CONDITION C: There exists a constant

is a continuous mapping on a compact domain or if the domain is made up of a finite number of points. (These latter stochastic processes are called Markov chains.) There are several extensions of these results to non-continuous mappings and non-compact domains (cf. Futia, 1982; Hopenhayn and Prescott, 1992; Stenflo, 2001). These papers also establish conditions for uniqueness of the invariant distribution under mixing or contractive conditions. The following contraction property is taken from Stenflo (2001): CONDITION C: There exists a constant ![]() such that

such that ![]() for all pairs

for all pairs ![]() .

.

Condition C may arise naturally in growth models [Schenk-Hoppe and Schmalfuss (2001)], in learning models (Ellison and Fudenberg, 1993), and in certain types of stochastic games (Sanghvi and Sobel, 1976).

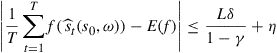

Using Condition C, the following bounds for the approximation error of the simulated moments are established in Santos and Peralta-Alva (2005). A real-valued function ![]() on

on ![]() is called Lipschitz with constant

is called Lipschitz with constant ![]() if

if ![]() for all pairs

for all pairs ![]() and

and ![]() .

.

Again, this is another application of the contraction property, which becomes instrumental to substantiate error bounds. Stachurski and Martin (2008) study a Monte Carlo algorithm for computing densities of invariant measures and establish global asymptotic convergence as well as error bounds.

3.3 Calibration, Estimation, and Testing

As in other applied sciences, economic theories build upon the analysis of highly stylized models. The estimation and testing of these models can be quite challenging, and the literature is still in a process of early development in which various technical problems need to be overcome. Indeed, there are important classes of models for which we still lack a good sense of the types of conditions under which simulation-based methods may yield estimators that achieve consistency and asymptotic normality. Besides, computation of these estimators may turn out to be a quite complex task.

A basic tenet of simulation-based estimation is that parameters are often specified as the by-product of some simplifying assumptions with no close empirical counterparts. These parameter values will affect the equilibrium dynamics which can be highly non-linear. Hence, as a first step in the process of estimation it seems reasonable to characterize the invariant probability measures or steady-state solutions, which commonly determine the long-run behavior of a model. But because of lack of information about the domain and form of these invariant probabilities, the model must be simulated to compute the moments and other useful statistics of these distributions.

Therefore, the process of estimation may entail the simulation of a parameterized family of models. Relatively fast algorithms are thus needed in order to sample the parameter space. Classical properties of these estimators such as consistency and asymptotic normality will depend on various conditions of the equilibrium functions. The study of these asymptotic properties requires methods of analysis of probability theory in its interface with dynamical systems.

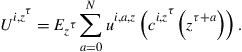

Our purpose here is to discuss some available methods for model estimation and testing. To make further progress in this discussion, let us rewrite (18) in the following form

![]() (29)

(29)

![]() (30)

(30)

where ![]() is a vector of parameters, and

is a vector of parameters, and ![]() . Functions

. Functions ![]() and

and ![]() may represent the exact solution of a dynamic model or some numerical approximation. One should realize that the assumptions underlying these functions may be of a different economic significance, since

may represent the exact solution of a dynamic model or some numerical approximation. One should realize that the assumptions underlying these functions may be of a different economic significance, since ![]() governs the law of motion of the vector of endogenous variables

governs the law of motion of the vector of endogenous variables ![]() , and

, and ![]() represents the evolution of the exogenous process

represents the evolution of the exogenous process ![]() . Observe that the vector of parameters

. Observe that the vector of parameters ![]() characterizing the evolution of the exogenous state variables

characterizing the evolution of the exogenous state variables ![]() may influence the law of motion of the endogenous variables

may influence the law of motion of the endogenous variables ![]() , but this endogenous process may also be influenced by some additional parameters

, but this endogenous process may also be influenced by some additional parameters ![]() which may stem from utility and production functions.

which may stem from utility and production functions.

For a given notion of distance the estimation problem may be defined as follows: Find a parameter vector ![]() such that a selected set of model predictions is closest to those of the data generating process. An estimator is thus a rule that yields a sequence of candidate solutions

such that a selected set of model predictions is closest to those of the data generating process. An estimator is thus a rule that yields a sequence of candidate solutions ![]() from finite samples of model simulations and data. It is generally agreed that a reasonable estimator should possess the following consistency property: as sampling errors vanish the sequence of estimated values

from finite samples of model simulations and data. It is generally agreed that a reasonable estimator should possess the following consistency property: as sampling errors vanish the sequence of estimated values ![]() should converge to the optimal solution

should converge to the optimal solution ![]() . Further, we would like the estimator to satisfy asymptotic normality so that it is possible to derive approximate confidence intervals and address questions of efficiency.

. Further, we would like the estimator to satisfy asymptotic normality so that it is possible to derive approximate confidence intervals and address questions of efficiency.

Data-based estimators are usually quite effective, since they may involve low computational cost. For instance, standard non-linear least squares (e.g., Jennrich, 1969) and other generalized estimators (cf., Newey and McFadden, 1994) may be applied whenever functions ![]() and

and ![]() have analytical representations. Similarly, from functions

have analytical representations. Similarly, from functions ![]() and

and ![]() one can compute the likelihood function that posits a probability law for the process

one can compute the likelihood function that posits a probability law for the process ![]() with explicit dependence on the parameter vector

with explicit dependence on the parameter vector ![]() . In general, data-based estimation methods can be applied for closed-form representations of the dynamic process of state variables and vector of parameters. This is particularly restrictive for the law of motion of the endogenous state variables: only under rather special circumstances one obtains a closed-form representation for the solution of a non-linear dynamic model

. In general, data-based estimation methods can be applied for closed-form representations of the dynamic process of state variables and vector of parameters. This is particularly restrictive for the law of motion of the endogenous state variables: only under rather special circumstances one obtains a closed-form representation for the solution of a non-linear dynamic model ![]() .

.

Since a change in ![]() may feed into the dynamics of the system in rather complex ways, traditional (data-based) estimators may be of limited applicability for non-linear dynamic models. Indeed, these estimators do not take into account the effects of parameter changes in the equilibrium dynamics, and hence they can only be applied to full-fledged, structural dynamic models under fairly specific conditions. In traditional estimation there is only a unique distribution generated by the data process, and such distribution is not influenced by the vector of parameters. For a simulation-based estimator, however, the following major analytical difficulty arises: each vector of parameters is manifested in a different dynamical system. Hence, proofs of consistency of the estimator would have to cope with a continuous family of invariant distributions defined over the parameter space.

may feed into the dynamics of the system in rather complex ways, traditional (data-based) estimators may be of limited applicability for non-linear dynamic models. Indeed, these estimators do not take into account the effects of parameter changes in the equilibrium dynamics, and hence they can only be applied to full-fledged, structural dynamic models under fairly specific conditions. In traditional estimation there is only a unique distribution generated by the data process, and such distribution is not influenced by the vector of parameters. For a simulation-based estimator, however, the following major analytical difficulty arises: each vector of parameters is manifested in a different dynamical system. Hence, proofs of consistency of the estimator would have to cope with a continuous family of invariant distributions defined over the parameter space.

An alternative route to the estimation of non-linear dynamic models is via the Euler equations (e.g., see Hansen and Singleton, 1982) where the vector of parameters is determined by a set of orthogonality conditions conforming the first-order conditions or Euler equations of the optimization problem. A main advantage of this approach is that one does not need to model the shock process or to know the functional dependence of the law of motion of the state variables on the vector of parameters, since the objective is to find the best fit for the Euler equations over available data samples, within the admissible region of parameter values. The estimation of the Euler equations can then be carried out by standard non-linear least squares or by some other generalized estimator (Hansen, 1982). However, model estimation via the Euler equations under traditional statistical methods is not always feasible. These methods are only valid for convex optimization problems with interior solutions in which technically the decision variables outnumber the parameters; moreover, the objective and feasibility constraints of the optimization problem must satisfy certain strict separability conditions along with the process of exogenous shocks. Sometimes the model may feature some latent variables or some private information which is not observed by the econometrician (e.g., shocks to preferences); lack of knowledge about these components of the model may preclude the specification of the Euler equations (e.g., Duffie and Singleton, 1993). An even more fundamental limitation is that the estimation is confined to orthogonality conditions generated by the Euler equations, whereas it may be of more economic relevance to estimate or test a model along some other dimensions such as those including certain moments of the invariant distributions or the process of convergence to such stationary solutions.

3.3.1 Calibration

Faced with these complex analytical problems, the economics literature has come up with many simplifying approaches for model estimation. Starting with the real business cycle literature (e.g., Cooley and Prescott, 1995), parameter values are often determined from independent evidence or from other parts of the theory not related to the basic facts selected for testing. This is loosely referred to as model calibration. Christiano and Eichembaum (1992) is a good example of this approach. They consider a business cycle model, and pin down parameter values from various steady-state conditions. In other words, the model is evaluated according to business cycle predictions, and it is calibrated to replicate empirical properties of balanced growth paths. As a matter of fact, Christiano and Eichembaum (1992) are able to provide standard errors for their estimates, and hence their analysis goes beyond most calibration exercises.

3.3.2 Simulation-Based Estimation

The aforementioned limitations of traditional estimation methods for non-linear systems along with advances in computing have fostered the more recent use of estimation and testing based upon simulations of the model. Estimation by model simulation offers more flexibility to evaluate the behavior of the model by computing statistics of its invariant distributions that can be compared with their data counterparts. But this greater flexibility inherent in simulation-based estimators entails a major computational cost: extensive model simulations may be needed to sample the entire parameter space. Relatively little is known about the family of models in which simulation-based estimators would have good asymptotic properties such as consistency and normality. These properties would seem a minimal requirement for a rigorous application of estimation methods under the rather complex and delicate techniques of numerical simulation in which approximation errors may propagate in unexpected ways.

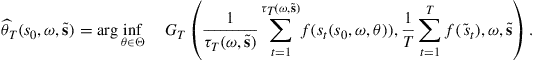

To fix ideas, we will focus on a simulated moments estimator (SME) put forward by Lee and Ingram (1991). This estimation method allows the researcher to assess the behavior of the model along various dimensions. Indeed, the conditions characterizing the estimation process may involve some moments of the model’s invariant distributions or some other features of the dynamics on which the desired vector of parameters must be selected.

Several elements conform the SME. First, one specifies a target function or function of interest which typically would characterize a selected set of moments of the invariant distribution of the model and those of the data generating process. Second, a notion of distance is defined between the selected statistics of the model and its data counterparts. The minimum distance between these statistics is attained at some vector of parameters ![]() in a space

in a space ![]() . Then, the estimation method yields a sequence of candidate solutions

. Then, the estimation method yields a sequence of candidate solutions ![]() over increasing finite samples of the model simulations

over increasing finite samples of the model simulations ![]() and data

and data ![]() so as to approximate the true value

so as to approximate the true value ![]() .

.

(a) The target function (or function of interest) ![]() is assumed to be continuous. This function may represent

is assumed to be continuous. This function may represent ![]() moments of an invariant distribution

moments of an invariant distribution ![]() under

under ![]() defined as

defined as ![]() for

for ![]() . The expected value of

. The expected value of ![]() over the invariant distribution of the data-generating process will be denoted by

over the invariant distribution of the data-generating process will be denoted by ![]() .

.

(b) The distance function ![]() is assumed to be continuous. The minimum distance is attained at a vector of parameter values

is assumed to be continuous. The minimum distance is attained at a vector of parameter values

![]() (31)

(31)

A typical specification of the distance function ![]() is the following quadratic form:

is the following quadratic form:

![]() (32)

(32)

where ![]() is a positive definite

is a positive definite ![]() matrix. Under certain standard assumptions (cf., Santos and Peralta-Alva, 2005, Theorem 3.2) one can show there exists an optimal solution

matrix. Under certain standard assumptions (cf., Santos and Peralta-Alva, 2005, Theorem 3.2) one can show there exists an optimal solution ![]() . Moreover, for the analysis below there is no restriction of generality to consider that

. Moreover, for the analysis below there is no restriction of generality to consider that ![]() is unique.

is unique.

(c) An estimation rule characterized by a sequence of distance functions ![]() and choices for the horizon

and choices for the horizon ![]() of the model simulations. This rule yields a sequence of estimated values

of the model simulations. This rule yields a sequence of estimated values ![]() from associated optimization problems with finite samples of the model simulations

from associated optimization problems with finite samples of the model simulations ![]() and data

and data ![]() . The estimated value

. The estimated value ![]() is obtained as

is obtained as

(33)

(33)

We assume that the sequence of continuous functions ![]() converges uniformly to function

converges uniformly to function ![]() for

for ![]() -almost all

-almost all ![]() , and the sequence of functions

, and the sequence of functions ![]() goes to

goes to ![]() for

for ![]() -almost all

-almost all ![]() . Note that both functions

. Note that both functions ![]() and

and ![]() are allowed to depend on the sequence of random shocks

are allowed to depend on the sequence of random shocks ![]() and data

and data ![]() , and

, and ![]() is a measure defined over

is a measure defined over ![]() and

and ![]() . These functions will usually depend on all information available up to time

. These functions will usually depend on all information available up to time ![]() . The rule

. The rule ![]() reflects that the length of model’s simulations may be different from that of data samples.

reflects that the length of model’s simulations may be different from that of data samples.

It should be stressed that problem (31) is defined over population characteristics of the model and of the data-generating process, whereas problem (33) is defined over statistics of finite simulations and data.

By the measurable selection theorem (Crauel, 2002) there exists a sequence of measurable functions ![]() . See Duffie and Singleton (1993) and Santos (2010) for asymptotic properties of this estimator. Sometimes vector

. See Duffie and Singleton (1993) and Santos (2010) for asymptotic properties of this estimator. Sometimes vector ![]() could be estimated independently, and hence we could then try to get an SME estimate of

could be estimated independently, and hence we could then try to get an SME estimate of ![]() . This mixed procedure can still recover consistency and it may save on computational cost. Consistency of the estimator can also be established for numerical approximations: the SME would converge to the true value

. This mixed procedure can still recover consistency and it may save on computational cost. Consistency of the estimator can also be established for numerical approximations: the SME would converge to the true value ![]() as the approximation error goes to zero.

as the approximation error goes to zero.

Another route to estimation is via the likelihood function. The existence of such functions imposes certain regularity conditions on the dynamics of the model which are sometimes hard to check. Fernandez-Villaverde and Rubio-Ramirez (2007) propose computation of the likelihood function by a particle filter. Numerical errors of the computed solution will also affect the likelihood function and the estimated parameter values (see Fernandez-Villaverde et al., 2006). Recent research on dynamic stochastic general equilibrium models has made extensive use of Monte Carlo methods such as the Markov Chain Monte Carlo method and the Metropolis-Hastings algorithm (e.g., Fernandez-Villaverde, 2012).

4 Recursive Methods for Non-optimal Economies

We now get into the more complex issue of numerical simulation of non-optimal economies. In general, these models cannot be computed by associated global optimization problems—ruling out the application of numerical DP algorithms as well as the derivation of error bounds for strongly concave optimization problems. This leaves the field open for algorithms based on approximating the Euler equations such as perturbation and projection methods. These approximation methods, however, search for smooth equilibrium functions; as already pointed out, the existence of continuous Markov equilibria cannot be insured under regularity assumptions. The existence problem is a technical issue which is mostly ignored in the applied literature. See Hellwig (1983) and Kydland and Prescott (1980) for early discussions on the non-existence of simple Markov equilibrium, and Abreu et al. (1990) for a related approach to repeated games.

As it is clear from these early contributions, simple Markov equilibrium may only fail to exist in the presence of multiple equilibria. Then, to insure uniqueness of equilibrium the literature has considered a stronger related condition: monotonicity of equilibrium. This monotonicity condition means that if the values of our predetermined state variables are increased today, then the resulting equilibrium path must always reflect higher values for these variables in the future. Monotonicity is hard to verify in models with heterogeneous agents with constraints that occasionally bind, or in models with incomplete financial markets, or with distorting taxes and externalities.

Indeed, most well-known cases of monotone dynamics have been confined to one-dimensional models. For instance, Coleman (1991), Greenwood and Huffman (1995), and Datta et al. (2002) consider versions of the one-sector neoclassical growth model and establish the existence of a simple Markov equilibrium by an Euler iteration method. This iterative method guarantees uniform convergence, but it does not display the contraction property as the DP algorithm. It is unclear how this approach may be extended to other models, and several examples have been found of non-existence of continuous simple Markov equilibria (cf. Kubler and Schmedders, 2002; Kubler and Polemarchakis, 2004; Santos, 2002).

Therefore, for non-optimal economies a recursive representation of equilibria may only be possible when conditioning over an expanded set of state variables. Following Duffie et al. (1994), the existence of a Markov equilibrium in a generalized space of variables is proved in Kubler and Schmedders (2003) for an asset-pricing model with collateral constraints. Feng et al. (2012) extend these existence results to other economies, and define a Markov equilibrium as a solution over an expanded state of variables that include the shadow values of investment. The addition of the shadow values of investment as state variables facilitates computation of the numerical solution. This formulation was originally proposed by Kydland and Prescott (1980), and later used in Marcet and Marimon (1998) for recursive contracts, and in Phelan and Stacchetti (2001) for a competitive economy with a representative agent. The main insight of Feng et al. (2012) is to develop a reliable and computable algorithm with good approximation properties for the numerical simulation of competitive economies with heterogeneous agents and market frictions including endogenous borrowing constraints. Before advancing to the study of the theoretical issues involved, we begin with a few examples to illustrate some of the pitfalls found in the computation of non-optimal economies.

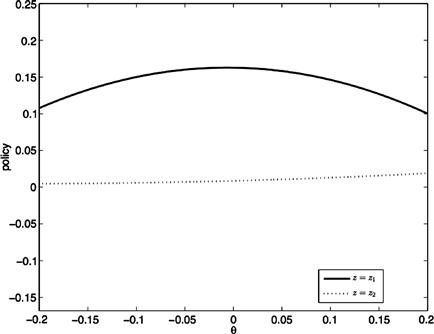

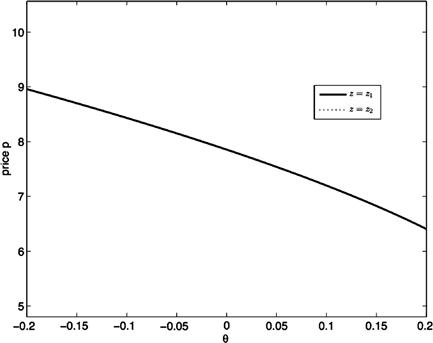

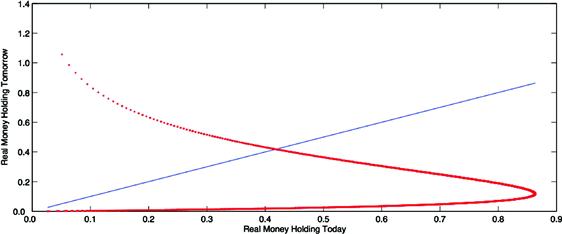

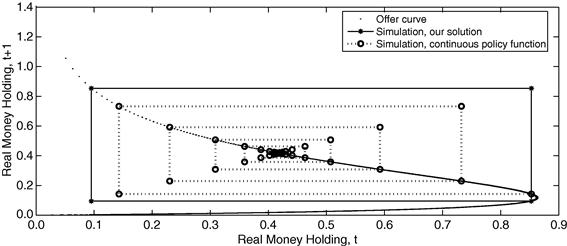

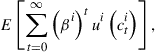

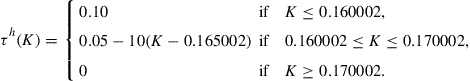

4.1 Problems in the Simulation of Non-Optimal Economies