Numerical Methods for Large-Scale Dynamic Economic Models

Lilia Maliar and Serguei Maliar, T24, Hoover Institution, Stanford, CA, USA, [email protected]

Abstract

We survey numerical methods that are tractable in dynamic economic models with a finite, large number of continuous state variables. (Examples of such models are new Keynesian models, life-cycle models, heterogeneous-agents models, asset-pricing models, multisector models, multicountry models, and climate change models.) First, we describe the ingredients that help us to reduce the cost of global solution methods. These are efficient nonproduct techniques for interpolating and approximating functions (Smolyak, stochastic simulation, and ![]() -distinguishable set grids), accurate low-cost monomial integration formulas, derivative-free solvers, and numerically stable regression methods. Second, we discuss endogenous grid and envelope condition methods that reduce the cost and increase accuracy of value function iteration. Third, we show precomputation techniques that construct solution manifolds for some models’ variables outside the main iterative cycle. Fourth, we review techniques that increase the accuracy of perturbation methods: a change of variables and a hybrid of local and global solutions. Finally, we show examples of parallel computation using multiple CPUs and GPUs including applications on a supercomputer. We illustrate the performance of the surveyed methods using a multiagent model. Many codes are publicly available.

-distinguishable set grids), accurate low-cost monomial integration formulas, derivative-free solvers, and numerically stable regression methods. Second, we discuss endogenous grid and envelope condition methods that reduce the cost and increase accuracy of value function iteration. Third, we show precomputation techniques that construct solution manifolds for some models’ variables outside the main iterative cycle. Fourth, we review techniques that increase the accuracy of perturbation methods: a change of variables and a hybrid of local and global solutions. Finally, we show examples of parallel computation using multiple CPUs and GPUs including applications on a supercomputer. We illustrate the performance of the surveyed methods using a multiagent model. Many codes are publicly available.

Keywords

High dimensions; Large scale; Projection; Perturbation; Stochastic simulation; Value function iteration; Endogenous grid; Envelope condition; Smolyak; ![]() -distinguishable set; Curse of dimensionality; Precomputation; Manifold; Parallel computation; Supercomputers

-distinguishable set; Curse of dimensionality; Precomputation; Manifold; Parallel computation; Supercomputers

JEL Classification Codes

C63; C68

1 Introduction

The economic literature is moving to richer and more complex dynamic models. Heterogeneous-agents models may have a large number of agents that differ in one or several dimensions, and models of firm behavior may have a large number of heterogeneous firms and different production sectors.1 Asset-pricing models may have a large number of assets; life-cycle models have at least as many state variables as the number of periods (years); and international trade models may have state variables of both domestic and foreign countries.2 New Keynesian models may have a large number of state variables and kinks in decision functions due to a zero lower bound on nominal interest rates.3 Introducing new features into economic models increases their complexity and dimensionality even further.4 Moreover, in some applications, dynamic economic models must be solved a large number of times under different parameters vectors.5

Dynamic economic models do not typically admit closed-form solutions. Moreover, conventional numerical solution methods—perturbation, projection, and stochastic simulation—become intractable (i.e., either infeasible or inaccurate) if the number of state variables is large. First, projection methods build on tensor-product rules; they are accurate and fast in models with few state variables but their cost grows rapidly as the number of state variables increases; see, e.g., a projection method of Judd (1992). Second, stochastic simulation methods rely on Monte Carlo integration and least-squares learning; they are feasible in high-dimensional problems but their accuracy is severely limited by the low accuracy of Monte Carlo integration; also, least-squares learning is often numerically unstable, see, e.g., a parameterized expectation algorithm of Marcet (1988). Finally, perturbation methods solve models in a steady state using Taylor expansions of the models’ equations. They are also practical for solving large-scale models but the range of their accuracy is uncertain, particularly in the presence of strong nonlinearities and kinks in decision functions; see, e.g., a perturbation method of Judd and Guu (1993).

In this chapter, we show how to re-design conventional projection, stochastic simulation, and perturbation methods to make them tractable (i.e., both feasible and accurate) in broad and empirically relevant classes of dynamic economic models with finite large numbers of continuous state variables.6

Let us highlight four key ideas. First, to reduce the cost of projection methods, Krueger and Kubler (2004) replace an expensive tensor-product grid with a low-cost, nonproduct Smolyak sparse grid. Second, Judd et al. (2011b) introduce a generalized stochastic simulation algorithm in which inaccurate Monte Carlo integration is replaced with accurate deterministic integration and in which unstable least-squares learning is replaced with numerically stable regression methods. Third, Judd et al. (2012) propose a ![]() -distinguishable set method that merges stochastic simulation and projection: it uses stochastic simulation to identify a high-probability area of the state space and it uses projection-style analysis to accurately solve the model in this area. Fourth, to increase the accuracy of perturbation methods, we describe two techniques. One is a change of variables of Judd (2003): it constructs many locally equivalent Taylor expansions and chooses the one that is most accurate globally. The other is a hybrid of local and global solutions by Maliar et al. (2013) that combines local solutions produced by a perturbation method with global solutions constructed to satisfy the model’s equation exactly.

-distinguishable set method that merges stochastic simulation and projection: it uses stochastic simulation to identify a high-probability area of the state space and it uses projection-style analysis to accurately solve the model in this area. Fourth, to increase the accuracy of perturbation methods, we describe two techniques. One is a change of variables of Judd (2003): it constructs many locally equivalent Taylor expansions and chooses the one that is most accurate globally. The other is a hybrid of local and global solutions by Maliar et al. (2013) that combines local solutions produced by a perturbation method with global solutions constructed to satisfy the model’s equation exactly.

Other ingredients that help us to reduce the cost of solution methods in high-dimensional problems are efficient nonproduct techniques for representing, interpolating, and approximating functions; accurate, low-cost monomial integration formulas; and derivative-free solvers. Also, we describe an endogenous grid method of Carroll (2005) and an envelope condition method of Maliar and Maliar (2013) that simplify rootfinding in the Bellman equation, thus reducing dramatically the cost of value function iteration. Furthermore, we show precomputation techniques that save on cost by constructing solution manifolds outside the main iterative cycle, namely, precomputation of intratemporal choice by Maliar and Maliar (2005a), precomputation of integrals by Judd et al. (2011d), and imperfect aggregation by Maliar and Maliar (2001).

Finally, we argue that parallel computation can bring us a further considerable reduction in computational expense. Parallel computation arises naturally on recent computers, which are equipped with multiple central processing units (CPUs) and graphics processing units (GPUs). It is reasonable to expect capacities for parallel computation will continue to grow in the future. Therefore, we complement our survey of numerical methods with a discussion of parallel computation tools that may reduce the cost of solving large-scale dynamic economic models. First, we revisit the surveyed numerical methods and we distinguish those methods that are suitable for parallelizing. Second, we review MATLAB tools that are useful for parallel computation, including parallel computing toolboxes for multiple CPUs and GPUs, a deployment tool for creating executable files and a mex tool for incorporating routines from other programming languages such as C or Fortran. Finally, we discuss how to solve large-scale applications using supercomputers; in particular, we provide illustrative examples on Blacklight supercomputer from the Pittsburgh Supercomputing Center.

The numerical techniques surveyed in this chapter proved to be remarkably successful in applications. Krueger and Kubler (2004) solve life-cycle models with 20–30 periods using a Smolyak method. Judd et al. (2011b) compute accurate quadratic solutions to a multiagent optimal growth model with up to 40 state variables using GSSA. Hasanhodzic and Kotlikoff (2013) used GSSA to solve life-cycle models with up to 80 periods. Kollmann et al. (2011b) compare the performance of six state-of-the-art methods in the context of real business cycle models of international trade with up to 20 state variables. Maliar et al. (2013) show hybrids of perturbation and global solutions that take just a few seconds to construct but that are nearly as accurate as the most sophisticated global solutions. Furthermore, the techniques described in the chapter can solve problems with kinks and strong nonlinearities; in particular, a few recent papers solve moderately large new Keynesian models with a zero lower bound on nominal interest rates: see Judd et al. (2011d, 2012), Fernández-Villaverde et al. (2012), and Aruoba and Schorfheide (2012). Also, the surveyed techniques can be used in the context of large-scale dynamic programming problems: see Maliar and Maliar (2012a,b). Finally, Aldrich et al. (2011), Valero et al. (2012), Maliar (2013), and Cai et al. (2013a,b) demonstrate that a significant reduction in cost can be achieved using parallel computation techniques and more powerful hardware and software.7

An important question is how to evaluate the quality of numerical approximations. In the context of complex large-scale models, it is typically hard to prove convergence theorems and to derive error bounds analytically. As an alternative, we control the quality of approximations numerically using a two-stage procedure outlined in Judd et al. (2011b). In Stage 1, we attempt to compute a candidate solution. If the convergence is achieved, we proceed to Stage 2, in which we subject a candidate solution to a tight accuracy check. Specifically, we construct a new set of points on which we want a numerical solution to be accurate (typically, we use a set of points produced by stochastic simulation) and we compute unit-free residuals in the model’s equations in all such points. If the economic significance of the approximation errors is small, we accept a candidate solution. Otherwise, we tighten up Stage 1; for example, we use a larger number of grid points, a more flexible approximating function, a more demanding convergence criterion, etc.

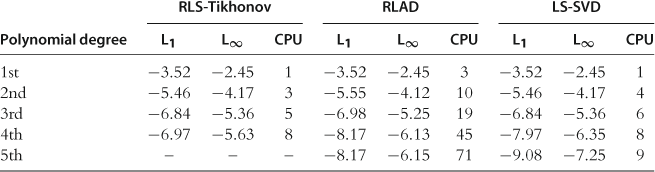

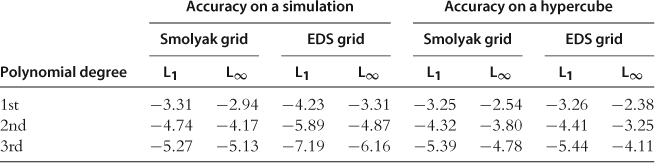

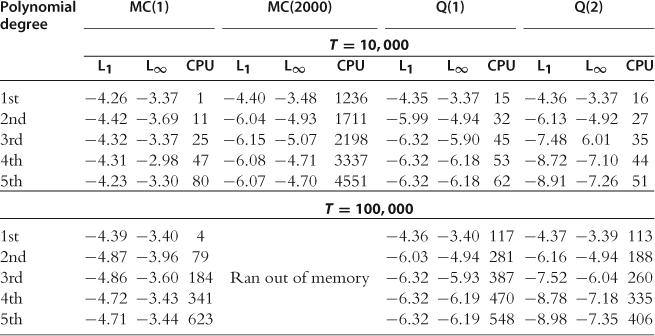

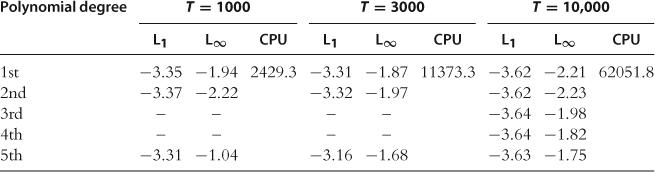

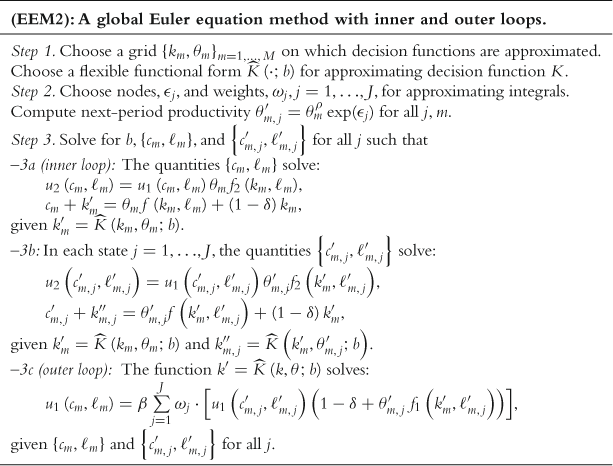

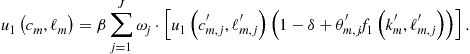

We assess the performance of the surveyed numerical solution methods in the context of a canonical optimal growth model with heterogeneous agents studied in Kollmann et al. (2011b). We implement six solution methods: three global Euler equation methods (a version of the Smolyak method in line with Judd et al. (2013), a generalized stochastic simulation algorithm of Judd et al. (2011b), and an ![]() -distinguishable set algorithm of Judd et al. (2012)); two global dynamic programming methods (specifically, two versions of the envelope condition method of Maliar and Maliar (2012b, 2013), one that solves for value function and the other that solves for derivatives of value function); and a hybrid of the perturbation and global solutions methods of Maliar et al. (2013). In our examples, we compute polynomial approximations of degrees up to 3, while the solution methods studied in Kollmann et al. (2011b) are limited to polynomials of degrees 2.

-distinguishable set algorithm of Judd et al. (2012)); two global dynamic programming methods (specifically, two versions of the envelope condition method of Maliar and Maliar (2012b, 2013), one that solves for value function and the other that solves for derivatives of value function); and a hybrid of the perturbation and global solutions methods of Maliar et al. (2013). In our examples, we compute polynomial approximations of degrees up to 3, while the solution methods studied in Kollmann et al. (2011b) are limited to polynomials of degrees 2.

The surveyed methods proved to be tractable, accurate, and reliable. In our experiments, unit-free residuals in the model’s equation are less than 0.001% on a stochastic simulation of 10,000 observations for most accurate methods. Our main message is that all three classes of methods considered can produce highly accurate solutions if properly implemented. Stochastic simulation methods become as accurate as projection methods if they build on accurate deterministic integration methods and numerically stable regression methods. Perturbation methods can deliver high accuracy levels if their local solutions are combined with global solutions using a hybrid style of analysis. Finally, dynamic programming methods are as accurate as Euler equation methods if they approximate the derivatives of value function instead of, or in addition to, value function itself.

In our numerical analysis, we explore a variety of interpolation, integration, optimization, fitting, and other computational techniques, and we evaluate the role of these techniques in accuracy and cost of the numerical solutions methods. At the end of this numerical exploration, we provide a detailed list of practical recommendations and tips on how computational techniques can be combined, coordinated, and implemented more efficiently in the context of large-scale applications.

The rest of the paper is organized as follows: in Section 2, we discuss the related literature. In Section 3, we provide a roadmap of the chapter. Through Sections 4–9, we introduce computational techniques that are tractable in problems with high dimensionality. In Section 10, we focus on parallel computation techniques. In Sections 11 and 12, we assess the performance of the surveyed numerical solution methods in the context of multiagent models. Finally, in Section 13, we conclude.

2 Literature Review

There is a variety of numerical methods in the literature for solving dynamic economic models; for reviews, see Taylor and Uhlig (1990), Rust (1996), Gaspar and Judd (1997), Judd (1998), Marimon and Scott (1999), Santos (1999), Christiano and Fisher (2000), Miranda and Fackler (2002), Adda and Cooper (2003), Aruoba et al. (2006), Kendrik et al. (2006), Heer and Maußner (2008, 2010), Lim and McNelis (2008), Stachursky (2009), Canova (2007), Den Haan (2010), Kollmann et al. (2011b). However, many of the existing methods are subject to the “curse of dimensionality”—that is, their computational expense grows exponentially with the dimensionality of state space.8

High-dimensional dynamic economic models represent three main challenges to numerical methods. First, the number of arguments in decision functions increases with the dimensionality of the problem and such functions become increasingly costly to approximate numerically (also, we may have more functions to approximate). Second, the cost of integration increases as the number of exogenous random variables increases (also, we may have more integrals to approximate). Finally, larger models are normally characterized by larger and more complex systems of equations, which are more expensive to solve numerically.

Three classes of numerical solution methods in the literature are projection, perturbation, and stochastic simulation. Projection methods are used in, e.g., Judd (1992), Gaspar and Judd (1997), Christiano and Fisher (2000), Aruoba et al. (2006), and Anderson et al. (2010). Conventional projection methods are accurate and fast in models with few state variables; however, they become intractable even in medium-scale models. This is because, first, they use expensive tensor-product rules both for interpolating decision functions and for approximating integrals and, second, because they use expensive Newton’s methods for solving nonlinear systems of equations.

Perturbation methods are introduced to economics in Judd and Guu (1993) and become a popular tool in the literature. For examples of applications of perturbation methods, see Gaspar and Judd (1997), Judd (1998), Collard and Juillard (2001, 2011), Jin and Judd (2002), Judd (2003), Schmitt-Grohé and Uribe (2004), Fernández-Villaverde and Rubio-Ramírez (2007), Aruoba et al. (2006), Swanson et al. (2006), Kim et al. (2008), Chen and Zadrozny (2009), Reiter (2009), Lombardo (2010), Adjemian et al. (2011), Gomme and Klein (2011), Maliar and Maliar (2011), Maliar et al. (2013), Den Haan and De Wind (2012), Mertens and Judd (2013), and Guerrieri and Iacoviello (2013) among others. Perturbation methods are practical for problems with very high dimensionality; however, the accuracy of local solutions may deteriorate dramatically away from the steady-state point in which such solutions are computed, especially in the presence of strong nonlinearities and kinks in decision functions.

Finally, simulation-based solution methods are introduced to the literature by Fair and Taylor (1983) and Marcet (1988). The former paper presents an extended path method for solving deterministic models while the latter paper proposes a parameterized expectation algorithm (PEA) for solving economic models with uncertainty. Simulation techniques are used in the context of many other solution methods, e.g., Smith (1991, 1993), Aiyagari (1994), Rust (1997), Krusell and Smith (1998), and Maliar and Maliar (2005a), as well as in the context of learning methods: see, e.g., Marcet and Sargent (1989), Tsitsiklis (1994), Bertsekas and Tsitsiklis (1996), Pakes and McGuire (2001), Evans and Honkapohja (2001), Weintraub et al. (2008), Powell (2011), Jirnyi and Lepetyuk (2011); see Birge and Louveaux (1997) for a review of stochastic programming methods and see also Fudenberg and Levine (1993) and Cho and Sargent (2008) for a related concept of self-conforming equilibria. Simulation and learning methods are feasible in problems with high dimensionality. However, Monte Carlo integration has a low rate of convergence—a square root of the number of observations—which considerably limits the accuracy of such methods since an infeasibly long simulation is needed to attain high accuracy levels. Moreover, least-squares learning is numerically unstable in the context of stochastic simulation.9

The above discussion raises three questions: first, how can one reduce the cost of conventional projection methods in high-dimensional problems (while maintaining their high accuracy levels)? Second, how can one increase the accuracy of perturbation methods (while maintaining their low computational expense)? Finally, how can one enhance their numerical stability of stochastic simulation methods and increase the accuracy (while maintaining a feasible simulation length)? These questions were addressed in the literature, and we survey the findings of this literature below.

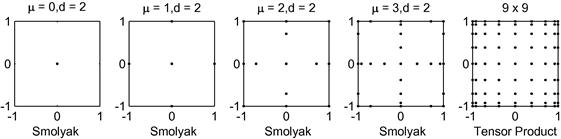

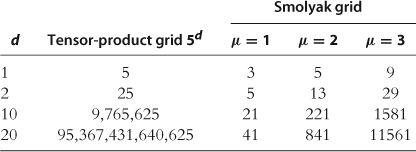

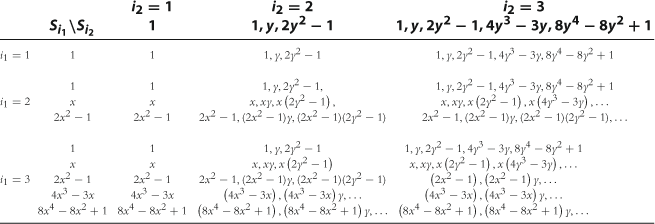

To make projection methods tractable in large-scale problems, Krueger and Kubler (2004) introduce to the economic literature a Smolyak sparse grid technique; see also Malin et al. (2011). The sparse grid technique, introduced to the literature by Smolyak (1963), selects only a small subset of tensor-product grid elements that are most important for the quality of approximation. The Smolyak grid reduces the cost of projection methods dramatically without a significant accuracy loss. Winschel and Krätzig (2010) apply the Smolyak method for developing state-space filters that are tractable in problems with high dimensionality. Fernández-Villaverde et al. (2012) use the Smolyak method to solve a new Keynesian model. Finally, Judd et al. (2013) modify and generalize the Smolyak method in various dimensions to improve its performance in economic applications.

To increase the accuracy of stochastic simulation methods, Judd et al. (2011b) introduce a generalized stochastic simulation algorithm (GSSA) which uses stochastic simulation as a way to obtain grid points for computing solutions but replaces an inaccurate Monte Carlo integration method with highly accurate deterministic (quadrature and monomial) methods. Furthermore, GSSA replaces the least-squares learning method with regression methods that are robust to ill-conditioned problems, including least-squares methods that use singular value decomposition, Tikhonov regularization, least-absolute deviations methods, and the principal component regression method. The key advantage of stochastic simulation and learning methods is that they solve models only in the area of the state space where the solution “lives.” Thus, they avoid the cost of finding a solution in those areas that are unlikely to happen in equilibrium. GSSA preserves this useful feature but corrects the shortcomings of the earlier stochastic simulation approaches. It delivers accuracy levels that are comparable to the best accuracy attained in the related literature.

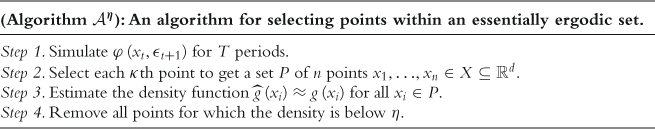

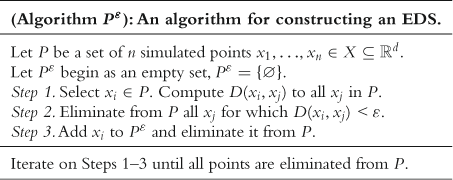

Furthermore, Judd et al. (2010, 2012) introduce two solution methods that merge stochastic simulation and projection techniques, a cluster grid algorithm (CGA) and ![]() -distinguishable set method (EDS). Both methods use stochastic simulation to identify a high-probability area of the state space, cover this area with a relatively small set of representative points, and use projection-style techniques to solve a model on the constructed set (grid) of points. The two methods differ in the way in which they construct representative points: CGA partitions the simulated data into clusters and uses the centers of the clusters as grid points while EDS selects a roughly evenly spaced subset of simulated points. These two approaches are used to solve moderately large new Keynesian models, namely, Judd et al. (2011b) and Aruoba and Schorfheide (2012) use clustering techniques while Judd et al. (2012) use the EDS construction.

-distinguishable set method (EDS). Both methods use stochastic simulation to identify a high-probability area of the state space, cover this area with a relatively small set of representative points, and use projection-style techniques to solve a model on the constructed set (grid) of points. The two methods differ in the way in which they construct representative points: CGA partitions the simulated data into clusters and uses the centers of the clusters as grid points while EDS selects a roughly evenly spaced subset of simulated points. These two approaches are used to solve moderately large new Keynesian models, namely, Judd et al. (2011b) and Aruoba and Schorfheide (2012) use clustering techniques while Judd et al. (2012) use the EDS construction.

As far as integration is concerned, there is a variety of methods that are tractable in high-dimensional problems, e.g., Monte Carlo methods, quasi-Monte Carlo methods, and nonparametric methods; see Niederreiter (1992), Geweke (1996), Rust (1997), Judd (1998), Pagan and Ullah (1999), Scott and Sain (2005) for reviews. However, the quality of approximations of integrals differs considerably across methods. For models with smooth decision functions, deterministic Gaussian quadrature integration methods with just a few nodes are far more accurate than Monte Carlo methods with thousands of random draws; see Judd et al. (2011a,b) for numerical examples. However, the cost of Gaussian product rules is prohibitive in problems with high dimensionality; see Gaspar and Judd (1997). To ameliorate the curse of dimensionality, Judd (1998) introduces to the economic literature nonproduct monomial integration formulas. Monomial formulas turn out to be a key piece for constructing global solution methods that are tractable in high-dimensional applications. Such formulas combine a low cost with high accuracy and can be generalized to the case of correlated exogenous shocks using a Cholesky decomposition; see Judd et al. (2011b) for a detailed description of monomial formulas, as well as for a numerical assessment of the accuracy and cost of such formulas in the context of economically relevant examples.

Finally, we focus on numerical methods that can solve large systems of nonlinear equations. In the context of Euler equation approaches, Maliar et al. (2011) argue that the cost of finding a solution to a system of equilibrium conditions can be reduced by dividing the whole system into two parts: intratemporal choice conditions (those that contain variables known at time ![]() ) and intertemporal choice conditions (those that contain some variables unknown at time

) and intertemporal choice conditions (those that contain some variables unknown at time ![]() ). Maliar and Maliar (2013) suggest a similar construction in the context of dynamic programming methods; specifically, they separate all optimality conditions into a system of the usual equations that identifies the optimal quantities and a system of functional equations that identifies a value function.

). Maliar and Maliar (2013) suggest a similar construction in the context of dynamic programming methods; specifically, they separate all optimality conditions into a system of the usual equations that identifies the optimal quantities and a system of functional equations that identifies a value function.

The system of intertemporal choice conditions (functional equations) must be solved with respect to the parameters of the approximating functions. In a high-dimensional model this system is large, and solving it with Newton-style methods may be expensive. In turn, the system of intratemporal choice conditions is much smaller in size; however, Newton’s methods can still be expensive because such a system must be solved a large number of times (in each grid point, time period, and future integration node). As an alternative to Newton’s methods, we advocate the use of a simple derivative-free fixed-point iteration method in line with the Gauss-Jacobi and Gauss-Siedel schemes. In particular, Maliar et al. (2011) propose a simple iteration-on-allocation solver that can find the intratemporal choice in many points simultaneously and that produces essentially zero approximation errors in all intratemporal choice conditions. See Wright and Williams (1984), Miranda and Helmberger (1988), and Marcet (1988) for early applications of fixed-point iteration to economic problems; see Judd (1998) for a review of various fixed-point iteration schemes. Also, see Eaves and Schmedders (1999) and Judd et al. (2012b), respectively, for applications of homotopy methods and efficient Newton’s methods to economic problems.

A February 2011 special issue of the Journal of Economic Dynamics and Control (henceforth, the JEDC project) studied the performance of six state-of-the-art solution methods in the context of an optimal growth model with heterogeneous agents (interpreted as countries). The model includes up to 10 countries (20 state variables) and features heterogeneity in preferences and technology, complete markets, capital adjustment cost, and elastic labor supply. The objectives of this project are outlined in Den Haan et al. (2011). The participating methods are a perturbation method of Kollmann et al. (2011a), a stochastic simulation and cluster grid algorithms of Maliar et al. (2011), a monomial rule Galerkin method of Pichler (2011), and a Smolyak’s collocation method of Malin et al. (2011). The methodology of the numerical analysis is described in Juillard and Villemot (2011). In particular, they develop a test suite that evaluates the accuracy of solutions by computing unit-free residuals in the model’s equations. The residuals are computed both on sets of points produced by stochastic simulation and on sets of points situated on a hypersphere. These two kinds of accuracy checks are introduced in Jin and Judd (2002) and Judd (1992), respectively; see also Den Haan and Marcet (1994) and Santos (2000) for other techniques for accuracy evaluation.

The results of the JEDC comparison are summarized in Kollmann et al. (2011b). The main findings are as follows: First, an increase in the degree of an approximating polynomial function by 1 increases the accuracy levels roughly by an order of magnitude (provided that other computational techniques, such as integration, fitting, intratemporal choice, etc., are sufficiently accurate). Second, methods that operate on simulation-based grids are very accurate in the high-probability area of the state space, while methods that operate on exogenous hypercube domains are less accurate in that area—although their accuracy is more uniformly distributed on a large multidimensional hypercube. Third, Monte Carlo integration is very inaccurate and restricts the overall accuracy of solutions. In contrast, monomial integration formulas are very accurate and reliable. Finally, approximating accurately the intratemporal choice is critical for the overall accuracy of solutions.

The importance of solving for intratemporal choice with a high degree of accuracy is emphasized by Maliar et al. (2011), who define the intertemporal choice (capital functions) parametrically; however, they define the intratemporal choice (consumption and leisure) nonparametrically as quantities that satisfy the intratemporal choice conditions given the future intertemporal choice. Under this construction, stochastic simulation and cluster grid algorithms of Maliar et al. (2011) solve for the intratemporal choice with essentially zero approximation errors (using an iteration-on-allocation solver). In contrast, the other methods participating in the JEDC comparison solve for some of the intertemporal choice variables parametrically and face such large errors in the intratemporal choice conditions that they dominate the overall accuracy of their solutions.

All six methods that participated in the JEDC comparison analysis work with Euler equations. In addition to the Euler equation approach, we are interested in constructing Bellman equation approaches that are tractable in high-dimensional applications. In general, there is no simple one-to-one relation between the Bellman and Euler equation approaches. For some problems, the value function is not differentiable, and we do not have Euler equations. On the contrary, for other problems (e.g., problems with distortionary taxation, externalities, etc.), we are able to derive the Euler equation even though such problems do not admit dynamic programming representations; for a general discussion of dynamic programming methods and their applications to economic problems, see Bertsekas and Tsitsiklis (1996), Rust (1996, 1997, 2008), Judd (1998), Santos (1999), Judd et al. (2003), Aruoba et al. (2006), Powell (2011), Fukushima and Waki (2011), Cai and Judd (2010, 2012), among others.

The Bellman and Euler equation approaches are affected by the curse of dimensionality in a similar way. Hence, the same kinds of remedies can be used to enhance their performance in large-scale applications, including nonproduct grid construction, monomial integration, and derivative-free solvers. However, there is an additional important computational issue that is specific to the dynamic programming methods: expensive rootfinding. To find a solution to the Bellman equation in a single grid point, conventional value function iteration (VFI) explores many different candidate points and, in each such point, it interpolates value function in many integration nodes to approximate expectations. Conventional VFI is costly even in low-dimensional problems; see Aruoba et al. (2006) for an assessment of its cost.

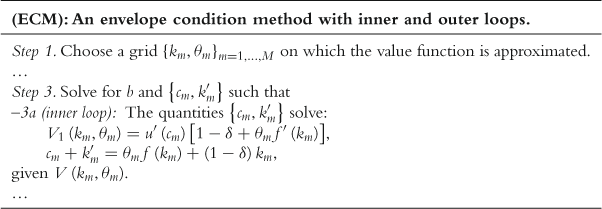

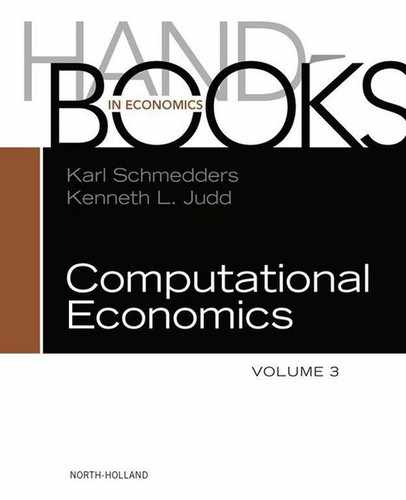

Two alternatives to conventional VFI are proposed in the literature. First, Carroll (2005) shows an endogenous grid method (EGM) that can significantly reduce the cost of conventional VFI by using future endogenous state variables for constructing grid points instead of the current ones; see also Barillas and Fernández-Villaverde (2007) and Villemot (2012) for applications of EGM to models with elastic labor supply and sovereign debt, respectively. Second, Maliar and Maliar (2013) show an envelope condition method (ECM) that replaces conventional expensive backward-looking iteration on value function with a cheaper, forward-looking iteration. Also, Maliar and Maliar (2013) develop versions of EGM and ECM that approximate derivatives of the value function and deliver much higher accuracy levels than similar methods approximating the value function itself. Finally, Maliar and Maliar (2012a,b) use a version of ECM to solve a multicountry model studied in Kollmann et al. (2011b) and show that value function iteration methods can successfully compete with most efficient Euler equation methods in high-dimensional applications.

Precomputation—computation of a solution to some model’s equations outside the main iterative cycle—is a technique that can reduce the cost of global solution methods even further. We review three examples. First, Maliar and Maliar (2005a) introduce a technique of precomputing of intratemporal choice. It constructs the intratemporal choice manifolds outside the main iterative cycle and uses the constructed manifolds inside the cycle as if a closed-form solution were available; see Maliar et al. (2011) for a further development of this technique. Second, Judd et al. (2011d) propose a technique of precomputation of integrals. This technique makes it possible to construct conditional expectation functions in the stage of initialization of a solution algorithm and, in effect, converts a stochastic problem into a deterministic one. Finally, Maliar and Maliar (2001, 2003a) introduce an analytical technique of imperfect aggregation, which allows us to characterize the aggregate behavior of a multiagent economy in terms of a one-agent model.

There are many other numerical techniques that are useful in the context of global solution methods. In particular, Tauchen (1986) and Tauchen and Hussey (1991) propose a discretization method that approximates a Markov process with a finite-state Markov chain. Such a discretization can be performed using nonproduct rules, combined with other computational techniques that are tractable in large-scale applications. For example, Maliar et al. (2010) and Young (2010) develop variants of Krusell and Smith’s (1998) method that replaces stochastic simulation with iteration on discretized ergodic distribution; the former method iterates backward as in Rios-Rull (1997) while the latter method introduces a forward iteration; see also Horvath (2012) for an extension. These two methods deliver the most accurate approximations to the aggregate law of motion in the context of Krusell and Smith’s (1998) model studied in the comparison analysis of Den Haan (2010). Of special interest are techniques that are designed for dealing with multiplicity of equilibria, such as simulation-based methods of Peralta-Alva and Santos (2005), a global solution method of Feng et al. (2009), and Gröbner bases introduced to economics by Kubler and Schmedders (2010). Finally, an interaction of a large number of heterogeneous agents is studied by agent-based computational economics. In this literature, behavioral rules of agents are not necessarily derived from optimization and that the interaction of the agents does not necessarily lead to an equilibrium; see Tesfatsion and Judd (2006) for a review.

We next focus on the perturbation class of solution methods. Specifically, we survey two techniques that increase the accuracy of local solutions produced by perturbation methods. The first technique—a change of variables—is introduced by Judd (2003), who pointed out that an ordinary Taylor expansion can be dominated in accuracy by other expansions implied by changes of variables (e.g., an expansion in levels may lead to more accurate solutions than that in logarithms or vice versa). All the expansions are equivalent in the steady state but differ globally. The goal is to choose an expansion that performs best in terms of accuracy on the relevant domain. In the context of a simple optimal growth model, Judd (2003) finds that a change of variables can increase the accuracy of the conventional perturbation method by two orders of magnitude. Fernández-Villaverde and Rubio-Ramírez (2006) show how to apply the method of change of variables to a model with uncertainty and an elastic labor supply.

The second technique—a hybrid of local and global solutions—is developed by Maliar et al. (2013). Their general presentation of the hybrid method encompasses some previous examples in the literature obtained using linear and loglinear solution methods; see Dotsey and Mao (1992) and Maliar et al. (2010, 2011). This perturbation-based method computes a standard perturbation solution, fixes some perturbation decision functions, and finds the remaining decision functions to exactly satisfy certain models’ equations. The construction of the latter part of the hybrid solution mimics global solution methods: for each point of the state space considered, nonlinear equations are solved either analytically (when closed-form solutions are available) or with a numerical solver. In numerical examples studied in Maliar et al. (2013), some hybrid solutions are orders of magnitude more accurate than the original perturbation solutions and are comparable in accuracy to solutions produced by global solution methods.

Finally, there is another technique that can help us to increase the accuracy of perturbation methods. Namely, it is possible to compute Taylor expansion around stochastic steady state instead of deterministic steady state. Such a steady state is computed by taking into account the attitude of agents toward risk. This idea is developed in Juillard (2011) and Maliar and Maliar (2011): the former article computes a stochastic steady state numerically, whereas the latter article uses analytical techniques of precomputation of integrals introduced in Judd et al. (2011d).

We complement our survey of efficient numerical methods with a discussion of recent developments in hardware and software that can help to reduce the cost of large-scale problems. Parallel computation is the main tool for dealing with computationally intensive tasks in recent computer science literature. A large number of central processing units (CPUs) or graphics processing units (GPUs) are connected with a fast network and are coordinated to perform a single job. Early applications of parallel computation to economic problems are dated back to Amman (1986, 1990), Chong and Hendry (1986), Coleman (1992), Nagurney and Zhang (1998); also, see Nagurney (1996) for a survey. But after the early contributions, parallel computation received little attention in the economic literature. Recently, the situation has begun to change. In particular, Doornik et al. (2006) review applications of parallel computation in econometrics; Creel (2005, 2008) and Creel and Goffe (2008) illustrate the benefits of parallel computation in the context of several economically relevant examples; Sims et al. (2008) employ parallel computation in the context of large-scale Markov switching models; Aldrich et al. (2011), Morozov and Mathur (2012) apply GPU computation to solve dynamic economic models; Durham and Geweke (2012) use GPUs to produce sequential posterior simulators for applied Bayesian inference; Cai et al. (2012) apply high-throughput computing (Condor network) to implement value function iteration; Valero et al. (2013) review parallel computing tools available in MATLAB and illustrate their application by way of examples; and, finally, Maliar (2013) assesses efficiency of parallelization using message passing interface (MPI) and open memory programming (OpenMP) in the context of high-performance computing (a Blacklight supercomputer).

In particular, Maliar (2013) finds that information transfers on supercomputers are far more expensive than on desktops. Hence, the problem must be sufficiently large to ensure gains from parallelization. The task assigned to each core must be at least few seconds if several cores are used, and it must be a minute or more if a large number (thousands) of cores are used. Maliar (2013) also finds that for small problems, OpenMP leads to a higher efficiency of parallelization than MPI. Furthermore, Valero et al. (2013) explore options for reducing the cost of a Smolyak solution method in the context of large-scale models studied in the JEDC project. Parallelizing the Smolyak method effectively is a nontrivial task because there are large information transfers between the outer and inner loops and because certain steps should be implemented in a serial manner. Nonetheless, considerable gains from parallelization are possible even on a desktop computer. Specifically, in a model with 16 state variables, Valero et al. (2013) attain the efficiency of parallelization of nearly 90% on a four-core machine via a parfor tool. Furthermore, translating expensive parts of the MATLAB code into C++ via a mex tool also leads to a considerable reduction in computational expense in some examples. However, transferring expensive computations to GPUs does not reduce the computational expense: a high cost of transfers between CPUs and GPUs outweighs the gains from parallelization.

3 The Chapter at a Glance

In this section, we provide a roadmap of the chapter and highlight the key ideas using a collection of simple examples.

A Neoclassical Stochastic Growth Model

The techniques surveyed in the chapter are designed for dealing with high-dimensional problems. However, to explain these techniques, we use the simplest possible framework, the standard one-sector neoclassical growth model. Later, in Sections 11 and 12, we show how such techniques can be applied for solving large-scale heterogeneous-agents models.

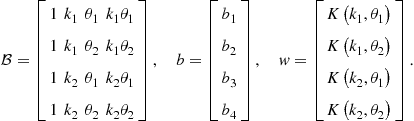

We consider a model with elastic labor supply. The agent solves:

(1)

(1)

![]() (2)

(2)

![]() (3)

(3)

where ![]() is given;

is given; ![]() is the expectation operator conditional on information at time

is the expectation operator conditional on information at time ![]() , and

, and ![]() are consumption, labor, end-of-period capital, and productivity level, respectively;

are consumption, labor, end-of-period capital, and productivity level, respectively; ![]() and

and ![]() are the utility and production functions, respectively, both of which are strictly increasing, continuously differentiable, and concave.

are the utility and production functions, respectively, both of which are strictly increasing, continuously differentiable, and concave.

Our goal is to solve for a recursive Markov equilibrium in which the decisions on next-period capital, consumption, and labor are made according to some time-invariant state contingent functions ![]() , and

, and ![]() .

.

A version of model (1)–(3) in which the agent does not value leisure and supplies to the market all her time endowment is referred to as a model with inelastic labor supply. Formally, such a model is obtained by replacing ![]() and

and ![]() with

with ![]() and

and ![]() in (1) and (2), respectively.

in (1) and (2), respectively.

First-Order Conditions

We assume that a solution to model (1)–(3) is interior and satisfies the first-order conditions (FOCs)

![]() (4)

(4)

![]() (5)

(5)

and budget constraint (2). Here, and further on, notation of type ![]() stands for the first-order partial derivative of a function

stands for the first-order partial derivative of a function ![]() with respect to a variable

with respect to a variable ![]() . Condition (4) is called the Euler equation.

. Condition (4) is called the Euler equation.

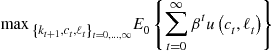

An Example of a Global Projection-Style Euler Equation Method

We approximate functions ![]() , and

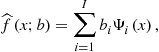

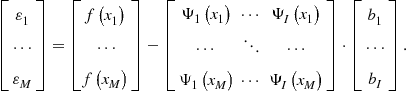

, and ![]() numerically. As a starting point, we consider a projection-style method in line with Judd (1992) that approximates these functions to satisfy (2)–(5) on a grid of points.

numerically. As a starting point, we consider a projection-style method in line with Judd (1992) that approximates these functions to satisfy (2)–(5) on a grid of points.

We use the assumed decision functions to determine the choices in the current period ![]() , as well as to determine these choices in

, as well as to determine these choices in ![]() possible future states

possible future states ![]() , and

, and ![]() .

.

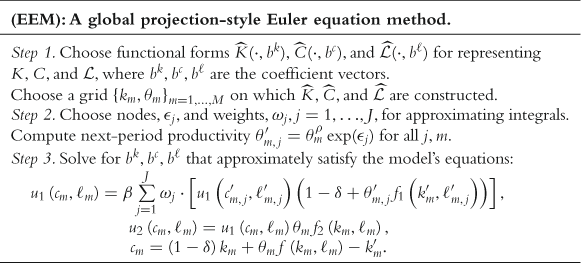

Unidimensional Grid Points and Basis Functions

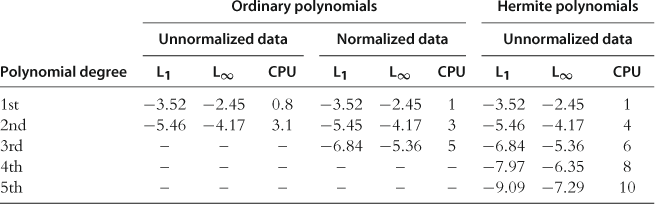

To solve the model, we discretize the state space into a finite set of grid points ![]() . Our construction of a multidimensional grid begins with unidimensional grid points and basis functions. The simplest possible choice is a family of ordinary polynomials and a grid of uniformly spaced points but many other choices are possible. In particular, a useful alternative is a family of Chebyshev polynomials and a grid composed of extrema of Chebyshev polynomials. Such polynomials are defined in an interval

. Our construction of a multidimensional grid begins with unidimensional grid points and basis functions. The simplest possible choice is a family of ordinary polynomials and a grid of uniformly spaced points but many other choices are possible. In particular, a useful alternative is a family of Chebyshev polynomials and a grid composed of extrema of Chebyshev polynomials. Such polynomials are defined in an interval ![]() , and thus, the model’s variables such as

, and thus, the model’s variables such as ![]() and

and ![]() must be rescaled to be inside this interval prior to any computation. In Table 1, we compare two choices discussed above: one is ordinary polynomials and a grid of uniformly spaced points and the other is Chebyshev polynomials and a grid of their extrema.

must be rescaled to be inside this interval prior to any computation. In Table 1, we compare two choices discussed above: one is ordinary polynomials and a grid of uniformly spaced points and the other is Chebyshev polynomials and a grid of their extrema.

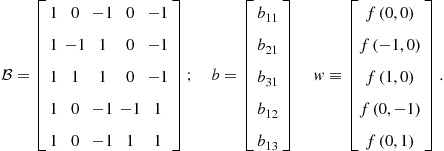

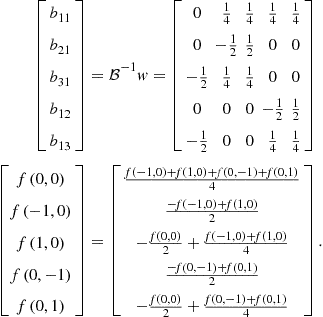

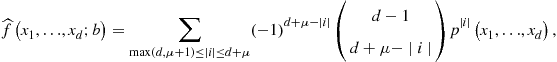

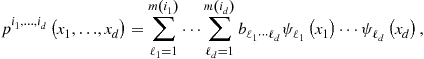

Table 1

Ordinary and Chebyshev unidimensional polynomials.

Notes: Ordinary polynomial of degree ![]() is given by

is given by ![]() ; Chebyshev polynomial of degree

; Chebyshev polynomial of degree ![]() is given by

is given by ![]() ; and finally,

; and finally, ![]() extrema of Chebyshev polynomials of degree

extrema of Chebyshev polynomials of degree ![]() are given by

are given by ![]() .

.

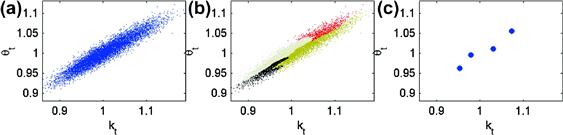

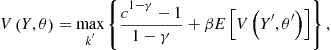

As we see, Chebyshev polynomials are just linear combinations of ordinary polynomials. If we had an infinite arithmetic precision on a computer, it would not matter which family of polynomials we use. But with a finite number of floating points, Chebyshev polynomials have an advantage over ordinary polynomials. To see the point, in Figure 1 we plot ordinary and Chebyshev polynomials of degrees from 1 to 5.

For the ordinary polynomial family, the basis functions look very similar on ![]() . Approximation methods using ordinary polynomials may fail because they cannot distinguish between similarly shaped polynomial terms such as

. Approximation methods using ordinary polynomials may fail because they cannot distinguish between similarly shaped polynomial terms such as ![]() and

and ![]() . In contrast, for the Chebyshev polynomial family, basis functions have very different shapes and are easy to distinguish.

. In contrast, for the Chebyshev polynomial family, basis functions have very different shapes and are easy to distinguish.

We now illustrate the use of Chebyshev polynomials for approximation by way of example.

It is possible to use Chebyshev polynomials with other grids, but the grid of extrema of Chebyshev polynomials is a perfect match. (The extrema listed in Table 1 are also seen in Figure 1.) First, the resulting approximations are uniformly accurate, and the error bounds can be constructed. Second, there is a unique set of coefficients such that a Chebyshev polynomial function of degree ![]() matches exactly

matches exactly ![]() given values, and this property carries over to multidimensional approximations that build on unidimensional Chebyshev polynomials. Finally, the coefficients that we compute in our example using an inverse problem can be derived in a closed form using the property of orthogonality of Chebyshev polynomials. The advantages of Chebyshev polynomials for approximation are emphasized by Judd (1992) in the context of projection methods for solving dynamic economic models; see Judd (1998) for a further discussion of Chebyshev as well as other orthogonal polynomials (Hermite, Legendre, etc.).

given values, and this property carries over to multidimensional approximations that build on unidimensional Chebyshev polynomials. Finally, the coefficients that we compute in our example using an inverse problem can be derived in a closed form using the property of orthogonality of Chebyshev polynomials. The advantages of Chebyshev polynomials for approximation are emphasized by Judd (1992) in the context of projection methods for solving dynamic economic models; see Judd (1998) for a further discussion of Chebyshev as well as other orthogonal polynomials (Hermite, Legendre, etc.).

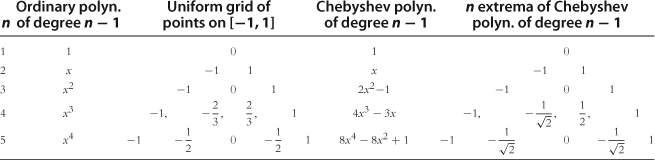

Multidimensional Grid Points and Basis Functions

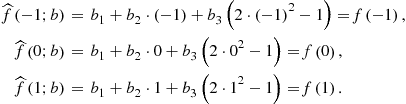

In Step 1 of the Euler equation algorithm, we must specify a method for approximating, representing, and interpolating two-dimensional functions. A tensor-product method constructs multidimensional grid points and basis functions using all possible combinations of unidimensional grid points and basis functions. As an example, let us approximate the capital decision function ![]() . First, we take two grid points for each state variable, namely,

. First, we take two grid points for each state variable, namely, ![]() and

and ![]() , and we combine them to construct two-dimensional grid points,

, and we combine them to construct two-dimensional grid points, ![]()

![]() . Second, we take two basis functions for each state variable, namely,

. Second, we take two basis functions for each state variable, namely, ![]() and

and ![]() , and we combine them to construct two-dimensional basis functions

, and we combine them to construct two-dimensional basis functions ![]() . Third, we construct a flexible functional form for approximating

. Third, we construct a flexible functional form for approximating ![]() ,

,

![]() (6)

(6)

Finally, we identify the four unknown coefficients ![]() such that

such that ![]() and

and ![]() coincide exactly in the four grid points constructed. That is, we write

coincide exactly in the four grid points constructed. That is, we write ![]() , where

, where

(7)

(7)

If ![]() has full rank, then coefficient vector

has full rank, then coefficient vector ![]() is uniquely determined by

is uniquely determined by ![]() . The obtained approximation (6) can be used to interpolate the capital decision function in each point off the grid.

. The obtained approximation (6) can be used to interpolate the capital decision function in each point off the grid.

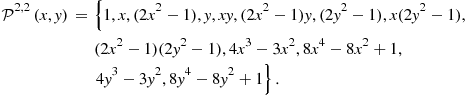

Tensor-product constructions are successfully used in the literature to solve economic models with few state variables; see, e.g., Judd (1992). However, the number of grid points and basis functions grows exponentially (i.e., as ![]() ) with the number of state variables

) with the number of state variables ![]() . For problems with high dimensionality, we need nonproduct techniques for constructing multidimensional grid points and basis functions.

. For problems with high dimensionality, we need nonproduct techniques for constructing multidimensional grid points and basis functions.

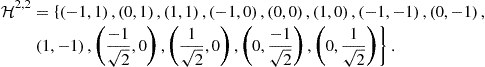

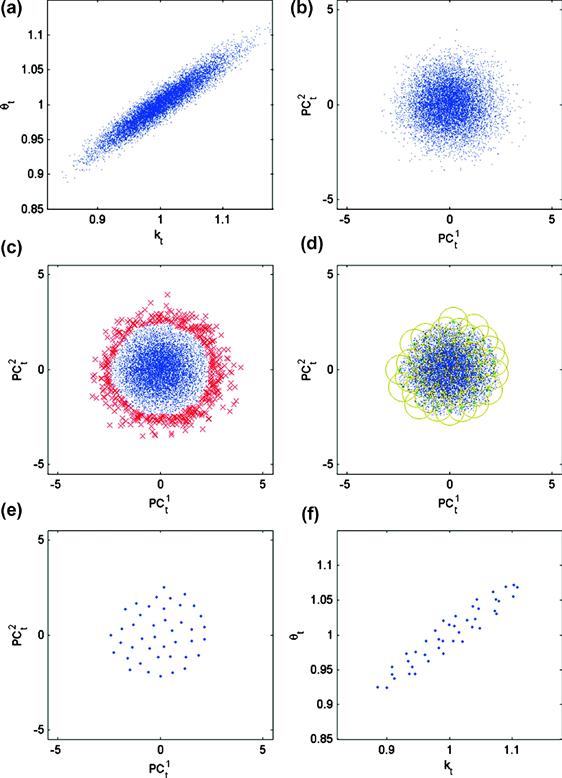

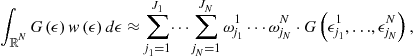

Nonproduct techniques for constructing multidimensional grids are the first essential ingredient of solution methods for high-dimensional problems. We survey several such techniques, including Smolyak (sparse), stochastic simulation, ![]() -distinguishable set, and cluster grids. The above techniques attack the curse of dimensionality in two different ways: one is to reduce the number of points within a fixed solution domain and the other is to reduce the size of the domain itself. To be specific, the Smolyak method uses a fixed geometry, a multidimensional hypercube, but chooses a small set of points within the hypercube. In turn, a stochastic simulation method uses an adaptive geometry: it places grid points exclusively in the area of the state space in which the solution “lives” and thus avoids the cost of computing a solution in those areas that are unlikely to happen in equilibrium. Finally, an

-distinguishable set, and cluster grids. The above techniques attack the curse of dimensionality in two different ways: one is to reduce the number of points within a fixed solution domain and the other is to reduce the size of the domain itself. To be specific, the Smolyak method uses a fixed geometry, a multidimensional hypercube, but chooses a small set of points within the hypercube. In turn, a stochastic simulation method uses an adaptive geometry: it places grid points exclusively in the area of the state space in which the solution “lives” and thus avoids the cost of computing a solution in those areas that are unlikely to happen in equilibrium. Finally, an ![]() -distinguishable set and cluster grid methods combine an adaptive geometry with an efficient discretization: they distinguish a high-probability area of the state space and cover such an area with a relatively small set of points. We survey these techniques in Section 4.

-distinguishable set and cluster grid methods combine an adaptive geometry with an efficient discretization: they distinguish a high-probability area of the state space and cover such an area with a relatively small set of points. We survey these techniques in Section 4.

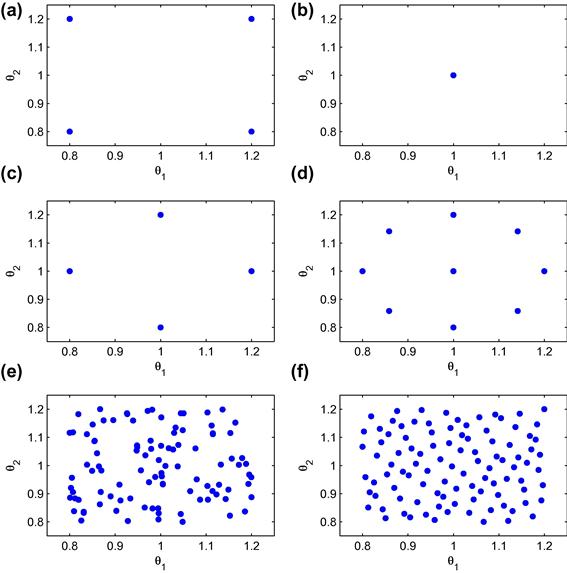

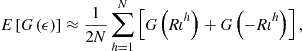

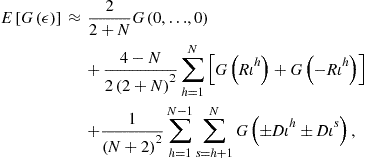

Numerical Integration

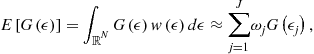

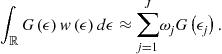

In Step 2 of the Euler equation algorithm, we need to specify a method for approximating integrals. As a starting point, we consider a simple two-node Gauss-Hermite quadrature method that approximates an integral of a function of a normally distributed variable ![]() with a weighted average of just two values

with a weighted average of just two values ![]() and

and ![]() that happen with probability

that happen with probability ![]() , i.e.,

, i.e.,

![]()

where ![]() is a bounded continuous function, and

is a bounded continuous function, and ![]() is a density function of a normal distribution. Alternatively, we can use a three-node Gauss-Hermite quadrature method, which uses nodes

is a density function of a normal distribution. Alternatively, we can use a three-node Gauss-Hermite quadrature method, which uses nodes ![]() and weights

and weights ![]() and

and ![]() or even a one-node Gauss-Hermite quadrature method, which uses

or even a one-node Gauss-Hermite quadrature method, which uses ![]() and

and ![]() .

.

Another possibility is to approximate integrals using Monte Carlo integration. We can make ![]() random draws and approximate an integral with a simple average of the draws,

random draws and approximate an integral with a simple average of the draws,

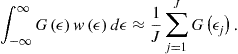

Let us compare the above integration methods using an example.

Note that the quadrature method with two nodes delivers the exact value of the integral. Even with just one node, the quadrature method can deliver an accurate integral if ![]() is close to linear (which is often the case in real business cycle models), i.e.,

is close to linear (which is often the case in real business cycle models), i.e., ![]() . To evaluate the accuracy of Monte Carlo integration, let us use

. To evaluate the accuracy of Monte Carlo integration, let us use ![]() , which is consistent with the magnitude of fluctuations in real business cycle models. Let us concentrate just on the term

, which is consistent with the magnitude of fluctuations in real business cycle models. Let us concentrate just on the term ![]() for which the expected value and standard deviation are

for which the expected value and standard deviation are ![]() and

and ![]() , respectively. The standard deviation depends on the number of random draws: with one random draw, it is

, respectively. The standard deviation depends on the number of random draws: with one random draw, it is ![]() and with 1,000,000 draws, it is

and with 1,000,000 draws, it is ![]() . The last number represents an (expected) error in approximating the integral and places a restriction on the overall accuracy of solutions that can be attained by a solution algorithm using Monte Carlo integration. An infeasibly long simulation is needed for a Monte Carlo method to deliver the same accuracy level as that of Gauss-Hermite quadrature in our example.

. The last number represents an (expected) error in approximating the integral and places a restriction on the overall accuracy of solutions that can be attained by a solution algorithm using Monte Carlo integration. An infeasibly long simulation is needed for a Monte Carlo method to deliver the same accuracy level as that of Gauss-Hermite quadrature in our example.

Why is Monte Carlo integration inefficient in the context of numerical methods for solving dynamic economic models? This is because we compute expectations as do econometricians, who do not know the true density function of the data-generating process and have no choice but to estimate such a function from noisy data using a regression. However, when solving an economic model, we do know the process for shocks. Hence, we can construct the “true” density function and we can use such a function to compute integrals very accurately, which is done by the Gauss-Hermite quadrature method.

In principle, the Gauss-Hermite quadrature method can be extended to an arbitrary dimension using a tensor-product rule; see, e.g., Gaspar and Judd (1997). However, the number of integration nodes grows exponentially with the number of shocks in the model. To ameliorate the curse of dimensionality, we again need to avoid product rules. Monomial formulas are a nonproduct integration method that combines high accuracy and low cost; this class of integration methods is introduced to the economic literature in Judd (1998). Integration methods that are tractable in problems with a large number of shocks are surveyed in Section 5.

Optimization Methods

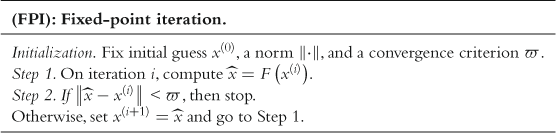

In Step 3 of the algorithm, we need to solve a system of nonlinear equations with respect to the unknown parameters vectors ![]() . In principle, this can be done with Newton-style optimization methods; see, e.g., Judd (1992). Such methods compute first and second derivatives of an objective function with respect to the unknowns and move in the direction of gradient descent until a solution is found. Newton methods are fast and efficient in small problems but become increasingly expensive when the number of unknowns increases. In high-dimensional applications, we may have thousands of parameters in approximating functions, and the cost of computing derivatives may be prohibitive. In such applications, derivative-free optimization methods are an effective alternative. In particular, a useful choice is a fixed-point iteration method that finds a root to an equation

. In principle, this can be done with Newton-style optimization methods; see, e.g., Judd (1992). Such methods compute first and second derivatives of an objective function with respect to the unknowns and move in the direction of gradient descent until a solution is found. Newton methods are fast and efficient in small problems but become increasingly expensive when the number of unknowns increases. In high-dimensional applications, we may have thousands of parameters in approximating functions, and the cost of computing derivatives may be prohibitive. In such applications, derivative-free optimization methods are an effective alternative. In particular, a useful choice is a fixed-point iteration method that finds a root to an equation ![]() by constructing a sequence

by constructing a sequence ![]() . We illustrate this method using an example.

. We illustrate this method using an example.

The advantage of fixed-point iteration is that it can iterate in this simple manner on objects of any dimensionality, for example, on a vector of the polynomial coefficients. The cost of this procedure does not grow considerably with the number of polynomial coefficients. The shortcoming is that it does not always converge. For example, if we wrote the above equation as ![]() and implemented fixed-point iteration

and implemented fixed-point iteration ![]() , we would obtain a sequence that diverges to

, we would obtain a sequence that diverges to ![]() starting from

starting from ![]() .

.

We survey derivative-free optimization methods in Section 6, and we show how to enhance their convergence properties by using damping. We apply fixed-point iteration in two different contexts. One is to solve for parameters vectors in approximating functions and the other is to solve for quantities satisfying a system of equilibrium conditions. The latter version of fixed-point iteration is advocated in Maliar et al. (2011) and is called iteration-on-allocation.

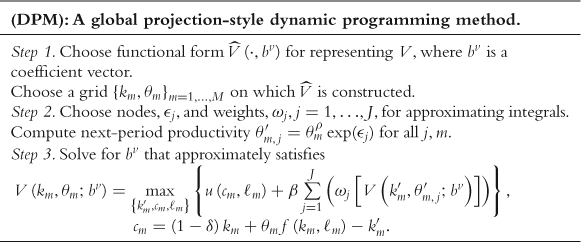

An Example of a Global Projection-Style Dynamic Programming Algorithm

We can also use a projection-style algorithm to solve for a value function ![]() .

.

Observe that this dynamic programming method also requires us to approximate multidimensional functions and integrals. Hence, our discussion about the curse of dimensionality and the need of nonproduct grids and nonproduct integration methods applies to dynamic programming algorithms as well. Furthermore, if a solution to the Bellman equation is interior, derivative-free optimization methods are also a suitable choice.

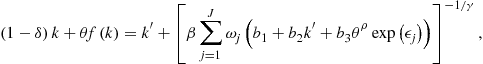

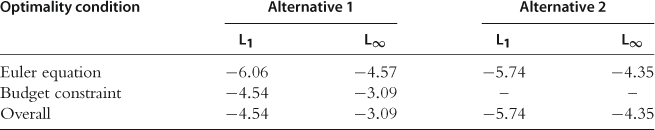

There are issues that are specific to dynamic programming problems, in addition to those issues that are common for dynamic programming and Euler equation methods. The first issue is that conventional value function iteration leads to systems of optimality conditions that are expensive to solve numerically. As an example, let us consider a version of model (8)–(10) with inelastic labor supply. We combine FOC ![]() with budget constraint (10) to obtain

with budget constraint (10) to obtain

![]() (11)

(11)

We parameterize ![]() with the simplest possible first-degree polynomial

with the simplest possible first-degree polynomial ![]() . Then, (11) is

. Then, (11) is

(12)

(12)

where we assume ![]() (and hence,

(and hence, ![]() ) for expository convenience. Solving (12) with respect to

) for expository convenience. Solving (12) with respect to ![]() is expensive even in this simple case. We need to find a root numerically by computing

is expensive even in this simple case. We need to find a root numerically by computing ![]() in a large number of candidate points

in a large number of candidate points ![]() , as well as by approximating expectations for each value of

, as well as by approximating expectations for each value of ![]() considered. For high-dimensional problems, the cost of conventional value function iteration is prohibitive.

considered. For high-dimensional problems, the cost of conventional value function iteration is prohibitive.

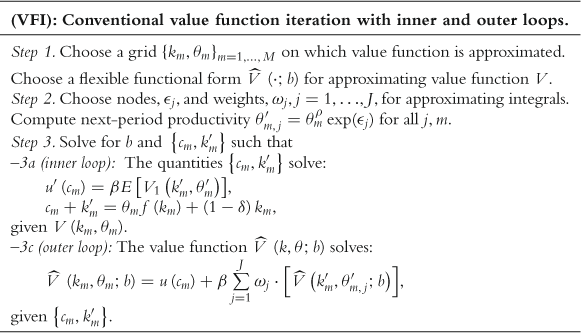

Two methods are proposed to simplify rootfinding to (12), one is an endogenous grid method of Carroll (2005) and the other is an envelope condition method of Maliar and Maliar (2013). The essence of Carroll’s (2005) method is the following simple observation: it is easier to solve (12) with respect to ![]() given

given ![]() than to solve it with respect to

than to solve it with respect to ![]() given

given ![]() . Hence, Carroll (2005) fixes future endogenous state variable

. Hence, Carroll (2005) fixes future endogenous state variable ![]() as grid points and treats current endogenous state variable

as grid points and treats current endogenous state variable ![]() as an unknown.

as an unknown.

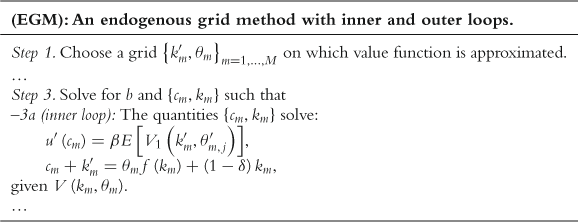

The envelope condition method of Maliar and Maliar (2012a,b) builds on a different idea. Namely, it replaces backward-looking iteration based on a FOC by forward-looking iteration based on an envelope condition. For a version of (8)–(10) with inelastic labor supply, the envelope condition is ![]() , which leads to an explicit formula for consumption

, which leads to an explicit formula for consumption

![]() (13)

(13)

where we again assume ![]() . Since

. Since ![]() follows directly from the envelope condition, and since

follows directly from the envelope condition, and since ![]() follows directly from budget constraint (9), no rootfinding is needed at all in this example.

follows directly from budget constraint (9), no rootfinding is needed at all in this example.

Our second issue is that the accuracy of Bellman equation approaches is typically lower than that of similar Euler equation approaches. This is because the object that is relevant for accuracy is the derivative of value function ![]() and not

and not ![]() itself (namely,

itself (namely, ![]() enters optimality conditions (11) and (13) and determines the optimal quantities and not

enters optimality conditions (11) and (13) and determines the optimal quantities and not ![]() ). If we approximate

). If we approximate ![]() with a polynomial of degree

with a polynomial of degree ![]() , we effectively approximate

, we effectively approximate ![]() with a polynomial of degree

with a polynomial of degree ![]() , i.e., we effectively “lose” one polynomial degree after differentiation with the corresponding accuracy loss. To avoid this accuracy loss, Maliar and Maliar (2013) introduce variants of the endogenous grid and envelope condition methods that solve for derivatives of value function instead of or in addition to the value function itself. These variants produce highly accurate solutions.

, i.e., we effectively “lose” one polynomial degree after differentiation with the corresponding accuracy loss. To avoid this accuracy loss, Maliar and Maliar (2013) introduce variants of the endogenous grid and envelope condition methods that solve for derivatives of value function instead of or in addition to the value function itself. These variants produce highly accurate solutions.

Precomputation

Steps inside the main iterative cycle are repeated a large number of times. Computational expense can be reduced if some expensive steps are taken outside the main iterative cycle, i.e., precomputed.

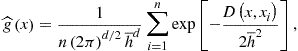

As an example, consider a method of precomputation of integrals introduced in Judd et al. (2011d). Let us parameterize ![]() by

by ![]() . The key here is to observe that we can compute the expectation of

. The key here is to observe that we can compute the expectation of ![]() up-front, without solving the model,

up-front, without solving the model,

![]()

where under assumption (10), we have ![]() . With this result, we rewrite Bellman equation (8) as

. With this result, we rewrite Bellman equation (8) as

![]()

Effectively, this converts a stochastic Bellman equation into a deterministic one. The effect of uncertainty is fully captured by a precomputed value ![]() . Judd et al. (2011d) also develop precomputation of integrals in the context of Euler equation methods.

. Judd et al. (2011d) also develop precomputation of integrals in the context of Euler equation methods.

Maliar and Maliar (2005b) and Maliar et al. (2011) introduce another kind of precomputation, one that constructs the solution manifolds outside the main iterative cycle. For example, consider (2) and (5),

![]()

If ![]() is fixed, we have a system of two equations with two unknowns,

is fixed, we have a system of two equations with two unknowns, ![]() and

and ![]() . Solving this system once is not costly but solving it repeatedly in each grid point and integration node inside the main iterative cycle may have a considerable cost even in our simple example. We precompute the choice of

. Solving this system once is not costly but solving it repeatedly in each grid point and integration node inside the main iterative cycle may have a considerable cost even in our simple example. We precompute the choice of ![]() and

and ![]() to reduce this cost. Specifically, outside the main iterative cycle, we specify a grid of points for

to reduce this cost. Specifically, outside the main iterative cycle, we specify a grid of points for ![]() , and we find a solution for

, and we find a solution for ![]() and

and ![]() in each grid point. The resulting solution manifolds

in each grid point. The resulting solution manifolds ![]() and

and ![]() give us

give us ![]() and

and ![]() for each given triple

for each given triple ![]() . Inside the main iterative cycle, we use the precomputed manifolds to infer consumption and labor choices as if their closed-form solutions in terms of

. Inside the main iterative cycle, we use the precomputed manifolds to infer consumption and labor choices as if their closed-form solutions in terms of ![]() were available.

were available.

Finally, Maliar and Maliar (2001, 2003a) introduce a technique of imperfect aggregation that makes it possible to precompute aggregate decision rules in certain classes of heterogeneous-agent economies. The precomputation methods are surveyed in Section 8.

Perturbation Methods

Perturbation methods approximate a solution in just one point—a deterministic steady state—using Taylor expansions of the optimality conditions. The costs of perturbation methods do not increase rapidly with the dimensionality of the problem. However, the accuracy of a perturbation solution may deteriorate dramatically away from the steady state in which such a solution is computed.

One technique that can increase the accuracy of perturbation methods is a change of variables proposed by Judd (2003). Among many different Taylor expansions that are locally equivalent, we must choose the one that is most accurate globally. For example, consider two approximations for the capital decision function,

![]()

Let ![]() be a steady state. If we set

be a steady state. If we set ![]() and

and ![]() , then

, then ![]() and

and ![]() are locally equivalent in a sense that they have the same values and the same derivatives in the steady state. Hence, we can compare their accuracy away from the steady state and choose the one that has a higher overall accuracy.

are locally equivalent in a sense that they have the same values and the same derivatives in the steady state. Hence, we can compare their accuracy away from the steady state and choose the one that has a higher overall accuracy.

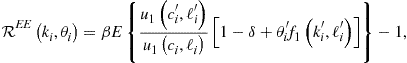

The other technique for increasing the accuracy of perturbation methods is a hybrid of local and global solutions developed in Maliar et al. (2013). The idea is to combine local solutions produced by a perturbation method with global solutions that are constructed to satisfy the model’s equations exactly. For example, assume that a first-order perturbation method delivers us a solution ![]() and

and ![]() in a model with inelastic labor supply. We keep the perturbation solution for

in a model with inelastic labor supply. We keep the perturbation solution for ![]() but replace

but replace ![]() with a new function for consumption

with a new function for consumption ![]() that is constructed to satisfy (9) exactly

that is constructed to satisfy (9) exactly

![]()

The obtained ![]() are an example of a hybrid solution. This particular hybrid solution produces a zero residual in the budget constraint unlike the original perturbation solution that produces nonzero residuals in all the model’s equations. The techniques for increasing the accuracy of perturbation methods are surveyed in Section 9.

are an example of a hybrid solution. This particular hybrid solution produces a zero residual in the budget constraint unlike the original perturbation solution that produces nonzero residuals in all the model’s equations. The techniques for increasing the accuracy of perturbation methods are surveyed in Section 9.

Parallel Computing

In the past decades, the speed of computers was steadily growing. However, this process has a natural limit (because the speed of electricity along the conducting material is limited and because the thickness and length of the conducting material are limited). The recent progress in solving computationally intense problems is related to parallel computation. We split a large problem into smaller subproblems, allocate the subproblems among many workers (computers), and solve them simultaneously. Each worker does not have much power but all together they can form a supercomputer. However, to benefit from this new computation technology, we need to design solution methods in a manner that is suitable for parallelizing. We also need hardware and software that support parallel computation. These issues are discussed in Section 10.

Methodology of the Numerical Analysis and Computational Details

Our solution procedure has two stages. In Stage 1, a method attempts to compute a numerical solution to a model. Provided that it succeeds, we proceed to Stage 2, in which we subject a candidate solution to a tight accuracy check. We specifically construct a set of points ![]() that covers an area in which we want the solution to be accurate, and we compute unit-free residuals in the model’s equations:

that covers an area in which we want the solution to be accurate, and we compute unit-free residuals in the model’s equations:

![]() (14)

(14)

(15)

(15)

![]() (16)

(16)

where ![]() , and

, and ![]() are the residuals in budget constraint (9), Euler equation (4), and FOC for the marginal utility of leisure (5). In the exact solution, such residuals are zero, so we can judge the quality of approximation by how far these residuals are away from zero.

are the residuals in budget constraint (9), Euler equation (4), and FOC for the marginal utility of leisure (5). In the exact solution, such residuals are zero, so we can judge the quality of approximation by how far these residuals are away from zero.

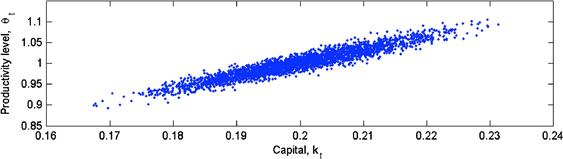

In most experiments, we evaluate residuals on stochastic simulation (we use 10,200 observations and we discard the first 200 observations to eliminate the effect of initial conditions). This style of accuracy checks is introduced in Jin and Judd (2002). In some experiments, we evaluate accuracy on deterministic sets of points that cover a given area in the state space such as a hypersphere or hypercube; this kind of accuracy check is proposed by Judd (1992). We must emphasize that we never evaluate residuals on points used for computing a solution in Stage 1 (in particular, for some methods the residuals in the grid points are zeros by construction) but we do so on a new set of points constructed for Stage 2.

If either a solution method fails to converge in Stage 1 or the quality of a candidate solution in Stage 2 is economically inacceptable, we modify the algorithm’s design (i.e., the number and placement of grid points, approximating functions, integration method, fitting method, etc.) and we repeat the computations until a satisfactory solution is produced.

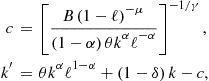

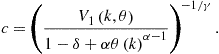

Parameterizations of the Model

In Sections (4)–(10), we report the results of numerical experiments. In those experiments, we parameterize model (1)–(3) with elastic labor supply by

![]() (17)

(17)

where parameters ![]() . We use

. We use ![]() , and we use

, and we use ![]() and

and ![]() to parameterize the stochastic process (3). For the utility parameters, our benchmark choice is

to parameterize the stochastic process (3). For the utility parameters, our benchmark choice is ![]() and

and ![]() but we also explore other values of

but we also explore other values of ![]() and

and ![]() .

.

In the model with inelastic labor supply, we assume ![]() and

and ![]() , i.e.,

, i.e.,

![]() (18)

(18)

Finally, we also study the latter model under the assumptions of full depreciation of capital, ![]() , and the logarithmic utility function,

, and the logarithmic utility function, ![]() ; this version of the model has a closed-form solution:

; this version of the model has a closed-form solution: ![]() and

and ![]() .

.

Reported Numerical Results

For each computational experiment, we report two accuracy measures, namely, the average and maximum absolute residuals across both the optimality conditions and ![]() test points. The residuals are represented in

test points. The residuals are represented in ![]() units, for example,

units, for example, ![]() means that a residual in the budget constraint is

means that a residual in the budget constraint is ![]() , and

, and ![]() means such a residual is

means such a residual is ![]() .

.

As a measure of computational expense, we report the running time. Unless specified separately, we use MATLAB, version 7.6.0.324 (R2008a) and a desktop computer with a Quad processor Intel® CoreTM i7 CPU920 @2.67 GHz, RAM 6,00 GB, and Windows Vista 64 bit.

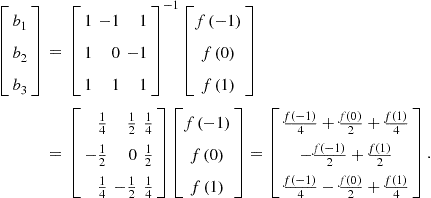

Acronyms Used

We summarize all the acronyms used in the chapter in Table 2.

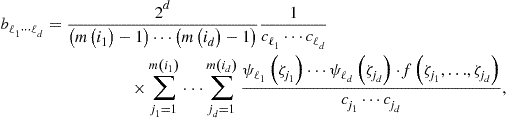

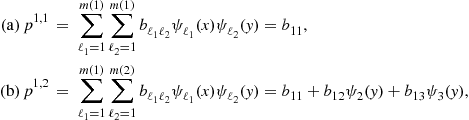

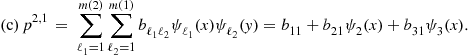

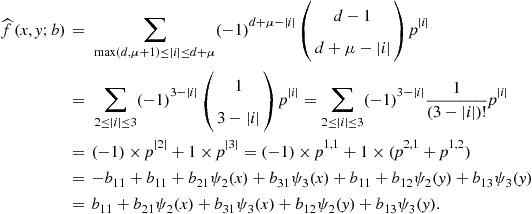

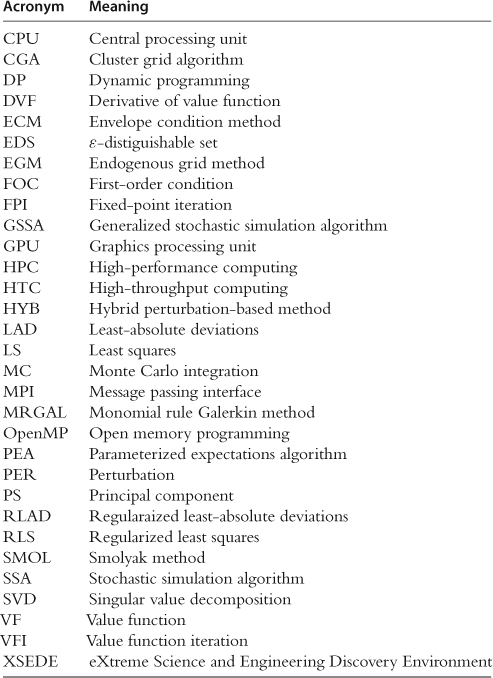

4 Nonproduct Approaches to Representing, Approximating, and Interpolating Functions