GDPS Metro

In this chapter we discuss the capabilities and prerequisites of the GDPS Metro offering. GDPS Metro supports both planned and unplanned situations, helping to maximize application availability and providing business continuity. A GDPS Metro solution delivers the following benefits:

•Near-continuous availability

•Disaster recovery (DR) across metropolitan distances

•Protection against multiple failures

GDPS Metro implemented in a dual leg configuration maintains three copies of your data so that even if one copy becomes unavailable, GDPS can continue to provide near-continuous availability and DR by using the remaining two copies.

•Recovery time objective (RTO) less than an hour

•Recovery point objective (RPO) of zero

The functions provided by GDPS Metro fall into two categories: Protecting your data and controlling the resources managed by GDPS. The following functions are among those that are included:

•Protecting your data:

– Ensuring the consistency of the secondary copies of your data in the event of a disaster or suspected disaster, including the option to also ensure zero data loss

– Transparent switching to either of the secondary disk sets using HyperSwap

•Controlling the resources managed by GDPS during normal operations, planned changes, and following a disaster:

– Monitoring and managing the state of the production z/OS systems and LPARs (shutdown, activating, deactivating, IPL, and automated recovery)

– Monitoring and managing z/VM guests (shutdown, activating, deactivating, IPL, and automated recovery)

– Managing the couple data sets and coupling facility recovery

– Support for switching your disk, or systems, or both, to another site

– User-customizable scripts that control how GDPS Metro reacts to specified error situations, which can also be used for planned events


3.1 Introduction to GDPS Metro

GDPS Metro is a continuous availability and disaster recovery solution that handles many types of planned and unplanned outages. As described in Chapter 1, “Introduction to business resilience and the role of GDPS” on page 1, most outages are planned, and even among unplanned outages, most are not disasters. GDPS Metro provides capabilities to address the required levels of availability across these outages and in a disaster scenario. These capabilities are described in this chapter.

3.1.1 Protecting data integrity and data availability with GDPS Metro

In 2.2, “Data consistency” on page 17, we point out that data integrity across primary and secondary volumes of data is essential to perform a database restart and accomplish an RTO of less than an hour. This section includes details about how GDPS Metro automation provides both data consistency if there are mirroring problems and data availability if there are primary disk problems.

The following types of disk problems trigger a GDPS automated reaction:

•Mirroring problems (Freeze triggers). No problem exists writing to the primary disk subsystem, but a problem exists mirroring the data to one or both of the secondary disk subsystems. For more information, see “GDPS Freeze function for mirroring failures” on page 55.

•Primary disk problems (HyperSwap triggers). There is a problem writing to the primary disk: either a hard failure, or the disk subsystem is not accessible.

GDPS Freeze function for mirroring failures

GDPS uses automation, keyed off events or messages, to stop all mirroring for a given replication leg when a remote copy failure occurs between one or more of the primary/secondary disk subsystem pairs on that replication leg. In particular, the GDPS automation uses the IBM PPRC Freeze and Run architecture, which has been implemented as part of Metro Mirror on IBM disk subsystems and also by other enterprise disk vendors. In this way, if the disk hardware supports the Freeze and Run architecture, GDPS can ensure consistency across all data in the sysplex (consistency group), regardless of disk hardware type.

This preferred approach differs from proprietary hardware approaches that work only for one type of disk hardware. For more information about data consistency with synchronous disk mirroring, see “Metro Mirror data consistency” on page 24.

When a mirroring failure occurs, this problem is classified as a Freeze trigger and GDPS stops activity across all disk subsystems for the affected replication leg at the time the initial failure is detected, thus ensuring that the dependent write consistency of the secondary disks for that replication leg is maintained. Note that in a dual-leg environment, mirroring activity for the other replication leg is not affected by the freeze.

The following process occurs when GDPS performs a Freeze:

1. Remote copy is suspended for all device pairs on the affected replication leg.

2. While the suspend command is being processed for each LSS, each device goes into a long busy state. When the suspend completes for each device, z/OS marks the device unit control block (UCB) in all connected operating systems to indicate an Extended Long Busy (ELB) state.

3. No I/Os can be issued to the affected devices until the ELB is thawed with the PPRC Run (or “thaw”) action or until it times out. (The consistency group timer setting commonly defaults to 120 seconds, although for most configurations a longer ELB is preferable.)

4. All paths between the Metro Mirrored disks on the affected replication leg are removed, which prevents further I/O to the associated secondary disks if Metro Mirror is accidentally restarted.

Because no I/Os are processed for a remote-copied volume during the ELB, dependent write logic ensures the consistency of the affected secondary disks. GDPS performs a Freeze for all LSS pairs that contain GDPS managed mirrored devices.
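The Freeze steps and the dependent write logic can be pictured with a small model. The following Python sketch is illustrative only: the names `Device`, `ReplicationLeg`, `freeze_device`, `freeze`, `run`, and `dependent_write` are hypothetical and are not GDPS, z/OS, or disk subsystem interfaces. As a simplification, a write to a device in ELB is reported as held (it returns False) rather than being queued. The sketch shows that the secondaries stay consistent even when one device is frozen before another.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    """One mirrored device pair on a replication leg (hypothetical model)."""
    name: str
    mirrored: bool = True       # remote copy active for this pair
    long_busy: bool = False     # Extended Long Busy (ELB) state
    primary: list = field(default_factory=list)
    secondary: list = field(default_factory=list)

    def write(self, record: str) -> bool:
        """Attempt a write; it is held (returns False) while in ELB."""
        if self.long_busy:
            return False
        self.primary.append(record)
        if self.mirrored:
            self.secondary.append(record)
        return True


class ReplicationLeg:
    def __init__(self, devices):
        self.devices = list(devices)
        self.paths_established = True

    def freeze_device(self, dev: Device) -> None:
        """Suspend remote copy for one pair and hold its I/O (ELB)."""
        dev.mirrored = False
        dev.long_busy = True

    def freeze(self) -> None:
        """Freeze every pair on the leg, then remove the mirroring paths."""
        for dev in self.devices:
            self.freeze_device(dev)
        self.paths_established = False

    def run(self) -> None:
        """Run ("thaw"): release the ELB; the pairs stay suspended."""
        for dev in self.devices:
            dev.long_busy = False


def dependent_write(log: Device, db: Device, txn: str) -> bool:
    """The database update is issued only after its log record is written."""
    return log.write(f"log:{txn}") and db.write(f"db:{txn}")


log, db = Device("LOG"), Device("DB")
leg = ReplicationLeg([log, db])

dependent_write(log, db, "T1")              # mirrored normally
leg.freeze_device(log)                      # LOG is frozen first ...
held = not dependent_write(log, db, "T2")   # ... so T2 stops at the log write
leg.freeze()                                # remaining devices are frozen
leg.run()                                   # policy decision: Go
dependent_write(log, db, "T3")              # runs on the primaries only
```

In this model the secondary copies contain only the records for T1. The database update for T2 is never issued because its log write was held, so the secondary never holds an update without the log record it depends on.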


Important: Because of the dependent write logic, it is not necessary for all LSSs to be frozen at the same instant. In a large configuration with many thousands of remote copy pairs, it is not unusual to see short gaps between the times when the Freeze command is issued to each disk subsystem. Because of the ELB, however, such gaps are not a problem.


After GDPS performs the Freeze and the consistency of the secondary disks on the affected leg is protected, the action GDPS takes next depends on the client’s PPRCFAILURE policy (also known as Freeze policy). For more information about the actions GDPS takes based on this policy, see “Freeze policy (PPRCFAILURE policy) options” on page 57.

GDPS Metro uses a combination of storage subsystem and sysplex triggers to automatically secure, at the first indication of a potential disaster, a data-consistent secondary copy of your data using the Freeze function. In this way, the secondary copy of the data is preserved in a consistent state, perhaps even before production applications are aware of any issues.

Ensuring the data consistency of the secondary copy ensures that a normal system restart can be performed instead of having to perform DBMS forward recovery actions. This is an essential design element of GDPS to minimize the time to recover the critical workloads in the event of a disaster in the primary site.

You can appreciate why such a process must be automated. When a device suspends, there is not enough time to launch a manual investigation process. The entire mirror for the affected leg must be frozen by stopping further I/O to it, and then the policy indicates whether production will continue to run with mirroring temporarily suspended, or whether all systems should be stopped to guarantee zero data loss.

In summary, a freeze is triggered as a result of a Metro Mirror suspension event for any primary disk in the GDPS configuration; that is, at the first sign that a duplex mirror is going out of the duplex state. When a device suspends, all attached systems are sent a “State Change Interrupt” (SCI). A message is issued in all of those systems and then each system must issue multiple I/Os to investigate the reason for the suspension event.

When GDPS performs a freeze, all primary devices in the Metro Mirror configuration suspend for the affected replication leg. This can result in significant SCI traffic and many messages in all of the systems. GDPS, with z/OS and microcode on the DS8000 disk subsystems, supports reporting suspensions in a summary message per LSS instead of at the individual device level. This feature is known as Summary Event Notification for PPRC Suspends (PPRCSUM). When compared to reporting suspensions on a per-device basis, PPRCSUM dramatically reduces the message traffic and extraneous processing associated with Metro Mirror suspension events and freeze processing.
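To get a feel for the scale of the reduction, the following Python sketch counts suspension notifications under a deliberately simplified model: one notification per reporting unit (device or LSS) per attached system. The function name and all of the figures (10,000 devices, 16 systems, 256 devices in every LSS) are assumptions chosen for illustration. They are not measurements, and the model ignores the follow-up I/Os that each system issues to investigate a suspension.

```python
import math


def suspend_notifications(devices: int, systems: int,
                          devices_per_lss: int, summarized: bool) -> int:
    """Count suspension notifications across all systems (simplified model)."""
    # With summary reporting the unit is the LSS; otherwise it is the device.
    units = math.ceil(devices / devices_per_lss) if summarized else devices
    return units * systems


# Hypothetical configuration: 10,000 devices, 16 systems, 256 devices per LSS.
per_device = suspend_notifications(10_000, 16, 256, summarized=False)
per_lss = suspend_notifications(10_000, 16, 256, summarized=True)
print(per_device, per_lss)  # 160000 640
```

Under these assumptions, summary reporting cuts the notification count from 160,000 to 640. Real configurations will differ, but the ratio tracks the number of devices per LSS.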

Freeze policy (PPRCFAILURE policy) options

As we have described, when a mirroring failure is detected on a replication leg, GDPS automatically and unconditionally performs a Freeze of that leg to secure a consistent set of secondary volumes in case the mirroring failure could be the first indication of a site failure. Because the primary disks are in the Extended Long Busy state as a result of the freeze and the production systems are locked out, GDPS must take some action. Here, there is no time to interact with the operator on an event-by-event basis. The action must be taken immediately. The action to be taken is determined by a customer policy setting, that is, the PPRCFAILURE policy option (also known as the Freeze policy option). GDPS will use this same policy setting after every Freeze event to determine what its next action should be. The policy can be specified at a leg level allowing a different policy specification for each of the replication legs. The following options are available:

•PPRCFAILURE=GO (Freeze and Go)

GDPS allows production systems to continue operation after mirroring is suspended.

•PPRCFAILURE=STOP (Freeze and Stop)

GDPS resets production systems while I/O is suspended.

•PPRCFAILURE=STOPLAST

This option is only relevant to dual-leg configurations. When it is specified, GDPS checks the mirroring status of the other replication leg. If the status of the other leg is OK, GDPS performs a Go. If not, and this is the last viable leg that GDPS has just frozen, GDPS performs a Stop.

•PPRCFAILURE=COND (Freeze and Stop conditionally)

GDPS tries to determine if a secondary disk caused the mirroring failure. If so, GDPS performs a Go. If not, GDPS performs a Stop.

•PPRCFAILURE=CONDLAST

This option is only relevant to dual-leg configurations. When it is specified, GDPS checks the mirroring status of the other replication leg. If the status of the other leg is OK, GDPS performs a Go. If not (the freeze was performed on the last viable leg), GDPS tries to determine if a secondary disk caused the mirroring failure. If so, GDPS performs a Go. If not, GDPS performs a Stop.
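The five options can be summarized as a single decision function. The following Python sketch is a reading of the descriptions above, not GDPS code: the names `Policy`, `Action`, and `post_freeze_action`, and the two boolean inputs, are hypothetical. `other_leg_ok` stands for "mirroring on the other replication leg is OK" and is False in a single-leg configuration. `secondary_caused` is True only when it could be positively determined that a secondary disk caused the mirroring failure.

```python
from enum import Enum


class Policy(Enum):
    GO = "GO"
    STOP = "STOP"
    STOPLAST = "STOPLAST"
    COND = "COND"
    CONDLAST = "CONDLAST"


class Action(Enum):
    GO = "Go"
    STOP = "Stop"


def post_freeze_action(policy: Policy,
                       other_leg_ok: bool = False,
                       secondary_caused: bool = False) -> Action:
    """Return the action taken after the Freeze for a given policy setting."""
    if policy is Policy.GO:
        return Action.GO
    if policy is Policy.STOP:
        return Action.STOP
    # The *LAST options perform a Go while the other leg still mirrors.
    if policy in (Policy.STOPLAST, Policy.CONDLAST) and other_leg_ok:
        return Action.GO
    if policy is Policy.STOPLAST:
        return Action.STOP
    # COND, or CONDLAST when the last viable leg was frozen:
    # Go only if a secondary disk is known to have caused the failure.
    return Action.GO if secondary_caused else Action.STOP


for policy in Policy:
    print(policy.value, post_freeze_action(policy, other_leg_ok=True).value)
```

Because `other_leg_ok` defaults to False, STOPLAST behaves like STOP and CONDLAST behaves like COND when only one replication leg exists.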

Freeze and Go

With this policy, after performing the Freeze, GDPS performs a Run action against all primary LSSs, which is also known as performing a Go. Performing a Go removes the ELB and allows production systems to continue using these devices. The devices will be in remote copy-suspended mode in relation to the secondary devices on the affected leg, so any further writes to these devices are no longer being mirrored to the secondary devices on that leg (writes will continue to be mirrored to the secondary devices on the other leg in dual-leg configurations, assuming that mirroring on that leg is in duplex status at the time). However, changes are being tracked by the hardware so that, later, only the changed data will be resynchronized to the secondary disks on the affected leg.

With this policy you avoid an unnecessary outage for a false freeze event, that is, if the trigger is simply a transient event. However, if the trigger turns out to be the first sign of an actual disaster, you might continue operating for an amount of time before all systems fail. Any updates made to the primary volumes during this time are not replicated to the secondary disks on the affected leg, and therefore are lost if you end up having to recover on those secondary disks. In addition, because the CF structures were updated after the secondary disks were frozen, the CF structure content is not consistent with the secondary disks. Therefore, the CF structures in either site cannot be used to restart workloads and log-based restart must be used when restarting applications.

This is not full forward recovery. It is forward recovery of any data, such as DB2 group buffer pools, that might have existed in a CF but might not have been written to disk yet. This results in prolonged recovery times. The duration depends on how much such data existed in the CFs at that time. With a Freeze and Go policy, you might consider tuning applications such as DB2 so that they harden such data on disk more frequently than they otherwise would.

Freeze and Go is a high availability option that avoids production outage for false freeze events. However, it carries a potential for data loss.

Freeze and Stop

With this policy, you can be assured that no updates are made to the primary volumes after the Freeze because all systems that can update the primary volumes are reset. This ensures that no more updates can occur to the primary disks because such updates would not be mirrored to the affected secondary disk, meaning that it would not be possible to achieve zero data loss if a failure occurs (or if the original trigger was an indication of a catastrophic failure) and recovery on the affected secondary disk is required.

You can choose to restart the systems when you want. For example, if this was a false freeze (that is, a false alarm), then you can quickly resynchronize the mirror and restart the systems only after the mirror is duplex.

If you are using duplexed coupling facility (CF) structures along with a Freeze and Stop policy, it might seem that you are guaranteed to use the duplexed instance of your structures if you must recover and restart your workload with the frozen secondary copy of your disks. However, this is not always the case. There can be rolling disaster scenarios where before, after, or during the freeze event, there is an interruption (perhaps failure of CF duplexing links) that forces CFRM to drop out of duplexing.

There is no guarantee that it is the structure instance in the surviving site that is kept. It is possible that CFRM keeps the instance in the site that is about to totally fail. In this case, there will not be an instance of the structure in the site that survives the failure.

To summarize, with a Freeze and Stop policy, if there is a surviving, accessible instance of application-related CF structures, this instance will be consistent with the frozen secondary disks. However, depending on the circumstances of the failure, even with structures duplexed across two sites you are not 100% guaranteed to have a surviving, accessible instance of the application structures and therefore you must have the procedures in place to restart your workloads without the structures.

Although a Stop policy can be used to ensure no data loss, if a failure occurs that is a false freeze event, that is, it is a transient failure that did not necessitate recovering using the frozen disks, it results in unnecessarily stopping the systems.

Freeze and Stop last

For dual-leg configurations, when this policy option is specified, after the Freeze, GDPS checks the status of mirroring on the other replication leg (the leg other than the one that was just frozen) to determine whether the leg that was just frozen was the last leg actively replicating data. If the other leg is still actively replicating data, GDPS performs a Go. But if the other leg is already frozen or mirroring status is not OK, GDPS performs a Stop.

When you have only one replication leg defined in your configuration (you have only one secondary copy of your data), using this policy specification is the same as using a Freeze and Stop policy.

Freeze and Stop conditional

Field experience has shown that most of the Freeze triggers are not necessarily the start of a rolling disaster, but are “False Freeze” events that do not necessitate recovery on the secondary disk. Examples of such events include connectivity problems to the secondary disks and secondary disk subsystem failure conditions.

With a COND policy, the action that GDPS takes after it performs the Freeze is conditional. GDPS tries to determine if the mirroring problem was as a result of a permanent or temporary secondary disk subsystem problem:

•If GDPS can determine that the freeze was triggered as a result of a secondary disk subsystem problem, GDPS performs a Go. That is, it allows production systems to continue to run by using the primary disks. However, updates will not be mirrored until the secondary disk can be fixed and Metro Mirror can be resynchronized.

•If GDPS cannot ascertain that the cause of the freeze was a secondary disk subsystem, GDPS operates on the assumption that this could still be the beginning of a rolling disaster in the primary site and performs a Stop, resetting all the production systems to guarantee zero data loss. Note that GDPS cannot always detect that a particular freeze trigger was caused by a secondary disk, so some freeze events that are in fact caused by a secondary disk could still result in a Stop.

For GDPS to determine whether a freeze trigger might have been caused by the secondary disk subsystem, the IBM DS8000 disk subsystems provide a special query capability known as the Query Storage Controller Status microcode function. If all disk subsystems in the GDPS managed configuration support this feature, GDPS uses this special function to query the secondary disk subsystems in the configuration to understand the state of the secondaries and whether one of these secondaries might have caused the freeze. If you use the COND policy setting but not all disks in your configuration support this function, GDPS cannot query the secondary disk subsystems, and the resulting action is a Stop.

This option can provide a good compromise where you can minimize the chance that systems would be stopped for a false freeze event and increase the chance of achieving zero data loss for a real disaster event.

Freeze and Stop conditional last

For dual-leg configurations, when this policy option is specified, after the Freeze, GDPS checks the status of mirroring on the other replication leg (the leg other than the one that was just frozen) to determine if the leg just frozen was the last leg actively replicating data. If the other leg is still actively replicating data, GDPS performs a Go. If the other leg is already frozen or mirroring status is not OK, GDPS performs conditional Stop processing; that is, it queries the secondary disk subsystem and performs a Go if, as a result of the query, it determines that the freeze was caused by the secondary, but performs a Stop if it cannot determine for sure that the problem was caused by the secondary.

When you only have one replication leg defined in your configuration (you only have one secondary copy of your data), using this policy specification is the same as using a Freeze and Stop conditional policy.

PPRCFAILURE policy selection considerations

The PPRCFAILURE policy option specification directly relates to recovery time and recovery point objectives (RTO and RPO, respectively), which are business objectives. Therefore, the policy option selection is really a business decision rather than an IT decision. If data associated with your transactions is high-value, it might be more important to ensure that no data associated with your transactions is ever lost, so you might decide on a Freeze and Stop policy.

If you have huge volumes of relatively low-value transactions, you might be willing to risk some lost data in return for avoiding unnecessary outages with a Freeze and Go policy. The Freeze and Stop Conditional policy attempts to minimize the chance of unnecessary outages and the chance of data loss; however, there is still a risk of either, however small.

The various PPRCFAILURE policy options, combined with the fact that the policy options are specified on a per replication leg basis (different policies can be specified for different legs), give you the flexibility to refine your policies to meet your unique business goals.

For example, if your RPO is zero, you can use the following PPRCFAILURE policy:

•For RL2, Freeze and Stop (PPRCFAILURE=STOP)

Because RS3 is your disaster recovery copy and you must ensure that you never lose data should you ever have to recover and run on the RS3 disk, you must always unconditionally stop the systems to ensure that no further updates occur to the primary disks that could be lost in a recovery scenario.

•For RL1, Freeze and Stop on last leg only (STOPLAST)

You do not need to take a production outage when Metro Mirror freezes on the high-availability leg if RL2 is still functional and continues to provide disaster recovery protection. However, if RL2 is not functional when Metro Mirror on RL1 suspends, you might want to at least retain the capability to recover on RS2 disk with zero data loss if it becomes necessary.

However, if you want to avoid unnecessary outages at the risk of losing data if there is an actual disaster, you can specify Freeze and Go for both of your replication legs.
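The RPO-zero example can be walked through scenario by scenario. The following Python sketch uses a plain dictionary as a stand-in for the per-leg policy. It is not GDPS policy syntax, and the names `POLICY_RPO_ZERO`, `POLICY_AVAILABILITY`, and `action_after_freeze` are hypothetical. Only the GO, STOP, and STOPLAST settings used in this example are modeled.

```python
# Hypothetical per-leg policy tables; these are not GDPS definitions.
POLICY_RPO_ZERO = {"RL1": "STOPLAST", "RL2": "STOP"}
POLICY_AVAILABILITY = {"RL1": "GO", "RL2": "GO"}


def action_after_freeze(frozen_leg: str, mirroring_ok: dict, policy: dict) -> str:
    """Return "Go" or "Stop" for a freeze on frozen_leg.

    mirroring_ok maps each leg name to its mirroring status at the time
    of the freeze (True means the leg was replicating normally).
    """
    setting = policy[frozen_leg]
    if setting == "GO":
        return "Go"
    if setting == "STOP":
        return "Stop"
    if setting == "STOPLAST":
        other_ok = any(ok for leg, ok in mirroring_ok.items()
                       if leg != frozen_leg)
        return "Go" if other_ok else "Stop"
    raise ValueError(f"setting not modeled in this sketch: {setting}")


both_ok = {"RL1": True, "RL2": True}
rl2_down = {"RL1": True, "RL2": False}

print(action_after_freeze("RL1", both_ok, POLICY_RPO_ZERO))   # RL2 still protects
print(action_after_freeze("RL2", both_ok, POLICY_RPO_ZERO))   # DR leg froze
print(action_after_freeze("RL1", rl2_down, POLICY_RPO_ZERO))  # last leg froze
```

With the RPO-zero table, production continues only in the first scenario, where the disaster recovery leg is still replicating. With the availability-oriented table, every scenario results in a Go.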

GDPS HyperSwap function

If there is a problem writing to or accessing the primary disk because of a failing, failed, inaccessible, or non-responsive disk, there is a need to swap from the primary disks to one of the sets of secondary disks.

GDPS Metro delivers a powerful function known as HyperSwap. HyperSwap provides the ability to swap from using the primary devices in a mirrored configuration to using what had been one of the sets of secondary devices, in a manner that is transparent to the production systems and applications using these devices. Before the availability of HyperSwap, a transparent disk swap was not possible. All systems using the primary disk would have been shut down (or might have failed, depending on the nature and scope of the failure) and would have been re-IPLed using the secondary disks. Disk failures were often a single point of failure for the entire sysplex.

With HyperSwap, such a switch can be accomplished without IPL and with just a brief hold on application I/O. The HyperSwap function is completely controlled by automation, thus allowing all aspects of the disk configuration switch to be controlled through GDPS.

HyperSwap can be invoked in two ways:

•Planned HyperSwap

A planned HyperSwap is invoked by operator action using GDPS facilities. One example of a planned HyperSwap is where a HyperSwap is initiated in advance of planned disruptive maintenance to a disk subsystem.

•Unplanned HyperSwap

An unplanned HyperSwap is invoked automatically by GDPS, triggered by events that indicate a primary disk problem.

Primary disk problems can be detected as a direct result of an I/O operation to a specific device that fails because of a reason that indicates a primary disk problem such as:

– No paths available to the device

– Permanent error

– I/O timeout

In addition to a disk problem being detected as a result of an I/O operation, it is also possible for a primary disk subsystem to proactively report that it is experiencing an acute problem. The IBM DS8000 provides a special microcode function known as the Storage Controller Health Message Alert capability. Problems of different severity are reported by disk subsystems that support this capability. Those problems classified as acute are also treated as HyperSwap triggers. After systems are swapped to use the secondary disks, the disk subsystem and operating system can try to perform recovery actions on the former primary without impacting applications since the applications are no longer using those disks.

Planned and unplanned HyperSwap have requirements in terms of the physical configuration, such as having to be symmetrically configured. As long as a client’s environment meets these requirements, there is no special enablement required to perform planned swaps. Unplanned swaps are not enabled by default and must be enabled explicitly as a policy option. This is described in more detail in “Preferred Swap Leg and HyperSwap (Primary Failure) policy options” on page 62.

When a swap is initiated, GDPS always validates various conditions to ensure that it is safe to swap. For example, if the mirror is not fully duplex on a given leg, that is, not all volume pairs are in a duplex state, a swap cannot be performed on that leg. The way that GDPS reacts to such conditions changes depending on the condition detected and whether the swap is a planned or unplanned swap.

Assuming that there are no show-stoppers and the swap proceeds, for both planned and unplanned HyperSwap, the systems that are using the primary volumes will experience a temporary pause in I/O processing. GDPS blocks I/O both at the channel subsystem level by performing a Freeze which results in all disks going into Extended Long Busy, and also in all systems, where I/O is quiesced at the operating system (UCB) level. This is to ensure that no systems use the disks until the switch is complete. During the time when I/O is paused, the following process is completed:

1. The Metro Mirror configuration is physically switched. This includes physically changing the secondary disk status to primary. Secondary disks are protected and cannot be used by applications. Changing their status to primary allows them to come online to systems and be used.

2. The disks will be logically switched in each of the systems in the GDPS configuration. This involves switching the internal pointers in the operating system control blocks (UCBs). After the switch, the operating system will point to the former secondary devices which will be the new primary devices.

3. Finally, the systems resume operation using the new, swapped-to primary devices. The applications are not aware of the fact that different devices are now being used.
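The swap sequence can be sketched as a small model. The following Python code is illustrative only: `Pair`, `Ucb`, and `hyperswap` are hypothetical names, the device identifiers are invented, and the real processing is carried out by GDPS, z/OS, and the disk subsystems rather than by application code. The sketch checks that all pairs are duplex, pauses I/O, switches the roles of the disks, repoints each system's control blocks, and resumes I/O.

```python
from dataclasses import dataclass


@dataclass
class Pair:
    primary: str              # device currently in the primary role
    secondary: str            # device currently in the secondary role
    state: str = "duplex"     # "duplex" or "suspended"


@dataclass
class Ucb:
    """Hypothetical stand-in for a z/OS unit control block."""
    device: str               # physical device this UCB points at
    quiesced: bool = False


def hyperswap(pairs: dict, systems: dict) -> None:
    """Swap every volume; pairs maps volser -> Pair and systems maps
    system name -> {volser -> Ucb}."""
    if any(p.state != "duplex" for p in pairs.values()):
        raise RuntimeError("swap not safe: not all pairs are duplex")

    all_ucbs = [u for ucbs in systems.values() for u in ucbs.values()]
    for ucb in all_ucbs:                  # pause I/O in every system
        ucb.quiesced = True

    for pair in pairs.values():           # 1. physical switch of roles
        pair.primary, pair.secondary = pair.secondary, pair.primary
        pair.state = "suspended"          # assumption: not re-mirrored here

    for ucbs in systems.values():         # 2. logical switch of pointers
        for volser, ucb in ucbs.items():
            ucb.device = pairs[volser].primary

    for ucb in all_ucbs:                  # 3. resume I/O
        ucb.quiesced = False


pairs = {"VOL001": Pair("RS1.0100", "RS2.0100"),
         "VOL002": Pair("RS1.0101", "RS2.0101")}
systems = {name: {v: Ucb(p.primary) for v, p in pairs.items()}
           for name in ("SYSA", "SYSB")}
hyperswap(pairs, systems)
```

After the call, every UCB in both systems points at the former secondary device, while the volume serial that applications use is unchanged. That is the sense in which the swap is transparent to applications.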

This brief pause during which systems are locked out of performing I/O is known as the User Impact Time. In benchmark measurements at IBM using currently supported releases of GDPS and IBM DS8000 disk subsystems, the User Impact Time to swap 10,000 pairs across 16 systems during an unplanned HyperSwap was less than 10 seconds. Most implementations are actually much smaller than this and typical impact times in a well-configured environment using the most current storage and server hardware are measured in seconds. Although results will depend on your configuration, these numbers give you a high-level idea of what to expect.

HyperSwap can be executed on either replication leg in a GDPS Metro dual-leg environment. For a planned swap, you must specify which leg you want to use for the swap. For an unplanned swap, which leg is chosen depends on many factors, including your HyperSwap policy. This is described in more detail in “Preferred Swap Leg and HyperSwap (Primary Failure) policy options” on page 62.

After a replication leg is selected for the HyperSwap, GDPS swaps all devices on the selected replication leg. Just as the Freeze function applies to the entire consistency group, HyperSwap is for the entire consistency group. For example, if a single mirrored volume fails and HyperSwap is invoked, processing is swapped to one of the sets of secondary devices for all primary volumes in the configuration, including those in other, unaffected, disk subsystems. This is to ensure that all primary volumes remain in the same site. If HyperSwap were to swap only the failed LSS, you would then have several primaries in one location, and the remainder in another location. This would make for a significantly complex environment to operate and administer I/O configurations.

Incremental Resynchronization

For dual-leg configurations, when a disk switch or recovery on one of the secondaries occurs, MTMM provides for a capability known as “incremental resynchronization” (IR). Assume your RS1 disks are the current primaries and the RS2 and RS3 disks are the current secondaries. If you switch from using RS1 to using RS2 as your primary disks, to maintain a multi-target configuration, you will need to establish replication on RL1, between RS2 and RS1, and on RL3, between RS2 and RS3. A feature of the Metro Mirror copy technology known as Failover/Failback, together with the MTMM IR capability, allows you to establish replication for RL1 and RL3 without having to copy all of the data from RS2 to RS1 or from RS2 to RS3. Only the changes that occur on RS2 after the switch to RS2 are copied in order to resynchronize the two legs.

If there is an unplanned HyperSwap from RS1 to RS2, because RS1 has failed, replication can be established on RL3 between RS2 and RS3 in order to restore disaster recovery readiness. Again, this is an incremental resynchronization (only changed tracks are copied), so the duration to get to a protected position will be much faster compared to performing an initial copy for the leg.
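The benefit of incremental resynchronization comes from recording which tracks change after the switch. The following Python sketch illustrates the idea with a set of changed track numbers. The class `TrackedVolume`, the function `incremental_resync`, and the track counts are hypothetical and do not represent how the disk subsystem implements change recording.

```python
class TrackedVolume:
    """Hypothetical volume that records which tracks change after a switch."""

    def __init__(self, n_tracks: int):
        self.tracks = [0] * n_tracks
        self.changed = set()      # track numbers updated while recording
        self.recording = False

    def start_recording(self) -> None:
        self.recording = True

    def write(self, track: int, value: int) -> None:
        self.tracks[track] = value
        if self.recording:
            self.changed.add(track)


def incremental_resync(source: TrackedVolume, target: TrackedVolume) -> int:
    """Copy only the recorded tracks; return how many tracks were copied."""
    for track in source.changed:
        target.tracks[track] = source.tracks[track]
    copied = len(source.changed)
    source.changed.clear()
    return copied


# RS2 and RS3 start out identical because both were secondaries of RS1.
rs2, rs3 = TrackedVolume(1000), TrackedVolume(1000)

rs2.start_recording()             # processing has switched to RS2
for track, value in [(5, 11), (17, 22), (5, 33), (900, 44)]:
    rs2.write(track, value)

copied = incremental_resync(rs2, rs3)
print(copied, "of", len(rs2.tracks), "tracks copied")
```

In this example three tracks are copied instead of all 1,000, because track 5 was written twice but only needs to be sent once.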

HyperSwap with less than full channel bandwidth

You may consider enabling unplanned HyperSwap on the cross-site replication leg (RL2), even if you do not have sufficient cross-site channel bandwidth to sustain the full production workload for normal operations. Assuming that a disk failure is likely to cause an outage and that you have to switch to using the RS3 disk in the other site (because the RS2 disks in the same site are down at the time), the unplanned HyperSwap to RS3 might at least present you with the opportunity to perform an orderly shutdown of your systems first. Shutting down your systems cleanly avoids the complications and restart time elongation associated with a crash-restart of application subsystems.

Preferred Swap Leg and HyperSwap (Primary Failure) policy options

Clients might prefer not to immediately enable their environment for unplanned HyperSwap when they first implement GDPS. For this reason, unplanned HyperSwap is not enabled by default. However, we strongly suggest that all GDPS Metro clients enable their environment for unplanned HyperSwap, at a minimum, on the local replication leg (RL1) if it is configured. Both copies of disk on the RL1 leg (RS1 and RS2) are local and therefore distance and connectivity should not be an issue.

You control the actions that GDPS takes for primary disk problems by specifying a Primary Failure policy option. This option is applicable to both replication legs. However, you have the option of overriding this specification at a leg level and requesting a different action based on which leg is selected by GDPS to act upon. Furthermore, there is the Preferred Swap Leg policy, which is factored in when GDPS decides which leg to act upon as a result of a primary disk problem trigger.

Preferred Swap Leg selection for unplanned HyperSwap

In a dual-leg configuration, a primary disk problem trigger is common to both replication legs because the primary disk is common to both legs. Before acting on the trigger, GDPS first needs to select which leg to act upon. GDPS provides you with the ability to influence this decision by specifying a Preferred Swap Leg policy. GDPS will attempt to select the leg that you have identified as the Preferred Swap Leg first. However, if this leg is not eligible for the action that you specified in your Primary Failure policy, GDPS attempts to select the other active replication leg. These are among the reasons that your Preferred Swap Leg might not be eligible for selection:

•It is currently the MTIR leg.

•Not all pairs for the leg are in a duplex state.

•It is currently not HyperSwap enabled.

HyperSwap retry on non-preferred leg

If the preferred leg is viable and selected for an unplanned swap, there is still a possibility (albeit small) that the swap on this leg fails for some reason. When swap on the first leg fails, if the other replication leg is enabled for HyperSwap, GDPS will retry the swap on the other leg. This maximizes the chances of a successful swap.
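Leg selection and the retry on the non-preferred leg can be combined in one short routine. The following Python sketch is a simplified reading of the behavior described above: the names `Leg`, `eligible`, and `unplanned_swap` are hypothetical, the three eligibility checks are only the examples listed earlier, and the sketch assumes that the same checks apply to the retry leg. The `try_swap` callable stands in for the swap itself and returns True on success.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Leg:
    name: str
    is_mtir: bool = False            # currently the MTIR leg
    all_duplex: bool = True          # every pair on the leg is duplex
    hyperswap_enabled: bool = True

    def eligible(self) -> bool:
        return (not self.is_mtir) and self.all_duplex and self.hyperswap_enabled


def unplanned_swap(legs, preferred: str,
                   try_swap: Callable[[Leg], bool]) -> Optional[str]:
    """Return the name of the leg swapped on, or None if no swap succeeded."""
    # Preferred leg first; sorted() is stable, so the rest keep their order.
    ordered = sorted(legs, key=lambda leg: leg.name != preferred)
    for leg in ordered:
        # An ineligible leg is skipped; a failed swap falls through to
        # the next leg, which models the retry on the non-preferred leg.
        if leg.eligible() and try_swap(leg):
            return leg.name
    return None


legs = [Leg("RL1"), Leg("RL2")]
normal = unplanned_swap(legs, "RL1", lambda leg: True)
retried = unplanned_swap(legs, "RL1", lambda leg: leg.name != "RL1")
print(normal, retried)
```

Here `normal` is the preferred leg RL1, and `retried` is RL2 because the simulated swap on RL1 fails and the routine moves on to the other leg.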

Primary failure policy options

After GDPS has selected which leg it will act on when a primary disk problem trigger occurs, it first performs a Freeze on the selected leg (the same as is performed when a mirroring problem trigger is encountered). GDPS then applies the Primary Failure policy option specified for that leg. The Primary Failure policy for each leg can specify a different action. You can specify the following Primary Failure policy options:

•PRIMARYFAILURE=GO

No swap is performed. The action GDPS takes is the same as for a freeze event with policy option PPRCFAILURE=GO. A Run action is performed, which will allow systems to continue using the original primary disks. Metro Mirror is suspended and therefore updates are not being replicated to the secondary. Note, however, that depending on the scope of the primary disk problem, it might be that some or all production workloads simply cannot run or cannot sustain required service levels. Such a situation might necessitate restarting the systems on the secondary disks. Because of the freeze, the secondary disks are in a consistent state and can be used for restart. However, any transactions that ran after the Go action will be lost.

•PRIMARYFAILURE=STOP

No swap is performed. The action GDPS takes is the same as for a freeze event with policy option PPRCFAILURE=STOP. GDPS system-resets all the production systems. This ensures that no further I/O occurs. After performing situation analysis, if it is determined that this was not a transient issue and that the secondaries should be used to IPL the systems again, no data will be lost.

•PRIMARYFAILURE=SWAP,swap_disabled_action

The first parameter, SWAP, indicates that after performing the Freeze, GDPS will proceed with performing an unplanned HyperSwap. When the swap is complete, the systems will be running on the new, swapped-to primary disks (former secondaries). Mirroring on the selected leg will be in a suspended state; because the primary disks are known to be in a problematic state, there is no attempt to reverse mirroring. After the problem with the primary disks is fixed, you can instruct GDPS to resynchronize Metro Mirror from the current primaries to the former ones (which are now considered to be secondaries).

The second part of this policy, swap_disabled_action, indicates what GDPS should do if HyperSwap had been temporarily disabled by operator action at the time the trigger was encountered. Effectively, an operator action has instructed GDPS not to perform a HyperSwap, even if there is a swap trigger. GDPS has already performed a freeze. The second part of the policy controls what action GDPS will take next.

The following options (which are in effect only if HyperSwap is disabled by the operator) are available for the second parameter (remember that the disk is already frozen):

GO This is the same action as GDPS would have performed if the policy option had been specified as PRIMARYFAILURE=GO.

STOP This is the same action as GDPS would have performed if the policy option had been specified as PRIMARYFAILURE=STOP.
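The policy options above amount to a small decision table, which the following sketch spells out. It is illustrative only: the function names and the step labels (`FREEZE`, `HYPERSWAP`, `RUN`, `RESET_PRODUCTION_SYSTEMS`) are hypothetical, and the functions take only the value part of the policy (the text after `PRIMARYFAILURE=`).

```python
from typing import List, Optional, Tuple


def parse_primary_failure(value: str) -> Tuple[str, Optional[str]]:
    """Split a policy value such as 'GO', 'STOP', or 'SWAP,STOP'."""
    parts = [p.strip().upper() for p in value.split(",")]
    if len(parts) == 1 and parts[0] in ("GO", "STOP"):
        return parts[0], None
    if len(parts) == 2 and parts[0] == "SWAP" and parts[1] in ("GO", "STOP"):
        return "SWAP", parts[1]
    raise ValueError(f"unsupported Primary Failure policy value: {value!r}")


def actions_for_trigger(policy_value: str, swap_disabled_by_operator: bool) -> List[str]:
    """List the steps taken on the selected leg for a primary disk problem trigger."""
    action, swap_disabled_action = parse_primary_failure(policy_value)
    steps = ["FREEZE"]  # a Freeze is always performed first
    if action == "SWAP":
        if not swap_disabled_by_operator:
            steps.append("HYPERSWAP")
            return steps
        # HyperSwap was disabled by the operator: use the second parameter.
        action = swap_disabled_action
    # GO lets systems keep running on the original primaries; STOP resets them.
    steps.append("RUN" if action == "GO" else "RESET_PRODUCTION_SYSTEMS")
    return steps
```

With `SWAP,STOP`, for instance, the sketch yields a Freeze followed by a HyperSwap in the normal case, and a Freeze followed by a reset of the production systems if the operator had disabled HyperSwap.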

Preferred Swap Leg and Primary Failure policy selection considerations

For the Preferred Swap Leg policy, consider whether you can tolerate running with disks and systems in opposite sites with little or no performance impact. If that is acceptable, you can choose either leg, although it might be better to prefer the RL2 (Site1-Site2) leg. If you cannot tolerate running with disks and systems in opposite sites, choose the local leg, RL1.

For the Primary Failure policy, again we recommend that you specify SWAP for the first part of the policy option to enable HyperSwap, at least on the local replication leg (RL1). If distance and connectivity between your sites is not an issue, consider specifying SWAP for the first part of the policy on the remote replication leg (RL2) also.

For the Stop or Go choice, either as the second part of the policy option or if you will not be using SWAP, similar considerations apply as for the PPRCFAILURE policy options to Stop or Go. Go carries the risk of data loss if it is necessary to abandon the primary disk and restart systems on the secondary. Stop carries the risk of taking an unnecessary outage if the problem was transient. The key difference is that with a mirroring failure, the primary disks are not broken. When you allow the systems to continue to run on the primary disk with the Go option, other than a disaster (which is low probability), the systems are likely to run with no problems. With a primary disk problem, with the Go option, you are allowing the systems to continue running on what are known to be disks that experienced a problem just seconds ago. If this was a serious problem with widespread impact, such as an entire disk subsystem failure, the applications will experience severe problems. Some transactions might continue to commit data to those disks that are not broken. Other transactions might be failing or experiencing serious service time issues. Also, if there is a decision to restart systems on the secondary because the primary disks are simply not able to support the workloads, there will be data loss. The probability that a primary disk problem is a real problem that will necessitate restart on the secondary disks is much higher when compared to a mirroring problem. A Go specification in the Primary Failure policy increases your risk of data loss.

If the primary failure was of a transient nature, a Stop specification results in an unnecessary outage. However, with primary disk problems, the probability that the problem could necessitate restart on the secondary disks is high, so a Stop specification in the Primary Failure policy avoids data loss and facilitates faster restart.

The considerations relating to CF structures with a PRIMARYFAILURE event are similar to a PPRCFAILURE event. If there is an actual swap, the systems continue to run and continue to use the same structures as they did before the swap; the swap is transparent. With a Go action, because you continue to update the CF structures along with the primary disks after the Go, if you need to abandon the primary disks and restart on the secondary, the structures are inconsistent with the secondary disks and are not usable for restart purposes. This will prolong the restart, and therefore your recovery time. With Stop, if you decide to restart the systems using the secondary disks, there is no consistency issue with the CF structures because no further updates occurred on either set of disks after the trigger was captured.

GDPS use of DS8000 functions

GDPS strives to use (when it makes sense) enhancements to the IBM DS8000 disk technologies. In this section we provide information about the key DS8000 technologies that GDPS supports and uses.

PPRC Failover/Failback support

When a primary disk failure occurs and the disks are switched to the secondary devices, PPRC Failover/Failback (FO/FB) support eliminates the need to do a full copy when reestablishing replication in the opposite direction. Because the primary and secondary volumes are often in the same state when the freeze occurred, the only differences between the volumes are the updates that occur to the secondary devices after the switch.

Failover processing sets the secondary devices to primary suspended status and starts change recording for any subsequent changes made. When the mirror is reestablished with failback processing, the original primary devices become secondary devices and a resynchronization of changed tracks takes place.
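The benefit of change recording can be illustrated with a toy model. This is a sketch under simplifying assumptions, not a description of the DS8000 implementation: the class and method names are hypothetical, a volume is a dictionary of track contents, and both copies are assumed to be identical at the time of the freeze.

```python
from typing import Dict, Set


class FailoverFailbackPair:
    """Toy model of one mirrored pair using failover/failback."""

    def __init__(self, tracks: Dict[int, str]) -> None:
        self.original_primary: Dict[int, str] = dict(tracks)
        self.original_secondary: Dict[int, str] = dict(tracks)  # identical at freeze
        self.changed_tracks: Set[int] = set()
        self.failed_over = False

    def failover(self) -> None:
        # The secondary becomes primary suspended and change recording starts.
        self.failed_over = True
        self.changed_tracks.clear()

    def write(self, track: int, data: str) -> None:
        if not self.failed_over:
            raise RuntimeError("this sketch models writes only after failover")
        self.original_secondary[track] = data
        self.changed_tracks.add(track)

    def failback(self) -> int:
        """Resynchronize only the changed tracks; return how many were copied."""
        copied = 0
        for track in sorted(self.changed_tracks):
            self.original_primary[track] = self.original_secondary[track]
            copied += 1
        self.changed_tracks.clear()
        return copied
```

In this model, a volume with thousands of tracks that received updates to only a few of them after the switch needs only those few tracks copied at failback, rather than a full copy.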

GDPS Metro requires PPRC FO/FB capability to be available on all disk subsystems in the managed configuration.

PPRC eXtended Distance (PPRC-XD)

PPRC-XD (also known as Global Copy) is an asynchronous form of the PPRC copy technology. GDPS uses PPRC-XD rather than Metro Mirror (which is the synchronous form of PPRC) to reduce the performance impact of certain remote copy operations that potentially involve a large amount of data. For more information, see 3.7.2, “Reduced impact initial copy and resynchronization” on page 106.

Storage Controller Health Message Alert

This facilitates triggering an unplanned HyperSwap proactively when the disk subsystem reports an acute problem that requires extended recovery time.

PPRC Summary Event Messages

GDPS supports the DS8000 PPRC Summary Event Messages (PPRCSUM) function which is aimed at reducing the message traffic and the processing of these messages for Freeze events. This is described in “GDPS Freeze function for mirroring failures” on page 55.

Soft Fence

Soft Fence provides the capability to block access to selected devices. As discussed in “Protecting secondary disks from accidental update” on page 66, GDPS uses Soft Fence to avoid write activity on disks that are exposed to accidental update in certain scenarios.

On-demand dump (also known as non-disruptive statesave)

When problems occur with disk subsystems, such as those that result in an unplanned HyperSwap, a mirroring suspension, or performance issues, a lack of diagnostic data from the time the event occurs can make it difficult to identify the root cause of the problem. Taking a full statesave can lead to temporary disruption to host I/O and is often frowned upon by clients for this reason. The on-demand dump (ODD) capability of the disk subsystem facilitates taking a non-disruptive statesave (NDSS) at the time that such an event occurs. The microcode does this automatically for certain events (for example, it takes a dump of the primary disk subsystem that triggers a Metro Mirror freeze event) and also allows an NDSS to be requested by an exploiter. This enables first failure data capture (FFDC) and thus ensures that diagnostic data is available to aid problem determination. Be aware that not all information that is contained in a full statesave is contained in an NDSS and therefore there may still be failure situations where a full statesave is requested by the support organization.

GDPS provides support for taking an NDSS using the remote copy panels. In addition to this support, GDPS autonomically takes an NDSS if there is an unplanned Freeze or HyperSwap event.

Query Host Access function

When a Metro Mirror disk pair is being established, the device that is the target (secondary) must not be in use by any system. The same is true when establishing a FlashCopy relationship to a target device. If the target is in use, the establishment of the Metro Mirror or FlashCopy relationship fails. When such failures occur, it can be a tedious task to identify which system is holding up the operation.

The Query Host Access disk function provides the means to query and identify what system is using a selected device. GDPS uses this capability and adds usability in several ways:

•Query Host Access identifies the LPAR that is using the selected device through the CPC serial number and LPAR number. It is still a tedious job for operations staff to translate this information to a system or CPC and LPAR name. GDPS does this translation and presents the operator with more readily usable information, thereby avoiding this additional translation effort.

•Whenever GDPS is requested to perform a Metro Mirror or FlashCopy establish operation, GDPS first performs Query Host Access to see if the operation is expected to succeed or fail as a result of one or more target devices being in use. GDPS alerts the operator if the operation is expected to fail, and identifies the target devices in use and the LPARs holding them.

•GDPS continually monitors the target devices defined in the GDPS configuration and alerts operations to the fact that target devices are in use when they should not be. This allows operations to fix the reported problems in a timely manner.

•GDPS provides the ability for the operator to perform ad hoc Query Host Access to any selected device using the GDPS panels.
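The translation and pre-check described in the first two items above can be sketched as follows. All names here are hypothetical, and the data shapes are assumptions made for illustration: a query result is modeled as a list of (CPC serial number, LPAR number) pairs per device, and the readable names come from a lookup table that the caller supplies.

```python
from typing import Dict, List, Tuple

LparKey = Tuple[str, int]  # (CPC serial number, LPAR number)


def translate_holders(holders: List[LparKey], names: Dict[LparKey, str]) -> List[str]:
    """Turn raw serial/LPAR-number pairs into readable names where known."""
    readable = []
    for serial, lpar_number in holders:
        fallback = f"CPC {serial} LPAR {lpar_number}"  # shown if no name is known
        readable.append(names.get((serial, lpar_number), fallback))
    return readable


def precheck_establish(
    targets: List[str],
    in_use: Dict[str, List[LparKey]],
    names: Dict[LparKey, str],
) -> Dict[str, List[str]]:
    """Return the target devices that are in use, with their holders.

    An empty result means the establish operation is expected to succeed.
    """
    blocked = {}
    for device in targets:
        holders = in_use.get(device, [])
        if holders:
            blocked[device] = translate_holders(holders, names)
    return blocked
```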

Protecting secondary disks from accidental update

A system cannot be IPLed using a disk that is physically a Metro Mirror secondary disk because Metro Mirror secondary disks cannot be brought online to any systems. However, a disk can be secondary from a GDPS (and application use) perspective but physically, from a Metro Mirror perspective, have simplex or primary status.

For both planned and unplanned HyperSwap, and a disk recovery, GDPS changes former secondary disks to primary or simplex state. However, these actions do not modify the state of the former primary devices, which remain in the primary state.

Therefore, the former primary devices remain accessible and usable even though they are considered to be the secondary disks from a GDPS perspective. This makes it possible to accidentally update or IPL from the wrong set of disks. Accidentally using the wrong set of disks can potentially result in a loss of data integrity or data.

GDPS Metro provides protection against using the wrong set of disks in different ways:

•If you attempt to load a system through GDPS (either script or panel) using the wrong set of disks, GDPS rejects the load operation.

•If you used the HMC rather than GDPS facilities for the load, then early in the IPL process, during initialization of GDPS, if GDPS detects that the system coming up has just been IPLed using the wrong set of disks, GDPS will quiesce that system, preventing any data integrity problems that could be experienced had the applications been started.

•GDPS uses a DS8000 disk subsystem capability, which is called Soft Fence for configurations where the disks support this function. Soft Fence provides the means to fence (that is, block) access to a selected device. GDPS uses Soft Fence when appropriate to fence devices that would otherwise be exposed to accidental update.

3.1.2 Protecting tape data

Although most of your critical data will be resident on disk, it is possible that other data you require following a disaster resides on tape. Just as you mirror your disk-resident data to protect it, equally you can mirror your tape-resident data. GDPS Metro provides support for management of the IBM TS7700. GDPS provides TS7700 configuration management and displays the status of the managed TS7700s on GDPS panels.

TS7700s that are managed by GDPS are monitored and alerts are generated for non-normal conditions. The capability to control TS7700 replication from GDPS scripts and panels using TAPE ENABLE and TAPE DISABLE by library, grid, or site is provided for managing TS7700 during planned and unplanned outage scenarios.

Another important aspect with replicated tape is identification of “in-doubt” tapes. Tape replication is not exactly like disk replication in that the replication is not done every time a record is written to the tape. The replication is typically performed at tape unload rewind time or perhaps even later. This means that if there is an unplanned event or interruption to the replication, some volumes could be back-level in one or more libraries in the grid. If you must perform a recovery operation in one site because the other site has failed, it is important to identify if any of the tapes in the library in the site where you are recovering are back-level. Depending on the situation with any in-doubt tapes in the library or libraries you will use in the recovery site, you might need to perform special recovery actions. For example, you might need to rerun one or more batch jobs before resuming batch operations.

GDPS provides support for identifying in-doubt tapes in a TS7700 library. The TS7700 provides a capability called Bulk Volume Information Retrieval (BVIR). By using this BVIR capability, GDPS automatically collects information about all volumes in all libraries in the grid where the replication problem occurred if there is an unplanned interruption to tape replication. GDPS can then use this information to report on in-doubt volumes in any given library in that grid if the user requests a report. In addition to this automatic collection of in-doubt tape information, it is possible to request GDPS to perform BVIR processing for a selected library using the GDPS panel interface at any time.
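The idea of a back-level (in-doubt) volume can be illustrated with a small comparison across libraries. This sketch does not model the actual BVIR data format: the function name, the use of an integer "level" per volume, and the sample volume serials are all assumptions chosen for illustration.

```python
from typing import Dict, List


def in_doubt_volumes(grid: Dict[str, Dict[str, int]], library: str) -> List[str]:
    """List volumes that are back-level or missing in the given library.

    `grid` maps library name -> {volume serial -> level}, where a higher
    level means a more recent copy (assumed to be a non-negative integer).
    """
    latest: Dict[str, int] = {}
    for volumes in grid.values():
        for volser, level in volumes.items():
            latest[volser] = max(level, latest.get(volser, level))
    local = grid[library]
    # A volume is in doubt if the local copy is older than the newest copy
    # anywhere in the grid, or if the library has no copy at all.
    return sorted(v for v, newest in latest.items() if local.get(v, -1) < newest)
```

In a grid where library A holds a volume at level 5 and library B holds it at level 4, recovering in the site of library B would report that volume as in doubt, which is the cue to consider actions such as rerunning the batch job that wrote it.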

The IBM TS7700 provides comprehensive support for replication of tape data. For more information about the TS7700 technology that complements GDPS for tape data, see IBM TS7700 Release 5.1, SG24-8464.

3.1.3 Protecting distributed (FB) data

Terminology: The following definitions describe the terminology that we use in this book when referring to the various types of disks:

•IBM Z or Count-Key-Data (CKD) disks

GDPS can manage disks that are formatted as CKD disks (the traditional mainframe format) that are used by any of the following IBM Z operating systems: z/VM, VSE, KVM, and Linux on IBM Z.

We refer to the disks that are used by a system running on the mainframe as IBM Z disks, CKD disks, or CKD devices. These terms are used interchangeably.

•FB disks

Disks that are used by systems other than those running on IBM Z are traditionally formatted as Fixed Block (FB) and are referred to as FB disks or FB devices in this book.

GDPS Metro can manage the mirroring of FB devices that are used by non-mainframe operating systems. The FB devices can be part of the same consistency group as the mainframe CKD devices, or they can be managed separately in their own consistency group.

For more information about FB disk management, see 3.3.1, “Fixed Block disk management” on page 77.

3.1.4 Protecting other CKD data

Systems that are fully managed by GDPS are known as GDPS managed systems or GDPS systems. The following types of GDPS systems are available:

•z/OS systems that are in the GDPS sysplex

•z/VM systems that are managed by GDPS Metro MultiPlatform Resiliency for IBM Z (xDR)

•KVM systems that are managed by GDPS Metro MultiPlatform Resiliency for IBM Z (xDR)

•IBM Db2 Analytics Accelerator on Z systems running in Secure Service Container (SSC) LPARs that are managed by GDPS Metro MultiPlatform Resiliency for IBM Z (xDR)

•z/OS systems that are outside of the GDPS sysplex that are managed by the GDPS Metro z/OS Proxy (z/OS Proxy)

GDPS Metro can also manage the disk mirroring of CKD disks used by systems outside of the sysplex: other z/OS systems, Linux on IBM Z, VM, VSE, and KVM systems that are not running any GDPS Metro or xDR automation. These are known as “foreign systems.”

Because GDPS manages Metro Mirror for the disks used by these systems, their disks will be attached to the GDPS controlling systems. With this setup, GDPS is able to capture mirroring problems and will perform a freeze. All GDPS managed disks belonging to the GDPS systems and these foreign systems are frozen together, regardless of whether the mirroring problem is encountered on the GDPS systems’ disks or the foreign systems’ disks.

GDPS Metro is not able to directly communicate with these foreign systems. For this reason, GDPS automation will not be aware of certain other conditions such as a primary disk problem that is detected by these systems. Because GDPS will not be aware of such conditions that would have otherwise driven autonomic actions such as HyperSwap, GDPS will not react to these events.

If an unplanned HyperSwap occurs (because it was triggered on a GDPS managed system), the foreign systems cannot and will not swap to using the secondaries. Mechanisms are provided to prevent these systems from continuing to use the former primary devices after the GDPS systems have been swapped. You can then use GDPS automation facilities to reset these systems and re-IPL them using the swapped-to primary disks.

3.2 GDPS Metro configurations

At its most basic, a GDPS Metro configuration consists of at least one production system, at least one controlling system in a sysplex, primary disks, and at least one set of secondary disks. The actual configuration depends on your business and availability requirements.

One aspect of availability requirements has to do with the availability of the servers, systems, and application instances. The following configurations that address this aspect of availability are most common:

•Single-site workload configuration

In this configuration, all of the production systems normally run in the same site, referred to as Site1, and the GDPS controlling system runs in Site2. In effect, Site1 is the active site for all production systems. The controlling system in Site2 is running and resources are available to move production to Site2, if necessary, for a planned or unplanned outage of Site1. Although you might also hear this referred to as an Active/Standby GDPS Metro configuration, we avoid the Active/Standby term to avoid confusion with the same term used in conjunction with the GDPS Continuous Availability product.

•Multisite workload configuration

In this configuration, the production systems run in both sites, Site1 and Site2. This configuration typically uses the full benefits of data sharing available with a Parallel Sysplex. Having two GDPS controlling systems, one in each site, is preferable. Although you might also hear this referred to as an Active/Active GDPS Metro configuration, we avoid the Active/Active term to avoid confusion with the same term used in conjunction with the GDPS Continuous Availability product.

•Business Recovery Services (BRS) configuration

In this configuration, the production systems and the controlling system are all in the same site, referred to as Site1. Site2 can be a client site or can be owned by a third-party recovery services provider (thus the name BRS). You might hear this referred to as an Active/Cold configuration.

Another aspect of availability requirements has to do with the availability of data. The most basic configuration of GDPS Metro consists of two copies of data: a set of primary disks and one set of secondary disks. This is known as a single-leg configuration.

GDPS Metro also leverages the IBM MTMM disk mirroring technology to maintain two synchronous secondary copies of your data. This configuration, known as a dual-leg configuration, provides an additional level of availability because data resiliency can be maintained, even when one copy of data has been lost.

These configuration options are described later in this section.

3.2.1 Controlling systems

Why does a GDPS Metro configuration need a controlling system? At first, you might think this is an additional infrastructure overhead. However, when you have an unplanned outage that affects production systems or the disk subsystems, it is crucial to have a system such as the controlling system that can survive failures that might have impacted other portions of your infrastructure. The controlling system allows you to perform situation analysis after the unplanned event to determine the status of the production systems or the disks, and then to drive automated recovery actions. The controlling system plays a vital role in a GDPS Metro configuration.

The controlling system must be in the same sysplex as the production system (or systems) so it can see all the messages from those systems and communicate with those systems. However, it shares an absolute minimum number of resources with the production systems (typically just the couple data sets). By being configured to be as self-contained as possible, the controlling system will be unaffected by errors that can stop the production systems (for example, an Extended Long Busy event on a primary volume).

The controlling system must have connectivity to all the Site1 and Site2 primary and secondary devices that it will manage. If available, it is preferable to isolate the controlling system infrastructure on a disk subsystem that is not housing mirrored disks that are managed by GDPS.

The controlling system is responsible for carrying out all recovery actions following a disaster or potential disaster, for managing the disk mirroring configuration, for initiating a HyperSwap, for initiating a freeze and implementing the freeze/swap policy actions, for reassigning STP roles, for re-IPLing failed systems, and so on.

Note: The availability of the dedicated GDPS controlling system (or systems) in all configurations is a fundamental requirement of GDPS. It is not possible to merge the function of the controlling system with any other system that accesses or uses the primary volumes or other production resources.

Configuring GDPS Metro with two controlling systems, one in each site, is highly recommended. This is because a controlling system is designed to survive a failure in the site opposite to where it runs, that is, the site where the primary disks are. Primary disks are normally in Site1 and the controlling system in Site2 is designed to survive if Site1 or the disks in Site1 fail. However, if you reverse the configuration so that primary disks are now in Site2, the controlling system is in the same site as the primary disks. It will certainly not survive a failure in Site2 and might not survive a failure of the disks in Site2 depending on the configuration. Configuring a controlling system in both sites ensures the same level of protection, no matter which site is the primary disk site. When two controlling systems are available, GDPS manages assigning a Master role to the controlling system that is in the same site as the secondary disks and switching the Master role if there is a disk switch.
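The Master role assignment described above can be sketched for the simple two-site, single-leg case. The function name and data shapes are hypothetical, and the fallback used when no controlling system runs in the secondary disk site is an assumption made only so that the sketch always returns a candidate.

```python
from typing import Dict, Optional


def master_controlling_system(
    controlling_systems: Dict[str, str], primary_disk_site: str
) -> Optional[str]:
    """Pick the controlling system that should hold the Master role.

    `controlling_systems` maps system name -> site. In a two-site model,
    the secondary disks are in the site that does not hold the primaries.
    """
    in_secondary_site = sorted(
        name for name, site in controlling_systems.items() if site != primary_disk_site
    )
    if in_secondary_site:
        return in_secondary_site[0]
    # Assumption: with no controlling system in the secondary disk site,
    # fall back to any available controlling system.
    remaining = sorted(controlling_systems)
    return remaining[0] if remaining else None
```

With K1 in Site2 and K2 in Site1, the sketch assigns the Master role to K1 while the primary disks are in Site1, and to K2 after a disk switch moves the primaries to Site2.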

Improved controlling system availability: Enhanced timer support

Normally, a loss of synchronization with the sysplex timing source will generate a disabled console WTOR that suspends all processing on the LPAR, until a response is made to the WTOR. The WTOR message is IEA394A in STP timing mode.

In a GDPS environment, z/OS is aware that a given system is a GDPS controlling system and will allow a GDPS controlling system to continue processing even when the server it is running on loses its time source and becomes unsynchronized. The controlling system is therefore able to complete any freeze or HyperSwap processing it might have started and is available for situation analysis and other recovery actions, instead of being in a disabled WTOR state.

In addition, because the controlling system is operational, it can be used to help in problem determination and situation analysis during the outage, thus further reducing the recovery time needed to restart applications.

The controlling system is required to perform GDPS automation in the event of a failure. Actions might include these tasks:

•Reassigning STP roles

•Performing the freeze processing to guarantee secondary data consistency

•Coordinating HyperSwap processing

•Executing a takeover script

•Aiding with situation analysis

Because the controlling system needs to run with only a degree of time synchronization that allows it to correctly participate in heartbeat processing with respect to the other systems in the sysplex, this system should be able to run unsynchronized for a period of time (80 minutes) using the local time-of-day (TOD) clock of the server (referred to as local timing mode), instead of generating a WTOR.

Automated response to STP sync WTORs

GDPS on the controlling systems, using the BCP Internal Interface, provides automation to reply to WTOR IEA394A when the controlling systems are running in local timing mode. See “Improved controlling system availability: Enhanced timer support” on page 70. A server in an STP network might have recovered from an unsynchronized to a synchronized timing state without client intervention. By automating the response to the WTORs, potential time outs of subsystems and applications in the client’s enterprise might be averted, thus potentially preventing a production outage.

If WTOR IEA394A is posted for production systems, GDPS uses the BCP Internal Interface to automatically reply RETRY to the WTOR. If z/OS determines that the CPC is in a synchronized state, either because STP recovered or the CTN was reconfigured, it will no longer spin and will continue processing. However, if the CPC is still in an unsynchronized state when GDPS automation responds with RETRY to the WTOR, the WTOR is reposted.

The automated reply for any given system is retried for 60 minutes. After 60 minutes, you will need to manually respond to the WTOR.
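The reply-and-repost cycle can be modeled as a bounded retry loop. This is a simulation under stated assumptions, not GDPS automation code: the function name and return labels are hypothetical, time is simulated in minutes rather than measured, and the one-minute interval between replies is an assumption because the text does not state it.

```python
from typing import Callable

RETRY_WINDOW_MINUTES = 60  # from the text: replies are retried for 60 minutes


def auto_reply_to_wtor(
    cpc_synchronized: Callable[[int], bool], interval_minutes: int = 1
) -> str:
    """Simulate automated RETRY replies to a reposted WTOR.

    `cpc_synchronized(elapsed)` reports whether the CPC is synchronized
    at the given simulated minute.
    """
    if interval_minutes <= 0:
        raise ValueError("interval_minutes must be positive")
    elapsed = 0
    while elapsed < RETRY_WINDOW_MINUTES:
        # Each pass stands for one RETRY reply to the WTOR.
        if cpc_synchronized(elapsed):
            return "RESUMED"  # the system continues processing
        elapsed += interval_minutes  # still unsynchronized: WTOR is reposted
    return "MANUAL_RESPONSE_REQUIRED"
```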

3.2.2 Single-site workload configuration

A GDPS Metro single-site workload environment typically consists of a multisite sysplex, with all production systems running in a single site, normally Site1, and the GDPS controlling system in Site2. The controlling system (or systems, because you may have two in some configurations) will normally run in the site containing the secondary disk volumes.

The multisite sysplex can be a base sysplex or a Parallel Sysplex; a coupling facility is not strictly required. The multisite sysplex must be configured with redundant hardware (for example, a coupling facility and a Sysplex Timer in each site), and the cross-site connections must also be redundant. Instead of using Sysplex Timers to synchronize the servers, you can also use Server Time Protocol (STP) to synchronize the servers.

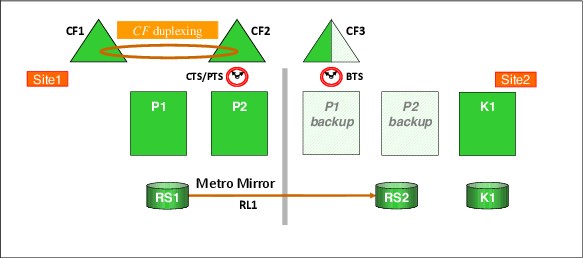

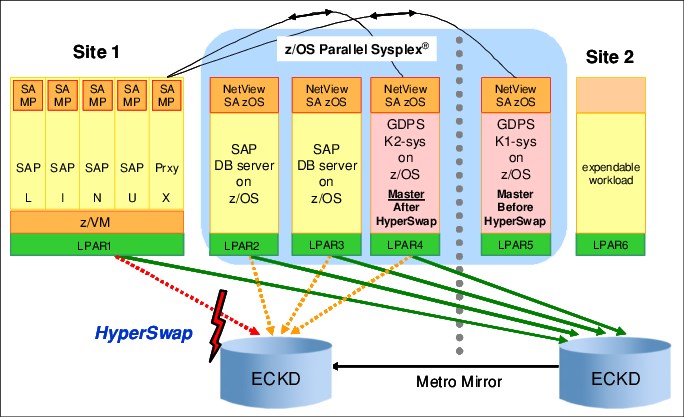

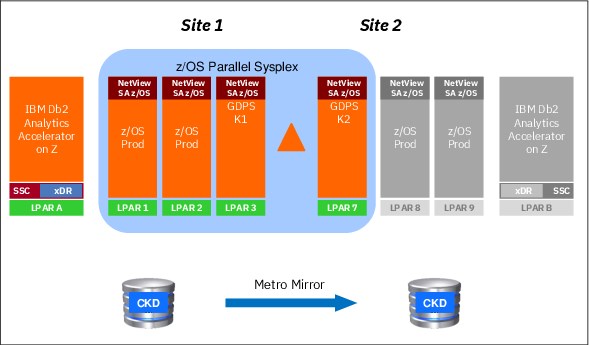

Figure 3-1 shows a typical GDPS Metro single-site workload configuration. LPARs P1 and P2 are in the production sysplex, as are the coupling facilities CF1, CF2, and CF3. The primary (RS1) disks are in Site1, with a set of secondaries (RS2) also in Site1 and another set of secondaries (RS3) in Site2. All the production systems are running in Site1, with only the GDPS controlling system (K1) running in Site2. You will notice that system K1’s disks (those marked K1) are also in Site2 and are not mirrored.

The GDPS Metro code itself runs under NetView and System Automation, and runs in every system in the GDPS sysplex.

Figure 3-1 GDPS Metro single-site workload configuration

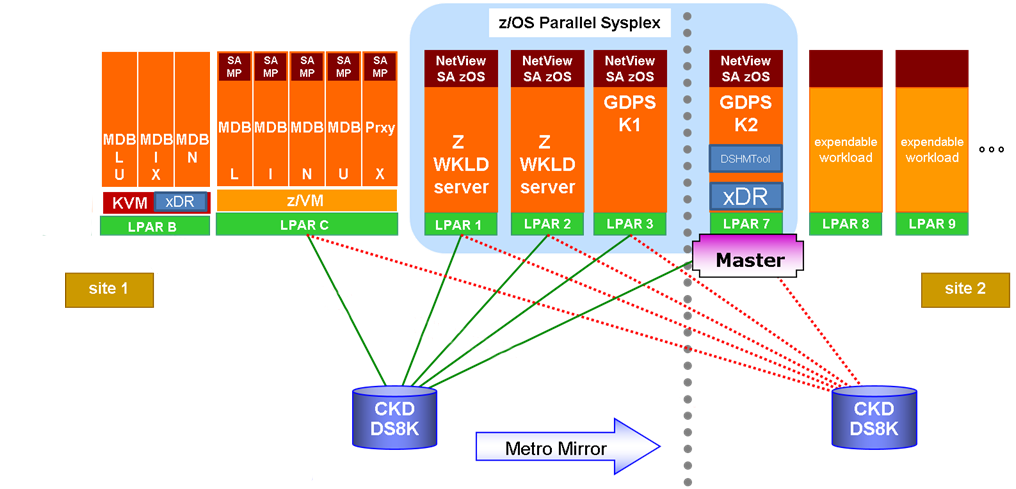

3.2.3 Multisite workload configuration

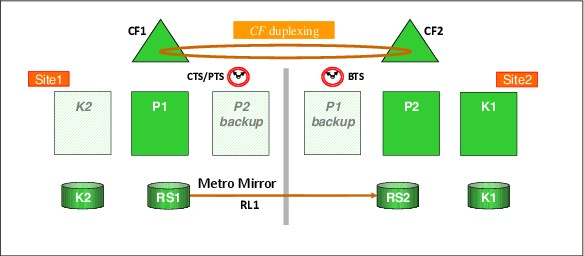

A multisite workload configuration, shown in Figure 3-2, differs from a single-site workload in that production systems are running in both sites. Although running a multisite workload as a base sysplex (that is, without coupling facilities) is possible, it is unusual. This is because a multisite workload is usually a result of higher availability requirements, and Parallel Sysplex and data sharing are core components of such an environment.

Figure 3-2 GDPS Metro multisite workload configuration

Because in this example we have production systems in both sites, we need to provide the capability to recover from a failure in either site. So, in this case, there is also a GDPS controlling system with its own local (not mirrored) disk running in Site1, namely System K2. Therefore, if there is a disaster that disables Site2, there will still be a GDPS controlling system available to decide how to react to that failure and what recovery actions are to be taken.

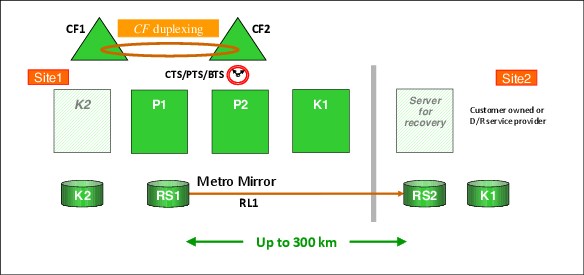

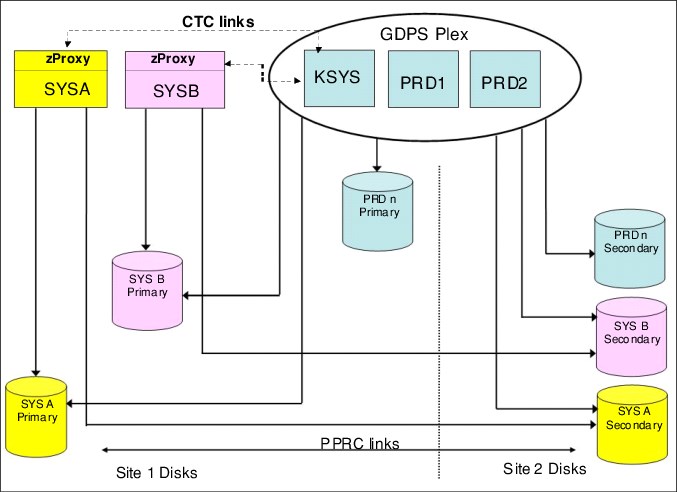

3.2.4 Business Recovery Services configuration

A third configuration is known as the Business Recovery Services (BRS) configuration, and is shown in Figure 3-3 on page 74. In this configuration, all the systems in the GDPS configuration, including the controlling systems, are in a sysplex in the same site, namely Site1. The sysplex does not span the two sites. The second site, Site2, might be a client site or might be owned by a third-party recovery services provider; thus the name BRS.

Site2 contains the secondary disks and the alternate couple data sets (CDS), and might also contain processors that will be available in case of a disaster, but are not part of the configuration. This configuration can also be used when the distance between the two sites exceeds the distance supported for a multisite sysplex, but is within the maximum distance supported by FICON and Metro Mirror.

Although there is no need for a multisite sysplex with this configuration, you must have channel connectivity from the GDPS systems to the secondary disk subsystems. Also, as explained in the next paragraph, the controlling system in Site1 needs channel connectivity to its disk devices in Site2. Therefore, FICON link connectivity from Site1 to Site2 is required.

For more information about options that are available to extend the distance of FICON links between sites, see 2.9.7, “Connectivity options” on page 47, and IBM z Systems Connectivity Handbook, SG24-5444.

In the BRS configuration, one of the two controlling systems must have its disk devices in Site2. This permits that system to be restarted manually in Site2 after a disaster is declared. After it restarts in Site2, the system runs a GDPS script to recover the secondary disk subsystems, reconfigure the recovery site, and restart the production systems from the disk subsystems in Site2.

If you have only a single controlling system and you have a total cross-site fiber connectivity failure, the controlling system running on Site2 disks might not be able to complete the Freeze operation because it will lose access to its disk in Site2. Having a second controlling system running on Site1 local disks ensures that the freeze operation completes successfully if the controlling system running on Site2 disks is down or cannot function because of a cross-site fiber loss.

GDPS attempts to keep the current Master role on the controlling system that is using the secondary disks (see Figure 3-3).

Figure 3-3 GDPS Metro BRS configuration
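The reasoning about controlling-system placement in the BRS configuration can be sketched as a small model. This is an illustrative simplification, not GDPS code: the class, the function names, and the system names `KSYS_A` and `KSYS_B` are hypothetical. It also treats "might not be able to complete the Freeze" as "cannot", which is stricter than the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControllingSystem:
    name: str        # hypothetical system name
    disk_site: str   # "Site1" or "Site2"; in BRS the system itself runs in Site1
    up: bool = True


def can_function(system, cross_site_links_up):
    """A controlling system needs to be up and able to reach its own disk."""
    if not system.up:
        return False
    # Disk in Site1 is local; disk in Site2 is reachable only over the links.
    return system.disk_site == "Site1" or cross_site_links_up


def freeze_can_complete(systems, cross_site_links_up):
    """Assumption: one functioning controlling system is enough to freeze."""
    return any(can_function(s, cross_site_links_up) for s in systems)


single = [ControllingSystem("KSYS_A", "Site2")]
dual = single + [ControllingSystem("KSYS_B", "Site1")]

print(freeze_can_complete(single, cross_site_links_up=False))  # False
print(freeze_can_complete(dual, cross_site_links_up=False))    # True
```

With only the controlling system on Site2 disks, a total cross-site link failure leaves nothing able to drive the freeze in this model; adding the controlling system on Site1 local disks removes that exposure.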

3.2.5 Single-leg configuration

The previous sections showed GDPS Metro in single-leg configurations. That is, the configurations consisted of only two copies of the production data: a primary copy and a secondary copy. The primary and secondary copies of data are called disk locations or replication sites. The copy in Site1 is known as RS1 and the copy in Site2 is known as RS2.

The replication connection between the replication sites is called a replication leg or simply a leg. A replication leg has a fixed name that is based on the two disk locations that it connects. In a single-leg configuration, there is only one replication leg, called RL1, and it connects the two disk locations RS1 and RS2.

3.2.6 Dual-leg configuration

In addition to providing the single-leg configuration that was described in the previous section, GDPS Metro also uses the IBM MTMM disk mirroring technology to provide a dual-leg configuration which maintains two synchronous secondary copies of your data to provide an additional level of data resiliency.

With a dual-leg configuration, a replication site is added and two replication legs also are added. The three disk locations, or copies, are known as RS1, RS2, and RS3. RS1 and RS2 are assumed to be “local” and are fixed in Site1, and RS3 is fixed in Site2.

Although any of the three replication sites can assume the primary disk role, in a typical configuration:

•The primary disk is in Site1, that is, either RS1 or RS2.

•The other disk copy in Site1 provides high availability or HA protection.

•The copy in Site2 (RS3) provides disaster recovery or DR protection.

The replication legs in a dual-leg configuration have fixed names that, again, are based on the two disk locations that they connect:

•The RS1-RS2 (or RS2-RS1) leg is RL1

•The RS1-RS3 (or RS3-RS1) leg is RL2

•The RS2-RS3 (or RS3-RS2) leg is RL3

The name of a given replication leg never changes, even if the replication direction is reversed for that leg. However, the role of a leg can change, depending on the primary disk location. The two legs from the current primary to each of the two secondaries serve as the active legs whereas the leg between the two secondary locations serves as the incremental resync or MTIR leg.

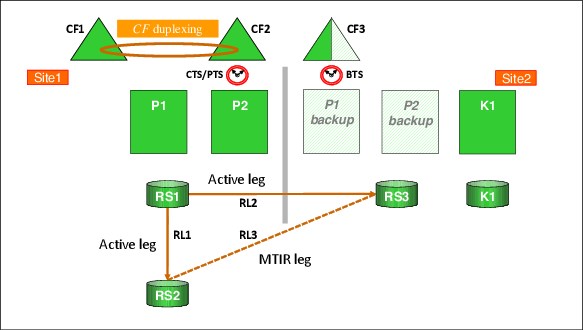

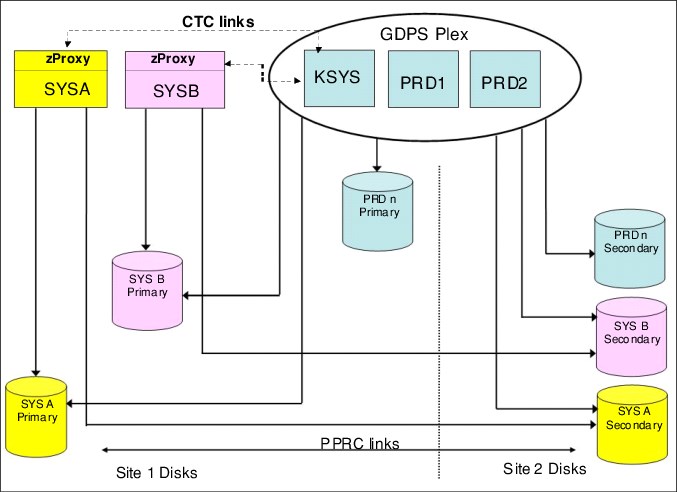

To illustrate this concept, consider the sample dual-leg configuration that is shown in Figure 3-4.

Figure 3-4 Typical GDPS Metro dual-leg configuration

In this sample configuration, RS1 is the primary disk location, RL1 and RL2 are the active replication legs, and RL3 is the MTIR leg.

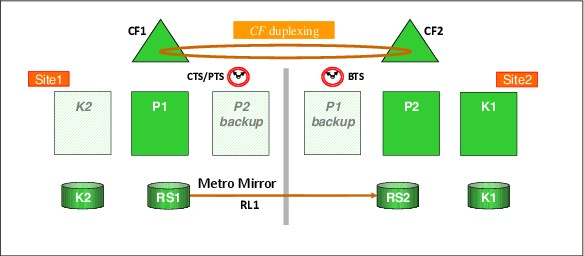

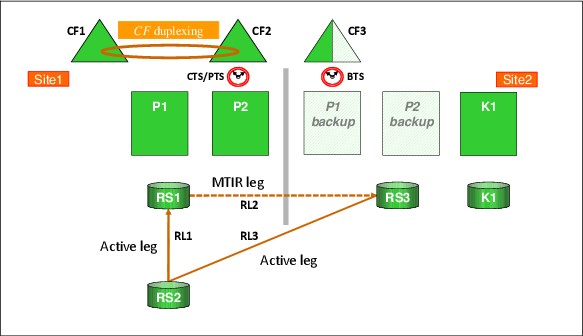

If a disk switch occurs and RS2 becomes the new primary disk, RL1 and RL3 become the active replication legs and RL2 then becomes the MTIR leg, as shown in Figure 3-5.

Figure 3-5 GDPS Metro dual-leg configuration following switch to RS2
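The relationship between the primary disk location and the leg roles can be expressed as a short sketch. The leg names and the rule (the legs from the primary are active, and the leg between the two secondaries is the MTIR leg) come from the text above; the function names `leg_name` and `leg_roles` are hypothetical and are not part of any GDPS interface.

```python
# Fixed leg names: each leg is named for the pair of disk locations it
# connects, regardless of the replication direction.
LEGS = {
    frozenset({"RS1", "RS2"}): "RL1",
    frozenset({"RS1", "RS3"}): "RL2",
    frozenset({"RS2", "RS3"}): "RL3",
}
SITES = ("RS1", "RS2", "RS3")


def leg_name(a, b):
    """Return the fixed name of the leg that connects locations a and b."""
    return LEGS[frozenset({a, b})]


def leg_roles(primary):
    """Classify the three legs for a given primary disk location."""
    if primary not in SITES:
        raise ValueError(f"unknown disk location: {primary}")
    secondaries = [s for s in SITES if s != primary]
    # Active legs run from the primary to each secondary.
    active = sorted(leg_name(primary, s) for s in secondaries)
    # The leg between the two secondaries is the incremental resync leg.
    mtir = leg_name(*secondaries)
    return {"active": active, "mtir": mtir}


print(leg_roles("RS1"))  # {'active': ['RL1', 'RL2'], 'mtir': 'RL3'}
print(leg_roles("RS2"))  # {'active': ['RL1', 'RL3'], 'mtir': 'RL2'}
```

The two printed cases correspond to the configurations in Figure 3-4 and Figure 3-5.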

3.2.7 Combining GDPS Metro with GDPS GM or GDPS XRC

When configured in a single-leg topology, GDPS Metro can be combined with GDPS Global - GM (GDPS GM) or GDPS Global - XRC (GDPS XRC) in 3-site and 4-site configurations. In such configurations, GDPS Metro (when combined with Parallel Sysplex use and HyperSwap) in one region provides continuous availability across a metropolitan area or within the same local site, and GDPS GM or GDPS XRC provides disaster recovery capability by using a remote site in a different region.

The 4-site environment is configured in a symmetric manner so that there is a GDPS Metro-managed replication leg available in both regions to provide continuous availability (CA) within the region, with GDPS GM or GDPS XRC to provide cross-region DR, no matter in which region production is running at any time.

Combining GDPS Metro and GDPS GM in this fashion is referred to as GDPS Metro Global - GM (GDPS MGM). Combining GDPS Metro and GDPS XRC in this fashion is referred to as GDPS Metro Global - XRC (GDPS MzGM).

For more information about GDPS MGM and GDPS MzGM configurations, see Chapter 9, “Combining local and metro continuous availability with out-of-region disaster recovery” on page 267.

3.2.8 GDPS Metro in a single site

The final configuration is where you want to benefit from the capabilities of GDPS Metro to extend the continuous availability attributes of a Parallel Sysplex to planned and unplanned disk reconfigurations, but you do not have the facilities to mirror disk across two sites. In this case, you can implement GDPS Metro HyperSwap Manager (GDPS HM).

GDPS HM is similar to the full function GDPS Metro offering, except that it does not include the scripts for management of the LPARs and workloads. GDPS HM is upgradeable to a full GDPS Metro implementation. For more information about GDPS HM, see Chapter 4, “GDPS Metro HyperSwap Manager” on page 113.

Because configuring GDPS HM (or GDPS Metro) within a single site does not provide protection against site failure events, such a configuration is likely to be used within the context of a GDPS MGM or GDPS MzGM multi-site solution rather than a stand-alone solution.

Another possibility is a client environment that has aggressive recovery time objectives for failures other than a disaster event and that uses some mechanism, such as tape vaulting, for disaster protection. In such an environment, long recovery times and a fair amount of data loss can be tolerated during a disaster.

3.2.9 Other considerations

The availability of the dedicated GDPS controlling system (or systems) in all scenarios is a fundamental requirement in GDPS. Merging the function of the controlling system with any other system that accesses or uses the primary volumes is not possible.

Equally important is that certain functions (stopping and restarting systems and changing the couple data set configuration) are done through the scripts and panel interface provided by GDPS. Because events such as systems going down or changes to the couple data set configuration are indicators of a potential disaster, such changes must be initiated using GDPS functions so that GDPS understands that these are planned events.

3.3 GDPS Metro management of distributed systems and data

Most enterprises today have a heterogeneous IT environment where the applications and data are on various hardware and software platforms, such as IBM Z, IBM System p, UNIX, Windows, and Linux. Such an environment can benefit greatly from a single point of control that manages the data across all the platforms, and from a disaster recovery solution that coordinates the recovery across multiple platforms.

In this section, we describe the following functions that are provided by GDPS Metro that are available for clients to manage data and coordinate disaster recovery across multiple platforms:

•Fixed Block disk management

•Multiplatform Resiliency for IBM Z (also known as xDR)

3.3.1 Fixed Block disk management

Most enterprises today run applications that update data across multiple platforms. For these enterprises, there is a need to manage and protect not just the data that is on CKD devices, but also the data that is on FB devices for IBM Z and non-IBM Z servers. GDPS Metro provides the capability to manage a heterogeneous environment of IBM Z and distributed systems data through a function that is called Fixed Block Disk Management (FB Disk Management).

The FB Disk Management function allows GDPS to be a single point of control to manage business resiliency across multiple tiers in the infrastructure, which improves cross-platform system management and business processes. GDPS Metro can manage the Metro Mirror remote copy configuration and FlashCopy for distributed systems storage.

Specifically, FB disk support extends the GDPS Metro Freeze capability to FB devices that reside in supported disk subsystems to provide data consistency for the IBM Z data and the data on the FB devices.

With FB devices included in your configuration, you can select one of the following options to specify how Freeze processing is to be handled for FB disks and IBM Z (CKD) disks when mirroring or primary disk problems are detected:

•You can select to Freeze all devices managed by GDPS.

If this option is used, the CKD and FB devices are in a single consistency group. Any Freeze trigger for the IBM Z or FB devices results in the FB and the IBM Z LSSs managed by GDPS being frozen. This option allows you to have consistent data across heterogeneous platforms in the case of a disaster, which allows you to restart systems in the site where secondary disks are located.

This option is especially suitable when distributed units of work are on IBM Z and distributed servers that update the same data; for example, by using the IBM Distributed Relational Database Architecture (IBM DB2 DRDA).

•You can select to Freeze devices by group.

If this option is selected, the CKD devices are in a separate consistency group from the FB devices. Also, the FB devices can be separated into multiple consistency groups; for example, by distributed workloads. The Freeze is performed on only the group for which the Freeze trigger was received. If the Freeze trigger occurs for an IBM Z disk device, only the CKD devices are frozen. If the trigger occurs for an FB disk, only the FB disks within the same group as that disk are frozen.
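The difference between the two Freeze options can be illustrated with a minimal sketch. This is not GDPS code or configuration syntax: the function `devices_to_freeze`, the policy names `"all"` and `"by_group"`, the group names, and the device names are all hypothetical. For the "all" option, the sketch simply treats every listed device as a member of one consistency group.

```python
def devices_to_freeze(groups, trigger_device, policy):
    """Return the set of devices that are frozen for a Freeze trigger.

    groups: dict that maps a consistency group name to a set of devices
            (hypothetical layout, for illustration only).
    policy: "all" freezes every managed device; "by_group" freezes only
            the group that contains the triggering device.
    """
    owning = [name for name, devices in groups.items()
              if trigger_device in devices]
    if not owning:
        raise ValueError(f"{trigger_device} is not managed")
    if policy == "all":
        frozen = set()
        for devices in groups.values():
            frozen |= devices
        return frozen
    if policy == "by_group":
        return set(groups[owning[0]])
    raise ValueError(f"unknown policy: {policy}")


groups = {
    "CKD": {"ckd-1000", "ckd-1001"},
    "FB-web": {"fb-2000"},
    "FB-db": {"fb-3000", "fb-3001"},
}

print(sorted(devices_to_freeze(groups, "fb-3000", "by_group")))
# ['fb-3000', 'fb-3001']
print(sorted(devices_to_freeze(groups, "ckd-1000", "by_group")))
# ['ckd-1000', 'ckd-1001']
print(sorted(devices_to_freeze(groups, "fb-2000", "all")))
# ['ckd-1000', 'ckd-1001', 'fb-2000', 'fb-3000', 'fb-3001']
```

Freezing by group limits the effect of a trigger to one workload, at the cost of losing cross-platform consistency between the groups.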

FB disk management prerequisites

GDPS requires the disk subsystems that contain the FB devices to support the z/OS Fixed Block Architecture (zFBA) feature. GDPS runs on z/OS and therefore communicates with the disk subsystems directly over a channel connection. zFBA provides GDPS with the ability to send the commands that are necessary to manage Metro Mirror and FlashCopy directly to FB devices over a channel connection. It also enables GDPS to receive notifications for certain error conditions (for example, suspension of an FB device pair). These notifications allow the GDPS controlling system to drive autonomic action, such as performing a freeze for a mirroring failure.

Note: HyperSwap for FB disks is not supported for any IBM Z or non-IBM Z servers.

3.3.2 Multiplatform Resiliency for IBM Z

GDPS Metro includes a function known as Multiplatform Resiliency for IBM Z (also known as xDR). This function extends the near-continuous availability and disaster recovery capabilities provided by GDPS Metro to other platforms, or operating systems, running on IBM Z servers.

For example, to reduce IT costs and complexity, many enterprises are consolidating open servers into Linux on IBM Z servers. Linux on IBM Z systems can be implemented as guests that are running under z/VM, as servers that are running natively on IBM Z, or as servers that are running under the KVM Hypervisor on IBM Z. This results in a multitiered architecture in which the application server and the database server are running on different IBM Z platforms. Several examples exist of an application server running on Linux on IBM Z and a database server running on z/OS, including the following examples:

•WebSphere Application Server running on Linux, and CICS and DB2 running under z/OS

•SAP application servers running on Linux and database servers running on z/OS

For such multitiered architectures, Multiplatform Resiliency for IBM Z provides a coordinated near-continuous availability and disaster recovery solution for the z/OS and the Linux on IBM Z tiers. It can be implemented if the Linux on IBM Z systems run as guests under z/VM or as servers that are running under the KVM Hypervisor on IBM Z (native Linux on IBM Z systems are not supported) and if the disks being used are CKD disks.
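The support conditions in the preceding paragraph reduce to a simple check, sketched here for illustration. The function name `xdr_eligible` and the string labels for the hosting environments are hypothetical; only the rule itself (z/VM or KVM hosting, CKD disks, no native Linux) is taken from the text.

```python
# Hosting environments that the text lists as supported (labels are
# illustrative, not GDPS terminology).
SUPPORTED_HOSTING = {"z/VM guest", "KVM guest"}


def xdr_eligible(hosting, disk_type):
    """Return True if a Linux on IBM Z system meets the stated conditions."""
    return hosting in SUPPORTED_HOSTING and disk_type == "CKD"


print(xdr_eligible("z/VM guest", "CKD"))  # True
print(xdr_eligible("KVM guest", "FB"))    # False
print(xdr_eligible("native", "CKD"))      # False
```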

Note: For the remainder of this section, Linux on IBM Z is also referred to as Linux. The terms are used interchangeably.

Another IBM Z platform that requires coordinated near-continuous availability and disaster recovery protection is known as the IBM Secure Service Container, or SSC platform. The IBM Secure Service Container is a container technology through which you can more quickly and securely deploy firmware and software appliances on IBM Z and IBM LinuxONE (LinuxONE) servers. A Secure Service Container partition (or LPAR) is a specialized container for installing and running specific firmware or software appliances. An appliance is an integration of operating system, middleware, and software components that work autonomously and provide core services and infrastructures that focus on consumability and security.