GDPS Metro HyperSwap Manager

In this chapter, we discuss the capabilities and prerequisites of the GDPS Metro HyperSwap Manager (GDPS HM) offering.

GDPS HM extends the availability attributes of a Parallel Sysplex to disk subsystems, whether the Parallel Sysplex and disk subsystems are in a single site, or whether the Parallel Sysplex and the primary/secondary disk subsystems span across two sites.

It provides the ability to transparently switch primary disk subsystems with the secondary disk subsystems for either a planned or unplanned disk reconfiguration. It also supports disaster recovery capability across two sites by enabling the creation of a consistent set of secondary disks in case of a disaster or potential disaster.

However, unlike the full GDPS Metro offering, GDPS HM does not provide any resource management or recovery management capabilities.

GDPS HM provides the following functions for protecting data:

•Ensuring the consistency of the secondary data in case there is a disaster or suspected disaster, including the option to also ensure zero data loss

•Switching to the secondary disk by using HyperSwap

•Managing the remote copy configuration for IBM Z and other platform data

Because GDPS HM is a subset of the GDPS Metro offering, you might want to review the comparison that is presented in Table 4-1 on page 147 if you have read Chapter 3, “GDPS Metro” on page 53.


4.1 Introduction to GDPS HM

GDPS HM provides a subset of GDPS Metro capability, with more emphasis on the remote copy and disk management aspects. At its most basic, GDPS HM extends Parallel Sysplex availability to disk subsystems by delivering the HyperSwap capability to mask disk outages caused by planned disk maintenance or unplanned disk failures. It also provides monitoring and management of the data replication environment, including the freeze capability.

In the multisite environment, GDPS HM provides an entry-level disaster recovery offering. Because GDPS HM does not include the systems management and automation capabilities of GDPS Metro, it cannot by itself provide the short RTO that is achievable with GDPS Metro. However, GDPS HM does provide a cost-effective route into full GDPS Metro at a later time if your recovery time objectives change.

4.1.1 Protecting data integrity and data availability with GDPS HM

In 2.2, “Data consistency” on page 17, we point out that data integrity across primary and secondary volumes of data is essential to perform a database restart and accomplish an RTO of less than an hour. This section provides details about how GDPS automation in GDPS HM provides both data consistency if there are mirroring problems and data availability if there are disk problems.

The following types of disk problems trigger a GDPS automated reaction:

•Metro Mirror replication problems (Freeze triggers)

There is no problem with writing to the primary disk subsystem, but there is a problem mirroring the data to the secondary disk subsystem. For more information, see “GDPS Freeze function for mirroring failures” on page 115.

•Primary disk problems (HyperSwap triggers)

There is a problem writing to the primary disk: Either a hard failure, or the disk subsystem is not accessible or not responsive. For more information, see “GDPS HyperSwap function” on page 119.

GDPS Freeze function for mirroring failures

GDPS uses automation, keyed off events or messages, to stop all mirroring when a remote copy failure occurs. In particular, the GDPS automation uses the IBM PPRC Freeze and Run architecture, which has been implemented as part of Metro Mirror on IBM disk subsystems and also by other enterprise disk vendors. In this way, if the disk hardware supports the Freeze/Run architecture, GDPS can ensure consistency across all data in the sysplex (consistency group) regardless of disk hardware type. This preferred approach differs from proprietary hardware approaches that work only for one type of disk hardware. For more information about data consistency with synchronous disk mirroring, see “Metro Mirror data consistency” on page 24.

When a mirroring failure occurs, this problem is classified as a Freeze trigger and GDPS stops activity across all disk subsystems at the time the initial failure is detected, thus ensuring that the dependent write consistency of the remote disks is maintained. This is what happens when GDPS performs a Freeze:

•Remote copy is suspended for all device pairs in the configuration.

•While the suspend command is being processed for each LSS, each device goes into a long busy state. When the suspend completes for each device, z/OS marks the device unit control block (UCB) in all connected operating systems to indicate an Extended Long Busy (ELB) state.

•No I/Os can be issued to the affected devices until the ELB is thawed with the PPRC Run (or “thaw”) action or until it times out (the consistency group timer setting commonly defaults to 120 seconds, although for most configurations a longer ELB is recommended).

•All paths between the Metro Mirrored disks are removed, preventing further I/O to the secondary disks if Metro Mirror is accidentally restarted.

Because no I/Os are processed for a remote-copied volume during the ELB, dependent write logic ensures the consistency of the remote disks. GDPS performs Freeze for all LSS pairs that contain GDPS managed mirrored devices.


Important: Because of the dependent write logic, it is not necessary for all LSSs to be frozen at the same instant. In a large configuration with many thousands of remote copy pairs, it is not unusual to see short gaps between the times when the Freeze command is issued to each disk subsystem. However, because of the ELB, such gaps are not a problem.

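Why the gaps described above are harmless can be illustrated with a toy model, written in Python purely for illustration (it is not GDPS or DS8000 code). The names Write, mirrored_writes, and is_consistent are hypothetical. The model assumes a single chain of dependent writes in which each write is issued only after the previous one completes, and it treats the secondary as consistent when it holds an unbroken prefix of that chain. The elb=False case is a hypothetical contrast in which a write to a frozen LSS completes on the primary instead of being held.

```python
from dataclasses import dataclass


@dataclass
class Write:
    seq: int     # position in the dependent-write chain (1, 2, 3, ...)
    lss: str     # LSS holding the target volume
    time: float  # instant at which the application issues the write


def mirrored_writes(chain, freeze_times, elb=True):
    """Return the sequence numbers of the writes that reached the secondary.

    freeze_times maps each LSS to the instant its Freeze is processed, so
    different LSSs can be frozen at slightly different times.
    """
    secondary = []
    for w in chain:
        if w.time < freeze_times[w.lss]:
            # Not yet frozen: synchronous Metro Mirror writes both copies.
            secondary.append(w.seq)
        elif elb:
            # Frozen LSS is in Extended Long Busy: the write is held, the
            # application never sees completion, and no dependent write
            # is issued, so the rest of the chain stops here.
            break
        # Otherwise (hypothetical "no ELB" case): the write completes on the
        # primary only, and the application goes on to the next write.
    return secondary


def is_consistent(secondary):
    """Secondary is consistent if it holds an unbroken prefix of the chain."""
    return secondary == list(range(1, len(secondary) + 1))


# Log write, database update, log "commit" write. LSS A is frozen at 0.5 and
# LSS B only at 2.5, mimicking the gap between Freeze commands.
chain = [Write(1, "A", 1.0), Write(2, "B", 2.0), Write(3, "A", 3.0)]
freezes = {"A": 0.5, "B": 2.5}

with_elb = mirrored_writes(chain, freezes, elb=True)
without_elb = mirrored_writes(chain, freezes, elb=False)
print(with_elb, is_consistent(with_elb))        # [] True
print(without_elb, is_consistent(without_elb))  # [2] False
```

Even though LSS B is frozen two time units after LSS A, the held write on LSS A prevents the dependent update from ever reaching LSS B, so the secondary stays consistent. Without the ELB, the database update lands on the secondary without the log record it depends on.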

After GDPS performs the Freeze and the consistency of the remote disks is protected, what GDPS does depends on the client’s PPRC Failure policy (also known as Freeze policy). The policy, as described in “Freeze policy (PPRC Failure policy) options” on page 117, tells GDPS to take one of these three possible actions:

•Perform a Run action against all LSSs. This removes the ELB and allows production systems to continue using these devices. The devices are in remote copy-suspended mode, meaning that any further writes to these devices are no longer being mirrored. However, the changes are being tracked by the hardware so that, later, only the changed data are resynchronized to the secondary disks. For more information about this policy option, see “Freeze and Go” on page 118.

•System-reset all production systems. This ensures that no more updates can occur to the primary disks, because such updates are not mirrored and it is not possible to achieve RPO zero (zero data loss) if a failure occurs (or if the original trigger was an indication of a catastrophic failure). For more information about this option, see “Freeze and Stop” on page 118.

•Try to determine if the cause of the Metro Mirror suspension event was a permanent or temporary problem with any of the secondary disk subsystems in the GDPS configuration. If GDPS can determine that the Metro Mirror failure was caused by the secondary disk subsystem, this is not a potential indicator of a disaster in the primary site. In this case, GDPS performs a Run action and allows production to continue using the suspended primary devices. However, if the cause cannot be determined to be a secondary disk problem, GDPS resets all systems, which guarantees zero data loss. For more information, see “Freeze and Stop conditionally” on page 119.

GDPS HM uses a combination of storage subsystem and sysplex triggers to automatically secure, at the first indication of a potential disaster, a data-consistent secondary site copy of your data using the Freeze function. In this way, the secondary copy of the data is preserved in a consistent state, perhaps even before production applications are aware of any issues. Ensuring the data consistency of the secondary copy ensures that a normal system restart can be performed, instead of having to perform DBMS forward recovery actions. This is an essential design element of GDPS to minimize the time to recover the critical workloads if there is a disaster in the primary site.

You will appreciate why such a process must be automated. When a device suspends, there is simply not enough time to launch a manual investigation process. The entire mirror must be frozen by stopping further I/O to it, and then, based on the policy, either production is allowed to run with mirroring temporarily suspended or all systems are stopped to guarantee zero data loss.

In summary, freeze is triggered as a result of a Metro Mirror suspension event for any primary disk in the GDPS configuration, that is, at the first sign of a duplex mirror that is going out of duplex state. When a device suspends, all attached systems are sent a State Change Interrupt (SCI). A message is issued in all of those systems and then each system must issue multiple I/Os to investigate the reason for the suspension event.

When GDPS performs a freeze, all primary devices in the Metro Mirror configuration suspend. This results in significant SCI traffic and many messages in all of the systems. GDPS, with z/OS and microcode on the DS8000 disk subsystems, supports reporting suspensions in a summary message per LSS instead of at the individual device level. When compared to reporting suspensions on a per device basis, the Summary Event Notification for PPRC Suspends (PPRCSUM) dramatically reduces the message traffic and extraneous processing associated with PPRC suspension events and freeze processing.
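The scale of the reduction can be estimated with simple arithmetic. The following Python sketch assumes one suspension message per device per system without summary reporting, and one summary message per LSS per system with PPRCSUM; the function name and the configuration sizes are hypothetical, and the real traffic also includes the investigative I/Os that each system issues.

```python
def suspend_messages(systems: int, devices: int, lss_count: int, summary: bool) -> int:
    """Rough count of suspension messages issued during a Freeze.

    Assumption: one message per suspended device per system without
    summary reporting, one message per LSS per system with it.
    """
    per_system = lss_count if summary else devices
    return systems * per_system


# Hypothetical configuration: 16 systems, 10,000 mirrored devices in 64 LSSs.
print(suspend_messages(16, 10_000, 64, summary=False))  # 160000
print(suspend_messages(16, 10_000, 64, summary=True))   # 1024
```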

Freeze policy (PPRC Failure policy) options

As described, when a mirroring failure is detected, GDPS automatically and unconditionally performs a Freeze to secure a consistent set of secondary volumes in case the mirroring failure could be the first indication of a site failure. Because the primary disks are in an Extended Long Busy state as a result of the freeze and the production systems are locked out, GDPS must take some action. There is no time to interact with the operator on an event-by-event basis. The action must be taken immediately and is determined by a customer policy setting, namely the PPRC Failure policy option (also known as the Freeze policy option). GDPS will use this same policy setting after every Freeze event to determine what its next action should be. The options are listed here:

•PPRCFAILURE=STOP (Freeze and STOP)

GDPS resets production systems while I/O is suspended.

•PPRCFAILURE=GO (Freeze and Go)

GDPS allows production systems to continue operation after mirroring is suspended.

•PPRCFAILURE=COND (Freeze and Stop, conditionally)

GDPS tries to determine if a secondary disk caused the mirroring failure. If so, GDPS performs a Go. If not, GDPS performs a Stop.
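The three policy values can be summarized as a small decision function. This Python sketch is illustrative only, not GDPS code: the names Action and after_freeze are hypothetical, the Freeze itself (which is always performed first) is not modeled, and the secondary_caused flag stands in for GDPS having positively determined that a secondary disk subsystem caused the mirroring failure.

```python
from enum import Enum


class Action(Enum):
    GO = "Run (thaw) all LSSs; production continues with mirroring suspended"
    STOP = "Reset all production systems"


def after_freeze(policy: str, secondary_caused: bool) -> Action:
    """Choose the post-Freeze action for a PPRCFAILURE policy value.

    secondary_caused: True only if GDPS could determine that a secondary
    disk subsystem caused the mirroring failure.
    """
    if policy == "GO":
        return Action.GO
    if policy == "STOP":
        return Action.STOP
    if policy == "COND":
        # Go only when the cause is known to be on the secondary side;
        # otherwise assume a possible rolling disaster and stop.
        return Action.GO if secondary_caused else Action.STOP
    raise ValueError(f"unknown PPRCFAILURE value: {policy}")
```

For example, after_freeze("COND", secondary_caused=False) returns Action.STOP, which is the zero-data-loss outcome.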

Freeze and Stop

If your RPO is zero (that is, you cannot tolerate any data loss), you must select the Freeze and Stop policy to reset all production systems. With this setting, you can be assured that no updates are made to the primary volumes after the Freeze because all systems that can update the primary volumes are reset. You can choose to restart them when you see fit. For example, if this was a false freeze (that is, a false alarm), then you can quickly resynchronize the mirror and restart the systems only after the mirror is duplex.

If you are using duplexed coupling facility (CF) structures along with a Freeze and Stop policy, it might seem that you are guaranteed to be able to use the duplexed instance of your structures if you have to recover and restart your workload with the frozen secondary copy of your disks. However, this is not always the case. There can be rolling disaster scenarios where before, following, or during the freeze event, there is an interruption (perhaps a failure of CF duplexing links) that forces CFRM to drop out of duplexing.

There is no guarantee that the structure instance in the surviving site is the one that is kept. It is possible that CFRM keeps the instance in the site that is about to totally fail. In this case, there will not be an instance of the structure in the site that survives the failure.

To summarize, with a Freeze and Stop policy, if there is a surviving, accessible instance of application-related CF structures, that instance will be consistent with the frozen secondary disks. However, depending on the circumstances of the failure, even with structures duplexed across two sites you are not 100% guaranteed to have a surviving, accessible instance of the application structures and therefore you must have the procedures in place to restart your workloads without the structures.

A Stop policy ensures no data loss. However, if this was a false Freeze event, that is, it was a transient failure that did not necessitate recovering using the frozen disks, it will stop the systems unnecessarily.

Freeze and Go

If you can accept an RPO that is not necessarily zero, you might decide to let the production systems continue operation after the secondary volumes have been protected by the Freeze. In this case you would use a Freeze and Go policy. With this policy you avoid an unnecessary outage for a false freeze event, that is, if the trigger is simply a transient event.

However, if the trigger turns out to be the first sign of an actual disaster, you might continue operating for an amount of time before all systems actually fail. Any updates made to the primary volumes during this time will not have been replicated to the secondary disk, and therefore are lost. In addition, because the CF structures were updated after the secondary disks were frozen, the CF structure content is not consistent with the secondary disks. Therefore, the CF structures in either site cannot be used to restart workloads and log-based restart must be used when restarting applications.

This is not full forward recovery. It is forward recovery of any data such as DB2 group buffer pools that might have existed in a CF but might not have been written to disk yet. This results in prolonged recovery times. The extent of this elongation will depend on how much such data existed in the CFs at that time. With a Freeze and Go policy you may consider tuning applications such as DB2 to harden such data on disk more frequently than otherwise.

Freeze and Go is a high-availability option that avoids production outage for false Freeze events. However, it carries a potential for data loss.

Freeze and Stop conditionally

Field experience has shown that most occurrences of freeze triggers are not necessarily the start of a rolling disaster, but are “false freeze” events, which do not necessitate recovery on the secondary disk. Examples of such events include connectivity problems to the secondary disks and secondary disk subsystem failure conditions.

With a COND (conditional) specification, the action that GDPS takes after it performs the Freeze is conditional. GDPS tries to determine if the mirroring problem was the result of a permanent or temporary secondary disk subsystem problem:

•If GDPS can determine that the freeze was triggered as a result of a secondary disk subsystem problem, then GDPS performs a Go. That is, it allows production systems to continue to run using the primary disks. However, updates are not mirrored until the secondary disk can be fixed and Metro Mirror can be resynchronized.

•If GDPS cannot ascertain that the cause of the freeze was a secondary disk subsystem, then GDPS deduces that this could be the beginning of a rolling disaster in the primary site. Therefore, it performs a Stop, resetting all of the production systems to ensure zero data loss. GDPS cannot always detect that a particular freeze trigger was caused by a secondary disk, and some freeze events that are truly caused by a secondary disk could still result in a Stop.

For GDPS to determine whether a freeze trigger could have been caused by the secondary disk subsystem, the IBM DS8000 disk subsystems provide a special query capability known as the Query Storage Controller Status microcode function. If all disk subsystems in the GDPS managed configuration support this feature, GDPS uses this special function to query the secondary disk subsystems in the configuration to understand the state of the secondaries and whether one of those secondaries could have caused the freeze. If you use the COND policy setting but not all disk subsystems in your configuration support this function, GDPS cannot query the secondary disk subsystems, and the resulting action will be a Stop.
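The conditional logic described above can be sketched as follows. This Python function is a hypothetical illustration, not GDPS code: it reduces the Query Storage Controller Status result for each secondary subsystem to a single boolean meaning "this secondary could have caused the freeze", and it models the support check as one flag covering all subsystems in the configuration.

```python
def cond_action(all_support_query: bool, secondary_status: dict) -> str:
    """Return "GO" or "STOP" for PPRCFAILURE=COND after a Freeze.

    all_support_query: True if every disk subsystem in the managed
        configuration supports the query function.
    secondary_status: secondary subsystem name -> True if the query shows
        that this secondary could have caused the freeze (hypothetical
        simplification of the real query result).
    """
    if not all_support_query:
        # GDPS cannot query the secondaries, so it cannot rule out a
        # primary-site disaster: the safe action is Stop.
        return "STOP"
    if any(secondary_status.values()):
        return "GO"
    # Cause not attributable to a secondary: possible rolling disaster.
    return "STOP"
```

A secondary-side failure that the query does not reveal still produces "STOP", which matches the caveat that some freezes truly caused by a secondary disk can still result in a Stop.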

This option could provide a useful compromise wherein you can minimize the chance that systems would be stopped for a false freeze event, and increase the chance of achieving zero data loss for a real disaster event.

PPRC Failure policy selection considerations

As described, the PPRC Failure policy option specification directly relates to recovery time and recovery point objectives, which are business objectives. Therefore, the policy option selection is really a business decision, rather than an IT decision. If data associated with your transactions is of high value, it might be more important to ensure that no data associated with your transactions is ever lost, so you might decide on a Freeze and Stop policy. If you have huge volumes of relatively low value transactions, you might be willing to risk some lost data in return for avoiding unneeded outages with a Freeze and Go policy. The Freeze and Stop Conditional policy attempts to minimize both the chance of unnecessary outages and the chance of data loss; however, a small risk of either remains.

Most installations start with a Freeze and Go policy. Companies that have an RPO of zero typically then move on and implement a Freeze and Stop Conditional or Freeze and Stop policy after the implementation is proven to be stable.

GDPS HyperSwap function

If there is a problem writing or accessing the primary disk because of a failing, failed, or non-responsive primary disk, there is a need to swap from the primary disks to the secondary disks.

GDPS HM delivers a powerful function known as HyperSwap, which provides the ability to swap from using the primary devices in a mirrored configuration to using what had been the secondary devices, transparently to the production systems and applications that are using these devices. Before the availability of HyperSwap, a transparent disk swap was not possible. All systems using the primary disk would have been shut down (or could have failed, depending on the nature and scope of the failure) and would have been re-IPLed using the secondary disks. Disk failures were often a single point of failure for the entire sysplex.

With HyperSwap, such a switch can be accomplished without IPL and with simply a brief hold on application I/O. The HyperSwap function is completely controlled by automation, thus allowing all aspects of the disk configuration switch to be controlled through GDPS.

HyperSwap can be invoked in two ways:

•Planned HyperSwap

A planned HyperSwap is invoked by operator action using GDPS facilities. One example of a planned HyperSwap is where a HyperSwap is initiated in advance of planned disruptive maintenance to a disk subsystem.

•Unplanned HyperSwap

An unplanned HyperSwap is invoked automatically by GDPS, triggered by events that indicate a primary disk problem.

Primary disk problems can be detected as a direct result of an I/O operation to a specific device that fails because of a reason that indicates a primary disk problem such as these:

– No paths available to the device

– Permanent error

– I/O timeout

In addition to a disk problem being detected as a result of an I/O operation, it is also possible for a primary disk subsystem to proactively report that it is experiencing an acute problem. The IBM DS8000 models have a special microcode function known as the Storage Controller Health Message Alert capability. Problems of different severity are reported by disk subsystems that support this capability. Those problems classified as acute are also treated as HyperSwap triggers. After systems are swapped to use the secondary disks, the disk subsystem and operating system can try to perform recovery actions on the former primary without impacting the applications using those disks.
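The split between Freeze triggers and HyperSwap triggers can be sketched as a classifier. The event names below (pprc_suspend, no_paths, permanent_error, io_timeout, health_alert) are hypothetical labels for the conditions described in this section, not real z/OS messages or GDPS identifiers. Whether a HyperSwap trigger actually results in a swap is a separate decision governed by the Primary Failure policy.

```python
from enum import Enum, auto


class Trigger(Enum):
    FREEZE = auto()     # mirroring problem: the primary is still writable
    HYPERSWAP = auto()  # primary disk problem
    NONE = auto()


# Hypothetical event names standing in for the I/O failure reasons above.
PRIMARY_IO_ERRORS = {"no_paths", "permanent_error", "io_timeout"}


def classify(event: str, severity: str = "") -> Trigger:
    if event == "pprc_suspend":
        return Trigger.FREEZE
    if event in PRIMARY_IO_ERRORS:
        return Trigger.HYPERSWAP
    if event == "health_alert":
        # Only Storage Controller Health Message Alerts classified as
        # acute are treated as HyperSwap triggers.
        return Trigger.HYPERSWAP if severity == "acute" else Trigger.NONE
    return Trigger.NONE
```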

Planned and unplanned HyperSwap have requirements in terms of the physical configuration, such as having a symmetric configuration. If a client’s environment meets these requirements, there is no special enablement required to perform planned swaps. Unplanned swaps are not enabled by default and must be enabled explicitly as a policy option. For more information, see “HyperSwap (Primary Failure) policy options” on page 122.

When a swap is initiated, GDPS will always validate various conditions to ensure that it is safe to swap. For example, if the mirror is not fully duplex, that is, not all volume pairs are in a duplex state, then a swap cannot be performed. The way that GDPS reacts to such conditions will change depending on the condition detected and whether the swap is a planned or unplanned swap.

Assuming that there are no show-stoppers and the swap proceeds, for both planned and unplanned HyperSwap, the systems that are using the primary volumes will experience a temporary pause in I/O processing. GDPS blocks I/O at two levels: at the channel subsystem level, by performing a Freeze that puts all disks into Extended Long Busy, and at the operating system (UCB) level, by quiescing I/O in all systems. This ensures that no systems use the disks until the switch is complete. During this time when I/O is paused, the following actions occur:

•The Metro Mirror configuration is physically switched. This involves physically changing the secondary disk status to primary. Secondary disks are protected and cannot be used by applications. Changing their status to primary allows them to come online to systems and be used.

•The disks will be logically switched in each of the systems in the GDPS configuration. This involves switching the internal pointers in the operating system control blocks (UCBs). The operating system will point to the former secondary devices instead of the former primary devices.

•For planned swaps, the mirroring direction can be reversed (optional).

•Finally, the systems resume operation using the new, swapped-to primary devices; applications are not aware that different devices are now being used.
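The swap sequence described above can be traced through a simplified Python model. Everything here is hypothetical and for illustration only: Pair, System, and hyperswap are not GDPS interfaces, the duplex check stands in for the broader validation GDPS performs (and for which GDPS reacts differently to planned and unplanned swaps), and the reversed mirror is treated as immediately back in duplex instead of being resynchronized.

```python
from dataclasses import dataclass, field


@dataclass
class Pair:
    primary: str           # device currently acting as Metro Mirror primary
    secondary: str         # device currently acting as secondary
    state: str = "duplex"  # "duplex" or "suspended"


@dataclass
class System:
    name: str
    ucb: dict = field(default_factory=dict)  # volume -> device the UCB points at
    io_quiesced: bool = False


def hyperswap(pairs, systems, planned, reverse=False):
    """Walk through the swap steps and return a log of what was done."""
    # Validation: every pair must be duplex, otherwise no swap is possible.
    if any(p.state != "duplex" for p in pairs):
        raise RuntimeError("mirror is not fully duplex; swap not allowed")
    log = ["Freeze: all devices enter Extended Long Busy"]
    for s in systems:
        s.io_quiesced = True
    log.append("I/O quiesced at the UCB level in every system")

    # Physical switch: former secondaries become (suspended) primaries.
    swap_to = {p.primary: p.secondary for p in pairs}
    for p in pairs:
        p.primary, p.secondary = p.secondary, p.primary
        p.state = "suspended"
    log.append("secondaries switched to primary status")

    # Logical switch: repoint each system's UCBs at the former secondaries.
    for s in systems:
        s.ucb = {vol: swap_to.get(dev, dev) for vol, dev in s.ucb.items()}
    log.append("UCB pointers swapped in every system")

    # Optional, planned swaps only: mirror in the reverse direction.
    # Simplification: the reversed pairs are treated as instantly duplex.
    if planned and reverse:
        for p in pairs:
            p.state = "duplex"
        log.append("mirroring reversed")

    for s in systems:
        s.io_quiesced = False
    log.append("I/O resumed on the new primaries")
    return log
```

In an unplanned swap the pairs are left suspended even if reverse is requested, mirroring the behavior described later for PRIMARYFAILURE=SWAP, where no attempt is made to reverse mirroring onto disks known to be in a problematic state.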

This brief pause during which systems are locked out of performing I/O is known as the User Impact Time. In benchmark measurements at IBM using currently supported releases of GDPS and IBM DS8000 disk subsystems, the User Impact Time to swap 10,000 pairs across 16 systems during an unplanned HyperSwap was less than 10 seconds. Most implementations are actually much smaller than this and typical impact times using the most current storage and server hardware are measured in seconds. Although results will depend on your configuration, these numbers give you a high-level idea of what to expect.

GDPS HM HyperSwaps all devices in the managed configuration. Just as the Freeze function applies to the entire consistency group, HyperSwap similarly applies to the entire consistency group. For example, if a single mirrored volume fails and HyperSwap is invoked, processing is swapped to the secondary copy of all mirrored volumes in the configuration, including those in other, unaffected, subsystems. This is because, to maintain disaster readiness, all primary volumes must be in the same site. If HyperSwap were to swap only the failed LSS, you would then have several primaries in one site and the remainder in the other site. Such a split also makes the environment complex to operate and makes I/O configurations harder to administer.

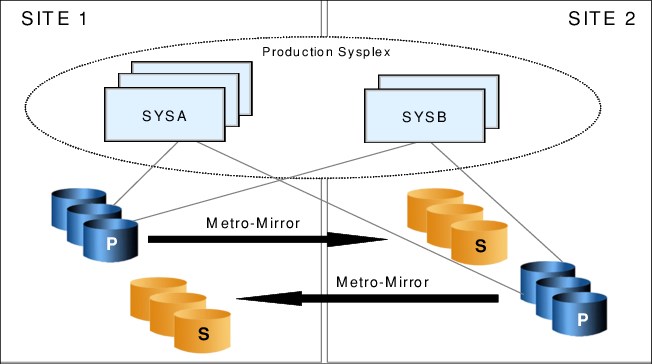

Why is this necessary? Consider the configuration that is shown in Figure 4-1. This is what might happen if only the volumes of a single LSS or subsystem were hyperswapped without swapping the whole consistency group. What happens if a remote copy failure occurs at 15:00? The secondary disks in both sites are frozen at 15:00 and the primary disks (in the case of a Freeze and Go policy) continue to receive updates.

Figure 4-1 Unworkable Metro Mirror disk configuration

Now assume that either site is hit by another failure at 15:10. What do you have? Half the disks are now at 15:00 and the other half are at 15:10 and neither site has consistent data. In other words, the volumes are of virtually no value to you.

If you had all of the secondaries in Site2, all volumes in that site would be consistent. If you had the disaster at 15:10, you would lose 10 minutes of data with the Go policy, but at least all of the data in Site2 would be usable. Using a Freeze and Stop policy is no better for this partial swap scenario because, with a mix of primary disks in both sites, you must maintain I/O configurations that can match every possible combination simply to IPL any systems.

More likely, you must first restore mirroring across the entire consistency group before recovering systems, and this is not really practical. Therefore, for disaster recovery readiness, it is necessary that all of the primary volumes are in one site and all of the secondaries in the other site.
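The 15:00/15:10 scenario can be replayed with a small Python model. The function names and LSS labels are hypothetical; the model assumes a Freeze and Go policy, so every secondary copy stops at the freeze time while primary copies in the surviving site keep receiving updates until the second failure, and it treats a site as usable only if all of its copies reflect the same instant.

```python
def surviving_copy_times(primary_site, surviving_site, freeze_time, failure_time):
    """Time up to which each LSS's copy in the surviving site holds data.

    primary_site maps each LSS to the site holding its primary volumes.
    Assumes Freeze and Go: secondaries stop at freeze_time, primaries keep
    being updated until the second failure at failure_time.
    """
    return {
        lss: failure_time if site == surviving_site else freeze_time
        for lss, site in primary_site.items()
    }


def usable(times):
    # A restartable image needs every volume to reflect the same instant.
    return len(set(times.values())) == 1


mixed = {"LSS1": "Site1", "LSS2": "Site2"}    # partial swap, as in Figure 4-1
aligned = {"LSS1": "Site1", "LSS2": "Site1"}  # all primaries in Site1

print(usable(surviving_copy_times(mixed, "Site1", "15:00", "15:10")))    # False
print(usable(surviving_copy_times(mixed, "Site2", "15:00", "15:10")))    # False
print(usable(surviving_copy_times(aligned, "Site2", "15:00", "15:10")))  # True
```

With the mixed layout neither site yields a usable image, whichever site survives; with all primaries in one site, the other site holds a consistent image as of 15:00.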

HyperSwap with less than full channel bandwidth

You may consider enabling unplanned HyperSwap even if you do not have sufficient cross-site channel bandwidth to sustain the full production workload for normal operations. Assuming that a disk failure is likely to cause an outage and you will need to switch to using disk in the other site, the unplanned HyperSwap might at least present you with the opportunity to perform an orderly shutdown of your systems first. Shutting down your systems cleanly avoids the complications and restart time elongation associated with a crash-restart of application subsystems.

HyperSwap (Primary Failure) policy options

Clients might prefer not to immediately enable their environment for unplanned HyperSwap when they first implement GDPS. For this reason, HyperSwap is not enabled by default. However, we strongly suggest that all GDPS HM clients enable their environment for unplanned HyperSwap.

An unplanned swap is the action that makes the most sense when a primary disk problem is encountered. However, other policy specifications that will not result in a swap are available. When GDPS detects a primary disk problem trigger, the first thing it does is a Freeze (the same as it performs when a mirroring problem trigger is detected).

GDPS then uses the selected Primary Failure policy option to determine what action it will take next:

•PRIMARYFAILURE=GO

No swap is performed. The action GDPS takes is the same as for a freeze event with policy option PPRCFAILURE=GO. A Run action is performed, which allows systems to continue using the original primary disks. Metro Mirror is suspended and therefore updates are not replicated to the secondary. However, depending on the scope of the primary disk problem, it might be that all or some production workloads simply cannot run or cannot sustain required service levels. Such a situation might necessitate restarting the systems on the secondary disks. Because of the freeze, the secondary disks are in a consistent state and can be used for restart. However, any transactions that ran after the Go action will be lost.

•PRIMARYFAILURE=STOP

No swap is performed. The action GDPS takes is the same as for a freeze event with policy option PPRCFAILURE=STOP. GDPS resets all the production systems. This ensures that no further I/O occurs. After performing situation analysis, if it is determined that this was not a transient issue and that the secondaries should be used to re-IPL the systems, no data will be lost.

•PRIMARYFAILURE=SWAP,swap_disabled_action

The first parameter, SWAP, indicates that after performing the Freeze, GDPS will proceed with an unplanned HyperSwap. When the swap is complete, the systems are running on the new, swapped-to primary disks (the former secondaries). Metro Mirror is in a suspended state. Because the primary disks are known to be in a problematic state, there is no attempt to reverse mirroring. After the problem with the primary disks is fixed, you can instruct GDPS to resynchronize Metro Mirror from the current primaries to the former ones (which are now considered to be secondaries).

The second part of this policy, swap_disabled_action, indicates what GDPS should do if HyperSwap had been temporarily disabled by operator action at the time the trigger was encountered. Effectively, an operator action has instructed GDPS not to perform a HyperSwap, even if there is a swap trigger. GDPS has already done a freeze. So the second part of the policy says what GDPS should do next.

The following options are available for the second parameter, which comes into play only if HyperSwap was disabled by the operator (remember, disk is already frozen):

– GO: This is the same action as GDPS would have performed if the policy option had been specified as PRIMARYFAILURE=GO.

– STOP: This is the same action as GDPS would have performed if the policy option had been specified as PRIMARYFAILURE=STOP.
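The two-part PRIMARYFAILURE specification can be read as a small decision function. This Python sketch is a hypothetical illustration that takes the value as a plain string such as "SWAP,STOP"; it does not reproduce how GDPS actually parses its policy, and it models only the choice made after the Freeze that GDPS always performs first.

```python
def primary_failure_action(policy: str, hyperswap_enabled: bool) -> str:
    """Action after the Freeze for a PRIMARYFAILURE value like "SWAP,STOP".

    hyperswap_enabled is False when an operator has temporarily disabled
    HyperSwap; only then does the second (swap_disabled_action) part apply.
    """
    first, _, swap_disabled_action = policy.partition(",")
    if first in ("GO", "STOP"):
        return first  # no swap: same as PPRCFAILURE=GO or PPRCFAILURE=STOP
    if first != "SWAP" or swap_disabled_action not in ("GO", "STOP"):
        raise ValueError(f"unsupported PRIMARYFAILURE value: {policy}")
    return "SWAP" if hyperswap_enabled else swap_disabled_action
```

For example, primary_failure_action("SWAP,STOP", hyperswap_enabled=False) returns "STOP", because the disks are already frozen and the operator has ruled out a swap.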

Primary Failure policy specification considerations

As indicated previously, the action that best serves RTO/RPO objectives when there is a primary disk problem is to perform an unplanned HyperSwap. Therefore, the SWAP policy option is the recommended policy option.

For the Stop or Go choice, either as the second part of the SWAP specification or if you will not be using SWAP, similar considerations apply as discussed for the PPRC Failure policy options to Stop or Go. Go carries the risk of data loss if it becomes necessary to abandon the primary disk and restart systems on the secondary. Stop carries the risk of taking an unnecessary outage if the problem was transient.

The key difference is that with a mirroring failure, the primary disks are not broken. When you allow the systems to continue to run on the primary disk with the Go option, the systems are likely to run with no problems, barring a disaster, which has a low probability. With a primary disk problem, with the Go option, you are allowing the systems to continue running on what are known to be disks that experienced a problem just seconds ago. If this was a serious problem with widespread impact, such as an entire disk subsystem failure, the applications are going to experience severe problems. Some transactions might continue to commit data to those disks that are not broken. Other transactions might be failing or experiencing serious service time issues.

Finally, if there is a decision to restart systems on the secondaries because the primary disks are simply not able to support the workloads, there will be data loss. The probability that a primary disk problem is a real problem that will necessitate restart on the secondary disks is much higher when compared to a mirroring problem. A Go specification in the Primary Failure policy increases your overall risk for data loss.

If the primary failure was of a transient nature, a Stop specification results in an unnecessary outage. However, with primary disk problems it is likely that the problem could necessitate restart on the secondary disks. Therefore, a Stop specification in the Primary Failure policy avoids data loss and facilitates faster restart.

The considerations relating to CF structures with a PRIMARYFAILURE event are similar to a PPRCFAILURE event. If there is an actual swap, the systems continue to run and continue to use the same structures as they did before the swap; the swap is transparent. With a Go action, you continue to update the CF structures along with the primary disks after the Go. If you need to abandon the primary disks and restart on the secondary, the structures are inconsistent with the secondary disks and are not usable for restart purposes. This will prolong the restart and, therefore, your recovery time. With Stop, if you decide to restart the systems using the secondary disks, there is no consistency issue with the CF structures because no further updates occurred on either set of disks after the trigger was detected.

GDPS use of DS8000 functions

GDPS strives to use, where it makes sense, enhancements to the IBM DS8000 disk technologies. In this section we provide information about the key DS8000 technologies that GDPS supports and uses.

Failover/Failback support

When a primary disk failure occurs and the disks are switched to the secondary devices, PPRC Failover/Failback (FO/FB) support eliminates the need to do a full copy when reestablishing replication in the opposite direction. Because the primary and secondary volumes are often in the same state when the freeze occurred, the only differences between the volumes are the updates that occur to the secondary devices after the switch. Failover processing sets the secondary devices to primary suspended status and starts change recording for any subsequent changes made. When the mirror is reestablished with failback processing, the original primary devices become secondary devices and a resynchronization of changed tracks takes place.
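The effect of change recording on failback can be illustrated with a toy model. This is a sketch under simplifying assumptions, not DS8000 internals: `MirroredPair` is a hypothetical class, a volume is a list of track values, and change recording is a Python set rather than a hardware bitmap. It also assumes, as in the text, that the former primary receives no updates after the switch.

```python
class MirroredPair:
    """Toy Metro Mirror pair illustrating failover/failback (FO/FB)."""

    def __init__(self, tracks: int):
        self.primary = [0] * tracks
        self.secondary = [0] * tracks
        self.changed = set()      # change recording after failover
        self.failed_over = False

    def write(self, track: int, value: int) -> None:
        if self.failed_over:
            # After failover, the former secondary (now primary suspended)
            # takes the updates, and change recording notes each track.
            self.secondary[track] = value
            self.changed.add(track)
        else:
            # Synchronous mirroring: both copies are updated.
            self.primary[track] = value
            self.secondary[track] = value

    def failover(self) -> None:
        # Secondaries become primary suspended; start change recording.
        self.failed_over = True
        self.changed.clear()

    def failback(self) -> int:
        """Re-establish mirroring in the opposite direction.

        Copies only the recorded tracks to the former primary, which becomes
        the new secondary, and returns how many tracks were copied.
        """
        for track in self.changed:
            self.primary[track] = self.secondary[track]
        copied = len(self.changed)
        # Swap roles: the former secondary is now the primary.
        self.primary, self.secondary = self.secondary, self.primary
        self.changed.clear()
        self.failed_over = False
        return copied
```

In a run where two distinct tracks out of 1,000 are updated after failover, `failback()` copies those two tracks instead of performing a full copy.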

GDPS HM requires Metro Mirror FO/FB capability to be available on all disk subsystems in the managed configuration.

PPRC Extended Distance (PPRC-XD)

PPRC-XD (also known as Global Copy) is an asynchronous form of the PPRC copy technology. GDPS uses PPRC-XD rather than synchronous PPRC (Metro Mirror) to reduce the performance impact of certain remote copy operations that potentially involve a large amount of data. For more information, see section 4.6.2, “GDPS HM reduced impact initial copy and resynchronization” on page 143.
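The general idea of the reduced-impact approach is to drain the backlog asynchronously with Global Copy and switch to synchronous Metro Mirror only when little data remains to be copied. The following simulation is a hypothetical sketch: `initial_copy`, the per-interval rates, and the conversion threshold are illustrative values, not GDPS parameters.

```python
def initial_copy(out_of_sync_tracks: int, copy_rate: int, write_rate: int,
                 threshold: int, max_intervals: int = 1000) -> list:
    """Simulate a Global Copy (PPRC-XD) drain followed by conversion to
    synchronous Metro Mirror. Returns the sequence of (mode, backlog) states.

    copy_rate  - tracks copied asynchronously per interval (hypothetical)
    write_rate - tracks newly updated by applications per interval (hypothetical)
    threshold  - backlog at which conversion to synchronous is done (hypothetical)
    """
    states = []
    for _ in range(max_intervals):
        if out_of_sync_tracks <= threshold:
            # Backlog is small, so going synchronous adds little write delay.
            states.append(("METRO_MIRROR_SYNC", out_of_sync_tracks))
            return states
        states.append(("GLOBAL_COPY", out_of_sync_tracks))
        out_of_sync_tracks = max(0, out_of_sync_tracks - copy_rate + write_rate)
    raise RuntimeError("copy did not converge: writes outpace the copy rate")
```

With a backlog of 1,000 tracks, a copy rate of 300, and a write rate of 50 per interval, the backlog drains through 750, 500, and 250 before conversion; if writes outpace copying, the sketch reports that the copy cannot converge.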

Storage Controller Health Message Alert

This function facilitates triggering an unplanned HyperSwap proactively when the disk subsystem reports an acute problem that requires an extended recovery time. For more information about unplanned HyperSwap triggers, see “GDPS HyperSwap function” on page 119.

PPRC Summary Event Messages

GDPS supports the DS8000 PPRC Summary Event Messages (PPRCSUM) function, which is aimed at reducing the message traffic and the processing of these messages for Freeze events. For more information, see “GDPS Freeze function for mirroring failures” on page 115.

Soft Fence

Soft Fence provides the capability to block access to selected devices. As discussed in “Protecting secondary disks from accidental update” on page 127, GDPS uses Soft Fence to avoid write activity on disks that are exposed to accidental update in certain scenarios.

On-demand dump (also known as non-disruptive statesave)

When problems occur with disk subsystems, such as those that result in an unplanned HyperSwap, a mirroring suspension, or performance issues, a lack of diagnostic data from the time of the event can make it difficult to identify the root cause. This function is designed to reduce the likelihood of missing diagnostic information.

Taking a full statesave can lead to temporary disruption to the host I/O and is often disliked by clients for this reason. The on-demand dump (ODD) capability of the disk subsystem facilitates taking a non-disruptive statesave (NDSS) at the time that such an event occurs. The microcode does this automatically for certain events, such as taking a dump of the primary disk subsystem that triggers a Metro Mirror freeze event, and also allows an NDSS to be requested. This enables first failure data capture (FFDC) and thus ensures that diagnostic data is available to aid problem determination. Be aware that not all information that is contained in a full statesave is contained in an NDSS. Therefore, there might still be failure situations where a full statesave is requested by the support organization.

GDPS provides support for taking an NDSS using the remote copy panels (or GDPS GUI). In addition to this support, GDPS autonomically takes an NDSS if there is an unplanned freeze or HyperSwap event.

Query Host Access

When a Metro Mirror disk pair is being established, the device that is the target (secondary) must not be used by any system. The same is true when establishing a FlashCopy relationship to a target device. If the target is in use, the establishment of the Metro Mirror or FlashCopy relationship fails. When such failures occur, it can be a tedious task to identify which system is holding up the operation.

The Query Host Access disk function provides the means to query and identify what system is using a selected device. GDPS uses this capability and adds usability in several ways:

•Query Host Access identifies the LPAR that is using the selected device through the CPC serial number and LPAR number. It is still a tedious job for operations staff to translate this information to a system or CPC and LPAR name. GDPS does this translation and presents the operator with more readily usable information, avoiding this additional translation effort.

•Whenever GDPS is requested to perform a Metro Mirror or FlashCopy establish operation, GDPS first performs Query Host Access to see if the operation is expected to succeed or fail because of one or more target devices being in use. It alerts operations if the operation is expected to fail, and identifies the target devices in use and the LPARs holding them.

•GDPS continually monitors the target devices defined in the GDPS configuration and alerts operations that target devices are in use when they should not be. This allows operations to fix the reported problems in a timely manner.

•GDPS provides the ability for the operator to perform ad hoc Query Host Access to any selected device using the GDPS panels (or GDPS GUI).
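The pre-check and translation steps above can be sketched as follows. This is an illustration only: `HostAccess`, `precheck_establish`, the `query_host_access` callable, and the `lpar_names` mapping are hypothetical stand-ins for the disk subsystem query and for the configuration knowledge GDPS uses to turn a CPC serial number and LPAR number into readable names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostAccess:
    device: str        # target device number
    cpc_serial: str    # as reported by the disk subsystem
    lpar_number: int   # as reported by the disk subsystem


def precheck_establish(targets, query_host_access, lpar_names):
    """Return operator alerts for target devices that are in use.

    query_host_access: callable(device) -> list of HostAccess (hypothetical).
    lpar_names: dict mapping (cpc_serial, lpar_number) to
        (cpc_name, lpar_name, system_name), the translation step.
    An empty list means the establish is expected to succeed.
    """
    alerts = []
    for device in targets:
        for access in query_host_access(device):
            key = (access.cpc_serial, access.lpar_number)
            cpc, lpar, system = lpar_names.get(key, ("?", "?", "unknown"))
            alerts.append(f"{device} in use by system {system} (CPC {cpc}, LPAR {lpar})")
    return alerts
```

The same function serves both the pre-establish check (pass the devices of the pending operation) and the continual monitoring case (pass all configured target devices on a timer).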

Easy Tier Heat Map Transfer

IBM DS8000 Easy Tier optimizes data placement (placement of logical volumes) across the various physical tiers of storage within a disk subsystem in order to optimize application performance. The placement decisions are based on learning the data access patterns and can be changed dynamically and transparently to the applications using this data.

Metro Mirror mirrors the data from the primary to the secondary disk subsystem; however, the Easy Tier learning information is not included in Metro Mirror scope. The secondary disk subsystems are optimized according to the workload on these subsystems, which differs from the activity on the primary (there is only write workload on the secondary; there is read/write activity on the primary). As a result of this difference, during a disk switch or disk recovery, the secondary disks that you switch to are likely to display different performance characteristics compared to the former primary.

Easy Tier Heat Map Transfer is the DS8000 capability to transfer the Easy Tier learning from a Metro Mirror primary to the secondary disk subsystem so that the secondary disk subsystem can also be optimized based on this learning and has similar performance characteristics if it is promoted to become the primary.

GDPS integrates support for Heat Map Transfer. The appropriate Heat Map Transfer actions (such as start or stop of the processing and reversing transfer direction) are incorporated into the GDPS managed processes. For example, if Metro Mirror is temporarily suspended by GDPS for a planned or unplanned secondary disk outage, Heat Map Transfer is also suspended; or if Metro Mirror direction is reversed as a result of a HyperSwap, Heat Map Transfer direction is also reversed.
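The coupling between Heat Map Transfer and Metro Mirror can be pictured as a small state holder that reacts to mirroring events. The class and event method names below are hypothetical and only illustrate the rule that Heat Map Transfer runs while mirroring runs and in the same direction.

```python
class HeatMapTransfer:
    """Sketch: Heat Map Transfer state follows the Metro Mirror state."""

    def __init__(self, source: str = "SITE1", target: str = "SITE2"):
        self.source, self.target = source, target
        self.active = True

    def on_mirror_suspended(self) -> None:
        # Planned or unplanned secondary disk outage: stop transferring.
        self.active = False

    def on_mirror_resumed(self) -> None:
        self.active = True

    def on_hyperswap(self) -> None:
        # Metro Mirror direction is reversed, so the learning now flows
        # from the new primary to the new secondary.
        self.source, self.target = self.target, self.source
```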

Protecting secondary disks from accidental update

A system cannot be IPLed by using a disk that is physically a Metro Mirror secondary disk because Metro Mirror secondary disks cannot be brought online to any systems. However, a disk can be secondary from a GDPS (and application use) perspective but physically have a simplex or primary status from a Metro Mirror perspective.

For planned and unplanned HyperSwap, and a disk recovery, GDPS changes former secondary disks to primary or simplex state. However, these actions do not modify the state of the former primary devices, which remain in the primary state. Therefore, the former primary devices remain accessible and usable even though they are considered to be the secondary disks from a GDPS perspective. This makes it possible to accidentally update or IPL from the wrong set of disks. Accidentally using the wrong set of disks can result in a potential data integrity or data loss problem.

GDPS HM provides IPL protection early in the IPL process. During initialization of GDPS, if GDPS detects that the system coming up has just been IPLed using the wrong set of disks, GDPS will quiesce that system, preventing any data integrity problems that could be experienced had the applications been started.

GDPS also uses an IBM DS8000 disk subsystem capability, which is called Soft Fence, for configurations where the disks support this function. Soft Fence provides the means to fence, that is, to block access to, a selected device. GDPS uses Soft Fence when appropriate to fence devices that might otherwise be exposed to accidental update.
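The two protections can be expressed as simple checks against the GDPS view of which site holds the primary disks. This is a hypothetical sketch: the function names, the site labels, and the state strings are illustrative, not GDPS interfaces.

```python
def ipl_check(ipl_volume_site: str, gdps_primary_site: str) -> str:
    """Early-IPL check: a system IPLed from the disk set that GDPS currently
    regards as secondary is quiesced before applications start."""
    return "CONTINUE" if ipl_volume_site == gdps_primary_site else "QUIESCE"


def fence_candidates(devices, gdps_secondary_site: str) -> list:
    """Devices that GDPS regards as secondary but that are not physically
    Metro Mirror secondaries (for example, former primaries left in primary
    state after a HyperSwap). They can still be brought online, so they are
    candidates for Soft Fence.

    devices: iterable of (device_number, site, physical_state) tuples, where
    physical_state is "PRIMARY", "SIMPLEX", or "SECONDARY".
    """
    return [devno for devno, site, state in devices
            if site == gdps_secondary_site and state != "SECONDARY"]
```

After a HyperSwap that makes Site2 the primary disk site, a Site1 device still physically in primary state is reported as a fence candidate, and a system IPLed from Site1 disks would be quiesced.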

4.1.2 Protecting distributed (FB) data


Terminology: The following definitions describe the terminology that we use in this book when referring to the various types of disks:

•IBM Z or Count-Key-Data (CKD) disks

GDPS can manage disks that are formatted as CKD disks (the traditional mainframe format) that are used by any of the following IBM Z operating systems: z/VM, VSE, KVM, and Linux on IBM Z.

We refer to the disks that are used by a system that is running on the mainframe as IBM Z disks, CKD disks, or CKD devices. These terms are used interchangeably.

•FB disks

Disks that are used by systems other than those systems that are running on IBM Z are traditionally formatted as Fixed Block (FB) and are referred to as FB disks or FB devices in this book.


GDPS HM can manage the mirroring of FB devices that are used by non-mainframe operating systems. The FB devices can be part of the same consistency group as the mainframe CKD devices, or they can be managed separately in their own consistency group.

For more information about FB disk management, see 4.3, “Fixed Block disk management” on page 132.

4.1.3 Protecting other CKD data

Systems that are fully managed by GDPS are known as GDPS managed systems or GDPS systems. These are the z/OS systems in the GDPS sysplex.

GDPS HM can also manage the disk mirroring of CKD disks that are used by systems outside the sysplex: other z/OS systems, Linux on IBM Z, VM, and VSE systems that are not running any GDPS HM or xDR automation. These are known as “foreign systems.”

Because GDPS manages Metro Mirror for the disks used by these systems, these disks must be attached to the GDPS controlling systems. With this setup, GDPS is able to capture mirroring problems and will perform a freeze. All GDPS managed disks belonging to the GDPS systems and these foreign systems are frozen together, regardless of whether the mirroring problem is encountered on the GDPS systems’ disks or the foreign systems’ disks.

GDPS HM is not able to directly communicate with these foreign systems. For this reason, GDPS automation will not be aware of certain other conditions such as a primary disk problem that is detected by these systems. Because GDPS will not be aware of such conditions that would have otherwise driven autonomic actions such as HyperSwap, GDPS cannot react to these events.

If an unplanned HyperSwap occurs (because it triggered on a GDPS managed system), the foreign systems cannot and do not swap to using the secondaries. Mechanisms are provided to prevent these systems from continuing to use the former primary devices after the GDPS systems are swapped. You can then use GDPS automation facilities to reset these systems and re-IPL them by using the swapped-to primary disks.

4.2 GDPS Metro HyperSwap Manager configurations

A basic GDPS Metro HyperSwap Manager configuration consists of at least one production system, at least one controlling system, primary disks, and secondary disks. The entire configuration can be in either a single site to provide protection from disk outages with HyperSwap, or it can be spread across two data centers within metropolitan distances as the foundation for a disaster recovery solution. The actual configuration depends on your business and availability requirements.

4.2.1 Controlling system

Why does a GDPS Metro HyperSwap Manager configuration need a controlling system? At first, you might think this is an additional infrastructure overhead. However, when you have an unplanned outage that affects production systems or the disk subsystems, it is crucial to have a system such as the controlling system that can survive failures that might have impacted other portions of your infrastructure. The controlling system allows you to perform situation analysis after the unplanned event to determine the status of the production systems or the disks. The controlling system plays a vital role in a GDPS Metro HyperSwap Manager configuration.

The controlling system must be in the same sysplex as the production system (or systems) so it can see all the messages from those systems and communicate with those systems. However, it shares an absolute minimum number of resources with the production systems (typically only the sysplex couple data sets). By being configured to be as self-contained as possible, the controlling system will be unaffected by errors that might stop the production systems (for example, an extended long busy (ELB) event on a primary volume).

The controlling system must have connectivity to all the Site1 and Site2 primary and secondary devices that it will manage. If available, it is preferable to isolate the controlling system infrastructure on a disk subsystem that is not housing mirrored disks that are managed by GDPS.

The controlling system is responsible for carrying out all Metro Mirror and STP-related recovery actions following a disaster or potential disaster, for managing the disk mirroring configuration, for initiating a HyperSwap, for initiating a freeze and implementing the freeze policy actions following a freeze event, for reassigning STP roles, and so on.

The availability of the dedicated GDPS controlling system (or systems) in all configurations is a fundamental requirement of GDPS. It is not possible to merge the function of the controlling system with any other system that accesses or uses the primary volumes or other production resources.

Especially in 2-site configurations, configuring GDPS HM with two controlling systems, one in each site, is highly recommended. This is because a controlling system is designed to survive a failure of the site where the primary disks are, which is the site opposite its own. Primary disks are normally in Site1, and the controlling system in Site2 is designed to survive if Site1 or the disks in Site1 fail. However, if you reverse the configuration so that the primary disks are in Site2, the controlling system is in the same site as the primary disks. It will certainly not survive a failure of Site2 and might or might not survive a failure of the disks in Site2, depending on the configuration. Configuring a controlling system in both sites ensures the same level of protection no matter which site is the primary disk site. When two controlling systems are available, GDPS assigns the Master role to the controlling system that is in the same site as the secondary disks and switches the Master role if there is a disk switch.
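The Master role rule can be sketched as a function of the current primary disk site. The function name and the `SITE1`/`SITE2` labels are hypothetical; the fallback branch covers the single-controlling-system case in which the only controlling system shares a site with the primary disks.

```python
def assign_master(controlling_systems: dict, primary_disk_site: str) -> str:
    """Pick the Master controlling system.

    controlling_systems maps system name -> site ("SITE1" or "SITE2").
    Prefer a controlling system in the secondary disk site, because it is
    the one expected to survive a failure of the primary disk site.
    """
    if not controlling_systems:
        raise ValueError("at least one controlling system is required")
    secondary_site = "SITE2" if primary_disk_site == "SITE1" else "SITE1"
    for name, site in sorted(controlling_systems.items()):
        if site == secondary_site:
            return name
    # Only controlling systems co-located with the primary disks exist.
    return sorted(controlling_systems)[0]
```

Calling the function again after a disk switch, with the new primary disk site, models the Master role switch.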

Improved controlling system availability: Enhanced timer support

Normally, a loss of synchronization with the sysplex timing source will generate a disabled console WTOR that suspends all processing on the LPAR until a response is made to the WTOR. The WTOR message is IEA394A in STP timing mode.

In a GDPS environment, z/OS is aware that a given system is a GDPS controlling system and will allow a GDPS controlling system to continue processing even when the server it is running on loses its time source and becomes unsynchronized. The controlling system is therefore able to complete any freeze or HyperSwap processing it might have started and is available for situation analysis and other recovery actions, instead of being in a disabled WTOR state.

In addition, because the controlling system is operational, it can be used to help in problem determination and situation analysis during the outage, thus reducing further the recovery time needed to restart applications.

The controlling system is required to perform GDPS automation if there is a failure. That might include these actions:

•Performing the freeze processing to guarantee secondary data consistency

•Coordinating HyperSwap processing

•Aiding with situation analysis

Because the controlling system needs to run only with a degree of time synchronization that allows it to correctly participate in heartbeat processing with respect to the other systems in the sysplex, this system should be able to run unsynchronized for a period of time (80 minutes) using the local time-of-day (TOD) clock of the server (referred to as local timing mode), rather than generating a WTOR.

Automated response to STP sync WTORs

GDPS on the controlling systems, using the BCP Internal Interface, provides automation to reply to WTOR IEA394A when the controlling systems are running in local timing mode (for more information, see “Improved controlling system availability: Enhanced timer support” on page 129). A server in an STP network might have recovered from an unsynchronized to a synchronized timing state without client intervention. By automating the response to the WTORs, potential timeouts of subsystems and applications in the client’s enterprise might be averted, thus potentially preventing a production outage.

If WTOR IEA394A is posted for a production system, GDPS uses the BCP Internal Interface to automatically reply RETRY to the WTOR. If z/OS determines that the CPC is in a synchronized state, either because STP recovered or because the CTN was reconfigured, it stops spinning and continues processing. If the CPC is still in an unsynchronized state when GDPS automation replies RETRY, however, the WTOR is reposted.

The automated reply for any given system is retried for 60 minutes. After 60 minutes, you will need to manually respond to the WTOR.
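The automated reply behavior can be sketched as a bounded retry loop. This is a simplified model: `auto_reply_retry`, the `is_synchronized` and `reply` callables, and the polling interval are hypothetical stand-ins for the z/OS state check, the BCP Internal Interface reply, and the reposting of the WTOR. Only the 60-minute limit comes from the text.

```python
import time


def auto_reply_retry(is_synchronized, reply, poll_seconds=5.0,
                     limit_seconds=60 * 60, clock=time.monotonic,
                     sleep=time.sleep) -> bool:
    """Reply RETRY to IEA394A until the CPC is synchronized or the limit expires.

    Returns True if the condition cleared automatically, or False if an
    operator must now reply to the WTOR manually.
    """
    deadline = clock() + limit_seconds
    while clock() < deadline:
        reply("RETRY")
        if is_synchronized():
            return True       # z/OS stops spinning and resumes processing
        sleep(poll_seconds)   # WTOR is reposted; reply again
    return False
```

The `clock` and `sleep` parameters exist so that the loop can be exercised with a simulated clock instead of waiting an hour.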

4.2.2 GDPS Metro HyperSwap Manager in a single site

In the single-site configuration, the controlling systems, primary disks, and secondary disks are all in the same site, as shown in Figure 4-2. This configuration allows you to benefit from the capabilities of GDPS Metro HyperSwap Manager to manage the mirroring environment, and HyperSwap across planned and unplanned disk reconfigurations. A single site configuration does not provide disaster recovery capabilities, because all the resources are in the same site, and if that site suffers a disaster, then the systems and disk are all gone.


Note: We continue to refer to Site1 and Site2 in this section, although this terminology here refers to the two copies of the production data in the same site.


Although having a single controlling system might be acceptable, we suggest having two controlling systems to provide the best availability and protection. The K1 controlling system can use Site2 disks, and K2 can use the Site1 disks. In this manner, a single failure will not affect availability of at least one of the controlling systems, and it will be available to perform GDPS processing.

Figure 4-2 GDPS Metro HyperSwap Manager single-site configuration

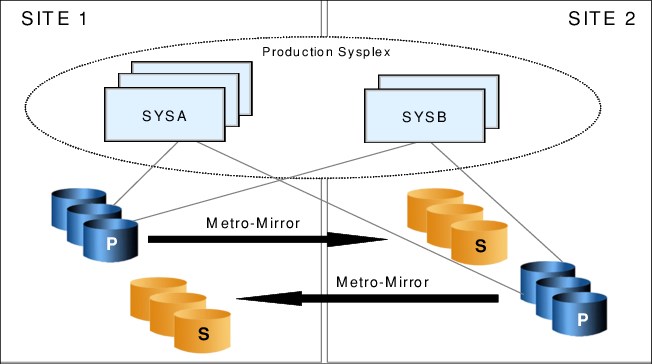

4.2.3 GDPS Metro HyperSwap Manager in a 2-site configuration

Another option is to use GDPS Metro HyperSwap Manager with the primary disk in one site, and the secondaries in a second site, as shown in Figure 4-3. This configuration does provide the foundation for disaster recovery because the secondary copy of disk is in a separate site protected from a disaster in Site1. GDPS Metro HyperSwap Manager also delivers the freeze capability, which ensures a consistent set of secondary disks in case of a disaster.

Figure 4-3 GDPS Metro HyperSwap Manager 2-site configuration

If you have a 2-site configuration and choose to implement only one controlling system, it is highly recommended that you place the controlling system in the recovery site. The advantage of this is that the controlling system will continue to be available even if a disaster takes down the whole production site. Placing the controlling system in the second site creates a multisite sysplex, meaning that you must have the appropriate connectivity between the sites. To avoid cross-site sysplex connections, you might also consider the BRS configuration that is described in 3.2.4, “Business Recovery Services configuration” on page 73.

To get the full benefit of HyperSwap and the second site, ensure that there is sufficient bandwidth for the cross-site connectivity from the primary site servers to the secondary site disk. Otherwise, although you might be able to successfully perform the HyperSwap to the second site, the I/O performance following the swap might not be acceptable.

4.2.4 GDPS Metro HyperSwap Manager in a 3-site configuration

GDPS Metro HyperSwap Manager can be combined with GDPS XRC or GDPS GM in a 3-site configuration. In this configuration, GDPS Metro HyperSwap Manager provides protection from disk outages across a metropolitan area or within the same local site, and GDPS XRC or GDPS GM provides disaster recovery capability in a remote site.

We call these combinations GDPS Metro Global - GM (GDPS MGM) or GDPS Metro Global - XRC (GDPS MzGM). For more information about the capabilities and limitations of using GDPS Metro HyperSwap Manager in a GDPS MGM and GDPS MzGM solution, see Chapter 9, “Combining local and metro continuous availability with out-of-region disaster recovery” on page 267.

4.2.5 Other important considerations

The availability of the dedicated GDPS controlling system (or systems) in all scenarios is a fundamental requirement in GDPS. It is not possible to merge the function of the controlling system with any other system that accesses or uses the primary volumes.

4.3 Fixed Block disk management

As discussed in 3.3.1, “Fixed Block disk management” on page 77, most enterprises today run applications that update data across multiple platforms. For these enterprises, there is a need to manage and protect not just the IBM Z data, but also the data that is on FB devices for IBM Z and non-IBM Z servers. GDPS HM provides the capability to manage a heterogeneous environment of IBM Z and distributed systems data through a function called Fixed Block Disk Management (FB Disk Management).

The FB Disk Management function allows GDPS to be a single point of control to manage business resiliency across multiple tiers in the infrastructure, which improves cross-platform system management and business processes. GDPS HM can manage the Metro Mirror remote copy configuration and FlashCopy for distributed systems storage.

Specifically, FB disk support extends the GDPS HM Freeze capability to FB devices that are in supported disk subsystems to provide data consistency for the IBM Z data and the data on the FB devices.

With FB devices included in your configuration, you can select one of the following options to specify how Freeze processing is to be handled for FB disks and IBM Z (CKD disks), when mirroring or primary disk problems are detected:

•Freeze all devices that are managed by GDPS.

If this option is used, the CKD and FB devices are in a single consistency group. Any Freeze trigger (for the IBM Z or FB devices) results in the FB and the IBM Z LSSs managed by GDPS being frozen. This option allows you to have consistent data across heterogeneous platforms if a disaster occurs, which allows you to restart systems in the site where secondary disks are located. This option is especially suitable when there are distributed units of work on IBM Z and distributed servers that update the same data; for example, using IBM DB2 DRDA, which is the IBM Distributed Relational Database Architecture.

•Freeze devices by group.

If this option is selected, the CKD devices are in a separate consistency group from the FB devices. Also, the FB devices can be separated into multiple consistency groups, for example by distributed workload. The Freeze is performed on only the group for which the Freeze trigger was received. If the Freeze trigger occurs for an IBM Z disk device, only the CKD devices are frozen. If the trigger occurs for an FB disk, only the FB disks within the same group as that disk are frozen.
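The two Freeze options differ only in which devices are frozen for a given trigger. The sketch below is illustrative: `freeze_scope`, the `"ALL"` and `"GROUP"` labels, and the group names are hypothetical and are not GDPS configuration keywords.

```python
def freeze_scope(policy: str, trigger_device: str, groups: dict) -> set:
    """Return the set of devices to freeze for a Freeze trigger.

    groups maps a group name to a set of device identifiers; the CKD devices
    form one group and the FB devices one or more others.
    policy "ALL": freeze every GDPS-managed device (one consistency group).
    policy "GROUP": freeze only the group containing the trigger device.
    """
    if policy == "ALL":
        return set().union(*groups.values())
    if policy == "GROUP":
        for members in groups.values():
            if trigger_device in members:
                return set(members)
        raise KeyError(f"{trigger_device} is not managed by GDPS")
    raise ValueError(f"unknown freeze policy {policy!r}")
```

For example, with a CKD group and two FB workload groups, a trigger on an FB device freezes everything under `"ALL"` but only that device's FB group under `"GROUP"`.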

4.3.1 FB disk management prerequisites

GDPS requires the disk subsystems that contain the FB devices to support the z/OS Fixed Block Architecture (zFBA) feature. GDPS runs on z/OS and therefore communicates with the disk subsystems directly over a channel connection. The z/OS Fixed Block Architecture (zFBA) provides GDPS the ability to send the commands that are necessary to manage Metro Mirror and FlashCopy directly to FB devices over a channel connection.

It also enables GDPS to receive notifications for specific error conditions (for example, suspension of an FB device pair). These notifications allow the GDPS controlling system to drive autonomic action, such as performing a freeze for a mirroring failure.


Note: HyperSwap for FB disks is not supported for any IBM Z or non-IBM Z servers.


4.4 Managing the GDPS Metro HyperSwap Manager environment

The bulk of the functions delivered with GDPS Metro HyperSwap Manager relate to maintaining the integrity of the secondary disks and being able to nondisruptively switch to the secondary volume of the Metro Mirror pair.

However, there is an additional aspect of remote copy management that is available with GDPS Metro HyperSwap Manager, namely the ability to query and manage the remote copy environment using the GDPS panels.

In this section, we describe this other aspect of GDPS Metro HyperSwap Manager. Specifically, GDPS Metro HyperSwap Manager provides multiple mechanisms including user interfaces, a command line interface, and multiple application programming interfaces (APIs) to let you:

•Be alerted to any changes in the remote copy environment

•Display the remote copy configuration

•Start, stop, and change the direction of remote copy

•Perform HyperSwap operations

•Start and stop FlashCopy


Note: GDPS Metro HyperSwap Manager does not provide script support. For scripting support with added capabilities, the full function GDPS Metro product is required.


4.4.1 User interfaces

Two primary user interface options are available for GDPS HM: the NetView 3270 panels and a browser-based graphical user interface (also referred to as the GDPS GUI in this book).

An example of the main GDPS HM 3270-based panel is shown in Figure 4-4.

Figure 4-4 GDPS Metro HyperSwap Manager main GDPS panel

Notice that several option choices are in blue instead of black. These blue options are supported by the GDPS Metro offering, but are not part of GDPS Metro HyperSwap Manager.

This panel includes a summarized configuration status at the top and a menu of choices. For example, to view the disk mirroring (Dasd Remote Copy) panels, enter 1 at the Selection prompt, and then press Enter.

GDPS graphical user interface

The GDPS GUI is a browser-based interface designed to improve operator productivity. The GDPS GUI provides the same functional capability as the 3270-based panels, such as providing management capabilities for Remote Copy Management, Configuration Management, SDF Monitoring, and browsing the CANZLOG using simple point-and-click procedures. Advanced sorting and filtering is available in most of the views provided by the GDPS GUI. In addition, users can open multiple windows or tabs to allow for continuous status monitoring, while performing other GDPS Metro HyperSwap Manager management functions.

The GDPS GUI display has four main sections:

•The application header at the top of the page that provides an Actions button for carrying out a number of GDPS tasks, along with the help function and the ability to log off or switch between target systems.

•The application menu runs down the left-hand side of the window. This menu gives access to various features and functions available through the GDPS GUI.

•The active window that shows context-based content depending on the selected function. This tabbed area is where the user can switch context by clicking a different tab.

•A status summary area is shown at the bottom of the display.

The initial status panel of the GDPS Metro HyperSwap Manager GDPS GUI is shown in Figure 4-5. This panel provides an instant view of the status and direction of replication, and HyperSwap status. Hovering over the various icons provides more information by using pop-up windows.


Note: For the remainder of this section, only the GDPS GUI is shown to illustrate the various GDPS management functions. The equivalent traditional 3270 panels are not shown here.


Figure 4-5 Full view of GDPS GUI main panel

Monitoring function: Status Display Facility

GDPS also provides many monitors to check the status of disks, sysplex resources, and so on. Any time there is a configuration change, or something in GDPS that requires manual intervention, GDPS will raise an alert. GDPS uses the Status Display Facility (SDF) provided by System Automation as the primary status feedback mechanism for GDPS.

GDPS provides a dynamically updated window, as shown in Figure 4-6. A summary of all current alerts is provided at the bottom of each window. The initial view presented is for the SDF trace entries so you can follow, for example, script execution. Click one of the other alert categories to view the different alerts associated with automation or remote copy in either site, or select All to see all alerts. You can sort and filter the alerts based on a number of the fields presented, such as severity.

Figure 4-6 GDPS GUI SDF panel

By default, the GDPS GUI refreshes the alerts automatically every 10 seconds. As with the 3270 window, if there is a configuration change or a condition that requires special attention, the color of the icons will change based on the severity of the alert. By pointing to and clicking any of the highlighted fields, you can obtain detailed information regarding the alert.

Remote copy panels

The z/OS Advanced Copy Services capabilities are powerful, but the native command-line interface (CLI), z/OS TSO, and ICKDSF interfaces are not as user-friendly as the DASD remote copy panels are. To more easily check and manage the remote copy environment, use the DASD remote copy panels provided by GDPS.

For GDPS to manage the remote copy environment, you must first define the configuration (primary and secondary LSSs, primary and secondary devices, and PPRC links) to GDPS in a file called the GEOPARM file. This GEOPARM file can be edited and introduced to GDPS directly from the GDPS GUI.

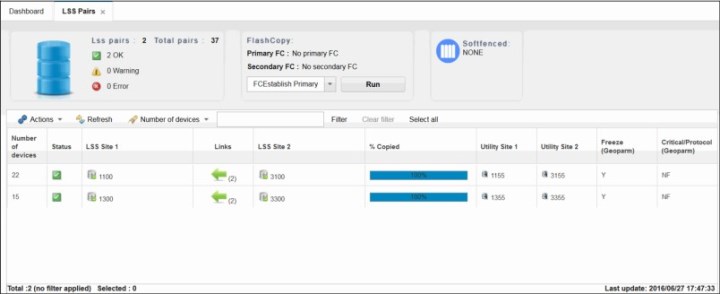

After the configuration is known to GDPS, you can use the panels to check that the current configuration matches the one you want. You can start, stop, suspend, and resynchronize mirroring at the device (volume) level, the LSS level, or both, as appropriate. Figure 4-7 shows the mirroring panel for CKD devices at the LSS level.

Figure 4-7 GDPS GUI Dasd Remote Copy SSID panel

The Dasd Remote Copy panel is organized into three sections:

•Upper left provides a summary of the device pairs in the configuration and their status.

•Upper right provides the ability to invoke GDPS-managed FlashCopy operations.

•A table with one row for each LSS pair in your GEOPARM. In addition to the rows for each LSS, there is a header row with an Action menu to enable you to carry out the various DASD management tasks, and the ability to filter the information presented.

To perform an action on a single SSID-pair, double click a row in the table. A panel is then displayed, where you can perform the same actions as those available as line commands on the top section of the 3270 panel.

After an individual SSID-pair is selected, the frame shown in Figure 4-8 is displayed. The table in this frame shows each of the mirrored device pairs within a single SSID-pair, along with the current status of each pair. In this example, all the pairs are fully synchronized and in duplex status, as summarized in the upper left area. Additional details can be viewed for each pair by double-clicking the row, or selecting the row with a single click and then selecting Query from the Actions menu.

Figure 4-8 GDPS GUI Dasd Remote Copy: View Devices detail panel

If you are familiar with using the TSO or ICKDSF interfaces, you might appreciate the ease of use of the DASD remote copy panels.

Remember that these panels provided by GDPS are not intended to be a remote copy monitoring tool. Because of the overhead involved in gathering the information for every device to populate the panels, GDPS gathers this data only on a timed basis, or on demand following an operator instruction. The normal interface for finding out about remote copy status or problems is the Status Display Facility (SDF).
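The trade-off described above (gathering per-device data is expensive, so it is done on a timer or on demand rather than continuously) can be sketched as a cached snapshot. This is a hypothetical Python illustration, not GDPS code: `StatusSnapshot`, the `query_all` callable, and the refresh interval are assumptions introduced only for this example.

```python
import time
from typing import Callable


class StatusSnapshot:
    """Hypothetical sketch: cache an expensive all-device query and refresh it
    only when the snapshot is older than the interval, or on explicit request."""

    def __init__(self, query_all: Callable[[], dict], interval_s: float,
                 clock: Callable[[], float] = time.monotonic):
        self._query_all = query_all  # expensive query of every mirrored device
        self._interval = interval_s  # timed refresh interval, in seconds
        self._clock = clock
        self._data = None
        self._taken_at = 0.0

    def get(self) -> dict:
        """Return the cached data, refreshing it first if it is stale."""
        if self._data is None or self._clock() - self._taken_at >= self._interval:
            self.refresh()
        return self._data

    def refresh(self) -> dict:
        """On-demand refresh, comparable to an operator asking for new data."""
        self._data = self._query_all()
        self._taken_at = self._clock()
        return self._data
```

Between refreshes, the panels can show data that is up to one interval old, which is why an event-driven facility such as SDF, rather than a periodically gathered snapshot, is the normal interface for learning about remote copy problems.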

Similar panels are provided for controlling the Open LUN devices.

4.4.2 NetView commands

Although GDPS Metro HyperSwap Manager does not support scripts as GDPS Metro does, certain GDPS operations are started by NetView commands that perform actions similar to the equivalent script commands. These commands are entered at a NetView command prompt.

There are commands to perform the following types of actions (this list is not all-inclusive); a single command can perform one or more of these actions:

•Temporarily disable HyperSwap and subsequently re-enable HyperSwap.

•List systems in the GDPS and identify which are controlling systems.

•Perform a planned HyperSwap disk switch.

•Perform a planned freeze of the disk mirror.

•Make the secondary disks usable through a PPRC failover or recover action.

•Restore Metro Mirror mirroring to a duplex state.

•Take a point-in-time copy of the current set of secondary CKD devices. FlashCopy with the COPY or NOCOPY option is supported, as is the NOCOPY2COPY option, which converts an existing FlashCopy taken with NOCOPY to COPY. The CONSISTENT option (FlashCopy Freeze) is supported with the COPY and NOCOPY options.

•Reconfigure an STP-only CTN by reassigning the Preferred Time Server (PTS) and Current Time Server (CTS) roles and the Backup Time Server (BTS) and Arbiter (ARB) roles to one or more CPCs.

•Unfence disks that were blocked by Soft Fence.

4.4.3 Application programming interfaces

GDPS provides two primary programming interfaces that allow programs written by clients, Independent Software Vendors (ISVs), and other IBM product areas to communicate with GDPS. These APIs allow clients, ISVs, and other IBM product areas to complement GDPS automation with their own automation code. The following sections describe the APIs that are provided by GDPS.

GDPS Metro HyperSwap Manager Query Services

GDPS maintains configuration information and status information in NetView variables for the various elements of the configuration that it manages. Query Services is a capability that allows client-written NetView REXX programs to query the value of numerous GDPS internal variables. The variables that can be queried pertain to the Metro Mirror configuration, the system and sysplex resources that are managed by GDPS, and other GDPS facilities, such as HyperSwap and GDPS Monitors.

In addition to the Query Services function that is part of the base GDPS product, GDPS provides several samples in the GDPS SAMPLIB library to demonstrate how Query Services can be used in client-written code.

GDPS also makes available to clients a tool that is called the Preserve Mirror Tool (PMT), which facilitates adding disks to the GDPS Metro HyperSwap Manager configuration and bringing these disks to duplex.

RESTful APIs

As described in “GDPS Metro HyperSwap Manager Query Services” above, GDPS maintains configuration information and status information about the various elements of the configuration that it manages. Query Services can be used by REXX programs to query this information.

The GDPS RESTful API also allows programs to query this information. Because it is a RESTful API, it can be used by programs that are written in various programming languages, including REXX, and that run on various server platforms.

In addition to querying information about the GDPS environment, the GDPS RESTful API allows programs that are written by clients, ISVs, and other IBM product areas to start actions against various elements of the GDPS environment. Examples of these actions include starting and stopping Metro Mirror, executing HyperSwap operations, and starting GDPS monitor processing. These capabilities enable clients, ISVs, and other IBM product areas to provide an even richer set of functions to complement the GDPS functionality.

GDPS provides samples in the GDPS SAMPLIB library to demonstrate how the GDPS RESTful API can be used in programs.
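Because the interface is plain HTTP, any language with an HTTP client can use it. The following Python sketch only shows the general shape of a query (GET) and an action request (POST) by using the standard `urllib.request` module. Everything GDPS-specific in it is hypothetical: the host name, the `/api` base path, the resource names, and the `{"action": ...}` payload are placeholders, not the documented GDPS endpoints. Refer to the GDPS documentation and the SAMPLIB samples for the real resource paths, authentication, and request bodies.

```python
import json
import urllib.request

# HYPOTHETICAL base URL; the real host, port, and paths come from your GDPS setup.
BASE_URL = "https://gdps.example.com/api"


def build_query(resource: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that queries a GDPS resource."""
    return urllib.request.Request(
        f"{BASE_URL}/{resource}",
        method="GET",
        headers={"Accept": "application/json"},
    )


def build_action(resource: str, action: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that starts an action.
    The JSON body shape is a placeholder, not the documented GDPS format."""
    body = json.dumps({"action": action}).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/{resource}",
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )
```

A program would send one of these requests with `urllib.request.urlopen(request)` and parse the JSON response. Keeping request construction separate from sending makes the calls easy to review and test without contacting a live GDPS system.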

4.5 GDPS Metro HyperSwap Manager monitoring and alerting

The GDPS SDF panel, as discussed in “Monitoring function: Status Display Facility” on page 135, is where GDPS dynamically displays color-coded alerts.

Alerts can be posted as a result of an unsolicited error situation for which GDPS listens. For example, if one of the multiple PPRC links that provide the path over which Metro Mirror operations take place is broken, an unsolicited error message is issued. GDPS listens for this condition and raises an alert on the SDF panel, notifying the operator that a PPRC link is not operational.

Clients run with multiple PPRC links; if one is broken, Metro Mirror continues over the remaining links. However, it is important for the operations staff to be aware that a link is broken and to fix the situation, because fewer links result in reduced Metro Mirror bandwidth and reduced redundancy. If this problem is not fixed in a timely manner and more links fail, the result can be production impact because of insufficient mirroring bandwidth, or total loss of Metro Mirror connectivity (which results in a freeze).
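The escalation described above can be summarized in a small Python sketch. The function name and the "OK", "DEGRADED", and "FREEZE" labels are hypothetical, and the remaining-bandwidth fraction assumes, for illustration only, that all links contribute equal bandwidth.

```python
def link_health(total_links: int, working_links: int) -> tuple[str, float]:
    """Hypothetical classification of Metro Mirror link health.

    Returns a state label and the fraction of link capacity still available,
    assuming (for illustration) that every link contributes equal bandwidth.
    """
    if total_links <= 0:
        raise ValueError("total_links must be positive")
    if not 0 <= working_links <= total_links:
        raise ValueError("working_links must be between 0 and total_links")
    fraction = working_links / total_links
    if working_links == 0:
        return "FREEZE", fraction    # total loss of connectivity: mirroring stops
    if working_links < total_links:
        return "DEGRADED", fraction  # mirroring continues, less bandwidth and redundancy
    return "OK", fraction
```

With four links, losing one leaves mirroring running in a degraded state with 75% of the capacity; only losing all four stops mirroring. This is why a single broken link, although not immediately disruptive, still warrants an alert.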

Alerts can also be posted as a result of GDPS periodically monitoring key resources and indicators that relate to the GDPS Metro HyperSwap Manager environment. If GDPS finds any of these monitored items in a state that it does not consider normal, it posts an alert on SDF.

Various GDPS monitoring functions are executed on the GDPS controlling systems and on the production systems. This is because, from a software perspective, different production systems can have a different view of some of the resources in the environment: a status can be normal in one production system and not normal in another. All GDPS alerts generated on one system in the GDPS sysplex are propagated to all other systems in the GDPS. This propagation of alerts provides a single focal point of control. It is sufficient for operators to monitor SDF on the master controlling system to be aware of all alerts that are generated in the entire GDPS complex.

When an alert is posted, the operator must investigate (or escalate, as appropriate), and corrective action must be taken for the reported problem as soon as possible. After the problem is corrected, GDPS detects this during the next monitoring cycle and clears the alert automatically.
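The alert lifecycle just described (posted when a monitored item is not normal on a given system, propagated to every system, and cleared automatically by a later monitoring cycle) can be modeled in a few lines of Python. This is a conceptual sketch, not the GDPS or SDF implementation; the `AlertBoard` class, its method, the system names, and the item names are hypothetical.

```python
class AlertBoard:
    """Hypothetical model of SDF-style alerts shared by all systems in a GDPS complex."""

    def __init__(self, systems: list[str]):
        # Each system's view of the alerts; propagation keeps the views identical.
        self.views: dict[str, set[tuple[str, str]]] = {name: set() for name in systems}

    def monitoring_cycle(self, system: str, findings: dict[str, bool]) -> None:
        """Apply one system's check results; True means the item is not normal."""
        for item, abnormal in findings.items():
            alert = (system, item)  # alerts are keyed by the system that detected them
            for view in self.views.values():  # propagate to every system
                if abnormal:
                    view.add(alert)
                else:
                    view.discard(alert)  # condition corrected: alert cleared automatically
```

Keying each alert by the reporting system reflects why monitoring runs on every system: one production system can report a resource as normal while another reports it as not normal, and the second report must not be cleared by the first.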

GDPS Metro HyperSwap Manager monitoring and alerting capability is intended to ensure that operations are notified of and can take corrective action for any problems in their environment that can affect the ability of GDPS Metro HyperSwap Manager to do recovery operations. This will maximize the chance of achieving your IT resilience commitments.

4.5.1 GDPS Metro HyperSwap Manager health checks

In addition to the GDPS Metro HyperSwap Manager monitoring described above, GDPS provides health checks. These health checks are provided as a plug-in to the z/OS Health Checker infrastructure to check that certain settings related to GDPS adhere to preferred practices.

The z/OS Health Checker infrastructure is intended to check a variety of settings to see whether they adhere to z/OS optimum values. For settings that are not in line with preferred practices, exceptions are raised in the Spool Display and Search Facility (SDSF) and, optionally, SDF alerts are also raised. Settings that do not adhere to recommendations can hamper the ability of GDPS to perform critical functions in a timely manner.

Changes in the client environment can necessitate adjusting various parameter settings associated with z/OS, GDPS, and other products. It is possible to miss making these adjustments, which might affect GDPS. The GDPS health checks are intended to detect such situations and avoid incidents where GDPS is unable to perform its job because of a setting that is less than ideal.

For example, GDPS Metro HyperSwap Manager requires that the controlling systems’ data sets are allocated on non-mirrored disks in the same site where the controlling system runs. The Site1 controlling systems’ data sets must be on a non-mirrored disk in Site1 and the Site2 controlling systems’ data sets must be on a non-mirrored disk in Site2. One of the health checks provided by GDPS Metro HyperSwap Manager checks that each controlling system’s data sets are allocated in line with the GDPS preferred practices recommendations.
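The placement rule in this example reduces to a simple predicate: a controlling system's data set is compliant only if it resides on a non-mirrored disk in the controlling system's own site. The Python sketch below illustrates that logic. It is not the GDPS health check itself; the `Allocation` record, the `placement_exceptions` function, and the data set names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Allocation:
    dataset: str      # data set name (illustrative)
    volume_site: str  # site where the volume that holds the data set resides
    mirrored: bool    # True if the volume is part of a Metro Mirror pair


def placement_exceptions(controlling_site: str, allocations: list[Allocation]) -> list[str]:
    """Return data sets that violate the rule 'non-mirrored disk in the
    controlling system's own site' (hypothetical sketch of the check logic)."""
    return [
        a.dataset
        for a in allocations
        if a.mirrored or a.volume_site != controlling_site
    ]
```

For a Site1 controlling system, a data set on a mirrored volume and a data set on a Site2 volume would both be reported as exceptions, while a data set on a non-mirrored Site1 volume would pass.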

As with z/OS and other products that provide health checks, GDPS health checks are optional. Several of the optimum values that are checked, and the frequency of the checks, can be customized to cater to unique client environments and requirements.

Several z/OS preferred practices conflict with GDPS preferred practices, and the z/OS and GDPS health checks for these items raise conflicting exceptions. To avoid such conflicting exceptions, z/OS provides the capability to define a coexistence policy in which you indicate which preferred practice takes precedence: GDPS or z/OS. GDPS includes sample coexistence policy definitions for the GDPS checks that are known to conflict with those for z/OS.

GDPS also provides a useful interface for managing the health checks by using the GDPS panels. You can activate, deactivate, or run any selected health check, view the customer overrides in effect for any preferred practice values, and so on.

Figure 4-9 shows a sample of the GDPS Health Check management panel. In this example, all the health checks are enabled. The status of the last run is also shown, indicating that some checks were successful and some resulted in a medium exception. The exceptions can also be viewed by using other options on the panel.

Figure 4-9 GDPS Metro HyperSwap Manager Health Check management panel

4.6 Other facilities related to GDPS

In this section, we describe miscellaneous facilities that are provided by GDPS HM that can assist in various ways, such as reducing the window during which disaster recovery capability is not available.

4.6.1 HyperSwap coexistence

In the following sections, we discuss the GDPS enhancements that removed restrictions that previously existed on HyperSwap coexistence with products such as Softek Transparent Data Migration Facility (TDMF) and IMS Extended Recovery Facility (XRF).

HyperSwap and TDMF coexistence

To minimize disruption to production workloads and service levels, many enterprises use TDMF for storage subsystem migrations and other disk relocation activities. The migration process is transparent to the application, and the data is continuously available for read and write activities throughout the migration process.

However, the HyperSwap function is mutually exclusive with software that moves volumes around by switching UCB pointers. Currently supported versions of TDMF and GDPS allow operational coexistence. With this support, TDMF automatically and temporarily disables HyperSwap as part of the disk migration process, only during the short time in which it switches UCB pointers; no manual operator interaction is required. Without this support, HyperSwap must be disabled through operator intervention for the entire disk migration, including the lengthy data copy phase.

HyperSwap and IMS XRF coexistence

HyperSwap also has a technical requirement that hardware RESERVEs are not allowed, because z/OS cannot reliably propagate the RESERVE status to the new primary volumes during a HyperSwap. For HyperSwap, all RESERVEs must be converted to GRS global enqueues through the GRS RNL lists.

IMS/XRF is a facility by which IMS can provide one active subsystem for transaction processing and a backup subsystem that is ready to take over the workload. IMS/XRF issues hardware RESERVE commands during takeover processing, and these cannot be converted to global enqueues through GRS RNL processing. This coexistence problem has also been resolved: GDPS is informed before IMS issues the hardware RESERVE, allowing GDPS to automatically disable HyperSwap. After IMS has finished processing and releases the hardware RESERVE, GDPS is again informed and re-enables HyperSwap.
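Both coexistence mechanisms follow the same pattern: HyperSwap is disabled only for the short critical window (the UCB pointer switch for TDMF, or the hardware RESERVE for IMS/XRF) and is restored automatically afterward, even if that step fails. The following Python context manager is a hypothetical sketch of that pattern. `HyperSwapState` and `hyperswap_disabled` are illustrative names and do not represent how TDMF, IMS, or GDPS actually communicate.

```python
from contextlib import contextmanager


class HyperSwapState:
    """Hypothetical stand-in for the HyperSwap enablement status kept by GDPS."""

    def __init__(self) -> None:
        self.enabled = True
        self.log: list[str] = []


@contextmanager
def hyperswap_disabled(state: HyperSwapState, reason: str):
    """Disable HyperSwap only for the critical window, then restore the previous setting."""
    previous = state.enabled
    state.enabled = False
    state.log.append(f"disable: {reason}")
    try:
        yield state
    finally:
        # Runs even if the critical step raises, so HyperSwap is never left disabled.
        state.enabled = previous
        state.log.append(f"restore: {reason}")
```

In a migration, the long data copy phase would run outside the `with` block, and only the UCB pointer switch would run inside it. Restoring the previous value, rather than unconditionally re-enabling, keeps the sketch correct if HyperSwap was already disabled for another reason.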

4.6.2 GDPS HM reduced impact initial copy and resynchronization