Voice Activity Detection-Based Home Automation System for People With Special Needs

Dharm Singh Jat⁎; Anton Sokamato Limbo⁎; Charu Singh†

⁎ Namibia University of Science and Technology, Windhoek, Namibia

† Sat-Com (PTY) Ltd., Windhoek, Namibia

Abstract

Speech-controlled applications and devices that support human speech processing and communication are becoming popular, especially in smart home systems. Modern mobile devices such as smartphones, as well as Internet of Things (IoT) devices, can be operated using spoken commands. Physically challenged people, including the elderly, often rely on caregivers to help them with everyday tasks at home, yet some of them would appreciate the convenience of home automation. This chapter proposes the conceptual design of a system that lets people automate processes in the home using voice commands. Such a system can reduce the reliance of elderly and physically challenged people on caregivers and help them regain some independence.

Keywords

Speech processing; Voice activity detection; IoT; Smart home

6.1 Introduction

Voice activity detection (VAD) is a technique for detecting the presence or absence of human speech, and the detection can be used to trigger a process. VAD has been applied in speech-controlled applications and in devices such as smartphones that can be operated with speech commands. According to the Namibia Statistics Agency (NSA) Disability Report [1], the 2011 census found 98,413 people living with disability in Namibia. The report further states that about 64% of people living with a disability use the radio as their ICT asset for communication, suggesting that many of them are not using other technologies available today that can automate processes in a home. Most voice-based home automation systems rely on remote controls or smartphones to control the home, or they depend on a commercial automatic speech recognition (ASR) application programming interface (API) that is intended for general use and is therefore not designed specifically for home automation commands [2].

Speech-controlled applications and devices that support human speech communication are becoming increasingly popular. Modern mobile devices such as smartphones, for example, can be operated with spoken commands to control home devices. In the automotive industry, speech control is used for hands-free telephony and to let drivers interact with the car's infotainment system without being distracted from driving. VAD can also be applied in homes to automate day-to-day processes using voice commands. This approach can benefit a wide range of people, especially those who find everyday tasks such as operating electrical appliances difficult: people living with physical disabilities or other special needs, the elderly, and anyone who would appreciate the convenience of automated processes at home. People with special needs and the elderly, especially those who live alone, often find it difficult to control home appliances and need another person to help with everyday tasks such as switching on a light.

A VAD system in homes can work by receiving voice commands from the user and taking the appropriate action based on the received spoken command, without requiring the user to have physical interaction with the system.
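As a concrete illustration of the detection step itself, the following is a minimal sketch of a classic short-time energy VAD; it is not the detector used in this system. It assumes 16 kHz mono samples given as floats in [-1, 1], non-overlapping 20 ms frames, and a fixed threshold of -40 dB relative to full scale. The function names and the threshold are illustrative assumptions; a practical detector would adapt the threshold to the background noise level.

```python
import math
from typing import List, Sequence


def frame_energy_db(frame: Sequence[float]) -> float:
    """Mean-square energy of one frame in dB relative to full scale."""
    mean_sq = sum(x * x for x in frame) / len(frame)
    # A small floor avoids log10(0) on digital silence.
    return 10.0 * math.log10(max(mean_sq, 1e-12))


def energy_vad(samples: Sequence[float], sample_rate: int = 16000,
               frame_ms: int = 20, threshold_db: float = -40.0) -> List[bool]:
    """Label each non-overlapping frame as speech (True) or non-speech (False)."""
    frame_len = sample_rate * frame_ms // 1000
    flags = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        flags.append(frame_energy_db(frame) > threshold_db)
    return flags


if __name__ == "__main__":
    sr = 16000
    silence = [0.0] * 3200  # 200 ms of silence
    tone = [0.3 * math.sin(2 * math.pi * 200 * n / sr) for n in range(3200)]
    print(energy_vad(silence + tone, sr))
```

Energy alone cannot tell speech from other loud sounds, which is why the system described here relies on a full recognizer with keyword spotting rather than on a threshold like this.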

A lot of work has been done in voice processing and home automation, some of it using innovative Internet of Things (IoT) approaches to automate processes. Price et al. [3] propose an architecture that uses deep neural networks for ASR and VAD with improved accuracy, programmability, and scalability. The architecture is designed to minimize off-chip memory bandwidth, which is the main driver of system power consumption.

A real-time fault detection approach for microphone arrays, based on the intercorrelation of voice activity detection features across the individual microphones, was proposed to make voice-based human-robot interaction more reliable [4].

In a fighter aircraft communication system, a voice-operated switch (VOS) can be used to make optimal use of the aircraft's resources. A VOS provides a hands-free environment in which a fighter pilot can perform crucial tasks, and it can be a better option than a push-to-talk function. Reliable, robust VAD schemes for VOS applications in aircraft, based on fuzzy logic, energy-based detectors, and artificial neural networks (ANN), were proposed and compared with real-time robust VAD mechanisms [5]. The VAD algorithms were evaluated on a MATLAB simulation platform using recordings of actual fighter aircraft cockpit noise.

Another study presented a speech recognition system for robots, designed for distant speech and intended for operation in a human-occupied outdoor military warehouse. The same study introduced a voice activity detector that uses multiple microphones and a channel selection method [6].

Thanu and Uthra [7] developed a software system for interacting with home appliances remotely over the Internet. It gives users flexible remote control through a secure Internet web connection, so that they can control appliances, or monitor their condition, while away from home.

Folea et al. [8] developed a smart home architecture that uses a universal remote to let the user control the alarm, lighting, and temperature in all rooms of the house. The system can also monitor dust in the air and whether windows and doors are closed.

Soumya et al. [9] used a Raspberry Pi to develop a home monitoring system. Several components are connected to the Raspberry Pi, including an ultrasonic sensor, a light dependent resistor (LDR), and light emitting diodes (LEDs). When a person enters the room, the ultrasonic sensor detects their presence, and the LED turns on depending on whether the LDR reports that the room is bright or dark.

Xu et al. [10] developed a VAD algorithm intended to cope with background noise. The algorithm calculates permutation entropy (PE) to determine the presence or absence of speech and to distinguish between voiced and unvoiced parts of speech. Experiments under several noise scenarios showed that, compared with the reference method, the proposed method clearly reduced false alarm rates while maintaining comparable speech detection rates.
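For readers unfamiliar with PE, the sketch below computes the normalized permutation entropy of a signal using the standard Bandt and Pompe ordinal-pattern definition; it is not Xu et al.'s detector. The intuition is that structured signals, such as voiced speech, produce a few dominant ordinal patterns and therefore lower PE, while broadband noise spreads evenly over all patterns and approaches 1. The order of 3 and delay of 1 are illustrative defaults, and any speech/non-speech threshold on PE would need to be tuned.

```python
import math
import random
from collections import Counter
from typing import Sequence


def permutation_entropy(x: Sequence[float], order: int = 3, delay: int = 1) -> float:
    """Normalized permutation entropy (Bandt-Pompe), in the range [0, 1]."""
    n_patterns = len(x) - (order - 1) * delay
    if n_patterns <= 0:
        raise ValueError("signal too short for the chosen order and delay")
    counts: Counter = Counter()
    for i in range(n_patterns):
        window = [x[i + j * delay] for j in range(order)]
        # Ordinal pattern: positions of the window's values in ascending order.
        pattern = tuple(sorted(range(order), key=lambda k: window[k]))
        counts[pattern] += 1
    entropy = -sum((c / n_patterns) * math.log(c / n_patterns)
                   for c in counts.values())
    # Divide by the maximum possible entropy, log(order!).
    return entropy / math.log(math.factorial(order))


if __name__ == "__main__":
    sine = [math.sin(2 * math.pi * n / 50) for n in range(1000)]
    rng = random.Random(0)
    noise = [rng.uniform(-1, 1) for _ in range(1000)]
    print(permutation_entropy(sine), permutation_entropy(noise))
```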

Direction-of-arrival estimation and localization (DOAE) is essential for suppressing noise and reverberation in many applications, including steering and beamforming in multiparty teleconferencing and the automatic steering of video cameras. It can also improve speech intelligibility [11].

As more and more physical devices are connected to computer networks, home automation can use IoT, with VAD as a way to interact with IoT devices through voice commands. The systems mentioned above take different approaches to home automation and speech processing, but they do not integrate speech processing into home automation.

This chapter describes a VAD-based home automation system that helps people with special needs and elderly people control home appliances. It details the overall design of the system, which can be integrated with the existing infrastructure of home appliances, and identifies a suitable VAD algorithm for people who need assistance, especially those who live alone.

6.2 Conceptual Design of the System

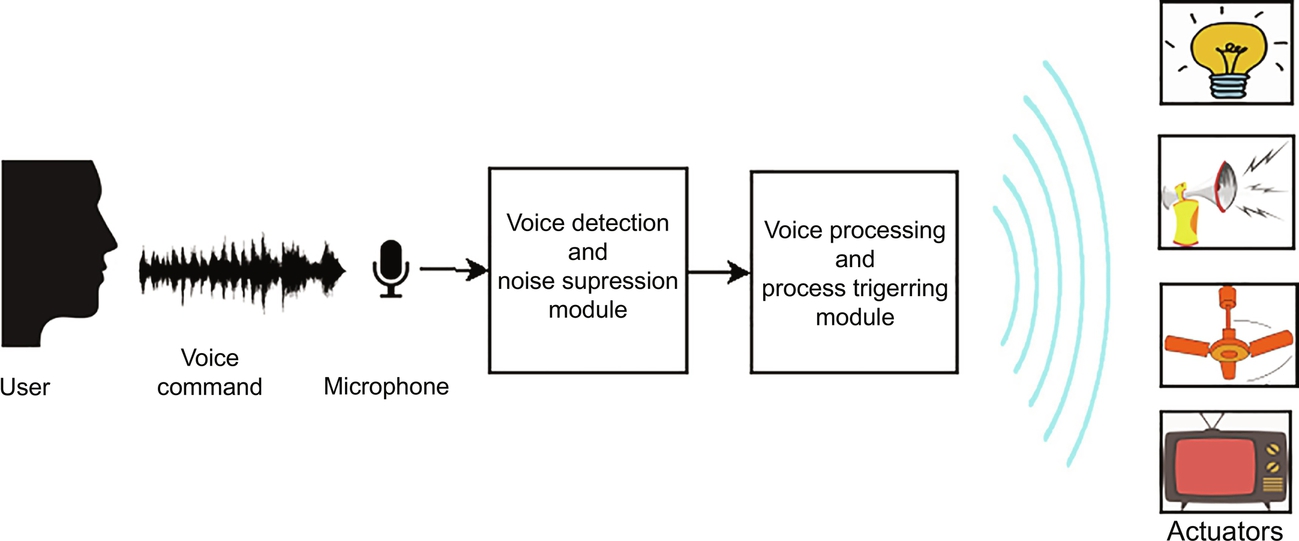

The system will be designed to integrate with existing home appliances while requiring little physical interaction with the user. Fig. 6.1 shows the conceptual design of the voice activity detection-based home automation system for people with special needs.

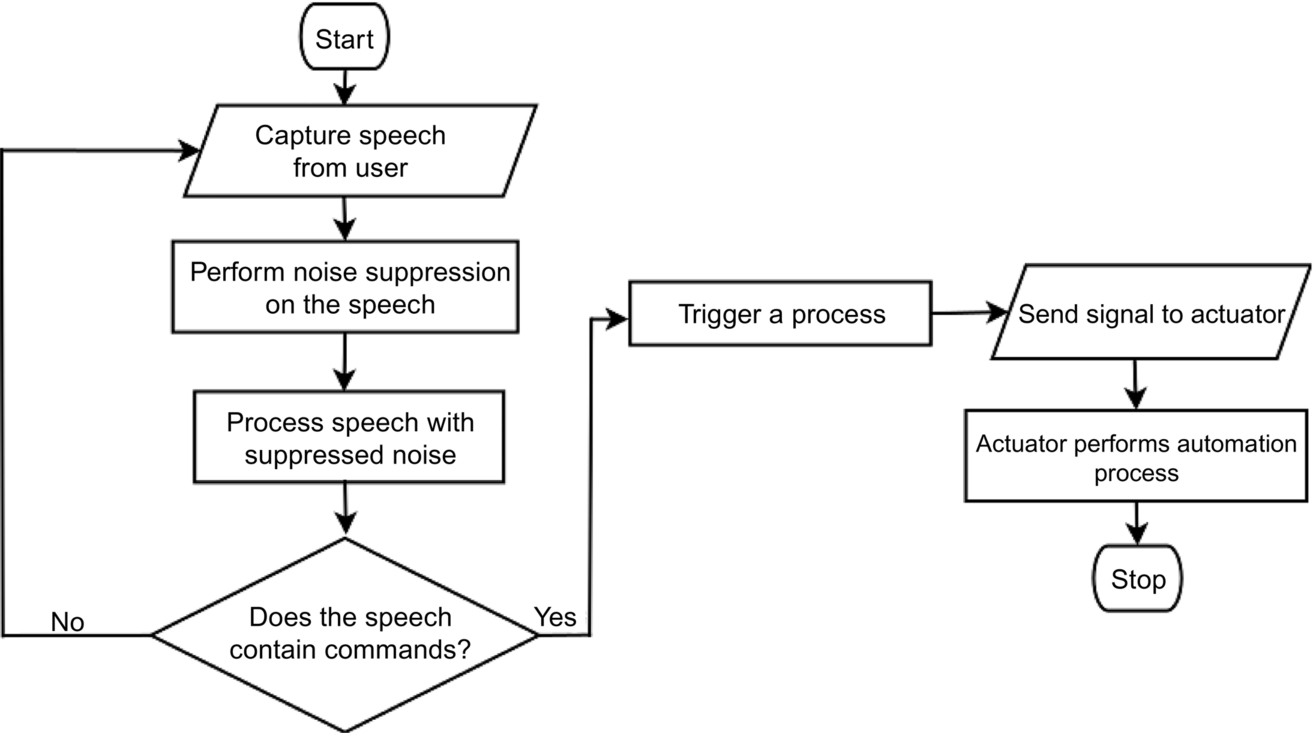

In the conceptual design, the user gives a voice command, which is captured by the microphone and sent to the module responsible for detecting voice activity and suppressing noise in the received speech. The noise-suppressed speech is passed to another module, which converts it to text. The text is then analyzed to determine whether it contains known commands; if it does, the system triggers the automation process that corresponds to the spoken command. Fig. 6.2 shows the flowchart of the VAD-based home automation system, covering how speech signals are captured, background noise is reduced, and devices are activated.
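The last two stages of this flow, checking the recognized text for a known command and triggering the matching automation process, can be sketched as follows. This is a minimal illustration, assuming the recognizer has already produced a text string; the command table and the action names (`toggle_light`, `toggle_air_conditioner`) are hypothetical placeholders, and only the keywords “LIGHT” and “FAN” come from this chapter.

```python
from typing import Callable, Dict, Optional

# Hypothetical command table: recognized keyword -> action name.
COMMANDS: Dict[str, str] = {
    "LIGHT": "toggle_light",
    "FAN": "toggle_air_conditioner",
}


def find_command(text: str, commands: Dict[str, str] = COMMANDS) -> Optional[str]:
    """Return the action for the first known keyword in the recognized text."""
    for word in text.upper().split():
        if word in commands:
            return commands[word]
    return None


def handle_utterance(text: str, actions: Dict[str, Callable[[], None]]) -> bool:
    """Trigger the matching automation process; return False if nothing matched."""
    action = find_command(text)
    if action is None:
        return False  # unknown command: take no action
    actions[action]()
    return True


if __name__ == "__main__":
    handlers = {
        "toggle_light": lambda: print("light toggled"),
        "toggle_air_conditioner": lambda: print("air conditioner toggled"),
    }
    handle_utterance("turn on the fan", handlers)
```

Ignoring unrecognized text, rather than guessing, keeps a misheard phrase from switching an appliance unexpectedly.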

For the system to be fully operational, a few additional components are needed so that it can act on spoken commands. These include other sensors and actuators that work alongside the speech interface so that operations can be fully automated. The implementation of the VAD-based system is explained in the next section.

6.3 System Implementation

The home automation system is implemented using a Raspberry Pi (RP) 3 Model B+, shown in Fig. 6.3.

The RP is the central component of the system: it captures spoken commands and converts them into text using a Speech-to-Text (STT) engine. The converted text is then used to decide which action the system takes.

The Raspberry Pi 3 Model B+ has a 1.4 GHz quad-core processor (1.2 GHz on the earlier Model B), 1 GB of RAM, 4 USB ports, and communication modules including an Ethernet port, Wireless LAN, and Bluetooth. The feature that makes the Raspberry Pi particularly suitable for an automation project is its set of general purpose input/output (GPIO) pins, which let the user connect the sensors and transmitters that communicate with the home appliances.

The system uses an infrared LED transmitter and an infrared receiver, both connected to the Raspberry Pi. The infrared receiver is used to synchronize an appliance's remote control with the automation system, and the infrared LED transmitter acts as a remote control by emitting infrared signals from the system to an appliance. A USB microphone is also required. Because the Raspberry Pi has multiple communication modules, the system can also be configured to control appliances that lack infrared by using components such as infrared-controlled power plugs, light bulbs, and electric fans. Where devices cannot be operated by infrared at all, the system can control smart devices over Wireless LAN or Bluetooth, as discussed later in this chapter.

6.3.1 Speech Recognition

CMU’s lightweight PocketSphinx speech recognition system can translate speech in real time. PocketSphinx is an open-source speech recognition library written in C. It is well suited to real-time applications and is therefore a good choice for live home automation applications [12] [13]. This chapter describes its use for controlling a home device from both a research and a commercial perspective.

PocketSphinx takes the speech waveform and splits it into utterances, separated by silences or by non-speech sounds such as a cough, an “uh,” or a breath. It then tries to recognize what is being said in each utterance by matching possible combinations of words against the audio and choosing the best-matching combination.
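The idea of splitting a stream into utterances at silences can be illustrated with a simplified sketch. PocketSphinx's own segmentation is more sophisticated; this version assumes per-frame speech/non-speech flags (for example from an energy detector) and uses two hypothetical parameters: the minimum number of silent frames that ends an utterance, and the minimum number of frames an utterance must span to be kept.

```python
from typing import List, Optional, Sequence, Tuple


def split_utterances(flags: Sequence[bool], min_silence: int = 3,
                     min_speech: int = 2) -> List[Tuple[int, int]]:
    """Group speech frames into (start, end) utterances, with end exclusive.

    A run of at least `min_silence` non-speech frames closes an utterance;
    shorter gaps (a brief pause inside a phrase) are bridged. Utterances
    spanning fewer than `min_speech` frames are dropped as very short bursts.
    """
    utterances: List[Tuple[int, int]] = []
    start: Optional[int] = None
    last_speech = -1
    for i, is_speech in enumerate(flags):
        if is_speech:
            if start is None:
                start = i
            last_speech = i
        elif start is not None and i - last_speech >= min_silence:
            if last_speech + 1 - start >= min_speech:
                utterances.append((start, last_speech + 1))
            start = None
    # Close an utterance that is still open at the end of the stream.
    if start is not None and last_speech + 1 - start >= min_speech:
        utterances.append((start, last_speech + 1))
    return utterances
```

Each returned span would then be passed to the recognizer separately, which is what allows it to search for the best word sequence one utterance at a time.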

PocketSphinx can also perform noise suppression, so the automation system uses it both for noise suppression and as its Speech-to-Text engine, combining keyword spotting with an acoustic model and a language model. Because a keyword serves as a wake word, PocketSphinx starts recording speech only after the keyword has been spoken, which reduces false positives and improves the accuracy of the system. CMUSphinx provides acoustic and language models for several languages and accents, such as U.S. English, German, Spanish, and Indian English, so the model can be chosen to suit the user's accent. In this case, the U.S. English model was used together with a dictionary file that listed words representing the electrical components to be switched on and off and specified how each word should be pronounced.
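The wake-word behavior can be expressed as a small gate over the stream of recognized phrases. This is a minimal pure-Python sketch of the logic only, not PocketSphinx's keyword-spotting API; the wake word “computer” in the example is a hypothetical choice, since the chapter does not name the keyword used.

```python
from typing import Iterable, Iterator, Set


def gated_commands(phrases: Iterable[str], wake_word: str,
                   known_commands: Set[str]) -> Iterator[str]:
    """Yield a command only when it immediately follows the wake word.

    After the wake word is heard, the next phrase is checked for a known
    command. The gate then closes again whether or not a command was found,
    so speech that is not preceded by the wake word is ignored.
    """
    armed = False
    for phrase in phrases:
        words = phrase.upper().split()
        if not armed:
            armed = wake_word.upper() in words
            continue
        armed = False
        for word in words:
            if word in known_commands:
                yield word
                break


if __name__ == "__main__":
    stream = ["turn on the light", "computer", "light please"]
    print(list(gated_commands(stream, "computer", {"LIGHT", "FAN"})))
```

In the example, the first “light” is ignored because no wake word precedes it, which is exactly how gating reduces false positives from ordinary conversation.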

Fig. 6.3 shows the Raspberry Pi connected to a USB webcam, which serves as the microphone. Table 6.1 lists the phonetic dictionary used by PocketSphinx in this research: the vocabulary words we are interested in recognizing and their sequences of phonemes. The dictionary file can be generated on the CMUSphinx website and used when a narrow search over pertinent words is preferred to an entire language model [14]. The dictionary file guides both PocketSphinx and the user on how words should be pronounced. It makes spoken words easier for PocketSphinx to recognize and reduces the number of words it has to search, which improves both performance and the speed at which spoken commands are converted into text. The words in the table represent operations the system can perform. For example, to switch on a light, the user says “LIGHT,” which is “L AY T” according to the PocketSphinx dictionary.
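A CMUSphinx dictionary file is plain text with one word per line followed by its phones, and alternate pronunciations written as `WORD(2)`. The sketch below reads such a file into a lookup table. Only the “LIGHT L AY T” entry is quoted in this chapter; the other entries in `SAMPLE_DICT` are illustrative assumptions, not the contents of Table 6.1.

```python
from typing import Dict, List

# Illustrative dictionary in the CMUSphinx format (word, then phones).
# "LIGHT L AY T" is from this chapter; the other entries are assumptions.
SAMPLE_DICT = """\
FAN F AE N
LIGHT L AY T
OFF AO F
ON AA N
"""


def parse_dictionary(text: str) -> Dict[str, List[List[str]]]:
    """Map each word to its list of pronunciations (each a list of phones).

    Alternate pronunciations written as WORD(2), WORD(3), ... are folded
    into the base word.
    """
    lexicon: Dict[str, List[List[str]]] = {}
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        word = parts[0].split("(")[0].upper()
        lexicon.setdefault(word, []).append(parts[1:])
    return lexicon


if __name__ == "__main__":
    print(parse_dictionary(SAMPLE_DICT)["LIGHT"])
```

A lexicon of only a handful of words is what keeps the recognizer's search space small on the Raspberry Pi.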

6.3.2 System Automation

The overall objective of this home automation system is to integrate with the existing infrastructure in a home while having little impact on how existing home appliances are used. For this reason, the system uses infrared to communicate with appliances in the home. This approach lets the system control most types of appliances without requiring them to be smart or network-enabled. The system can, however, also be integrated with smart devices by mapping a voice command to the appropriate action.

To control appliances, the system uses Linux Infrared Remote Control (LIRC), which enables it to send and receive infrared signals. The system uses LIRC to synchronize home appliance remote controls with the automation system and to transmit infrared signals to the appliances as if the signals were coming from the remote controls. LIRC thus gives the system command over multiple devices that can be controlled by infrared remotes. In this case, LIRC was synchronized with an air conditioner remote so that the unit turns on or off whenever the word “FAN” is said. This was done by pointing the remote control at the infrared receiver and pressing the power button so that the button's infrared signal could be captured. Python can be used as the scripting language that matches the text to an infrared signal, switching the air conditioning unit on or off every time the word “FAN” is detected in a spoken command.
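One way to implement the matching step described above is to call LIRC's `irsend` command-line tool from Python. The sketch below assumes a running `lircd` in which the remote and button were recorded beforehand (for example with `irrecord`); the remote name `aircon` and the button name `KEY_POWER` are hypothetical placeholders that must match whatever names were used in the LIRC configuration.

```python
import subprocess
from typing import Dict, List, Tuple

# Hypothetical mapping from recognized words to (remote, button) pairs.
# The names must match those in the LIRC configuration; these are placeholders.
IR_MAP: Dict[str, Tuple[str, str]] = {
    "FAN": ("aircon", "KEY_POWER"),
}


def irsend_args(word: str, ir_map: Dict[str, Tuple[str, str]] = IR_MAP) -> List[str]:
    """Build the irsend command line that replays the stored button press."""
    remote, button = ir_map[word.upper()]  # raises KeyError for unmapped words
    return ["irsend", "SEND_ONCE", remote, button]


def send_ir(word: str, dry_run: bool = False) -> List[str]:
    """Transmit the infrared signal for a recognized word via LIRC's irsend."""
    args = irsend_args(word)
    if not dry_run:
        # Requires lircd to be running with the named remote configured.
        subprocess.run(args, check=True)
    return args


if __name__ == "__main__":
    print(send_ir("FAN", dry_run=True))
```

Because the air conditioner's power button usually toggles the unit, the same signal serves for both “on” and “off,” which matches the single “FAN” command used here.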

6.3.3 Other Applications

IoT devices have evolved over the years, and many IoT applications are now part of everyday life, often without people noticing. IoT can enhance an automation system by adding various sensing components to it. This part of the chapter looks at enhancements that can be added to the system to automate other tasks in a home. The first is the addition of a light sensor and an ultrasonic sensor. An ultrasonic sensor transmits ultrasonic sound waves and can be used to detect motion in a room, so it can help automate lighting and other appliances. For example, if the system detects no motion in a room, it determines which appliances, such as a TV or lights, are on unnecessarily because no one is in the room, and can then turn them all off. This behavior can be overridden with voice commands. When the user walks back into the room, the system can turn the appliances back on, unless the user has specified otherwise.
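The behavior just described can be sketched as a small controller. This is an illustrative design, not the authors' implementation. It assumes a ranging ultrasonic sensor that reports a round-trip echo time, treats a change in measured distance beyond a tolerance as motion, and uses hypothetical timeout and tolerance values; a spoken command is treated as evidence that someone is present.

```python
from typing import Optional, Set

SPEED_OF_SOUND_CM_S = 34300.0  # roughly 343 m/s in air at about 20 degrees C


def echo_to_distance_cm(echo_seconds: float) -> float:
    """One-way distance from an ultrasonic round-trip echo time."""
    return echo_seconds * SPEED_OF_SOUND_CM_S / 2.0


class OccupancyController:
    """Switch appliances off in an empty room and back on when someone returns."""

    def __init__(self, timeout_s: float, tolerance_cm: float = 5.0) -> None:
        self.timeout_s = timeout_s
        self.tolerance_cm = tolerance_cm
        self.on: Set[str] = set()        # appliances currently on
        self.parked: Set[str] = set()    # switched off because the room was empty
        self.keep_off: Set[str] = set()  # user asked for these to stay off
        self.last_distance: Optional[float] = None
        self.last_motion_t = 0.0

    def voice_on(self, name: str, t: float) -> None:
        """A spoken 'on' command; speaking also counts as presence."""
        self.on.add(name)
        self.keep_off.discard(name)
        self.last_motion_t = t

    def voice_off(self, name: str) -> None:
        """A spoken 'off' command overrides automatic restoring."""
        self.on.discard(name)
        self.parked.discard(name)
        self.keep_off.add(name)

    def reading(self, t: float, distance_cm: float) -> None:
        """Process one distance reading taken at time t (seconds)."""
        moved = (self.last_distance is not None and
                 abs(distance_cm - self.last_distance) > self.tolerance_cm)
        self.last_distance = distance_cm
        if moved:
            self.last_motion_t = t
            # Someone is back: restore parked appliances unless overridden.
            self.on |= self.parked - self.keep_off
            self.parked.clear()
        elif t - self.last_motion_t >= self.timeout_s and self.on:
            # No motion for too long: switch everything off, remembering it.
            self.parked |= self.on
            self.on.clear()
```

In a deployment, each change to `on` would be translated into the corresponding infrared or network command for the appliance.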

With a light intensity sensor, the system can keep lights on in a room only when necessary. If the light intensity in the room is high, the system determines that the lights are not needed and turns them off. Similarly, if no motion is detected in a room for a specified amount of time, the system can switch off the light until motion is detected again.
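The lighting rule can be written as a single decision function. This sketch assumes the light sensor reports illuminance in lux; the thresholds and timeout are hypothetical values. It uses two thresholds (hysteresis) so that a reading hovering near one value does not make the light flicker on and off.

```python
def next_light_state(is_on: bool, lux: float, seconds_since_motion: float,
                     on_below_lux: float = 100.0, off_above_lux: float = 400.0,
                     timeout_s: float = 300.0) -> bool:
    """Decide whether a room light should be on after this reading."""
    if seconds_since_motion >= timeout_s:
        return False  # nobody seen recently
    if lux >= off_above_lux:
        return False  # enough ambient light already
    if lux <= on_below_lux:
        return True   # room is dark and occupied
    return is_on      # in between: keep the current state (hysteresis)


if __name__ == "__main__":
    print(next_light_state(False, lux=50, seconds_since_motion=10))
```

If the sensor can see the lamp it controls, the upper threshold should be set above the level the lamp alone produces, or the rule will switch the light off as soon as it turns on.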

A soil moisture sensor can also be added to the system to assist in automated irrigation of small plants in a home [15]. The sensor connects to the system and operates autonomously, giving the user control over plant irrigation. Automation also requires monitoring different aspects of the system to ensure that it operates as expected. For this reason, a local website and a mobile application can be developed to show the status of the different components of the automation system; the mobile application can also be used to control the devices in the system.
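Autonomous irrigation typically involves two steps: converting the sensor's raw reading into a moisture percentage, and deciding whether to run a pump. The sketch below assumes an analog sensor read through an analog-to-digital converter and calibrated with two raw readings, one in dry soil and one in wet soil; the calibration values and the 30%/45% start and stop levels are hypothetical.

```python
def moisture_percent(raw: int, dry_raw: int, wet_raw: int) -> float:
    """Linearly map a raw sensor reading to 0-100 % using two calibration points.

    Works whether the sensor reading rises or falls as the soil gets wetter.
    """
    pct = 100.0 * (raw - dry_raw) / (wet_raw - dry_raw)
    return min(100.0, max(0.0, pct))


def pump_command(pump_on: bool, moisture: float,
                 start_below: float = 30.0, stop_above: float = 45.0) -> bool:
    """Start watering when soil is dry, stop when wet enough, else keep state."""
    if moisture < start_below:
        return True
    if moisture > stop_above:
        return False
    return pump_on


if __name__ == "__main__":
    m = moisture_percent(600, dry_raw=800, wet_raw=400)
    print(m, pump_command(False, m))
```

Keeping separate start and stop levels means the pump runs in a few longer bursts rather than toggling every time the reading crosses a single line.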

6.3.4 Results and Discussion

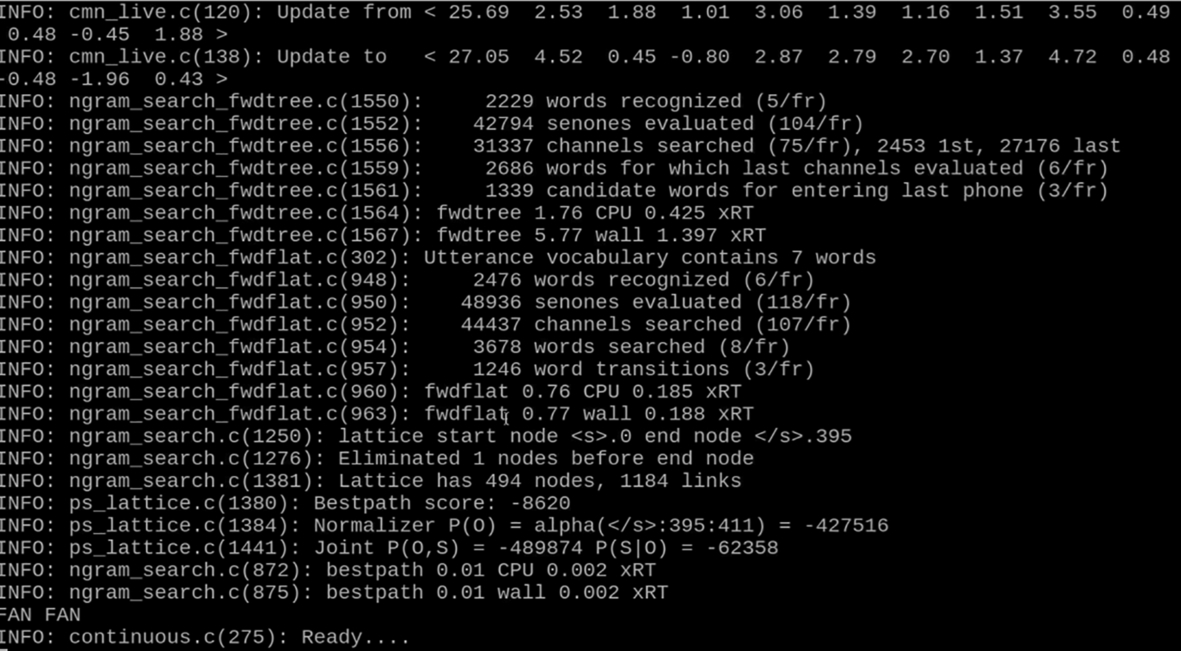

Fig. 6.4 shows a sample of the system processing speech. In this output, the system recognizes the word “FAN,” which is contained in the dictionary file, in a single recognition run.

The output is for a speaker saying the word “FAN.” The PocketSphinx log reports 2063 word hypotheses evaluated during the search (“words recognized,” 6 per frame) and 41,553 senones evaluated (121 per frame), with a real-time factor of 0.186 xRT, meaning that decoding took about 0.186 times as long as the audio itself. This indicates that the system can convert the spoken command to text faster than real time, and the results suggest that it can do so for users with different accents. Using LIRC, the recognized command can be matched to a corresponding infrared signal that the system transmits with an infrared transmitter to turn the air conditioning unit on or off. With additional hardware, such as ultrasonic and light intensity sensors, the system can also control lighting in rooms or manage appliances by turning them on or off based on the presence or absence of a person or on the light level in a room.
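The figures in this log are related by simple arithmetic, sketched below. The per-frame rates imply the number of audio frames processed, and, assuming PocketSphinx's default frame rate of 100 frames per second, the frame count gives the audio duration; the xRT value is processing time divided by audio duration. The derived durations are estimates from the logged numbers, not values reported in the chapter.

```python
FRAMES_PER_SECOND = 100  # assumed: PocketSphinx default frame rate (-frate)


def frames_from_log(total: int, per_frame: int) -> float:
    """Estimate the frame count from a total and its logged per-frame rate."""
    return total / per_frame


def audio_seconds(frames: float, fps: int = FRAMES_PER_SECOND) -> float:
    """Audio duration covered by a number of frames."""
    return frames / fps


def processing_seconds(xrt: float, audio_s: float) -> float:
    """xRT = processing time / audio duration, so processing = xRT * duration."""
    return xrt * audio_s


if __name__ == "__main__":
    frames = frames_from_log(41553, 121)   # about 343 frames
    dur = audio_seconds(frames)            # about 3.4 s of audio
    print(frames, dur, processing_seconds(0.186, dur))  # about 0.64 s to decode
```

The word-hypothesis count gives a consistent estimate (2063 / 6 is also about 343 frames), which supports reading both counts as search statistics over the same roughly 3.4 s recording rather than as words actually spoken.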

6.4 Significance/Contribution

Home appliances increasingly use wireless technologies, so they can be controlled by radio and, over the Internet, from outside the home, which makes life easier. Although this technology is advancing in many other sectors, such as road traffic and hotels, implementing it in homes, especially in developing countries, has not been very successful, largely because of the high cost of the hardware needed to fully automate a home and monitor it using human speech. Many elderly and physically challenged people rely on caregivers to help them with everyday tasks, but given the choice, some would appreciate the convenience of home automation. The approach shown in this chapter aims to let people automate processes in the home using voice commands, reducing the reliance of elderly and physically challenged people on caregivers. The proposed approach shows that an STT engine can convert spoken commands into text, which the system then uses as commands for transmitting infrared signals to appliances.

The system uses PocketSphinx to capture the speech signal and convert it to text, and then analyzes the converted text to determine whether it contains any known commands. The system also uses LIRC together with the infrared receiver and transmitter. One component of the system trains it to transmit the correct infrared signal to each appliance: the infrared receiver is used to synchronize the system with specific buttons on the appliance's remote control. Once synchronized, the system uses the infrared transmitter to control the appliance as if the signal were coming from the appliance's remote control.

This approach lets the system integrate into homes and control existing home appliances without requiring users to buy new smart appliances or expensive additional hardware, which makes it cost-effective.

Limitation: The proposed Smart Home Automation System cannot be used by speech-impaired people.

6.5 Conclusion

This chapter presented a VAD-based home automation system that uses low-cost hardware to let users automate processes in a home. This automation can benefit users who rely on caregivers for day-to-day tasks such as controlling appliances, including the elderly, physically challenged people, and others who need to automate processes and tasks at home. The system uses an STT engine to convert spoken commands to text, and uses the text to transmit the corresponding infrared signal to an appliance as if the signal were coming from the appliance's remote control. The system can be further enhanced with other sensors and actuators, such as ultrasonic and light intensity sensors, allowing it to control other appliances in a home autonomously. More broadly, many users could benefit from home automation approaches that integrate with their existing appliances.