Performance Evaluation

This chapter studies the performance of interconnection networks, analyzing the effect of network traffic and the impact of many design parameters discussed in previous chapters. Whenever possible, suggestions will be given for some design parameters. However, we do not intend to study the whole design space. Therefore, this chapter also presents general aspects of network evaluation, so that you can evaluate the effect of parameters that are not considered in this chapter.

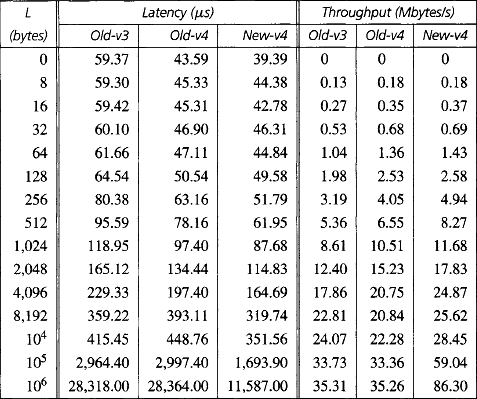

As indicated in Chapter 8, a high percentage of the communication latency in multicomputers is produced by the overhead in the software messaging layer. At first glance, it may seem that spending time in improving the performance of the interconnection network hardware is useless. However, for very long messages, communication latency is still dominated by the network hardware latency. In this case, networks with a higher channel bandwidth may achieve a higher performance. On the other hand, messages are usually sent by the cache controller in distributed, shared-memory multiprocessors with coherent caches. In this architecture there is no software messaging layer. Therefore, the performance of the interconnection network hardware is much more critical. As a consequence, in this chapter we will mainly evaluate the performance of the interconnection network hardware. We will consider the overhead of the software messaging layer in Section 9.12.

Network load has a very strong influence on performance. In general, for a given distribution of destinations, the average message latency of a wormhole-switched network is more heavily affected by network load than by any design parameter, provided that a reasonable choice is made for those parameters. Also, throughput is heavily affected by the traffic pattern (distribution of destinations). Therefore, modeling the network workload is very important. Most performance evaluation results have only considered a uniform distribution of message destinations. However, several researchers have considered other synthetic workloads, trying to model the behavior of real applications. Up to now, very few researchers have evaluated networks using the traffic patterns produced by real applications. Therefore, in this chapter we will mainly present results obtained by using synthetic workloads.

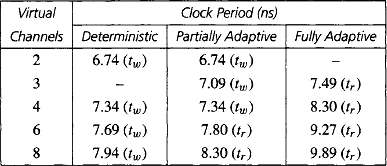

Most evaluation results presented in this chapter do not consider the impact of design parameters on clock frequency. However, some design choices may considerably increase router complexity, therefore reducing clock frequency accordingly. Router delay is mostly affected by the number of dimensions of the network, the routing algorithm, and the number of virtual channels per physical channel. The evaluation presented in Section 9.10 considers a very detailed model, trying to be as close as possible to the real behavior of the networks. As will be seen, conclusions are a bit different when the impact of design parameters on clock frequency is considered.

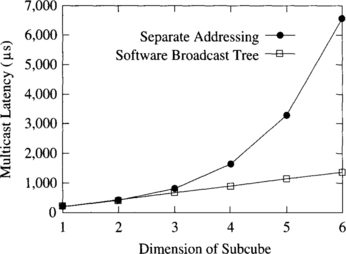

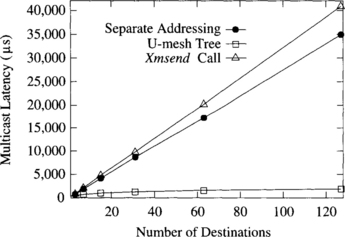

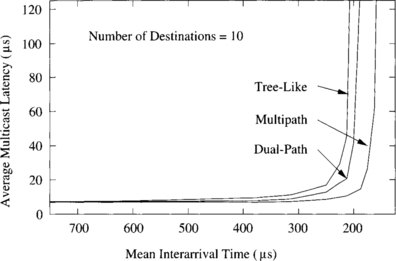

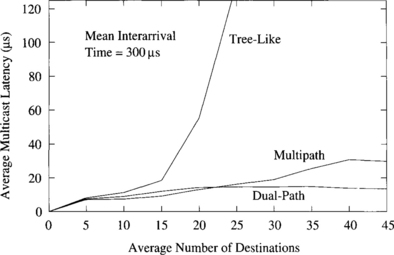

In addition to unicast messages, many parallel applications perform some collective communication operations. Providing support for those operations may reduce latency significantly. Collective communication schemes range from software approaches based on unicast messages to specific hardware support for multidestination messages. These schemes are evaluated in Section 9.11.

Finally, most network evaluations only focus on performance. However, reliability is also important. A wide spectrum of routing protocols has been proposed to tolerate faulty components in the network, ranging from software approaches for wormhole switching to very resilient mechanisms based on different switching techniques. Thus, we will also present some results concerning network fault tolerance in Section 9.13.

9.1 Performance Metrics and Normalized Results

This section introduces performance metrics and defines some standard ways to illustrate performance results. In the absence of faults, the most important performance metrics of an interconnection network are latency and throughput.

Latency is the time elapsed from when the message transmission is initiated until the message is received at the destination node. This general definition is vague and can be interpreted in different ways. If the study only considers the network hardware, latency is usually defined as the time elapsed from when the message header is injected into the network at the source node until the last unit of information is received at the destination node. If the study also considers the injection queues, the queuing time at the source node is added to the latency. This queuing time is usually negligible unless the network is close to its saturation point. When the messaging layer is also being considered, latency is defined as the time elapsed from when the system call to send a message is initiated at the source node until the system call to receive that message returns control to the user program at the destination node.

Latency can also be defined for collective communication operations. In this case, latency is usually measured from when the operation starts at some node until all the nodes involved in that operation have completed their task. For example, when only the network hardware is being considered, the latency of a multicast operation is the time elapsed from when the first fragment of the message header is injected into the network at the source node until the last unit of information is received at the last destination node.

The latency of individual messages is not important, especially when the study is performed using synthetic workloads. In most cases, the designer is interested in the average value of the latency. The standard deviation is also important because the execution time of parallel programs may increase considerably if some messages experience a much higher latency than the average value. A high value of the standard deviation usually indicates that some messages are blocked for a long time in the network. The peak value of the latency can also help in identifying these situations.

Latency is measured in time units. However, when comparing several design choices, the absolute value is not important. As many comparisons are performed by using network simulators, latency can be measured in simulator clock cycles. Unless otherwise stated, the latency plots presented in this chapter for unicast messages measure the average value of the time elapsed from when the message header is injected into the network at the source node until the last unit of information is received at the destination node. In most cases, the simulator clock cycle is the unit of measurement. However, in Section 9.10, latency is measured in nanoseconds.

Throughput is the maximum amount of information delivered per time unit. It can also be defined as the maximum traffic accepted by the network, where traffic, or accepted traffic is the amount of information delivered per time unit. Throughput could be measured in messages per second or messages per clock cycle, depending on whether absolute or relative timing is used. However, throughput would depend on message and network size. So, throughput is usually normalized, dividing it by message size and network size. As a result, throughput can be measured in bits per node and microsecond, or in bits per node and clock cycle. Again, when comparing different design choices by simulation, and assuming that channel width is equal to flit size, throughput can be measured in flits per node and clock cycle. Alternatively, accepted traffic and throughput can be measured as a fraction of network capacity. A uniformly loaded network is operating at capacity if the most heavily loaded channel is used 100% of the time [72]. Again, network capacity depends on the communication pattern.

A standard way to measure accepted traffic and throughput was proposed at the Workshop on Parallel Computer Routing and Communication (PCRCW’94). It consists of representing them as a fraction of the network capacity for a uniform distribution of destinations, assuming that the most heavily loaded channels are located in the network bisection. This network capacity is referred to as normalized bandwidth. So, regardless of the communication pattern used, it is recommended to measure applied load, accepted traffic, and throughput as a fraction of normalized bandwidth. Normalized bandwidth can be easily derived by considering that 50% of uniform random traffic crosses the bisection of the network. Thus, if a network has bisection bandwidth B bits/s, each node in an N-node network can inject 2B/N bits/s at the maximum load. Unless otherwise stated, accepted traffic and throughput are measured as a fraction of normalized bandwidth. While this is acceptable when comparing different design choices in the same network, it should be taken into account that those choices may lead to different clock cycles. In this case, each set of design parameters may produce a different bisection bandwidth, therefore invalidating the normalized bandwidth as a traffic unit. In that case, accepted traffic and throughput can be measured in bits (flits) per node and microsecond. We use this unit in Section 9.10.

A common misconception consists of using throughput instead of traffic. As mentioned above, throughput is the maximum accepted traffic. Another misconception consists of considering throughput or traffic as input parameters instead of measurements, even representing latency as a function of traffic. When running simulations with synthetic workloads, the applied load (also known as offered traffic, generation rate, or injection rate) is an input parameter while latency and accepted traffic are measurements. So, latency-traffic graphs do not represent functions. It should be noted that the network may be unstable when accepted traffic reaches its maximum value. In this case, increasing the applied load may reduce the accepted traffic until a stable point is reached. As a consequence, for some values of the accepted traffic there exist two values for the latency, clearly indicating that the graph does not represent a function.

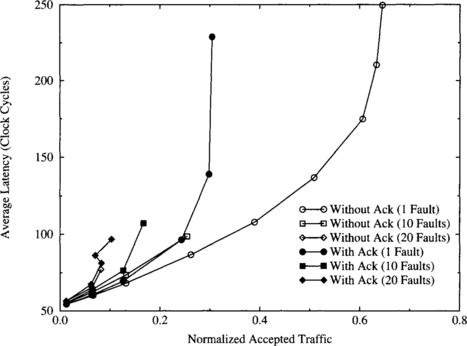

In the presence of faults, both performance and reliability are important. When presenting performance plots, the Chaos Normal Form (CNF) format (to be described below) should be preferred in order to analyze accepted traffic as a function of applied load. Plots can be represented for different values of the number of faults. In this case, accepted traffic can be smaller than applied load because the network is saturated or because some messages cannot be delivered in the presence of faults. Another interesting measure is the probability of message delivery as a function of the number of failures.

The next sections describe two standard formats to represent performance results. These formats were proposed at PCRCW’94. The CNF requires paired accepted traffic versus applied load and latency versus applied load graphs. The Burton Normal Form (BNF) uses a single latency versus accepted traffic graph. Use of only latency (including source queuing) versus applied load is discouraged because it is impossible to gain any data about performance above saturation using such graphs.

Chaos Normal Form (CNF)

CNF graphs display accepted traffic on one graph and network latency on a second graph. In both graphs, the X-axis corresponds to normalized applied load. By using two graphs, the latency is shown both below and above saturation, and the accepted traffic above saturation is visible. While BNF graphs show the same data, CNF graphs are more clear in their presentation of the data.

Burton Normal Form (BNF)

BNF graphs, advocated by Burton Smith, provide a single-graph plot of both latency and accepted traffic. The X-axis corresponds to accepted traffic, and the Y-axis corresponds to latency. Because the X-axis is a dependent variable, the resulting plot may not be a function. This causes the graph to be a bit hard to comprehend at first glance.

9.2 Workload Models

The evaluation of interconnection networks requires the definition of representative workload models. This is a difficult task because the behavior of the network may differ considerably from one architecture to another and from one application to another. For example, some applications running in multicomputers generate very long messages, while distributed, shared-memory multiprocessors with coherent caches generate very short messages. Moreover, in general, performance is more heavily affected by traffic conditions than by design parameters.

Up to now, there has been no agreement on a set of standard traces that could be used for network evaluation. Most performance analysis used synthetic workloads with different characteristics. In what follows, we describe the most frequently used workload models. These models can be used in the absence of more detailed information about the applications.

The workload model is basically defined by three parameters: distribution of destinations, injection rate, and message length. The distribution of destinations indicates the destination for the next message at each node. The most frequently used distribution is the uniform one. In this distribution, the probability of node i sending a message to node j is the same for all i and j, i ≠ j [288]. The case of nodes sending messages to themselves is excluded because we are interested in message transfers that use the network. The uniform distribution makes no assumptions about the type of computation generating the messages. In the study of interconnection networks, it is the most frequently used distribution. The uniform distribution provides what is likely to be an upper bound on the mean internode distance because most computations exhibit some degree of communication locality.

Communication locality can be classified as spatial or temporal [288]. An application exhibits spatial locality when the mean internode distance is smaller than in the uniform distribution. As a result, each message consumes less resources, also reducing contention. An application has temporal locality when it exhibits communication affinity among a subset of nodes. As a consequence, the probability of sending messages to nodes that were recently used as destinations for other messages is higher than for other nodes. It should be noted that nodes exhibiting communication affinity need not be near one another in the network.

When network traffic is not uniform, we would expect any reasonable mapping of a parallel computation to place those tasks that exchange messages with high frequency in close physical locations. Two simple distributions to model spatial locality are the sphere of locality and the decreasing probability distribution [288], In the former, a node sends messages to nodes inside a sphere centered on the source node with some usually high probability φ, and to nodes outside the sphere with probability 1 − φ. All the nodes inside the sphere have the same probability of being reached. The same occurs for the nodes outside the sphere. It should be noted that when the network size varies, the ratio between the number of nodes inside and outside the sphere is not constant. This distribution models the communication locality typical of programs solving structured problems (e.g., the nearest-neighbor communication typical of iterative partial differential equation solvers coupled with global communication for convergence checking). In practice, the sphere can be replaced by other geometric figures depending on the topology. For example, it could become a square or a cube in 2-D and 3-D meshes, respectively.

In the decreasing probability distribution, the probability of sending a message to a node decreases as the distance between the source and destination nodes increases. Reed and Grunwald [288] proposed the distribution function Φ(d) = Decay(l, dmax) × ld, 0 < l < 1, where d is the distance between the source and destination nodes, dmax is the network diameter, and l is a locality parameter. Decay (l, dmax) is a normalizing constant for the probability Φ, chosen such that the sum of the probabilities is equal to one. Small values of the locality parameter l mean a high degree of locality; larger values of l mean that messages can travel larger distances. In particular, when l is equal to 1/e, we obtain an exponential distribution. As l approaches one, the distribution function Φ approaches the uniform distribution. Conversely, as l approaches zero, Φ approaches a nearest-neighbor communication pattern. It should be noted that the decreasing probability distribution is adequate for the analysis of networks of different sizes. Simply, Decay (l, dmax) should be computed for each network.

The distributions described above exhibit different degrees of spatial locality but have no temporal locality. Recently, several specific communication patterns between pairs of nodes have been used to evaluate the performance of interconnection networks: bit reversal, perfect shuffle, butterfly, matrix transpose, and complement. These communication patterns take into account the permutations that are usually performed in parallel numerical algorithms [175, 200, 239]. In these patterns, the destination node for the messages generated by a given node is always the same. Therefore, the utilization factor of all the network links is not uniform. However, these distributions achieve the maximum degree of temporal locality. These communication patterns can be defined as follows:

![]() Bit reversal. The node with binary coordinates an−1, an−2, …,a1, a0 communicates with the node a0, a1, …, an−2, an−1.

Bit reversal. The node with binary coordinates an−1, an−2, …,a1, a0 communicates with the node a0, a1, …, an−2, an−1.

![]() Perfect shuffle. The node with binary coordinates an−1, an−2, …, a1, a0 communicates with the node an−2, an−3, …, a0, an−1 (rotate left 1 bit).

Perfect shuffle. The node with binary coordinates an−1, an−2, …, a1, a0 communicates with the node an−2, an−3, …, a0, an−1 (rotate left 1 bit).

![]() Butterfly. The node with binary coordinates an−1, an−2, …, a1, a0 communicates with the node a0, an−2, …, a1, an−1 (swap the most and least significant bits).

Butterfly. The node with binary coordinates an−1, an−2, …, a1, a0 communicates with the node a0, an−2, …, a1, an−1 (swap the most and least significant bits).

![]() Matrix transpose. The node with binary coordinates an−1, an−2, …, a1, a0communicates with the node

Matrix transpose. The node with binary coordinates an−1, an−2, …, a1, a0communicates with the node ![]() .

.

![]() Complement. The node with binary coordinates an−1, an−2, …, a1, a0 communicates with the node

Complement. The node with binary coordinates an−1, an−2, …, a1, a0 communicates with the node ![]() .

.

Finally, a distribution based on a least recently used stack model has been proposed in [288] to model temporal locality. In this model, each node has its own stack containing the m nodes that were most recently sent messages. For each position in the stack there is a probability of sending a message to the node in that position. The sum of probabilities for nodes in the stack is less than one. Therefore, a node not currently in the stack may be chosen as the destination for the next transmission. In this case, after sending the message, its destination node will be included in the stack, replacing the least recently used destination.

For synthetic workloads, the injection rate is usually the same for all the nodes. In most cases, each node is chosen to generate messages according to an exponential distribution. The parameter λ of this distribution is referred to as the injection rate. Other possible distributions include a uniform distribution within an interval, bursty traffic, and traces from parallel applications. For the uniform distribution, the injection rate is the mean value of the interval. Bursty traffic can be generated either by injecting a burst of messages every time a node has to inject information into the network or by changing the injection rate periodically.

The network may use some congestion control mechanism. This mechanism can be implemented by placing a limit on the size of the buffer on the injection channels [36], by restricting injected messages to use some predetermined virtual channel(s) [73], or by waiting until the number of free output virtual channels at a node is higher than a threshold [220]. If a congestion control mechanism is used, the effective injection rate is limited when the network approaches the saturation point. This situation should be taken into account when analyzing performance graphs.

Message length can also be modeled in different ways. In most simulation runs, message length is chosen to be fixed. In this case, message length may be varied from one run to another in order to study the effect of message length. Also, message length can be computed according to a normal distribution or a uniform distribution within an interval. In some cases, it is interesting to analyze the mutual effect of messages with very different lengths. For example, injecting even a small fraction of very long messages into the network may increase the latency of some short messages considerably, therefore increasing the standard deviation of latency. In these cases, a weighted mix of short and long messages should be used. Both short and long messages may be of fixed size, or be normally or uniformly distributed as indicated above. Finally, it should be noted that message length has a considerable influence on network performance. So, the selected message length distribution should be representative of the intended applications. Obviously, application traces should be used if available.

In addition to the workload parameters described above, collective communication requires the generation of the set of nodes involved in each collective communication operation. The number of nodes involved in the operation may be fixed or randomly generated. Once the number of nodes has been determined, node addresses can be computed according to any of the models described above. For example, a multicast operation may start by computing the number of destinations using some statistical distribution. Then, the address of each destination can be computed according to a uniform distribution. Although most performance analyses have been performed by executing only collective communication operations, both unicast messages and multidestination messages coexist in real traffic. Therefore, workload models for collective communication operations should consider a mixture of unicast and multidestination messages. Both the percentage of multidestination messages and the number of nodes involved in the collective communication operation should match as much as possible the characteristics of the intended applications.

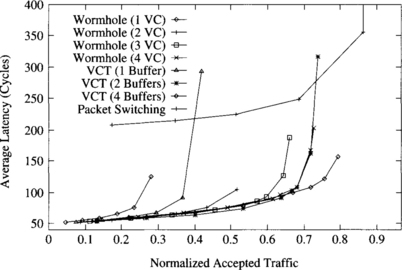

9.3 Comparison of Switching Techniques

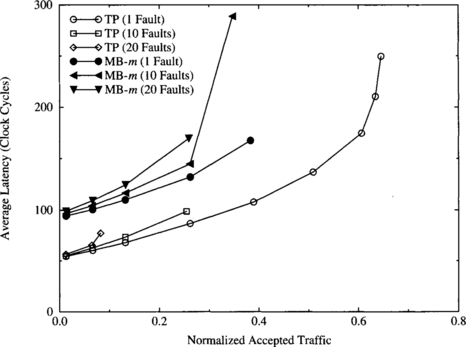

In this section, we compare the performance of several switching techniques. In particular, we analyze the performance of networks using packet switching, VCT switching, and wormhole switching. Previous comparisons [294] showed that VCT and wormhole switching achieve similar latency for low loads. For packet switching, latency is much higher. On the other hand, VCT and packet switching achieve similar throughput. This throughput is more than twice the value achieved by wormhole switching, which saturates at a lower applied load.

In this section, we take into account the effect of adding virtual channels. We will mainly focus on the comparison between VCT and wormhole switching. As mentioned in Chapter 2, routers implementing wormhole switching are simpler and can be clocked at a higher frequency. In this comparison, we are not taking into account the impact of the delay of router components on clock frequency. However, in order to make the comparison more fair, we assume that VCT and packet switching use edge buffers instead of central buffers. By doing so, the complexity of the flow control hardware is similar for all the switching techniques. Also, as packet switching does not pipeline packet transmission, it is assumed that there are no output buffers, transmitting data directly from an input edge buffer through the switch to the corresponding output channel.

For packet switching, we consider edge buffers with capacities of four packets. For VCT, we consider edge buffers with capacities of one, two, and four packets. For wormhole switching, we show the effect of adding virtual channels. The number of virtual channels is varied from one to four. In this comparison, the buffer capacity of each virtual channel is kept constant (4 flits) regardless of the number of virtual channels. Therefore, adding virtual channels also increases the total buffer capacity associated with each physical channel. The effect of adding virtual channels while keeping the total buffer capacity constant will be studied in Sections 9.7.1 and 9.10.5. The total buffer capacity per physical channel is equal to one packet when using four virtual channels. Note that a blocked packet will span four channels in wormhole switching regardless of the number of virtual channels used. Total buffer capacity will differ from one plot to another. The remaining router parameters (routing time, channel bandwidth, etc.) are the same for all the switching techniques.

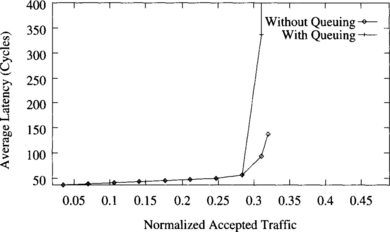

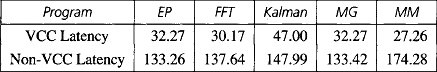

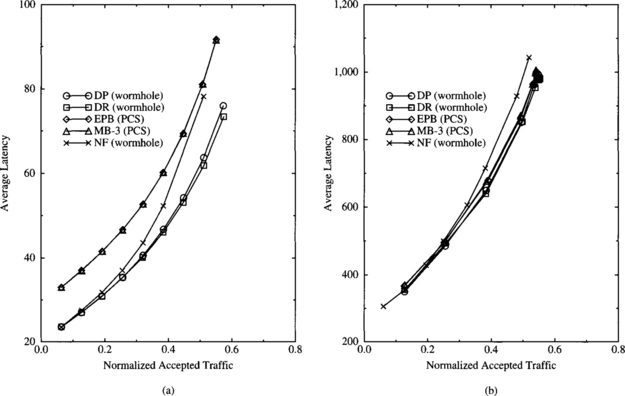

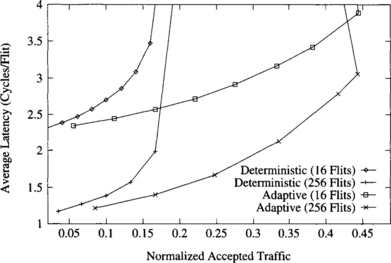

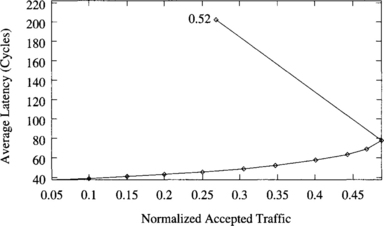

Figure 9.1 shows the average packet latency versus normalized accepted traffic for different switching techniques on a 16 × 16 mesh using dimension-order routing, 16-flit packets, and a uniform distribution of message destinations. As expected from the expressions for the base latency in Chapter 2, VCT and wormhole switching achieve the same latency for low traffic. This latency is much lower than the one for packet switching. However, when traffic increases, wormhole switching without virtual channels quickly saturates the network, resulting in low channel utilization.

Figure 9.1 Average packet latency versus normalized accepted traffic on a 16 × 16 mesh for different switching techniques and buffer capacities. (VC = virtual channel; VCT = virtual cut-through.)

The low channel utilization of wormhole switching can be improved by adding virtual channels. As virtual channels are added, network throughput increases accordingly. As shown in [72], adding more virtual channels yields diminishing returns. Similarly, increasing queue size for VCT switching also increases throughput considerably. An interesting observation is that the average latency for VCT and for wormhole switching with virtual channels is almost identical for the entire range of applied load until one of the curves reaches the saturation point. Moreover, when the total buffer capacity per physical channel is the same as in VCT, wormhole switching with virtual channels achieves a much higher throughput. It should be noted that in this case wormhole switching uses four virtual channels. However, VCT switching has capacity for a single packet per physical channel. Thus, the channel remains busy until the packet is completely forwarded. Although blocked packets in wormhole switching span multiple channels, the use of virtual channels allow other packets to pass blocked packets.

When VCT switching is implemented by using edge queues with capacity for several packets, channels are freed after transmitting each packet. Therefore, a blocked packet does not prevent the use of the channel by other packets. As a consequence, network throughput is higher than the one for wormhole switching with four virtual channels. Note, however, that the improvement is relatively small, despite the fact that the total buffer capacity for VCT switching is two or four times the buffer capacity for wormhole switching. In particular, we obtained the same results for wormhole switching with four virtual channels and VCT switching with two buffers per channel. We also run simulations for longer packets, keeping the size of the flit buffers used in wormhole switching. In this case, results are more favorable to VCT switching, but buffer requirements also increase accordingly.

Finally, when the network reaches the saturation point, VCT switching has to buffer packets very frequently, therefore preventing pipelining. As a consequence, VCT and packet switching with the same number of buffers achieve similar throughput when the network reaches the saturation point.

The most important conclusion is that wormhole switching is able to achieve latency and throughput comparable to those of VCT switching, provided that enough virtual channels are used and total buffer capacity is similar. If buffer capacity is higher for VCT switching, then this switching technique achieves better performance, but the difference is small if enough virtual channels are used in wormhole switching. These conclusions differ from the ones obtained in previous comparisons because virtual channels were not considered [294]. An additional advantage of wormhole switching is that it is able to handle messages of any size without splitting them into packets. However, VCT switching limits packet size, especially when buffers are implemented in hardware.

As we will see in Section 9.10.5, adding virtual channels increases router delay, decreasing clock frequency accordingly. However, similar considerations can be made when adding buffer space for VCT switching. In what follows we will focus on networks using wormhole switching unless otherwise stated.

9.4 Comparison of Routing Algorithms

In this section we analyze the performance of deterministic and adaptive routing algorithms on several topologies under different traffic conditions. The number of topologies and routing algorithms proposed in the literature is so high that it could take years to evaluate all of them. Therefore, we do not intend to present an exhaustive evaluation. Instead, we will focus on a few topologies and routing algorithms, showing the methodology that can be applied to obtain some preliminary evaluation results. These results are obtained by simulating the behavior of the network under synthetic loads. A detailed evaluation requires the use of representative traces from intended applications.

Most current multicomputers and multiprocessors use low-dimensional (2-D or 3-D) meshes (Intel Paragon [164], Stanford DASH [203], Stanford FLASH [192], MIT Alewife [4], MIT J-Machine [256], MIT Reliable Router [74]) or tori (Cray T3D [259], Cray T3E [313]). Therefore, we will use 2-D and 3-D meshes and tori for the evaluation presented in this section. Also, most multicomputers and multiprocessors use dimension-order routing. However, fully adaptive routing has been recently introduced in both experimental and commercial machines. This is the case for the MIT Reliable Router and the Cray T3E. These routing algorithms are based on the design methodology presented in Section 4.4.4. Therefore, the evaluation presented in this section analyzes the behavior of dimension-order routing algorithms and fully adaptive routing algorithms requiring two sets of virtual channels: one set for dimension-order routing and another set for fully adaptive minimal routing. We also include some performance results for true fully adaptive routing algorithms based on deadlock recovery techniques.

A brief description of the routing algorithms follows. The deterministic routing algorithm for meshes crosses dimensions in increasing order. It does not require virtual channels. When virtual channels are used, the first free virtual channel is selected. The fully adaptive routing algorithm for meshes was presented in Example 3.8. When there are several free output channels, preference is given to the fully adaptive channel in the lowest useful dimension, followed by adaptive channels in increasing useful dimensions. When more than two virtual channels are used, all the additional virtual channels allow fully adaptive routing. In this case, virtual channels are selected in such a way that channel multiplexing is minimized. The deterministic routing algorithm for tori requires two virtual channels per physical channel. It was presented in Example 4.1 for unidirectional channels. The algorithm evaluated in this section uses bidirectional channels. When more than two virtual channels are used, every pair of additional channels has the same routing functionality as the first pair. As this algorithm produces a low channel utilization, we also evaluate a dimension-order routing algorithm that allows a higher flexibility in the use of virtual channels. This algorithm is based on the extension of the routing algorithm presented in Example 3.3 for unidirectional rings. The extended algorithm uses bidirectional channels following minimal paths. Also, dimensions are crossed in ascending order. This algorithm will be referred to as “partially adaptive” because it offers two routing choices for many destinations. The fully adaptive routing algorithm for tori requires one additional virtual channel for fully adaptive minimal routing. The remaining channels are used as in the partially adaptive algorithm. This algorithm was described in Exercise 4.4. Again, when there are several free output channels, preference is given to the fully adaptive channel in the lowest useful dimension, followed by adaptive channels in increasing useful dimensions. When more than two virtual channels are used, all the additional virtual channels allow fully adaptive routing. In this case, virtual channels are selected in such a way that channel multiplexing is minimized. Finally, the true fully adaptive routing algorithm allows fully adaptive minimal routing on all the virtual channels. Again, virtual channels are selected in such a way that channel multiplexing is minimized. This algorithm was described in Section 4.5.3. Deadlocks may occur and are handled by using Disha (see Section 3.6).

Unless otherwise stated, simulations were run using the following parameters. It takes one clock cycle to compute the routing algorithm, to transfer one flit from an input buffer to an output buffer, or to transfer one flit across a physical channel. Input and output flit buffers have a variable capacity, so that the total buffer capacity per physical channel is kept constant. Each node has four injection and four delivery channels. Also, unless otherwise stated, message length is kept constant and equal to 16 flits (plus 1 header flit).

9.4.1 Performance under Uniform Traffic

In this section, we evaluate the performance of several routing algorithms by using a uniform distribution for message destinations.

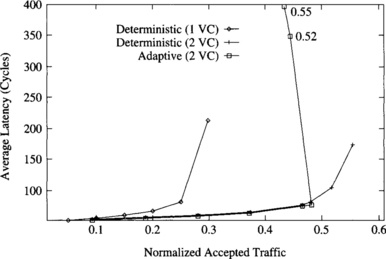

Deterministic versus Adaptive Routing

Figure 9.2 shows the average message latency versus normalized accepted traffic on a 2-D mesh when using a uniform distribution for message destinations. The graph shows the performance of deterministic routing with one and two virtual channels and of fully adaptive routing (with two virtual channels). As can be seen, the use of two virtual channels almost doubles the throughput of the deterministic routing algorithm. The main reason is that when messages block, channel bandwidth is not wasted because other messages are allowed to use that bandwidth. Therefore, adding a few virtual channels reduces contention and increases channel utilization. The adaptive algorithm achieves 88% of the throughput achieved by the deterministic algorithm with the same number of virtual channels. However, latency is almost identical, being slightly lower for the adaptive algorithm. So, the additional flexibility of fully adaptive routing is not able to improve performance when traffic is uniformly distributed. The reason is that the network is almost uniformly loaded. Additionally, meshes are not regular, and adaptive algorithms tend to concentrate traffic in the central part of the network bisection, thus reducing channel utilization in the borders of the mesh.

Figure 9.2 Average message latency versus normalized accepted traffic on a 16 × 16 mesh for a uniform distribution of message destinations.

It should be noted that there is a small performance degradation when the adaptive algorithm reaches the saturation point. If the injection rate is sustained at this point, latency increases considerably while accepted traffic decreases. This behavior is typical of routing algorithms that allow cyclic dependencies between channels and will be studied in Section 9.9.

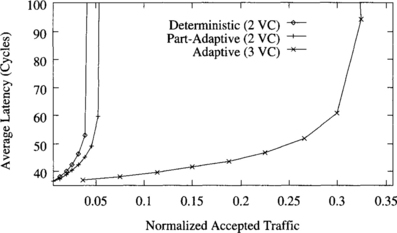

Figure 9.3 shows the average message latency versus normalized accepted traffic on a 3-D mesh when using a uniform distribution for message destinations. This graph is quite similar to the one for 2-D meshes. However, there are some significant differences. The advantages of using two virtual channels in the deterministic algorithm are more noticeable on 3-D meshes. In this case, throughput is doubled. Also, the fully adaptive algorithm achieves the same throughput as the deterministic algorithm with the same number of virtual channels. The latency reduction achieved by the fully adaptive routing algorithm is also more noticeable on 3-D meshes. The reason is that messages have an additional channel to choose from at most intermediate nodes. Again, there is some performance degradation when the adaptive algorithm reaches the saturation point. This degradation is more noticeable than on 2-D meshes.

Figure 9.3 Average message latency versus normalized accepted traffic on an 8 × 8 × 8 mesh for a uniform distribution of message destinations.

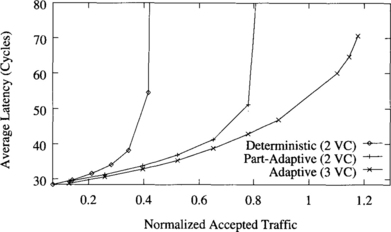

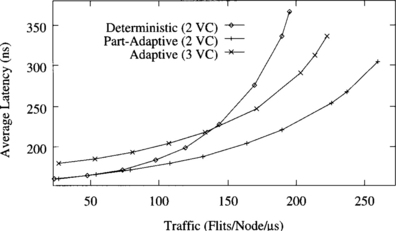

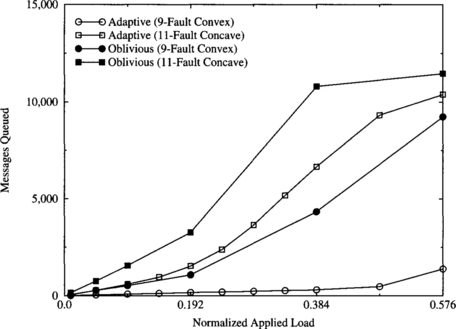

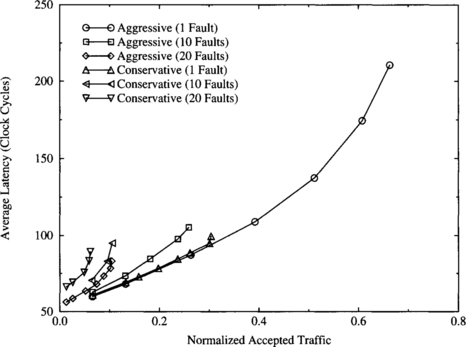

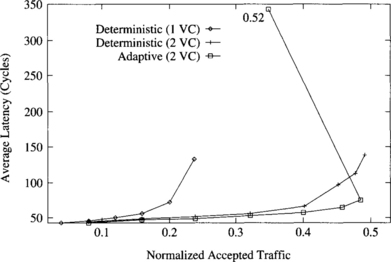

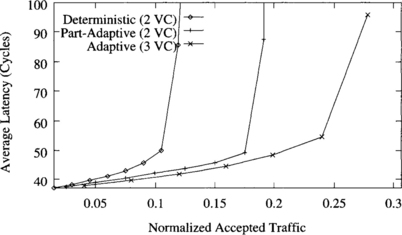

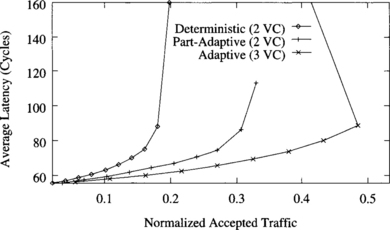

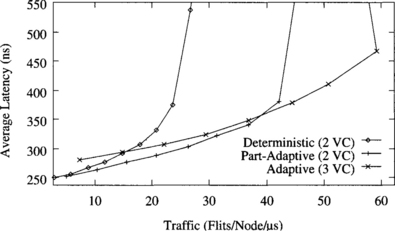

Figure 9.4 shows the average message latency versus normalized accepted traffic on a 2-D torus when using a uniform distribution for message destinations. The graph shows the performance of deterministic routing with two virtual channels, partially adaptive routing with two virtual channels, and fully adaptive routing with three virtual channels.

Figure 9.4 Average message latency versus normalized accepted traffic on a 16 × 16 torus for a uniform distribution of message destinations.

Both partially adaptive and fully adaptive algorithms considerably increase performance over the deterministic one. The partially adaptive algorithm increases throughput by 56%. The reason is that channel utilization is unbalanced in the deterministic routing algorithm. However, the partially adaptive algorithm allows most messages to choose between two virtual channels instead of one, therefore reducing contention and increasing channel utilization. Note that the additional flexibility is achieved without increasing the number of virtual channels. The fully adaptive algorithm increases throughput over the deterministic one by a factor of 2.5. This considerable improvement is mainly due to the ability to cross dimensions in any order. Unlike meshes, tori are regular topologies. So, adaptive algorithms are able to improve channel utilization by distributing traffic more uniformly across the network. Partially adaptive and fully adaptive algorithms also achieve a reduction in message latency with respect to the deterministic one for the full range of network load. Similarly, the fully adaptive algorithm reduces latency with respect to the partially adaptive one. However, performance degradation beyond the saturation point reduces accepted traffic to 55% of its maximum value.

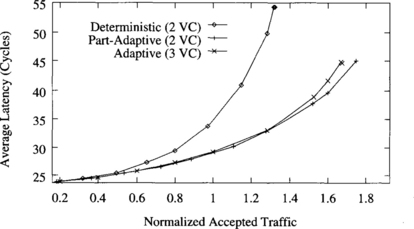

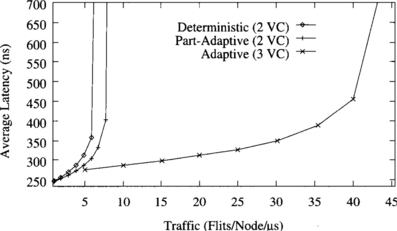

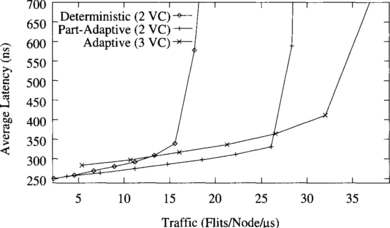

Figure 9.5 shows the average message latency versus normalized accepted traffic on a 3-D torus when using a uniform distribution for message destinations. In addition to the routing algorithms analyzed in Figure 9.4, this graph also shows the performance of the partially adaptive routing algorithm with three virtual channels. Similarly to meshes, adaptive routing algorithms perform comparatively better on a 3-D torus than on a 2-D torus. In this case, the partially adaptive and fully adaptive algorithms increase throughput by factors of 1.7 and 2.6, respectively, over the deterministic one. Latency reduction is also more noticeable than on a 2-D torus. This graph also shows that adding one virtual channel to the partially adaptive algorithm does not improve performance significantly. Although throughput increases by 18%, latency is also increased. The reason is that the partially adaptive algorithm with two virtual channels already allows the use of two virtual channels to most messages, therefore allowing them to share channel bandwidth. So, adding another virtual channel has a small impact on performance. The effect of adding virtual channels will be analyzed in more detail in Section 9.7.1. This result is similar for other traffic distributions. Therefore, in what follows we will only consider the partially adaptive algorithm with two virtual channels. This result also confirms that the improvement achieved by the fully adaptive algorithm is mainly due to the ability to cross dimensions in any order.

Figure 9.5 Average message latency versus normalized accepted traffic on an 8 × 8 × 8 torus for a uniform distribution of message destinations.

The relative behavior of deterministic and adaptive routing algorithms on 2-D and 3-D meshes and tori when message destinations are uniformly distributed is similar for other traffic distributions. In what follows, we will only present simulation results for a single topology. We have chosen the 3-D torus because most performance results published up to now focus on meshes. So, unless otherwise stated, performance results correspond to a 512-node 3-D torus.

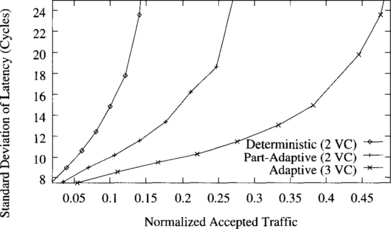

Figure 9.6 shows the standard deviation of latency versus normalized accepted traffic on a 3-D torus when using a uniform distribution for message destinations. The scale for the Y-axis has been selected to make differences more visible. As can be seen, a higher degree of adaptivity also reduces the deviation with respect to the mean value. The reason is that adaptive routing considerably reduces contention at intermediate nodes, making latency more predictable. This occurs in all the simulations we run. So, in what follows, we will only present graphs for the average message latency.

Figure 9.6 Standard deviation of latency versus normalized accepted traffic on an 8 × 8 × 8 torus for a uniform distribution of destinations.

In Figure 9.5, latency is measured from when a message is injected into the network. It does not consider queuing time at the source node. When queuing time is considered, latency should not differ significantly unless the network is close to saturation. When the network is close to saturation, queuing time increases considerably. Figure 9.7 shows the average message latency versus normalized accepted traffic for the partially adaptive algorithm on a 3-D torus. This figure shows that latency is not affected by queuing time unless the network is close to saturation.

Deadlock Avoidance versus Deadlock Recovery

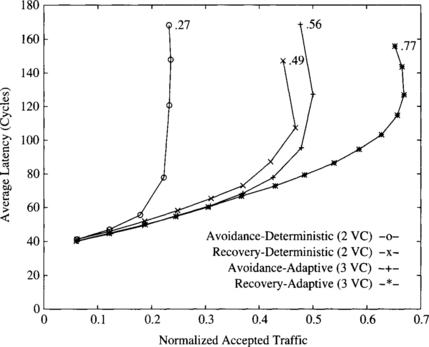

Figure 9.8 plots the average message latency versus normalized accepted traffic for deadlock-recovery-based and avoidance-based deterministic and adaptive routing algorithms. The simulations are based on a 3-D torus (512 nodes) with uniform traffic distribution and 16-flit messages. Each node has a single injection and delivery channel. The timeout used for deadlock detection is 25 cycles. The recovery-based deterministic routing algorithm with two virtual channels is able to use both of the virtual channels without restriction and is therefore able to achieve a 100% improvement in throughput over avoidance-based deterministic routing. Avoidance-based fully adaptive routing is able to achieve a slightly higher throughput and lower latency than recovery-based deterministic routing when using an additional virtual channel. Note that this algorithm allows unrestricted adaptive routing on only one of its three virtual channels. By freely using all three virtual channels, recovery-based true fully adaptive routing is able to achieve a 34% higher throughput than its avoidance-based counterpart.

Figure 9.8 Average message latency versus normalized accepted traffic on an 8 × 8 × 8 torus for a uniform distribution of message destinations.

These results show the potential improvement that can be achieved by using deadlock recovery techniques like Disha to handle deadlocks. It should be noted, however, that a single injection/delivery channel per node has been used in the simulations. If the number of injection/delivery channels per node is increased, the additional traffic injected into the network increases the probability of deadlock detection at saturation, and deadlock buffers are unable to recover from deadlock fast enough. Similarly, when messages are long, deadlock buffers are occupied for a long time every time a deadlock is recovered from, thus degrading performance considerably when the network reaches saturation. In this case, avoidance-based adaptive routing algorithms usually achieve better performance than recovery-based algorithms. This does not mean that recovery-based algorithms are not useful as general-purpose routing algorithms. Simply, currently available techniques are not able to recover from deadlock fast enough when messages are long or when several injection/delivery channels per node are used. The main reason is that currently available deadlock detection techniques detect many false deadlocks, therefore saturating the bandwidth provided by the deadlock buffers. However, this situation may change when more powerful techniques for deadlock detection are developed. In what follows, we will only analyze avoidance-based routing algorithms.

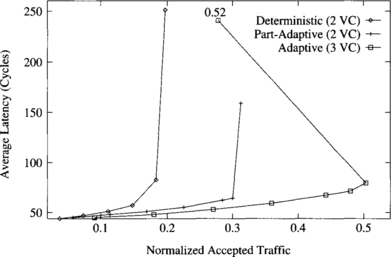

9.4.2 Performance under Local Traffic

Figure 9.9 shows the average message latency versus normalized accepted traffic when messages are sent locally. In this case, message destinations are uniformly distributed inside a cube centered at the source node with each side equal to four channels.

The partially adaptive algorithm doubles throughput with respect to the deterministic one when messages are sent locally. The fully adaptive algorithm performs even better, reaching a throughput three times higher than the deterministic algorithm and 50% higher than the partially adaptive algorithm. Latency is also smaller for the full range of accepted traffic.

When locality increases even more, the benefits of using adaptive algorithms are smaller because the distance between source and destination nodes are short, and the number of alternative paths is much smaller. Figure 9.10 shows the average message latency versus normalized accepted traffic when messages are uniformly distributed inside a cube centered at the source node with each side equal to two channels. In this case, partially and fully adaptive algorithms perform almost the same. All the improvement with respect to the deterministic algorithm comes from a better utilization of virtual channels.

9.4.3 Performance under Nonuniform Traffic

As stated in [73], adaptive routing is especially interesting when traffic is not uniform. Figures 9.11 and 9.12 compare the performance of routing algorithms for the bit reversal and perfect shuffle communication patterns, respectively. In both cases, the deterministic and partially adaptive algorithms achieve poor performance because both of them offer a single physical path for every source/destination pair.

Figure 9.11 Average message latency versus normalized accepted traffic for the bit reversal traffic pattern.

Figure 9.12 Average message latency versus normalized accepted traffic for the perfect shuffle traffic pattern.

The fully adaptive algorithm increases throughput by a factor of 2.25 with respect to the deterministic algorithm when using the perfect shuffle communication pattern, and it increases throughput by a factor of 8 in the bit reversal communication pattern. Standard deviation of message latency is also much smaller for the fully adaptive algorithm. Finally, note that there is no significant performance degradation when the fully adaptive algorithm reaches the saturation point, especially for the perfect shuffle communication pattern.

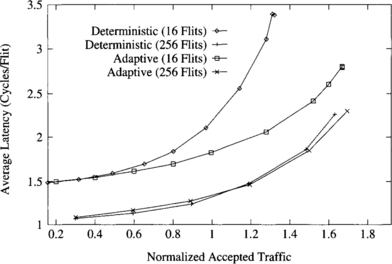

9.5 Effect of Message Length

In this section we analyze the effect of message length on performance. We will only consider the traffic patterns for which the adaptive algorithm behaves more differently: uniform distribution and very local traffic (side = 2). Figures 9.13 and 9.14 show the average message latency divided by message length for uniform and local traffic, respectively. For the sake of clarity, plots only show the behavior of deterministic and fully adaptive algorithms with short (16-flit) and long (256-flit) messages. We also run simulations for other message lengths. For 64-flit messages, plots were close to the plots for 256-flit messages. For 128-flit messages, plots almost overlapped the ones for 256-flit messages. Similar results were obtained for messages longer than 256 flits.

Figure 9.13 Average message latency divided by message length versus normalized accepted traffic for a uniform distribution of message destinations.

Figure 9.14 Average message latency divided by message length versus normalized accepted traffic for local traffic (side = 2).

The average flit latency is smaller for long messages. The reason is that messages are pipelined. Path setup time is amortized among more flits when messages are long. Moreover, data flits can advance faster than message headers because headers have to be routed, waiting for the routing control unit to compute the output channel, and possibly waiting for the output channel to become free. Therefore, when the header reaches the destination node, data flits advance faster, thus favoring long messages. Throughput is also smaller for short messages when the deterministic algorithm is used. However, the fully adaptive algorithm performs comparatively better for short messages, achieving almost the same throughput for short and long messages. Hence, this routing algorithm is more robust against variations in message size. This is due to the ability of the adaptive algorithm to use alternative paths. As a consequence, header blocking time is much smaller than for the deterministic algorithm, achieving a better channel utilization.

9.6 Effect of Network Size

In this section we study the performance of routing algorithms when network size increases. Figure 9.15 shows the average message latency versus normalized accepted traffic on a 16-ary 3-cube (4,096 nodes) when using a uniform distribution for message destinations.

Figure 9.15 Average message latency versus normalized accepted traffic for a uniform distribution of message destinations. Network size = 4K nodes.

The shape of the plots and the relative behavior of routing algorithms are similar to the ones for networks with 512 nodes (Figure 9.5). Although normalized throughput is approximately the same, absolute throughput is approximately half the value obtained for 512 nodes. The reason is that there are twice as many nodes in each dimension and the average distance traveled by each message is doubled. Also, bisection bandwidth increases by a factor of 4 while the number of nodes sending messages across the bisection increases by a factor of 8. However, latency only increases by 45% on average, clearly showing the advantages of message pipelining across the network. In summary, scalability is acceptable when traffic is uniformly distributed. However, the only way to make networks really scalable is by exploiting communication locality.

9.7 Impact of Design Parameters

This section analyzes the impact of several design parameters on network performance: number of virtual channels, number of ports, and buffer size.

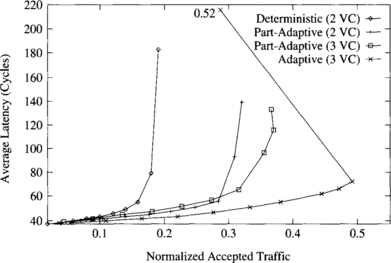

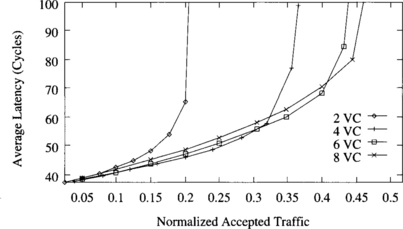

9.7.1 Effect of the Number of Virtual Channels

Splitting each physical channel into several virtual channels increases the number of routing choices, allowing messages to pass blocked messages. On the other hand, flits from several messages are multiplexed onto the same physical channel, slowing down both messages. The effect of increasing the number of virtual channels has been analyzed in [72] for deterministic routing algorithms on a 2-D mesh topology.

In this section, we analyze the effect of increasing the number of virtual channels on all the algorithms under study, using a uniform distribution of message destinations. As in [72], we assume that the total buffer capacity associated with each physical channel is kept constant and is equal to 16 flits (15 flits and 18 flits for three and six virtual channels, respectively).

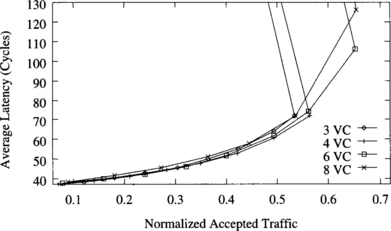

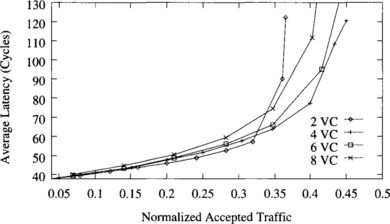

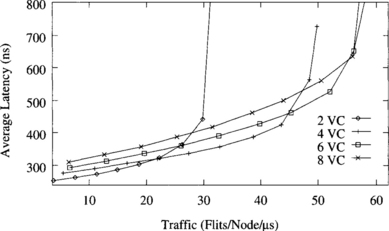

Figure 9.16 shows the behavior of the deterministic algorithm with two, four, six, and eight virtual channels per physical channel. Note that the number of virtual channels for this algorithm must be even. The higher the number of virtual channels, the higher the throughput. However, the highest increment is produced when changing from two to four virtual channels. Also, latency slightly increases when adding virtual channels. These results are very similar to the ones obtained in [72]. The explanation is simple: Adding the first few virtual channels allows messages to pass blocked messages, increasing channel utilization and throughput. Adding more virtual channels does not increase routing flexibility considerably. Moreover, buffer size is smaller, and blocked messages occupy more channels. As a result, throughput increases by a small amount. Adding virtual channels also has a negative effect [88]. Bandwidth is shared among several messages. However, bandwidth sharing is not uniform. A message may be crossing several physical channels with different degrees of multiplexing. The more multiplexed channel becomes a bottleneck, slightly increasing latency.

Figure 9.16 Effect of the number of virtual channels on the deterministic algorithm. Plots show the average message latency versus normalized accepted traffic for a uniform distribution of message destinations.

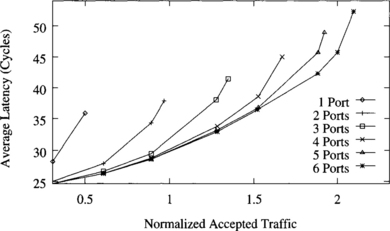

Figure 9.17 shows the behavior of the partially adaptive algorithm with two, four, six, and eight virtual channels. In this case, adding two virtual channels increases throughput by a small amount. However, adding more virtual channels increases latency and even reduces throughput. Moreover, the partially adaptive algorithm with two and four virtual channels achieves almost the same throughput as the deterministic algorithm with four and eight virtual channels, respectively. Note that the partially adaptive algorithm is identical to the deterministic one, except that it allows most messages to share all the virtual channels. Therefore, it effectively allows the same degree of channel multiplexing using half the number of virtual channels as the deterministic algorithm. When more than four virtual channels are used, the negative effects of channel multiplexing mentioned above outweigh the benefits.

Figure 9.17 Effect of the number of virtual channels on the partially adaptive algorithm. Plots show the average message latency versus normalized accepted traffic for a uniform distribution of message destinations.

Figure 9.18 shows the behavior of the fully adaptive algorithm with three, four, six, and eight virtual channels. Note that two virtual channels are used for deadlock avoidance and the remaining channels are used for fully adaptive routing. In this case, latency is very similar regardless of the number of virtual channels. Note that the selection function selects the output channel for a message in such a way that channel multiplexing is minimized. As a consequence, channel multiplexing is more uniform and channel utilization is higher, obtaining a higher throughput than the other routing algorithms. As indicated in Section 9.4.1, throughput decreases when the fully adaptive algorithm reaches the saturation point. This degradation can be clearly observed in Figure 9.18. Also, as indicated in [91], it can be seen that increasing the number of virtual channels increases throughput and even removes performance degradation.

9.7.2 Effect of the Number of Ports

Network hardware has become very fast. In some cases, network performance may be limited by the bandwidth available at the source and destination nodes to inject and deliver messages, respectively. In this section, we analyze the effect of that bandwidth. As fully adaptive algorithms achieve a higher throughput, this issue is more critical when adaptive routing is used. Therefore, we will restrict our study to fully adaptive algorithms. Injection and delivery channels are usually referred to as ports. In this study, we assume that each port has a bandwidth equal to the channel bandwidth.

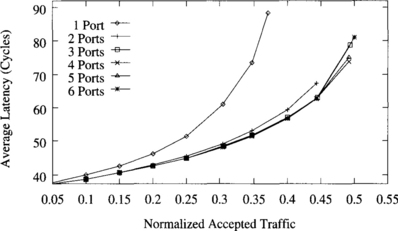

Figure 9.19 shows the effect of the number of ports for a uniform distribution of message destinations. For the sake of clarity, we removed the part of the plots corresponding to the performance degradation of the adaptive algorithm close to saturation. As can be seen, the network interface is a clear bottleneck when using a single port. Adding a second port decreases latency and increases throughput considerably. Adding a third port increases throughput by a small amount, and adding more ports does not modify the performance. It should be noted that latency does not consider source queuing time. When a single port is used, latency is higher because messages block when the header reaches the destination node, waiting for a free port. Those blocked messages remain in the network, therefore reducing channel utilization and throughput.

Figure 9.19 Effect of the number of ports for a uniform distribution of message destinations. Plots show the average message latency versus normalized accepted traffic.

As can be expected, more bandwidth is required at the network interface when messages are sent locally. Figure 9.20 shows the effect of the number of ports for local traffic with side = 2 (see Section 9.4.2). In this case, the number of ports has a considerable influence on performance. It has a stronger impact on throughput than the routing algorithm or even the topology used. The higher the number of ports, the higher the throughput. However, as the number of ports increases, adding more ports has a smaller impact on performance. Therefore, in order to avoid mixing the effect of different design parameters, we run all the simulations in the remaining sections of this chapter by using four ports.

Figure 9.20 Effect of the number of ports for local traffic (side = 2). Plots show the average message latency versus normalized accepted traffic.

Taking into account that the only way to make parallel machines really scalable is by exploiting locality, the effect of the number of ports is extremely important. However, most current multicomputers have a single port. In most cases, the limited bandwidth at the network interface does not limit the performance. The reason is that there is an even more important bottleneck in the network interface: the software messaging layer (see Section 9.12). The latency of the messaging layer reduces network utilization, hiding the effect of the number of ports. However, the number of ports may become the bottleneck in distributed, shared-memory multiprocessors.

9.7.3 Effect of Buffer Size

In this section, we analyze the effect of buffer size on performance. Buffers are required to allow continuous flit injection at the source node in the absence of contention, therefore hiding routing time and allowing windowing protocols between adjacent routers. Also, if messages are short enough, using deeper buffers allows messages to occupy a smaller number of channels when contention arises and buffers are filled. As a consequence, contention is reduced and throughput should increase. Finally, buffers are required to hold flits while a physical channel is transmitting flits from other buffers (virtual channels).

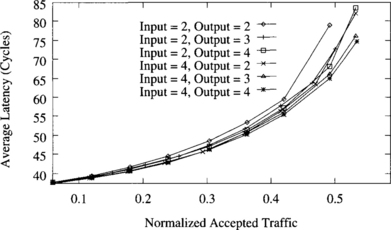

If buffers are kept small, buffer size does not affect clock frequency. However, using very deep buffers may slow down clock frequency, so there is a trade-off. Figure 9.21 shows the effect of input and output buffer size on performance using the fully adaptive routing algorithm with three virtual channels. In this case, no windowing protocol has been implemented. Minimum buffer size is two flits because flit transmission across physical channels is asynchronous. Using smaller buffers would produce bubbles in the message pipeline.

Figure 9.21 Effect of input and output buffer size. Plots show the average message latency versus normalized accepted traffic for the fully adaptive routing algorithm with three virtual channels using a uniform distribution of message destinations.

As expected, the average message latency decreases when buffer size increases. However, the effect of buffer size on performance is small. The only significant improvement occurs when the total flit capacity changes from 4 to 5 flits. Adding more flits to buffer capacity yields diminishing returns as buffer size increases. Increasing input buffer size produces almost the same effect as increasing output buffer size, as far as total buffer size remains the same. The reason is that there is a balance between the benefits of increasing the size of each buffer. On the one hand, increasing the input buffer size allows more data flits to make progress if the routing header is blocked for some cycles until an output channel is available. On the other hand, increasing the output buffer size allows more data flits to cross the switch while the physical channel is assigned to other virtual channels.

Moreover, when a message blocks, buffers are filled. In this case, it does not matter how total buffer capacity is split among input and output buffers. The only important issue is whether buffers are deep enough to allow the blocked message to leave the source node so that some channels are freed.

Note that the plots in Figure 9.21 correspond to short messages (16 flits). We also ran simulations for longer messages. In this case, buffer size has a more noticeable impact on performance. However, increasing buffer capacity does not increase performance significantly if messages are longer than the diameter of the network times the total buffer capacity of a virtual channel. The reason is that a blocked message keeps all the channels it previously reserved regardless of buffer size.

9.8 Comparison of Routing Algorithms for Irregular Topologies

In this section we analyze the performance of the routing algorithms described in Section 4.9 on switch-based networks with randomly generated irregular topologies under uniform traffic. We also study the influence of network size and message length.

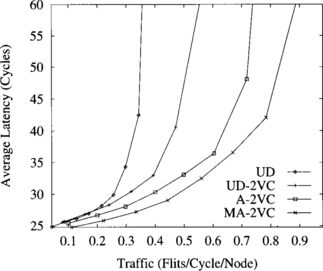

A brief description of the routing algorithms follows. A breadth-first spanning tree on the network graph is computed first using a distributed algorithm. Routing is based on an assignment of direction to the operational links. The up end of each link is defined as (1) the end whose switch is closer to the root in the spanning tree or (2) if both ends are at switches at the same tree level, the end whose switch has the lower ID. The up/down routing algorithm uses the following up/down rule: a legal route must traverse zero or more links in the up direction followed by zero or more links in the down direction. We will refer to this routing scheme as UD. It does not require virtual channels. When virtual channels are used, the first free virtual channel is selected. We will refer to the up/down routing scheme in which physical channels are split into two virtual channels as UD-2VC.

The adaptive routing algorithm proposed in [320] also splits each physical channel into two virtual channels (original and new channels). Newly injected messages can use the new channels following any minimal path, but the original channels can only be used according to the up/down rule. However, once a message reserves one of the original channels, it can no longer reserve any of the new channels again. When a message can choose among new and original channels, a higher priority is given to the new channels at any intermediate switch. We will refer to this routing algorithm as A-2VC.

Finally, an enhanced version of the A-2VC algorithm can be obtained as follows [319]: Newly injected messages can only leave the source switch using new channels belonging to minimal paths and never using original channels. When a message arrives at a switch from another switch through a new channel, the routing function gives a higher priority to the new channels belonging to minimal paths. If all of them are busy, then the routing algorithm selects an original channel belonging to a minimal path (if any). If none of the original channels provides minimal routing, then the original channel that provides the shortest path will be used. We will refer to this routing scheme as MA-2VC, since it provides minimal adaptive routing with two virtual channels.

Unless otherwise stated, simulations were run using the following parameters. Network topology is completely irregular and was generated randomly. However, for the sake of simplicity, we imposed three restrictions to the topologies that can be generated. First, we assumed that there are exactly four nodes (processors) connected to each switch. Also, two neighboring switches are connected by a single link. Finally, all the switches in the network have the same size. We assumed eight-port switches, thus leaving four ports available to connect to other switches. We evaluated networks with a size ranging from 16 switches (64 nodes) to 64 switches (256 nodes). For each network size, several distinct irregular topologies were analyzed. However, the average latency values achieved by each topology for each traffic rate were almost the same. The only differences arose when the networks were heavily loaded, close to saturation. Additionally, the throughput achieved by all the topologies was almost the same. Hence, we only show the results obtained by one of those topologies, chosen randomly. Input and output flit buffers have capacity for 4 flits. Each node has one injection and one delivery channel. For message length, 16-, 64-, and 256-flit messages were considered. Finally, it takes one clock cycle to compute the routing algorithm, to transfer one flit from an input buffer to an output buffer, or to transfer one flit across a physical channel.

Note that we assumed that virtual channel multiplexing can be efficiently implemented. In practice, implementing virtual channels is not trivial because switch-based networks with irregular topology are usually used in the context of networks of workstations. In this environment, link wires may be long, increasing signal propagation delay and making flow control more complex.

Figure 9.22 shows the average message latency versus accepted traffic for each routing scheme on a randomly generated irregular network with 16 switches. Message size is 16 flits. It should be noted that accepted traffic has not been normalized because normalized bandwidth differs from one network to another even for the same size. As can be seen, when virtual channels are used in the up/down routing scheme (UD-2VC), throughput increases by a factor of 1.5. The A-2VC routing algorithm doubles throughput and reduces latency with respect to the up/down routing scheme. The improvement with respect to UD-2VC is due to the additional adaptivity provided by A-2VC. When the MA-2VC routing scheme is used, throughput is almost tripled. Moreover, the latency achieved by MA-2VC is lower than the one for the rest of the routing strategies for the whole range of traffic. The improvement achieved by MA-2VC with respect to A-2VC is due to the use of shorter paths. This explains the reduction in latency as well as the increment in throughput because less bandwidth is wasted in nonminimal paths.

Figure 9.22 Average message latency versus accepted traffic for an irregular network with 16 switches. Message length is 16 flits.

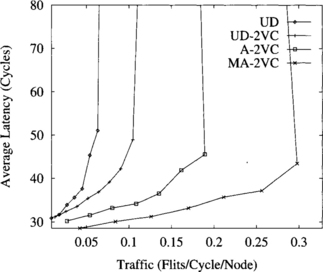

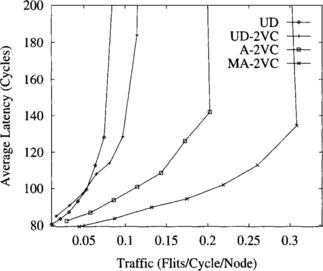

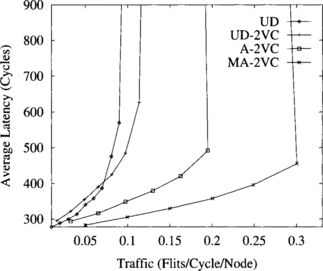

The MA-2VC routing scheme scales very well with network size. Figure 9.23 shows the results obtained on a network with 64 switches. In this network, throughput increases by factors of 4.2 and 2.7 with respect to the UD and UD-2VC schemes, respectively, when using the MA-2VC scheme. Latency is also reduced for the whole range of traffic. However, the factor of improvement in throughput achieved by the A-2VC scheme with respect to UD is only 2.6. Hence, when network size increases, the performance improvement achieved by the MA-2VC scheme also increases because there are larger differences among the minimal distance between any two switches and the routing distance imposed by the up/down routing algorithm.

Figure 9.23 Average message latency versus accepted traffic for an irregular network with 64 switches. Message length is 16 flits.

Figures 9.23, 9.24, and 9.25 show the influence of message size on the behavior of the routing schemes. Message size ranges from 16 to 256 flits. As message size increases, the benefits of using virtual channels become smaller. In particular, the UD-2VC routing scheme exhibits a higher latency than the UD scheme for low to medium network traffic. This is due to the fact that when a long message waits for a channel occupied by a long message, it is delayed. However, when two long messages share the channel bandwidth, both of them are delayed. Also, the UD routing scheme increases throughput by a small amount as message size increases. The routing schemes using virtual channels, and in particular the adaptive ones, achieve a similar performance regardless of message size. This behavior matches the one for regular topologies, as indicated in Section 9.5. These results show the robustness of the UD-2VC, A-2VC, and MA-2VC routing schemes against message size variation. Additionally, the MA-2VC routing scheme achieves the highest throughput and lowest latency for all message sizes.

Figure 9.24 Average message latency versus accepted traffic for an irregular network with 64 switches. Message length is 64 flits.

Figure 9.25 Average message latency versus accepted traffic for an irregular network with 64 switches. Message length is 256 flits.

Finally, it should be noted that the improvement achieved by using the theory proposed in Section 3.1.3 for the design of adaptive routing algorithms is much higher in irregular topologies than in regular ones. This is mainly due to the fact that most paths in irregular networks are nonminimal if those techniques are not used.

9.9 Injection Limitation

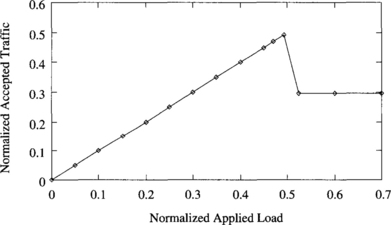

As indicated in previous sections, the performance of the fully adaptive algorithm degrades considerably when the saturation point is reached. Figure 9.26 shows the average message latency versus normalized accepted traffic for a uniform distribution of message destinations. This plot was already shown in Figure 9.5. Note that each point in the plot corresponds to a stable working point of the network (i.e., the network has reached a steady state). Also, note that Figure 9.26 does not represent a function. The average message latency is not a function of accepted traffic (traffic received at destination nodes). Both average message latency and accepted traffic are functions of the applied load.

Figure 9.26 Average message latency versus normalized accepted traffic for the fully adaptive algorithm using four ports and a uniform distribution of message destinations.

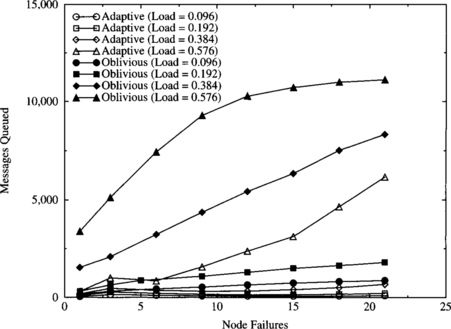

Figure 9.27 shows the normalized accepted traffic as a function of normalized applied load. As can be seen, accepted traffic increases linearly with the applied load until the saturation point is reached. Indeed, they have the same value because each point corresponds to a steady state. However, when the saturation point is reached, accepted traffic decreases considerably. Further increments of the applied load do not modify accepted traffic. Simply, injection buffers at source nodes grow continuously. Also, average message latency increases by an order of magnitude when the saturation point is reached, remaining constant as applied load increases further. Note that latency does not include source queuing time.

Figure 9.27 Normalized accepted traffic as a function of normalized applied load for the fully adaptive algorithm using four ports and a uniform distribution of message destinations.

This behavior typically arises when the routing algorithm allows cyclic dependencies between resources. As indicated in Chapter 3, deadlocks are avoided by using a subset of channels without cyclic dependencies between them to escape from cyclic waiting chains (escape channels). The bandwidth provided by the escape channels should be high enough to drain messages from cyclic waiting chains as fast as they are formed. The speed at which waiting chains are formed depends on network traffic. The worst case occurs when the network is beyond saturation. At this point congestion is very high. As a consequence, the probability of messages blocking cyclically is not negligible. Escape channels should be able to drain at least one message from each cyclic waiting chain fast enough so that those escape channels are free when they are requested by another possibly deadlocked message. Otherwise, performance degrades and throughput is considerably reduced. The resulting accepted traffic at this point depends on the bandwidth offered by the escape channels.

An interesting issue is that the probability of messages blocking cyclically depends not only on applied load. Increasing the number of virtual channels per physical channel decreases that probability. Reducing the number of injection/delivery ports also decreases that probability. However, those solutions may degrade performance considerably. Also, as indicated in Section 9.4.3, some communication patterns do not produce performance degradation. For those traffic patterns, messages do not block cyclically or do it very infrequently.

The best solution consists of designing the network correctly. A good network design should provide enough bandwidth for the intended applications. The network should not work close to the saturation point because contention at this point is high, increasing message latency and decreasing the overall performance. Even if the network reaches the saturation point for a short period of time, performance will not degrade as much as indicated in Figures 9.26 and 9.27. Note that the points beyond saturation correspond to steady states, requiring a sustained message generation rate higher than the one corresponding to saturation. Moreover, the simulation time required to reach a steady state for those points is an order of magnitude higher than for other points in the plot, indicating that performance degradation does not occur immediately after reaching the saturation point. Also, after reaching the saturation point for some period of time, performance improves again when the message generation rate falls below the value at the saturation point.

If the traffic requirements of the intended applications are not known in advance, it is still possible to avoid performance degradation by using simple hardware mechanisms. A very effective solution consists of limiting message injection when network traffic is high. For efficiency reasons, traffic should be estimated locally. Injection can be limited by placing a limit on the size of the buffer on the injection channels [36], by restricting injected messages to use some predetermined virtual channel(s) [73], or by waiting until the number of free output virtual channels at a node is higher than a threshold [220]. This mechanism can be implemented by keeping a count of the number of free virtual channels at each router. When this count is higher than a given threshold, message injection is allowed. Otherwise, messages have to wait at the source queue. Note that these mechanisms have no relationship with the injection limitation mechanism described in Section 3.3.3. Injection limitation mechanisms may produce starvation if the network works beyond the saturation point for long periods of time.

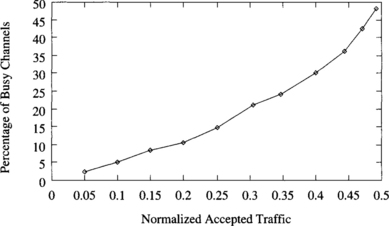

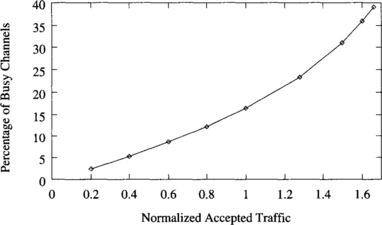

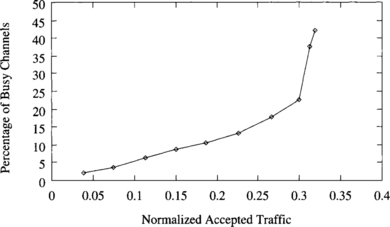

The injection limitation mechanism described above requires defining a suitable threshold for the number of free output virtual channels. Additionally, this threshold should be independent of the traffic pattern. Figures 9.28, 9.29, and 9.30 show the percentage of busy output virtual channels versus normalized accepted traffic for a uniform distribution, local traffic (side = 2), and the bit reversal traffic pattern, respectively. Interestingly enough, the percentage of busy output virtual channels at the saturation point is similar for all the distributions of destinations. It ranges from 40% to 48%. Other traffic patterns exhibit a similar behavior. Since a 3-D torus with three virtual channels per physical channel has 18 output virtual channels per router, seven or eight virtual channels are occupied. Therefore, 10 or 11 virtual channels are free on average at the saturation point. Thus, messages should only be injected if 11 or more virtual channels are free at the current router. The latency/traffic plots obtained for different traffic patterns when injection is limited are almost identical to the ones without injection limitation, except that throughput does not decrease and latency does not increase beyond the saturation point.

Figure 9.28 Percentage of busy output virtual channels versus normalized accepted traffic for a uniform distribution of message destinations.

Figure 9.29 Percentage of busy output virtual channels versus normalized accepted traffic for local traffic (side = 2).

Figure 9.30 Percentage of busy output virtual channels versus normalized accepted traffic for the bit reversal traffic pattern.

Despite the simplicity and effectiveness of the injection limitation mechanism described above, it has not been tried with traffic produced by real applications. Extensive simulations should be run before deciding to include it in a router design. Moreover, if network bandwidth is high enough, this mechanism is not necessary, as indicated above. Nevertheless, new and more powerful injection limitation mechanisms are currently being developed.

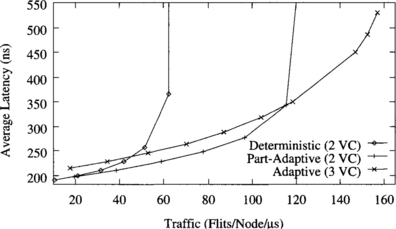

9.10 Impact of Router Delays on Performance

In this section, we consider the impact of router and wire delays on performance, using the model proposed in [57].

9.10.1 A Speed Model

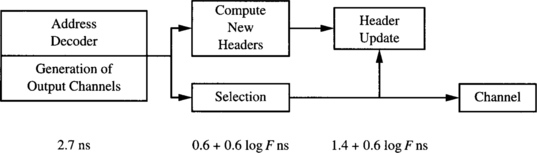

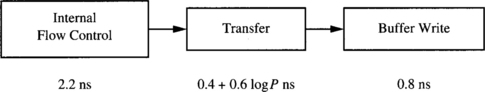

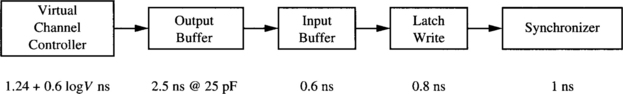

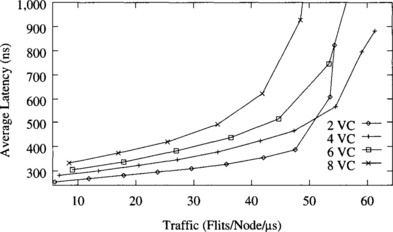

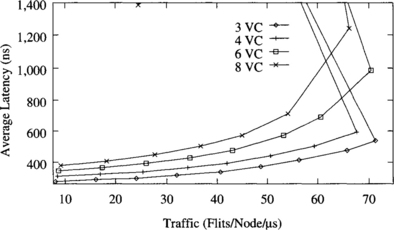

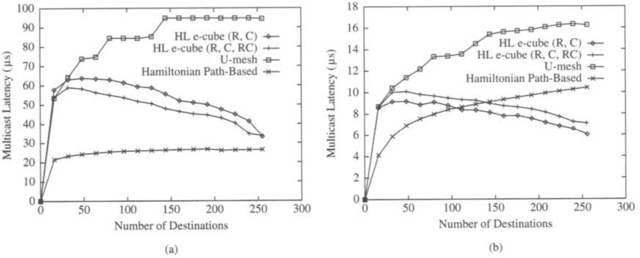

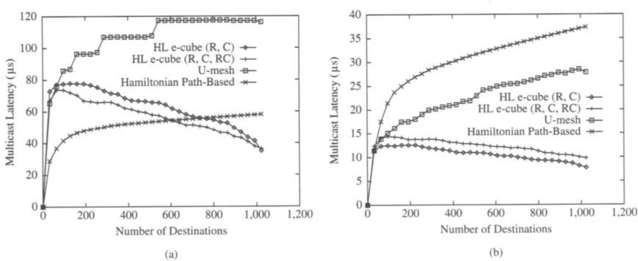

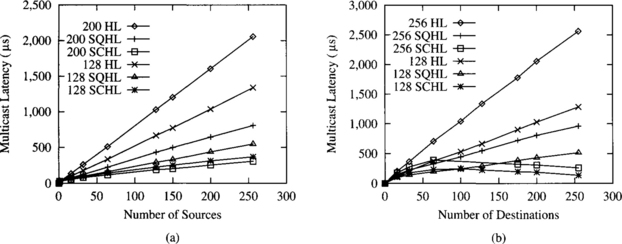

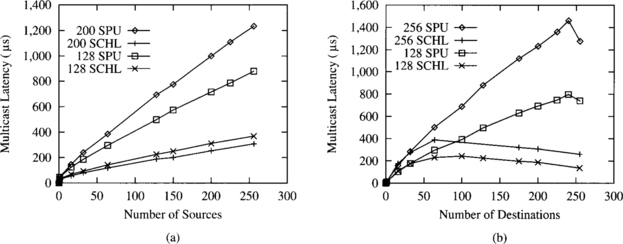

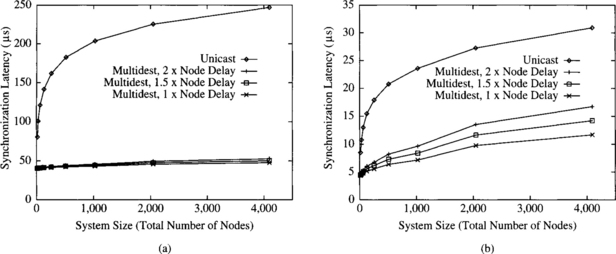

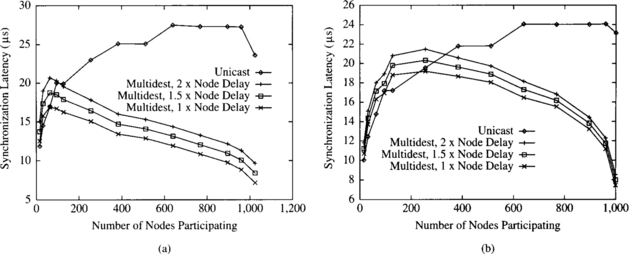

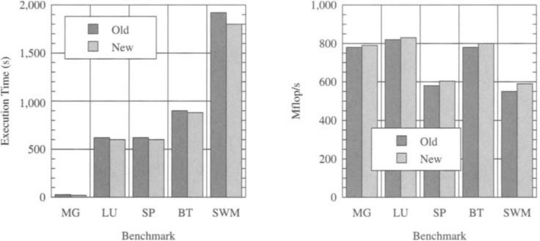

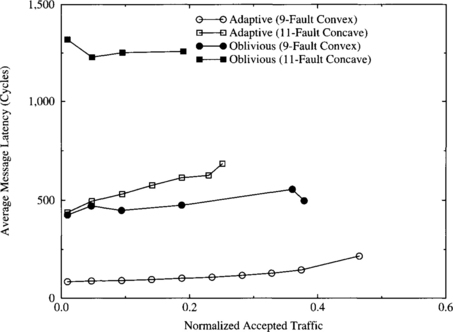

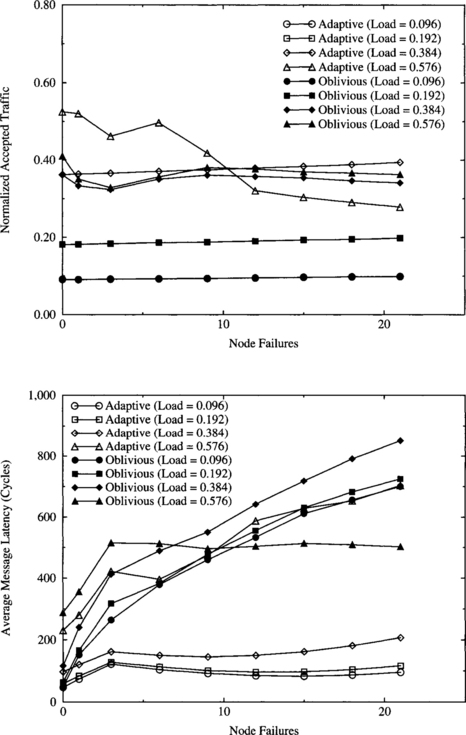

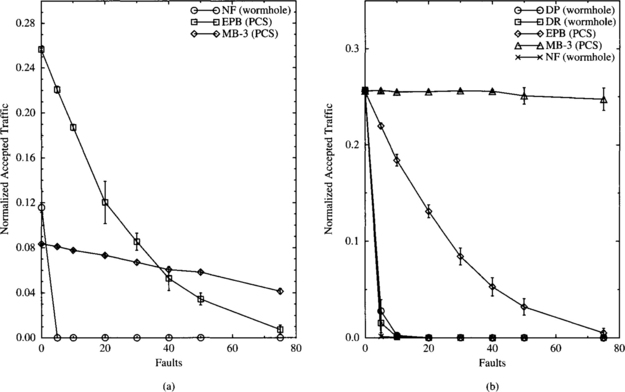

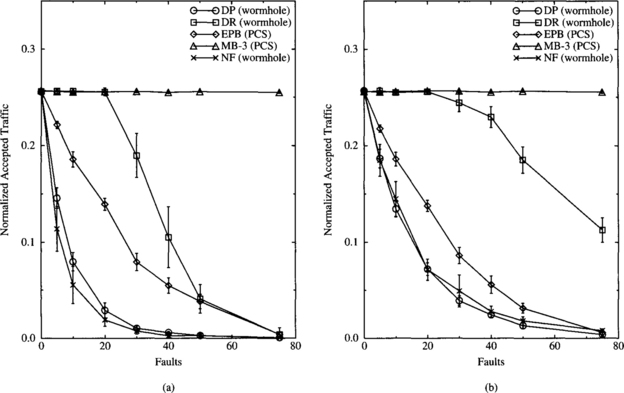

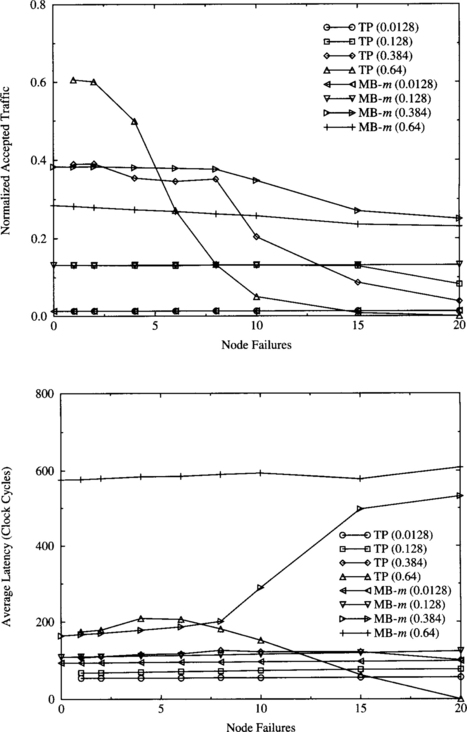

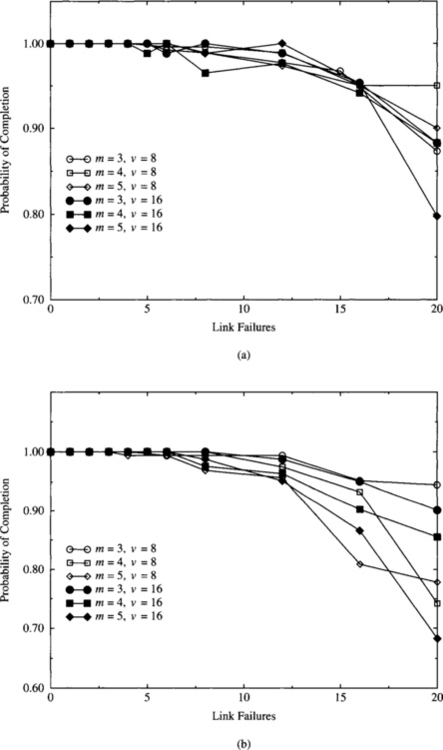

Most network simulators written up to now for wormhole switching work at the flit level. Writing a simulator that works at the gate level or at the transistor level is a complex task. Moreover, execution time would be extremely high, considerably reducing the design space that can be studied. A reasonable approximation to study the effect of design parameters on the performance of the interconnection network consists of modeling the delay of each component of the router. Then, for a given set of design parameters, the delay of each component is computed, determining the critical path and the number of clock cycles required for each operation, and computing the clock frequency for the router. Then, the simulation is run using a flit-level simulator. Finally, simulation results are corrected by using the previously computed clock frequency.