Modeling is the critical process at the heart of all application development. We could easily dedicate a whole book to this topic, so devoting just one chapter to it inevitably leads to many omissions. However, our goal here is to give you a brief introduction to the modeling process and the factors affecting it, and to explain the document-based approach.

In this chapter, we will first look at a conceptual overview of modeling. After that, we will remind ourselves how we model data in relational databases, what affects this process, and what its limitations are. Next, we will look into the document-based modeling approach, the ideas behind it, and its main characteristics. After that, we will address some common doubts you might have while transitioning from relational modeling to document-based modeling. Finally, you will see standard techniques for modeling relationships between documents in a NoSQL database.

Abstraction and Generalization

The British statistician George E. P. Box famously said, “All models are wrong, but some are useful.” This statement holds not only for mathematics and statistics but for every situation in which we need to describe reality. From building mental models for understanding life events to writing invoice-processing applications in C#, you will always need to create a representation of reality.

Software developers write computer programs that describe a particular domain of life and enable users to track real-life entities, their relations, and the various ways in which these entities affect each other. In reality, environments are rarely simple (consisting of few objects); they are usually complex (consisting of many components).

Moreover, reality is not intuitive (easy to grasp) but complicated. In such systems, connections and interactions between objects are not obvious, and many moving parts are highly interconnected. Consequently, every change to one or more entities affects not only those entities but also propagates through the rest of the system. This ripple effect is hard to grasp, and its unforeseen consequences are hard to understand, tame, and describe in a programming language.

Luckily, software developers are not helpless when faced with these challenges: over the years, we have developed many tools and approaches. One of the most important ones is abstraction. When modeling, we narrow our scope to the subdomain that covers only the entities we are interested in. Furthermore, we look at the properties of these entities and isolate the major ones, ignoring all details that do not contribute to the representation of our subdomain. This process is at the heart of abstraction – removing information that does not contribute to our understanding of the subdomain we are modeling. It is very similar to the process of generalization – selecting a specific group of objects, observing their common or shared properties, and then using these properties to describe them.

For example, imagine that we are building an application for a small neighborhood bookstore. The bookstore owner intends to create a members club for customers. Customers could join, fill in their areas of interest and basic personal information, and receive weekly reading recommendations, exclusive discounts, and alerts about new arrivals of interest. Faced with the challenge of building such a service, you would first need to understand the domain, the users, the interactions between them, and the outcomes your application would have to produce. Next, you would model all the entities identified in the system.

Looking at just one of these entities – the customer – reveals an almost endless set of details. Customers have first and last names, email addresses, and dates of birth. But they also have eye color, a favorite perfume, and a favorite restaurant. Returning to our goal, building a book recommendation service, reveals which of these customer attributes are helpful for our effort and which ones we can ignore. After a short conversation with the bookstore owner, we conclude that eye color, perfume, and restaurant choice do not affect book choice, and we decide to omit them from our model.

We can repeatedly apply this mental process to remove, one by one, any attributes irrelevant to our goal. Any model property that does not contribute to our intention will be removed, simplifying our model. This way, our application will contain only relevant data with a narrow scope. Less data means a simpler (less complex) model, which in turn results in less complicated processes to implement. Interestingly, when modeling, we reach a better outcome if we deliberately ignore some attributes of entities. Hence, a less precise and less comprehensive model will be better at describing our domain and serving the needs of our application.

Also, we must be aware that the software we are building and the models we are creating are not goals in themselves. Models are a means of supporting our users. Hence, as real life and circumstances evolve, we should also develop, modify, and expand our applications to support these changes. Our models should describe the present state and support the processes identified in the initial phase of building the application. Still, they must also be amenable to modification so that we can support changes with reasonable effort. Domains will expand, unexpected things will happen in life, and our model will inevitably evolve to keep up with these changes.

Modeling in Relational Databases

A data model is a collection of structures and shapes used to describe and manipulate our data. During the era of relational database dominance, the prevailing data model was the relational data model (RDM). It consists of a set of tables, with each table holding a collection of rows. Every row represents an entity composed of columns (cells). A column may contain a reference to a row in the same or another table, implementing the concept of a relationship between two entities.

Figure: Purchase Order Document (a purchase order for the Rattlesnake grocery, with vendor and ship-to sections and a table of description, quantity, unit price, and total)

Figure: Relational Database Model for Order (two tables, orders and order_lines)

As you can see, this relational database model provides us with the means to store order documents. Every row is a collection of simple values. Rows are incapable of representing anything complex – you cannot store lists or nested structures. For that reason, we could not keep order lines, which are an integral part of the Order, inside the order row itself. In other words, the lack of advanced capabilities forced us to split a complex entity like Order into two tables: orders and order_lines.

As we have just seen, RDBMS tables are very similar to Excel sheets – row after row of cells containing simple scalar values, such as numbers, strings, or dates. An Order row cannot persist a list of Order Lines, so we had to introduce one more table to hold Order Lines and establish a relationship between these two tables to denote the parent-child connection.

To summarize, we had to:

1. Create table orders.
2. Create table order_lines.
3. Define an ownership connection between these two tables.
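The three steps above can be sketched with Python’s built-in sqlite3 module. This is a minimal illustration, not the book’s schema: the column names and sample rows are hypothetical. It shows how a single paper document ends up spread across two tables and has to be reassembled with a join.

```python
import sqlite3

# In-memory SQLite database, used only for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Step 1: the order header; a row can hold only simple scalar values.
    CREATE TABLE orders (
        id      INTEGER PRIMARY KEY,
        company TEXT NOT NULL
    );

    -- Step 2: the order lines need their own table, because a row cannot hold a list.
    -- Step 3: order_id is the ownership connection back to the parent order.
    CREATE TABLE order_lines (
        id         INTEGER PRIMARY KEY,
        order_id   INTEGER NOT NULL REFERENCES orders(id),
        product    TEXT NOT NULL,
        quantity   INTEGER NOT NULL,
        unit_price REAL NOT NULL
    );
    """
)

# Hypothetical sample data: one purchase order with two lines.
conn.execute("INSERT INTO orders (id, company) VALUES (1, 'Rattlesnake Grocery')")
conn.executemany(
    "INSERT INTO order_lines (order_id, product, quantity, unit_price) VALUES (?, ?, ?, ?)",
    [(1, "Chai", 10, 18.0), (1, "Chang", 5, 19.0)],
)
conn.commit()

# Reading the order back as one "document" requires joining both tables.
rows = conn.execute(
    """
    SELECT o.id, o.company, l.product, l.quantity, l.unit_price
    FROM orders AS o
    JOIN order_lines AS l ON l.order_id = o.id
    ORDER BY l.id
    """
).fetchall()

for row in rows:
    print(row)
```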

We started with a document that exists as a single sheet of paper in real life. To model this paper document in our relational database, we have to create two entities and establish a connection. This separation of Order from its Lines is forced upon us by the technical limitations of the RDBMS itself.

Data Encapsulation Challenge

One common problem with relational databases is their overexposure to direct access. The database should be seen as a means of persisting entities from memory, and a thick domain layer should shield it from direct access. That domain layer can and should implement domain rules, both static and dynamic ones. The database structure should not be exposed or directly available to the clients using your application. The integrity of your persisted data has many levels, and these should be checked by your domain business logic, implemented in your programming language.

Modeling in NoSQL Databases

This section will look at the genesis of the modeling approach with NoSQL databases, techniques that can represent your data, and some of the best practices.

Looking at programming languages, you can express your ideas in any language – it is possible, indeed, to develop an ERP system in assembly. But assembly is not the best tool for that task – as years passed, our industry developed better tools for developing business applications.

The same goes for databases – you can model the same domain in relational databases and in NoSQL/document databases. There are no technical limitations. However, many developers find document-oriented modeling more natural than relational modeling. Document-oriented models are closer to the real-life documents being modeled, and they require fewer adjustments dictated by the technical aspects of your database.

Your habits will be the single biggest obstacle when working with document databases. For years, if not decades, you did things one way, and now, all of a sudden, you need to set aside the best practices you know and start following a new set of best practices in a leap of faith.

Relational databases are based on tables and relationships between them, and when modeling for an RDBMS, you follow that structure. We can model everything as a set of tables in a relational database, but the same thing can also be modeled as a document in a NoSQL database. The real challenge is creating a model that enables you to leverage the features offered by the concrete database you are using. A suitable document data model can make your life easier and let you almost completely forget about the database – your database can become a “boring” component of your development cycle.

So, the first rule of good document-oriented modeling is “Do not apply relational database modeling techniques to NoSQL.” Relational and document databases are two different worlds. They apply different paradigms, have different approaches, and have completely different philosophies.

If you fail to follow this advice, you will end up with an inappropriate and suboptimal model. That can make your life harder and make you fight your database even over simple tasks. You should not treat your database as your enemy; treat it as your ally and friend instead. And friends are there to help.

JSON Documents

The first essential characteristic of NoSQL document databases is the format in which they store documents. For most NoSQL databases, JSON is either a native or a supported format. Specified in the early 2000s by Douglas Crockford, JSON stands for JavaScript Object Notation. This format is textual and standardized by ECMA, and even though it was derived from JavaScript, JSON is language-independent.

Unlike with relational databases, no technical limitation forces us to split this Order into multiple entities. Furthermore, JSON is an industry-standard format for data exchange. All major languages, like C#, Java, or Python, support serializing objects to JSON and deserializing JSON back into objects.
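As a rough illustration of that round trip, the sketch below uses Python’s standard json and dataclasses modules. The Order and OrderLine classes and their field names are hypothetical stand-ins for the Order listing; the point is that the lines stay nested inside the order, with no second table or join.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class OrderLine:
    product: str
    quantity: int
    price_per_unit: float


@dataclass
class Order:
    company: str
    # The lines are simply a nested list inside the order.
    lines: List[OrderLine] = field(default_factory=list)


order = Order("Rattlesnake Grocery", [OrderLine("Chai", 10, 18.0), OrderLine("Chang", 5, 19.0)])

# Serialization: asdict() recursively converts nested dataclasses (including
# the list of lines) into dicts and lists, which json.dumps turns into text.
document = json.dumps(asdict(order), indent=2)
print(document)

# Deserialization: parse the JSON text and rebuild the objects.
data = json.loads(document)
restored = Order(data["company"], [OrderLine(**line) for line in data["lines"]])
```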

Hence, when modeling the data domain for a NoSQL database, our goal is to develop a set of JSON documents describing our subdomain. In the next section, we will see how to approach this nontrivial task.

Properties of Well-Modeled Documents

There is no algorithm you could apply to reach a perfect model. Modeling skills are gained through experience over many projects. You will produce a model, implement it, and then evaluate it over time, as your application runs and change requests arrive. Also, there is never just one way to model things. There are many variants, and you can never label any of them as “proper,” “best,” “most appropriate,” or “by the book.” Many factors will affect this process – you will have to think about performance, storage allocation, types of queries, business rules, and the directions in which your application will most likely evolve. Still, well-modeled documents share three properties:

Independent: A document should exist separately from any other document.

Isolated: A document should be able to change independently of other documents.

Coherent: A document should be legible on its own, without referencing other documents.

JSON Order Document

Now, let us consider whether we can model an order line as a separate document from the perspective of our first goal – independence. Is this order line independent? Can it have a meaningful existence of its own? Thinking further, have you ever seen an order line printed on paper without any other details? The answer is clear – an order line cannot exist independently; it has no meaning outside the scope of its parent Order. Modeling Order Line as a separate document would result in JSON devoid of substance.

In this example, we decided to expand the embedded company information to keep track of the total number of orders. Consequently, every time we create a new Order, we need to update all existing orders for the same Company and increase the TotalOrders value by one. Such an expanded model is not isolated anymore. Changes we make within one Document’s scope generate a ripple effect, resulting in mandatory changes to one or more other documents.
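To make the ripple effect concrete, here is a small sketch that uses a plain Python dict as a hypothetical document store. The TotalOrders field mirrors the expanded model described above; the function name and document layout are illustrative assumptions. It counts how many documents must be written each time a new order is created.

```python
from typing import Dict, List

# Hypothetical in-memory "database": document ID -> document.
db: Dict[str, dict] = {}


def create_order_non_isolated(order_id: str, company: str) -> int:
    """Create an order whose embedded company info tracks TotalOrders.

    Returns the number of documents that had to be written.
    """
    existing = [doc for doc in db.values() if doc["Company"]["Name"] == company]
    new_total = len(existing) + 1
    # Ripple effect: every existing order of this company must be rewritten.
    for doc in existing:
        doc["Company"]["TotalOrders"] = new_total
    db[order_id] = {"Company": {"Name": company, "TotalOrders": new_total}}
    return len(existing) + 1


writes: List[int] = [
    create_order_non_isolated(f"orders/{i}", "Rattlesnake Grocery") for i in range(1, 4)
]
print(writes)  # [1, 2, 3]: each new order touches one more document than the last
```

In an isolated model, creating an order would always write exactly one document, no matter how many orders the company already has.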

In this section, we learned what the three properties of well-modeled documents are. After that, we checked our initial Order model against these principles and finally corrected it into the final version. This final Order document holds complete information about the Order (coherence), and any changes we make to it will not force us to update other documents (isolation). Also, the independent nature of this model gives us the freedom to print a paper version and send it in an envelope – the recipient will be able to examine and understand it without needing to ask additional questions.

We achieved one more thing with this model – we created a temporal snapshot model. Our Document captures all relevant information as it was at the time of document creation. For example, we recorded the prices of both products – if these products become cheaper or more expensive after the Order is created, that change will not affect our Document. Also, the shipping address was verified to be valid at the time. If it changes in the future, e.g., if the Company relocates, we will still have a precise record that the shipment went to Albuquerque, USA, and not to some other city or country. The importance of this protection against data corruption cannot be overstated.
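A minimal sketch of how such a snapshot might be taken, assuming a hypothetical in-memory product catalog and illustrative property names: when a line is added, the current product name and price are copied into the order rather than looked up later.

```python
# Hypothetical product catalog: product ID -> current product data.
products = {"products/1": {"Name": "Chai", "PricePerUnit": 18.0}}


def add_line(order: dict, product_id: str, quantity: int) -> None:
    product = products[product_id]
    # Snapshot: copy the name and price as they are *now*. Later catalog
    # changes will not alter this order line.
    order["Lines"].append(
        {
            "Product": product_id,
            "ProductName": product["Name"],
            "PricePerUnit": product["PricePerUnit"],
            "Quantity": quantity,
        }
    )


order = {"ShipTo": {"City": "Albuquerque", "Country": "USA"}, "Lines": []}
add_line(order, "products/1", 10)

# Later, the product becomes more expensive in the catalog...
products["products/1"]["PricePerUnit"] = 20.0

# ...but the order still records the price that applied when it was created.
print(order["Lines"][0]["PricePerUnit"])  # 18.0
```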

In the next section, we will see that besides these properties of quality models, we also have one more powerful mental model for the development of document models.

Aggregates

As we saw in the previous chapter, the NoSQL ecosystem has many different flavors and approaches implemented by various databases. However, three categories – key-value, document, and column-family store – share a common approach by providing you with the ability to define aggregates.

Aggregate is a term originating in domain-driven design (DDD), where it represents a collection of related objects treated as a single unit. If you think of separating your paper document into pieces of information as an act of normalization, then aggregate orientation represents the opposite process, denormalization: deciding which of these separated chunks of data belong together and bringing them together into one unit.

A natural question follows: how big and comprehensive should this unit be? If the third normal form is one extreme, should we go to the other extreme and model the whole domain as one large unit? To answer this question, we need to look into the gist of what an aggregate represents as a unit.

Unit of Change and Unit of Consistency

An aggregate is a unit of change and a unit of consistency.

A unit of change is any entity in your database model that can handle all requested changes without needing to touch other documents. In other words, the changes we make propagate only within one aggregate. As a unit of change, an aggregate is:

Independent and coherent – an aggregate is a collection of related objects joined together in one Document. These objects contain complete, well-rounded information and can exist as a separate unit. We can understand an aggregate without needing to look into related aggregates.

Isolated – as a unit of change, aggregates can be modified independently of other documents.

The second important property of an aggregate is that it represents a unit of consistency. A consistent database will, at any point in time, contain only valid data. All information stored in the database will conform to all business rules, constraints, and validation checks. No matter what changes we apply to our aggregates, as long as we enforce our validation rules, the aggregates will remain valid when saved to the database.

Going back to our ubiquitous Order example, if we add one more line to the Lines collection, we also need to update the Total property by recalculating the new total value of our Order. If the Order contains more properties, like VAT or shipping costs, they will also need to be updated. The system we are building can have even more advanced rules – e.g., we might offer a volume discount for shipping overseas. In that case, adding just one more order line will trigger a set of updates, rule checks, validation checks, and further updates resulting from various calculations. After applying all updates, we persist the Order in the database atomically; our Order aggregate contains a set of changes that were made together. This changeset was validated to conform to all business and other validation rules, so we can be sure that our database holds an Order containing only valid data.
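The sketch below shows the Order aggregate as a unit of consistency in plain Python. Class, method, and field names are hypothetical, and only the total is recalculated (VAT, shipping costs, and discounts are left out); Decimal keeps money arithmetic exact. Every change goes through add_line, which validates the input and restores the invariant before the aggregate would be saved.

```python
from dataclasses import dataclass, field
from decimal import Decimal
from typing import List


@dataclass
class OrderLine:
    product: str
    quantity: int
    price_per_unit: Decimal


@dataclass
class Order:
    lines: List[OrderLine] = field(default_factory=list)
    total: Decimal = Decimal("0")

    def _computed_total(self) -> Decimal:
        return sum((line.quantity * line.price_per_unit for line in self.lines), Decimal("0"))

    def add_line(self, product: str, quantity: int, price_per_unit: Decimal) -> None:
        # Validate the change before touching the aggregate's state.
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        self.lines.append(OrderLine(product, quantity, price_per_unit))
        # Keep the invariant "total == sum of line amounts" inside the aggregate.
        self.total = self._computed_total()

    def is_consistent(self) -> bool:
        return self.total == self._computed_total()


order = Order()
order.add_line("Chai", 10, Decimal("18.00"))
order.add_line("Chang", 5, Decimal("19.00"))
print(order.total)  # 275.00
```

Because the total can only change through add_line, code outside the aggregate cannot leave it half-updated.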

Distributed Systems

However, a database is just one component of the software system we are building. Taking a broader look, we see that modern applications are complex. They consist of many components coming in various forms – from utility classes and in-process services to physically separate services executing across a network of interconnected machines. Services communicate synchronously or asynchronously. Consider, for example, a system made up of the following components:

Webshop application

Email server

ERP system

These are three isolated components running on separate machines and communicating over the network. A system composed in this way is considered a distributed system.

Creating a new Order, sending a confirmation email, and pushing the Order into the ERP system form one transactional operation from the perspective of your business logic. However, in a distributed system like this one, the standard notion of a transaction you are used to no longer applies. For example, what happens if the ERP system is unavailable when you try to send it a new Order? Should you abandon the Order? And in that scenario, when should you send the confirmation email?

Distributed systems are challenging because they are complex and because many assumptions you usually make no longer hold. Operations you executed synchronously before might be asynchronous in a distributed system. Each time you communicate over the network, you must provide a fallback for the scenario of communication failure. Messages sent between distributed components will sometimes be queued and delivered dozens of seconds, or even minutes, after you initially sent them.

As a result, in most distributed systems, you will not be able to count on the transactional nature of your applications. Instead, distributed systems rely on eventual consistency: your business transaction will eventually complete, but there is no guarantee of how long it will take for the changes you requested to be delivered to and accepted by the components of the system. At the end of this propagation process, your distributed system will become consistent, but until then, you must always keep in mind that its components may temporarily disagree.

Aggregates in the Distributed System

Revisiting the notion of the aggregate in the context of distributed systems reveals a significant impact on the design of aggregate boundaries. Namely, the boundaries of an aggregate determine which units of data belong to it. Since an aggregate is a unit of change and a unit of consistency, we can conclude that modifying an aggregate and saving it to the database represents one transaction.

What is a transaction? The transaction represents an individual indivisible operation that either succeeds or fails as a complete unit. Partial completeness of the transaction is not possible, and when transaction processing is finished, you will have precise and reliable feedback about the outcome. This property is usually called atomicity, precisely because of the indivisible nature of the transaction.

Consider adding a new product to an existing Order. This requires two operations:

Create a new order line with the product.

Recalculate the order total to include the amount of the new order line.

What would happen if only one of these two operations were executed? You would either charge for a product that is not included in the Order or have the product in the Order without increasing the Total. In other words, you would cause financial damage either to yourself or to your customer.

Hence, we clearly need to treat both of these operations as one atomic unit. As a result, either both of them succeed (the new order line is created and the Total is correctly updated), or both fail (the order total remains the same since no new order line was added). Transaction failure is nothing dramatic per se. You can handle it in several standard ways, including retrying the operation before showing an error message. What is essential is that whether the outcome is successful or unsuccessful, your data remains in a consistent state.
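The all-or-nothing behavior can be demonstrated with Python’s built-in sqlite3 module, whose connection context manager commits on success and rolls back when an exception escapes the block. The schema and the fail_midway flag are hypothetical, used only to simulate a crash between the two operations.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL NOT NULL)")
conn.execute("CREATE TABLE order_lines (order_id INTEGER, product TEXT, amount REAL)")
conn.execute("INSERT INTO orders VALUES (1, 0)")
conn.commit()


def add_line(product: str, amount: float, fail_midway: bool = False) -> None:
    # "with conn" commits if the block finishes and rolls back if it raises,
    # so the two operations below form one atomic unit.
    with conn:
        conn.execute("INSERT INTO order_lines VALUES (1, ?, ?)", (product, amount))
        if fail_midway:
            raise RuntimeError("simulated crash between the two operations")
        conn.execute("UPDATE orders SET total = total + ? WHERE id = 1", (amount,))


add_line("Chai", 180.0)  # both operations succeed
try:
    add_line("Chang", 95.0, fail_midway=True)  # the inserted line is rolled back
except RuntimeError:
    pass
```

After the failed call, the order still has exactly one line and a total of 180.0: neither half of the second change was persisted.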

Aggregates as Transaction Boundaries

We can conclude that aggregates support transactions. We load a Document that represents an aggregate, update it, and save it back to the database in a transactional manner at the database level, which guarantees the consistency of our Document.

You might ask yourself – why can we not update two documents in one transaction? This is indeed possible. As we already mentioned, modeling is a subtle intellectual activity. There are no rigid rules, and everything you have read so far is a recommendation based on best practices learned through the practical application of these principles. So, updating two aggregate documents in one transaction is possible. However, the recommended approach is to group things that change together transactionally into one aggregate and then propagate changes through the rest of the system in an eventually consistent manner. Also, on many occasions, the nature of your distributed system will force you to go with eventual consistency.

One typical example is splitting your application into microservices. Every microservice will usually have its own database. Transactions spanning two or more aggregates hosted in different databases are hard to implement and often unviable. This approach will create more problems than benefits as you develop, maintain, and evolve such an application.

Hence, you should look at the boundaries of your aggregate as transactional boundaries. For most changes in your application, you will have to load, change, and save just one Document – the document that represents your aggregate.
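The load-change-save cycle for a single aggregate can be sketched as follows. The InMemoryDocumentStore class is a hypothetical stand-in, not the RavenDB client API; it keeps each document as JSON text and replaces it as a whole on every save, so one business change results in exactly one write.

```python
import json
from typing import Dict


class InMemoryDocumentStore:
    """Hypothetical document store: each save replaces the whole document."""

    def __init__(self) -> None:
        self._docs: Dict[str, str] = {}
        self.writes = 0

    def load(self, doc_id: str) -> dict:
        return json.loads(self._docs[doc_id])

    def save(self, doc_id: str, doc: dict) -> None:
        self._docs[doc_id] = json.dumps(doc)
        self.writes += 1


def add_order_line(
    store: InMemoryDocumentStore, order_id: str, product: str, quantity: int, price: int
) -> None:
    order = store.load(order_id)  # 1. load the aggregate
    order["Lines"].append({"Product": product, "Quantity": quantity, "Price": price})  # 2. change it
    order["Total"] = sum(line["Quantity"] * line["Price"] for line in order["Lines"])
    store.save(order_id, order)  # 3. save it back as one unit


store = InMemoryDocumentStore()
store.save("orders/1", {"Lines": [], "Total": 0})
add_order_line(store, "orders/1", "Chai", 10, 18)
```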

Everything else that happens in your system will not be transactional but eventually consistent. You should take this into account and implement error handling mechanisms. Also, you should carefully approach every single business scenario in your application and determine when synchronous communication is a must and when you can use asynchronous communication.
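One common way to handle such failures is a retry queue (often called an outbox): the local change and the pending message are recorded together, and delivery to the other component is retried until it succeeds. The sketch below is a simplified, single-process illustration; FlakyErp, place_order, and drain_outbox are hypothetical names, and a real system would persist the queue and back off between attempts.

```python
from collections import deque
from typing import Deque, Dict, List


class FlakyErp:
    """Hypothetical ERP client that is unavailable for the first `failures` calls."""

    def __init__(self, failures: int) -> None:
        self.failures = failures
        self.received: List[str] = []

    def send(self, order_id: str) -> None:
        if self.failures > 0:
            self.failures -= 1
            raise ConnectionError("ERP system unavailable")
        self.received.append(order_id)


orders: Dict[str, dict] = {}
outbox: Deque[str] = deque()


def place_order(order_id: str) -> None:
    # Local change: save the order and record the pending ERP message together.
    # (In a real system, both writes would belong to the same local transaction.)
    orders[order_id] = {"Status": "Placed"}
    outbox.append(order_id)


def drain_outbox(erp: FlakyErp, max_attempts: int = 5) -> int:
    """Try to deliver queued messages; failed ones stay queued. Returns attempts made."""
    attempts = 0
    while outbox and attempts < max_attempts:
        attempts += 1
        try:
            erp.send(outbox[0])
        except ConnectionError:
            continue  # the message stays in the queue for the next attempt
        outbox.popleft()
    return attempts


erp = FlakyErp(failures=2)
place_order("orders/1")
attempts = drain_outbox(erp)
print(attempts, erp.received)  # 3 ['orders/1']
```

The order is placed immediately, while the ERP system receives it only on the third attempt: the system becomes consistent eventually, not at once.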

Modeling in RavenDB

This section will show how RavenDB handles documents and how you can create and inspect documents. Also, we will take a look at identifiers assigned to each RavenDB document.

Documents

RavenDB stores documents in JSON format, and they are almost unlimited in size – they can grow up to 2 GB. However, you should keep your documents no bigger than a couple of megabytes. JSON larger than that is cumbersome to load and save back into the database and is usually a strong signal that your model is suboptimal.

Figure: Order Document in RavenDB (the document JSON, with Save, Clone, and Delete options)

This Order document consists of two parts:

Identifier (here, orders/830-A)

JSON body

Both parts are mandatory. Every RavenDB Document must have a unique identifier and non-empty JSON content.

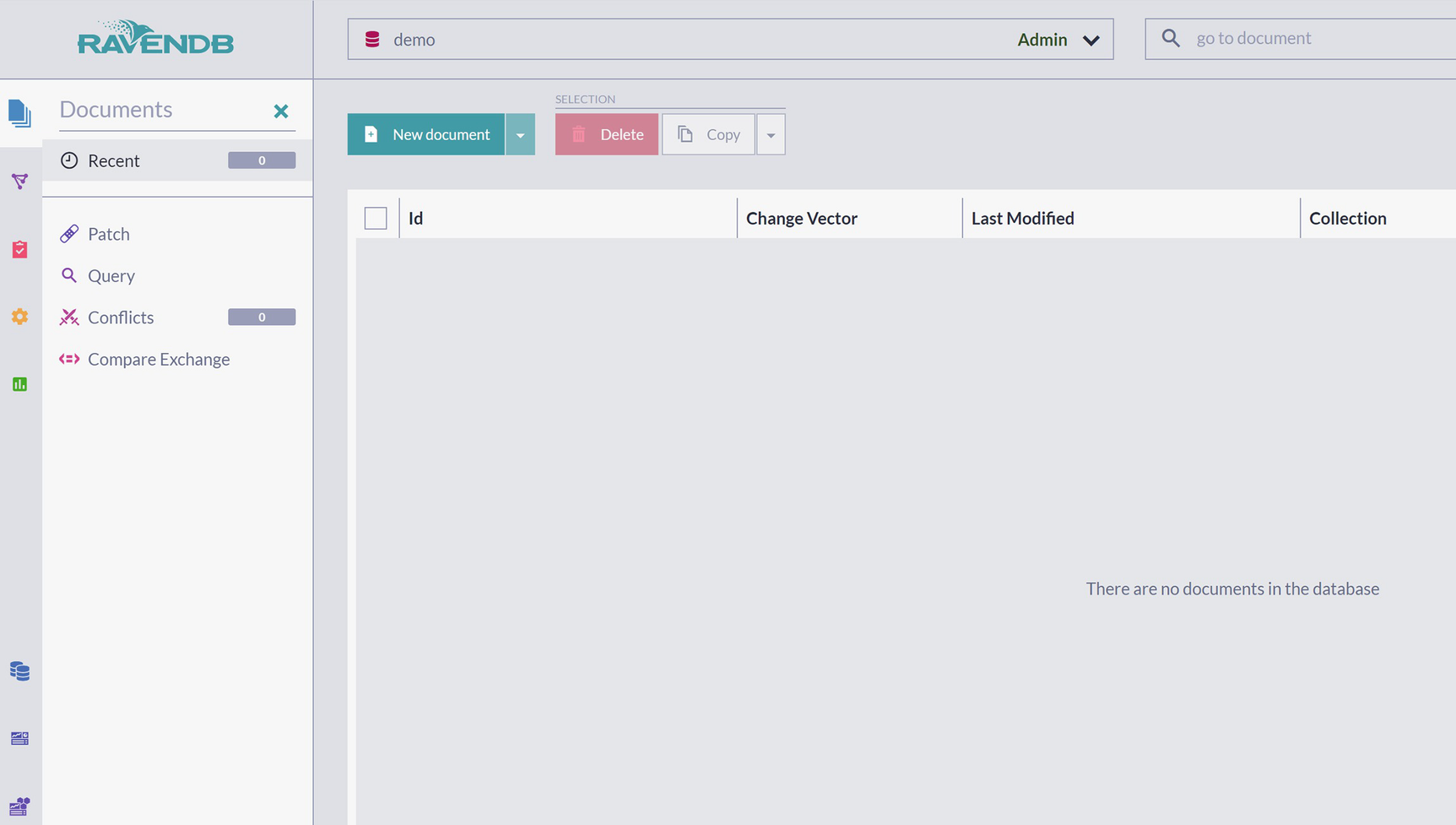

A screenshot of an empty database of Raven D B. It has 2 sections. Under the documents section, the icons of recent, patch, query, conflicts, and compare exchange are listed. The other section is a table of 4 columns with headers listed as I d, change vector, last modified, and collection.

Empty RavenDB Database

Figure: Creation of New Document

Figure: Populating New Document (the ID field is populated with users/)

Figure: Content of a New Document (the user document after it is saved)

There are a few interesting things to notice here, so let’s go over each of them.

Above the Save, Clone, and Delete buttons, the value users/0000000000000000001-A is the ID that was generated for you. As you remember, you entered users/ in the ID field. RavenDB took this value as the prefix of the future ID, generated the unique suffix 0000000000000000001-A, and appended it to your prefix. Once assigned, the ID cannot be changed – it is the unique identifier of this Document.

As you can see, @metadata is essentially just one more JSON property. The only difference from other properties is that @metadata is a reserved name. All RavenDB system names start with the @ sign, so they will not clash with your properties. Within @metadata, the @collection property contains the name of the collection.

Figure: Show Raw Output Icon

The most important @metadata properties are:

@collection – the name of the collection your Document belongs to.

@change-vector – the change vector. This is a more advanced concept, but we will briefly mention that a change vector provides a means of partially ordering changes to the same Document across multiple nodes in a database cluster.

@id – identifier of your Document.

@last-modified – UTC timestamp of the last change to Document.

Identifiers

The identifier is a mandatory part of every RavenDB document. Once assigned, an identifier cannot be modified. As long as the Document exists, the Document will have the same identifier assigned to it at the moment of creation. Also, RavenDB will ensure that every Document has a unique identifier on the database level.

When creating a document, you can specify its identifier in one of the following ways:

Empty string – when you pass an empty string as the ID, RavenDB will generate a GUID and assign it as the ID.

Collection_name/ – an arbitrary string followed by a forward slash; users/ is an example. The string users will be the name of the collection, and the slash signals RavenDB to generate a unique identifier for the document. This identifier is generated as a number left-padded with zeros, followed by the node tag. For example, passing users/ as the ID of a document will result in RavenDB assigning users/0000000000000000001-A as its unique identifier.

Collection_name| – an arbitrary string followed by a pipe character. The arbitrary string will be taken as the name of the collection, and the pipe character will trigger cluster-wide coordination to produce a number that is unique at the cluster level. So, passing users| as the ID of a document will result in RavenDB assigning users/1.

In all other cases, when the string is not empty and does not end with a slash or a pipe character, RavenDB will accept it and assign it, unmodified, as the ID of your new Document.
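To summarize these rules, here is a small simulation in Python. It is not RavenDB code and makes simplifying assumptions: the node tag is fixed to A, and the numbers come from local counters, whereas the real server generates them itself (and, for the pipe form, coordinates them across the cluster). IdGenerator and assign are hypothetical names.

```python
import uuid
from collections import defaultdict


class IdGenerator:
    """Hypothetical simulation of the ID rules described above (not RavenDB code)."""

    def __init__(self, node_tag: str = "A") -> None:
        self.node_tag = node_tag
        self.slash_counters = defaultdict(int)  # simplification of server-generated numbers
        self.pipe_counters = defaultdict(int)   # stands in for cluster-wide coordination

    def assign(self, requested: str) -> str:
        if requested == "":
            # Empty string: a GUID is generated.
            return str(uuid.uuid4())
        if requested.endswith("/"):
            # "users/": a 19-digit zero-padded number plus the node tag.
            self.slash_counters[requested] += 1
            return f"{requested}{self.slash_counters[requested]:019d}-{self.node_tag}"
        if requested.endswith("|"):
            # "users|": the pipe becomes a slash followed by a plain number.
            prefix = requested[:-1]
            self.pipe_counters[prefix] += 1
            return f"{prefix}/{self.pipe_counters[prefix]}"
        # Any other non-empty string is used unchanged.
        return requested


gen = IdGenerator()
print(gen.assign("users/"))      # users/0000000000000000001-A
print(gen.assign("users|"))      # users/1
print(gen.assign("users/john"))  # users/john
```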

If you have no strong reason to choose your own IDs, it is best to let RavenDB generate them. Using collection_name/ will produce unique identifiers that are also sortable, enabling RavenDB to store them optimally for searching and fetching. If, for some reason, you need to generate your own identifiers, it is recommended to find a library that can produce lexicographically sortable identifiers.
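Why zero padding matters for sortability can be seen with plain string sorting. The IDs below are made-up examples that follow the zero-padded pattern with a node-tag suffix described above.

```python
plain = ["users/2", "users/10", "users/1"]
padded = [f"users/{n:019d}-A" for n in (2, 10, 1)]

# Without padding, lexicographic order differs from numeric order.
print(sorted(plain))  # ['users/1', 'users/10', 'users/2']

# With fixed-width zero padding, lexicographic order matches numeric order.
print([int(doc_id[len("users/"):-len("-A")]) for doc_id in sorted(padded)])  # [1, 2, 10]
```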

Modeling Document Relationships

The fact that you are using a database without “relational” in its name does not mean you cannot model relationships between aggregates represented by documents. This section will examine the possible types of relationships between documents in RavenDB, various approaches to modeling them, and how to evaluate the strengths and weaknesses of possible solutions.

While the previous section concentrated on the JSON content of RavenDB documents, this section focuses on the connections between those documents. These relations are not the actual data but metadata, and the gist of modeling relations is how to extend documents to contain that additional information.

Even though there are many ways entities can be interconnected, we will look at the three most common and basic ones: one-to-one, one-to-many, and many-to-many.

One-to-One Relationship

An order has its own physical identity (usually in the form of a paper document) and its unique identifier, orders/1. This unique identifier gives us a means of recognizing multiple instances of the same order – comparing two Orders comes down to comparing their Id values.

On the other hand, if we examine ShippingAddress, we will realize that it does not have an identity in the sense of a distinct, unique existence. Every Order is unique, while many shipments can be dispatched to the same address. To determine whether two shipments are going to the same location, we must compare all three components of the address: City, Country, and Line1. Hence, we can conclude that the address’s value represents its identity. Such objects are labeled Value Objects in domain-driven design.
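In code, the difference between the two kinds of equality might look like this. The sketch uses a frozen dataclass, whose generated equality compares all fields, for the Value Object, and Id-based equality for the entity; the class names and the sample address are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ShippingAddress:
    """Value Object: two addresses are equal when all their components are equal."""

    line1: str
    city: str
    country: str


class Order:
    """Entity: two orders are equal only when their identifiers are equal."""

    def __init__(self, order_id: str, ship_to: ShippingAddress) -> None:
        self.id = order_id
        self.ship_to = ship_to

    def __eq__(self, other: object) -> bool:
        return isinstance(other, Order) and self.id == other.id

    def __hash__(self) -> int:
        return hash(self.id)


home = ShippingAddress("123 Example St.", "Albuquerque", "USA")
same_home = ShippingAddress("123 Example St.", "Albuquerque", "USA")

print(home == same_home)                                        # True: same value
print(Order("orders/1", home) == Order("orders/2", same_home))  # False: different identity
```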

Imagine being presented with the number 63. What does this number represent? Someone’s weight? Temperature? Hours? Price? You simply cannot tell – 63 can be so many things on its own, even a random number that does not represent anything.

Now, augmenting it with a property name, "Age": 63, we can understand that it represents an age. But whose age is it? Maybe it’s the age of a bridge or a building? Unfortunately, we lack context again.

Finally, observing this property within the entity it belongs to, we have the complete picture – John is 63 years old.

This mental exercise gives us a simple recipe for recognizing Value Objects: whenever you need context to determine the precise meaning of a piece of information, you are dealing with a Value Object (VO). As you saw, a VO can be as simple as a number or as complex as a shipping address, and VOs are the fundamental building blocks of entities.

Embedding

With both age and shipping address, we applied a technique called embedding. In neither case was a separate existence justified, so we inserted these small pieces of data inside the bigger documents representing entities.

Referencing

Employee document model

Resume document model

These two models are simplified, but they show the basic information. Looking closely at the Resume model, we can see the property Employee: “employees/1”, directly linking the Resume to an Employee.

This is a reference. References in RavenDB are nothing more than properties containing the string ID of some other document.
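Because a reference is only a string, following it means looking up a second document by that ID. The sketch below assumes a plain Python dict as the "database" and hypothetical document contents; it is not the RavenDB client API.

```python
from typing import Dict, Optional

# A plain dict stands in for the database: document ID -> document body.
# This is an illustrative assumption, not the RavenDB client API.
store: Dict[str, dict] = {
    "employees/1": {"FirstName": "John", "LastName": "Doe"},
    "resumes/1": {"Title": "Senior Developer", "Employee": "employees/1"},
}


def load(doc_id: str) -> Optional[dict]:
    """Return the document stored under doc_id, or None if there is none."""
    return store.get(doc_id)


resume = load("resumes/1")
# The reference is just a string; following it means a second lookup by ID.
employee = load(resume["Employee"])
print(employee["FirstName"])  # John
```

Against a real database, that second lookup would be a second request. The RavenDB client offers an Include feature to fetch referenced documents together with the main one; this sketch does not model it.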

Employee and Resume documents with reversed reference

Now the Employee holds a reference to a Resume: Resume: "resumes/1". This reference can be read as "owns": the Employee owns a Resume.

Employee and Resume documents with mutual references

Employee and Resume now point at each other through properties containing the other document’s ID. In an application with a screen displaying Employee details, we can easily offer a link to the Resume screen because the Employee holds a reference to the Resume. Similarly, the screen showing a Resume can offer a link to the associated Employee, since the reference is available in its Employee property.

So far, we have seen three ways to model this one-to-one relationship with references:

1. Unidirectional: Resume pointing to Employee.
2. Unidirectional: Employee pointing to Resume.
3. Bidirectional: Both Employee and Resume hold a reference to the other one.
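The bidirectional variant makes navigation easy in both directions, but establishing the relationship now touches two documents, and the application has to keep both sides in agreement. A minimal sketch, assuming a plain Python dict in place of the database; the `link` and `is_consistent` helpers are hypothetical.

```python
from typing import Dict

# A plain dict stands in for the database (illustrative assumption).
store: Dict[str, dict] = {
    "employees/1": {"FirstName": "John"},
    "resumes/1": {"Title": "Senior Developer"},
}


def link(employee_id: str, resume_id: str) -> None:
    """Establish a bidirectional one-to-one reference.

    Both documents change, so both must be saved; updating only one side
    would leave the relationship pointing in one direction only.
    """
    store[employee_id]["Resume"] = resume_id
    store[resume_id]["Employee"] = employee_id


def is_consistent(employee_id: str) -> bool:
    """Check that the Employee's Resume points back at the same Employee."""
    resume_id = store[employee_id].get("Resume")
    if resume_id is None:
        return False
    return store.get(resume_id, {}).get("Employee") == employee_id


link("employees/1", "resumes/1")
print(is_consistent("employees/1"))  # True
```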

An employee with embedded Resume

In this case, we embedded not a Value Object without identity but an Entity with a clear identity. This is possible due to a clear ownership situation – Employee has exactly one Resume, and every Resume belongs to a specific Employee.

This fourth approach to modeling one-to-one relationships is entirely legitimate. It is up to you to examine all possible directions, think about processes and workflows in your application, and determine acceptable modeling solutions.

You should also think about the future. How might your application evolve? What requests might come from your users? What will happen as the amount of data grows, or as your documents grow?

With this in mind, let’s observe the modeling variant of Employee presented in Listing 2-5. For simplicity, we intentionally oversimplified both Employee and Resume. However, if you recall that real-life Resumes can be pretty big, sometimes three or four pages, you will realize that you would be embedding a substantial document. Moreover, if the Resume contains a list of projects the Employee participated in, it will keep growing over months and years. Because the Resume is embedded, the Employee document grows with it, and every time you load, manipulate, and save an Employee, you handle the whole Resume as well.
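The growth described above is easy to observe by measuring the serialized document. This is a hypothetical Employee with an embedded Resume (not Listing 2-5 itself), and the "three projects per year" pace is an arbitrary assumption for the sketch.

```python
import json

# Hypothetical Employee document with an embedded Resume.
employee = {
    "FirstName": "John",
    "Resume": {"Title": "Senior Developer", "Projects": []},
}

sizes = []
for year in range(1, 6):
    # Assumption: each year adds three project entries to the embedded Resume.
    for n in range(3):
        employee["Resume"]["Projects"].append(
            {"Name": f"Project {year}-{n}", "Description": "Delivered features for a client."}
        )
    # Every load and save of the Employee transfers this whole serialized document.
    sizes.append(len(json.dumps(employee)))

print(sizes)  # strictly increasing: the Employee grows with its Resume
```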

Furthermore, suppose you decide to apply the same technique and embed more documents in the same manner. In that case, your Employee document will inevitably become very large and cumbersome to manipulate.

Finally, what will happen if requirements change and company management decides to introduce more than one Resume per Employee? Consider a scenario where the company bids for contracts and, as part of each bid, generates custom-tailored Resumes for all project members. This breaks your one-to-one model, which becomes one-to-many (one Employee can have many Resumes). How much effort will it take to modify the database model and your code to accommodate this change request? Will a straightforward sentence like “we want to enable one Employee to have more than one Resume in our database” result in an inappropriately large amount of work?
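One part of that effort is migrating existing data. A minimal sketch of what such a migration could look like, assuming a plain Python dict as the database and hypothetical helper names (`extract_resumes`, `resumes_of`): each embedded Resume moves into its own document that references its Employee, after which extra Resumes are simply additional documents.

```python
from typing import Dict, List

# Before: each Employee embeds exactly one Resume (hypothetical data).
store: Dict[str, dict] = {
    "employees/1": {"FirstName": "John", "Resume": {"Title": "Senior Developer"}},
    "employees/2": {"FirstName": "Jane", "Resume": {"Title": "Architect"}},
}


def extract_resumes(db: Dict[str, dict]) -> None:
    """Move each embedded Resume into its own document pointing to its Employee."""
    counter = 0
    # Materialize the key list first, because the loop adds new documents.
    for doc_id in [k for k in db if k.startswith("employees/")]:
        resume = db[doc_id].pop("Resume", None)
        if resume is None:
            continue
        counter += 1
        db[f"resumes/{counter}"] = {**resume, "Employee": doc_id}


def resumes_of(db: Dict[str, dict], employee_id: str) -> List[dict]:
    """All Resume documents whose Employee reference matches employee_id."""
    return [
        doc for doc_id, doc in db.items()
        if doc_id.startswith("resumes/") and doc.get("Employee") == employee_id
    ]


extract_resumes(store)
# A bid-specific Resume is now just one more document.
store["resumes/3"] = {"Title": "Bid-specific resume", "Employee": "employees/1"}
print(len(resumes_of(store, "employees/1")))  # 2
```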

All of this shows that modeling is intricate. You will never reach a point where you complete the model and declare it the “best possible.” Hence, you should not strive for perfection or for “one right way.” Nor should you try to anticipate the future and build every variant you can foresee into your domain model. Instead, create the simplest model that covers present needs without obstructing the change requests that are a normal and expected part of your application’s life cycle.

Referential Integrity in RavenDB

In this example, we created a reference to a nonexistent Resume, and no mechanism at the database level prevented us from introducing this data corruption. It is therefore natural to ask: relational databases protect us from data corruption at the database level, so is RavenDB’s behavior a step back? Why would I want to work with a database that does not safeguard me from mistakes that could corrupt my data?
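Because the database does not check references, detecting a dangling one is up to the application. A minimal sketch, assuming a plain Python dict as the database. The `dangling_references` helper is hypothetical, and it relies on the application knowing which properties hold references.

```python
from typing import Dict, List

# A dict stands in for the database; nothing here rejects the dangling
# reference, just as the database itself does not.
store: Dict[str, dict] = {
    "employees/1": {"FirstName": "John", "Resume": "resumes/42"},  # resumes/42 does not exist
}


def dangling_references(doc: dict, reference_properties: List[str]) -> List[str]:
    """Return the referenced IDs that do not resolve to a stored document."""
    return [
        doc[prop]
        for prop in reference_properties
        if doc.get(prop) is not None and doc[prop] not in store
    ]


print(dangling_references(store["employees/1"], ["Resume"]))  # ['resumes/42']
```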

Additionally, RavenDB does not enforce a schema on JSON documents, so you cannot declare the type of a specific property. If you have a property “age” meant to hold an integer, RavenDB will not prevent you from storing “John” in it. With a relational database, such an attempt results in an error. So, how do we explain this? Why would you choose a database that makes your application less reliable and more error-prone, one that lets you introduce corruption into your data?

Looking at the database as an isolated part of your system, that is indeed true. Relational databases like SQL Server or PostgreSQL provide a way to strongly type columns and to check referential integrity, preventing you from establishing a reference to a nonexistent entity. However, observing databases in the broader context of data persistence gives a significantly different perspective.

Unlike those of the 1980s and 1990s, today’s modeling methodologies (such as domain-driven design) do not treat the database as the starting point of the modeling process. Instead, they recommend modeling the business domain in the programming language and then persisting domain entities into the database. In the past, most planning meetings started in front of a whiteboard, where developers would draw relational database tables, fields, and lots of foreign keys establishing relationships between them. This process looked very similar to constructing UML diagrams, except that the database was the “center of the world,” and planning of the whole system started with modeling the database. Entities in the programming language, along with CRUD operations to manage them, were then modeled after this persistence model.

From the perspective of database “centrism,” offloading validation to the database is something you naturally strive for. However, as domain modeling techniques advanced, we reached the present day, where modeling is done at the conceptual level of Aggregates, Entities, and Value Objects. We analyze processes, divide enterprise projects into subdomains, and model within the scope of a subdomain. This activity looks purely at the business and process aspects of the subdomain, keeping in mind that its artifacts will eventually have to be persisted. Nevertheless, persistence is a minor consideration, not a major one.

Every subdomain usually has its own entities. Moreover, every subdomain has its own set of business rules, and entities should be validated against these business constraints. As a general rule of thumb, a carefully crafted business domain should prevent the instantiation of invalid objects. One good guideline is the principle that “impossible states should be irrepresentable.” In other words, you can declare someone’s age as an integer and offload that check to the database, but would it be correct, from the perspective of the business domain, to allow creating and storing an Employee aged -50 or zero? The data integrity mechanisms of a relational database cover only a small part of the overall business validity of your data. A complete set of validation and data integrity rules is vast. It usually contains various static and dynamic constraints, often spanning multiple entities in various states. For example, a minor should not be able to add beer to a shopping cart.
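Here is a minimal Python sketch of validation living in the domain rather than in a column type. The `Age` Value Object refuses impossible values at construction time, so an invalid Employee cannot be created in the first place. The upper bound of 150 and the legal drinking age of 18 are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass

LEGAL_DRINKING_AGE = 18  # hypothetical; the real limit depends on jurisdiction


@dataclass(frozen=True)
class Age:
    """Value Object that cannot hold an impossible age."""
    years: int

    def __post_init__(self) -> None:
        # The business rule lives in the domain, not in a database column type.
        if not isinstance(self.years, int) or isinstance(self.years, bool):
            raise TypeError("age must be an integer")
        if not 0 < self.years < 150:  # 150 is an assumed upper bound
            raise ValueError(f"age out of range: {self.years}")


@dataclass(frozen=True)
class Employee:
    name: str
    age: Age


def can_add_to_cart(shopper_age: Age, item_is_alcoholic: bool) -> bool:
    """A business rule no column type can express: minors cannot buy alcohol."""
    return not item_is_alcoholic or shopper_age.years >= LEGAL_DRINKING_AGE


john = Employee("John", Age(63))

for bad in (-50, 0, "John"):
    try:
        Age(bad)
    except (TypeError, ValueError) as exc:
        print(f"rejected {bad!r}: {exc}")
```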

Examining the primary role of a database, we can conclude that when you store entities in it, the database should provide a reliable mechanism to persist in-memory objects verbatim and soundly. Hence, you can look at the database as a way to mirror your object graph from memory onto persistent media (usually a hard drive).

So, looking through a narrow lens, it is true that a relational database is capable of performing type-level and referential integrity checks, throwing errors at attempts to store invalid data. However, every reliable application should have layers of business rules and mechanisms for checking high-level rules. With a good software development approach, you will never let your objects exist in an invalid state.

Hence, the database should be placed at the proper position during application development – not in the center anymore, but as a persistence mechanism. Its role should be to store validated objects reliably and provide fast and reliable means to index and query such data.

One-to-Many Relationship

For this type of relationship, we will again turn to Orders, but this time we will observe how they relate to Companies. Every Company can have one or more Orders. We will consider several modeling options, along with the benefits and constraints of each.

Revisiting the principles of independence, isolation, and coherence, we can see that Company and Order should be modeled as separate documents. Embedding all orders within Company would be a poor choice. Not only do Company and Order exist separately and change independently of each other, but the growing number of orders would, over time, turn the Company document into a huge one. Also, storing multiple orders in one document would introduce concurrency problems: editing an existing order while a new one is being created would cause an optimistic concurrency violation. As you can see, this introspection gives us enough arguments to conclude with high confidence that embedding is a suboptimal choice. Hence, Company and Order should be separate documents.
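The concurrency problem can be illustrated with a simplified optimistic concurrency check. RavenDB tracks document versions with change vectors; this sketch replaces them with a plain integer version and uses a Python dict as the database, so treat it as an illustration of the idea, not of RavenDB's mechanism. Two users load the same Company with embedded orders, and the second save is rejected.

```python
from typing import Dict, Tuple


class ConcurrencyError(Exception):
    """Raised when a document changed after it was loaded."""


# document ID -> (version, body); an integer version is a simplification.
store: Dict[str, Tuple[int, dict]] = {
    "companies/1": (1, {"Name": "Acme", "Orders": []}),
}


def load(doc_id: str) -> Tuple[int, dict]:
    version, body = store[doc_id]
    # Copy the body (and its Orders list) so local edits do not touch the store.
    return version, {**body, "Orders": list(body["Orders"])}


def save(doc_id: str, expected_version: int, body: dict) -> None:
    current_version, _ = store[doc_id]
    if current_version != expected_version:
        raise ConcurrencyError(f"{doc_id} changed since it was loaded")
    store[doc_id] = (current_version + 1, body)


# Two users load the same Company document (with orders embedded).
v_a, company_a = load("companies/1")
v_b, company_b = load("companies/1")

company_a["Orders"].append({"Product": "products/1"})  # user A creates an order
save("companies/1", v_a, company_a)

company_b["Orders"].append({"Product": "products/2"})  # user B works concurrently
try:
    save("companies/1", v_b, company_b)
except ConcurrencyError as exc:
    print("rejected:", exc)
```

With orders stored as separate documents, users A and B would write to different documents, and neither save would conflict with the other.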

A Company with a list of order IDs

We gave up on embedding partly because we envisioned a growing number of orders over time. Essentially, we have the same issue here. Imagine the hundreds, if not thousands, of orders a Company might have. The Orders property would grow significantly, and manipulating the list of orders would become more and more cumbersome as time goes by.

Loading the orders for a Company would require first loading the Company, then processing its Orders property, and finally fetching the orders by the obtained IDs. This is not a deal-breaker, but it adds unwanted complexity to our code and logic and generates additional database requests.
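The two-step access pattern looks like this in a minimal sketch, assuming a Python dict as the database and hypothetical order data. The Company is loaded only to read its list of IDs, and the orders are fetched afterward.

```python
from typing import Dict, List

# Hypothetical data; a plain dict stands in for the database.
store: Dict[str, dict] = {
    "companies/1": {"Name": "Acme", "Orders": ["orders/1", "orders/2"]},
    "orders/1": {"Total": 100},
    "orders/2": {"Total": 250},
}


def orders_of(company_id: str) -> List[dict]:
    # Step 1: load the Company just to read its list of order IDs.
    company = store[company_id]
    # Step 2: load every referenced Order by ID; against a real database,
    # this is at least one more request, even if the IDs are batched.
    return [store[order_id] for order_id in company["Orders"]]


print([order["Total"] for order in orders_of("companies/1")])  # [100, 250]
```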

As the number of orders for a Company grows, so does the number of reads and writes to the Company document. Over time, the chances of optimistic concurrency violations grow accordingly.

Orders pointing to a Company

Companies and Orders are two separate collections. Each document has its own identity, and both respect the principles of isolation and independence.

As the number of Orders grows over months or years, the size of the Company document is not affected.

Fetching all orders for a specific Company does not require loading the Company document first.
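With each Order holding a single Company reference, finding a Company's orders becomes a filter over the Orders alone. In RavenDB, this would roughly be a query such as `from Orders where Company = 'companies/1'`. The Python sketch below only imitates that filter over a dict of hypothetical documents.

```python
from typing import Dict, List

# Hypothetical data; a plain dict stands in for the database.
store: Dict[str, dict] = {
    "companies/1": {"Name": "Acme"},
    "companies/2": {"Name": "Globex"},
    "orders/1": {"Company": "companies/1", "Total": 100},
    "orders/2": {"Company": "companies/2", "Total": 40},
    "orders/3": {"Company": "companies/1", "Total": 250},
}


def orders_for(company_id: str) -> List[str]:
    """IDs of the Orders referencing company_id; the Company document is never read."""
    return sorted(
        doc_id
        for doc_id, doc in store.items()
        if doc_id.startswith("orders/") and doc.get("Company") == company_id
    )


print(orders_for("companies/1"))  # ['orders/1', 'orders/3']
```

Adding a new order writes only a new Order document, so the Company document stays the same size however many orders accumulate.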

As you can see, the model we reached passes our checks. We can conclude that we have established a relationship between Companies and Orders that supports the operations we can foresee.

In this example, we discovered one of the established principles of one-to-many modeling. It is colloquially called the “smaller side reference,” denoting the side of the relationship where ID references should be stored. As a general best practice, you should always start with a model where many documents each hold one reference to a single document, instead of one document holding a long list of IDs pointing to many documents.

Here are a few examples of one-to-many relationships:

- Companies and Orders, which we just observed
- Professors and Students, with Students pointing to Professor
- Companies and Products, with Products pointing to Company
- Publishers and Books, with Books pointing to Publisher
- Parents and Children, where Parent might hold references to all Children
- Owner and Companies, with Owner pointing to Companies
- Team and Players, with Team having references to Players

Unlike with relational databases, we face no technical limitation here: our relationships can point either way. It is up to us to determine which direction we need. We can do this by putting things into context, thinking about what we are modeling, and investing equal or even more energy into projecting the future growth of our application and database.

Many-to-Many Relationship

Many-to-many relationships are just as common:

- Communities and Members: each Community can have many Members, and each person can belong to many Communities.
- Grandparents and Grandchildren: a Grandparent can have several Grandchildren, and each Grandchild has several Grandparents.
- Students and Courses: Students can enroll in many Courses, and each Course has many participating Students.
- Books and Authors: a Book can have many Authors, and each Author can write one or more Books.

Applying the same reasoning, we keep the list of references on the side whose list will stay shorter:

- A Member will have a list of their Community IDs, since Communities can have thousands of Members.
- A Grandchild is likely to have fewer Grandparents than a Grandparent has Grandchildren, so we will keep the list of associations on the Grandchild document.
- Courses can have hundreds of Students, so we will extend Student with a list of Courses.
- Prolific Authors can write dozens of Books, while Books usually have only a few Authors, so we will model a list of Author IDs as a property of the Book document.
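The Books and Authors case can be sketched with a plain Python dict as the database and hypothetical titles and names. Each Book holds a short list of Author IDs. Going from a Book to its Authors follows that list, and going from an Author to their Books filters Books on it.

```python
from typing import Dict, List

# Hypothetical data; a plain dict stands in for the database.
store: Dict[str, dict] = {
    "authors/1": {"Name": "Ann"},
    "authors/2": {"Name": "Bob"},
    "books/1": {"Title": "Modeling Basics", "Authors": ["authors/1"]},
    "books/2": {"Title": "Advanced Modeling", "Authors": ["authors/1", "authors/2"]},
}


def authors_of(book_id: str) -> List[str]:
    """Follow the Book's short list of Author IDs."""
    return [store[author_id]["Name"] for author_id in store[book_id]["Authors"]]


def books_by(author_id: str) -> List[str]:
    """Reverse direction: filter Books whose Authors list contains the ID."""
    return sorted(
        doc["Title"]
        for doc_id, doc in store.items()
        if doc_id.startswith("books/") and author_id in doc["Authors"]
    )


print(authors_of("books/2"))  # ['Ann', 'Bob']
print(books_by("authors/1"))  # ['Advanced Modeling', 'Modeling Basics']
```

The Author documents never change when a new Book is published, which is the point of keeping the list on the smaller side.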

Now that we know how to model documents stored in a RavenDB database and establish relationships between them, the next chapter will show how we can query these documents.

Summary

In this chapter, we introduced general modeling principles in NoSQL document databases. After comparing the NoSQL and SQL approaches, we covered the basics of JSON documents, aggregates, properties of well-modeled documents, and the concept of eventual consistency in distributed systems. Finally, we examined the specifics of RavenDB modeling: identifiers, relationships, and an approach to referential integrity.

In the next chapter, we will focus on the most common operation in modern applications: querying. You will learn to perform essential operations such as filtering, ordering, and paging. We will also cover more advanced topics, including projections, aggregations, and dereferencing relations.