Chapter 12: Predictive and Diagnostic Maintenance for Rod Pumps

Artificial Intelligence Team, Samsung SDSA, San Jose, CA, United States

algorithmica technologies GmbH, Küchlerstrasse 7, Bad Nauheim, Germany

Abstract

Approximately 20% of all oil wells in the world use a beam pump to raise crude oil to the surface, so the proper maintenance of these pumps is an important issue in oilfield operations. Ideally, we want to know what is wrong with a pump before it fails. Maintenance issues in the downhole part of a beam pump can be reliably diagnosed from a plot of the displacement of, and the load on, the traveling valve, a diagram known as a dynamometer card. This chapter shows that this analysis can be fully automated using machine learning techniques that learn to recognize various classes of damage before the failure occurs. We use a dataset of 35292 sample cards drawn from 299 beam pumps in the Bahrain oilfield. We can distinguish 11 different damage classes from each other and from the normal class with an accuracy of 99.9%. This high accuracy makes it possible to diagnose beam pumps automatically in real time, so that the maintenance crew can focus on fixing pumps instead of monitoring them; this increases overall oil yield and decreases environmental impact.

Keywords

12.1. Introduction

12.1.1. Beam pumps

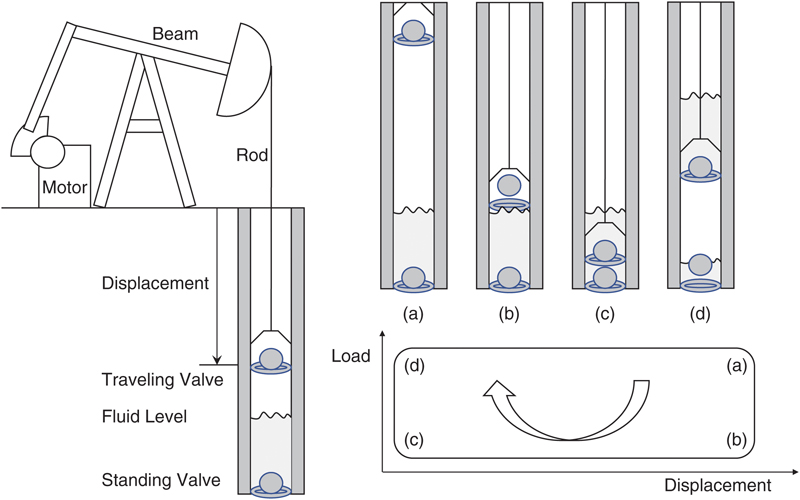

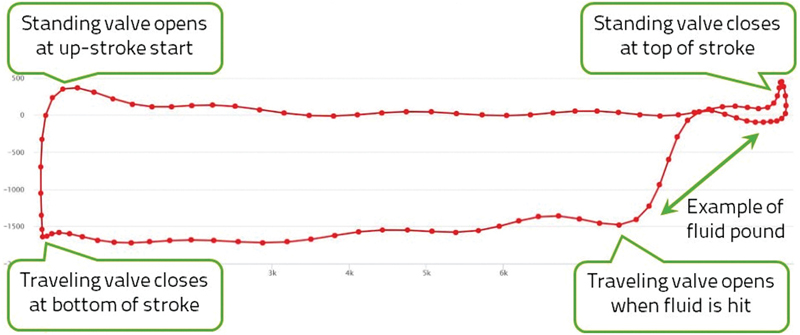

Plotting displacement and load against each other over the stroke produces the dynamometer card shown at the bottom right.
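A card is the closed curve traced by the (displacement, load) pairs over one stroke. The minimal sketch below assumes that both signals are sampled synchronously over exactly one stroke; the function names are hypothetical. It closes the curve into a polygon and computes the enclosed area and the area centroid with the shoelace formula. The area centroid is only one possible reading of the "centroid coordinates" used in feature sets S3 to S7 in Section 12.2.5.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def card_from_stroke(displacement: Sequence[float], load: Sequence[float]) -> List[Point]:
    """Pair displacement and load samples from one stroke into a closed polygon."""
    if len(displacement) != len(load) or not displacement:
        raise ValueError("displacement and load must be non-empty and of equal length")
    points = list(zip(displacement, load))
    if points[0] != points[-1]:
        points.append(points[0])  # close the loop so the card is a polygon
    return points


def card_area_and_centroid(points: Sequence[Point]) -> Tuple[float, Point]:
    """Shoelace formula: enclosed area and area centroid of a closed polygon.

    Works for either traversal direction because the centroid is divided by
    the signed area before the absolute value is taken.
    """
    signed_area = cx = cy = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        cross = x0 * y1 - x1 * y0
        signed_area += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    signed_area *= 0.5
    if signed_area == 0:
        raise ValueError("degenerate card with zero enclosed area")
    return abs(signed_area), (cx / (6 * signed_area), cy / (6 * signed_area))
```

For an idealized rectangular card built from displacement `[0, 0, 1, 1]` and load `[0, 1, 1, 0]`, `card_area_and_centroid(card_from_stroke(...))` returns an area of 1.0 and a centroid of (0.5, 0.5).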

12.1.2. Beam pump problems

1. Normal
2. Full Pump (Fluid Friction)
3. Full Pump (Fluid Acceleration)
4. Fluid Pound (Slight)
5. Fluid Pound (Severe)
6. Pumped Off
7. Parted Tubing
8. Barrel Bent or Sticking
9. Barrel Worn or Split
10. Gas Locked
11. Gas Interference (Slight)
12. Gas Interference (Severe)
13. Traveling Valve or Plunger Leak
14. Traveling Valve Leak and Unanchored Tubing
15. Traveling and Standing Valve Balls Split in Half
16. Standing Valve Leak
17. Standing Valve Leak and Gas Interference
18. Standing Valve, Traveling Valve Leak, or Gas Interference
19. Pump Hitting Down
20. Pump Hitting Up
21. Pump Sanded Up
22. Pump Worn (Slight)
23. Pump Worn (Severe)
24. Pump Plunger Sticking on the Upstroke
25. Pump Incomplete Fillage
26. Tubing Anchor Malfunction
27. Choked Pump
28. Hole in Barrel or Plunger Pulling out of Barrel
29. Inoperative Pump
30. Pump Hitting Up and Down
31. Inoperative Pump, Hitting Down

See the text for the complete list of conditions. (A) Normal; (B) Fluid pound (Slight); (C) Fluid pound (Severe); (D) Pumped off; (E) Gas interference (Severe); (F) Traveling valve or plunger leak; (G) Standing valve, traveling valve leak, or gas interference; (H) Pump hitting down; (I) Hole in barrel or plunger pulling out of barrel; (J) Inoperative pump; (K) Pump hitting up and down; (L) Inoperative pump, hitting down.

12.1.3. Problem statement

12.2. Feature engineering

Table 12.1

| No. | Summary | Type | Cards to learn | Cards to test | Test error | References |
|---|---|---|---|---|---|---|
| 1 | Metric is sum of differences in both dimensions | Library | — | — | — | Keating et al. (1991) |
| 2 | Fourier series or gray level | Library | — | — | — | Dickinson and Jennings (1990) |
| 3 | Geometric moments | Library | — | — | 2.6% | Abello et al. (1993) |
| 4 | Fourier series and geometric characteristics | Library | — | 1500 | 13.4% | de Lima et al. (2012) |
| 5 | Extremal points | Library | ? | 2166 | 5% | Schnitman et al. (2003) |
| 6 | Average over segments | Model | 2400 | 3701 | 2.2% | Bezerra Marco et al. (2009); Souza et al. (2009) |
| 7 | Centroid and geometric characteristics | Model | 230 | 100 | 11% | Gao et al. (2015) |
| 8 | Fourier series | Model | 102 | ? | 5% | de Lima et al. (2009) |
| 9 | Line angles | Segment | 6132 | ? | 24% | Reges Galdir et al. (2015) |
| 10 | Statistical moments | Segment | 88 | 40 | 2% | Li et al. (2013a) |
| 11 | Geometric characteristics | Segment | — | — | — | Li et al. (2013a) |

12.2.1. Library-based methods
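Library-based methods compare a new card against a library of labelled reference cards. Row 1 of Table 12.1 uses a metric that sums the differences in both dimensions. The following is a minimal nearest-neighbour sketch of that idea. It assumes every card has already been resampled to the same number of points with a common starting point, and it takes absolute differences (also an assumption). The function names and the toy labels "X" and "Y" are hypothetical.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Card = Sequence[Tuple[float, float]]


def card_distance(a: Card, b: Card) -> float:
    """Sum of absolute differences in displacement and in load, point by point.

    Assumes both cards were resampled to the same number of points and
    start at the same position in the stroke.
    """
    if len(a) != len(b):
        raise ValueError("cards must be resampled to the same number of points")
    return sum(abs(xa - xb) + abs(ya - yb) for (xa, ya), (xb, yb) in zip(a, b))


def classify_by_library(card: Card, library: Dict[str, List[Card]]) -> Optional[str]:
    """Return the label of the library card closest to `card` (None if the library is empty)."""
    best_label: Optional[str] = None
    best_dist = float("inf")
    for label, examples in library.items():
        for example in examples:
            d = card_distance(card, example)
            if d < best_dist:
                best_label, best_dist = label, d
    return best_label
```

With the toy library `{"X": [[(0, 0), (0, 1), (1, 1), (1, 0)]], "Y": [[(0, 0), (0, 1), (1, 1), (0.3, 0)]]}`, the query `[(0, 0), (0, 0.95), (1, 1), (0.95, 0)]` lies at distance 0.1 from X and 0.7 from Y, so it is labelled "X".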

12.2.2. Model-based methods
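Fourier-series features appear in rows 2, 4 and 8 of Table 12.1 and in feature sets S1 and S5 to S7. One common way to compute them treats the card outline as a complex signal z_k = x_k + i·y_k and takes the magnitudes of its low-order discrete Fourier coefficients. This is shown here as a sketch, not as the exact formulation of the cited works. The assumptions are as follows: the outline is resampled to N evenly spaced points; the mean coefficient c_0 is dropped for translation invariance; and magnitudes are divided by the larger first-harmonic magnitude for scale invariance. The function name is hypothetical.

```python
import cmath
import math
from typing import List, Sequence, Tuple


def fourier_descriptors(points: Sequence[Tuple[float, float]], harmonics: int) -> List[float]:
    """Normalised magnitudes |c_h| and |c_-h| for h = 1..harmonics of a closed outline."""
    n = len(points)
    if harmonics < 1 or n < 2 * harmonics + 1:
        raise ValueError("need harmonics >= 1 and at least 2 * harmonics + 1 points")
    z = [complex(x, y) for x, y in points]

    def coeff(h: int) -> complex:
        # c_h = (1/N) * sum_k z_k * exp(-2*pi*i*h*k/N); c_0 (the mean) is never used
        return sum(zk * cmath.exp(-2j * math.pi * h * k / n) for k, zk in enumerate(z)) / n

    scale = max(abs(coeff(1)), abs(coeff(-1)))  # scale normalisation
    if scale == 0:
        raise ValueError("first harmonic vanishes; cannot normalise")
    features: List[float] = []
    for h in range(1, harmonics + 1):
        features.append(abs(coeff(h)) / scale)
        features.append(abs(coeff(-h)) / scale)
    return features
```

For a circle sampled at 16 points, `fourier_descriptors(circle, 2)` gives approximately `[1.0, 0.0, 0.0, 0.0]`. Translating or uniformly scaling the circle leaves the result unchanged.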

12.2.3. Segment-based methods

12.2.4. Other methods

12.2.5. Selection of features

- S1: Fourier series with 1 moment and all 5 geometrical features of Gao et al. (2015).
- S2: The 5 geometrical features of Gao et al. (2015).
- S3: The centroid coordinates of the card.
- S4: The centroid coordinates and the average line length.
- S5: Fourier series with 1 moment, the centroid coordinates, and the average line length.
- S6: Fourier series with 1 moment and the centroid coordinates.
- S7: Fourier series with 1 moment, the centroid coordinates, and the two area integrals.

See the text for an explanation.
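The sketch below illustrates how a feature set such as S4 could be turned into a vector for a classifier. It reads the "centroid coordinates" as the mean of the sampled card points and the "average line length" as the mean distance from that centroid to each point. Both readings are assumptions, and the function name is hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def feature_set_s4(points: Sequence[Tuple[float, float]]) -> List[float]:
    """Hypothetical reading of S4: [centroid x, centroid y, average line length].

    Assumptions: the centroid is the mean of the sampled points, and the
    average line length is the mean centroid-to-point distance.
    """
    if not points:
        raise ValueError("empty card")
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    avg_len = sum(math.hypot(x - cx, y - cy) for x, y in points) / n
    return [cx, cy, avg_len]
```

For the unit square `[(0, 0), (0, 1), (1, 1), (1, 0)]` it returns `[0.5, 0.5, 0.7071...]`. Under the same reading, S3 corresponds to the first two entries.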

12.3. Project method to validate our model

12.3.1. Data collection

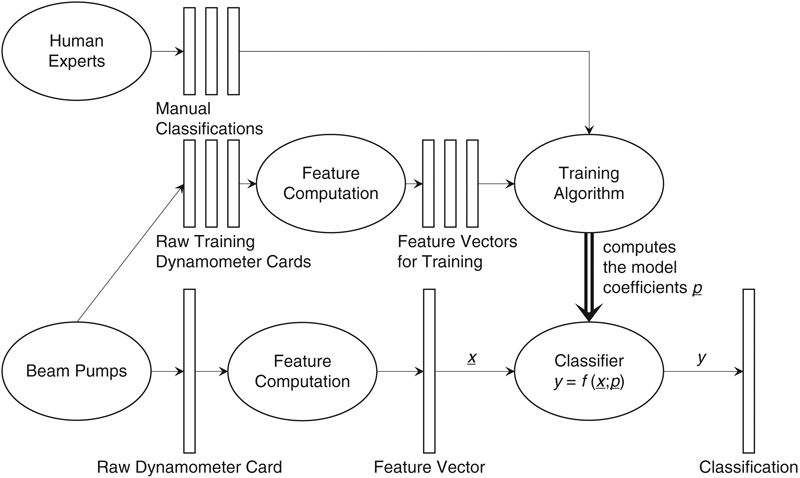

12.3.2. Generation of training data

12.3.3. Feature engineering

12.3.4. Machine learning

Table 12.2

| Category | Training samples | Testing samples | Incorrect |
|---|---|---|---|
| Normal | 8557 | 1529 | 0 |
| Fluid pound (Slight) | 5347 | 955 | 0 |
| Fluid pound (Severe) | 93 | 15 | 0 |
| Inoperative pump | 1981 | 379 | 0 |
| Pump hitting down | 1740 | 303 | 2 |
| Pump hitting up and down | 2258 | 407 | 2 |
| Inoperative pump, hitting down | 9045 | 1626 | 1 |
| Traveling valve or plunger leak | 98 | 15 | 1 |
| Standing valve, traveling valve leak, or gas interference | 345 | 62 | 0 |
| Pumped off | 234 | 39 | 1 |
| Hole in barrel or plunger pulling out of barrel | 132 | 20 | 0 |
| Gas interference (Severe) | 101 | 11 | 0 |
| Total | 29931 | 5361 | 7 |

The table lists the number of training and testing samples for each class. The model classified every training sample correctly and misclassified 7 of the 5361 testing samples, as shown in the last column.
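The headline accuracy follows directly from the totals in Table 12.2. The short check below uses counts copied from the table; the function and variable names are ours, for illustration only.

```python
from typing import Dict, Tuple

# Counts transcribed from Table 12.2: (training samples, testing samples, incorrect).
TABLE_12_2: Dict[str, Tuple[int, int, int]] = {
    "Normal": (8557, 1529, 0),
    "Fluid pound (Slight)": (5347, 955, 0),
    "Fluid pound (Severe)": (93, 15, 0),
    "Inoperative pump": (1981, 379, 0),
    "Pump hitting down": (1740, 303, 2),
    "Pump hitting up and down": (2258, 407, 2),
    "Inoperative pump, hitting down": (9045, 1626, 1),
    "Traveling valve or plunger leak": (98, 15, 1),
    "Standing valve, traveling valve leak, or gas interference": (345, 62, 0),
    "Pumped off": (234, 39, 1),
    "Hole in barrel or plunger pulling out of barrel": (132, 20, 0),
    "Gas interference (Severe)": (101, 11, 0),
}


def overall_test_accuracy(table: Dict[str, Tuple[int, int, int]]) -> float:
    """Fraction of all testing samples that were classified correctly."""
    tested = sum(test for _, test, _ in table.values())
    wrong = sum(bad for _, _, bad in table.values())
    return 1 - wrong / tested


def per_class_test_accuracy(table: Dict[str, Tuple[int, int, int]]) -> Dict[str, float]:
    """Fraction of testing samples classified correctly within each class."""
    return {name: 1 - bad / test for name, (_, test, bad) in table.items()}


print(f"overall: {overall_test_accuracy(TABLE_12_2):.2%}")  # overall: 99.87%
per_class = per_class_test_accuracy(TABLE_12_2)
worst = min(per_class, key=per_class.get)
print(f"lowest: {worst} ({per_class[worst]:.1%})")  # lowest: Traveling valve or plunger leak (93.3%)
```

The 7 errors in 5361 testing samples give 99.87%, which rounds to the 99.9% quoted in the abstract. Per class, the lowest test accuracy is on "Traveling valve or plunger leak", where 1 of 15 testing cards was misclassified.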

12.3.5. Summary of methodology