Data exploration is the process of analyzing and refactoring structured or non-structured data and is commonly done before going onto actual data analysis. Operations such as performing a duplicate cleanup and finding whitespace data can be done at the data exploration stage.

We can keep data exploration as the pre-emptive operation before performing heavy-cost operations such as running various batches and jobs, which is quite expensive in computing, and finding irrelevant data in that stage would be painful.

Data exploration can be very useful in various scenarios. Suppose you have large dataset of DNA diversion of people living in New York or terabytes of data from NASA about Mars' temperature records. There is a huge possibility that the data is error prone. So, instead of directly uploading terabytes of data to the program written in R, we can try to make the data less error prone, which will surely process faster results.

Concepts such as those in Chapter 1, The RethinkDB Architecture and Data Model and Chapter 2, RethinkDB Query Language can be used to perform some data exploration and ad-hoc queries.

However, we will not just cover the duplicate data removal operation. In this chapter we are also going to learn how to perform data exploration using RethinkDB along with:

- Importing a large dataset in RethinkDB using the

HTTPmethod - Importing a large dataset from a filesystem in RethinkDB

- Performing various queries to explore the data

Along with this, we will also cover a free web service to generate lots of mock data with random values for our task. Before jumping into anything else, let's dive in and create some mock data for our exploration, say 1,000 records.

We used the mockaroo online mock data generator to create some mock data. Unfortunately, it only allows 1,000 records for free so we were limited to that. For our case study, we have generated data for employees with some basic personal information, along with salary.

Refer to the following image to understand the schema of data:

Refer to the following link: https://www.mockaroo.com

As you can see in the preceding image, we have chosen various keys related to employees and downloaded it as a JSON file. It will return the data format as 1,000 objects residing in one array; that is exactly what we need to dump the data in the RethinkDB database.

RethinkDB provides two ways in which you can import your data into the RethinkDB database for further analysis:

- Importing data in RethinkDB using HTTP

- Importing data via file read

In this section, we will cover both of them in detail along with uploading our dataset to RethinkDB.

In Chapter 2, RethinkDB Query Language, we covered Calling HTTP APIs using ReQL, which is quite an impressive feature. Can we use the same method to import our data from an external resource? Yes, we can.

We have already generated the sample mock data using http://mockaroo.com/ in the previous section with 1,000 JSON documents; let's upload it on an external resource. I am going to use GitHub here, but you are free to use other options.

I have uploaded the file in my repository and gotten the static file link. You can view the same on this link as well as the figure shown next:

The link is available at https://github.com/codeforgeek/sample-mockup-data:

We have everything in place. All we need now is our RethinkDB database and table. I am not going to use a test database this time; I am going to create one using the administrative screen. Lift up your RethinkDB Server and visit the administrative screen.

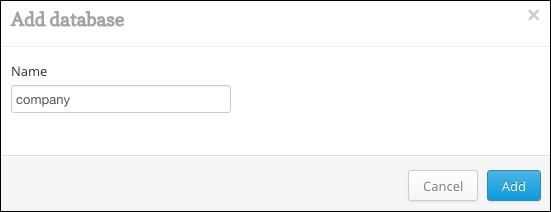

In the table section of the administrative screen, you can click on Tables and create a new database and table in it. I have created a database named company:

Here is my query to import our data from GitHub into our database:

r.db("company").table("employees")

.insert(r.http('https://raw.githubusercontent.com/codeforgeek/sample-mockup-data/master/employeeRecordMockup.json',

{

timeout : 220,

resultFormat : 'json'

}))

Execute the query from Data Explorer and sit back or go get a coffee! It will take time depending on the Internet connection and the dataset.

Here are a few points regarding the query as you can see, we have passed two extra parameters:

- The first parameter is

timeout, which is going to vary from system to system. This determines that the connection stays alive to external resources if there is no activity for a particular time. - The second parameter is very important if your response doesn't have the header set by default. In our case, we are actually calling a file. Hence, the response header is not JSON, which is what we need. So we have set the response format to JSON using

resultFormat:

As you can see, it took me about 2.32 seconds to get all those records in our table. It may vary for various reasons. One of the key points is the size of the data over the HTTP connection. RethinkDB uses streams; hence, the size of data is really not an issue until there is no timeout.

This is one of the cases explained for importing data, but most of the time; you are going to have it in your filesystem. Let us look at importing data using files.

RethinkDB provides the import utility to read the file from the filesystem and dump it into the table. This utility comes in the Python driver at the time of writing this book. You need to install Python and then the RethinkDB driver for the same before moving ahead.

Assuming you have Python installed, the following command will install the RethinkDB driver:

sudo pip install rethinkdb

The command structure of the file import utility is as follows:

rethinkdb import -f FILE_PATH { parameters }

The following are mandatory parameters if you are importing it from a local system in one RethinkDB instance:

--table DB.table_name--format JSON/CSV

The following are optional but may be mandatory in some cases:

-c HOST:PORT: Connect to the given host and port-p: Password file path--pkey: Primary key--shards: Provide the number of shards--replicas: Provide the number of replicas

We will be looking at these parameters in the next chapter, covering administration in detail. Here is the command to import our file into the respective table:

rethinkdb import -f employeeRecordMockup.json --table company.employees_importviafile

If the table is already present, apply --force at the end of the command. After running the previous command using force parameter because I already had a table in the database I got the following output:

Let us check the records in our RethinkDB table. For this, we are going to use count().

In the ReQL method, we have to find out whether the data is inserted correctly or not. Here is the query:

r.db("company").table("employees_importviafile").count()

We have successfully imported the data into our RethinkDB table using the HTTP method and using the import command. Let us move ahead and perform some data exploration on the same.