Chapter 6

Creating and Configuring Storage Devices

Storage has always been a critical element for any environment, and the storage infrastructure supporting vSphere is no different. This chapter will help you with all the elements required for a proper storage subsystem design, starting with vSphere storage fundamentals at the datastore and VM level and extending to best practices for configuring the storage array. Good storage design is critical for anyone building a virtual datacenter.

In this chapter, you will learn to

- Differentiate and understand the fundamentals of shared storage, including SANs and NAS

- Understand vSphere storage options

- Configure storage at the vSphere layer

- Configure storage at the VM layer

- Leverage best practices for SAN and NAS storage with vSphere

Reviewing the Importance of Storage Design

Storage design has always been important, but it becomes more so as vSphere is used for larger workloads, for mission-critical applications, for larger clusters, and as the basis for offerings based on Infrastructure as a Service (IaaS) in a nearly 100 percent virtualized datacenter. You can probably imagine why this is the case:

Advanced Capabilities Many of vSphere's advanced features depend on shared storage; vSphere High Availability (HA), vSphere Distributed Resource Scheduler (DRS), vSphere Fault Tolerance (FT), and VMware vCenter Site Recovery Manager all have a critical dependency on shared storage.

Performance People understand the benefits that virtualization brings—consolidation, higher utilization, more flexibility, and higher efficiency. But often, people have initial questions about how vSphere can deliver performance for individual applications when it is inherently consolidated and oversubscribed. Likewise, the overall performance of the VMs and the entire vSphere cluster both depend on shared storage, which is also highly consolidated and oversubscribed.

Availability The overall availability of your virtualized infrastructure (and, by extension, of the VMs running on that infrastructure) depends on the shared storage infrastructure. Designing high availability into this infrastructure element is paramount. If the storage is not available, vSphere HA will not be able to recover and the aggregate community of VMs can be affected. (We discuss vSphere HA in detail in Chapter 7, “Ensuring High Availability and Business Continuity.”)

While design choices at the server layer can make the vSphere environment relatively more or less optimal, design choices for shared resources such as networking and storage can sometimes make the difference between virtualization success and failure. This is especially true for storage because of its critical role. The importance of storage design and storage design choices remains true regardless of whether you are using storage area networks (SANs), which present shared storage as disks or logical units (LUNs); network attached storage (NAS), which presents shared storage as remotely accessed file systems; or a mix of both. Done correctly, you can create a shared storage design that lowers the cost and increases the efficiency, performance, availability, and flexibility of your vSphere environment.

This chapter breaks down these topics into the following main sections:

- “Examining Shared Storage Fundamentals” covers broad topics of shared storage that are critical with vSphere, including hardware architectures, protocol choices, and key terminology. Although these topics apply to any environment that uses shared storage, understanding these core technologies is a prerequisite to understanding how to apply storage technology in a vSphere implementation.

- “Implementing vSphere Storage Fundamentals” covers how storage technologies covered in the previous main section are applied and used in vSphere environments. This main section is broken down into a section on VMFS datastores (“Working with VMFS Datastores”), raw device mappings (“Working with Raw Device Mappings”), NFS datastores (“Working with NFS Datastores”), and VM-level storage configurations (“Working with VM-Level Storage Configuration”).

- “Leveraging SAN and NAS Best Practices” covers how to pull together all the topics discussed to move forward with a design that will support a broad set of vSphere environments.

Examining Shared Storage Fundamentals

vSphere 5.5 offers numerous storage choices and configuration options relative to previous versions of vSphere or to nonvirtualized environments. These choices and configuration options apply at two fundamental levels: the virtualization layer and the VM layer. The storage requirements for a vSphere environment and the VMs it supports are unique, making broad generalizations impossible. The requirements for any given vSphere environment span use cases ranging from virtual servers and desktops to templates and virtual CD/DVD (ISO) images. The virtual server use cases vary from light utility VMs with few storage performance considerations to the largest database workloads possible, with incredibly important storage layout considerations.

Let's start by examining this at a fundamental level. Figure 6.1 shows a simple three-host vSphere environment attached to shared storage.

It's immediately apparent that the ESXi hosts and the VMs will be contending for the shared storage asset. In a way similar to how ESXi can consolidate many VMs onto a single ESXi host, the shared storage consolidates the storage needs of all the VMs.

FIGURE 6.1 When ESXi hosts are connected to that same shared storage, they share its capabilities.

When sizing or designing the storage solution, you focus on attributes like capacity (gigabytes or terabytes) and performance, which is measured in bandwidth (megabytes per second, or MBps), throughput (I/O operations per second, or IOPS), and latency (in milliseconds). It sometimes goes without saying, but designing for availability, redundancy, and fault tolerance is also of paramount importance.

DETERMINING PERFORMANCE REQUIREMENTS

How do you determine the storage performance requirements of an application that will be virtualized, a single ESXi host, or even a complete vSphere environment? There are many rules of thumb for key applications, and the best practices for every application could fill a book. Here are some quick considerations:

- Online transaction processing (OLTP) databases need low latency (as low as you can get, but a few milliseconds is a good target). They are also sensitive to input/output operations per second (IOPS), because their I/O size is small (4 KB to 8 KB). TPC-C and TPC-E benchmarks generate this kind of I/O pattern.

- Decision support system/business intelligence databases and SQL Server instances that support Microsoft Office SharePoint Server need high bandwidth, which can be hundreds of megabytes per second because their I/O size is large (64 KB to 1 MB). They are not particularly sensitive to latency; TPC-H benchmarks generate the kind of I/O pattern used by these use cases.

- Copying files, deploying from templates, using Storage vMotion, and backing up VMs (within the guest or from a proxy server via vSphere Storage APIs) without using array-based approaches generally all need high bandwidth. In fact, the more, the better.

So, what does vSphere need? The answer is basic—the needs of the vSphere environment are the aggregate sum of all the use cases across all the VMs, which can cover a broad set of requirements. If the VMs are all small-block workloads and you don't do backups inside guests (which generate large-block workloads), then it's all about IOPS. If the VMs are all large-block workloads, then it's all about MBps. More often than not, a virtual datacenter has a mix, so the storage design should be flexible enough to deliver a broad range of capabilities and capacity—but without overbuilding.
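To make the relationship between small-block and large-block workloads concrete, here is a minimal sketch that converts an IOPS rate and an I/O size into bandwidth. The function name and the two sample workloads (5,000 IOPS at 8 KB and 400 IOPS at 512 KB) are our own illustrative assumptions, not measurements; the I/O sizes simply fall within the ranges quoted in the list above.

```python
def bandwidth_mbps(iops, io_size_kb):
    """Return bandwidth in MBps for a given IOPS rate and I/O size (1 MB = 1024 KB)."""
    return iops * io_size_kb / 1024.0


# Hypothetical OLTP-style workload: many small I/Os, modest bandwidth.
oltp_mbps = bandwidth_mbps(iops=5000, io_size_kb=8)

# Hypothetical decision-support workload: few large I/Os, high bandwidth.
dss_mbps = bandwidth_mbps(iops=400, io_size_kb=512)

print(f"OLTP: {oltp_mbps:.1f} MBps, DSS: {dss_mbps:.1f} MBps")
```

The small-block workload issues more than ten times as many I/Os yet moves far less data, which is why it tends to be sized by IOPS while the large-block workload is sized by MBps.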

How can you best determine what you will need? With small workloads, too much planning can result in overbuilding. You can use simple tools, including VMware Capacity Planner, Windows Perfmon, and top in Linux, to determine the I/O pattern of the applications and OSes that will be virtualized.

Also, if you have many VMs, consider the aggregate performance requirements, and don't just look at capacity requirements. After all, 1,000 VMs with 10 IOPS each need an aggregate of 10,000 IOPS, which is 50 to 80 fast spindles' worth, regardless of the capacity (in gigabytes or terabytes) needed.
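The arithmetic behind that estimate can be sketched as follows. The per-drive figures are the rule-of-thumb random I/O numbers quoted later in this chapter; the function and dictionary names are our own, and the calculation deliberately ignores RAID overhead and cache effects.

```python
import math

# Rule-of-thumb random read/write IOPS per drive, as quoted in this chapter.
IOPS_PER_DRIVE = {"7.2k_sata": 80, "10k": 120, "15k": 180}


def spindles_needed(vm_count, iops_per_vm, drive_type):
    """Return the number of drives needed to serve the aggregate IOPS (no RAID overhead)."""
    aggregate_iops = vm_count * iops_per_vm
    return math.ceil(aggregate_iops / IOPS_PER_DRIVE[drive_type])


for drive_type in ("15k", "10k", "7.2k_sata"):
    print(drive_type, spindles_needed(1000, 10, drive_type))
```

With 15K RPM and 10K RPM drives the result lands in the mid-50s to mid-80s, in line with the rough range given above, whatever capacity those drives happen to provide.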

Use large pool designs for generic, light workload VMs.

Conversely, focused, larger VM I/O workloads (such as virtualized SQL Server instances, SharePoint, Exchange, and other use cases) should be where you spend some time planning and thinking about layout. There are numerous VMware published best practices and a great deal of VMware partner reference architecture documentation that can help with virtualizing Exchange, SQL Server, Oracle, and SAP workloads. We have listed a few resources for you:

- Exchange

www.vmware.com/solutions/business-critical-apps/exchange/index.html

- SQL Server

www.vmware.com/solutions/business-critical-apps/sql-virtualization/overview.html

- Oracle

www.vmware.com/solutions/business-critical-apps/oracle-virtualization/index.html

- SAP

www.vmware.com/solutions/business-critical-apps/sap-virtualization/index.html

As with performance, the overall availability of the vSphere environment and the VMs depends on the same shared storage infrastructure, so a robust design is paramount. If the storage is not available, vSphere HA will not be able to recover and the consolidated community of VMs will be affected.

Note that we said the “consolidated community of VMs.” That statement underscores the need to put more care and focus on the availability of the configuration than on the performance or capacity requirements. In virtual configurations, the availability impact of storage issues is more pronounced, so you must use greater care in an availability design than in the physical world. It's not just one workload being affected—it's multiple workloads.

At the same time, advanced vSphere options such as Storage vMotion and advanced array techniques allow you to add, move, or change storage configurations nondisruptively, making it unlikely that you'll create a design where you can't nondisruptively fix performance issues.

Before going too much further, it's important to cover several basics of storage:

- Local storage versus shared storage

- Common storage array architectures

- RAID technologies

- Midrange and enterprise storage array design

- Protocol choices

We'll start with a brief discussion of local storage versus shared storage.

Comparing Local Storage with Shared Storage

An ESXi host can have one or more storage options actively configured, including the following:

- Local SAS/SATA/SCSI storage

- Fibre Channel

- Fibre Channel over Ethernet (FCoE)

- iSCSI using software and hardware initiators

- NAS (specifically, NFS)

- InfiniBand

Traditionally, local storage has been used in a limited fashion with vSphere because so many of vSphere's advanced features—such as vMotion, vSphere HA, vSphere DRS, and vSphere FT—required shared external storage. With vSphere Auto Deploy and the ability to deploy ESXi images directly to RAM at boot time coupled with host profiles to automate the configuration, in some environments local storage from vSphere 5.0 serves even less of a function than it did in previous versions.

With vSphere 5.0, VMware introduced a way to utilize local storage through the installation of a virtual appliance called the vSphere Storage Appliance, or simply VSA. At a high level, the VSA takes local storage and presents it back to ESXi hosts as a shared NFS mount. There are some limitations, however. It can be configured with only two or three hosts, there are strict rules around the hardware that can run the VSA, and on top of this, it is licensed as a separate product. While it does utilize the underused local storage of servers, the use case for the VSA simply is not valid for many organizations.

vSphere 5.5, however, has two new features that are significantly more relevant to organizations than the VSA. vSphere Flash Read Cache and VSAN both take advantage of local storage, in particular, local flash storage. vSphere Flash Read Cache takes flash-based storage and allows administrators to allocate portions of it as a read cache for VM read I/O. VSAN builds on the idea behind the VSA and presents the local storage as a distributed datastore across many hosts. While this concept is similar to the VSA, the use of a virtual appliance is not required, nor are NFS mounts; it's entirely built into the ESXi hypervisor. Think of this as shared internal storage. Later in this chapter we'll explain how VSAN works, and you can find information on vSphere Flash Read Cache in Chapter 11, “Managing Resource Allocation.”

So, how carefully do you need to design your local storage? The answer is simple—generally speaking, careful planning is not necessary for storage local to the ESXi installation. ESXi stores very little locally, and by using host profiles and distributed virtual switches, it can be easy and fast to replace a failed ESXi host. During this time, vSphere HA will make sure the VMs are running on the other ESXi hosts in the cluster. However, taking advantage of new features within vSphere 5.5 such as VSAN will certainly require careful consideration. Storage underpins your entire vSphere environment. Make the effort to ensure that your shared storage design is robust, taking into consideration internal- and external-based shared storage choices.

Real World Scenario

NO LOCAL STORAGE? NO PROBLEM!

What if you don't have local storage? (Perhaps you have a diskless blade system, for example.) There are many options for diskless systems, including booting from Fibre Channel/iSCSI SAN and network-based boot methods like vSphere Auto Deploy (discussed in Chapter 2, “Planning and Installing VMware ESXi”). There is also the option of using USB boot, a technique that we've employed on numerous occasions in lab and production environments. Both Auto Deploy and USB boot give you some flexibility in quickly reprovisioning hardware or deploying updated versions of vSphere, but there are some quirks, so plan accordingly. Refer to Chapter 2 for more details on selecting the configuration of your ESXi hosts.

Shared storage is the basis for most vSphere environments because it supports the VMs themselves and because it is a requirement for many of vSphere's features. Shared external storage in SAN configurations (which encompasses Fibre Channel, FCoE, and iSCSI) and NAS (NFS) is always highly consolidated. This makes it efficient. SAN/NAS or VSAN can take the direct attached storage in physical servers that is 10 percent utilized and consolidate it to 80 percent utilization.

As you can see, shared storage is a key design point. Whether it's shared external storage or you're planning to share the local storage system out, it's important to understand some of the array architectures that vendors use to provide shared storage to vSphere environments. The high-level overview in the following section is neutral on specific storage array vendors because the internal architectures vary tremendously.

Defining Common Storage Array Architectures

This section is remedial for anyone with basic storage experience, but it's needed for vSphere administrators with no storage knowledge. For people unfamiliar with storage, the topic can be a bit disorienting at first. Servers across vendors tend to be relatively similar, but the same logic can't be applied to the storage layer because core architectural differences between storage vendor architectures are vast. In spite of that, storage arrays have several core architectural elements that are consistent across vendors, across implementations, and even across protocols.

The elements that make up a shared storage array consist of external connectivity, storage processors, array software, cache memory, disks, and bandwidth:

External Connectivity The external (physical) connectivity between the storage array and the hosts (in this case, the ESXi hosts) is generally Fibre Channel or Ethernet, though InfiniBand and other rare protocols exist. The characteristics of this connectivity define the maximum bandwidth (given no other constraints, and there usually are other constraints) of the communication between the ESXi host and the shared storage array.

Storage Processors Different vendors have different names for storage processors, which are considered the brains of the array. They handle the I/O and run the array software. In most modern arrays, the storage processors are not purpose-built application-specific integrated circuits (ASICs) but instead are general-purpose CPUs. Some arrays use PowerPC, some use specific ASICs, and some use custom ASICs for specific purposes. But in general, if you cracked open an array, you would most likely find an Intel or AMD CPU.

Array Software Although hardware specifications are important and can define the scaling limits of the array, just as important are the functional capabilities the array software provides. The capabilities of modern storage arrays are vast—similar in scope to vSphere itself—and vary wildly among vendors. At a high level, the following list includes some examples of these array capabilities and key functions:

- Remote storage replication for disaster recovery. These technologies come in many flavors with features that deliver varying capabilities. These include varying recovery point objectives (RPOs)—which reflect how current the remote replica is at any time, ranging from synchronous to asynchronous and continuous. Asynchronous RPOs can range from less than minutes to more than hours, and continuous is a constant remote journal that can recover to varying RPOs. Other examples of remote replication technologies are technologies that drive synchronicity across storage objects (or “consistency technology”), compression, and many other attributes, such as integration with VMware vCenter Site Recovery Manager.

- Snapshot and clone capabilities for instant point-in-time local copies for test and development and local recovery. These also share some of the ideas of the remote replication technologies like “consistency technology,” and some variations of point-in-time protection and replicas also have TiVo-like continuous journaling locally and remotely where you can recover/copy any point in time.

- Capacity-reduction techniques such as archiving and deduplication.

- Automated data movement between performance/cost storage tiers at varying levels of granularity.

- LUN/file system expansion and mobility, which means reconfiguring storage properties dynamically and nondisruptively to add capacity or performance as needed.

- Thin provisioning, which typically involves allocating storage on demand as applications and workloads require it.

- Storage quality of service (QoS), which means prioritizing I/O to deliver a given MBps, IOPS, or latency.

The array software defines the “persona” of the array, which in turn impacts core concepts and behavior. Arrays generally have a “file server” persona (sometimes with the ability to do some block storage by presenting a file as a LUN) or a “block” persona (generally with no ability to act as a file server). In some cases, arrays are combinations of file servers and block devices.

Cache Memory Every array differs as to how cache memory is implemented, but all have some degree of nonvolatile memory used for various caching functions—delivering lower latency and higher IOPS throughput by buffering I/O using write caches and storing commonly read data to deliver a faster response time using read caches. Nonvolatility (meaning ability to survive a power loss) is critical for write caches because the data is not yet committed to disk, but it's not critical for read caches. Cached performance is often used when describing shared storage array performance maximums (in IOPS, MBps, or latency) in specification sheets. These results generally do not reflect real-world scenarios. In most real-world scenarios, performance tends to be dominated by the disk performance (the type and number of disks) and is helped by write caches in most cases, but only marginally by read caches (with the exception of large relational database management systems, which depend heavily on read-ahead cache algorithms). One vSphere use case that is helped by read caches is a situation where many boot images are stored only once (through the use of vSphere or storage array technology), but this is also a small subset of the overall VM I/O pattern.

Disks Arrays differ as to which type of disks (often called spindles) they support and how many they can scale to support. Drives are described according to two different attributes. First, drives are often separated by the drive interface they use: Fibre Channel, serial-attached SCSI (SAS), and serial ATA (SATA). Second, drives (with the exception of enterprise flash drives, or EFDs) are described by their rotational speed, noted in revolutions per minute (RPM). Fibre Channel drives typically come in 15K RPM and 10K RPM variants, SATA drives are usually found in 5400 RPM and 7200 RPM variants, and SAS drives are usually 15K RPM or 10K RPM variants. EFDs, which are now mainstream, are solid state and have no moving parts; therefore, rotational speed does not apply. The type and number of disks are very important. Coupled with how they are configured, this determines how a storage object (either a LUN for a block device or a file system for a NAS device) performs. Shared storage vendors generally use disks from the same disk vendors, so this is an area of commonality across shared storage vendors. The following list is a quick reference on what to expect under a random read/write workload from a given disk drive:

- 7,200 RPM SATA: 80 IOPS

- 10K RPM SATA/SAS/Fibre Channel: 120 IOPS

- 15K RPM SAS/Fibre Channel: 180 IOPS

- A commercial solid-state drive (SSD) based on Multi-Level Cell (MLC) technology: 1,000–2,000 IOPS

- An Enterprise Flash Drive (EFD) based on Single-Level Cell (SLC) technology and much deeper, very high-speed memory buffers: 6,000–30,000 IOPS

Bandwidth (Megabytes per Second) Performance tends to be more consistent across drive types when large-block, sequential workloads are used (such as single-purpose workloads like archiving or backup to disk), so in these cases, large SATA drives deliver strong performance at a low cost.

Explaining RAID

Redundant Array of Inexpensive (sometimes “Independent”) Disks (RAID) is a fundamental and critical method of storing data redundantly across multiple drives, either as full copies or as parity information. RAID is used to increase data availability (by protecting against the failure of a drive) and to scale performance beyond that of a single drive. Every array implements various RAID schemes (even if it is largely invisible in file server persona arrays where RAID is done below the file system, which is the primary management element).

Think of it this way: Disks are mechanical, spinning, rust-colored surfaces. The read/write heads are flying microns above the surface while reading minute magnetic field variations and writing data by affecting surface areas also only microns in size.

THE “MAGIC” OF DISK DRIVE TECHNOLOGY

It really is a technological miracle that magnetic disks work at all. What a disk does all day long is analogous to a pilot flying a 747 at 600 miles per hour 6 inches off the ground and reading pages in a book while doing it!

In spite of the technological wonder of hard disks, they have unbelievable reliability statistics. But they do fail—and fail predictably, unlike other elements of a system. RAID schemes address this by leveraging multiple disks together and using copies of data to support I/O until the drive can be replaced and the RAID protection can be rebuilt. Each RAID configuration tends to have different performance characteristics and different capacity overhead impact.

We recommend that you view RAID choices as a significant factor in your design. Most arrays layer additional constructs on top of the basic RAID protection. (These constructs have many different names, but common ones are metas, virtual pools, aggregates, and volumes.)

Remember, all the RAID protection in the world won't protect you from an outage if the connectivity to your host is lost, if you don't monitor and replace failed drives and allocate drives as hot spares to automatically replace failed drives, or if the entire array is lost. It's for these reasons that it's important to design the storage network properly, to configure hot spares as advised by the storage vendor, and to monitor for and replace failed elements. Always consider a disaster-recovery plan and remote replication to protect from complete array failure.

Let's examine the RAID choices:

RAID 0 This RAID level offers no redundancy and no protection against drive failure (see Figure 6.2). In fact, it has a higher aggregate risk than a single disk because any single disk failing affects the whole RAID group. Data is spread across all the disks in the RAID group, which is often called a stripe. Although it delivers fast performance, this is the only RAID type that is usually not appropriate for any production vSphere use because of the availability profile.

FIGURE 6.2 In a RAID 0 configuration, the data is striped across all the disks in the RAID set, providing very good performance but very poor availability.

RAID 1, 1+0, 0+1 These mirrored RAID levels offer high degrees of protection but at the cost of 50 percent loss of usable capacity (see Figure 6.3). This is versus the raw aggregate capacity of the sum of the capacity of the drives. RAID 1 simply writes every I/O to two drives and can balance reads across both drives (because there are two copies). This can be coupled with RAID 0 to form RAID 1+0 (or RAID 10), which stripes data across pairs of mirrors, or to form RAID 0+1, which mirrors a stripe set. Both can withstand multiple drives failing, but only if the failures do not take out every copy of the same data. In RAID 1+0, data is lost only when both drives of the same mirrored pair fail; in RAID 0+1, a single failure takes a whole stripe set offline, so any later failure in the other stripe set loses data. This makes RAID 1+0 more fault tolerant than RAID 0+1. The other benefit of a mirrored RAID configuration is that, in the case of a failed drive, rebuild times can be very rapid, which shortens periods of exposure.

FIGURE 6.3 This RAID 10 2+2 configuration provides good performance and good availability, but at the cost of 50 percent of the usable capacity.
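To illustrate why RAID 1+0 tolerates more failure combinations than RAID 0+1, the following sketch enumerates every two-drive failure in a four-drive set. It assumes the conventional layouts: RAID 1+0 as a stripe across two mirrored pairs, and RAID 0+1 as a mirror of two two-drive stripe sets. The drive numbering and function names are ours, chosen only for illustration.

```python
from itertools import combinations

DRIVES = range(4)
# The same grouping serves both layouts: in RAID 1+0 each group is a mirrored
# pair; in RAID 0+1 each group is one stripe set (one side of the mirror).
GROUPS = [{0, 1}, {2, 3}]


def raid10_survives(failed):
    """RAID 1+0 survives if every mirrored pair keeps at least one working drive."""
    return all(not pair <= failed for pair in GROUPS)


def raid01_survives(failed):
    """RAID 0+1 survives if at least one stripe set has no failed drive."""
    return any(not (stripe & failed) for stripe in GROUPS)


two_drive_failures = [set(combo) for combo in combinations(DRIVES, 2)]
raid10_ok = sum(raid10_survives(failed) for failed in two_drive_failures)
raid01_ok = sum(raid01_survives(failed) for failed in two_drive_failures)

print(f"RAID 1+0 survives {raid10_ok} of {len(two_drive_failures)} double failures")
print(f"RAID 0+1 survives {raid01_ok} of {len(two_drive_failures)} double failures")
```

Under these assumptions, RAID 1+0 survives four of the six possible double failures, whereas RAID 0+1 survives only the two cases where both failed drives sit in the same stripe set.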

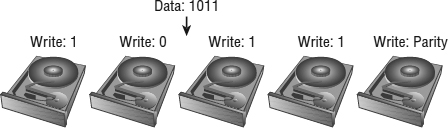

Parity RAID (RAID 5, RAID 6) These RAID levels use a mathematical calculation (an XOR parity calculation) to represent the data across several drives. This tends to be a good compromise between the availability of RAID 1 and the capacity efficiency of RAID 0. RAID 5 calculates the parity across the drives in the set and writes the parity to another drive. This parity block calculation with RAID 5 is rotated among the drives in the RAID 5 set.

Parity RAID schemes can deliver very good performance, but there is always some degree of write penalty. For a full-stripe write, the only penalty is the parity calculation and the parity write, but in a partial-stripe write, the old block contents need to be read, a new parity calculation needs to be made, and all the blocks need to be updated. However, generally modern arrays have various methods to minimize this effect.

Read performance, on the other hand, is generally excellent because a larger number of drives can be read from than with mirrored RAID schemes. RAID 5 nomenclature refers to the number of drives in the RAID group, so Figure 6.4 would be referred to as a RAID 5 4+1 set. In the figure, the storage efficiency (in terms of usable to raw capacity) is 80 percent, which is much better than RAID 1 or 10.

FIGURE 6.4 A RAID 5 4+1 configuration offers a balance between performance and efficiency.
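The capacity efficiency figures quoted for the different RAID types follow from simple ratios of usable to raw drives. A minimal sketch, with function names of our own choosing:

```python
def parity_efficiency(data_drives, parity_drives):
    """Usable-to-raw capacity ratio for a parity RAID group such as 4+1 or 4+2."""
    return data_drives / (data_drives + parity_drives)


def mirror_efficiency(copies=2):
    """Usable-to-raw capacity ratio for mirrored RAID (RAID 1, 1+0, 0+1)."""
    return 1 / copies


print(f"RAID 5 4+1: {parity_efficiency(4, 1):.0%}")
print(f"RAID 6 4+2: {parity_efficiency(4, 2):.0%}")
print(f"RAID 10:    {mirror_efficiency():.0%}")
```

Widening a parity group (8+1 instead of 4+1, for example) raises efficiency further, but as the surrounding discussion makes clear, larger groups also lengthen rebuilds.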

RAID 5 can be coupled with stripes, so RAID 50 is a pair of RAID 5 sets with data striped across them.

When a drive fails in a RAID 5 set, I/O can be fulfilled using the remaining drives and the parity drive, and when the failed drive is replaced, the data can be reconstructed using the remaining data and parity.
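The XOR parity calculation and the rebuild it enables can be shown in a few lines. This is a toy sketch of the principle on a single stripe of a 4+1 set, not how any particular array implements it; the block contents and names are made up for illustration.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe of a hypothetical 4+1 set: four data blocks plus one parity block.
data = [b"AAAA", b"BBBB", b"CCCC", b"DDDD"]
parity = xor_blocks(data)

# Simulate losing the third drive, then rebuild its block from the
# surviving data blocks and the parity block.
survivors = data[:2] + data[3:]
rebuilt = xor_blocks(survivors + [parity])

print(rebuilt == data[2])
```

Because XOR is its own inverse, combining the surviving blocks with the parity block yields exactly the missing block. The same property explains why a second failure in the set is fatal: with two blocks missing, one parity block is not enough to solve for both.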

One downside to RAID 5 is that only one drive can fail in the RAID set. If another drive fails before the failed drive is replaced and rebuilt using the parity data, data loss occurs. The period of exposure to data loss because of the second drive failing should be mitigated.

The period of time that a RAID 5 set is rebuilding should be as short as possible to minimize the risk. The following designs aggravate this situation by creating longer rebuild periods:

- Very large RAID groups (think 8+1 and larger), which require more reads to reconstruct the failed drive.

- Very large drives (think 1 TB SATA and 500 GB Fibre Channel drives), which cause more data to be rebuilt.

- Slower drives that struggle heavily during the period when they are providing the data to rebuild the replaced drive and simultaneously support production I/O (think SATA drives, which tend to be slower during the random I/O that characterizes a RAID rebuild). The period of a RAID rebuild is actually one of the most stressful parts of a disk's life. Not only must it service the production I/O workload, but it must also provide data to support the rebuild, and it is known that drives are statistically more likely to fail during a rebuild than during normal duty cycles.

The following technologies all mitigate the risk of a dual drive failure (and most arrays do various degrees of each of these items):

- Using proactive hot sparing, which shortens the rebuild period substantially by automatically starting the hot spare before the drive fails. The failure of a disk is generally preceded with read errors (which are recoverable; they are detected and corrected using on-disk parity information) or write errors, both of which are noncatastrophic. When a threshold of these errors occurs before the disk itself fails, the failing drive is replaced by a hot spare by the array. This is much faster than the rebuild after the failure, because the bulk of the failing drive can be used for the copy and because only the portions of the drive that are failing need to use parity information from other disks.

- Using smaller RAID 5 sets (for faster rebuild) and striping the data across them using a higher-level construct.

- Using a second parity calculation and storing this on another disk.

As described in the sidebar “A Key RAID 5 Consideration,” one way to protect against data loss when a second drive fails before a RAID 5 rebuild completes is to use another parity calculation. This type of RAID is called RAID 6 (RAID-DP is a RAID 6 variant that uses two dedicated parity drives, analogous to RAID 4). This is a good choice when large RAID groups and SATA are used.

Figure 6.5 shows an example of a RAID 6 4+2 configuration. The data is striped across four disks, and a parity calculation is stored on the fifth disk. A second parity calculation is stored on another disk. RAID 6 rotates the parity location with I/O, and RAID-DP uses a pair of dedicated parity disks. This provides good performance and good availability but a loss in capacity efficiency. The purpose of the second parity calculation is to withstand a second drive failure during RAID rebuild periods. It is important to use RAID 6 in place of RAID 5 if you meet the conditions noted in the previous sidebar and are unable to otherwise use the mitigation methods noted.

FIGURE 6.5 A RAID 6 4+2 configuration offers protection against double drive failures.

While this is a reasonably detailed discussion of RAID levels, what you should take from it is that you shouldn't worry about it too much. Just don't use RAID 0 unless you have a proper use case for it. Use hot spare drives and follow the vendor best practices on hot spare density. EMC, for example, generally recommends one hot spare for every 30 drives in its arrays, whereas Compellent recommends one hot spare per drive type and per drive shelf. Just be sure to check with your storage vendor for their specific recommendations.
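As a small worked example of hot spare density, the sketch below applies the one-spare-per-30-drives ratio mentioned above. The function name is ours, and the ratio is only a default; substitute whatever your storage vendor actually recommends.

```python
import math


def hot_spares_needed(total_drives, drives_per_spare=30):
    """Return the number of hot spares for a given drive count and spare ratio."""
    return math.ceil(total_drives / drives_per_spare)


for drive_count in (30, 45, 120):
    print(drive_count, "drives ->", hot_spares_needed(drive_count), "hot spare(s)")
```

A per-drive-type, per-shelf policy would instead count shelves and drive types rather than total drives, so the right formula depends on the vendor guidance you follow.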

For most vSphere implementations, RAID 5 is a good balance of capacity efficiency, performance, and availability. Use RAID 6 if you have to use large SATA RAID groups or don't have proactive hot spares. RAID 10 schemes still make sense when you need significant write performance. Remember that for your vSphere environment it doesn't all have to be one RAID type; in fact, mixing different RAID types can be very useful to deliver different tiers of performance/availability.

For example, you can use most datastores with RAID 5 as the default LUN configuration, sparingly use RAID 10 schemes where needed, and use storage-based policy management, which we'll discuss later in this chapter, to ensure that the VMs are located on the storage that suits their requirements.

You should definitely make sure that you have enough spindles in the RAID group to meet the aggregate workload of the LUNs you create in that RAID group. The RAID type will affect the ability of the RAID group to support the workload, so keep RAID overhead (like the RAID 5 write penalty) in mind. Fortunately, some storage arrays can nondisruptively add spindles to a RAID group to add performance as needed, so if you find that you need more performance, you can correct it. Storage vMotion can also help you manually balance workloads.
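As a rough way to reason about spindle counts, the sketch below estimates back-end I/O from a host workload and a RAID write penalty. The penalty values are the commonly quoted rules of thumb (2 for RAID 10, 4 for RAID 5, 6 for RAID 6), and the per-disk IOPS figure and workload numbers are assumptions for illustration only; array caches and vendor implementations will change the real result.

```python
import math

# Commonly quoted back-end I/Os generated per host write (rules of thumb).
WRITE_PENALTY = {"RAID 10": 2, "RAID 5": 4, "RAID 6": 6}


def backend_iops(host_iops: int, write_percent: int, raid_type: str) -> float:
    """Back-end IOPS the RAID group must absorb for a given host workload."""
    writes = host_iops * write_percent / 100
    reads = host_iops - writes
    return reads + writes * WRITE_PENALTY[raid_type]


def spindles_needed(host_iops: int, write_percent: int,
                    raid_type: str, iops_per_disk: int) -> int:
    """Round up to whole disks; ignores cache hits and hot spares."""
    return math.ceil(backend_iops(host_iops, write_percent, raid_type) / iops_per_disk)


# Hypothetical workload: 2,000 host IOPS, 30 percent writes, 150 IOPS per disk.
for raid in ("RAID 10", "RAID 5", "RAID 6"):
    print(raid, spindles_needed(2000, 30, raid, 150))
```

The same workload needs noticeably more spindles as the write penalty grows, which is why write-heavy LUNs are often placed on RAID 10.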

Now let's take a closer look at some specific storage array design architectures that will impact your vSphere storage environment.

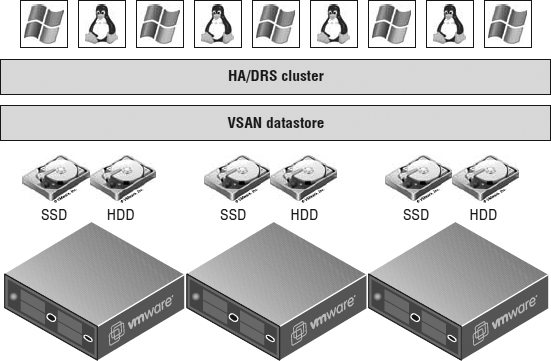

Understanding VSAN

vSphere 5.5 introduces a brand-new storage feature, virtual SAN, or simply VSAN. At a high level, VSAN pools the locally attached storage from members of a VSAN–enabled cluster and presents the aggregated pool back to all hosts within the cluster. This could be considered an “array” of sorts because just like a normal SAN, it has multiple disks presented to multiple hosts, but we would take it one step further and consider it an “internal array.” While VMware has announced VSAN as a new feature in vSphere 5.5, there are a few caveats. During the first few months of its availability it will be considered “beta only” and therefore not for production use. Also note that VSAN is licensed separately from vSphere itself.

As we mentioned earlier, in the section “Comparing Local Storage with Shared Storage,” VSAN does not require any additional software installations. It is built directly into ESXi. Managed from vCenter Server, VSAN is compatible with all the other cluster features that vSphere offers, such as vMotion, HA, and DRS. You can even use Storage DRS to migrate VMs on or off a VSAN datastore.

VSAN uses the disks directly attached to the ESXi hosts and is simple to set up, but there are a few specific requirements. Listed here is what you'll need to get VSAN up and running:

- ESXi 5.5 hosts

- vCenter 5.5

- One or more SSDs per host

- One or more HDDs per host

- Minimum of three hosts per VSAN cluster

- Maximum of eight hosts per VSAN cluster

- 1 Gbps network between hosts (10 Gbps recommended)

As you can see from the list, VSAN requires at least one flash-based device in each host. What may not be apparent from the requirements list is that the capacity of the SSD is not actually added to the overall usable space of the VSAN datastore. VSANs use the SSD as a read and write cache just as some external SANs do. When blocks are written to the underlying datastore, they are written to the SSDs first, and later the data can be relocated to the (spinning) HDDs if it's not considered to be frequently accessed.
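The requirements and the SSD caveat can be captured in a small planning sketch. Everything here (the `Host` class, the function names, the disk sizes) is hypothetical and is not a VMware API; it only checks the host-count and per-host disk requirements listed above and sums the HDD capacity, leaving the SSDs out because they act as cache. The result is raw capacity before any redundancy overhead.

```python
from dataclasses import dataclass

MIN_HOSTS, MAX_HOSTS = 3, 8  # limits from the requirements list above


@dataclass
class Host:
    name: str
    ssd_gb: list  # flash devices: cache only, not counted as capacity
    hdd_gb: list  # spinning disks: these form the datastore capacity


def check_cluster(hosts):
    """Return a list of human-readable problems; empty means the basics are met."""
    problems = []
    if not MIN_HOSTS <= len(hosts) <= MAX_HOSTS:
        problems.append(f"cluster has {len(hosts)} hosts; need {MIN_HOSTS} to {MAX_HOSTS}")
    for host in hosts:
        if not host.ssd_gb:
            problems.append(f"{host.name}: needs at least one SSD")
        if not host.hdd_gb:
            problems.append(f"{host.name}: needs at least one HDD")
    return problems


def raw_capacity_gb(hosts):
    """Sum of HDD capacity only; SSD capacity is deliberately excluded."""
    return sum(sum(host.hdd_gb) for host in hosts)


cluster = [Host(f"esxi0{n}", ssd_gb=[200], hdd_gb=[1000, 1000]) for n in (1, 2, 3)]
print(check_cluster(cluster), raw_capacity_gb(cluster))
```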

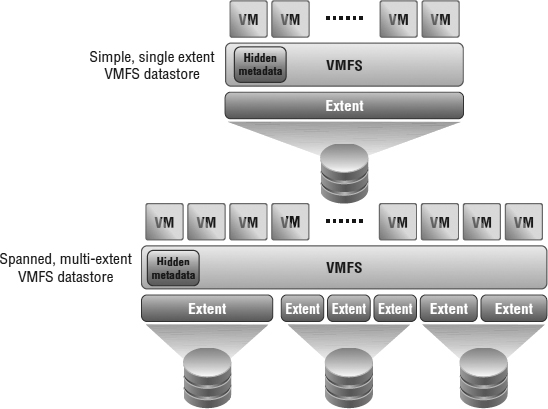

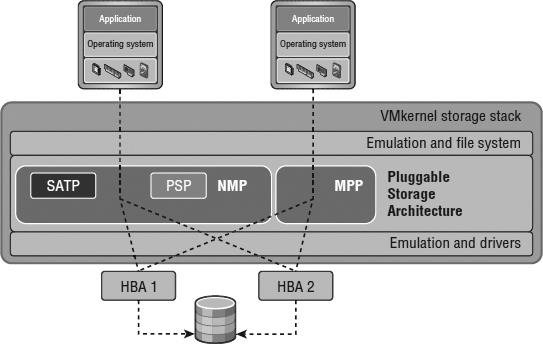

VSAN doesn't use the traditional RAID concepts that we explained in the previous section; it uses what VMware is calling RAIN, or Reliable Array of Independent Nodes. So, if there's no RAID, how do you achieve the expected reliability when using VSAN? VSAN uses a combination of VASA and Storage Service Policies to ensure that VMs are located on more than one disk and/or host to achieve their performance and availability requirements. This is why VMware recommends 10 Gbps networking between ESXi hosts when using VSAN. A VM's virtual disk could be located on one physical host but could be running on another host's CPU and memory. The storage system is fully abstracted from the compute resources, as you can see in Figure 6.6. In all likelihood, the VM's virtual disk files could be located on multiple hosts in the cluster to ensure a level of redundancy.

FIGURE 6.6 VSAN abstracts the ESXi host's local disks and presents them to the entire VSAN cluster to consume.

Understanding Midrange and External Enterprise Storage Array Design

There are some major differences in physical array design that can be pertinent in a vSphere design.

Traditional external midrange storage arrays are generally arrays with dual-storage processor cache designs where the cache is localized to one storage processor or another but commonly mirrored between them. (Remember that all vendors call storage processors something slightly different; sometimes they are called controllers, heads, engines, or nodes.) In cases where one of the storage processors fails, the array remains available, but in general, performance is degraded (unless you drive the storage processors to only 50 percent storage processor utilization during normal operation).

External enterprise storage arrays are generally considered to be those that scale to many more controllers and a much larger global cache (memory can be accessed through some common shared model). In these cases, multiple elements can fail while the array is being used at a very high degree of utilization—without any significant performance degradation. Enterprise arrays can also include support for mainframes, and there are other characteristics that are beyond the scope of this book.

Hybrid designs exist as well, such as scale-out designs where they can scale out to more than two storage processors but without the features otherwise associated with enterprise storage arrays. Often these are iSCSI-only arrays and leverage iSCSI redirection techniques (which are not options of the Fibre Channel or NAS protocol stacks) as a core part of their scale-out design.

Design can be confusing, however, because VMware and storage vendors use the same words to express different things. To most storage vendors, an active-active storage array is an array that can service I/O on all storage processor units at once, and an active-passive design is a system where one storage processor is idle until it takes over for the failed unit. VMware has specific nomenclature for these terms that is focused on the model for a specific LUN. VMware defines active-active and active-passive arrays in the following way (this information is taken from the vSphere Storage Guide):

Active-Active Storage System An active-active storage system provides access to LUNs simultaneously through all available storage ports without significant performance degradation. Barring a path failure, all paths are active at all times.

Active-Passive Storage System In an active-passive storage system, one storage processor is actively providing access to a given LUN. Other processors act as backup for the LUN and can be actively servicing I/O to other LUNs. In the event of the failure of an active storage port, one of the passive storage processors can be activated to handle I/O.

Asymmetrical Storage System An asymmetrical storage system supports Asymmetric Logical Unit Access (ALUA), which allows storage systems to provide different levels of access per port. This permits the hosts to determine the states of target ports and establish priority for paths. (See the sidebar “The Fine Line between Active-Active and Active-Passive” for more details on ALUA.)

Virtual Port Storage System Access to all LUNs is provided through a single virtual port. These are active-active devices where the multiple connections are disguised behind the single virtual port. Virtual port storage systems handle failover and connection balancing transparently, which is often referred to as “transparent failover.”

This distinction between array types is important because VMware's definition is based on the multipathing mechanics, not whether you can use both storage processors at once. The active-active and active-passive definitions apply equally to Fibre Channel (and FCoE) and iSCSI arrays, and the virtual port definition applies to only iSCSI (because it uses an iSCSI redirection mechanism that is not possible on Fibre Channel/FCoE).

THE FINE LINE BETWEEN ACTIVE-ACTIVE AND ACTIVE-PASSIVE

Wondering why VMware specifies “without significant performance degradation” in the active-active definition? The reason is found within ALUA, a standard supported by many midrange arrays. vSphere supports ALUA with arrays that implement ALUA compliant with the SPC-3 standard.

Midrange arrays usually have an internal interconnect between the two storage processors used for write cache mirroring and other management purposes. ALUA was an addition to the SCSI standard that enables a LUN to be presented on its primary path and on an asymmetrical (significantly slower) path via the secondary storage processor, transferring the data over this internal interconnect.

The key is that the “non-optimized path” generally comes with a significant performance degradation. The midrange arrays don't have the internal interconnection bandwidth to deliver the same response on both storage processors because there is usually a relatively small, or higher-latency, internal interconnect used for cache mirroring that is used for ALUA versus enterprise arrays that have a very-high-bandwidth internal model.

Without ALUA, on an array with an active-passive LUN ownership model, paths to a LUN are shown as active, standby (designates that the port is reachable but is on a processor that does not have the LUN), and dead. When the failover mode is set to ALUA, a new state is possible: active non-optimized. This is not shown distinctly in the vSphere Web Client GUI, but it looks instead like a normal active path. The difference is that it is not used for any I/O.

So, should you configure your midrange array to use ALUA? Follow your storage vendor's best practice. For some arrays this is more important than others. Remember, however, that the non-optimized paths will not be used (by default) even if you select the Round Robin policy. An active-passive array using ALUA is not functionally equivalent to an active-passive array where all paths are used. This behavior can be different if using a third-party multipathing module—see the section “Reviewing Multipathing” later in this chapter.
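To make the default behavior concrete, here is a simplified model of path selection under ALUA. It is not the actual multipathing code in ESXi; the path names are hypothetical, and the state labels are the ones used in this sidebar. It shows only that a round-robin rotation over a LUN's paths skips the active non-optimized ones.

```python
from itertools import cycle, islice

# Hypothetical paths to one LUN on a two-processor midrange array using ALUA.
PATHS = [
    ("vmhba1:C0:T0:L0", "active"),                # owning storage processor
    ("vmhba2:C0:T0:L0", "active"),                # owning storage processor
    ("vmhba1:C0:T1:L0", "active non-optimized"),  # via the internal interconnect
    ("vmhba2:C0:T1:L0", "active non-optimized"),
]


def io_paths(paths):
    """Paths eligible for I/O by default: optimized active paths only."""
    return [name for name, state in paths if state == "active"]


# Round robin rotates over the eligible paths and never touches the others.
first_six = list(islice(cycle(io_paths(PATHS)), 6))
print(first_six)
```

The non-optimized paths stay available for failover, but in this model they carry no I/O while an optimized path exists.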

By definition, all enterprise arrays are active-active arrays (by VMware's definition), but not all midrange arrays are active-passive. To make things even more confusing, not all active-active arrays (again, by VMware's definition) are enterprise arrays!

So, what do you do? What kind of array architecture is the right one for VMware? The answer is simple: All of them on VMware's Hardware Compatibility List (HCL) work; you just need to understand how the one you have works.

Most customers' needs are well met by midrange arrays, regardless of whether they have an active-active, active-passive, or virtual port (iSCSI-only) design or whether they are NAS devices. Generally, only the most mission-critical virtual workloads at the highest scale require the characteristics of enterprise-class storage arrays. In these cases, scale refers to VMs that number in the thousands, datastores that number in the hundreds, local and remote replicas that number in the hundreds, and the highest possible workloads—all that perform consistently even after component failures.

The most important considerations are as follows:

- If you have a midrange array, recognize that it is possible to oversubscribe the storage processors significantly. In such a situation, if a storage processor fails, performance will be degraded. For some customers, that is acceptable because storage processor failure is rare. For others, it is not, in which case you should limit the workload on either storage processor to less than 50 percent or consider an enterprise array.

- Understand the failover behavior of your array. Active-active arrays use the fixed-path selection policy by default, and active-passive arrays use the most recently used (MRU) policy by default. (See the section “Reviewing Multipathing” for more information.)

- Do you need specific advanced features? For example, if you want disaster recovery, make sure your array has integrated support on the VMware vCenter Site Recovery Manager HCL. Or, do you need array-integrated VMware snapshots? Do they have integrated management tools? More generally, do they support the vSphere Storage APIs? Ask your array vendor to illustrate its VMware integration and the use cases it supports.
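The first consideration in the list, keeping each storage processor under 50 percent, follows from simple arithmetic. The sketch below assumes that a failed processor's load moves entirely and linearly to the survivor, which is a simplification; the function names and utilization figures are ours.

```python
def surviving_sp_load(spa_percent: float, spb_percent: float) -> float:
    """Load the surviving storage processor carries after its peer fails."""
    return spa_percent + spb_percent


def survives_failover(spa_percent: float, spb_percent: float,
                      ceiling: float = 100.0) -> bool:
    """True if one processor can absorb both workloads without exceeding ceiling."""
    return surviving_sp_load(spa_percent, spb_percent) <= ceiling


print(survives_failover(45, 40))  # both under 50 percent: survivor runs at 85
print(survives_failover(70, 60))  # oversubscribed: survivor would need 130
```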

We're now left with the last major area of storage fundamentals before we move on to discussing storage in a vSphere-specific context. The last remaining area deals with choosing a storage protocol.

Choosing a Storage Protocol

vSphere offers several shared storage protocol choices, including Fibre Channel, FCoE, iSCSI, and Network File System (NFS), which is a form of NAS. A little understanding of each goes a long way in designing the storage for your vSphere environment.

REVIEWING FIBRE CHANNEL

SANs are most commonly associated with Fibre Channel storage because Fibre Channel was the first protocol type used with SANs. However, SAN refers to a network topology, not a connection protocol. Although people often use the acronym SAN to refer to a Fibre Channel SAN, you can create a SAN topology using different types of protocols, including iSCSI, FCoE, and InfiniBand.

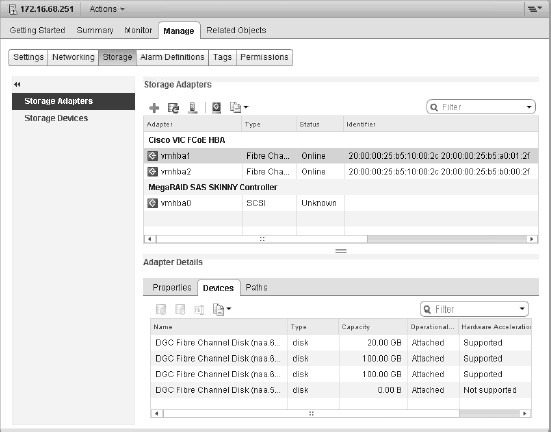

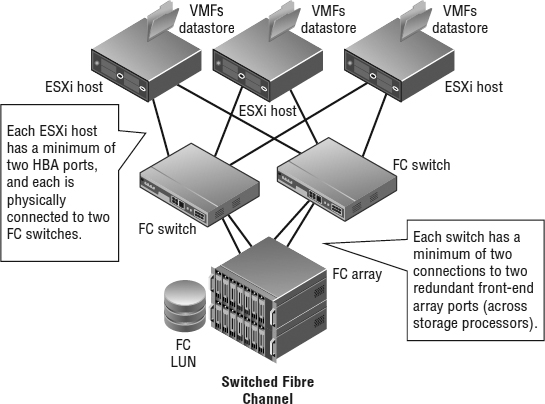

SANs were initially deployed to mimic the characteristics of local or direct attached SCSI devices. A SAN is a network where storage devices (logical units—or LUNs—just as on a SCSI or SAS controller) are presented from a storage target (one or more ports on an array) to one or more initiators. An initiator is usually a host bus adapter (HBA) or converged network adapter (CNA), though software-based initiators are available for iSCSI and FCoE. See Figure 6.7.

Today, Fibre Channel HBAs have roughly the same cost as high-end multiported Ethernet interfaces or local SAS controllers, and the per-port cost of a Fibre Channel switch is about twice that of a high-end managed Ethernet switch.

Fibre Channel uses an optical interconnect (though there are copper variants) because the Fibre Channel protocol assumes a very high-bandwidth, low-latency, and lossless physical layer. Standard Fibre Channel HBAs today support very-high-throughput 4 Gbps, 8 Gbps, and even 16 Gbps connectivity in single-, dual-, or quad-ported options. Older, obsolete HBAs supported up to only 2 Gbps. Some HBAs supported by ESXi are the QLogic QLE2462 and Emulex LP10000. You can find the authoritative list of supported HBAs on the VMware HCL at www.vmware.com/resources/compatibility/search.php. For end-to-end compatibility (in other words, from host to HBA to switch to array), every storage vendor maintains a similar compatibility matrix.

FIGURE 6.7 A Fibre Channel SAN presents LUNs from a target array (in this case an EMC VNX) to a series of initiators (in this case a Cisco Virtual Interface Controller).

Although in the early days of Fibre Channel there were many types of cables, and interoperability among the various Fibre Channel initiators, firmware revisions, switches, and targets (arrays) was not guaranteed, today interoperability is broad. Still, it is always a best practice to keep your environment consistent with the vendor interoperability matrix. From a connectivity standpoint, almost all cases use a common OM2 (orange-colored cables) multimode duplex LC/LC cable. The newer OM3 and OM4 (aqua-colored cables) are used for longer distances and are generally used for 10 Gbps Ethernet and 8/16 Gbps Fibre Channel (which otherwise have shorter distances using OM2). They all plug into standard optical interfaces.

The Fibre Channel protocol can operate in three modes: point-to-point (FC-P2P), arbitrated loop (FC-AL), and switched (FC-SW). Point-to-point and arbitrated loop are rarely used today. FC-AL is commonly used by some array architectures to connect their backend spindle enclosures (vendors give different hardware names to them, but they're the hardware elements that contain and support the physical disks) to the storage processors, but even in these cases, most modern array designs are moving to switched designs, which have higher bandwidth per disk enclosure.

As Figure 6.8 shows, each ESXi host has a minimum of two HBA ports, and each is physically connected to two Fibre Channel switches. Each switch has a minimum of two connections to two redundant front-end array ports (across storage processors).

FIGURE 6.8 The most common Fibre Channel configuration: a switched Fibre Channel (FC-SW) SAN. This enables the Fibre Channel LUN to be easily presented to all the hosts while creating a redundant network design.

HOW DIFFERENT IS FCOE?

Aside from discussions of the physical media and topologies, the concepts for FCoE are almost identical to those of Fibre Channel. This is because FCoE was designed to be seamlessly interoperable with existing Fibre Channel–based SANs.

All the objects (initiators, targets, and LUNs) on a Fibre Channel SAN are identified by a unique 64-bit identifier called a worldwide name (WWN). WWNs can be worldwide port names (a port on a switch) or node names (a port on an endpoint). For anyone unfamiliar with Fibre Channel, this concept is simple. It's the same technique as Media Access Control (MAC) addresses on Ethernet. Figure 6.8 shows an ESXi host with FCoE CNAs, where the highlighted CNA has the following worldwide node name: worldwide port name (WWnN: WWpN) in the identifier column:

20:00:00:25:b5:10:00:2c 20:00:00:25:b5:a0:01:2f

Like Ethernet MAC addresses, WWNs have a structure. The most significant two bytes are used by the vendor (the four hexadecimal characters starting on the left) and are unique to the vendor, so there is a pattern for QLogic or Emulex HBAs or array vendors. In the previous example, these are Cisco CNAs connected to an EMC VNX storage array.
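If you ever need to handle WWNs in a script, a small sketch like the following can validate the colon-separated notation shown above and confirm that the value fits in 64 bits. The function names are hypothetical, and the sketch makes no attempt to decode the vendor portion.

```python
import re

# Eight colon-separated hexadecimal octets, for example 20:00:00:25:b5:10:00:2c
WWN_RE = re.compile(r"[0-9a-f]{2}(?::[0-9a-f]{2}){7}")


def parse_wwn(text: str) -> int:
    """Return the WWN as a 64-bit integer, or raise ValueError if malformed."""
    normalized = text.strip().lower()
    if not WWN_RE.fullmatch(normalized):
        raise ValueError(f"not a valid WWN: {text!r}")
    return int(normalized.replace(":", ""), 16)


def format_wwn(value: int) -> str:
    """Render a 64-bit integer back into colon-separated notation."""
    digits = f"{value:016x}"
    return ":".join(digits[i:i + 2] for i in range(0, 16, 2))


wwnn = parse_wwn("20:00:00:25:b5:10:00:2c")  # node name from the example above
wwpn = parse_wwn("20:00:00:25:b5:a0:01:2f")  # port name from the example above
print(wwnn.bit_length() <= 64, format_wwn(wwpn))
```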

Fibre Channel and FCoE SANs also have a critical concept of zoning. Fibre Channel switches implement zoning to restrict which initiators and targets can see each other as if they were on a common bus. If you have Ethernet networking experience, the idea is somewhat analogous to non-routable VLANs with Ethernet.

IS THERE A FIBRE CHANNEL EQUIVALENT TO VLANS?

Actually, yes, there is. Virtual storage area networks (VSANs) were adopted as a standard in 2004. Like VLANs, VSANs provide isolation between multiple logical SANs that exist on a common physical platform. This enables SAN administrators greater flexibility and another layer of separation in addition to zoning. These are not to be confused with VMware's new VSAN feature described earlier in this chapter.

Zoning is used for the following two purposes:

- To ensure that a LUN that is required to be visible to multiple hosts in a cluster (for example in a vSphere cluster, a Microsoft cluster, or an Oracle RAC cluster) has common visibility to the underlying LUN while ensuring that hosts that should not have visibility to that LUN do not. For example, it's used to ensure that VMFS volumes aren't visible to Windows servers (with the exception of backup proxy servers using software that leverages the vSphere Storage APIs for Data Protection).

- To create fault and error domains on the SAN fabric, where noise, chatter, and errors are not transmitted to all the initiators/targets attached to the switch. Again, it's somewhat analogous to one of the uses of VLANs to partition very dense Ethernet switches into broadcast domains.

Zoning is configured on the Fibre Channel switches via simple GUIs or CLI tools and can be configured by port or by WWN:

- Using port-based zoning, you would zone by configuring your Fibre Channel switch to “put port 5 and port 10 into a zone that we'll call zone_5_10.” Any device (and therefore any WWN) you physically plug into port 5 could communicate only to a device (or WWN) physically plugged into port 10.

- Using WWN-based zoning, you would zone by configuring your Fibre Channel switch to “put the WWN from this HBA and these array port WWNs into a zone we'll call ESXi_55_host1_CX_SPA_0.” In this case, if you moved the cables, the zones would move to the ports with the matching WWNs.

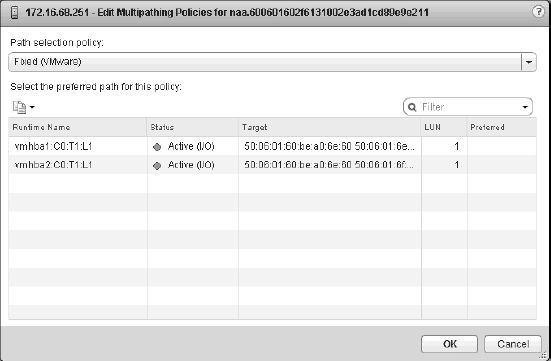

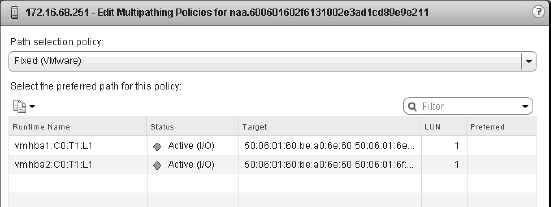

The ESXi configuration shown in Figure 6.9 shows the LUN by its runtime or “shorthand” name. Masked behind this name is an unbelievably long name that combines the initiator WWN, the Fibre Channel switch ports, and the Network Address Authority (NAA) identifier. This provides an explicit name that uniquely identifies not only the storage device but also the full end-to-end path.

We'll give you more details on storage object naming later in this chapter, in the sidebar titled “What Is All the Stuff in the Storage Device Details List?”

Zoning should not be confused with LUN masking. Masking is the ability of a host or an array to intentionally ignore WWNs that it can actively see (in other words, that are zoned to it). Masking is used to further limit what LUNs are presented to a host (commonly used with test and development replicas of LUNs).

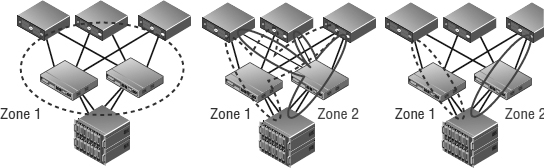

You can put many initiators and targets into a zone and group zones together, as illustrated in Figure 6.10. For features like vSphere HA and vSphere DRS, ESXi hosts must have shared storage to which all applicable hosts have access. Generally, this means that every ESXi host in a vSphere environment must be zoned such that it can see each LUN. Also, every initiator (HBA or CNA) needs to be zoned to all the front-end array ports that could present the LUN. So, what's the best configuration practice? The answer is single initiator/single target zoning. This creates smaller zones, creates less cross talk, and makes it more difficult to administratively make an error that removes a LUN from all paths to a host or many hosts at once with a switch configuration error.

FIGURE 6.9 The Edit Multipathing Policies dialog box shows the storage runtime (shorthand) name.

FIGURE 6.10 There are many ways to configure zoning. From left to right: multi-initiator/multi-target zoning, single-initiator/multi-target zoning, and single-initiator/single-target zoning.

Remember that the goal is to ensure that every LUN is visible to all the nodes in the vSphere cluster. The left side of Figure 6.10 is how most people who are not familiar with Fibre Channel start: multi-initiator zoning, with all array ports and all the ESXi Fibre Channel initiators in one massive zone. The middle is better, with two zones, one for each side of the dual-fabric Fibre Channel SAN design, where each zone includes all possible storage processors' front-end ports (critically, at least one from each storage processor!). The right one is the best and recommended zoning configuration, single-initiator/single-target zoning; however, this method requires more administrative overhead to initially create all the zones.

When you're using single-initiator/single-target zoning as shown in the figure, each zone consists of a single initiator and a single target array port. This means you'll end up with multiple zones for each ESXi host, so that each ESXi host can see all applicable target array ports (again, at least one from each storage processor/controller!). This reduces the risk of administrative error and eliminates HBA issues affecting adjacent zones, but it takes a little more time to configure and results in a larger number of zones overall. It is always critical to ensure that each HBA is zoned to at least one front-end port on each storage processor.
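Because single-initiator/single-target zoning produces many small zones, it can help to generate the zone list rather than type it by hand. The sketch below covers one fabric only; every host name, port name, and WWPN in it is made up for illustration, and turning the output into switch commands is vendor-specific and left out.

```python
from itertools import product

# Hypothetical initiators (one HBA port per host) on fabric A.
INITIATORS = {
    "esxi01_hba0": "20:00:00:00:00:00:01:00",
    "esxi02_hba0": "20:00:00:00:00:00:02:00",
}
# Hypothetical front-end array ports on fabric A, keyed by (storage processor, port).
TARGETS = {
    ("SPA", 0): "50:00:00:00:00:00:0a:00",
    ("SPB", 0): "50:00:00:00:00:00:0b:00",
}


def build_zones(initiators, targets):
    """One zone per (initiator, target port) pair."""
    zones = []
    for (host, init_wwpn), ((sp, port), tgt_wwpn) in product(
            initiators.items(), targets.items()):
        zones.append((f"{host}_{sp}_{port}", init_wwpn, tgt_wwpn))
    return zones


def covers_every_sp(zones, initiators, targets):
    """Check that each initiator is zoned to at least one port on each processor."""
    sp_of = {wwpn: sp for (sp, _), wwpn in targets.items()}
    all_sps = set(sp_of.values())
    return all(
        {sp_of[t] for _, i, t in zones if i == init_wwpn} == all_sps
        for init_wwpn in initiators.values()
    )


zones = build_zones(INITIATORS, TARGETS)
for name, initiator, target in zones:
    print(name, initiator, target)
print(covers_every_sp(zones, INITIATORS, TARGETS))
```

Two initiators and two array ports already yield four zones on one fabric, which illustrates the extra administrative overhead that this approach trades for smaller fault domains.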

REVIEWING FIBRE CHANNEL OVER ETHERNET

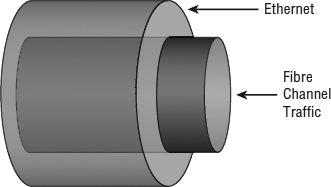

We mentioned in the sidebar titled “How Different Is FCoE?” that FCoE was designed to be interoperable and compatible with Fibre Channel. In fact, the FCoE standard is maintained by the same T11 body as Fibre Channel (the current standard is FC-BB-5). At the upper layers of the protocol stacks, Fibre Channel and FCoE look identical.

It's at the lower levels of the stack that the protocols diverge. Fibre Channel as a protocol doesn't specify the physical transport it runs over. However, unlike TCP, which has retransmission mechanics to deal with a lossy transport, Fibre Channel has far fewer mechanisms for dealing with loss and retransmission, which is why it requires a lossless, low-jitter, high-bandwidth physical layer connection. It's for this reason that Fibre Channel traditionally is run over relatively short optical cables rather than the unshielded twisted-pair (UTP) cables that Ethernet uses.

To address the need for lossless Ethernet, the IEEE created a series of standards—all of which had been approved and finalized at the time of this writing—that make 10 GbE lossless for FCoE traffic. Three key standards, all part of the Data Center Bridging (DCB) effort, make this possible:

- Priority Flow Control (PFC, also called Per-Priority Pause)

- Enhanced Transmission Selection (ETS)

- Datacenter Bridging Exchange (DCBX)

Used together, these three protocols allow Fibre Channel frames to be encapsulated into Ethernet frames, as illustrated in Figure 6.11, and transmitted in a lossless manner. Thus, FCoE uses whatever physical cable plant that 10 Gb Ethernet uses. Today, 10 GbE connectivity is generally optical (same cables as Fibre Channel) and Twinax (which is a pair of coaxial copper cables), InfiniBand-like CX cables, and some emerging 10 Gb unshielded twisted pair (UTP) use cases via the new 10GBase-T standard. Each has its specific distance-based use cases and varying interface cost, size, and power consumption.

FIGURE 6.11 FCoE simply encapsulates Fibre Channel frames into Ethernet frames for transmission over a lossless Ethernet transport.

WHAT ABOUT DATACENTER ETHERNET OR CONVERGED ENHANCED ETHERNET?

Datacenter Ethernet (DCE) and Converged Enhanced Ethernet (CEE) are prestandard terms used to describe a lossless Ethernet network. DCE describes Cisco's prestandard implementation of the DCB standards; CEE was a multivendor effort of the same nature.

Because FCoE uses Ethernet, why use FCoE instead of NFS or iSCSI over 10 Gb Ethernet? The answer is usually driven by the following two factors:

- There are existing infrastructure, processes, and tools in large enterprises that are designed for Fibre Channel, and they expect WWN addressing, not IP addresses. This provides an option for a converged network and greater efficiency, without a “rip and replace” model. In fact, early prestandard FCoE implementations did not include elements required to cross multiple Ethernet switches. These elements, part of something called FCoE Initialization Protocol (FIP), are part of the official FC-BB-5 standard and are required in order to comply with the final standard. This means that most FCoE switches in use today function as FCoE/LAN/Fibre Channel bridges. This makes them excellent choices to integrate and extend existing 10 GbE/1 GbE LANs and Fibre Channel SAN networks. The largest cost savings, power savings, cable and port reduction, and impact on management simplification are on this layer from the ESXi host to the first switch.

- Certain applications require a lossless, extremely low-latency transport network model—something that cannot be achieved using a transport where dropped frames are normal and long-window TCP retransmit mechanisms are the protection mechanism. Now, this is a very high-end set of applications, and those historically were not virtualized. However, in the era of vSphere 5.5, the goal is to virtualize every workload, so I/O models that can deliver those performance envelopes while still supporting a converged network become more important.

In practice, the debate of iSCSI versus FCoE versus NFS on 10 Gb Ethernet infrastructure is not material. All FCoE adapters are converged adapters, referred to as converged network adapters (CNAs). They support native 10 GbE (and therefore also NFS and iSCSI) as well as FCoE simultaneously, and they appear in the ESXi host as multiple 10 GbE network adapters and multiple Fibre Channel adapters. If you have FCoE support, in effect you have it all. All protocol options are yours.

A list of FCoE CNAs supported by vSphere can be found in the I/O section of the VMware compatibility guide.

UNDERSTANDING ISCSI

iSCSI brings the idea of a block storage SAN to customers with no Fibre Channel infrastructure. iSCSI is an Internet Engineering Task Force (IETF) standard for encapsulating SCSI control and data in TCP/IP packets, which in turn are encapsulated in Ethernet frames. Figure 6.12 shows how iSCSI is encapsulated in TCP/IP and Ethernet frames. TCP retransmission is used to handle dropped Ethernet frames or significant transmission errors. Storage traffic can be intense relative to most LAN traffic. This makes it important that you minimize retransmits, minimize dropped frames, and ensure that you have “bet-the-business” Ethernet infrastructure when using iSCSI.

FIGURE 6.12 Using iSCSI, SCSI control and data are encapsulated in both TCP/IP and Ethernet frames.
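The nesting in Figure 6.12 also has a cost in header bytes. The sketch below assumes IPv4 and TCP headers without options (20 bytes each), the 48-byte iSCSI basic header segment, no iSCSI digests, and a standard 1,500-byte MTU. It treats the simplest case of a frame that carries an iSCSI header plus data; in practice a large PDU spans many frames and only the first carries the iSCSI header, so real efficiency is somewhat higher.

```python
ETHERNET_HEADER = 14  # destination MAC, source MAC, EtherType (no VLAN tag)
ETHERNET_FCS = 4      # frame check sequence
IPV4_HEADER = 20      # assumes no IP options
TCP_HEADER = 20       # assumes no TCP options
ISCSI_BHS = 48        # iSCSI basic header segment


def scsi_data_room(mtu: int = 1500) -> int:
    """Bytes left for SCSI data in a frame that also carries an iSCSI header."""
    return mtu - IPV4_HEADER - TCP_HEADER - ISCSI_BHS


def frame_bytes(mtu: int = 1500) -> int:
    """Ethernet frame size for a full-MTU packet (preamble and gap excluded)."""
    return ETHERNET_HEADER + mtu + ETHERNET_FCS


room = scsi_data_room()
print(room, frame_bytes(), f"{room / frame_bytes():.1%}")
```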

Although Fibre Channel is often viewed as having higher performance than iSCSI, iSCSI can more than meet the requirements of many customers, and a carefully planned and scaled-up iSCSI infrastructure can, for the most part, match the performance of a moderate Fibre Channel SAN.

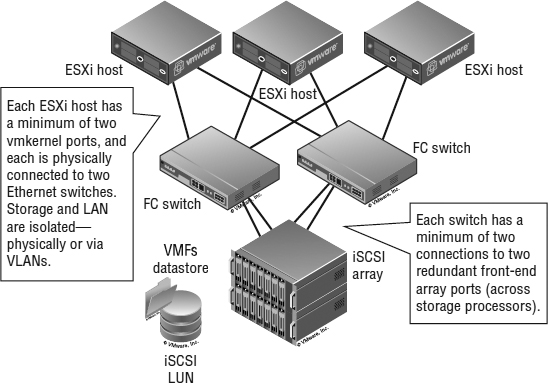

Also, iSCSI and Fibre Channel SANs are roughly comparable in complexity and share many of the same core concepts. Arguably, for people with Ethernet expertise but no Fibre Channel experience, making the first iSCSI LUN visible to an ESXi host is simpler than doing the same with a Fibre Channel LUN, because they don't need to understand worldwide names and zoning. In practice, designing a scalable, robust iSCSI network requires the same degree of diligence that is applied to Fibre Channel. You should use VLAN (or physical) isolation techniques similarly to Fibre Channel zoning, and you need to scale up connections to achieve comparable bandwidth. Look at Figure 6.13, and compare it to the switched Fibre Channel network diagram in Figure 6.8.

FIGURE 6.13 Notice how the topology of an iSCSI SAN is the same as a switched Fibre Channel SAN.

Each ESXi host has a minimum of two VMkernel ports, and each is physically connected to two Ethernet switches. (Recall from Chapter 5, “Creating and Configuring Virtual Networks,” that VMkernel ports are used by the hypervisor for network traffic such as IP-based storage traffic, like iSCSI or NFS.) Storage and LAN are isolated—physically or via VLANs. Each switch has a minimum of two connections to two redundant front-end array network interfaces (across storage processors).

The one additional concept to focus on with iSCSI is the concept of fan-in ratio. This applies to all shared storage networks, including Fibre Channel, but the effect is often most pronounced with Gigabit Ethernet (GbE) networks. Across all shared networks, there is almost always a higher amount of bandwidth available across all the host nodes than there is on the egress of the switches and front-end connectivity of the array. It's important to remember that the host bandwidth is gated by congestion wherever it occurs. Don't minimize the array port-to-switch configuration. If you connect only four GbE interfaces on your array and you have 100 hosts with two GbE interfaces each, then expect contention, because your fan-in ratio is too large.
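The arithmetic behind that example is worth spelling out. This sketch simply divides aggregate host bandwidth by aggregate array front-end bandwidth; the function name is ours, and it ignores switch uplinks, which can be the real bottleneck.

```python
def fan_in_ratio(hosts: int, links_per_host: int, array_links: int,
                 link_gbps: float = 1.0) -> float:
    """Aggregate host bandwidth divided by aggregate array front-end bandwidth."""
    host_bandwidth = hosts * links_per_host * link_gbps
    array_bandwidth = array_links * link_gbps
    return host_bandwidth / array_bandwidth


# The example from the text: 100 hosts with two GbE interfaces each,
# feeding an array with only four GbE interfaces.
ratio = fan_in_ratio(hosts=100, links_per_host=2, array_links=4)
print(f"{ratio:.0f}:1")
```

A 50:1 ratio means the hosts can collectively offer 50 times more traffic than the array ports can accept, so contention is all but certain under load.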

Also, when iSCSI and iSCSI SANs are examined, many core ideas are similar to Fibre Channel and Fibre Channel SANs, but in some cases there are material differences. Let's look at the terminology:

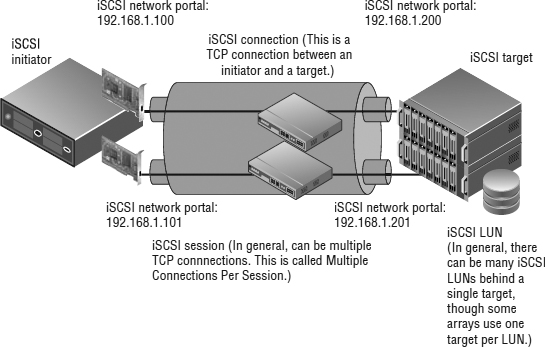

iSCSI Initiator An iSCSI initiator is a logical host-side device that serves the same function as a physical host bus adapter in Fibre Channel/FCoE or SCSI/SAS. iSCSI initiators can be software initiators (which use host CPU cycles to load/unload SCSI payloads into standard TCP/IP packets and perform error checking) or hardware initiators (the iSCSI equivalent of a Fibre Channel HBA or FCoE CNA). Examples of software initiators that are pertinent to vSphere administrators are the native ESXi software initiator and the guest software initiators available in Windows XP and later and in most current Linux distributions. Examples of iSCSI hardware initiators are add-in cards like the QLogic QLA 405x and QLE 406x host bus adapters. These cards perform all the iSCSI functions in hardware. An iSCSI initiator is identified by an iSCSI qualified name (referred to as an IQN). An iSCSI initiator uses an iSCSI network portal that consists of one or more IP addresses. An iSCSI initiator “logs in” to an iSCSI target.

iSCSI Target An iSCSI target is a logical target-side device that serves the same function as a target in Fibre Channel SANs. It is the device that hosts iSCSI LUNs and masks them to specific iSCSI initiators. Different arrays implement iSCSI targets differently (some in hardware, some in software), but largely this is unimportant. More important is that an iSCSI target doesn't necessarily map to a physical port as is the case with Fibre Channel; each array does this differently. Some have one iSCSI target per physical Ethernet port; some have one iSCSI target per iSCSI LUN, which is visible across multiple physical ports; and some have logical iSCSI targets that map to physical ports and LUNs in any relationship the administrator configures within the array. An iSCSI target is identified by an iSCSI qualified name (an IQN). An iSCSI target uses an iSCSI network portal that consists of one or more IP addresses.

iSCSI Logical Unit An iSCSI LUN is a LUN hosted by an iSCSI target. There can be one or more LUNs behind a single iSCSI target.

iSCSI Network Portal An iSCSI network portal is one or more IP addresses that are used by an iSCSI initiator or iSCSI target.

iSCSI Qualified Name An iSCSI qualified name (IQN) serves the purpose of the WWN in Fibre Channel SANs; it is the unique identifier for an iSCSI initiator, target, or LUN. The format of the IQN is based on the iSCSI IETF standard.

Challenge-Handshake Authentication Protocol CHAP is a widely used basic authentication protocol, where a challenge-response exchange based on a shared secret (the secret itself is never sent across the network) is used to authenticate the source or target of communication. Unidirectional CHAP is one-way; the source authenticates to the destination, or, in the case of iSCSI, the iSCSI initiator authenticates to the iSCSI target. Bidirectional CHAP is two-way; the iSCSI initiator authenticates to the iSCSI target, and vice versa, before communication is established. Fibre Channel SANs are viewed as intrinsically secure because they are physically isolated from the Ethernet network and because initiators not zoned to targets cannot communicate. This is not by definition true of iSCSI. With iSCSI, it is possible (but not recommended) to use the same Ethernet segment as general LAN traffic, and there is no intrinsic zoning model. Because the storage and general networking traffic could share networking infrastructure, CHAP is an optional mechanism to authenticate the source and destination of iSCSI traffic for some additional security. In practice, Fibre Channel and iSCSI SANs have the same security and same degree of isolation (logical or physical).

IP Security IPsec is an IETF standard that uses public-key encryption techniques to secure the iSCSI payloads so that they are not susceptible to man-in-the-middle security attacks. Like CHAP for authentication, this higher level of optional security is part of the iSCSI standards because it is possible (but not recommended) to use a general-purpose IP network for iSCSI transport—and in these cases, not encrypting data exposes a security risk (for example, a man-in-the-middle attack could determine data on a host it can't authenticate to by simply reconstructing the data from the iSCSI packets). IPsec is relatively rarely used because it has a heavy CPU impact on the initiator and the target.

Static/Dynamic Discovery iSCSI uses a method of discovery where the iSCSI initiator can query an iSCSI target for the available LUNs. Static discovery involves a manual configuration, whereas dynamic discovery issues an iSCSI-standard SendTargets command to one of the iSCSI targets on the array. This target then reports all the available targets and LUNs to that particular initiator.

Internet Storage Name Service The Internet Storage Name Service (iSNS) is analogous to the Domain Name System (DNS); an iSNS server stores all the available iSCSI targets for a very large iSCSI deployment. iSNS is rarely used.
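Two of the terms above, the IQN and CHAP, are easier to picture with a small sketch. The following is an illustration under stated assumptions, not the ESXi implementation: `parse_iqn` accepts only the common `iqn.yyyy-mm.reversed-domain[:unique-name]` layout and does not validate every rule in the standard, and `chap_response` follows the MD5 calculation defined for CHAP (identifier, then secret, then challenge). The IQN string, the secret, and the identifier value are made-up examples.

```python
import hashlib
import hmac
import os
import re
from typing import NamedTuple, Optional


class Iqn(NamedTuple):
    year: int
    month: int
    naming_authority: str        # reversed domain name, e.g. "com.vmware"
    unique_name: Optional[str]   # optional part after the colon


# Simplified pattern (assumption): iqn.yyyy-mm.reversed-domain[:unique-name]
_IQN_RE = re.compile(r"^iqn\.(\d{4})-(\d{2})\.([a-z0-9][a-z0-9.\-]*)(?::(.+))?$")


def parse_iqn(name: str) -> Iqn:
    """Split an IQN into its date, naming authority, and optional unique name."""
    match = _IQN_RE.match(name.lower())
    if match is None:
        raise ValueError(f"not an IQN: {name!r}")
    year, month, authority, unique = match.groups()
    return Iqn(int(year), int(month), authority, unique)


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """Compute a CHAP response: MD5 over identifier + shared secret + challenge."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


# Unidirectional CHAP: the target challenges, the initiator responds.
initiator = parse_iqn("iqn.1998-01.com.vmware:esx01-example")  # example name
secret = b"example-shared-secret"   # configured on both sides, never transmitted
challenge = os.urandom(16)          # random value sent by the target
identifier = 7                      # arbitrary one-byte exchange identifier

response = chap_response(identifier, secret, challenge)   # initiator side
expected = chap_response(identifier, secret, challenge)   # target side
authenticated = hmac.compare_digest(response, expected)
print(initiator.naming_authority, authenticated)
```

Bidirectional CHAP repeats the same exchange in the opposite direction, with the initiator issuing its own challenge to the target.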

Figure 6.14 shows the key iSCSI elements in an example logical diagram. This diagram shows iSCSI in the broadest sense.

In general, an iSCSI session can consist of multiple TCP connections, a capability called Multiple Connections Per Session. Note that vSphere does not currently support this. An iSCSI initiator and iSCSI target can communicate on an iSCSI network portal that can consist of one or more IP addresses. Network portals are implemented differently on each array; some arrays always have one IP address per target port, while some arrays use network portals extensively. The iSCSI initiator logs into the iSCSI target, creating an iSCSI session. You can have many iSCSI sessions for a single target. There can be varied numbers of iSCSI LUNs behind an iSCSI target, either many or just one. Every array does this differently. We'll discuss the particulars of the vSphere software iSCSI initiator implementation in detail in the section “Adding a LUN via iSCSI.”

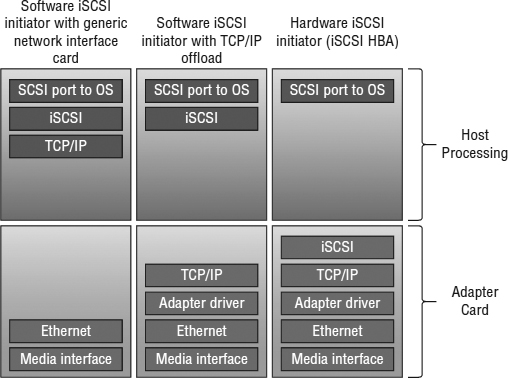

What about the debate regarding hardware iSCSI initiators (iSCSI HBAs) versus software iSCSI initiators? Figure 6.15 shows the differences among software iSCSI on generic network interfaces, network interfaces that do TCP/IP offload, and full iSCSI HBAs. Clearly there are more things the ESXi host needs to process with software iSCSI initiators, but the additional CPU load is relatively light. Fully saturating several GbE links will use only roughly one core of a modern CPU, and the cost of iSCSI HBAs is usually more than the cost of slightly more CPU. Keep the CPU overhead in mind as you craft your storage design, but don't let it be your sole criterion.

FIGURE 6.14 The iSCSI IETF standard has several different elements.

FIGURE 6.15 Some parts of the stack are handled by the adapter card versus the ESXi host CPU in various implementations.

Also note the difference between a dependent hardware iSCSI adapter and an independent hardware iSCSI adapter. As the name suggests, the former depends on vSphere networking and iSCSI configuration, whereas the latter uses its own networking and iSCSI configuration.

Prior to vSphere 5.0, one thing that remained the exclusive domain of the iSCSI HBAs was booting from an iSCSI SAN. From version 5.0, vSphere includes support for iSCSI Boot Firmware Table (iBFT), a mechanism that enables booting from iSCSI SAN with a software iSCSI initiator. You must have appropriate support for iBFT in the hardware. One might argue that using Auto Deploy would provide much of the same benefit as booting from an iSCSI SAN, but each approach has its advantages and disadvantages.

iSCSI is the last of the block-based shared storage options available in vSphere; now we move on to the Network File System (NFS), the only NAS protocol that vSphere supports.

JUMBO FRAMES ARE SUPPORTED

VMware ESXi supports jumbo frames for all VMkernel traffic, including both iSCSI and NFS, and they should be used when needed. However, it is then critical to configure a consistent, larger maximum transmission unit (MTU) size on all devices in all the possible networking paths; otherwise, mismatched MTUs will cause frames to be fragmented or dropped, which leads to communication problems. Depending on the network hardware and traffic type, jumbo frames may or may not yield significant benefits. As always, you will need to weigh the benefits against the operational overhead of supporting this configuration.
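The requirement for a consistent MTU along the whole path can be sketched as a simple check. This is a hypothetical helper, not a vSphere API: the device names and MTU values are invented, and in practice you would gather them from the hosts, switches, and array yourself.

```python
from typing import Dict, List


def find_mtu_mismatches(path_mtus: Dict[str, int], required_mtu: int = 9000) -> List[str]:
    """Return the devices on a path whose MTU is smaller than the required MTU."""
    return [device for device, mtu in path_mtus.items() if mtu < required_mtu]


# Hypothetical end-to-end path from a VMkernel port to an array port.
path = {
    "vmk1": 9000,
    "vSwitch1": 9000,
    "physical-switch-a": 1500,   # jumbo frames were never enabled here
    "array-port-0": 9000,
}

print(find_mtu_mismatches(path))  # prints ['physical-switch-a']
```

A single device left at the default of 1500 bytes is enough to break jumbo frames for every path that crosses it, which is why the check covers each hop rather than just the two endpoints.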

UNDERSTANDING THE NETWORK FILE SYSTEM

The NFS protocol is a standard originally developed by Sun Microsystems to enable remote systems to access a file system on another host as if it were locally attached. vSphere implements a client compliant with NFSv3 using TCP.

When NFS datastores are used by vSphere, no local file system (such as VMFS) is used. The file system is on the remote NFS server. This means that NFS datastores need to handle the same access control and file-locking requirements that vSphere delivers on block storage using the vSphere Virtual Machine File System, or VMFS (we'll describe VMFS in more detail later in this chapter in the section “Examining the vSphere Virtual Machine File System”). NFS servers accomplish this through traditional NFS file locks.

The movement of the file system from the ESXi host to the NFS server also means that you don't need to handle zoning/masking tasks. This makes an NFS datastore one of the easiest storage options to simply get up and running. On the other hand, it also means that all of the high availability and multipathing functionality that is normally part of a Fibre Channel, FCoE, or iSCSI storage stack is replaced by the networking stack. We'll discuss the implications of this in the section titled “Crafting a Highly Available NFS Design.”

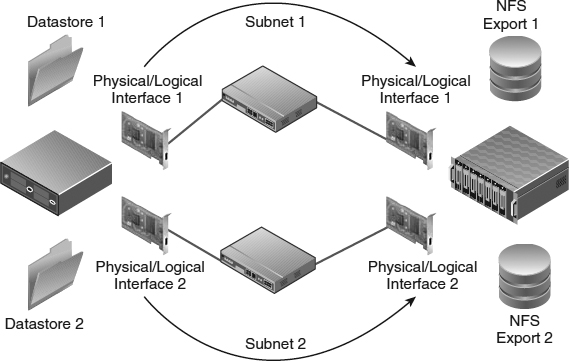

Figure 6.16 shows the topology of an NFS configuration. Note the similarities to the topologies in Figure 6.8 and Figure 6.13.

Technically, any NFS server that complies with NFSv3 over TCP will work with vSphere (vSphere does not support NFS over UDP), but similar to the considerations for Fibre Channel and iSCSI, the infrastructure needs to support your entire vSphere environment. Therefore, we recommend you use only NFS servers that are explicitly on the VMware HCL.

Using NFS datastores moves the elements of storage design associated with LUNs from the ESXi hosts to the NFS server. Instead of exposing block storage—which uses the RAID techniques described earlier for data protection—and allowing the ESXi hosts to create a file system (VMFS) on those block devices, the NFS server uses its block storage—protected using RAID—and creates its own file systems on that block storage. These file systems are then exported via NFS and mounted on your ESXi hosts.

FIGURE 6.16 The topology of an NFS configuration is similar to iSCSI from a connectivity standpoint but very different from a configuration standpoint.

In the early days of using NFS with VMware, NFS was categorized as being a lower-performance option for use with ISOs and templates but not production VMs. If production VMs were used on NFS datastores, the historical recommendation would have been to relocate the VM swap to block storage. Although it is true that NAS and block architectures are different and, likewise, their scaling models and bottlenecks are generally different, this perception is mostly rooted in how people have used NAS historically.

The reality is that it's absolutely possible to build an enterprise-class NAS infrastructure. NFS datastores can support a broad range of virtualized workloads and do not require you to relocate the VM swap. However, in cases where NFS will be supporting a broad set of production VM workloads, you will need to pay attention to the NFS server backend design and network infrastructure. You need to apply the same degree of care to bet-the-business NAS as you would if you were using block storage via Fibre Channel, FCoE, or iSCSI. With vSphere, your NFS server isn't being used as a traditional file server, where performance and availability requirements are relatively low. Rather, it's being used as an NFS server supporting a mission-critical application—in this case the vSphere environment and all the VMs on those NFS datastores.

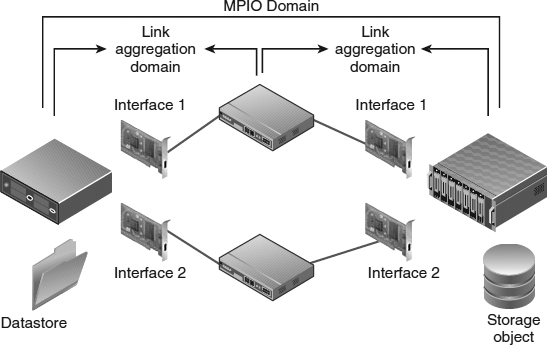

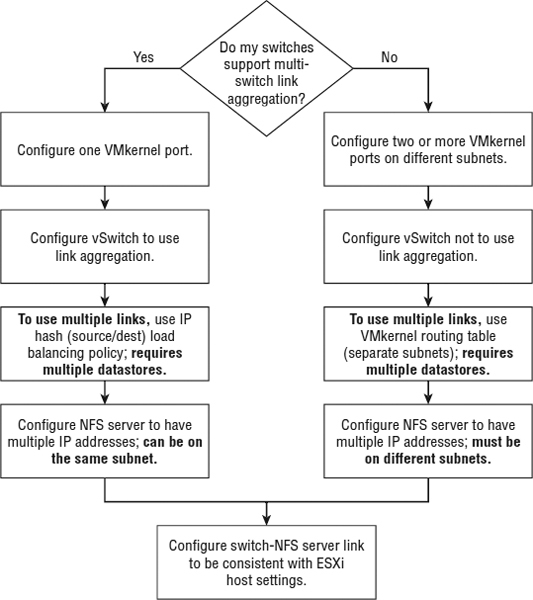

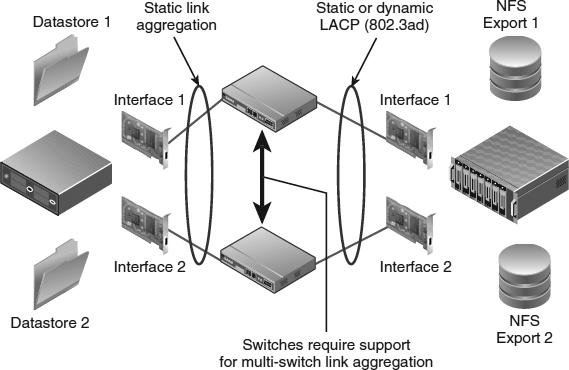

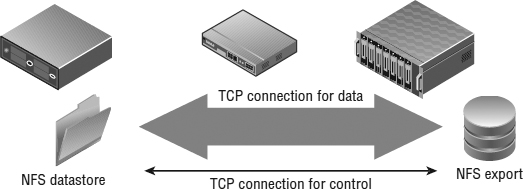

We mentioned previously that vSphere implements an NFSv3 client using TCP. This is important to note because it directly impacts your connectivity options. Each NFS datastore uses two TCP sessions to the NFS server: one for NFS control traffic and the other for NFS data traffic. In effect, the vast majority of the NFS traffic for a single datastore will use a single TCP session. Consequently, link aggregation (which works on a per-flow basis from one source to one target) will use only one Ethernet link per datastore, regardless of how many links are included in the link aggregation group. To use the aggregate throughput of multiple Ethernet interfaces, you need multiple datastores, and no single datastore will be able to use more than one link's worth of bandwidth. The approach available to iSCSI (multiple iSCSI sessions per iSCSI target) is not available in the NFS use case. We'll discuss techniques for designing high-performance NFS datastores in the section titled “Crafting a Highly Available NFS Design.”
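The per-flow behavior of link aggregation can be illustrated with a simplified model. This sketch assumes an IP-hash style policy that XORs the source and destination addresses and takes the result modulo the number of uplinks; real switches and vSwitch policies may hash on other fields, and the IP addresses below are invented for the example.

```python
import ipaddress


def select_uplink(src_ip: str, dst_ip: str, uplink_count: int) -> int:
    """Pick an uplink index for a flow using a simplified IP-hash (assumed policy)."""
    if uplink_count <= 0:
        raise ValueError("uplink_count must be positive")
    src = int(ipaddress.ip_address(src_ip))
    dst = int(ipaddress.ip_address(dst_ip))
    return (src ^ dst) % uplink_count


vmkernel_ip = "10.0.0.11"   # hypothetical VMkernel port address
uplinks = 2                 # links in the aggregation group

# One datastore: one source, one target, so every frame lands on the same link.
print(select_uplink(vmkernel_ip, "10.0.0.101", uplinks))  # prints 0

# A second datastore mounted from a different server address is a different
# flow, so under this model it can hash to the other link.
print(select_uplink(vmkernel_ip, "10.0.0.102", uplinks))  # prints 1
```

Under this model, adding more links to the group does nothing for a single datastore; only additional flows, meaning additional datastores reached over different address pairs, can spread across the links.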

As in the previous sections that covered the common storage array architectures, the protocol choices available to the vSphere administrator are broad. You can make most vSphere deployments work well on all protocols, and each has advantages and disadvantages. The key is to understand and determine what will work best for you. In the following section, we'll summarize how to make these basic storage choices.

Making Basic Storage Choices

Most vSphere workloads can be met by midrange array architectures (regardless of active-active, active-passive, asymmetrical, or virtual port design). Use enterprise array designs when mission-critical and very large-scale virtual datacenter workloads demand uncompromising availability and performance linearity.

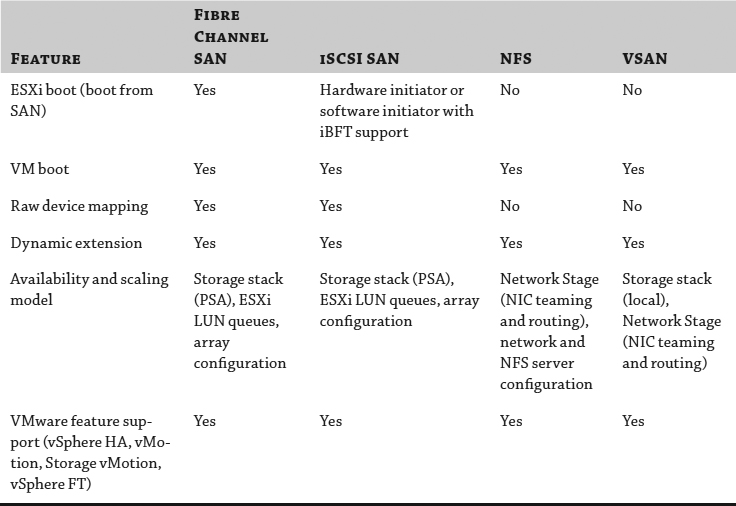

As shown in Table 6.1, each storage choice can support most use cases. It's not about one versus the other but rather about understanding and leveraging their differences and applying them to deliver maximum flexibility.

TABLE 6.1: Storage choices

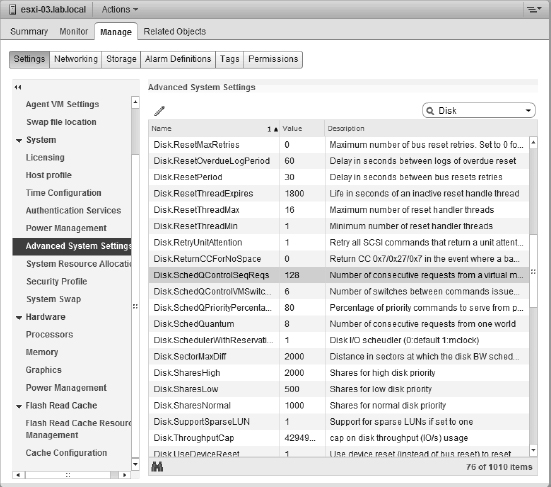

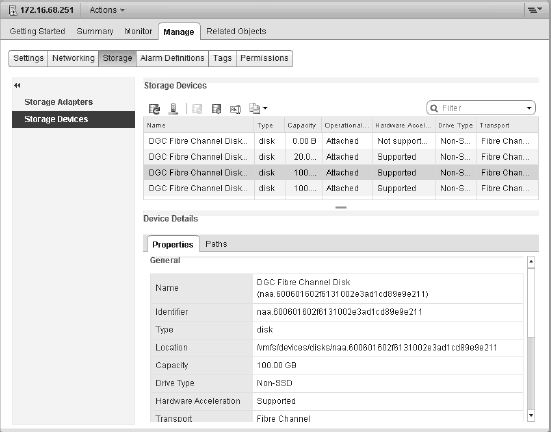

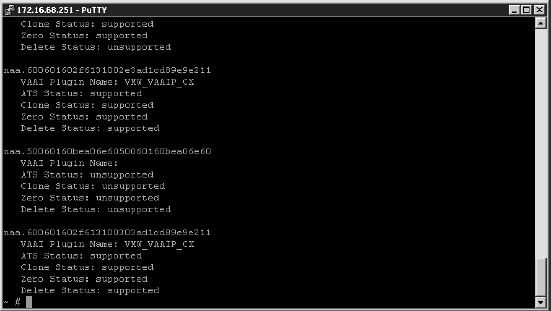

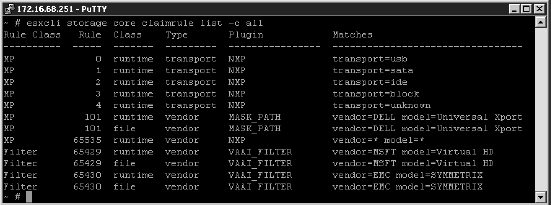

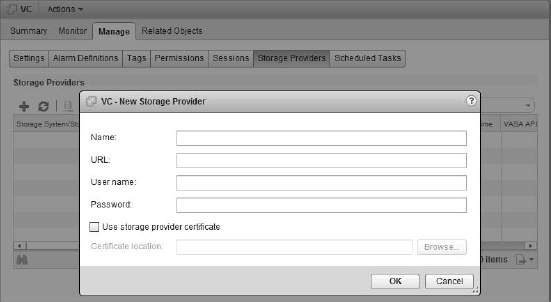

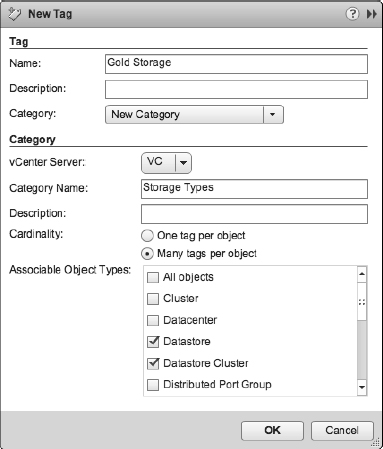

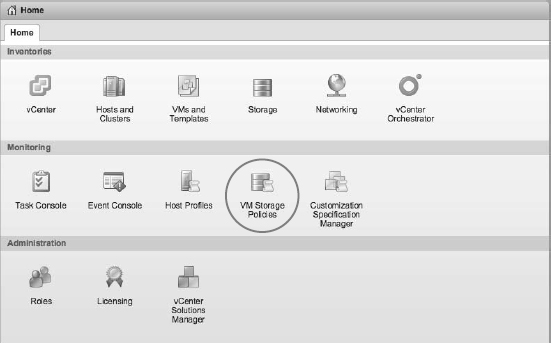

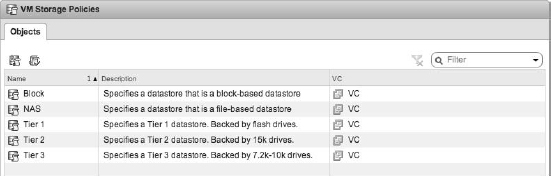

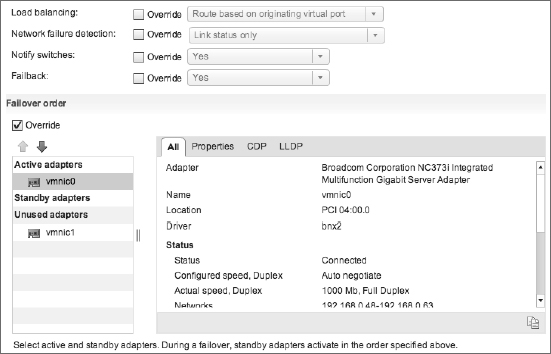

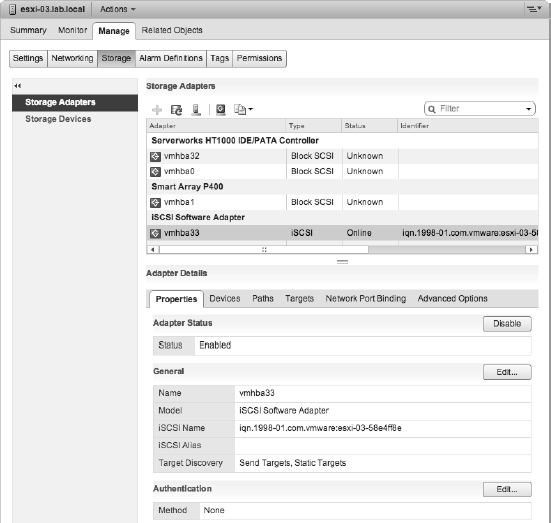

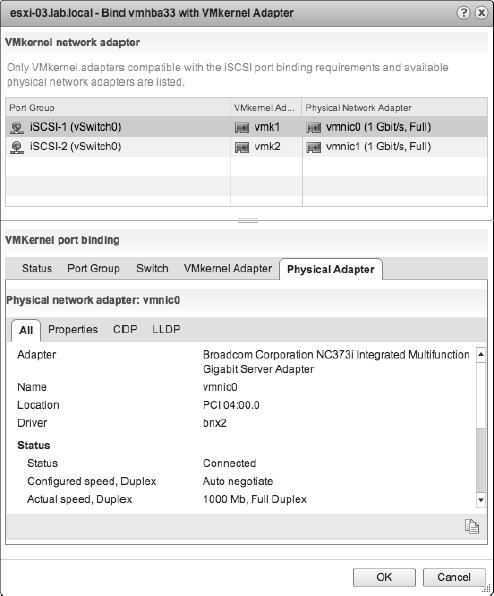

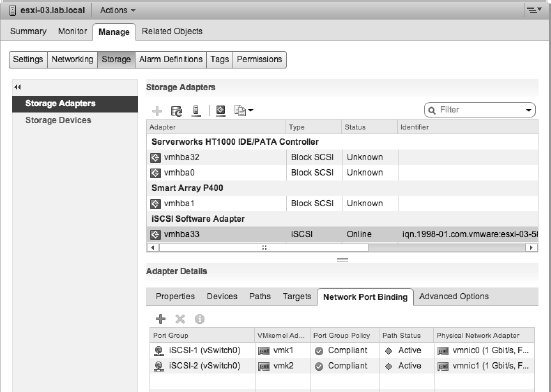

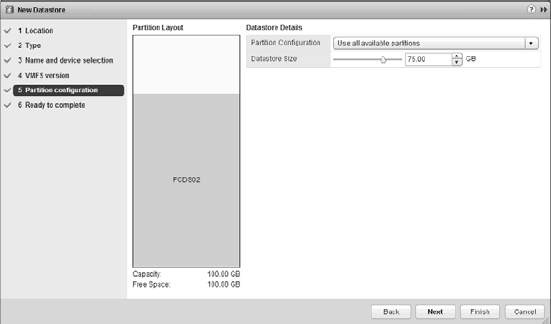

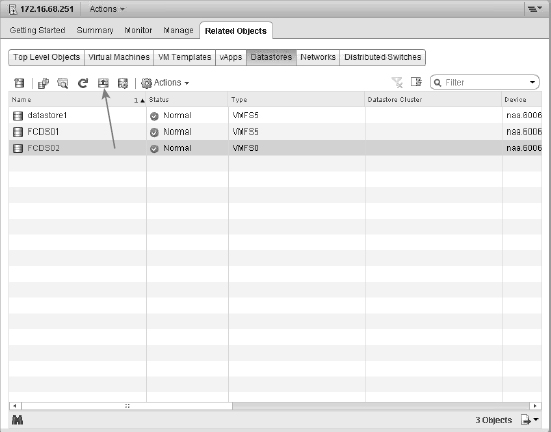

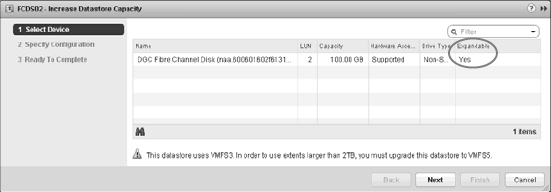

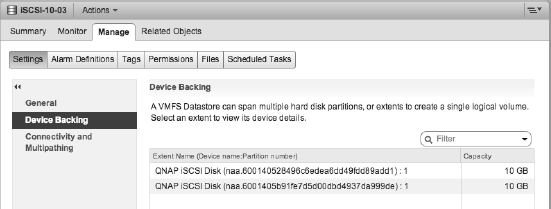

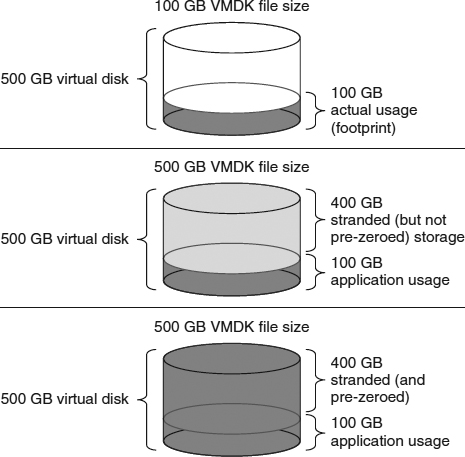

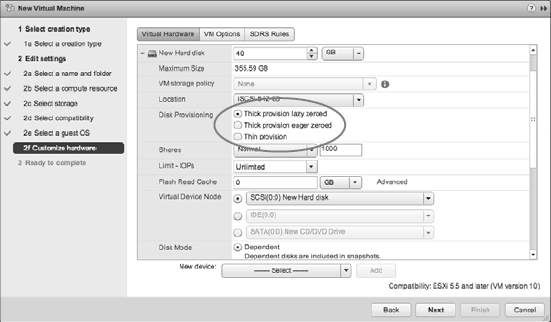

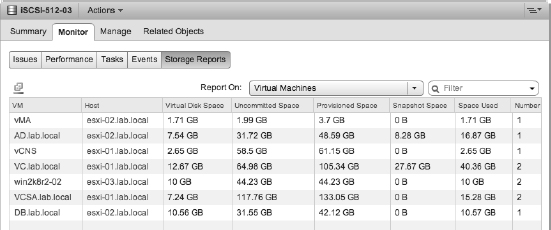

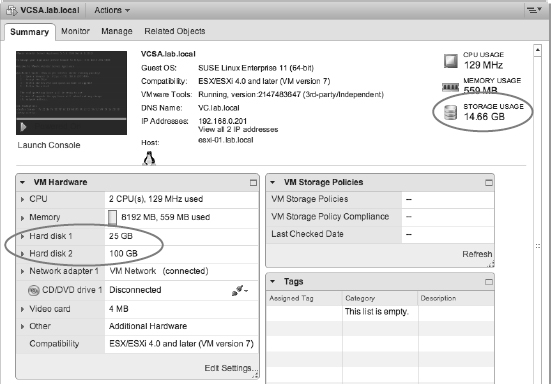

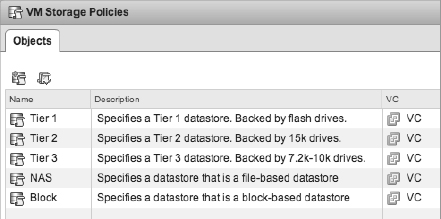

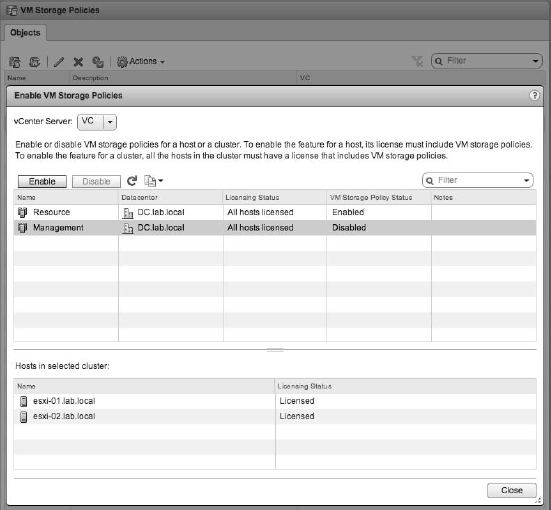

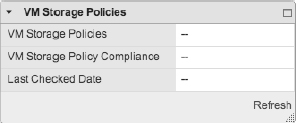

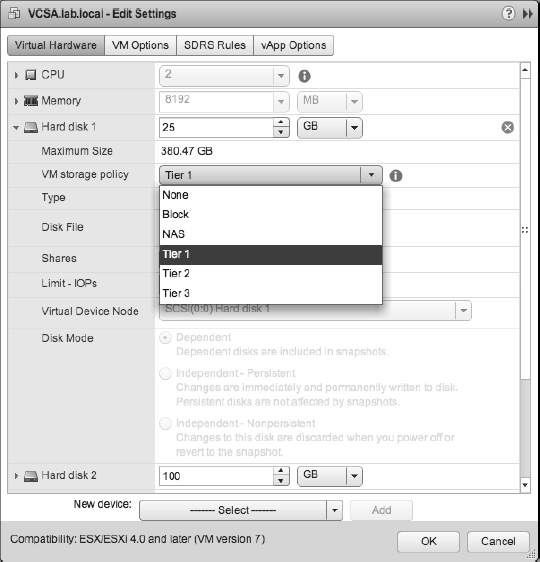

Picking a protocol type has historically been focused on the following criteria: