Poisson Events

I want to establish some of the properties of Poisson processes that were employed in Chapters 3 and 6 and were either left unproven there or explained by simple heuristics. Certain standard results, such as the mean and variance of a Poisson distribution, can be found in the books by Ross [99, 100], the ones chosen among the many other possible references in probability that could have been cited.

Note that throughout this appendix I assume a certain familiarity with the notion of conditioning one random variable on another, as explained, for example, in Chapters 6 and 7 of Ross [99] and in Appendix D.

To begin with, let’s return to two results stated but not proved in Section 3.2. First, Let Ni(t) be independent Poisson random variables at rates λi, for i = 1, 2, . . . , m. Then the sum N(t) = N1(t) + N2(t) + · · · + Nm(t) is also Poisson, at rate λ = λ1 + λ2 + · · · + λm.

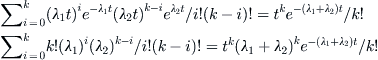

To establish this, begin with the case m = 2. Since the event N(t) = k occurs in k + 1 disjoint ways, namely, N1(t) = i and N2(t) = k – i, for i = 0, 1, . . . , k, we can sum over k + 1 events to obtain

![]()

Moreover, the separate counting processes are statistically independent, and so the last sum becomes

using the binomial theorem. Now proceed by induction. If the sum of the first m – 1 processes is already Poisson, then all we need do is to add the last one to this sum, which is again the case of two Poisson processes considered earlier.

Before I give the next result, recall that a continuous random variable T is said to be exponentially distributed at rate μ if

![]()

The expected value of T is readily verified to be 1/μ (Ross [100]), and we show later that the interarrival time between two Poisson arrivals is exponential. I assume that for now, and suppose there are two concurrent and independent Poisson processes at rates μ1 and μ2, respectively. A patient observer will see the next arrival from either the first or the second process. The probability that the next occurrence is actually from process i, 1 ≤ i ≤ 2, is μi/μ, where μ is the sum μ1 + μ2. We prove this in terms of the interarrival times T and T ′ of two simultaneous Poisson processes. Our second result from Section 3.2 is then:

Let T and T′ be independent and exponentially distributed random variables at rates μ1 and μ2. These define the interarrival times from two Poisson processes at rates μ1 and μ2. The probability that the first arrival occurs from the process having rate i is μi/μ, where μ is the sum of μ1 and μ2.

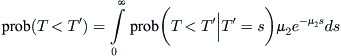

To prove this, let i = 1. A similar argument then applies to i = 2. We need to compute prob(T < T′). The density function of the variable T′ is ![]() , and so, by conditioning on the variable T′, we obtain

, and so, by conditioning on the variable T′, we obtain

However, since T and T ′ are independent, the last integral becomes

I now want to sketch some details regarding the intimate connection that exists between Poisson processes and the exponential random variables. We need to assume the properties of independence and stationary increments that were detailed in Section 3.2 for a Poisson counting process N(t) having the rate constant λ.

To begin, let P0(t) denote prob(N(t) = 0). Then P0(t + h) = prob(N(t) = 0, N(t + h) – N(t) = 0) = P0(t)P0(h). But P0(h) = 1 – prob(N(t) ≥ 1) = 1 – λh + o(h). It follows that [P0{(t + h) – P0(t)}]/h = [–P0(t)λh + o(h)]/h = λP0(t) + o(h)/h, which tends to –P0(t)λ as h goes to zero. This gives us the elementary differential equation P0′(t) = –P0(t)λ, whose solution is P0(t) = e–λt.

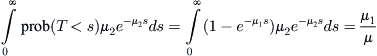

Let T1, T2, . . . be the interarrival times of the Poisson process in which T1 is the time of the first arrival, T2 the time from the first to the second arrival, and so forth. We have just established that T1 is exponentially distributed since prob(T1 < t) = 1 – e–λt. Consider, next, the event T2 > t. From Appendix D we know, using the expected value, that prob(T2 > t) = E(prob(T2 > t|T1)). But for each sample value s of T1 we get prob(T2 > t|T1 = s) = prob(N(s + t) – N(s) = 0|T1 = s) = prob(N(t) = 0) = e–λt. Therefore E(prob(T2 > t|T1)) = ∫ e–λt λe–λs ds = eλt. It follows that T2 is also exponentially distributed and that T1, T2 are independent random variables, each having mean 1/λ. By induction this remains true for all interarrival times. Since N(t) is the number of arrivals taking place up to time t, then, as a little thought shows,

![]()

if and only if N(t) ≤ n = 1. It follows from this that

![]()

which, by differentiation, gives the density function of Sn as

![]()

This is known as the gamma density for the random variable Sn, which is the sum of n independent and exponentially distributed variables.

Conversely, suppose that T1, T2, . . . are independent and exponentially distributed with a sum Sn that is gamma distributed as previously. Now, prob(N(t) = n) = prob(N(t) ≥ n) – prob(N(t) ≥ n – 1) = prob(Sn ≤ t) – prob(Sn–1 ≤ t) =

and, consequently, after integrating by parts, we find that prob(N(t) = n) = (λt)ne–λt/n!, which establishes that N(t) is Poisson-distributed.

The Poisson probability distribution is sometimes considered the most random of random processes. To explain this, suppose that there are exactly N arrivals from a Poisson process on an interval of length T. Then, ignoring their order of appearance, the N arrival times can be obtained by throwing darts at random, that is, as N independent samples from a uniform distribution; the darts are just as likely to penetrate one part of [0, T] as any other. Put another way, the N arrival events are indistinguishable from N points chosen independently in the interval [0, T], with no bias in favor of choosing one part of the interval over another. This means, of course, that the points are unlikely to be evenly distributed; indeed, some parts of the interval will see clumps of points, whereas other segments will be sparsely populated. This is another version of the clustering phenomenon that is so characteristic of randomness.

It is this link between the Poisson and uniform distributions that makes a good fit of empirical data to a Poisson distribution the quintessential tool for inferring the data’s randomness. Because of the significance of this result and because its proof is a bit tricky, I give a detailed argument shortly.

First, however, a random variable X is said to be uniformly distributed on [0, T] if prob(Y ≤ y) = y/T, and its density function f(t) = prob(Y = y) is therefore the constant 1/T. Let Y1, . . . , Yn be independent random variables uniformly distributed on [0, T]. Then their joint density function is ∏ f(yk) = 1/T n, with k summed from 1 to n.

Define random variables Y(k) for k = 1, . . . , n, by Y(k) is the kth-smallest value among the Yk. That is, if 0 < y1 < … < yn < T are values taken on by the Yk written in increasing order, then Y(1) = y1, . . . , Y(n) = yn. For example, if n = 3 and Y1 = 3, Y2 = 1, Y3 = 4, then Y(1) = 1, Y(2) = 3, Y(3) = 4.

There are n! distinct ways that the Yk variables can take on the values y1 < … < yn. Suppose that yj1, . . . , yjn is one of these permutations. Then

![]()

Each of the permutations corresponds to one of n! disjoint events describing how the Yk variables can take on the values y1, . . . , yn. Therefore,

![]()

This expression is the density function obtained by ordering in increasing rank the n! distinct possibilities for n independent uniform variables to take on a set of n values. This is known as the ordered statistics of the uniform variables.

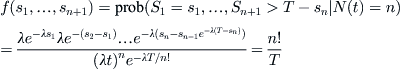

Now suppose that there are n arrivals of a Poisson process in [0, T], and let Sk, k = 1, 2, . . . , n + 1 denote the successive gap lengths of these arrivals. That is, if the first and second arrival take place at times s1 and s2, then S1 = s1 and S2 = s2 – s1, and so forth, with Sn+1 > T – sn. From Bayes’ rule we find that

We therefore obtain that the n arrival times of a Poisson process in [0, T], considered as unordered random variables, have the same distribution as the ordered statistics of n independent uniformly distributed random variables. This is the property of randomness that we were seeking, as mentioned earlier.

A similar result holds for spatial Poisson processes. A formal definition of such processes is given in terms of a region R of area A(R) and a function N(R) that counts the number of arrivals in R. Then prob(N(R) = k) = (λA(R))ke–λA(R)/k!, where λ is the average number of arrivals per unit area. The probability depends on area but not on the shape of R.

Suppose we know that there is one arrival in R, then the probability that the arrival lies within the subset S is, because of independence, prob(N(S) = 1|N(R) = 1) = prob(N(S) = 1, N(R – A) = 0)/prob(N(R) = 1) = λA(S)e–λA(S)e–λ(R-S)/λA(R)e–λA(R) = A(S)/A(R). That is, the arrival is uniformly distributed within R. The result extends to n arrivals.

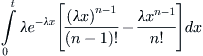

In Section 6.6 a statistical technique, the chi-squared test, was introduced to assess whether a set of sample data can be plausibly said to come from a Poisson distribution, namely, if we are willing to reject, with some degree of confidence, the null hypothesis that the data is not Poisson. I want to mention here another simple but rarely quoted test for the uniform distribution of n variables known as Sherman’s statistic, which dates from 1950 (Bartholomew [9]). The setting is familiar. We are given n arrival points xk in, say, an interval of length T, which results in n + 1 interval gaps between the arrivals, defined by sk, with s1 = x1, s2 = x2 – x1, . . . , sn+1 = T – xn. We want to determine if the unordered arrivals are n samples from a uniform distribution on [0, T], and to this end we define a quantity

![]()

which measures the deviation between the gap lengths and the average gap T/(n + 1). Note that a large value of wn is unlikely to arrive by chance, since it would imply that some intervals between events are too large and others too small, which suggests a nonrandom mechanism. On the other hand, a very small value of wn tells us that the intervals are spaced fairly regularly; again we do not attribute this to happenstance.