Now that our Notes application can save its data in a database, we can think about the next phase of making this a real application—namely, authenticating our users.

It's so natural to log in to a website to use its services. We do it every day, and we even trust banking and investment organizations to secure our financial information through login procedures on a website. The HyperText Transfer Protocol (HTTP) is a stateless protocol, and a web application cannot tell much about one HTTP request compared with another. Because HTTP is stateless, HTTP requests do not natively know the user's identity, whether the user driving the web browser is logged in, or even whether the HTTP request was initiated by a human being.

The typical method for user authentication is to send a cookie containing a token to the browser, to carry the user's identity, and indicate whether that browser is logged in.

With Express, the best way to do this is with the express-session middleware, which handles session management with a cookie. It is easy to configure but is not a complete solution for user authentication since it does not handle user login/logout.

The package that appears to be leading the pack in user authentication is Passport (http://passportjs.org/). In addition to authenticating users against local user information, it supports a long list of third-party services against which to authenticate. With this, a website can be developed that lets users sign up with credentials from another website—Twitter, for example.

We will use Passport to authenticate users against either a locally stored database or a Twitter account. We'll also take this as an opportunity to explore a representational state transfer (REST)-based microservice with Node.js.

The rationale for a separate microservice is the opportunity to increase security by storing user information in a highly protected enclave. Many application teams keep user information in a well-protected, barricaded area, with a strictly controlled application programming interface (API) and even strictly controlled physical access to the user information database, implementing as many technological barriers as possible against unapproved access. We're not going to go quite that far, but by the end of the book, the user information service will be deployed in its own Docker container.

In this chapter, we'll discuss the following three aspects of this phase:

- Creating a microservice to store user profile/authentication data.

- Authenticating a user with a locally stored password.

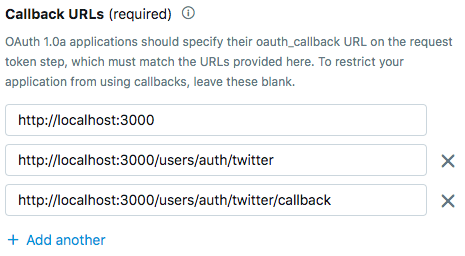

- Using OAuth2 to support authentication via third-party services. Specifically, we'll use Twitter as a third-party authentication service.

Let's get started!

The first thing to do is to duplicate the code used for the previous chapter. For example, if you kept that code in the chap07/notes directory, create a new directory, chap08/notes.

Creating a user information microservice

We could implement user authentication and accounts by simply adding a user model and a few routes and views to the existing Notes application. While that's easy, is this what is done in a real-world production application?

Consider the high value of user identity information and the super-strong need for robust and reliable user authentication. Website intrusions happen regularly, and it seems the item most frequently stolen is user identities. To that end, we declared earlier an intention to develop a user information microservice, but we must first discuss the technical rationale for doing so.

Microservices are not a panacea, of course, meaning we shouldn't try to force-fit every application into the microservice box. By analogy, microservices fit with the Unix philosophy of small tools, each doing one thing well, which we mix/match/combine into larger tools. Another word for this is composability. While we can build a lot of useful software tools with that philosophy, does it work for applications such as Photoshop or LibreOffice?

Composability is one reason microservices are popular today among application teams. Microservice architectures are more agile if used well. And, as we noted earlier, we're aiming for a highly secured microservice deployment.

With that decision out of the way, there are two other decisions to be made with regard to security implications. They are as follows:

- Do we create our own REST application framework?

- Do we create our own user login/authentication framework?

In many cases, it is better to use a well-regarded existing library where the maintainers have already stomped out lots of bugs, just as we used the Sequelize ORM (Object-Relational Mapping) library in the previous chapter, because of its maturity. We have identified two libraries for this phase of the Notes project.

We already mentioned using Passport for user login support, as well as authenticating Twitter users.

For REST support, we could have continued using Express, but instead will use Restify (http://restify.com/), which is a popular REST-centric application framework.

To test the service, we'll write a command-line tool for administering user information in the database. We won't be implementing an administrative user interface in the Notes application, and will instead rely on this tool to administer the users. As a side effect, we'll have a tool for testing the user service.

Once this service is functioning correctly, we'll set about modifying the Notes application to access user information from the service, while using Passport to handle authentication.

The first step is to create a new directory to hold the user information microservice. This should be a sibling directory to the Notes application. If you created a directory named chap08/notes to hold the Notes application, then create a directory named chap08/users to hold the microservice.

Then, in the chap08/users directory, run the following commands:

$ cd users

$ npm init

.. answer questions

.. name - user-auth-server

$ npm install debug@^4.1.x fs-extra@^9.x js-yaml@^3.14.x restify@^8.5.x restify-clients@^2.6.x sequelize@^6.x sqlite3@^5.x commander@^5.x cross-env --save

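This gets us ready to start coding. We'll use the debug module for logging messages, js-yaml to read the Sequelize configuration file, restify for its REST framework, and sequelize/sqlite3 for database access. The commander and restify-clients packages are for the command-line tool we'll build later in the chapter, and cross-env is used in the start script to set environment variables.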

In the sections to come, we will develop a database model to store user information, and then create a REST service to manage that data. To test the service, we'll create a command-line tool that uses the REST API.

Developing the user information model

We'll be storing the user information using a Sequelize-based model in a SQL database. We went through that process in the previous chapter, but we'll do it a little differently this time. Rather than go for the ultimate flexibility of using any kind of database, we'll stick with Sequelize since the user information model is very simple and a SQL database is perfectly adequate.

The project will contain two modules. In this section, we'll create users-sequelize.mjs, which will define the SQUser schema and a couple of utility functions. In the next section, we'll start on user-server.mjs, which contains the REST server implementation.

First, let's ponder an architectural preference. Just how much should we separate the data model code that interfaces with the database from the REST server code? In the previous chapter, we went for a clean abstraction with several implementations of the database storage layer. For a simple server such as this, the REST request handler functions could contain all database calls, with no abstraction layer. Which is the best approach? We don't have a hard rule to follow. For this server, we will have database code more tightly integrated with the router functions, with a few shared functions.

Create a new file named users-sequelize.mjs in users containing the following code:

import Sequelize from "sequelize";

import { default as jsyaml } from 'js-yaml';

import { promises as fs } from 'fs';

import * as util from 'util';

import DBG from 'debug';

const log = DBG('users:model-users');

const error = DBG('users:error');

var sequlz;

export class SQUser extends Sequelize.Model {}

export async function connectDB() {

if (sequlz) return sequlz;

const yamltext = await fs.readFile(process.env.SEQUELIZE_CONNECT,

'utf8');

const params = jsyaml.safeLoad(yamltext);

if (typeof process.env.SEQUELIZE_DBNAME !== 'undefined'

&& process.env.SEQUELIZE_DBNAME !== '') {

params.dbname = process.env.SEQUELIZE_DBNAME;

}

if (typeof process.env.SEQUELIZE_DBUSER !== 'undefined'

&& process.env.SEQUELIZE_DBUSER !== '') {

params.username = process.env.SEQUELIZE_DBUSER;

}

if (typeof process.env.SEQUELIZE_DBPASSWD !== 'undefined'

&& process.env.SEQUELIZE_DBPASSWD !== '') {

params.password = process.env.SEQUELIZE_DBPASSWD;

}

if (typeof process.env.SEQUELIZE_DBHOST !== 'undefined'

&& process.env.SEQUELIZE_DBHOST !== '') {

params.params.host = process.env.SEQUELIZE_DBHOST;

}

if (typeof process.env.SEQUELIZE_DBPORT !== 'undefined'

&& process.env.SEQUELIZE_DBPORT !== '') {

params.params.port = process.env.SEQUELIZE_DBPORT;

}

if (typeof process.env.SEQUELIZE_DBDIALECT !== 'undefined'

&& process.env.SEQUELIZE_DBDIALECT !== '') {

params.params.dialect = process.env.SEQUELIZE_DBDIALECT;

}

log('Sequelize params '+ util.inspect(params));

sequlz = new Sequelize(params.dbname, params.username,

params.password, params.params);

SQUser.init({

username: { type: Sequelize.STRING, unique: true },

password: Sequelize.STRING,

provider: Sequelize.STRING,

familyName: Sequelize.STRING,

givenName: Sequelize.STRING,

middleName: Sequelize.STRING,

emails: Sequelize.STRING(2048),

photos: Sequelize.STRING(2048)

}, {

sequelize: sequlz,

modelName: 'SQUser'

});

await SQUser.sync();

return sequlz;

}

As with our Sequelize-based model for Notes, we will use a YAML Ain't Markup Language (YAML) file to store connection configuration. We're even using the same environment variable, SEQUELIZE_CONNECT, and the same approach to overriding fields of the configuration. The approach is similar, with a connectDB function setting up the connection and initializing the SQUsers table.

With this approach, we can use a base configuration file in the SEQUELIZE_CONNECT variable and then use the other environment variables to override its fields. This will be useful when we start deploying Docker containers.
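The override logic in connectDB repeats the same test for each variable. As a minimal sketch of the same idea, the mapping from environment variable to configuration field can be written as a table. The applyEnvOverrides helper and the sample values are hypothetical, used only for illustration, and the function takes the environment as an argument so that it can be tried without touching process.env.

```javascript
// Hypothetical helper: condenses the override checks in connectDB
// into a lookup table. Not part of the chapter's code.
const overrides = [
    // [ environment variable, where its value goes in the config ]
    ['SEQUELIZE_DBNAME',    (p, v) => { p.dbname = v; }],
    ['SEQUELIZE_DBUSER',    (p, v) => { p.username = v; }],
    ['SEQUELIZE_DBPASSWD',  (p, v) => { p.password = v; }],
    ['SEQUELIZE_DBHOST',    (p, v) => { p.params.host = v; }],
    ['SEQUELIZE_DBPORT',    (p, v) => { p.params.port = v; }],
    ['SEQUELIZE_DBDIALECT', (p, v) => { p.params.dialect = v; }]
];

function applyEnvOverrides(params, env) {
    for (const [name, assign] of overrides) {
        const value = env[name];
        // Same test as connectDB: ignore unset or empty variables.
        if (typeof value !== 'undefined' && value !== '') {
            assign(params, value);
        }
    }
    return params;
}

// Base configuration, as it would be parsed from the YAML file.
const base = {
    dbname: 'users', username: null, password: null,
    params: { dialect: 'sqlite', storage: 'users-sequelize.sqlite3' }
};

// Example environment (assumed values, for illustration only).
const result = applyEnvOverrides(base, {
    SEQUELIZE_DBNAME: 'users-test',
    SEQUELIZE_DBHOST: '',
    SEQUELIZE_DBDIALECT: 'mysql'
});
console.log(result.dbname, result.params.dialect, result.params.host);
// prints: users-test mysql undefined
```

The empty SEQUELIZE_DBHOST value is skipped, so the host field is never set, while the two non-empty variables replace the values from the base configuration.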

The SQUser schema follows the normalized user profile defined by Passport. The Passport project developed this profile by harmonizing the user information given by several third-party services into a single object definition. To simplify our code, we're simply using the schema defined by Passport.

There are several functions to create that will be an API to manage user data. Let's add them to the bottom of users-sequelize.mjs, starting with the following code:

export function userParams(req) {

return {

username: req.params.username,

password: req.params.password,

provider: req.params.provider,

familyName: req.params.familyName,

givenName: req.params.givenName,

middleName: req.params.middleName,

emails: JSON.stringify(req.params.emails),

photos: JSON.stringify(req.params.photos)

};

}

In Restify, the route handler functions supply the same sort of request and response objects we've already seen. We'll go over the configuration of the REST server in the next section. Suffice to say that REST parameters arrive in the request handlers as the req.params object, as shown in the preceding code block. The userParams function gathers those parameters into a simple object that happens to match the SQUser schema. The next function goes in the other direction, preparing a user object to send back to the caller:

export function sanitizedUser(user) {

var ret = {

id: user.username,

username: user.username,

provider: user.provider,

familyName: user.familyName,

givenName: user.givenName,

middleName: user.middleName

};

try {

ret.emails = JSON.parse(user.emails);

} catch(e) { ret.emails = []; }

try {

ret.photos = JSON.parse(user.photos);

} catch(e) { ret.photos = []; }

return ret;

}

When we fetch an SQUser object from the database, Sequelize gives us a Sequelize object that has many extra fields and functions used by Sequelize. We don't want to send that data to our callers. Furthermore, we think it will increase security to not provide the password data beyond the boundary of this server. The sanitizedUser function produces a simple, sanitized, anonymous JavaScript object from the SQUser instance. We could have defined a full JavaScript class, but would that have served any purpose? An anonymous JavaScript object is sufficient for this simple server. Next, add the following pair of functions:

export async function findOneUser(username) {

let user = await SQUser.findOne({ where: { username: username } });

user = user ? sanitizedUser(user) : undefined;

return user;

}

export async function createUser(req) {

let tocreate = userParams(req);

await SQUser.create(tocreate);

const result = await findOneUser(req.params.username);

return result;

}

The pair of functions shown in the preceding code block provides some database operations that are used several times in the user-server.mjs module.

In findOneUser, we are looking up a single SQUser, and then returning a sanitized copy. In createUser, we gather the user parameters from the request object, create the SQUser object in the database, and then retrieve that newly created object to return it to the caller.
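To make the data flow concrete, here is a minimal sketch of the round trip for the emails and photos fields: stored as JSON text, parsed back into arrays on the way out, with the password left behind. The row object is a hypothetical stand-in for an SQUser instance, and sanitize is a trimmed-down copy of sanitizedUser written only for this illustration.

```javascript
// Hypothetical stand-in for a row fetched from the SQUser table.
// Array-valued fields are stored as JSON text, as userParams does.
const row = {
    username: 'me',
    password: 'w0rd',
    provider: 'local',
    emails: JSON.stringify(['me@example.com']),
    photos: 'not valid JSON'     // simulate a damaged column value
};

// Trimmed-down version of sanitizedUser, for illustration only.
function sanitize(user) {
    const ret = {
        id: user.username,
        username: user.username,
        provider: user.provider
    };
    // Fall back to an empty array when the stored text cannot be parsed.
    try { ret.emails = JSON.parse(user.emails); } catch (e) { ret.emails = []; }
    try { ret.photos = JSON.parse(user.photos); } catch (e) { ret.photos = []; }
    return ret;
}

const safe = sanitize(row);
console.log(safe.emails);            // the original array is restored
console.log(safe.photos);            // empty array after the parse failure
console.log('password' in safe);     // false
```

Because the sanitized object is built field by field, anything not copied explicitly, including the password, cannot leak to the caller by accident.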

If you refer back to the connectDB function, there is a SEQUELIZE_CONNECT environment variable for the configuration file. Let's create one for SQLite3 that we can name sequelize-sqlite.yaml, as follows:

dbname: users

username:

password:

params:

dialect: sqlite

storage: users-sequelize.sqlite3

This is just like the configuration files we used in the previous chapter.

That's what we need for the database side of this service. Let's now move on to creating the REST service.

Creating a REST server for user information

The user information service is a REST server to handle user information data and authentication. Our goal is, of course, to integrate that with the Notes application, but in a real project, such a user information service could be integrated with several web applications. The REST service will provide functions we found useful while developing the user login/logout support in Notes, which we'll show later in the chapter.

In the package.json file, change the main tag to the following line of code:

"main": "user-server.mjs",

This declares that the module we're about to create, user-server.mjs, is the main entry point of this package.

Make sure the scripts section contains the following script:

"start": "cross-env DEBUG=users:* PORT=5858 SEQUELIZE_CONNECT=sequelize-sqlite.yaml node ./user-server.mjs"

Clearly, this is how we'll start our server. It uses the configuration file from the previous section and specifies that we'll listen on port 5858.

Then, create a file named user-server.mjs containing the following code:

import restify from 'restify';

import * as util from 'util';

import { SQUser, connectDB, userParams, findOneUser,

createUser, sanitizedUser } from './users-sequelize.mjs';

import DBG from 'debug';

const log = DBG('users:service');

const error = DBG('users:error');

///////////// Set up the REST server

var server = restify.createServer({

name: "User-Auth-Service",

version: "0.0.1"

});

server.use(restify.plugins.authorizationParser());

server.use(check);

server.use(restify.plugins.queryParser());

server.use(restify.plugins.bodyParser({

mapParams: true

}));

server.listen(process.env.PORT, "localhost", function() {

log(server.name +' listening at '+ server.url);

});

process.on('uncaughtException', function(err) {

console.error("UNCAUGHT EXCEPTION - "+ (err.stack || err));

process.exit(1);

});

process.on('unhandledRejection', (reason, p) => {

console.error(`UNHANDLED PROMISE REJECTION: ${util.inspect(p)} reason: ${reason}`);

process.exit(1);

});

We're using Restify, rather than Express, to develop this server. Obviously, the Restify API has similarities with Express, since both point to the Ruby framework Sinatra for inspiration. We'll see even more similarities when we talk about the route handler functions.

What we have here is the core setup of the REST server. We created the server object and added a few things that, in Express, were called middleware, but what Restify simply refers to as handlers. A Restify handler function serves the same purpose as an Express middleware function. Both frameworks let you define a function chain to implement the features of your service. One calls it a middleware function and the other calls it a handler function, but they're almost identical in form and function.

We also have a collection of listener functions that print a startup message and handle uncaught errors. You do remember that it's important to catch the uncaught errors?

An interesting thing is that, since REST services are often versioned, Restify has built-in support for handling version numbers. Restify supports semantic versioning (SemVer) version matching in the Accept-Version HTTP header.

The handlers installed so far deal with authorization and with parsing parameters from the Uniform Resource Locator (URL) query string and from the HTTP body. The handlers with names starting with restify.plugins are maintained by the Restify team, and documented on their website.

That leaves the handler simply named check. This handler is in user-server.mjs and provides a simple mechanism of token-based authentication for REST clients.

Add the following code to the bottom of user-server.mjs:

// Mimic API Key authentication.

var apiKeys = [

{ user: 'them', key: 'D4ED43C0-8BD6-4FE2-B358-7C0E230D11EF' } ];

function check(req, res, next) {

if (req.authorization && req.authorization.basic) {

var found = false;

for (let auth of apiKeys) {

if (auth.key === req.authorization.basic.password

&& auth.user === req.authorization.basic.username) {

found = true;

break;

}

}

if (found) next();

else {

res.send(401, new Error("Not authenticated"));

next(false);

}

} else {

res.send(401, new Error('No Authorization Key'));

next(false);

}

}

This handler executes for every request and immediately follows restify.plugins.authorizationParser. It looks for authorization data—specifically, HTTP basic authorization—to have been supplied in the HTTP request. It then loops through the list of keys in the apiKeys array, and if the Basic Auth parameters supplied matched, then the caller is accepted.

This should not be taken as an example of a best practice. HTTP Basic Auth sends the credentials with every request, merely Base64-encoded rather than encrypted, so it is only as safe as the connection carrying it, and here the key is also hardcoded in the source. But it demonstrates the basic concept, and also shows that enforcing token-based authorization is easily done with a similar handler.
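To see why Basic Auth offers no confidentiality by itself, it helps to look at what the client actually sends. The sketch below builds the Authorization header for the key pair used above and then decodes it again. The function names are hypothetical, and the decoding only approximates what an authorization parser does.

```javascript
// Build the header a client sends for HTTP Basic authentication:
// "Basic " followed by base64("username:password").
function basicAuthHeader(username, password) {
    const raw = `${username}:${password}`;
    return 'Basic ' + Buffer.from(raw, 'utf8').toString('base64');
}

// Reverse the encoding. Base64 is an encoding, not encryption, so
// anyone who can read the header can recover the credentials.
function decodeBasicAuth(header) {
    const [scheme, encoded] = header.split(' ');
    if (scheme !== 'Basic' || !encoded) return undefined;
    const raw = Buffer.from(encoded, 'base64').toString('utf8');
    const sep = raw.indexOf(':');   // the password may itself contain ':'
    if (sep < 0) return undefined;
    return { username: raw.slice(0, sep), password: raw.slice(sep + 1) };
}

const header = basicAuthHeader('them', 'D4ED43C0-8BD6-4FE2-B358-7C0E230D11EF');
const creds = decodeBasicAuth(header);
console.log(header.startsWith('Basic '));   // true
console.log(creds.username);                // them
console.log(creds.password === 'D4ED43C0-8BD6-4FE2-B358-7C0E230D11EF'); // true
```

Since the header can be decoded by anyone who sees it, Basic Auth should only travel over an encrypted (HTTPS) connection.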

This also shows us the function signature of a Restify handler function. It is the same signature used for Express middleware: the request and response objects, and the next callback.

There is a big difference between Restify and Express as to how the next callback is used. In Express, remember that a middleware function calls next unless that middleware function is the last function on the processing chain—for example if the function has called res.send (or equivalent) to send a response to the caller. In Restify, every handler function calls next. If a handler function knows it should be the last function on the handler chain, then it uses next(false); otherwise, it calls next(). If a handler function needs to indicate an error, it calls next(err), where err is an object where instanceof Error is true.

Consider the following hypothetical handler function:

server.use((req, res, next) => {

// ... processing

if (foundErrorCondition) {

next(new Error('Describe error condition'));

} else if (successfulConclusion) {

res.send(results);

next(false);

} else {

// more processing must be required

next();

}

});

This shows the following three cases:

- Errors are indicated with next(new Error('Error description')).

- Completion is indicated with next(false).

- The continuation of processing is indicated with next().
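One way to internalize these rules is a toy model of a handler chain. The runChain function below is a hypothetical, heavily simplified stand-in for what a framework does internally (it is not Restify's implementation). It only shows how the three forms of next steer the chain.

```javascript
// Toy model of a handler chain; NOT Restify's real implementation.
function runChain(handlers, req, res) {
    let index = 0;
    function next(arg) {
        if (arg === false) {            // next(false): stop, response is done
            res.log.push('stopped');
            return;
        }
        if (arg instanceof Error) {     // next(err): abort with an error
            res.log.push('error: ' + arg.message);
            return;
        }
        const handler = handlers[index++];
        if (handler) handler(req, res, next);   // next(): run the next handler
        else res.log.push('end of chain');
    }
    next();
    return res.log;
}

const authenticate = (req, res, next) => {
    if (!req.user) return next(new Error('Not authenticated'));
    res.log.push('authenticated ' + req.user);
    next();
};
const respond = (req, res, next) => {
    res.log.push('sent response');
    next(false);
};
const neverReached = (req, res, next) => {
    res.log.push('should not run');
    next();
};

const chain = [authenticate, respond, neverReached];
const okRun = runChain(chain, { user: 'them' }, { log: [] });
const errRun = runChain(chain, {}, { log: [] });
console.log(okRun);
console.log(errRun);
```

In the first run, the chain passes through authenticate, sends the response, and stops before the third handler. In the second run, the error reported by authenticate ends the chain immediately.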

We have created the starting point for a user information data model and the matching REST service. The next thing we need is a tool to test and administer the server.

What we want to do in the following sections is two things. First, we'll create the REST handler functions to implement the REST API. At the same time, we'll create a command-line tool that will use the REST API and let us both test the server and add or delete users.

Creating a command-line tool to test and administer the user authentication server

To give ourselves assurance that the user authentication server works, let's write a tool with which to exercise the server that can also be used for administration. In a typical project, we'd create not only a customer-facing web user interface, but also an administrator-facing web application to administer the service. Instead of doing that here, we'll create a command-line tool.

The tool will be built with Commander, a popular framework for developing command-line tools in Node.js. With Commander, we can easily build a command-line interface (CLI) tool supporting the program verb --option optionValue parameter pattern.

Any command-line tool looks at the process.argv array to know what to do. This array contains strings parsed from what was given on the command line. The concept for all this goes way back to the earliest history of Unix and the C programming language.

By using Commander, we have a simpler path of dealing with the command line. It uses a declarative approach to handling command-line parameters. This means we use Commander functions to declare the options and sub-commands to be used by this program, and then we ask Commander to parse the command line the user supplies. Commander then calls the functions we declare based on the content of the command line.
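For comparison, here is a rough sketch of what handling the program verb --option optionValue parameter pattern by hand might look like. The parseArgs function and the sample argument list are hypothetical. The point is only to show the bookkeeping that Commander takes off our hands.

```javascript
// Hand-rolled parsing of: program verb --option optionValue parameter
// Hypothetical illustration; Commander does this (and much more) for us.
function parseArgs(argv) {
    // argv[0] is the node binary and argv[1] the script; the rest is ours.
    const args = argv.slice(2);
    const parsed = { verb: undefined, options: {}, parameters: [] };
    for (let i = 0; i < args.length; i++) {
        const arg = args[i];
        if (arg.startsWith('--')) {
            // Simplifying assumption: every option takes exactly one value.
            parsed.options[arg.slice(2)] = args[++i];
        } else if (typeof parsed.verb === 'undefined') {
            parsed.verb = arg;
        } else {
            parsed.parameters.push(arg);
        }
    }
    return parsed;
}

// Simulated process.argv for: node cli.mjs add --password w0rd me
const sample = ['/usr/bin/node', 'cli.mjs', 'add', '--password', 'w0rd', 'me'];
const parsedArgs = parseArgs(sample);
console.log(parsedArgs.verb);          // add
console.log(parsedArgs.options);       // { password: 'w0rd' }
console.log(parsedArgs.parameters);    // [ 'me' ]
```

Even this small version ignores short options, missing values, and help text, which is exactly the kind of detail a declarative library handles for us.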

Create a file named cli.mjs containing the following code:

import { default as program } from 'commander';

import { default as restify } from 'restify-clients';

import * as util from 'util';

var client_port;

var client_host;

var client_version = '*';

var client_protocol;

var authid = 'them';

var authcode = 'D4ED43C0-8BD6-4FE2-B358-7C0E230D11EF';

const client = (program) => {

if (typeof process.env.PORT === 'string')

client_port = Number.parseInt(process.env.PORT);

if (typeof program.port === 'string')

client_port = Number.parseInt(program.port);

if (typeof program.host === 'string') client_host = program.host;

if (typeof program.url === 'string') {

let purl = new URL(program.url);

if (purl.host && purl.host !== '') client_host = purl.host;

if (purl.port && purl.port !== '') client_port = purl.port;

if (purl.protocol && purl.protocol !== '') client_protocol =

purl.protocol;

}

let connect_url = new URL('http://localhost:5858');

if (client_protocol) connect_url.protocol = client_protocol;

if (client_host) connect_url.host = client_host;

if (client_port) connect_url.port = client_port;

let client = restify.createJsonClient({

url: connect_url.href,

version: client_version

});

client.basicAuth(authid, authcode);

return client;

}

program

.option('-p, --port <port>',

'Port number for user server, if using localhost')

.option('-h, --host <host>',

'Host name for user server')

.option('-u, --url <url>',

'Connection URL for user server, if using a remote server');

This is just the starting point of the command-line tool. For most of the REST handler functions, we'll also implement a sub-command in this tool. We'll take care of that code in the subsequent sections. For now, let's focus on how the command-line tool is set up.

The Commander project suggests we name the default import program, as shown in the preceding code block. As mentioned earlier, we declare the command-line options and sub-commands by calling methods on this object.

In order to properly parse the command line, the last line of code in cli.mjs must be as follows:

program.parse(process.argv);

The process.argv variable is, of course, the command-line arguments split out into an array. Commander, then, is processing those arguments based on the options' declarations.

For the REST client, we use the restify-clients package. As the name implies, this is a companion package to Restify and is maintained by the Restify team.

At the top of this script, we declare a few variables to hold connection parameters. The goal is to create a connection URL to access the REST service. The connect_url variable is initialized with the default value, which is port 5858 on the localhost.

The function named client looks at the information Commander parses from the command line, as well as a number of environment variables. From that data, it deduces any modification to the connect_url variable. The result is that we can connect to this service on any server from our laptop to a faraway cloud-hosted server.
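The precedence rules are easier to see in a side-effect-free form. The following sketch restates the URL computation as a pure function. The buildConnectUrl name, its two arguments, and the sample host name are assumptions made for this illustration, and it uses hostname rather than host so that the port is always handled separately.

```javascript
// Hypothetical, side-effect-free restatement of the URL logic in client().
// opts stands in for the parsed command-line options, env for process.env.
function buildConnectUrl(opts, env) {
    let protocol, host, port;
    if (typeof env.PORT === 'string') port = env.PORT;     // lowest priority
    if (typeof opts.port === 'string') port = opts.port;   // --port beats PORT
    if (typeof opts.host === 'string') host = opts.host;
    if (typeof opts.url === 'string') {                    // --url beats both
        const given = new URL(opts.url);
        if (given.hostname !== '') host = given.hostname;
        if (given.port !== '') port = given.port;
        if (given.protocol !== '') protocol = given.protocol;
    }
    // Start from the default and apply whatever was supplied.
    const url = new URL('http://localhost:5858');
    if (protocol) url.protocol = protocol;
    if (host) url.hostname = host;
    if (port) url.port = port;
    return url.href;
}

const defaultUrl = buildConnectUrl({}, {});
const portUrl = buildConnectUrl({ port: '4000' }, { PORT: '3000' });
const remoteUrl = buildConnectUrl({ url: 'https://users.example.com:8443' }, {});
console.log(defaultUrl);   // http://localhost:5858/
console.log(portUrl);      // http://localhost:4000/
console.log(remoteUrl);    // https://users.example.com:8443/
```

With no options at all, the default applies. A --port option overrides the PORT environment variable, and a full --url overrides everything else.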

We've also hardcoded the access token and the use of Basic Auth. Put on the backlog a high-priority task to change to a stricter form of authentication.

Where do the values of program.port, program.host, and program.url come from? We declared those variables—that's where they came from.

Consider the following line of code:

program.option('-p, --port <port>', 'Long Description of the option');

This declares an option, either -p or --port, that Commander will parse out of the command line. Notice that all we do is write a text string and, from that, Commander knows it must parse these options. Isn't this easy?

When it sees one of these options, the <port> declaration tells Commander that this option requires an argument. It will parse that argument out of the command line, and then assign it to program.port.

Therefore, program.port, program.host, and program.url were all declared in a similar way. When Commander sees those options, it will create the matching variables, and then our client function will take that data and modify connect_url appropriately.
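As a rough approximation of that mapping, the sketch below derives a property name from an option declaration string. It is illustrative only and is not Commander's source code. The function names are hypothetical, and the second sample declaration is an assumed example of a multi-word option, which is converted to camelCase.

```javascript
// Approximation of how an option declaration maps to a property name.
// Illustrative only; this is not Commander's source code.
function optionProperty(flags) {
    // Pick out the long form, for example "--family-name" from
    // "--family-name <familyName>".
    const match = flags.match(/--([a-z][a-z0-9-]*)/i);
    if (!match) return undefined;
    // Convert dashed words to camelCase: family-name becomes familyName.
    return match[1].replace(/-([a-z])/g, (_, ch) => ch.toUpperCase());
}

// An option requires a value when its declaration contains <...>.
function takesRequiredValue(flags) {
    return /<[^>]+>/.test(flags);
}

console.log(optionProperty('-p, --port <port>'));            // port
console.log(optionProperty('--family-name <familyName>'));   // familyName
console.log(takesRequiredValue('-u, --url <url>'));          // true
```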

One of the side effects of these declarations is that Commander can generate help text automatically. The result we'll achieve is being able to run the following command:

$ node cli.mjs --help

Usage: cli.mjs [options] [command]

Options:

-p, --port <port> Port number for user server, if using localhost

-h, --host <host> Host name for user server

-u, --url <url> Connection URL for user server, if using a remote

server

-h, --help output usage information

Commands:

add [options] <username> Add a user to the user server

find-or-create [options] <username> Add a user to the user server

update [options] <username> Add a user to the user server

destroy <username> Destroy a user on the user server

find <username> Search for a user on the user server

list-users List all users on the user server

The text comes directly from the descriptive text we put in the declarations. Likewise, each of the sub-commands also takes a --help option to print out corresponding help text.

With all that out of the way, let's start creating these commands and REST functions.

Creating a user in the user information database

We have the starting point for the REST server, and the starting point for a command-line tool to administer the server. Let's start creating the functions—and, of course, the best place to start is to create an SQUser object.

In user-server.mjs, add the following route handler:

server.post('/create-user', async (req, res, next) => {

try {

await connectDB();

let result = await createUser(req);

res.contentType = 'json';

res.send(result);

next(false);

} catch(err) {

res.send(500, err);

next(false);

}

});

This handles a POST request on the /create-user URL. This should look very similar to an Express route handler function, apart from the use of the next callback. Refer back to the discussion on this. As we did with the Notes application, we declare the handler callback as an async function and then use a try/catch structure to catch all errors and report them as errors.

The handler starts with connectDB to ensure the database is set up. Then, if you refer back to the createUser function, you see it gathers up the user data from the request parameters and then uses SQUser.create to create an entry in the database. What we will receive here is the sanitized user object, and we simply return that to the caller.

Let's also add the following code to user-server.mjs:

server.post('/find-or-create', async (req, res, next) => {

try {

await connectDB();

let user = await findOneUser(req.params.username);

if (!user) {

user = await createUser(req);

if (!user) throw new Error('No user created');

}

res.contentType = 'json';

res.send(user);

return next(false);

} catch(err) {

res.send(500, err);

next(false);

}

});

This is a variation on creating an SQUser. While implementing login support in the Notes application, there was a scenario in which we had an authenticated user that may or may not already have an SQUser object in the database. In this case, we look to see whether the user already exists and, if not, then we create that user.
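The pattern itself can be tried in isolation. The sketch below uses an in-memory Map as a hypothetical stand-in for the SQUser table, and its functions are synchronous for brevity, whereas the real handlers use the async findOneUser and createUser functions.

```javascript
// In-memory stand-in for the SQUser table (hypothetical; the real
// handlers call the async findOneUser and createUser functions).
const users = new Map();

function findOne(username) {
    return users.get(username);          // undefined when not found
}

function create(profile) {
    users.set(profile.username, { ...profile });
    return findOne(profile.username);
}

// The find-or-create pattern: look first, create only when missing.
function findOrCreate(profile) {
    let user = findOne(profile.username);
    if (!user) {
        user = create(profile);
        if (!user) throw new Error('No user created');
    }
    return user;
}

const first = findOrCreate({ username: 'snuffy-smith', givenName: 'John' });
const second = findOrCreate({ username: 'snuffy-smith', givenName: 'Changed' });
console.log(users.size);          // 1
console.log(second.givenName);    // John
console.log(first === second);    // true
```

The second call finds the existing record and returns it unchanged, so repeated requests for the same user do not create duplicates. Note that looking and then creating is not atomic. In the real service, it is the unique constraint on username in the SQUser schema that guards against duplicates if two requests race.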

Let's turn now to cli.mjs and implement the sub-commands to handle these two REST functions, as follows:

program

.command('add <username>')

.description('Add a user to the user server')

.option('--password <password>', 'Password for new user')

.option('--family-name <familyName>',

'Family name, or last name, of the user')

.option('--given-name <givenName>',

'Given name, or first name, of the user')

.option('--middle-name <middleName>', 'Middle name of the user')

.option('--email <email>', 'Email address for the user')

.action((username, cmdObj) => {

const topost = {

username, password: cmdObj.password, provider: "local",

familyName: cmdObj.familyName,

givenName: cmdObj.givenName,

middleName: cmdObj.middleName,

emails: [], photos: []

};

if (typeof cmdObj.email !== 'undefined')

topost.emails.push(cmdObj.email);

client(program).post('/create-user', topost,

(err, req, res, obj) => {

if (err) console.error(err.stack);

else console.log('Created '+ util.inspect(obj));

});

});

By using program.command, we are declaring a sub-command—in this case, add. The <username> declaration says that this sub-command takes an argument. Commander will provide that argument value in the username parameter to the function passed in the action method.

The structure of a program.command declaration is to first declare the syntax of the sub-command. The description method provides user-friendly documentation. The option method calls are options for this sub-command, rather than global options. Finally, the action method is where we supply a callback function that will be invoked when Commander sees this sub-command in the command line.

Any arguments declared in the program.command string end up as parameters to that callback function.

Any values for the options for this sub-command will land in the cmdObj object. By contrast, the value for global options is attached to the program object.

With that understanding, we can see that this sub-command gathers information from the command line and then uses the client function to connect to the server. It invokes the /create-user URL, passing along the data gathered from the command line. Upon receiving the response, it will print either the error or the result object.

Let's now add the sub-command corresponding to the /find-or-create URL, as follows:

program

.command('find-or-create <username>')

.description('Add a user to the user server')

.option('--password <password>', 'Password for new user')

.option('--family-name <familyName>',

'Family name, or last name, of the user')

.option('--given-name <givenName>',

'Given name, or first name, of the user')

.option('--middle-name <middleName>', 'Middle name of the user')

.option('--email <email>', 'Email address for the user')

.action((username, cmdObj) => {

const topost = {

username, password: cmdObj.password, provider: "local",

familyName: cmdObj.familyName,

givenName: cmdObj.givenName,

middleName: cmdObj.middleName,

emails: [], photos: []

};

if (typeof cmdObj.email !== 'undefined')

topost.emails.push(cmdObj.email);

client(program).post('/find-or-create', topost,

(err, req, res, obj) => {

if (err) console.error(err.stack);

else console.log('Found or Created '+ util.inspect(obj));

});

});

This is very similar, except for calling /find-or-create.

We have enough here to run the server and try the following two commands:

$ npm start

> [email protected] start /home/david/Chapter08/users

> DEBUG=users:* PORT=5858 SEQUELIZE_CONNECT=sequelize-sqlite.yaml node ./user-server.mjs

users:service User-Auth-Service listening at http://127.0.0.1:5858 +0ms

We run this in one command window to start the server. In another command window, we can run the following command:

$ node cli.mjs add --password w0rd --family-name Einarrsdottir --given-name Ashildr --email [email protected] me

Created {

id: 'me',

username: 'me',

provider: 'local',

familyName: 'Einarrsdottir',

givenName: 'Ashildr',

middleName: null,

emails: [ '[email protected]' ],

photos: []

}

Over in the server window, it prints a trace of the actions taken in response to this. The result is what we expect: the values we gave on the command line are now in the database. Next, we can try the find-or-create sub-command:

$ node cli.mjs find-or-create --password foooo --family-name Smith --given-name John --middle-name Snuffy --email [email protected] snuffy-smith

Found or Created {

id: 'snuffy-smith',

username: 'snuffy-smith',

provider: 'local',

familyName: 'Smith',

givenName: 'John',

middleName: 'Snuffy',

emails: [ '[email protected]' ],

photos: []

}

Likewise, we have success with the find-or-create command.

That gives us the ability to create SQUser objects. Next, let's see how to read from the database.

Reading user data from the user information service

The next thing we want to support is to look for users in the user information service. Instead of a general search facility, the need is to retrieve an SQUser object for a given username. We already have the utility function for this purpose; it's just a matter of hooking up a REST endpoint.

In user-server.mjs, add the following function:

server.get('/find/:username', async (req, res, next) => {

try {

await connectDB();

const user = await findOneUser(req.params.username);

if (!user) {

res.send(404, new Error("Did not find "+ req.params.username));

} else {

res.contentType = 'json';

res.send(user);

}

next(false);

} catch(err) {

res.send(500, err);

next(false);

}

});

And, as expected, that was easy enough. For the /find URL, we need to supply the username in the URL. The code simply looks up the SQUser object using the existing utility function.

A related function retrieves the SQUser objects for all users. Add the following code to user-server.mjs:

server.get('/list', async (req, res, next) => {

try {

await connectDB();

let userlist = await SQUser.findAll({});

userlist = userlist.map(user => sanitizedUser(user));

if (!userlist) userlist = [];

res.contentType = 'json';

res.send(userlist);

next(false);

} catch(err) {

res.send(500, err);

next(false);

}

});

We know from the previous chapter that the findAll operation retrieves all matching objects and that passing an empty query selector such as this causes findAll to match every SQUser object. Therefore, this performs the task we described, to retrieve information on all users.
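The sanitizedUser function used in this handler was defined earlier in user-server.mjs. As a reminder of the idea, the following sketch shows one way such a function might look: it copies only the fields that are safe to return and leaves out the password. This is an illustration under that assumption rather than a copy of the chapter's implementation, the name sanitizeSketch is hypothetical, and plain objects stand in for the rows returned by Sequelize:

```javascript
// Hypothetical stand-in for sanitizedUser: copy the public fields only.
function sanitizeSketch(user) {
    return {
        id: user.username,
        username: user.username,
        provider: user.provider,
        familyName: user.familyName,
        givenName: user.givenName,
        middleName: user.middleName,
        emails: user.emails,
        photos: user.photos
    };
}

// Plain objects standing in for the rows returned by SQUser.findAll({})
const rows = [
    { username: 'me', password: 'w0rd', provider: 'local',
      familyName: 'Einarrsdottir', givenName: 'Ashildr', middleName: null,
      emails: [], photos: [] }
];
const userlist = rows.map(row => sanitizeSketch(row));
console.log(userlist);
```

The password is present in the stored row but never appears in the list sent back to the caller.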

Then, in cli.mjs, we add the following sub-command declarations:

program

.command('find <username>')

.description('Search for a user on the user server')

.action((username, cmdObj) => {

client(program).get(`/find/${username}`,

(err, req, res, obj) => {

if (err) console.error(err.stack);

else console.log('Found '+ util.inspect(obj));

});

});

program

.command('list-users')

.description('List all users on the user server')

.action((cmdObj) => {

client(program).get('/list', (err, req, res, obj) => {

if (err) console.error(err.stack);

else console.log(obj);

});

});

This is similarly easy. We pass the username provided on our command line in the /find URL and then print out the result. Likewise, for the list-users sub-command, we simply call /list on the server and print out the result.

After restarting the server, we can test the commands, as follows:

$ node cli.mjs find me

Found {

id: 'me',

username: 'me',

provider: 'local',

familyName: 'Einarrsdottir',

givenName: 'Ashildr',

middleName: null,

emails: [ '[email protected]' ],

photos: []

}

$ node cli.mjs list-users

[

{

id: 'snuffy-smith',

username: 'snuffy-smith',

provider: 'local',

familyName: 'Smith',

givenName: 'John',

middleName: 'Snuffy',

emails: [ '[email protected]' ],

photos: []

},

{

id: 'me',

username: 'me',

provider: 'local',

familyName: 'Einarrsdottir',

givenName: 'Ashildr',

middleName: null,

emails: [ '[email protected]' ],

photos: []

}

]

And, indeed, the results came in as we expected.

The next operation we need is to update an SQUser object.

Updating user information in the user information service

The next functionality to add is to update user information. For this, we can use the Sequelize update function, and simply expose it as a REST operation.

To that end, add the following code to user-server.mjs:

server.post('/update-user/:username', async (req, res, next) => {

try {

await connectDB();

let toupdate = userParams(req);

await SQUser.update(toupdate, { where: { username:

req.params.username }});

const result = await findOneUser(req.params.username);

res.contentType = 'json';

res.send(result);

next(false);

} catch(err) {

res.send(500, err);

next(false);

}

});

The caller is to provide the same set of user information parameters, which will be picked up by the userParams function. We then use the update function, as expected, and then retrieve the modified SQUser object, sanitize it, and send it as the result.

To match that function, add the following code to cli.mjs:

program

.command('update <username>')

.description('Update a user on the user server')

.option('--password <password>', 'Password for new user')

.option('--family-name <familyName>',

'Family name, or last name, of the user')

.option('--given-name <givenName>',

'Given name, or first name, of the user')

.option('--middle-name <middleName>', 'Middle name of the user')

.option('--email <email>', 'Email address for the user')

.action((username, cmdObj) => {

const topost = {

username, password: cmdObj.password,

familyName: cmdObj.familyName,

givenName: cmdObj.givenName,

middleName: cmdObj.middleName,

emails: [], photos: []

};

if (typeof cmdObj.email !== 'undefined')

topost.emails.push(cmdObj.email);

client(program).post(`/update-user/${username}`, topost,

(err, req, res, obj) => {

if (err) console.error(err.stack);

else console.log('Updated '+ util.inspect(obj));

});

});

As expected, this sub-command must take the same set of user information parameters. It then bundles those parameters into an object, posting it to the /update-user endpoint on the REST server.

Then, to test the result, we run the command, like so:

$ node cli.mjs update --password fooooey --family-name Smith --given-name John --middle-name Snuffy --email [email protected] snuffy-smith

Updated {

id: 'snuffy-smith',

username: 'snuffy-smith',

provider: 'local',

familyName: 'Smith',

givenName: 'John',

middleName: 'Snuffy',

emails: [ '[email protected]' ],

photos: []

}

And, indeed, we managed to change Snuffy's email address.

The next operation is to delete an SQUser object.

Deleting a user record from the user information service

Our next operation will complete the create, read, update, and delete (CRUD) operations by letting us delete a user.

Add the following code to user-server.mjs:

server.del('/destroy/:username', async (req, res, next) => {

try {

await connectDB();

const user = await SQUser.findOne({

where: { username: req.params.username } });

if (!user) {

res.send(404,

new Error(`Did not find requested ${req.params.username} to delete`));

} else {

await user.destroy();

res.contentType = 'json';

res.send({});

}

next(false);

} catch(err) {

res.send(500, err);

next(false);

}

});

This is simple enough. We first look up the user to ensure it exists, and then call the destroy function on the SQUser object. There's no need for any result, so we send an empty object.

To exercise this function, add the following code to cli.mjs:

program

.command('destroy <username>')

.description('Destroy a user on the user server')

.action((username, cmdObj) => {

client(program).del(`/destroy/${username}`,

(err, req, res, obj) => {

if (err) console.error(err.stack);

else console.log('Deleted - result= '+ util.inspect(obj));

});

});

This simply sends a DELETE request to the server on the /destroy URL.

And then, to test it, run the following command:

$ node cli.mjs destroy snuffy-smith

Deleted - result= {}

$ node cli.mjs find snuffy-smith

finding snuffy-smith

NotFoundError: {}

at Object.createHttpErr (/home/david/Chapter08/users/node_modules/restify-clients/lib/helpers/errors.js:91:26)

at ClientRequest.onResponse (/home/david/Chapter08/users/node_modules/restify-clients/lib/HttpClient.js:309:26)

at Object.onceWrapper (events.js:428:26)

at ClientRequest.emit (events.js:321:20)

at HTTPParser.parserOnIncomingClient [as onIncoming] (_http_client.js:602:27)

at HTTPParser.parserOnHeadersComplete (_http_common.js:116:17)

at Socket.socketOnData (_http_client.js:471:22)

at Socket.emit (events.js:321:20)

at addChunk (_stream_readable.js:305:12)

at readableAddChunk (_stream_readable.js:280:11)

First, we deleted Snuffy's user record, and it gave us an empty response, as expected. Then, we tried to retrieve his record and, as expected, there was an error.

While we have completed the CRUD operations, we have one final task to cover.

Checking the user's password in the user information service

How can we have a user login/logout service without being able to check their password? The question is: Where should the password check occur? It seems, without examining it too deeply, that it's better to perform this operation inside the user information service. We earlier described the decision that it's probably safer to never expose the user password beyond the user information service. As a result, the password check should occur in that service so that the password does not stray beyond the service.

Let's start with the following function in user-server.mjs:

server.post('/password-check', async (req, res, next) => {

try {

await connectDB();

const user = await SQUser.findOne({

where: { username: req.params.username } });

let checked;

if (!user) {

checked = {

check: false, username: req.params.username,

message: "Could not find user"

};

} else if (user.username === req.params.username

&& user.password === req.params.password) {

checked = { check: true, username: user.username };

} else {

checked = {

check: false, username: req.params.username,

message: "Incorrect password"

};

}

res.contentType = 'json';

res.send(checked);

next(false);

} catch(err) {

res.send(500, err);

next(false);

}

});

This lets us support the checking of user passwords. There are three conditions to check, as follows:

- Whether there is no such user

- Whether the passwords matched

- Whether the passwords did not match

The code neatly determines all three conditions and returns an object indicating, via the check field, whether the user is authenticated. The caller is to send username and password parameters that will be checked.
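The three outcomes can be sketched as a pure function, separated from the database lookup and the HTTP response. The name checkPassword is hypothetical; the logic mirrors the handler above, including the direct comparison against the stored password:

```javascript
// Pure version of the decision made by the /password-check handler.
// `user` is the record found for `username`, or null/undefined if none.
function checkPassword(user, username, password) {
    if (!user) {
        return { check: false, username, message: 'Could not find user' };
    }
    if (user.username === username && user.password === password) {
        return { check: true, username: user.username };
    }
    return { check: false, username, message: 'Incorrect password' };
}

const stored = { username: 'me', password: 'w0rd' };
console.log(checkPassword(null, 'nobody', 'w0rd'));
console.log(checkPassword(stored, 'me', 'w0rd'));
console.log(checkPassword(stored, 'me', 'w0rdy'));
```

Only the check, username, and message fields ever leave the function, so the stored password stays inside the service.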

To check it out, let's add the following code to cli.mjs:

program

.command('password-check <username> <password>')

.description('Check whether the user password checks out')

.action((username, password, cmdObj) => {

client(program).post('/password-check', { username, password },

(err, req, res, obj) => {

if (err) console.error(err.stack);

else console.log(obj);

});

});

And, as expected, the code to invoke this operation is simple. We take the username and password parameters from the command line, send them to the server, and then print the result.

To verify that it works, run the following command:

$ node cli.mjs password-check me w0rd

{ check: true, username: 'me' }

$ node cli.mjs password-check me w0rdy

{ check: false, username: 'me', message: 'Incorrect password' }

Indeed, the correct password gives us a true indicator, while the wrong password gives us false.

We've done a lot in this section by implementing a user information service. We successfully created a REST service while thinking about architectural choices around correctly handling sensitive user data. We were also able to verify that the REST service is functioning using an ad hoc testing tool. With this command-line tool, we can easily try any combination of parameters, and we can easily extend it if the need arises to add more REST operations.

Now, we need to start on the real goal of the chapter: changing the Notes user interface to support login/logout. We will see how to do this in the following sections.

Providing login support for the Notes application

Now that we have proved that the user authentication service is working, we can set up the Notes application to support user logins. We'll be using Passport to support login/logout, and the authentication server to store the required data.

Among the available packages, Passport stands out for simplicity and flexibility. It integrates directly with the Express middleware chain, and the Passport community has developed hundreds of so-called strategy modules to handle authentication against a long list of third-party services.

Let's start this by adding a module for accessing the user information REST server we just created.

Accessing the user authentication REST API

The first step is to create a user data model for the Notes application. Rather than retrieving data from data files or a database, it will use REST to query the server we just created. Recall that we created this REST service on the theory that walling off the service is safer, since it contains sensitive user information.

Earlier, we suggested duplicating Chapter 7, Data Storage and Retrieval, code for Notes in the chap08/notes directory and creating the user information server as chap08/users.

Earlier in this chapter, we used the restify-clients module to access the REST service. That package is a companion to the Restify library; the restify package supports the server side of the REST protocol and restify-clients supports the client side.

However nice the restify-clients library is, it doesn't support a Promise-oriented API, as is required to play well with async functions. Another library, SuperAgent, does support a Promise-oriented API and plays well in async functions, and there is a companion to that package, SuperTest, that's useful in unit testing. We'll use SuperTest in Chapter 13, Unit Testing and Functional Testing when we talk about unit testing.

To install the package (again, in the Notes application directory), run the following command:

$ npm install superagent@^5.2.x --save

Then, create a new file, models/users-superagent.mjs, containing the following code:

import { default as request } from 'superagent';

import util from 'util';

import url from 'url';

const URL = url.URL;

import DBG from 'debug';

const debug = DBG('notes:users-superagent');

const error = DBG('notes:error-superagent');

var authid = 'them';

var authcode = 'D4ED43C0-8BD6-4FE2-B358-7C0E230D11EF';

function reqURL(path) {

const requrl = new URL(process.env.USER_SERVICE_URL);

requrl.pathname = path;

return requrl.toString();

}

The reqURL function is similar in purpose to the connectDB functions that we wrote in earlier modules. Remember that we used connectDB in earlier modules to open a database connection that will be kept open for a long time. With SuperAgent, we don't leave a connection open to the service. Instead, we open a new server connection on each request. For every request, we will formulate the request URL. The base URL, such as http://localhost:3333/, is to be provided in the USER_SERVICE_URL environment variable. The reqURL function modifies that URL, using the new Web Hypertext Application Technology Working Group (WHATWG) URL support in Node.js, to use a given URL path.
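To see what the URL manipulation does, here is a small sketch that takes the base URL as a parameter instead of reading the USER_SERVICE_URL environment variable. The name reqURLFrom is hypothetical and exists only so the sketch can run on its own:

```javascript
// Same idea as reqURL, but with the base URL passed in explicitly so the
// sketch does not depend on the USER_SERVICE_URL environment variable.
function reqURLFrom(base, path) {
    const requrl = new URL(base);   // URL is a global in current Node.js
    requrl.pathname = path;         // replaces whatever path the base had
    return requrl.toString();
}

const plain = reqURLFrom('http://localhost:3333/', '/find/me');
console.log(plain);
// prints http://localhost:3333/find/me

// The pathname setter percent-encodes characters such as spaces
const encoded = reqURLFrom('http://localhost:3333/', '/find/snuffy smith');
console.log(encoded);
```

Because the pathname is assigned rather than concatenated, any path already present in the base URL is replaced.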

We also added the authentication ID and code required for the server. Obviously, when the backlog task comes up to use a better token authentication system, this will have to change.

To handle creating and updating user records, add the following code:

export async function create(username, password,

provider, familyName, givenName, middleName,

emails, photos) {

var res = await request

.post(reqURL('/create-user'))

.send({ username, password, provider,

familyName, givenName, middleName, emails, photos

})

.set('Content-Type', 'application/json')

.set('Accept', 'application/json')

.auth(authid, authcode);

return res.body;

}

export async function update(username, password,

provider, familyName, givenName, middleName,

emails, photos) {

var res = await request

.post(reqURL(`/update-user/${username}`))

.send({ username, password, provider,

familyName, givenName, middleName, emails, photos

})

.set('Content-Type', 'application/json')

.set('Accept', 'application/json')

.auth(authid, authcode);

return res.body;

}

These are our create and update functions. In each case, they take the data provided, construct an anonymous object, and POST it to the server. The function is to be provided with the values corresponding to the SQUser schema. It bundles the data provided in the send method, sets various parameters, and then sets up the Basic Auth token.

The SuperAgent library uses an API style called method chaining. The coder chains together method calls to construct a request. The chain of method calls can end in a .then or .end clause, either of which takes a callback function. But leave off both, and it will return a Promise, and, of course, Promises let us use this directly from an async function.
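The combination of method chaining and awaiting the result can be illustrated with a toy class. This is not SuperAgent's code; RequestSketch is a made-up name, and it performs no HTTP request. It only shows the general technique: each method returns the object itself, and a then method makes the object usable with await:

```javascript
// Toy request builder (NOT SuperAgent): each method records a setting and
// returns `this`, which is what makes chaining possible.
class RequestSketch {
    constructor(method, url) {
        this.method = method;
        this.url = url;
        this.headers = {};
        this.body = undefined;
    }
    set(name, value) { this.headers[name] = value; return this; }
    send(body) { this.body = body; return this; }
    // Defining then() makes the object "thenable", so `await` works on it.
    // A real client would perform the HTTP request here.
    then(resolve, reject) {
        const fakeResponse = {
            body: { method: this.method, url: this.url,
                    headers: this.headers, sent: this.body }
        };
        return Promise.resolve(fakeResponse).then(resolve, reject);
    }
}

async function demo() {
    const res = await new RequestSketch('POST', '/create-user')
        .send({ username: 'me' })
        .set('Accept', 'application/json');
    return res.body;
}

demo().then(body => console.log(body));
```

Nothing happens until the chain is awaited, which is why the functions in this module can build up a request step by step and then await the whole expression.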

The res.body value at the end of each function contains the value returned by the REST server. All through this library, we'll use the .auth clause to set up the required authentication key.
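For readers unfamiliar with HTTP Basic authentication, the following sketch builds the header value by hand. The function name basicAuthHeader and the credential values are made up for illustration; in our module, the .auth call takes care of this step:

```javascript
// Build the value of an HTTP Basic Authorization header by hand.
function basicAuthHeader(id, code) {
    const token = Buffer.from(`${id}:${code}`, 'utf8').toString('base64');
    return `Basic ${token}`;
}

const header = basicAuthHeader('them', 'example-code');
console.log(header);

// Base64 is an encoding, not encryption: decoding recovers the credentials.
const decoded = Buffer.from(header.slice('Basic '.length), 'base64').toString('utf8');
console.log(decoded); // prints them:example-code
```

Since the credentials are trivially recoverable from the header, Basic authentication is only as private as the connection it travels over.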

In this case, we've purposely chosen parameter names that match the field names the server expects. That lets us use the shorthand notation for object literals, and the code is a little cleaner for using consistent names from beginning to end.
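As a quick illustration of that notation, the two object literals below are equivalent:

```javascript
const username = 'me';
const password = 'w0rd';

// Shorthand: the property name is taken from the variable name
const shorthand = { username, password };

// Longhand equivalent
const longhand = { username: username, password: password };

const same = JSON.stringify(shorthand) === JSON.stringify(longhand);
console.log(same); // prints true
```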

Now, add the following function to support the retrieval of user records:

export async function find(username) {

var res = await request

.get(reqURL(`/find/${username}`))

.set('Content-Type', 'application/json')

.set('Accept', 'application/json')

.auth(authid, authcode);

return res.body;

}

This is following the same pattern as before. The set methods are, of course, used for setting HTTP headers in the REST call. This means having at least a passing knowledge of the HTTP protocol.

The Content-Type header says the data sent to the server is in JavaScript Object Notation (JSON) format. The Accept header says that this REST client can handle JSON data. JSON is, of course, easiest for a JavaScript program—such as what we're writing—to utilize.

Let's now create the function for checking passwords, as follows:

export async function userPasswordCheck(username, password) {

var res = await request

.post(reqURL(`/password-check`))

.send({ username, password })

.set('Content-Type', 'application/json')

.set('Accept', 'application/json')

.auth(authid, authcode);

return res.body;

}

One point about this method is worth noting. It could have taken the parameters in the URL instead of the request body, as is done here. But since request URLs are routinely logged to files, putting the username and password parameters in the URL means user identity information would be logged to files and be part of activity reports. That would obviously be a very bad choice. Putting those parameters in the request body avoids that problem and, if an HTTPS connection to the service is used, the body is also encrypted in transit.
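The difference is easy to see by constructing both variants. In this sketch, the host and port are example values, and no request is actually sent:

```javascript
const username = 'me';
const password = 'w0rd';

// Risky alternative: credentials as query parameters become part of the URL,
// and the URL is what typically ends up in access logs.
const inUrl = new URL('http://localhost:3333/password-check');
inUrl.searchParams.set('username', username);
inUrl.searchParams.set('password', password);
console.log(inUrl.toString());
// prints http://localhost:3333/password-check?username=me&password=w0rd

// Approach used in userPasswordCheck: the URL carries no credentials,
// and the values travel in the JSON request body instead.
const requestUrl = 'http://localhost:3333/password-check';
const body = JSON.stringify({ username, password });
console.log(requestUrl, body);
```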

Then, let's create our find-or-create function, as follows:

export async function findOrCreate(profile) {

var res = await request

.post(reqURL('/find-or-create'))

.send({

username: profile.id, password: profile.password,

provider: profile.provider,

familyName: profile.familyName,

givenName: profile.givenName,

middleName: profile.middleName,

emails: profile.emails, photos: profile.photos

})

.set('Content-Type', 'application/json')

.set('Accept', 'application/json')

.auth(authid, authcode);

return res.body;

}

The /find-or-create function either discovers the user in the database or creates a new user. The profile object will come from Passport, but take careful note of what we do with profile.id. The Passport documentation says it will provide the username in the profile.id field, but we want to store it as username instead.
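The field mapping itself can be sketched as a small function. The name profileToUserFields is hypothetical, and the profile object used here is made up to match the fields that findOrCreate reads:

```javascript
// Hypothetical helper showing the field mapping done inside findOrCreate.
function profileToUserFields(profile) {
    return {
        username: profile.id,          // the id field becomes our username
        password: profile.password,
        provider: profile.provider,
        familyName: profile.familyName,
        givenName: profile.givenName,
        middleName: profile.middleName,
        emails: profile.emails,
        photos: profile.photos
    };
}

// Made-up profile object, shaped like the one findOrCreate expects
const fields = profileToUserFields({
    id: 'snuffy-smith', provider: 'twitter',
    familyName: 'Smith', givenName: 'John', emails: [], photos: []
});
console.log(fields.username); // prints snuffy-smith
```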

Let's now create a function to retrieve the list of users, as follows:

export async function listUsers() {

var res = await request

.get(reqURL('/list'))

.set('Content-Type', 'application/json')

.set('Accept', 'application/json')

.auth(authid, authcode);

return res.body;

}

As before, this is very straightforward.

With this module, we can interface with the user information service, and we can now proceed with modifying the Notes user interface.

Incorporating login and logout routing functions in the Notes application

What we've built so far is a user data model, with a REST API wrapping that model to create our authentication information service. Then, within the Notes application, we have a module that requests user data from this server. As yet, nothing in the Notes application knows that this user model exists. The next step is to create a routing module for login/logout URLs and to change the rest of Notes to use user data.

The routing module is where we use passport to handle user authentication. The first task is to install the required modules, as follows:

$ npm install passport@^0.4.x passport-local --save

The passport module gives us the authentication algorithms. To support different authentication mechanisms, the passport authors have developed several strategy implementations—the authentication mechanisms, or strategies, corresponding to the various third-party services that support authentication, such as using OAuth to authenticate against services such as Facebook, Twitter, or GitHub.

Passport also requires that we install Express Session support. Use the following command to install the modules:

$ npm install express-session session-file-store --save

The strategy implemented in the passport-local package authenticates solely using data stored locally to the application, for example, our user authentication information service. Later, we'll add a strategy module to authenticate using OAuth with Twitter.

Let's start by creating the routing module, routes/users.mjs, as follows:

import path from 'path';

import util from 'util';

import { default as express } from 'express';

import { default as passport } from 'passport';

import { default as passportLocal } from 'passport-local';

const LocalStrategy = passportLocal.Strategy;

import * as usersModel from '../models/users-superagent.mjs';

import { sessionCookieName } from '../app.mjs';

export const router = express.Router();

import DBG from 'debug';

const debug = DBG('notes:router-users');

const error = DBG('notes:error-users');

This brings in the modules we need for the /users router. This includes the two passport modules and the REST-based user authentication model.

In app.mjs, we will be adding session support so our users can log in and log out. That relies on storing a cookie in the browser, and the cookie name is found in this variable exported from app.mjs. We'll be using that cookie in a moment.

Add the following functions to the end of routes/users.mjs:

export function initPassport(app) {

app.use(passport.initialize());

app.use(passport.session());

}

export function ensureAuthenticated(req, res, next) {

try {

// req.user is set by Passport in the deserialize function

if (req.user) next();

else res.redirect('/users/login');

} catch (e) { next(e); }

}

The initPassport function will be called from app.mjs, and it installs the Passport middleware in the Express configuration. We'll discuss the implications of this later when we get to app.mjs changes, but Passport uses sessions to detect whether this HTTP request is authenticated. It looks at every request coming into the application, looks for clues about whether this browser is logged in, and attaches data to the request object as req.user.

The ensureAuthenticated function will be used by other routing modules and is to be inserted into any route definition that requires an authenticated logged-in user. For example, editing or deleting a note requires the user to be logged in and, therefore, the corresponding routes in routes/notes.mjs must use ensureAuthenticated. If the user is not logged in, this function redirects them to /users/login so that they can log in.
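The behavior of ensureAuthenticated can be checked without Express by passing in minimal stand-ins for the request and response objects. The tryRequest helper below is hypothetical and exists only for this sketch:

```javascript
// Copy of ensureAuthenticated so the sketch is self-contained
function ensureAuthenticated(req, res, next) {
    try {
        if (req.user) next();
        else res.redirect('/users/login');
    } catch (e) { next(e); }
}

// Minimal stand-ins for the Express request/response objects
function tryRequest(req) {
    const outcome = { nextCalled: false, redirectedTo: null };
    const res = { redirect(url) { outcome.redirectedTo = url; } };
    ensureAuthenticated(req, res, () => { outcome.nextCalled = true; });
    return outcome;
}

console.log(tryRequest({ user: { username: 'me' } }));
// prints { nextCalled: true, redirectedTo: null }
console.log(tryRequest({}));
// prints { nextCalled: false, redirectedTo: '/users/login' }
```

In a routing module, the function would be listed ahead of the route handler, along the lines of router.get('/add', ensureAuthenticated, handler), where the path and handler are placeholders.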

Add the following route handlers in routes/users.mjs:

router.get('/login', function(req, res, next) {

try {

res.render('login', { title: "Login to Notes", user: req.user, });

} catch (e) { next(e); }

});

router.post('/login',

passport.authenticate('local', {

successRedirect: '/', // SUCCESS: Go to home page

failureRedirect: 'login', // FAIL: Go to /users/login

})

);

Because this router is mounted on /users, all these routes will have /users prepended. The /users/login route simply shows a form requesting a username and password. When this form is submitted, we land in the second route declaration, with a POST on /users/login. If passport deems this a successful login attempt using LocalStrategy, then the browser is redirected to the home page. Otherwise, it is redirected back to the /users/login page.
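Note that failureRedirect is given as the relative URL login rather than an absolute path. A relative redirect is resolved by the browser against the URL it just requested, and the URL class in Node.js applies the same resolution rules, so we can use it to see where each redirect ends up. The host and port in this sketch are example values:

```javascript
// The browser resolves a relative redirect against the URL it just
// requested; the URL class follows the same resolution rules.
const requested = 'http://localhost:3000/users/login';

const onFailure = new URL('login', requested).pathname;
const onSuccess = new URL('/', requested).pathname;
const absoluteLogin = new URL('/login', requested).pathname;

console.log(onFailure);     // prints /users/login
console.log(onSuccess);     // prints /
console.log(absoluteLogin); // prints /login
```

The last line shows why writing '/login' with a leading slash would not work here: it would skip the /users prefix.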

Add the following route for handling logout:

router.get('/logout', function(req, res, next) {

try {

req.session.destroy();

req.logout();

res.clearCookie(sessionCookieName);

res.redirect('/');

} catch (e) { next(e); }

});

When the user requests to log out of Notes, they are to be sent to /users/logout. We'll be adding a button to the header template for this purpose. The req.logout function instructs Passport to erase their login credentials, and they are then redirected to the home page.

This function deviates from what's in the Passport documentation. There, we are told to simply call req.logout, but calling only that function sometimes results in the user not being logged out. It's necessary to destroy the session object, and to clear the cookie, in order to ensure that the user is logged out. The cookie name is defined in app.mjs, and we imported sessionCookieName for this function.

Add the LocalStrategy to Passport, as follows:

passport.use(new LocalStrategy(

async (username, password, done) => {

try {

var check = await usersModel.userPasswordCheck(username,

password);

if (check.check) {

done(null, { id: check.username, username: check.username });

} else {

done(null, false, check.message);

}

} catch (e) { done(e); }

}

));

Here is where we define our implementation of LocalStrategy. In the callback function, we call usersModel.userPasswordCheck, which makes a REST call to the user authentication service. Remember that this performs the password check and then returns an object indicating whether the user is logged in.

A successful login is indicated when check.check is true. In this case, we tell Passport to use an object containing username in the session object. Otherwise, we have two ways to tell Passport that the login attempt was unsuccessful. In one case, we use done(null, false) to indicate an error logging in, and pass along the error message we were given. In the other case, we'll have captured an exception, and pass along that exception.

You'll notice that Passport uses a callback-style API. Passport provides a done function, and we are to call that function when we know what's what. While we use an async function to make a clean asynchronous call to the backend service, Passport doesn't know how to grok the Promise that would be returned. Therefore, we have to throw a try/catch around the function body to catch any thrown exception.
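The three ways of calling done can be exercised without Passport or the backend service. In this sketch, makeVerify, fakeCheck, and runVerify are hypothetical names; fakeCheck stands in for usersModel.userPasswordCheck, and the verify function has the same shape as the callback given to LocalStrategy:

```javascript
// Factory that builds a verify callback around any password-check function,
// so the sketch can run without the user information service.
function makeVerify(passwordCheck) {
    return async (username, password, done) => {
        try {
            const check = await passwordCheck(username, password);
            if (check.check) {
                done(null, { id: check.username, username: check.username });
            } else {
                done(null, false, check.message);
            }
        } catch (e) { done(e); }
    };
}

// Fake check function standing in for usersModel.userPasswordCheck
async function fakeCheck(username, password) {
    if (username === 'boom') throw new Error('service unavailable');
    return password === 'w0rd'
        ? { check: true, username }
        : { check: false, username, message: 'Incorrect password' };
}

// Wrap the callback API in a Promise to make the outcomes easy to print
function runVerify(username, password) {
    const verify = makeVerify(fakeCheck);
    return new Promise(resolve => {
        verify(username, password,
            (err, user, info) => resolve({ err, user, info }));
    });
}

runVerify('me', 'w0rd').then(r => console.log(r.user));
runVerify('me', 'nope').then(r => console.log(r.user, r.info));
runVerify('boom', 'w0rd').then(r => console.log(r.err.message));
```

Without the try/catch, the rejected Promise in the third case would never reach done, and the login request would be left hanging.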

Add the following functions to control what authentication data is stored in the session:

passport.serializeUser(function(user, done) {

try {

done(null, user.username);

} catch (e) { done(e); }

});

passport.deserializeUser(async (username, done) => {

try {

var user = await usersModel.find(username);

done(null, user);

} catch(e) { done(e); }

});

The preceding functions take care of encoding and decoding authentication data for the session. All we need to attach to the session is the username, as we did in serializeUser. The deserializeUser function is called while processing an incoming HTTP request and is where we look up the user profile data. Passport will attach this to the request object as req.user.
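The round trip can be sketched with an in-memory Map standing in for the user information service. The session object here is a simplification for illustration; it is not the structure Passport actually keeps inside the Express session:

```javascript
// In-memory stand-in for the user information service
const users = new Map([
    ['me', { id: 'me', username: 'me', provider: 'local' }]
]);

// Same shape as the functions registered with Passport
function serializeUser(user, done) {
    done(null, user.username);        // only the username goes in the session
}
function deserializeUser(username, done) {
    done(null, users.get(username));  // look the profile up again per request
}

// Simulate login (serialize) followed by a later request (deserialize)
const session = {};
serializeUser(users.get('me'), (err, key) => { session.user = key; });

let reqUser;
deserializeUser(session.user, (err, user) => { reqUser = user; });

console.log(session);  // prints { user: 'me' }
console.log(reqUser);  // prints { id: 'me', username: 'me', provider: 'local' }
```

Keeping only the username in the session means the profile is fetched fresh on each request, so changes made in the user information service show up without the user logging in again.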

Login/logout changes to app.mjs

A number of changes are necessary in app.mjs, some of which we've already touched on. We did carefully isolate the Passport module dependencies to routes/users.mjs. The changes required in app.mjs support the code in routes/users.mjs.

Add an import to bring in functions from the User router module, as follows:

import { router as indexRouter } from './routes/index.mjs';

import { router as notesRouter } from './routes/notes.mjs';

import { router as usersRouter, initPassport } from './routes/users.mjs';

The User router supports the /login and /logout URLs, as well as using Passport for authentication. We need to call initPassport for a little bit of initialization.

And now, let's import modules for session handling, as follows:

import session from 'express-session';

import sessionFileStore from 'session-file-store';

const FileStore = sessionFileStore(session);

export const sessionCookieName = 'notescookie.sid';

Because Passport uses sessions, we need to enable session support in Express, and these modules do so. The session-file-store module saves our session data to disk so that we can kill and restart the application without losing sessions. It's also possible to save sessions to databases with appropriate modules. A filesystem session store is suitable only when all Notes instances are running on the same server computer. For a distributed deployment situation, you'll need to use a session store that runs on a network-wide service, such as a database.

We're defining sessionCookieName here so that it can be used in multiple places. By default, express-session uses a cookie named connect.sid to hold the session ID. As a small measure of security, it's useful when there's a published default to use a different non-default value. Any time we use the default value, it's possible that an attacker knows of a security flaw that depends on that default.

Add the following code to app.mjs:

app.use(session({

store: new FileStore({ path: "sessions" }),

secret: 'keyboard mouse',

resave: true,

saveUninitialized: true,

name: sessionCookieName

}));

initPassport(app);

Here, we initialize the session support. The field named secret is used to sign the session ID cookie. The cookie value is the session ID together with a signature computed using this secret, which lets the server detect a cookie that has been tampered with; the cookie is signed rather than encrypted. In the Express Session documentation, the examples use the keyboard cat string for the secret. But, in theory, what if Express has a vulnerability, such that knowing this secret can make it easier to break the session logic on your site? Hence, we chose a different string for the secret, just to be a little different and, perhaps, a little more secure.
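Signing a value with a secret can be sketched with the crypto module built in to Node.js. The following is an illustration of the general HMAC technique only; the helper names sign and unsign are chosen for this sketch, the session ID is made up, and the exact cookie format produced by express-session may differ in its details:

```javascript
// Sketch of signing and verifying a value with an HMAC. Dynamic import keeps
// the snippet runnable as either an ES module or a CommonJS script.
async function loadSigner() {
    const { createHmac, timingSafeEqual } = await import('node:crypto');

    function sign(value, secret) {
        const mac = createHmac('sha256', secret).update(value).digest('base64');
        return `${value}.${mac}`;
    }

    // Returns the original value if the signature matches, otherwise false
    function unsign(signed, secret) {
        const value = signed.slice(0, signed.lastIndexOf('.'));
        const expected = Buffer.from(sign(value, secret));
        const actual = Buffer.from(signed);
        return expected.length === actual.length
            && timingSafeEqual(expected, actual) ? value : false;
    }
    return { sign, unsign };
}

async function demo() {
    const { sign, unsign } = await loadSigner();
    const signed = sign('session-id-123', 'keyboard mouse');
    return {
        signed,
        valid: unsign(signed, 'keyboard mouse'),
        wrongSecret: unsign(signed, 'keyboard cat'),
        tampered: unsign(signed.replace('123', '456'), 'keyboard mouse')
    };
}

demo().then(result => console.log(result));
```

The signed value is still readable by anyone who holds the cookie; what the secret prevents is forging or altering it without detection.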

Similarly, the default cookie name used by express-session is connect.sid. Here's where we change the cookie name to a non-default name.

FileStore will store its session data records in a directory named sessions. This directory will be auto-created as needed.

If you see errors on Windows related to the files used by session-file-store, there are several alternate session store packages that can be used. The attraction of session-file-store is that it has no dependency on a service such as a database server. Two other session stores have a similar advantage: LokiStore and MemoryStore. Both are configured similarly to the session-file-store package. For example, to use MemoryStore, first use npm to install the memorystore package, then use these lines of code in app.mjs:

import sessionMemoryStore from 'memorystore';

const MemoryStore = sessionMemoryStore(session);

...

app.use(session({

store: new MemoryStore({}),

secret: 'keyboard mouse',

resave: true,

saveUninitialized: true,

name: sessionCookieName

}));

This is the same initialization, but using MemoryStore instead of FileStore.

Mount the User router, as follows:

app.use('/', indexRouter);

app.use('/notes', notesRouter);

app.use('/users', usersRouter);

These are the three routers that are used in the Notes application.

Login/logout changes in routes/index.mjs

This router module handles the home page. It does not require the user to be logged in, but we want to change the display a little if they are logged in. To do so, use the following code:

router.get('/', async (req, res, next) => {

try {

let keylist = await notes.keylist();

let keyPromises = keylist.map(key => { return notes.read(key) });

let notelist = await Promise.all(keyPromises);

res.render('index', {

title: 'Notes', notelist: notelist,

user: req.user ? req.user : undefined

});

} catch (e) { next(e); }

});

Remember that req.user holds the user profile data, which we arranged for in deserializeUser. We simply check for this and make sure to pass that data when rendering the view template.

We'll be making similar changes to most of the other route definitions. After that, we'll go over the changes to the view templates, in which we use the user object to show the correct buttons on each page.

Login/logout changes required in routes/notes.mjs

The changes required here are more significant but still straightforward, as shown in the following code snippet:

import { ensureAuthenticated } from './users.mjs';

We need to use the ensureAuthenticated function to protect certain routes from being used by users who are not logged in. Notice how ES6 modules let us import just the function(s) we require. Since that function is in the User router module, we need to import it from there.

Modify the /add route handler, as shown in the following code block:

router.get('/add', ensureAuthenticated, (req, res, next) => {

try {

res.render('noteedit', {

title: "Add a Note",

docreate: true, notekey: "",

user: req.user, note: undefined

});

} catch (e) { next(e); }

});

We'll be making similar changes throughout this module, adding calls to ensureAuthenticated and using req.user to check whether the user is logged in. The goal is for several routes to ensure that the route is only available to a logged-in user, and—in those and additional routes—to pass the user object to the template.

The first thing we added is a call to ensureAuthenticated in the route definition. If the user is not logged in, they'll be redirected to /users/login thanks to that function.
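As a reminder of how such a guard behaves, here is a minimal sketch of a middleware function in the style of ensureAuthenticated. The name ensureAuthenticatedSketch and the fakeResponse stand-in are hypothetical; the real function lives in the User router module, and the real response object is supplied by Express.

```javascript
// Sketch of a route guard: continue if logged in, otherwise redirect.
function ensureAuthenticatedSketch(req, res, next) {
  if (req.user) next();
  else res.redirect('/users/login');
}

// Hypothetical stand-in for an Express response that records the redirect.
function fakeResponse() {
  return {
    redirectedTo: undefined,
    redirect(url) { this.redirectedTo = url; }
  };
}

// A request with no user attached is sent to the login page.
const anonymous = fakeResponse();
ensureAuthenticatedSketch({}, anonymous, () => {
  throw new Error('the route handler should not run');
});
console.log(anonymous.redirectedTo); // prints /users/login

// A request carrying a user object proceeds to the route handler.
let handlerRan = false;
ensureAuthenticatedSketch({ user: { username: 'me' } }, fakeResponse(), () => {
  handlerRan = true;
});
console.log(handlerRan); // prints true
```

Placing the guard between the route path and the handler means the handler body never has to repeat the login check.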

Because we've ensured that the user is authenticated, we know that req.user will already have their profile information. We can then simply pass it to the view template.

For the other routes, we need to make similar changes.

Modify the /save route handler, as follows:

router.post('/save', ensureAuthenticated, (req, res, next) => {

..

});

The /save route only requires this change to call ensureAuthenticated in order to ensure that the user is logged in.

Modify the /view route handler, as follows:

router.get('/view', async (req, res, next) => {

try {

var note = await notes.read(req.query.key);

res.render('noteview', {

title: note ? note.title : "",

notekey: req.query.key,

user: req.user ? req.user : undefined,

note: note

});

} catch (e) { next(e); }

});

For this route, we don't require the user to be logged in. We do need the user's profile information, if any, sent to the view template.

Modify the /edit and /destroy route handlers, as follows:

router.get('/edit', ensureAuthenticated, async (req, res, next) => {

try {

var note = await notes.read(req.query.key);

res.render('noteedit', {

title: note ? ("Edit " + note.title) : "Add a Note",

docreate: false,

notekey: req.query.key,

user: req.user,

note: note

});

} catch (e) { next(e); }

});

router.get('/destroy', ensureAuthenticated, async (req, res, next) => {

try {

var note = await notes.read(req.query.key);

res.render('notedestroy', {

title: note ? `Delete ${note.title}` : "",

notekey: req.query.key,

user: req.user,

note: note

});

} catch (e) { next(e); }

});

router.post('/destroy/confirm', ensureAuthenticated, (req, res, next) => {

..

});

Remember that throughout this module, we have made the following two changes to router functions:

- We protected some routes using ensureAuthenticated to ensure that the route is available only to logged-in users.

- We passed the user object to the template.

For the routes using ensureAuthenticated, it is guaranteed that req.user will contain the user object. In other cases, such as with the /view router function, req.user may or may not have a value, and in case it does not, we make sure to pass undefined. In all such cases, the templates need to change in order to use the user object to detect whether the user is logged in, and whether to show HTML appropriate for a logged-in user.

View template changes supporting login/logout

So far, we've created a backend user authentication service, a REST module to access that service, a router module to handle routes related to logging in and out of the website, and changes in app.mjs to use those modules. We're almost ready, but we've got a number of changes left that need to be made to the templates. We're passing the req.user object to every template because each one must be changed to accommodate whether the user is logged in.

This means that we can test whether the user is logged in simply by testing for the presence of a user variable.

In partials/header.hbs, make the following additions:

...

<nav class="navbar navbar-expand-md navbar-dark bg-dark">

<a class="navbar-brand" href='/'><i data-feather="home"></i></a>

<button class="navbar-toggler" type="button"

data-toggle="collapse" data-target="#navbarLogIn"

aria-controls="navbarLogIn"

aria-expanded="false"

aria-label="Toggle navigation">

<span class="navbar-toggler-icon"></span>

</button>

{{#if user}}

<div class="collapse navbar-collapse" id="navbarLogIn">

<span class="navbar-text text-dark col">{{ title }}</span>

<a class="btn btn-dark col-auto" href="/users/logout">

Log Out <span class="badge badge-light">{{ user.username }}</span></a>

<a class="nav-item nav-link btn btn-dark col-auto"

href='/notes/add'>ADD Note</a>

</div>

{{else}}

<div class="collapse navbar-collapse" id="navbarLogIn">

<a class="btn btn-primary" href="/users/login">Log in</a>

</div>

{{/if}}

</nav>

...

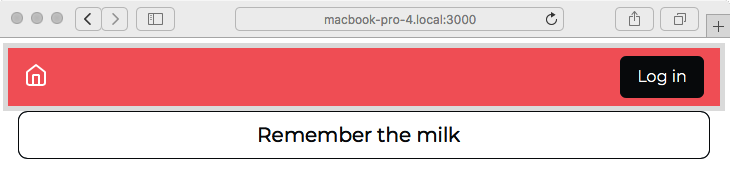

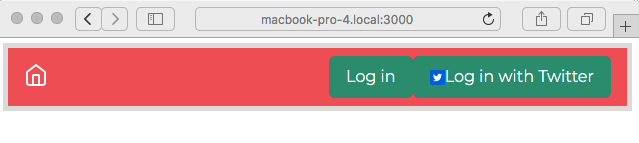

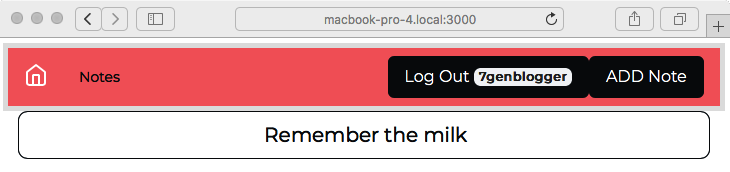

What we're doing here is controlling which buttons to display at the top of the screen, depending on whether the user is logged in. The earlier changes ensure that the user variable will be undefined if the user is logged out; otherwise, it will have the user profile object. Therefore, it's sufficient to check the user variable, as shown in the preceding code block, to render different user interface elements.
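The reason passing undefined works can be sketched in plain JavaScript. As we understand the built-in Handlebars #if helper, it skips the block when the value is false, undefined, null, an empty string, 0, or an empty array, and renders it otherwise. The function rendersIfBlock below is a hypothetical approximation of that rule, not Handlebars code.

```javascript
// Hypothetical approximation of the truthiness rule used by {{#if value}}.
function rendersIfBlock(value) {
  // An empty array counts as falsy, unlike in plain JavaScript.
  if (Array.isArray(value)) return value.length > 0;
  // false, undefined, null, '' and 0 are all falsy.
  return Boolean(value);
}

console.log(rendersIfBlock({ username: 'me' })); // prints true
console.log(rendersIfBlock(undefined));          // prints false
console.log(rendersIfBlock({}));                 // prints true
```

Note the last case: an empty object still counts as truthy, which is why the route handlers pass undefined, rather than an empty object, when nobody is logged in.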

A logged-out user doesn't get the ADD Note button and gets a Log in button. Otherwise, the user gets an ADD Note button and a Log Out button. The Log in button takes the user to /users/login, while the Log Out button takes them to /users/logout. Both of those URLs are handled in routes/users.mjs and perform the expected function.

The Log Out button has a Bootstrap badge component displaying the username. This adds a little visual splotch in which we'll put the username that's logged in. As we'll see later, it will serve as a visual cue to the user as to their identity.

Because nav is now supporting login/logout buttons, we have changed the navbar-toggler button so that it controls a <div> with id="navbarLogIn".

We need to create views/login.hbs, as follows:

<div class="container-fluid">

<div class="row">

<div class="col-12 btn-group-vertical" role="group">

<form method='POST' action='/users/login'>

<div class="form-group">

<label for="username">User name:</label>

<input class="form-control" type='text' id='username'

name='username' value='' placeholder='User Name'/>

</div>

<div class="form-group">

<label for="password">Password:</label>

<input class="form-control" type='password' id='password'

name='password' value='' placeholder='Password'/>

</div>

<button type="submit" class="btn btn-default">Submit</button>

</form>

</div>

</div>

</div>

This is a simple form decorated with Bootstrap goodness to ask for the username and password. When submitted, it creates a POST request to /users/login, which invokes the desired handler to verify the login request. The handler for that URL will start the Passport process to decide whether the user is authenticated.
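To see what the handler receives, here is a small sketch of the request body a browser produces for this form. A form without an enctype attribute is sent as URL-encoded name=value pairs, using the name attributes of the inputs. The function names encodeLoginForm and decodeLoginForm and the sample credentials are hypothetical; in the application, the decoding is done by the Express body-parsing middleware, and the field names username and password match the defaults that Passport's local strategy looks for.

```javascript
// Hypothetical helper: builds the body a browser would send for the form.
function encodeLoginForm(username, password) {
  return new URLSearchParams({ username, password }).toString();
}

// Hypothetical helper: recovers the fields, as a body parser would.
function decodeLoginForm(body) {
  return Object.fromEntries(new URLSearchParams(body));
}

const body = encodeLoginForm('me', 'w0rd');
console.log(body); // prints username=me&password=w0rd

const fields = decodeLoginForm(body);
console.log(fields.username); // prints me
```

Note that the password travels in the request body as plain text, so a deployed site should serve this form over HTTPS.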

In views/notedestroy.hbs, we want to display a message if the user is not logged in. Normally, the form to cause the note to be deleted is displayed, but if the user is not logged in, we want to explain the situation, as illustrated in the following code block:

<form method='POST' action='/notes/destroy/confirm'>

<div class="container-fluid">

{{#if user}}

<input type='hidden' name='notekey' value='{{#if note}}{{notekey}}{{/if}}'>

<p class="form-text">Delete {{note.title}}?</p>

<div class="btn-group">

<button type="submit" value='DELETE'