So far in this book, we've created a Node.js-based application stack comprising two Node.js microservices, a pair of MySQL databases, and a Redis instance. In the previous chapter, we learned how to use Docker to easily launch those services, intending to do so on a cloud hosting platform. Docker is widely used for deploying services such as ours, and there are lots of options available to us for deploying Docker on the public internet.

Because Amazon Web Services (AWS) is a mature, feature-rich cloud hosting platform, we've chosen to deploy there. There are many options for hosting Notes on AWS. The most direct path from our work in Chapter 11, Deploying Node.js Microservices with Docker, is to create a Docker Swarm cluster on AWS, because that lets us directly reuse the Docker Compose file we created.

Docker Swarm is one of the available Docker orchestration systems. These systems manage a set of Docker containers on one or more Docker host systems. Building a swarm therefore requires provisioning one or more server systems, installing Docker Engine on each, and enabling swarm mode. Docker Swarm is built into Docker Engine, so it takes only a few commands to join those servers together in a swarm. We can then deploy Docker-based services to the swarm, and the swarm distributes the containers among the server systems, monitoring each container, restarting any that crash, and so on.

Docker Swarm can be used anywhere we have multiple Docker host systems. It is not tied to AWS; we can rent suitable servers from any of hundreds of web hosting providers around the world. It is also lightweight enough that you can experiment with Docker Swarm using virtual machine (VM) instances (Multipass, VirtualBox, and so on) on a laptop.

In this chapter, we will use a set of AWS Elastic Compute Cloud (EC2) instances. EC2 is the AWS equivalent of a virtual private server (VPS) that we would rent from a web hosting provider. The EC2 instances will be deployed within an AWS virtual private cloud (VPC), along with a network infrastructure on which we'll implement the deployment architecture we outlined earlier.

Let's talk a little about cost, since AWS can be expensive. AWS offers what it calls the Free Tier, under which certain services cost nothing as long as your usage stays below a threshold. In this chapter, we'll strive to stay within the Free Tier, except that we will have three EC2 instances running for a while, which exceeds the Free Tier allowance for EC2. If you are sensitive to cost, you can minimize it by destroying the EC2 instances when they are not needed. We'll discuss how to do this later.

The following topics will be covered in this chapter:

- Signing up with AWS and configuring the AWS command-line interface (CLI)

- An overview of the AWS infrastructure to be deployed

- Using Terraform to create an AWS infrastructure

- Setting up a Docker Swarm cluster on AWS EC2

- Setting up Elastic Container Registry (ECR) repositories for Notes Docker images

- Creating a Docker stack file for deployment to Docker Swarm

- Provisioning EC2 instances for a full Docker Swarm

- Deploying the Notes stack file to the swarm

You will learn a lot in this chapter. We start with the AWS Management Console, setting up Identity and Access Management (IAM) users on AWS, and setting up the AWS command-line tools. Since the AWS platform is so vast, it is important to get an overview of what it entails and of the facilities we will use in this chapter. Then, we will learn about Terraform, a leading tool for configuring services on all kinds of cloud platforms, and use it to configure AWS resources such as the VPC, the associated networking infrastructure, and EC2 instances. Next, we'll learn about Docker Swarm, the orchestration system built into Docker: how to set up a swarm, and how to deploy applications to it.

For that purpose, we'll learn about Docker image registries, the AWS Elastic Container Registry (ECR), how to push images to a Docker registry, and how to use images from a private registry in a Docker application stack. Finally, we'll learn about creating a Docker stack file, which lets you describe Docker services to deploy in a swarm.

Let's get started.

Signing up with AWS and configuring the AWS CLI

To use AWS services you must, of course, have an AWS account. The AWS account is how we authenticate ourselves to AWS and is how AWS charges us for services.

The Amazon Free Tier is a way to experience AWS services at zero cost: https://aws.amazon.com/free/.

Documentation is available at https://docs.aws.amazon.com.

AWS has two kinds of accounts that we can use, as follows:

- The root account is what's created when we sign up for an AWS account. The root account has full access to AWS services.

- An IAM user account is a less privileged account you can create within your root account. The owner of a root account creates IAM accounts, assigning the scope of permissions to each IAM account.

It is bad form to use the root account directly, since the root account has complete access to AWS resources. If the credentials for your root account were leaked to the public, significant damage could be done to your business. If the credentials for an IAM user account were leaked, the damage would be limited to the resources and privileges assigned to that account. Furthermore, IAM user credentials can be revoked at any time and new credentials generated, preventing anyone holding the leaked credentials from doing further damage. Another security measure is to enable multi-factor authentication (MFA) for all accounts.

If you have not already done so, proceed to the AWS website at one of the preceding links and sign up for an account. Remember that the account created that way is your AWS root account.

Our first step is to familiarize ourselves with the AWS Management Console.

Finding your way around the AWS account

Because there are so many services on the AWS platform, it can seem like a maze of twisty little passages, all alike. However, with a little orientation, we can find our way around.

First, look at the navigation bar at the top of the window. On the right, there are three dropdowns. The first has your account name and offers account-related choices. The second lets you select your default AWS region. AWS divides its infrastructure into regions, each of which is a geographic area where AWS data centers are located. The third connects you with AWS Support.

On the left is a dropdown marked Services. This shows you the list of all AWS services. Since the Services list is unwieldy, AWS gives you a search box. Simply type in the name of the service, and it will show up. The AWS Management Console home page also has this search box.

While we are finding our way around, let's record the account number for the root account. We'll need this information later. In the Account dropdown, select My Account. The account ID is there, along with your account name.

It is recommended to set up MFA on your AWS root account. MFA simply means authenticating a person in more than one way. For example, a service might use a code sent via text message as a second authentication method, alongside asking for a password. The theory is that the service is more certain of who we are if it verifies both that we've entered the correct password and that we're carrying the same cell phone we carried on other days.

To set up MFA on your root account, go to the My Security Credentials dashboard. A link to that dashboard can be found in the AWS Management Console menu bar. This brings you to a page controlling all forms of authentication with AWS. From there, follow the directions on the AWS website. There are several possible tools for implementing MFA. The simplest is an authenticator app, such as Google Authenticator, on your smartphone. Once you set up MFA, every login to the root account will require a code from the authenticator app.

So far, we have dealt with the online AWS Management Console. Our real goal is to use command-line tools, and to do that, we need the AWS CLI installed and configured on our laptop. Let's take care of that next.

Setting up the AWS CLI using AWS authentication credentials

The AWS CLI tool is a download available through the AWS website. Under the covers, it uses the AWS application programming interface (API), and it also requires that we download and install authentication tokens.

Once you have an account, we can prepare the AWS CLI tool.

Instructions to install the AWS CLI can be found here: https://docs.aws.amazon.com/cli/latest/userguide/install-cliv2.html.

Instructions to configure the AWS CLI can be found here: https://docs.aws.amazon.com/cli/latest/userguide/cli-chap-configure.html.

Once you have installed the AWS CLI tool on your laptop, we must configure what is known as a profile.

AWS supplies an AWS API that supports a broad range of tools for manipulating the AWS infrastructure. The AWS CLI tools use that API, as do third-party tools such as Terraform. Using the API requires access tokens, so of course, both the AWS CLI and Terraform require those same tokens.

To get the AWS API access tokens, go to the My Security Credentials dashboard and click on the Access Keys tab.

There will be a button marked Create New Access Key. Click on this and you will be shown two security tokens, the Access Key ID and the Secret Access Key. You will be given a chance to download a comma-separated values (CSV) file containing these keys. The CSV file looks like this:

```
$ cat ~/Downloads/accessKeys.csv
Access key ID,Secret access key
AKIAZKY7BHGBVWEKCU7H,41WctREbazP9fULN1C5CrQ0L92iSO27fiVGJKU2A
```

These are the security tokens that identify your account. Don't worry, no secrets are being leaked here: those particular credentials have been revoked. The good news is that you can revoke your credentials at any time and download new ones.

Now that we have the credentials file, we can configure an AWS CLI profile.

The aws configure command, as the name implies, takes care of configuring your AWS CLI environment. This asks a series of questions, the first two of which are those keys. The interaction looks like this:

```
$ aws configure --profile root-user
AWS Access Key ID [****************E3GA]: ... ENTER ACCESS KEY
AWS Secret Access Key [****************J9cp]: ... ENTER SECRET KEY
Default region name [us-west-2]:
Default output format [json]:
```

For the first two prompts, paste in the keys you downloaded. The region name prompt selects the default AWS region in which your services will be provisioned. AWS has facilities all around the world, and each region has a code name such as us-west-2 (located in Oregon). The last prompt asks how you want the AWS CLI to format the information it displays.

For the region code, in the AWS console, take a look at the Region dropdown. This shows you the available regions, describing locales, and the region code for each. For the purpose of this project, it is good to use an AWS region located near you. For production deployment, it is best to use the region closest to your audience. It is possible to configure a deployment that works across multiple regions so that you can serve clients in multiple areas, but that implementation is way beyond what we'll cover in this book.

By using the --profile option, we ensured that this created a named profile. If we had left off that option, we would have instead created a profile named default. For any of the aws commands, the --profile option selects which profile to use. As the name suggests, the default profile is the one used if we leave off the --profile option.

A better choice is to always be explicit about which AWS identity is being used. Some guides suggest not creating a default AWS profile at all, and instead always using the --profile option to be certain of using the correct AWS profile.

An easy way to verify that AWS is configured is to run the following commands:

```
$ aws s3 ls
Unable to locate credentials. You can configure credentials by running "aws configure".
$ aws s3 ls --profile root-user
$ export AWS_PROFILE=root-user
$ aws s3 ls
```

The AWS Simple Storage Service (S3) is a cloud file-storage system, and we are running these commands solely to verify that the credentials are installed correctly. The ls command lists the S3 buckets in your account. We don't care which buckets may or may not exist, only whether the command executes without error.

The first command shows that running with no --profile option, and no default profile, produces an error. If there were a default AWS profile, it would have been used, but since we did not create one, no profile was available. The second shows the same command with an explicitly named profile. The third shows the AWS_PROFILE environment variable being used to name the profile to use.

Using the environment variables supported by the AWS CLI tool, such as AWS_PROFILE, lets us skip using command-line options such as --profile while still being explicit about which profile to use.
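To make the lookup order concrete, here is a minimal Python model of how a command picks its profile. It is a simplified sketch, not the AWS CLI's implementation: it assumes only three sources (the --profile option, then the AWS_PROFILE environment variable, then a profile named default) and only checks that a matching section exists in a credentials file in the INI format that aws configure writes to ~/.aws/credentials. The function name resolve_profile and the sample keys are hypothetical.

```python
import configparser
from typing import Mapping, Optional


def resolve_profile(
    cli_profile: Optional[str],
    env: Mapping[str, str],
    credentials_ini: str,
) -> str:
    """Return the profile a command would use (simplified model).

    Precedence, highest first: the --profile option, the AWS_PROFILE
    environment variable, then the profile named "default".
    """
    if cli_profile:
        name = cli_profile
    elif env.get("AWS_PROFILE"):
        name = env["AWS_PROFILE"]
    else:
        name = "default"

    parser = configparser.ConfigParser()
    parser.read_string(credentials_ini)
    if not parser.has_section(name):
        # Corresponds to the "Unable to locate credentials" error seen earlier.
        raise LookupError(f"no credentials for profile {name!r}")
    return name


# Hypothetical credentials file with one named profile and no [default].
CREDENTIALS = """
[root-user]
aws_access_key_id = AKIAEXAMPLEKEYID
aws_secret_access_key = exampleSecretKey
"""

print(resolve_profile("root-user", {}, CREDENTIALS))
print(resolve_profile(None, {"AWS_PROFILE": "root-user"}, CREDENTIALS))
```

Passing the environment as a plain mapping, rather than reading os.environ directly, keeps the sketch easy to test.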

As we said earlier, it is important that we interact with AWS via an IAM user, and therefore we must learn how to create an IAM user account. Let's do that next.

Creating an IAM user account, groups, and roles

We could do everything in this chapter using our root account but, as we said, that's bad form. Instead, it is recommended to create a second user—an IAM user—and give it only the permissions required by that user.

To get to the IAM dashboard, click on Services in the navigation bar, and enter IAM. IAM stands for Identity and Access Management. Also, the My Security Credentials dashboard is part of the IAM service, so we are probably already in the IAM area.

The first task is to create a role. In AWS, roles are used to associate privileges with a user account. You can create roles with extremely limited privileges or an extremely broad range of privileges.

In the IAM dashboard, you'll find a navigation menu on the left. It has sections for users, groups, roles, and other identity management topics. Click on the Roles choice. Then, in the Roles area, click on Create Role. Perform the following steps:

- Under Type of trusted identity, select Another AWS account. Enter the account ID, which you will have recorded earlier while familiarizing yourself with the AWS account. Then, click on Next.

- On the next page, we select the permissions for this role. For our purpose, select AdministratorAccess, a privilege that grants full access to the AWS account. Then, click on Next.

- On the next page, you can add tags to the role. We don't need to do this, so click Next.

- On the last page, we give a name to the role. Enter admin because this role has administrator permissions. Click on Create Role.

You'll see that the role, admin, is now listed in the Role dashboard. Click on admin and you will be taken to a page where you can customize the role further. On this page, notice the field named Role ARN. Record this Amazon Resource Name (ARN) for future reference.

ARNs are identifiers used within AWS. You can reliably use this ARN in any area of AWS where we can specify a role. ARNs are used with almost every AWS resource.
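ARNs have a fixed colon-separated layout: arn:partition:service:region:account-id:resource. The following Python sketch splits one into its parts. The parse_arn name is hypothetical, and 123456789012 is the placeholder account ID AWS uses in its own examples. IAM is a global service, so the region field of an IAM ARN is empty. The resource part may itself contain colons, which is why the split stops after five separators.

```python
from typing import NamedTuple


class Arn(NamedTuple):
    partition: str  # "aws" for the standard AWS partition
    service: str    # for example "iam" or "ec2"
    region: str     # empty for global services such as IAM
    account: str    # the 12-digit account ID
    resource: str   # for example "role/admin"


def parse_arn(arn: str) -> Arn:
    """Split arn:partition:service:region:account:resource into its fields."""
    # maxsplit=5 keeps any colons inside the resource part intact.
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError(f"not an ARN: {arn!r}")
    return Arn(*parts[1:])


role = parse_arn("arn:aws:iam::123456789012:role/admin")
print(role.service, repr(role.region), role.account, role.resource)
# iam '' 123456789012 role/admin
```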

Next, we have to create an administrator group. In IAM, users are assigned to groups as a way of passing roles and other attributes to a group of IAM user accounts. To do this, perform the following steps:

- In the left-hand navigation menu, click on Groups, and then, in the group dashboard, click on Create Group.

- For the group name, enter Administrators.

- Skip the Attach Policy page, click Next Step, and then, on the Review page, simply click Create Group.

- This creates a group with no permissions and directs you back to the group dashboard.

- Click on the Administrators group, and you'll be taken to the overview page. Record the ARN for the group.

- Click on Permissions to open that tab, and then click on the Inline policies section header. We will be creating an inline policy, so click on the Click here link.

- Click on Custom Policy, and you'll be taken to the policy editor.

- For the policy name, enter AssumeAdminRole. Below that is an area where we enter a block of JavaScript Object Notation (JSON) code describing the policy. Once that's done, click the Apply Policy button.

The policy document to use is as follows:

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": "sts:AssumeRole",
            "Resource": "arn:aws:iam::ACCOUNT-ID:role/admin"
        }
    ]
}
```

This policy is attached to the Administrators group. It allows members of that group to assume the admin role we created earlier, and thereby gain that role's privileges. The Resource field is where we enter the ARN for the admin role, that is, the Role ARN recorded earlier. Make sure to put the entire ARN into this field.
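If you prefer to generate this policy rather than edit it by hand, a short Python sketch can fill in the account ID and role name. The function name assume_role_policy is hypothetical. The structure is the same document given for the AssumeAdminRole policy, and 2012-10-17 is the version identifier of the IAM policy language, not a date you should change.

```python
import json


def assume_role_policy(account_id: str, role_name: str) -> str:
    """Return the AssumeAdminRole policy document as a JSON string."""
    policy = {
        # Version of the IAM policy language; keep this exact value.
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sts:AssumeRole",
                "Resource": f"arn:aws:iam::{account_id}:role/{role_name}",
            }
        ],
    }
    return json.dumps(policy, indent=4)


# 123456789012 is a placeholder; use the account ID you recorded earlier.
print(assume_role_policy("123456789012", "admin"))
```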

Navigate back to the Groups area, and click on Create Group again. We'll create a group, NotesDeveloper, for use by developers assigned to the Notes project. It will give those user accounts some additional privileges. Perform the following steps:

- Enter NotesDeveloper as the group name. Then, click Next.

- On the Attach Policy page, there is a long list of policies to choose from. Select the following: AmazonRDSFullAccess, AmazonEC2FullAccess, IAMFullAccess, AmazonEC2ContainerRegistryFullAccess, AmazonS3FullAccess, AdministratorAccess, and AmazonElasticFileSystemFullAccess.

- Then, click Next, and if everything looks right on the Review page, click Create Group.

These policies cover the services required to finish this chapter. If a user lacks the privilege needed for some feature, the AWS error message does a good job of naming the required privilege. If it is a privilege the user needs, come back to this group and add it.

In the left-hand navigation, click on Users and then on Create User. This starts the steps involved in creating an IAM user, described as follows:

- For the username, enter notes-app, since this user will manage all resources related to the Notes application. For Access type, click on both Programmatic access and AWS management console access since we will be using both. The first grants the ability to use the AWS CLI tools, while the second covers the AWS console. Then, click on Next.

- For permissions, select Add User to Group and then select both the Administrators and NotesDeveloper groups. This adds the user to the groups you select. Then, click on Next.

- There is nothing more to do, so keep clicking Next until you get to the Review page. If you're satisfied, click on Create user.

You'll be taken to a page that declares Success. On this page, AWS makes available access tokens (a.k.a. security credentials) that can be used with this account. Download these credentials before you do anything else. You can always revoke the credentials and generate new access tokens at any time.

Your newly created user is now listed in the Users section. Click on that entry, because we have a couple of data items to record. The first is obviously the ARN for the user account. The second is a Uniform Resource Locator (URL) you can use to sign in to AWS as this user. For that URL, click on the Security Credentials tab and the sign-in link will be there.

It is recommended to also set up MFA for the IAM account. The My Security Credentials choice in the AWS menu bar takes you to the screen containing the button to set up MFA. Refer back to our earlier discussion of setting up MFA for the root account.

To test the new user account, sign out and then go to the sign-in URL. Enter the username and password for the account, and then sign in.

Before finishing this section, return to the command line and run the following command:

```
$ aws configure --profile notes-app
... Fill in configuration
```

This will create another AWS CLI profile, this time for the notes-app IAM user.

Using the AWS CLI, we can list the users in our account, as follows:

```
$ aws iam list-users --profile root-user
{
    "Users": [
        {
            "Path": "/",
            "UserName": "notes-app",
            "UserId": "AIDARNEXAMPLEYM35LE",
            "Arn": "arn:aws:iam::ACCOUNT-ID:user/notes-app",
            "CreateDate": "2020-03-08T02:19:39+00:00",
            "PasswordLastUsed": "2020-04-05T15:34:28+00:00"
        }
    ]
}
```

This is another way to verify that the AWS CLI is correctly installed. This command queries the user information from AWS, and if it executes without error then you've configured the CLI correctly.

AWS CLI commands follow a similar structure: the aws command is followed by a series of sub-commands and then options. In this case, the sub-commands are iam and list-users. The AWS website has extensive online documentation for the AWS CLI tool.

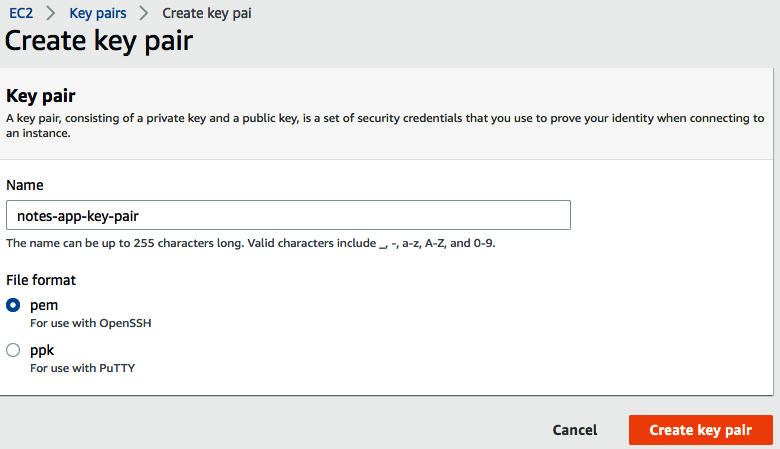

Creating an EC2 key pair

Since we'll be using EC2 instances in this exercise, we need an EC2 key pair. This is a public/private key pair that serves the same purpose as the normal Secure Shell (SSH) key we use for passwordless login to a server. AWS keeps the public key and installs it on EC2 instances, while we keep the private key file and use it to log in to those instances with SSH. Perform the following steps:

- Log in to the AWS Management Console and then select the region you're using.

- Next, navigate to the EC2 dashboard—for example, by entering EC2 in the search box.

- In the navigation sidebar, there is a section labeled Network & Security, containing a link for Key Pairs.

- Click on that link. In the upper-right corner is a button marked Create key pair. Click on this button, and you will be taken to the Create key pair screen.

- Enter the desired name for the key pair. Depending on the SSH client you're using, choose either the pem format (used by the ssh command) or the ppk format (used by PuTTY) for the key-pair file.

- Click on Create key pair and you'll be returned to the dashboard, and the key-pair file will download in your browser.

- After the key-pair file is downloaded, you must make it readable only by you, because ssh refuses to use a private key file that other users can access. You can do this with the following command:

```
$ chmod 400 /path/to/keypairfile.pem
```

Substitute the pathname where your browser saved the file.
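The reason for chmod 400 is that OpenSSH checks the permissions of a private key file and rejects it if group or other users have any access. The following Python sketch performs a similar check. It assumes a POSIX system (on Windows, os.chmod only toggles the read-only flag), and key_is_private is a hypothetical helper, not part of any SSH tool.

```python
import os
import stat
import tempfile


def key_is_private(path: str) -> bool:
    """True if the file grants no permissions to group or other users."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return (mode & 0o077) == 0


# Demonstrate on a throwaway file rather than a real key (POSIX only).
with tempfile.NamedTemporaryFile(suffix=".pem", delete=False) as f:
    demo_path = f.name

os.chmod(demo_path, 0o644)        # readable by everyone
print(key_is_private(demo_path))  # False
os.chmod(demo_path, 0o400)        # the effect of `chmod 400`
print(key_is_private(demo_path))  # True
os.remove(demo_path)
```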

For now, just make sure this file is correctly stored somewhere. When we deploy EC2 instances, we'll talk more about how to use it.

We have familiarized ourselves with the AWS Management Console, and created for ourselves an IAM user account. We have proved that we can log in to the console using the sign-in URL. While doing that, we copied down the AWS access credentials for the account.

We have completed the setup of the AWS command-line tools and user accounts. Before setting up Terraform, let's get an overview of the AWS infrastructure we will deploy.

An overview of the AWS infrastructure to be deployed

AWS is a complex platform with dozens of services available to us. This project will touch on only the part required to deploy Notes as a Docker swarm on EC2 instances. In this section, let's talk about the infrastructure and AWS services we'll put to use.

An AWS VPC is what it sounds like—namely, a service within AWS where you build your own private cloud service infrastructure. The AWS team designed the VPC service to look like something that you would construct in your own data center, but implemented on the AWS infrastructure. This means that the VPC is a container to which everything else we'll discuss is attached.

The AWS infrastructure is spread across the globe in what AWS calls regions. For example, us-west-1 refers to Northern California, us-west-2 refers to Oregon, and eu-central-1 refers to Frankfurt. For production deployment, it is recommended to use a region near your customers, but for experimentation, it is good to use the region closest to you. Within each region, AWS further subdivides its infrastructure into availability zones (AZs). Each AZ consists of one or more physically separate data centers, and AWS recommends deploying infrastructure to multiple AZs for reliability: if one AZ goes down, the service can continue in the AZs that are still running.

When we allocate a VPC, we specify an address range for resources deployed within it. The range is written in Classless Inter-Domain Routing (CIDR) notation, such as 10.3.0.0/16 or 10.3.20.0/24. The number after the slash is how many leading bits of the address are fixed, so these mean any Internet Protocol version 4 (IPv4) address starting with 10.3 and 10.3.20, respectively.
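Python's standard ipaddress module makes it easy to check what a CIDR block covers. This sketch (cidr_range is a hypothetical helper) returns the first address, last address, and size of the two example blocks. A /16 leaves 16 bits free, giving 2^16 = 65,536 addresses, while a /24 leaves 8 bits free, giving 256.

```python
import ipaddress


def cidr_range(cidr: str):
    """Return (first address, last address, address count) for a CIDR block."""
    net = ipaddress.ip_network(cidr)
    return str(net[0]), str(net[-1]), net.num_addresses


print(cidr_range("10.3.0.0/16"))   # ('10.3.0.0', '10.3.255.255', 65536)
print(cidr_range("10.3.20.0/24"))  # ('10.3.20.0', '10.3.20.255', 256)
```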

Every device we attach to a VPC will be attached to a subnet, a virtual object similar to an Ethernet segment. Each subnet will be assigned a CIDR from the main range. A VPC assigned the 10.3.0.0/16 CIDR might have a subnet with a CIDR of 10.3.20.0/24. Devices attached to the subnet will have an IP address assigned within the range indicated by the CIDR for the subnet.
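Continuing with ipaddress, the following sketch checks that a subnet lies within its VPC's range and reports the addresses left for devices. It encodes one AWS-specific detail: AWS reserves the first four addresses and the last address of every subnet for its own use, so a /24 subnet offers 251 usable addresses rather than 256. The helper name aws_usable_range is hypothetical.

```python
import ipaddress


def aws_usable_range(subnet_cidr: str, vpc_cidr: str):
    """Check the subnet is inside the VPC; return (first, last, count) usable.

    AWS reserves the first four addresses and the last address of a subnet.
    """
    subnet = ipaddress.ip_network(subnet_cidr)
    vpc = ipaddress.ip_network(vpc_cidr)
    if not subnet.subnet_of(vpc):
        raise ValueError(f"{subnet} is not inside {vpc}")
    return str(subnet[4]), str(subnet[-2]), subnet.num_addresses - 5


print(aws_usable_range("10.3.20.0/24", "10.3.0.0/16"))
# ('10.3.20.4', '10.3.20.254', 251)
```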

EC2 is AWS's answer to a VPS that you might rent from any web hosting provider. An EC2 instance is a virtual computer in the same sense that Multipass or VirtualBox lets you create a virtual computer on your laptop. Each EC2 instance is assigned a central processing unit (CPU), memory, disk capacity, and at least one network interface. Through its network interface, an EC2 instance is attached to a subnet and is assigned an IP address from the subnet's range.

By default, a device attached to a subnet has no internet access. The internet gateway and network address translation (NAT) gateway resources on AWS play a critical role in connecting resources in a VPC to the internet. Both act as routers, meaning that both handle the routing of internet traffic from one network to another. Because a VPC contains a private network, these gateways handle traffic between that network and the public internet, as follows:

- Internet gateway: This handles two-way routing, allowing a resource allocated in a VPC to be reachable from the public internet. An internet gateway allows external traffic to enter the VPC, and it also allows resources in the VPC to access resources on the public internet.

- NAT gateway: This handles one-way routing, meaning that resources in the VPC can access resources on the public internet, but hosts on the public internet cannot initiate connections into the VPC. To understand the NAT gateway, think about a common home Wi-Fi router, because it also contains a NAT gateway. Such a gateway manages a local IP address range such as 192.168.0.0/16, while the internet service provider (ISP) assigns a public IP address such as 107.123.42.231 to the connection. Devices connecting to the router are assigned local IP addresses, such as 192.168.1.45, and those local addresses do not appear in packets sent to the public internet. Instead, the NAT gateway rewrites the source address of each outgoing packet to the gateway's public IP address, and when reply packets arrive, it translates the destination address back to that of the local device.
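The translation a NAT gateway performs can be modeled as a lookup table. The following toy Python class is an illustration only, not how AWS implements its NAT gateway. It maps each private (address, port) pair to a port on the gateway's public address, translates replies back, and drops inbound packets that match no outgoing flow, which is what makes the routing one-way. Real NAT implementations track more of each connection (protocol, remote address, timeouts), and the class name ToyNat and the starting port of 40000 are arbitrary choices.

```python
import itertools
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


class ToyNat:
    """Source NAT: many private endpoints share one public IP address."""

    def __init__(self, public_ip: str, first_port: int = 40000):
        self.public_ip = public_ip
        self._next_port = itertools.count(first_port)  # arbitrary port pool
        self._by_private: Dict[Endpoint, int] = {}
        self._by_public_port: Dict[int, Endpoint] = {}

    def outbound(self, src: Endpoint) -> Endpoint:
        """Rewrite an outgoing packet's source to the gateway's public endpoint."""
        if src not in self._by_private:
            port = next(self._next_port)
            self._by_private[src] = port
            self._by_public_port[port] = src
        return (self.public_ip, self._by_private[src])

    def inbound(self, dst_port: int) -> Optional[Endpoint]:
        """Map a reply back to the private device; None means it is dropped."""
        return self._by_public_port.get(dst_port)


nat = ToyNat("107.123.42.231")
print(nat.outbound(("192.168.1.45", 51515)))  # ('107.123.42.231', 40000)
print(nat.inbound(40000))                     # ('192.168.1.45', 51515)
print(nat.inbound(40001))                     # None: unsolicited, so dropped
```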

In practical terms, this determines the difference between a private subnet and a public subnet. A public subnet has a routing table that sends traffic for the public internet to an internet gateway, whereas a private subnet sends its public internet traffic to a NAT gateway.

Routing tables describe how to route internet traffic. Inside any internet router, such as an internet gateway or a NAT gateway, is a function that determines how to handle internet packets destined for a location other than the local subnet. The routing function matches the destination address against routing table entries, and each routing table entry says where to forward matching packets.
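Route selection can be sketched in a few lines of Python. This models the public and private subnet tables described earlier: both have a local route for the VPC's own range, and they differ only in where the 0.0.0.0/0 (everything else) route points. When more than one entry matches, the most specific one, meaning the one with the longest prefix, wins. The target IDs igw-1 and nat-1 are made up, and 203.0.113.10 is an address from a range reserved for documentation.

```python
import ipaddress
from typing import List, Tuple

Route = Tuple[str, str]  # (destination CIDR, target)


def next_hop(table: List[Route], destination: str) -> str:
    """Return the target of the longest-prefix route matching destination."""
    addr = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in table
        if addr in ipaddress.ip_network(cidr)
    ]
    if not matches:
        raise LookupError(f"no route to {destination}")
    _, target = max(matches, key=lambda m: m[0].prefixlen)
    return target


# Hypothetical tables for a VPC using 10.3.0.0/16.
public_table = [("10.3.0.0/16", "local"), ("0.0.0.0/0", "igw-1")]
private_table = [("10.3.0.0/16", "local"), ("0.0.0.0/0", "nat-1")]

print(next_hop(public_table, "10.3.20.7"))      # local: /16 beats /0
print(next_hop(public_table, "203.0.113.10"))   # igw-1
print(next_hop(private_table, "203.0.113.10"))  # nat-1
```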

Attached to each device deployed in a VPC is a security group. A security group is a firewall controlling what kind of internet traffic can enter or leave that device. For example, an EC2 instance might have a web server supporting HTTP (port 80) and HTTPS (port 443) traffic, and the administrator might also require SSH access (port 22) to the instance. The security group would be configured to allow traffic from any IP address on ports 80 and 443 and to allow traffic on port 22 from IP address ranges used by the administrator.
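A security group's handling of incoming traffic can be modeled as a list of allow rules, where a packet is accepted if any rule matches. This Python sketch encodes the web server example. It is deliberately simplified: single ports rather than ranges, no protocol field, and inbound rules only. It also omits the fact that security groups are stateful, meaning replies to allowed traffic are let through automatically. ADMIN_CIDR is a hypothetical administrator range taken from a documentation-reserved block, and sg_allows is a hypothetical helper name.

```python
import ipaddress
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class IngressRule:
    port: int
    source_cidr: str


def sg_allows(rules: List[IngressRule], port: int, source_ip: str) -> bool:
    """Security groups hold only allow rules: traffic passes if any matches."""
    src = ipaddress.ip_address(source_ip)
    return any(
        r.port == port and src in ipaddress.ip_network(r.source_cidr)
        for r in rules
    )


ADMIN_CIDR = "198.51.100.0/24"  # hypothetical administrator address range
web_sg = [
    IngressRule(80, "0.0.0.0/0"),
    IngressRule(443, "0.0.0.0/0"),
    IngressRule(22, ADMIN_CIDR),
]
print(sg_allows(web_sg, 443, "203.0.113.9"))  # True
print(sg_allows(web_sg, 22, "203.0.113.9"))   # False
print(sg_allows(web_sg, 22, "198.51.100.7"))  # True
```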

A network access control list (ACL) is another kind of firewall that's attached to subnets. It, too, describes which traffic is allowed to enter or leave the subnet. The security groups and network ACLs are part of the security protections provided by AWS.
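Network ACLs differ from security groups in ways worth seeing side by side. ACL rules are numbered and evaluated from the lowest number up, the first matching rule decides, rules may explicitly deny traffic, and anything that matches no rule is denied. ACLs are also stateless, so return traffic must be allowed by its own rule. The Python sketch that follows models only the rule ordering, with the same single-port simplification as before. The rule numbers, address ranges, and the acl_allows name are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class AclRule:
    number: int
    port: int
    cidr: str
    allow: bool


def acl_allows(rules: List[AclRule], port: int, ip: str) -> bool:
    """Evaluate rules in ascending rule-number order; the first match decides."""
    addr = ipaddress.ip_address(ip)
    for rule in sorted(rules, key=lambda r: r.number):
        if rule.port == port and addr in ipaddress.ip_network(rule.cidr):
            return rule.allow
    return False  # like the catch-all "*" rule: unmatched traffic is denied


inbound = [
    AclRule(200, 22, "0.0.0.0/0", allow=True),
    AclRule(100, 22, "203.0.113.0/24", allow=False),  # block one range from SSH
]
print(acl_allows(inbound, 22, "203.0.113.5"))   # False: rule 100 matches first
print(acl_allows(inbound, 22, "198.51.100.5"))  # True: falls through to rule 200
print(acl_allows(inbound, 80, "198.51.100.5"))  # False: no rule matches
```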

If a device connected to a VPC does not seem to work correctly, there might be an error in the configuration of these parts. Check the security group attached to the device, the NAT gateway or internet gateway, whether the device is connected to the expected subnet, the routing table for that subnet, and any network ACLs.

Using Terraform to create an AWS infrastructure

Terraform is an open source tool for configuring a cloud hosting infrastructure. It uses a declarative language to describe the configuration of cloud services. Through a long list of plugins, called providers, it has support for a variety of cloud services. In this chapter, we'll use Terraform to describe AWS infrastructure deployments.

Terraform is distributed as a single executable that you can download from the Terraform website. Alternatively, you will find the Terraform CLI available in many package management systems.

Once installed, you can view the Terraform help with the following command:

```
$ terraform help
Usage: terraform [-version] [-help] <command> [args]

The available commands for execution are listed below.
The most common, useful commands are shown first, followed by
less common or more advanced commands. If you're just getting
started with Terraform, stick with the common commands. For the
other commands, please read the help and docs before usage.

Common commands:
    apply              Builds or changes infrastructure
    console            Interactive console for Terraform interpolations
    destroy            Destroy Terraform-managed infrastructure
    ...
    init               Initialize a Terraform working directory
    output             Read an output from a state file
    plan               Generate and show an execution plan
    providers          Prints a tree of the providers used in the configuration
    ...
```

Terraform files have a .tf extension and use a fairly simple, easy-to-understand declarative syntax. Terraform doesn't care which filenames you use or the order in which you create the files. It simply reads all the files with a .tf extension in the directory and looks for resources to deploy. These files contain declarations rather than executable code. Terraform reads them, constructs a graph of dependencies, and works out how to implement the declarations on the cloud infrastructure being used.
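The dependency graph Terraform builds can be illustrated with Python's graphlib module (Python 3.9 or later). In this sketch, each hypothetical resource lists the resources it references, and the sorter hands out batches whose dependencies are already satisfied. Resources in the same batch do not depend on each other, so they could be created in parallel. This shows the idea only. Terraform's own planner also handles updates and deletions, and the creation_batches helper is hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical resources, each mapped to the resources it references.
dependencies = {
    "aws_vpc.main": set(),
    "aws_subnet.public1": {"aws_vpc.main"},
    "aws_internet_gateway.igw": {"aws_vpc.main"},
    "aws_route_table.public": {"aws_vpc.main", "aws_internet_gateway.igw"},
    "aws_instance.manager": {"aws_subnet.public1"},
}


def creation_batches(deps):
    """Group nodes into batches; each batch needs only earlier batches."""
    ts = TopologicalSorter(deps)
    ts.prepare()
    batches = []
    while ts.is_active():
        ready = ts.get_ready()   # nodes whose dependencies are all done
        batches.append(sorted(ready))
        ts.done(*ready)          # mark them created, unlocking dependents
    return batches


for batch in creation_batches(dependencies):
    print(batch)
# ['aws_vpc.main']
# ['aws_internet_gateway.igw', 'aws_subnet.public1']
# ['aws_instance.manager', 'aws_route_table.public']
```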

An example declaration is as follows:

```hcl
variable "base_cidr_block" { default = "10.1.0.0/16" }

resource "aws_vpc" "main" {
  cidr_block = var.base_cidr_block
}
```

The first word, resource or variable, is the block type, and in this case, we are declaring a resource and a variable. Within the curly braces are the arguments to the block, and it is helpful to think of these as attributes.

Blocks have labels. For the resource block, the labels are aws_vpc and main, and we can refer to this specific resource elsewhere by joining the labels together as aws_vpc.main. The name aws_vpc comes from the AWS provider and refers to VPC elements. In many cases, a block, whether a resource or another kind, supports attributes that can be accessed. For example, the CIDR for this VPC can be accessed as aws_vpc.main.cidr_block.

The general structure is as follows:

```hcl
<BLOCK TYPE> "<BLOCK LABEL>" "<BLOCK LABEL>" {
  # Block body
  <IDENTIFIER> = <EXPRESSION> # Argument
}
```

The block types include resource, which declares something related to the cloud infrastructure, variable, which declares a named value, output, which declares a result from a module, and a few others.

The structure of the block labels varies depending on the block type. For resource blocks, the first block label refers to the kind of resource, while the second is a name for the specific instance of that resource.

The set of arguments also varies depending on the block type. The Terraform documentation has an extensive reference covering every variant.

A Terraform module is a directory containing Terraform scripts. When the terraform command is run in a directory, it reads every script in that directory to build a graph of objects.

Within modules, we are dealing with a variety of values. We've already discussed resources, variables, and outputs. A resource is essentially a value that is an object related to something on the cloud hosting platform being used. A variable can be thought of as an input to a module, because its value can be supplied from outside the module in several ways. The output values are, as the name implies, the output from a module. Outputs can be printed on the console when a module is executed, or saved to a file and then used by other modules. The following snippet shows a variable declaration and an output declaration:

```hcl
variable "aws_region" {
  default     = "us-west-2"
  type        = string
  description = "Where in the AWS world the service will be hosted"
}

output "vpc_arn" { value = aws_vpc.notes.arn }
```

This is what the variable and output declarations look like. Every value has a data type. For variables, we can attach a description to aid in their documentation. The declaration uses the word default rather than value because the value given here is only a fallback. Terraform users can override it in several ways, such as the -var or -var-file command-line options.

Another type of value is local. Locals are neither input values (variables) nor output values. They exist only within the module that declares them, as illustrated in the following code snippet:

```hcl
locals {
  vpc_cidr     = "10.1.0.0/16"
  cidr_subnet1 = cidrsubnet(local.vpc_cidr, 8, 1)
  cidr_subnet2 = cidrsubnet(local.vpc_cidr, 8, 2)
  cidr_subnet3 = cidrsubnet(local.vpc_cidr, 8, 3)
  cidr_subnet4 = cidrsubnet(local.vpc_cidr, 8, 4)
}
```

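In this case, we've defined several locals for the CIDRs of subnets to be created within a VPC. The cidrsubnet function calculates a subnet's address range from a larger one. For example, cidrsubnet(local.vpc_cidr, 8, 1) adds 8 bits to the /16 prefix and picks subnet number 1, yielding 10.1.1.0/24.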
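To make the arithmetic concrete, here is a small POSIX shell sketch that mimics cidrsubnet for IPv4 addresses. It is not Terraform's implementation. The helper names ip_to_int, int_to_ip, and cidrsubnet are hypothetical and exist only for illustration. The newbits argument extends the prefix length (8 turns a /16 into a /24), and netnum chooses which of the resulting subnets to return.

```shell
#!/bin/sh
# Hypothetical IPv4-only re-creation of Terraform's cidrsubnet(prefix, newbits, netnum),
# written only to show the arithmetic that Terraform performs internally.

# Convert a dotted quad (e.g. 10.1.0.0) into a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split on dots into four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Convert a 32-bit integer back into a dotted quad.
int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# cidrsubnet PREFIX NEWBITS NETNUM
cidrsubnet() {
  cs_addr=${1%/*}                  # e.g. 10.1.0.0
  cs_len=${1#*/}                   # e.g. 16
  cs_newlen=$(( cs_len + $2 ))     # e.g. 16 + 8 = 24
  # Reject prefixes longer than /32 and netnum values that do not fit in NEWBITS bits.
  if [ "$cs_newlen" -gt 32 ] || [ "$3" -ge $(( 1 << $2 )) ]; then
    echo "cidrsubnet: netnum $3 does not fit in $2 bits of $1" >&2
    return 1
  fi
  # Keep only the network bits of the base address.
  cs_mask=$(( (0xFFFFFFFF << (32 - cs_len)) & 0xFFFFFFFF ))
  cs_net=$(( $(ip_to_int "$cs_addr") & cs_mask ))
  # Place NETNUM in the NEWBITS bits that follow the original prefix.
  cs_net=$(( cs_net | ($3 << (32 - cs_newlen)) ))
  echo "$(int_to_ip "$cs_net")/$cs_newlen"
}

for n in 1 2 3 4; do
  cidrsubnet 10.1.0.0/16 8 "$n"
done
```

Running it prints 10.1.1.0/24 through 10.1.4.0/24, which are the values the four locals above evaluate to.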

Another important feature of Terraform is the provider plugin. Each cloud system supported by Terraform requires a provider plugin that defines the specifics of using Terraform with that platform.

One effect of the provider plugins is that Terraform makes no attempt to be platform-independent. Instead, all declarable resources for a given platform are unique to that platform. You cannot directly reuse Terraform scripts for AWS on another system such as Azure because the resource objects are all different. What you can reuse is the knowledge of how Terraform approaches the declaration of cloud resources.

It is also worth looking for a Terraform extension for your programming editor. Some editors have extensions that support Terraform with syntax coloring, checking for simple errors, and even code completion.

That's enough theory, though. To really learn this, we need to start using Terraform. In the next section, we'll begin by implementing the VPC structure within which we'll deploy the Notes application stack.

Configuring an AWS VPC with Terraform

An AWS VPC is what it sounds like: a virtual private network within AWS that holds the cloud services you've defined. The AWS team designed the VPC service to look something like what you would construct in your own data center, but implemented on the AWS infrastructure.

In this section, we will construct a VPC consisting of a public subnet and a private subnet, an internet gateway, and security group definitions.

In the project work area, create a directory, terraform-swarm, that is a sibling to the notes and users directories.

In that directory, create a file named main.tf containing the following:

```hcl
provider "aws" {
  profile = "notes-app"
  region  = var.aws_region
}
```

This says to use the AWS provider plugin and to run with the notes-app AWS profile. The AWS provider plugin needs AWS credentials to call the AWS API, and it knows how to read the credentials file set up by aws configure.

As configured here, the AWS plugin looks for that credentials file in its default location (~/.aws/credentials) and uses the notes-app profile.

In addition, we have specified which AWS region to use. The reference, var.aws_region, is a Terraform variable. We use variables for any value that can legitimately vary. Variables can be easily customized to any value in several ways.

To support the variables, we create a file named variables.tf, starting with this:

```hcl
variable "aws_region" { default = "us-west-2" }
```

The default attribute sets a default value for the variable. As we saw earlier, the declaration can also specify the data type for a variable, and a description.

With this, we can now run our first Terraform command, as follows:

```
$ terraform init

Initializing the backend...

Initializing provider plugins...
- Checking for available provider plugins...
- Downloading plugin for provider "aws" (hashicorp/aws) 2.56.0...

The following providers do not have any version constraints in configuration, so the latest version was installed.
...
* provider.aws: version = "~> 2.56"

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see any changes that are required for your infrastructure. All Terraform commands should now work.
```

This initializes the current directory as a Terraform working directory. It creates a directory named .terraform, where the downloaded provider plugins are stored. Once resources have been deployed, Terraform also maintains a file named terraform.tfstate. Files with the .tfstate extension are known as state files. They are in JSON format and store the data Terraform collects from the platform (in this case, AWS) about what has been deployed. State files must not be committed to source code repositories because sensitive data can end up in them, so it is a good idea to list them in a .gitignore file.
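Here is a minimal sketch of such a .gitignore, assuming it lives in the terraform-swarm directory. The patterns are a common convention rather than something Terraform requires. *.tfstate matches terraform.tfstate, *.tfstate.* catches backups such as terraform.tfstate.backup, and .terraform/ excludes the downloaded plugins.

```shell
#!/bin/sh
# Append ignore patterns for Terraform state and plugin files to .gitignore,
# skipping any pattern that is already present so the script is safe to rerun.
for pattern in '.terraform/' '*.tfstate' '*.tfstate.*'; do
  # -q: quiet, -x: match the whole line, -F: treat the pattern as a fixed string
  if ! grep -qxF "$pattern" .gitignore 2>/dev/null; then
    printf '%s\n' "$pattern" >> .gitignore
  fi
done
```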

The instructions say we should run terraform plan, but before we do that, let's declare a few more things.

To declare the VPC and its related infrastructure, let's create a file named vpc.tf. Start with the following declaration:

```hcl
resource "aws_vpc" "notes" {
  cidr_block           = var.vpc_cidr
  enable_dns_support   = var.enable_dns_support
  enable_dns_hostnames = var.enable_dns_hostnames
  tags = {
    Name = var.vpc_name
  }
}
```

This declares the VPC. This will be the container for the infrastructure we're creating.

The cidr_block attribute determines the IPv4 address space that will be used for this VPC. CIDR notation is an internet standard. An example is 10.0.0.0/16, where /16 means that the first 16 bits of the address are fixed. That CIDR therefore covers every IP address starting with the 10.0 octets, from 10.0.0.0 to 10.0.255.255, which is 65,536 addresses.
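The membership rule can be checked with a little integer arithmetic. An address belongs to a CIDR block when its first N bits, where N is the prefix length, equal the block's first N bits. The following POSIX shell sketch applies that rule to the 10.0.0.0/16 range. The in_cidr helper is hypothetical and only illustrates the idea.

```shell
#!/bin/sh
# Hypothetical helper: succeed if IPv4 address $1 lies inside CIDR block $2.

# Convert a dotted quad into a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

in_cidr() {
  ic_len=${2#*/}
  # The mask keeps the top ic_len bits; a /0 mask keeps nothing and matches everything.
  ic_mask=$(( (0xFFFFFFFF << (32 - ic_len)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & ic_mask )) -eq $(( $(ip_to_int "${2%/*}") & ic_mask )) ]
}

for ip in 10.0.0.1 10.0.255.254 10.1.0.1; do
  if in_cidr "$ip" 10.0.0.0/16; then
    echo "$ip is inside 10.0.0.0/16"
  else
    echo "$ip is outside 10.0.0.0/16"
  fi
done
```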

The enable_dns_support and enable_dns_hostnames attributes determine whether Domain Name System (DNS) names will be generated for certain resources attached to the VPC. DNS names can assist with one resource finding other resources at runtime.

The tags attribute is used for attaching name/value pairs to resources. AWS uses the Name tag as the display name for the resource. Every AWS resource has a computer-generated, user-unfriendly ID made of a long coded string and, of course, we humans need user-friendly names for things. The Name tag is useful in that regard, and the AWS Management Console will use this name in its dashboards.

In variables.tf, add this to support these resource declarations:

```hcl
variable "enable_dns_support"   { default = true }
variable "enable_dns_hostnames" { default = true }
variable "project_name"         { default = "notes" }
variable "vpc_name"             { default = "notes-vpc" }
variable "vpc_cidr"             { default = "10.0.0.0/16" }
```

These values will be used throughout the project. For example, var.project_name will be widely used as the basis for creating name tags for deployed resources.

Add the following to vpc.tf:

```hcl
data "aws_availability_zones" "available" {
  state = "available"
}
```

Where resource blocks declare something on the hosting platform (in this case, AWS), data blocks retrieve data from the hosting platform. In this case, we are retrieving a list of AZs for the currently selected region. We'll use this later when declaring certain resources.

Configuring the AWS gateway and subnet resources

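Remember that a public subnet is associated with an internet gateway, and a private subnet is associated with a NAT gateway. The difference determines what kind of internet access the devices attached to each subnet have. Devices on the public subnet can be given public IP addresses and be reached from the internet. Devices on the private subnet can make outbound connections through the NAT gateway but cannot accept connections from the internet.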

Create a file named gw.tf containing the following:

```hcl
resource "aws_internet_gateway" "igw" {
  vpc_id = aws_vpc.notes.id
  tags = {
    Name = "${var.project_name}-IGW"
  }
}

resource "aws_eip" "gw" {
  vpc        = true
  depends_on = [ aws_internet_gateway.igw ]
  tags = {
    Name = "${var.project_name}-EIP"
  }
}

resource "aws_nat_gateway" "gw" {
  subnet_id     = aws_subnet.public1.id
  allocation_id = aws_eip.gw.id
  tags = {
    Name = "${var.project_name}-NAT"
  }
}
```

This declares the internet gateway and the NAT gateway. Remember that internet gateways are used with public subnets, and NAT gateways are used with private subnets.

An Elastic IP (EIP) resource is how a static public IP address is assigned. Any device that is to be visible to the public must be on a public subnet and have a public IP address. Because the NAT gateway faces the public internet, it requires an EIP, which is why aws_nat_gateway.gw takes the EIP's ID as its allocation_id.

For the subnets, create a file named subnets.tf containing the following:

```hcl
resource "aws_subnet" "public1" {
  vpc_id            = aws_vpc.notes.id
  cidr_block        = var.public1_cidr
  availability_zone = data.aws_availability_zones.available.names[0]
  tags = {
    Name = "${var.project_name}-net-public1"
  }
}

resource "aws_subnet" "private1" {
  vpc_id            = aws_vpc.notes.id
  cidr_block        = var.private1_cidr
  availability_zone = data.aws_availability_zones.available.names[0]
  tags = {
    Name = "${var.project_name}-net-private1"
  }
}
```

This declares the public and private subnets. Notice that each subnet is assigned to a specific AZ. It would be easy to support more subnets by adding ones named public2, public3, private2, private3, and so on. If you do so, it would be helpful to spread them across AZs. Deploying in multiple AZs is recommended so that if one AZ goes down, the application keeps running in the others.

The [0] notation is what it looks like: indexing into an array. The value data.aws_availability_zones.available.names is a list (Terraform's equivalent of an array), and [0] accesses its first element, just as you'd expect. Lists are just one of the data structures offered by Terraform.
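If you add more subnets, one common way to spread them over AZs is to index the AZ list with the subnet number modulo the list length. Terraform's element function wraps around in the same way: element(list, 3) on a three-item list returns the first item. The POSIX shell sketch below shows that indexing. The az_for_subnet function and the AZ names are hypothetical placeholders for whatever data.aws_availability_zones.available.names returns in your region.

```shell
#!/bin/sh
# Pick an AZ for subnet number $1 (0-based) from the remaining arguments,
# wrapping around the list the way Terraform's element() function does.
az_for_subnet() {
  az_index=$(( $1 % ($# - 1) ))   # $# - 1 is the number of AZ names supplied
  shift $(( az_index + 1 ))       # drop the index argument plus az_index names
  echo "$1"
}

# Hypothetical AZ names; the real list comes from the aws_availability_zones data source.
for i in 0 1 2 3; do
  echo "subnet $i -> $(az_for_subnet "$i" us-west-2a us-west-2b us-west-2c)"
done
```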

Each subnet has its own CIDR (IP address range), and to support this, we need these CIDR assignments listed in variables.tf, as follows:

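```hcl
# vpc_cidr was declared earlier; it is shown here commented out only so you can see
# that the subnet ranges fall inside it. Declaring the same variable twice is an error.
# variable "vpc_cidr" { default = "10.0.0.0/16" }
variable "public1_cidr"  { default = "10.0.1.0/24" }
variable "private1_cidr" { default = "10.0.3.0/24" }
```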

These are the CIDRs corresponding to the resources declared earlier.
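Whenever you change these values, two properties are worth checking. Each subnet CIDR must lie within vpc_cidr, because AWS rejects a subnet outside the VPC range. Subnets must also not overlap one another. The POSIX shell sketch below checks both properties for the default values. cidr_range, cidr_within, and cidr_overlaps are hypothetical helpers, not part of Terraform or the AWS CLI.

```shell
#!/bin/sh
# Hypothetical helpers for sanity-checking subnet CIDRs against the VPC CIDR.

ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Print the first and last address of a CIDR block as integers.
cidr_range() {
  cr_len=${1#*/}
  cr_size=$(( 1 << (32 - cr_len) ))
  cr_first=$(( $(ip_to_int "${1%/*}") & ~(cr_size - 1) & 0xFFFFFFFF ))
  echo "$cr_first $(( cr_first + cr_size - 1 ))"
}

# Succeed if CIDR $1 lies entirely inside CIDR $2.
cidr_within() {
  set -- $(cidr_range "$1") $(cidr_range "$2")   # first1 last1 first2 last2
  [ "$1" -ge "$3" ] && [ "$2" -le "$4" ]
}

# Succeed if CIDRs $1 and $2 share at least one address.
cidr_overlaps() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

vpc=10.0.0.0/16
public1=10.0.1.0/24
private1=10.0.3.0/24

cidr_within "$public1" "$vpc" && echo "public1 is inside the VPC"
cidr_within "$private1" "$vpc" && echo "private1 is inside the VPC"
cidr_overlaps "$public1" "$private1" || echo "public1 and private1 do not overlap"
```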

For these pieces to work together, we need appropriate routing tables to be configured. Create a file named routing.tf containing the following:

```hcl
resource "aws_route" "route-public" {
  route_table_id         = aws_vpc.notes.main_route_table_id
  destination_cidr_block = "0.0.0.0/0"
  gateway_id             = aws_internet_gateway.igw.id
}

resource "aws_route_table" "private" {
  vpc_id = aws_vpc.notes.id
  route {
    cidr_block     = "0.0.0.0/0"
    nat_gateway_id = aws_nat_gateway.gw.id
  }
  tags = {
    Name = "${var.project_name}-rt-private"
  }
}

resource "aws_route_table_association" "public1" {
  subnet_id      = aws_subnet.public1.id
  route_table_id = aws_vpc.notes.main_route_table_id
}

resource "aws_route_table_association" "private1" {
  subnet_id      = aws_subnet.private1.id
  route_table_id = aws_route_table.private.id
}
```

To configure routing for the public subnet, we modify the VPC's main route table, adding a rule that says public internet traffic (destination 0.0.0.0/0) is to be sent to the internet gateway. We also have a route table association declaring that the public subnet uses this route table.

For aws_route_table.private, the routing table for private subnets, the declaration says to send public internet traffic to the NAT gateway. In the route table associations, this table is used for the private subnet.

Earlier, we said the difference between a public and private subnet is whether public internet traffic is sent to the internet gateway or the NAT gateway. These declarations are how that's implemented.
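A route table is consulted by longest-prefix match. Among the routes whose destination contains the packet's destination address, the most specific one wins. Every AWS route table also contains an automatically created local route for the VPC CIDR. That is why traffic between the two subnets stays inside the VPC, while everything else falls through to the 0.0.0.0/0 rule. The following POSIX shell sketch models both route tables that way. The route entries are plain text, and lookup_route is a hypothetical function, not an AWS API.

```shell
#!/bin/sh
# Model of a route table lookup using longest-prefix match (illustration only).

ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Routes as "destination target" lines. AWS adds the local route automatically;
# the 0.0.0.0/0 routes correspond to aws_route.route-public and aws_route_table.private.
PUBLIC_ROUTES='10.0.0.0/16 local
0.0.0.0/0 internet-gateway'
PRIVATE_ROUTES='10.0.0.0/16 local
0.0.0.0/0 nat-gateway'

# lookup_route ADDRESS ROUTES: print the target of the most specific matching route.
lookup_route() {
  lr_ip=$(ip_to_int "$1")
  lr_best_len=-1
  lr_best_target=none
  while read -r lr_dest lr_target; do
    lr_len=${lr_dest#*/}
    lr_mask=$(( (0xFFFFFFFF << (32 - lr_len)) & 0xFFFFFFFF ))
    if [ $(( lr_ip & lr_mask )) -eq $(( $(ip_to_int "${lr_dest%/*}") & lr_mask )) ] &&
       [ "$lr_len" -gt "$lr_best_len" ]; then
      lr_best_len=$lr_len
      lr_best_target=$lr_target
    fi
  done <<EOF
$2
EOF
  echo "$lr_best_target"
}

lookup_route 10.0.1.55 "$PRIVATE_ROUTES"   # another host in the VPC: local
lookup_route 8.8.8.8 "$PRIVATE_ROUTES"     # internet host from the private subnet
lookup_route 8.8.8.8 "$PUBLIC_ROUTES"      # internet host from the public subnet
```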

In this section, we've declared the VPC, subnets, gateways, and routing tables. Together, these form the infrastructure within which we'll deploy our Docker Swarm.

Before attaching the EC2 instances in which the swarm will live, let's deploy this to AWS and explore what gets set up.

Deploying the infrastructure to AWS using Terraform

We have now declared the bones of the AWS infrastructure we'll need: the VPC, the subnets, the gateways, and the routing tables. Let's deploy this to AWS and use the AWS console to explore what was created.

Earlier, we ran terraform init to initialize Terraform in our working directory. When we did so, it suggested that we run the following command:

```
$ terraform plan
Refreshing Terraform state in-memory prior to plan...
The refreshed state will be used to calculate this plan, but will not be
persisted to local or remote state storage.

data.aws_availability_zones.available: Refreshing state...

------------------------------------------------------------------------

An execution plan has been generated and is shown below.
Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # aws_eip.gw will be created
  + resource "aws_eip" "gw" {
      + allocation_id     = (known after apply)
      + association_id    = (known after apply)
      + customer_owned_ip = (known after apply)
      + domain            = (known after apply)
      + id                = (known after apply)
      + instance          = (known after apply)
      + network_interface = (known after apply)
      + private_dns       = (known after apply)
      + private_ip        = (known after apply)
      + public_dns        = (known after apply)
      + public_ip         = (known after apply)
      + public_ipv4_pool  = (known after apply)
      + tags              = {
          + "Name" = "notes-EIP"
        }
      + vpc               = true
    }

...
```

This command scans the Terraform files in the current directory and first determines that everything has the correct syntax, that all the values are known, and so forth. If any problems are encountered, it stops right away with error messages such as the following:

```
Error: Reference to undeclared resource

  on outputs.tf line 8, in output "subnet_public2_id":
   8: output "subnet_public2_id" { value = aws_subnet.public2.id }

A managed resource "aws_subnet" "public2" has not been declared in the root
module.
```

Terraform's error messages are usually self-explanatory. In this case, the configuration had originally declared two public and two private subnets and was later reduced to one of each. This output declaration was left over from the earlier version and referred to a subnet that no longer existed, so the fix was simply to delete it.

The other thing terraform plan does is construct a graph of all the declarations and print out a listing. This gives you an idea of what Terraform intends to deploy on to the chosen cloud platform. It is therefore your opportunity to examine the intended infrastructure and make sure it is what you want to use.

Once you're satisfied, run the following command:

```
$ terraform apply
data.aws_availability_zones.available: Refreshing state...

An execution plan has been generated and is shown below.
Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:
...
Plan: 10 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes
...
Apply complete! Resources: 10 added, 0 changed, 0 destroyed.

Outputs:

aws_region = us-west-2
igw_id = igw-006eb101f8cb423d4
private1_cidr = 10.0.3.0/24
public1_cidr = 10.0.1.0/24
subnet_private1_id = subnet-0a9044daea298d1b2
subnet_public1_id = subnet-07e6f8ed6cc6f8397
vpc_arn = arn:aws:ec2:us-west-2:098106984154:vpc/vpc-074b2dfa7b353486f
vpc_cidr = 10.0.0.0/16
vpc_id = vpc-074b2dfa7b353486f
vpc_name = notes-vpc
```

With terraform apply, the report shows the difference between the actual deployed state and the desired state as expressed in the Terraform files. In this case, nothing has been deployed yet, so everything in the files will be deployed. In other cases, you might have deployed a system and then made a change. Terraform then works out which changes have to be deployed based on the changes you've made. Once it has calculated that, Terraform asks for permission to proceed, and if we answer yes, it launches the desired infrastructure.
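The add/destroy part of that comparison can be modeled as a set difference between the resource addresses declared in the configuration and those recorded in the state. The POSIX shell sketch below is a deliberately simplified model, not how Terraform is implemented. It ignores attribute changes (the "to change" count) and dependency ordering. The two lists are hypothetical stand-ins for what Terraform derives from the .tf files and the state file. The ten VPC resources are the ones declared earlier in this section.

```shell
#!/bin/sh
# Simplified model of the add/destroy counts in a plan (illustration only):
# compare resource addresses declared in the .tf files with those recorded in state.

# count_missing LIST_A LIST_B: number of whitespace-separated items in A absent from B.
count_missing() {
  cm_b=" $(echo $2) "                    # normalize B to single spaces, padded at both ends
  cm_count=0
  for cm_item in $1; do
    case "$cm_b" in
      *" $cm_item "*) ;;                 # already present in B
      *) cm_count=$(( cm_count + 1 )) ;; # missing from B
    esac
  done
  echo "$cm_count"
}

# plan_summary DESIRED ACTUAL
plan_summary() {
  echo "Plan: $(count_missing "$1" "$2") to add, $(count_missing "$2" "$1") to destroy."
}

# The ten resources declared in the VPC files of this section.
VPC_RESOURCES="aws_vpc.notes aws_internet_gateway.igw aws_eip.gw aws_nat_gateway.gw
aws_subnet.public1 aws_subnet.private1 aws_route.route-public aws_route_table.private
aws_route_table_association.public1 aws_route_table_association.private1"
EC2_RESOURCES="aws_instance.public aws_security_group.ec2-public-sg"

plan_summary "$VPC_RESOURCES" ""                               # first apply: empty state
plan_summary "$VPC_RESOURCES $EC2_RESOURCES" "$VPC_RESOURCES"  # after adding the EC2 instance
```

The two calls print "Plan: 10 to add, 0 to destroy." and "Plan: 2 to add, 0 to destroy.", which match the add counts Terraform reports in this chapter.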

Once finished, it tells you what happened. Part of the report is the values of the output declarations in the scripts. These are printed on the console and also saved in the state file.

To see what was created, let's head to the AWS console and navigate to the VPC area, as follows:

Compare the VPC ID in the screenshot with the one shown in the Terraform output, and you'll see that they match. What's shown here is the main routing table, the CIDR, and other settings we made in our scripts. Every AWS account has a default VPC that's presumably meant for experiments. It is better practice to create a VPC for each project so that each project's resources are kept separate from those of other projects.

The sidebar contains links for further dashboards for subnets, route tables, and other things, and an example dashboard can be seen in the following screenshot:

For example, this is the NAT gateway dashboard showing the one created for this project.

Another way to explore is with the AWS CLI tool. Using Terraform doesn't prevent us from also using the CLI, as the following command shows:

```
$ aws ec2 describe-vpcs --vpc-ids vpc-074b2dfa7b353486f
{
    "Vpcs": [ {
        "CidrBlock": "10.0.0.0/16",
        "DhcpOptionsId": "dopt-e0c05d98",
        "State": "available",
        "VpcId": "vpc-074b2dfa7b353486f",
        "OwnerId": "098106984154",
        "InstanceTenancy": "default",
        "CidrBlockAssociationSet": [ {
            "AssociationId": "vpc-cidr-assoc-0f827bcc4fbb9fd62",
            "CidrBlock": "10.0.0.0/16",
            "CidrBlockState": {
                "State": "associated"
            }
        } ],
        "IsDefault": false,
        "Tags": [ {
            "Key": "Name",
            "Value": "notes-vpc"
        } ]
    } ]
}
```

This lists the parameters for the VPC that was created.

Remember to either configure the AWS_PROFILE environment variable or use --profile on the command line.

To list data on the subnets, run the following command:

```
$ aws ec2 describe-subnets --filters "Name=vpc-id,Values=vpc-074b2dfa7b353486f"
{
    "Subnets": [
        { ... },
        { ... }
    ]
}
```

To focus on the subnets for a given VPC, we use the --filters option, passing in the filter named vpc-id and the VPC ID for which to filter.

For documentation relating to the EC2 sub-commands, refer to https://docs.aws.amazon.com/cli/latest/reference/ec2/index.html.

The AWS CLI tool has an extensive list of sub-commands and options. There are so many that it is easy to get lost, so read the documentation carefully.

In this section, we learned how to use Terraform to set up the VPC and related infrastructure resources, and we also learned how to navigate both the AWS console and the AWS CLI to explore what had been created.

Our next step is to set up an initial Docker Swarm cluster by deploying an EC2 instance to AWS.

Setting up a Docker Swarm cluster on AWS EC2

What we have set up is essentially a blank slate. AWS has a long list of offerings that could be deployed to the VPC we've created. In this section, we'll set up a single EC2 instance, install Docker on it, and initialize a single-node Docker Swarm cluster. We'll use this to familiarize ourselves with Docker Swarm. In the remainder of the chapter, we'll build more servers to create a larger swarm cluster for the full deployment of Notes.

A Docker Swarm cluster is simply a group of servers running Docker that have been joined together into a common pool. The Docker Swarm orchestrator is bundled with Docker Engine, but it is disabled by default. To create a swarm, we enable swarm mode by running docker swarm init on one system. We then run docker swarm join on each additional system we want to be part of the cluster. From there, Docker Swarm automatically takes care of a long list of tasks. Its features include the following:

- Horizontal scaling: When deploying a Docker service to a swarm, you tell it the desired number of instances as well as the memory and CPU requirements. The swarm takes that and computes the best distribution of tasks to nodes in the swarm.

- Maintaining the desired state: From the services deployed to a swarm, the swarm calculates the desired state of the system and tracks its actual current state. If one of the nodes crashes, the swarm readjusts the running tasks, replacing the ones that vaporized along with the crashed server.

- Multi-host networking: The overlay network driver creates networks that span the machines in the swarm, so containers running on different nodes can communicate with each other.

- Secure by default: Swarm mode uses strong Transport Layer Security (TLS) encryption for all communication between nodes.

- Rolling updates: You can deploy an update to a service in such a way that the swarm incrementally brings down existing service containers, replacing them with updated newer containers.

We will use this section not only to learn how to set up a Docker Swarm, but also to learn something about how Docker orchestration works.

To get started, we'll set up a single-node swarm on a single EC2 instance in order to learn some basics, before we move on to deploying a multi-node swarm and deploying the full Notes stack.

Deploying a single-node Docker Swarm on a single EC2 instance

For a quick introduction to Docker Swarm, let's start by installing Docker on a single EC2 node. We can kick the tires by trying a few commands and exploring the resulting system.

This will involve deploying Ubuntu 20.04 on an EC2 instance, configuring it to have the latest Docker Engine, and initializing swarm mode.

Adding an EC2 instance and configuring Docker

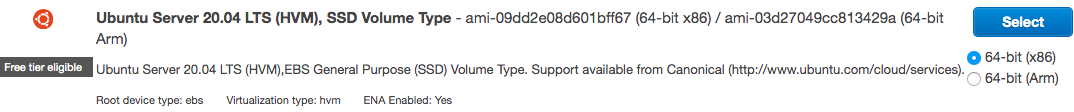

To launch an EC2 instance, we must first select which operating system to install. There are thousands of operating system configurations available. Each of these configurations is identified by an AMI code, where AMI stands for Amazon Machine Image.

To find your desired AMI, navigate to the EC2 dashboard on the AWS console. Then, click on the Launch Instance button, which starts a wizard-like interface to launch an instance. You can, if you like, go through the whole wizard since that is one way to learn about EC2 instances. We can search the AMIs via the first page of that wizard, where there is a search box.

For this exercise, we will use Ubuntu 20.04, so enter Ubuntu and then scroll down to find the correct version, as illustrated in the following screenshot:

This is what the desired entry looks like. The AMI code starts with ami- and we see one version for x86 CPUs, and another for ARM (previously Advanced RISC Machine). ARM processors, by the way, are not just for your cell phone but are also used in servers. There is no need to launch an EC2 instance from here since we will instead do so with Terraform.

Another attribute to select is the instance size. AWS supports a long list of sizes that relate to the amount of memory, CPU cores, and disk space. For a chart of the available instance types, click on the Select button to proceed to the second page of the wizard, which shows a table of instance types and their attributes. For this exercise, we will use the t2.micro instance type because it is eligible for the free tier.

Create a file named ec2-public.tf containing the following:

```hcl
resource "aws_instance" "public" {
  ami                         = var.ami_id
  instance_type               = var.instance_type
  subnet_id                   = aws_subnet.public1.id
  key_name                    = var.key_pair
  vpc_security_group_ids      = [ aws_security_group.ec2-public-sg.id ]
  associate_public_ip_address = true
  tags = {
    Name = "${var.project_name}-ec2-public"
  }
  depends_on = [ aws_vpc.notes, aws_internet_gateway.igw ]
  # Build the boot script by joining these pieces with newlines.
  user_data = join("\n", [
    "#!/bin/sh",
    file("sh/docker_install.sh"),
    "docker swarm init",
    "sudo hostname ${var.project_name}-public"
  ])
}
```

In the Terraform AWS provider, the resource name for EC2 instances is aws_instance. Since this instance is attached to our public subnet, we'll call it aws_instance.public. Because it is a public EC2 instance, the associate_public_ip_address attribute is set to true.

The attributes include the AMI ID, the instance type, the ID for the subnet, and more. The key_name attribute refers to the name of an SSH key we'll use to log in to the EC2 instance. We'll discuss these key pairs later. The vpc_security_group_ids attribute is a reference to a security group we'll apply to the EC2 instance. The depends_on attribute causes Terraform to wait for the creation of the resources named in the array. The user_data attribute is a shell script that is executed inside the instance once it is created.

For the AMI, instance type, and key-pair data, add these entries to variables.tf, as follows:

```hcl
variable "ami_id"        { default = "ami-09dd2e08d601bff67" }
variable "instance_type" { default = "t2.micro" }
variable "key_pair"      { default = "notes-app-key-pair" }
```

The AMI ID shown here is specifically for Ubuntu 20.04 in us-west-2; other regions have different AMI IDs. Set key_pair to the name you chose when creating your key pair earlier.

It is not necessary to add the key-pair file to this directory, nor to reference the file you downloaded in these scripts. Instead, you simply give the name of the key pair. In our example, we named it notes-app-key-pair, and downloaded notes-app-key-pair.pem.

The user_data feature is very useful since it lets us customize an instance after creation. We're using it to automate the Docker setup on the instances. This attribute takes a string containing a shell script that will execute once the instance is launched. Rather than insert that script inline in the Terraform code, we have created a set of files that are shell script snippets. The Terraform file function reads the named file and returns its contents as a string. The Terraform join function takes a list of strings and concatenates them with the delimiter (here, a newline) in between. Together, the two functions construct a shell script. That script first installs Docker Engine, then initializes Docker Swarm mode, and finally changes the hostname to help us remember that this is the public EC2 instance.
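To see the script this produces before launching anything, you can assemble the same pieces locally. The following POSIX shell sketch approximates the join("\n", [...]) expression. It uses printf '%s\n' to write each piece followed by a newline, so the result may differ from Terraform's in trailing newlines. The build_user_data function name is hypothetical.

```shell
#!/bin/sh
# Local approximation of the user_data string assembled in ec2-public.tf.
# build_user_data INSTALL_SCRIPT PROJECT_NAME
build_user_data() {
  printf '%s\n' \
    '#!/bin/sh' \
    "$(cat "$1")" \
    'docker swarm init' \
    "sudo hostname $2-public"
}

# Usage, from the terraform-swarm directory:
#   build_user_data sh/docker_install.sh notes
```

Because the hostname command comes last, seeing the new hostname on the instance is a reasonable sign that the whole script has run.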

Create a directory named sh in which we'll create shell scripts, and in that directory create a file named docker_install.sh. To this file, add the following:

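```shell
# Let apt-get download packages over HTTPS
sudo apt-get update
sudo apt-get upgrade -y
sudo apt-get -y install apt-transport-https \
    ca-certificates curl gnupg-agent software-properties-common
# Add Docker's package-signing key and check its fingerprint
curl -fsSL https://download.docker.com/linux/ubuntu/gpg \
    | sudo apt-key add -
sudo apt-key fingerprint 0EBFCD88
# Register the Docker package repository for this Ubuntu release
sudo add-apt-repository \
    "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"
# Install Docker Engine and related tools
sudo apt-get update
sudo apt-get upgrade -y
sudo apt-get install -y docker-ce docker-ce-cli containerd.io
# Let the ubuntu user run docker without sudo (if the package already created
# the docker group, groupadd just reports that it exists)
sudo groupadd docker
sudo usermod -aG docker ubuntu
# Start Docker automatically at boot
sudo systemctl enable docker
```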

This script is derived from the official instructions for installing Docker Engine Community Edition (CE) on Ubuntu. The first portion adds support for apt-get to download packages from HTTPS repositories. It then configures the Docker package repository in Ubuntu, after which it installs Docker and related tools. Finally, it ensures that the docker group exists, adds the ubuntu user ID to that group, and enables the Docker service to start at boot. On the Ubuntu AMI, ubuntu is the default user ID for the EC2 administrator.

For this EC2 instance, we also run docker swarm init to initialize the Docker Swarm. For other EC2 instances, we do not run this command. The method used for initializing the user_data attribute lets us easily have a custom configuration script for each EC2 instance. For the other instances, we'll only run docker_install.sh, whereas for this instance, we'll also initialize the swarm.

Back in ec2-public.tf, we have two more things to do, and then we can launch the EC2 instance. Have a look at the following code block:

```hcl
resource "aws_security_group" "ec2-public-sg" {
  name        = "${var.project_name}-public-security-group"
  description = "allow inbound access to the EC2 instance"
  vpc_id      = aws_vpc.notes.id

  ingress {
    protocol    = "TCP"
    from_port   = 22
    to_port     = 22
    cidr_blocks = [ "0.0.0.0/0" ]
  }

  ingress {
    protocol    = "TCP"
    from_port   = 80
    to_port     = 80
    cidr_blocks = [ "0.0.0.0/0" ]
  }

  egress {
    protocol    = "-1"
    from_port   = 0
    to_port     = 0
    cidr_blocks = [ "0.0.0.0/0" ]
  }
}
```

This is the security group declaration for the public EC2 instance. Remember that a security group describes the rules of a firewall that is attached to many kinds of AWS objects. This security group was already referenced in declaring aws_instance.public.

The main feature of security groups is the ingress and egress rules. As the words imply, ingress rules describe the network traffic allowed to enter the resource, and egress rules describe what's allowed to be sent by the resource. If you have to look up those words in a dictionary, you're not alone.

We have two ingress rules, and the first allows traffic on port 22, which covers SSH traffic. The second allows traffic on port 80, covering HTTP. We'll add more Docker rules later when they're needed.

The egress rule allows the EC2 instance to send any traffic to any machine on the internet.

These ingress rules open only two ports, which limits the attack surface any miscreants can exploit.
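Security groups are allow-lists. Traffic is admitted only if some rule matches its protocol, port, and source, and anything unmatched is dropped. The POSIX shell sketch below models the two ingress rules above to make that concrete. It is an illustration, not an AWS API; sg_allows and the rule format are hypothetical. Port 2377, which Docker Swarm uses for cluster management traffic, is blocked until a rule for it is added.

```shell
#!/bin/sh
# Simplified model of security group ingress evaluation (illustration only).
# Each rule is "protocol from_port to_port source_cidr". Security groups contain only
# allow rules, so traffic that matches no rule is dropped.
INGRESS_RULES='tcp 22 22 0.0.0.0/0
tcp 80 80 0.0.0.0/0'

ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# in_cidr ADDRESS CIDR: succeed if ADDRESS lies inside CIDR.
in_cidr() {
  ic_len=${2#*/}
  ic_mask=$(( (0xFFFFFFFF << (32 - ic_len)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & ic_mask )) -eq $(( $(ip_to_int "${2%/*}") & ic_mask )) ]
}

# sg_allows PROTOCOL PORT SOURCE_IP: succeed if any ingress rule admits the traffic.
sg_allows() {
  while read -r sa_proto sa_from sa_to sa_cidr; do
    if [ "$sa_proto" = "$1" ] && [ "$2" -ge "$sa_from" ] && [ "$2" -le "$sa_to" ] &&
       in_cidr "$3" "$sa_cidr"; then
      return 0
    fi
  done <<EOF
$INGRESS_RULES
EOF
  return 1   # no rule matched, so the traffic is dropped
}

# 203.0.113.7 is a documentation address standing in for any client on the internet.
for port in 22 80 2377; do
  if sg_allows tcp "$port" 203.0.113.7; then
    echo "tcp/$port allowed"
  else
    echo "tcp/$port blocked"
  fi
done
```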

The final task is to add these output declarations to ec2-public.tf, as follows:

```hcl
output "ec2-public-arn"  { value = aws_instance.public.arn }
output "ec2-public-dns"  { value = aws_instance.public.public_dns }
output "ec2-public-ip"   { value = aws_instance.public.public_ip }
output "ec2-private-dns" { value = aws_instance.public.private_dns }
output "ec2-private-ip"  { value = aws_instance.public.private_ip }
```

This will let us know the public IP address and public DNS name. If we're interested, the outputs also tell us the private IP address and DNS name.

Launching the EC2 instance on AWS

We have now added the Terraform declarations for creating an EC2 instance.

We're now ready to deploy this to AWS and see what we can do with it. We already know what to do, so let's run the following command:

```
$ terraform plan
...
Plan: 2 to add, 0 to change, 0 to destroy.
```

If the VPC infrastructure were already running, you would get output similar to this. The addition is two new objects, aws_instance.public and aws_security_group.ec2-public-sg. This looks good, so we proceed to deployment, as follows:

```
$ terraform apply
...
Plan: 2 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes
...
Apply complete! Resources: 2 added, 0 changed, 0 destroyed.

Outputs:

aws_region = us-west-2
ec2-private-dns = ip-10-0-1-55.us-west-2.compute.internal
ec2-private-ip = 10.0.1.55
ec2-public-arn = arn:aws:ec2:us-west-2:098106984154:instance/i-0046b28d65a4f555d
ec2-public-dns = ec2-54-213-6-249.us-west-2.compute.amazonaws.com
ec2-public-ip = 54.213.6.249
igw_id = igw-006eb101f8cb423d4
private1_cidr = 10.0.3.0/24
public1_cidr = 10.0.1.0/24
subnet_private1_id = subnet-0a9044daea298d1b2
subnet_public1_id = subnet-07e6f8ed6cc6f8397
vpc_arn = arn:aws:ec2:us-west-2:098106984154:vpc/vpc-074b2dfa7b353486f
vpc_cidr = 10.0.0.0/16
vpc_id = vpc-074b2dfa7b353486f
vpc_name = notes-vpc
```

This built our EC2 instance, and we now have its IP address and domain name. Because the initialization script takes a couple of minutes to run, it's wise to wait a short time before testing the system.

The ec2-public-ip value is the public IP address of the EC2 instance. In the following examples, we will use the placeholder PUBLIC-IP-ADDRESS, and you must, of course, substitute the IP address assigned to your EC2 instance.
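A script does not have to guess how long to wait. It can poll until the instance accepts TCP connections on port 22. The following minimal sketch uses Node's built-in net module. waitForPort and its retry parameters are hypothetical choices. An open port only shows that the SSH daemon is listening, not that the initialization script has finished:

```javascript
'use strict';
const net = require('net');

// Attempt one TCP connection; resolve true on success, false on error or timeout.
function tryConnect(host, port, timeoutMs) {
  return new Promise((resolve) => {
    const socket = net.createConnection({ host, port });
    const done = (ok) => {
      socket.destroy();
      resolve(ok);
    };
    socket.setTimeout(timeoutMs, () => done(false));
    socket.once('connect', () => done(true));
    socket.once('error', () => done(false));
  });
}

// Hypothetical helper: retry until the port accepts connections,
// waiting delayMs between attempts, and give up after `retries` attempts.
async function waitForPort(host, port, { retries = 30, delayMs = 5000, timeoutMs = 3000 } = {}) {
  for (let attempt = 1; attempt <= retries; attempt++) {
    if (await tryConnect(host, port, timeoutMs)) return true;
    if (attempt < retries) {
      await new Promise((r) => setTimeout(r, delayMs));
    }
  }
  return false;
}
```

For example, await waitForPort('PUBLIC-IP-ADDRESS', 22) could run before the ssh command, with the address taken from the ec2-public-ip output.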

We can log in to the EC2 instance like so:

$ ssh -i ~/Downloads/notes-app-key-pair.pem ubuntu@PUBLIC-IP-ADDRESS

The authenticity of host '54.213.6.249 (54.213.6.249)' can't be established.

ECDSA key fingerprint is SHA256:DOGsiDjWZ6rkj1+AiMcqqy/naAku5b4VJUgZqtlwPg8.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '54.213.6.249' (ECDSA) to the list of known hosts.

Welcome to Ubuntu 20.04 LTS (GNU/Linux 5.4.0-1009-aws x86_64)

...

To run a command as administrator (user "root"), use "sudo <command>".

See "man sudo_root" for details.

ubuntu@notes-public:~$ hostname

notes-public

On Linux or macOS, where SSH is built in, the command is as shown here. The -i option lets us specify the Privacy Enhanced Mail (PEM) file that AWS provided for the key pair. If you're on Windows and using PuTTY, you would instead tell it which PuTTY Private Key (PPK) file to use. The connection parameters are otherwise similar.

This lands us at the command-line prompt of the EC2 instance. We see that it is running Ubuntu 20.04 and that the hostname is set to notes-public, as reflected in the command prompt and in the output of the hostname command. This tells us that our initialization script ran, because setting the hostname was the last configuration task it performed.

Handling the AWS EC2 key-pair file

Earlier, we said to safely store the key-pair file somewhere on your computer. In the previous section, we showed how to use the PEM file with SSH to log in to the EC2 instance. Namely, we use the PEM file like so:

$ ssh -i /path/to/key-pair.pem USER-ID@HOST-IP

It can be inconvenient to remember to add the -i flag every time we use SSH. To avoid having to use this option, run this command:

$ ssh-add /path/to/key-pair.pem

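As the command name implies, this adds the private key to the SSH authentication agent (ssh-agent), which then supplies the key automatically whenever SSH needs it. The agent does not remember keys across restarts, so this has to be rerun after every reboot of the computer. In exchange, it lets us access EC2 instances without having to remember the -i option.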

Testing the initial Docker Swarm

We now have an EC2 instance that should already be configured with Docker, which we can easily verify as follows:

ubuntu@notes-public:~$ docker run hello-world

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

0e03bdcc26d7: Pull complete

...

The setup script was also supposed to have initialized this EC2 instance as a Docker Swarm node, and the following command verifies whether that happened:

ubuntu@notes-public:~$ docker info

...

Swarm: active

NodeID: qfb1ljmw2fgp4ij18klowr8dp

Is Manager: true

ClusterID: 14p4sdfsdyoa8el0v9cqirm23

...

The docker info command, as the name implies, prints out a lot of information about the current Docker instance. In this case, the output includes verification that it is in Docker Swarm mode and that this is a Docker Swarm manager instance.
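A script can run the same check without reading through the full docker info output. The --format option of docker info accepts a Go template, and the Swarm section includes LocalNodeState and ControlAvailable fields. The following is a minimal Node.js sketch that assumes the docker CLI is on the PATH. parseSwarmInfo and swarmStatus are hypothetical helper names:

```javascript
'use strict';
const { execFileSync } = require('child_process');

// Hypothetical helper: interpret the JSON printed by
//   docker info --format '{{json .Swarm}}'
// LocalNodeState is "active" once the node is part of a swarm, and
// ControlAvailable is true when the node is a swarm manager.
function parseSwarmInfo(jsonText) {
  const swarm = JSON.parse(jsonText);
  return {
    nodeId: swarm.NodeID,
    active: swarm.LocalNodeState === 'active',
    isManager: swarm.ControlAvailable === true,
  };
}

// Runs the docker CLI and parses the Swarm section of its info output.
function swarmStatus() {
  const text = execFileSync('docker', ['info', '--format', '{{json .Swarm}}'], {
    encoding: 'utf8',
  });
  return parseSwarmInfo(text);
}
```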

Let's try a couple of swarm commands, as follows:

ubuntu@notes-public:~$ docker node ls

ID                            HOSTNAME       STATUS    AVAILABILITY   MANAGER STATUS   ENGINE VERSION
qfb1ljmw2fgp4ij18klowr8dp *   notes-public   Ready     Active         Leader           19.03.9

ubuntu@notes-public:~$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

The docker node command is for managing the nodes in a swarm. In this case, there is only one node (this one), and it is shown not only as a manager but also as the swarm leader. It seems it's easy to be the leader when you're the only node in the cluster.

The docker service command is for managing the services deployed in the swarm. In this context, a service is roughly the same as an entry in the services section of a Docker compose file. In other words, a service is not the running container but is an object describing the configuration for launching one or more instances of a given container.

To see what this means, let's start an nginx service, as follows:

ubuntu@notes-public:~$ docker service create --name nginx --replicas 1 -p 80:80 nginx

ephvpfgjwxgdwx7ab87e7nc9e

overall progress: 1 out of 1 tasks

1/1: running

verify: Service converged

ubuntu@notes-public:~$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

ephvpfgjwxgd nginx replicated 1/1 nginx:latest *:80->80/tcp

ubuntu@notes-public:~$ docker service ps nginx

ID             NAME      IMAGE          NODE           DESIRED STATE   CURRENT STATE            ERROR     PORTS
ag8b45t69am1   nginx.1   nginx:latest   notes-public   Running         Running 15 seconds ago

We started one service using the nginx image. We said to deploy one replica and to expose port 80. We chose the nginx image because it has a simple default HTML file that we can easily view, as illustrated in the following screenshot:

Simply paste the IP address of the EC2 instance into the browser location bar, and we're greeted with that default HTML.
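A script can run the same check. The following sketch uses Node's built-in http module to fetch the page and look for the "Welcome to nginx!" heading that the stock nginx image serves. fetchText and looksLikeNginxDefault are hypothetical names, and the heading text could change in future nginx releases:

```javascript
'use strict';
const http = require('http');

// Fetch a URL over plain HTTP and resolve with { status, body }.
function fetchText(url) {
  return new Promise((resolve, reject) => {
    const req = http.get(url, (res) => {
      res.setEncoding('utf8');
      let body = '';
      res.on('data', (chunk) => { body += chunk; });
      res.on('end', () => resolve({ status: res.statusCode, body }));
    });
    req.on('error', reject);
  });
}

// True if the response looks like nginx's default welcome page.
function looksLikeNginxDefault({ status, body }) {
  return status === 200 && body.includes('Welcome to nginx!');
}
```

For example, fetchText('http://PUBLIC-IP-ADDRESS/').then(looksLikeNginxDefault) should resolve to true while the nginx service is running.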

We also see from docker service ls and docker service ps that there is one instance of the service. Since this is a swarm, let's increase the number of nginx instances, as follows:

ubuntu@notes-public:~$ docker service update --replicas 3 nginx

nginx

overall progress: 3 out of 3 tasks

1/3: running

2/3: running

3/3: running

verify: Service converged

ubuntu@notes-public:~$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

ephvpfgjwxgd nginx replicated 3/3 nginx:latest *:80->80/tcp

ubuntu@notes-public:~$ docker service ps nginx

ID             NAME      IMAGE          NODE           DESIRED STATE   CURRENT STATE            ERROR     PORTS
ag8b45t69am1   nginx.1   nginx:latest   notes-public   Running         Running 9 minutes ago
ojvbs4n2iriy   nginx.2   nginx:latest   notes-public   Running         Running 13 seconds ago
fqwwk8c4tqck   nginx.3   nginx:latest   notes-public   Running         Running 13 seconds ago

Once a service is deployed, we can modify the deployment using the docker service update command. In this case, we told it to increase the number of instances using the --replicas option, and we now have three instances of the nginx container all running on the notes-public node.
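A script can confirm that a service has converged after such an update. With --format '{{json .}}', docker service ls prints one JSON object per service, one per line, with a Replicas field such as "3/3". The following minimal sketch assumes that output format. parseServiceLines and isConverged are hypothetical helpers:

```javascript
'use strict';

// Parse the output of: docker service ls --format '{{json .}}'
// (one JSON object per line) into an array of service objects.
function parseServiceLines(text) {
  return text
    .split('\n')
    .map((line) => line.trim())
    .filter((line) => line.length > 0)
    .map((line) => JSON.parse(line));
}

// A Replicas value such as "3/3" means 3 running out of 3 desired.
// Any text after the two counts is ignored.
function isConverged(service) {
  const match = /^(\d+)\/(\d+)/.exec(service.Replicas || '');
  return match !== null && Number(match[1]) === Number(match[2]);
}
```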

We can also run the normal docker ps command to see the actual containers, as illustrated in the following code block:

ubuntu@notes-public:~$ docker ps

CONTAINER ID   IMAGE          COMMAND                  CREATED              STATUS              PORTS     NAMES
6dc274c30fea   nginx:latest   "nginx -g 'daemon of…"   About a minute ago   Up About a minute   80/tcp    nginx.2.ojvbs4n2iriyjifeh0ljlyvhp
4b51455fb2bf   nginx:latest   "nginx -g 'daemon of…"   About a minute ago   Up About a minute   80/tcp    nginx.3.fqwwk8c4tqckspcrrzbs0qyii
e7ed31f9471f   nginx:latest   "nginx -g 'daemon of…"   10 minutes ago       Up 10 minutes       80/tcp    nginx.1.ag8b45t69am1gzh0b65gfnq14

This verifies that the nginx service with three replicas is actually three nginx containers.
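The NAMES column shows that each container is named after its service, a replica slot number, and a task ID, separated by dots. The first 12 characters of the task ID match the ID column of docker service ps. For example, nginx.2.ojvbs4n2iriy… corresponds to task ojvbs4n2iriy. The following small sketch splits such a name back into its parts and assumes this naming pattern for replicated services. parseTaskContainerName is a hypothetical helper:

```javascript
'use strict';

// Hypothetical helper: split a swarm task container name such as
// "nginx.2.ojvbs4n2iriyjifeh0ljlyvhp" into service, slot, and task ID.
// It parses from the right, so a dot inside the service name would not
// break it. Returns null for names that don't fit the pattern.
function parseTaskContainerName(name) {
  const parts = name.split('.');
  if (parts.length < 3) return null;
  const taskId = parts.pop();
  const slotText = parts.pop();
  if (!/^\d+$/.test(slotText)) return null;
  return { service: parts.join('.'), slot: Number(slotText), taskId };
}
```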

In this section, we launched an EC2 instance, set up a single-node Docker swarm, and launched a service on it. This gave us an opportunity to familiarize ourselves with what a swarm can do.

While we're here, there is one more thing to learn: how to set up remote control of Docker hosts.

Setting up remote control access to a Docker Swarm hosted on EC2

A feature that's not well documented in Docker is the ability to control Docker nodes remotely. This will let us, from our laptop, run Docker commands on a server. By extension, this means that we will be able to manage the Docker Swarm from our laptop.

One method for remotely controlling a Docker instance is to expose the Docker Transmission Control Protocol (TCP) port. Be aware that miscreants are known to scan the internet for exposed Docker ports to hijack. The following technique does not expose the Docker port and uses SSH instead.

The following setup is for Linux and macOS and relies on features of SSH. On Windows, it requires OpenSSH, which has been available for Windows since October 2018. The following commands may work in PowerShell (failing that, you can run these commands from a Multipass or Windows Subsystem for Linux (WSL) 2 instance on Windows):

ubuntu@notes-public:~$ logout

Connection to PUBLIC-IP-ADDRESS closed.

Exit the shell on the EC2 instance so that you're at the command line on your laptop.

Run the following command:

$ ssh-add ~/Downloads/notes-app-key-pair.pem

Identity added: /Users/david/Downloads/notes-app-key-pair.pem (/Users/david/Downloads/notes-app-key-pair.pem)

We discussed this command earlier, noting that it lets us log in to EC2 instances without having to use the -i option to specify the PEM file. When it comes to remotely accessing Docker hosts, this is more than a simple convenience. The following steps depend on having added the PEM file to the SSH agent, as shown here.

To verify you've done this correctly, use this command:

$ ssh ubuntu@PUBLIC-IP-ADDRESS

Normally with an EC2 instance, we would use the -i option, as shown earlier. But after running ssh-add, the -i option is no longer required.

That enables us to create the following environment variable:

$ export DOCKER_HOST=ssh://ubuntu@PUBLIC-IP-ADDRESS

$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

ephvpfgjwxgd nginx replicated 3/3 nginx:latest *:80->80/tcp

The DOCKER_HOST environment variable enables the remote control of Docker hosts. It relies on a passwordless SSH login to the remote host. Once that works, you only need to set the environment variable to gain remote control of the Docker host. In this case, because the host is a swarm manager, that also gives us remote control of the swarm.

But this gets even better with the Docker context feature. A context holds the configuration required to access a remote node or swarm. Have a look at the following code snippet:
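The same mechanism works from a Node.js script. Pass DOCKER_HOST in the environment of the child process that runs the docker CLI, and your shell's environment stays untouched. The following minimal sketch assumes that passwordless SSH already works, as set up above. dockerEnvFor and runRemoteDocker are hypothetical names:

```javascript
'use strict';
const { execFileSync } = require('child_process');

// Build an environment for the docker CLI that points it at a remote
// Docker host over SSH, e.g. ssh://ubuntu@PUBLIC-IP-ADDRESS.
function dockerEnvFor(user, host, baseEnv = process.env) {
  return { ...baseEnv, DOCKER_HOST: `ssh://${user}@${host}` };
}

// Run a docker command against the remote host and return its stdout.
// Relies on the key having been added with ssh-add, so no prompt appears.
function runRemoteDocker(user, host, args) {
  return execFileSync('docker', args, {
    env: dockerEnvFor(user, host),
    encoding: 'utf8',
  });
}
```

For example, runRemoteDocker('ubuntu', 'PUBLIC-IP-ADDRESS', ['service', 'ls']) would return the same listing as the docker service ls command above.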

$ unset DOCKER_HOST

We begin by deleting the environment variable because we'll replace it with something better, as follows:

$ docker context create ec2 --docker host=ssh://ubuntu@PUBLIC-IP-ADDRESS

ec2

Successfully created context "ec2"

$ docker --context ec2 service ls

ID NAME MODE REPLICAS IMAGE PORTS

ephvpfgjwxgd nginx replicated 3/3 nginx:latest *:80->80/tcp

$ docker context use ec2

ec2

Current context is now "ec2"

$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

ephvpfgjwxgd nginx replicated 3/3 nginx:latest *:80->80/tcp

We create a context using docker context create, specifying the same SSH URL we used in the DOCKER_HOST variable. We can then use it either with the --context option or by using docker context use to switch between contexts.
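In scripts, the --context option is often safer than docker context use. The docker context use command changes the current context for every terminal, while --context affects only the one command. The following minimal sketch wraps the docker CLI so that the context is always passed explicitly. dockerArgsFor and runInContext are hypothetical names:

```javascript
'use strict';
const { execFileSync } = require('child_process');

// Prefix a docker command with --context so it targets the named
// context regardless of which context is currently selected.
function dockerArgsFor(context, args) {
  return ['--context', context, ...args];
}

// For example, runInContext('ec2', ['service', 'ls']) runs
// `docker --context ec2 service ls` and returns its stdout.
function runInContext(context, args) {
  return execFileSync('docker', dockerArgsFor(context, args), { encoding: 'utf8' });
}
```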

With this feature, we can easily maintain configurations for multiple remote servers and switch between them with a simple command.

For example, the Docker instance on our laptop is the default context. Therefore, we might find ourselves doing this:

$ docker context use default

... run docker commands against Docker on the laptop

$ docker context use ec2

... run docker commands against Docker on the AWS EC2 machines

At times, we must keep track of which Docker context is current and which context a given command should use. This will be useful in the next section, when we learn how to push images to AWS ECR.

We've learned a lot in this section, so before heading to the next task, let's clean up our AWS infrastructure. There's no need to keep this EC2 instance running, since we used it solely for a quick familiarization tour. We can easily delete this instance while leaving the rest of the infrastructure configured. The most effective way to do so is to rename ec2-public.tf to ec2-public.tf-disable and then rerun terraform apply. Terraform loads only configuration files ending in .tf or .tf.json, so it no longer sees those declarations and destroys the resources they created, as illustrated in the following code block:

$ mv ec2-public.tf ec2-public.tf-disable

$ terraform apply

...

Plan: 0 to add, 0 to change, 2 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.